Abstract

The challenge of predicting variations in penetration depth in one-sided fillet welds during robotized gas metal arc welding has been addressed by a pilot investigation of technical possibilities and limitations. The main cause of the variation in penetration depth is considered to be variation in joint gap size. Special attention has been paid to adapting the experimental conditions to industrial welding conditions. The employed method uses in-process monitoring of joint gap size together with an empirical model relating penetration depth to gap size in order to predict this depth. The gap size estimates are based on image information from two cameras, one visual and one infrared. The results, which are evaluated off-line, support the development of a real-time method providing technical solutions that are industrially tractable. The results also pinpoint areas for further improvement towards increased robustness and reduced estimation uncertainties.

1 Introduction

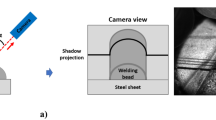

This paper addresses the challenge of predicting penetration depth in one-sided fillet welds during robotized metal active gas (MAG) welding [22]. The penetration depth in fillet welds corresponds to the extent of bonding in the root between the plates (see Fig. 1), where the distance i is the penetration depth, a is the weld throat thickness and g is the gap size between the plates. The reason for addressing this issue is that imperfections in the weld root have an influence on fatigue life and structural integrity [9]. This in turn has a direct negative effect on the quality and service life of welded structures. Important mechanisms behind variations in penetration depth are, for example, inaccuracy in the robotic manipulator motions, plate surface condition (joint preparation) and variations in the joint gap. The latter mechanism is considered to be a major source of variance and is caused by wide fit-up tolerances in the plate members. This situation is very common in welding production in e.g. the heavy vehicle industry.

The explanation for the influence of penetration depth on fatigue life is that when a one-sided weld is subjected to a fatigue load F, as illustrated in Fig. 1, the stress level due to stress concentrations typically peaks in the root [9]. This problem is mitigated by an increased penetration depth since the remaining unwelded area between the plates is reduced. This gives a smoother stress flow in the welded joint [15].

There are a number of empirical studies showing the importance of penetration depth in relation to the relative fatigue strength of fillet welds (see e.g. [7, 8]). One example is a rule of thumb accepted in the design of welded structures, where the equivalent weld throat thickness ρ is used to evaluate fatigue strength. The following expression for the equivalent weld throat thickness, ρ = a + 2i, was proposed in [7]. Penetration depth i thus has twice the impact on the fatigue strength compared to the weld throat thickness a. Typically, only the weld throat is monitored in visual production quality control since the penetration is a hidden quantity in many applications.
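The rule of thumb above can be illustrated with a short calculation. This is a minimal sketch: the function name and the penetration values are illustrative assumptions and are not taken from the measurements in this paper.

```python
def equivalent_throat(a_mm: float, i_mm: float) -> float:
    """Equivalent weld throat thickness rho = a + 2*i, all lengths in mm."""
    return a_mm + 2.0 * i_mm


# Illustrative values only: same throat thickness, two different penetration depths.
shallow = equivalent_throat(5.0, 1.0)
deep = equivalent_throat(5.0, 3.0)

# A 2 mm gain in penetration adds 4 mm to the equivalent throat thickness.
gain = deep - shallow
```

With a fixed throat thickness, every extra millimetre of penetration counts twice in ρ, which is why an unmonitored penetration depth matters so much for the fatigue assessment.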

Another relevant study shows that a variation in fatigue life corresponds to a variation in weld penetration [8]. It was observed in [8] that a lack of penetration allows a natural stress riser from which a crack may propagate.

Since it is normally very costly to tighten the tolerance range in manufacturing, it is desirable to find cost-effective means to cope with this problem in other ways. One such way, utilizing in-process monitoring for weld penetration depth prediction, is suggested and evaluated here. A sufficient prediction performance of a real-time system for this purpose will enable adaptive control to minimize the contribution to the variance in penetration depth that is caused by variations in gap size. This system could also be useful for post-process quality control, where the in-process data can direct inspection activities only to suspicious areas of the welded seam.

Typical fillet welds are shown in Fig. 2 to highlight the problem. Two etched cross cut images of welds are shown, the right image with a technical zero gap (here a technical zero gap refers to g≤ 0.1 mm) and the left with a significant gap. Both welds have been welded with the same nominal parameters; still, it is clearly shown that the penetration (indicated with dashed horizontal lines) is much deeper (in this case >2 mm) with the existence of a gap.

It is difficult to inspect and control the penetration depth in most fillet welds since it is not visible after welding. Non-destructive testing, such as ultrasonic testing, is not common practice in today’s industry for this type of weld, for example because of restricted geometrical accessibility.

A common procedure, however, is to use destructive testing, even though there are many downsides to this approach. The method is in general expensive and time-consuming, and gives only a limited number of samples along the weld seam, so it does not show the whole picture.

The preferable solution for the welding industry would be to be able to predict the penetration depth already before or during welding, the latter by in-process monitoring. Such a possibility to predict and use this information to control the penetration depth would greatly affect the design and production of welded structures. Productivity is in general very much influenced by the existence of uncertainties in the result. If the variation in penetration depth is too large, this will typically be handled by increasing the safety margins, such as adding extra welded material, slowing down the welding travel speed or handling it by extra repairs etc. This is the traditional way for different functions within manufacturing industries to handle uncertainty. These safety margins in each production step of course lead to increased cost due to extra labour and consumables. An even more serious effect is the influence this has on long-term development. When the designer is unsure of the quality, it is unlikely that he or she will decrease safety margins or try new design solutions. Many times, it gives a sense of safety to stick with old solutions since they are proven. That could significantly decrease the rate of production inventions being implemented.

In-process monitoring of MAG welding has been addressed by many researchers and for many purposes during several decades. One major field is directed toward joint tracking systems aiming at compensating for tolerances in fixturing and manipulator motions. These systems are based on identification of geometrical details of the joint edge in a control strategy [3, 21, 26, 29, 32]. An interesting approach is suggested in [10] where the arc current is measured with a rotational arc sensor to detect the deviation and inclination of the welding torch. Another significant field of monitoring is camera-based feature extraction looking at e.g. weld pool shape and size (see [18, 25, 31] for some examples) and vision-based systems for welding arc length control [13]. Several evaluations of spectrometer measurements of the arc plasma for monitoring of process stability are reported in e.g. [1, 17, 33]. Simpson has published results on signature images for detection of faults such as contamination, out-of-position welding and poor fit-up. The signatures are based on acquired signals of the welding current and arc voltage [27, 28]. Some examples of methods using acoustic signals to monitor lack of fusion, burn-through and welding arc length can be found in [5, 6, 30]. Other interesting examples employ temperature field monitoring by infrared cameras for monitoring of the heat input [11, 12]. To the authors’ knowledge, there are no published results showing in-process monitoring for penetration depth predictions in one-sided fillet welds.

Although all these examples of research endeavours are industrially relevant, they share the common factor that most of the results remain in laboratories. One reason for not being used industrially is most likely the harsh nature of the MAG process. Different physical phenomena interact and are subject to disturbances that make it difficult to make accurate measurements or estimates. The hostile conditions of the welding process, e.g. excessive heat flux and evaporation of material, make it a challenge to use sensitive sensor equipment in close vicinity. Another reason for the limited use of external sensors is the industrial need for mechanical robustness and flexibility in welding tools. External devices such as sensors and cameras can impose restrictions on how the system can be used in real production, e.g. welding in narrow spaces restricts the size of equipment. This constitutes a bottleneck in the endeavours to industrially implement automatic in-process monitoring and adaptive control.

This paper evaluates a novel experimental method to predict the weld penetration depth during welding by using an estimated gap size obtained from camera images. Measured data of penetration depth from welds with known variation in the gap along the joint reveal a linear relation between penetration and gap, which is used for the prediction. The monitoring of the gap is limited to image sequences from vision and infrared cameras. Image fusion is also evaluated because in general it can improve reliability in feature extraction through redundant information and improve capability through complementary information.

The purpose of this evaluation is to make a pilot investigation of the technical possibilities and limitations of in-process monitoring for weld penetration prediction. Special attention has been paid to adapting the experimental conditions to industrial welding conditions. Some of the equipment used (camera and optics) does not have the mechanical robustness and flexibility needed in industry. However, the equipment used is not regarded by the authors as a technical barrier since further robustification is considered to be a matter of technical development of solutions that already exist. Other aspects such as financial consequences and organizational issues are not considered here.

2 Experimental setup

A cruciform weld sample was produced from 590-mm-long metal plates of 12-mm-thick standard structural steel, S355, aligned to form two one-sided corner joints. See Fig. 3 which shows the unwelded sample. The geometry has zero gap sections along the joints alternated with three equally spaced segments, each 150 mm long, with a well-defined gap in the form of arches with a maximum height of 1 mm. This joint gap geometry was produced by water jet cutting. Furthermore, the plates were tack welded in the zero gap sections. In total, six segments of known variation in gap size were evaluated.

The studied weld sample was welded using a typical industrial robot cell with a welding power source (Fronius VR7000CMT) with parameters set to achieve a weld throat thickness of a = 5 mm. The MAG process mode used was spray arc. Copper-coated 1.2-mm solid mild steel wire of type G3Si1 was used as filler metal, and Mison-18 gas (20 l/min) was used for shielding. The welding robot was programmed to execute torch weaving with a weaving frequency of 2.67 Hz and an amplitude of 2.3 mm. No joint tracking system was applied. The nominal welding parameters are listed in Table 1.

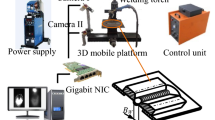

For the purpose of gap size estimation, two types of CMOS cameras were observing the process: one vision camera from Photonfocus, MV1-D1312 (IE)-G2, and one infrared camera from Xenics, Bobcat-640.

The vision camera has a high dynamic range (HDR) sensor with a range of 120 dB. Images from HDR scenes cover a very broad range of illumination intensities with a minimum loss of information. This is typically the case with MAG welding where one would like to capture geometrical features surrounding the melted welding area in the presence of the very high intensity light from the arc plasma. For this reason, it is essential to take full advantage of the quantization levels provided by the image sensor. The Lin-Log CMOS sensor in the camera used satisfies this demand by an adjustable intensity response curve that combines linear and logarithmic responses [4]. The spectral response of this camera is in the range 200 to 1000 nm (see Fig. 4). Figure 4 also shows the spectral responses of both cameras together with spectral lines from Fe-I, Fe-II, Ar-I and Ar-II (the most dominant peaks in the range of interest) in the arc plasma emission [16]. The infrared camera has an InGaAs detector with a spectral response in the range 900 to 1700 nm (see Fig. 4). Both cameras have active pixel sensors and global shutter technology, enabling high-speed imaging.

In a mirror rig, the two cameras look through a short-pass dichroic beam splitter placed in the optical path at a 45° angle. It has a 1000 nm cut-off wavelength to separate the spectral ranges of the cameras. The vision camera sees straight through the mirror. The infrared camera is placed at a 90° angle and captures the reflected image (see Fig. 5).

Additional optical filters have been used to suppress the irradiation from the highly intense arc plasma. These emissions are caused by ionized argon and elements in the base- and filler metal (mainly iron). A dielectric filter with a central wavelength at 550 nm and a 40-nm band pass region is placed in the optical path of the vision camera. Another filter with a central wavelength at 1550 nm and a 40-nm band pass region is placed in the optical path of the infrared camera. See Fig. 4 which shows the narrow passband regions of the filters in ranges without interfering plasma spectral lines.

The frame rate of both synchronized cameras was 200 fps in order to be able to smooth the feature extraction data by temporal filtering of the image sequence.

Image registration has been used to transform the cameras’ image sequences into one coordinate frame for image alignment. The registration has been accomplished by a manual procedure where a suitably sized checkerboard has been captured by both cameras. These images have in turn been used to manually define corresponding control points in both images in order to fit a geometric transformation.
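How a geometric transformation can be fitted to manually selected control points is sketched below. The type of transformation is not stated here, so an affine model is assumed; the function names and the control point coordinates are hypothetical and serve only as illustration.

```python
def solve3(m, v):
    """Solve a 3x3 linear system m x = v by Gaussian elimination with pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):
        s = a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = s / a[r][r]
    return x


def fit_affine(src, dst):
    """Least-squares affine map from src points to dst points.

    Returns ((a, b, tx), (c, d, ty)) with x' = a*x + b*y + tx, y' = c*x + d*y + ty.
    """
    rows = [(x, y, 1.0) for x, y in src]
    # Normal equations (A^T A) p = A^T b, solved once per output coordinate.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    params = []
    for k in range(2):
        atb = [sum(r[i] * d[k] for r, d in zip(rows, dst)) for i in range(3)]
        params.append(tuple(solve3(ata, atb)))
    return tuple(params)


def apply_affine(params, point):
    """Map one (x, y) point with the fitted affine parameters."""
    x, y = point
    return tuple(p[0] * x + p[1] * y + p[2] for p in params)


# Hypothetical checkerboard corners seen by the infrared (src) and vision (dst) cameras.
src = [(0, 0), (100, 0), (0, 100), (100, 100), (50, 50)]
dst = [(10, -5), (210, -5), (10, 195), (210, 195), (110, 95)]
params = fit_affine(src, dst)
mapped = apply_affine(params, (20, 30))
```

At least three non-collinear point pairs are needed for an affine fit; using more pairs averages out errors in the manual point selection.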

Image fusion has been used after image registration to combine information from two images into a single composite image. The reason is that a fused image in general can be more informative and suitable for both visual perception and computer processing. The principal purpose of image fusion is to reduce uncertainty and minimize redundancy in the output while maximizing the relevant information particular to an application or task. Using image fusion has several advantages, including wider spatial and temporal coverage, decreased uncertainty, improved reliability and increased robustness of system performance [20]. Principal component analysis-based image fusion has been chosen for evaluation in this work [19].
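A commonly described form of principal component analysis-based fusion weights the two registered images by the components of the principal eigenvector of their 2x2 covariance matrix. The sketch below follows that scheme as an assumption; it is not necessarily the exact variant evaluated here, and the function names and pixel values are hypothetical.

```python
import math


def pca_fusion_weights(img_a, img_b):
    """Fusion weights from the principal eigenvector of the 2x2 image covariance."""
    a = [p for row in img_a for p in row]
    b = [q for row in img_b for q in row]
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    var_a = sum((p - mean_a) ** 2 for p in a) / (n - 1)
    var_b = sum((q - mean_b) ** 2 for q in b) / (n - 1)
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b)) / (n - 1)
    # Largest eigenvalue of the symmetric matrix [[var_a, cov], [cov, var_b]].
    lam = 0.5 * (var_a + var_b) + math.hypot(0.5 * (var_a - var_b), cov)
    if abs(cov) > 1e-12:
        v = (cov, lam - var_a)  # eigenvector belonging to lam
    else:
        v = (1.0, 0.0) if var_a >= var_b else (0.0, 1.0)
    # Assumption: magnitudes are used so that the weights stay non-negative.
    v = (abs(v[0]), abs(v[1]))
    total = v[0] + v[1]
    return v[0] / total, v[1] / total


def pca_fuse(img_a, img_b):
    """Pixel-wise weighted sum of two registered images of equal size."""
    w_a, w_b = pca_fusion_weights(img_a, img_b)
    return [[w_a * p + w_b * q for p, q in zip(ra, rb)]
            for ra, rb in zip(img_a, img_b)]


# Tiny hypothetical example: the second image has twice the contrast of the first.
vision = [[0, 10], [20, 30]]
infrared = [[0, 20], [40, 60]]
w_vis, w_ir = pca_fusion_weights(vision, infrared)
fused = pca_fuse(vision, infrared)
```

The image with the larger variance receives the larger weight, so the fused image is dominated by the camera that carries more contrast in the scene.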

Devices from National Instruments were used for image acquisition and controlled by a LabVIEW program in order to capture time-synchronized images from the cameras. Time synchronization is necessary for image fusion and when comparing the estimates from the two cameras’ image sequences.

3 Results and discussion

The results contain both the experimental procedure to relate penetration depth to measured gap size and the procedure to find the gap size estimates from the camera information.

3.1 Relation between measured gap size and penetration depth

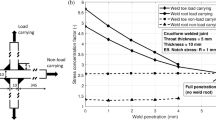

The first result is the establishment and quantification of the relation between measured gap size g_m and penetration depth i_m along the welded seam. Gap size and penetration depth are measured from images of ground and etched cross cuts taken along the weld seam, showing the fused zone (see Fig. 2 for a typical example of this kind of data). Figure 6 shows the measured gap size and penetration depth at locations distributed along the weld seam together with the nominal gap geometry. The measured data i_m and g_m are obtained from a total of 37 cross cuts and then used in a regression analysis.

The relation between the measured gap size g_m and penetration depth i_m is shown in Fig. 7 and also given as a robust linear fit together with 95 % confidence bounds. Bi-square weights were used in an iteratively reweighted least-squares algorithm [14]. The reason for employing a robust regression method is that it is designed not to be overly affected by violations of assumptions about the underlying data-generating process. In this work, the underlying statistical distribution of the data is not investigated.
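The principle of iteratively reweighted least squares with bi-square weights can be sketched as follows. This is a simplified illustration, assuming a scale estimate from the median absolute residual and a tuning constant of 4.685 (a common default); leverage corrections used by some library routines are omitted. The data are synthetic and are not the 37 measured cross cuts.

```python
import statistics


def weighted_line_fit(x, y, w):
    """Weighted least-squares fit of y = slope * x + intercept."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def bisquare_fit(x, y, tuning=4.685, iterations=50):
    """Robust line fit: reweight with bi-square weights until the fit settles."""
    slope, intercept = weighted_line_fit(x, y, [1.0] * len(x))
    for _ in range(iterations):
        res = [yi - (slope * xi + intercept) for xi, yi in zip(x, y)]
        # Robust scale: median absolute residual divided by 0.6745.
        scale = statistics.median(abs(r) for r in res) / 0.6745
        if scale == 0.0:
            break
        u = [r / (tuning * scale) for r in res]
        # Bi-square weights: smooth down-weighting, zero beyond the cut-off.
        w = [(1.0 - ui * ui) ** 2 if abs(ui) < 1.0 else 0.0 for ui in u]
        new_slope, new_intercept = weighted_line_fit(x, y, w)
        done = (abs(new_slope - slope) < 1e-10
                and abs(new_intercept - intercept) < 1e-10)
        slope, intercept = new_slope, new_intercept
        if done:
            break
    return slope, intercept


# Synthetic data on the line i = 2 g + 1 with one gross outlier at g = 0.5 mm.
gap = [k / 10 for k in range(11)]
depth = [2.0 * g + 1.0 for g in gap]
depth[5] = 5.0
ordinary = weighted_line_fit(gap, depth, [1.0] * len(gap))
robust = bisquare_fit(gap, depth)
```

In this example the ordinary fit is shifted upwards by the outlier, while the robust fit assigns it zero weight and recovers the underlying line.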

3.2 Estimated gap size from image sequence feature extraction

Estimating gap size from image sequences is a problem of feature extraction based on image binarization followed by a transformation of projections and temporal filtering.

The image binarization was initiated by median filtering to remove noise. Median filtering is commonly used in image processing since it preserves edges while removing noise [2]. Next, the Otsu multi-threshold method [24] was applied. This is a nonparametric and unsupervised method of automatic threshold selection in images. An optimal threshold is selected by a discriminant criterion to maximize the separability of the resultant classes in grey levels. Finally, the point closest to the top of the image among the resultant binarized classes was selected to be used for the gap size estimate g_e.

Figure 8 shows images of the melt pool and its surroundings including the shielding gas nozzle, the electrode and the joint corner, which is oriented in the vertical direction. In these images, the highest point of the projection of the melt pool, meaning the point closest to the top of the image and belonging to a set of high-intensity image components, gives the information used for the gap size estimate g_e. The reason for this choice is that a larger gap results in a projection of light reflections that reaches closer to the top of the image. The images in Fig. 8 show results from the vision camera, the infrared camera and fused images at two subsequent time instances, and clearly display differences in the estimation performance. The top left image demonstrates an erroneously selected point where a reflection from the welding arc at the flange plate constitutes the highest point.
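The binarization and feature extraction steps can be sketched as below. This is a simplified illustration: a 3x3 median filter and the two-class form of the Otsu method are assumed in place of the multi-threshold variant, and the small test image is synthetic.

```python
import statistics


def median_filter3(img):
    """3x3 median filter; border pixels are left unchanged."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            window = [img[rr][cc] for rr in (r - 1, r, r + 1)
                      for cc in (c - 1, c, c + 1)]
            out[r][c] = statistics.median(window)
    return out


def otsu_threshold(img, levels=256):
    """Two-class Otsu threshold: maximize the between-class variance."""
    hist = [0] * levels
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * n for i, n in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def topmost_bright_row(img, threshold):
    """Row index of the bright pixel closest to the top of the image."""
    for r, row in enumerate(img):
        if any(p > threshold for p in row):
            return r
    return None


# Synthetic 8x8 image: dark background, bright 4x4 blob, one bright noise pixel.
image = [[10] * 8 for _ in range(8)]
for r in range(3, 7):
    for c in range(2, 6):
        image[r][c] = 200
image[1][6] = 200  # isolated noise pixel above the blob

raw_row = topmost_bright_row(image, otsu_threshold(image))
filtered = median_filter3(image)
feature_row = topmost_bright_row(filtered, otsu_threshold(filtered))
```

Without the median filter the isolated bright pixel would be selected as the highest point, which mirrors the kind of erroneous selection caused by arc reflections.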

A projection transformation of the data from the feature extraction is then employed. This scales and offsets (calibrates) the image-based gap signal to the known physical gap size along the weld joint. The transformation fits the estimated gap to the nominal joint geometry by solving the normal equations for a least-squares fit. Figure 9 shows the estimate from the fused image sequence. Even though the signal is very noisy, the low-frequency content clearly follows the actual gap variations along the weld joint.
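The calibration step, a scale and an offset found from the normal equations, can be sketched as follows. The function name and the pixel values are hypothetical; only the least-squares principle is taken from the description above.

```python
def fit_scale_offset(raw, nominal):
    """Solve the 2x2 normal equations for nominal ~ scale * raw + offset."""
    n = len(raw)
    sx, sy = sum(raw), sum(nominal)
    sxx = sum(x * x for x in raw)
    sxy = sum(x * y for x, y in zip(raw, nominal))
    det = n * sxx - sx * sx
    scale = (n * sxy - sx * sy) / det
    offset = (sxx * sy - sx * sxy) / det
    return scale, offset


# Hypothetical feature signal in pixel rows (a smaller row index means a larger gap)
# and the nominal gap in mm at the same positions along the joint.
raw_rows = [120, 110, 100, 110, 120]
nominal_gap = [0.0, 0.5, 1.0, 0.5, 0.0]
scale, offset = fit_scale_offset(raw_rows, nominal_gap)
calibrated = [scale * r + offset for r in raw_rows]
```

Because the highest point moves towards the top of the image as the gap grows, the fitted scale is negative in this illustration.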

The noise in the signal extracted from the two previous steps includes a significant interference from the robot torch weaving. The temporal frequency content of the (mean-subtracted) signal is given by the periodogram in Fig. 10. The spectral density estimate clearly shows the periodic disturbance corresponding to the torch weaving with a fundamental frequency of 2.67 Hz. It also reveals a region of low-frequency components at a fraction of a hertz carrying the information about the slowly varying gap along the weld joint. A fourth-order digital Butterworth low-pass filter has been used for zero-phase filtering [23] to suppress the periodic disturbance and reveal the gap size estimate g_e. The filter has a cut-off frequency chosen to attenuate the periodic torch weaving disturbance.
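Zero-phase filtering with a fourth-order Butterworth low-pass filter can be sketched as a forward pass followed by a backward pass through two second-order sections. This is a minimal illustration: the 0.5 Hz cut-off is an assumed value, the edge handling is simplified, and the test signal is synthetic. In practice a library routine such as SciPy's `butter` and `filtfilt` would normally be used.

```python
import math


def butter4_sections(cutoff_hz, fs_hz):
    """Fourth-order Butterworth low-pass as two biquads (bilinear transform)."""
    k = math.tan(math.pi * cutoff_hz / fs_hz)
    sections = []
    for angle in (math.pi / 8, 3 * math.pi / 8):
        inv_q = 2.0 * math.sin(angle)  # 1/Q of this pole pair
        norm = 1.0 / (1.0 + k * inv_q + k * k)
        b0 = k * k * norm
        a1 = 2.0 * (k * k - 1.0) * norm
        a2 = (1.0 - k * inv_q + k * k) * norm
        sections.append((b0, 2.0 * b0, b0, a1, a2))
    return sections


def run_sections(x, sections):
    """Filter x through the cascaded biquads (direct form I)."""
    y = list(x)
    for b0, b1, b2, a1, a2 in sections:
        # Start in steady state for a constant input equal to the first sample.
        x1 = x2 = y1 = y2 = y[0]
        out = []
        for v in y:
            o = b0 * v + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
            x2, x1 = x1, v
            y2, y1 = y1, o
            out.append(o)
        y = out
    return y


def zero_phase_lowpass(x, cutoff_hz, fs_hz):
    """Forward-backward filtering cancels the phase shift of the filter."""
    sections = butter4_sections(cutoff_hz, fs_hz)
    forward = run_sections(x, sections)
    backward = run_sections(forward[::-1], sections)
    return backward[::-1]


# Synthetic signal at 200 samples per second: slow gap variation plus 2.67 Hz weaving.
fs = 200.0
t = [k / fs for k in range(8000)]
slow = [0.5 + 0.5 * math.sin(2 * math.pi * 0.05 * tk) for tk in t]
noisy = [s + 0.3 * math.sin(2 * math.pi * 2.67 * tk) for s, tk in zip(slow, t)]
smooth = zero_phase_lowpass(noisy, cutoff_hz=0.5, fs_hz=fs)
```

The backward pass requires the complete recorded signal, so this form suits off-line evaluation; a real-time implementation would need a causal filter and would have to accept some delay.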

The result from spatial image filtering, image binarization, feature extraction, projection transformation and finally temporal signal filtering is a scalar signal that estimates the gap size. The algorithm used for this estimation is given in Fig. 11.

The curves in Fig. 12 are final estimates from three different sets of image sequences (vision, infrared and fused).

An example of the capability of this system is given in Fig. 13. It displays the measured penetration depth i_m and the corresponding prediction i_e based on the gap size estimate g_e and the linear fit from the regression analysis in Section 3.1.
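The prediction step itself is a direct evaluation of the linear fit. The sketch below assumes placeholder coefficients, since the fitted values are only given graphically in Fig. 7, and it flags estimates outside the 0 to 1 mm gap range covered by the experiments.

```python
def predict_penetration(gap_mm, slope, intercept_mm, valid_range=(0.0, 1.0)):
    """Return (i_e, in_range) for one gap size estimate g_e in mm."""
    in_range = valid_range[0] <= gap_mm <= valid_range[1]
    return slope * gap_mm + intercept_mm, in_range


# Placeholder coefficients for illustration only, not the fitted values of this study.
SLOPE = 2.0
INTERCEPT_MM = 1.0

inside = predict_penetration(0.5, SLOPE, INTERCEPT_MM)
outside = predict_penetration(1.4, SLOPE, INTERCEPT_MM)
```

Returning the range flag together with the prediction makes it explicit when the linear model is being extrapolated beyond the gap sizes it was fitted on.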

3.3 Quality of the estimates

The relation between measured gap size and penetration depth, g_m and i_m, displays a significant variability. This is explained by two causes. The first cause is the natural variability that is independent of the gap size. This variability is caused by e.g. inaccuracy in the robotic manipulator motions or the plate surface condition. The other cause is measurement uncertainty. One measurement uncertainty is related to the exact positioning of the cross cut along the welded seam. This uncertainty emanates from the manual cutting procedure that has been employed (see Fig. 6 where the distribution along the welded joint is seen). The magnitude of this uncertainty is estimated to be in the order of ±0.05 mm. Another uncertainty relates to the gap size measurement g_m and is mainly caused by imperfections in the metallographic preparation, where burrs on the edges are present due to the rough grinding. The magnitude of this uncertainty is estimated to be in the order of ±0.05 mm. The uncertainty in the penetration depth measurement i_m is mainly due to the resolution of the microscope used and the manual judgement of distances in the images. The magnitude of this uncertainty is estimated to be in the order of ±0.01 mm. However, all these uncertainties in the measurements are small compared to the natural process variability and also to the uncertainty in the gap size estimates g_e from the camera image sequences.

The empirical model (linear fit) that relates the penetration depth to gap size is expected to perform sufficiently well when used for predictions. The root-mean-square deviation between the data and the linear fit is 0.42 mm.

The performance of the different gap size estimates using camera monitoring is evaluated by comparing the root-mean-square deviation between the estimates and the nominal joint geometry. Corresponding data is given in Table 2.
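The root-mean-square deviation used for this comparison can be computed as in the following sketch. The sample values are hypothetical and do not reproduce the data in Table 2.

```python
import math


def rmsd(estimates, reference):
    """Root-mean-square deviation between two equally long sequences."""
    if len(estimates) != len(reference):
        raise ValueError("sequences must have the same length")
    squared = [(e - r) ** 2 for e, r in zip(estimates, reference)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical gap estimates and nominal gap values in mm.
example_rmsd = rmsd([0.1, 0.5, 0.9], [0.0, 0.5, 1.0])
```

Applying the same measure to each image sequence against the same nominal geometry gives numbers that can be compared directly between the camera alternatives.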

This comparison shows that there is no major difference in performance between the different estimates.

4 Conclusion and future work

It can be concluded that the suggested method, evaluated off-line, is a promising way to achieve in-process monitoring for prediction of weld penetration depth during one-sided fillet welding using the MAG process.

A first impression might be that the results are rather pessimistic (see Fig. 13), especially if the method is intended to be used for online control. It is however believed that further development of the methods used will result in a better prediction performance. Empirical modelling resulted in a linear fit between joint gap size and weld penetration depth in the gap size range between 0 and 1 mm. Even though there is an uncertainty in the data, it is regarded as reasonable to assume a linear relation in this range.

When comparing the results from the camera setup, one can conclude that the infrared camera performs best and that image fusion, which requires two cameras, does not add any significant improvement in performance. Especially when considering the additional complexity and cost associated with a double-camera setup, it is concluded that further efforts to reach a better prediction performance should include only one camera. It is expected that improvements can be made in the numerical feature extraction, but the major improvements will come from optimizing the physical pose of the camera and the optical setup.

Future work should be directed towards further development of the method for increased robustness both in the prediction and in an industrially tractable physical setup of the monitoring equipment. The method should also be evaluated in a real-time system. Another interesting option is to evaluate the performance of closed-loop control using penetration monitoring to adapt the welding current to variations in gap size.

In order to implement the proposed method in industrial production, some further steps have to be considered. In-process monitoring requires dedicated hardware (real-time computing) for synchronized image processing, feature extraction and recursive data filtering. The camera and optical system also need to be embedded and integrated in the welding tool with sufficient shielding from process emissions, spatter and heat. This integration should also be made in such a way that it does not impose major restrictions on the flexibility of the welding tool. The gap size estimate used for prediction also requires a calibration of the optical setup that is used. The use of other base metals, plate thicknesses and MAG processes most probably requires separate experiments for each combination in order to establish the relation between penetration and gap for the actual situation.

Finally, the industry needs to have a strategy on how to utilize the added information for quality control.

References

[1] Alfaro SCA, de S. Mendonça D, Matos MS (2006) Emission spectrometry evaluation in arc welding monitoring system. J Mater Process Technol 179(1–3):219–224

[2] Arias-Castro E, Donoho DL (2009) Does median filtering truly preserve edges better than linear filtering? Ann Stat 37(3):1172–1206

[3] Bae KY, Lee TH, Ahn KC (2002) An optical sensing system for seam tracking and weld pool control in gas metal arc welding of steel pipe. J Mater Process Technol 120(1–3):458–465

[4] Bandoh Y, Qiu G, Okuda M, Daly S, Aach T, Au O (2010) Recent advances in high dynamic range imaging technology. In: 2010 17th IEEE international conference on image processing (ICIP), pp 3125–3128

[5] Cayo E, Alfaro SCA (2008) Weld transference modes identification through sound pressure level in GMAW process. J Achiev Mater Manuf Eng 29(1):57–62

[6] Cayo E, Alfaro SCA (2009) A non-intrusive GMA welding process quality monitoring system using acoustic sensing. Sensors 9(9):7150–7166

[7] Dahle T, Olsson KE, Samuelsson J (1999) Fatigue design optimisation of welded box beams subjected to combined bending and torsion. In: Solin GMAJ (ed) European structural integrity society, fatigue design and reliability, vol 23. Elsevier, pp 103–116

[8] Deshmukh AR, Venkatachalam G (2014) Effect of weld penetration on fatigue life. Procedia Eng 97:783–789

[9] Fricke W (2013) IIW guideline for the assessment of weld root fatigue. Welding in the World 57(6):753–791

[10] Gao Y, Zhang H, Mao Z (2009) Welding gun inclination detection and curved fillet weld joint tracking. Weld J 88(3):45s–53s

[11] Goecke SF (2014) Visualisation and control of the heat input in MAG welding of ultra-high-strength manganese-boron steels. JWRI, Osaka

[12] Goecke SF, Ogino Y, Hirata Y (2015) In-situ temperature determination of MAG welding and its application for heat input control. pp 35–36. IIW, Helsinki, Finland

[13] Hillers B, Graeser A (2009) Real time arc-welding video observation system. In: 62nd international conference of the IIW. Singapore

[14] Holland PW, Welsch RE (1977) Robust regression using iteratively reweighted least-squares. Commun Stat: Theory and Methods 6(9):813–827

[15] Kainuma S, Mori T (2006) A fatigue strength evaluation method for load-carrying fillet welded cruciform joints. Int J Fatigue 28(8):864–872

[16] Kramida A, Ralchenko Y, Reader J, NIST ASD Team (2015) NIST Atomic Spectra Database (ver. 5.3), [Online]. Available: http://physics.nist.gov/asd [2016, March 22]. National Institute of Standards and Technology, Gaithersburg, MD

[17] Lenef AL, Gardner CS (1985) Optical emissions from weld arcs and their effects on the performance of welding robot vision systems. Appl Opt 24(16):2587

[18] Liu J, Fan Z, Olsen SI, Christensen KH, Kristensen JK (2011) Weld pool visual sensing without external illumination. In: 2011 IEEE Conference on automation science and engineering (CASE), pp 145–150

[19] Metwalli MR, Nasr AH, Allah OSF, El-Rabaie S (2009) Image fusion based on principal component analysis and high-pass filter. In: International conference on computer engineering systems, 2009. ICCES 2009, pp 63–70. Cairo

[20] Mitchell H (2010) Image fusion - theories, techniques and applications. Springer, Berlin

[21] Mota CP, Machado MVR, Neto RMF, Vilarinho LO (2015) Near-infrared vision system for monitoring arc welding processes. Weld Int 29(3):187–196

[22] O’Brien RL (ed) (1991) Welding handbook, 8th edn, vol 2: Welding processes. American Welding Society, Miami

[23] Oppenheim AV, Schafer RW, Buck JR (1999) Discrete-time signal processing, 2nd edn. Prentice Hall, Upper Saddle River, NJ

[24] Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9(1):62–66

[25] Romero E, Chapuis J, Bordreuil C, Soulié F, Fras G (2013) Image processing and geometrical analysis for profile detection during pulsed gas metal arc welding. Proc Inst Mech Eng B J Eng Manuf 227(3):396–406

[26] Schreiber D, Cambrini L, Biber J, Sardy B (2008) Online visual quality inspection for weld seams. Int J Adv Manuf Technol 42(5-6):497–504

[27] Simpson SW (2007) Signature images for arc welding fault detection. Sci Technol Weld Join 12(6):481–486

[28] Simpson SW (2008) Fault identification in gas metal arc welding with signature images. Sci Technol Weld Join 13(1):87–96

[29] Steele JPH, Mnich C, Debrunner C, Vincent T, Liu S (2005) Development of closed-loop control of robotic welding processes. Ind Robot 32(4):350–355

[30] Sumesh A, Rameshkumar K, Mohandas K, Babu RS (2015) Use of machine learning algorithms for weld quality monitoring using acoustic signature. Procedia Comput Sci 50:316–322

[31] Wang Z (2014) Monitoring of GMAW weld pool from the reflected laser lines for real-time control. IEEE Trans Ind Inf 10(4):2073–2083

[32] Xu Y, Fang G, Lv N, Chen S, Jia Zou J (2015) Computer vision technology for seam tracking in robotic GTAW and GMAW. Robot Comput Integr Manuf 32:25–36

[33] Zielińska S, Musio K, Dzierga K, Pellerin S, Valensi F, Izarra Cd, Briand F (2007) Investigations of GMAW plasma by optical emission spectroscopy. Plasma Sources Sci Technol 16(4):832

Acknowledgments

The research work behind this paper has been partly funded by The Knowledge Foundation. A great contribution has been made by the staff at the Volvo CE Arvika plant. A special thanks to Xiao Xiao Zhang for preparing the test samples and Anna-Karin Christiansson for proof reading and input to the text.

Additional information

Recommended for publication by Commission XII - Arc Welding Processes and Production Systems

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sikström, F., Öberg, A.E. Prediction of penetration in one-sided fillet welds by in-process joint gap monitoring—an experimental study. Weld World 61, 529–537 (2017). https://doi.org/10.1007/s40194-017-0448-7

DOI: https://doi.org/10.1007/s40194-017-0448-7