Abstract

Introduction

No specific overview exists of simulation-based training for educational purposes among residents in ophthalmology. This narrative review therefore attempts to highlight the current literature on modern simulation-based tools used to educate these residents.

Methods

We searched the Web of Science and PubMed databases between March 15 and July 21, 2022. Relevant and accessible articles and abstracts published after 2006 in English were included.

Results

Simulation-based cataract surgery is associated with better outcomes in the operating room and faster surgeries. Construct validity has been established across different procedures and levels in simulation-based cataract surgery and simulation-based vitreoretinal surgery. Other simulation-based procedures indicate promising results but in general lack evidence-based validity.

Discussion

This narrative review highlights and evaluates the current literature on modern educational simulation-based tools used to train ophthalmology residents, both in fundamental skills such as simulation-based ophthalmoscopy and in complex surgical procedures such as simulation-based cataract surgery and vitreoretinal surgery. Some studies attempt to develop simulators for use in the education of ophthalmology residents. Other studies strive to establish the validity of the respective procedures or educational tools, and some investigate the effect of simulation-based training. The most validated modern educational simulation-based tool is the Eyesi Surgical Simulator (VRmagic, Germany). However, other tools have also been evaluated, including the HelpMeSee Eye Surgery Simulator (HelpMeSee Inc., New York, USA) and the MicroVisTouch Surgical Simulator (ImmersiveTouch, USA).

Conclusion

Simulation-based training is already established as a way for residents in ophthalmology to master skill-demanding procedures, resulting in better learning and better patient handling. Future studies should aim to validate more simulation-based procedures for the teaching of ophthalmology residents so that the evidence is kept at a high standard.

Simulation-based cataract surgery and vitreoretinal surgery are well investigated.

Other procedures are emerging in the field of simulation-based training in ophthalmology.

Construct validity has been established in different procedures across different levels for simulation-based cataract surgery and simulation-based vitreoretinal surgery.

Simulation-based training is associated with better outcome in the operating room.

Future studies should aim to establish construct validity across more different procedures, evaluate the transfer of skill from the simulator to the clinic and try to implement training specifically addressing management of potential complications.

Introduction

Simulation-based training is becoming increasingly popular for educational purposes among residents in ophthalmology. The main purpose of applying simulation-based training as part of the education curriculum is to prepare residents to perform the different surgical and non-surgical procedures they need to master in the field of ophthalmology. This includes training in both highly technical and fundamental skills [1,2,3]. Furthermore, one of the main goals of this type of training is to ensure safety and proper training before skills are transferred to real patients. Simulation-based training is essential for both residents’ confidence and, most importantly, patients’ safety [4], and a previous abstract found that patients were more willing to let residents perform surgery after watching a video explaining simulation-based surgery [5]. Furthermore, implementation of an Eyesi Surgical Simulator may be cost-effective [6]. Multiple studies have tested simulation-based training for different surgical procedures, including cataract surgery, vitreoretinal surgery and glaucoma surgery, each of which requires high-level skills of the surgeon. Surgical simulators such as the HelpMeSee Eye Surgery Simulator [7] and MicroVisTouch [8] have been assessed for training residents in simulation-based surgery, but the Eyesi Surgical Simulator remains frequently assessed. Importantly, simulators used for microsurgical training can give the perception of 3D vision and depth in the microscope, thus contributing to a more authentic reflection of real-life surgery for the trainee. While construct validity was indicated in several training procedures for vitreoretinal surgery [3, 9] and cataract surgery [10,11,12,13], not all studies used a validity framework. Moreover, a national Delphi study identified 25 technical procedures to include in a simulation-based training programme [14].

A wide variety of simulated training modalities is available in the field of ophthalmology, and education through simulation-based cataract surgery might pave the way for faster learning and increased patient safety. Systematic reviews have been conducted on simulation-based surgical training, including virtual reality vitreoretinal surgery [15], cataract surgery [16] and simulation-based training in ophthalmology in general [17]. However, an updated overview of the different training modalities aimed specifically at residents in ophthalmology is missing. Thus, this narrative review aims to highlight relevant literature on simulation-based tools used to educate residents in ophthalmology.

Compliance with Ethics Guidelines

This article is based on previously conducted studies and does not contain any new studies with human participants or animals performed by any of the authors.

Simulation-Based Cataract Surgery

Cataract surgery is a skill-demanding procedure that ophthalmology residents can master through training. In 2008, Feudner et al. [18] found that capsulorhexis training on the Eyesi Surgical Simulator improved overall wet-lab performance among both students and residents compared to controls. Capsulorhexis was performed on porcine eyes on day 1 and after 3 weeks. Residents and students were randomized to either a control group or an intervention group that completed two training trials on the simulator between the two wet-lab performances. While randomization was not sufficiently described, resulting in a risk of bias, the results did indicate a potential effect of simulation-based training. Saleh et al. [19] investigated intra- and inter-user repeatability, variability and reproducibility in ophthalmology trainees (n = 18) with < 2 h of simulation and surgical experience. The study evaluated one cataract-specific module and four general surgical tasks, each repeated three times after a standardised introduction on the Eyesi Surgical Simulator. Intra-user variability was found when comparing the first attempt to the second and third, respectively (p = 0.0001, p = 0.0001). Accordingly, in a prospective randomized study, Bergquist et al. [20] concluded that repetitive training with four sessions over a month (group A) was more effective than two sessions (group B) on four cataract modules of the Eyesi Surgical Simulator. Furthermore, significantly more participants from group A reached a reference score set by two cataract surgeons across the four modules (40% vs. 19%, p < 0.01). Another study by Saleh et al. [21] suggested that a structured, supervised cataract simulation-based programme for ophthalmic surgeons in the first year of residency could offer a significant level of skill transfer in different procedures (capsulorhexis, antitremor training, cracking, chopping and navigation training). Furthermore, Thomsen et al. [2] found that novice and intermediate surgeons performed better in the operating room after simulation-based training. Simulator training was performed between phacoemulsification procedures in the operating room. Operating room procedures were video-recorded and assessed by masked raters with the Objective Structured Assessment of Cataract Surgical Skill (OSACSS) rating scale before and after simulation training for comparison. Both studies lacked a control group [2, 21]; however, it may be considered unethical to withhold potentially important education from residents.

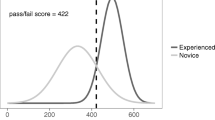

Roohipoor et al. [22] found an association between first-year residents’ Eyesi Surgical Simulator scores and surgical performance measures in the 3rd year of residency, which might help identify residents in need of extra training. Thomsen et al. [11] aimed to design an evidence-based test by evaluating all cataract surgery modules on the Eyesi Surgical Simulator. In 7 out of 13 modules, expert surgeons could be discriminated from novices, providing construct validity. Furthermore, the authors found that a pass/fail score of 422 discriminated experts from novices on the Eyesi Surgical Simulator, thus representing the anticipated point of adequate simulator training for novices. As one expert scored below 422 (false negative) and five novices scored above it (false positive), the authors suggested requiring trainees to pass the test on two consecutive attempts to reduce the risk of passing by coincidence. Multiple studies investigated whether simulation-based cataract training could decrease the rate of intraoperative complications and hence increase patient safety. In a randomized, investigator-masked multi-centre study among residents in sub-Saharan Africa, Dean et al. [23] aimed to determine whether simulation-based cataract surgery training resulted in improved acquisition of surgical skills compared to conventional cataract training. The intervention group underwent a 5-day simulation course plus conventional training, whereas controls received conventional training only. The primary outcome was the difference in surgical performance of a small-incision cataract surgery on synthetic simulation eyes after 3 months, as assessed on the Ophthalmic Simulation Surgical Competency Assessment Rubric (SIM-OSSCAR). This tool, modified from the International Council of Ophthalmology Surgical Competency Assessment Rubric (ICO-OSCAR), assesses the skills of trainees in a simulation environment [24]. The intervention group (n = 25) performed better than controls (n = 24) at 3 months with a difference of 15.8 points (95% CI 13.2–18.5, p < 0.001) and at 12 months with a difference of 8.2 points (95% CI 5.5–11.0, p < 0.001), at which point the intervention group (n = 22) also had a lower proportion of posterior capsule rupture than controls (n = 23) (7.8% vs. 26.6%, p = 0.001). At 12 months, controls also received the intervention, which neutralized the difference between the intervention group (n = 10) and controls (n = 16) at 15 months. However, the intervention group performed more cataract surgeries in the 12 months following the intervention, potentially biasing the results. Yet, the authors found this unlikely to affect the primary outcome at 3 months. Additionally, simulator accessibility differed across sites, but the authors tried to account for this through stratification by site. Intention-to-treat analysis was done on 49 participants.

Retrospective studies showed that implementation of simulation-based training on the Eyesi Surgical Simulator decreased the overall intraoperative complication rate from 27.14% to 12.86% (p = 0.031) in the first ten cataract surgeries among Brazilian residents [25], and reduced the rate of errant continuous curvilinear capsulorhexis from 15.7% to 5.0% (p < 0.0001) after introduction of a Capsulorhexis Intensive Training Curriculum (CITC) consisting of 33 steps [26]. Among 1st and 2nd year residents (n = 265), a decrease in the rate of posterior capsule rupture from 4.2% in 2009 to 2.6% in 2015 was reported for those with access to an Eyesi Surgical Simulator [27]. Accordingly, a lower rate of posterior capsule tear (2.2% vs. 4.8%, p = 0.032) and vitreous prolapse (2.2% vs. 4.8%, p = 0.032) was reported in residents after the introduction of the Eyesi Surgical Simulator compared to before its introduction [28]. Sikder et al. [8] aimed to investigate whether operating room experience enhanced residents’ performance on the MicroVisTouch simulator and whether the simulator could be used as an assessment tool for residents’ cataract surgery skills. Residents from two sites were evaluated on four variables (circularity, accuracy, fluency and overall score) with a possible score of 0–100. The study showed that accuracy, fluency and overall scores increased after a 6-month period of performing surgery, thus indicating an association between operating room performance and use of the MicroVisTouch surgical simulator. However, the study suffered loss to follow-up (n = 78 at baseline vs. n = 40 at follow-up), posing a risk of bias. Nair et al. [7] investigated whether a structured training curriculum on the HelpMeSee Eye Surgery Simulator influenced the first 20 manual small-incision cataract surgeries in the operating room. The major error rate (uveal prolapse, buttonhole, premature entry, Descemet's detachment, tunnel laceration) was reduced in the simulator group compared to controls. However, no differences were found in overall errors and minor errors [7]. Some degree of heterogeneity could be found between groups, as the intervention group had trained more hours in the wet lab and performed more small-incision cataract surgeries as first assistant and lead surgeon. The sample size (n = 19) was relatively small and, although intention-to-treat analysis was applied, three controls and two participants from the simulator group dropped out after randomization and were not included in the final analyses, increasing the risk of attrition bias. In a retrospective study, Pokroy et al. [29] found that residents introduced to the Eyesi Surgical Simulator plus wet-lab training (n = 10) had faster operating times and fewer surgeries of > 40 min duration (32.4% vs 42.3%, p = 0.005) in surgical cases 10–50 compared to a wet-lab-only group (n = 10). However, no difference was found in complication rate. Daly et al. [30] compared simulation-based cataract training on the Eyesi Surgical Simulator to traditional wet-lab training performed on silicone eyes in a randomized study. As in the study by Pokroy et al. [29], no difference in overall performance was found between the groups. However, the simulator group took significantly longer than the wet-lab group to complete their first continuous curvilinear capsulorhexis (9.48 min vs. 4.70 min, p = 0.038). According to the authors, this could be due to the wet-lab group being more familiar with the operating room equipment. Limitations were a small sample size (n = 21) and insufficiently described randomization, leading to a risk of bias [30]. Lopez-Beauchamp et al. [31] found that 3rd-year residents without access to an Eyesi Surgical Simulator were 6.7 min slower (p = 0.0001) and predicted to need 17% more time to perform uncomplicated phacoemulsification in the operating room than residents with access to simulation-based training (p = 0.0001). Accordingly, Belyea et al. [32] found that residents training on the Eyesi Surgical Simulator achieved a reduction in time to perform phacoemulsification with reduced power. More phacoemulsification procedures were done per resident in the simulator group (16.8, range 5–45) compared to the non-simulator group (12.2, range 4–28).
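To make the before/after complication rates reported in the retrospective studies easier to compare, the percentages can be converted into absolute and relative reductions. The following is a minimal sketch of that arithmetic; the function name `risk_reduction` and the dictionary labels are illustrative choices, not taken from the cited studies, and only the percentages quoted above [25,26,27] are used as input. Because these studies were retrospective, the computed values only describe the reported rates and do not demonstrate a causal effect.

```python
def risk_reduction(before_pct, after_pct):
    """Return (absolute reduction in percentage points, relative reduction in percent)."""
    if before_pct <= 0:
        raise ValueError("the 'before' rate must be positive")
    absolute = before_pct - after_pct
    relative = 100 * absolute / before_pct
    return round(absolute, 2), round(relative, 1)


# Rates (in percent) as quoted in the text; labels are illustrative only.
reported_rates = {
    "overall intraoperative complications [25]": (27.14, 12.86),
    "errant continuous curvilinear capsulorhexis [26]": (15.7, 5.0),
    "posterior capsule rupture [27]": (4.2, 2.6),
}

for label, (before, after) in reported_rates.items():
    absolute, relative = risk_reduction(before, after)
    print(f"{label}: -{absolute} percentage points ({relative}% relative reduction)")
```

Reporting both figures is a deliberate choice: a large relative reduction can correspond to a small absolute change when the baseline rate is already low, as with posterior capsule rupture.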

Is Simulation-Based Cataract Surgery Effective?

In a Cochrane review from 2021, Lin et al. [16] assessed the impact of virtual reality simulation-based cataract surgery training on the operating performance of postgraduate ophthalmology trainees, measured by intraoperative complications among other outcomes. The review contained some of the aforementioned studies [7, 18, 30]. No studies had masked participants or personnel, and 83.3% of studies did not describe the randomization process. All studies had at least three domains at high or unclear risk of bias. Hence, the authors concluded that the Eyesi Surgical Simulator might have a positive effect on intraoperative complications among residents, but the included studies were not strong enough to be certain. Thus, potential risk of bias must be considered. Simulation-based cataract surgery, and the Eyesi Surgical Simulator in particular, seems to be especially popular for surgical training. With systematic training, it seems effective in bringing down intraoperative complications and operating times among ophthalmology residents, but many of the studies had a retrospective design from which causality cannot be determined. Furthermore, construct validity was established across different procedures, supporting it as a valid training tool.

Simulation-Based Vitreoretinal Surgery

Like simulation-based cataract surgery, simulation-based vitreoretinal surgery was investigated in several studies. In a pilot study, Yeh et al. [33] developed and evaluated their own training module using a non-virtual reality simulator, the VitRet Eye Model (Philips Studio, Bristol, UK), with artificial tissue. After watching an instructional video twice, trainees underwent the training module. Performances were evaluated by a masked reviewer using the self-made Casey Eye Institute Vitrectomy Indices Tool for Skills Assessment (CEIVITS) score. Linear regression analysis found a positive correlation between prior experience in vitreoretinal surgery and the total CEIVITS score, the CEIVITS score for infusion line placement and the CEIVITS score for air-fluid exchange. A correlation between wound closure and sclerotomy wound construction was also found using Pearson correlation. Participating residents reported that the training module was helpful in education, aided understanding and adequately mimicked basic steps in vitreoretinal surgery, but the model's artificial tissue did not receive predominantly positive feedback. Limitations include a small sample size (n = 13), of whom only nine participants responded to the questionnaire. Furthermore, a skilled ophthalmologist provided help as needed, potentially resulting in false-positive correlations.

In a study by Solverson et al. [34], participants completed five iterations on a navigation module (Navigation Training Level 1–4) on the Eyesi Surgical Simulator. The authors indicated that the simulator could discriminate residents (n = 18) with 0.2 (± 0.4) years of experience from experts (n = 7) with 9.7 (± 3.7) years of experience in basic navigation skills. Furthermore, the study suggested that basic navigation skills could be rapidly obtained, as a significant difference in ‘total error’ was found at baseline (24.1 vs. 11.3, p < 0.05) but not after five iterations (10.2 vs 8.4, p > 0.05). While that study did not use a validity framework, construct validity was established in several vitreoretinal training modules on the Eyesi Surgical Simulator by Cissé et al. [9] (Navigation Training Level 1, Forceps Training Level 1, Epiretinal Membrane Peeling Level 1 and Level 2) and Vergmann et al. [3] (Navigation Training Level 2, Bimanual Training Level 3, Posterior Hyaloid Training Level 3 and Internal Limiting Membrane Peeling Level 3; Fig. 1), where each level was selected in collaboration with a vitreoretinal surgeon [3]. Both studies had small sample sizes, and Vergmann et al. [3] also included medical students, whereas including residents only might have been more clinically relevant. Yeh et al. [33] did not test their self-developed training module on residents against experts, unlike Vergmann et al. [3], Cissé et al. [9] and Solverson et al. [34], who all found training modules able to differentiate novices from expert surgeons. Jaud et al. [35] aimed to develop a test for assessment of vitreoretinal surgical skills on the Eyesi Surgical Simulator and used the validation studies by Vergmann et al. [3] and Cissé et al. [9] as a basis for the design of their test. The authors found that expert surgeons outperformed senior residents and junior residents across six out of seven training modules (Navigation Training Level 2, Forceps Training Level 1, Bimanual Training Level 4, Posterior Hyaloid Level 3, Epiretinal Membrane Peeling Level 2, Internal Limiting Membrane Peeling Level 3) on the Eyesi Surgical Simulator. A pass/fail score of 596 was determined as the point where the distributions of overall scores for novice surgeons and expert surgeons met. These results might be important for certification before entering the operating room. However, limitations were a small sample size (n = 23) and the inclusion of three surgeons with prior experience in simulation-based surgery from a previous study. Yet, as a strength, Messick’s framework for assessment of validity was used.
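A pass/fail score defined as the point where the novice and expert score distributions meet can be illustrated with a short calculation. The sketch below is one possible reading of that idea and is not the procedure of the cited study: it assumes each group's scores are approximately normally distributed, fits a normal distribution to each group, and finds the score between the two means where the fitted densities are equal. The function names and all scores are hypothetical and chosen only for illustration.

```python
from statistics import NormalDist, mean, stdev


def contrasting_groups_cutoff(novice_scores, expert_scores, tol=1e-6):
    """Score between the group means where the two fitted normal densities are equal."""
    novice = NormalDist(mean(novice_scores), stdev(novice_scores))
    expert = NormalDist(mean(expert_scores), stdev(expert_scores))
    lo, hi = novice.mean, expert.mean
    if lo >= hi:
        raise ValueError("expected the expert mean to exceed the novice mean")

    def density_gap(score):
        # Positive where the novice density dominates, negative where the expert one does.
        return novice.pdf(score) - expert.pdf(score)

    if density_gap(lo) <= 0 or density_gap(hi) >= 0:
        raise ValueError("fitted densities do not cross between the group means")

    # Bisection: narrow the interval until the crossing point is located within tol.
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if density_gap(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def misclassified(novice_scores, expert_scores, cutoff):
    """Return (novices at or above the cutoff, experts below the cutoff)."""
    false_positives = sum(score >= cutoff for score in novice_scores)
    false_negatives = sum(score < cutoff for score in expert_scores)
    return false_positives, false_negatives


# Hypothetical overall simulator scores, NOT data from any cited study.
novices = [420, 455, 480, 510, 530, 560]
experts = [610, 640, 655, 670, 700, 720]

cutoff = contrasting_groups_cutoff(novices, experts)
print(f"pass/fail cutoff: {cutoff:.1f}")
print("false positives, false negatives:", misclassified(novices, experts, cutoff))
```

With small groups, as in the studies reviewed here, the fitted distributions and therefore the cutoff are imprecise, which is one reason to count false positives and false negatives alongside the cutoff itself.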

Fig. 1 Still images of each training module. Pictures 1–3 are the three modules for basic training and involve Navigation level 2 (1), Forceps Training level 5 (2) and Bimanual Training level 3 (3). Pictures 4–6 are the more procedural modules and involve Laser Coagulation level 3 (4), Posterior Hyaloid level 3 (5) and Internal Limiting Membrane (ILM) Peeling level 3 (6). Permission to use the image and legend was obtained directly from the copyright holder [3].

In a randomized trial, Thomsen et al. [36] questioned whether skill transfer could be found between simulation-based cataract surgery and simulation-based vitreoretinal surgery in residents (n = 12). The notion was that training time for one procedure could be reduced if trainees were already proficient in another. However, no significant skill transfer from cataract surgery to vitreoretinal procedures in a virtual reality training environment was found. Additionally, in another randomized study, Petersen et al. [37] found no skill transfer from abstract training modules to procedure-specific training modules among medical students (n = 43), suggesting that trainees should skip directly to procedure-specific simulation-based training modules. However, while 68 participants were randomized, 25 participants dropped out and intention-to-treat analyses were not applied, introducing a risk of attrition bias. Furthermore, clinical relevance might have been higher had ophthalmology residents been included. In contrast, Deuchler et al. [38] found that a warm-up session on the Eyesi Surgical Simulator may improve performance in the operating room in vitreoretinal surgery, even for experienced surgeons.

Is Simulation-Based Vitreoretinal Surgery Effective?

Many training modules on the Eyesi Surgical Simulator have been validated in vitreoretinal simulation-based surgical training for ophthalmology residents. Furthermore, vitreoretinal simulation-based training has potential for training ophthalmology residents before they perform surgery on real patients in the operating room. A 2019 systematic review, which included seven studies on simulation-based training in vitreoretinal surgery among novice surgeons, concluded that simulation-based training may be useful for teaching a skill-demanding procedure like vitreoretinal surgery. However, the authors were unable to reject a potential risk of bias, primarily due to lack of blinding of outcome assessment in five out of seven studies and missing information on reporting in six out of seven studies. The review contained some of the studies mentioned above [3, 33, 34]. Furthermore, it was proposed that future studies should focus on establishing concurrent validity before focusing on transfer of skills to the operating room [15]. Since this review, more quality studies have worked on evidence-based vitreoretinal simulation training through implementation of a validity framework. Future studies investigating transfer of skills, especially for residents in the operating room, would be of interest.

Other Simulation-Based Procedures

Besides simulation-based cataract and vitreoretinal surgery, other procedures have also been evaluated.

In a randomized, controlled, investigator-blinded multi-centre study, Dean et al. [39] aimed to evaluate whether an intense 5-day simulation-based training course improved simulated glaucoma surgery (trabeculectomy) competence at 3 months, as assessed on the Ophthalmic Simulation Surgical Competency Assessment Rubric (SIM-OSSCAR) by two masked graders. Synthetic simulation eyes were used (PS-OS-010, Philips Studio, Bristol, UK). No difference was found at baseline. However, at 3 months, intention-to-treat analysis revealed that the intervention group (n = 23) differed significantly from controls (n = 26) on the SIM-OSSCAR by 20.6 points (95% CI 18.3–22.9, p < 0.001) and maintained a difference at 12 months of 17.0 points (95% CI 14.8–19.4, p < 0.001). At 12 months, controls received the intervention, which neutralized the inter-group difference at 15 months. Additionally, the intervention group rated themselves more confident in glaucoma surgery at 3 months compared to controls. This study suggests that simulation-based training in glaucoma surgery is useful for ophthalmology residents. However, while the study was strengthened by its randomized design and intention-to-treat analysis, validated training modules were not used. Thus, validity needs to be established for further simulation-based training in glaucoma.

Given the importance of mastering ophthalmoscopy in ophthalmology training, it was examined in several studies. Rai et al. [1] investigated simulation-based augmented reality ophthalmoscopy in a prospective randomized controlled trial. Residents allocated to augmented reality simulation (group 2, n = 13) had a better total score (better at localizing structures and maintaining view) and performance score (score per time elapsed) compared to participants with no simulator training (group 1, n = 15). The difference was neutralized after controls received simulation training. The results suggest that augmented reality simulator training could help ophthalmology residents achieve skills in ophthalmoscopy. The authors argued that construct validity was established, as the simulator could discriminate fellows from group 1 residents (no training). However, this must be interpreted with caution, as no validity framework was used and only three fellows were included. Nevertheless, the results might add clinical relevance, as ophthalmoscopy is a crucial skill for residents to master. Additionally, Borgersen et al. [40] showed that the Eyesi Direct Ophthalmoscope Simulator (v1.4, VRmagic, Mannheim, Germany) could discriminate medical students (n = 13) from experts (n = 8) when investigating four modules. A pass/fail score of 2615 was constructed, where the mean sum of the expert score meets the novice score. While the sample size was rather low (n = 21), Messick’s framework for validation was used, which strengthens the evidence base of the study. Jørgensen et al. [41] aimed to develop and gather validity evidence for a multiple-choice questionnaire (MCQ)-based theoretical test in direct ophthalmoscopy and used Messick’s validity framework for evaluation of validity. An MCQ with 60 items was established through expert interviews and elimination rounds. The authors found the test able to discriminate medical students from ophthalmology experts (p < 0.001). Additionally, a pass/fail score of 50 correct answers was found to be the point where no students passed and no experts failed. This may be important for ensuring knowledge among novices before they perform direct ophthalmoscopy.

An observational study found that a virtual reality application could improve residents' accuracy and performance skills in diagnosing strabismus. Accuracy and performance of residents were rated by a strabismus specialist evaluating video recordings of strabismus examinations before and after 30 sessions. However, the small sample size (n = 14) and lack of controls could lead to a potential risk of bias [42]. Simulation-based strabismus training is interesting because strabismus can be difficult to diagnose, and important because of the potential consequences for patients who might be offered either the wrong surgery or incorrect prism glasses. Hence, further validation of simulation-based strabismus education in ophthalmology residents is needed.

Simpson et al. [43] designed and validated an eye model capable of simulating laser peripheral iridotomy, posterior capsulotomy and retinopexy, comparing a non-experienced group (n = 6) to an experienced group comprising ophthalmology fellows and staff (n = 7). In retinopexy, graded by a blinded staff ophthalmologist on a predetermined retinopexy grading scheme, the experienced group showed more complete laser results than the non-experienced group (p = 0.039). For posterior capsulotomy, the non-experienced group used significantly more shots (p = 0.044) and had more lens pits (p = 0.047). The study suggested that the eye model could be used to differentiate between non-experienced and experienced participants and could serve as an objective indicator of laser surgical skills. Laser procedures were assessed in a blinded manner, which strengthens the results. However, the sample size was relatively low (n = 13), and results lacked 95% confidence intervals, making them less certain. While the idea certainly is interesting, further testing of the method is needed.

Zhao et al. [44] aimed to assess a reproducible mannequin-based surgical simulator [Human Eyelid Analog Device for Surgical Training and Skills Reinforcement in Trichiasis (HEAD START, Ho’s Art LLC, Yadkinville, NC)] for teaching margin-involved eyelid laceration repair to ophthalmology residents. Six residents performed a minimum of one video-recorded session for validation by blinded graders with a standardized grading system. A questionnaire regarding comfort with eyelid laceration repair was answered before simulation (n = 10) and after simulation (n = 6). Comfort was rated higher on a Likert scale after simulation (4 vs. 1, p = 0.02). However, while residents rated the simulator superior to fruit peels, surgical skill boards and pig feet (p = 0.03), it was rated inferior to the operating room (p = 0.02). The small sample size is an important limitation of the study, and larger studies using a framework for validation would be interesting and relevant, especially as minor eyelid procedures are already deemed relevant for integration into simulation-based training [14].

Summary and Future Directions of Simulation-Based Ophthalmology

This review provides an overview of studies that examine simulation in ophthalmology (Table 1). From this narrative review, it can be postulated that simulation-based training in general is an effective tool for teaching skill-demanding surgical procedures and more fundamental skills to ophthalmology residents. This includes training of skill-demanding procedures associated with reduced complication rates and faster operating times in the operating room.

With construct validity established for several procedures, simulation-based cataract and vitreoretinal surgery remain feasible applications for training ophthalmology residents and enhancing patient safety, with the Eyesi Surgical Simulator in particular having been evaluated. However, more studies examining transfer of skills from the simulator to the operating room would be of interest. Simulation-based glaucoma surgery and other simulation-based training modalities such as ophthalmoscopy, external-eye surgery, laser treatment and diagnosis of strabismus show promising results, although validity evidence remains insufficient. Previously, it was presumed that simulation-based tools lacked validity [17], and future studies should aim to establish construct validity across more procedures and levels of skill. This could be achieved by applying the Messick framework to ensure simulation-based education is properly evidence based [45, 46]. Furthermore, more randomized controlled trials are needed to verify potential effects for newer simulation-based modalities. Finally, a reduced complication rate from simulation-based training is central for both patients’ safety and surgeons’ self-confidence. However, one may ask whether a reduction in complication rates also reduces the opportunity for training in handling complications. Previously, a study reported that only 9.1% of trainees (n = 22) had confidence in handling complications during posterior capsule rupture on their own [47], indicating a dilemma to address in future studies.

In conclusion, simulation-based training is becoming an established educational tool for ophthalmology residents. It allows them to master skill-demanding procedures in a safer, more cost-effective and more time-effective learning environment, ultimately resulting in better patient handling.

References

Rai AS, Rai AS, Mavrikakis E, Lam WC. Teaching binocular indirect ophthalmoscopy to novice residents using an augmented reality simulator. Can J Ophthalmol. 2017;52(5):430–4.

Thomsen AS, Bach-Holm D, Kjærbo H, Højgaard-Olsen K, Subhi Y, Saleh GM, et al. Operating room performance improves after proficiency-based virtual reality cataract surgery training. Ophthalmology. 2017;124(4):524–31.

Vergmann AS, Vestergaard AH, Grauslund J. Virtual vitreoretinal surgery: validation of a training programme. Acta Ophthalmol. 2017;95(1):60–5.

Ng DS, Sun Z, Young AL, Ko ST, Lok JK, Lai TY, et al. Impact of virtual reality simulation on learning barriers of phacoemulsification perceived by residents. Clin Ophthalmol. 2018;12:885–93.

Landis ZC, Fileta J, Scott IU, Kunselman A, Sassani JW. Impact of surgical simulator training on patients’ perceptions of resident involvement in cataract surgery. Invest Ophthalmol Vis Sci. 2015;56(7):130.

Lowry EA, Porco TC, Naseri A. Cost analysis of virtual-reality phacoemulsification simulation in ophthalmology training programs. J Cataract Refract Surg. 2013;39(10):1616–7.

Nair AG, Ahiwalay C, Bacchav AE, Sheth T, Lansingh VC, Vedula SS, et al. Effectiveness of simulation-based training for manual small incision cataract surgery among novice surgeons: a randomized controlled trial. Sci Rep. 2021;11(1):10945.

Sikder S, Luo J, Banerjee PP, Luciano C, Kania P, Song JC, et al. The use of a virtual reality surgical simulator for cataract surgical skill assessment with 6 months of intervening operating room experience. Clin Ophthalmol. 2015;9:141–9.

Cissé C, Angioi K, Luc A, Berrod JP, Conart JB. EYESI surgical simulator: validity evidence of the vitreoretinal modules. Acta Ophthalmol. 2019;97(2):e277–82.

Mahr MA, Hodge DO. Construct validity of anterior segment anti-tremor and forceps surgical simulator training modules: attending versus resident surgeon performance. J Cataract Refract Surg. 2008;34(6):980–5.

Thomsen AS, Kiilgaard JF, Kjaerbo H, la Cour M, Konge L. Simulation-based certification for cataract surgery. Acta Ophthalmol. 2015;93(5):416–21.

Privett B, Greenlee E, Rogers G, Oetting TA. Construct validity of a surgical simulator as a valid model for capsulorhexis training. J Cataract Refract Surg. 2010;36(11):1835–8.

Le TD, Adatia FA, Lam WC. Virtual reality ophthalmic surgical simulation as a feasible training and assessment tool: results of a multicentre study. Can J Ophthalmol. 2011;46(1):56–60.

Thomsen ASS, la Cour M, Paltved C, Lindorff-Larsen KG, Nielsen BU, Konge L, et al. Consensus on procedures to include in a simulation-based curriculum in ophthalmology: a national Delphi study. Acta Ophthalmol. 2018;96(5):519–27.

Rasmussen RC, Grauslund J, Vergmann AS. Simulation training in vitreoretinal surgery: a systematic review. BMC Ophthalmol. 2019;19(1):90.

Lin JC, Yu Z, Scott IU, Greenberg PB. Virtual reality training for cataract surgery operating performance in ophthalmology trainees. Cochrane Database Syst Rev. 2021;12(12):CD014953.

Thomsen AS, Subhi Y, Kiilgaard JF, la Cour M, Konge L. Update on simulation-based surgical training and assessment in ophthalmology: a systematic review. Ophthalmology. 2015;122(6):1111-30.e1.

Feudner EM, Engel C, Neuhann IM, Petermeier K, Bartz-Schmidt KU, Szurman P. Virtual reality training improves wet-lab performance of capsulorhexis: results of a randomized, controlled study. Graefes Arch Clin Exp Ophthalmol. 2009;247(7):955–63.

Saleh GM, Theodoraki K, Gillan S, Sullivan P, O’Sullivan F, Hussain B, et al. The development of a virtual reality training programme for ophthalmology: repeatability and reproducibility (part of the International Forum for Ophthalmic Simulation Studies). Eye. 2013;27(11):1269–74.

Bergqvist J, Person A, Vestergaard A, Grauslund J. Establishment of a validated training programme on the Eyesi cataract simulator. A prospective randomized study. Acta Ophthalmol. 2014;92(7):629–34.

Saleh GM, Lamparter J, Sullivan PM, O’Sullivan F, Hussain B, Athanasiadis I, et al. The international forum of ophthalmic simulation: developing a virtual reality training curriculum for ophthalmology. Br J Ophthalmol. 2013;97(6):789–92.

Roohipoor R, Yaseri M, Teymourpour A, Kloek C, Miller JB, Loewenstein JI. Early performance on an eye surgery simulator predicts subsequent resident surgical performance. J Surg Educ. 2017;74(6):1105–15.

Dean WH, Gichuhi S, Buchan JC, Makupa W, Mukome A, Otiti-Sengeri J, et al. Intense simulation-based surgical education for manual small-incision cataract surgery: the ophthalmic learning and improvement initiative in cataract surgery randomized clinical trial in Kenya, Tanzania, Uganda, and Zimbabwe. JAMA Ophthalmol. 2021;139(1):9–15.

Dean WH, Murray NL, Buchan JC, Golnik K, Kim MJ, Burton MJ, et al. Ophthalmic simulated surgical competency assessment rubric for manual small-incision cataract surgery. J Cataract Refract Surg. 2019;45(9):1252–7.

Lucas L, Schellini SA, Lottelli AC. Complications in the first 10 phacoemulsification cataract surgeries with and without prior simulator training. Arq Bras Oftalmol. 2019;82(4):289–94.

McCannel CA, Reed DC, Goldman DR. Ophthalmic surgery simulator training improves resident performance of capsulorhexis in the operating room. Ophthalmology. 2013;120(12):2456–61.

Ferris JD, Donachie PH, Johnston RL, Barnes B, Olaitan M, Sparrow JM. Royal College of Ophthalmologists' National Ophthalmology Database study of cataract surgery: report 6. The impact of EyeSi virtual reality training on complications rates of cataract surgery performed by first and second year trainees. Br J Ophthalmol. 2020;104(3):324–9.

Staropoli PC, Gregori NZ, Junk AK, Galor A, Goldhardt R, Goldhagen BE, et al. Surgical simulation training reduces intraoperative cataract surgery complications among residents. Simul Healthc. 2018;13(1):11–5.

Pokroy R, Du E, Alzaga A, Khodadadeh S, Steen D, Bachynski B, et al. Impact of simulator training on resident cataract surgery. Graefes Arch Clin Exp Ophthalmol. 2013;251(3):777–81.

Daly MK, Gonzalez E, Siracuse-Lee D, Legutko PA. Efficacy of surgical simulator training versus traditional wet-lab training on operating room performance of ophthalmology residents during the capsulorhexis in cataract surgery. J Cataract Refract Surg. 2013;39(11):1734–41.

Lopez-Beauchamp C, Singh GA, Shin SY, Magone MT. Surgical simulator training reduces operative times in resident surgeons learning phacoemulsification cataract surgery. Am J Ophthalmol Case Rep. 2020;17:100576.

Belyea DA, Brown SE, Rajjoub LZ. Influence of surgery simulator training on ophthalmology resident phacoemulsification performance. J Cataract Refract Surg. 2011;37(10):1756–61.

Yeh S, Chan-Kai BT, Lauer AK. Basic training module for vitreoretinal surgery and the Casey Eye Institute Vitrectomy Indices Tool for Skills Assessment. Clin Ophthalmol. 2011;5:1249–56.

Solverson DJ, Mazzoli RA, Raymond WR, Nelson ML, Hansen EA, Torres MF, et al. Virtual reality simulation in acquiring and differentiating basic ophthalmic microsurgical skills. Simul Healthc. 2009;4(2):98–103.

Jaud C, Salleron J, Cisse C, Angioi-Duprez K, Berrod JP, Conart JB. EyeSi Surgical Simulator: validation of a proficiency-based test for assessment of vitreoretinal surgical skills. Acta Ophthalmol. 2021;99(4):390–6.

Thomsen ASS, Kiilgaard JF, la Cour M, Brydges R, Konge L. Is there inter-procedural transfer of skills in intraocular surgery? A randomized controlled trial. Acta Ophthalmol. 2017;95(8):845–51.

Petersen SB, Vestergaard AH, Thomsen ASS, Konge L, la Cour M, Grauslund J, et al. Pretraining of basic skills on a virtual reality vitreoretinal simulator: a waste of time. Acta Ophthalmol. 2022;100(5):e1074–9.

Deuchler S, Wagner C, Singh P, Müller M, Al-Dwairi R, Benjilali R, et al. Clinical efficacy of simulated vitreoretinal surgery to prepare surgeons for the upcoming intervention in the operating room. PLoS ONE. 2016;11(3):e0150690.

Dean WH, Buchan J, Gichuhi S, Philippin H, Arunga S, Mukome A, et al. Simulation-based surgical education for glaucoma versus conventional training alone: the GLAucoma Simulated Surgery (GLASS) trial. A multicentre, multicountry, randomised controlled, investigator-masked educational intervention efficacy trial in Kenya, South Africa, Tanzania, Uganda and Zimbabwe. Br J Ophthalmol. 2022;106(6):863–9.

Borgersen NJ, Skou Thomsen AS, Konge L, Sørensen TL, Subhi Y. Virtual reality-based proficiency test in direct ophthalmoscopy. Acta Ophthalmol. 2018;96(2):e259–61.

Jørgensen M, Savran MM, Christakopoulos C, Bek T, Grauslund J, Toft PB, et al. Development and validation of a multiple-choice questionnaire-based theoretical test in direct ophthalmoscopy. Acta Ophthalmol. 2019;97(7):700–6.

Moon HS, Yoon HJ, Park SW, Kim CY, Jeong MS, Lim SM, et al. Usefulness of virtual reality-based training to diagnose strabismus. Sci Rep. 2021;11(1).

Simpson SM, Schweitzer KD, Johnson DE. Design and validation of a training simulator for laser capsulotomy, peripheral iridotomy, and retinopexy. Ophthalm Surg Lasers Imaging Retina. 2017;48(1):56–61.

Zhao J, Ahmad M, Gower EW, Fu R, Woreta FA, Merbs SL. Evaluation and implementation of a mannequin-based surgical simulator for margin-involving eyelid laceration repair—a pilot study. BMC Med Educ. 2021;21(1):170.

Cook DA, Brydges R, Zendejas B, Hamstra SJ, Hatala R. Technology-enhanced simulation to assess health professionals: a systematic review of validity evidence, research methods, and reporting quality. Acad Med. 2013;88(6):872–83.

Borgersen NJ, Naur TMH, Sørensen SMD, Bjerrum F, Konge L, Subhi Y, et al. Gathering validity evidence for surgical simulation: a systematic review. Ann Surg. 2018;267(6):1063–8.

Turnbull AM, Lash SC. Confidence of ophthalmology specialist trainees in the management of posterior capsule rupture and vitreous loss. Eye (Lond). 2016;30(7):943–8.

Acknowledgements

Funding

No funding or sponsorship was received for this study or publication of this article.

Author Contributions

Simon Joel Lowater and Anna Stage Vergmann devised the structure of the manuscript. Simon Joel Lowater screened the databases for relevant material and sent relevant articles to Anna Stage Vergmann for assessment. Both Simon Joel Lowater and Anna Stage Vergmann assessed articles for relevance. Simon Joel Lowater wrote most of the manuscript, except for most of the introduction, which was written primarily by Anna Stage Vergmann. The manuscript was read, critically reviewed and edited for intellectual content through several rounds by Anna Stage Vergmann and Jakob Grauslund. The final version of the manuscript was approved by all three authors, each of whom contributed a substantial amount of work.

List of Investigators

Simon Joel Lowater, Anna Stage Vergmann, Jakob Grauslund.

Disclosures

Simon Joel Lowater, Anna Stage Vergmann and Jakob Grauslund have no conflicts of interest to declare.

Compliance with Ethics Guidelines

This article is based on previously conducted studies and does not contain any new studies with human participants or animals performed by any of the authors.

Data Availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Lowater, S.J., Grauslund, J. & Vergmann, A.S. Modern Educational Simulation-Based Tools Among Residents of Ophthalmology: A Narrative Review. Ophthalmol Ther 11, 1961–1974 (2022). https://doi.org/10.1007/s40123-022-00559-y
