Abstract

Introduction

The aim of this study was to investigate the feasibility of generating synthesized ultrasound biomicroscopy (UBM) images from swept-source anterior segment optical coherence tomography (SS-ASOCT) images using a cycle-consistent generative adversarial network framework (CycleGAN) for iridociliary assessment in a cohort presenting for primary angle-closure screening.

Methods

The CycleGAN architecture, trained on the SS-ASOCT dataset from the Department of Ophthalmology, Xinhua Hospital, was adopted to synthesize high-resolution UBM images. The performance of the CycleGAN model was further tested in two separate datasets using synthetic UBM images from two different ASOCT modalities (in-distribution and out-of-distribution). Glaucoma specialists assessed the image quality of real and synthetic images, and their gradings were compared. UBM measurements, including anterior chamber and iridociliary parameters, were compared between real and synthetic UBM images. Intra-class correlation coefficients, coefficients of variation, and Bland–Altman plots were used to assess the level of agreement. The Fréchet Inception Distance (FID) was measured to evaluate the quality of the synthetic images.

Results

The whole training dataset consisted of anterior chamber angle images, of which 4037 were obtained by SS-ASOCT and 2206 were obtained by UBM. The image quality of real versus synthetic UBM images was similar as assessed by two glaucoma specialists. The Bland–Altman analysis also suggested high consistency between measurements of real and synthetic UBM images. In addition, there was fair to excellent agreement between real and synthetic UBM measurements for the in-distribution dataset (ICC range 0.48–0.97) and the out-of-distribution dataset (ICC range 0.52–0.86). The FID was 21.3 and 24.1 for the synthetic UBM images from the in-distribution and out-of-distribution datasets, respectively.

Conclusion

We developed a CycleGAN model to translate non-contact SS-ASOCT images into UBM images. The CycleGAN synthetic UBM images showed fair to excellent reproducibility when compared with real UBM images. Our results suggest that the CycleGAN technique is a promising tool for evaluating the iridociliary region and anterior chamber with an alternative non-contact method.

Why carry out this study?

Ultrasound biomicroscopy (UBM) and anterior segment optical coherence tomography (ASOCT) are the most widely used instruments to objectively visualize and evaluate anterior segment parameters. Both demonstrate excellent repeatability and reproducibility, but each has its own specific limitations.

A new technology that combines the advantages of both devices is necessary; such a tool might have the potential to become the gold standard method for measuring anterior segment parameters.

We have previously shown that a generative adversarial network (GAN) framework of synthetic OCT images provides good quality images for clinical evaluation and can also be used for developing deep learning algorithms. Recently, the cycle-consistent generative adversarial network framework (CycleGAN) was introduced to generate images from different imaging modalities.

The aim of this study was to investigate the feasibility of generating synthesized UBM from swept-source anterior segment optical coherence tomography (SS-ASOCT) using CycleGAN for iridociliary assessment.

What was learned from the study?

Our results showed that there was good to excellent correlation of anterior segment parameters measured from the synthetic images and those from real UBM images.

The CycleGAN-based deep learning technique provides a promising strategy to assess the iridociliary region using easy-to-use and non-contact methods.

Introduction

Precise ocular biometry is crucial for the diagnosis and treatment of ocular disorders. For anterior ocular biometric measurements, newer imaging instruments have been developed to objectively visualize and evaluate anterior segment parameters instead of traditional subjective techniques, such as slit-lamp examination and gonioscopy. Among these devices, ultrasound biomicroscopy (UBM) and anterior segment optical coherence tomography (ASOCT) are the most widely used imaging modalities. UBM allows high-resolution visualization of the anterior segment and angle structures at an ultrasonic frequency of 35–100 MHz, providing additional information on the posterior chamber not otherwise available through clinical examination [1]. ASOCT is a computerized imaging technology providing optical cross-sectional images of ocular structures. When updated with the newer technology of swept-source OCT (SS-OCT), it provides a larger number of non-contact, higher-resolution images, more detailed information, and precise parameters of the angle, cornea, iris, and anterior chamber volume; in addition, the fast scan rate effectively decreases motion artifacts [2].

Research has demonstrated excellent repeatability and reproducibility of UBM and SS-ASOCT [3,4,5]. However, despite their high value as diagnostic tools, both techniques have limitations. UBM is a contact, non-invasive method that takes a relatively long time, and capturing and evaluating diagnostic images requires great skill and experience on the part of the operator. SS-ASOCT is based on an optical principle. Since the examining beam cannot penetrate the iris, certain structures in the posterior chamber, such as ciliary processes, zonule fibers, and cysts or intraocular foreign bodies behind the iris, cannot be captured and imaged. Should a new technological method be developed that combines the advantages of both devices, namely, one that automatically captures non-contact high-resolution images of the anterior segment and angle structures with posterior chamber information, as well as unique anterior chamber parameters under rapid scan, it might have the potential to become the gold standard method for measuring the anterior segment parameters.

The recent development of deep learning methods, especially domain adaptation using generative adversarial networks (GANs) [6], has attracted much interest in the field of medical imaging analysis. Several studies have demonstrated the capability of GANs to generate synthetic computed tomography (CT) or magnetic resonance imaging (MRI) images of prostate [7], liver [8], brain [9], and head and neck cancer [10]. We have previously shown that GAN-generated synthetic OCT images have good quality for clinical evaluation and can also be used for developing deep learning algorithms [11,12,13]. More recently, a cycle-consistent generative adversarial network framework (CycleGAN) was introduced to generate images from different imaging modalities. For example, several researchers have demonstrated a CycleGAN-based domain transfer between CT and MRI images [14] and between traditional retinal fundus photographs and ultra-widefield images [15], among others. However, cross-modality image transfer between SS-ASOCT and UBM has not yet been reported. Inspired by this domain transfer, the aim of this study was to build a CycleGAN-based deep learning model for the domain transfer from SS-ASOCT to UBM. We also conducted experiments to demonstrate the effectiveness of the CycleGAN method by qualitatively and quantitatively measuring iridociliary parameters from synthetic UBM using this technique with an independent dataset.

Methods

Study Design

We retrospectively collected the development datasets from the PACS (picture archiving and communication system) of the Department of Ophthalmology, Xinhua Hospital, Medicine School of Shanghai Jiaotong University. The raw development datasets consisted of 2314 SS-ASOCT images and 2417 UBM images from 163 and 612 patients, respectively, acquired between 16 September 2020 and 10 December 2021 (Fig. 1a). All subjects had visited the glaucoma clinic to be screened for primary angle-closure glaucoma, which is a major cause of blindness in the Chinese population. We excluded patients with a prior history of trauma, intraocular tumor, intraocular surgery, and laser iridoplasty. Eyes with gross iris atrophy and uveitis were also excluded. Details of SS-ASOCT and UBM imaging have been described previously [16, 17]. In brief, SS-ASOCT (model CASIA2; Tomey Corp., Nagoya, Japan) is a novel imaging modality which, compared to earlier generations of ASOCT, such as time-domain ASOCT (Visante OCT; Carl Zeiss Meditec, Dublin, CA, USA), has a faster scan speed (50,000 vs. 2000 A-scans/s), provides deeper imaging (11 vs. 8 mm), and has a higher resolution (2.4 vs. 10 µm). In the present study, SS-ASOCT images were obtained for all participants. A total of 128 two-dimensional cross-sectional SS-ASOCT images were acquired per scan. All UBM images were taken with a UBM device (model SW-3200L; Suoer Electronic Ltd., Tianjin, China). During the UBM examination, subjects lay supine under standardized dark conditions (illumination 60–70 lx). The probe was then placed perpendicular to the ocular surface, and images of all four quadrants were obtained. A single operator imaged all subjects. Only images that clearly visualized the scleral spur, drainage angle, ciliary body (for UBM images only), and a half chord of the iris were included in the analysis.

To evaluate the GAN model, we also collected two independent testing datasets as the in-distribution and out-of-distribution testing datasets, respectively. The in-distribution testing dataset included SS-ASOCT images from the same hospital acquired between 1 March 2021 and 4 May 2021. The out-of-distribution testing dataset consisted of time-domain ASOCT images acquired at a different hospital in our previous study. All subjects underwent an ophthalmic examination, including best-corrected visual acuity, refraction, slit-lamp examination, and gonioscopic anterior chamber angle (ACA) evaluation by a fellowship-trained glaucoma specialist (Goldmann 2-mirror lens; Haag-Streit AG, Bern, Switzerland). The inclusion and exclusion criteria were identical for the training and independent testing datasets. In the two testing datasets, ASOCT and UBM were performed at the nasal and temporal angles.

This study was approved by the Institutional Review Board of Xinhua Hospital, Medicine School of Shanghai Jiaotong University (identifier: XHEC-D-2021-114). The study was carried out in accordance with the ethical standards set by the Declaration of Helsinki of 1964 as amended in 2008. All SS-ASOCT and UBM images were anonymized and de-identified according to the Health Insurance Portability and Accountability Act Safe Harbor before analysis [18]. Informed consent was exempted by the IRB in the retrospectively collected development and validation datasets. In the prospectively collected testing dataset, informed consent for publication was obtained from all enrolled patients or from their guardians.

CycleGAN Architecture and Generation of Synthesized UBM Images

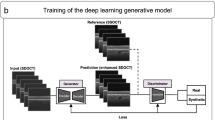

To generate the synthesized UBM images, we adopted a CycleGAN model to translate images from the SS-ASOCT domain to the UBM domain using unpaired data (Fig. 1b). The details of the CycleGAN architecture have been previously described by Zhu et al. In brief, CycleGAN is a type of unsupervised machine learning technique used for mapping between different image domains [19]. The whole neural network of CycleGAN consists of two generator-discriminator networks. Figure 1b shows the schematic of the CycleGAN model in the current study. The forward cycle learns a mapping of the first generator to translate SS-ASOCT images to synthetic UBM images and attempts to make the synthetic UBM images as realistic as real UBM images in order to fool the discriminator. The backward cycle, in turn, learns a mapping of the second generator to transform synthetic UBM images back into synthetic SS-ASOCT images and to make the synthetic SS-ASOCT images as realistic as the real SS-ASOCT images. This cycle consistency allows CycleGAN to capture the characteristics of the two image domains and automatically learn how these characteristics should be translated to transfer between the domains without any paired datasets [20].
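The cycle-consistency idea described above can be illustrated with a minimal NumPy sketch. It is not the code used in this study: the function names, the use of an L1 penalty, and the weight `lam=10.0` are assumptions taken from the common formulation of CycleGAN, and the two generators are passed in as plain callables.

```python
import numpy as np

def l1(a, b):
    """Mean absolute difference between two image arrays."""
    return float(np.mean(np.abs(a - b)))

def cycle_consistency_loss(real_oct, real_ubm, g_oct2ubm, g_ubm2oct, lam=10.0):
    """Penalize images that do not return to themselves after a round trip.

    g_oct2ubm and g_ubm2oct are hypothetical generator callables;
    lam is an assumed weighting factor, not a value reported in the text.
    """
    cycled_oct = g_ubm2oct(g_oct2ubm(real_oct))   # OCT -> UBM -> OCT
    cycled_ubm = g_oct2ubm(g_ubm2oct(real_ubm))   # UBM -> OCT -> UBM
    return lam * (l1(real_oct, cycled_oct) + l1(real_ubm, cycled_ubm))
```

In a full model this term would be added to the adversarial losses of the two generator-discriminator pairs; with perfect round trips it is zero.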

We adopted the CycleGAN code from the TensorFlow tutorial webpage (https://www.tensorflow.org/tutorials/generative/CycleGANs). Since each raw SS-ASOCT image contains two ACA regions, we split each image into two ACA images. Both SS-ASOCT and UBM ACA images were then rescaled to 256 × 256 pixels, normalized between − 1 and + 1, and augmented by random flip, cropping, and contrast perturbations. For training, we used an Adam optimizer with a learning rate of 0.0002 and a batch size of 1, as described on the same webpage. The model was trained on the Kaggle platform (www.kaggle.com), a free cloud service for deep learning research. The Kaggle cloud platform provides free access to NVIDIA Tesla P100 GPUs with 16 GB RAM.
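The preprocessing steps above can be sketched as follows. This is an illustrative NumPy version under stated assumptions, not the pipeline used in the study: the raw image is assumed to be an 8-bit grayscale array split at its horizontal midpoint, nearest-neighbor resampling stands in for whatever interpolation was actually used, and only the random flip is shown (cropping and contrast perturbation are omitted). All function names are hypothetical.

```python
import numpy as np

def split_aca(image):
    """Split a full-width scan into left and right halves, one ACA region each."""
    mid = image.shape[1] // 2
    return image[:, :mid], image[:, mid:]

def resize_nearest(image, size=256):
    """Rescale to size x size with nearest-neighbor sampling (an assumption)."""
    rows = np.arange(size) * image.shape[0] // size
    cols = np.arange(size) * image.shape[1] // size
    return image[rows][:, cols]

def normalize(image):
    """Map 8-bit intensities from [0, 255] to [-1, 1]."""
    return image.astype(np.float32) / 127.5 - 1.0

def random_flip(image, rng):
    """Flip horizontally with probability 0.5."""
    return image[:, ::-1] if rng.random() < 0.5 else image

def preprocess(raw, rng):
    """Return two augmented 256 x 256 ACA images from one raw scan."""
    return [random_flip(normalize(resize_nearest(half)), rng)
            for half in split_aca(raw)]
```

A call such as `preprocess(raw, np.random.default_rng(0))` returns a list of two arrays, one per angle.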

Evaluation of CycleGANs Synthetic UBM Images for Iridociliary Assessment

We sought to determine whether the CycleGAN synthetic UBM images could be utilized to assess the iridociliary region. First, a visual Turing test [21] was performed to evaluate the image quality of synthetic UBM images. To this end, we applied the CycleGAN to the independent testing dataset, and 30 synthetic UBM images were generated accordingly. All images were displayed on a laptop screen at 256 × 256 pixels. Two glaucoma specialists (HFY and YQH with more than 5 and 10 years of glaucoma experience, respectively) from the same center manually assessed the image quality of both the synthetic and real UBM images. To avoid confirmation bias, the glaucoma specialists were not told that there were any synthetic images among the UBM images. We have devised an image quality grading scheme for evaluating GAN synthetic ophthalmic images [11, 12] that includes: (1) visibility of the scleral spur, which was defined as the point where there was a change in the curvature of the inner surface of the angle wall [22]; (2) presence of continuity in the anterior segment structures, including the iridociliary region [11]; and (3) the absence of motion artifacts [23]. After the image quality evaluation, the two glaucoma specialists were asked to classify every image in that dataset as being either real or fake.

To quantitatively assess the synthetic UBM images in the in-distribution (SS-ASOCT) and out-of-distribution (time-domain ASOCT) datasets, images in the testing datasets were further analyzed using customized software (Anterior Segment Analysis Program [ASAP] [24]) by a single experienced observer (YW) who was masked to the clinical data. ASAP works as a plug-in of public domain software (ImageJ version 1.38x; http://imagej.nih.gov/ij) and is based on traditional image processing algorithms. The algorithm then automatically calculated the anterior angle and iridociliary parameters. The following parameters were measured as described previously: (1) ciliary body thickness at the point of the scleral spur (CT0) and at a distance of 1000 μm (CT1000) from the scleral spur [25]; (2) angle opening distance (AOD500) [26], which was calculated as the perpendicular distance measured from the trabecular meshwork at 500 μm anterior to the scleral spur; (3) iris thickness (IT500), measured at 500 μm from the scleral spur; (4) trabecular-ciliary process angle (TCA), measured as the angle between the corneal endothelium and the superior surface of the ciliary process; and (5) trabecular-ciliary process distance (TCPD), defined as the length of a line 500 μm from the scleral spur extending from the corneal endothelium, perpendicular through the posterior surface of the iris, to the ciliary process (Fig. 1c).

To further measure the performance of the CycleGAN model, we chose the Fréchet Inception Distance (FID) to evaluate the synthetic images. FID is a widely used metric for evaluating the distance between the distributions of synthetic data and real data by calculating the Wasserstein-2 distance in the feature space of an Inception-v3 network.
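For illustration, the Fréchet distance underlying FID can be computed from two sets of feature vectors as sketched below. This is a minimal NumPy version, not the implementation used in the study: the feature arrays are assumed to have been extracted beforehand (for example from an Inception-v3 pooling layer), with one row per image, and the function name is hypothetical. Each feature set is summarized by a Gaussian with mean μ and covariance Σ, and the distance is ||μ_r − μ_s||² + Tr(Σ_r + Σ_s − 2(Σ_r Σ_s)^{1/2}).

```python
import numpy as np

def frechet_distance(feats_real, feats_synth):
    """Frechet distance between Gaussians fitted to two feature sets.

    feats_real, feats_synth: arrays of shape (n_images, n_features),
    assumed to be precomputed network features (hypothetical inputs).
    """
    mu_r = feats_real.mean(axis=0)
    mu_s = feats_synth.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_s = np.cov(feats_synth, rowvar=False)
    # Tr((cov_r cov_s)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of cov_r @ cov_s, which are real and non-negative for
    # covariance matrices; clipping guards against small numerical noise.
    eigvals = np.linalg.eigvals(cov_r @ cov_s)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu_r - mu_s
    return float(diff @ diff + np.trace(cov_r) + np.trace(cov_s) - 2.0 * trace_sqrt)
```

Lower values indicate that the synthetic feature distribution lies closer to the real one; identical feature sets give a distance of zero up to rounding.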

Statistical Analysis

The quality of the synthetic and real UBM images was graded by two glaucoma specialists, and the gradings were compared using Pearson’s χ2 test. To evaluate the agreement between measurements from real and synthetic UBM images, Bland–Altman analysis (mean difference and limits of agreement [LoA]) was performed. The measurement correlations were calculated using intra-class correlation coefficients (ICCs) and coefficients of variation (CoVs). An ICC of < 0.4 indicated poor reproducibility, an ICC between 0.4 and 0.75 indicated fair to good reproducibility, and an ICC > 0.75 indicated excellent reproducibility. All statistical tests were performed using SPSS version 26.0 software (IBM Corp., Armonk, NY, USA).
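The agreement statistics described above can be sketched in a few lines of NumPy. This is an illustration only (the study itself used SPSS): the function names are hypothetical, the difference is assumed to be taken as synthetic minus real, and the 95% LoA use the conventional mean ± 1.96 SD of the paired differences.

```python
import numpy as np

def bland_altman(real, synthetic):
    """Return (mean difference, lower 95% LoA, upper 95% LoA) for paired data."""
    diff = np.asarray(synthetic, dtype=float) - np.asarray(real, dtype=float)
    mean_diff = diff.mean()
    sd = diff.std(ddof=1)  # sample standard deviation of the differences
    return mean_diff, mean_diff - 1.96 * sd, mean_diff + 1.96 * sd

def icc_category(icc):
    """Map an ICC value to the reproducibility bands used in this section."""
    if icc < 0.4:
        return "poor"
    if icc <= 0.75:
        return "fair to good"
    return "excellent"
```

For example, `icc_category(0.48)` falls in the "fair to good" band and `icc_category(0.97)` in the "excellent" band.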

Results

In this study, the CycleGAN model generated UBM images (pixel resolution of 256 × 256) from SS-ASOCT images. After image grading and preprocessing, the training dataset included 4037 SS-ASOCT ACA images and 2206 UBM ACA images. The processing time for one synthetic UBM image was approximately 1.1 s on a commercial laptop (Apple MacBook Air M1; Apple Inc., Cupertino, CA, USA). Examples of synthetic UBM images and the corresponding SS-ASOCT images are shown in Fig. 2. Overall, our approach is capable of generating realistic UBM images. The results of UBM image quality grading by two glaucoma specialists are shown in Table 1. Both glaucoma specialists graded synthetic UBM images as having approximately the same proportion of good quality images as the real UBM images (all p > 0.05, Pearson Chi-square). Table 2 summarizes the second part of the Turing test. Notably, the accuracy of the two specialists for distinguishing between real and fake images was 56.7% and 60%, respectively, which is only slightly better than chance.

In the in-distribution (SS-ASOCT) dataset, there was fair to excellent agreement between the measurements of real and synthetic UBM images of the anterior chamber and of iridociliary parameters (Table 3). ICC values were 0.74 (CT1000), 0.86 (CT0), 0.97 (AOD500), 0.48 (IT500), 0.81 (TCA) and 0.80 (TCPD). Figure 3a shows the Bland–Altman plot for the anterior chamber parameter (AOD500). The mean difference was − 0.06 mm (95% LoA − 0.11 to − 0.01 mm). The Bland–Altman plots for iridociliary parameters CT0, CT1000, TCA, and TCPD are shown in Fig. 3b, c, e, and f, respectively; the mean difference was − 0.10 mm (95% LoA − 0.34 to 0.12), − 0.01 mm (95% LoA − 0.12 to 0.09), − 5.1° (95% LoA − 21.91° to 11.71°), and 0.02 mm (95% LoA − 0.22 to 0.25), respectively. Figure 3d shows the Bland–Altman plot for the iris parameter (IT500); the mean difference was − 0.07 mm (95% LoA − 0.20 to 0.07 mm) (Fig. 3; Table 3). The CoVs were 7.7% (CT1000), 13.3% (CT0), 25.8% (AOD500), 16.5% (IT500), 8.4% (TCA), and 9.6% (TCPD).

Evaluation of agreement between real and synthetic UBM image measurements of anterior chamber and iridociliary parameters: Bland–Altman plot for AOD500 (a), CT0 (b), CT1000 (c), IT500 (d), TCA (e), and TCPD (f). AOD500 angle opening distance, CT0 ciliary body thickness at the point of the scleral spur, CT1000 ciliary body thickness at a distance of 1000 μm from the scleral spur, IT500 iris thickness measured at 500 μm from the scleral spur, TCA trabecular-ciliary process angle, TCPD trabecular-ciliary process distance; for more detail, see text

In the out-of-distribution (time-domain ASOCT) dataset, fair to excellent agreement was also observed between real and synthetic UBM image measurements of the anterior chamber and iridociliary parameters (Table 4). ICC values were 0.70 (CT1000), 0.82 (CT0), 0.86 (AOD500), 0.52 (IT500), 0.73 (TCA), and 0.81 (TCPD).

As no previous data are available on the question addressed in our study, we were unable to determine whether the FID measured in our experiment was good or not. Therefore, we added the synthetic ASOCT images from a previous study performed by our group as a reference. Relative to the real UBM images, the FID was 21.3, 24.1, and 102.8 for synthetic UBM images from the in-distribution dataset, synthetic UBM images from the out-of-distribution dataset, and synthetic ASOCT images, respectively (Fig. 4). The FIDs obtained for the in-distribution and out-of-distribution synthetic UBM images were thus much smaller than the FID obtained for synthetic images from a different anterior chamber imaging modality.

We also noted several cases of failure, as shown in Fig. 5. When an input SS-ASOCT image showed an open angle with a shallow anterior chamber, the CycleGAN was not able to generate the angle structure correctly.

Discussion

In this study, we proposed a CycleGAN-based deep learning technique for generating UBM images using SS-ASOCT images as the inputs. Our experiments suggest that realistic synthetic UBM images can be generated from SS-ASOCT images using CycleGANs. Moreover, the CycleGAN synthetic UBM images can reveal iridociliary structure. The measurements of iridociliary parameters from the CycleGAN synthetic UBM images showed fair to excellent reproducibility compared with those from the real UBM images. Although the measurements of anterior segment parameters obtained with real and synthetic UBM images cannot be considered interchangeable, there is fair to excellent correlation between them. The potential application of this technique is promising, as most clinical researchers still use UBM as the angle image modality in diagnosing angle-closure.

Assessment of angle structure is an essential part of diagnosing and determining the management of individuals with angle-closure [17, 27]. UBM allows visualization of the anterior segment and angle structures at an ultrasonic frequency of 35–100 MHz, providing high-resolution (50 μm) images of the angle and iridociliary region, which adds valuable information regarding causal mechanisms of angle-closure [28]. UBM is well suited to assessing pathologies behind the iris, such as plateau iris, lens-induced glaucoma, ciliary block, cysts, and solid tumors of the anterior segment. On the other hand, SS-ASOCT has a fast scan speed (50,000 A-scans/s), allows deep imaging (11 mm), and has a higher resolution (2.4 µm); in addition, it provides a simple, user-friendly, and non-contact method of assessing the angle structure and provides more detailed information and precise parameters of the angle, cornea, iris, and anterior chamber volume. The fast scan rate of SS-ASOCT effectively decreases motion artifacts [2]. Unfortunately, SS-ASOCT cannot visualize structures posterior to the iris [29], which precludes assessment of the role of the iridociliary region in some angle-closure mechanisms, such as plateau iris, which is more common in the Chinese population. Therefore, it would be clinically advantageous to develop a diagnostic modality in which these two anterior segment imaging modalities are integrated. For example, Kwon et al. assessed eyes with primary angle-closure (PAC) using both SS-ASOCT and UBM [17]. Based on their results, these authors suggested that by using UBM, clinicians may obtain more clues on the mechanisms of PAC. Moreover, prior SS-ASOCT data can be transferred to UBM and further combined into an anterior segment dataset for medical follow-up, clinical research, and deep learning analysis.

GANs offer a novel method to generate new medical images. Recent studies suggest possible applications of generative methods for retinal image registration and fundus or optical coherence tomography image denoising [30]. Traditional GANs require a large training dataset to synthesize realistic medical images. For example, in our previous study [11], we proposed a GAN approach to generate realistic ASOCT images using more than 20,000 ASOCT images. Moreover, traditional GAN models, such as progressively grown GANs or deep convolutional GANs, can only work with images from the same domain. To synthesize medical images from different domains, several generative networks have been suggested, including unsupervised GAN models, such as CycleGAN, and supervised networks, such as Pix2Pix GAN. Pix2Pix techniques have shown good performance in image translation settings. However, the critical shortage of paired datasets restricts the real-world application of supervised GANs. UBM requires a contact immersion scanning technique that is run by skilled operators. Therefore, it is difficult to collect a sufficiently large paired dataset for supervised GAN training, which is also a common issue in medical imaging research. In addition, it is inevitable that the body or eyes will move between different scanning procedures, such as CT and MRI or ASOCT and UBM, which makes it a challenge to match or register images from two different domains. CycleGAN is a type of unsupervised machine learning technique with the significant advantage of being able to utilize unpaired datasets from two different domains. Recently, several researchers have demonstrated a CycleGAN-based domain transfer between different image modalities. For example, Li et al. proposed a CycleGAN architecture to synthesize MRI images from brain CT images for MR-guided radiotherapy [31]. In another study, Muhaddisa et al. reported an effective domain adaptation method based on CycleGANs to map MR images from different datasets taken from different hospitals using different scanners and settings [32]. Their technique effectively enlarged the MRI dataset, and the proposed scheme was shown to achieve good diagnostic performance for predicting molecular subtypes in low-grade gliomas. Using a similar CycleGAN model, Yoo et al. showed that this technique can synthesize traditional fundus photography images directly from ultra-widefield fundus photography without a manual pre-conditioning process [15]. However, cross-modality domain transfer between SS-ASOCT and UBM is challenging due to the high variability in the appearance of angle tissues and structures caused by the different imaging mechanisms [33]. In the current study, we built a CycleGAN-based deep learning model for the domain transfer from SS-ASOCT to UBM. Our result was encouraging in that we found that the CycleGAN model can generate realistic UBM images of high quality, as assessed by human experts in our Turing test.

Despite the above, our study has a few limitations. First, our CycleGAN model can only generate UBM images with 256 × 256 pixel resolution, which is lower than that of the CASIA2 SS-ASOCT system (2129 × 1464 pixel resolution) and the Suoer UBM system (1024 × 655 pixel resolution). For the evaluation of some small lesions, such as ciliary body cysts or tumors, a higher resolution may be needed. As only part of the iris and lens was synthesized in the UBM image, we also could not assess the reproducibility of some lens or iris parameters (lens vault or iris convexity). In addition, some angle structures, such as iris thickness measured at 500 μm from the scleral spur, span only a few pixels, which could explain the lower ICC for these parameters in our study. The aim of the present study was to build a CycleGAN-based deep learning model for the domain transfer from SS-ASOCT to UBM. It is possible to generate higher resolutions (e.g., 1024 × 1024 or above), as reported in our previous study [12]. Future work will involve generating small lesions or angle structures, which might help improve the clinical application of GAN models. Second, most of the subjects in our testing datasets had an open angle. Other angle-closure mechanisms, like pupil block, plateau iris configuration, thick peripheral iris roll, and exaggerated lens vault, were not evaluated in the current study [34]. Generating the iridociliary region for different angle-closure mechanisms might help improve our understanding of angle-closure. Third, although we assessed the generalizability of the CycleGAN model by testing it in independent external datasets, the whole dataset was collected from the same center, using the same devices and following the same process. This may leave the method vulnerable to domain shift and may also cause over-optimism about the results. The latest model of SS-ASOCT, CASIA2, is only available in very few centers in mainland China, which prevented us from collecting more data from other centers. The application of the CycleGAN technique requires further validation in multi-center and multi-ethnic trials. Fourth, the CycleGAN model does not work in a pairwise fashion. For paired image-to-image translation (i.e., ASOCT images to UBM images at the same corresponding clock-hour position), the Pix2Pix architecture (a supervised GAN) is the better model for learning a mapping from input images to corresponding output images. However, a supervised GAN needs paired datasets, which were not available in the current study. Overlapping examinations with both SS-ASOCT and UBM may cost the patients more and increase the use of human resources. The objective of this study was to evaluate the feasibility of using CycleGAN to generate synthesized UBM images from SS-ASOCT images. The real-world applications of UBM include tumors, trauma, and surgical complications, none of which can be easily synthesized using the CycleGAN model. Future work is needed to evaluate the iridociliary and angle pathologies using other GAN techniques, such as meta-learning-based GANs or semi-supervised GANs, by collecting pairs of ASOCT and UBM images of different pathologies.

Conclusion

In conclusion, we developed a CycleGAN model for generating UBM images using SS-ASOCT images as the inputs. Our preliminary results showed that there was fair to excellent correlation between anterior segment parameters measured from the synthetic and real UBM images. The CycleGAN-based deep learning technique presents a promising way to assess the iridociliary region with non-contact methods.

References

Silverman RH. High-resolution ultrasound imaging of the eye—a review. Clin Exp Ophthalmol. 2009;37(1):54–67. https://doi.org/10.1111/j.1442-9071.2008.01892.x.

Ang M, Baskaran M, Werkmeister RM, et al. Anterior segment optical coherence tomography. Prog Retin Eye Res. 2018;66:132–56. https://doi.org/10.1016/j.preteyeres.2018.04.002.

Chansangpetch S, Nguyen A, Mora M, et al. Agreement of anterior segment parameters obtained from swept-source fourier-domain and time-domain anterior segment optical coherence tomography. Invest Ophthalmol Vis Sci. 2018;59(3):1554–61. https://doi.org/10.1167/iovs.17-23574.

Montes-Mico R, Pastor-Pascual F, Ruiz-Mesa R, Tana-Rivero P. Ocular biometry with swept-source optical coherence tomography. J Cataract Refract Surg. 2021;47(6):802–14. https://doi.org/10.1097/j.jcrs.0000000000000551.

Zhang Q, Jin W, Wang Q. Repeatability, reproducibility, and agreement of central anterior chamber depth measurements in pseudophakic and phakic eyes: optical coherence tomography versus ultrasound biomicroscopy. J Cataract Refract Surg. 2010;36(6):941–6. https://doi.org/10.1016/j.jcrs.2009.12.038.

Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D. Generative adversarial nets. In: Ghahramani Z, Welling M, Cortes C, Lawrence N, Weinberger KQ, editors. Advances in neural information processing systems 27 (NIPS 2014), 8–13 Dec 2014, Montreal.

Chen S, Qin A, Zhou D, Yan D. Technical Note: U-net-generated synthetic CT images for magnetic resonance imaging-only prostate intensity-modulated radiation therapy treatment planning. Med Phys. 2018;45(12):5659–65. https://doi.org/10.1002/mp.13247.

Liu Y, Lei Y, Wang T, et al. MRI-based treatment planning for liver stereotactic body radiotherapy: validation of a deep learning-based synthetic CT generation method. Br J Radiol. 2019;92(1100):20190067. https://doi.org/10.1259/bjr.20190067.

Kazemifar S, McGuire S, Timmerman R, et al. MRI-only brain radiotherapy: assessing the dosimetric accuracy of synthetic CT images generated using a deep learning approach. Radiother Oncol. 2019;136:56–63. https://doi.org/10.1016/j.radonc.2019.03.026.

Wang Y, Liu C, Zhang X, Deng W. Synthetic CT generation based on T2 weighted MRI of nasopharyngeal carcinoma (NPC) using a deep convolutional neural network (DCNN). Front Oncol. 2019;9:1333. https://doi.org/10.3389/fonc.2019.01333.

Zheng C, Bian F, Li L, et al. Assessment of generative adversarial networks for synthetic anterior segment optical coherence tomography images in closed-angle detection. Transl Vis Sci Technol. 2021;10(4):34. https://doi.org/10.1167/tvst.10.4.34.

Zheng C, Xie X, Zhou K, et al. Assessment of generative adversarial networks model for synthetic optical coherence tomography images of retinal disorders. Transl Vis Sci Technol. 2020;9(2):29. https://doi.org/10.1167/tvst.9.2.29.

Zheng C, Koh V, Bian F, et al. Semi-supervised generative adversarial networks for closed-angle detection on anterior segment optical coherence tomography images: an empirical study with a small training dataset. Ann Transl Med. 2021;9(13):1073. https://doi.org/10.21037/atm-20-7436.

Liu Y, Khosravan N, Liu Y, et al. Cross-modality knowledge transfer for prostate segmentation from CT scans. In: Domain adaptation and representation transfer and medical image learning with less labels and imperfect data. Cham: Springer; 2019. p. 63–71.

Yoo TK, Ryu IH, Kim JK, et al. Deep learning can generate traditional retinal fundus photographs using ultra-widefield images via generative adversarial networks. Comput Methods Programs Biomed. 2020;197:105761.

Verma S, Nongpiur ME, Oo HH, et al. Plateau iris distribution across anterior segment optical coherence tomography defined subgroups of subjects with primary angle closure glaucoma. Invest Ophthalmol Vis Sci. 2017;58(12):5093–7. https://doi.org/10.1167/iovs.17-22364.

Kwon J, Sung KR, Han S, Moon YJ, Shin JW. Subclassification of primary angle closure using anterior segment optical coherence tomography and ultrasound biomicroscopic parameters. Ophthalmology. 2017;124(7):1039–47. https://doi.org/10.1016/j.ophtha.2017.02.025.

Office for Civil Rights (OCR). Guidance regarding methods for de-identification of protected health information in accordance with the Health Insurance Portability and Accountability Act (HIPAA) privacy rule. https://www.hhs.gov/guidance/document/guidance-regarding-methods-de-identification-protected-health-information-accordance-0. Accessed 12 Mar 2017.

Zhu J-Y, Park T, Isola P, Efros AA. Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the IEEE international conference on computer vision, 22–29 Oct 2017, Venice. p. 2223–32.

Liu Y, Guo Y, Chen W, Lew MS. An extensive study of cycle-consistent generative networks for image-to-image translation. In: 24th international conference on pattern recognition (ICPR), 20–24 Aug 2018, Beijing. p. 219–24.

Schlegl T, Seebock P, Waldstein SM, Langs G, Schmidt-Erfurth U. f-AnoGAN: fast unsupervised anomaly detection with generative adversarial networks. Med Image Anal. 2019;54:30–44. https://doi.org/10.1016/j.media.2019.01.010.

Sakata LM, Lavanya R, Friedman DS, et al. Assessment of the scleral spur in anterior segment optical coherence tomography images. Arch Ophthalmol. 2008;126(2):181–5. https://doi.org/10.1001/archophthalmol.2007.46.

Lee RY, Kasuga T, Cui QN, et al. Association between baseline iris thickness and prophylactic laser peripheral iridotomy outcomes in primary angle-closure suspects. Ophthalmology. 2014;121(6):1194–202. https://doi.org/10.1016/j.ophtha.2013.12.027.

Zheng C, Cheung CY, Aung T, et al. In vivo analysis of vectors involved in pupil constriction in Chinese subjects with angle closure. Invest Ophthalmol Vis Sci. 2012;53(11):6756–62. https://doi.org/10.1167/iovs.12-10415.

Li X, Wang W, Huang W, et al. Difference of uveal parameters between the acute primary angle closure eyes and the fellow eyes. Eye (Lond). 2018;32(7):1174–82. https://doi.org/10.1038/s41433-018-0056-9.

Zheng C, Guzman CP, Cheung CY, et al. Analysis of anterior segment dynamics using anterior segment optical coherence tomography before and after laser peripheral iridotomy. JAMA Ophthalmol. 2013;131(1):44–9. https://doi.org/10.1001/jamaophthalmol.2013.567.

Quigley HA. Angle-closure glaucoma-simpler answers to complex mechanisms: LXVI Edward Jackson Memorial Lecture. Am J Ophthalmol. 2009;148(5):657–691.e1. https://doi.org/10.1016/j.ajo.2009.08.009.

Li Y, Wang YE, Huang G, et al. Prevalence and characteristics of plateau iris configuration among American Caucasian, American Chinese and mainland Chinese subjects. Br J Ophthalmol. 2014;98(4):474–8. https://doi.org/10.1136/bjophthalmol-2013-303792.

Nongpiur ME, Atalay E, Gong T, et al. Anterior segment imaging-based subdivision of subjects with primary angle-closure glaucoma. Eye (Lond). 2017;31(4):572–7. https://doi.org/10.1038/eye.2016.267.

Pekala M, Joshi N, Liu TYA, Bressler NM, DeBuc DC, Burlina P. Deep learning based retinal OCT segmentation. Comput Biol Med. 2019;114:103445. https://doi.org/10.1016/j.compbiomed.2019.103445.

Li W, Li Y, Qin W, et al. Magnetic resonance image (MRI) synthesis from brain computed tomography (CT) images based on deep learning methods for magnetic resonance (MR)-guided radiotherapy. Quant Imaging Med Surg. 2020;10(6):1223–36. https://doi.org/10.21037/qims-19-885.

Ali MB, Gu IY, Berger MS, et al. Domain mapping and deep learning from multiple MRI clinical datasets for prediction of molecular subtypes in low grade gliomas. Brain Sci. 2020;10(7):463. https://doi.org/10.3390/brainsci10070463.

Nolan WP, See JL, Chew PT, et al. Detection of primary angle closure using anterior segment optical coherence tomography in Asian eyes. Ophthalmology. 2007;114(1):33–9. https://doi.org/10.1016/j.ophtha.2006.05.073.

Shabana N, Aquino MC, See J, et al. Quantitative evaluation of anterior chamber parameters using anterior segment optical coherence tomography in primary angle closure mechanisms. Clin Exp Ophthalmol. 2012;40(8):792–801. https://doi.org/10.1111/j.1442-9071.2012.02805.x.

Acknowledgements

Funding

This study was supported by the National Natural Science Foundation of China (81371010, 81770963), Hospital Funded Clinical Research, Xinhua Hospital Affiliated to Shanghai Jiao Tong University School of Medicine (21XJMR02), and Interdisciplinary Program of Shanghai Jiao Tong University (YG2021QN52). No funding or sponsorship was received for publication of this article. The journal’s Rapid Service Fee was funded by the authors.

Authorship

All named authors meet the International Committee of Medical Journal Editors (ICMJE) criteria for authorship for this article, take responsibility for the integrity of the work as a whole, and have given their approval for this version to be published.

Author Contributions

Hongfei Ye and Ce Zheng conceived and designed the analysis. Hongfei Ye, Yuan Yang, Kerong Mao, and Yafu Wang collected the data. Yiqian Hu, Yu Xu, Ping Fei, Jiao Lyv, Li Chen, and Ce Zheng contributed data. Ce Zheng performed the analysis. Hongfei Ye and Ce Zheng wrote the paper. Peiquan Zhao and Ce Zheng performed the final editing and approved the final version. All authors agree to be accountable for the content of the work.

Disclosures

The authors have nothing to disclose and declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Compliance with Ethics Guidelines

This study was approved by the Institutional Review Board (IRB) of Xinhua Hospital Affiliated to Shanghai Jiao Tong University School of Medicine (identifier: XHEC-D-2021-114). The study was carried out in accordance with the ethical standards set by the Declaration of Helsinki of 1964 as amended in 2008. All SS-ASOCT and UBM images were anonymized and de-identified according to the Health Insurance Portability and Accountability Act Safe Harbor method before analysis [18]. The IRB waived the requirement for informed consent for the retrospectively collected development and validation datasets. For the prospectively collected testing dataset, informed consent for publication was obtained from all enrolled patients or from their guardians.

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Ye, H., Yang, Y., Mao, K. et al. Generating Synthesized Ultrasound Biomicroscopy Images from Anterior Segment Optical Coherent Tomography Images by Generative Adversarial Networks for Iridociliary Assessment. Ophthalmol Ther 11, 1817–1831 (2022). https://doi.org/10.1007/s40123-022-00548-1
