Abstract

The many internal and external factors that contribute to the pathophysiology of dry eye disease (DED) create a difficult milieu for its study and complicate its clinical diagnosis and treatment. The controlled adverse environment (CAE®) model has been developed to minimize the variability that arises from exogenous factors and to exacerbate the signs and symptoms of DED by stressing the ocular surface in a safe, standardized, controlled, and reproducible manner. By integrating sensitive, specific, and clinically relevant endpoints, the CAE has proven to be a unique and adaptable model for both identifying study-specific patient populations with modifiable signs and symptoms, and for tailoring the evaluation of interventions in clinical research studies.

Introduction

Dry eye disease (DED) is a multifactorial disease of the tear film and ocular surface with an estimated prevalence of 11–22% in the US population, predominantly women over 55 years of age [1,2,3,4,5]. With the aging of the world population, it becomes increasingly important that researchers and clinicians strive to understand, diagnose, and treat DED better. In this review, we will discuss methods for studying DED, and how these techniques can inform clinicians on how to better treat the disease. One tool that has added much to our knowledge of this disease is the controlled adverse environment (CAE®) challenge, which is an ocular surface stress test that exacerbates the signs and symptoms of DED in a safe and controllable manner, in much the same way a stress test is used in cardiovascular medicine to safely provoke a response in subjects.

The tear film is an exquisite balance of aqueous, lipid, and mucin components that serves to protect the ocular surface and to create and maintain a transparent refractive surface for optimal visual performance [6]. Hundreds, if not thousands, of tear components protect the eye from infection, promote rapid healing, and provide adequate nutrition to the avascular cornea. Blinking assists in meibomian gland secretion and spreading of the tear film, as well as mixing and promoting outflow by creating negative pressure in the lacrimal sac. Deficiencies in tear constituents may lead to an unstable tear film, a drying of the ocular surface, and visual disturbances caused by optical aberrations [7]. Exposure of the ocular surface and epithelial desquamation due to tear film breakup will lead to inflammatory and neurogenic signals that manifest as signs of keratitis and symptoms of ocular discomfort commonly described as dryness, stinging, burning, foreign body sensation, and pain [8, 9]. Alterations in blink patterns are characteristic of the disease and contribute to an overall diminution of visual function [10, 11]. Visual tasks such as reading, driving, watching television, and using a computer become particularly troublesome for the DED patient, and can greatly compromise quality of life [12,13,14,15,16].

Tear film deficiencies are caused by a variety of factors including aging and cellular oxidation, neuroendocrine signaling, autoimmune reactions to the lacrimal and/or accessory glands, and inflammation of goblet cells or meibomian glands. Regardless of the underlying cause, DED is associated with chronic inflammation of the ocular surface [2, 17,18,19,20,21], and is exacerbated by harsh environmental conditions. The multifactorial pathophysiology of DED creates many potential therapeutic targets for drug candidates, with a gamut of activities including anti-inflammatories, immunomodulators, secretagogues, anti-evaporatives, receptor agonists and antagonists, wound-healing promoters, and hormonal and nutritional supplements. A brief summary of potential dry eye target therapeutics is presented in Table 1.

A diagnosis of DED can be made with the presence of just symptoms, just signs, or both. The lack of a correlation between signs and symptoms can, in fact, be a characteristic of the disease [22,23,24,25]. The cornea is a highly innervated and exquisitely sensitive tissue, the signaling from which evokes a response in many surrounding systems: the lacrimal gland, goblet cells, meibomian glands, lid musculature, etc. [26]. It has been hypothesized that in the early stages of DED, patients with still healthy innervation may present with symptoms but with little corneal and conjunctival staining or other objective findings. As DED progresses, patients experience damage to corneal nerves serving the lacrimal functional unit, resulting in a loss of sensitivity and diminished symptomatology, impaired compensatory mechanisms such as tearing and blinking, and more severe keratitis [27, 28].

Compliance with Ethics Guidelines

This article is based on previously conducted studies and does not involve any new studies of human or animal subjects performed by any of the authors.

DED and the Environment

One of the difficulties of studying and treating dry eye stems from its variability with environment and behavior. In DED, the inherent milieu created by age, neuroendocrine function, lacrimal and meibomian gland health, and inflammatory state does not allow the subject to respond adequately to environmental stress [29, 30]. Factors such as wind, humidity, temperature, contact lens wear, visual tasking, season, diurnal rhythms, and pollutants all affect tear film stability and the ocular surface. Lifestyle also contributes greatly to ocular drying: outdoor weekend sporting activities; weekday conditions in an arid office environment with extreme air-conditioning, lighting, and visual tasking such as driving, reading, computer terminal [31,32,33], television, and phone viewing; or flying at altitudes where low relative humidity causes hyper-evaporation [34]. When faced with these adverse environmental and behavioral conditions, tear production will increase in a normal subject to maintain a stable protective barrier and optimal refractive conditions. The DED subject lacks one or more of the compensatory mechanisms that offset these adverse conditions: the secretory capacity to increase aqueous tear production, upregulation of mucin expression to improve tear quality, and increased blinking to prevent evaporation and refresh the tear film. As a result, evaporation, desiccation, and damage to the ocular surface ensue [30, 35].

The natural variability of external conditions and internal responses to them creates a difficult paradigm for the study of DED and its treatment. When evaluating a potential therapeutic agent, the ebb and flow of signs and symptoms that occur throughout the day can overcome subtle improvements derived from treatment. This variability is assimilated into the resulting dataset, leading to large standard deviations and a dampening of quantifiable treatment response that together necessitate very large sample sizes to maintain statistical power. Additionally, the powerful effect of placebo acting as a tear substitute in dry eye studies leads to even lesser differentiation between active and control groups, necessitating the exclusion of placebo responders a priori with a run-in period before randomization [30].
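The link between large standard deviations and large sample sizes can be illustrated with the standard normal-approximation formula for comparing two group means. This is a minimal sketch, not a calculation from any CAE trial: the function name, the default significance level and power, and the example values for standard deviation and treatment difference are all assumptions chosen for illustration.

```python
from math import ceil
from statistics import NormalDist


def subjects_per_arm(sd, delta, alpha=0.05, power=0.80):
    """Approximate subjects needed per arm for a two-arm comparison of means.

    Uses the normal approximation n = 2 * ((z_alpha/2 + z_beta) * sd / delta)^2.
    sd    : assumed common standard deviation of the endpoint (hypothetical)
    delta : assumed true treatment difference to detect (hypothetical)
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    return ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)


# Same assumed treatment difference, two assumed levels of variability.
low_noise = subjects_per_arm(sd=1.0, delta=0.5)
high_noise = subjects_per_arm(sd=1.5, delta=0.5)
print(low_noise, high_noise)
```

Because the required sample size grows with the square of the standard deviation, a 50% increase in variability more than doubles the number of subjects needed in this example.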

The CAE Chamber

During a CAE study, subjects are screened for baseline signs and symptoms of dry eye, as well as confirmation of a positive medical and medication history. They enter the chamber, which allows for a highly standardized atmosphere of low relative humidity, increased airflow, and constant visual tasking. These perfect storm conditions overcome a dry eye subject’s ability to maintain a stable tear film, so that the ocular surface desiccation and associated signs and symptoms of DED can be reproducibly studied and modified under controlled conditions. Measures of dry eye are assessed immediately before and after the 90-min challenge, and symptoms are monitored frequently during the challenge. See Table 2 for a list of published clinical trials involving CAE exposure and treatments of dry eye.

Patient Selection

When investigators select patients for clinical trials, they must consider severity of DED, responder subtypes, the drug candidate’s mechanism of action, and other contributory factors such as duration of disease, age, gender, lifestyle, and concomitant systemic diseases. These studies have very rigidly defined inclusion and exclusion criteria, and as a result, the rate of acceptance into studies is very low. When the criteria for inclusion are not well laid out, subjects are entered with misdiagnoses, concomitant medications, or diseases that mask the therapeutic response to the test drug. Examples are conjunctivochalasis and recurrent corneal erosion, which present with similar symptoms of foreign body sensation, grittiness, irritation, blurred vision, and tearing. Other conditions such as allergy, epithelial basement membrane dystrophy, lid wiper epitheliopathy, giant papillary conjunctivitis, Salzmann’s nodular degeneration, and asthenopia can masquerade as DED.

Other subjects who are potential candidates for a DED clinical trial may have underlying systemic diseases that contribute to ocular surface damage. Patients with long-standing acne rosacea may also have advanced meibomian gland dysfunction (MGD) and may pass screening criteria required for a DED trial [36]. However, because the meibomian glands are damaged, very few, if any, topical agents would modify this condition, and treatment would fail [37]. Similarly, patients diagnosed with uncontrolled type 2 diabetes mellitus will continually present with signs and symptoms that resemble DED, yet will not be responsive to treatment [38].

Reflex tearing can confound results of a CAE exposure and thus, subjects with excessive compensatory tearing should also be excluded from CAE trials. Some patients exposed to a CAE may experience a period of temporary relief from their ocular discomfort due to reflex tearing. This natural compensation is inversely correlated with DED severity. Natural compensation occurs more quickly in normal subjects (~ 10 min), and mild-to-moderate dry eye patients (~ 20 min) compared to those with moderate-to-severe dry eye (> 40 min or not at all) [35].

CAE As a Screening and Subject Enrichment Tool

The response to CAE exposure is used in clinical trials as both a screening and subject enrichment tool, and for establishing treatment differences. By selecting subjects who respond to CAE exposure with worsened signs and symptoms of DED, all enrolled subjects present with a similar predictable baseline, and have a modifiable response to demonstrate change with intervention. Thus, the first important function of the CAE is to provide an entering subject population with known and reproducible dry eye disease. By using a positive CAE response as an inclusion criterion, the study is completed with a predefined population whose baseline characteristics and response to an adverse environment have been defined.

It is known that depending on mechanism of action, some therapies might affect primarily symptoms, some signs, and some both. We also know that some DED patients have more signs than symptoms, depending on disease phenotypes and duration. Because the CAE elicits both, distinct pools of dry eye subjects that are symptom responders, sign responders, and a mixture of both can be identified by CAE response. Furthermore, the regulatory environment now recognizes the lack of a contemporaneous association between signs and symptoms [23, 30]. This independence makes it even more critical to choose the right DED population to test a prospective therapy, and the CAE response allows this.

CAE As a Platform for Evaluating Treatments

Use of the CAE model in combination with stringent, study-specific inclusion and exclusion criteria and sensitive diagnostic tools allows for the selection of patients based on their calibrated response to environmental stress and not only on naturally presenting signs and symptoms that fluctuate hourly and daily within and among subjects. CAE-based selection of subjects also minimizes the natural regression to the mean that occurs in an environmental study for which subjects must present at the visit with adequate scores for signs and symptoms. While the CAE approach results in a higher rate of non-eligibility, the smaller pool of subjects is more homogeneous, and provides greater statistical power.

The second critical function of the CAE is for evaluation of interventions. By integrating sensitive and reproducible endpoints into the model, changes in a subject’s response to CAE exposure can be quantified. CAE response as an endpoint evaluates the protective benefit of a drug or its ability to improve a subject’s compensatory mechanisms. This information is extremely useful for homing in on the potential therapeutic activity of the test product. The expected time course for a maximum effect on CAE depends on the drug characteristics, and it is critical to time the primary endpoint around the time of expected maximum drug effect.

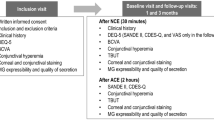

A typical study design includes two baseline CAE challenges prior to randomization. The initial challenge confirms a subject’s response with the protocol-defined degree of sign and/or symptom worsening. Between these two baseline CAE exposures, the subject is usually placed on a placebo regimen, called a run-in period, to exclude subjects whose signs and symptoms are alleviated by supplemental eye drops alone. This is an important step taken prior to initiating treatment, since the drug effect will be compared to the placebo-vehicle effect in double-masked randomized groups. The drug vehicle solutions mandated for use as negative controls in DED and all ophthalmic studies behave as tear substitutes, lubricating the ocular surface, and this benefit will narrow the differences between the study treatment and the placebo. Thus, the run-in period prevents vehicle-treated placebo responders from study entry, thereby minimizing potential noise in the data and optimizing the active drug’s ability to demonstrate improvement. The second confirmatory challenge is thus critical to assure (1) that subjects will respond adequately to the CAE after use of placebo and (2) that subjects have stable and reproducible DED.
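The two-challenge enrichment logic described above can be sketched in a few lines. This is an illustrative sketch only: the class and function names, the choice of staining and discomfort as the sign and symptom measures, and the threshold of a 1-unit worsening are hypothetical stand-ins for whatever a given protocol defines.

```python
from dataclasses import dataclass


@dataclass
class CaeVisit:
    """Scores recorded immediately before and after one CAE challenge."""
    pre_staining: float
    post_staining: float
    pre_discomfort: float
    post_discomfort: float


def responds(visit, min_staining_rise=1.0, min_discomfort_rise=1.0):
    """True if the sign and/or the symptom worsens by the assumed threshold."""
    staining_rise = visit.post_staining - visit.pre_staining
    discomfort_rise = visit.post_discomfort - visit.pre_discomfort
    return (staining_rise >= min_staining_rise
            or discomfort_rise >= min_discomfort_rise)


def eligible_for_randomization(first, second, **thresholds):
    """Require a CAE response both before and after the placebo run-in.

    A subject whose response disappears at the second challenge is treated
    as a placebo (vehicle) responder and is not randomized.
    """
    return responds(first, **thresholds) and responds(second, **thresholds)


baseline = CaeVisit(1.0, 2.5, 1.0, 3.0)
after_run_in = CaeVisit(1.0, 2.0, 1.0, 1.5)
print(eligible_for_randomization(baseline, after_run_in))
```

Requiring the response at both challenges is what screens out vehicle responders and subjects whose disease is not stable or reproducible.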

As an efficacy tool, CAE challenge has advantages over environmental assessments of drug effects, particularly if the mechanism of action of the drug suggests activity as a protective agent or as an enhancer of compensatory mechanisms. However, assessments of signs and symptoms prior to initiating the challenge also establish the “environmental” status of this enriched population of DED subjects with naturally occurring disease, allowing for multiple opportunities to observe a drug effect. The CAE chamber has both stationary and exact-replica mobile units for use in multi-center studies. Using the same mobile units at various sites is another means of minimizing variability among research centers.

Highly sensitive diagnostic endpoints are integrated into CAE exposure, allowing investigators to subtly tailor study designs to match a therapeutic agent’s mode of action. A drug that increases aqueous tear production or improves meibomian gland function might be expected to cause a significant improvement in the discomfort and keratitis provoked by the CAE. Similarly, a mucin secretagogue-acting drug might stabilize the tear film from within such that corneal epithelial cells are better protected from unfavorable conditions, resulting in significantly less pre- to post-CAE keratitic staining [29, 39].

A variation of the CAE is used to exaggerate adverse environmental conditions more quickly by directing highly focused, rapid airflow to the eye. This model induces greater central corneal staining and a faster onset of symptoms and might be most appropriate for certain mechanisms of action.

Another modification to the CAE is the repeat CAE in which the subject is challenged morning, afternoon and evening. This diurnal model simulates the episodic environmental insults that DED patients experience throughout the day, and useful information can be gained from understanding how the subject’s response changes throughout the day, and how time awake can greatly influence results. This approach is useful for quantifying the cumulative worsening of signs and symptoms and the effectiveness and duration of barrier protective therapies such as artificial tears.

Refining the Assessments of Dry Eye Signs and Symptoms

In studying dry eye, it is critical that measures of disease severity are accurate, reproducible and sensitive. In CAE and non-CAE studies, we must be able to demonstrate a change in signs and symptoms with interventions. A scientific approach to the grading of signs and symptoms has allowed for more calibrated and reproducible assessments, particularly important when more than one site is involved in a study. Grading systems have been validated and tested over time for dry eye redness and vital dye staining of ocular surface damage. Refined methodology for tear film break up time has also been shown to more accurately measure signs of dry eye.

Vital dye staining of damaged ocular surface cells with fluorescein (for the cornea) and/or lissamine green (for the conjunctiva) with grading of severity by region is a common and useful measure of dry eye. Many grading systems exist, and an accurate scoring of the severity of keratitis and conjunctival damage is essential to understanding the effectiveness of an intervention. Finely calibrated grading systems divide the ocular surface into five physiologically and anatomically relevant regions, some of which have been shown to be more sensitive to dry eye and its treatment. Investigators are trained across sites to assure that grading is standardized and reproducible. Software now aids in objectifying these scoring systems [41], and this allows for greater reproducibility across study sites when conducting a multi-centered clinical trial (Fig. 1).

Tear film break-up time (TFBUT) is another measure of dry eye, and involves defining in seconds the time between blinks before a dry spot is observed by slit lamp after instillation of fluorescein. Historically, this measure was performed with excessive amounts of fluorescein (25–40 µL drop) that flooded the tear film and so provided inaccurate information on tear film breakup. When the drop quantity was reduced to 2–5 µL, the interblink evaporation of innate tears became a more relevant measure, defined as < 5 s for a definitive DED diagnosis [42]. Improved understanding of the relationship between patient symptoms and TFBUT has resulted in a simple noninvasive measure of tear film instability that is easily implemented during a routine office visit or in a patient’s home. This test is known as the symptomatic tear film break-up time, and involves simply identifying the time in seconds between blinks before symptoms of discomfort ensue, which usually occurs within one second of the patient’s tear break up [43]. The ease of this technique allows patients to independently monitor their condition under various circumstances and evaluate symptom relief with treatments even at home.

Conjunctival vessel dilation is another hallmark sign of dry eye, and its horizontal, fine, linear pattern is subtly different from the redness seen with other anterior segment conditions such as allergy and infection. Many ophthalmic conditions share redness as a clinical sign, but the particular pattern of redness varies considerably across pathological conditions. The conjunctival, scleral, episcleral, and ciliary vessel beds are known to respond to different disease states through variations in color, location, pattern, and degree of redness. In the case of DED, redness presents as fine linear conjunctival and ciliary vessel dilation. Automated software has been developed to analyze conjunctival structure and redness from still image photography [44], detecting vascular patterns and quantifying the change in redness to demonstrate the therapeutic effect of a drug (Fig. 1). This technology is another example of how to improve on basic clinical grading to better standardize a multi-centered trial.

Schirmer’s test is a measure of aqueous tear production that has been long in use as a clinical diagnostic test for aqueous-deficient DED; however, it fails to reveal other types of tear deficiencies and appears to be resistant to modification.

At a unique crossroads between signs and symptoms of DED are the alterations in blink patterns that arise both as cause and effect of an unstable tear film. In fact, the importance of blink was unknown until the tear film breakup time technique was modified to use a greatly reduced quantity of fluorescein. The lower thresholds for breakup that indicate the presence of dry eye were found to be synchronized with blink [42]. Understanding that the drying tear film triggers a blink led to more in-depth study of modifications in blink in the context of dry eye [45, 46]. DED patients were found to have a faster blink rate that can account for some disturbances in visual function, and were less able to prolong the time between blinks during demanding visual tasks, bound as they are by the primary concern of refreshing the tear film [47]. This inability to vary blink with visual task is another major contributor to the visual dysfunction and fatigue common in DED subjects [47]. Furthermore, dry eye subjects were shown to have lid closures of very long duration (apparent microsleeps); these rest the eye and refresh the tear film such that a lengthening of the time between blinks is possible subsequently [10].

Techniques of monitoring blink have been developed to study what happens to the ocular surface between blinks. These are used as endpoints to study DED and the effect of interventions. The first such measure, the Ocular Protection Index (OPI), is a simple ratio of tear film breakup time to the inter-blink interval and indicates when a subject’s ocular surface is compromised [48]. This measure was improved with the OPI 2.0 System, which evolved to assess tear film stability simultaneously with natural blink under normal visual conditions [45, 46, 49]. The OPI 2.0 System implements fully automated software algorithms that provide real-time measurements of corneal exposure (breakup area) for each inter-blink interval (IBI) during a 1-min video. Utilizing this method, the mean breakup area (MBA) and OPI 2.0 (MBA/IBI) can be calculated and analyzed [45, 46, 49]. Continuous monitoring of blinks with a headset connected to a cell phone can be implemented within the CAE chamber to study how blink is modified under conditions of stress and how blink patterns might be normalized through treatment [12].
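As a rough illustration of the OPI 2.0 ratio, the sketch below reads the MBA/IBI formula literally, averaging the per-interval breakup areas and the inter-blink intervals over one recording. It is not the OPI 2.0 System's software: the function name, the units (breakup area as a fraction of the corneal surface, IBI in seconds), the use of the mean IBI as the denominator, and the example values are assumptions made for illustration.

```python
from statistics import mean


def opi_2(breakup_areas, inter_blink_intervals):
    """Return (MBA, mean IBI, MBA / mean IBI) for one recording.

    breakup_areas         : breakup area measured for each inter-blink
                            interval, as a fraction of the cornea (assumed unit)
    inter_blink_intervals : duration of each interval in seconds (assumed unit)
    """
    if not breakup_areas or len(breakup_areas) != len(inter_blink_intervals):
        raise ValueError("need one breakup area per inter-blink interval")
    mba = mean(breakup_areas)
    ibi = mean(inter_blink_intervals)
    return mba, ibi, mba / ibi


# Hypothetical values for four inter-blink intervals in a 1-min video.
areas = [0.10, 0.30, 0.20, 0.20]
intervals = [4.0, 6.0, 5.0, 5.0]
mba, ibi, ratio = opi_2(areas, intervals)
print(round(mba, 3), round(ibi, 3), round(ratio, 3))
```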

Symptom grading is critical in a disease such as dry eye, which has such a subjective component, and unlike most diseases, can actually comprise only symptoms and not signs. To grade symptoms accurately, scoring systems must be implemented that are easy to use and reflect the disease state in an accurate and reproducible manner. Various tools are used for assessing both retrospective and immediate symptoms using 0–4 and 0–5 scales, as well as different qualifiers (discomfort, burning, dryness, grittiness, stinging), to be completed in-office and at home as part of diaries. During the CAE, symptom queries occur throughout and are a key piece to confirmation that the adverse environment challenge is effective, as well as providing valuable data that can be analyzed in multiple ways to reveal treatment modification of symptoms [12, 50, 51].

Quality of life questionnaires are also useful tools for assessing DED. In a CAE-based study, these subjective questionnaires provide additional environmental symptom endpoints along with diary data that together complement the within-CAE symptom measures, adding to the in-depth and multi-faceted understanding of a drug’s effects on symptoms. A short, 4-question questionnaire that focuses on the key aspects in which dry eye disrupts quality of life (daily activities, reading, watching television, and driving at night) has proven most valuable.

Assessments of visual function are a critical component to studying DED. The inter-blink interval visual acuity decay (IVAD) test provides a measurement of visual function in real time, identifying in the time between blinks when blurring occurs [52]. A suite of reading and contrast sensitivity tests [53] can also be incorporated into the CAE model. In the low contrast reading test, subjects are asked to read simple sentences at a constant print size in decreasing contrast levels. The IReST measures reading speed and errors under natural conditions, i.e., reading simple, standardized, and contextual paragraph texts. With the Wilkins reading test, reading rate is measured for 20 lines of text consisting of 15 simple words randomly arranged without context to eliminate any variability introduced by comprehension [54]. The menu reading test assesses scanning/reading function by asking subjects to read a simple restaurant menu.

Newer and more elaborate measures for evaluating DED include confocal microscopy, tear osmolarity, tear film lipid interferometry, meibography, and tear assaying for cytokines, enzymes, and other tear products. Pre- and post-CAE analysis of various mucins in tears has been integrated as a means of further classifying patients into subgroups predictive of treatment response to secretagogues. Implementation of these techniques across multiple sites and practices would require standardization and inter-rater reliability data to assure their sensitivity and specificity.

Understanding the pharmacological target of a candidate therapeutic agent’s mechanism of action in DED is critical for selecting the sign or symptom endpoint that will best demonstrate drug efficacy. Endpoints must be standardized, reliable, reproducible, and possess the sensitivity to detect clinically relevant changes caused by the agent undergoing evaluation. The timing and order of these endpoint assessments, as well as rigorous investigator training to ensure consistency between visits and sites, are key factors to the success of a study. By assessing these precise standardized endpoints tailored for use in dry eye before and after a CAE challenge, a drug’s activity during conditions of ocular surface stress is reliably evaluated.

A Sampling of CAE Trials

Regardless of a drug’s mechanism of action, its evaluation in the context of a CAE challenge provides a deeper understanding of how a drug can improve DED. Many dry eye therapeutic candidates have been assessed using the CAE either as an efficacy endpoint or for the purposes of subject selection. Anti-inflammatory agents of diverse mechanisms (free radical scavengers, steroids, cytokine inhibitors, integrin inhibitors, calcineurin inhibitors, SYK inhibitors, etc.), secretagogues, wound healing promoters, barrier function molecules, hormonal therapies, and devices have been assessed with the aid of a CAE challenge. Several of these studies have been published. Positive findings have been reported for treatment of DED with iontophoresis of dexamethasone phosphate in the CAE chamber [55]. CAE was a critical component in one Phase 2 and in one Phase 3 study of lifitegrast [56, 57]. A thymosin β4 peptide was tested in another Phase 2 CAE trial and positive results were shown for discomfort scores in the CAE after a month of treatment [50]. Mucogenic agents such as MIM-D3, a selective tyrosine kinase (TrkA) receptor agonist and secretagogue, have been shown to reduce the pre- to post-CAE change in staining that occurs in dry eye subjects [39]. The mitochondrial antioxidant, SkQ1, was shown to be effective in improving central corneal staining, lid margin redness, and dry eye symptoms [51]. Among others, Phase 2 CAE studies were also completed with resolvin, an endogenous immune response mediator [58], and a novel formulation of cyclosporine that has been approved for marketing in the EU (Ikervis®, Santen) [59].

The CAE can also be used to evaluate the effects of contact lenses, solutions, or tear supplements in both DED and normal subjects. Situational dry eye is very common in normal subjects who experience contact lens-associated dryness and ocular surface discomfort due to adverse environmental conditions or behaviors such as intensive visual tasking or monitor use. Barrier protection will ameliorate these CAE-induced signs and symptoms of ocular distress even in normal subjects. Evidence of barrier protection in a CAE exposure would be an important differentiator for clinicians challenged with the hundreds of products marketed and the need to make informed recommendations to patients. One example of this was the finding that senofilcon A lenses provided greater protection from ocular discomfort during CAE exposure than habitual lens wear or no lens wear [40]. The mucosal drying effect of antihistamines is exacerbated with a CAE exposure, providing greater magnitudes of change that allow for differentiation among products. An early CAE study showed that a 4-day loratadine treatment was associated with more dryness and 93% more corneal and conjunctival staining after CAE than cetirizine [40]. Finally, the CAE was also used to validate the OPI 2.0 method of assessing ocular surface compromise as a function of mean break up area across the cornea between blinks [49].

While the CAE is an experimental method that is not for use in widespread clinical testing or for diagnosis of potential DED patients, the information collected from these studies is very valuable to practicing clinicians. Without the noise created by the widely varying external milieu, clinicians can understand how a drug affects DED, whether as a stimulator of aqueous production, a mucogenic protector, an anti-inflammatory, or a simple barrier to evaporation. Future treatments of DED are forthcoming, such as the Allergan intranasal device for tear stimulation (https://clinicaltrials.gov/ct2/show/NCT02798289). Regulatory agencies and clinicians all now recognize that subjects are tired of ineffectual drops that they must continually instill, or therapies that work in only small subgroups of patients. Therapy may indeed have to be combinations of complementary drugs/devices as is used in ocular hypertension and glaucoma, and identifying treatments that might target symptoms or signs separately is essential now that we know these might be independent components of the disease. The ability to compare and contrast onset and duration of activity within the same paradigm also informs the clinician on how new or existing products will behave outside of the clinic. The CAE in fact provides an essential grounding platform for studying the many facets of the fast moving target that is dry eye disease.

Conclusion

To conclude, the CAE reproduces in a safe, clinical setting the challenges and situations that dry eye subjects encounter every day. As a tool in clinical trials, the CAE reduces or mitigates the variability that plagues dry eye studies and limits their ability to demonstrate the effects of interventions. The CAE can be used as an enrichment tool to enroll patients with modifiable and appropriate signs and symptoms of DED, as well as an endpoint that demonstrates drug efficacy. The CAE can be tailored to highlight the mechanism of action of a drug, ultimately making studies smaller in scale, better standardized, and more precise. The information culled from these studies is useful to clinicians who ideally might match a drug’s mechanism of action and activity in the CAE to the type of dry eye of the subject.

References

Schein OD, et al. Prevalence of dry eye among the elderly. Am J Ophthalmol. 1997;124(6):723–8.

Moss SE, Klein R, Klein BE. Prevalence of and risk factors for dry eye syndrome. Arch Ophthalmol. 2000;118(9):1264–8.

Brewitt H, Sistani F. Dry eye disease: the scale of the problem. Surv Ophthalmol. 2001;45(Suppl 2):S199–202.

Schaumberg DA, et al. Prevalence of dry eye syndrome among US women. Am J Ophthalmol. 2003;136(2):318–26.

Schaumberg DA, Sullivan DA, Dana MR. Epidemiology of dry eye syndrome. Adv Exp Med Biol. 2002;506(Pt B):989–98.

Montes-Mico R. Role of the tear film in the optical quality of the human eye. J Cataract Refract Surg. 2007;33(9):1631–5.

Montes-Mico R, et al. The tear film and the optical quality of the eye. Ocul Surf. 2010;8(4):185–92.

Pflugfelder SC, Stern ME. Mucosal environmental sensors in the pathogenesis of dry eye. Expert Rev Clin Immunol. 2014;10(9):1137–40.

Pflugfelder SC. Antiinflammatory therapy for dry eye. Am J Ophthalmol. 2004;137(2):337–42.

Rodriguez JD, et al. Investigation of extended blinks and interblink intervals in subjects with and without dry eye. Clin Ophthalmol. 2013;7:337–42.

Goto E, et al. Impaired functional visual acuity of dry eye patients. Am J Ophthalmol. 2002;133(2):181–6.

Rodriguez JD, et al. Diurnal tracking of blink and relationship to signs and symptoms of dry eye. Cornea. 2016;35(8):1104–11.

Friedman NJ. Impact of dry eye disease and treatment on quality of life. Curr Opin Ophthalmol. 2010;21(4):310–6.

Iyer JV, Lee SY, Tong L. The dry eye disease activity log study. SciWorldJ. 2012;2012:589875.

Le Q, et al. Impact of dry eye syndrome on vision-related quality of life in a non-clinic-based general population. BMC Ophthalmol. 2012;12:22.

Miljanovic B, et al. Impact of dry eye syndrome on vision-related quality of life. Am J Ophthalmol. 2007;143(3):409–15.

Gao J, et al. ICAM-1 expression predisposes ocular tissues to immune-based inflammation in dry eye patients and Sjogrens syndrome-like MRL/lpr mice. Exp Eye Res. 2004;78(4):823–35.

Stern ME, et al. A unified theory of the role of the ocular surface in dry eye. Adv Exp Med Biol. 1998;438:643–51.

Kunert KS, et al. Analysis of topical cyclosporine treatment of patients with dry eye syndrome: effect on conjunctival lymphocytes. Arch Ophthalmol. 2000;118(11):1489–96.

Gao J, et al. The role of apoptosis in the pathogenesis of canine keratoconjunctivitis sicca: the effect of topical cyclosporin A therapy. Cornea. 1998;17(6):654–63.

Pflugfelder SC, et al. Epithelial-immune cell interaction in dry eye. Cornea. 2008;27(Suppl 1):S9–11.

Begley CG, et al. The relationship between habitual patient-reported symptoms and clinical signs among patients with dry eye of varying severity. Invest Ophthalmol Vis Sci. 2003;44(11):4753–61.

Nichols KK, Nichols JJ, Mitchell GL. The lack of association between signs and symptoms in patients with dry eye disease. Cornea. 2004;23(8):762–70.

Schein OD, et al. Relation between signs and symptoms of dry eye in the elderly. A population-based perspective. Ophthalmology. 1997;104(9):1395–401.

Casavant J, et al. A correlation between the signs and symptoms of dry eye and the duration of dry eye diagnosis. Invest Ophthalmol Vis Sci. 2005;46:E-abstract 4455.

Dartt DA. Dysfunctional neural regulation of lacrimal gland secretion and its role in the pathogenesis of dry eye syndromes. Ocul Surf. 2004;2(2):76–91.

Belmonte C, Acosta MC, Gallar J. Neural basis of sensation in intact and injured corneas. Exp Eye Res. 2004;78(3):513–25.

Rosenthal P, Borsook D. The corneal pain system. Part I: the missing piece of the dry eye puzzle. Ocul Surf. 2012;10(1):2–14.

Abelson MB, et al. Dry eye syndromes: diagnosis, clinical trials and pharmaceutical treatment—‘improving clinical trials’. Adv Exp Med Biol. 2002;506(Pt B):1079–86.

Ousler GW, et al. Methodologies for the study of ocular surface disease. Ocul Surf. 2005;3(3):143–54.

Wolkoff P, et al. Eye complaints in the office environment: precorneal tear film integrity influenced by eye blinking efficiency. Occup Environ Med. 2005;62(1):4–12.

Yazici A, et al. Change in tear film characteristics in visual display terminal users. Eur J Ophthalmol. 2015;25(2):85–9.

Ridder WH 3rd, et al. Impaired visual performance in patients with dry eye. Ocul Surf. 2011;9(1):42–55.

Dawson AG. Medical aspects of air travel. In: DuPont HL, Steffen R, editors. Textbook of travel medicine and health, 2nd ed. Toronto: B.C. Decker; 2001. p. 390–408.

Ousler GW 3rd, et al. Evaluation of the time to “natural compensation” in normal and dry eye subject populations during exposure to a controlled adverse environment. Adv Exp Med Biol. 2002;506(Pt B):1057–63.

Nelson JD, et al. The international workshop on meibomian gland dysfunction: report of the definition and classification subcommittee. Invest Ophthalmol Vis Sci. 2011;52(4):1930–7.

Baudouin C, et al. Revisiting the vicious circle of dry eye disease: a focus on the pathophysiology of meibomian gland dysfunction. Br J Ophthalmol. 2016;100(3):300–6.

Misra SL, Braatvedt GD, Patel DV. Impact of diabetes mellitus on the ocular surface: a review. Clin Exp Ophthalmol. 2016;44(4):278–88.

Meerovitch K, et al. Safety and efficacy of MIM-D3 ophthalmic solutions in a randomized, placebo-controlled Phase 2 clinical trial in patients with dry eye. Clin Ophthalmol. 2013;7:1275–85.

Ousler GW 3rd, Anderson RT, Osborn KE. The effect of senofilcon A contact lenses compared to habitual contact lenses on ocular discomfort during exposure to a controlled adverse environment. Curr Med Res Opin. 2008;24(2):335–41.

Rodriguez JD, et al. Automated grading system for evaluation of corneal superficial punctate keratitis associated with dry eye. Invest Ophthalmol Vis Sci. 2015;56:2340–7.

Abelson MB, et al. Alternative reference values for tear film break up time in normal and dry eye populations. Adv Exp Med Biol. 2002;506(Pt B):1121–5.

Nally L, Ousler G, Abelson M. Ocular discomfort and tear film break-up time in dry eye patients: a correlation. Invest Ophthalmol Vis Sci. 2000;41(4):1436.

Rodriguez JD, et al. Automated grading system for evaluation of ocular redness associated with dry eye. Clin Ophthalmol. 2013;7:1197–204.

Abelson R, et al. Measurement of ocular surface protection under natural blink conditions. Clin Ophthalmol. 2011;5:1349–57.

Abelson R, et al. Validation and verification of the OPI 2.0 System. Clin Ophthalmol. 2012;6:613–22.

Johnston PR, et al. The interblink interval in normal and dry eye subjects. Clin Ophthalmol. 2013;7:253–9.

Ousler GW 3rd, et al. The ocular protection index. Cornea. 2008;27(5):509–13.

Abelson R, et al. A single-center study evaluating the effect of the controlled adverse environment (CAE℠) model on tear film stability. Clin Ophthalmol. 2012;6:1865–72.

Sosne G, Ousler GW. Thymosin beta 4 ophthalmic solution for dry eye: a randomized, placebo-controlled, Phase II clinical trial conducted using the controlled adverse environment (CAE) model. Clin Ophthalmol. 2015;9:877–84.

Petrov A, et al. SkQ1 ophthalmic solution for dry eye treatment: results of a phase 2 safety and efficacy clinical study in the environment and during challenge in the controlled adverse environment model. Adv Ther. 2016;33(1):96–115.

Torkildsen G. The effects of lubricant eye drops on visual function as measured by the inter-blink interval visual acuity decay test. Clin Ophthalmol. 2009;3:501–6.

Ousler GW 3rd, et al. Optimizing reading tests for dry eye disease. Cornea. 2015;34(8):917–21.

Ridder WH 3rd, Zhang Y, Huang JF. Evaluation of reading speed and contrast sensitivity in dry eye disease. Optom Vis Sci. 2013;90(1):37–44.

Patane MA, et al. Ocular iontophoresis of EGP-437 (dexamethasone phosphate) in dry eye patients: results of a randomized clinical trial. Clin Ophthalmol. 2011;5:633–43.

Semba CP, et al. A phase 2 randomized, double-masked, placebo-controlled study of a novel integrin antagonist (SAR 1118) for the treatment of dry eye. Am J Ophthalmol. 2012;153(6):1050–60.e1.

Sheppard JD, et al. Lifitegrast ophthalmic solution 5.0% for treatment of dry eye disease: results of the OPUS-1 phase 3 study. Ophthalmology. 2014;121(2):475–83.

Hessen M, Akpek EK. Dry eye: an inflammatory ocular disease. J Ophthalmic Vis Res. 2014;9(2):240–50.

Lallemand F, et al. Successfully improving ocular drug delivery using the cationic nanoemulsion, novasorb. J Drug Deliv. 2012;2012:604204.

Condon PI, et al. Double blind, randomised, placebo controlled, crossover, multicentre study to determine the efficacy of a 0.1% (w/v) sodium hyaluronate solution (Fermavisc) in the treatment of dry eye syndrome. Br J Ophthalmol. 1999;83(10):1121–4.

Liu X, et al. The effect of topical pranoprofen 0.1% on the clinical evaluation and conjunctival HLA-DR expression in dry eyes. Cornea. 2012;31(11):1235–9.

Yanai K, et al. Corneal sensitivity after topical bromfenac sodium eye-drop instillation. Clin Ophthalmol. 2013;7:741–4.

Jauhonen HM, et al. Cis-urocanic acid inhibits SAPK/JNK signaling pathway in UV-B exposed human corneal epithelial cells in vitro. Mol Vis. 2011;17:2311–7.

Sosne G, Kleinman HK. Primary mechanisms of thymosin beta4 repair activity in dry eye disorders and other tissue injuries. Invest Ophthalmol Vis Sci. 2015;56(9):5110–7.

Sall K, et al. Two multicenter, randomized studies of the efficacy and safety of cyclosporine ophthalmic emulsion in moderate to severe dry eye disease. CsA Phase 3 Study Group. Ophthalmology. 2000;107(4):631–9.

Liew SH, et al. Tofacitinib (CP-690,550), a Janus kinase inhibitor for dry eye disease: results from a phase 1/2 trial. Ophthalmology. 2012;119(7):1328–35.

Leonardi A, et al. Efficacy and safety of 0.1% cyclosporine A cationic emulsion in the treatment of severe dry eye disease: a multicenter randomized trial. Eur J Ophthalmol. 2016;26(4):287–96.

Matsumoto Y, et al. Efficacy and safety of diquafosol ophthalmic solution in patients with dry eye syndrome: a Japanese phase 2 clinical trial. Ophthalmology. 2012;119(10):1954–60.

Kinoshita S, et al. A randomized, multicenter phase 3 study comparing 2% rebamipide (OPC-12759) with 0.1% sodium hyaluronate in the treatment of dry eye. Ophthalmology. 2013;120(6):1158–65.

Soni NG, Jeng BH. Blood-derived topical therapy for ocular surface diseases. Br J Ophthalmol. 2016;100(1):22–7.

Lambiase A, et al. A two-week, randomized, double-masked study to evaluate safety and efficacy of lubricin (150 μg/mL) eye drops versus sodium hyaluronate (HA) 0.18% eye drops (Vismed®) in patients with moderate dry eye disease. Ocul Surf. 2017;15(1):77–87.

Welch D, et al. Ocular drying associated with oral antihistamines (loratadine) in the normal population - an evaluation of exaggerated dose effect. Adv Exp Med Biol. 2002;506(Pt B):1051–5.

Ousler GW, et al. An evaluation of the ocular drying effects of 2 systemic antihistamines: loratadine and cetirizine hydrochloride. Ann Allergy Asthma Immunol. 2004;93(5):460–4.

Acknowledgements

This review was funded by Ora, Inc. All named authors meet the International Committee of Medical Journal Editors (ICMJE) criteria for authorship for this manuscript, take responsibility for the integrity of the work as a whole, and have given final approval for the version to be published.

Disclosures

George Ousler is an employee of Ora, Inc. Lisa Smith is an employee of Ora, Inc. David Rimmer is an employee of Ora, Inc. Mark Abelson is the founder of Ora, Inc. He is retired and no longer has any Board or management capacity at Ora. His wife, Annalee, and sons, Richard and Stuart, are board members of Ora, Inc. Stuart is also CEO and an employee of Ora, Inc. There are no other relationships, conflicts of interest, or commercial relationships to disclose for these authors or their family members. There are also no corporate appointments, consultancies, stock ownership, or other equity interests or patent licensing to them or their families in connection with products or companies mentioned in this article or bearing on the subject matter of the article. Ora has a proprietary and financial interest in various measures, endpoints and technologies in the context of dry eye that are in development for implementation in clinical trials. The CAE chamber is owned and operated by Ora, Inc. Companies mentioned in the article with regard to therapeutics for dry eye sponsored past clinical trials run by Ora, Inc. There are no other pertinent financial relationships of the authors or their families with regard to products or technology mentioned in this review.

Compliance with Ethics Guidelines

This article is based on previously conducted studies and does not involve any new studies of human or animal subjects performed by any of the authors.

Open Access

This article is distributed under the terms of the Creative Commons Attribution-NonCommercial 4.0 International License (http://creativecommons.org/licenses/by-nc/4.0/), which permits any noncommercial use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Additional information

Enhanced content

To view enhanced content for this article go to http://www.medengine.com/Redeem/EB4CF0607B3DD918.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0), which permits use, duplication, adaptation, distribution, and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ousler, G.W., Rimmer, D., Smith, L.M. et al. Use of the Controlled Adverse Environment (CAE) in Clinical Research: A Review. Ophthalmol Ther 6, 263–276 (2017). https://doi.org/10.1007/s40123-017-0110-x

DOI: https://doi.org/10.1007/s40123-017-0110-x