Abstract

The stress–strength parameter \(R = P(Y < X)\), as a reliability parameter, is considered in different statistical distributions. In the present paper, the stress–strength reliability is estimated based on progressively type II censored samples, in which \(X\) and \(Y\) are two independent random variables with inverse Gaussian distributions. The maximum likelihood estimate of \(R\) via expectation–maximization algorithm and the Bayes estimate of \(R\) are obtained. Furthermore, we obtain the bootstrap confidence intervals, HPD credible interval and confidence intervals based on generalized pivotal quantity for \(R\). Additionally, the performance of point estimators and confidence intervals are evaluated by a simulation study. Finally, the proposed methods are conducted on a set of real data for illustrative purposes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The estimation of stress–strength parameter for showing system efficiency is one of the key subjects in statistical inference, which can be used in various sciences such as lifetime, reliability of mechanical systems, statistics and biostatistics (Simonoff et al. [44]). In reliability, the \(R\) parameter, which describes lifetime for a particular system, places the strength \(X\) against the stress \(Y\). The idea that a system will be disturbed if stress \(Y\) overcomes the strength \(X\) originated by Birnbaum [11]. For example, in clinical studies if \(X\) and \(Y\) are the response of the control group to a therapeutic approach and the response of the treated group, respectively (see Hauck et al. [26]), then \(R\) can be seen as the measure of treatment effect. The readers are strongly urged to refer to Kotz et al. [30] for further details about the stress–strength reliability. Furthermore, the estimation of stress–strength parameters under some statistical distributions has been studied by many authors, such as Awad et al. [5], Gupta and Gupta [23], Ahmad et al. [1], Kundu and Gupta [31]. In addition, various statistical distributions based on different types of data have been considered in various studies. For instance, the estimation of \(P(Y < X)\) was used by Rezaei et al. [40] in generalized Pareto distributions. In another study performed by Hajebi et al. [24], a confidence interval was calculated for \(R\) in generalized exponential distributions. The estimation of \(R\) has been considered by Huang et al. [27] for \(X\) and \(Y\) with gamma distributions. In a study conducted by Asgharzadeh et al. [4], the estimation of \(R\) was proposed in generalized logistic distributions. Moreover, the estimation of the stress–strength reliability \(R = P(Y < X)\) was addressed by Iranmanesh et al. [28] when \(X\) and \(Y\) were two independent inverted gamma distributions. Besides, the maximum likelihood (ML) and Bayesian estimates of stress–strength reliability were addressed by Baklizi [6, 7] for two-parameter exponential distributions based on records. Further, the estimation of \(R\) based on the upper record values in two-parameter bathtub-shaped lifetime distribution was studied by Tarvirdizadeh and Ahmadpour [46].

In many lifetime tests, the cases studied by the experimenter are those in which the units are removed before the failure of the experiment. The process of removal may be unintentional or planned by the experimenter in advance. To further explicate the matter, in clinical experiments, individuals might refuse to continue the experiment due to personal reasons, or the test may be terminated before observing all patients whose lifetime takes longer years. In such cases, the experimenter would face censored data. Hence, a censoring scheme should be particularly defined. One of the key forms of censoring is progressively type II censored sample scheme, which is described as follows: Let \(R = \left( {R_{1} ,R_{2} , \ldots ,R_{m} } \right)\) be a given vector which is defined by the experimenter and indicates the removal pattern of some experimental units before termination. The number of experimental units is represented by \(n\) in this censored scheme \(\left( {m < n} \right)\). Through observing the first failure, \(R_{1}\) of the survival units is randomly removed from the \(n - 1\) units remaining from the experiment. Likewise, \(R_{2}\) of the \(n - 2 - R_{1}\) units is randomly removed when the second failure is observed, and the experiment continues until the mth failure, in which all of the remaining units \(R_{m} = n - m - \mathop \sum \nolimits_{i = 1}^{m - 1} R_{i}\) are removed. The progressively type II censored sample scheme is denoted by \(R = \left( {R_{1} ,R_{2} , \ldots ,R_{m} } \right)\). If \(R_{1} = R_{2} = \cdots = R_{m - 1} = 0\) and \(R_{m} = n - m\), then the censoring scheme is type II censoring.

For further information about the theory, methods and applications of progressively type II censored data, the readers are strongly encouraged to refer to the book authored by Balakrishnan and Aggarwala [8]. In addition, the development of various types of censoring schemes, the likelihood and Bayesian inference of parameters of different distributions based on censored data, and their applications in lifetime analysis and reliability can be found in a book authored by Balakrishnan and Cramer [9]. It is worth mentioning that some of the studies have been carried out about the estimation of stress–strength reliability for some distributions under progressively type II censoring. For example, the estimation of \(R\) in exponential distributions under censored data has been investigated by Saraoglua et al. [41], and the stress–strength reliability of Weibull and inverse Weibull distributions has been studied under progressively censored data by Valiollahi et al. [49] and Yadav et al. [52], respectively. In another study carried out by Shoaee and Khorram [43], the estimation of the stress–strength reliability \(R = P(Y < X)\) was considered based on progressively type II censored samples when \(X\) and \(Y\) were two independent two-parameter bathtub-shaped lifetime distributions. Besides, Akdam et al. [3] obtained the estimation of \(R\) in the exponential power distributions based on censored data.

In the present article, the point and confidence interval estimation of stress–strength reliability \(R\) are investigated under progressively type II censored data when \(X\) and \(Y\) have inverse Gaussian distributions with zero drift \(\left( {IG\left( {\infty ,\lambda } \right)} \right)\) with the following probability density functions (pdfs)

and cumulative distribution functions (cdfs)

respectively, where \(\varPhi \left( \cdot \right)\) is the cdf of standard normal distribution.

Tweedie [48] conducted initial studies on the statistical properties of inverse Gaussian distribution, and various real-life applications were reported for inverse Gaussian distribution by Chhikara and Folks [13,14,15]. Moreover, in a study conducted by Padgett and Wei [35], the ML estimators of three-parameter inverse Gaussian distribution were obtained. Furthermore, some examples and more real-life applications were suggested by Chhikara and Folks [16], Johnson et al. [29] and Seshadri [42] for inverse Gaussian distribution in reliability and lifetime data analysis. In studies done by Padgett [36] and Sinha [45], the Bayes estimate of parameters and reliability function of two-parameter inverse Gaussian distribution were calculated for different types of prior distributions. Moreover, the Gibbs sampling method was used by Ahmad and Jaheen [2] and Pandey and Bandyopadhyay [37] to approximate the Bayes estimate of parameters. In other studies performed by Patel [39] and Basak and Balakrishnan [10], the parameters of inverse Gaussian distribution were assessed based on censored data.

The inverse Gaussian distribution can be used for modeling lifetime, failure time and reliability of a system, and this distribution has various applications in modeling and analyzing positively skewed data. On the other hand, we will usually encounter censored data in life testing experiments, especially progressively type II censored data which contain other type of censoring. These motivate us to estimate the stress–strength parameter \(R\) in inverse Gaussian distribution under progressively type II censored data.

As for the present study, the \(R\) parameter is estimated using Bayes and ML (via expectation–maximization (EM)) methods. In addition, three intervals (namely bootstrap confidence, HPD credible and confidence interval based on generalized pivotal quantity (GPQ)) are obtained.

The rest of the paper is organized as follows. In Sect. 2, the ML estimate of \(R\) is calculated using the fixed point iteration method and EM algorithm. In this section, the Bayes estimate of \(R\) is also calculated using the Gibbs sampling method. Next, in Sect. 3, the normal, percentile, Student’s t bootstrap confidence intervals (CIs), Bayesian HPD credible interval and a confidence interval based on GPQ are presented. Subsequently, the Monte Carlo simulation is used to compare the performance of various estimators and CIs in Sect. 4, and after that in Sect. 5, real data are analyzed for illustrative purposes. Finally, the conclusions are presented in Sect. 6.

Estimation of \(\varvec{R}\)

Let \(X \sim IG\left( {\infty ,\lambda_{1} } \right)\) and \(Y \sim IG\left( {\infty ,\lambda_{2} } \right)\) be independent random variables with pdfs given in (1). Let \(R = P(Y < X)\) be the stress–strength reliability. Then,

In this section, the ML and Bayes estimates of \(R\) are obtained under progressively type II censored data.

Maximum likelihood estimation

If \(\varvec{X} = \left( {X_{{1;m_{1} :n_{1} }} , \ldots ,X_{{m_{1} ;m_{1} :n_{1} }} } \right)\) is a progressively type II censored sample from \(IG\left( {\infty ,\lambda_{1} } \right)\) with scheme \(R_{1} = \left( {R_{1} ,R_{2} , \ldots ,R_{{m_{1} }} } \right)\), and \(\varvec{Y} = \left( {Y_{{1;m_{2} :n_{2} }} , \ldots ,Y_{{m_{2} ;m_{2} :n_{2} }} } \right)\) is a progressively type II censored sample from \(IG\left( {\infty ,\lambda_{2} } \right)\) with scheme \(R_{2}^{\prime } = \left( {R_{1}^{\prime } ,R_{2}^{\prime } , \ldots ,R_{{m_{2} }}^{\prime } } \right)\), then the likelihood function of \(\lambda_{1}\) and \(\lambda_{2}\) is given by (Balakrishnan and Cramer [9])

where

Upon using (1) and (2), the likelihood (4) reduces to

From (5) the log-likelihood function of the observed data \(\varvec{x}\) and \(\varvec{y}\) is

and likelihood equations of \(\lambda_{1}\) and \(\lambda_{2}\) are obtained as

where \(\varphi \left( \cdot \right)\) is the pdf and \(\varPhi \left( \cdot \right)\) is the cdf of standard normal distribution and \(\hat{\lambda }_{1}\) and \(\hat{\lambda }_{2}\) are the ML estimators of the parameters \(\lambda_{1}\) and \(\lambda_{2}\) which is obtained by solving nonlinear Eqs. (6) and (7).

From (6) and (7), \(\hat{\lambda }_{1}\) and \(\hat{\lambda }_{2}\) can be obtained as a fixed point solution of nonlinear equations of the form \(h_{i} \left( {\lambda_{i} } \right) = \lambda_{i} ,\; i = 1,2\) where

Since \(\hat{\lambda }_{i} , i = 1,2\) is a fixed point solution of these nonlinear equations, they can, therefore, be obtained by using a simple iterative procedure as \(h_{i} \left( {\lambda_{ij} } \right) = \lambda_{i,j + 1} , i = 1,2\), where \(\lambda_{ij}\) is the jth iteration of \(\hat{\lambda }_{i}\). The iteration procedure should be stopped when \(\left| {\lambda_{ij} - \lambda_{i,j + 1} } \right|\) is sufficiently small. Therefore, the ML estimate of the stress–strength reliability is computed to be

EM algorithm

To find the ML estimates of \(\lambda_{1} ,\;\lambda_{2}\) and \(R\), numerical methods such as Newton–Raphson (NR) should be used to solve nonlinear Eqs. (6) and (7) or fixed point iteration method \(\lambda_{i,j + 1} = h_{i} \left( {\lambda_{ij} } \right)\). It should be noted that these methods were sensitive to their initial parameter values and did not converge in the all cases. Another way to compute the ML estimates is the EM algorithm. The EM algorithm, first introduced by Dempster et al. [17], is an iterative method with many applications in the calculation of the ML of incomplete and missing data. For more information on the EM algorithm and its applications, the readers are strongly urged to refer to the book authored by McLachlan and Krishnan [32]. Each iteration of the EM algorithm encompasses of two steps: (i) In the E-step, any incomplete data are replaced by their expected values, and (ii) in the M-step, the likelihood function is maximized with the observed data and the expected value of the missing data to produce an update of the parameter estimates. Moreover, the E- and M-steps continue to estimate the ML of parameters until convergence. The censored data can be regarded as missing data; therefore, the EM algorithm can be used to estimate the ML of parameters based on the type II censoring data (see Ng et al., [33]). The observed and censored data can be shown as follows:

respectively, where \(Z_{j}\) is a \(1 \times R_{j}\) vector with \(Z_{j} = \left( {Z_{j1} ,Z_{j2} , \ldots ,Z_{{jR_{j} }} } \right)\) for \(j = 1,2, \ldots ,m\). The vector of censored data \(\varvec{Z}\) can be considered as missing data. By combining the observed data \(\varvec{X}\) and the censored data \(\varvec{Z}\), the complete data set \(\varvec{W}\) is obtained. The corresponding log-likelihood function is represented by \(l_{c} \left( {\varvec{W};\theta } \right).\) As for the inverse Gaussian distribution, there is

The ML estimate of the parameter based on the complete data, using the derivatives of log-likelihood function with respect to \(\lambda\), can be obtained by solving the equation

The EM algorithm consists of two steps: the E-step and the M-step. In the E-step, the missing data are estimated given the observed data and the current estimate of the model parameters, say \(\theta_{\left( h \right)} .\) Then, the E-step of the algorithm requires the computation of

which mainly involves the computation of the conditional expectation of functions of \(\varvec{Z}\) conditional on the observed values \(\varvec{X}\) and the current value of the parameters. Therefore, to facilitate the EM algorithm, the conditional distribution of \(\varvec{Z}\), conditional on \(\varvec{X}\) and the current value of the parameters, needs to be determined. Given \(X_{1;m:n} = x_{1;m:n} , \ldots ,X_{j;m:n} = x_{j;m:n}\), the conditional distribution of \(Z_{jk} , k = 1,2, \ldots ,R_{j}\) is given by

where \(Z_{jk}\) and \(Z_{jl} ,\;k \ne l,\) are conditionally independent given \(X_{j;m:n} = x_{j;m:n}\) (see Ng et al. [33]). According to (9), as for the inverse Gaussian distribution, there is

To obtain the required conditional expectation in the EM algorithm, the recurrence relation is written for the moments in our case, (Patel, [38]). It should be noted that

where

Taking partial differential of (10) with respect to \(z_{jk}\), there is

Multiplying both sides of Eq. (11) by \(z_{jk}^{r}\) and then integrating, a recurrence relation for the moments can be obtained as follows:

where

Thus, in the maximization or M-step of the EM algorithm, the value of θ which maximizes \(Q\left( {\theta |\theta_{\left( h \right)} } \right)\) will be used as the next estimate of \(\theta_{{\left( {h + 1} \right)}}\). In the M-step of the \(\left( {h + 1} \right)\) th iteration of the EM algorithm, using Eq. (8), the value of \(\lambda_{{\left( {h + 1} \right)}}\) is obtained by solving the equation

where the desired expectation is computed from Eq. (12) when \(r = 1\), i.e.,

So,

By repeating E- and M-steps until convergence occurs, the ML estimate of \(\lambda\) can be obtained. As a starting value, \(\lambda_{\left( 0 \right)}\) can be computed based on the pseudo-complete sample by replacing the censored observations \(Z_{j}\) by \(X_{j;m:n} , j = 1,2, \ldots ,m\), i.e.,

and hence,

Bayes estimation of \(R\)

In this subsection, the Bayes estimation of \(R\) is obtained under the assumption that \(\lambda_{1} ,\;\lambda_{2}\) are unknown. Let us assume that \(\lambda_{1}\) and \(\lambda_{2}\) have conjugate priors \({\text{Gamma}}\left( {\alpha_{1} ,\beta_{1} } \right)\) and \({\text{Gamma}}\left( {\alpha_{2} ,\beta_{2} } \right)\) with pdfs given in (14), respectively. The likelihood function of \(\left( {\lambda_{1} ,\lambda_{2} } \right)\) is given by

The prior distribution of \(\lambda_{1}\) and \(\lambda_{2}\) is given by

where \(\alpha_{1} ,\;\beta_{1} ,\;\alpha_{2}\) and \(\beta_{2}\) are known. Therefore, the joint posterior density of \(\lambda_{1} ,\;\lambda_{2}\) is given by

where the numerator using (13) and (14) is given by

According to the form of the posterior density, it is obvious that the explicit Bayes estimate of parameters cannot be obtained. Therefore, a simulation technique is used to compute the Bayes estimate of \(R\). Moreover, the Gibbs sampling technique is used for the conditional posterior distributions of each parameter \(\left( {\lambda_{1} ,\;\lambda_{2} } \right).\) The conditional posterior distributions of \(\lambda_{1} ,\;\lambda_{2}\) are obtained as

The posterior pdfs of \(\lambda_{1}\) and \(\lambda_{2}\) do not follow a particular known pdfs, but their plots bear similarity to the normal distribution. Therefore, to generate random values from these distributions, the Metropolis–Hastings method is used with normal proposal distribution. Hence, the algorithm of Gibbs sampling is described in Algorithm 1.

Now, under the square error loss function, the approximate Bayes estimate of \(R\) is the posterior mean and is given by

where \(M\) is the burn-in period (that is, a number of iterations before the stationary distribution is achieved).

Confidence Interval for \(R\)

In this section, various methods are used to find confidence interval for the stress–strength reliability parameter \(R\).

Bootstrap CIs

It is difficult to obtain the distribution of \(\hat{R}\), the ML estimator of \(R\). Therefore, it is impossible to obtain the exact confidence interval of \(R\). For this reason, the parametric bootstrap method is used for construction of the confidence interval of \(R\), which was first proposed by Efron and Tibshirani [19]. Firstly, using the algorithm suggested in Balakrishnan and Cramer [9], the Algorithm 2 was performed to generate the progressively type II censored samples with censoring scheme \(R = \left( {R_{1} ,R_{2} , \ldots ,R_{m} } \right)\) from inverse Gaussian distribution.

Now, the Algorithm 3 can be used to generate parametric bootstrap samples.

Using the bootstrap samples of \(R\) generated from Algorithm 3, three different bootstrap CIs of \(R\) are obtained as follows:

(I) Standard normal interval:

\(100\left( {1 - \alpha } \right)\%\) bootstrap interval is the standard normal interval as

where \(\hat{s}e_{\text{boot}}\) is the bootstrap estimate of the standard error based on \(\hat{R}_{1}^{*} , \ldots ,\hat{R}_{B}^{*}\).

(II) Percentile bootstrap (Boot-p) interval (Efron [18]):

Let \(G\left( x \right) = P\left( {\hat{R}^{*} \le x} \right)\) be the cdf of \(\hat{R}^{*}\). Define \(\hat{R}_{Bp} = G^{ - 1} \left( x \right)\) for a given \(x\). Then approximate \(100\left( {1 - \alpha } \right)\%\) confidence interval for \(R\) is given by

that is, just use the \(\left( {\frac{\alpha }{2}} \right)\) and \(\left( {1 - \frac{\alpha }{2}} \right)\) quantiles of the bootstrap sample \(\hat{R}_{1}^{*} , \ldots ,\hat{R}_{B}^{*}\).

(III) Student’s t bootstrap (Boot-t) interval (see Hall [25]):

Let

where \(\hat{s}e_{b}^{*}\) is an estimate of the standard error of \(\hat{R}_{b}^{*}\). Then \(100\left( {1 - \alpha } \right)\%\) bootstrap Student’s t interval is given by

where \(t_{\alpha }^{*}\) is the \(\alpha\) quantile of \(\hat{T}_{1}^{*} , \ldots ,\hat{T}_{B}^{*}\).

HPD Credible Interval

In this subsection, the Bayesian method is used to construct the confidence interval for \(R\). Since the construction of HPD credible interval is difficult, the method proposed by Chen and Shao [12] is used to construct the HPD credible interval. Let \(R^{\left( t \right)} , t = M + 1, \ldots , B\) be the posterior sample generated by Algorithm 1 in Sect. 2.2. Then from Chen and Shao [12], the \(100\left( {1 - \alpha } \right) \%\) HPD credible interval is given by the shortest interval of the form \(\left( {R_{\left( i \right)} , R_{{\left( {i + \left[ {\left( {1 - \alpha } \right)B} \right]} \right)}} } \right)\), where \(R_{{\left[ {\left( {1 - \alpha } \right)B} \right]}}\) is the \(\left[ {\left( {1 - \alpha } \right)B} \right]\) th smallest integer of \(\left\{ {R^{\left( t \right)} , t = M + 1, \ldots ,B} \right\}\).

Confidence interval based on GPQ

The concepts of generalized p values and generalized confidence interval (GCI) were introduced by Tsui and Weerahandi [47] and Weerahandi [50], the base of whose studies was GPQ, which can be utilized for complicated problems in statistical inference. The readers are strongly urged to study the book authored by Weerahandi [51].

In this section, the GPQ for \(\lambda_{1}\) and \(\lambda_{2}\) parameters is going to be calculated, after which a confidence interval can be constructed for the stress–strength parameter. The problem of estimating the parameters of the inverse Gaussian distribution based on GPQ has been considered by Zhang [53].

Generalized pivotal quantity

Let \(\varvec{X} = \left( {X_{1} ,X_{2} , \ldots ,X_{n} } \right)\) be an observable random vector with the distribution depending on \(\lambda\). An observation from \(\varvec{X}\) is denoted by \(\varvec{x} = \left( {x_{1} ,x_{2} , \ldots ,x_{n} } \right)\). To construct a GCI for \(\lambda\), first define a GPQ, \(Q\left( {\varvec{X};\, \varvec{x},\lambda } \right)\), which is a function of the random vector \(\varvec{X}\), its observed value \(\varvec{x}\) and the parameter \(\lambda\). Generally, \(Q\left( {\varvec{X};\,\varvec{x},\lambda } \right)\) is a GPQ for \(\lambda\), if it satisfies the following two conditions:

-

1.

For a given \(\varvec{x}\), the distribution of \(Q\left( {\varvec{X};\,\varvec{x},\lambda } \right)\) is free from unknown parameters.

-

2.

The value of \(Q\left( {\varvec{X};\,\varvec{x},\lambda } \right)\) at \(\hat{\lambda } = \hat{\lambda }_{0}\) should be \(\lambda\), where \(\hat{\lambda }_{0}\) is a ML estimate of \(\lambda\) based on the observed sample \(\varvec{x}\).

Now if \(Q_{\beta } \left( {\varvec{X};\, \varvec{x},\lambda } \right)\) is a \(\beta\) th percentile of \(Q\left( {\varvec{X}; \, \varvec{x},\lambda } \right)\) distribution, then the \(100\left( {1 - \alpha } \right)\%\) GCI of \(\lambda\) is any value of \(\lambda\) that satisfies

To find a \(100\left( {1 - \alpha } \right)\%\) GCI of \(R\), the GPQ for \(\lambda_{1}\) and \(\lambda_{2}\) in inverse Gaussian distribution should be found.

If \(X_{1} ,X_{2} , \ldots ,X_{n}\) is a random sample of size \(n\) from \(IG\left( {\infty ,\lambda } \right)\), then the ML estimator of \(\lambda\) is \(\hat{\lambda } = n\left( {\sum\nolimits_{i = 1}^{n} {\frac{1}{{X_{i} }}} } \right)^{ - 1}\). Let \(U = \frac{\lambda }{{\hat{\lambda }}}\), then it is easy to verify that the distribution of \(U\) is free from \(\lambda\) (Gulati and Mi, [22]). Indeed, the likelihood equation is \(\frac{n\lambda }{{\hat{\lambda }}} = \sum\nolimits_{i = 1}^{n} {\frac{1}{{\frac{{x_{i} }}{\lambda }}}}\), where \(Y_{i} = \frac{{X_{i} }}{\lambda }\) has \(IG\left( {\infty ,1} \right)\)-distribution. So, the distribution of \(U = \frac{\lambda }{{\hat{\lambda }}}\) is free from \(\lambda\). Similarly, if \(\varvec{X} = \left( {X_{1;m:n} , \ldots ,X_{m;m:n} } \right)\) is a progressively Type II censored sample from \(IG\left( {\infty ,\lambda } \right)\) with scheme \(R = \left( {R_{1} ,R_{2} , \ldots ,R_{m} } \right)\) and ML estimator \(\hat{\lambda }\), then it is easy to show that distribution of \(U = \frac{\lambda }{{\hat{\lambda }}}\) is free from \(\lambda\).

Now, let \(\hat{\lambda }_{{\left( {1,0} \right)}}\) be a ML estimate of \(\lambda_{1}\) based on the observed sample \(\varvec{x} = \left( {x_{{1;m_{1} :n_{1} }} , \ldots ,x_{{m_{1} ;m_{1} :n_{1} }} } \right)\) from \(IG\left( {\infty ,\lambda_{1} } \right)\) distribution. Then,

is a GPQ for \(\lambda_{1}\), since

-

1.

The distribution of \(Q_{{\lambda_{1} }}\) does not depend on any unknown parameters.

-

2.

\(Q_{{\lambda_{1} }} = \lambda_{1}\) when \(\hat{\lambda }_{1} = \hat{\lambda }_{{\left( {1,0} \right)}}\).

Also, suppose \(\varvec{Y} = \left( {Y_{{1;m_{2} :n_{2} }} , \ldots ,Y_{{m_{2} ;m_{2} :n_{2} }} } \right)\) is a progressively Type II censored sample from \(IG\left( {\infty ,\lambda_{2} } \right)\). Similarly, the GPQ for \(\lambda_{2}\) is

where \(\hat{\lambda }_{2}\) is the ML estimator of \(\lambda_{2}\) and \(\hat{\lambda }_{{\left( {2,0} \right)}}\) is a ML estimate of \(\lambda_{2}\) based on the observed sample \(\varvec{y} = \left( {y_{{1;m_{2} :n_{2} }} , \ldots ,y_{{m_{2} ;m_{2} :n_{2} }} } \right)\).

Based on the above results, the GPQ for \(R\) can be found by replacing the unknown parameters in (3) by (21) and (22). Therefore,

It is clear that the distribution of \(Q_{R}\) is very complicated. Therefore, it is not possible to obtain exact confidence interval of \(R\), but for each \(\left( {\hat{\lambda }_{{\left( {1,0} \right)}} ,\hat{\lambda }_{{\left( {2,0} \right)}} } \right)\) the distribution of \(Q_{R}\) does not depend on any unknown parameters. Therefore, the GCI of \(R\) can be found by a Monte Carlo simulation through the Algorithm 4:

A simulation study

In this section, the performance of the point estimators and CIs presented in this paper is evaluated using a Monte Carlo simulation study. With 10,000 replications, the average absolute biases and root-mean-squared error (RMSE) of the ML estimator via the EM algorithm and the numerical procedure (fixed-point iteration) and the approximate Bayes estimator of \(R\) are reported.

where \(\hat{R}_{i}\) is an approximated Bayes estimate of \(R\) (see Gelman et al. [21]) in ith replication.

To compute the Bayes estimates and HPD credible intervals, we consider the four prior density functions as follows:

Prior 1: \(\alpha_{1} = \alpha_{2} = 1, \beta_{1} = \beta_{2} = 2\)

Prior 2:\(\alpha_{1} = \alpha_{2} = 1, \beta_{1} = \beta_{2} = 4\)

Prior 3:\(\alpha_{1} = \alpha_{2} = 3, \beta_{1} = \beta_{2} = 2\)

Prior 4:\(\alpha_{1} = \alpha_{2} = 3, \beta_{1} = \beta_{2} = 4\)

Based on our computations, the approximate Bayes estimates of \(R\) based on prior 4 functioned better than the Bayes estimators based on other priors in terms of both absolute biases and RMSEs. Therefore, the results are reported for Bayes estimator based on priors 4. In addition, the proposed intervals are compared in terms of their coverage probability and expected length.

Four sets of parameter values \(\left( {\lambda_{1} = 2,\lambda_{2} = 7} \right),\) \(\left( {\lambda_{1} = 7,\lambda_{2} = 7} \right), \left( {\lambda_{1} = 20,\lambda_{2} = 7} \right)\) and \(\left( {\lambda_{1} = 45,\lambda_{2} = 7} \right)\) are used to compare the performance of the different methods and censoring schemes. Therefore, from Eq. (3), \(R = 0.3125, 0.5, 0.6599, 0.7608\). For each set of these parameters, 10,000 data sets are generated and comparisons are performed between Bayes estimates and ML estimates based on numerical procedure and the EM algorithm. Also different CIs, such as bootstrap CIs, GCIs and the HPD credible intervals are computed and then compared in terms of their coverage probabilities and expected lengths.

Three censoring schemes are also used as given in Tables 1, 2 and 3. For convenience, the notation \(0^{*k}\) for k successive zeroes is introduced.

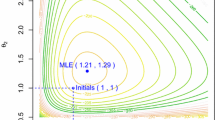

First, the ML estimate of \(R\) is calculated, and then the average estimates, absolute biases and RMSEs of the ML estimate and the approximate Bayes estimate of \(R\) based on priors 4 are computed. The coverage probability and expected length of the 95% CIs for parameter \(R\), such as the bootstrap CIs, namely the percentile interval (Boot-p) based on numerical procedure and EM algorithm, GCIs and the HPD credible intervals, are computed. To compute the bootstrap confidence intervals, 10,000 bootstrap iterations are used. The Bayes estimates and HPD credible intervals are also computed based on 10,000 samples and discard the first 2000 values as burn-in period. In order to examine the convergence of sequences obtained from the MCMC methods, one of the preliminary tools is plotting a graph to show the convergence to the target distribution. For more precisely monitoring the convergence of MCMC simulations, we also use the scale reduction factor estimate \(\hat{R} = \sqrt {\text{var} \left( \psi \right)/W}\) where \(\text{var} \left( \psi \right) = \left( {n - 1} \right)W/n + B/n\) with the iteration number \(n\) for each chain, and \({\text{B}}\) and \({\text{W}}\) are the between and within sequence variances, respectively (see Gelman et al. [21]). In our case, the scale factor value of the MCMC estimates is found below 1.1 which is an acceptable value for their convergence. Note that the censoring schemes \(\left( {r_{i} ,r_{j} } \right), i,j = 1,2,3\) are determined in Tables 1, 2 and 3 according to \(\left( {m_{1} ,m_{2} } \right)\) sample sizes of observed sample \(\varvec{x}\) and \(\varvec{y}\).

Based on our simulation results, from Table 4, the absolute bias for small and large values of \(R\) decreases while the sample size increases. However, as the sample size increases, the RMSE decreases for each value of \(R\), which is the case for all methods. It is observed that the RMSE of the estimators increased for values of \(R\) near \(0.5.\) Also, the absolute bias of all estimators is near zero, and for large values of \(R\), the absolute bias of ML estimator based on numerical procedure and EM algorithm is less than the Bayes estimator. Furthermore, the RMSE performance of the estimators is clear, and the Bayes estimator has smaller RMSE for all values of the stress–strength reliability.

The nominal level for the CIs or the credible intervals is 0.95 in each case. As can be seen in Table 5, the GCIs are close to the bootstrap CIs for different parameter values. Additionally, the coverage probability of the bootstrap and the GPQ intervals are near the nominal level. It is also observed that the HPD intervals with prior 4 provide the smallest average confidence credible lengths for the same censoring schemes, as well as different parameter values. With a rise in sample size, the coverage probability of bootstrap CIs approached 0.95, and it provided a shorter expected length. In contrast, the length of other CIs decreases as the sample size increases. It is also observed that the expected length of all intervals is maximized when \(R = 0.5\), and if the exact value of the parameter \(R\) approached zero or one, then its value would be shorter and shorter. According to the performance of the Bayes estimates based on different priors, it is clear that the Bayes estimates and corresponding credible intervals are sensitive to the values of \(\alpha\) and \(\beta\). Furthermore, comparing priors 1–4 in Table 5, for fixed \(\alpha\) (fixed \(\beta\)) the expected length will decrease when \(\beta\) increases (\(\alpha\) increases).

A Real Example

In this section, two real data are analyzed to illustrate the use of our proposed estimation methods. We consider two real data sets which contained times to breakdown of an insulating fluid between electrodes recorded at different voltages (Nelson [34]). In Tables 6 and 7, the failure times (in minutes) are presented, which are for an insulating fluid between two electrodes subject to a voltage of 34 kV (data set 1) and 36 kV (data set 2).

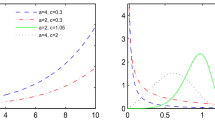

First, the inverse Gaussian distribution is fitted to the two data sets separately. The ML estimates of \(\lambda_{1}\) and \(\lambda_{2}\) are 1.208 and 1.053, respectively. In addition, the Kolmogorov–Smirnov (K–S) distances between the empirical distribution functions and the fitted distribution functions are used to check the goodness of fit. The K–S test statistic is computed as 0.2079 and 0.2682 and the associated p values are 0.3364 and 0.1921, respectively. Based on the p values, one cannot reject the hypothesis that the data are coming from the inverse Gaussian distributions. Moreover, we plot the empirical distribution functions and the fitted distribution functions in Fig. 1 (see Farbod and Gasparian [20]).

The generated data and corresponding censored schemes are shown in Table 8.

The bootstrap interval is calculated based on 100,000 parametric bootstrap resamples using the ML estimates given in Sect. 2, and the interval endpoints are found as the lower and upper \(2.5\%\) quantiles of the estimated bootstrap distribution of \(R\). The ML estimate of \(R\) becomes \(\hat{R} = 0.5046\). The 95% bootstrap CIs from (17)–(19) are computed as (0.3438, 0.6654), (0.3420, 0.6571), (0.4732, 0.5583), respectively. Moreover, the 95% bootstrap CIs based on EM algorithm are computed as (0.3425, 0.6667), (0.3425, 0.6582), (0.4645,0.5694), respectively. Using Algorithm 4 in Sect. 3.3, 100,000 values of \(Q_{R}\) are generated. The 95% lower and upper generalized confidence limits of the stress–strength reliability are obtained as the lower and upper \(2.5\%\) quantiles of the ordered values of \(Q_{R}\). The resultant interval is computed as (0.3489, 0.6647). The Bayesian intervals are obtained based on Gibbs sampling technique. In addition, 10,000 observations are obtained from the posterior distribution of \(R\). The HPD interval based on prior 4 is found through the algorithm presented by Chen and Shao [12]. From (16), the approximate Bayes estimate of R, based on B = 10,000 samples and discard the first M = 2000 values as burn-in period, becomes \(\hat{R}_{B} = 0.5061\). The interval based on Gibbs sampling is given by (0.4929,0.5211). The simulated values and histogram of \(R\) generated by the algorithm of Gibbs sampling are plotted in Fig. 2. In this example, the scale factor value of the MCMC estimates based on 20 sequences is 1.001 which is an acceptable value for their convergence. It can be seen that the HPD interval has the shortest expected length, and all intervals covered the exact value of the stress–strength reliability parameter.

Conclusions

Based on progressively type II censored samples, point estimates and CIs of stress–strength reliability \(R = P(Y < X)\) are considered by different methods where \(X\) and \(Y\) denoted two independent inverse Gaussian distributions with unknown parameters. Based on our simulation results, concerning the performance of the estimators in terms of RMSEs, it is observed that the Bayes estimator for all values of \(R\) functioned better than the ML estimators for stress–strength reliability.

The results demonstrated that the expected lengths of all intervals tend to decrease either the exact value of stress–strength reliability which gets closer to the extremes or the sample size increases. As for the coverage probability, the bootstrap and the GCIs are near the nominal level, and the HPD credible interval based on prior 4 functioned better.

Based on interval lengths, it appears that the HPD interval is the shortest for all values of \(R\). The GCI is close to the bootstrap CIs. Therefore, it can be concluded that the Bayesian interval has the best overall performance in terms of coverage probability and interval length.

References

Ahmad, K., Fakhry, M., Jaheen, Z.: Empirical Bayes estimation of P(Y < X) and characterizations of the Burr-type X model. J. Stat. Plan. Inference 64, 297–308 (1997)

Ahmad, K., Jaheen, Z.: Approximate Bayes estimators applied to the inverse Gaussian lifetime model. Comput. Math. Appl. 29, 39–47 (1995)

Akdam, N., Kinaci, I., Saracoglu, B.: Statistical inference of stress-strength reliability for the exponential power (EP) distribution based on progressive type-II censored samples. J. Math. Stat. 46, 239–253 (2017)

Asgharzadeh, A., Valiollahi, R., Raqab, M.: Estimation of the stress-strength reliability for the generalized logistic distribution. Stat. Methodol. 15, 73–94 (2013)

Awad, A., Azzam, M., Hamdan, M.: Some inference results in P(Y < X) in the bivariate exponential model. Commun. Stat. Theory Methods 10, 2515–2524 (1981)

Baklizi, A.: Interval estimation of the stress-strength reliability in the two-exponential distribution based on records. J. Stat. Comput. Simul. 84, 2670–2679 (2013)

Baklizi, A.: Bayesian inference for Pr(Y < X) in the exponential distribution based on records. J. Appl. Math. Model 38, 1698–1709 (2014)

Balakrishnan, N., Aggarwala, R.: Progressive censoring: theory, methods and applications. Birkh¨auser, Boston (2000)

Balakrishnan, N., Cramer, E.: The Art of Progressive Censoring Applications to Reliability and Quality. Springer, New York (2014)

Basak, P., Balakrishnan, N.: Estimation for the three-parameter inverse Gaussian distribution under progressive Type-II censoring. J. Stat. Comput. Simul. 82, 1055–1072 (2012)

Birnbaum, Z.: On a use of Mann-Whitney statistics. In: Proceedings of the Third Berkley Symposium in Mathematics, Statistics and Probability, University of California Press, Berkley, vol. CA.1, pp. 13–17 (1956)

Chen, M., Shao, Q.: Monte Carlo estimation of Bayesian credible and HPD intervals. J. Comput. Graph. Stat. 8, 69–92 (1999)

Chhikara, R., Folks, J.: Statistical distributions related to the inverse Gaussian. Commun. Stat. Theory Methods 4, 1081–1091 (1975)

Chhikara, R., Folks, J.: Optimum test procedures for the mean of first Passage time distributions in Brownian motion with positive drift. Technometrics 18, 189–193 (1976)

Chhikara, R., Folks, J.: The Inverse Gaussian distribution as a lifetime model. Technometrics 19, 461–468 (1977)

Chhikara, R., Folks, J.: The Inverse Gaussian Distribution-Theory, Methodology, and Applications. Mercel Dekker, NewYork (1989)

Dempster, A., Laird, N., Rubin, D.: Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B 39, 1–38 (1977)

Efron, B.: The jackknife, the bootstrap and other re-sampling plans. In: Philadelphia, PA: SIAM, CBMSNSF Regional Conference Series in Applied Mathematics, vol. 34 (1982)

Efron, B., Tibshirani, R.: An Introduction to the Bootstrap. Chapman and Hall, New York (1993)

Farbod, D., Gasparian, K.: On the maximum likelihood estimators for some generalized Pareto-like frequency distribution. J. Iran. Stat. Soc. 12, 211–233 (2013)

Gelman, A., Carlin, J., Stern, H., Rubin, D.: Bayesian Data Analysis, 2nd edn. Chapman and Hall, London (2003)

Gulati, S., Mi, J.: Testing for scale families using total variation distance. J. Stat. Comput. Simul. 76, 773–792 (2006)

Gupta, R., Gupta, R.: Estimation of P(a 0 X > b 0 Y) in the multivarite normal case. Stat 1, 91–97 (1990)

Hajebi, M., Rezaei, S., Nadarajah, S.: Confidence intervals for P(Y < X) for the generalized exponential distribution. Stat. Methodol 9, 445–455 (2012)

Hall, P.: Theoretical comparison of bootstrap confidence intervals. Ann. Stat. 16, 927–953 (1988)

Hauck, W., Hyslop, T., Anderson, S.: Generalized treatment elects for clinical trials. Stat. Med. 19, 887–899 (2000)

Huang, K., Mi, J., Wang, Z.: Inference about reliability parameter with gamma strength and stress. J. Stat. Plan. Inference 142, 848–854 (2012)

Iranmanesh, A., Fathi, K., Hasanzadeh, M.: On the estimation of stress-strength reliability parameter of inverted gamma distribution. Math. Sci. 12, 71–77 (2018)

Johnson, N., Kotz, S., Balakrishnan, N.: Continuous univariate distributions. Wiley, NewYork (1994)

Kotz, S., Lumelskii, Y., Pensky, M.: The Stress-Strength Model and its Generalization: Theory and Applications. World Scientific, Singapore (2003)

Kundu, D., Gupta, R.: Estimation of P(Y < X) or the generalized exponential distribution. Metrika 61, 291–308 (2005)

McLachlan, G., Krishnan, T.: The EM Algorithm and Extensions, 2nd edn. Wiley, Hoboken (2008)

Ng, H., Chan, P., Balakrishnan, N.: Estimation of parameters from progressively censored data using EM algorithm. Comput. Stat. Data Anal. 39, 371–386 (2002)

Nelson, W.: Applied Life Data Analysis. Wiley, NewYork (1982)

Padgett, W., Wei, L.: Estimation for the three-parameter inverse Gaussian distribution. Commun. Stat. Theory Methods 8, 129–137 (1979)

Padgett, W.: Bayes estimation of reliability for the inverse Gaussian model. IEEE Trans. Reliab. 30, 384–385 (1981)

Pandey, B., Bandyopadhyay, P.: Bayesian estimation of inverse Gaussian distribution. Int. J. Agric. Stat. Sci. 9, 373–386 (2013)

Patel, R.: Estimates of parameters of truncated inverse Gaussian distribution. Ann. Inst. Stat. Math. 17, 29–33 (1965)

Patel, M.: Progressively censored samples from inverse Gaussian distribution. Aligarh J. Stat. 17, 28–34 (1997)

Rezaei, S., Tahmasbi, R., Mahmoodi, M.: Estimation of P(Y < X) for generalized Pareto distribution. J. Stat. Plan. Inference 140, 480–494 (2010)

Saracoglu, B., Kinaci, I., Kundu, D.: On estimation of R = P(Y < X) for exponential distribution under progressive type-II censoring. J. Stat. Comput. Simul. 82, 729–744 (2012)

Seshadri, V.: The Inverse Gaussian Distribution: Statistical Theory and Application. Springer, New York (1999)

Shoaee, S., Khorram, E.: Stress-strength reliability of a two-parameter bathtub-shape lifetime based on progressively censored samples. Commun. Stat. Theory Methods 44, 5306–5328 (2015)

Simonoff, J., Hochberg, Y., Reiser, B.: Alternative estimation procedures for P(X < Y) in categorized data. Biometrics 42, 895–907 (1986)

Sinha, S.: Bayesian estimation of the reliability function of the inverse Gaussian distribution. Stat. Prob. Lett. 4, 319–323 (1986)

Tarvirdizadeh, B., Ahmadpour, M.: Estimation of the stress-strength reliability for the two-parameter bathtub-shaped lifetime distribution based on upper record values. Stat. Methodol. 31, 58–72 (2016)

Tsui, K., Weerahandi, S.: Generalized p-values in significance testing of hypotheses in the presence of nuisance parameters. J. Am. Stat. Assoc. 84, 602–607 (1989)

Tweedie, M.: Statistical properties of Inverse Gaussian distribution I. Ann. Math. Stat. 28, 362–377 (1957)

Valiollahi, R., Asgharzadeh, A., Raqab, M.: Estimation of P(Y < X) for Weibull distribution based on progressive type-II censoring. Commun. Stat. Theory Methods 42, 4476–4498 (2013)

Weerhandi, S.: Generalized confidence interval. J. Am. Stat. Assoc. 88, 899–905 (1993)

Weerhandi, S.: Exact statistical methods for data analysis. Springer, Berlin (1995)

Yadav, A.S., Singh, S.K., Singh, U.: Estimation of stress–strength reliability for inverse Weibull distribution under progressive type-II censoring scheme. J. Ind. Prod. Eng. 35(1), 48–55 (2018)

Zhang, G.: Simultaneous confidence intervals for several inverse Gaussian populations. Stat. Prob. Lett. 92, 125–131 (2014)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Rostamian, S., Nematollahi, N. Estimation of stress–strength reliability in the inverse Gaussian distribution under progressively type II censored data. Math Sci 13, 175–191 (2019). https://doi.org/10.1007/s40096-019-0289-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40096-019-0289-1