Abstract

This paper discusses estimation and prediction problems for the Burr type-III distribution under progressive type-II hybrid censored data. We obtain maximum likelihood estimators (MLEs) of the unknown parameters using the stochastic expectation maximization (SEM) algorithm, and we obtain the asymptotic variance–covariance matrix of the MLEs under the SEM framework from Fisher’s information matrix. We provide Bayes estimators of the unknown parameters under squared error, entropy, and linex loss functions, using Lindley’s approximation method and an importance sampling technique. Finally, we analyze a real data set and conduct a simulation study to compare the performance of the proposed estimators and predictors under different situations.

Introduction

In many lifetime and reliability studies, the experimenter may not obtain complete information on the failure times of all experimental units. The experimenter may remove some units from the experiment intentionally, or some units may be lost unintentionally. Data obtained from such experiments are called censored data, and censoring occurs commonly in practice. Type-I and type-II censoring are the most common censoring schemes [33]. One important characteristic of these two schemes is that they do not allow units to be removed from the test at any point other than the final termination point. The mixture of type-I and type-II censoring is known as the hybrid censoring scheme; it was first introduced by Epstein [16] and has become quite popular in reliability and life testing experiments. A lot of work has been done on hybrid censoring and its many variations (see [6, 11, 14, 21, 22]). For example, Fairbanks et al. [17] introduced the type-I hybrid censoring scheme (type-I HCS) and considered the special case in which the lifetime distribution is exponential. The main disadvantage of type-I HCS is that most inferential results must be developed under the condition that at least one failure is observed; moreover, very few failures may occur up to the pre-fixed time, which results in low efficiency of the estimator(s) of the model parameter(s). For this reason, Childs et al. [11] introduced an alternative hybrid censoring scheme that terminates the experiment at the random time \(T^{*}=\max \{X_{m:n},T\}\). This scheme is called the type-II hybrid censoring scheme (type-II HCS), and it has the advantage of guaranteeing that at least m failures are observed by the end of the experiment. If m failures occur before time T, then the experiment continues up to time T, which may yield more than m failures in the data.
On the other hand, if the \(m{\mathrm{th}}\) failure does not occur before time T, then the experiment continues until the \(m{\mathrm{th}}\) failure occurs, in which case exactly m failures are observed. Hybrid censoring schemes have been introduced in the context of progressive censoring as well. Kundu and Joarder [23] discussed the type-II progressive hybrid censoring scheme (type-II PHCS). Type-II PHCS overcomes the drawback of type-I PHCS that the maximum likelihood (ML) estimator may not always exist. In brief, the progressive type-II hybrid censoring scheme can be described as follows. Consider n independent and identically distributed units placed on a life test, with lifetimes having p.d.f \(f(x;\theta )\) and c.d.f \(F(x;\theta )\), where \(\theta\) denotes the vector of parameters \((\alpha ,\beta )\). The number of failures m \((m<n)\) to be observed is fixed in advance. Suppose the progressive censoring scheme \(R_{1},R_{2},\ldots ,R_{m}\), with \(R_{j}\ge 0\) and \(\sum _{j=1}^{m}R_{j}+m=n\), is fixed before the start of the experiment. Under the type-II progressive censoring scheme, at the time of the first failure \(X_{1:m:n}\), \(R_{1}\) of the \(n-1\) surviving units are randomly withdrawn from the life test; at the time of the second failure \(X_{2:m:n}\), \(R_{2}\) of the \(n-R_{1}-2\) surviving units are withdrawn; and so on. Finally, at the time of the \(m{\mathrm{th}}\) failure \(X_{m:m:n}\), all \(R_{m}=n-R_{1}-R_{2}-\cdots -R_{m-1}-m\) surviving units are withdrawn from the life test. Since the \(R_{i}\)’s are pre-fixed, we denote the failure times by \(X_{1:m:n}\le X_{2:m:n}\le \cdots \le X_{m:m:n}\), although their distributions depend on the \(R_{i}\)’s.
The type-II PHCS terminates the life test at time \(T^{*}=\max \{X_{m:m:n},T\}\). Let D denote the number of failures that occur before time T, and let d denote the observed value of D. If \(X_{m:m:n}>T\), the experiment terminates at the \(m{\mathrm{th}}\) failure, with units withdrawn after each failure according to the pre-fixed progressive censoring scheme \((R_{1},R_{2},\ldots ,R_{m})\). However, if \(X_{m:m:n}<T\), then instead of terminating the experiment by removing all remaining \(R_{m}\) units after the \(m{\mathrm{th}}\) failure, the experiment continues to observe failures, without any further withdrawals, up to time T. Thus, in this case, we have \(R_{m}=R_{m+1}=\cdots =R_{d}=0\), and the resulting failure times are denoted by \(X_{1:m:n},X_{2:m:n},\ldots ,X_{m:m:n},X_{m+1:n},\ldots ,X_{d:n}\). We denote the two cases as Case I and Case II, respectively:

Case I: \(\{X_{1:m:n},\ldots ,X_{m:m:n}\}\) if \(X_{m:m:n}>T\);

Case II: \(\{X_{1:m:n},\ldots ,X_{m:m:n},X_{m+1:n},\ldots ,X_{d:n}\}\) if \(X_{m:m:n}<T\).
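The scheme described above can be sketched as a small simulation. This is a minimal illustration, not the authors' code: the function name `phcs_sample`, its return format, and the exponential placeholder lifetimes are assumptions made for the example, and the number of units withdrawn at termination in Case II is taken to be whatever is still on test at time T.

```python
import random

def phcs_sample(lifetimes, removals, T, rng):
    """Censor raw lifetimes under a type-II progressive hybrid scheme.

    lifetimes: the n latent failure times, in any order
    removals:  pre-fixed scheme (R_1, ..., R_m) with sum(R) + m == n
    T:         pre-fixed time point
    Returns (observed failure times, case label, units withdrawn at the end).
    """
    n, m = len(lifetimes), len(removals)
    if sum(removals) + m != n:
        raise ValueError("scheme must satisfy sum(R) + m == n")
    alive = list(lifetimes)
    observed = []
    for i, r in enumerate(removals):
        x = min(alive)                      # next failure among units on test
        alive.remove(x)
        observed.append(x)
        if i == m - 1:
            break                           # R_m depends on the case, see below
        for unit in rng.sample(alive, r):   # withdraw R_i survivors at random
            alive.remove(unit)
    if observed[-1] > T:
        # Case I: stop at the m-th failure and withdraw the R_m survivors
        return observed, "I", len(alive)
    # Case II: no withdrawal at the m-th failure, observe failures up to T
    later = sorted(t for t in alive if t <= T)
    return observed + later, "II", len(alive) - len(later)


rng = random.Random(2019)
latent = [rng.expovariate(1.0) for _ in range(10)]   # placeholder lifetimes
times, case, withdrawn = phcs_sample(latent, [2, 0, 1, 0, 2], T=0.8, rng=rng)
print(case, len(times), withdrawn)
```

Any lifetime generator can be substituted for the placeholder draws; the censoring logic itself does not depend on the lifetime distribution.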

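In this paper, we consider estimation and prediction for the unknown parameters of the Burr type-III distribution based on a progressive type-II hybrid censored sample, from both classical and Bayesian perspectives. We also provide predictive estimates and intervals for future observable values based on prior information. The p.d.f and c.d.f of a random variable X following the Burr type-III distribution have the following forms:

\[f(x;\alpha ,\beta )=\alpha \beta x^{-(\beta +1)}\left( 1+x^{-\beta }\right) ^{-(\alpha +1)},\quad x>0,\ \alpha ,\beta >0, \qquad (1)\]

\[F(x;\alpha ,\beta )=\left( 1+x^{-\beta }\right) ^{-\alpha },\quad x>0,\ \alpha ,\beta >0, \qquad (2)\]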

where \(\alpha\) and \(\beta\) are the shape parameters of the distribution. Burr [7] introduced twelve cumulative distribution functions for fitting various failure lifetime data. Among these, the Burr type-III distribution can accommodate lifetime data with different hazard shapes, so it has received considerable attention in the recent past. It can also closely approximate many well-known distributions used for fitting lifetime data, such as the Weibull, gamma, and log-normal. This makes it a worthwhile alternative to these distributions. Inference for the parameters \(\alpha\) and \(\beta\) of the \({\hbox {Burr}}(\alpha ,\beta )\) distribution has been investigated by many researchers; see [3, 4, 9, 26, 28, 31, 32, 37]. The rest of this paper is organized as follows. The MLEs of the unknown parameters are discussed in the “Maximum likelihood estimation” section, where we propose the SEM algorithm for this purpose. Asymptotic confidence intervals are constructed using Fisher’s information matrix in the “Fisher’s information matrix” section. Bayes estimators are obtained with respect to several loss functions in the “Bayesian estimation” section. In the “Data analysis” section, a real-life data set is analyzed to illustrate the proposed statistical methods, and Monte Carlo simulations are performed for comparison purposes in the “Simulation study” section. Finally, a conclusion is given in the “Conclusion” section.
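As a quick numerical companion, the sketch below codes the Burr type-III functions under the usual parametrization \(F(x)=(1+x^{-\beta })^{-\alpha }\), which is assumed here. The function names are illustrative, and the quantile function is simply the algebraic inverse of that c.d.f.

```python
import math

def burr3_cdf(x, alpha, beta):
    """F(x) = (1 + x^(-beta))^(-alpha) for x > 0."""
    return (1.0 + x ** (-beta)) ** (-alpha)

def burr3_pdf(x, alpha, beta):
    """Derivative of burr3_cdf with respect to x."""
    return (alpha * beta * x ** (-(beta + 1.0))
            * (1.0 + x ** (-beta)) ** (-(alpha + 1.0)))

def burr3_quantile(u, alpha, beta):
    """Inverse c.d.f. for 0 < u < 1; expm1 keeps accuracy when u is near 1."""
    return math.expm1(-math.log(u) / alpha) ** (-1.0 / beta)

def burr3_hazard(x, alpha, beta):
    """Hazard rate f(x) / (1 - F(x))."""
    return burr3_pdf(x, alpha, beta) / (1.0 - burr3_cdf(x, alpha, beta))

# Evaluate the hazard on a small grid for the shape values used later
# in the simulation study, Burr(0.5, 1.5).
for x in (0.5, 1.0, 2.0, 4.0):
    print(x, burr3_hazard(x, 0.5, 1.5))
```

Feeding uniform random numbers through `burr3_quantile` gives Burr type-III variates by inversion.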

Maximum likelihood estimation

In this section, we derive the MLEs of the unknown parameters of the Burr type-III distribution when the lifetime data are observed under progressive type-II hybrid censoring. Suppose that \(\varvec{x}=(x_{(1)},x_{(2)},\ldots ,x_{(m)})\) from Case I, or \(\varvec{x}=(x_{(1)},x_{(2)},\ldots ,x_{(m)},\ldots ,x_{(D)})\) from Case II, is the observed sample from the Burr\((\alpha ,\beta )\) distribution under the progressive type-II hybrid censoring scheme. Then, the likelihood function of \((\alpha ,\beta )\) given the observed data \(\varvec{x}\) can be written as:

where

and

and \(f(\cdot ;\alpha ,\beta )\) and \(F(\cdot ;\alpha ,\beta )\) are as defined in Eqs. (1) and (2). Using the associated log-likelihood function \(l=\ln L(\alpha ,\beta \mid \varvec{x})\), the ML estimates of \(\alpha\) and \(\beta\) can be obtained by setting the partial derivatives of l with respect to \(\alpha\) and \(\beta\) to zero and solving the resulting equations simultaneously. In most cases, the estimators do not admit explicit expressions, and a numerical procedure such as the Newton–Raphson (NR) method has to be used. NR is a direct approach to obtaining the MLEs by maximizing the likelihood function, and it is a well-known numerical algorithm for finding the root of a function or equation [39]. It requires the first and second derivatives of the observed log-likelihood with respect to the parameters. However, the NR method can be time-consuming, depending on the size of the problem, and it may fail to converge. Since the likelihood equations have no closed-form solution and NR is not always reliable, we use the expectation-maximization algorithm for this purpose; see [12]. The main advantage of this algorithm is that it is more reliable, particularly when dealing with censored data. Note that Case I has been discussed by Dey et al. [15]. Our aim in this paper is therefore to propose classical and Bayesian estimation for Case II, as presented in the following sections.
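To make the objective concrete, here is a hedged sketch of the log-likelihood, up to an additive constant, in the form commonly used for type-II progressive hybrid censored data. It assumes the Burr type-III density and c.d.f. in their usual parametrization, and assumes that in Case II the \(n-D-\sum _{i=1}^{m-1}R_{i}\) units still on test are censored at time T. The function names are illustrative and the expression may differ in detail from the authors' likelihood.

```python
import math

def burr3_logpdf(x, alpha, beta):
    return (math.log(alpha) + math.log(beta) - (beta + 1.0) * math.log(x)
            - (alpha + 1.0) * math.log1p(x ** (-beta)))

def burr3_logsf(x, alpha, beta):
    """log(1 - F(x)) with F(x) = (1 + x^(-beta))^(-alpha)."""
    log_cdf = -alpha * math.log1p(x ** (-beta))
    return math.log(-math.expm1(log_cdf))

def phcs_loglik(alpha, beta, x, removals, T):
    """Log-likelihood (constant dropped) for ordered failures x under scheme R."""
    m, D = len(removals), len(x)
    n = sum(removals) + m
    ll = sum(burr3_logpdf(v, alpha, beta) for v in x)
    if x[m - 1] > T:
        # Case I: R_i units are censored at each of the m failure times
        censored = list(zip(x, removals))
    else:
        # Case II: withdrawals at the first m-1 failures, the rest at time T
        censored = list(zip(x[:m - 1], removals[:m - 1]))
        censored.append((T, n - D - sum(removals[:m - 1])))
    for c, r in censored:
        if r > 0:
            ll += r * burr3_logsf(c, alpha, beta)
    return ll

# Example: n = 5 units, m = 3, scheme (1, 0, 1), T = 2.5 (Case II)
print(phcs_loglik(0.5, 1.5, [0.8, 1.1, 1.7], [1, 0, 1], T=2.5))
```

A general-purpose optimizer or the NR iteration mentioned above could be applied to this function; the EM and SEM algorithms discussed next avoid differentiating the censoring terms directly.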

Expectation-Maximization (EM) algorithm

The EM algorithm was introduced by Dempster et al. [12] as an iterative procedure for computing MLEs in the presence of missing data; it consists of an expectation (E) step and a maximization (M) step. With hybrid censored observations, the problem of finding the MLEs of the unknown parameters of the Burr type-III model can be viewed as an incomplete data problem [30]. Under progressive type-II hybrid censoring, \(X_{(i)}\) denotes the time of the ith failure, and \(R_{i}\) denotes the number of units removed from the experiment at that time. Now suppose that \(Z_{i}=(Z_{i1},Z_{i2},\ldots ,Z_{iR_{i}})\), \(i=1,\ldots ,m\), denotes the vector of the \(R_{i}\) units censored at the ith failure, and that \(\acute{Z}=(\acute{Z}_{1},\acute{Z}_{2},\ldots ,\acute{Z}_{\acute{R}_{D}})\) denotes the vector of the remaining \(\acute{R}_{D}\) censored units. Then, the total censored data can be viewed as \(Z=(Z_{1},Z_{2},\ldots ,Z_{m})\) together with \(\acute{Z}\). The complete sample of n units can be seen as a combination of the observed data and the censored data, that is, \(W=(X,Z,\acute{Z})\). Consequently, the log-likelihood function of the complete data set can be written as:

Now, by taking the partial derivatives with respect to \(\alpha\) and \(\beta\) and equating them to zero, we get the following expressions
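For orientation, if the complete sample is relabelled as \(w_{1},\ldots ,w_{n}\) (an illustrative notation for the observed and censored lifetimes together) and the Burr type-III density is taken to be \(f(w)=\alpha \beta w^{-(\beta +1)}(1+w^{-\beta })^{-(\alpha +1)}\), the complete-data log-likelihood and its likelihood equations would take the following form. This is a sketch under that assumed parametrization and may differ in notation from the authors' expressions.

\[
l_{c}(\alpha ,\beta )=n\ln \alpha +n\ln \beta -(\beta +1)\sum _{k=1}^{n}\ln w_{k}-(\alpha +1)\sum _{k=1}^{n}\ln \left( 1+w_{k}^{-\beta }\right) ,
\]

\[
\frac{\partial l_{c}}{\partial \alpha }=\frac{n}{\alpha }-\sum _{k=1}^{n}\ln \left( 1+w_{k}^{-\beta }\right) =0,\qquad \frac{\partial l_{c}}{\partial \beta }=\frac{n}{\beta }-\sum _{k=1}^{n}\ln w_{k}+(\alpha +1)\sum _{k=1}^{n}\frac{w_{k}^{-\beta }\ln w_{k}}{1+w_{k}^{-\beta }}=0.
\]

For fixed \(\beta\), the first equation gives \(\alpha =n/\sum _{k=1}^{n}\ln (1+w_{k}^{-\beta })\) in closed form, so only the equation in \(\beta\) requires a numerical solution.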

The above two equations need to be solved simultaneously to obtain the ML estimates of \((\alpha ,\beta )\). The EM algorithm consists of two steps. In the E-step, the terms involving the censored observations are replaced by their conditional expected values. The M-step then maximizes the resulting expected complete-data log-likelihood. Suppose that at the kth stage the estimate of \((\alpha ,\beta )\) is \((\alpha ^{(k)},\beta ^{(k)})\); then, after the M-step, it can be shown that the updated estimators at the \((k+1)\)th stage are of the form

where

The iterative procedure can be terminated once convergence is achieved, that is, when \(|\alpha ^{(k+1)}-\alpha ^{(k)}|+|\beta ^{(k+1)}-\beta ^{(k)}|\le \epsilon\) for some given \(\epsilon >0\). However, one of the biggest disadvantages of the EM algorithm is that it is only a local optimization procedure and can easily get stuck at a saddle point [40], in particular with high-dimensional data or with increasingly complex censored lifetime models. A possible way to overcome these computational inefficiencies is to invoke the stochastic EM algorithm suggested by Celeux and Diebolt [8, 19, 29, 38]. Moreover, the above EM expressions \(E_{si}(\alpha ,\beta ),s=1,2,3,4,5,6\), do not have closed forms and would have to be computed numerically. We therefore use the stochastic expectation maximization (SEM) algorithm to obtain the ML estimators.

SEM algorithm

In this section, the SEM algorithm is used to compute the MLEs of the unknown parameters. Diebolt and Celeux [13] proposed a stochastic version of the EM algorithm that replaces the E-step by a stochastic step (S-step) carried out by simulation. Thus, the SEM algorithm completes the observed sample by replacing each missing value with a value drawn at random from the conditional distribution based on the results of the previous step. The algorithm has been shown to be computationally less burdensome and more appropriate than the EM algorithm in many problems; see [2, 13, 35]. Recently, Zhang et al. [40] used the SEM algorithm to obtain ML estimates of the unknown parameters of various models when the data are observed under progressive type-II censoring, and compared the results with those of the EM algorithm. We use the same idea to generate \(R_{i}\) independent samples \(z_{ij}\), \(i=1,2,\ldots ,m\), \(j=1,2,\ldots ,R_{i}\), forming \(Z=(Z_{11},\ldots ,Z_{1R_{1}},\ldots ,Z_{m1},\ldots ,Z_{mR_{m}})\), together with \(\acute{Z}=(\acute{Z} _{1},\acute{Z}_{2},\ldots ,\acute{Z}_{\acute{R}_{D}})\), from the following conditional distribution function
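In S-steps of this kind, a censored lifetime is typically drawn from the lifetime distribution conditioned on exceeding its censoring time c, that is, from \((F(z)-F(c))/(1-F(c))\) for \(z>c\). The sketch below assumes that form and the usual Burr type-III c.d.f.; the function names are illustrative, and `math.nextafter` requires Python 3.9 or later.

```python
import math
import random

def burr3_cdf(x, alpha, beta):
    return (1.0 + x ** (-beta)) ** (-alpha)

def burr3_quantile(u, alpha, beta):
    return math.expm1(-math.log(u) / alpha) ** (-1.0 / beta)

def draw_beyond(c, alpha, beta, rng):
    """One draw from the Burr III law conditioned on exceeding c (inversion)."""
    Fc = burr3_cdf(c, alpha, beta)
    u = 1.0 - rng.random()                          # uniform on (0, 1]
    v = min(Fc + u * (1.0 - Fc), math.nextafter(1.0, 0.0))
    return burr3_quantile(v, alpha, beta)

def s_step(x, removals, T, alpha, beta, rng):
    """Complete the sample: observed failures followed by imputed lifetimes."""
    m, D = len(removals), len(x)
    n = sum(removals) + m
    if x[m - 1] > T:                                # Case I
        censored = list(zip(x, removals))
    else:                                           # Case II
        censored = list(zip(x[:m - 1], removals[:m - 1]))
        censored.append((T, n - D - sum(removals[:m - 1])))
    completed = list(x)
    for c, r in censored:
        completed.extend(draw_beyond(c, alpha, beta, rng) for _ in range(r))
    return completed

rng = random.Random(7)
w = s_step([0.6, 0.9, 1.4, 2.0], [2, 1, 3], T=2.5, alpha=0.5, beta=1.5, rng=rng)
print(len(w))   # n = 9 pseudo-complete lifetimes
```

Each call uses the current parameter values, so within an SEM loop the imputed lifetimes change from one iteration to the next.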

Subsequently, the ML estimators of \(\alpha\) and \(\beta\) at the \((k+1)\)th stage are given by

The iterative procedure is terminated once convergence is achieved.

Fisher’s information matrix

In this section, we present the observed Fisher’s information matrix, obtained using the missing information principle of Louis [27]. The observed Fisher’s information matrix can be used to construct asymptotic confidence intervals. The missing information principle can be stated as follows:

Observed information = Complete information − Missing information.

Fisher’s information matrix is then used to compute interval estimates for the unknown parameters of the Burr type-III distribution. The asymptotic variance–covariance matrix of the MLEs of \((\alpha ,\beta )\) is obtained by inverting the observed information matrix and is given by

where

Next, we use the SEM algorithm to compute the observed information matrix. We first generate the censored observations \(z_{ij}\) by Monte Carlo simulation from the conditional density discussed in the previous section. Subsequently, the asymptotic variance–covariance matrix of the MLEs of \((\alpha ,\beta )\) can be obtained as

where \(l^{*}=\ln L(w; \alpha , \beta )\) given in (5). Further, the involved expressions are given by

Finally, using the large-sample approximation, the two-sided \(100(1-\gamma )\%\), \(0<\gamma <1\), asymptotic confidence intervals for \(\alpha\) and \(\beta\) can be obtained as \(\hat{ \alpha }\pm z_{\frac{\gamma }{2}}\sqrt{{\hbox {Var}}(\hat{\alpha })}\) and \(\hat{\beta } \pm z_{\frac{\gamma }{2}}\sqrt{{\hbox {Var}}(\hat{\beta })}\), respectively. Here \(z_{\frac{\gamma }{2} }\) is the upper \(\frac{\gamma }{2}\)th percentile of the standard normal distribution, and \(\hat{\alpha }\) and \(\hat{\beta }\) are the ML estimates of \(\alpha\) and \(\beta\) obtained by the NR, EM, or SEM algorithm, as described in the previous section.
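The interval construction can be illustrated with a short sketch. The function name and the input layout, a 2×2 observed information matrix given as nested lists, are assumptions made for the example; the information values in the demo are placeholders, not results from the paper.

```python
import math
from statistics import NormalDist

def asymptotic_ci(estimates, info, gamma=0.05):
    """Wald-type intervals for (alpha, beta) from a 2x2 observed information matrix."""
    (i11, i12), (i21, i22) = info
    det = i11 * i22 - i12 * i21
    if det <= 0:
        raise ValueError("information matrix must be positive definite")
    # Diagonal of the inverse matrix: Var(alpha_hat), Var(beta_hat)
    variances = (i22 / det, i11 / det)
    z = NormalDist().inv_cdf(1.0 - gamma / 2.0)     # upper gamma/2 percentile
    return [(est - z * math.sqrt(v), est + z * math.sqrt(v))
            for est, v in zip(estimates, variances)]

# Placeholder estimates and information matrix, for illustration only
for lower, upper in asymptotic_ci((0.5, 1.5), [[40.0, -6.0], [-6.0, 25.0]]):
    print(round(lower, 4), round(upper, 4))
```

Because \(\alpha\) and \(\beta\) are positive, a lower limit that falls below zero is usually truncated at zero in practice.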

Bayesian estimation

Suppose that a sample \(\varvec{x}=(x_{(1)},x_{(2)},\ldots ,x_{(m)})\) is observed from the Burr type-III distribution under the progressive type-II hybrid censoring scheme \(R=(R_{1},R_{2},\ldots ,R_{m})\). We assume independent gamma priors for the unknown parameters \(\alpha\) and \(\beta\), with p.d.f.s of the following form:

Here a, b, c and d are the hyper-parameters, which carry the prior information about the unknown parameters. The joint prior density of \((\alpha ,\beta )\) can be written as \(\pi (\alpha ,\beta )=\pi (\alpha )\pi (\beta )\). Further, the joint posterior density of \((\alpha ,\beta )\) given the observed data \(\varvec{x}\) is obtained as

where K is the normalizing constant given by

In Bayesian estimation, the choice of loss function plays an important role. The most commonly used loss function is the squared error loss, given by \(\delta _{\mathrm{SL}}(g(\theta ),\hat{g}(\theta ))=(g(\theta )-\hat{g}(\theta ))^{2}\); here \(\hat{g}(\theta )\) represents an estimate of \(g(\theta )\). The Bayes estimator of \(g(\theta )\) under squared error loss is the posterior mean, given by

Note that squared error loss is a symmetric loss function that gives equal weight to underestimation and overestimation. In many practical situations, underestimation may be more serious than overestimation, or vice versa. In such cases, an asymmetric loss function can be used. We therefore consider the linex loss function, which was proposed by Varian [36] and is given by \(\delta _{\mathrm{LL}}(g(\theta ),\hat{g}(\theta ))={\mathrm{e}}^{\nu (\hat{g}(\theta )-g(\theta ))}-\nu (\hat{g}(\theta )-g(\theta ))-1\), where \(\nu \ne 0\) is a shape parameter. Here \(\nu >0\) corresponds to the case in which overestimation is more serious than underestimation, and vice versa for \(\nu <0\). The Bayes estimator of \(g(\theta )\) under linex loss is given by

We further consider entropy loss function, given by

Here \(w>0\) suggests the case when overestimation is more serious than underestimation, and vice versa for \(w<0\). The Bayes estimator of \(g(\theta )\) under entropy loss function is given by

Notice that for \(w=-1\), Bayes estimator of \(g(\theta )\) under entropy loss function coincides with Bayes estimator of \(g(\theta )\) under square error loss function. Now, observe that Bayesian estimators given by (17), (18) and (19) do not admit closed-form expressions. Therefore, in the next section we use the approximation method of Lindley [25] and importance sampling technique.
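When posterior draws (optionally weighted) of \(g(\theta )\) are available, the three estimators can be approximated as below. The sketch assumes the standard closed forms: the posterior mean for squared error loss, \(-\nu ^{-1}\ln E[{\mathrm{e}}^{-\nu g}]\) for linex loss, and \((E[g^{-w}])^{-1/w}\) for entropy loss. The function names and the sample values are illustrative.

```python
import math

def _wmean(values, weights=None):
    """Weighted average; equal weights when none are given."""
    if weights is None:
        weights = [1.0] * len(values)
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

def bayes_squared_error(g, weights=None):
    return _wmean(g, weights)

def bayes_linex(g, nu, weights=None):
    return -math.log(_wmean([math.exp(-nu * v) for v in g], weights)) / nu

def bayes_entropy(g, w, weights=None):
    return _wmean([v ** (-w) for v in g], weights) ** (-1.0 / w)

draws = [0.42, 0.55, 0.48, 0.61, 0.50]      # placeholder posterior draws
print(bayes_squared_error(draws))
print(bayes_linex(draws, nu=0.5))
print(bayes_entropy(draws, w=0.5))
```

With `w=-1` the entropy estimator reduces to the posterior mean, in line with the remark above.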

Lindley approximation

In this section, we use the method of Lindley to obtain approximate explicit Bayes estimators of \(\alpha\) and \(\beta\). The Bayes estimates involve the ratio of two integrals; we consider I(x) defined as:

By applying Lindley’s method, I(x) can be approximated as:

where

Here l(., .) denotes the log-likelihood function, \(\pi (\alpha ,\beta )\) denotes the corresponding prior distribution, and \(\sigma _{ij}\) represents the (i, j)th element of the variance–covariance matrix. All the expressions in Eq. (19) are evaluated at the ML estimates. Suppose we want to estimate \(\alpha\) under squared error loss. Then, we take \(g(\alpha ,\beta )=\alpha\) and observe that \(g_{\alpha }=1\) and \(g_{\alpha \alpha }=g_{\beta }=g_{\beta \beta }=g_{\beta \alpha }=g_{\alpha \beta }=0\). Consequently, the Bayes estimator of \(\alpha\) is obtained as:

where \(\sigma _{ij},\,i,j=1,2\) are the elements of the variance–covariance matrix of \((\hat{\alpha },\hat{\beta })\) as reported in the “Fisher’s information matrix” section. The other expressions involved (evaluated at \((\alpha ,\beta )=(\hat{\alpha },\hat{\beta })\)) are reported in the “Appendix.” In a similar way, the Bayes estimators of \(\alpha\) under linex loss and entropy loss are, respectively, obtained as:

where

and with

Finally, the Bayes estimator of \(\alpha\) under the entropy loss function is given by:

where

and with \(g(\alpha ,\beta )=\alpha ^{-w}\), \(g_{\alpha }=-w\alpha ^{-(w+1)}\), \(g_{\alpha \alpha }=w(w+1)\alpha ^{-(w+2)}\), and \(g_{\beta }=g_{\beta \beta }=g_{\beta \alpha }=g_{\alpha \beta }=0\). Similarly, the Bayes estimators of \(\beta\) under squared error, linex, and entropy loss functions can easily be obtained; details are omitted for the sake of brevity. Note further that highest posterior density (HPD) interval estimates of \(\alpha\) and \(\beta\) cannot be obtained using the Lindley approximation. Therefore, we next use the importance sampling technique for this purpose.

Importance sampling

Importance sampling is a very useful technique for drawing samples from posterior densities. Observe that the posterior density given by (15) can be written as:

where

Now consider the following steps to draw samples from the above posterior density.

- Step 1: Generate \(\beta _i\) from \(Gamma ( D+c , d+\sum _{j=1}^{m} \ln x_{j}+\sum _{j=m+1}^{D} \ln x_{j} )\).

- Step 2: Given the value of \(\beta _i\), generate \(\alpha _i\) from \(Gamma( D+a , b+\sum _{j=1} ^{m} \ln (1+x_{j}^{-\beta _i})+\sum _{j=m+1} ^{D} \ln (1+x_{j}^{-\beta _i}) )\).

- Step 3: Repeat Steps 1 and 2 s times to obtain \((\alpha _1, \beta _1), (\alpha _2, \beta _2), \ldots , (\alpha _s, \beta _s)\).

The Bayes estimators of \(\alpha\) under squared error and entropy loss functions are then, respectively, obtained as:

Next, we use the method of Chen and Shao [10] for computing HPD intervals. Suppose that \(\alpha\) is the unknown parameter of interest, and let \(\pi (\alpha \mid \varvec{x})\) and \(\Pi (\alpha \mid \varvec{x})\), respectively, denote its posterior density and posterior distribution function. If \(\alpha ^{(p)}\) denotes the pth quantile of \(\alpha\), then we have \(\alpha ^{(p)}=\inf \{\alpha :\Pi (\alpha \mid \varvec{x})\ge p\}\), \(0<p<1\). For a given \(\alpha ^{*}\), a simulation-consistent estimator of \(\Pi (\alpha ^{*}\mid \varvec{x})\) can be obtained as \(\Pi (\alpha ^{*}\mid \varvec{x})=\frac{\sum _{i=1}^{s}1_{\alpha _{i}\le \alpha ^{*}}h(\alpha _{i},\beta _{i})}{\sum _{i=1}^{s}h(\alpha _{i},\beta _{i})}\), where \(1_{\alpha _{i}\le \alpha ^{*}}\) is the indicator function. Let \(\alpha _{(i)}\) be the ordered values of \(\alpha _{i}\). Then, the corresponding estimate is obtained as

where

Subsequently \(\alpha ^{(p)}\) can be estimated as

To obtain a \(100(1-p)\%\) HPD credible interval for \(\alpha\), we consider the intervals of the form \(\left( \hat{\alpha }^{(\frac{j}{s})},\hat{ \alpha }^{(\frac{j+[(1-p)s]}{s})}\right) ,j=1,2,\ldots ,s-[(1-p)s]\), with [u] denoting the greatest integer less than or equal to u. The interval with the smallest width is taken as the HPD interval. The HPD interval for the parameter \(\beta\) can be constructed similarly.
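The search over candidate intervals can be sketched as follows. The sketch assumes the weighted-quantile rule in which \(\hat{\alpha }^{(p)}\) is the smallest ordered draw whose cumulative normalized weight reaches p; a small tolerance guards against floating-point round-off in the cumulative sums. The function name and arguments are illustrative, and `level` plays the role of \(1-p\).

```python
import bisect
import math

def hpd_interval(values, weights, level=0.95):
    """Shortest interval among (q(j/s), q((j+k)/s)), k = floor(level * s)."""
    s = len(values)
    pairs = sorted(zip(values, weights))
    total = sum(weights)
    ordered = [v for v, _ in pairs]
    cum, running = [], 0.0
    for _, w in pairs:                       # cumulative normalized weights
        running += w / total
        cum.append(running)

    def quantile(p):
        idx = bisect.bisect_left(cum, p - 1e-12)
        return ordered[min(idx, s - 1)]

    k = math.floor(level * s + 1e-9)
    if k >= s:
        return ordered[0], ordered[-1]
    best = None
    for j in range(1, s - k + 1):
        lo, hi = quantile(j / s), quantile((j + k) / s)
        if best is None or hi - lo < best[1] - best[0]:
            best = (lo, hi)
    return best

# Ten equally weighted draws with one outlier; an 80% interval excludes it
print(hpd_interval([100, 1, 2, 3, 4, 5, 6, 7, 8, 9], [1.0] * 10, level=0.8))
```

With importance sampling output, `weights` would hold the values \(h(\alpha _{i},\beta _{i})\) and `values` the draws \(\alpha _{i}\).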

Data analysis

In this section, we analyze a real data set for illustration. The data set gives the strength, measured in GPa, of single carbon fibers with a gauge length of 10 mm and is given below.

In the literature, this data set has been discussed in connection with the Burr type-III distribution. In fact, the authors considered the transformation \(X={\mathrm{e}}^{Y}\), where Y represents the original lifetime data, which fit the generalized logistic distribution; see [1, 5]. The transformed variable X then follows the Burr type-III distribution. For comparison, we also fit the gamma, Weibull, and generalized exponential distributions. We use the negative log-likelihood criterion (NLC), the Kolmogorov–Smirnov (KS) test statistic, Akaike’s information criterion (AIC), and the Bayesian information criterion (BIC) to judge the goodness of fit, as discussed in Farbod and Gasparian [18]. The results are shown in Table 1. The Burr type-III distribution fits this data set better than the other candidates, as it has the smallest KS statistic and NLC. Fig. 1 also shows that the Burr type-III distribution fits these data well. We then generate progressive type-II hybrid censored samples under the different schemes shown in Table 2, with \({T}=65\). The SEM and NR estimates are also given in Table 2, and the Bayes estimates based on the Lindley and Markov chain Monte Carlo (MCMC) methods are given in Tables 3 and 4.
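The goodness-of-fit measures mentioned above have simple closed forms, sketched here under their standard definitions: AIC \(=2k-2\ln L\), BIC \(=k\ln n-2\ln L\), and the KS statistic as the largest gap between the empirical and fitted c.d.f.s. The function names and the numbers in the demo are illustrative and are not taken from Table 1.

```python
import math

def aic(loglik, k):
    """Akaike's information criterion for k fitted parameters."""
    return 2.0 * k - 2.0 * loglik

def bic(loglik, k, n):
    """Bayesian information criterion for k parameters and n observations."""
    return k * math.log(n) - 2.0 * loglik

def ks_statistic(sample, cdf):
    """Largest distance between the empirical c.d.f. and a fitted c.d.f."""
    xs = sorted(sample)
    n = len(xs)
    return max(max((i + 1) / n - cdf(x), cdf(x) - i / n)
               for i, x in enumerate(xs))

# Illustrative values only: a two-parameter model with log-likelihood -10
print(aic(-10.0, 2), bic(-10.0, 2, 100))
print(ks_statistic([0.25, 0.75], lambda x: x))   # uniform(0, 1) as fitted c.d.f.
```

Smaller values of each criterion indicate a better fit, which is the sense in which the comparison in Table 1 is read.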

Simulation study

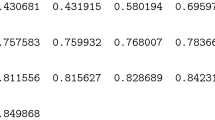

In this section, we conduct a Monte Carlo simulation study to compare the performance of the proposed estimators. We simulate data from the Burr(0.5, 1.5) distribution under various progressive type-II hybrid censoring schemes for different combinations of (n, m). For each case, we obtain the ML estimates of \(\alpha\) and \(\beta\) using the NR method, as described in [24], and the SEM algorithm. All values are based on 5000 Monte Carlo replications. In the tables, censoring schemes such as (5, 0, 0, 0) are written as \((5,0^{*3})\) for convenience. The average estimates and mean squared error (MSE) values of \(\alpha\) and \(\beta\) are reported in Tables 5 and 6. The tables show that the SEM estimates are better than the NR estimates in the sense of having lower MSE values. The 95% asymptotic confidence intervals and Bayesian credible intervals are also included in Tables 4 and 5. Both types of interval generally have short lengths.
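The two bookkeeping devices used in the tables, the compact scheme notation and the Monte Carlo summaries, can be sketched as below. The helper names and the `(value, repeat)` input format are assumptions made for the example, and the estimates in the demo are placeholders rather than simulation output.

```python
def expand_scheme(blocks):
    """Expand (value, repeat) blocks, e.g. (5, 0^{*3}) -> [5, 0, 0, 0]."""
    scheme = []
    for value, repeat in blocks:
        scheme.extend([value] * repeat)
    return scheme

def mc_summary(estimates, true_value):
    """Average estimate and mean squared error over Monte Carlo replications."""
    reps = len(estimates)
    average = sum(estimates) / reps
    mse = sum((e - true_value) ** 2 for e in estimates) / reps
    return average, mse

print(expand_scheme([(5, 1), (0, 3)]))
# Placeholder estimates of alpha from four replications, true value 0.5
print(mc_summary([0.47, 0.55, 0.52, 0.44], true_value=0.5))
```

In a full study the list passed to `mc_summary` would hold one estimate per replication, 5000 in the setting described above.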

Tables 6 and 7 show the Bayes estimates based on the Lindley approximation method, and Tables 8 and 9 show the Bayes estimates based on the MCMC method discussed in Rizzo [33] and Givens and Hoeting [20]. For both methods, we used a non-informative prior distribution by setting \({a}={b}={c}={d}=0\). The results show that both methods perform very well in estimating the unknown parameters. However, the Lindley method generally has lower MSEs.

Conclusion

In this study, we considered the estimation of the parameters of the Burr type-III model in the presence of progressive type-II hybrid censored data. We applied classical estimation methods, namely NR and SEM, as well as Bayesian approximation techniques, namely the Lindley and MCMC methods. The results showed that the SEM method is preferable to the NR method, and that the Bayes estimates based on the Lindley method have lower MSEs than those based on the MCMC method. All computations were carried out in the R statistical software, version 3.1.3.

References

Alkasasbeh, M.R., Raqab, M.Z.: Estimation of the generalized logistic distribution parameters comparative study. Stat. Methodol. 6(3), 262–279 (2009)

Arabi Belaghi, R., Valizadeh Gamchi, F., Bevrani, H., Gurunlu Alma, O.: Estimation on Burr type III by progressive censoring using the EM and SEM algorithms. In: 13th Iranian Statistical Conference, Shahid Bahonar University of Kerman, Iran 24–26 Aug 2016

Asgharzade, A., Abdi, M.: Point and interval estimation for the Burr type III distribution. J. Stat. Res. Iran (JSRI) 5(2), 221–233 (2008)

Altindag, O., Cankaya, M.N., Yalcinkaya, A., Aydogdu, H.: Statistical inference for the burr type III distribution under type II censored data. Commun. Fac. Sci. Univ. Ank. Ser. A1 66(2), 297–310 (2017)

Bader, M., Priest, A.: Statistical aspects of fibre and bundle strength in hybrid composites. In: Progress in Science and Engineering of Composites, pp. 1129–1136 (1982)

Balakrishnan, N., Basu, A.P.: The exponential distribution: theory, methods and applications. Technometrics 39(3), 341–341 (1997)

Burr, I.W.: Cumulative frequency function. Ann. Math. Stat. 1, 215–232 (1942)

Celeux, G., Diebolt, J.: The SEM algorithm: a probabilistic teacher algorithm derived from the EM algorithm for the mixture problem. Comput. Stat. Q. 2(1), 73–82 (1985)

Clark, R.M., Laslett, G.M.: Generalizations of power-law distributions applicable to sampled fault-trace lengths: model choice, parameter estimation and caveats. Geophys. J. Int. 136(2), 357–372 (1999)

Chen, M.H., Shao, Q.M.: Monte Carlo estimation of Bayesian credible and HPD intervals. J. Comput. Graph. Stat. 8(1), 69–92 (1999)

Childs, A., Chandrasekhar, B., Balakrishnan, N., Kundu, D.: Exact likelihood inference based on type-I and type-II hybrid censored samples from the exponential distribution. Ann. Inst. Stat. Math. 55(2), 319–330 (2003)

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data. R. Stat. Soc. 39, 1–38 (1977)

Diebolt, J., Celeux, G.: Asymptotic properties of a stochastic EM algorithm for estimating mixing proportions. Stoch. Models 9(4), 599–613 (1993)

Draper, N., Guttman, T.: Bayesian analysis of hybrid life-test with exponential failure times. Ann. Inst. Stat. Math. 39, 219–255 (1987)

Dey, S., Singh, S., Tripathi, Y.M., Asgharzadeh, A.: Estimation and prediction for a progressively censored generalized inverted exponential distribution. Stat. Methodol. 32, 185–202 (2016)

Epstein, B.: Truncated life-tests in the exponential case. Ann. Math. Stat. 25(3), 555–564 (1954)

Fairbanks, K., Madasan, R., Dykstra, R.: Confidence interval for an exponential parameter from hybrid life-test. J. Am. Stat. Assoc. 77, 137–140 (1982)

Farbod, D., Gasparian, K.: On the maximum likelihood estimators for some generalized Pareto-like frequency distribution. J. Iran. Stat. Soc. (JIRSS) 12(2), 211–233 (2013)

Gurunlu Alma, O., Arabi Belaghi, R.: On the estimation of the extreme value and normal distribution parameters based on progressive type-II hybrid-censored data. J. Stat. Comput. Simul. 86(3), 569–596 (2015)

Givens, G.H., Hoeting, J.A.: Computational Statistics. Wiley, London (2005)

Gupta, R.D., Kundu, D.: Hybrid censoring schemes with exponential failure distribution. Commun. Stat. Theory Methods 27(12), 3065–3083 (1998)

Jeong, H.S., Park, J.I., Yum, B.J.: Development of (r; T) hybrid sampling plans for exponential lifetime distributions. J. Appl. Stat. 23, 601–607 (1996)

Kundu, D., Joarder, A.: Analysis of type-II progressively hybrid censored data. Comput. Stat. Data Anal. 50(10), 2509–2528 (2006)

Lambers, J.: (2009) www.math.usm.edu/lambers/mat419/lecture9.pdf

Lindley, D.V.: Approximate Bayesian methods. Trab. Estad. 31, 223–237 (1980)

Lindsay, S.R., Wood, G.R., Woollons, R.C.: Modeling the diameter distribution of forest stands using the Burr distribution. J. Appl. Stat. 23, 609–619 (1996)

Louis, T.A.: Finding the observed information matrix when using the EM algorithm. J. R. Stat. Soc. Ser. B (Methodol.) 44(2), 226–233 (1982)

Mokhlis, N.: Reliability of a stress-strength model with Burr type III distributions. Commun. Stat. Theory Methods 34(7), 1643–1657 (2005)

Nielsen, F.S.: “Newton–Raphson Method” and “Newton’s Method” in CRCconcise encyclopedia of mathematics. Bernoulli 6, 457–489 (2000)

Ng, H.K.T., Chan, P.S., Balakrishnan, N.: Estimation of parameters from progressively censored data using EM algorithm. Comput. Stat. Data Anal. 39, 371–386 (2002)

Pant, M.D., Headrick, T.C.: A characterization of the Burr type III and type XII distributions through the method of percentiles and the Spearman correlation. Commun. Stat. Simul. Comput. 46(2), 1611–1627 (2015)

Quanxi, Sh: Estimation for hazardous concentrations based on NOEC toxicity data: an alternative approach. Environmetrics 11, 583–595 (2000)

Rizzo, M.L.: Statistical Computing with R. Chapman and Hall/CRC

Rupert, G., Miller, J.R.: Survival analysis. Wiley, 0-471-25218-2 (1997)

Tregouet, D.A., Escolano, S., Tiret, L., Mallet, A., Golmard, J.L.: A new algorithm for haplotype-based association analysis: the stochastic-EM algorithm. Ann. Hum. Genet. 68(2), 165–177 (2004)

Varian, H.R.: A Bayesian approach to real estate assessment. In: Savage, L.J., Feinberg, S.E., Zellner, A. (eds.) Studies in Bayesian and Statistics: In Honor of L. J. Savage, pp. 195–208. North-Holland Pub.Co., Amsterdam (1975)

Wang, F.K., Cheng, Y.F.: EM algorithm for estimating the Burr XII parameters with multiple censored data. Qual. Reliab. Eng. Int. 26(6), 615–630 (2009)

Wei, G.C.G., Tanner, M.A.: A Monte Carlo implementation of the EM algorithm and the poor man’s data augmentation algorithms. J. Am. Stat. Assoc. 85(411), 699–704 (1990)

Weisstein, E.W.: “Newton–Raphson Method” and “Newton’s Method” in CRC Concise Encyclopedia of Mathematics. Chapman & Hall/CRC, Boca Raton (1969)

Zhang, M., Ye, Z., Xie, M.: A stochastic EM algorithm for progressively censored data analysis. Qual. Reliab. Eng. Int. 30(5), 711–722 (2013)

Acknowledgements

The authors would like to thank the anonymous referees for their constructive comments, which led us to add many details to the paper and substantially improved the presentation.

Appendix

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Valizadeh Gamchi, F., Gürünlü Alma, Ö. & Arabi Belaghi, R. Classical and Bayesian inference for Burr type-III distribution based on progressive type-II hybrid censored data. Math Sci 13, 79–95 (2019). https://doi.org/10.1007/s40096-019-0281-9

DOI: https://doi.org/10.1007/s40096-019-0281-9

Keywords

- Bayesian inference

- Burr type-III model

- SEM algorithm

- Progressive hybrid censored data

- Loss functions

- Highest posterior density (HPD)