Abstract

In the past few years, there has been increasing interest in developing project control systems. The primary purpose of such systems is to indicate whether actual performance is consistent with the baseline and to produce a signal in the case of non-compliance. Recently, researchers have shown increased interest in monitoring projects’ performance indicators by plotting them over time on Shewhart-type control charts. However, these control charts are fundamentally designed for processes and ignore project-specific dynamics, which can lead to weak results and misleading interpretations. By paying close attention to the project baseline schedule and using statistical foundations, this paper proposes a new ex ante control chart that discriminates between acceptable (as-planned) and non-acceptable (not-as-planned) variations of the project’s schedule performance. Such a control chart enables project managers to set more realistic thresholds, leading to better decisions about taking corrective and/or preventive actions. For clarity, an illustrative example shows how the ex ante control chart is constructed in practice. Furthermore, an experimental investigation has been set up to analyze the performance of the proposed control chart. As expected, the results confirm that, when a project starts to deviate significantly from its baseline schedule, the ex ante control chart shows a respectable ability to detect and report correct signals while avoiding false alarms.

Introduction

According to the Project Management Body of Knowledge (PMBOK), the performance measurement baseline (PMB) is an approved, integrated cost-schedule plan for the project work, against which project execution and all future measurements are compared to monitor and control performance (Project Management Institute 2013). Although this definition implies that both the cost and schedule dimensions need to be considered, the vast majority of research papers published in the area of project management have focused on the schedule aspect (Hochwald et al. 1966). The critical path method (CPM), the program evaluation and review technique (PERT), the graphical evaluation and review technique (GERT), and critical chain and buffer management (CCBM) are well-known baseline scheduling techniques, each resting on different assumptions (Kelly and Walker 1959; Kelly 1961; Malcolm et al. 1959; Pritsker and Whitehouse 1966; Goldratt 1997; Shahriari 2016).

Before the project starts, the baseline schedule acts as a roadmap for future project performance; during execution, it serves as a reference point against which negative and positive deviations are measured (Browning 2014; Thamhain 2013). This requires an efficient project control system that generates a negative (or even positive) signal when the project starts to deviate significantly from the baseline. Earned duration management (EDM) is such a system: it compares the project’s actual schedule performance with the baseline and provides straightforward metrics from which, after interpretation, both the size and the direction of time deviations can be measured. A difficulty with this interpretation, however, is that these performance indicators fluctuate over time, which makes it hard to discriminate between acceptable (as-planned) and non-acceptable (not-as-planned) variations in the project’s schedule performance. Furthermore, little is known about how schedule risk analysis can be used to define more realistic control limits. Consequently, the practical use of EDM faces challenges that limit its effectiveness.

To overcome this drawback and to separate acceptable (as-planned) variability from non-acceptable (not-as-planned) variation, statistical process control (SPC) techniques have recently been applied to monitor project performance (Colin 2015). In such techniques, when the gap between planned and actual performance becomes significantly large, an early-warning signal is issued so that corrective/preventive actions can be taken to bring the project back on track. However, in recent years, statistical project control has mainly been addressed from the classical perspective, which was designed for processes and ignores project-specific assumptions. Although classical Shewhart-type control charts for the schedule performance indicator have been widely proposed in the literature as a possible solution, in practice, if the project manager ignores the acceptable variations of project performance, the resulting analysis can be largely ineffective. That is why many, if not most, project managers are interested in a control chart that takes project-specific assumptions into account. Consequently, project monitoring and control needs a methodology capable of detecting situations in which the acceptable variations of project performance can heavily affect the quality of the interpretations. In these cases, a careful analysis and the right decisions require a project-specific control chart, which is the main subject of this paper.

By paying close attention to the project baseline schedule and using statistical foundations, this paper develops a simple yet effective control chart for project control that discriminates between acceptable and non-acceptable variations. When the project’s schedule performance deviates from the baseline and falls into a non-acceptable region, the proposed control chart generates a warning signal. If there is a negative gap, the signal prompts corrective and/or preventive actions to bring the project back on track. If there is a positive gap, actions such as re-baselining can be taken to exploit project opportunities. It is assumed that a baseline schedule has been constructed through baseline scheduling. Also, the actual duration of each activity is assumed to be simulated from subjective estimates within user-defined ranges. In addition, mindful that time and cost are generally correlated, this paper uses duration-based EDM metrics in which the schedule and cost dimensions are decoupled.

The rest of this paper is organized as follows. Section 2 lays out the theoretical background of the research: it gives an overview of earned duration management and of how project performance observations are calculated, continues with an overview of statistical process/quality control, and ends with a comprehensive review of research on the use of statistical methods for monitoring project performance. Section 3 presents our statistical project control approach and explains how the ex ante control chart is constructed. Section 4 illustrates how schedule performance indicators are monitored in an ex ante control chart. Section 5 describes the methodology employed for the performance analysis and shows how the experimental tests are designed before and after project execution. Section 6 presents the computational results. The last section discusses the implications of the findings for future research and provides our concluding remarks.

Literature review

Earned duration management

Earned value management (EVM) is one of the most widely used project control systems. It originated with the US Department of Defense in 1967, when contractors were required to comply with the Cost/Schedule Control Systems Criteria (C/SCSC) (Anbari 2003). Generally speaking, EVM measures a project’s time and cost performance through a set of metrics comprising key parameters, performance measures, and forecasting indicators (Vanhoucke 2014).

Despite its long practical success, traditional EVM lacks a reliable schedule performance indicator, and its schedule metrics behave unreliably toward the end of the project. EVM also measures schedule performance in monetary terms, which is undesirable for a measure of time. The earned schedule concept and earned duration management have been developed to overcome these drawbacks, potentially leading to more reliable and more applicable indices (Lipke 2009; Khamooshi and Golafshani 2014). A considerable body of literature covers the key aspects of earned value management, the earned schedule concept, and earned duration management. Interested readers are referred to Fleming and Koppelman (2010) and Anbari (2003), which are comprehensive and address fundamentals, extensions, and practical implications. For brevity, we focus only on the abbreviations and main formulas of EDM, which are summarized in Table 1.

Statistical process/quality control

If a product is produced through a stable and repeatable process, customer expectations should be met or exceeded. In such a situation, the values of the product’s quality characteristic are likely to be on target with minimal variability. Statistical process/quality control is a set of problem-solving tools, also known as the magnificent seven, for monitoring product quality. The control chart is one of these tools and is used to examine how a product’s quality characteristic changes over time. Figure 1 shows a typical control chart in which the center line (CL) represents the average or nominal value of the quality characteristic. Two other lines, the upper and lower control limits (UCL and LCL), show the acceptable range of variability of the quality characteristic. As the figure shows, when the process is in a state of statistical control, the values of the quality characteristic tend to stay around the center line (target) and fall between the upper and lower control limits.

A functional SPC chart requires calculating the limits displayed in Fig. 1 and plotting the values of the quality characteristic over time. For this purpose, assumptions need to be made about the type of quality characteristic under study and about other aspects such as the sample size and the number of subgroups. While the observations stay within the control limits (UCL and LCL), the process is assumed to be in statistical control. However, when observations show unusual behavior or fall outside the control limits, a signal is issued, meaning that significant sources of variability are present. In this situation, an investigation should be made, and corrective/preventive actions need to be taken. Systematic use of such control charts is an effective way to keep the values of the quality characteristic around the target with minimal variability. For more detail on modern statistical control charts, see Montgomery (2013) and Okland (2007), which provide a comprehensive view from basic principles to state-of-the-art concepts and applications.
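As a minimal sketch of these mechanics, the function below builds a Shewhart individuals chart and flags the points that fall outside its limits. It assumes the usual textbook convention for individual observations (see, e.g., Montgomery 2013) of estimating sigma from the average moving range divided by the constant d2 = 1.128; the function name and the data are illustrative only.

```python
from statistics import mean

D2 = 1.128  # control-chart constant d2 for moving ranges of two observations


def individuals_chart(values):
    """Return (LCL, CL, UCL) and the indices of points outside the limits.

    Sigma is estimated as MR-bar / d2, the usual choice when each
    subgroup contains a single observation.
    """
    if len(values) < 2:
        raise ValueError("need at least two observations")
    cl = mean(values)
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma_hat = mean(moving_ranges) / D2
    lcl, ucl = cl - 3 * sigma_hat, cl + 3 * sigma_hat
    signals = [i for i, x in enumerate(values) if x < lcl or x > ucl]
    return (lcl, cl, ucl), signals


limits, flagged = individuals_chart([1.0, 1.02, 0.98, 1.01, 0.99, 1.0, 0.7])
print(limits, flagged)
```

In this example, only the final point (index 6), where the characteristic drops to 0.7, falls below the lower limit and is flagged.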

Classical statistical project control

In recent years, there has been increasing interest in using statistical process/quality control techniques to monitor project performance indicators, which has led to a relatively large volume of published papers. For brevity, related articles on this subject are summarized in Table 2.

As Table 2 shows, the vast majority of published studies have focused on monitoring schedule or cost performance using Shewhart-type control charts in which the individual performance indicators are plotted against three-sigma limits. One criticism of such control charts, however, is that they ignore project-specific dynamics and can lead to weak results and misleading interpretations. Colin and Vanhoucke (2014) argue that statistical process control should be applied differently in the project management context. They present a novel control chart whose control limits are constructed from subjective estimates of activity durations and their dependencies with activity costs. However, their control chart assumes that cost is highly correlated with the time performance of the activities, which does not necessarily hold in real-life situations. More precisely, when the focus is on controlling a project’s time performance, cost-based data should not be the only proxy for assessing schedule performance. As a result, the generalizability of much of the published research on this issue is problematic. Therefore, in practice, many if not most project managers are interested in a project-specific control chart for monitoring the schedule performance of their projects.

Construction of ex ante control chart

In this section, we explain our statistical project control approach. A control chart is a very useful technique for process monitoring that analyzes process variations through data points plotted in time order. Because processes repeat the same operations over an effectively unlimited time span, process data can be gathered over time with different sample sizes. Projects, however, are fundamentally different from processes because they are executed within a finite time span. In addition, there are few samples in each review period because the number of performance indicators available for a given review period is limited. Consequently, when standard control charts are used for project control, some project-specific assumptions are not properly considered and may even be ignored. Therefore, although it shares common characteristics with the classical approaches, our control chart is functionally different. In the ex ante control chart, input data are simulated within a user-defined range that can be derived either from historical data or from expert judgment, in the same way as in schedule risk analysis, which identifies the weak parts of the network that may jeopardize the project. As a result, the ex ante control chart does not suffer from small sample sizes per time unit, since a large number of simulated samples is available for each review period. In addition, unlike standard control charts, common-cause variations in an ex ante control chart are connected directly to the baseline schedule, and a special-cause variation occurs when schedule performance falls outside the acceptable area.

Our statistical project control approach has two distinct phases. Phase I starts before project execution, once the baseline schedule has been constructed with a baseline scheduling technique. This phase allows the project manager to define tolerance limits for schedule performance in a way that properly reflects the baseline schedule. Phase II starts with project execution and continues until the project’s close-out. In this phase, the ex ante control chart is used to monitor schedule performance by comparing actual progress against the control limits. Both phases are described in the following subsections.

Phase I: preliminary analysis

Once a baseline schedule is constructed from the project data, it acts as a roadmap for future project performance and allows the project manager to follow the as-planned situation. While the project manager tries to keep actual progress as close to the baseline schedule as possible, uncertain events may cause negative and positive deviations, some of which are natural variations inherent in any project. Previous studies have assumed that project progress follows a normal distribution and, consequently, that its normal variation is acceptable (Salehipour et al. 2016). However, a risk analysis may reveal that the project baseline has critical parts in which even the slightest variation is not allowed because it pushes the project beyond its schedule baseline. To the best of our knowledge, almost all studies in the literature ignore the connection between the project’s baseline schedule and the construction of the control limits. Also, little is known about how to determine acceptable tolerances for project performance indicators (Willems and Vanhoucke 2015).

The ex ante control chart uses the project’s baseline schedule to calculate the control limits within which natural variability is allowed. The idea is that project performance is affected by variations in the durations of activities located in different parts of the baseline, as well as by estimation, measurement, and implementation errors that arise because the actual situation cannot be estimated, measured, and implemented exactly as it occurs. Hence, various scenarios of the activity duration estimates should be used in the Phase I analysis. For this purpose, Monte Carlo simulation (MCS), a well-known method for understanding the impact of risk and uncertainty, is used to simulate actual duration values within user-defined ranges (Izadi and Kimiagari 2014; Rao and Naikan 2014; Chiadamrong and Piyathanavong 2017). Once the actual durations (in working days) have been simulated for a number \({\text{nrs}}\) of simulation runs, the project performance indicators of each simulation run can be calculated for all review periods, from which the tolerance limits can be estimated.
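A rough sketch of this Phase I simulation follows. The network, the duration ranges, and the function names are hypothetical; actual durations are drawn as integers uniformly within each range, every activity starts as soon as its predecessors finish (a CPM forward pass), and the review periods are assumed to be the working days of the baseline makespan. Earned duration accumulates PD_i/AD_i for every executed day of activity i, and planned duration accumulates one day for every scheduled day, in line with the calculation walked through in the illustrative example.

```python
import random

# Hypothetical network (not the paper's): activity -> (planned duration PD,
# (min, max) range for the simulated actual duration AD, predecessors).
NETWORK = {
    "A": (2, (1, 3), []),
    "B": (8, (4, 10), []),
    "C": (3, (2, 5), ["A"]),
    "D": (4, (3, 6), ["B", "C"]),
}


def start_times(durations, network):
    """CPM forward pass: 0-based start day of each activity, which begins
    as soon as all of its predecessors have finished."""
    start = {}

    def earliest(act):
        if act not in start:
            preds = network[act][2]
            start[act] = max((earliest(p) + durations[p] for p in preds), default=0)
        return start[act]

    for act in network:
        earliest(act)
    return start


def days_done(t, start, duration):
    """Working days of an activity completed by the end of day t (t = 1, 2, ...)."""
    return min(max(t - start, 0), duration)


def simulate_edi(network, nrs, seed=0):
    """Return an nrs x T matrix of cumulative EDI values, T = baseline makespan.

    ED(t) adds PD_i / AD_i for every executed day of activity i, and
    PD(t) adds one planned day for every scheduled day of activity i.
    """
    rng = random.Random(seed)
    planned = {a: spec[0] for a, spec in network.items()}
    planned_start = start_times(planned, network)
    horizon = max(planned_start[a] + planned[a] for a in network)
    matrix = []
    for _ in range(nrs):
        actual = {a: rng.randint(*spec[1]) for a, spec in network.items()}
        actual_start = start_times(actual, network)
        row = []
        for t in range(1, horizon + 1):
            ed = sum(planned[a] / actual[a] * days_done(t, actual_start[a], actual[a])
                     for a in network)
            pd = sum(days_done(t, planned_start[a], planned[a]) for a in network)
            row.append(ed / pd)
        matrix.append(row)
    return matrix


edi = simulate_edi(NETWORK, nrs=1000)
```

Column j - 1 of the returned matrix then holds the nrs simulated values of the control variable X at review period j. If every range collapses to the planned duration, every EDI equals 1, which is a quick sanity check of the bookkeeping.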

Let each performance indicator, such as \({\text{EDI}}\), be a project control variable \(X\) whose values are the periodic or cumulative performance indices. Once the actual durations are simulated, each review period \(j, j = 1, \ldots ,{\text{RD}}\), has \({\text{nrs}}\) different scenarios of actual project progress, from which the distribution function of \(X\) can be reconstructed. For this purpose, assume that the \({\text{nrs}}\) observations at a particular point \(j\) in time come from an arbitrary continuous probability distribution \(F\), and let \(L\) and \(U\), with \(L < U\), be tolerance limits such that, for a given confidence level \(1 - \alpha\) and a minimum proportion \(P\) of the population to cover, where \(0 < \alpha < 1\) and \(0 < P < 1\), the following equation holds:

\[ \Pr\left\{ F(U) - F(L) \ge P \right\} = 1 - \alpha \qquad (1) \]

If Eq. (1) is satisfied, \(L\) and \(U\) are the exact tolerance limits of project performance at the \(j\)th review period, with a confidence level of \(100\left( {1 - \alpha } \right){\text{\% }}\) and \(P \times 100{\text{\% }}\) coverage of the population. For simplicity, \([L, U]\) can be called a two-sided \(\left( {1 - \alpha , P} \right)\) tolerance interval for project performance, whose general form is

\[ L = \bar{x} - K s, \qquad U = \bar{x} + K s, \]

where \(\bar{x}\) and \(s\) are the mean and standard deviation of the \({\text{EDI}}\), and \(K\) is the tolerance factor, which can be obtained from either parametric or nonparametric methods. In the parametric method, \(K\) is determined by solving the following equation:

where \(F_{{{\text{nrs}} - 1}}\) denotes the chi-square cumulative distribution function with \({\text{nrs}} - 1\) degrees of freedom, and \(\chi_{1, P}^{2}\) denotes the \(P\) quantile of a non-central chi-square distribution with \({\text{df}} = 1\) and non-centrality parameter \(P\). Calculating the tolerance factor \(K\) in this way relies on the assumption that the parent distribution of \({\text{EDI}}^{j}\) is normal, which is not the case in many practical situations, especially when the central limit theorem cannot be invoked. In such situations, the nonparametric method, whose only assumption is that \({\text{EDI}}^{j}\) has a continuous distribution, is preferable. According to Krishnamoorthy and Mathew (2009), to construct a nonparametric tolerance interval with a confidence level of \(100 \times \left( {1 - \alpha } \right){\text{\% }}\), a pair of order statistics \(x_{r}\) and \(x_{s}\) must be determined that covers at least \(P \times 100\) percent of the population. For this purpose, we need to determine values \(r < s\) such that

\[ \Pr\left\{ F(X_{s}) - F(X_{r}) \ge P \right\} \ge 1 - \alpha \]

Given that

Equation (5) can be simplified as

Since \(F\left( {X_{s} } \right) - F(X_{r} )\) follows a beta distribution with parameters \(s - r\) and \({\text{nrs}} - s + r + 1\), we can conclude that

\[ \Pr\left\{ F(X_{s}) - F(X_{r}) \ge P \right\} = \Pr\left\{ X \le s - r - 1 \right\}, \]

where \(X\) follows a binomial distribution with parameters \({\text{nrs}}\) and \(P\). The right-hand side of the above equation depends only on the difference \(s - r\). Therefore, the tolerance factor \(k = s - r\) can be calculated exactly as the minimum value for which a proportion \(P\) of the population is covered with at least \(1 - \alpha\) confidence. With this minimum value, \((x_{r} , x_{s} )\) is the desired nonparametric tolerance interval for project performance in the \(j\)th review period, with confidence level \(1 - \alpha\) and \(P \times 100\%\) coverage of the population. It is recommended to take \(s = {\text{nrs}} - r + 1\), in which case the tolerance interval \((x_{r} , x_{s} )\) can be rewritten as \((x_{r} , x_{{{\text{nrs}} - r + 1}} )\). For simplicity, the calculated values of \(r\) and \(s\) may be rounded down to the nearest integer.
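The search for the order statistics can be sketched as follows, assuming the symmetric choice s = nrs - r + 1 recommended above. The binomial cumulative probability is summed in log space with math.lgamma so that large numbers of simulation runs do not overflow; the function names are our own and not part of the paper.

```python
from math import exp, lgamma, log


def binom_cdf(k, n, p):
    """P(Y <= k) for Y ~ Binomial(n, p) with 0 < p < 1, summed in log space."""
    if k < 0:
        return 0.0
    total = 0.0
    for i in range(min(k, n) + 1):
        log_pmf = (lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1)
                   + i * log(p) + (n - i) * log(1 - p))
        total += exp(log_pmf)
    return min(total, 1.0)


def symmetric_rank(nrs, coverage, alpha):
    """Largest r such that (x_(r), x_(nrs-r+1)) covers at least `coverage` of the
    population with confidence >= 1 - alpha; None if even (min, max) falls short."""
    best = None
    r = 1
    while r < nrs - r + 1:
        s = nrs - r + 1
        # P{F(X_s) - F(X_r) >= P} = P{Binomial(nrs, P) <= s - r - 1}
        if binom_cdf(s - r - 1, nrs, coverage) < 1 - alpha:
            break
        best = r
        r += 1
    return best


def tolerance_limits(sample, coverage=0.95, alpha=0.05):
    """Nonparametric (1 - alpha, coverage) tolerance limits for one review period."""
    r = symmetric_rank(len(sample), coverage, alpha)
    if r is None:
        raise ValueError("too few simulation runs for this coverage and confidence")
    x = sorted(sample)
    return x[r - 1], x[len(x) - r]
```

For example, with P = 0.95 and α = 0.05, the sample minimum and maximum already form a valid interval when nrs = 93 but not when nrs = 92, which reproduces the classical distribution-free sample-size result. Taking the largest admissible r gives the narrowest symmetric interval that still meets the coverage and confidence requirements.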

Phase II: monitoring of actual performance

The purpose of this phase is to compare actual performance with the constructed control limits and to inform the project manager when the actual performance at a particular review period falls outside these limits. For this purpose, the value of \({\text{EDI}}^{j}\) in each review period \(j\) is plotted against the control limits \(L\) and \(U\) of that period. Equivalently, a hypothesis test can be used, with the null and alternative hypotheses defined as follows:

\[ H_{0}: L \le {\text{EDI}}^{j} \le U \quad \text{(in control)}, \qquad H_{1}: {\text{EDI}}^{j} < L \;\text{ or }\; {\text{EDI}}^{j} > U \quad \text{(out of control)} \]

The project is statistically in control when its actual schedule performance indicator at review period \(j\), \({\text{EDI}}^{j}\), falls between the control limits. Conversely, the project is out of control when the actual schedule performance indicator lies outside the limits. In this case, the control chart issues a signal, and the project manager is alerted that there may be a source of variation in the project. The project control team then drills down the work breakdown structure (WBS) to find the problem. The previous hypothesis test may then be modified to:

Illustrative example

This section illustrates how the ex ante control chart is constructed in practice, using an illustrative project with fictitious data. Figure 2 displays the network of this project, with eight non-dummy activities and ten activities in total. The number above each node denotes the estimated activity duration in days, and the two numbers below each node give the limits within which the simulated actual durations are drawn. Since EDM does not need cost-based data to measure schedule performance, the construction of the ex ante control chart is independent of such data. Table 3 displays the simulated actual durations for five runs, that is, the number of working days spent to complete each activity \(i\). For simplicity, the simulated actual durations have been generated from a uniform distribution between the two numbers below each node. In practice, however, any preferred distribution can be used, informed by similar projects and historical data.

The second row of Table 4 shows the cumulative planned durations of the example project for all 17 working days. The next five rows show the cumulative earned durations for the different simulation runs, obtained by dividing the planned duration of each activity \(i\) by its actual duration and adding up these values for each working day. In this project, activity 2 has a \({\text{PD}}_{2}\) of 2 working days, whereas, for example, in the first simulation run it was completed with an actual duration of 3 working days (\({\text{AD}}_{2} = 3\)). This implies that activity 2 contributed \({\text{ED}}_{2} = \frac{2}{3} \approx 0.67\) days to the project’s earned duration on each day of its execution. Similarly, the earned duration of activity 4 in the first simulation run is \({\text{ED}}_{4} = \frac{8}{4} = 2\) per day. Adding up the earned durations of the activities in progress on the first working day gives \({\text{ED}}^{1} = 2 + 0.67 \approx 2.67\). \({\text{EDI}}^{j}\) is then calculated for each working day by dividing the TED by the TPD of each simulation run. For example, the EDI of the first simulation run at the first review period is \({\text{EDI}}_{1}^{1} = \frac{2.67}{2} \approx 1.33\).
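The day-1 arithmetic for the first simulation run can be checked with exact fractions, which also shows that 2/3 rounds to 0.67. The snippet assumes, as the text implies, that activities 2 and 4 are the only activities in progress on day 1 in both the baseline and the first run, so the cumulative planned duration on that day is 2; the variable names are illustrative.

```python
from fractions import Fraction

# Run 1, working day 1, values quoted in the text: activity -> (PD_i, AD_i).
in_progress_day1 = {2: (2, 3), 4: (8, 4)}

# Each activity in progress earns PD_i / AD_i days of earned duration per working day.
ed_day1 = sum(Fraction(pd, ad) for pd, ad in in_progress_day1.values())
# Cumulative planned duration on day 1: one planned day per activity scheduled that day.
pd_day1 = 2
edi_day1 = ed_day1 / pd_day1  # EDI = TED / TPD

print(f"ED^1 = {ed_day1} ~ {float(ed_day1):.2f}, EDI^1 = {edi_day1} ~ {float(edi_day1):.2f}")
# ED^1 = 8/3 ~ 2.67, EDI^1 = 4/3 ~ 1.33
```

An EDI above 1 on day 1 means that, in this run, more duration has been earned than was planned by that day.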

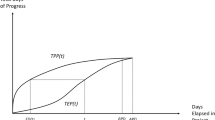

The EDIs of each working day under the different scenarios can be considered as the values of the project control variable \(X = \{ {\text{EDI}}_{1}^{j} , \ldots ,{\text{EDI}}_{\text{nrs}}^{j} \}\), from which the upper and lower control limits are constructed by Eq. (8). The ex ante control chart is then obtained from the control limits of all \(j\) review periods. In this example, we set the significance level to \(\alpha = 0.05\). For illustrative purposes, a center line (CL), obtained by averaging all \({\text{nrs}}\) EDIs at each point in time, is added to the control chart. Figure 3 displays the ex ante control chart with its lower and upper control limits and center line. The most important characteristic of this chart is that, unlike in traditional control charts, the control limits are connected directly to the baseline schedule rather than placed at \(\pm \;3\) standard deviations from the average project performance.
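Given a matrix of simulated EDIs (one row per simulation run, one column per review period) and a rank r obtained from the nonparametric tolerance-interval step, the per-period limits and the illustrative center line could be assembled as sketched below. The function name and the tiny test matrix are hypothetical.

```python
from statistics import mean


def ex_ante_limits(edi_matrix, r):
    """Per-review-period (LCL, CL, UCL) from simulated EDIs.

    edi_matrix has one row per simulation run and one column per review
    period. LCL_j and UCL_j are the order statistics x_(r) and x_(nrs-r+1)
    of column j; CL_j is the column mean (the illustrative center line).
    """
    nrs = len(edi_matrix)
    if not 1 <= r <= nrs // 2:
        raise ValueError("r must satisfy 1 <= r <= nrs // 2")
    chart = []
    for column in zip(*edi_matrix):
        x = sorted(column)
        chart.append((x[r - 1], mean(column), x[nrs - r]))
    return chart


print(ex_ante_limits([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]], r=1))
```

Because each column is sorted independently, the limits can widen or narrow from one review period to the next, following the uncertainty carried by the baseline at that point in time.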

Once the project starts, the Phase II analysis begins, and actual project progress is compared to the limits. If everything goes well, project performance should fall between the limits. Otherwise, a signal is generated, indicating a possible source of variation. Actual performance above the upper control limit probably indicates an opportunity, in which case re-baselining should be considered to exploit it. Conversely, if actual performance falls below the lower control limit, the project is probably in danger, and corrective actions are needed to get it back on track (see Fig. 3).
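A minimal sketch of this Phase II check is shown below, assuming the limits of each review period are already available as (LCL, UCL) pairs; the function name and the status labels are our own shorthand for the interpretation given above.

```python
def phase_two_signals(actual_edi, limits):
    """Compare each observed EDI^j with its (LCL_j, UCL_j) pair.

    Returns a list of (j, status) with j counted from 1 and status one of
    'in control', 'below LCL' (probable danger: corrective action) or
    'above UCL' (probable opportunity: consider re-baselining).
    """
    if len(actual_edi) > len(limits):
        raise ValueError("more observations than review periods with limits")
    result = []
    for j, (edi, (lcl, ucl)) in enumerate(zip(actual_edi, limits), start=1):
        if edi < lcl:
            status = "below LCL"
        elif edi > ucl:
            status = "above UCL"
        else:
            status = "in control"
        result.append((j, status))
    return result


print(phase_two_signals([1.0, 0.7, 1.4], [(0.9, 1.1)] * 3))
```

Observations can be fed in as the project progresses, so the list may be shorter than the number of review periods for which limits exist.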

Performance analysis methodology

The purpose of this section is to determine how well the ex ante control chart detects project anomalies in realistic situations. For this purpose, an extensive simulation study has been conducted under different scenarios on various network topologies. The performance analysis methodology is described in the following subsections and summarized in Fig. 4.

The project generation

The ex ante control chart is tested on a fictitious data set of 900 project networks generated by RanGen with different values of the topological measure \({\text{SP}}\) (serial/parallel indicator). \({\text{SP}}\) measures how close a project network is to a completely serial or a completely parallel network and is calculated by

\[ {\text{SP}} = \begin{cases} 1 & \text{if } n = 1, \\ \dfrac{m - 1}{n - 1} & \text{if } n > 1, \end{cases} \]

where \(m\) is the maximum number of activities on a single path and \(n\) is the number of project activities (Vanhoucke et al. 2008; Elmaghraby 2000). When \({\text{SP}} = 1\), the project network is completely serial because \(m = n\). As \(m\) decreases toward one, the network moves toward a parallel topology, and \({\text{SP}} = 0\) indicates a completely parallel network. A total of 100 projects have been generated for each \({\text{SP}}\) value from 0.1 to 0.9 in steps of 0.1, i.e., \({\text{SP}} = \{ 0.1, \ldots ,0.9\}\). This allows us to include projects with topological structures ranging from nearly serial to nearly parallel in the experimental analysis.
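The indicator is straightforward to compute once m is known. The sketch below obtains m as the number of activities on the longest path of an acyclic activity-on-node network (a memoized depth-first search) and applies SP = (m - 1)/(n - 1), with SP = 1 for a single activity; the successor-dictionary representation and the function name are assumptions for illustration.

```python
def serial_parallel_indicator(successors):
    """SP indicator of an acyclic activity-on-node network.

    successors maps every activity to the activities that directly follow it
    (dummy start/end nodes excluded). m is the largest number of activities
    on a single path and n is the number of activities.
    """
    n = len(successors)
    if n == 1:
        return 1.0
    depth = {}

    def longest_from(act):
        # Number of activities on the longest path that starts at `act`.
        if act not in depth:
            depth[act] = 1 + max((longest_from(s) for s in successors[act]), default=0)
        return depth[act]

    m = max(longest_from(a) for a in successors)
    return (m - 1) / (n - 1)


print(serial_parallel_indicator({1: [2, 3], 2: [4], 3: [4], 4: []}))
```

A pure chain of n activities gives m = n and therefore SP = 1, whereas n unconnected activities give m = 1 and SP = 0, matching the two extremes described above.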

Phase I simulation modeling

Phase I analysis aims at constructing the control limits used in the ex ante control chart. It therefore starts by scheduling the project activities with the critical path method to obtain the baseline schedule and its planned durations. Once the baseline schedule is created, we need to define the limits within which the fictitious actual durations are generated. These limits reflect the fact that activity durations are usually subject to risks that should be identified and considered in advance. In addition, incomplete knowledge of how the project will progress may introduce uncertainty into the activity durations, which should be recognized before it materializes. The fictitious actual durations in this phase are generated within limits specified by the Phase I parameter \(\delta_{\text{I}} = \{ 0.2,0.4,0.6,0.8\}\), which determines how far a fictitious activity duration may deviate from its nominal duration. This allows us to consider different degrees of uncertainty in our experiments. For simplicity, we generate durations from the uniform distribution; in practice, however, any other distribution may be used. Monte Carlo simulation is then used to construct the control limits of the ex ante control chart from \({\text{nrs}}\) simulation runs.
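One possible reading of the δ parameters, sketched below, is a symmetric relative range: each duration is drawn uniformly from [(1 - δ)d, (1 + δ)d] around its nominal value d. The text does not spell out this range, so the formula and the function names are assumptions; with δ = 0 the draw collapses to the nominal duration.

```python
import random


def sample_duration(nominal, delta, rng):
    """Draw one duration uniformly from [(1 - delta) * nominal, (1 + delta) * nominal].

    This symmetric relative range is an assumed reading of the delta parameter.
    """
    if not 0 <= delta < 1:
        raise ValueError("delta must lie in [0, 1)")
    return rng.uniform((1 - delta) * nominal, (1 + delta) * nominal)


def sample_run(nominal_durations, delta, seed=None):
    """One Monte Carlo run: a duration for every activity, using the same delta."""
    rng = random.Random(seed)
    return [sample_duration(d, delta, rng) for d in nominal_durations]


# Example: one Phase I run with delta_I = 0.4 for three activities.
print(sample_run([2, 8, 3], delta=0.4, seed=42))
```

Passing an explicit seed makes each run reproducible, which helps when the same scenarios must be replayed for different chart settings.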

Phase II simulation modelling

In practice, projects rarely follow their plans exactly and are either behind or ahead of schedule. To model such situations, we define the Phase II parameter \(\delta_{\text{II}} = \{ 0,0.2,0.4,0.6,0.8\}\). Whereas \(\delta_{\text{I}}\) produces acceptable variations in the fictitious activity durations, \(\delta_{\text{II}}\) acts as a source of variation in actual project progress and, under the uniform distribution, produces on-schedule, behind-schedule, and ahead-of-schedule situations. Different combinations of these two parameters ensure that a wide range of project progress scenarios is covered in both Phase I and Phase II of the Monte Carlo simulations. The third box in Fig. 4 shows the effect of the Phase I and Phase II parameters, \(\delta_{\text{I}}\) and \(\delta_{\text{II}}\), on activity durations. In this box, (a) shows the ahead-of-schedule scenario, in which \(\delta_{\text{I}} < \delta_{\text{II}}\); (b) shows the on-schedule scenario, in which \(\delta_{\text{I}} = \delta_{\text{II}}\); and (c) shows the behind-schedule scenario, in which \(\delta_{\text{I}} > \delta_{\text{II}}\). It can be said that with an increase in \(\delta_{\text{I}}\) against \(\delta_{\text{II}}\), an ahead-of-schedule situation is created. On the contrary, a decrease in \(\delta_{\text{I}}\) against \(\delta_{\text{II}}\) leads to the behind-schedule situation.

Project control efficiency measures

Appropriate measures are needed to assess the ability of the ex ante control chart to detect abnormal project progress. For this purpose, three performance measures have been proposed. The first is the true alarm rate, which refers to the probability of correctly detecting an abnormal situation in a particular scenario, i.e., how many correct signals are generated among the review periods of the project execution. It is calculated by dividing the number of signals produced by the ex ante control chart in review periods where one or more activities are ahead of or behind schedule by the total number of such review periods. The second is the false alarm rate, which refers to the probability of falsely detecting an abnormal situation, i.e., how many wrong signals are generated among the review periods of the project execution. A false alarm occurs when a signal is generated even though all activities are executed as planned.
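Both rates can be computed for a simulated project from two Boolean sequences over its review periods: whether the chart signalled, and whether the period was actually off plan. The sketch below assumes this per-period labelling; the function name is hypothetical.

```python
def alarm_rates(signals, abnormal):
    """True and false alarm rates over the review periods of one simulated project.

    signals[j]: True if the ex ante chart signalled at review period j.
    abnormal[j]: True if at least one activity was ahead of or behind plan at j.
    A rate is NaN when its denominator is zero.
    """
    if len(signals) != len(abnormal):
        raise ValueError("signals and abnormal must cover the same review periods")
    true_alarms = sum(1 for s, a in zip(signals, abnormal) if s and a)
    false_alarms = sum(1 for s, a in zip(signals, abnormal) if s and not a)
    n_abnormal = sum(1 for a in abnormal if a)
    n_normal = len(abnormal) - n_abnormal
    tar = true_alarms / n_abnormal if n_abnormal else float("nan")
    far = false_alarms / n_normal if n_normal else float("nan")
    return tar, far


print(alarm_rates([True, True, False, False, True], [True, True, True, False, False]))
```

Here two of the three abnormal periods are caught (true alarm rate 2/3), and one of the two as-planned periods triggers a signal (false alarm rate 0.5).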

The third measure is the area under the receiver operating characteristic (ROC) curve. The ROC concept originated in signal detection theory, developed during World War II to analyze radar performance in detecting a correct blip on the radar screen (Hanley and McNeil 1982). The ROC curve is drawn by plotting the true alarm rate against the false alarm rate at various settings of the chart (for example, different control levels). Because the area under the ROC curve, also known as AUC, is a single, unit-less number, it can be used to measure discrimination performance, that is, the ability of the ex ante control chart to classify true and false signals correctly. AUC can be estimated either nonparametrically (with the trapezoidal rule) or parametrically (by maximum likelihood, typically under a binormal model). Both methods are available in computer programs such as Minitab and Matlab.
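As a minimal sketch of the nonparametric route, the area under an empirical ROC curve can be accumulated trapezoid by trapezoid from (false alarm rate, true alarm rate) pairs, for instance pairs obtained by running the chart at several control levels. The function name and the choice to close the curve at (0, 0) and (1, 1) are our assumptions; the parametric (binormal maximum likelihood) estimate is not shown.

```python
def trapezoidal_auc(points):
    """Area under an ROC curve from (false_alarm_rate, true_alarm_rate) pairs.

    The end points (0, 0) and (1, 1) are added, duplicates are dropped, and
    the points are sorted by false alarm rate before integrating.
    """
    pts = sorted({(0.0, 0.0), (1.0, 1.0), *((float(f), float(t)) for f, t in points)})
    area = 0.0
    for (f0, t0), (f1, t1) in zip(pts, pts[1:]):
        # Trapezoid between two neighbouring operating points.
        area += (f1 - f0) * (t0 + t1) / 2.0
    return area


print(trapezoidal_auc([(0.1, 0.8), (0.3, 0.95)]))
```

A single operating point on the diagonal, such as (0.5, 0.5), yields an AUC of 0.5, which corresponds to the no-discrimination case.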

Computational results and discussion

This section presents our findings on the performance of the ex ante control chart in detecting out-of-plan situations. Following the methodology described above and combining the values of \({\text{SP}}\), \(\delta_{\text{I}}\), and \(\delta_{\text{II}}\), we obtain a total of \(9 \times 4 \times 5 = 180\) simulation experiments. Table 3 summarizes the AUC values for the different scenarios as a measure of discrimination. Hosmer and Lemeshow (2000) provide general rules for interpreting AUC values, which can be paraphrased as follows. A value of \({\text{AUC}} = 0.5\) indicates no discrimination: true and false signals are generated as if by a Bernoulli trial, independently of the project's actual state. As the AUC value approaches one, the ex ante control chart discriminates better; in the limit, the true and false alarm rates tend to one and zero, respectively, which is the optimal situation.

Since almost all the AUC values in Table 3 are greater than 0.9, we can conclude that the ex ante control chart can reasonably identify deviations while avoiding false alarms.
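Hosmer and Lemeshow's (2000) rules are commonly summarized as follows: 0.5 indicates no discrimination, 0.7 to 0.8 acceptable, 0.8 to 0.9 excellent, and 0.9 or more outstanding discrimination. A small helper (its name is hypothetical) that attaches these labels to AUC values such as those in Table 3 might look as below; the label "poor" for values under 0.7 is our own shorthand, since those rules single out only the value 0.5 in that range.

```python
def describe_auc(auc: float) -> str:
    """Verbal label for an AUC value, following Hosmer and Lemeshow's (2000) bands."""
    if not 0.0 <= auc <= 1.0:
        raise ValueError("AUC must lie in [0, 1]")
    if auc >= 0.9:
        return "outstanding"
    if auc >= 0.8:
        return "excellent"
    if auc >= 0.7:
        return "acceptable"
    # Our shorthand: H&L name 0.5 "no discrimination" but give no label for (0.5, 0.7).
    return "poor"


print(describe_auc(0.9857))
```

For the scenario with \(\delta_{\text{I}} = 0.2\) and \(\delta_{\text{II}} = 0.8\), whose AUC is 0.9857, the helper returns "outstanding".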

The AUC values reported in Table 5 have been calculated from the true and false alarm probabilities under different network topologies. For example, \({\text{AUC}} = 0.9857\) for the case \(\delta_{\text{I}} = 0.2\) and \(\delta_{\text{II}} = 0.8\) indicates that the ex ante control chart adequately detects abnormal schedule performance while avoiding false alarms. Figure 5 portrays the area under the ROC curve for this case.

The construction of the control limits is affected by another parameter, the control level \(\alpha\), which is the probability of rejecting a true null hypothesis. More precisely, \(\alpha\) is the probability of generating a signal when the project is actually on schedule. The control limits are taken from the lower and upper tails of the empirical distribution function, and increasing \(\alpha\) increases the tail probability at which these quantiles are read, which pulls both limits inwards. Since the acceptance region for the null hypothesis then shrinks, we accept a greater probability of making a wrong decision. Figure 6 depicts the effect of the control level \(\alpha\) on the area under the curve for a particular scenario. For illustrative purposes, the true alarm rates for different values of \(\alpha\) have been plotted against 1 − false alarm rate.
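The sketch below illustrates how empirical-quantile control limits respond to \(\alpha\). It assumes, purely for illustration, that phase I produces a matrix of simulated values of a schedule performance indicator (one row per Monte Carlo run, one column per review period), that the indicator equals 1.0 when the project is as planned, and that \(\alpha\) is split equally between the two tails; the data are synthetic and the function name `ex_ante_limits` is hypothetical. Under these assumptions, larger \(\alpha\) values narrow the acceptance band.

```python
import numpy as np


def ex_ante_limits(simulated: np.ndarray, alpha: float):
    """Per-review-period control limits from phase I simulations.

    simulated: array of shape (n_runs, n_periods); each row is one Monte Carlo
               run of a schedule performance indicator under acceptable variation.
    alpha:     control level, assumed here to be split equally over both tails.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    lower = np.quantile(simulated, alpha / 2.0, axis=0)
    upper = np.quantile(simulated, 1.0 - alpha / 2.0, axis=0)
    return lower, upper


rng = np.random.default_rng(seed=1)
# Hypothetical phase I output: 5000 runs x 10 review periods centred on 1.0 (as planned).
sims = rng.normal(loc=1.0, scale=0.05, size=(5000, 10))

widths = {}
for a in (0.01, 0.05, 0.10):
    lower, upper = ex_ante_limits(sims, a)
    widths[a] = float(np.mean(upper - lower))
    print(f"alpha = {a:.2f}: mean band width = {widths[a]:.4f}")
```

Reading the limits per review period keeps them tied to the baseline schedule, while the equal split between tails is only one possible convention.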

Both the true and false alarm rates matter, and in the optimal situation they should be as close as possible to one and zero, respectively. However, we give priority to a low false alarm rate over a high true alarm rate, because every false alarm demands considerable effort in drilling down the work breakdown structure (WBS) to find the source of variation. Hence, we recommend setting a lower control level \(\alpha\) to avoid such costly effort.

When the ex ante control chart is used in practice, some concerns arise that should be considered in advance. First, in this paper the capability of the ex ante control chart has been measured on fictitious projects with simulated data. Although the results reveal relatively reliable performance across all simulated scenarios, real projects differ from one another even when they share some characteristics. For example, in reality a small change in one characteristic of a project (such as its uncertainty) may make a critical difference to the project's final status. Therefore, the performance analysis of the ex ante control chart can be extended to real projects using empirical project data. To better understand and improve the performance of the ex ante control chart, interested readers are encouraged to implement it in their own projects. In addition, the Operations Research and Scheduling research group at Ghent University has compiled an empirical project database that is freely available at www.projectmanagement.ugent.be. At the time of writing, it contains 91 projects with information such as the baseline schedule, risk analysis, and project control data.

Another important issue in monitoring the project schedule is that the critical path may change during project execution. The original critical path is determined by the CPM analysis of the baseline schedule. However, depending on actual performance, usually because of significant delays in non-critical activities, the critical path may shift. Contrary to common perception, then, the critical path is dynamic. In this situation, the ex ante control chart remains applicable if the control limits are recalculated from the updated information.
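A change of critical path of this kind can be reproduced with a plain CPM forward pass. In the hypothetical three-activity network below, A and B both precede C. In the baseline, A is critical, but a delay in the non-critical activity B makes B critical instead. The function `critical_path` is our own illustrative sketch, not part of the ex ante control chart, and it assumes the activities are listed in topological order. In the setting of this paper, such a change would be followed by recalculating the control limits.

```python
from __future__ import annotations


def critical_path(durations: dict[str, float], preds: dict[str, list[str]]) -> list[str]:
    """Return one critical path of an activity-on-node network via a CPM forward pass.

    durations: activity -> duration, listed in a topological order.
    preds: activity -> list of its immediate predecessors.
    """
    finish: dict[str, float] = {}
    driver: dict[str, str | None] = {}  # predecessor that determines each start time
    for act, dur in durations.items():
        start, driver[act] = 0.0, None
        for p in preds.get(act, []):
            if finish[p] > start:
                start, driver[act] = finish[p], p
        finish[act] = start + dur
    # Trace back from the activity that finishes last (the project end).
    node: str | None = max(finish, key=finish.get)
    path: list[str] = []
    while node is not None:
        path.append(node)
        node = driver[node]
    return path[::-1]


preds = {"C": ["A", "B"]}
baseline = {"A": 4, "B": 3, "C": 2}
actual = {**baseline, "B": 6}  # non-critical activity B is delayed by 3 periods
print("baseline critical path:", critical_path(baseline, preds))
print("updated critical path: ", critical_path(actual, preds))
```

The baseline path is A, C (project duration 6); after the delay it becomes B, C (project duration 8).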

Conclusions and future research avenues

Project performance needs to be monitored during execution to ensure that the actual performance follows the plan. One way to do this is to use statistical control charts on which project performance indicators are plotted over time. Recently, researchers have shown increasing interest in monitoring project performance with different types of control charts. However, these approaches often ignore project-specific assumptions and may lead to unclear interpretations of the actual situation.

The first point to consider when statistical control charts are used for project control is that projects differ from processes. Building on this difference, this paper proposes an ex ante control chart that pays particular attention to project-specific assumptions while sharing some common characteristics with the classical charts. The ex ante control chart is constructed in two phases. Phase I looks forward and constructs the control limits so that the weak and strong parts of the baseline are taken into account. This phase shows how schedule risk analysis can be used to define more realistic control limits. In addition, the data collection method, the sample size per review period, the project time span, and the acceptable deviations are considered with a focus on project-specific properties. Phase II looks backward and compares the actual performance with the previously constructed limits. In this way, anomalies in project schedule performance can be accurately identified in both the positive and negative directions, and the project control team can then examine the sources of variation. Taken together, these phases suggest a role for statistical control charts in project monitoring and control, enabling the project manager to keep the project on track.
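Phase II reduces to a period-by-period comparison of the observed indicator with the limits fixed in phase I. A minimal sketch is given below, assuming the limits are already available for every review period and that observations arrive only up to the current period; the function name is hypothetical. Whether a value above the upper limit means ahead of or behind schedule depends on the indicator being plotted, so the sketch only reports the direction of the violation.

```python
from __future__ import annotations

from typing import Sequence


def phase_ii_signals(
    actual: Sequence[float], lower: Sequence[float], upper: Sequence[float]
) -> list[str | None]:
    """Compare observed indicator values with the phase I limits, period by period.

    Returns "above", "below", or None (no signal) for each review period observed
    so far; zip stops at the last observed period.
    """
    out: list[str | None] = []
    for value, lo, hi in zip(actual, lower, upper):
        if value > hi:
            out.append("above")
        elif value < lo:
            out.append("below")
        else:
            out.append(None)
    return out


# Two review periods observed so far, limits known for all three in advance.
print(phase_ii_signals([1.0, 1.12], [0.9, 0.92, 0.93], [1.1, 1.08, 1.07]))
```

Because the limits are built before execution, each new observation can be checked as soon as it is recorded.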

For illustrative purposes, the application of the ex ante control chart has been demonstrated on a fictitious project. In addition, an extensive simulation study has been conducted to examine the performance of the proposed chart. As expected, the simulation results show that the ex ante control chart detects abnormal behavior by generating true signals across the simulated scenarios and network topologies. It also has a low false alarm rate, which avoids much unnecessary effort in searching for the source(s) of variation.

In conclusion, the ex ante control chart is a tool that is easy to set up, understand, and interpret, and it focuses on monitoring the schedule performance of a project. In addition, it can be constructed without cost-related data. However, our analysis in this paper is limited to monitoring schedule performance; it may be extended to other aspects of project performance. Moreover, the strong performance of the ex ante control chart in detecting abnormal project progress across the simulated scenarios does not guarantee that it is the best available tool for project monitoring, and this should be investigated more deeply in real-life projects. Finally, the effect of critical and near-critical activities on the performance of the ex ante control chart may require further investigation.

References

Aliverdi R, Moslemi Naeni L, Salehipour A (2013) Monitoring project duration and cost in a construction project by applying statistical quality control charts. Int J Project Manage 31(3):411–423

Anbari FT (2003) Earned value project management, method and extensions. Project Manag J 34(4):12–23

Bauch GT, Chung CA (2001) A statistical project control tool for engineering managers. Project Manag J 32:37–44

Bromilow FJ (1969) Contract time performance expectations and the reality. Building Forum 1(3):70–80

Browning TR (2014) A quantitative framework for managing project value, risk, and opportunity. IEEE Trans Eng Manage 61(4):583–598

Byung-Cheol K (2015) Integrating risk assessment and actual performance for probabilistic project cost forecasting: a second moment Bayesian model. IEEE Trans Eng Manage 62(2):158–170

Chen H-L (2014) Improving forecasting accuracy of project earned value metrics: linear modeling approach. J Manag Eng 30(2):135–145

Chen H-L, Chen W-T, Lin Y-L (2016) Earned value project management: improving the predictive power of planned value. Int J Project Manage 34(1):22–29

Chiadamrong N, Piyathanavong V (2017) Optimal design of supply chain network under uncertainty environment using hybrid analytical and simulation modeling approach. J Ind Eng Int 13:465–478

Colin J (2015) Developing a framework for statistical process control approaches in project management. Int J Project Manage 33(6):1289–1300

Colin J, Vanhoucke M (2014) Setting tolerance limits for statistical project control using earned value management. Omega 49:107–122

Company T (1958) Statistical quality control handbook. Western Electric Company, New York

Demeulemeester E, Vanhoucke M, Herroelen W (2003) A random network generator for activity-on-the-node networks. J Sched 6(1):17–38

DiCiccio TJ, Efron B (1996) Bootstrap confidence intervals. Stat Sci 11:189–212

Du J, Kim B-C, Zhao D (2016) Cost performance as a stochastic process: EAC projection by Markov Chain simulation. J Constr Eng Manage 142(6):04016009

Efron B (1982) The jackknife, the bootstrap and other resampling plans. Society for Industrial and Applied Mathematics, Philadelphia

Elmaghraby SE (2000) On criticality and sensitivity in activity networks. Eur J Oper Res 127(2):220–238

Fleming QW, Koppelman JM (2010) Earned value project management. Project Management Institute, Newtown Square

Goldratt EM (1997) Critical chain: a business novel. North River Press, Great Barrington

Hanley JA, McNeil BJ (1982) The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 143(1):29–36

Hochwald W, Miller BU, Ashcraft WD (1966) SCOPE: (schedule, cost, performance) planning and control system. IEEE Trans Eng Manage 1(1):2–16

Holmes DS, Mergen A (1993) Improving the performance of the T2 control chart. Qual Eng 5(4):619–625

Hosmer DW Jr, Lemeshow S (2000) Applied logistic regression. Wiley, New York

Hulett D (2012) Integrated cost-schedule risk analysis. Gower Publishing Ltd, Aldershot

Izadi A, Kimiagari A (2014) Distribution network design under demand uncertainty using genetic algorithm and Monte Carlo simulation approach: a case study in pharmaceutical industry. J Ind Eng Int 10(2):50

Kamyabnya A, Bagherpour M (2014) Risk-based earned value management: a novel perspective in software engineering. Int J Ind Syst Eng 17(2):170–185

Kelly JE Jr (1961) Critical-path planning and scheduling: mathematical basis. Oper Res 9(3):296–320

Kelly JE Jr, Walker MR (1959) Critical path planning and scheduling: an introduction. In: Eastern joint IRE-AIEE-ACM computer conference, pp 160–173

Khamooshi H, Golafshani H (2014) EDM: earned duration management, a new approach to schedule performance management and measurement. Int J Project Manage 32(6):1019–1041

Kim B-C (2014) Dynamic control thresholds for consistent earned value analysis and reliable early warning. J Manag Eng 31(5):04014077

Kim B-C (2015) Probabilistic evaluation of cost performance stability in earned value management. J Manag Eng 32(1):04015025

Kim Y-W, Ballard G (2010) Management thinking in the earned value method system and the last planner system. J Manag Eng 26(4):223–228

Kim B-C, Kim H-J (2014a) Sensitivity of earned value schedule forecasting to S-curve patterns. J Constr Eng Manage 140(7):04014023

Kim S-C, Kim Y-W (2014b) Computerized integrated project management system for a material pull strategy. J Civil Eng Manage 20(6):849–863

Kim T, Kim Y-W, Cho H (2015) Customer earned value: performance indicator from flow and value generation view. J Manag Eng 32(1):04015017

Kopmann J, Kock A, Killen CP, Gemünden HG (2015) Business case control in project portfolios—an empirical investigation of performance consequences and moderating effects. IEEE Trans Eng Manage 62(4):529–543

Krishnamoorthy K, Mathew T (2009) Statistical tolerance regions: theory, applications, and computation. Wiley, New York

Leu S-S, Lin Y-C (2008) Project performance evaluation based on statistical process control techniques. J Constr Eng Manage 134(10):813–819

Lipke WH (2009) Earned schedule. Lulu, Chicago

Malcolm DG, Roseboom JH, Clark CE, Fazar W (1959) Application of a technique for research and development program evaluation. Oper Res 7(5):646–669

McConnell DR (1985) Earned value technique for performance measurement. J Manage Eng 1(2):79–94

Montgomery DC (2013) Introduction to statistical quality control. Wiley, New York

Mortaji S, Noorossana R, Bagherpour M (2015) Project completion time and cost prediction using change point analysis. J Manag Eng 31(5):04014086

Nelson LS (1984) The Shewhart control chart—tests for special causes. J Qual Technol 16(4):237–239

Nelson LS (1985) Interpreting Shewhart X control charts. J Qual Technol 17(2):114–116

Okland JS (2007) Statistical process control, 6th edn. Taylor & Francis, Boca Raton

Padalkar M, Gopinath S (2016) Six decades of project management research: thematic trends and future opportunities. Int J Project Manage 34(7):1305–1321

Pritsker AB, Whitehou G (1966) GERT: graphical evaluation and review technique. J Ind Eng 17(6):293–300

Project Management Institute (2013) A guide to the project management body of knowledge. Project Management Institute, Newtown Square, PA

Rao M, Naikan V (2014) Reliability analysis of repairable systems using system dynamics modeling and simulation. J Ind Eng Int 10:1–10

Runger GC, Alt FB, Montgomery DC (1996) Contributors to a multivariate statistical process control chart signal. Commun Stat 25(10):2203–2213

Russell JS, Jaselskis EJ, Lawrence SP (1997) Continuous assessment of project performance. J Constr Eng Manage 123(1):64–71

Salehipour A, Naeni LM, Khanbabaei R, Javaheri A (2016) Lessons learned from applying the individuals control charts to monitoring autocorrelated project performance data. J Constr Eng Manage 142(5):04015105

Shahriari M (2016) Multi-objective optimization of discrete time–cost tradeoff problem in project networks using non-dominated sorting genetic algorithm. J Ind Eng Int 12(2):159–169

Thamhain HJ (2013) Contemporary methods for evaluating complex project proposals. J Ind Eng Int 9(1):34–40

Van Slyke RM (1963) Monte Carlo methods and the PERT problem. Oper Res 11(5):839–860

Vanhoucke M (2009) Measuring time: improving project performance using earned value management. Springer, Belgium

Vanhoucke M (2012) Project management with dynamic scheduling. Springer, Berlin

Vanhoucke M (2014) Integrated project management and control: first comes the theory, then the practice. Springer, London

Vanhoucke M, Coelho J, Debels D, Maenhout B, Tavares LV (2008) An evaluation of the adequacy of project network generators with systematically sampled networks. Eur J Oper Res 187(2):511–524

Wang Q, Jiang N, Gou L, Che M, Zhang R (2006) Practical experiences of cost/schedule measure through earned value management and statistical process control. In: Wang Q, Pfahl D, Raffo DM, Wernick P (eds) Software process change. Springer, Berlin, pp 348–354

Wauters M, Vanhoucke M (2014) Study of the stability of earned value management forecasting. J Constr Eng Manage 141(4):04014086

Western Electric (1956) Statistical quality control handbook. Western Electric Corporation, Indianapolis

Willems LL, Vanhoucke M (2015) Classification of articles and journals on project control and earned value management. Int J Project Manage 33(7):1610–1634

Williams T (1995) A classified bibliography of recent research relating to project risk management. Eur J Oper Res 85(1):18–38

Zhang S, Du C, Sa W, Wang C, Wang G (2013) Bayesian-based hybrid simulation approach to project completion forecasting for underground construction. J Constr Eng Manage 140(1):04013031

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Mortaji, S.T.H., Noori, S., Noorossana, R. et al. An ex ante control chart for project monitoring using earned duration management observations. J Ind Eng Int 14, 793–806 (2018). https://doi.org/10.1007/s40092-017-0251-5

DOI: https://doi.org/10.1007/s40092-017-0251-5