Abstract

This paper presents a new approach to solving Fractional Programming Problems (FPPs) based on two Swarm Intelligence (SI) algorithms: Particle Swarm Optimization (PSO) and the Firefly Algorithm (FA). The two algorithms are tested on several FPP benchmark examples and two selected industrial applications. The tests aim to demonstrate the capability of the SI algorithms to solve different types of FPPs. The results obtained with the SI algorithms are compared with a number of exact and metaheuristic solution methods used for handling FPPs. Swarm Intelligence is shown to be an effective technique for solving linear or nonlinear, non-differentiable fractional objective functions. Problems with an optimal solution at a finite point and an unbounded constraint set can be solved using the proposed approach. Numerical examples are given to show the feasibility, effectiveness, and robustness of the proposed algorithms. The results obtained using the two SI algorithms show the superiority of the proposed technique over the others in computational time. Better accuracy was also observed in the solutions of the industrial application problems.

Introduction

This paper considers the following general Fractional Programming Problem (FPP) mathematical model (Jaberipour and Khorram 2010):

where the functions involved are linear, quadratic, or more general functions. Fractional programming of the form of Eq. (1) arises in practice whenever rates such as the ratios (profit/revenue), (profit/time), and (-waste of raw material/quantity of used raw material) are to be maximized; often these problems are linear or at least concave–convex fractional programs. Fractional programming is a nonlinear programming method that has received increasing attention recently, and its importance in solving concrete problems is steadily growing. Furthermore, nonlinear optimization models describe practical problems much better than linear optimization models, which rely on many simplifying assumptions. FPPs are particularly useful in the solution of economic problems in which different activities use certain resources in different proportions, while the objective is to optimize a certain indicator, usually the most favorable return-on-allocation ratio, subject to the constraints imposed on the availability of resources. They also have a number of important practical applications in manufacturing, administration, transportation, data mining, etc.

The methods for solving FPPs can be broadly classified into exact (traditional) and metaheuristic approaches.

Among the traditional methods, Wolf (1985) introduced the parametric approach, and Charnes and Cooper (1973) solved linear FPPs by converting the FPP into an equivalent linear programming problem (LPP) and solving it with existing standard LPP algorithms. Farag (2012), Hasan and Acharjee (2011), Hosseinalifam (2009), and Stancu-Minasian (1997) reviewed some of the methods for solving the FPP, such as the primal and dual simplex algorithms. The criss-cross method, which is based on pivoting, either solves the problem or indicates that it is infeasible or unbounded within a finite number of iterations. The interior point method and the Dinkelbach algorithm both reduce the solution of the linear fractional programming (LFP) problem to the solution of a sequence of LP problems. Other methods include the Isbell–Marlow method, the Martos algorithm, the Cambini–Martein algorithm, the Bitran and Novaes method, Swarup's method, and the method of Harvey M. Wagner and John S. C. Yuan. Hasan and Acharjee developed a new method for solving the LFP problem based on the idea of solving a sequence of auxiliary problems whose solutions converge to the solution of the FPP.
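As a worked illustration of the Charnes and Cooper conversion mentioned above, the textbook form of the transformation is sketched below. The symbols $c$, $d$, $\alpha$, $\beta$, $A$, $b$, $y$, and $t$ are notation assumed here for illustration and are not taken from the paper; the denominator is assumed to be strictly positive on the feasible set.

```latex
% Linear fractional program (assumed standard form)
\max_{x}\ \frac{c^{\top}x+\alpha}{d^{\top}x+\beta}
\quad\text{s.t.}\quad Ax\le b,\quad x\ge 0,\quad d^{\top}x+\beta>0 .

% Substitution: t = 1/(d^{\top}x+\beta), \; y = t\,x

% Equivalent linear program in (y, t)
\max_{y,\,t}\ c^{\top}y+\alpha t
\quad\text{s.t.}\quad Ay-bt\le 0,\quad d^{\top}y+\beta t=1,\quad y\ge 0,\quad t\ge 0 .
```

When the linear program has an optimal solution with $t>0$, a solution of the fractional program is recovered as $x=y/t$.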

Moreover, several recent approaches employ traditional mathematical methods for solving ratio optimization FPPs. Dür et al. (2007) introduced an algorithm called dynamic multistart improving Hit-and-Run (DMIHR) and applied it to the class of fractional optimization problems. DMIHR combines IHR, a well-established stochastic search algorithm, with restarts. The development of this algorithm is based on a theoretical analysis of Multistart Pure Adaptive Search, which relies on the Lipschitz constant of the optimization problem. Shen et al. (2009) proposed an algorithm for solving sum of quadratic ratios fractional programs via monotonic functions. The algorithm reformulates the problem as a monotonic optimization problem, and the solution it provides is guaranteed to be feasible and close to the actual optimal solution. Jiao et al. (2013) presented a global optimization algorithm for the sum of generalized polynomial ratios problem, which arises in various engineering design problems. By utilizing an exponential transformation and a new three-level linear relaxation method, a sequence of linear relaxation programs of the initial nonconvex programming problem is derived and embedded in a branch and bound algorithm. The algorithm was shown to attain finite convergence to the global minimum through successive refinement of a linear relaxation of the feasible region and/or of the objective function and the subsequent solution of a series of linear programming sub-problems.

A few studies in recent years have used metaheuristic approaches to solve FPPs. Sameeullah et al. (2008) presented a genetic algorithm-based method to solve linear FPPs. A set of solution points is generated using random numbers, the feasibility of each solution point is verified, and the fitness values of all the feasible solution points are obtained. Among the feasible solution points, the best solution point is identified and then replaces the worst solution point. Pairs of solution points are used for crossover, and a new set of solution points is obtained. These steps are repeated for a certain number of generations, and the best solution for the given problem is obtained. Calvete et al. (2009) developed a genetic algorithm for the class of bi-level problems in which both level objective functions are linear fractional and the common constraint region is a bounded polyhedron. Jaberipour and Khorram (2010) proposed an algorithm for the sum-of-ratios problem based on the harmony search algorithm. Bisoi et al. (2011) developed neural networks for nonlinear FPPs. That research proposed a new projection neural network model that is theoretically guaranteed to solve variational inequality problems. The multi-objective minimax nonlinear fractional programming problem was defined, and its optimality conditions were derived using Lagrangian duality. The equilibrium points of the proposed neural network model were found to correspond to the Karush–Kuhn–Tucker points associated with the nonlinear FPP. Xiao (2010) presented a neural network method for solving a class of linear fractional optimization problems with linear equality constraints. The proposed neural network model has the following two properties. First, the set of optima of the problem coincides with the set of equilibria of the neural network model, which means the proposed model is complete. Second, the model globally converges to an exact optimal solution for any starting point in the feasible region. Pal et al. (2013) used the Particle Swarm Optimization algorithm for solving FPPs. Hezam and Raouf (2013a, b, c) introduced solutions for integer FPPs and complex variable FPPs based on Swarm Intelligence, as well as for FPPs under uncertainty.

The purpose of this paper is to investigate the solution of FPPs using Swarm Intelligence. The remainder of this paper is organized as follows. “Methodology” introduces the Swarm Intelligence methodology. Illustrative examples and a discussion of the results are presented in “Illustrative examples with discussion and results”. “Industry applications” introduces the industry applications. Finally, conclusions are presented in “Conclusions”.

Methodology

Swarm Intelligence (SI) is a research field inspired by observing the naturally intelligent behavior of swarms of biological agents within their environments. SI algorithms have provided effective solutions to many real-world optimization problems that are NP-hard in nature. This study investigates the effectiveness of two relatively new SI metaheuristic algorithms in providing solutions to FPPs. The algorithms investigated are Particle Swarm Optimization (PSO) and the Firefly Algorithm (FA). Brief descriptions of these algorithms are given in the subsections below.

1. Particle Swarm Optimization (PSO)

PSO (Yang 2011) is a population-based stochastic optimization technique developed by Eberhart and Kennedy in 1995, inspired by the social behavior of bird flocking and fish schooling.

The characteristics of PSO can be represented as follows:

- The current position of the particle i at iteration k;
- The current velocity of the particle i at iteration k;
- The personal best position of the particle i at iteration k;
- The neighborhood best position of the particle.

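The velocity update step is specified for each dimension $j$; hence, $v_{i,j}$ represents the $j$th element of the velocity vector of the $i$th particle. Thus, the velocity of particle $i$ is updated using the following equation:

$$v_i^{k+1} = w\,v_i^{k} + c_1 r_1 \left(p_i^{k} - x_i^{k}\right) + c_2 r_2 \left(g^{k} - x_i^{k}\right) \qquad (3)$$

where $x_i^{k}$ and $v_i^{k}$ are the current position and velocity of particle $i$ at iteration $k$, $p_i^{k}$ is its personal best position, $g^{k}$ is the neighborhood best position, $w$ is the weighting function, $c_1$ and $c_2$ are weighting coefficients, and $r_1$ and $r_2$ are random numbers between 0 and 1. The current position (searching point in the solution space) can be modified by the following equation:

$$x_i^{k+1} = x_i^{k} + v_i^{k+1} \qquad (4)$$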

Penalty functions

In the penalty function method, the constrained optimization problem is solved with an unconstrained optimization method by incorporating the constraints into the objective function, thus transforming it into an unconstrained problem.
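A minimal sketch of this idea is given below, assuming a quadratic exterior penalty and constraints written as g(x) <= 0. The paper does not state which penalty form was used, so the function name `penalized`, the penalty weight, and the toy ratio problem are illustrative assumptions rather than the authors' implementation.

```python
def penalized(objective, constraints, penalty=1e6):
    """Wrap a maximization objective so that constraint violations lower its value.

    constraints: list of functions g, where g(x) <= 0 means "feasible".
    The quadratic penalty form is an assumption made for this sketch.
    """
    def wrapped(x):
        # Sum of squared violations; zero whenever x is feasible.
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) - penalty * violation
    return wrapped


# Hypothetical toy problem (not one of the paper's benchmarks):
# maximize (2*x1 + x2) / (x1 + x2 + 1) subject to x1 + x2 <= 4, x1 >= 0, x2 >= 0
def ratio(x):
    return (2 * x[0] + x[1]) / (x[0] + x[1] + 1)

cons = [
    lambda x: x[0] + x[1] - 4,  # x1 + x2 <= 4
    lambda x: -x[0],            # x1 >= 0
    lambda x: -x[1],            # x2 >= 0
]

f_pen = penalized(ratio, cons)
print(f_pen([1.0, 1.0]))  # feasible point: same value as ratio([1.0, 1.0])
print(f_pen([5.0, 0.0]))  # infeasible point: heavily penalized
```

Any unconstrained maximizer, including the swarm algorithms described in this section, can then be applied to `f_pen` directly.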

The detailed steps of the PSO algorithm are given below:

Step 1: Initialize parameters and population.

Step 2: Initialization. Randomly set the position and velocity of all particles within pre-defined ranges and on all dimensions in the feasible space (i.e., satisfying all the constraints).

Step 3: Velocity updating. At each iteration, the velocities of all particles are updated according to Eq. (3). After updating, each velocity should be checked and kept within a pre-specified range to avoid aggressive random walking.

Step 4: Position updating. Assuming a unit time interval between successive iterations, the positions of all particles are updated according to Eq. (4). After updating, each position should be checked and limited to the allowed range.

Step 5: Memory updating. Update the personal best position $p_i$ and the neighborhood best position $g$ when the following condition is met:

$$p_i^{k+1} = x_i^{k+1} \ \text{ if } \ f\!\left(x_i^{k+1}\right) > f\!\left(p_i^{k}\right), \qquad g^{k+1} = x_i^{k+1} \ \text{ if } \ f\!\left(x_i^{k+1}\right) > f\!\left(g^{k}\right)$$

where $f$ is the objective function subject to maximization.

Step 6: Termination checking. Repeat Steps 3–5 until definite termination conditions are met, such as a pre-defined number of iterations or a failure to make progress for a fixed number of iterations.
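The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB code: it uses the standard global-best PSO update, a linearly decreasing inertia weight, a velocity clamp of 20 % of each variable range, and a quadratic penalty for the single constraint. The function name `pso_maximize`, the parameter values, and the toy ratio problem are all hypothetical.

```python
import random


def pso_maximize(f, bounds, n_particles=30, iters=200,
                 w_max=0.9, w_min=0.4, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO for maximization (illustrative sketch)."""
    rng = random.Random(seed)
    dim = len(bounds)
    v_max = [0.2 * (hi - lo) for lo, hi in bounds]  # assumed velocity clamp

    # Steps 1-2: random initial positions and velocities within the ranges.
    xs = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vs = [[rng.uniform(-vm, vm) for vm in v_max] for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pbest_val = [f(x) for x in xs]
    g = max(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for k in range(iters):
        # Assumed linear decrease of the inertia weight from w_max to w_min.
        w = w_max - (w_max - w_min) * k / max(1, iters - 1)
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Step 3: velocity update, then clamp to [-v_max, v_max].
                v = (w * vs[i][d]
                     + c1 * r1 * (pbest[i][d] - xs[i][d])
                     + c2 * r2 * (gbest[d] - xs[i][d]))
                vs[i][d] = max(-v_max[d], min(v_max[d], v))
                # Step 4: position update, then clamp to the allowed range.
                lo, hi = bounds[d]
                xs[i][d] = max(lo, min(hi, xs[i][d] + vs[i][d]))
            # Step 5: memory update (maximization).
            val = f(xs[i])
            if val > pbest_val[i]:
                pbest[i], pbest_val[i] = xs[i][:], val
                if val > gbest_val:
                    gbest, gbest_val = xs[i][:], val
    # Step 6: this sketch stops after a fixed number of iterations.
    return gbest, gbest_val


# Hypothetical toy FPP: maximize (2*x1 + x2)/(x1 + x2 + 1)
# subject to x1 + x2 <= 4 and 0 <= x1, x2 <= 4.
def ratio(x):
    return (2 * x[0] + x[1]) / (x[0] + x[1] + 1)


def penalized(x, mu=1e3):
    violation = max(0.0, x[0] + x[1] - 4.0)
    return ratio(x) - mu * violation ** 2


best_x, best_val = pso_maximize(penalized, [(0.0, 4.0), (0.0, 4.0)])
print(best_x, best_val)
```

For this toy problem the analytical maximum is 1.6 at (4, 0), which gives a simple check on the value the swarm returns.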

2. Firefly Algorithm (FA)

FA (Yang 2011) is based on the following idealized rules for the flashing characteristics of fireflies.

All fireflies are unisex so that one firefly is attracted to other fireflies regardless of their sex.

Attractiveness is proportional to their brightness, thus for any two flashing fireflies, the less bright one will move towards the brighter one. The attractiveness is proportional to the brightness and they both decrease as their distance increases. If no one is brighter than a particular firefly, it moves randomly.

The brightness or the light intensity of a firefly is affected or determined by the landscape of the objective function to be optimized.

The detailed steps of the FA are given below:

Step 1 Define the objective function $f(x)$ and generate an initial population of fireflies placed at random positions $x_i$ within the $n$-dimensional search space. Define the light absorption coefficient $\gamma$.

Step 2 Define the light intensity of each firefly, $I_i$, as the value of the cost function for $x_i$.

Step 3 For each firefly $x_i$, the light intensity $I_i$ is compared with that of every other firefly $x_j$.

Step 4 If $I_i$ is less than $I_j$, then move firefly $x_i$ towards $x_j$ in $n$ dimensions.

The attractiveness between fireflies varies with the distance $r_{ij}$ between them:

$$x_i^{t+1} = x_i^{t} + \beta_0 e^{-\gamma r_{ij}^{2}} \left(x_j^{t} - x_i^{t}\right) + \alpha\,\epsilon_i^{t}$$

where $\beta_0$ is the attractiveness at $r = 0$. The second term is due to the attraction, while the third term is randomization, with the vector of random variables $\epsilon_i$ being drawn from a Gaussian distribution. The distance between any two fireflies $i$ and $j$ at $x_i$ and $x_j$ can be regarded as the Cartesian distance $r_{ij} = \lVert x_i - x_j \rVert_2$, i.e., the $\ell_2$ norm.

Step 5 Calculate the new values of the cost function for each firefly $x_i$ and update the light intensity $I_i$.

Step 6 Rank the fireflies and determine the current best.

Step 7 Repeat Steps 2–6 until definite termination conditions are met, such as a pre-defined number of iterations or a failure to make progress for a fixed number of iterations.
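The FA steps above can be sketched in Python as follows. This is a simplified illustration, not the authors' MATLAB code: it assumes the standard firefly move (attraction that decays exponentially with squared distance, plus a Gaussian random step), keeps the randomness parameter constant, clips positions to the variable bounds, and leaves the brightest firefly in place instead of moving it randomly. The function name `firefly_maximize`, the parameter values, and the toy ratio problem are hypothetical.

```python
import math
import random


def firefly_maximize(f, bounds, n_fireflies=25, iters=100,
                     alpha=0.25, beta0=1.0, gamma=1.0, seed=0):
    """Basic firefly algorithm for maximization (illustrative sketch)."""
    rng = random.Random(seed)

    def clip(x):
        return [max(lo, min(hi, xi)) for xi, (lo, hi) in zip(x, bounds)]

    # Step 1: random initial population inside the search space.
    xs = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_fireflies)]
    # Step 2: light intensity is the objective value.
    intensity = [f(x) for x in xs]

    for _ in range(iters):
        for i in range(n_fireflies):
            for j in range(n_fireflies):
                # Steps 3-4: move firefly i towards every brighter firefly j.
                if intensity[i] < intensity[j]:
                    r2 = sum((a - b) ** 2 for a, b in zip(xs[i], xs[j]))
                    beta = beta0 * math.exp(-gamma * r2)
                    xs[i] = clip([a + beta * (b - a) + alpha * rng.gauss(0.0, 1.0)
                                  for a, b in zip(xs[i], xs[j])])
                    # Step 5: update the light intensity after the move.
                    intensity[i] = f(xs[i])

    # Step 6: rank the fireflies and return the current best.
    best = max(range(n_fireflies), key=lambda i: intensity[i])
    return xs[best], intensity[best]


# Hypothetical toy FPP: maximize (2*x1 + x2)/(x1 + x2 + 1)
# subject to x1 + x2 <= 4 and 0 <= x1, x2 <= 4 (quadratic penalty assumed).
def ratio(x):
    return (2 * x[0] + x[1]) / (x[0] + x[1] + 1)


def penalized(x, mu=1e3):
    violation = max(0.0, x[0] + x[1] - 4.0)
    return ratio(x) - mu * violation ** 2


best_x, best_val = firefly_maximize(penalized, [(0.0, 4.0), (0.0, 4.0)])
print(best_x, best_val)
```

Because the brightest firefly never moves in this sketch, the best intensity in the population cannot decrease from one iteration to the next.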

Illustrative examples with discussion and results

Ten diverse examples were collected from the literature to demonstrate the efficiency and robustness of the SI algorithms in solving FPPs. The numerical results obtained are compared with the corresponding results reported in the references; some examples were also solved using an exact method. Table 1 shows the comparison results. The algorithms were implemented in MATLAB R2011. The simulation parameter settings for FA are: population size, 50; $\alpha$ (randomness), 0.25; minimum value of $\alpha$, 0.20; $\gamma$ (absorption), 1.0; iterations, 500. For PSO, the population size is 50, the inertia weight was set to change from 0.9 ($w_{\max}$) to 0.4 ($w_{\min}$) over the iterations, the weighting coefficients were set to 0.12 and 1.2, and the number of iterations is 500.
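The inertia weight schedule described above can be sketched as follows. The text only states that the weight changes from 0.9 to 0.4 over the iterations, so the linear form used here is an assumption, and the function name `inertia_weight` is hypothetical.

```python
def inertia_weight(k, iters, w_max=0.9, w_min=0.4):
    """Inertia weight at iteration k (0-based), decreasing linearly (assumed)."""
    return w_max - (w_max - w_min) * k / (iters - 1)


# Weight at the first, middle, and last of 500 iterations.
for k in (0, 250, 499):
    print(k, round(inertia_weight(k, 500), 4))
```

A large weight early on favors exploration of the search space, while a small weight near the end favors refinement around the best positions found.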

The functions for the examples listed in Table 1 are as follows:

:

subject to ;

; .

.

subject to ; ; .

:

subject to ; .

:

subject to ; ; .

:

subject to ; ; .

:

subject to ; ; ; ; .

subject to ; ; ; ; .

:

subject to ; ; .

:

subject to ; ; ; ; ; ; .

:

subject to ; ; ; .

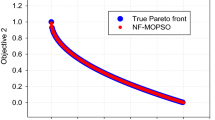

The numerical results obtained using the PSO and FA techniques are compared with assorted exact methods and metaheuristic techniques, as shown in Table 1. Four exact methods were selected for solving the 10 benchmark functions and carrying out the comparison: the C.C. transformation, the Dinkelbach algorithm, goal setting and approximation, and global optimization. Neural networks and harmony search are the other two metaheuristic techniques included in the comparison. Some observations can be drawn from the numerical solutions of all ten functions. PSO and FA obtained the optimal solution for all the test functions. The results obtained from PSO and FA are almost identical to those obtained using the exact methods. PSO and FA also gave better results than the other intelligent techniques, such as neural networks and harmony search. Finally, PSO and FA managed to give solutions to problems that could not be solved with the exact methods because of the difficult mathematical calculations required for complex nonlinear functions. Figures 1 and 2 are sample plots of minimum and maximum function optimization results. Figure 1a shows the optimized objective function value (0.333) for the minimization example, where the blue dots on the objective space represent the swarm particles searching for the optimized minimum value. The same swarm particles, in the same color, can be observed in the decision variable space of Fig. 1b, c at values of (0, 0) and (0, 0), approaching the optimized decision variable values using the FA and PSO algorithms, respectively. Figure 2a shows the optimized objective function value (4.0608) for the maximization example, where the red dots on the objective space represent the swarm particles searching for the optimized maximum value. The same swarm particles, in the same color, can be observed in the decision variable space of Fig. 2b, c at values of (1, 1.7438) and (1, 1.7377), approaching the optimized decision variable values using the FA and PSO algorithms, respectively.

Industry applications

A. Design of a gear train

A gear train problem is selected from Deb and Srinivasan (2006) and Shen et al. (2011), as shown in Fig. 3. A compound gear train is to be designed to achieve a specific gear ratio between the driver and driven shafts. It is a pure integer fractional optimization problem used to validate the integer handling mechanism. The gear ratio of a gear train is defined as the ratio of the angular velocity of the output shaft to that of the input shaft. It is desirable to produce a gear ratio as close as possible to the desired value. For each gear, the number of teeth must be between 12 and 60. The design variables are the numbers of teeth of the gears, which must be integers.

.

The optimization problem is expressed as:

The constraint ensures that the error between the obtained gear ratio and the desired gear ratio is no more than 50 % of the desired gear ratio.
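A possible way to evaluate a candidate design under these rules is sketched below. Several details are assumptions made for illustration: a four-gear compound layout in which the ratio is the product of two tooth counts over the product of the other two, a squared-error objective, rounding as the integer handling mechanism, and a penalty for violated constraints. The function names and the target ratio of 0.25 are hypothetical and are not the values used in the paper.

```python
def gear_ratio(teeth):
    """Gear ratio of an assumed four-gear compound train."""
    t_a, t_b, t_c, t_d = teeth
    return (t_a * t_b) / (t_c * t_d)


def gear_train_cost(teeth, target_ratio, rel_tol=0.5, penalty=1e6):
    """Cost to minimize: squared ratio error plus penalties (assumed form)."""
    teeth = [int(round(t)) for t in teeth]       # integer handling by rounding
    if any(t < 12 or t > 60 for t in teeth):     # teeth must lie in [12, 60]
        return penalty
    err = abs(gear_ratio(teeth) - target_ratio)
    cost = err ** 2
    if err > rel_tol * target_ratio:             # error above 50 % of the target
        cost += penalty * (err - rel_tol * target_ratio)
    return cost


# Hypothetical target ratio, used for illustration only.
print(gear_train_cost([12, 24, 24, 48], target_ratio=0.25))
print(gear_train_cost([60, 60, 12, 12], target_ratio=0.25))
```

A swarm algorithm can minimize `gear_train_cost` over real-valued positions, since the rounding step maps every particle to an integer design before evaluation.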

The detailed accuracy performance of the FA and PSO solutions is listed in Table 2. The comparison is made in terms of the best, error, mean, and standard deviation values, which were obtained from 20 independent runs. The table also shows the best optimization value, the convergence time, and the amount of memory resources used. The obtained optimization value indicates better performance for FA. On the other hand, the convergence time and the amount of memory used indicate better performance for PSO. The PSO algorithm uses a memory amount of 483–484, as shown in Fig. 4, while the FA algorithm uses a memory amount of 492–494, as shown in the same figure.

B. Proportional integral derivative (PID) controller

Proportional integral derivative (PID) controllers, shown in Fig. 5, are widely used to build automation equipment in industry. They are easy to design and implement, and they are applied successfully in most industrial control systems, such as process control, motor drives, magnetics, etc.

Correct implementation of the PID controller depends on the specification of three parameters: the proportional gain, the integral time, and the derivative time. These three parameters are often tuned manually by trial and error, whose major drawback is the time needed to accomplish the task. The fractional-order PID controller has a parameter vector of five elements: the three PID parameters and the two fractional orders of integration and differentiation. The PID controller is a special case of the fractional-order PID controller, obtained by simply setting both fractional orders to 1.

Assume that the system is modeled by an $n$th-order process with time delay:

Here, we assume and the system (6) is stable. The fractional-order PID controller has the following transfer function:

The optimization problem is summarized as follows:

.

.

where the is given by the designer. Note that the constraint is introduced to guarantee the stability of the closed-loop system. Also, the values of five design parameters (, , , , ) are directly determined by solving the above optimization problem.

Simulation example

Consider the following transfer function presented in Maiti et al. (2008):

The initial parameters are chosen randomly in the following range: . We want to design a controller such that the closed loop system has a maximum peak overshoot and trise = 0.3 s. This translates to (damping ratio), (undamped natural frequency). We then find out the positions of the dominant poles of the closed loop system,

The dominant poles for the closed loop controlled system should lie at and For , the characteristic equation is:

Tables 3 and 4 list the optimized PID controller parameters calculated using PSO, FA, and the algorithm of Maiti et al. (2008). Table 3 gives only the results for the integer-order PID controller, in which the two fractional orders are set to 1. It can be observed that the optimized parameters calculated using the FA algorithm generate the best control step response, as illustrated in Fig. 6. It can also be concluded from the same figure that the PSO algorithm with tuned parameters gives a better step response than that of Maiti et al. (2008). Table 4 lists the optimized parameters of the fractional-order PID controller, for which the same remarks hold as for the integer-order case.
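The translation from a peak overshoot specification to a damping ratio, and from a damping ratio and undamped natural frequency to a pair of dominant poles, follows the standard second-order relations and can be sketched as below. The function names and the numerical inputs are hypothetical and are not the values used in the simulation example; the relation between rise time and natural frequency is omitted because it depends on the approximation chosen.

```python
import math


def damping_ratio_from_overshoot(mp):
    """Damping ratio for a peak overshoot mp given as a fraction (0 < mp < 1)."""
    ln_mp = math.log(mp)
    return -ln_mp / math.sqrt(math.pi ** 2 + ln_mp ** 2)


def dominant_poles(zeta, omega_n):
    """Complex-conjugate dominant poles of an underdamped second-order system."""
    real = -zeta * omega_n
    imag = omega_n * math.sqrt(1.0 - zeta ** 2)
    return complex(real, imag), complex(real, -imag)


# Hypothetical specification, for illustration only.
zeta = damping_ratio_from_overshoot(0.10)
p1, p2 = dominant_poles(0.6, 5.0)
print(round(zeta, 4), p1, p2)
```

A controller design then requires the closed-loop characteristic equation to vanish at these pole locations, which is the condition the optimization works toward.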

Conclusions

The paper presents a new approach to solving FPPs based on two Swarm Intelligence (SI) algorithms, PSO and FA. Ten benchmark problems were solved using the two SI algorithms and many other previous approaches. The results obtained with the two SI algorithms were compared with those of the other exact and metaheuristic approaches previously used for handling FPPs. The two algorithms proved their effectiveness, reliability, and competence in solving different FPPs. The two SI algorithms successfully solved large-scale FPPs with an optimal solution at a finite point and an unbounded constraint set. The computational results showed that SI was superior to the other approaches in all the tests performed, yielding a higher and much faster growing mean fitness in less computational time. Better memory utilization was obtained using the PSO algorithm than using the FA algorithm. Two industrial application problems were solved, showing the superiority of the FA algorithm over the PSO algorithm in reaching a better optimized solution. A better optimized ratio, which generated a zero traction error, was obtained in the gear train application, and a better control response was obtained in the PID controller application. In the two applications, the best results were obtained using the two SI algorithms, with an advantage for the FA algorithm in the optimization results and an advantage for the PSO algorithm in computational time.

References

Bisoi S, Devi G, Rath A (2011) Neural networks for nonlinear fractional programming. Intern J Sci Eng Res 2(12):1–5

Calvete HI, Galé C, Mateo PM (2009) A genetic algorithm for solving linear fractional bilevel problems. Ann Oper Res 166(1):39–56

Charnes A, Cooper W (1973) An explicit general solution in linear fractional programming. Nav Res Logistics Q 20(3):449–467

Deb K, Srinivasan A (2006) Innovization: innovating design principles through optimization. In: Proceedings of the 8th Annual Conference on Genetic and Evolutionary Computation, ACM, New York, pp 1629–1636

Dür M, Khompatraporn C, Zabinsky ZB (2007) Solving fractional problems with dynamic multistart improving hit-and-run. Ann Oper Res 156(1):25–44

Farag TB (2012) A parametric analysis on multicriteria integer fractional decision-making problems. PhD thesis, Faculty of Science, Helwan University, Helwan, Egypt

Hasan MB, Acharjee S (2011) Solving LFP by converting it into a single LP. Intern J Oper Res 8(3):1–14

Hezam IM, Raouf OA (2013a) Particle swarm optimization approach for solving complex variable fractional programming problems. Intern J Eng 2(4)

Hezam IM, Raouf OA (2013b) Employing three swarm intelligent algorithms for solving integer fractional programming problems. Intern J Sci Eng Res (IJSER) 4:191–198

Hezam IM, Raouf OA, Hadhoud MM (2013c) Solving fractional programming problems using metaheuristic algorithms under uncertainty. Intern J Adv Comput 46:1261–1270

Hosseinalifam M (2009) A fractional programming approach for choice-based network revenue management. PhD thesis, Universite de Montreal, Montreal, Canada

Jaberipour M, Khorram E (2010) Solving the sum-of-ratios problems by a harmony search algorithm. J Comput Appl Math 234(3):733–742

Jiao H, Guo Y, Shen P (2006) Global optimization of generalized linear fractional programming with nonlinear constraints. Appl Math Comput 183(2):717–728

Jiao H, Wang Z, Chen Y (2013) Global optimization algorithm for sum of generalized polynomial ratios problem. Appl Math Model 37(1):187–197

Maiti D, Biswas S, Konar A (2008) Design of a fractional order PID controller using particle swarm optimization technique. arXiv preprint arXiv:0810.3776

Mehrjerdi YZ (2011) Solving fractional programming problem through fuzzy goal setting and approximation. Appl Soft Comput 11(2):1735–1742

Pal A, Singh S, Deep K (2013) Solution of fractional programming problems using PSO algorithm. In: 2013 IEEE 3rd International Advance Computing Conference (IACC), pp 1060–1064

Sameeullah A, Devi SD, Palaniappan B (2008) Genetic algorithm based method to solve linear fractional programming problem. Asian J Info Technol 7(2):83–86

Shen P, Chen Y, Ma Y (2009) Solving sum of quadratic ratios fractional programs via monotonic function. Appl Math Comput 212(1):234–244

Shen P, Ma Y, Chen Y (2011) Global optimization for the generalized polynomial sum of ratios problem. J Global Optim 50(3):439–455

Stancu-Minasian I (1997) Fractional programming: theory, methods and applications, vol 409. Kluwer academic publishers, Dordrecht

Wang C-F, Shen P-P (2008) A global optimization algorithm for linear fractional programming. Appl Math Comput 204(1):281–287

Wolf H (1985) A parametric method for solving the linear fractional programming problem. Oper Res 33(4):835–841

Xiao L (2010) Neural network method for solving linear fractional programming. In: 2010 International Conference On Computational Intelligence and Security (CIS), pp 37–41

Yang X-S (2011) Nature-inspired metaheuristic algorithms. Luniver Press, United Kingdom

Zhang Q-J, Lu XQ (2012) A recurrent neural network for nonlinear fractional programming. Math Prob Eng 2012

Acknowledgments

The authors are thankful to the learned referee and editor for their valuable comments and suggestions for the improvement of the paper.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Raouf, O.A., Hezam, I.M. Solving Fractional Programming Problems based on Swarm Intelligence. J Ind Eng Int 10, 56 (2014). https://doi.org/10.1007/s40092-014-0056-8
