Abstract

In this research article, an improved multi-criteria group decision-making (MCGDM) strategy is developed in a pentagonal neutrosophic environment, incorporating grey relational analysis (GRA) and the method based on the removal effects of criteria (MEREC) to address the relative advantages and disadvantages of these techniques in MCGDM. The aim of the study is to improve the MCGDM technique so that it captures the underlying uncertainties robustly and produces consistent results more rigorously. Here, the concept of the Hamming distance between two pentagonal neutrosophic numbers (PNNs) is introduced, and the weighted arithmetic and geometric averaging operators in the PNN arena are deployed to make our computational technique more progressive and robust. An agriculture-based numerical problem is presented to demonstrate the ranking of the alternatives by both techniques. After evaluating the problem with the two aggregation operators, “plantation crop” is found to be the best alternative under certain circumstances. Lastly, a sensitivity analysis reveals that, under both the arithmetic and geometric aggregation operators, the best-ranked alternative keeps its position in both ranking methods, which demonstrates the consistency and robustness of the proposed methodology.



Introduction

In the last century, fuzzy set theory, first introduced by Zadeh (Zadeh 1965) in 1965, became one of the most influential frameworks for capturing scepticism and unpredictability in science. The theory of vagueness plays a prominent role in solving problems in engineering, architectural modelling, network science, decision-making and many other real-world settings. Atanassov (Atanassov 1986) extended fuzzy set theory and introduced the intuitionistic fuzzy set, which carries both membership and non-membership functions. In the recent past, researchers have carried uncertainty theory into new domains and directions, such as triangular (Yen et al. 1999), trapezoidal (Abbasbandy and Hajjari 2009), pentagonal (Chakraborty et al. 2019a), hexagonal (Khan et al. 2020a) and heptagonal (Maity et al. 2020) numbers, each with its own specific features. Liu and Yuan (Liu and Yuan 2007) and Ye (Ye 2014) introduced the basic concepts of the triangular and trapezoidal intuitionistic fuzzy set, respectively. Moreover, researchers have developed numerous innovative methodologies to analyse these concepts and have proposed several improved representations of uncertain parameters.

Smarandache (Smarandache 1998) conceived the neutrosophic set, which contains three components: (i) truth, (ii) indeterminacy and (iii) falsity. Subsequently, Wang et al. (Wang et al. 2010) established the single-valued neutrosophic set, which is well suited to solving complicated problems. Building on this research, Chakraborty et al. (Chakraborty et al. 2018, 2021) introduced triangular and trapezoidal neutrosophic numbers and applied them to many real-life problems.

Bosc and Pivert (Bosc and Pivert 2013) introduced the concept of bipolarity in the neutrosophic arena for human decision-making processes. Lee (Lee 2000) clarified the viewpoint of bipolar fuzzy set theory. Later, Kang and Kang (Kang and Kang 2012) extended this idea to the structures of semigroups and groups, whereas Deli et al. (Deli et al. 2015) proposed the bipolar neutrosophic set and applied it to decision-making problems. Malik et al. (Malik et al. 2020) applied bipolar single-valued neutrosophic graph theory, and Quek et al. (Quek et al. 2022) designed a penta-partitioned neutrosophic graph-theoretic approach for COVID-19-related mathematical modelling. Subsequently, Chakraborty et al. (Chakraborty et al. 2019b) introduced the triangular bipolar number and classified it from distinct logical viewpoints. Around the same time, Wang et al. (Wang et al. 2018) developed operators in a bipolar neutrosophic domain and applied them in decision-making.

Multi-criteria decision-making (MCDM) has recently become one of the most widely recommended techniques in decision science. It is particularly valuable when a set of criteria is assessed by a group of decision makers; problems of this kind, known as multi-criteria group decision-making (MCGDM) problems, have had a strong impact in the neutrosophic arena.

MCDM and MCGDM now have ample scope for application in many spheres under various kinds of uncertainty. Further, numerous works on MCDM in neutrosophic environments (Garg et al. 2022; Deetae 2021; Chakraborty et al. 2022; Das et al. 2022; Haque et al. 2020) have been developed, and they play an essential role in science and engineering.

Trung and Thanh (Trung and Thanh 2022) applied a fuzzy linguistic MCDM technique to digital marketing technology. Several aggregation-operator-based MCGDM/MAGDM techniques have also emerged recently. Qin et al. (Qin et al. 2020) applied a weighted Archimedean power partitioned Bonferroni aggregation operator to MCDM problems. Garg (Garg 2021) presented Pythagorean fuzzy aggregation operators based on sine trigonometric operational laws and used them to solve decision-making problems. Qiyas et al. (Qiyas et al. 2022a) used fuzzy credibility Dombi aggregation operators to develop a revised TOPSIS method. Khan et al. (Khan et al. 2020b) generalised neutrosophic cubic aggregation operators and applied them in the decision-making process.

In 2015, Helen and Uma (Helen and Uma 2015) established the idea of the pentagonal fuzzy number, which Christi and Kasthuri (Christi and Kasthuri 2016) extended to the pentagonal intuitionistic number to solve the transportation problem. Recently, Chakraborty and co-authors (Chakraborty et al. 2019c, d, 2020; Chakraborty 2020) developed the concept of the pentagonal fuzzy number and its various representations, with applications in transportation, graph-theoretic problems, MCGDM and networking.

Different decision-making techniques have been adopted to enrich this field; notable examples include TOPSIS (Garg 2020), MULTIMOORA (Garg and Rani 2022), AHP (Tas et al. 2022), DEMATEL (Karasan et al. 2022) and EDAS (Liao et al. 2022). Recently, Adalı et al. (Adalı and Tuş 2021; Adalı et al. 2022) applied multi-attribute decision-making processes to tackle real-life hazards. Deng (Julong 1989; Deng 2005) set forth grey relational analysis (GRA) to treat vagueness. Wang et al. (Wang et al. 2022) applied grey-based decision-making theory to the selection of a solar PV power plant in Vietnam. Qiyas et al. (Qiyas et al. 2022b) used an extended GRA technique in an MCDM model. Qi (Qi 2021) proposed a GRA-CRITIC mechanism for intuitionistic fuzzy MADM problems, applied to potentiality evaluation. Pramanik and Mallick (Pramanik and Mallick 2020) extended the GRA-based MADM strategy to the single-valued trapezoidal neutrosophic environment.

In this article, we focus on the application of PNNs and their utility in an agriculture-based MCGDM problem. Additionally, we apply our previously established PNWAA and PNWGA operators (Chakraborty et al. 2020) separately to solve the MCGDM problem in the PNN environment. A refined GRA technique is developed alongside the MEREC strategy, and a comparative analysis demonstrates the pertinence of the ranking results. Lastly, a sensitivity analysis is performed to test the stability of these results. This novel idea may help to address a wide range of everyday problems under uncertainty.

Motivation

The main goal of this research article is to adopt the GRA scheme, which is rooted in grey system theory, to evaluate the best alternative under an imprecise dataset. During our survey of the literature, the following questions arose about how to better control and effectively execute the decision-making problem; they motivated the present work.

-

How can we incorporate pentagonal neutrosophic imprecise data into a realistic MCGDM model?

-

Which mathematical operator is appropriate to aggregate the underlying information?

-

Which technique will be useful to capture the grey knowledge associated with the problem?

-

Is another technique needed for a comparative analysis that would enrich our study?

-

Is our executed technique robust and stable?

Novelties

Researchers have shown considerable interest in developing theories in the neutrosophic domain and in promoting their applications in distinct branches of the neutrosophic arena. However, many aspects of PNN theory remain open, and various conjectures and problems are yet to be formulated and solved. In this paper, our main aim is to address some unclear topics in the PNN domain, listed as follows:

-

(1)

Application of the PNWAA and PNWGA operators to solve the MCGDM problem.

-

(2)

A new distance measure (Hamming distance) between PNNs.

-

(3)

Discussion of the GRA method.

-

(4)

Execution of the GRA method in the pentagonal neutrosophic domain to solve the proposed MCGDM problem.

-

(5)

Strengthening of the GRA strategy by the MEREC technique, with a comparative justification.

-

(6)

Sensitivity analysis of the ranking outcomes.

Definitions of different sets and pentagonal neutrosophic numbers

Fuzzy Set: (Zadeh 1965) Let \(T\) be a universe of discourse. A set \(\widetilde{T}=\left\{\left(\gamma ,\alpha_{\widetilde{T}}(\gamma)\right):\gamma \in T,\ \alpha_{\widetilde{T}}(\gamma)\in [0,1]\right\}\), whose elements are customarily written as \(\left(\gamma ,\alpha_{\widetilde{T}}(\gamma)\right)\) with \(\gamma \in T\) and membership degree \(0\le \alpha_{\widetilde{T}}(\gamma)\le 1\), is called a fuzzy set.

Neutrosophic Set: (Smarandache 1998) Let \(T\) be a universe of discourse with generic element \(\sigma\). A set \(\widetilde{T}_{Neu}\) is called a neutrosophic set if \(\widetilde{T}_{Neu}=\left\{\langle \sigma ;\left[\tau_{\widetilde{T}_{Neu}}(\sigma),\pi_{\widetilde{T}_{Neu}}(\sigma),\rho_{\widetilde{T}_{Neu}}(\sigma)\right]\rangle : \sigma \in T\right\}\), where \(\tau_{\widetilde{T}_{Neu}}:T\to [0,1]\) denotes the degree of confidence (truth), \(\pi_{\widetilde{T}_{Neu}}:T\to [0,1]\) the degree of uncertainty (indeterminacy) and \(\rho_{\widetilde{T}_{Neu}}:T\to [0,1]\) the degree of falsity in the decision-making process. The triple \(\left[\tau_{\widetilde{T}_{Neu}}(\sigma),\pi_{\widetilde{T}_{Neu}}(\sigma),\rho_{\widetilde{T}_{Neu}}(\sigma)\right]\) satisfies the inequality \(0\le \tau_{\widetilde{T}_{Neu}}(\sigma)+\pi_{\widetilde{T}_{Neu}}(\sigma)+\rho_{\widetilde{T}_{Neu}}(\sigma)\le 3\).

Single-Valued Neutrosophic Set: (Wang et al. 2010) The neutrosophic set \(\widetilde{T}_{Neu}\) defined above is called a single-valued neutrosophic set \(\widetilde{T}_{SNeu}\) if \(\sigma\) is a single-valued independent variable, i.e. \(\widetilde{T}_{SNeu}=\left\{\langle \sigma ;\left[\aleph_{\widetilde{T}_{Neu}}(\sigma),\beth_{\widetilde{T}_{Neu}}(\sigma),\omega_{\widetilde{T}_{Neu}}(\sigma)\right]\rangle : \sigma \in T\right\}\), where \(\aleph_{\widetilde{T}_{Neu}}(\sigma)\), \(\beth_{\widetilde{T}_{Neu}}(\sigma)\) and \(\omega_{\widetilde{T}_{Neu}}(\sigma)\) denote the truth (accuracy), indeterminacy and falsity membership functions, respectively. A set \(\widetilde{T}_{NC}\) is called neut-convex if it is a subset of \(\mathbb{R}\) that satisfies the following conditions:

$$\aleph_{\widetilde{T}_{NC}}\left(\varphi s_1+(1-\varphi)s_2\right)\ge \min\left\{\aleph_{\widetilde{T}_{NC}}(s_1),\aleph_{\widetilde{T}_{NC}}(s_2)\right\},$$
$$\beth_{\widetilde{T}_{NC}}\left(\varphi s_1+(1-\varphi)s_2\right)\le \max\left\{\beth_{\widetilde{T}_{NC}}(s_1),\beth_{\widetilde{T}_{NC}}(s_2)\right\},$$
$$\omega_{\widetilde{T}_{NC}}\left(\varphi s_1+(1-\varphi)s_2\right)\le \max\left\{\omega_{\widetilde{T}_{NC}}(s_1),\omega_{\widetilde{T}_{NC}}(s_2)\right\},$$

where \(s_1, s_2\in\mathbb{R}\) and \(\varphi\in[0,1]\).

Single-Valued Pentagonal Neutrosophic Number: (Chakraborty et al. 2020) A single-valued pentagonal neutrosophic number \(\widetilde{Pen_N}\) is defined as \(\widetilde{Pen_N}=\langle [(t_1,t_2,t_3,t_4,t_5);\theta],[(t_1,t_2,t_3,t_4,t_5);\vartheta],[(t_1,t_2,t_3,t_4,t_5);\gamma]\rangle\), where \(\theta,\vartheta,\gamma\in[0,1]\). The truth (accuracy) membership function \(\chi_{\widetilde{T}}:\mathbb{R}\to[0,\theta]\), the indeterminacy (ambiguity) membership function \({\yen}_{\widetilde{T}}:\mathbb{R}\to[\vartheta,1]\) and the falsity membership function \({\pounds}_{\widetilde{T}}:\mathbb{R}\to[\gamma,1]\) are defined by:

Proposed score and accuracy function

In the pentagonal neutrosophic domain, a score function (Chakraborty et al. 2020) is required to convert a neutrosophic number into a crisp number. The score function depends entirely on the degrees of truth, indeterminacy and falsity. Here, we define a new score function in the pentagonal neutrosophic environment. For any single-valued pentagonal neutrosophic number, we define the score function as follows:

Hamming distance between two pentagonal neutrosophic numbers

Let \(\widetilde{L}_{PtNeu1}=\left(l_1^{1},l_2^{1},l_3^{1},l_4^{1},l_5^{1};t_{Pt}^{1},i_{Pt}^{1},f_{Pt}^{1}\right)\) and \(\widetilde{L}_{PtNeu2}=\left(l_1^{2},l_2^{2},l_3^{2},l_4^{2},l_5^{2};t_{Pt}^{2},i_{Pt}^{2},f_{Pt}^{2}\right)\) be two pentagonal neutrosophic numbers. Then, the Hamming distance between them is defined as follows:
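The distance formula itself is not reproduced in this text. As a hedged illustration only, the sketch below assumes the pentagonal analogue of the normalised Hamming distance commonly used for trapezoidal neutrosophic numbers, in which every vertex is scaled by the factor \(2+t-i-f\):

$$d\left(\widetilde{L}_{PtNeu1},\widetilde{L}_{PtNeu2}\right)=\frac{1}{15}\sum_{k=1}^{5}\left|\left(2+t_{Pt}^{1}-i_{Pt}^{1}-f_{Pt}^{1}\right)l_k^{1}-\left(2+t_{Pt}^{2}-i_{Pt}^{2}-f_{Pt}^{2}\right)l_k^{2}\right|.$$

The tuple layout and the function name `pnn_hamming` are illustrative assumptions, not the article's notation.

```python
from typing import Tuple

# A PNN as ((l1, l2, l3, l4, l5), t, i, f): five vertices plus truth,
# indeterminacy and falsity degrees (layout assumed for illustration).
PNN = Tuple[Tuple[float, float, float, float, float], float, float, float]


def pnn_hamming(a: PNN, b: PNN) -> float:
    """Assumed normalised Hamming distance between two PNNs.

    Each vertex is weighted by (2 + t - i - f), so both the position of the
    pentagon and its truth/indeterminacy/falsity degrees affect the distance.
    """
    (la, ta, ia, fa), (lb, tb, ib, fb) = a, b
    scale_a = 2 + ta - ia - fa
    scale_b = 2 + tb - ib - fb
    return sum(abs(scale_a * x - scale_b * y) for x, y in zip(la, lb)) / 15


if __name__ == "__main__":
    p = ((0.1, 0.2, 0.3, 0.4, 0.5), 0.8, 0.1, 0.2)
    q = ((0.2, 0.3, 0.4, 0.5, 0.6), 0.6, 0.2, 0.3)
    print(round(pnn_hamming(p, q), 4))  # prints 0.03
```

The distance is symmetric and is zero for identical PNNs; the division by 15 (three degrees times five vertices) keeps it on a scale comparable to the vertex values.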

Weighted aggregation operators of pentagonal neutrosophic numbers

Aggregation operators are useful tools for fusing information when forming a decision-making policy. This section presents two weighted aggregation operators for aggregating PNNs.

Pentagonal neutrosophic weighted arithmetic averaging operator: (Chakraborty et al. 2020) Let \(\widetilde{l}_j=\langle (l_{j1},l_{j2},l_{j3},l_{j4},l_{j5});t_{Ptj},i_{Ptj},f_{Ptj}\rangle\) \((j=1,2,3,\dots,n)\) be a collection of PNNs. Then, the PNWAA operator is defined as follows:

where \(\Omega_j\) is the weight of \(\widetilde{l}_j\) \((j=1,2,3,\dots,n)\) such that \(\Omega_j>0\) and \(\sum_{j=1}^{n}\Omega_j=1\).

Pentagonal neutrosophic weighted geometric averaging operator: (Chakraborty et al. 2020) Let \(\widetilde{l}_j=\langle (l_{j1},l_{j2},l_{j3},l_{j4},l_{j5});t_{Ptj},i_{Ptj},f_{Ptj}\rangle\) \((j=1,2,3,\dots,n)\) be a collection of PNNs. Then, the PNWGA operator is defined as follows:

where \(\Omega_j\) is the weight of \(\widetilde{l}_j\) \((j=1,2,3,\dots,n)\) such that \(\Omega_j>0\) and \(\sum_{j=1}^{n}\Omega_j=1\).
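The operator formulae themselves are not reproduced in this text. Purely as an illustration, the sketch below assumes that PNWAA and PNWGA follow the pattern of the familiar weighted averaging operators for trapezoidal neutrosophic numbers, extended to five vertices. This form is an assumption and should be checked against Chakraborty et al. (2020) before use:

$$\mathrm{PNWAA}=\Big\langle\Big(\sum_{j}\Omega_j l_{j1},\dots,\sum_{j}\Omega_j l_{j5}\Big);\,1-\prod_{j}(1-t_{Ptj})^{\Omega_j},\,\prod_{j}i_{Ptj}^{\Omega_j},\,\prod_{j}f_{Ptj}^{\Omega_j}\Big\rangle,$$
$$\mathrm{PNWGA}=\Big\langle\Big(\prod_{j}l_{j1}^{\Omega_j},\dots,\prod_{j}l_{j5}^{\Omega_j}\Big);\,\prod_{j}t_{Ptj}^{\Omega_j},\,1-\prod_{j}(1-i_{Ptj})^{\Omega_j},\,1-\prod_{j}(1-f_{Ptj})^{\Omega_j}\Big\rangle.$$

The function names `pnwaa` and `pnwga` and the tuple layout are hypothetical.

```python
import math
from typing import Sequence, Tuple

# A PNN as ((l1, l2, l3, l4, l5), t, i, f); layout assumed for illustration.
PNN = Tuple[Tuple[float, float, float, float, float], float, float, float]


def _check_weights(pnns: Sequence[PNN], weights: Sequence[float]) -> None:
    # Omega_j > 0 and sum(Omega_j) = 1, as required in the definitions above.
    if len(pnns) != len(weights):
        raise ValueError("one weight per PNN is required")
    if any(w <= 0 for w in weights) or not math.isclose(sum(weights), 1.0):
        raise ValueError("weights must be positive and sum to 1")


def pnwaa(pnns: Sequence[PNN], weights: Sequence[float]) -> PNN:
    """Assumed pentagonal neutrosophic weighted arithmetic averaging."""
    _check_weights(pnns, weights)
    pairs = list(zip(pnns, weights))
    vertices = tuple(sum(w * p[0][k] for p, w in pairs) for k in range(5))
    t = 1 - math.prod((1 - p[1]) ** w for p, w in pairs)
    i = math.prod(p[2] ** w for p, w in pairs)
    f = math.prod(p[3] ** w for p, w in pairs)
    return vertices, t, i, f


def pnwga(pnns: Sequence[PNN], weights: Sequence[float]) -> PNN:
    """Assumed pentagonal neutrosophic weighted geometric averaging."""
    _check_weights(pnns, weights)
    # Fractional powers of negative numbers are complex in Python, so
    # the geometric form is only applied to non-negative vertices.
    if any(v < 0 for p in pnns for v in p[0]):
        raise ValueError("PNWGA needs non-negative vertices")
    pairs = list(zip(pnns, weights))
    vertices = tuple(math.prod(p[0][k] ** w for p, w in pairs) for k in range(5))
    t = math.prod(p[1] ** w for p, w in pairs)
    i = 1 - math.prod((1 - p[2]) ** w for p, w in pairs)
    f = 1 - math.prod((1 - p[3]) ** w for p, w in pairs)
    return vertices, t, i, f
```

For example, averaging \(\langle(1,1,1,1,1);0.5,0.5,0.5\rangle\) and \(\langle(3,3,3,3,3);0.5,0.5,0.5\rangle\) with equal weights gives vertices of 2 under the arithmetic form and \(\sqrt{3}\) under the geometric form, while the three degrees stay at 0.5 (up to rounding).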

Multi-criteria group decision-making problem in pentagonal neutrosophic environment

MCGDM has become a rational and well-organised framework for handling uncertainty and vagueness. Its chief objective is to identify the best alternative among a finite set of alternatives on the basis of their values on a finite set of attributes. MCGDM strategies therefore strengthen the decision-making procedure: they help to generate decision recommendations, improve the treatment of decision attributes, enhance communication among decision makers and support their aspirations. Carrying out this procedure in the pentagonal neutrosophic domain requires some care. Using established mathematical operators together with the score and accuracy functions, we develop an algorithm to solve this MCGDM problem.

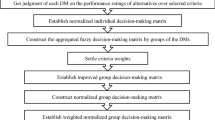

In this section, we study an MCGDM-based agricultural problem in which we need to choose the best alternative crop for maximum financial gain, according to the viewpoints of three different agriculturalists. The proposed algorithm is outlined as follows:

Materials and Methods

Suppose that \(A=\{A_1,A_2,A_3,\dots,A_m\}\) is the set of m alternatives and \(B=\{B_1,B_2,B_3,\dots,B_n\}\) is the set of n attributes. Let \(\partial=\{\partial_1,\partial_2,\partial_3,\dots,\partial_n\}\) be the weight vector of the attributes, where each \(\partial_j\ge 0\) and \(\sum_{j=1}^{n}\partial_j=1\). Further, let \(D=\{D_1,D_2,D_3,\dots,D_r\}\) be the set of decision makers who evaluate the alternatives, with weight vector \(\delta=\{\delta_1,\delta_2,\delta_3,\dots,\delta_r\}\), where each \(\delta_c\ge 0\) and \(\sum_{c=1}^{r}\delta_c=1\). This weight vector is chosen according to the decision makers' capability of judgement, proficiency, experience, knowledge and creative thinking. The strategy for solving the problem is depicted in Fig. 1.
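The set-up above can be held in a small data structure that checks the stated constraints: non-negative weight vectors \(\partial\) and \(\delta\) that each sum to 1, and one m × n matrix of PNN ratings per decision maker. This is a minimal sketch; the class name `MCGDMProblem`, its field names and the tuple layout of a PNN are hypothetical choices, not notation from the article.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

# A PNN as ((l1, l2, l3, l4, l5), t, i, f); layout assumed for illustration.
PNN = Tuple[Tuple[float, float, float, float, float], float, float, float]


@dataclass
class MCGDMProblem:
    alternatives: List[str]          # A_1, ..., A_m
    attributes: List[str]            # B_1, ..., B_n
    attribute_weights: List[float]   # the vector ∂ (length n)
    dm_weights: List[float]          # the vector δ (length r)
    matrices: List[List[List[PNN]]]  # matrices[c][i][j]: rating of A_i on B_j by D_c

    def validate(self) -> None:
        """Raise ValueError if the weights or matrix shapes violate the set-up."""
        for label, w in (("attribute", self.attribute_weights),
                         ("decision-maker", self.dm_weights)):
            if any(x < 0 for x in w) or not math.isclose(sum(w), 1.0):
                raise ValueError(f"{label} weights must be non-negative and sum to 1")
        if len(self.attribute_weights) != len(self.attributes):
            raise ValueError("need one weight per attribute")
        if len(self.dm_weights) != len(self.matrices):
            raise ValueError("need one decision matrix per decision maker")
        m, n = len(self.alternatives), len(self.attributes)
        for mat in self.matrices:
            if len(mat) != m or any(len(row) != n for row in mat):
                raise ValueError("each decision matrix must be m x n")
```

For instance, the weights used in the illustrative example, \(\delta=\{0.33,0.36,0.31\}\) and \(\partial=\{0.3,0.4,0.3\}\), pass these checks; `math.isclose` is used because such decimal weights rarely sum to exactly 1.0 in floating point.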

GRA mechanism in pentagonal neutrosophic environment

Grey relational analysis (GRA), designed by the Chinese professor Julong Deng (Julong 1989), is extensively applied in grey system theory. Grey system theory describes situations with no information as black and those with precise information as white. In practice, neither of these idealised extremes occurs in realistic problems; situations between them, which contain incomplete information, are described as grey, indistinct or fuzzy.

Algorithm of GRA technique

-

Step 1 Composition of Decision Matrices

Here, we construct one decision matrix for each decision maker, recording that decision maker's opinion of each alternative with respect to each attribute. Note that every entry \(t_{ij}\) of each matrix is a pentagonal neutrosophic number. The matrix is given as follows:

-

Step 2 Standardisation of decision matrices

Let \(V^{c}=(t_{ij}^{c})_{m\times n}\) be the decision matrix of decision maker \(D_c\), where each entry \(t_{ij}^{c}=([t_{ij}^{1c},t_{ij}^{2c},t_{ij}^{3c},t_{ij}^{4c},t_{ij}^{5c}];t_{Ptijc},i_{Ptijc},f_{Ptijc})\) is a pentagonal neutrosophic number giving the evaluation of alternative \(A_i\) with respect to attribute \(B_j\). We apply the following normalisation to obtain the standardised decision matrix \(V^{*c}=(\overline{t}_{ij}^{c})_{m\times n}\), whose entries \(\overline{t}_{ij}^{c}=([\overline{t}_{ij}^{1c},\overline{t}_{ij}^{2c},\overline{t}_{ij}^{3c},\overline{t}_{ij}^{4c},\overline{t}_{ij}^{5c}];\overline{t}_{Ptijc},\overline{i}_{Ptijc},\overline{f}_{Ptijc})\) are formulated as follows:

Thus, we attain the following standardised matrix:

-

Step 3 Aggregation and composition of single decision matrix

To produce a single decision matrix \(V\), we aggregate the standardised decision matrices of the r decision makers with the pentagonal neutrosophic weighted arithmetic averaging operator (PNWAA), \(t'_{ij}=\sum_{c=1}^{r}\delta_c\,\overline{t}_{ij}^{c}\), and with the pentagonal neutrosophic weighted geometric averaging operator (PNWGA), \(t'_{ij}=\prod_{c=1}^{r}\left(\overline{t}_{ij}^{c}\right)^{\delta_c}\). This yields two single decision matrices, one for each operator, defined as below:

-

Step 4 Formulating positive and negative ideal solution

In this step, we formulate the positive ideal solution and the negative ideal solution from each aggregated decision matrix. This gives two sets of positive and negative ideal solutions, one for the arithmetic and one for the geometric averaging operator. The formulae are defined as follows:

where

Here, \(l=1,2,\dots,m\).

-

Step 5 Composing weighted modified grey relational coefficients

To determine the positive and negative weighted modified grey relational coefficients for both aggregated matrices (obtained with the arithmetic and geometric operators), we use the following formulae:

-

Step 6 Formulating Positive and Negative Index of Grey Relational Coefficients

In this step, we use the attribute weight vector to construct the following positive and negative indices of the grey relational coefficients:

where \(p=1,2,\dots,m\).

-

Step 7 Determining relative affinity coefficients

To evaluate the relative grey affinity coefficient of each alternative \(A_i\), \(i=1,2,\dots,m\), we use the following formula:

-

Step 8 Ranking

The alternatives are ranked according to their relative affinity coefficient values.
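Steps 4 to 8 of the GRA algorithm can be sketched as a small pipeline. Because Eqs. (10) to (16) are not reproduced in this text, the sketch makes several labelled assumptions: it uses a normalised Hamming distance in which vertices are scaled by \(2+t-i-f\); it takes the positive and negative ideal solutions componentwise over the alternatives, treating every attribute as a benefit attribute; it uses Deng's classical grey relational coefficient with distinguishing coefficient \(\rho=0.5\); and it defines the relative affinity coefficient as \(\xi_i^{+}/(\xi_i^{+}+\xi_i^{-})\), a form common in neutrosophic GRA. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

# PNN layout (assumed): ((l1, l2, l3, l4, l5), t, i, f)
PNN = Tuple[Tuple[float, float, float, float, float], float, float, float]
Matrix = List[List[PNN]]  # matrix[i][j]: alternative i, attribute j


def pnn_hamming(a: PNN, b: PNN) -> float:
    """Assumed normalised Hamming distance; vertices scaled by (2 + t - i - f)."""
    (la, ta, ia, fa), (lb, tb, ib, fb) = a, b
    sa, sb = 2 + ta - ia - fa, 2 + tb - ib - fb
    return sum(abs(sa * x - sb * y) for x, y in zip(la, lb)) / 15


def ideal_solutions(v: Matrix) -> Tuple[List[PNN], List[PNN]]:
    """Step 4 (assumed): componentwise best/worst values per benefit attribute."""
    pis, nis = [], []
    for j in range(len(v[0])):
        col = [row[j] for row in v]
        hi = tuple(max(p[0][k] for p in col) for k in range(5))
        lo = tuple(min(p[0][k] for p in col) for k in range(5))
        pis.append((hi, max(p[1] for p in col), min(p[2] for p in col), min(p[3] for p in col)))
        nis.append((lo, min(p[1] for p in col), max(p[2] for p in col), max(p[3] for p in col)))
    return pis, nis


def grey_coefficients(dist: List[List[float]], rho: float = 0.5) -> List[List[float]]:
    """Step 5: Deng's grey relational coefficient; rho is the distinguishing coefficient."""
    d_min = min(min(row) for row in dist)
    d_max = max(max(row) for row in dist)
    if d_max == 0:  # every entry already equals the ideal solution
        return [[1.0] * len(row) for row in dist]
    return [[(d_min + rho * d_max) / (d + rho * d_max) for d in row] for row in dist]


def gra_rank(v: Matrix, weights: Sequence[float], rho: float = 0.5) -> Tuple[List[float], List[int]]:
    """Steps 4-8: relative affinity coefficients and ranking (best first)."""
    pis, nis = ideal_solutions(v)
    d_pos = [[pnn_hamming(x, pis[j]) for j, x in enumerate(row)] for row in v]
    d_neg = [[pnn_hamming(x, nis[j]) for j, x in enumerate(row)] for row in v]
    xi_pos = grey_coefficients(d_pos, rho)
    xi_neg = grey_coefficients(d_neg, rho)
    # Step 6: attribute-weighted positive and negative indices.
    idx_pos = [sum(w * c for w, c in zip(weights, row)) for row in xi_pos]
    idx_neg = [sum(w * c for w, c in zip(weights, row)) for row in xi_neg]
    # Step 7: relative affinity; close to the PIS and far from the NIS is better.
    affinity = [p / (p + n) for p, n in zip(idx_pos, idx_neg)]
    # Step 8: sort alternatives by decreasing affinity.
    ranking = sorted(range(len(v)), key=lambda i: affinity[i], reverse=True)
    return affinity, ranking
```

For example, with two alternatives where the first dominates the second on every component of every attribute, `gra_rank` returns affinities of about 0.75 and 0.25 and the ranking `[0, 1]`, because the dominant alternative coincides with the positive ideal solution.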

Illustrative example

In this research article, we consider a tropical agricultural problem in continental and subcontinental regions, in which three different kinds of crops are cultivated for maximum financial gain. Our objective is to find the best alternative, with proper justification, using pentagonal neutrosophic theory. We consider three attributes: climate factor, landscape and soil factor, and farming technique. We also consider three categories of decision makers: (i) a young agriculturalist with meagre experience but knowledge of modern farming techniques, (ii) an adult agriculturalist with moderate experience and fair knowledge of farming techniques and (iii) an old agriculturalist with sound experience and knowledge of some old-fashioned farming techniques. In accordance with their opinions, we construct three decision matrices in the pentagonal neutrosophic environment, as described below. The alternatives are \(A_1=\) Food Crops, \(A_2=\) Plantation Crops and \(A_3=\) Horticulture Crops. The three attributes are \(B_1=\) Climate Factor, \(B_2=\) Landscape and Soil Factor and \(B_3=\) Farming Technique. The decision makers are \(D_1=\) Young agriculturalist, \(D_2=\) Adult agriculturalist and \(D_3=\) Old agriculturalist, with weight vector \(\delta=\{0.33,0.36,0.31\}\), and the attribute weight vector is \(\partial=\{0.3,0.4,0.3\}\). Using the two aggregation operators, two aggregated matrices are constructed from the decision makers' opinions.

-

Step 1 Composition of decision matrices

In this step, three decision matrices are constructed according to the opinions of three different types of decision makers.

Weights of the decision makers: Young Agriculturalist 0.33, Adult Agriculturalist 0.36 and Old Agriculturalist 0.31. Weights of the attributes: 0.3, 0.4 and 0.3.

-

Step 2 Standardisation of decision matrices

In this step, we standardise the above decision matrices using Eq. (7), as shown below:

-

Step 3 Aggregation and composition of single decision matrix

In this step, we aggregate the standardised decision matrices with the arithmetic and geometric operators and build two single decision matrices, one for each case, as follows:

-

Step 4 Formulating Positive and Negative Ideal Solution

To compose the positive and negative ideal solutions, we employ Eqs. (10) and (11) and obtain two sets of positive and negative ideal solutions, one for each single decision matrix.

Positive ideal solution

Negative ideal solution

-

Step 5 Composing weighted modified grey relational coefficients

To calculate the modified grey relational coefficients, we use Eqs. (12) and (13) and obtain two sets of modified grey relational coefficient vectors, one for each single decision matrix, as follows:

-

Step 6 Formulating Positive and Negative Index of Grey Relational Coefficients

In this step, we estimate the positive and negative indices of the grey relational coefficients using Eqs. (14) and (15); the estimated values are given below:

-

Step 7 Determining Relative Affinity Coefficients

To evaluate the relative affinity coefficients, we employ Eq. (16) and calculate two sets of affinity coefficient values, given as follows:

-

Step 8 Ranking

According to the estimated affinity coefficients, we rank the alternatives for both cases.

\(A_2 = A_1 > A_3\) (when the geometric operator is used)

Sensitivity analysis

A sensitivity analysis is performed to examine how the weight of each criterion affects the relative matrix and the resulting ranking. The PNWAA-based sensitivity analysis is given in Table 1 and the PNWGA-based sensitivity analysis in Table 2, to classify the different rankings. The corresponding graphs are shown in Figs. 2, 3 and 4: Fig. 2 shows several trials with varying weights used to check the best alternative, Fig. 3 depicts the ranking of the alternatives obtained with the PNWAA operator and Fig. 4 depicts the ranking obtained with the PNWGA operator.
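How the weights were varied in Tables 1 and 2 is not specified in detail here. The sketch below shows one hypothetical way to organise such a weight-sensitivity study: shift one criterion weight at a time, renormalise so the weights still sum to 1, re-rank, and check whether the top alternative survives every trial. The ranking function is a parameter; `toy_rank` (a weighted sum over made-up scores) is a purely illustrative stand-in and is neither the GRA procedure nor the article's data.

```python
from typing import Callable, Dict, List, Sequence, Tuple

RankFn = Callable[[Sequence[float]], List[int]]


def perturb(weights: Sequence[float], j: int, delta: float) -> List[float]:
    """Shift criterion j's weight by delta (clipped at 0), then renormalise to sum 1."""
    w = list(weights)
    w[j] = max(w[j] + delta, 0.0)
    total = sum(w)
    return [x / total for x in w]


def sensitivity(rank_fn: RankFn, base: Sequence[float],
                deltas: Sequence[float]) -> Dict[Tuple[int, float], List[int]]:
    """Ranking obtained for every (criterion, shift) trial."""
    return {(j, d): rank_fn(perturb(base, j, d))
            for j in range(len(base)) for d in deltas}


def top_alternative_stable(results: Dict[Tuple[int, float], List[int]]) -> bool:
    """True if the best-ranked alternative is the same in every trial."""
    return len({ranking[0] for ranking in results.values()}) == 1


# Hypothetical stand-in ranker over made-up crisp scores (rows = alternatives).
# In the article, rank_fn would wrap the full GRA (or MEREC-weighted) procedure.
SCORES = [[0.5, 0.6, 0.4], [0.7, 0.8, 0.6], [0.3, 0.2, 0.9]]


def toy_rank(w: Sequence[float]) -> List[int]:
    totals = [sum(wj * s for wj, s in zip(w, row)) for row in SCORES]
    return sorted(range(len(SCORES)), key=lambda i: totals[i], reverse=True)


if __name__ == "__main__":
    trials = sensitivity(toy_rank, [0.3, 0.4, 0.3], [-0.2, -0.1, 0.1, 0.2])
    print(top_alternative_stable(trials))  # prints True
```

Renormalising after each shift keeps every trial a valid weight vector, so the trials differ only in the relative emphasis placed on each criterion.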

Method based on the removal effects of criteria (MEREC)

The method based on the removal effects of criteria (MEREC) is incorporated here to determine the optimal criteria weights in the multi-criteria decision-making process. Comparing these optimal weights with our assumed weights lets us examine whether the two procedures produce similar rankings. MEREC uses the effect that removing each criterion has on the alternatives' performance values. Keshavarz-Ghorabaee et al. (Keshavarz-Ghorabaee et al. 2021) introduced MEREC for MCDM problems and compared it analytically with several objective weighting methods. Trung and Thinh (Trung and Thinh 2021) also studied a decision-making technique based on MEREC. More recently, Hezam et al. (Hezam et al. 2022) applied MEREC in a hybrid intuitionistic fuzzy domain. In this analysis, a simple logarithmic measure with equal criteria weights is used to compute the alternatives' performances. The procedure is described below.

Algorithm of MEREC technique

-

Step 1 Composition of score-valued decision matrices

In this step, we use the score function in Eq. (2) to compute the score values \(n_{ij}\) of the pentagonal neutrosophic entries of the two aggregated decision matrices in Eq. (9). This gives two crisp score-valued decision matrices \(S_i\), one for the arithmetic and one for the geometric operator.

-

Step 2 Calculation of the overall performances of the alternatives

In this step, we calculate the overall performance value \(N_i\) of each alternative. A nonlinear logarithmic function with equal criteria weights is applied to the aggregated, normalised score-valued decision matrix to obtain \(N_i\). For m alternatives and n criteria, the following equation is used:

-

Step 3 Calculation of the overall performances of the alternatives by removal of criteria

In this step, we remove one criterion at a time and recompute the performance values of the alternatives to observe the effect of that criterion. Let \(N'_{ij}\) denote the removal performance value of the ith alternative when the jth criterion is eliminated.

-

Step 4 Computation of the deviational values

In this step, for each criterion we sum the absolute deviations of the removal performance values from the corresponding overall performance values. \(D_j\) is calculated as follows:

-

Step 5 Computation of optimal weight

In this step, we calculate the final optimal weight of the criteria. Let \(\sigma_j\) be the final optimal weight of criterion j. It is obtained from the deviational values of Step 4 as follows:

The remaining steps leading to the ranking are the same as in the GRA technique, so we omit them here.
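The MEREC weighting steps can be sketched as follows. Since Eqs. (17) to (20) are not reproduced in this text, the sketch assumes the standard formulation of Keshavarz-Ghorabaee et al. (2021): beneficial criteria are normalised as \(n_{ij}=\min_k x_{kj}/x_{ij}\) (non-beneficial ones as \(x_{ij}/\max_k x_{kj}\)); the overall performance is \(N_i=\ln\big(1+\tfrac{1}{n}\sum_j|\ln n_{ij}|\big)\); the removal performance \(N'_{ij}\) drops the jth term from that sum; \(D_j=\sum_i|N'_{ij}-N_i|\); and \(\sigma_j=D_j/\sum_k D_k\). The function name `merec_weights` is hypothetical, and the article's own equations may differ in detail, so this sketch should not be expected to reproduce the deviational values reported below. Note that a score matrix such as \(S_1\) lists criteria as rows, so it must be transposed before being passed in.

```python
import math
from typing import List, Sequence


def merec_weights(x: Sequence[Sequence[float]], beneficial: Sequence[bool]) -> List[float]:
    """MEREC criteria weights (standard formulation, assumed here).

    x[i][j] is the positive crisp performance of alternative i on criterion j.
    """
    m, n = len(x), len(x[0])
    # Step 1: normalise so that a smaller value means better performance.
    norm = [[0.0] * n for _ in range(m)]
    for j in range(n):
        col = [x[i][j] for i in range(m)]
        for i in range(m):
            norm[i][j] = min(col) / x[i][j] if beneficial[j] else x[i][j] / max(col)

    def performance(i: int, skip: int = -1) -> float:
        # ln(1 + (1/n) * sum_k |ln norm_ik|), optionally leaving criterion `skip` out.
        total = sum(abs(math.log(norm[i][k])) for k in range(n) if k != skip)
        return math.log(1 + total / n)

    overall = [performance(i) for i in range(m)]                          # Step 2
    removal = [[performance(i, j) for j in range(n)] for i in range(m)]   # Step 3
    deviation = [sum(abs(removal[i][j] - overall[i]) for i in range(m))   # Step 4
                 for j in range(n)]
    total_dev = sum(deviation)
    if total_dev == 0:
        raise ValueError("no criterion discriminates between the alternatives")
    return [d / total_dev for d in deviation]                            # Step 5
```

A criterion on which all alternatives score the same receives zero weight, since removing it leaves every performance value unchanged; this is the intuition behind weighting criteria by their removal effect.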

Computational process by MEREC technique

-

Step 1 Composition of Score-valued Decision Matrices: In this step, we compute two score-valued decision matrices.

$$S_1=\left(\begin{array}{cccc} & A_1 & A_2 & A_3\\ B_1 & 0.18 & 0.19 & 0.21\\ B_2 & 0.17 & 0.18 & 0.14\\ B_3 & 0.23 & 0.20 & 0.20\end{array}\right),\qquad S_2=\left(\begin{array}{cccc} & A_1 & A_2 & A_3\\ B_1 & 0.17 & 0.18 & 0.21\\ B_2 & 0.18 & 0.18 & 0.14\\ B_3 & 0.20 & 0.19 & 0.18\end{array}\right)$$

-

Step 2 Calculation of the overall performances of the alternatives

In this step, we compute the set of overall performance values using Eq. (17):

-

Step 3 Calculation of the overall performances of the alternatives by removal of criteria

In this step, we compute the overall performance values of the alternatives with each criterion removed, using Eq. (18):

-

Step 4 Computation of the deviational values

In this step, we compute the deviational values using Eq. (19):

\({D}_{1\mathrm{arith}}= 0.68, {D}_{2\mathrm{arith}}= 0.68, {D}_{3\mathrm{arith}}=0.71\)

-

Step 5 Computation of optimal weight

In this step, we compute the optimal weight vector using Eq. (20):

-

Step 6 Formulating positive and negative indices

In this step, we estimate the positive and negative indices using Eqs. (14) and (15); the estimated values are given below:

-

Step 7 Determining relative affinity coefficients

To evaluate the relative affinity coefficients, we employ Eq. (16) and calculate two sets of affinity coefficient values, given as follows:

-

Step 8 Ranking

According to the estimated affinity coefficients, we rank the alternatives for both cases:

Results and discussion

In this section, we compare the GRA strategy and the MEREC approach in the pentagonal neutrosophic domain. The MCGDM technique is carried out with two operators (PNWAA and PNWGA) under both procedures. For both techniques, \(A_2\) is the best alternative under both the arithmetic and geometric aggregation operators. More specifically, with the arithmetic operator the rankings from the two processes are identical; with the geometric operator, the best-ranked alternative keeps its position in both methods, but the ranking values of the alternatives fluctuate slightly. Comparing the rankings, we conclude that the MEREC technique strongly supports the GRA ranking result (Figs. 5 and 6).

Conclusion and future research scope

PNN theory provides an effective framework for handling vagueness. In this study, we have introduced a new MCGDM technique in the PNN environment that combines the GRA scheme, rooted in grey system theory, with the MEREC strategy to identify the best alternative from an imprecise dataset. We have applied the GRA and MEREC strategies to find the best crop for an agriculture-based scenario using the opinions of several experts. Arithmetic and geometric aggregation operators are employed to aggregate the imprecise numerical data in the PNN setting, and a sensitivity analysis is performed to show the efficiency of the proposed techniques. The major findings of this study are as follows:

(i) Two aggregation operators, namely PNWAA and PNWGA, are deployed to execute the MCGDM technique, and the “plantation crop” (i.e. alternative A2) is found to be the best alternative under both operators. This result holds under both the GRA and the MEREC methods.

(ii) The sensitivity analysis and numerical simulation show that the output is more robust under the arithmetic operator than under the geometric operator. When the arithmetic operator is used, the best alternative A2 preserves its position as the underlying weights fluctuate within a certain range, whereas the result changes under the same fluctuation when the geometric operator is used. We therefore recommend the proposed MCGDM strategy, combining the GRA and MEREC schemes in the PNN environment, with the arithmetic aggregation operator for future studies.

(iii) The MEREC technique strongly supports the GRA analysis in the PNN environment.
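Finding (ii) rests on perturbing the criterion weights and checking whether the top-ranked alternative changes. The sketch below illustrates that idea only: it swaps in a plain weighted sum of hypothetical crisp scores for the PNWAA/PNWGA operators, shifts one weight by each value in `deltas`, renormalises the weights, and reports the index of the best alternative. The function name, scores and weights are all hypothetical.

```python
def best_under_weight_shift(scores, weights, j, deltas):
    """Index of the top-ranked alternative after shifting weight j by each delta.

    scores[i][k]: hypothetical crisp score of alternative i on criterion k (higher is better).
    The shifted weight is clipped at 0 and the vector is renormalised to sum to 1.
    A plain weighted sum stands in for the PNWAA/PNWGA aggregation.
    """
    best = []
    for delta in deltas:
        w = list(weights)
        w[j] = max(w[j] + delta, 0.0)
        total = sum(w)
        if total == 0:
            raise ValueError("all weights became zero")
        w = [x / total for x in w]
        totals = [sum(wk * s for wk, s in zip(w, row)) for row in scores]
        best.append(max(range(len(scores)), key=lambda i: totals[i]))
    return best


scores = [[0.9, 0.2], [0.5, 0.5]]  # hypothetical: 2 alternatives x 2 criteria
result = best_under_weight_shift(scores, [0.5, 0.5], j=0, deltas=[-0.4, 0.0, 0.4])  # [1, 0, 0]
```

Here the leading alternative changes when the first weight is cut sharply, which is the kind of behaviour a weight-sensitivity analysis is designed to expose.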

As a future research direction, the proposed approach can be applied in various domains, such as engineering structural problems, medical diagnosis, clustering analysis, selection and orientation problems, image processing, big data analysis and pattern recognition.

References

Abbasbandy S, Hajjari T (2009) A new approach for ranking of trapezoidal fuzzy numbers. Comput Math Appl 57(3):413–419

Adalı EA, Tuş A (2021) Hospital site selection with distance-based multi-criteria decision-making methods. Int J Healthcare Manag 14(2):534–544

Adalı EA, Öztaş T, Özçil A, Öztaş GZ, Tuş A (2022) A new multi-criteria decision-making method under neutrosophic environment: ARAS method with single-valued neutrosophic numbers. Int J Inf Technol Decis Mak. https://doi.org/10.1142/S0219622022500456

Atanassov K (1986) Intuitionistic fuzzy sets. Fuzzy Sets Syst 20(1):87–96

Bosc P, Pivert O (2013) On a fuzzy bipolar relational algebra. Inf Sci 219:1–16

Chakraborty A (2020) A new score function of pentagonal neutrosophic number and its application in networking problem. Int J Neutrosophic Sci 1(1):40–51

Chakraborty A, Mondal SP, Ahmadian A, Senu N, Alam S, Salahshour S (2018) Different forms of triangular neutrosophic numbers, de-neutrosophication techniques, and their applications. Symmetry 10(8):327

Chakraborty A, Mondal SP, Alam S, Ahmadian A, Senu N, De D, Salahshour S (2019a) The pentagonal fuzzy number: its different representations, properties, ranking, defuzzification and application in game problems. Symmetry 11:248. https://doi.org/10.3390/sym11020248

Chakraborty A, Mondal SP, Alam S, Ahmadian A, Senu N, De D, Salahshour S (2019b) Disjunctive representation of triangular bipolar neutrosophic numbers, de-bipolarization technique and application in multi-criteria decision-making problems. Symmetry 11(7):932

Chakraborty A, Banik B, Mondal SP, Alam S (2020) Arithmetic and geometric operators of pentagonal neutrosophic number and its application in mobile communication service based MCGDM problem. Neutrosophic Sets Syst 32:61–79

Chakraborty A, Mondal SP, Mahata A, Alam S (2021) Different linear and non-linear form of trapezoidal neutrosophic numbers, de-neutrosophication techniques and its application in time-cost optimization technique, sequencing problem. RAIRO-Op Res 55:S97–S118

Chakraborty A, Banik B, Broumi S, Salahshour S (2022) Graded mean integral distance measure and VIKOR strategy based MCDM skill in trapezoidal neutrosophic number. Int J Neutrosophic Sci 18(2):210–226

Chakraborty A, Broumi S, Singh PK (2019c) Some properties of pentagonal neutrosophic numbers and its applications in transportation problem environment. Neutrosophic Sets Syst 28:200–215

Chakraborty A, Mondal S, Broumi S (2019d) De-neutrosophication technique of pentagonal neutrosophic number and application in minimal spanning tree. Neutrosophic Sets Syst 29:1–18

Christi MA, Kasthuri B (2016) Transportation problem with pentagonal intuitionistic fuzzy numbers solved using ranking technique and Russell’s method. Int J Eng Res Appl 6(2):82–86

Das S, Shil B, Pramanik S (2022) HSSM-MADM strategy under SVPNS environment. Neutrosophic Sets Syst 50(1):22

Deetae N (2021) Multiple criteria decision making based on bipolar fuzzy sets application to fuzzy TOPSIS. J Math Comput Sci 12

Deli I, Ali M, Smarandache F (2015) Bipolar neutrosophic sets and their application based on multi-criteria decision making problems. In: 2015 International conference on advanced mechatronic systems (ICAMechS) (pp. 249–254). IEEE

Deng JL (2005) The primary methods of grey system theory. Huazhong University of Science and Technology Press, Wuhan

Garg H, Nancy N (2020) Algorithms for single-valued neutrosophic decision making based on TOPSIS and clustering methods with new distance measure. AIMS Mathematics 5(3):2671–2693

Garg H (2021) Sine trigonometric operational laws and its based pythagorean fuzzy aggregation operators for group decision-making process. Artif Intell Rev 54(6):4421–4447

Garg H, Rani D (2022) An efficient intuitionistic fuzzy MULTIMOORA approach based on novel aggregation operators for the assessment of solid waste management techniques. Appl Intell 52(4):4330–4363

Garg H, Saad M, Rafiq A (2022) Analysis of T-spherical fuzzy matrix and their application in multiattribute decision-making problems. Math Prob Eng. https://doi.org/10.1155/2022/2553811

Haque TS, Chakraborty A, Mondal SP, Alam S (2020) Approach to solve multi-criteria group decision-making problems by exponential operational law in generalised spherical fuzzy environment. CAAI Trans Intell Technol 5(2):106–114

Helen R, Uma G (2015) A new operation and ranking on pentagon fuzzy numbers. Int J Math Sci Appl 5(2):341–346

Hezam IM, Mishra AR, Rani P, Cavallaro F, Saha A, Ali J, Štreimikienė D (2022) A hybrid intuitionistic fuzzy-MEREC-RS-DNMA method for assessing the alternative fuel vehicles with sustainability perspectives. Sustainability 14(9):5463

Julong D (1989) Introduction to grey system theory. J Grey Syst 1(1):1–24

Kang MK, Kang JG (2012) Bipolar fuzzy set theory applied to sub-semigroups with operators in semigroups. Pure Appl Math 19(1):23–35

Karasan A, Ilbahar E, Cebi S, Kahraman C (2022) Customer-oriented product design using an integrated neutrosophic AHP & DEMATEL & QFD methodology. Appl Soft Comput 118:108445

Keshavarz-Ghorabaee M, Amiri M, Zavadskas EK, Turskis Z, Antucheviciene J (2021) Determination of objective weights using a new method based on the removal effects of criteria (MEREC). Symmetry 13(4):525

Khan NA, Razzaq OA, Chakraborty A, Mondal SP, Alam S (2020a) Measures of linear and nonlinear interval-valued hexagonal fuzzy number. Int J Fuzzy Syst Appl (IJFSA) 9(4):21–60

Khan M, Gulistan M, Ali M, Chammam W (2020b) The generalized neutrosophic cubic aggregation operators and their application to multi-expert decision-making method. Symmetry 12(4):496

Lee KM (2000) Bipolar-valued fuzzy sets and their operations. In: Proceedings international conference on intelligent technologies, Bangkok, Thailand, (pp. 307–312)

Liao N, Gao H, Lin R, Wei G, Chen X (2022) An extended EDAS approach based on cumulative prospect theory for multiple attributes group decision making with probabilistic hesitant fuzzy information. Artif Intell Rev 1–33. https://doi.org/10.1007/s10462-022-10244-y

Liu F, Yuan X (2007) Fuzzy number intuitionistic fuzzy set. Fuzzy Syst Math 21(1):88–91

Maity S, Chakraborty A, De SK, Mondal SP, Alam S (2020) A comprehensive study of a backlogging EOQ model with nonlinear heptagonal dense fuzzy environment. RAIRO-Op Res 54(1):267–286

Malik MA, Rashmanlou H, Shoaib M, Borzooei RA, Taheri M, Broumi S (2020) A study on bipolar single-valued neutrosophic graphs with novel application. Neutrosophic Sets Syst 32(1):15

Pramanik S, Mallick R (2020) Extended GRA-based MADM strategy with single-valued trapezoidal neutrosophic numbers. In: Neutrosophic sets in decision analysis and operations research, (pp. 150–179). IGI Global

Qi QS (2021) GRA and CRITIC method for intuitionistic fuzzy multiattribute group decision making and application to development potentiality evaluation of cultural and creative garden. Math Prob Eng. https://doi.org/10.1155/2021/9957505

Qin Y, Qi Q, Scott PJ, Jiang X (2020) Multiple criteria decision making based on weighted Archimedean power partitioned Bonferroni aggregation operators of generalised orthopair membership grades. Soft Comput 24(16):12329–12355

Qiyas M, Madrar T, Khan S, Abdullah S, Botmart T, Jirawattanapaint A (2022a) Decision support system based on fuzzy credibility Dombi aggregation operators and modified TOPSIS method. AIMS Math 7(10):19057–19082

Qiyas M, Yahya M, Abdullah S, Khan N, Naeem M (2022b) Extended GRA method for multi-criteria group decision making problem based on fuzzy credibility geometric aggregation operator. https://doi.org/10.21203/rs.3.rs-1419758

Quek SG, Selvachandran G, Ajay D, Chellamani P, Taniar D, Fujita H, Giang NL (2022) New concepts of pentapartitioned neutrosophic graphs and applications for determining safest paths and towns in response to COVID-19. Comput Appl Math 41(4):1–27

Smarandache F (1998) Neutrosophy: neutrosophic probability, set, and logic: analytic synthesis & synthetic analysis

Tas K, Tas A, Isin FB (2022) I-valued neutrosophic AHP: an application to assess airline service quality after covid-19 pandemy. Neutrosophic Sets Syst 49(1):28

Trung NQ, Thanh NV (2022) Evaluation of digital marketing technologies with fuzzy linguistic MCDM methods. Axioms 11(5):230

Trung DD, Thinh HX (2021) A multi-criteria decision-making in turning process using the MAIRCA, EAMR, MARCOS and TOPSIS methods: a comparative study. Adv Prod Eng Manag 16(4):443–456

Wang H, Smarandache F, Zhang Q, Sunderraman R (2010) Single valued neutrosophic sets. Multispace Multistructure 4:410–413

Wang L, Zhang HY, Wang JQ (2018) Frank Choquet Bonferroni mean operators of bipolar neutrosophic sets and their application to multi-criteria decision-making problems. Int J Fuzzy Syst 20(1):13–28

Wang CN, Dang TT, Wang JW (2022) A combined data envelopment analysis (DEA) and grey based multiple criteria decision making (G-MCDM) for solar PV power plants site selection: a case study in Vietnam. Energy Rep 8:1124–1142

Ye J (2014) Prioritized aggregation operators of trapezoidal intuitionistic fuzzy sets and their application to multicriteria decision-making. Neural Comput Appl 25(6):1447–1454

Yen KK, Ghoshray S, Roig G (1999) A linear regression model using triangular fuzzy number coefficients. Fuzzy Sets Syst 106(2):167–177

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest regarding this research.

Additional information

Editorial responsibility: Maryam Shabani.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Banik, B., Alam, S. & Chakraborty, A. Comparative study between GRA and MEREC technique on an agricultural-based MCGDM problem in pentagonal neutrosophic environment. Int. J. Environ. Sci. Technol. 20, 13091–13106 (2023). https://doi.org/10.1007/s13762-023-04768-1


DOI: https://doi.org/10.1007/s13762-023-04768-1