Abstract

An effective and fast hybrid metaheuristic is proposed for solving the pickup and delivery problem with time windows. The proposed approach combines local search, large neighbourhood search and guided ejection search in a novel way to exploit the benefits of each method. The local search component uses a novel neighbourhood operator. A streamlined implementation of large neighbourhood search is used to achieve an effective balance between intensification and diversification. The adaptive ejection chain component perturbs the solution and uses increased or decreased computation time according to the progress of the search. While the local search and large neighbourhood search focus on minimising travel distance, the adaptive ejection chain seeks to reduce the number of routes. The proposed algorithm design results in an effective and fast solution method that finds a large number of new best-known solutions on a well-known benchmark dataset. Experiments are also performed to analyse the benefits of the components and heuristics and their combined use to achieve a better understanding of how to better tackle the subject problem.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The pickup and delivery problem (PDP) is a vehicle routing problem in which customers are paired together and a pair must be serviced by the same vehicle (Savelsbergh and Sol 1995). In other words, a load must be collected from one location and delivered to another location by a single vehicle. Clearly there are also ordering or precedence constraints to ensure that the collection site is visited before the delivery site. If there are time windows during which the customers must be visited then the problem is known as pickup and delivery problem with time windows (PDPTW) (Dumas et al. 1991). The problem commonly arises in real-world logistics and solution methodologies have significant practical application. As such, a large number of techniques have been developed for PDPTW. These include approaches based on exact methods as well as heuristics. The exact based methods have advantages such as solving to provable optimality or providing bounds. They also tend to perform very well on smaller and medium sized instances. The significant disadvantage with these methods though is that they sometimes perform poorly on larger difficult instances. Heuristic methods on the other hand tend to scale well and are more robust for larger instances but are easily outperformed on smaller instances. It could also be argued that some heuristic methods are easier to develop and maintain and to adapt to new problem requirements. These could be the reasons that the majority of commercial vehicle routing software packages use heuristic-based methods (Hall and Partyka 2016).

Most of the exact methods proposed for the PDPTW are versions of branch and cut and/or branch and price (Berbeglia et al. 2007; Parragh et al. 2008). Branch and price is a branch and bound approach where each node in the branch and bound tree is solved using column generation. For PDPTW, the nodes are linear programming, set partitioning formulations of the problem and the columns (variables) represent possible routes. Generating the new columns, known as the pricing problem, could be solved by a variety of exact and heuristic methods. Most approaches use a dynamic programming method applied to a shortest path type formulation. Solving the pricing problem efficiently is the key to a successful approach because this is where most of the computation time is used. Very efficient pricing problem solving can be achieved though through the use of heuristics and problem structure exploitation. Other significant speed ups and algorithm improvements can often be achieved through other ideas such as branching strategies, stabilisation, column management, approximations and other heuristics.

One of the earliest applications of branch and price to PDPTW is given by Dumas et al. (1991) although it was clearly limited by the computing hardware available at the time. A branch and price method published later in the decade by Savelsbergh and Sol (1998) was already able to solve larger instances of the problem in practical computation times. One of the most recent examples of branch and price applied to PDP is from Venkateshan and Mathur (2011) and an example of a column generation based heuristic applied to PDPTW is given by Xu et al. (2003).

An alternative exact method is branch and cut. Branch and cut differs from branch and price in that a different formulation is used in which all the variables are present at the start rather than being dynamically generated. New constraints are generated at the nodes of the branch and bound tree. These cuts aim to accelerate the discovery of the optimal integer solution. For PDPTW and other vehicle routing problems different families of cuts have been proposed. Examples of branch and cut methods applied to other pickup and delivery problems include (Lu and Dessouky 2004; Ruland and Rodin 1997). A recent paper by Ropke and Cordeau (2009) presents a branch and cut and price method and test it on one of the most commonly used sets of benchmark instances by Li and Lim (2003). The results show that although the smaller instances can be solved to optimality very efficiently, the larger instances are still out of reach for exact methods.

Although there are several examples of exact methods for PDP, the majority of publications are on heuristic methods and in particular metaheuristics. Many of these approaches use a variant of neighbourhood search. Due to the pairing and precedence constraints present in PDP but not present in other variants of vehicle routing problems, there is less choice of neighbourhood operators available for the problem. There are, however, three operators which were employed in the earlier metaheuristics. The first is to simply move a pickup and delivery pair from one route to another. The second is to swap a pair of pickups and deliveries between two routes. The third is to move the pickup and delivery within a route. Li and Lim (2003) and Nanry and Barnes (2000) both present metaheuristics built around these local search operators. These simpler metaheuristics were later outperformed though by methods based on large neighbourhood search (LNS). As the name implies, LNS uses much larger neighbourhoods and searches them using heuristic and/or exact methods. The motivation is that larger neighbourhoods should provide better local optima. The disadvantage is that they can be slower to search due to their increased size. One of the first examples of LNS applied to PDP is from Bent and Van Hentenryck (2006). They explore large neighbourhoods using a branch and bound method. Ropke and Pisinger (2006) then published an alternative LNS which uses a simpler heuristic method for creating and searching large neighbourhoods. Their heuristic is based on the “disrupt and repair” or “ruin and recreate” heuristics. Customers are iteratively removed from solution routes using heuristics such as similarity measures and then re-inserted using heuristics such as regret assignment or greedy assignment. The parameters for these heuristics are dynamically adapted based on their success rate. Their LNS produced many new best-known solutions on the Li and Lim benchmark instances. Another paper that has achieved notable results on these benchmark instances is the Guided Ejection Search of Nagata and Kobayashi (2010a). The algorithm only aims to optimise the single objective of reducing the number of vehicles used but does so very effectively. It is a relatively simple but effective iterative heuristic that randomly adjusts the solution using customer swaps and moves. At each iteration, attempts are made to insert heuristically selected customers from removed routes. They later adapted this method into an evolutionary approach to again further improve their results with respect to the full objective function (Nagata and Kobayashi 2010b). There are also several publications detailing metaheuristics applied to specific variants of PDP. For example, PDP with transfers (Masson et al. 2012), PDP with LIFO loading (Cherkesly et al. 2015), single vehicle PDP (Gendreau et al. 1999; Kammarti et al.2004) and dynamic PDP (Gendreau et al. 2006). For further reading on PDP there are also several survey and overview papers (Battarra et al. 2014; Berbeglia et al. 2007; Parragh et al. 2008; Savelsbergh and Sol 1995).

From the above review of related literature, it is clear that PDPTW is a well-studied variant of the vehicle routing problem. A variety of exact and heuristic methods have been proposed and this has helped to advance our knowledge into how to tackle this difficult combinatorial optimization problem. Nevertheless, it is also clear that large instances of PDPTW remain a difficult challenge to state-of-the-art techniques. In this paper, an effective and efficient heuristic method is proposed which uses several tailored neighbourhood moves within a streamlined version of adaptive large neighbourhood search (Ropke and Pisinger 2006) also incorporating guided ejection search (Nagata and Kobayashi 2010a). The proposed technique is not only competitive with state-of-the-art methods but it produces a number of new best-known solutions for the well-known Li and Lim benchmark instances (2003). Moreover, this paper also makes a contribution to increase our understanding of which combination of search mechanisms can result in a highly effective and fast hybrid metaheuristic algorithm capable of solving large instances of the PDPTW. Experimental results also show that each of the components plays an essential role to make the overall algorithm perform very well over a wide range of problem sizes.

In the next section, we provide the problem definition for the benchmark PDPTW instances tested on. Section 3 introduces the algorithm and Sect. 4 details the computational results. Finally we present some conclusions and suggested future research in Sect. 5.

2 Problem definition

A solution to the pickup and delivery routing problem with time windows or PDPTW is a set of routes for a fleet of vehicles. Each route is executed by one vehicle and consists of a sequence of pickups and deliveries at customers’ locations. Each transportation request is a pickup and delivery pair which must be executed in that order by the same vehicle while satisfying the vehicle capacity and the given time windows. The goal is to minimise the number of routes, hence the number of vehicles needed and to minimise the total travelled distance.

2.1 Parameters

- M :

-

Set of customers 1…m

- L :

-

Set of locations 0, 1…m where 0 is the depot and 1…m are the customers

- P :

-

Set of pickup customers

- D :

-

Set of delivery customers

The intersection of P and D is the empty set \((P\mathop \cap \nolimits D = \emptyset )\) and the union of P and D is M \((P\mathop \cup \nolimits D = M).\) Each pickup pi ∈ P is associated with he corresponding delivery di ∈ D. Let:

- t ij :

-

The travel time between locations i and j

- d ij :

-

The distance between locations i and j

- s i :

-

The service duration for location i

- e i :

-

The earliest time at which the service at location i can start

- l i :

-

The latest time at which the service at location i it must start

A service duration and service window have been included for the depot to make the model tidier but in the test instances the service duration for the depot is zero and the service window for the depot is unbounded. This is done in previously published models also, for example: (Nagata and Kobayashi 2010a).

2.2 Constraints

A route is a sequence of locations visited by a vehicle. A vehicle must start at a depot, visit at least two customers (corresponding to a pickup and a delivery) and return to the depot. A route of length n is therefore denoted by \(v_{0} , v_{1} \ldots v_{n} , v_{n + 1}\) where v0 and vn+1 are the depot and visits v1…vn are customers. If a route contains a pickup pi then it must also contain its corresponding delivery di (and vice versa) and pi must precede di in the sequence. These are the pairing and precedence constraints, respectively. Each pickup pi has a nonnegative demand qi and the corresponding delivery di has the demand − qi.

The current total load \(c_{{v_{i} }}\) carried by a vehicle v at a visit i where i ≥ 1 is

All vehicles have an identical capacity Q and at all visits in a route the total carried load must not exceed the vehicle capacity,

The begin time bv for each visit’s service in the route is calculated as

In a route the services must begin before or at a location’s latest service start time

A solution to this PDPTW described above is set of feasible routes which together service all customers exactly once according to the conditions established in the formulation.

2.3 Objectives

The primary objective is to minimise the number of routes, hence the number of vehicles, in the solution. To compare solutions which have the same number of routes, a secondary objective is commonly used. This secondary objective is to minimise the total distance of all routes where distance of a route of length n is

3 Hybrid large neighbourhood search and guided ejection search

As discussed in the introduction, a number of methods have been proposed in the literature to tackle the PDPTW. The algorithmic design proposed here incorporates specialised neighbourhood operators to enhance the effectiveness of the local search, adaptive ejection search to reduce the number of routes and streamlined large neighbourhood search to enhance the efficiency of the search. The motivation behind the proposed algorithm design was to identify the essential mechanisms to reduce the number of routes and the total travelled distance and combine them into a streamlined yet effective and fast method.

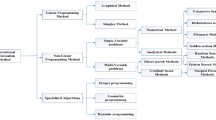

The algorithm proposed here is a combination of three separate methods:

-

(1)

A local search which uses four tailored neighbourhood operators.

-

(2)

A simplified version of the adaptive large neighbourhood search (ALNS) of Ropke and Pisinger (2006). One of the simplifications is to remove the adaptive feature so this sub-routine will be referred to as LNS only.

-

3)

A version of the guided ejection search (GES) by Nagata and Kobayashi (2010a).

An outline of the overall algorithm approach is given in Fig. 1 and each of the steps is described in detail in the following subsections. The overall strategy is to perform an effective large neighbourhood search on a solution while exploiting guided ejection chain to reduce the number of routes or perturbing the current solution if reducing the number of routes is not possible. This balance between intensification and diversification results in an effective algorithm as shown by the experimental results presented later in the paper.

3.1 Local search

The main purpose of the local search is to construct good-quality initial solutions quickly. To do this it uses four neighbourhood structures and the corresponding operators perform an exhaustive search until no further improvements can be made with respect to all neighbourhoods. The first three neighbourhood moves are used in (Li and Lim 2003; Nanry and Barnes 2000). The neighbourhood moves are:

- M1::

-

Insert an un-assigned pickup and delivery (PD) pair into an existing route or create a new route for the PD pair

- M2::

-

Un-assign an assigned PD pair and try and insert it into a different route or create a new route for the PD pair

- M3::

-

Un-assign a PD pair (pd1) from a route (r1), un-assign a PD pair (pd2) from a route (r2) and then try and insert pd1 into route r2 and pd2 into route r1

- M4::

-

Un-assign a PD pair (pd1) from a route (r1), un-assign a PD pair (pd2) from a route (r2) and then try and insert pd1 into route r2 and pd2 into a third route r3

Note that in all of the neighbourhoods above, when trying to insert a PD pair into a route the local search tries every possible position for the pickup and for each feasible position for the pickup, also tries every possible feasible position for the drop (position refers to order position in the route). This means that each neighbourhood is explored exhaustively and the best of all neighbour solutions is selected. Hence, this is part of the intensification mechanism in the proposed approach. We are not aware of the move M4 being used for PDPTW previously. The rationale behind this move is to have another mechanism for transferring PD pairs between routes. M3 does this while maintaining the same number of PD pairs in each of the two routes involved. In M4 the transfer ends up with two routes having a different number of PD pairs after the move.

A single neighbour operator is applied exhaustively until no more improving moves can be made using that operator. The next operator is then similarly applied exhaustively until no more improving moves are available, and then the next operator and so on. The order the operators are applied is M1 to M4 as in the list above. When operator M4 has been exhausted then the local search returns to operator M1. This process is repeated until there are no available improvements using any of the operators. For the smaller neighbourhoods defined by operators M1 and M2, when testing a possible insertion, a best improvement strategy is used, meaning that the insertion is tested on all available routes and the best improvement move is used. For the larger operators M3 and M4, a first found improvement strategy is used, meaning that as soon as an improving move is found then it is accepted. The local search uses the hierarchical objective because it is possible to reduce the number of routes in the solution using operators M2 and M4.

The intensified local search described above can be completed quickly but the solutions can often still be significantly improved with respect to the objectives of minimising the number of routes and minimising total distance. The next step in the algorithm is to focus on minimising the number of routes used.

3.2 Guided ejection search

Guided ejection search was originally proposed by Nagata and Braysy (2009) for the vehicle routing problem with time windows (VRPTW). Nagata and Kobayashi (2010a) then developed a version for PDPTW. It only focuses on the objective of minimising the number of routes and their analysis showed that it was very effective on this single objective. An overview of the procedure is given in Fig. 2.

The method starts by randomly selecting a route and un-assigning all the PD pairs in it. It then proceeds to try and re-insert the un-assigned pairs over the remaining routes. When it cannot insert a pair, it un-assigns (ejects) another pair(s) to allow it to insert it. It then perturbs the partial solution and tries again to insert an un-assigned pair. This is repeated until either there are no un-assigned pairs, in which case a route has been successfully removed, or a maximum number of iterations have been reached. If a route is removed then the procedure is repeated by selecting another route and un-assigning the pairs within it and then trying to insert them again over the remaining routes and so on.

The next pair selected for insertion is selected from an un-assigned pairs list on a last in first out (LIFO) basis. LIFO was also used in (Nagata and Kobayashi 2010a), possibly because it improves the efficacy of the ejection heuristic which will be described later. When trying to insert the pair each route is tested in a random order and every possible position for the pair in the route is tested. If a feasible position for the pair is found then it is inserted. If more than one feasible position is found then the position for insertion is selected randomly. As with the local search, testing each possible position means trying each possible position for the pickup and for each possible position for the pickup also trying each possible position for the drop.

If the pair cannot be inserted then an attempt is made to insert it by ejecting one or two pairs from another route. First an attempt is made to insert it by ejecting a single pair and if this fails then every set of two pairs is tested to see if their ejection would allow the insertion. A maximum of two pairs was used for increased speed. If more than one set of pairs can be ejected to allow the insertion of the pair, then the set to eject is selected heuristically. Every time an attempt is made to insert a pair, a counter for that pair is increased by one. The heuristic for choosing which pair to eject is the pair with the lowest sum of the counter values (i.e. the set that has been previously attempted to be inserted the least number of times). The motivation behind the heuristic is that if a pair was previously difficult to insert (i.e. the counter value is high) then try not to eject it because it may be difficult to insert again.

The perturbation procedure at line 16 of Fig. 2 not only creates the possibility of later being able to insert pairs but it also reduces the risks of cycling. The perturbation randomly selects one of two possible move operators (each with 0.5 probability) and then executes the move on the current partial solution. The first move (PairMove) randomly selects a route and a PD pair within it, then randomly selects a second route and attempts to move the pair to a feasible position in the second route. If there is more than one feasible position in the second route then one is randomly selected. The second move (SwapMove) randomly selects two routes and a pair within each route. It then un-assigns the pairs and attempts to insert them into feasible positions in the opposite route. This time it selects the best possible positions (according to the secondary objective function—minimise total distance) rather than a random position. The perturbation finishes when ten moves have been executed.

The implementation in the present work is similar to the original version by Nagata and Braysy except for two changes. The first difference is at line 14. The original algorithm examines all sets of pairs for ejection up to a fixed size. The larger the fixed size, the more sets there are to examine and the longer the algorithm takes. The approach in this paper only examines sets of length one first. That is, it tries ejecting a single pair first and then if this fails in allowing the insertion, then it tries ejecting two pairs. Again the two pairs are selected by minimising the sum of their previous insertion attempt counters.

The second main difference is the stopping condition. Instead of finishing after a certain number of iterations or a fixed time limit, the number of iterations is extended based on the progress of reducing the number of un-assigned pairs. Every time a new partial solution with a new smallest number of un-assigned pairs is found then a counter is reset to zero. The counter is increased by one each time an attempt is made to perturb the solution by doing either PairMove or SwapMove. The procedure terminates if the counter reaches a predefined value (one million in our implementation). The motivation behind this heuristic is to terminate quickly if the progress suggests that the route will not be removed but to provide more time when the number of un-assigned pairs is being reduced but more slowly. This modified guided ejection chain mechanism maintains the intensification ability of the original approach but it also incorporates an adaptive ability to push the intensification or not according to the current solution.

3.3 Large neighbourhood search

After the modified GES, the local search is applied again followed by a large neighbourhood search. An overview of the LNS is shown in Fig. 3.

The LNS can be described as a “disrupt and repair” heuristic. It repeatedly un-assigns some PD pairs from a solution and then attempts to heuristically re-assign them but creating an improved solution. The method is based on the ALNS of Ropke and Pisinger but with several changes. One of the main changes was to replace a simulated annealing + noise acceptance criterion with late acceptance hill climbing (LAHC) (Burke and Bykov 2016). The main reason for this was to have a streamlined version by simplifying parameter setting because LAHC has only one parameter to set. LAHC is very similar to SA in that it accepts non-improving solutions but it replaces the probability-based acceptance criterion by a time-based deterministic one. At the start of the algorithm, LAHC may accept many non-improving solutions and so provide more search diversification whereas at the end the search intensifies as less and less non-improving solutions are accepted. LAHC is described by Fig. 4. In the figure, the initial solution is the solution created by the GES phase followed by the local search and the candidate solutions are the solutions generated by the removal and re-assignment heuristics. The LHC_LEN parameter was set as 2000 in all the experiments. A small amount of testing was performed in selecting this parameter but these initial tests suggested that this parameter did not have a large impact on the overall performance of the entire algorithm. It is possible that some additional performance gains could be achieved by tuning this parameter or more advanced sensitivity analysis (or even dynamically adapting it).

In the LNS, two removal heuristics are used: Shaw removal (1998) and random removal (Ropke and Pisinger 2006; Shaw 1998). At each iteration, one of the removal heuristics is randomly selected and applied. The Shaw removal heuristic aims to select a set of PD pairs that are similar. The idea is that if the pairs are similar then there is more possibility of re-arranging them in a new and possibly better way. If the pairs are all very different then they will probably be replaced exactly where they were originally assigned. The pair characteristics that are used to measure their similarity are: distance from each other, arrival times and demand. The formula for calculating the similarity is the same as given in Ropke and Pisinger (2006). The second heuristic is to simply randomly select a set of pairs. The probability of selecting the Shaw heuristic is set at 0.6, else the random selection heuristic is used. This creates a slight bias towards using the intelligent Shaw heuristic over the un-intelligent random heuristic. The number of pairs to remove by each heuristic is a number randomly selected from the range 4–80. These values were selected based on the results and guidance from (Ropke and Pisinger 2006).

To re-assign the pairs, the regret assignment heuristic only is used (Ropke and Pisinger 2006). The regret heuristic tries to improve upon greedy assignment by incorporating look-ahead. It does so by not only considering the best possible route for a pair insertion but by also the second, third, fourth… kth best routes. When selecting which pair to insert next it selects pairs that have less possible positions for insertion that are low cost relative to their other possible positions. The motivation is that if that pair is not inserted now there may be regret later if that position is no longer available due to a previous insertion in the route. The parameter k is randomly selected from 2, 3, 4, 5, #R, where #R is the number of routes in the current solution.

Although the GES only uses the objective of minimising the number of routes and ignores the objective of minimising distance, the LNS uses the full hierarchical objective. It is possible for the LNS to remove routes if a removal heuristic selects a set of pairs which includes all the pairs for an entire route and then the assignment heuristic re-assigns them over other routes. During the testing we did observe the LNS reducing the number of routes in a solution occasionally but as will be shown, the GES is far more effective for minimising the total number of routes.

The LNS stops when a minimum number of iterations (800 in this paper) without improvement have been done or both of the following are satisfied:

-

(1)

There was no improvement in the last 400 iterations.

-

(2)

And a minimum time limit has been reached, set as twice the time taken to complete the GES phase.

This streamlined LNS maintains the diversification and intensification ability but at the same time it excludes the adaptive mechanism which was shown to provide only a few extra percent benefit in performance (Ropke and Pisinger 2006).

3.4 Restarts

After the LNS is completed, the overall algorithm goes to step 3 in Fig. 1 to try reducing the number of vehicles again in the best solution found so far, using GES. After, if the number of vehicles was not reduced then the best solution so far is perturbed using the same perturbation function as in GES. The number of perturbation moves is set as the instance’s number of PD pairs multiplied by 0.2. This is larger than the perturbation used within the GES phase where only ten moves are made. A larger perturbation is performed here to increase the search diversification, whereas within the GES phase the perturbation is to try and allow a single PD pair to be inserted. The algorithm then continues by applying the local search followed by LNS again and so on. The algorithm terminates when a maximum time limit is reached. Hence, the heuristic approach proposed in this paper alternates between a random (when no route is removed) and a greedy (when a route is removed) perturbation to the current solution to then perform an effective LNS that balances intensification and diversification. All algorithm parameters are summarised in Table 1.

4 Results

To test the algorithm, the benchmark instances of Li and Lim (2003) are used.Footnote 1 There are approximately 360 instances categorised into six groups of different sizes ranging from approximately 50 PD pairs up to 500 PD pairs. Each group is also subdivided into instances with clustered locations, randomly distributed locations and randomly clustered locations. Each subgroup is then further split by instances with short planning horizons and instances with long planning horizons.

Three sets of experiments were performed. The first was to investigate the benefit of the adaptive heuristic added to the GES. As described earlier, this heuristic terminates the GES phase more quickly when the progress suggests that an extra route will not be eliminated but allows more time when the progress suggests it is getting closer to removing a route. The second set of experiments was to investigate different configurations of the individual components and their combined benefit. The third analysis was to simply compare solutions generated with the current best knowns.

For the first two sets of experiments, five different algorithms were applied to all the test instances. The algorithms tested are the full algorithm and then four other versions, each with different components removed. The aim was to investigate the impact of the individual components or whether there was not any benefit in combining components when given the same computation time, or if combining the algorithms produces a more effective overall algorithm. The configurations were as follows:

-

1.

LS + AGES + LNS (Algo1): The full algorithm as described and using the adaptive heuristic for the GES (labelled Adaptive GES).

-

2.

LS + GES + LNS (Algo2): The same as 1 but without the adaptive heuristic in GES.

-

3.

LS + AGES (Algo3): The same as 1 but without the LNS phase, to see if the LS alone is sufficient at minimising the distance objective.

-

4.

LS + LNS (Algo4): The same as 1 but without the AGES phase which aims at minimising total routes. LS and LNS are both also able to minimise total routes on their own but this test was to investigate whether they are sufficient on their own if given the extra time not used by the removed AGES phase.

-

5.

AGES + LNS (Algo5): The same as 1 but with the LS phase removed. Previous papers indicated that LNS is much more effective than LS so there may be no benefit in including the LS phase, and instead just giving more time to the LNS phase.

Note that GES will exit sooner than AGES and so LNS in Algo2 will also have less time than LNS in Algo1 per iteration. However, because all algorithms are being run for the same fixed time Algo2 will complete more iterations than Algo1 and so the overall CPU time distributed between the different phases will be similar overall.

On the ‘100’ group of instances, 5 min of computation time was allowed. On the ‘200’ and ‘400’ groups, 15 min. On the ‘600’ group, 30 min and on the ‘800’ and ‘1000’ groups, 60 min. These values were chosen based on similar run times in other papers (Ropke and Pisinger 2006; Nagata and Kobayashi 2010a). All runs were performed on an Intel Xeon CPU E5-1620 @ 3.5 GHz utilising a single core per run. 32 GB RAM was available (although testing showed the algorithm requires a maximum of 70 MB on the largest instances). The code was written in C#.

Table 2 lists the total number of vehicles used and the total distance for all the solutions for each group of instances, for each algorithm. These results are further broken down in Table 3 in which we rank the algorithms by how they performed against each other. For each group of instances, we record the total number of times that each algorithm found the best solution, the second best solution, the third best, fourth best and fifth best out of the five algorithms. Kendall’s non-parametric test is applied to the rankings to determine if the pairwise comparisons between two algorithms are statistically significant. The mean values and P values used in the statistical test are given in Table 4. Pairwise comparisons are made to see if the differences are statistically significant at the 0.05 level.

For the pairwise comparisons we define A < B as meaning A has lower rank than B but the pairwise comparison is not significant. We define A ≪ B as meaning A has lower rank than B and the pairwise comparison is statistically significant. The rankings are as follows:

Over all instances we may rank the five algorithms as Algo1 < Algo2 ≪ Algo5 ≪ Algo4 < Algo3.

-

On the ‘100’ instances the rank result is Algo2 < Algo1 < Algo4 < Algo3 < Algo5.

-

On the ‘200’ instances the rank result is Algo2 < Algo1 ≪ Algo4 < Algo5 < Algo3.

-

On the ‘400’ instances the rank result is Algo2 < Algo1 ≪ Algo5 < Algo3 < Algo4.

-

On the ‘600’ instances the rank result is Algo1 < Algo2 < Algo5 ≪ Algo3 < Algo4. (Algo1 ≪ Algo5).

-

On the ‘800’ instances the rank result is Algo1 < Algo2 < Algo5 ≪ Algo3 < Algo4.

-

On the ‘1000’ instances the rank result is Algo1 < Algo2 < Algo5 ≪ Algo3 < Algo4.

Looking at Table 2 adaptive GES produces solutions with less routes than the GES (apart from the 100 and 200 instances where they are the same). When we compare the rankings pairwise, GES is better on the smaller instances but AGES is better on the larger instances and over all instances. However, the mean rankings are too similar to say the difference is statistically significant.

Investigating the benefit of including the (A)GES phase, it is clear that it is very effective. Algo4 (no GES) is always worse than Algo1 and Algo2 (the full algorithms with AGES or GES) and the pairwise comparisons are statistically significant. Similarly, it is clear than the LNS is an important component of the algorithm. When the LNS phase is removed (Algo3), the full algorithms (Algo1 and Algo2) are significantly better. It is clear than giving extra time and more iterations to LS and GES is not as effective as including the LNS albeit with less time for each phase and less iterations. Algo5 is also statistically better than Algo3 showing that using LNS instead of LS is more effective. Finally, we can conclude that over all instances, including the LS phase is more effective than not including it (Algo5) but on the largest instances 800 and 1000, the superiority is still visible in the mean rankings but is not large enough to be statistically significant. These results show that combining the three components in this configuration, local search with specialised moves, streamlined large neighbourhood search and adaptive guided ejection search, is more effective than using just two of the components. Using all three components means there is less time available for each method but it still more effective than just using two of the components even if there is more time available for each individual phase.

Next, we compare the results against the best-known results in peer-reviewed publications and the current best knowns that have been verified on the SINTEF website but have not been published in peer-reviewed outlets and for which no information is available about computation times and methods used. For comparing against published methods (Tables 2, 5), we use the results of the adaptive LNS method of (Ropke and Pisinger 2006) and the GES method of (Nagata and Kobayashi 2010a). These are the current best-known published results. Comparing against these results is not simple though. For the best results of (Ropke and Pisinger 2006) we do not know the computation times. For their best results the authors “report the best solutions obtained in several experiments with our ALNS heuristic and with various parameter settings”. We do not know how many experiments were run but we have an indication of computation times which are from 66 s per run on the smallest instances to 5370 s per run on the largest instances. We also know that the heuristic was run at least “5 or 10 times on each instance” (not including the different parameter setting testing) and that a 1.5 GHz Pentium IV processor was used. Again, comparing against Nagata and Kobayashi is difficult because their algorithm only minimises the number of vehicles used and we know from our results that using less vehicles often increases the total distance. They used an Opteron 2.6 GHz processor. Due to the unknown computation times, the differences in computing power and the difficulty in comparing summed values for a problem with hierarchical objectives we cannot have strong conclusions. The GES method produces solutions with less vehicles in total but the algorithm uses all its time minimising this objective where as our method only uses a large proportion of its time also minimising distance. The ALNS uses both objectives but produces solutions with more vehicles in total. Comparing total distance is not helpful because often solutions with less vehicles have longer distance. To assist future researchers and facilitate future comparisons, we have included in this paper in Table 12. Results for a Single Run of Algo1 Table 12 our results for a single run for a fixed run time for a single configuration (Algo1).

For the next comparison, we compare against best knowns from published and unpublished methods. Tables 5, 6, 7, 8, 9 list the solutions found after applying the LS + AGES + LNS algorithm on all instances. The algorithm was allowed 1 h computation but the best reported may be the best from several tests with different random seeds. Each table lists the solutions for each set of instances grouped by the number of locations. The tables also list the previous best-known solutions for each instance, and the date it was found. The information is taken from SINTEF’s website which is regularly updated.Footnote 2 Solutions in italics are equal to previous best knowns and solutions in bold italics are new best knowns.

The results show that the algorithm was able to find a large number of new best-known solutions. On the 100 site instances the algorithm equalled the best knowns on all instances. On the 200 site instances 35 best knowns were equalled and seven new best knowns were found (out of 60). Of the seven new best knowns 3 were improvements in terms of the number of vehicles. For example, on the instance LR2_2_6, the new best known has a solution of three vehicles, whereas the previous had four vehicles. This was an impressive result because the previous best known had stood for 15 years. On the 400 site instances there are 19 equal best knowns and 22 new best knowns (out of 60). On the 600 site instances there are six equal best knowns and 33 new best knowns (out of 60). On the 800 site instances there are five equal best knowns and 45 new best knowns (out of 60). On the 1000 site instances there are four equal best knowns and 35 new best knowns (out of 58). For many of the new best knowns the primary objective of reducing the number of vehicles is improved. This is particularly noticeable on the larger instances where the number of vehicles is reduced by more than one vehicle. For example, on instance LRC1_10_5, the previous best known required 76 vehicles, whereas the new best known has only 72 vehicles. This demonstrates the benefit of using the AGES within the algorithm specifically for reducing the number of vehicles.

5 Conclusion

This paper proposes an effective and fast hybrid metaheuristic algorithm to tackle the pickup and delivery problem with time windows (PDPTW). The approach performs a large neighbourhood search (LNS) that incorporates mechanisms for intensification and diversification. The approach also incorporates mechanisms to perturb the current solution. Such perturbation can be greedy by removing a full route from the solution through guided ejection search, or random when such removal is not successful. Then, alternating the LNS with guided ejection search and local search has resulted in a relatively simple but demonstrably effective framework. The guided ejection search is specifically designed for minimising the number of routes within solutions. An adaptive heuristic is developed for the guided ejection search phase which provides more time to the heuristic when its progress suggests it is close to removing a route. The local search and large neighbourhood search are more focused on minimising travel distances. The aim is to combine these strengths into an overall robust and successful method. A new search neighbourhood operator was added to the local search method and the LNS was streamlined and simplified without loss of efficacy.

The results show that when any one of the components is removed the results are significantly worse. In other words, two components given more time is not as effective as the three components but with less time for each component. The adaptive heuristic for the GES phase is particularly effective on the larger instances. Including the local search phase benefits the smaller instances and including the LNS phase is better for all instance sizes. When tested on a large and well used benchmark dataset, the algorithm is able to find 142 (out of 354 instances) new best-known solutions, confirming its efficacy. The many new best-known solutions obtained with the LS + AGES + LNS heuristic algorithm proposed here have been already verified and hence published in the SINTEF’s website.

Although a large amount of research, development and testing was required to develop this algorithm, there are still possibilities for further research. The Li and Lim benchmark instances are a very useful resource that have stimulated and enabled innovative research within a competitive and verifiable environment. Although that research forms the basis for many commercial vehicle routing problem solvers (Hall and Partyka 2016) it could be argued that more realistic benchmark instances could lead to even more effective methods for real-world problems. Datasets that contain requirements, such as driver break rules, maximum driving hours, working time constraints, and soft time windows, could have significant practical benefit. We believe that the algorithm presented could be adapted to handle these requirements but there would undoubtedly be new research required for such new challenges within benchmark datasets.

Notes

Retrieved from http://www.sintef.no/projectweb/top/pdptw/li-lim-benchmark/ on 25-Feb-2016.

References

Battarra M, Cordeau J, Iori M (2014) Chapter 6: pickup-and-delivery problems for goods transportation. In: Vehicle routing. Society for Industrial and Applied Mathematics. pp 161–191

Bent R, Hentenryck PV (2006) A two-stage hybrid algorithm for pickup and delivery vehicle routing problems with time windows. Comput Oper Res 33(4):875–893

Berbeglia G et al (2007) Static pickup and delivery problems: a classification scheme and survey. TOP 15(1):1–31

Burke EK, Bykov Y (2016) The late acceptance hill-climbing heuristic. Eur J Oper Res 258:70–78

Cherkesly M, Desaulniers G, Laporte G (2015) A population-based metaheuristic for the pickup and delivery problem with time windows and LIFO loading. Comput Oper Res 62:23–35

Dumas Y, Desrosiers J, Soumis F (1991) The pickup and delivery problem with time windows. Eur J Oper Res 54(1):7–22

Gendreau M, Laporte G, Vigo D (1999) Heuristics for the traveling salesman problem with pickup and delivery. Comput Oper Res 26(7):699–714

Gendreau M et al (2006) Neighborhood search heuristics for a dynamic vehicle dispatching problem with pick-ups and deliveries. Transp Res Part C Emerg Technol 14(3):157–174

Hall R, Partyka J (2016) Vehicle routing software survey: higher expectations drive transformation. ORMS-Today 43(1):40–44

Kammarti R et al. (2004) A new hybrid evolutionary approach for the pickup and delivery problem with time windows. In: systems, man and cybernetics, 2004 IEEE International Conference on

Li H, Lim A (2003) A metaheuristic for the pickup and delivery problem with time windows. Int J Artif Intell Tools 12(02):173–186

Lu Q, Dessouky M (2004) An exact algorithm for the multiple vehicle pickup and delivery problem. Transp Sci 38(4):503–514

Masson R, Lehuédé F, Péton O (2012) An adaptive large neighborhood search for the pickup and delivery problem with transfers. Transp Sci 47(3):344–355

Nagata Y, Bräysy O (2009) A powerful route minimization heuristic for the vehicle routing problem with time windows. Oper Res Lett 37(5):333–338

Nagata Y, Kobayashi S (2010a) Guided ejection search for the pickup and delivery problem with time windows. In: Cowling P, Merz P (eds) Evolutionary computation in combinatorial optimization: 10th European Conference, EvoCOP 2010, Istanbul, Turkey, April 7–9, 2010. Proceedings, Springer: Berlin, Heidelberg. pp 202–213

Nagata Y, Kobayashi S (2010b) A memetic algorithm for the pickup and delivery problem with time windows using selective route exchange crossover. In: Schaefer R et al. (ed) Parallel problem solving from nature, PPSN XI: 11th international conference, Kraków, Poland, September 11–15, 2010, proceedings, Part I, Springer: Berlin, Heidelberg. pp 536–545

Nanry WP, Barnes JW (2000) Solving the pickup and delivery problem with time windows using reactive tabu search. Transp Res Part B Methodol 34(2):107–121

Parragh S, Doerner K, Hartl R (2008) A survey on pickup and delivery problems. J für Betriebswirtschaft 58(1):21–51

Ropke S, Cordeau J-F (2009) Branch and cut and price for the pickup and delivery problem with time windows. Transp Sci 43(3):267–286

Ropke S, Pisinger D (2006) An adaptive large neighborhood search heuristic for the pickup and delivery problem with time windows. Transp Sci 40(4):455–472

Ruland KS, Rodin EY (1997) The pickup and delivery problem: faces and branch-and-cut algorithm. Comput Math Appl 33(12):1–13

Savelsbergh MWP, Sol M (1995) The general pickup and delivery problem. Transp Sci 29(1):17–29

Savelsbergh M, Sol M (1998) DRIVE: dynamic routing of independent vehicles. Oper Res 46(4):474–490

Shaw P (1998) Using constraint programming and local search methods to solve vehicle routing problems. In: Maher M, Puget JF (eds) Principles and practice of constraint programming—CP98: 4th international conference, CP98 Pisa, Italy, October 26–30, 1998 Proceedings. Springer: Berlin, Heidelberg. pp 417–431

Venkateshan P, Mathur K (2011) An efficient column-generation-based algorithm for solving a pickup-and-delivery problem. Comput Oper Res 38(12):1647–1655

Xu H et al (2003) Solving a practical pickup and delivery problem. Transp Sci 37(3):347–364

Funding

The funding was provided by Innovate UK (102037)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Curtois, T., Landa-Silva, D., Qu, Y. et al. Large neighbourhood search with adaptive guided ejection search for the pickup and delivery problem with time windows. EURO J Transp Logist 7, 151–192 (2018). https://doi.org/10.1007/s13676-017-0115-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13676-017-0115-6