Abstract

Metallic eutectics play an important role in casting technology and in the properties of castings. For this reason, the study of eutectic microstructures is indispensable for casting qualification. Eutectics share many characteristics, including the morphology, size and spatial arrangement of the eutectic phases, which makes it possible to develop a generally applicable method based on the analysis of eutectic microscopy images. The method presented in this article performs a posteriori background correction for optical microscopy (OM) images. The shape and size of the phases are determined using a cellular automaton and machine learning. A second cellular automaton, combined with cluster analysis, characterizes the spatial arrangement of the eutectic phases. It can also be used to determine the distances between objects, both locally and within a given group of objects, and is suitable for exploring and examining the spatial clustering of objects. The methods can be combined into a single algorithm, allowing a detailed examination of the eutectic microstructure. The method was tested on micrographs of Al-Cu, Al-Ni and Al-Si alloys and cast irons.

Introduction

Eutectics are encountered in a wide range of material systems [1,2,3], but metallic eutectics are of particular industrial importance [4, 5]. Casting exploits their low melting point and favorable properties for mass production [6]. Aluminum and its alloys are increasingly studied for industrial applications [7, 8], and aluminum castings play a major role in this field [9, 10]. In addition, cast irons are produced in large quantities as high-performance castings [11, 12]. The properties of castings are largely determined by eutectic crystallization and the resulting eutectic microstructure [13, 14]. Eutectics can be grouped according to phase shape, for example plate-like, rod-like and vermicular [15], and classified as regular or irregular according to the regularity of the phase arrangement [16]. In addition, the size and arrangement of the phases depend on the crystallization parameters [17]. Describing the eutectic microstructure is therefore a complex task. Stereology has long been concerned with describing the arrangement and average geometric properties of regular or irregular, rod-like or lamellar structures [18]. Building on stereological principles, various elaborate image analysis procedures have been developed, each addressing a specific testing problem of a specific eutectic. The description of a regular lamellar structure is a well-defined problem [19]. For irregular plates, however, the problem is less clear-cut, and several solutions have been developed [20,21,22], driven mainly by the characterization of aluminum-silicon eutectics. Another frequently studied problem is the investigation of cast irons, including gray and CGI (compacted graphite iron) castings [23,24,25,26]. Separating spheroidal from nonspheroidal graphite grains by shape is also necessary, especially for CGI castings, and several solutions have been proposed for this [27, 28].
Characterizing regular rod-like eutectics can also be considered a simple task if eutectic cells and degenerate regions are disregarded [29, 30]. Considered in its full complexity, however, the task raises several issues. Almost all eutectic characterizations raise the question of phase arrangement, i.e., the exploration of eutectic cell structures [31]. These numerous tasks have led to many solutions that share common stereological principles but differ in the procedures and evaluation methods used. For all of these eutectic structures, it is therefore useful to develop a method that can be applied in almost all cases, for all morphologies and for castings of both hypo- and hypereutectic composition, and that provides qualified results. Today's digital tools offer a wide range of possibilities for image processing and analysis [32], and digital image analysis is now used in almost every field [33]. Many computational methods are applied that would have been inconceivable before the spread of digital computers [34]. One such long-established and well-developed computational method is the cellular automaton, which appears in many digital image processing methods [35, 36]. Cellular automata are discrete dynamic systems consisting of a regular grid of cells. Each cell is in one of a finite number of predefined states, and the automaton computes the evolution of these states from the local interactions between neighboring cells in the grid. A major advantage of cellular automata is that the pixel grid of a digital image, and of the imaging devices that produce it, maps directly onto the cell grid of the automaton, which explains the applications mentioned above [37]. Cellular automata were chosen as the basis of the developed method for analyzing eutectic micrographs because of the computational advantages they provide [38].
This holds for image processing, i.e., the description of visual information with numerical data; evaluating these data, however, requires other state-of-the-art solutions. Cluster analysis [39] and machine learning algorithms [27] are widely used in image processing and analysis. Cluster analysis offers many algorithms for grouping similar or nearby objects [40]. In the analysis of eutectic structures, the main application of these methods is the spatial arrangement of the eutectic phases. Machine learning algorithms are also widely used in image analysis, for example for shape classification [41] and hence for shape characterization [42]. Shape description plays a twofold role in the analysis of eutectic structures. First, the morphology of the eutectic phases must be recognized, because for a known morphology, the stereological relations already define the linear dimensions, such as distances. Second, as mentioned for cast irons, the individual shapes must also be recognized to qualify the cast iron. Several classification approaches exist; we chose the support vector machine (SVM) algorithm for its simplicity and robustness [43]. Although the SVM is fundamentally a binary classifier, it can easily be extended to a multiclass classifier. Cellular automata, cluster analysis and SVMs have the advantage of allowing microscopy images to be analyzed without user intervention. However, this requires images whose quality allows the algorithms to process them and to obtain the correct result in every case, which in turn requires an analysis of the characteristics of microscopy images [44, 45]. Images of eutectic specimens must be segmented before analysis, and segmentation requires images with good contrast [46]. This can readily be achieved for both optical microscopy (OM) and scanning electron microscopy (SEM) images, bearing in mind that the chosen algorithms should not require user intervention.
It is advisable to choose algorithms that require no parameter setting, so that they can be applied to most images [47]. A method that does not require parameters to be set from image to image can be applied to all planned recordings without user intervention. A common problem in OM images is uneven background illumination, which can easily be addressed by a priori correction [48]. However, the method must also handle cases in which background correction has to be performed a posteriori [49]. The conditions for applying such an algorithm must be defined, or the algorithm designed, so that it does not cause adverse changes in the corresponding background illumination image. A grayscale image can be viewed as a terrain in which height is represented by the pixel intensities: lighter areas of the image are higher in this relief, and darker areas lie lower. Uneven illumination appears as an image-sized terrain feature, whereas details in the image appear as smaller elevations and valleys. This view leads to an efficient procedure for correcting the illumination of the image, for which the lava-flow automaton, a cellular automaton method, provided the inspiration [50, 51]. Once the illumination has been corrected, segmentation can be solved with a histogram analysis-based method [52]. This paper presents a method that numerically analyzes OM or SEM images of eutectic microstructures by characterizing the main geometric properties of the eutectic with minimal user intervention. Advanced algorithms provide a detailed picture of the eutectic under investigation. User intervention is needed mainly in the evaluation phase, where the classification and acceptance criteria are defined and their fulfillment is verified.

Materials and Methods

Our aim was to develop an automatable method requiring minimal user intervention that can be applied generally to the analysis and classification of eutectics of multiple morphologies, including in hypo- and hypereutectic alloys. A further aim was to make the method suitable for both OM and SEM images. The automatic image analysis algorithm extracts the following data from microscopy images of the eutectic microstructures under investigation: (i) the morphology of the eutectic phases (plate, spherical/rod, compact, vermicular), (ii) the characteristic linear size of the phase corresponding to its morphology and (iii) the distances between objects and whether clustering occurs. When clusters are identified, their size can also be investigated. The first step of the computation is to determine the morphology of the second phase, since this is the basis for interpreting its characteristic linear size (Fig. 1). For this purpose, a cellular automaton implementing deterministic ultimate erosion was used: in each step, all contour pixels of the second-phase objects are removed. In each cellular automaton step, the perimeter (P) and area (A) of every object are recorded in a database. The area is determined by counting the pixels belonging to the object, and the perimeter by counting the pixels that make up its contour, as shown in Fig. 2. Geometrically more accurate approximations of the digital perimeter exist, but they require more elaborate calculations than simple counting. Because we aimed to stay as close as possible to the cellular automaton framework, counting is the technically appropriate estimate, and it follows from the method that this approximation is also sufficiently accurate for the purpose. The erosion automaton runs until all pixels of all objects have disappeared from the image.
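The erosion automaton can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions, not the authors' implementation: a single object is a set of (row, column) pixel coordinates, a contour pixel is an object pixel with at least one background pixel in its Moore (8-)neighbourhood, and every pixel not in the set, including pixels outside the image, counts as background. The function names are hypothetical.

```python
from typing import List, Set, Tuple

Pixel = Tuple[int, int]

# Offsets of the Moore (8-)neighbourhood
MOORE = [(di, dj) for di in (-1, 0, 1) for dj in (-1, 0, 1) if (di, dj) != (0, 0)]


def contour(obj: Set[Pixel]) -> Set[Pixel]:
    """Object pixels that have at least one background pixel among their 8 neighbours."""
    return {
        (i, j) for (i, j) in obj
        if any((i + di, j + dj) not in obj for di, dj in MOORE)
    }


def ultimate_erosion(obj: Set[Pixel]) -> List[Tuple[int, int]]:
    """Erode one object until it vanishes, recording (area A, perimeter P) per step.

    A is the number of object pixels and P the number of contour pixels, both
    obtained by simple counting. The length of the returned list is t_n, the
    number of steps needed to make the object disappear.
    """
    remaining = set(obj)
    history = []
    while remaining:
        edge = contour(remaining)
        history.append((len(remaining), len(edge)))
        remaining -= edge  # all contour pixels are removed in one step
    return history
```

For a 5 × 5 square the record is `[(25, 16), (9, 8), (1, 1)]`, so t_n = 3. The loop always terminates because a finite, non-empty object always has at least one contour pixel.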
Once the database has been built, the compactness value (R) of each object is calculated at each cellular automaton step using relation (1), which approximates the compactness of the objects. This dataset serves as input to a support vector machine-based classification procedure, which works as follows.
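Relation (1) is not reproduced in this text, so the sketch below assumes the common isoperimetric form R = 4πA/P² purely for illustration; substitute relation (1) if it differs. It turns the per-step (A, P) record of one object into the step–compactness series used by the classifier. With pixel-count perimeters this value is not bounded by 1, so it serves only as a relative shape measure. The function name is hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def compactness_series(history: Sequence[Tuple[int, int]]) -> List[Tuple[int, float]]:
    """Return (step, R) pairs from the (area, perimeter) record of one object.

    ASSUMPTION: R = 4 * pi * A / P**2 stands in for relation (1).
    Steps are numbered from 1, matching the erosion steps.
    """
    series = []
    for step, (area, perimeter) in enumerate(history, start=1):
        if perimeter == 0:
            continue  # cannot happen for a non-empty object; guard anyway
        series.append((step, 4 * math.pi * area / perimeter ** 2))
    return series
```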

Measuring method of an object's perimeter. The perimeter is measured by counting the object pixels that have a background pixel in their Moore neighborhood. The green lines indicate the pixel boundaries; for ease of understanding, the object pixels are white and the background is black. The counted pixels lie along the red line

For each cellular automaton time step, the compactness of each object is classified. Since the SVM is a binary classifier, the procedure first separates objects with a plate morphology from those with a nonplate morphology. It then separates vermicular from non-vermicular objects among the remaining nonplate objects, and finally, in the same way, compact from spherical objects. Three SVMs thus classify the objects one after the other in the order described. In each automaton step, the object is assigned to one of the classes, and at the end of the process, it is assigned to the class that has received the most votes over all steps. To make the classification as broad as possible, the training set covered all the eutectics under consideration and all the object morphologies that occurred in them. The training dataset consisted of 40 elements, and testing showed 94.4% accuracy. The SVM parameters are as follows: cost = 1.00 (penalty term for the loss in the SVM method); ε = 0.10 (distance from the true values within which no penalty is associated with the predicted values); kernel function tanh(g x·y + c), where g = 0.02 and c = 10. At the end of the classification process, the morphology of every object is known, which allows its size to be interpreted. The size is interpreted by class, as shown in Fig. 1: since each erosion step removes one pixel layer from every side, d = 2·tn·sf, where d is the characteristic linear size, tn is the number of cellular automaton steps needed to make the object disappear, and sf is the scaling factor (µm/pixel), i.e., the real size of a pixel. Both the shape class and the linear size are therefore available for each object, so both individual and average evaluations can be performed. However, these data are not sufficient to characterize eutectic structures; the spatial distribution of the phases must also be analyzed.
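The conversion from erosion steps to a characteristic size is simple arithmetic. The sketch follows the statement in the Results that the number of erosion steps is half the plate thickness (and, likewise, half the diameter), so d = 2·tn·sf; the function name and example values are hypothetical.

```python
def characteristic_size(t_n: int, sf_um_per_px: float) -> float:
    """Characteristic linear size d (um) from the number of erosion steps t_n.

    Each erosion step removes one pixel layer from every side, so an object
    vanishes after roughly half its thickness (or diameter) in pixels:
    d = 2 * t_n * sf.
    """
    if t_n < 0 or sf_um_per_px <= 0:
        raise ValueError("t_n must be >= 0 and sf must be positive")
    return 2 * t_n * sf_um_per_px
```

For example, an object that disappears after 6 steps at 0.25 µm/pixel has `characteristic_size(6, 0.25) == 3.0` µm.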
The task of the second measuring cellular automaton is to determine the distances between objects. In this case, each object is given a unique identifier (label) before the computation. During operation, the objects undergo stochastic dilation at each cellular automaton time step. When objects merge, the number of dilation steps and the identifiers of the merged objects are recorded in a database. After the automaton has finished, a dendrogram can be generated from this database, from which the distances between objects can be read and the clustering displayed. Images taken with a Zeiss Axio Imager M1m optical microscope at different magnifications were used to study the materials under investigation. In addition, to resolve the finer microstructures, images were taken with a Zeiss EVO MA10 scanning electron microscope. The materials investigated are Al-Cu, Al-Ni and Al-Si eutectics and graphite cast iron. The samples, selected from various research projects, were provided by the Laboratory of Metallography of the Faculty of Materials and Chemical Engineering at the University of Miskolc [2, 3, 29,30,31]. For optical microscopes in brightfield mode, uneven background illumination can prevent the automatic, histogram analysis-based segmentation of images. To overcome this problem, an algorithm inspired by the lava-flow automaton was developed that can be applied to all types of images. Images with this error are corrected, whereas images without it are left unchanged, so the automatic measurement algorithms receive images with uniform intensity conditions. The algorithm also has the advantage of performing a true a posteriori correction: no image other than the original is needed. The steps of the analysis algorithm are shown in Fig. 3. First, the color images are converted to grayscale, and background correction is then applied to the resulting image.
The images are then segmented using the ITER (iterative selection method) procedure [53, 54], and the matrix phase and the second phase are identified (the matrix phase is the largest-area region in contact with the edge of the image). The objects are labeled and subjected to ultimate erosion, and the classification is performed. Next, stochastic dilation of the segmented and labeled images is carried out, and the dendrogram is built from the registered merging events. The dendrogram can be evaluated manually, but our method proposes an automatic solution: the number of merges associated with each cellular automaton step is derived from the dendrogram by counting, and when the number of merges jumps suddenly, the method proposes a clustering option. When the groups themselves are also grouped, the procedure provides several suggestions, from which the user only needs to choose the one to use for evaluation and qualification. This choice, however, is no longer part of the image analysis but of the dendrogram analysis, from which more detailed information can be extracted (distances within groups, distances between groups, etc.).
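The ITER segmentation step can be illustrated with the classic iterative-selection (isodata) threshold: start from the mean gray level, split the pixels into two classes, and move the threshold to the midpoint of the two class means until it stops changing. This is a minimal sketch assuming an 8-bit grayscale image given as a flat list of intensities; the procedure of [53, 54] may differ in details such as the starting value or the stopping tolerance, and the function name is hypothetical.

```python
from typing import Sequence


def iter_threshold(pixels: Sequence[int], tol: float = 0.5, max_iter: int = 100) -> float:
    """Iterative selection threshold of a flattened grayscale image."""
    if not pixels:
        raise ValueError("empty image")
    t = sum(pixels) / len(pixels)  # start from the global mean
    for _ in range(max_iter):
        low = [p for p in pixels if p <= t]
        high = [p for p in pixels if p > t]
        if not low or not high:
            return t  # (nearly) uniform image: nothing to split
        new_t = (sum(low) / len(low) + sum(high) / len(high)) / 2
        if abs(new_t - t) < tol:
            return new_t
        t = new_t
    return t
```

Pixels at or below the returned threshold would then be taken as the dark phase; for example, `iter_threshold([10, 10, 10, 250])` settles at 130.0 after one update.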

The workflow of the automatic image analysis. In the first step, the input image is converted to YCC (luma, blue- and red-difference chroma) color encoding, and only the intensity value is kept. The next step is the lava-flow background correction, followed by the ITER autosegmentation algorithm. In the fourth step, the first cellular automaton collects the data of the objects by erosion; the background is black, and the brighter parts of the objects are the more eroded parts. In the following step, the morphology of the objects is determined by SVM. In the next stage, the second cellular automaton collects the data between objects; here the background is white, the objects are initially black, and the brighter parts are the more dilated ones. Using these data, the clustering can be visualized in the eighth and ninth steps

Results and discussion

Several methods have been proposed and used to correct uneven background illumination a posteriori. The reason for developing a new method is to ensure a good fit with the other methods of the whole procedure, i.e., to allow as much of the workflow as possible to be performed without changing the architecture. Uneven background illumination significantly affects measurements on OM images because it complicates segmentation: parameter-free automatic segmentation algorithms do not work properly on images with this error (Fig. 4). This issue has been addressed in the literature [48, 49], and microscope manufacturers also include background correction algorithms in their own software. A common feature of these methods is that the correction requires a second image containing only a well-illuminated background. Such images are not always available, which is why a posteriori correction is necessary. In effect, the developed method produces the missing background image for the correction. The chosen method is based on the lava-flow algorithm, which is used to predict the direction of lava flows. It does not require even a preprocessed background image; only the existing micrograph is needed for the correction. For the analysis, the color images are converted to grayscale, so the algorithm is based on a 256-state cellular automaton. In the first step, the position and value of the area of maximum brightness in the image are determined; this is the location of the lava eruption. To find this area, the image is divided into four equal parts, the brightest part is selected, and that part is divided again in the same way; the process continues until the selected area is no larger than about 3 × 4 pixels.
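The quartering search for the eruption point can be sketched as follows. The sketch assumes that "brightest" means the highest mean intensity of a part and that odd side lengths are split with integer halving, so the parts are only approximately equal; the image is a list of rows of 0 to 255 integers, and the names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]
Region = Tuple[int, int, int, int]  # (top, left, height, width)


def region_mean(img: Image, region: Region) -> float:
    """Mean intensity of a rectangular region."""
    top, left, h, w = region
    total = sum(img[i][j] for i in range(top, top + h) for j in range(left, left + w))
    return total / (h * w)


def find_eruption_area(img: Image, max_h: int = 3, max_w: int = 4) -> Tuple[Region, float]:
    """Locate the brightest area by repeated quartering.

    The current region is split into (up to) four parts, the part with the
    highest mean intensity is kept, and the split is repeated until the region
    is no larger than max_h x max_w pixels.
    """
    region: Region = (0, 0, len(img), len(img[0]))
    while region[2] > max_h or region[3] > max_w:
        top, left, h, w = region
        h1, w1 = max(h // 2, 1), max(w // 2, 1)
        parts = [
            (top, left, h1, w1),
            (top, left + w1, h1, w - w1),
            (top + h1, left, h - h1, w1),
            (top + h1, left + w1, h - h1, w - w1),
        ]
        # drop empty parts that arise when a side is only one pixel long
        parts = [p for p in parts if p[2] > 0 and p[3] > 0]
        region = max(parts, key=lambda p: region_mean(img, p))
    return region, region_mean(img, region)
```

Each pass shrinks every side longer than one pixel, so the loop always ends; on ties, `max` keeps the first part in reading order.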
The boundary conditions are then defined. In the present case, this means that the two outermost pixel rows at the edges of the image are made equal in intensity. A grayscale morphological closing with a linear structuring element is then applied to these pixel rows and columns, and along each border, the intensity values are replaced by the maximum intensity value of that row or column. This creates a barrier that controls the flow of lava off the image, i.e., the lava does not flow off the image abruptly. The density of the flowing lava is assumed to be constant, i.e., the flow velocity in every direction is determined only by the intensity gradient, which yields Equation (2). The relief that determines the lava flow is the surface built up from the intensity values of the pixels in the image. The formula can be derived from the finite difference method, with the difference that here the states can only take integer values between 0 and 255. The operation can be interpreted as an analogy of flowing lava that has low viscosity, contains no solids and does not erode the surface. Because there is no erosion, the only effect on the original image is that the lava fills the trenches and holes represented by the dark objects and spreads across the image as far as the barrier at the image edges allows. During the cellular automaton operation, the lava spreads out as shown in Fig. 4. At each step, the cellular automaton performs the operation described by the following formula:

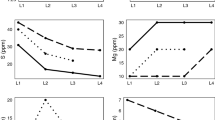

where P is the intensity value of a given pixel, n is the current step of the automaton, i, j are the coordinates of the pixel in the image, and A is the flow constant. The value of A must satisfy the convergence condition of the finite difference method; the proposed value is 0.1. The process described thus far works correctly only if the objects in the image are dark; otherwise, the image must be inverted pixelwise. The matrix phase and the objects can be distinguished by their intensity values and by the fact that the matrix phase is the object with the largest area in contact with the image boundary. As the automaton operates, it continuously fills the trenches and holes formed by the dark objects. The termination condition is that the lava flow no longer changes the intensity surface of the image. If the images to be processed are known and similar, a fixed number of steps can instead be used as the termination condition. When the automaton stops, the resulting correction image reproduces the illumination error. The correction image is then subtracted from the original image, the result is inverted if necessary, and an autocontrast operation is performed. The image no longer shows the error caused by the uneven background (Fig. 4). The autocontrast algorithm finds the exact minimum and maximum intensity values in the image and linearly stretches the pixel intensities to the full value range. Two cellular automata were developed to perform measurements on the images: one is based on ultimate erosion and the other on continuous stochastic dilation of objects. The first is suitable for analyzing the shape and size of objects, and the second for analyzing the arrangement and clustering of objects. The shape recognition algorithm is thus based on a cellular automaton that generates the data for analyzing the shape and size of objects.
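The correction loop described above (integer-valued flow steps, stop when nothing changes, subtract, autocontrast) can be sketched as follows. Equation (2) is not reproduced in this text, so the update rule is an ASSUMED stand-in: an explicit finite-difference step in which each interior pixel exchanges intensity with its 4 neighbours, scaled by the flow constant A. For this stencil the explicit scheme is stable for A ≤ 0.25, which the proposed A = 0.1 satisfies. The sketch also simplifies the barrier to fixed border pixels and does not model the eruption point; objects are assumed dark, and all names are hypothetical.

```python
from typing import List

Image = List[List[int]]


def flow_step(img: Image, a: float = 0.1) -> Image:
    """One explicit finite-difference step (ASSUMED stand-in for equation (2)).

    Interior pixels exchange intensity with their 4 neighbours in proportion
    to the intensity difference; border pixels are held fixed as a barrier.
    States stay integers in 0..255.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            flow = (img[i - 1][j] + img[i + 1][j] + img[i][j - 1] + img[i][j + 1]
                    - 4 * img[i][j])
            out[i][j] = min(255, max(0, round(img[i][j] + a * flow)))
    return out


def estimate_background(img: Image, a: float = 0.1, max_steps: int = 10_000) -> Image:
    """Run the automaton until the intensity surface no longer changes."""
    current = img
    for _ in range(max_steps):
        nxt = flow_step(current, a)
        if nxt == current:
            break
        current = nxt
    return current


def autocontrast(values: List[List[float]]) -> Image:
    """Map the exact minimum..maximum of the image linearly onto 0..255."""
    lo = min(min(row) for row in values)
    hi = max(max(row) for row in values)
    if hi == lo:
        return [[0] * len(row) for row in values]
    return [[round(255 * (v - lo) / (hi - lo)) for v in row] for row in values]


def correct_illumination(img: Image, a: float = 0.1) -> Image:
    """Subtract the estimated background from the original, then autocontrast."""
    background = estimate_background(img, a)
    diff = [[p - b for p, b in zip(prow, brow)] for prow, brow in zip(img, background)]
    return autocontrast(diff)
```

Because intensities are rounded to integers, the flow stops once local differences become small, which is what lets the filled "lava" surface settle while the dark objects stand out after subtraction.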
In this cellular automaton, the objects in the segmented image are first labeled. The image itself serves as the cellular automaton universe, with neutral boundary conditions. The automaton performs an ultimate erosion of the objects, i.e., it erodes the binary image until the objects disappear completely. At each step, the area and perimeter of the objects are registered. For the perimeter, the object pixels that have neighbors belonging to the matrix phase are counted; for the area, the object pixels are counted. Both tasks can thus be performed by simple pixel counting. From the area and perimeter data, the compactness of each object is calculated at each cellular automaton step. Compactness is one of the most widely used basic shape features; we make it considerably more specific by expressing it as a function of the cellular automaton step. Microscopy images of the eutectic phases revealed four basic groups of shapes: (i) lamellar, (ii) vermicular, (iii) compact and (iv) spherical. Note that evaluating rod-like eutectics requires care because of the particular shapes of rod sections. Shape classification cannot be resolved by a single shape attribute, but the number of shape attributes that can be derived from a simple count of pixels is limited. For this reason, the change in compactness throughout the erosion must be followed. The resulting automaton step–compactness function separates the groups mentioned above more clearly, and since these shape functions are given by discrete points, the classification can be solved efficiently using an SVM. The SVM is a binary classifier, whereas four groups must be distinguished; here, the step-wise compactness representation is exploited. As the functions in Fig. 5 show, the plate morphology can be separated from the other three groups in the first step.
The full automaton step–compactness function is evaluated by performing the SVM classification at each step, so that for each step it is known whether the SVM considers the object to be plate-like. These decisions are analyzed as votes, rather than classifying the function as a whole. If most of the votes consider the object to be a plate, the object is lamellar; if not, it is put into a set to be reclassified. If the object is lamellar, the number of steps for total erosion is half the plate thickness in pixels, as shown in Fig. 1. The residual nonplate set is classified by another SVM, which separates vermicular objects from compact and spherical objects. This classification is fully equivalent to the plate case, and the basic SVM parameters are identical. Classification is again performed at each erosion step: if, over the complete erosion, the SVM assigns the object to the vermicular class most of the time, the object is considered vermicular. In this case, too, the thickness of the object is twice the number of steps for total erosion. The last step is to separate the compact and spherical classes, and the algorithm proceeds in the same way as for the previous two shapes: a third SVM classifies the object at each erosion step. Here, a truly binary classification is implemented, i.e., the SVM classifies the eroded versions of the object as either compact or spherical, and the object is assigned to whichever class receives more votes. In either case, the longest chord that can be drawn within the object, i.e., its diameter, can be calculated from the number of steps for total erosion as described above. The classifier is thus a multiclass method built from three SVMs of the same structure, each performing the classification at every erosion step.
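The voting cascade can be sketched independently of how each binary classifier is trained. In this sketch each stage is a hypothetical per-step predicate taking (erosion step, compactness) and returning True for "in class"; an object wins a stage when more than half of its steps vote for the class. Ties in the last stage go to "spherical" here, which is an arbitrary choice of the sketch.

```python
from typing import Callable, Sequence, Tuple

# One per-step binary decision: (erosion step, compactness) -> True if "in class".
StepClassifier = Callable[[int, float], bool]


def wins_vote(series: Sequence[Tuple[int, float]], clf: StepClassifier) -> bool:
    """True if the classifier says 'in class' for more than half of the erosion steps."""
    votes = sum(1 for step, r in series if clf(step, r))
    return votes > len(series) / 2


def classify_morphology(series: Sequence[Tuple[int, float]],
                        is_plate: StepClassifier,
                        is_vermicular: StepClassifier,
                        is_compact: StepClassifier) -> str:
    """Cascade of three binary classifiers, each decided by per-step voting."""
    if wins_vote(series, is_plate):
        return "lamellar"
    if wins_vote(series, is_vermicular):
        return "vermicular"
    # last stage: compact vs spherical by majority (ties -> spherical)
    return "compact" if wins_vote(series, is_compact) else "spherical"
```

In practice each predicate could wrap a fitted scikit-learn `SVC(C=1.0, kernel="sigmoid", gamma=0.02, coef0=10)`, whose sigmoid kernel tanh(γ⟨x, y⟩ + coef0) has the same form as the kernel listed earlier; the threshold rules used in testing are made up.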
The classification results can be determined simply by counting the votes as described above. In each case, the characteristic linear size of the object, either the plate thickness or the diameter depending on the shape, can be calculated from the number of steps required for ultimate erosion. In evaluations, the main interest is not the size of individual objects but the average values over the eutectic as a whole or, in the case of clustering, over all the objects constituting a group. The correctness of these values depends on classification accuracy; this matters for the individual SVMs, but the performance of the classification algorithm as a whole matters much more. For machine learning algorithms, classification accuracy depends on the training, especially on the quality of the learning dataset. During training, we ensured that all classes were adequately represented in the learning database and that the various eutectics were also sufficiently represented. The training set comprised images of 40 objects. As a result, testing the method yielded a classification accuracy of 94.4% (95.6% sensitivity and 98.8% specificity) (Fig. 6). The size determination was also examined on the test dataset, using classic manual measurements of the thickness and diameter of the test objects as a reference. The dimensions measured with the presented procedure agreed with these within ±2%. Both the shape classification and the size determination are strongly influenced by the image resolution, i.e., the magnification of the microscopy image. The stated accuracies apply to objects larger than 10 pixels, which, given the resolution of the imaging device, determines the minimum magnification. The method developed for analyzing the spatial arrangement of objects is based on a stochastic cellular automaton.
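Assuming the 10-pixel limit refers to the characteristic linear size d of an object, it translates directly into a maximum admissible pixel size sf (the scaling factor defined earlier); the 2 µm value is purely illustrative.

```latex
\frac{d}{s_f} \ge 10 \quad\Longrightarrow\quad s_f \le \frac{d}{10},
\qquad \text{e.g. } d = 2\ \mu\mathrm{m} \;\Rightarrow\; s_f \le 0.2\ \mu\mathrm{m/pixel}.
```

The corresponding minimum magnification then follows from the pixel pitch of the camera, which is not given here.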
After the objects in the original binary image are labeled, the boundary conditions are set; the neutral boundary condition used in the cellular automaton procedure is applied. The automaton performs stochastic dilation of the objects at each step. In stochastic dilation, the displacement of the contour depends on a probability value Pg: whether an individual contour point is dilated is decided by comparing a number drawn from a random number generator with Pg. The drawback of classical, deterministic binary dilation is that, over the contour propagation steps, the shape of the dilated object becomes distorted according to the shape of the applied kernel. This distortion can be reduced by a properly selected Pg value; the recommended value in this method is Pg = 0.5. The dilation continues until the objects touch. When two or more objects come together, the dilation step of the merge and the labels of the merged objects are stored in a database. Merged objects are treated as one object and are identified by the lowest label among them. Thus, as the process continues, fewer and fewer objects occupy an ever-larger area of the image, until only one large object remains, at which point the dilation stops. The spatial arrangement of the objects, the distances between them and, in the case of clustering, the groups and the number of groups can be obtained by analyzing the database that registers these merging events. The easiest way to do this is to construct a dendrogram of the kind used in agglomerative clustering procedures, which can be done clearly and automatically from the database (Fig. 7b). The cluster analysis literature also offers many possibilities for analyzing dendrograms [55].
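A minimal sketch of the stochastic dilation automaton with merge logging follows. Assumptions, all of them choices of the sketch rather than details confirmed by the text: a background pixel with at least one labeled Moore neighbour joins an object with probability Pg and takes the lowest neighbouring label; "touching" means Moore adjacency; pixels outside the grid are simply ignored as a stand-in for the neutral boundary condition. Merged groups keep their lowest label, as described above. Names are hypothetical.

```python
import random
from typing import List, Tuple

Grid = List[List[int]]
Event = Tuple[int, int, List[int]]  # (step, surviving label, absorbed labels)

MOORE = [(di, dj) for di in (-1, 0, 1) for dj in (-1, 0, 1) if (di, dj) != (0, 0)]


def _neighbours(grid: Grid, i: int, j: int) -> List[int]:
    """Non-zero labels in the Moore neighbourhood; pixels outside the grid are ignored."""
    h, w = len(grid), len(grid[0])
    return [grid[i + di][j + dj] for di, dj in MOORE
            if 0 <= i + di < h and 0 <= j + dj < w and grid[i + di][j + dj]]


def stochastic_dilation(labels: Grid, pg: float = 0.5, seed: int = 0) -> List[Event]:
    """Grow labelled objects stochastically and log every merge event.

    labels: 0 marks the matrix (background), 1..k mark the objects.
    """
    if not 0 < pg <= 1:
        raise ValueError("pg must be in (0, 1]")
    rng = random.Random(seed)
    grid = [row[:] for row in labels]
    h, w = len(grid), len(grid[0])
    events: List[Event] = []
    step = 0
    while len({v for row in grid for v in row if v}) > 1:
        step += 1
        # 1) dilation: a background pixel touching an object joins it with probability pg
        new = [row[:] for row in grid]
        for i in range(h):
            for j in range(w):
                if grid[i][j] == 0:
                    nbrs = _neighbours(grid, i, j)
                    if nbrs and rng.random() < pg:
                        new[i][j] = min(nbrs)
        grid = new
        # 2) merging: touching objects join; each group keeps its lowest label (union-find)
        parent = {}

        def find(x: int) -> int:
            while parent.get(x, x) != x:
                x = parent[x]
            return x

        for i in range(h):
            for j in range(w):
                if grid[i][j]:
                    for other in _neighbours(grid, i, j):
                        ra, rb = find(grid[i][j]), find(other)
                        if ra != rb:
                            parent[max(ra, rb)] = min(ra, rb)
        if parent:
            groups = {}
            for lab in sorted({v for row in grid for v in row if v}):
                groups.setdefault(find(lab), []).append(lab)
            for root, members in groups.items():
                if len(members) > 1:
                    events.append((step, root, [m for m in members if m != root]))
            grid = [[find(v) if v else 0 for v in row] for row in grid]
    return events
```

With Pg = 1 the dilation becomes deterministic: `stochastic_dilation([[1, 0, 0, 0, 0, 0, 2]], pg=1.0)` returns `[(3, 1, [2])]`, and twice the merging step gives 6 pixels, the separation of these two single-pixel objects.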
The first important question is whether clustering can be detected in the image (Fig. 7a). The recommended method for this purpose is to plot the number of branches of the dendrogram, i.e., the number of merges occurring in a given step, as a function of the dilation step. When there is no clustering in the microstructure and the objects are located randomly in the image, this function decreases steadily with the dilation steps. If, however, its discrete derivative shows a sudden increase at a given step, object clustering can be identified. With this analysis, all typical clustering configurations can be detected; if more than one can be identified, the user can choose the one appropriate for the analysis. The same function can also provide information about the regularity of the arrangement. Knowledge of clustering is important when determining the distance between objects, since twice the number of dilation steps at which two objects merge gives the shortest distance between their contours. This value is important when analyzing or estimating the mechanical properties of eutectics. At this stage, the characteristic linear size of the objects is also known, which makes it possible to calculate distances according to other distance definitions. In the current solution, the distance is measured as the shortest distance between the contours. This calculation was verified against manual measurements on similarly processed images, and the results again agreed within ±2%. The same resolution and magnification requirements apply as for the shape and characteristic linear size analysis.
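The merge-count analysis can be sketched from a log of merge events, each recorded as (step, surviving label, absorbed labels). Counting each absorbed label as one merge and flagging steps where the count rises by at least `min_jump` over the previous step are choices of this sketch; `min_jump` is a hypothetical threshold, since the text only speaks of a "sudden" increase. The distance helper applies the rule stated above: twice the merging step, scaled by sf.

```python
from collections import Counter
from typing import List, Sequence, Tuple

Event = Tuple[int, int, List[int]]  # (step, surviving label, absorbed labels)


def merges_per_step(events: Sequence[Event]) -> List[int]:
    """Number of merges at steps 1..last merge step (index 0 is step 1)."""
    counts: Counter = Counter()
    for step, _root, absorbed in events:
        counts[step] += len(absorbed)
    last = max(counts, default=0)
    return [counts[s] for s in range(1, last + 1)]


def clustering_steps(counts: Sequence[int], min_jump: int = 2) -> List[int]:
    """Steps at which the merge count rises by at least min_jump over the previous step."""
    return [k + 1 for k in range(1, len(counts)) if counts[k] - counts[k - 1] >= min_jump]


def contour_distance(merge_step: int, sf: float = 1.0) -> float:
    """Shortest contour-to-contour distance: twice the merging step, scaled by sf."""
    return 2 * merge_step * sf
```

A flagged step marks where merging accelerates, i.e., where separate groups start to join; the distances read at that step characterize the spacing between groups rather than within them.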
The distance between two individually selected objects, however, is not relevant for describing the eutectic microstructure. When clustering is present, besides the average over all merges, the average characteristics of the clusters and the distances between the clusters may also be important. These values can be easily extracted both from the dendrogram and from the underlying database. For this analysis, the user needs to be familiar with the eutectic microstructure under investigation.
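Extracting cluster membership and within- and between-cluster distances from the merge database can be sketched as below. This assumes the same hypothetical (step, kept_label, merged_label) record format, a cut step chosen by the user (for example, a step flagged by the clustering test), and the 2 x step x pixel size distance rule; it is an illustration, not the authors' code.

```python
def clusters_at(events, labels, cut_step):
    """Group object labels into clusters using only merges up to and including cut_step."""
    parent = {lab: lab for lab in labels}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the trees shallow
            x = parent[x]
        return x

    for step, keep, drop in events:
        if step <= cut_step:
            parent[find(drop)] = find(keep)
    groups = {}
    for lab in labels:
        groups.setdefault(find(lab), []).append(lab)
    return sorted(sorted(g) for g in groups.values())


def mean_distances(events, cut_step, pixel_size):
    """Mean contour distance of merges within clusters (<= cut) and between clusters (> cut)."""
    def _mean(values):
        return sum(values) / len(values) if values else float("nan")

    within = [2 * s * pixel_size for s, _, _ in events if s <= cut_step]
    between = [2 * s * pixel_size for s, _, _ in events if s > cut_step]
    return _mean(within), _mean(between)
```

For the made-up records `[(1, 1, 2), (1, 3, 4), (1, 5, 6), (2, 1, 3), (5, 1, 5), (5, 7, 8)]` over objects 1 to 8 and a cut at step 2, `clusters_at` yields `[[1, 2, 3, 4], [5, 6], [7], [8]]`, and `mean_distances(..., 2, 1.0)` gives 2.5 pixels within clusters and 10.0 pixels between them.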

Automatic background correction using the modified lava-flow algorithm. (a) Input image of the correction algorithm, with an uneven background; the red line indicates the cross section. (b) Corrected gray image after autocontrast was applied; the red line indicates the cross section. (c) Intensity values along the marked cross section of the input image; the slope caused by uneven background illumination can be observed. (d) Intensity values along the marked cross section of the output image; the slope has been eliminated. (e) Image segmented by the ITER algorithm from the input image; the uneven background prevents automatic segmentation algorithms from working correctly. (f) Image segmented by the ITER algorithm using the corrected image as input; the result is a binary image usable by the CA algorithms

Input data of the SVM classifiers. (a) Typical data points of the circularity values of the different morphologies; each morphology shows different circularity values during erosion, which the SVM classifiers can exploit to determine the type of morphology. (b) Votes are counted in each CA time step, and the type of morphology is determined by the total number of votes; the blue field indicates the data points belonging to the determined class. In the first step, an SVM separates lamellar and non-lamellar objects. (c) In the second step, another SVM separates vermicular and non-vermicular objects. (d) In the following step, a third SVM separates compact and spheroidal objects
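The three-stage cascade with per-step voting described in this caption can be sketched as below. In the method itself the three binary classifiers are trained SVMs; here they are passed in as plain functions, a single circularity value per erosion step is assumed as the feature, and the tie-breaking rule is an assumption. The threshold functions in the usage example are invented placeholders, not values from this work.

```python
from collections import Counter
from typing import Callable, Iterable

Classifier = Callable[[float], bool]


def classify_step(feature: float, is_lamellar: Classifier,
                  is_vermicular: Classifier, is_spheroidal: Classifier) -> str:
    """Cascade for one CA time step: lamellar? -> vermicular? -> spheroidal or compact."""
    if is_lamellar(feature):
        return "lamellar"
    if is_vermicular(feature):
        return "vermicular"
    return "spheroidal" if is_spheroidal(feature) else "compact"


def classify_object(features: Iterable[float], is_lamellar: Classifier,
                    is_vermicular: Classifier, is_spheroidal: Classifier) -> str:
    """Cast one vote per erosion (CA) step and return the class with the most votes."""
    votes = Counter(classify_step(f, is_lamellar, is_vermicular, is_spheroidal)
                    for f in features)
    # among equal counts, the class voted first wins (an assumed tie-break)
    return votes.most_common(1)[0][0]
```

With placeholder rules `lambda c: c < 0.3`, `lambda c: c < 0.6` and `lambda c: c > 0.85`, an object whose circularity over four erosion steps is `[0.9, 0.88, 0.7, 0.95]` collects three "spheroidal" votes and one "compact" vote, so it is classified as spheroidal.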

Clusters in spheroidal graphite cast iron. (a) Objects with the same color belong to the same group; each color represents a separate cluster. The state shown is the 53rd step of the CA. Object identifiers are indicated to make the dendrogram easier to follow. (b) The dendrogram drawn from the data of the second CA; colored rectangles indicate the different clusters in CA step 53

As shown in the workflow in Fig. 3, the first stage is image processing, in which the uneven background illumination is corrected and the eutectic phases are then segmented. The first cellular automaton determines the characteristic size of the phases and prepares the phase measurement data for classification. The trained SVM classifiers process the dataset and assign each object to one of the shape classes: spherical, compact, vermicular or lamellar. Once the shape is known, the characteristic dimension can be assigned to the given phases: the diameter for spherical and compact phases, and the lamella thickness for vermicular and lamellar phases. The second cellular automaton then determines the distance between the phases, which here is the smallest distance that can be measured between the contours of adjacent phases. These values are used to construct a dendrogram. Up to this point, the operation is fully automatic and requires no user intervention. When evaluating the dendrogram, the algorithm also makes a suggestion, but the user can re-analyze it to examine the clustering in detail. The dendrogram gives not only average distances but also distances within groups. Several eutectic microstructures, with the phases classified by shape, are shown in Fig. 6. The analysis of the Al-Ni eutectic yields mostly rod objects (classified as spherical), whereas in the Al-Cu eutectic almost all phases are lamellar. For the other microstructures, the class assigned to each object can also be seen. For example, the rod diameter in the Al-Ni eutectic is 0.47 µm, while the lamella thickness in the Al-Cu eutectic is 0.335 µm. When both spherical and vermicular phases are present in the image, an average value can only be interpreted if the shape of the phases is known, because the average of 21.5 µm is a diameter for the spherical phases but a thickness for the vermicular phases.
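The size step of this workflow (counting erosion steps until an object disappears, then reading the result as a diameter or a thickness depending on the shape class) can be sketched as follows. This is a minimal illustration assuming a 4-neighbourhood binary erosion applied to one segmented object and the rule stated in the Conclusions that the characteristic size is twice the number of erosion steps; the names and the pixel size are hypothetical.

```python
NEIGHBOURS = ((-1, 0), (1, 0), (0, -1), (0, 1))  # assumed 4-neighbourhood

# characteristic dimension per shape class, as described in the text
DIMENSION = {"spherical": "diameter", "compact": "diameter",
             "vermicular": "thickness", "lamellar": "thickness"}


def erosion_steps(mask):
    """Count binary erosions (4-neighbourhood) until a single object disappears."""
    cells = {(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v}
    steps = 0
    while cells:
        # a pixel survives only if all four neighbours still belong to the object
        cells = {(y, x) for (y, x) in cells
                 if all((y + dy, x + dx) in cells for dy, dx in NEIGHBOURS)}
        steps += 1
    return steps


def characteristic_size(mask, shape_class, pixel_size):
    """Return (dimension name, 2 x erosion steps x pixel size) for one object."""
    return DIMENSION[shape_class], 2 * erosion_steps(mask) * pixel_size
```

A plate 4 pixels thick disappears after 2 erosions, so with an assumed 0.1 µm pixel its thickness is reported as 0.4 µm. Because of pixel discretization, this simple sketch reports one pixel more than the true width for odd widths (a 5-pixel square needs 3 erosions, giving 6 pixels).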
Knowing the shape, it is also possible to specify that the average diameter of the spherical graphite phases is 23.2 µm, while the average thickness of the vermicular phases is 15.5 µm. When distances are reported, their definition must be kept in mind: it is not the distance between characteristic points, such as centroids, but the minimum distance between contours. This value is preferred in mechanical models. For example, it is 1.22 µm for the Al-Ni eutectic structure and 0.24 µm for the Al-Cu eutectic structure. In an ordered structure, this result is easy to interpret, but interpreting the value of 86.6 µm in a lamellar graphite structure requires taking the morphology and the arrangement into account; here, in addition to the micrograph itself, the dendrogram is of great help. The values provided by the method must, of course, be validated. In specific cases, this can be done by comparing the above values with classical metallographic measurements on micrographs, with a proper understanding of the dimensions and shapes involved. The method measures linear dimensions in two cases: the contour is measured to determine the shape, and the distance is determined as defined above. By validating these two dimensions, the algorithm can therefore be verified in general. For the dataset used for shape classification, the contour lengths of the phases and the distances between the phases were also measured using the open-source ImageJ software. The relationship between the automaton data and the ImageJ data is shown in Fig. 8. The relationship is linear over the size range, showing a strong correlation between the linear sizes measured in the two ways for both contour lengths and distances. However, the sizes measured in the two ways differ systematically: the slopes of the lines are 1.13 for the distance measurement and 1.21 for the perimeter measurement. This difference arises from the different interpretations of distance in the two methods.
For this reason, Fig. 2 records how linear size is defined in the automata, which differs slightly from the interpretation used by ImageJ. Given the strong correlation, this difference is not a problem in a qualification procedure, and when different measurements are compared, recalculation based on this analysis is straightforward. The presented method is suitable for characterizing eutectics with the above morphologies, that is, for determining the size, shape, arrangement and spacing of the eutectic phases. As intended, the method does this without user intervention for OM and SEM images; user intervention is required only for the analysis of clustering. The method has been tested on micrographs of aluminum alloys and graphite cast irons, where the analyses were performed successfully.
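The comparison with ImageJ and the recalculation between the two size definitions can be sketched as an ordinary least-squares fit. This assumes automaton values on the x axis and reference (ImageJ) values on the y axis; the axis assignment of Fig. 8 is not stated here, and the data in the usage example are synthetic, not the measurements behind the slopes of 1.13 and 1.21.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = intercept + slope * x; also returns Pearson's r."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / (sxx * syy) ** 0.5  # strength of the linear relationship
    return slope, intercept, r


def to_reference_scale(value, slope, intercept=0.0):
    """Recalculate an automaton measurement onto the reference (e.g. ImageJ) scale."""
    return intercept + slope * value
```

For synthetic data that are exactly proportional, for example `x = [1, 2, 3, 4, 5]` and `y = [1.13 * v for v in x]`, the fit returns a slope of 1.13, an intercept of about 0 and r of about 1; a distance of 10 on the automaton scale then maps to about 11.3 on the reference scale.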

Conclusions

In terms of microscopy image analysis, eutectic microstructures are essentially similar, regardless of the morphology of the phases, the structure of the eutectic, or whether they are examined in hypo- or hypereutectic alloys. This makes it possible to develop a generally usable method for analyzing micrographs of eutectic microstructures. There are many possible solutions to this problem. Our aim in the development was to use and create procedures and algorithms that match the capabilities of the digital electronic tools used in imaging as closely as possible. The digital image lends itself to the cellular automata method, which is frequently and effectively used in several areas of image analysis. A workflow for the analysis of eutectic microstructures was therefore built so that cellular automata can be used in most steps, with the necessary measurement and analysis procedures also fitting the capabilities of these tools. The goal was an effective method that can be used as widely as possible and provides as much detail as possible. The method includes an algorithm for a posteriori background correction of OM images. It applies a modified lava-flow automaton, whose results can also be derived from a numerical procedure used in other flow problems. The automaton estimates the background image, which is then used to make the correction. Among automatic segmentation procedures, parameter-free solutions can be successfully applied to the corrected images; the ITER algorithm proved to be the best for producing binary images of eutectic microstructures. With a process based on ultimate erosion, the phases in the image can be divided into four predefined shape classes. Once the shape is known, the characteristic linear size of an object, in pixels, is twice the number of erosion steps required for the object to disappear.
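For readers unfamiliar with the segmentation step, a minimal sketch of iterative selection thresholding is given below, assuming that ITER refers to the iterative selection method of Ridler and Calvard [53]. It works on a flat list of gray values for clarity (practical implementations usually iterate on the histogram), and the tolerance and iteration limit are illustrative assumptions.

```python
def iter_threshold(values, tol=0.5, max_iter=100):
    """Iterative selection: T is set to the mean of the two class means until it settles."""
    t = sum(values) / len(values)  # start from the global mean gray value
    for _ in range(max_iter):
        low = [v for v in values if v <= t]
        high = [v for v in values if v > t]
        if not low or not high:  # single-valued image: nothing to separate
            return t
        new_t = (sum(low) / len(low) + sum(high) / len(high)) / 2
        if abs(new_t - t) < tol:
            return new_t
        t = new_t
    return t
```

For the gray values `[0, 0, 0, 10, 100, 110, 120]`, the threshold moves from the global mean (about 48.6) to 56.25 and stays there; pixels above the threshold then form one phase of the binary image and the rest the other, with the assignment depending on whether the phase of interest appears bright or dark.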
A method based on stochastic dilation was developed to investigate and determine the clustering of eutectic phases. The merging of the objects is recorded as a function of the dilation steps, and a dendrogram is derived from these records. Dendrogram analysis provides the average distance, the possible clusters and the distances within these clusters. The method was tested on regular and irregular eutectics with plate, rod, spherical and vermicular morphologies. OM and SEM images of Al-Cu, Al-Ni, Al-Si and cast iron microstructures were used for testing. In each case, the algorithm proved useful for describing the eutectic microstructure.

References

J. LLorca, V.M. Orera, Directionally solidified eutectic ceramic oxides. Progr. Mater. Sci. 51, 711–809 (2006)

P. Bárczy, V. Mertinger, Z. Gácsi, N. Babcsán, M. Meier, Melt motions during unidirectional solidification of Al-Al3Ni eutectics. Mater. Sci. Eng. A. 173(1–2), 137–141 (1993)

K. Al-Omari, A. Roósz, A. Rónaföldi, Z. Veres, Effect of forced melt flow on Al–Si eutectic-alloy microstructures. Crystals. 12(5), 731 (2022)

M. Tokár, G. Fegyverneki, V. Mertinger, Analysis of the antimony and strontium cross-effects in Al-Si foundry alloys. Mater. Sci. Forum. 790–791, 464–469 (2014)

M. Zimmermann, M. Carrard, W. Kurz, Rapid Solidification of Al-Cu eutectic alloy by LASER remelting. Acta metall. 37(12), 3305–3313 (1989)

D.M. Stefanescu, Science and Engineering of Casting Solidification (Springer, Berlin, 2015)

G. Sathiyaseelan, C. Bhagyanathan, P. Srinath, J. Gottmyers Melwyn, Enhancing the strength and structure of Al–Si alloys for biomedical applications through the addition of Sr and Zr grain refiners on secondary AlSi7Mg aluminum alloys. Chem. Phys. Impact. 8, 100557 (2024)

X. Li, Z. Ren, Y. Fautrelle, Effect of a high axial magnetic field on the microstructure in a directionally solidified Al-Al2Cu eutectic alloy. Acta Mater. 54(20), 5349–5360 (2006)

M. Glazoff, A.V. Khvan, V. Zolotorevsky, N. Belov, A. Dinsdale, Casting Aluminum Alloys: Their Physical and Mechanical Metallurgy (Elsevier, Rotterdam, 2007)

G. Gyarmati, L. Bogoly, M. Stawarz, G. Fegyverneki, Z. Kéri, M. Tokár, T. Mende, Grain refiner settling and its effect on the melt quality of aluminum casting alloys. Materials. 15(21), 7679 (2022)

R. Elliott, Cast Iron Technology (Butterworth-Heinemann, 1988)

P.-M. Geffroy, M. Lakehal, J. Goñi, E. Beaugnon, J.-F. Silvain, Thermal and mechanical behaviour of grey cast iron and ductile iron castings using magnetic molding and lost foam processes. J. Mater. Process. Technol. 209(8), 4103–4111 (2009)

R. Elliott, Eutectic Solidification Processing (Butterworth-Heinemann, 1983)

A. Roósz, A. Rónaföldi, Y. Li, N. Mangelinck-Noel, G. Zimmermann, H. Nguyen-Thi, M. Svéda, Z. Veres, Microstructure analysis of Al-7 wt% Si alloy solidified on earth compared to similar experiments in microgravity. Crystals. 12(9), 1226 (2022)

R. Kakitani, R. Oliveira, R.V. Reyes, V. Adilson, A.V. Rodrigues, F. Bertelli, A. Garcia, J.E. Spinelli, N. Cheung, Metal/mold thermal conductance affecting ultrafine scale microstructures in aluminum eutectic alloys. Case Stud. Therm. Eng. 26, 101144 (2021)

M. Plapp, S. Bottin-Rousseau, G. Faivre, S. Akamatsu, Eutectic solidification patterns: interest of microgravity environment. C. R. Mecanique. 345, 56–65 (2017)

B. Dutta, O. Pompe, M. Rettenmayr, Quantitative image analysis for evaluation of eutectic fractions in as cast microstructures. Mater. Sci. Technol. 20, 1011 (2004)

J.C. Russ, R.T. Dehoff, Practical Stereology (Springer, New York, 2012)

G.F. Vander Voort, A. Roósz, Measurement of the interlamellar spacing of pearlite. Metallography. 17(1), 1–17 (1984)

R. Zhang, C. Zou, Z. Wei, H. Wang, Effect of high pressure and temperature on the evolution of Si phase and eutectic spacing in Al-20Si alloys. Crystals. 11, 705 (2021)

J.M. Liu, Z.C. Wu, Z.G. Liu, Response dynamics of the lamellar spacing for Al-Si eutectic during directional solidification. J. Mater. Sci. 30, 5755–5762 (1995)

K. Al-Omari, A. Rónaföldi, Z. Veres, Complex characterization of irregular eutectic structure. Mater. Sci. Eng. 45(1), 171–181 (2020)

M. Durand-Charre, Microstructure of Steel and Cast Irons (Springer, Berlin, 2004)

X. Chen, J. Xu, H. Hu, H. Mohrbacher, M. Kang, W. Zhang, A. Guo, Q. Zhai, Effects of niobium addition on microstructure and tensile behavior of as-cast ductile iron. Mater. Sci. Eng. A. 688, 416–428 (2017)

U. Tewary, D. Paul, H.K. Mehtani, S. Bhagavath, A. Alankar, G. Mohapatra, S.S. Sahay, A.S. Panwar, S. Karagadde, I. Samajdar, The origin of graphite morphology in cast iron. Acta Mater. 226, 117660 (2022)

D.M. Stefanescu, G. Alonso, P. Larrañaga et al., A comparative study of graphite growth in cast iron and in analogous systems. Inter. Metalcast. 12, 722–752 (2018)

K. Alrfou, A. Kordijazi, P. Rohatgi, T. Zhao, Synergy of unsupervised and supervised machine learning methods for the segmentation of the graphite particles in the microstructure of ductile iron. Mater. Today Commun. 30, 103174 (2022)

A. Scozzafava, L. Tomesani, A. Zucchelli, Image analysis automation of spheroidal cast iron. J. Mater. Process. Technol. 153–154, 853–859 (2004)

Z. Veres, A. Roósz, J. Kovács, G. Szakács, Effect of rotating magnetic field on solidified structure of Al-Al3Ni eutectic, in Third International Conference on Advances in Solidification Processes (ICASP-3) Aachen. ed. by Z. Gerhard, R. Lorenz (IOP Publishing Ltd, UK, 2012), pp.101–106

Z. Veres, A. Rónaföldi, J. Kovács, A. Roósz, Periodically changing rod distance in unidirectional solidified Al-Al3Ni eutectic. J. Cryst. Growth. 506, 127–130 (2019)

K. Bortnyik, P. Barkóczy, Examination of clustering in eutectic microstructure. Arch. Metall. Mater. 62(2B), 1155–1159 (2017)

J.A. Kalomiros, J. Lygouras, Design and evaluation of a hardware/software FPGA-based system for fast image processing. Microprocess. Microsyst. 32(2), 95–106 (2008)

F.Y. Shih, Image Processing and Mathematical Morphology: Fundamentals and Applications (CRC Press, 2017)

T. Gramß, S. Bornholdt, M. Groß, M. Mitchell, T. Pellizzari, Non-Standard Computation (Wiley-VCH, 2000)

P. Rosin, A. Adamatzky, X. Sun, Cellular automata in image processing and geometry (Springer, Berlin, 2016)

T. Kumar, G. Sahoo, A novel method of edge detection using cellular automata. Int. J. Comput. Appl. 9(4), 38–44 (2010)

A.R. Khan, P.P. Choudhury, K. Dihidar, S. Mitra, P. Sakar, VLSI architecture of a cellular automata machine. Comput. Math. Applic. 33(5), 79–94 (1997)

M.D. White, A. Tarakanov, P.J. Withers, C.P. Race, K.J.H. Law, Digital fingerprinting of microstructures. Comput. Mater. Sci. 218, 111985 (2023)

M. Seleznev, K.Y. Wong, D. Stoyan, A. Weidner, H. Biermann, Cluster detection of non-metallic inclusions in 42CrMo4 steel. Steel Res. Int. 89, 1800216 (2018)

M. Rusanovsky, O. Beeri, G. Oren, An end-to-end computer vision methodology for quantitative metallography. Sci. Rep. 12, 4776 (2022)

F.M.G. da Otávio, P. Sidnei, Automatic classification of graphite in cast iron. Microsc. Microanal. 11(4), 363–371 (2005)

A. Romanowska-Pawliczek, A. Siwek, M. Głowacki, M. Warmuzek, Image recognition, identification and classification algorithms in cast alloys microstructure analysis, in IMETI 2011: Proc. of 4th International Multi-Conference on Engineering and Technological Innovation, Orlando, Florida, USA, vol. 2 (2011), pp. 56–61

F. Iacoviello, D. Iacoviello, V. Di Cocco, A. De Santis, L. D’Agostino, Classification of ductile cast iron specimens based on image analysis and support vector machine. Procedia Structural Integrity. 3, 283–290 (2017)

S. Amelinckx, D. van Dyck, J. van Landuyt, G. van Tendeloo, Handbook of Microscopy (Wiley-VCH, 1997)

N. Dey, Uneven illumination correction of digital images: a survey of the state-of-the-art. Optik. 183, 483–495 (2019)

J. Sauvola, M. Pietikäinen, Adaptive document image binarization. Pattern Recogn. 33(2), 225–236 (2000)

C.H. Li, P.K.S. Tam, An iterative algorithm for minimum cross entropy thresholding. Pattern Recogn. Lett. 18(8), 771–776 (1998)

F. Piccinini, E. Lucarelli, A. Gherardi, A. Bevilacqua, Multi-image based method to correct vignetting effect in light microscopy images. J. Microsc. 248, 6–22 (2012)

T. Peng, K. Thorn, T. Schroeder et al., A BaSiC tool for background and shading correction of optical microscopy images. Nat. Commun. 8, 14836 (2017)

G.M. Crisci, D. Gregorio, O. Pindaro, G.A. Ranieri, Lava flow simulation by a discrete cellular model: first implementation. Int. J. Model. Simul. 6(4), 137–140 (1986)

D. Barca, G.M. Crisci, S. De Gregorio, F. Nicoletta, Cellular automata for simulating lava flows: A method and examples of the Etnean eruptions. Transp. Theor. Stat. Phys. 23(1–3), 195–232 (1994)

M. Sezgin, B. Sankur, Survey over image thresholding techniques and quantitative performance evaluation. J. Electron. Imaging. 13(1), 146–165 (2004)

T.W. Ridler, S. Calvard, Picture thresholding using an iterative selection method. IEEE Trans. Syst. Man Cybernet. SMC. 8(8), 630–632 (1978)

H.J. Trussell, Comments on picture thresholding using an iterative selection method. IEEE Trans. Syst. Man Cybernet. 9(5), 311 (1979)

B.S. Everitt, S. Landau, M. Leese, D. Stahl, Cluster analysis (Wiley, 2011)

Funding

Open access funding provided by University of Miskolc.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This invited article is part of a special topical focus in the journal Metallography, Microstructure, and Analysis on Quantitative Metallography and Microstructure Modeling.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bortnyik, K., Gyöngyösi, S. & Barkóczy, P. Microstructural Characterization of Eutectics using Digital Image Analysis. Metallogr. Microstruct. Anal. (2024). https://doi.org/10.1007/s13632-024-01112-y

DOI: https://doi.org/10.1007/s13632-024-01112-y