Abstract

In this work the Monte Carlo method, introduced recently by the authors for orders of differentiation between zero and one, is further extended to differentiation of orders higher than one. Two approaches are developed. The first approach is based on interpreting the coefficients of the Grünwald–Letnikov fractional differences as so-called signed probabilities, which in the case of orders higher than one can be negative or positive. We demonstrate how this situation can be processed and used for computations. The second approach uses the Monte Carlo method for orders between zero and one and the semi-group property of fractional-order differences. Both methods have been implemented in MATLAB and illustrated by examples of fractional-order differentiation of several functions that typically appear in applications. The computational results of both methods are in mutual agreement and conform with the exact fractional-order derivatives of the functions used in the examples.


1 Introduction

This paper is a continuation of our paper [8], where the Monte Carlo method for fractional differentiation was introduced and used for the approximation and evaluation of the Grünwald–Letnikov fractional derivative of order \(0<\alpha <1\). The goal of the present work is the extension of the Monte Carlo method for fractional-order differentiation to higher orders.

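Let us recall that the Grünwald–Letnikov fractional derivative is a non-local operator on \(L_1({\mathbb {R}})\) defined as [10]

\[ D^{\alpha } f(t) = \lim _{h \rightarrow 0} A_h^{\alpha } f(t), \qquad \alpha > 0, \qquad (1.1) \]

where

\[ A_h^{\alpha } f(t) = \frac{1}{h^{\alpha }} \sum _{k=0}^{\infty } \gamma (\alpha ,k) \, f(t-kh), \qquad \gamma (\alpha ,k) = (-1)^k \left( {\begin{array}{c}\alpha \\ k\end{array}}\right) . \qquad (1.2) \]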

If \(f(t)=0\) for \(t < 0\), the fractional-order difference (1.2) can be used for numerical evaluation of the Grünwald–Letnikov fractional-order derivative, the Riemann–Liouville fractional derivative, and the Caputo fractional derivative when it is equivalent to the Riemann–Liouville one [10].
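When \(f(t)=0\) for \(t<0\), the sum in the fractional-order difference (1.2) has only finitely many nonzero terms, so it can be evaluated directly and used as a deterministic reference for the Monte Carlo estimators. The following Python sketch assumes the standard Grünwald–Letnikov coefficients \(\gamma (\alpha ,k) = (-1)^k \left( {\begin{array}{c}\alpha \\ k\end{array}}\right) \), which is consistent with \(w_1 = \gamma (\alpha ,1) = -\alpha \) used in Section 3.1. The names `gl_weights`, `gl_difference`, and `square` are illustrative and are not taken from the authors' MATLAB toolbox [13].

```python
import math


def gl_weights(alpha, n_terms):
    """Return [gamma(alpha, 0), ..., gamma(alpha, n_terms)].

    Assumes gamma(alpha, k) = (-1)^k * binom(alpha, k), computed with the
    recurrence gamma_k = gamma_{k-1} * (1 - (alpha + 1) / k).
    """
    w = [1.0]
    for k in range(1, n_terms + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / k))
    return w


def gl_difference(f, t, h, alpha):
    """Evaluate h^(-alpha) * sum_k gamma(alpha, k) * f(t - k*h).

    f is assumed to vanish for negative arguments, so the sum is cut off
    at the last grid point with t - k*h >= 0.
    """
    n_terms = int(math.floor(t / h + 1e-9))
    w = gl_weights(alpha, n_terms)
    total = sum(w[k] * f(t - k * h) for k in range(n_terms + 1))
    return total / h ** alpha


def square(t):
    """Example function: t^2 for t >= 0 and zero otherwise."""
    return t * t if t >= 0.0 else 0.0


if __name__ == "__main__":
    # For alpha = 2 the weights reduce to the second-difference stencil
    print(gl_weights(2.0, 4))
    # Order 1.7 difference of t^2 at t = 1 with step h = 0.001
    print(gl_difference(square, 1.0, 1e-3, 1.7))
```

For integer \(\alpha \) the recurrence terminates and the classical backward differences are recovered; for non-integer \(\alpha \) all coefficients are nonzero, which is what makes the operator non-local.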

2 A bit of history: signed probabilities and fractions of a coin

Since for the coefficients \(w_k = \gamma (\alpha ,k)\), \(k = 1, 2, \ldots \), we have [8, 9],

we can look at the coefficients \(w_k\) as probabilities. However, the coefficients \(w_k = \gamma (\alpha ,k)\) are not always all positive; in such situations we can consider them as signed probabilities, which can be positive or negative, while preserving the property (2.1).

The notion of negative probability goes back to Wigner’s 1932 remark [16] that certain expressions

“...cannot be really interpreted as the simultaneous probability for coordinates and momenta, as is clear from the fact, that it may take negative values. But of course this must not hinder the use of it in calculations as an auxiliary function which obeys many relations we would expect from such a probability.”

In 1942, Dirac [4], who used negative energy and negative probabilities in quantum mechanics, emphasized that negative energy states occur with negative probability and positive energy states occur with positive probability, and that it is possible to develop a theory that would allow its application “to a hypothetical world in which the initial probability of certain states is negative, but transition probabilities calculated for this hypothetical world are found to be always positive”. He concluded that

“Negative energies and probabilities should not be considered as nonsense. They are well-defined concepts mathematically, like a negative sum of money, since the equations which express the important properties of energies and probabilities can still be used when they are negative. Thus negative energies and probabilities should be considered simply as things which do not appear in experimental results.”

It appears that Dirac’s paper directly motivated Bartlett in 1945 to introduce signed probabilities [1], and one of the important consequences of allowing negative probabilities was that

“...a negative probability implies automatically a complementary probability greater than unity...”

This idea was also elaborated by Feynman [6] in 1987. Similarly to Bartlett, he mentioned that using negative probabilities leads to simultaneously allowing probabilities greater than one, but “probability greater than unity presents no problem different from that of negative probabilities”, because those are used in intermediate calculations and the final result is always expressed in positive numbers between 0 and 1.

This development of the idea of allowing probabilities to be negative as well as positive leads naturally to the algebraic theory of probability, see [14]. As quoted above, negative probabilities can be used in the intermediate computations, while the final result must be physical (that is, positive and measurable).

This is similar to using, say, negative resistors or capacitors in electrical circuits: there are no passive elements having negative resistance, but it is possible to create circuits exhibiting negative resistances, negative capacitances, and negative inductances. This was pointed out by Bode in his classical book [2, Chapter IX]. Such circuits are called negative-impedance converters ([5, 12]) and are based on using operational amplifiers. For example, the negative resistance of −10 k\(\varOmega \) means that if such an element is connected in series with a classical 20 k\(\varOmega \) resistor, then the resistance of the resulting connection is 10 k\(\varOmega \).

A beautiful example of the interpretation of negative probabilities was developed by Székely [15], who introduced half-coins as random variables that take the values n with signed probabilities: positive for odd values of n and negative for even values. A fair coin is a random variable X that takes the values 0 and 1 with probability 1/2 each. Its probability generating function is \({\mathbb {E}} \, z^X =(1+z)/2\). The addition of independent random variables corresponds to multiplication of their probability generating functions. Therefore, the probability generating function of the sum of two fair coins is equal to \(((1+z)/2)^2\), and it is natural to define a half-coin via its probability generating function

where for \(\mu = 1/2\) the coefficients \(p_n = \left( {\begin{array}{c}1/2\\ n\end{array}}\right) \) have alternating signs. When “flipping” two half-coins, the sum of the outcomes is 0 or 1 with probability 0.5 each, as in the case of flipping a normal coin.

Taking \(\mu = 1/3\) yields third-coins and “flipping” three of them makes a normal coin; \(\mu = 1/4\) defines a quarter-coin, etc. Non-integer values of \(\mu \) produce \(\mu \)-coins, and to get a normal coin it is necessary to “flip” \(1/\mu \) of such \(\mu \)-coins, which depending on \(\mu \) can be a finite or even infinite number of such “fractions of coins”.
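The half-coin construction can be checked numerically. The sketch below assumes that the \(\mu \)-coin has the probability generating function \(((1+z)/2)^{\mu }\), so that its signed probabilities are \(2^{-\mu } \left( {\begin{array}{c}\mu \\ n\end{array}}\right) \); the normalizing factor \(2^{-\mu }\) and the helper names `mu_coin` and `convolve` are assumptions of this illustration. Convolving the signed distribution of a half-coin with itself should return the fair coin.

```python
def mu_coin(mu, n_terms):
    """Signed probabilities of a mu-coin: coefficients of ((1 + z) / 2)^mu.

    Uses binom(mu, n) = binom(mu, n - 1) * (mu - n + 1) / n and the
    normalising factor 2^(-mu) (an assumption of this sketch).
    """
    coeffs = [1.0]
    for n in range(1, n_terms):
        coeffs.append(coeffs[-1] * (mu - n + 1.0) / n)
    scale = 2.0 ** (-mu)
    return [scale * c for c in coeffs]


def convolve(p, q):
    """Distribution of the sum of two independent variables (Cauchy product),
    truncated to the length of the shorter input."""
    n = min(len(p), len(q))
    return [sum(p[j] * q[k - j] for j in range(k + 1)) for k in range(n)]


if __name__ == "__main__":
    half = mu_coin(0.5, 8)
    # Signs alternate from n = 1 on: positive for odd n, negative for even n
    print([round(x, 4) for x in half])
    # Two half-coins: the first two entries are close to 0.5, the rest to 0
    print([round(x, 12) for x in convolve(half, half)])
```

The truncation is harmless here because the k-th coefficient of a Cauchy product depends only on coefficients of index at most k.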

Extending the Monte Carlo method introduced in our paper [8] for the case of \(0<\alpha <1\) to orders higher than one leads to working with signed probability distributions, because in such a case the coefficients \(w_k=\gamma (\alpha ,k)\) in the fractional-order difference (1.2) have different signs, and some of them have values outside of the interval (0, 1), as mentioned by Bartlett and Feynman, while still satisfying the property (2.1).

3 Monte Carlo fractional differentiation using signed probabilities

Since we deal with signed probabilities, it is necessary to follow the ideas of Dirac, Bartlett, and Feynman, which means that in order to use the Monte Carlo method we have to transform the signed probabilities into positive probabilities. As a result, the expression used for Monte Carlo simulations consists of two parts: one of them may contain terms with different signs and is independent of the random variables used in the Monte Carlo draws (trials), and the other contains terms of the same (positive) sign and depends on those random variables. Below we first illustrate this for the cases \(1<\alpha <2\) and \(2<\alpha <3\), and then present a framework for the general case.

3.1 The case of order \(1<\alpha <2\)

When \(1<\alpha <2\), we have \(w_1=\gamma (\alpha ,1) = -\alpha < 0\), and \(w_k > 0\) for \(k=2, 3, \ldots \)

Let \(Y \in \{1, 2, \ldots \}\) be a discrete random variable such that

and

since

or

Note that \({\mathbb {E}} \, Y < \infty \), but \(\text{ Var } \, Y = \infty \).

We have the following:

if the stochastic process \(\zeta _h(t) = f(t - Y h)\) is such that, for fixed f, t, and h, \({\mathbb {E}}\, f(t-Yh) < \infty \), where the random variable Y is defined by (3.1).

Let \(Y_1\), \(Y_2\), ..., \(Y_n\), ... be independent copies of the random variable Y. Then, by the strong law of large numbers,

with probability one for any fixed t and h, and hence, as \(N \rightarrow \infty \),

with probability one converges to \(A_h^\alpha f(t)\), \(\alpha \in (1,2)\), defined by (1.2).

Moreover, if for fixed t and h

then by the central limit theorem and Slutsky's lemma, as \(N \rightarrow \infty \), we have

where \(\rightarrow ^D\) denotes convergence in distribution, N(0, 1) is the standard normal law, and \(v_N\) is the sample variance of the random variables \(f(t-Y_n h)h^{-\alpha }\), \(n=1, 2, \ldots , N\). This allows us to build asymptotic confidence intervals (see details in [8]).

The above results can be used as the basis of the Monte Carlo method for numerical approximation and evaluation of the Grünwald–Letnikov fractional derivatives.

Indeed, we can replace the sample \(Y_1\), \(Y_2\), ..., \(Y_N\) by its Monte Carlo simulations.

For the simulation of the random variable Y with the distribution (3.1), we define

where \(p_i = p_i(\alpha )\) are defined in (3.1).

Then

If U is a random variable uniformly distributed on [0, 1], then

and to generate \(Y \in \{1, 2, \ldots \}\) we set \(Y=k\) if \(F_{k-1} \le U < F_k\).
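The procedure of this subsection can be sketched in Python as follows. Only the properties \(w_0 = 1\), \(w_1=-\alpha <0\), \(w_k>0\) for \(k \ge 2\), and \(\sum _{k \ge 0} w_k = 0\) are used: the terms with \(k=0\) and \(k=1\) are kept outside the expectation, and Y is sampled on \(\{2, 3, \ldots \}\) with \({\mathbb {P}}(Y=k)=w_k/(\alpha -1)\), so that \(A_h^\alpha f(t) = h^{-\alpha }\left[ f(t) - \alpha f(t-h) + (\alpha -1)\, {\mathbb {E}} f(t-Yh)\right] \). This indexing is an assumption of the illustration and may differ from the convention of (3.1). The function f is assumed to vanish for negative arguments, so draws beyond the last grid point contribute zero. The returned half-width is the 95% normal approximation based on the sample variance of the draws.

```python
import bisect
import math
import random


def gl_weights(alpha, n_terms):
    """gamma(alpha, k) = (-1)^k * binom(alpha, k), k = 0..n_terms (assumed form)."""
    w = [1.0]
    for k in range(1, n_terms + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / k))
    return w


def mc_derivative_signed(f, t, h, alpha, n_draws, rng):
    """Monte Carlo estimate of A_h^alpha f(t) for 1 < alpha < 2.

    Returns (estimate, half_width) of an asymptotic 95% confidence interval.
    """
    n_grid = int(math.floor(t / h + 1e-9))       # last k with t - k*h >= 0
    w = gl_weights(alpha, max(n_grid, 2))
    # Cumulative sums F_2, F_3, ... of P(Y = k) = w_k / (alpha - 1)
    cdf, acc = [], 0.0
    for k in range(2, n_grid + 1):
        acc += w[k] / (alpha - 1.0)
        cdf.append(acc)
    scale = h ** (-alpha)
    fixed = f(t) - alpha * f(t - h)               # deterministic k = 0, 1 part
    draws = []
    for _ in range(n_draws):
        # Inverse transform sampling: Y = k if F_{k-1} <= U < F_k
        i = bisect.bisect_right(cdf, rng.random())
        # i == len(cdf) means Y lies beyond the grid, where f is zero
        value = f(t - (i + 2) * h) if i < len(cdf) else 0.0
        draws.append(scale * (fixed + (alpha - 1.0) * value))
    mean = sum(draws) / n_draws
    var = sum((d - mean) ** 2 for d in draws) / (n_draws - 1)
    return mean, 1.96 * math.sqrt(var / n_draws)


def square(t):
    """Example function: t^2 for t >= 0 and zero otherwise."""
    return t * t if t >= 0.0 else 0.0


if __name__ == "__main__":
    est, half = mc_derivative_signed(square, 1.0, 0.1, 1.7, 100_000, random.Random(1))
    print(est, "+/-", half)
```

Precomputing the cumulative sums once and locating each uniform draw by bisection keeps the cost per trial logarithmic in the number of grid points.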

3.2 The case of order \(2<\alpha <3\)

When \(2< \alpha <3\), we have

Using the binomial series

we easily obtain

and denoting

we have

and

Thus, putting \(p_2 = q_1 + q_2\) and \(p_k = q_k = -w_k\) for \(k\ge 3\),

where \(0< p_k < 1\) for \(k = 2, 3, \ldots \)

Now, introducing the discrete random variable

such that

we have the following:

Let \(Y_1\), \(Y_2\), ..., \(Y_n\), ... be independent copies of the random variable Y, then for fixed t and h such that

and by the strong law of large numbers we obtain

with probability one as \(N \rightarrow \infty \).

Thus under assumption (3.5) we have the following convergence with probability one:

and this can be used for evaluation of the Grünwald–Letnikov fractional derivative by the Monte Carlo method.

The simulation of \(Y_1\), \(Y_2\), ..., \(Y_n\), ... is similar to the previous cases of \(\alpha \in (0,1)\) and \(\alpha \in (1,2)\), with the only difference that

and if \(F_{k-1}< U < F_k\), then \(Y = k+1\) (k = 1, 2, ...), where U is a random variable uniformly distributed on [0, 1].
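A sketch of this case in Python is given below. It is a reconstruction from the probabilities stated above, \(p_2 = q_1 + q_2\) and \(p_k = q_k = -w_k\) for \(k \ge 3\), with \(q_1 = -w_1 = \alpha \). Regrouping the first two terms gives \(A_h^\alpha f(t) = h^{-\alpha }\left[ f(t) - \alpha \left( f(t-h) - f(t-2h)\right) - {\mathbb {E}} f(t-Yh)\right] \) with \(Y \in \{2, 3, \ldots \}\); this particular grouping, the assumed form \(\gamma (\alpha ,k) = (-1)^k \left( {\begin{array}{c}\alpha \\ k\end{array}}\right) \), and the function names are assumptions of the illustration. The function f is assumed to vanish for negative arguments.

```python
import bisect
import math
import random


def mc_derivative_order_2_3(f, t, h, alpha, n_draws, rng):
    """Monte Carlo estimate of A_h^alpha f(t) for 2 < alpha < 3."""
    n_grid = int(math.floor(t / h + 1e-9))       # last k with t - k*h >= 0
    # q_k = -gamma(alpha, k) via gamma_k = gamma_{k-1} * (1 - (alpha + 1) / k)
    gamma, q = 1.0, [None]                        # q[0] is unused
    for k in range(1, max(n_grid, 2) + 1):
        gamma *= 1.0 - (alpha + 1.0) / k
        q.append(-gamma)
    # Positive probabilities: p_2 = q_1 + q_2 and p_k = q_k for k >= 3
    probs = [q[1] + q[2]] + q[3:]
    cdf, acc = [], 0.0
    for p in probs[: max(n_grid - 1, 0)]:         # keep k = 2..n_grid only
        acc += p
        cdf.append(acc)
    # Part that does not depend on the random draws
    fixed = f(t) - alpha * (f(t - h) - f(t - 2.0 * h))
    total = 0.0
    for _ in range(n_draws):
        i = bisect.bisect_right(cdf, rng.random())
        if i < len(cdf):                          # otherwise f(t - Y*h) = 0
            total += f(t - (i + 2) * h)           # index i corresponds to Y = i + 2
    return (fixed - total / n_draws) / h ** alpha


def square(t):
    """Example function: t^2 for t >= 0 and zero otherwise."""
    return t * t if t >= 0.0 else 0.0


if __name__ == "__main__":
    print(mc_derivative_order_2_3(square, 1.0, 0.25, 2.6, 100_000, random.Random(1)))
```

For \(\alpha = 2.6\) the first probability is \(p_2 = \alpha (3-\alpha )/2 = 0.52\), so roughly half of the draws land on the grid point \(t-2h\).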

3.3 The general case for any \(\alpha >0\)

It turns out that the methodology presented above for \(\alpha \in (1,2)\) and \(\alpha \in (2,3)\) can be extended to the case of arbitrary \(\alpha > 0\) in terms of generalized (signed) probabilities.

Let us consider a generalized random variable \({\bar{Y}} \in \{1, 2, \ldots \}\) such that

where we interpret the \(q_k\) as generalized probabilities \(\bar{{\mathbb {P}}} ({\bar{Y}}=k)\), which are allowed to be negative, but for which there exists an integer \(r \ge 1\) such that

Then we can write the following formal identity for the Borel function g(k), \(k = 1, 2, \ldots \):

which can be finally written as

Thus, denoting

we define the generalized expectation

where the ordinary discrete random variable Y is defined as

and \({\bar{g}} (k)\), \(k \in {\bar{K}}\), is the function defined above.

If \(Y_1\), \(Y_2\), ..., \(Y_n\), ... are independent copies of the discrete random variable Y such that \({\mathbb {E}} \, {\bar{g}} (Y) < \infty \), then by the strong law of large numbers

with probability one, and hence for the generalized random variable \({\bar{Y}}\) with probability one

where \(\bar{{\mathbb {E}}} \, g({\bar{Y}})\) is defined by (3.12).

Subsection 3.2 serves as an easy example of the presented general case, where for \(\alpha \in (2,3)\) we can take \(r=1\), \(K = \{1, 2, \ldots , n, \ldots \}\) and \({\bar{K}} = \{2, 3, \ldots , n, \ldots \}\). The generalized (signed) probabilities in this case are

but \(\pi _2 = q_1 + q_2 \in (0,1)\), and likewise \(q_j = -w_j \in (0,1)\) for the remaining \(j = 3, 4, \ldots \), and \(g(k) = f(t-kh)\), \(k = 0, 1, 2, \ldots \), for a given f and fixed t and h.

4 Monte Carlo method for fractional differentiation using the semi-group property of fractional differences

Another approach can be based on the semi-group property [3, 7] of the fractional-order finite difference operator defined in (1.2). In this way we can simply follow our paper [8], with the function f(t) replaced by its integer-order difference. Using the semi-group property of fractional-order differences, we can write

where

Hence [8],

where if \({\tilde{\alpha }} = \alpha -n \in (0,1)\), then

and

Let \(Z \in \{ 1, 2, \ldots \}\) be a discrete random variable with

Then from (4.1) we have

If \(Z_1\), \(Z_2\), ..., \(Z_m\), ... are independent copies of the random variable Z, then, as \(N \rightarrow \infty \), by the strong law of large numbers

with probability one for \(\alpha \in (n, n+1)\), assuming that the last expectation exists, and hence with probability one as \(N \rightarrow \infty \) we have convergence to \(A_{h}^\alpha f(t)\) defined by (1.2):

Moreover, if for fixed f, t, and h the following inequality holds

then by the central limit theorem and Slutsky's lemma, as \(N \rightarrow \infty \), we have

where \(\rightarrow ^D\) denotes convergence in distribution, N(0, 1) is the standard normal law, and \(v_N\) is the sample variance of the random variables

This allows us to build asymptotic confidence intervals (see details in [8]).

The above results can be used as the basis of the Monte Carlo method for numerical approximation and evaluation of the Grünwald–Letnikov fractional derivatives. Indeed, we can replace the sample \(Z_1\), \(Z_2\), ..., \(Z_N\) by its Monte Carlo simulations.

For the simulation of the random variable Z with the distribution (4.3), we define

where \(b_i = p_i(\alpha )\) are defined in (4.2).

Then

If U is a random variable uniformly distributed on [0, 1], then

and to generate \(Z \in \{1, 2, \ldots \}\), we set \(Z=k\) if \(F_{k-1} \le U < F_k\).
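The semi-group approach can be sketched in Python as follows. For \(\alpha \in (n, n+1)\) the function f is first replaced by its backward difference of integer order n, and the Monte Carlo estimator for the order \({\tilde{\alpha }} = \alpha - n \in (0,1)\) is then applied to the result. The sketch assumes, following the construction for orders between zero and one, that \({\mathbb {P}}(Z=k) = -\gamma ({\tilde{\alpha }},k)\) for \(k \ge 1\) with \(\gamma (\alpha ,k) = (-1)^k \left( {\begin{array}{c}\alpha \\ k\end{array}}\right) \), and that f vanishes for negative arguments. The function names are illustrative and are not those of the authors' toolbox [13].

```python
import bisect
import math
import random


def backward_difference(f, n, h):
    """Return g(s) = h^(-n) * sum_j (-1)^j * C(n, j) * f(s - j*h)."""
    coeffs = [(-1) ** j * math.comb(n, j) for j in range(n + 1)]

    def g(s):
        return sum(c * f(s - j * h) for j, c in enumerate(coeffs)) / h ** n

    return g


def mc_derivative_semigroup(f, t, h, alpha, n_draws, rng):
    """Monte Carlo estimate of A_h^alpha f(t) for non-integer alpha > 1."""
    n = int(math.floor(alpha))
    a = alpha - n                                 # fractional part in (0, 1)
    g = backward_difference(f, n, h)
    n_grid = int(math.floor(t / h + 1e-9))       # last k with t - k*h >= 0
    # b_k = -gamma(a, k) > 0 for k >= 1; cumulative sums F_1, F_2, ...
    cdf, acc, gamma = [], 0.0, 1.0
    for k in range(1, n_grid + 1):
        gamma *= 1.0 - (a + 1.0) / k
        acc -= gamma
        cdf.append(acc)
    total = 0.0
    for _ in range(n_draws):
        # Inverse transform sampling: Z = k if F_{k-1} <= U < F_k
        i = bisect.bisect_right(cdf, rng.random())
        if i < len(cdf):                          # otherwise t - Z*h < 0 and g = 0
            total += g(t - (i + 1) * h)
    return (g(t) - total / n_draws) / h ** a


def square(t):
    """Example function: t^2 for t >= 0 and zero otherwise."""
    return t * t if t >= 0.0 else 0.0


if __name__ == "__main__":
    print(mc_derivative_semigroup(square, 1.0, 0.1, 2.6, 50_000, random.Random(1)))
```

Each trial evaluates f at \(n+1\) points instead of one, which is the source of the higher computational cost of this approach.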

5 Examples

Both proposed methods have been implemented in MATLAB [13]. This allows their mutual comparison, as well as useful visualizations and numerical experiments with the functions that are frequently used in the applications of the fractional calculus and fractional-order differential equations. The Mittag-Leffler function [10]

which appears in some of the provided examples, is computed using [11]. In all examples the considered interval is sufficiently large, namely \(t \in [0, 10]\).

The exact fractional derivatives are plotted using solid lines, the results of the proposed Monte Carlo method are shown by bold points, the results of K individual trials (draws) are shown by vertically oriented small points (in all examples, \(K=200\)), and the confidence intervals are shown by short horizontal lines above and below the bold points.

5.1 Example 1. The power function

The particular case of \(\nu =0\) is the Heaviside unit-step function, and its derivatives of orders \(\alpha =1.7\) and \(\alpha =2.6\) are shown in Fig. 1.

The derivatives of the power function for \(\nu =1.3\) and orders \(\alpha =1.7\) and \(\alpha =2.6\) are shown in Fig. 2.
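For the power function the exact derivative is available in closed form, which makes it a convenient test case. The sketch below assumes the standard formula \(D^\alpha t^\nu = \frac{\Gamma (\nu +1)}{\Gamma (\nu +1-\alpha )}\, t^{\nu -\alpha }\) for \(\nu > -1\) and \(t>0\) (see, e.g., [10]) and compares it with the deterministic fractional-order difference (1.2) for the parameter values used in this example. The helper names and the step \(h = 10^{-3}\) are choices of this illustration.

```python
import math


def power_derivative_exact(t, nu, alpha):
    """Gamma(nu + 1) / Gamma(nu + 1 - alpha) * t^(nu - alpha) for t > 0.

    Assumes nu > -1 and that nu + 1 - alpha is not a non-positive integer.
    """
    return math.gamma(nu + 1.0) / math.gamma(nu + 1.0 - alpha) * t ** (nu - alpha)


def gl_difference_power(t, nu, alpha, h):
    """Fractional-order difference of f(t) = t^nu, with f(t) = 0 for t < 0.

    Uses gamma(alpha, k) = (-1)^k * binom(alpha, k) (assumed form) through
    the recurrence gamma_k = gamma_{k-1} * (1 - (alpha + 1) / k).
    """
    n_grid = int(math.floor(t / h + 1e-9))
    gamma, total = 1.0, t ** nu
    for k in range(1, n_grid + 1):
        gamma *= 1.0 - (alpha + 1.0) / k
        total += gamma * max(t - k * h, 0.0) ** nu
    return total / h ** alpha


if __name__ == "__main__":
    for nu in (0.0, 1.3):
        for alpha in (1.7, 2.6):
            exact = power_derivative_exact(1.0, nu, alpha)
            approx = gl_difference_power(1.0, nu, alpha, 1e-3)
            print(nu, alpha, exact, approx)
```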

5.2 Example 2. The exponential function

Since the difference of the exponential function and the first terms of its power series can be expressed in terms of the Mittag-Leffler function, we can easily obtain the explicit expression for the corresponding fractional derivative [10]:

The derivatives of order \(\alpha =1.7\) of the function \(y(t) = e^{\lambda t} - 1 - \lambda t\) for \(\lambda =-1.4\) and \(\lambda =-0.4\) are shown in Fig. 3.

5.3 Example 3. The sine function

Similarly to the previous example, the fractional-order derivatives of the sine function can be obtained using its representation in terms of the Mittag-Leffler function:

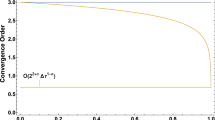

The results of evaluation of the derivatives of order 1.7 of the sine function using both methods are shown in Fig. 4 (method P is based on the signed probabilities approach, method S is based on using the semi-group property of fractional differences). The computed values of the fractional-order derivative are in mutual agreement and conform with the exact fractional-order derivatives. The confidence intervals are also of similar size, while the variance is much smaller in the case of the method based on the semi-group property of fractional-order differences; however, the computational cost is much higher. This observation holds for all other examples.

5.4 Example 4. The Mittag-Leffler function

The product of the power function and the Mittag-Leffler function appears frequently in solutions of fractional differential equations with Riemann–Liouville fractional derivatives, so it is also a suitable example:

The results of computations for this function are shown in Fig. 5 for \(\alpha =1.7\), \(\mu =1.5\), \(\beta =3.5\), and for \(\lambda =-1.4\) and \(\lambda =-0.4\).

6 Conclusions

Our extension of the Monte Carlo approach to fractional differentiation of orders higher than one led to working with signed probabilities that are not necessarily positive. We have demonstrated how this can be processed and used for computations using the Monte Carlo method.

The results of computations by the method based on signed probabilities are compared with the results obtained by the method that uses the semi-group property of fractional-order differences. Both methods produce practically the same values of fractional derivatives, which are in agreement with the values computed using the explicit formulas. The method based on the semi-group property is, by its construction, significantly less efficient as it requires more computations; at the same time it is characterized by smaller variance of the outputs of trials at the points of evaluations. The method based on signed probabilities is, on the contrary, faster and is characterized by larger values of variance of the trials at the points of evaluations. In both cases the confidence intervals are sufficiently small for practical purposes. The presented method can be further enhanced using standard approaches for improving the classical Monte Carlo method, such as reduction of variance, importance sampling, stratified sampling, control variates or antithetic sampling.

References

[1] Bartlett, M.: Negative probability. Mathematical Proceedings of the Cambridge Philosophical Society 41(1), 71–73 (1945). https://doi.org/10.1017/S0305004100022398

[2] Bode, H.W.: Network Analysis and Feedback Amplifier Design. D. Van Nostrand Company Inc, Princeton-Toronto-New York-London (1945)

[3] Diaz, J., Osler, T.: Differences of fractional order. Mathematics of Computation 28(125), 185–202 (1974). https://doi.org/10.1090/S0025-5718-1974-0346352-5

[4] Dirac, P.: The physical interpretation of quantum mechanics. Proc. of the Royal Soc. A 180, 1–40 (1942). https://doi.org/10.1098/rspa.1942.0023

[5] Dostal, J.: Operational Amplifiers. Butterworth-Heinemann, Boston (1993)

[6] Feynman, R.: Negative probability. In: Hiley, B. J., and Peat, F. D. (Eds.) Quantum Implications. Essays in Honour of David Bohm. Routledge, London and New York, ISBN 9780415069601, Chap. 13, 235–248 (1987)

[7] Kuttner, B.: On differences of fractional order. Proc. London Math. Soc. 7(3), 453–466 (1957). https://doi.org/10.1112/plms/s3-7.1.453

[8] Leonenko, N., Podlubny, I.: Monte Carlo method for fractional-order differentiation. Fract. Calc. Appl. Anal. 25(2), 346–361 (2022). https://doi.org/10.1007/s13540-022-00017-3

[9] Machado, J.A.T.: A probabilistic interpretation of the fractional-order differentiation. Fract. Calc. Appl. Anal. 6(1), 73–80 (2003)

[10] Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego, ISBN 0125588402 (1999)

[11] Podlubny, I., and Kacenak, M.: Mittag-Leffler function. MATLAB Central File Exchange, Submission No. 8738 (2006). https://www.mathworks.com/matlabcentral/fileexchange/8738

[12] Podlubny, I., Petras, I., Vinagre, B.M., O’Leary, P., Dorcak, L.: Analogue realizations of fractional-order controllers. Nonlinear Dynamics 29(1–4), 281–296 (2002). https://doi.org/10.1023/A:1016556604320

[13] Podlubny, I.: MCFD Toolbox. MATLAB Central File Exchange, Submission No. 108264 (2022). https://www.mathworks.com/matlabcentral/fileexchange/108264

[14] Ruzsa, I., and Székely, G.: Algebraic Probability Theory. Wiley, New York, ISBN 0471918032 (1988)

[15] Székely, G.: Half of a coin: negative probabilities. WILMOTT Magazine, 66–68 (July 2005)

[16] Wigner, E.: On the quantum correction for thermodynamic equilibrium. Phys. Rev. 40, 749–759 (1932)

Acknowledgements

The authors would like to thank the Isaac Newton Institute for Mathematical Sciences for support and hospitality during the programme Fractional Differential Equations (FDE2). This work was supported by EPSRC grant number EP/R014604/1. Nikolai Leonenko is also partially supported by LMS grant 42997 and ARC grant DP220101680. The work of Igor Podlubny is also supported by grants VEGA 1/0365/19, APVV-18-0526, and SK-SRB-21-0028.


Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.


About this article

Cite this article

Leonenko, N., Podlubny, I. Monte Carlo method for fractional-order differentiation extended to higher orders. Fract Calc Appl Anal 25, 841–857 (2022). https://doi.org/10.1007/s13540-022-00048-w


DOI: https://doi.org/10.1007/s13540-022-00048-w

Keywords

- fractional calculus (primary)

- fractional differentiation

- numerical computations

- Monte Carlo method

- stochastic processes