Abstract

Recent advancements in simulation technology have facilitated maritime training in various modalities, such as full-mission, desktop-based, cloud-based, and virtual reality (VR) simulators. Each simulator modality has unique pros and cons in terms of its technical capabilities, pedagogical opportunities, and organizational aspects. However, the extent to which simulators enhance and diversify training depends on their proper utilization. In this context, the absence of an unbiased, transparent, and robust simulator selection process poses a complex decision-making challenge for maritime instructors and institutional decision-makers. In this study, a hybrid multi-criteria decision-making (MCDM) approach is proposed to evaluate four major types of simulator modalities. For the evaluation, an MCDM framework is developed based on 13 key factors (sub-criteria) for simulator selection, grouped under three higher-level criteria: technical, instructional, and organizational. Data were collected from subject matter experts using a structured best-worst method (BWM) survey. The Bayesian BWM is used to rank the 13 sub-criteria, and the Preference Ranking Organization Method for Enrichment Evaluation (PROMETHEE) is used to evaluate the four simulator modalities using the sub-criteria weights obtained from the Bayesian BWM. The results reveal that regulatory compliance is the most important criterion, while the cost of simulators is the least important criterion in the simulator selection process. Overall, full-mission simulators are the most preferred, followed by VR, cloud-based, and desktop-based simulators. However, a sensitivity analysis demonstrated context-specific preferences for certain simulator types over others.


1 Introduction

Seafarers’ competence has been regarded as a crucial determinant of maritime safety (Kongsvik et al., 2020; A. Wahl et al., 2020). Competency-based training (CBT) was introduced by the International Maritime Organization (IMO) through the 1995 amendments to the International Convention on Standards of Training, Certification and Watchkeeping for Seafarers (STCW), to increase seafarers’ competence and address human-error-related incidents in the maritime domain (Emad & Roth, 2008). The essence of CBT is to operationalize “training and assessment” activities in the workplace or in a job-like environment (Fletcher & Buckley, 1991), which makes simulators a significant medium of CBT in maritime education and training (MET) (Martes, 2020). Maritime simulators have been proposed as a solution for bridging the “experiential learning gap” of entry-level seafarers (A. Wahl et al., 2020), in addition to being a key element for efficient training in high-risk domains (Moroney & Lilienthal, 2009), for emergency training (Billard et al., 2020), and for enhancing behavioural and performance outcomes (Röttger & Krey, 2021). Consequently, simulator training for critical navigation equipment, such as radio detection and ranging (RADAR) and automatic radar plotting aids (ARPA), has been made mandatory under the STCW regulations (see Section B-I/12, Guidance regarding the use of simulators; IMO, 2010). Since then, simulator training has become a standard aid for training seafarers in maritime institutes, where it bridges the gap between theory and practice by allowing trainees to experience a realistic maritime work environment in a virtual medium (Hontvedt & Arnseth, 2013).

Over the years, maritime simulators have evolved across various modalities, depending on their functionality, scale, and purpose. At the same time, the continuously changing training needs of seafarers, driven by emerging technical and operational developments in the maritime industry, make it impractical to rely on a single all-in-one simulator for training. For example, a full-mission bridge simulator may be suitable for replicating basic to complex navigation scenarios, whereas desktop-based simulators may be more suitable for procedural training or equipment familiarization (Kim et al., 2021). Similarly, cloud-based simulators may suit remote training that is accessible at any time and location, whereas virtual reality (VR) simulators may provide highly immersive training in a 3D environment (Mallam et al., 2019). Moreover, multifaceted demands involving factors such as training duration, instructors’ competence, and evaluation methods (Nazir et al., 2019) generate diverse training needs that require a comprehensive institutional strategy. Thus, the availability of various simulator modalities, each with distinct characteristics, combined with numerous emerging factors to consider, creates a decision-making challenge for maritime instructors when selecting the appropriate simulator for specific training needs. Kim et al. (2021) assessed four modalities of maritime simulators (full-mission, cloud-based, desktop-based, and VR simulators) using a qualitative approach. Such an approach outlines the pros and cons of the different modalities but provides neither a structured decision-making framework nor in-depth insight into the factors that drive the simulator selection process.
The research question of this study is therefore: “What factors influence the selection of simulator modalities for maritime training, and how can their importance rankings be used to evaluate simulators?”

This study proposes a multi-criteria decision-making (MCDM) framework for evaluating four modalities of maritime simulators (full-mission, desktop-based, cloud-based, and VR simulators) against 13 relevant factors (or sub-criteria). First, the underlying factors affecting the selection of maritime simulators are extracted from the published literature and grouped under three higher-level criteria: technical, instructional, and organizational. Then, these criteria and their corresponding sub-criteria are ranked by weights derived from an expert survey using the Bayesian best-worst method (BWM). Finally, the four simulator modalities are evaluated using the Preference Ranking Organization Method for Enrichment Evaluation (PROMETHEE). A sensitivity analysis is conducted to explore how varying the weights of the 13 sub-criteria influences the preferential ranking of simulator modalities.

The remainder of this study is organized as follows. Section 2 describes the methodology, delineating how two MCDM methods, the Bayesian best-worst method (BBWM) and PROMETHEE, are used in conjunction. Section 3 presents the results of the analysis, Section 4 discusses the results along with some practical implications, and Section 5 presents the conclusions and future research directions.

2 Methodology

MCDM methods have traditionally been used in classic decision-making or assessment contexts, such as equipment selection in process industries (Standing et al., 2001; Tabucanon et al., 1994), performance-based ranking of universities in the education domain (H.-Y. Wu et al., 2012), and assessing the effects of multiple criteria on cloud technology adoption in the healthcare domain (Sharma & Sehrawat, 2020). Commonly used MCDM methods include the analytic hierarchy process (AHP), analytic network process (ANP), technique for order preference by similarity to ideal solution (TOPSIS), multi-criteria optimization and compromise solution (VIKOR), decision making trial and evaluation laboratory (DEMATEL), simple additive weighting (SAW), PROMETHEE, and elimination and choice translating reality (ELECTRE), along with their variants (Zavadskas et al., 2014). Combinations of multiple methods are also common in the literature (Dağdeviren, 2008; Kheybari et al., 2021; Nabeeh et al., 2019).

In this study, a combination of MCDM methods (Bayesian BWM-PROMETHEE) is used, first to rank the factors and criteria influencing the selection of maritime simulators, and then to evaluate four simulator modalities against those factors. A systematic literature review approach was followed to identify the relevant factors for the selection of maritime simulators. Figure 1 presents the methodological workflow of this study.

2.1 Literature review

The proposed MCDM framework for evaluating maritime simulators against their selection criteria requires data on two dimensions: (1) the criteria and sub-criteria affecting the selection of simulators, and (2) the maritime simulator modalities to be evaluated. First, a systematic approach was followed to identify the criteria and sub-criteria from the scientific literature. The literature search was performed using the following Boolean search string:

(“maritime” OR “shipping”) AND (“seafarer* training” OR “maritime education and training” OR “MET” OR “training” OR “education”) AND (“simulator*”)

The search was conducted in two databases, Scopus and Web of Science (WOS), and returned a total of 168 documents after duplicates were excluded and only peer-reviewed articles written in English were retained. After an initial screening of abstracts, 69 articles were selected for full-text review. Table 1 provides a summary of the literature search process.

2.1.1 Criteria and sub-criteria affecting the selection of simulators

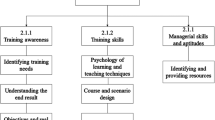

The 69 selected articles were reviewed systematically using an Excel file to identify items relevant to maritime simulator training. The review followed an iterative process involving the authors, which led to the clustering of the items under 13 thematic sub-criteria: (C1) fidelity, (C2) immersivity, (C3) possibility of remote training, (C4) possibility of team training, (C5) ease of training, (C6) ease of assessment, (C7) pedagogic value, (C8) appropriate methods for training, (C9) diversity of training scenario, (C10) training efficiency, (C11) regulatory compliance, (C12) cost of training, and (C13) capacity of institutions. These sub-criteria were then categorized under three higher-level criteria: technical (C1–C5), instructional (C6–C10), and organizational (C11–C13). Table 2 presents the items identified in the published literature along with the thematic sub-criteria and criteria.

2.1.2 Identifying maritime simulator modalities

Maritime simulators can be classified in several ways, depending on their capabilities and training objectives. For example, DNV GL (2021) classifies maritime simulators into four categories: class A (full-mission), class B (multi-task), class C (limited-task), and class S (special-task). This categorization treats each maritime operation, such as ship navigation, engine room operation, or cargo handling, as having separate training objectives. However, maritime training institutes may use one simulator for multiple training needs, such as a full-mission bridge simulator for both navigation and communication training, which makes it difficult to categorize simulators solely by task relevance. Therefore, this study categorizes simulators by their physical modality, reliance on the internet, and hardware usage, as proposed by Kim et al. (2021). A description of the four selected simulator modalities is presented in Table 3.

Following the identification of the relevant criteria, sub-criteria, and simulator modalities, MCDM methods are operationalized in the subsequent sections.

2.2 Bayesian BWM for criteria ranking

A structured BWM survey was designed for data collection, in which practitioners and experts in MET with relevant backgrounds from different maritime institutions participated, including maritime instructors (MI), maritime education researchers (MR), and heads of department (HOD). Potential respondents, including members of the International Association of Maritime Universities (IAMU), were contacted by email and through other professional contacts. The survey was open for participation for one month, from 1 June 2022 to 1 July 2022. A total of 41 responses were received, of which 8 were removed for inconsistent answers and a further 8 were set aside because the respondents lacked direct experience with maritime simulators. Consequently, 25 respondents with hands-on experience of teaching with simulators (mean = 9.87 years, SD = 7.21) were retained for the final analysis. Table 4 provides an overview of the respondents.

Since its introduction (Rezaei, 2015) and its extension into a Bayesian probabilistic group decision-making method (Mohammadi & Rezaei, 2020), BWM has been used in many decision-making studies across various domains, e.g., in aviation for evaluating the green performance of airports (Kumar et al., 2020) and in healthcare for selecting a waste disposal location (Torkayesh et al., 2021). In this study, the Bayesian BWM is employed to rank the three criteria and their 13 corresponding sub-criteria that influence the selection of maritime simulators. A stepwise approach was followed to estimate the local weights of the criteria and sub-criteria, which are then used to calculate the global (or overall) weights of all sub-criteria.


Step 1: Identification of different criteria and sub-criteria affecting simulator selection

The first step of Bayesian BWM is to identify the criteria for evaluation. In Section 2.1, 13 sub-criteria concerning simulator training in the maritime domain were identified under three criteria, as presented in the proposed MCDM framework in Fig. 2.


Step 2: Identifying the most important (MI) and the least important (LI) criterion and sub-criterion

Through the survey, each expert identified the most important (MI) and the least important (LI) criterion among the three criteria, as well as the MI and LI sub-criterion within each criterion. The MI and LI selections from the survey can be found in the supplementary data.


Step 3: Comparing the most important (MI) with other criteria (j)

Experts were asked to rate the importance of their selected MI criterion relative to each of the other criteria on a scale from 1 to 7, where “1” means “equally important” and “7” means “very strongly more important”. This yields the most important-to-others (MO) vector:

$$A_{MO} = \left(x_{MI,1}, x_{MI,2}, \ldots, x_{MI,n}\right) \tag{1}$$

Here, $x_{MI,j}$ denotes the preference of the MI criterion over criterion $j$, with $x_{MI,MI} = 1$, and $n$ is the number of criteria being compared.


Step 4: Comparing the other criteria (j) to the least important (LI)

Subsequently, the experts were asked to rate the importance of each of the other criteria relative to their selected LI criterion on the same scale from 1 to 7 (“1” meaning “equally important” and “7” meaning “very strongly more important”). This yields the others-to-least important (OL) vector:

$$A_{OL} = \left(x_{1,LI}, x_{2,LI}, \ldots, x_{n,LI}\right) \tag{2}$$

Here, $x_{j,LI}$ denotes the preference of criterion $j$ over the LI criterion, with $x_{LI,LI} = 1$.
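As a hypothetical illustration of Steps 3 and 4 (the judgements below are invented for exposition and are not taken from the survey data), consider an expert who, among the three criteria ordered as (TECH, INST, ORG), selects INST as MI and ORG as LI. Writing the MO and OL vectors as $A_{MO}$ and $A_{OL}$, the expert's answers could look like this:

$$A_{MO} = \left(x_{INST,TECH},\ x_{INST,INST},\ x_{INST,ORG}\right) = (2,\ 1,\ 5)$$

$$A_{OL} = \left(x_{TECH,ORG},\ x_{INST,ORG},\ x_{ORG,ORG}\right) = (3,\ 5,\ 1)$$

The judgement $x_{INST,ORG} = 5$ appears in both vectors, because the comparison of the MI criterion with the LI criterion belongs to both sets of comparisons.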


Step 5: Estimating the overall weight

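In Bayesian BWM, the criteria weights are estimated using the MO and OL vectors as inputs. Following Mohammadi and Rezaei (2020), the OL vector is modelled as a multinomial distribution with the probability mass function (PMF):

$$P\left(A_{OL} \mid w\right) = \frac{\left(\sum_{j=1}^{n} x_{j,LI}\right)!}{\prod_{j=1}^{n} x_{j,LI}!} \prod_{j=1}^{n} w_j^{x_{j,LI}} \tag{3}$$

where $w$ is the weight vector, treated as a probability distribution over the criteria. Whereas the OL vector expresses the preference of the other criteria over the LI criterion (so $x_{j,LI}$ tends to grow with $w_j$), the MO vector expresses the preference of the MI criterion over the others (so $x_{MI,j}$ tends to grow as $w_j$ shrinks). The MO vector is therefore modelled as a multinomial distribution with probabilities proportional to the inverse of the weights:

$$A_{MO} \sim \mathrm{Multinomial}\left(1/w\right) \tag{4}$$

Since MCDM weights are non-negative and sum to one, a Dirichlet distribution is used as the prior for the weight vector:

$$w \sim \mathrm{Dir}(\alpha), \qquad \mathrm{Dir}(w \mid \alpha) = \frac{1}{B(\alpha)} \prod_{j=1}^{n} w_j^{\alpha_j - 1} \tag{5}$$

For a group of experts $k = 1, 2, \ldots, K$, the aggregated weight $w^{agg}$ and the individual expert weights $w^{1:K}$ are estimated jointly from the experts' MO vectors $A_{MO}^{1:K}$ and OL vectors $A_{OL}^{1:K}$. The joint probability distribution can be expressed as:

$$P\left(w^{agg}, w^{1:K} \mid A_{MO}^{1:K}, A_{OL}^{1:K}\right) \propto P\left(w^{agg}\right) \prod_{k=1}^{K} P\left(A_{OL}^{k} \mid w^{k}\right) P\left(A_{MO}^{k} \mid w^{k}\right) P\left(w^{k} \mid w^{agg}\right) \tag{6}$$

The individual expert weights $w^{k}$ are constrained to lie around the aggregated weight $w^{agg}$:

$$w^{k} \mid w^{agg} \sim \mathrm{Dir}\left(\gamma \times w^{agg}\right), \qquad \forall k = 1, \ldots, K \tag{7}$$

where $\gamma \sim \mathrm{gamma}(0.01, 0.01)$ is a non-negative concentration parameter.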
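The estimation in Step 5 can be illustrated with a minimal Python sketch of the single-expert special case of the Bayesian BWM: one MO vector, one OL vector, and an assumed flat Dirichlet prior (all $\alpha_j = 1$). It is not the MATLAB implementation used in this study and does not fit the hierarchical group model of the joint distribution above, which typically requires Markov chain Monte Carlo (MCMC) sampling. Here the posterior mean of $w$ is approximated by importance sampling from the prior; the function name and the example judgements are hypothetical.

```python
import numpy as np


def bayesian_bwm_single_expert(a_mo, a_ol, n_samples=200_000, seed=0):
    """Posterior-mean weights for ONE expert (hypothetical sketch, not the study's code).

    Assumed model: A_OL ~ Multinomial(w), A_MO ~ Multinomial(1/w, normalised),
    w ~ Dir(1, ..., 1). The posterior mean is approximated by importance
    sampling, using the flat Dirichlet prior as the proposal distribution.
    """
    a_mo = np.asarray(a_mo, dtype=float)
    a_ol = np.asarray(a_ol, dtype=float)
    rng = np.random.default_rng(seed)
    # Draw candidate weight vectors from the flat Dirichlet prior.
    w = rng.dirichlet(np.ones(a_mo.size), size=n_samples)
    # MO probabilities are proportional to the inverse weights.
    inv = 1.0 / w
    p_mo = inv / inv.sum(axis=1, keepdims=True)
    # Log-likelihood up to a constant (multinomial coefficients do not depend on w).
    log_lik = (a_ol * np.log(w)).sum(axis=1) + (a_mo * np.log(p_mo)).sum(axis=1)
    # Normalised importance weights, computed stably by subtracting the maximum.
    iw = np.exp(log_lik - log_lik.max())
    iw /= iw.sum()
    # Weighted average of the sampled weight vectors = posterior mean estimate.
    return iw @ w


# Hypothetical judgements; criteria ordered as (TECH, INST, ORG), INST = MI, ORG = LI.
w = bayesian_bwm_single_expert(a_mo=[2, 1, 5], a_ol=[3, 5, 1])
print(dict(zip(["TECH", "INST", "ORG"], w.round(3))))
```

For these judgements the sketch returns weights ordered INST > TECH > ORG, consistent with the expert's MI and LI choices. Importance sampling from the prior is chosen only because it is short and dependency-light; it becomes inefficient with many criteria or many experts, where MCMC is the usual choice.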

2.3 PROMETHEE for evaluating the simulator modalities

Developed by Brans and Vincke (1985), PROMETHEE has become an established MCDM method for ranking or evaluating alternatives. It has been used both on its own and in combination with other MCDM methods to evaluate a finite number of alternatives (Albadvi et al., 2007), for example, in the selection of manufacturing systems (Anand & Kodali, 2008) or in sustainable energy planning (Pohekar & Ramachandran, 2004).

In this study, the weight of each criterion is estimated using Bayesian BWM, and these weights are then used as inputs for evaluating the four simulator modalities (the alternatives) with PROMETHEE. For each criterion $j$, PROMETHEE defines a preference function $P_j(a, b)$ that maps the deviation between the evaluations of two alternatives $a$ and $b$ onto a degree of preference ranging from 0 to 1:

$$P_j(a, b) = G_j\left[f_j(a) - f_j(b)\right], \qquad 0 \le P_j(a, b) \le 1 \tag{8}$$

In Eq. (8), $f_j(\cdot)$ is the evaluation of an alternative on criterion $j$, and $G_j$ is a non-decreasing function of the deviation between $f_j(a)$ and $f_j(b)$.
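The function $G_j$ can take several standard shapes. As an illustration only (which shape was configured in this study is an assumption not settled here), the sketch below implements two common ones: the "usual" criterion, where any positive deviation gives full preference, and the "linear" criterion with an indifference threshold `q` and a preference threshold `p`. The function names are hypothetical.

```python
def usual(d):
    """'Usual' criterion: full preference for any positive deviation d = f_j(a) - f_j(b)."""
    return 1.0 if d > 0 else 0.0


def linear(d, q, p):
    """'Linear' criterion with indifference threshold q and preference threshold p (q < p).

    No preference up to q, full preference from p, linear interpolation in between.
    """
    if d <= q:
        return 0.0
    if d >= p:
        return 1.0
    return (d - q) / (p - q)


d = 1.0  # hypothetical deviation f_j(a) - f_j(b)
print(usual(d), linear(d, q=0.5, p=1.5))
```

Both functions are non-decreasing in `d` and bounded in [0, 1], as the preference function requires; the thresholds let a decision-maker ignore small, practically irrelevant differences.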

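The PROMETHEE calculations use the following functions:

$$\pi(a, b) = \sum_{j=1}^{n} w_j P_j(a, b) \tag{9}$$

$$\phi^{+}(a) = \frac{1}{m - 1} \sum_{x \in A} \pi(a, x) \tag{10}$$

$$\phi^{-}(a) = \frac{1}{m - 1} \sum_{x \in A} \pi(x, a) \tag{11}$$

$$\phi(a) = \phi^{+}(a) - \phi^{-}(a) \tag{12}$$

Here, $\pi(a, b)$ denotes the overall preference index of alternative $a$ over $b$, where both belong to the set of alternatives $A$; $w_j$ is the weight of criterion $j$ (with $\sum_{j=1}^{n} w_j = 1$), $n$ is the number of criteria, and $m$ is the number of alternatives. The leaving flow $\phi^{+}(a)$ measures how strongly $a$ dominates all other alternatives of $A$ (the outranking character, or power, of $a$); a higher value of $\phi^{+}(a)$ indicates a better position of $a$. The entering flow $\phi^{-}(a)$ measures how strongly $a$ is dominated by all other alternatives of $A$ (the outranked character, or weakness, of $a$); a lower value of $\phi^{-}(a)$ indicates a better position. Finally, a higher net flow $\phi(a)$ indicates a better position of alternative $a$.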
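A minimal NumPy sketch of the preference index $\pi(a, b)$ and of the leaving, entering, and net flows described above. It assumes the "usual" preference function, larger-is-better scores, and criterion weights that sum to one; the scores and weights are hypothetical and unrelated to the study's data, and this is not the Visual PROMETHEE implementation.

```python
import numpy as np


def promethee_flows(scores, weights):
    """Return (phi_plus, phi_minus, phi_net) with the 'usual' preference function.

    scores:  m alternatives x n criteria, larger is better (assumption).
    weights: n criterion weights, assumed to sum to 1.
    """
    scores = np.asarray(scores, dtype=float)
    weights = np.asarray(weights, dtype=float)
    m = scores.shape[0]
    # d[a, b, j] = f_j(a) - f_j(b)
    d = scores[:, None, :] - scores[None, :, :]
    # Usual criterion: P_j(a, b) = 1 if f_j(a) > f_j(b), else 0
    pref = (d > 0).astype(float)
    # pi[a, b] = sum_j w_j * P_j(a, b); the diagonal is 0 because d = 0 there
    pi = pref @ weights
    phi_plus = pi.sum(axis=1) / (m - 1)   # how strongly a outranks the others
    phi_minus = pi.sum(axis=0) / (m - 1)  # how strongly a is outranked
    return phi_plus, phi_minus, phi_plus - phi_minus


# Three hypothetical alternatives scored on two hypothetical criteria.
plus, minus, net = promethee_flows([[3, 1], [2, 2], [1, 3]], weights=[0.7, 0.3])
print(plus, minus, net)
```

For these scores the net flows are 0.4, 0 and −0.4. Net flows always sum to zero across alternatives, which is a quick sanity check on any implementation.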

Consequently, three PROMETHEE tools are used: PROMETHEE I for partial ranking, PROMETHEE II for complete ranking, and the PROMETHEE rainbow for visually representing all criteria in order of importance for each simulator modality (i.e., alternative).

2.3.1 PROMETHEE I: partial ranking

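PROMETHEE I provides a partial ranking of alternatives; for a pair of alternatives $a$ and $b$, it establishes preference ($aPb$), indifference ($aIb$), or incomparability ($aRb$) using the following conditions:

$aPb$ (alternative $a$ is preferred over $b$) if:

$$\begin{cases} \phi^{+}(a) > \phi^{+}(b) \text{ and } \phi^{-}(a) < \phi^{-}(b), \text{ or} \\ \phi^{+}(a) > \phi^{+}(b) \text{ and } \phi^{-}(a) = \phi^{-}(b), \text{ or} \\ \phi^{+}(a) = \phi^{+}(b) \text{ and } \phi^{-}(a) < \phi^{-}(b) \end{cases} \tag{13}$$

$aIb$ (alternatives $a$ and $b$ are indifferent) if:

$$\phi^{+}(a) = \phi^{+}(b) \text{ and } \phi^{-}(a) = \phi^{-}(b) \tag{14}$$

$aRb$ (alternatives $a$ and $b$ are incomparable) if:

$$\begin{cases} \phi^{+}(a) > \phi^{+}(b) \text{ and } \phi^{-}(a) > \phi^{-}(b), \text{ or} \\ \phi^{+}(a) < \phi^{+}(b) \text{ and } \phi^{-}(a) < \phi^{-}(b) \end{cases} \tag{15}$$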

In the case of aPb, the outranking and outranked flows agree: a has at least as high a power (leaving flow) and at most as high a weakness (entering flow) as b, and at least one of the two is strictly better. The preference of a over b can therefore be considered reliable.

In the case of aRb, by contrast, the outranking and outranked flows conflict in the power-weakness analysis (one alternative has both the higher power and the higher weakness), so a true preference of one alternative over the other cannot be determined. In this situation, the decision-maker is responsible for making the choice.
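The PROMETHEE I conditions for preference, indifference, and incomparability can be encoded as a small classification function. This is a hypothetical helper written for illustration; it compares flows with exact equality, whereas a practical implementation would probably apply a tolerance to floating-point values.

```python
def promethee_i_relation(plus_a, minus_a, plus_b, minus_b):
    """Classify a pair of alternatives from their leaving (plus) and entering (minus) flows.

    Returns 'aPb', 'bPa', 'aIb' or 'aRb' following the PROMETHEE I conditions.
    """
    # Indifference: identical power and identical weakness.
    if plus_a == plus_b and minus_a == minus_b:
        return "aIb"
    # a is at least as powerful and at most as weak; since the pair is not
    # indifferent, at least one of the two comparisons is strict.
    if plus_a >= plus_b and minus_a <= minus_b:
        return "aPb"
    if plus_b >= plus_a and minus_b <= minus_a:
        return "bPa"
    # Remaining case: one alternative is both more powerful and weaker.
    return "aRb"


print(promethee_i_relation(0.7, 0.3, 0.5, 0.5))  # a stronger on both flows
print(promethee_i_relation(0.6, 0.4, 0.5, 0.2))  # flows conflict
```

Checking the indifference case first keeps the preference tests simple: once identical flows are excluded, the non-strict comparisons are exactly equivalent to the three alternative conditions for preference.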

2.3.2 PROMETHEE II: complete ranking

PROMETHEE II resolves the incompleteness of the PROMETHEE I partial ranking by using the net outranking flow, ϕ(a) = ϕ+(a) − ϕ−(a): the higher the net flow, the better the alternative.

In the scientific literature, PROMETHEE I and PROMETHEE II are used in conjunction for complex decision-making scenarios, because PROMETHEE I retains information on indifference and incomparability between alternatives, which is lost when the two flows are aggregated into the single net flow of PROMETHEE II (Brans & De Smet, 2016).

2.3.3 PROMETHEE rainbow

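The PROMETHEE rainbow visualizes a disaggregated view of the complete ranking derived from PROMETHEE II. It decomposes the net outranking flow of each alternative, $\phi(a) = \phi^{+}(a) - \phi^{-}(a)$, into the weighted contributions of the individual criteria, $\phi(a) = \sum_{j=1}^{n} w_j \phi_j(a)$, where $\phi_j(a)$ is the single-criterion net flow of $a$ on criterion $j$. In this way, both the most significant and the less significant criteria for each simulator modality can be displayed in order of importance.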
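The rainbow decomposition can be checked numerically: summing the weighted single-criterion net flows of an alternative reproduces its PROMETHEE II net flow. The sketch below assumes the "usual" preference function and uses hypothetical scores and weights; it computes the slice values only and does not reproduce the chart layout of Visual PROMETHEE.

```python
import numpy as np


def single_criterion_net_flows(scores):
    """phi_j(a) for every alternative a (rows) and criterion j (columns).

    Assumes the 'usual' preference function and larger-is-better scores.
    """
    scores = np.asarray(scores, dtype=float)
    m = scores.shape[0]
    # pref[a, b, j] = P_j(a, b) = 1 if f_j(a) > f_j(b), else 0
    pref = (scores[:, None, :] - scores[None, :, :] > 0).astype(float)
    # (sum over b of P_j(a, b)) - (sum over b of P_j(b, a)), averaged over m - 1 rivals
    return (pref.sum(axis=1) - pref.sum(axis=0)) / (m - 1)


scores = [[3, 1], [2, 2], [1, 3]]   # hypothetical: 3 alternatives x 2 criteria
weights = np.array([0.7, 0.3])      # hypothetical criterion weights
phi_j = single_criterion_net_flows(scores)
contrib = phi_j * weights           # one rainbow "slice" per alternative and criterion
net = contrib.sum(axis=1)           # equals the PROMETHEE II net flow
print(contrib)
print(net)
```

Positive entries in `contrib` are the criteria on which an alternative gains net preference and negative entries those on which it loses; sorting them per alternative gives the ordering that the rainbow displays.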

The model and the mathematical formulations described above were processed in Visual PROMETHEE Academic Edition (version 1.4.0.0). The adopted Bayesian BWM-PROMETHEE approach provides clear and concise graphical representations that simplify the decision-making process. This not only enhances the transparency of the decision-making process but also helps stakeholders understand and interpret the decision outcomes.

3 Results

3.1 Weights of the criteria and their ranking

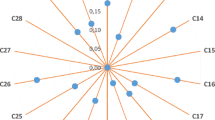

Both criteria and sub-criteria weights were estimated using the Bayesian BWM code in MATLAB (Mohammadi & Rezaei, 2020). First, the local weights obtained from MATLAB were used to construct a credal ranking that visualizes a probabilistic comparison of the criteria (see Fig. 3). For example, it can be inferred with 100% confidence that both the instructional (INST) and technical (TECH) criteria are more important than the organizational criteria (ORG), whereas the confidence level drops to 66% when the instructional criteria are compared with the technical criteria (see Fig. 3a). At the sub-criteria level, fidelity (FID) is the most important of the technical sub-criteria (see Fig. 3b). Similarly, pedagogic value (PED) is the most important of the instructional sub-criteria (Fig. 3c), and regulatory compliance (REG) of the organizational ones (see Fig. 3d). Table 5 reports the global weight of each sub-criterion, calculated by multiplying its local weight by the weight of its respective criterion. For instance, the global weight of fidelity (FID) is 0.0884, obtained by multiplying the local weight of the technical criteria (TECH, 0.3737) by the local weight of fidelity (FID, 0.2365).
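The global-weight calculation amounts to multiplying each sub-criterion's local weight by the weight of its parent criterion. The helper below is hypothetical and reproduces only the fidelity example given in the text, since the other local weights are not listed in this section. When the criteria weights sum to one and the local weights within each criterion sum to one, the resulting global weights also sum to one.

```python
def global_weights(criterion_weights, local_weights, membership):
    """Global weight of each sub-criterion = parent criterion weight x local weight.

    criterion_weights: {criterion: weight}
    local_weights:     {sub_criterion: local weight within its criterion}
    membership:        {sub_criterion: parent criterion}
    """
    return {
        sub: criterion_weights[membership[sub]] * w
        for sub, w in local_weights.items()
    }


# Only the fidelity example reported in the text.
g = global_weights(
    criterion_weights={"TECH": 0.3737},
    local_weights={"FID": 0.2365},
    membership={"FID": "TECH"},
)
print(round(g["FID"], 4))  # 0.0884
```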

Credal ranking of criteria (a) and sub-criteria (b–d). Criteria level: technical (TECH), instructional (INST), and organizational (ORG). Sub-criteria level: fidelity (FID), immersivity (IMM), possibility of remote training (RMT), possibility of team training (TMT), ease of training (ESY), ease of assessment (ASM), pedagogic value (PED), appropriate methods of training (MTH), diversity of training scenario (DSC), training efficiency (TRE), regulatory compliance (REG), cost of simulators (COS), and capacity of institutions (CAP)

The global weights of the sub-criteria (see Fig. 4) reveal that regulatory compliance (REG), pedagogic value (PED), and training efficiency (TRE) are the top three factors for evaluating simulator modalities. In contrast, possibility of remote training (RMT), ease of assessment (ASM), and cost of simulators (COS) are the three least important factors. The remaining sub-criteria lie between these two groups, in decreasing order of importance: fidelity (FID), possibility of team training (TMT), appropriate methods of training (MTH), immersivity (IMM), capacity of institutions (CAP), diversity of training scenario (DSC), and ease of training (ESY).

Global ranking according to the weights of sub-criteria. Criteria level: technical (TECH), instructional (INST), and organizational (ORG). Sub-criteria level: fidelity (FID), immersivity (IMM), possibility of remote training (RMT), possibility of team training (TMT), ease of training (ESY), ease of assessment (ASM), pedagogic value (PED), appropriate methods of training (MTH), diversity of training scenario (DSC), training efficiency (TRE), regulatory compliance (REG), cost of simulators (COS), and capacity of institutions (CAP)

3.2 Evaluation of simulator modalities

PROMETHEE I and PROMETHEE II were employed to evaluate the four simulator modalities using the global weights of the 13 sub-criteria derived from Bayesian BWM. The outranking flow (ϕ+) and outranked flow (ϕ−) scores described in the methodology section were computed using the Visual PROMETHEE software (see Table 6).

Because no two alternatives share the same pair of ϕ+ and ϕ− scores, no pair of alternatives is indifferent (see Eq. 14), which makes it possible to rank them. PROMETHEE I was used for the partial ranking and PROMETHEE II for the complete ranking. In Fig. 5a, two columns represent the outranking and outranked flows for the partial ranking, while in Fig. 5b a single column represents the net flow for the complete ranking. The horizontal lines in the flow columns mark the position of each simulator modality in the estimated ranking. The ranking starts at the top, with full-mission and VR simulators ranked highest, followed by cloud-based and desktop-based simulators (see Fig. 5).

As the horizontal lines in Fig. 5a neither overlap nor cross, no pair of simulator modalities is indifferent or incomparable; the modalities are clearly distinguishable, and every pair can be compared in the PROMETHEE ranking.

The PROMETHEE rainbow provides a graphical representation of the comprehensive evaluation of various simulator modalities by ranking them according to their preference. This evaluation considers both the criteria of utmost significance and those of relatively lesser significance, arranged in descending order of importance for each modality. In Fig. 6, the simulator modalities are ranked from left to right, while the criteria are ranked from top to bottom. For example, considering the full-mission simulators in the leftmost column, the upper portion of the PROMETHEE rainbow emphasizes the most-significant criteria, such as regulatory compliance, pedagogic value, and training efficiency. Meanwhile, the lower portion of the diagram focuses on less significant criteria, i.e., institutional capacity, simulator cost, and remote training capabilities (see Fig. 6).

4 Discussion

The results offer an evaluation of maritime simulators based on the ranked importance of various selection factors. Full-mission simulators appear to be the most preferred alternative among the experts, followed by VR, cloud-based, and desktop-based modalities.

At the criteria level, the experts' preferences emphasize the instructional features of simulators, while at the sub-criteria level they emphasize regulatory compliance, pedagogic value, training efficiency, fidelity, and team training. Contemporary research in maritime simulator training echoes these findings, emphasizing the pedagogical utility (Sellberg, 2018), fidelity (de Oliveira et al., 2022), and team training (Kandemir et al., 2018) capabilities of simulators. This suggests a trend towards prioritizing educational effectiveness and adherence to industry standards when selecting and implementing maritime simulator training. In contrast, remote training, ease of assessment, and cost are perceived as less significant factors for simulator training at maritime institutions. This may be because maritime institutions currently do not prioritize remote training and rely predominantly on traditional assessment methods. Additionally, simulator costs may not be viewed as a major concern as long as other essential selection criteria are satisfied.

A clearer picture emerges when the importance-based criteria ranking is examined for each simulator modality. The results reveal that remote training capability (C3), cost of simulators (C12), and institutional capacity (C13) are the least significant criteria for full-mission simulators, whereas the same factors become important considerations for cloud-based simulators. VR simulators, in turn, have the potential to offer higher pedagogic value (C7), training efficiency (C10), fidelity (C1), team training capabilities (C4), appropriate training methods (C8), immersivity (C2), diverse training scenarios (C9), and remote training capabilities (C3). However, cloud-based simulators are perceived as less suitable on these same criteria, except for remote training capability (C3). Desktop-based simulators, on the other hand, are viewed as suitable when organizational criteria (cost, institutional capacity, and regulatory compliance) and easier assessment procedures are prioritized. Yet they are perceived as less beneficial on the technical (fidelity, team training, etc.) and most instructional (pedagogic value, training efficiency, etc.) criteria, as shown in Fig. 6 (PROMETHEE rainbow).

Fig. 7 presents a sensitivity analysis showing the effect of varying the weight of each sub-criterion on the priority ranking of simulator modalities. For example, the initial priority ranking remains unchanged when 100% of the weight is assigned to fidelity (C1) alone or to immersivity (C2) alone. However, when the focus is exclusively on remote training (C3), cloud-based simulators rank highest, followed by VR, desktop-based, and full-mission simulators (see Fig. 7 (C3)). Similarly, when ease of training (C5) is emphasized, cloud-based simulators are preferred over desktop-based and VR simulators. In contrast, when ease of assessment (C6) is prioritized, desktop-based simulators rank above VR and cloud-based alternatives, while full-mission simulators remain the top choice in both scenarios. Likewise, cloud-based simulators are the least preferred option when pedagogic value (C7) is prioritized but become the most preferred option when institutional capacity (C13) is the highest priority (see Fig. 7 (C13)).
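The extreme cases discussed above, in which a single sub-criterion receives 100% of the weight, can be mimicked by re-running the net-flow computation with a one-hot weight vector. This sketch assumes the "usual" preference function and uses hypothetical scores for three unnamed alternatives (`X`, `Y`, `Z`); it does not reproduce Fig. 7 or the sensitivity tools of Visual PROMETHEE, and the function names are hypothetical.

```python
import numpy as np


def net_flows(scores, weights):
    """PROMETHEE II net flows with the 'usual' preference function (assumption)."""
    scores = np.asarray(scores, dtype=float)
    m = scores.shape[0]
    pref = (scores[:, None, :] - scores[None, :, :] > 0).astype(float)
    pi = pref @ np.asarray(weights, dtype=float)  # pi[a, b]
    return (pi.sum(axis=1) - pi.sum(axis=0)) / (m - 1)


def ranking_if_only(scores, names, j):
    """Rank alternatives when criterion j receives 100% of the weight."""
    n_criteria = np.asarray(scores).shape[1]
    weights = np.zeros(n_criteria)
    weights[j] = 1.0
    phi = net_flows(scores, weights)
    # Highest net flow first; 'stable' keeps the input order for ties.
    return [names[i] for i in np.argsort(-phi, kind="stable")]


names = ["X", "Y", "Z"]
scores = [[3, 1], [2, 2], [1, 3]]  # hypothetical: 3 alternatives x 2 criteria
for j in range(2):
    print(f"criterion {j} only:", ranking_if_only(scores, names, j))
```

Sweeping `j` over all criteria, or interpolating between the study's weights and a one-hot vector, shows which criteria can overturn the baseline ranking, which is the question the sensitivity analysis addresses.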

The study highlights how experts and practitioners in maritime education and training (MET) perceive the different simulator modalities, which has implications for both current practice and future developments.

4.1 Implications in maritime training

To ensure workplace safety, future seafarers must attain a level of competence that matches the increasing complexity of the real working environment. Maritime institutes often find it challenging to select appropriate tools for competence development, largely owing to limited understanding of, and information about, the available options. An evaluation of simulator modalities together with a ranking of the relevant criteria is therefore particularly useful for maritime instructors, giving them greater insight into the capabilities of the available simulator modalities. This study provides a systematic framework that reflects existing practices in how instructors allocate limited training resources to specific training needs. For example, full-mission simulators serve as highly efficient team training solutions, while VR simulators suit immersive remote training. Cloud-based simulators, in contrast, serve as cost-effective, user-friendly remote solutions, and desktop-based simulators suit budget-conscious settings where remote access is not a priority.

The in-depth analysis reveals a perceived lack of pedagogic value in cloud-based and desktop-based simulators, despite the importance of pedagogic value in educational technologies (Anderson & Dron, 2011; Fowler, 2015). Moreover, experts perceive challenges in assessing performance with VR and cloud-based simulators. Together with emerging technologies for assessing seafarers (e.g., eye tracking, accelerometers, and heart-rate monitors) (Kim et al., 2021; Mallam et al., 2019), this opens new avenues for future research on performance assessment in maritime simulator training.

It is widely recognized that instructors’ knowledge of and familiarity with technology-based tools are essential for the success of technology-based teaching and learning (Ghavifekr & Rosdy, 2015). Similarly, trainees’ familiarity with these tools is important, as it increases engagement and enhances learning outcomes (Chiu, 2021). It would therefore be beneficial for instructors and trainees to have a comprehensive understanding of the available simulator modalities, including their strengths and weaknesses. The mapping of simulator modalities against the criteria and sub-criteria presented in this study could enable more informed decision-making and is expected to facilitate enhanced learning outcomes.

4.2 Industrial and policy implications

The fusion of technology, pedagogy, and content is essential for effectively integrating new technologies into educational contexts (Mishra & Koehler, 2006). Key technological features, such as fidelity, immersivity, and usability, are determined by manufacturers, who also enable remote and team training capabilities. It is therefore vital for manufacturers to address both the technological and the instructional aspects of simulators to enhance training outcomes. Additionally, the results of this study highlight that regulatory compliance, pedagogic value, and training efficiency are the factors that distinguish traditional full-mission simulators from emerging, less expensive cloud-based alternatives (see Fig. 6). This presents an opportunity for technology developers to enhance the regulatory compliance and improve the instructional capabilities of low-cost simulators.

In practice, the adoption of learning technology (e.g., simulators) often lacks a comprehensive long-term strategy, as the procurement process tends to focus on immediate needs rather than future goals (Ringstaff & Kelley, 2002). Such a short-sighted approach can lead to investment decisions that overlook broader educational goals, such as those reflected in the 13 sub-criteria identified for maritime simulator selection. The selection of simulator providers usually involves pre-bidding and subsequent post-bidding stages, during which bidders must meet a set of criteria to advance to the next phase. This evaluation process does not emphasize any single factor; rather, it assesses a combination of factors that together determine the final decision. The proposed MCDM approach and the identified sub-criteria could therefore provide a comprehensive and systematic framework for evaluating potential simulator providers. The sensitivity analysis could be particularly useful where decision-makers need to determine how assigning higher importance to a specific sub-criterion would affect the overall simulator selection.
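
The weight-perturbation idea described above can be illustrated with a small PROMETHEE II sketch. It assumes the usual (type I) preference function, four hypothetical criteria, and invented 1 to 5 scores and baseline weights; none of these values come from the study, whose weights were derived with Bayesian BWM rather than set by hand. The helper `boost` is likewise hypothetical: it scales one criterion's weight and lets `net_flows` renormalize, mimicking the question of what happens when a decision-maker assigns higher importance to a single sub-criterion. Cost is recast as "affordability" (higher = cheaper) so that every criterion is maximized.

```python
"""Illustrative PROMETHEE II weight-sensitivity sketch (hypothetical data)."""
from typing import Dict, List

# Hypothetical criteria, all treated as "higher is better".
# "affordability" stands in for cost (higher = cheaper) so no criterion is minimised.
CRITERIA: List[str] = ["regulatory_compliance", "pedagogic_value", "remote_training", "affordability"]

# Hypothetical 1-5 scores per simulator modality; NOT the study's data.
SCORES: Dict[str, List[float]] = {
    "full_mission": [5, 5, 1, 1],
    "vr": [3, 4, 4, 2],
    "cloud": [2, 2, 5, 4],
    "desktop": [2, 2, 1, 5],
}

# Hypothetical baseline weights (the study derived its weights with Bayesian BWM).
WEIGHTS: List[float] = [0.4, 0.3, 0.2, 0.1]


def usual_preference(d: float) -> float:
    """Type I ("usual") preference function: full preference for any positive difference."""
    return 1.0 if d > 0 else 0.0


def pi(a: List[float], b: List[float], w: List[float]) -> float:
    """Aggregated preference index of alternative a over alternative b."""
    return sum(wj * usual_preference(aj - bj) for wj, aj, bj in zip(w, a, b))


def net_flows(scores: Dict[str, List[float]], weights: List[float]) -> Dict[str, float]:
    """PROMETHEE II net outranking flow (phi+ minus phi-) for each alternative."""
    total = sum(weights)
    w = [x / total for x in weights]  # renormalise so the weights sum to 1
    n = len(scores)
    flows: Dict[str, float] = {}
    for a, sa in scores.items():
        plus = sum(pi(sa, sb, w) for b, sb in scores.items() if b != a)
        minus = sum(pi(sb, sa, w) for b, sb in scores.items() if b != a)
        flows[a] = (plus - minus) / (n - 1)
    return flows


def rank(scores: Dict[str, List[float]], weights: List[float]) -> List[str]:
    """Alternatives sorted from highest to lowest net flow."""
    flows = net_flows(scores, weights)
    return sorted(flows, key=flows.get, reverse=True)


def boost(weights: List[float], idx: int, factor: float) -> List[float]:
    """Hypothetical sensitivity step: scale one criterion's weight by `factor`."""
    out = list(weights)
    out[idx] *= factor
    return out


if __name__ == "__main__":
    print("baseline:", rank(SCORES, WEIGHTS))
    boosted = boost(WEIGHTS, CRITERIA.index("remote_training"), 5.0)
    print("remote training x5:", rank(SCORES, boosted))
```

With these made-up numbers the baseline ranking is full-mission, VR, cloud-based, desktop, whereas multiplying the remote-training weight by five moves the cloud-based option to first place. This echoes, without reproducing, the kind of context-specific preference shift that a sensitivity analysis is meant to expose.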

The results also suggest that VR simulators are perceived as more costly than cloud-based or desktop-based simulators. This could reflect the general perception that VR scenario development, customization, and the procurement of professional-grade VR hardware are expensive (Su et al., 2020). However, VR training could prove more cost-effective in the long term than other high-fidelity simulator alternatives, as evidenced in other domains such as healthcare and engineering training (Joshi et al., 2021; Perrenot et al., 2012). In addition, VR simulators would likely be used in situations where other important criteria (e.g., pedagogic value, training efficiency, fidelity, team training, appropriate methods of training, immersivity, diversity of training scenarios, and remote training) outweigh cost considerations.

5 Conclusion and future directions

This study evaluates state-of-the-art maritime simulator modalities against 13 relevant factors. The proposed MCDM framework makes it possible to evaluate four available simulator modalities (i.e., full-mission, desktop-based, cloud-based, and VR simulators) based on factor importance rankings. A sensitivity analysis revealed that the priority ranking of simulator modalities could change when the weights of the 13 factors are varied. The findings of this study could benefit both academic and industrial stakeholders aiming to provide quality education for maritime trainees.

Future research involving criterion-specific analysis of simulators could facilitate the development of hybrid simulator training modules and curricula. Such modules could employ a weighted combination of different simulator technologies for a specific training scenario, in which separate simulator modalities complement each other to address highly contextual training needs. For example, future work could determine the most efficient combination of full-mission and VR simulators for training in a fire emergency scenario.

The study’s framework and criteria weights were developed through a two-step process: criteria were first derived from the literature, and in-depth expert assessment was then used to assign a weight to each criterion. Future studies should assess the MCDM framework's performance in specific organizational settings, while accounting for evolving simulation technologies and incorporating emerging criteria (e.g., manufacturers’ timely service provision, ease of data extraction). It could also be valuable to explore combining other approaches, such as the Delphi method, with MCDM methods. Such a combination could facilitate expert consensus on both the criteria and the alternatives, leading to more robust and well-informed decision-making.

References

Ahvenjärvi S, Lahtinen J, Lóytókorpi M, Marva M-M (2021) ISTLAB – new way of utilizing a simulator system in testing and demonstration of intelligent shipping technology and training of future maritime professionals. TransNav 15(3):569–574. https://doi.org/10.12716/1001.15.03.09

Albadvi A, Chaharsooghi SK, Esfahanipour A (2007) Decision making in stock trading: An application of PROMETHEE. Eur J Oper Res 177(2):673–683. https://doi.org/10.1016/j.ejor.2005.11.022

Alexandrov C, Grozev G, Dimitrov G, Hristov A (2021) On education and training in maritime communications and the GMDSS during the Covid-19. Pedagogika-Pedagogy 93(S6):112–120. https://doi.org/10.53656/ped21-6s.09on

Anand G, Kodali R (2008) Selection of lean manufacturing systems using the PROMETHEE. J Model Manag. https://doi.org/10.1108/17465660810860372

Anderson T, Dron J (2011) Three generations of distance education pedagogy. Int Rev Res Open Dist Learn 12(3):80–97. https://doi.org/10.19173/irrodl.v12i3.890

Balcita RE, Palaoag TD (2020) Augmented reality model framework for maritime education to alleviate the factors affecting learning experience. Int J Inf Educ Technol 10(8):603–607. https://doi.org/10.18178/ijiet.2020.10.8.1431

Baldauf M, Schröder-Hinrichs J-U, Kataria A, Benedict K, Tuschling G (2016) Multidimensional simulation in team training for safety and security in maritime transportation. J Transport Saf Secur 8(3):197–213. https://doi.org/10.1080/19439962.2014.996932

Benedict K, Baldauf M, Felsenstein C, Kirchhoff M (2006) Computer-based support for the evaluation of ship handling exercise results. WMU J Marit Aff 5(1):17–35. https://doi.org/10.1007/BF03195079

Benedict K, Fischer S, Gluch M, Kirchhoff M, Schaub M, Baldauf M, Müller B (2017) Innovative fast time simulation tools for briefing / debriefing in advanced ship handling simulator training and ship operation. Trans Marit Sci 6(1):24–38. https://doi.org/10.7225/toms.v06.n01.003

Billard R, Smith J, Veitch B (2020) Assessing lifeboat coxswain training alternatives using a simulator. J Navig 73(2):455–470. https://doi.org/10.1017/S0373463319000705

Brandsæter A, Osen OL (2021) Assessing autonomous ship navigation using bridge simulators enhanced by cycle-consistent adversarial networks. Proc Inst Mech Eng O J Risk Reliab:1748006X2110210. https://doi.org/10.1177/1748006X211021040

Brans J-P, De Smet Y (2016) PROMETHEE methods. In: Multiple criteria decision analysis, vol 233. Springer, pp 187–219. https://doi.org/10.1007/978-1-4939-3094-4_6

Brans J-P, Vincke P (1985) A preference ranking organisation method: the PROMETHEE method for multiple criteria decision-making. Manag Sci 31(6):647–656. https://doi.org/10.1287/mnsc.31.6.647

Casareale C, Bernardini G, Bartolucci A, Marincioni F, D’Orazio M (2017) Cruise ships like buildings: wayfinding solutions to improve emergency evacuation. Build Simul 10(6):989–1003. https://doi.org/10.1007/s12273-017-0381-0

Chambers TP, Main R (2016) The use of high-fidelity simulators for training maritime pilots. J Ocean Technol 11(1):117–131. https://www.thejot.net/article-preview/?show_article_preview=756. Accessed 2 May 2023

Chauvin C, Clostermann J-P, Hoc J-M (2009) Impact of training programs on decision-making and situation awareness of trainee watch officers. Saf Sci 47(9):1222–1231. https://doi.org/10.1016/j.ssci.2009.03.008

Chiu TK (2021) Digital support for student engagement in blended learning based on self-determination theory. Comput Human Behav 124:106909. https://doi.org/10.1016/j.chb.2021.106909

Cooke N, Stone R (2013) RORSIM: A warship collision avoidance 3D simulation designed to complement existing Junior Warfare Officer training. Virtual Reality 17(3):169–179. https://doi.org/10.1007/s10055-013-0223-z

Crichton MT (2017) From cockpit to operating theatre to drilling rig floor: five principles for improving safety using simulator-based exercises to enhance team cognition. Cogn Technol Work 19(1):73–84. https://doi.org/10.1007/s10111-016-0396-9

Cwilewicz R, Tomczak L (2006) Application of 3D computer simulation for marine engineers as a hazard prevention tool. Risk Anal 1:303–311. https://doi.org/10.2495/RISK060291

da Conceição VFP, Mendes JB, Teodoro MF, Dahlman J (2019) Validation of a behavioral marker system for rating cadet’s non-technical skills. TransNav 13(1):89–96. https://doi.org/10.12716/1001.13.01.08

Dağdeviren M (2008) Decision making in equipment selection: an integrated approach with AHP and PROMETHEE. J Intell Manuf 19(4):397–406. https://doi.org/10.1007/s10845-008-0091-7

de Oliveira RP, Carim Junior G, Pereira B, Hunter D, Drummond J, Andre M (2022) Systematic literature review on the fidelity of maritime simulator training. Educ Sci 12(11):817. https://doi.org/10.3390/educsci12110817

Dimitrios G (2012) Engine control simulator as a tool for preventive maintenance. Journal of Maritime Research 9(1):39–44. https://www.jmr.unican.es/index.php/jmr/article/view/166. Accessed 2 May 2023

DNV GL (2021) Maritime simulator systems. DNV GL. https://standards.dnv.com/explorer/document/7F7CF68D20E949B39DC44D9C2B07EB2F/10. Accessed 2 May 2023.

Duan Z-L, Cao H, Ren G, Zhang J-D (2017) Assessment method for engine-room resource management based on intelligent optimization. J Mar Sci Technol 25(5):571–580. https://doi.org/10.6119/JMST-017-0710-1

Emad G, Roth WM (2008) Contradictions in the practices of training for and assessment of competency: A case study from the maritime domain. Educ Train 50(3):260–272. https://doi.org/10.1108/00400910810874026

Ernstsen J, Nazir S (2020) Performance assessment in full-scale simulators–A case of maritime pilotage operations. Saf Sci 129:104775. https://doi.org/10.1016/j.ssci.2020.104775

Fletcher S, Buckley R (1991) Designing competence-based training. Kogan Page, London

Fowler C (2015) Virtual reality and learning: Where is the pedagogy? Br J Educ Technol 46(2):412–422. https://doi.org/10.1111/bjet.12135

Ghavifekr S, Rosdy WAW (2015) Teaching and learning with technology: Effectiveness of ICT integration in schools. Int J Res Educ Sci 1(2):175–191. https://eric.ed.gov/?id=EJ1105224. Accessed 2 May 2023

Ghosh S (2017) Can authentic assessment find its place in seafarer education and training? Aust J Marit Ocean Aff 9(4):213–226. https://doi.org/10.1080/18366503.2017.1320828

Halonen J, Lanki A (2019) Efficiency of maritime simulator training in oil spill response competence development. TransNav 13(1):199–204. https://doi.org/10.12716/1001.13.01.20

Halonen J, Lanki A, Rantavuo E (2017) New learning methods for marine oil spill response training. TransNav 11(2):153–159. https://doi.org/10.12716/1001.11.02.18

Hjelmervik K, Nazir S, Myhrvold A (2018) Simulator training for maritime complex tasks: An experimental study. WMU J Marit Aff 17(1):17–30. https://doi.org/10.1007/s13437-017-0133-0

Hontvedt M (2015) Professional vision in simulated environments—examining professional maritime pilots’ performance of work tasks in a full-mission ship simulator. Learn Cult Soc Interact 7:71–84. https://doi.org/10.1016/j.lcsi.2015.07.003

Hontvedt M, Arnseth HC (2013) On the bridge to learn: analysing the social organization of nautical instruction in a ship simulator. Int J Comput Support Collab Learn 8(1):89–112. https://doi.org/10.1007/s11412-013-9166-3

International Maritime Organization (IMO) (2010) International Convention on Standards of Training, Certification and Watchkeeping for Seafarers, 1978, STCW 1978: 2010 amendments. IMO, London. http://dmr.regs4ships.com/docs/international/imo/stcw/2010/code/pt_b_chp_01.cfm. Accessed 2 May 2023

Joshi S, Hamilton M, Warren R, Faucett D, Tian W, Wang Y, Ma J (2021) Implementing Virtual Reality technology for safety training in the precast/prestressed concrete industry. Appl Ergon 90:103286. https://doi.org/10.1016/j.apergo.2020.103286

Jung J, Ahn YJ (2018) Effects of interface on procedural skill transfer in virtual training: Lifeboat launching operation study: A comparative assessment interfaces in virtual training. Comput Anim Virtual Worlds 29(3–4):e1812. https://doi.org/10.1002/cav.1812

Kandemir C, Celik M (2021) A human reliability assessment of marine engineering students through engine room simulator technology. Simul Gaming 52(5):635–649. https://doi.org/10.1177/10468781211013851

Kandemir C, Soner O, Celik M (2018) Proposing a practical training assessment technique to adopt simulators into marine engineering education. WMU J Marit Aff 17(1):1–15. https://doi.org/10.1007/s13437-018-0137-4

Kara G, Arıcan OH, Okşaş O (2020) Analysis of the effect of electronic chart display and information system simulation technologies in maritime education. Mar Technol Soc J 54(3):43–57. https://doi.org/10.4031/MTSJ.54.3.6

Kheybari S, Javdanmehr M, Rezaie FM, Rezaei J (2021) Corn cultivation location selection for bioethanol production: An application of BWM and extended PROMETHEE II. Energy 228:120593. https://doi.org/10.1016/j.energy.2021.120593

Kim T, Sharma A, Bustgaard M, Gyldensten WC, Nymoen OK, Tusher HM, Nazir S (2021) The continuum of simulator-based maritime training and education. WMU J Marit Aff 20(2):135–150. https://doi.org/10.1007/s13437-021-00242-2

Kobayashi H (2005) Use of simulators in assessment, learning and teaching of mariners. WMU J Marit Aff 4(1):57–75. https://doi.org/10.1007/BF03195064

Kocak G (2019) The re-design of a marine engine room simulator in consideration to ergonomics design principles. Int J Marit Eng 161(A1). https://doi.org/10.5750/ijme.v161iA1.1082

Kongsvik T, Haavik T, Bye R, Almklov P (2020) Re-boxing seamanship: From individual to systemic capabilities. Saf Sci 130:104871. https://doi.org/10.1016/j.ssci.2020.104871

Kumar A, Aswin A, Gupta H (2020) Evaluating green performance of the airports using hybrid BWM and VIKOR methodology. Tour Manag 76:103941. https://doi.org/10.1016/j.tourman.2019.06.016

Last P, Kroker M, Linsen L (2017) Generating real-time objects for a bridge ship-handling simulator based on automatic identification system data. Simul Model Pract Theory 72:69–87. https://doi.org/10.1016/j.simpat.2016.12.011

Li G, Mao R, Hildre HP, Zhang H (2020) Visual attention assessment for expert-in-the-loop training in a maritime operation simulator. IEEE Trans Industr Inform 16(1):522–531. https://doi.org/10.1109/TII.2019.2945361

Lian J, Yang X (2016) Simulation of ship-borne gps navigator based on virtual navigation environment. ICIC Express Lett B Appl 7(3):513–518. https://doi.org/10.24507/icicelb.07.03.513

Mallam SC, Nazir S, Renganayagalu SK (2019) Rethinking maritime education, training, and operations in the digital era: applications for emerging immersive technologies. J Mar Sci Eng 7(12):428. https://doi.org/10.3390/jmse7120428

Mangga C, Tibo-oc P, Lacson JB, Montaño R, Lacson JB (2021) Impact of engine room simulator as a tool for training and assessing bsmare students’ performance in engine watchkeeping. Pedagogika-Pedagogy 93(6s):88–100. https://doi.org/10.53656/ped21-6s.07eng

Martes L (2020) Best practices in competency-based education in maritime and inland navigation. TransNav 14(3):557–562. https://doi.org/10.12716/1001.14.03.06

Mishra P, Koehler MJ (2006) Technological pedagogical content knowledge: a framework for teacher knowledge. Teach Coll Rec 108(6):1017–1054. https://doi.org/10.1111/j.1467-9620.2006.00684.x

Mohammadi M, Rezaei J (2020) Bayesian best-worst method: a probabilistic group decision making model. Omega 96:102075. https://doi.org/10.1016/j.omega.2019.06.001

Moroney WF, Lilienthal MG (2009) Human factors in simulation and training. In: Human factors in simulation and training. CRC Press, pp 3–38. https://books.google.com.my/books?hl=en&lr=&id=cgT56UW6aPUC&oi=fnd&pg=PA3&ots=8kCHUWZibJ&sig=cwKCfIb06XjVlKI2nuNH7mktn4g&redir_esc=y#v=onepage&q&f=false

Murai K, Wakida S, Fukushi K, Hayashi Y, Stone LC (2009) Enhancing maritime education and training: measuring a ship navigator’s stress based on salivary amylase activity. Interact Technol Smart Educ 6(4):293–302. https://doi.org/10.1108/17415650911009272

Nabeeh NA, Abdel-Monem A, Abdelmouty A (2019) A novel methodology for assessment of hospital service according to BWM, MABAC, PROMETHEE II. Neutrosophic Sets Syst 31:63–79. https://digitalrepository.unm.edu/nss_journal/vol31/iss1/5

Nazir S, Jungefeldt S, Sharma A (2019) Maritime simulator training across Europe: a comparative study. WMU J Marit Aff 18(1):197–224. https://doi.org/10.1007/s13437-018-0157-0

Nazir S, Øvergård KI, Yang Z (2015) Towards effective training for process and maritime industries. Procedia Manuf 3:1519–1526. https://doi.org/10.1016/j.promfg.2015.07.409

Pan Y, Oksavik A, Hildre HP (2020) Making sense of maritime simulators use: a multiple case study in Norway. Technol Knowledge Learn. https://doi.org/10.1007/s10758-020-09451-9

Perrenot C, Perez M, Tran N, Jehl J-P, Felblinger J, Bresler L, Hubert J (2012) The virtual reality simulator dV-Trainer® is a valid assessment tool for robotic surgical skills. Surg Endosc Other Interv Tech 26(9):2587–2593. https://doi.org/10.1007/s00464-012-2237-0

Pohekar SD, Ramachandran M (2004) Application of multi-criteria decision making to sustainable energy planning—a review. Renew Sust Energ Rev 8(4):365–381. https://doi.org/10.1016/j.rser.2003.12.007

Przeniosło Ł, Peschke J, Hering J (2020) Improvement of good seamanship using specialized processes and algorithms onboard ships, in fleet operation centers, and in simulations. Sci J Marit Univ Szczecin 133(61):83–88. https://doi.org/10.17402/403

Renganayagalu SK, Mallam S, Hernes M (2022) Maritime Education and Training in the COVID-19 Era and Beyond. TransNav 16(1):59–69. https://doi.org/10.12716/1001.16.01.06

Renganayagalu SK, Mallam S, Nazir S, Ernstsen J, Haavardtun P (2019) Impact of simulation fidelity on student self-efficacy and perceived skill development in maritime training. TransNav 13(3):663–669. https://doi.org/10.12716/1001.13.03.25

Rezaei J (2015) Best-worst multi-criteria decision-making method. Omega 53:49–57. https://doi.org/10.1016/j.omega.2014.11.009

Ringstaff C, Kelley L (2002) The learning return on our educational technology investment: A review of findings from research. https://eric.ed.gov/?id=ED462924. Accessed 2 May 2023

Röttger S, Krey H (2021) Experimental study on the effects of a single simulator-based bridge resource management unit on attitudes, behaviour and performance. J Navig 74(5):1127–1141. https://doi.org/10.1017/S0373463321000436

Sanfilippo F (2017) A multi-sensor fusion framework for improving situational awareness in demanding maritime training. Reliab Eng Syst Saf 161:12–24. https://doi.org/10.1016/j.ress.2016.12.015

Sardar A, Garaniya V, Anantharaman M, Abbassi R, Khan F (2022) Comparison between simulation and conventional training: Expanding the concept of social fidelity. Process Saf Prog:prs.12361. https://doi.org/10.1002/prs.12361

Saus E-R, Johnsen BH, Saus JE-R, Eid J (2010) Perceived learning outcome: The relationship between experience, realism and situation awareness during simulator training. Int Marit Health 62(4):258–264. https://journals.viamedica.pl/international_maritime_health/article/view/26215. Accessed 2 May 2023

Seddiek IS (2019) Viability of using engine room simulators for evaluation machinery performance and energy management onboard ships. Int J Marit Eng 161(A3). https://doi.org/10.5750/ijme.v161iA3.1099

Sellberg C (2017a) Simulators in bridge operations training and assessment: a systematic review and qualitative synthesis. WMU J Marit Aff 16(2):247–263. https://doi.org/10.1007/s13437-016-0114-8

Sellberg C (2017b) Representing and enacting movement: the body as an instructional resource in a simulator-based environment. Educ Inf Technol 22(5):2311–2332. https://doi.org/10.1007/s10639-016-9546-1

Sellberg C (2018) From briefing, through scenario, to debriefing: the maritime instructor’s work during simulator-based training. Cogn Technol Work 20(1):49–62. https://doi.org/10.1007/s10111-017-0446-y

Sellberg C (2020) Pedagogical dilemmas in dynamic assessment situations: perspectives on video data from simulator-based competence tests. WMU J Marit Aff 19(4):493–508. https://doi.org/10.1007/s13437-020-00210-2

Sellberg C, Lindmark O, Lundin M (2019) Certifying navigational skills: a video-based study on assessments in simulated environments. TransNav 13(4):881–886. https://doi.org/10.12716/1001.13.04.23

Sellberg C, Lindmark O, Rystedt H (2018) Learning to navigate: the centrality of instructions and assessments for developing students’ professional competencies in simulator-based training. WMU J Marit Aff 17(2):249–265. https://doi.org/10.1007/s13437-018-0139-2

Sellberg C, Lindwall O, Rystedt H (2021a) The demonstration of reflection-in-action in maritime training. Reflective Pract 22(3):319–330. https://doi.org/10.1080/14623943.2021.1879771

Sellberg C, Lundin M (2017) Demonstrating professional intersubjectivity: The instructor’s work in simulator-based learning environments. Learn Cult Soc Interact 13:60–74. https://doi.org/10.1016/j.lcsi.2017.02.003

Sellberg C, Lundin M (2018) Tasks and instructions on the simulated bridge: Discourses of temporality in maritime training. Disc Stud 20(2):289–305. https://doi.org/10.1177/1461445617734956

Sellberg C, Lundin M, Säljö R (2021b) Assessment in the zone of proximal development: Simulator-based competence tests and the dynamic evaluation of knowledge-in-action. Classr Disc:1–21. https://doi.org/10.1080/19463014.2021.1981957

Sellberg C, Wiig AC (2020) Telling stories from the sea: facilitating professional learning in maritime post-simulation Debriefings. Vocat Learn 13(3):527–550. https://doi.org/10.1007/s12186-020-09250-4

Sencila V, Zažeckis R, Jankauskas A, Eitutis R (2020) The use of a full mission bridge simulator ensuring navigational safety during the Klaipeda Seaport Development. TransNav 14(2):417–424. https://doi.org/10.12716/1001.14.02.20

Sharma M, Sehrawat R (2020) A hybrid multi-criteria decision-making method for cloud adoption: Evidence from the healthcare sector. Technol Soc 61:101258. https://doi.org/10.1016/j.techsoc.2020.101258

Shen H, Zhang J, Cao H (2017) Research of marine engine room 3-D visual simulation system for the training of marine engineers. J Appl Sci Eng 20(2):229–242. https://doi.org/10.6180/jase.2017.20.2.11

Shen H, Zhang J, Yang B, Jia B (2019) Development of an educational virtual reality training system for marine engineers. Comput Appl Eng Educ 27(3):580–602. https://doi.org/10.1002/cae.22099

Standing G, Flores B, Olson D (2001) Understanding managerial preferences in selecting equipment. J Oper Manag 19:23–37. https://doi.org/10.1016/S0272-6963(00)00047-4

Su SM, Perry V, Bravo L, Kase S, Roy H, Cox K, Dasari VR (2020) Virtual and augmented reality applications to support data analysis and assessment of science and engineering. Comput Sci Eng 22(3):27–38. https://doi.org/10.1109/MCSE.2020.2971188

Tabucanon MT, Batanov DN, Verma DK (1994) Decision support system for multicriteria machine selection for flexible manufacturing systems. Comput Ind 25(2):131–143. https://doi.org/10.1016/0166-3615(94)90044-2

Torkayesh AE, Zolfani SH, Kahvand M, Khazaelpour P (2021) Landfill location selection for healthcare waste of urban areas using hybrid BWM-grey MARCOS model based on GIS. Sustain Cities Soc 67:102712. https://doi.org/10.1016/j.scs.2021.102712

Tsoukalas VD, Papachristos DA, Tsoumas NK, Mattheu EC (2008) Marine engineers’ training: Educational assessment for an engine room simulator. WMU J Marit Aff 7(2):429–448. https://doi.org/10.1007/BF03195143

Veitch B, Billard R, Patterson A (2009) Evacuation Training Using Lifeboat Simulators. Sea Technol 50:4

Wahl A, Kongsvik T, Antonsen S (2020) Balancing Safety I and Safety II: learning to manage performance variability at sea using simulator-based training. Reliab Eng Syst Saf 195:106698. https://doi.org/10.1016/j.ress.2019.106698

Wahl AM (2020) Expanding the concept of simulator fidelity: the use of technology and collaborative activities in training maritime officers. Cogn Technol Work 22(1):209–222. https://doi.org/10.1007/s10111-019-00549-4

Wahl AM, Kongsvik T (2018) Crew resource management training in the maritime industry: A literature review. WMU J Marit Aff 17(3):377–396. https://doi.org/10.1007/s13437-018-0150-7

Wu H-Y, Chen J-K, Chen I-S, Zhuo H-H (2012) Ranking universities based on performance evaluation by a hybrid MCDM model. Measurement 45(5):856–880. https://doi.org/10.1016/j.measurement.2012.02.009

Wu Y, Miwa T, Uchida M (2017a) Using physiological signals to measure operator’s mental workload in shipping – an engine room simulator study. J Mar Eng Technol 16(2):61–69. https://doi.org/10.1080/20464177.2016.1275496

Wu Y, Miwa T, Uchida M (2017b) Advantages and obstacles of applying physiological computing in real world: lessons learned from simulator based maritime training. Int J Marit Eng 159(A2). https://doi.org/10.3940/rina.ijme.2017.a2.404

Zavadskas EK, Turskis Z, Kildienė S (2014) State of art surveys of overviews on MCDM/MADM methods. Technol Econ Dev Econ 20(1):165–179. https://doi.org/10.3846/20294913.2014.892037

Funding

Open access funding provided by University Of South-Eastern Norway. This study has received funding from the Directorate for Internationalisation and Quality Development in Higher Education (HK-dir) in Norway. Project code: SFU/10021-COAST.


Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tusher, H.M., Munim, Z.H. & Nazir, S. An evaluation of maritime simulators from technical, instructional, and organizational perspectives: a hybrid multi-criteria decision-making approach. WMU J Marit Affairs 23, 165–194 (2024). https://doi.org/10.1007/s13437-023-00318-1


DOI: https://doi.org/10.1007/s13437-023-00318-1