Abstract

Montgomery’s and Barrett’s modular multiplication algorithms are widely used in modular exponentiation algorithms, e.g. to compute RSA or ECC operations. While Montgomery’s multiplication algorithm has been studied extensively in the literature and many side-channel attacks have been detected, to the best of our knowledge no thorough analysis exists for Barrett’s multiplication algorithm. This article closes this gap. For both Montgomery’s and Barrett’s multiplication algorithms, differences in the execution times are caused by conditional integer subtractions, so-called extra reductions. Barrett’s multiplication algorithm allows up to two extra reductions, and this feature increases the mathematical difficulties significantly. We formulate and analyse a two-dimensional Markov process, from which we deduce relevant stochastic properties of Barrett’s multiplication algorithm within modular exponentiation algorithms. This allows us to transfer the timing attacks and local timing attacks (where a second side-channel attack reveals the execution times of the particular modular squarings and multiplications) on Montgomery’s multiplication algorithm to attacks on Barrett’s algorithm. However, there are also differences. Barrett’s multiplication algorithm requires additional attack substeps, and the attack efficiency is much more sensitive to variations of the parameters. We treat timing attacks on RSA with CRT, on RSA without CRT, and on Diffie–Hellman, as well as local timing attacks against these algorithms in the presence of basis blinding. Experiments confirm our theoretical results.

1 Introduction

In his famous pioneering paper [19], Kocher introduced timing analysis. Two years later, [13] presented a timing attack on an early version of the Cascade chip. Both papers attacked unprotected RSA implementations which did not apply the Chinese remainder theorem (CRT). While in [19], the execution times of the particular modular multiplications and squarings are at least approximately normally distributed, this is not the case for the implementation in [13] since the Cascade chip applied the widespread Montgomery multiplication algorithm [21]. Due to conditional integer subtractions (so-called extra reductions), the execution times can only attain two values, and the probability that an extra reduction occurs depends on the preceding Montgomery operations within the modular exponentiation. This fact caused substantial additional mathematical difficulties.

In [24], the random behaviour of the occurrence of extra reductions within a modular exponentiation was studied. The random extra reductions were modelled by a non-stationary time-discrete stochastic process. The analysis of this stochastic process (combined with an efficient error detection and correction strategy) made it possible to reduce the sample size (i.e. the number of timing measurements) drastically, namely from between 200,000 and 300,000 [13] down to 5000 [28].

The analysis of the above-mentioned stochastic process turned out to be very fruitful also beyond this attack scenario. First, the insights into the probabilistic nature of the occurrence of extra reductions within modular exponentiations enabled the development of a completely new timing attack against RSA with CRT and Montgomery’s multiplication algorithm [22]. This attack was extended to an attack on the sliding-window-based RSA implementation in OpenSSL v.0.9.7b [8], which caused a patch. The efficiency of this attack (in terms of the sample size) was increased by a factor of \(\approx 10\) in [3]. Years later, it was shown that exponent blinding (cf. [19], Sect. 10) does not suffice to prevent this type of timing attack [26, 27].

Moreover, in [2, 14, 23] local timing attacks were considered. There, a side-channel attack (e.g. a power attack or an instruction cache attack) is carried out first, which yields the execution times of the particular Montgomery operations. This additional information (compared to ‘pure’ timing attacks) makes it possible to overcome basis blinding (a.k.a. message blinding, cf. [19], Sect. 10), and the attack works against both RSA with CRT and RSA without CRT. We mention that [2] led to a patch of OpenSSL v.0.9.7e.

Barrett’s (modular) multiplication algorithm (a.k.a. Barrett reduction) [4] is a well-known alternative to Montgomery’s algorithm. It is described in several standard manuals covering RSA, Diffie–Hellman (DH) or elliptic curve cryptosystems (e.g. [10, 20]). The efficiency (e.g. running time) of Barrett’s algorithm compared to Montgomery’s algorithm has been analysed for both software implementations [6] and hardware implementations [18]. However, to our knowledge, there do not exist thorough security evaluations of Barrett’s multiplication algorithm. In this paper, we close this gap. For the sake of comparison with previous work on Montgomery’s algorithm, we focus again on RSA with and without CRT. In addition, we cover static DH, which can be handled almost identically to RSA without CRT.

Similar to Montgomery’s algorithm, timing differences in Barrett’s multiplication algorithm are caused by conditional subtractions (so-called extra reductions), which suggests applying similar mathematical methods. However, for Barrett’s algorithm, the mathematical challenges are significantly greater. One reason is that more than one extra reduction may occur. In particular, in place of a stochastic process over \(\{0,1\}\), a two-dimensional Markov process over \([0,1)\times \{0,1,2\}\) has to be analysed and understood. Again, probabilities can be expressed by multidimensional integrals over \([0,1)^\ell \), but the integrands are less suitable for explicit computations than in the Montgomery case. This causes additional numerical difficulties, in particular for the local attacks, where \(\ell \) is usually very large. Our results show many parallels to the Montgomery case, and after suitable modifications, all the known attacks on Montgomery’s algorithm can be transferred to Barrett’s multiplication algorithm. However, there are also significant differences. First of all, for Barrett’s multiplication algorithm the attack efficiency is very sensitive to deviations of the modulus (i.e. of the RSA primes \(p_1\) and \(p_2\) if the CRT is applied), and attacks on RSA with CRT require additional attack steps.

The paper is organized as follows: in Sect. 2, we study the stochastic behaviour of the execution times of Barrett’s multiplication algorithm in the context of the square & multiply exponentiation algorithm. We develop, prove and collect results, which will be needed later to perform the attacks. In Sect. 3, properties of Montgomery’s and Barrett’s multiplication algorithms are compared, and furthermore, a variant of Barrett’s algorithm is investigated. In Sect. 4, the particular attacks are described and analysed, while Sect. 5 provides experimental results which confirm the theoretical considerations. Interesting in its own right is also an efficient look-ahead strategy. Finally, Sect. 6 discusses countermeasures.

2 Stochastic modelling of modular exponentiation

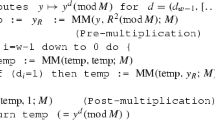

In this section, we analyse the stochastic timing behaviour of modular exponentiation algorithms when Barrett’s multiplication is applied. We consider a basic version of Barrett’s multiplication algorithm (cf. Algorithm 1). The slightly optimized version of this algorithm due to Barrett [4] will be discussed in Sect. 3.2.2 (cf. Algorithm 5), where we show that the same analysis applies, albeit with additional algorithmic noise. Since we assume that Steps 1 to 3 of Algorithm 1 run in constant time for fixed modulus length and base (see Justification of Assumption 2 in Sect. 3.2.1), we focus on the stochastic behaviour of the number of extra reductions in Algorithm 1. This knowledge will be needed in Sect. 4 when we consider concrete attacks. In Sect. 2.1, we investigate Barrett’s multiplication algorithm in isolation, and in Sect. 2.2, we use our results to study the square & multiply exponentiation algorithm if Barrett’s multiplication is applied. This approach can be transferred to table-based exponentiation algorithms. Overall, we aim at results equivalent to those already known for Montgomery’s multiplication algorithm. We reach this goal, but the technical difficulties are significantly larger than they are for Montgomery’s multiplication algorithm (cf. Sect. 3.1). In Sect. 2.3, we summarize the facts that are relevant for the attacks in Sect. 4. This allows the reader to skip the technical Sects. 2.1 and 2.2 during the first reading of this paper.

2.1 Barrett’s modular multiplication algorithm

In this subsection, we study the basic version of Barrett’s modular multiplication algorithm (cf. Algorithm 1).

Definition 1

Let \(\mathbb {N}:= \{0, 1, \dotsc \}\). For \(M \in \mathbb {N}_{\ge 2}\), let \(\mathbb {Z}_M := \{0, 1, \dotsc , M-1\}\). Given \(x \in \mathbb {Z}\), we denote by \(x \bmod {M}\) the unique integer in \(\mathbb {Z}_M\) congruent to x modulo M, i.e. \(x \bmod {M} = x - \lfloor x/M \rfloor M\). We define the fractional part of a real number \(x \in \mathbb {R}\) by \(\{x\} := x - \lfloor x \rfloor \in [0, 1)\).

Let \(M \in \mathbb {N}_{\ge 2}\) be a modulus of length \(k = \lfloor \log _b M \rfloor + 1\) in base \(b \in \mathbb {N}_{\ge 2}\), i.e. we have \(b^{k-1} \le M \le b^k - 1\). The multiplication modulo M of two integers \(x,y \in \mathbb {Z}_M\) can be computed by an integer multiplication, followed by a modular reduction. The resulting remainder is \(r := (x \cdot y) \bmod {M} = z - q M\), where \(z := xy\) and \(q := \lfloor z/M \rfloor \). The computation of q is the most expensive part, because it involves an integer division. The idea of Barrett’s multiplication algorithm is to approximate q by

$$\widetilde{q} := \left\lfloor \frac{\lfloor z/b^{k-1} \rfloor \, \lfloor b^{2k}/M \rfloor }{b^{k+1}} \right\rfloor .$$

If the integer reciprocal \(\mu := \lfloor b^{2k}/M \rfloor \) of M has been precomputed and if b is a power of 2, then \(\widetilde{q}\) can be computed using only multiplications and bit shifts, which on common computer architectures are cheaper operations than divisions. From \(\widetilde{q}\) an approximation \(\widetilde{r} := z - \widetilde{q}M\) of r can be obtained. Since \(\widetilde{q}\) can be smaller than q, it may be necessary to correct \(\widetilde{r}\) by some conditional subtractions of M, which we call extra reductions. This leads to Algorithm 1.
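The steps just described can be sketched in Python as follows. This is a minimal illustration rather than a verbatim copy of Algorithm 1: the function name `barrett_mul`, the default base `b = 2`, and the on-the-fly computation of `k` and `mu` (which a real implementation would precompute once per modulus) are assumptions made for readability.

```python
def barrett_mul(x: int, y: int, M: int, b: int = 2) -> tuple[int, int]:
    """Return ((x*y) mod M, number of extra reductions) for 0 <= x, y < M."""
    # k is the length of M in base b, i.e. b**(k-1) <= M <= b**k - 1.
    k = 1
    while b**k <= M:
        k += 1
    mu = b ** (2 * k) // M                # integer reciprocal of M
    z = x * y                             # plain integer product
    # Approximate quotient: only multiplications and divisions by powers of b
    # (bit shifts if b is a power of 2).
    q_tilde = ((z // b ** (k - 1)) * mu) // b ** (k + 1)
    r = z - q_tilde * M                   # approximate remainder, r >= z mod M
    extra = 0
    while r >= M:                         # conditional subtractions (extra reductions)
        r -= M
        extra += 1
    return r, extra
```

Calling `barrett_mul(x, y, M)` returns the reduced product together with the count of conditional subtractions, which is the quantity whose distribution is analysed in the remainder of this section.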

Barrett showed that at most two extra reductions are required in Algorithm 1. The following lemma provides an exact characterization of the number of extra reductions and is at the heart of our subsequent analysis. In particular, the lemma identifies two important constants \(\alpha \in [0,1)\) and \(\beta \in (b^{-1},1]\) associated with M and b.

Lemma 1

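On input \(x, y \in \mathbb {Z}_M\), the number of extra reductions carried out in Algorithm 1 is

$$\left\lceil \alpha \frac{x}{M} \frac{y}{M} + \beta \left\{ \frac{xy}{b^{k-1}} \right\} - \left\{ \frac{xy}{M} \right\} \right\rceil \in \{0,1,2\} , \tag{1}$$

where

$$\alpha := \frac{M^2}{b^{2k}} \left\{ \frac{b^{2k}}{M} \right\} \in [0,1) \quad \text {and} \quad \beta := \frac{\lfloor b^{2k}/M \rfloor }{b^{k+1}} \in (b^{-1},1] .$$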

Proof

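Set \(z := xy\), \(q := \lfloor z/M \rfloor \), and \(\widetilde{q} := \lfloor \lfloor z/b^{k-1} \rfloor \mu /b^{k+1} \rfloor \). Since \(z \bmod {M} = z - qM\), the number of extra reductions is

$$\begin{aligned} \frac{(z - \widetilde{q}M) - (z - qM)}{M}&= q - \widetilde{q} \\&= \left\lfloor \frac{z}{M} \right\rfloor - \left\lfloor \frac{z}{M} - \alpha \frac{x}{M} \frac{y}{M} - \beta \left\{ \frac{z}{b^{k-1}} \right\} \right\rfloor \\&= \left\lceil \alpha \frac{x}{M} \frac{y}{M} + \beta \left\{ \frac{z}{b^{k-1}} \right\} - \left\{ \frac{z}{M} \right\} \right\rceil , \end{aligned}$$

where the second equality uses \(\lfloor z/b^{k-1} \rfloor = z/b^{k-1} - \{z/b^{k-1}\}\) and \(\mu = b^{2k}/M - \{b^{2k}/M\}\).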

Since \(\alpha ,\beta \in [0,1]\), this number of extra reductions is in \(\{0,1,2\}\). Finally, we note that \(\lfloor b^{2k}/M\rfloor \ge \lfloor b^{2k}/(b^k-1)\rfloor \ge b^k+1\), hence \(\beta > b^{-1}\). \(\square \)
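Lemma 1 can be checked with exact rational arithmetic. The sketch below compares the directly counted number of extra reductions, \(q - \widetilde{q}\), with the ceiling expression \(\lceil \alpha (x/M)(y/M) + \beta \{xy/b^{k-1}\} - \{xy/M\} \rceil \), assuming the constants \(\alpha = (M^2/b^{2k})\{b^{2k}/M\}\) and \(\beta = \lfloor b^{2k}/M \rfloor / b^{k+1}\). All function names are hypothetical and chosen only for this illustration.

```python
from fractions import Fraction
from math import ceil, floor


def frac(value: Fraction) -> Fraction:
    """Fractional part {value} = value - floor(value)."""
    return value - floor(value)


def modulus_length(M: int, b: int) -> int:
    """Length k of M in base b, so that b**(k-1) <= M <= b**k - 1."""
    k = 1
    while b**k <= M:
        k += 1
    return k


def counted_extra_reductions(x: int, y: int, M: int, b: int = 2) -> int:
    """q - q_tilde: how often M must be subtracted from z - q_tilde*M."""
    k = modulus_length(M, b)
    z = x * y
    q_tilde = ((z // b ** (k - 1)) * (b ** (2 * k) // M)) // b ** (k + 1)
    return z // M - q_tilde


def predicted_extra_reductions(x: int, y: int, M: int, b: int = 2) -> int:
    """Ceiling expression of Lemma 1, evaluated without rounding errors."""
    k = modulus_length(M, b)
    alpha = Fraction(M * M, b ** (2 * k)) * frac(Fraction(b ** (2 * k), M))
    beta = Fraction(b ** (2 * k) // M, b ** (k + 1))
    z = x * y
    inner = (alpha * Fraction(x, M) * Fraction(y, M)
             + beta * frac(Fraction(z, b ** (k - 1)))
             - frac(Fraction(z, M)))
    return ceil(inner)
```

Using `Fraction` instead of floating-point numbers matters here, because the ceiling is sensitive to rounding whenever the inner sum is close to an integer.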

Remark 1

Note that \(\alpha =0\) if and only if M divides \(b^{2k}\). In order to exclude this corner case (which is not relevant to our applications anyway), we assume \(\alpha > 0\) for the remainder of this paper. Typically, \(b=2^{\mathsf {ws}}\) for some word size \(\mathsf {ws}\ge 1\) and \(\alpha =0\) can only happen if M is a power of two, and then, modular multiplication is easy anyway. More special cases of \(\alpha \) and \(\beta \) will be discussed in Sect. 3.2.3.

We first study the distribution of the number of extra reductions which are needed in Algorithm 1 for random inputs. To this end, we introduce the following stochastic model. Random variables are denoted by capital letters, and realizations of these random variables (i.e. values taken on by these random variables) are denoted with the corresponding small letters.

Stochastic Model 1

Let \(s, t \in [0, 1)\). We define the random variable

$$R(s,t) := \lceil \alpha st + \beta U - V \rceil , \tag{2}$$

where U, V are independent, uniformly distributed random variables on [0, 1).

A realization r of R(s, t) represents the random number of extra reductions required in Algorithm 1 for normalized inputs \(x/M, y/M\in M^{-1}\mathbb {Z}_M\) within a small neighbourhood of s and t in [0, 1).

Justification of Stochastic Model 1: Assume that N(s) and N(t) are small neighbourhoods of s and t in [0, 1), respectively. Let \(s_{\min }, s' \in N(s) \cap M^{-1}\mathbb {Z}_M\) such that \(s_{\min }\) is minimal. Analogously, let \(t_{\min }, t' \in N(t) \cap M^{-1}\mathbb {Z}_M\) such that \(t_{\min }\) is minimal. Then, there are \(m,n \in \mathbb {Z}\) such that \(s' = s_{\min } + m/M\) and \(t' = t_{\min } + n/M\). Then, \(x' := Ms', x_{\min } := Ms_{\min }, y' := Mt', y_{\min }:=Mt_{\min }\) are integers in \(\mathbb {Z}_M\) such that

The integers m and n assume values in

respectively. For cryptographically relevant modulus sizes, these numbers are very large so that one may assume that the admissible terms \(\{(m y_{\min } + n x_{\min } + mn) \bmod {M}\}\) are essentially uniformly distributed on \(\mathbb {Z}_M\), justifying the model assumption that the random variable V is uniformly distributed on [0, 1). The assumptions on the uniformity of U and the independence of U and V have analogous justifications. \(\square \)

Remark 2

(i) In Stochastic Model 1 and Stochastic Model 2, we follow a strategy which has been very successful in the analysis of Montgomery’s multiplication algorithm. Of course, the number of extra reductions needed for a particular computation \((xy)\bmod {M}\) is deterministic. On the other hand, by Lemma 1 the number of extra reductions depends only on whether in (1) the sum within the brackets \(\lceil \cdot \rceil \) is contained in \((-1,0]\), (0, 1] or (1, 2). We exploit the fact that, concerning the number of extra reductions, \(x,x'\in \mathbb {Z}_M\) have similar stochastic properties if \(\vert x/M - x'/M\vert \) is small in \(\mathbb {R}\). Furthermore, for moduli M that are used in cryptography even very small intervals in [0, 1) contain a gigantic number of elements of \(M^{-1}\mathbb {Z}_M\).

(ii) For extremely small input x or y of Algorithm 1, Stochastic Model 1 may not apply. In particular, if one of the inputs x, y is 0 or 1, the integer product \(x \cdot y\) is already reduced modulo M; hence, \(\widetilde{q} = 0\) and no extra reductions occur (cf. Remark 4).

Definition 2

For \(x \in \mathbb {R}\), we write \((x)_+ := \max \{0, x\}\). Moreover, we set \((x)_+^0 := 1_{\{x \ge 0\}}\) and \((x)_+^n := ((x)_+)^n\) for \(n \in \mathbb {N}_{>0}\).

Lemma 2

Let \(s, t \in [0,1)\).

(i) The term \((an)^{-1} (ax+b)_+^n\) is an antiderivative of \((ax+b)_+^{n-1}\) for all \(a,b \in \mathbb {R}\) with \(a \ne 0\) and all \(n \in \mathbb {N}_{>0}\).

(ii) Let \(\mathscr {B}([0,1))\) be the Borel \(\sigma \)-algebra on [0, 1). For \(r \in \mathbb {Z}_3\) and \(B \in \mathscr {B}([0,1))\), we have

$$\begin{aligned}&\Pr \bigl ( R(s,t) \le r, V \in B \bigr ) \\&\quad = \beta ^{-1} \int _B \bigl ( (r - \alpha st + v)_+ - (r -\alpha st - \beta + v)_+ \bigr ) \mathrm{d}v. \end{aligned}$$

(iii) For \(r \in \mathbb {Z}_3\), we have

$$\begin{aligned}&\Pr \bigl ( R(s,t) \le r \bigr ) \\&\quad ={} (2\beta )^{-1} \bigl ( - (r - \alpha st)_+^2 + (r - \alpha st - \beta )_+^2 \\&\qquad + (r - \alpha st + 1)_+^2 - (r -\alpha st - \beta + 1)_+^2 \bigr ) . \end{aligned}$$

(iv) We have \({{\,\mathrm{E}\,}}\bigl (R(s, t)\bigr ) = \alpha st + \beta /2\).

(v) We have \({{\,\mathrm{Var}\,}}\bigl (R(s,t)\bigr ) = \alpha st + \beta /2 - (\alpha st + \beta /2)^2 + \beta ^{-1} (\alpha st + \beta - 1)_+^2\).

Proof

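For assertion (i), see, for example, [9]. Now let \(r \in \mathbb {Z}_3\) and \(v \in [0,1)\). By (2), we have

$$\begin{aligned} \Pr \bigl ( R(s,t) \le r \mid V = v \bigr )&= \Pr \bigl ( \alpha st + \beta U - v \le r \bigr ) \\&= \Pr \bigl ( U \le \beta ^{-1} (r - \alpha st + v) \bigr ) \\&= \beta ^{-1} \bigl ( (r - \alpha st + v)_+ - (r - \alpha st - \beta + v)_+ \bigr ) . \end{aligned}$$

Now let \(B \in \mathscr {B}([0,1))\). Since V is uniformly distributed on [0, 1), we obtain

$$\begin{aligned} \Pr \bigl ( R(s,t) \le r, V \in B \bigr )&= \int _B \Pr \bigl ( R(s,t) \le r \mid V = v \bigr ) \mathrm{d}v \\&= \beta ^{-1} \int _B \bigl ( (r - \alpha st + v)_+ - (r - \alpha st - \beta + v)_+ \bigr ) \mathrm{d}v . \end{aligned}$$

Setting \(B=[0,1)\) and applying (i), we get

$$\begin{aligned} \Pr \bigl ( R(s,t) \le r \bigr )&= (2\beta )^{-1} \bigl ( - (r - \alpha st)_+^2 + (r - \alpha st - \beta )_+^2 \\&\quad + (r - \alpha st + 1)_+^2 - (r - \alpha st - \beta + 1)_+^2 \bigr ) . \end{aligned}$$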

Distinguishing the cases \(\alpha st + \beta \le 1\) and \(\alpha st + \beta > 1\), the expectation and variance of R(s, t) can be determined by careful but elementary computations. \(\square \)
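The "careful but elementary computations" can be cross-checked numerically. The following sketch evaluates the distribution function from Lemma 2 (iii), derives the probabilities of 0, 1 and 2 extra reductions from it, and computes mean and variance, which can then be compared with the closed forms in (iv) and (v). The helper names (`pos`, `cdf_extra_reductions`, `mean_and_variance`) are hypothetical.

```python
def pos(x: float, n: int = 1) -> float:
    """(x)_+^n as in Definition 2; for n = 0 this is the indicator of x >= 0."""
    if n == 0:
        return 1.0 if x >= 0 else 0.0
    return max(0.0, x) ** n


def cdf_extra_reductions(r: int, s: float, t: float, alpha: float, beta: float) -> float:
    """Pr(R(s,t) <= r) according to Lemma 2 (iii)."""
    c = r - alpha * s * t
    total = -pos(c, 2) + pos(c - beta, 2) + pos(c + 1, 2) - pos(c - beta + 1, 2)
    return total / (2 * beta)


def mean_and_variance(s: float, t: float, alpha: float, beta: float) -> tuple[float, float]:
    """Mean and variance of R(s,t), computed from the distribution function."""
    f0 = cdf_extra_reductions(0, s, t, alpha, beta)
    f1 = cdf_extra_reductions(1, s, t, alpha, beta)
    p1 = f1 - f0          # probability of exactly one extra reduction
    p2 = 1.0 - f1         # probability of exactly two extra reductions
    mean = p1 + 2 * p2
    variance = p1 + 4 * p2 - mean**2
    return mean, variance
```

For parameters with \(\alpha st + \beta \le 1\), the value `p2` vanishes, which matches the observation that two extra reductions require \(\alpha + \beta > 1\).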

Lemma 3

Let S be a uniformly distributed random variable on [0, 1) and let \(t \in (0,1)\).

(i) For \(r \in \mathbb {Z}_3\), we have

$$\begin{aligned}&\Pr \bigl ( R(S,t) \le r \bigr ) \\&\quad ={} (6\alpha \beta t)^{-1} \bigl ( - (r)_+^3 + (r - \alpha t)_+^3 + (r - \beta )_+^3 \\&\qquad + (r + 1)_+^3 - (r - \alpha t - \beta )_+^3 - (r - \alpha t + 1)_+^3 \\&\qquad - (r - \beta + 1)_+^3 + (r - \alpha t - \beta + 1)_+^3 \bigr ) . \end{aligned}$$

(ii) We have \({{\,\mathrm{E}\,}}\bigl (R(S, t)\bigr ) = \alpha t/2 + \beta /2\).

(iii) We have \({{\,\mathrm{Var}\,}}\bigl (R(S, t)\bigr ) = \alpha t/2 + \beta /2 - (\alpha t/2 + \beta /2)^2 + (3\alpha \beta t)^{-1}(\alpha t + \beta - 1)_+^3\).

Proof

Let \(r \in \mathbb {Z}_3\). We have

By Lemma 2 (iii), we obtain

Distinguishing the cases \(\alpha t + \beta \le 1\) and \(\alpha t + \beta > 1\), the expectation and variance of R(S, t) can be determined by careful but elementary computations. \(\square \)

Lemma 4

Let S be a uniformly distributed random variable on [0, 1).

(i) For \(r \in \mathbb {Z}_3\), we have

$$\begin{aligned} \Pr \bigl ( R(S,S)&\le r \bigr ) \\ ={}&(30\alpha ^{1/2}\beta )^{-1} \bigl ( - 15 r^2 \min \{\alpha , (r)_+\}^{1/2} \\&+ 10 r \min \{\alpha , (r)_+\}^{3/2} \\&- 3 \min \{\alpha , (r)_+\}^{5/2} \\&+ 15 (r-\beta )^2 \min \{\alpha , (r-\beta )_+\}^{1/2} \\&- 10 (r-\beta ) \min \{\alpha , (r-\beta )_+\}^{3/2} \\&+ 3 \min \{\alpha , (r-\beta )_+\}^{5/2} \\&+ 15 (r+1)^2 \min \{\alpha , (r+1)_+\}^{1/2} \\&- 10 (r+1) \min \{\alpha , (r+1)_+\}^{3/2} \\&+ 3 \min \{\alpha , (r+1)_+\}^{5/2} \\&- 15 (r-\beta +1)^2 \min \{\alpha , (r-\beta +1)_+\}^{1/2} \\&+ 10 (r-\beta +1) \min \{\alpha , (r-\beta +1)_+\}^{3/2} \\&- 3 \min \{\alpha , (r-\beta +1)_+\}^{5/2} \bigr ) . \end{aligned}$$

(ii) We have \({{\,\mathrm{E}\,}}\bigl (R(S,S)\bigr ) = \alpha /3 + \beta /2\).

(iii) If \(\alpha + \beta \le 1\), then \({{\,\mathrm{Var}\,}}\bigl (R(S,S)\bigr ) = \alpha /3 + \beta /2 - (\alpha /3 + \beta /2)^2\). If \(\alpha + \beta > 1\), then \({{\,\mathrm{Var}\,}}\bigl (R(S,S)\bigr ) = \alpha /3 + \beta /2 - (\alpha /3 + \beta /2)^2 + (15\beta )^{-1}\bigl ( 15(1-\beta )^2 - 10\alpha (1-\beta ) + 3\alpha ^2 \bigr ) - 8 (15\alpha ^{1/2}\beta )^{-1} (1-\beta )^{5/2}\).

Proof

Let \(r \in \mathbb {Z}_3\). We have

By Lemma 2 (iii), we obtain

For \(c \in \{r, r-\beta , r+1, r-\beta +1\}\), we have

This implies (i). Distinguishing the cases \(\alpha + \beta \le 1\) and \(\alpha + \beta > 1\), the expectation and variance of R(S, S) can be determined by careful but elementary computations. \(\square \)
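The closed form in Lemma 4 (i) is the average of the distribution function from Lemma 2 (iii) over \(s \in [0,1)\) with \(t = s\). The sketch below implements the closed form and, for comparison, a simple midpoint-rule approximation of that average. Function names and the number of integration steps are assumptions made for this illustration.

```python
def cdf_squaring(r: int, alpha: float, beta: float) -> float:
    """Pr(R(S,S) <= r) according to the closed form of Lemma 4 (i)."""
    def term(c: float) -> float:
        m = min(alpha, max(0.0, c))
        return 15 * c**2 * m**0.5 - 10 * c * m**1.5 + 3 * m**2.5

    total = -term(r) + term(r - beta) + term(r + 1) - term(r - beta + 1)
    return total / (30 * alpha**0.5 * beta)


def cdf_squaring_numeric(r: int, alpha: float, beta: float, steps: int = 20000) -> float:
    """Midpoint-rule average over s of the distribution function of R(s,s)."""
    def sq_pos(x: float) -> float:
        return max(0.0, x) ** 2

    acc = 0.0
    for i in range(steps):
        s = (i + 0.5) / steps
        c = r - alpha * s * s
        acc += (-sq_pos(c) + sq_pos(c - beta) + sq_pos(c + 1) - sq_pos(c - beta + 1)) / (2 * beta)
    return acc / steps


def mean_squaring(alpha: float, beta: float) -> float:
    """E(R(S,S)) computed from the closed-form distribution function."""
    return (1 - cdf_squaring(0, alpha, beta)) + (1 - cdf_squaring(1, alpha, beta))
```

Comparing `mean_squaring(alpha, beta)` with \(\alpha /3 + \beta /2\) gives a quick consistency check between parts (i) and (ii) of the lemma.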

The number of extra reductions in Barrett’s multiplication algorithm depends decisively on the parameters \(\alpha \) and \(\beta \). In Table 1, we illustrate this phenomenon with several numerical examples.

Remark 3

(i) For a random modulus M that is uniformly distributed on \(\{b^{k-1}, \dotsc , b^k-1\}\), the parameters \((\alpha , \beta )\) can be modelled as realizations of the random vector \((A, B) := (X^2 Y, (bX)^{-1})\), where X and Y denote independent random variables that are uniformly distributed on \([b^{-1}, 1)\) and [0, 1), respectively. We have

$$\begin{aligned} {{\,\mathrm{E}\,}}(A)&= \frac{1}{2} \frac{1}{1-b^{-1}} \int _{b^{-1}}^1 x^2 \mathrm{d}x = \frac{1}{6} (1+b^{-1}+b^{-2}) , \\ {{\,\mathrm{E}\,}}(B)&= \frac{b^{-1}}{1-b^{-1}}\int _{b^{-1}}^1 x^{-1} \mathrm{d}x = \frac{\log (b)}{b-1} . \end{aligned}$$

For example, we get \(\alpha \approx 0.29 \) and \(\beta \approx 0.69\) on average if \(b=2\).

(ii) By (2), two extra reductions can only occur if \(\alpha + \beta > 1\), and the probability of this event increases for larger sums \(\alpha + \beta \). This sum can be bounded by

$$\begin{aligned} b^{-1}< \alpha + \beta \le \frac{M^2}{b^{2k}} + \frac{b^{k-1}}{M} < 1 + b^{-1} ; \end{aligned}$$

in particular, \(\alpha \) and \(\beta \) cannot attain their individual maxima simultaneously. For \(b=2\), we numerically determined \(\Pr (A+B > 1) \approx 0.5\), which means that two extra reductions do occur for roughly one half of the moduli in this case.

(iii) Although the probability of two extra reductions can be very small or even 0, this does not simplify the stochastic representations (2) and (3) in Stochastic Model 1 and Stochastic Model 2. In particular, it does not simplify the analysis unless \(\alpha \approx 0\) or \(\beta \approx 0\) (cf. Sect. 3.2.3).

(iv) Although the impact of the extra reductions in terms of running time efficiency is only marginal for both Barrett’s multiplication algorithm and Montgomery’s multiplication algorithm, extra reductions are the source of several side-channel attacks (cf. Sect. 4).
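The figures in Remark 3 (i) and (ii) can be reproduced with a few lines of Python. The sketch below evaluates the closed-form expectations of A and B and estimates \(\Pr (A + B > 1)\) by Monte Carlo sampling from the model \((A, B) = (X^2 Y, (bX)^{-1})\). The function names, the sample size and the fixed seed are assumptions made for this illustration.

```python
import math
import random


def expected_alpha(b: int) -> float:
    """E(A) = (1 + 1/b + 1/b^2) / 6 from Remark 3 (i)."""
    return (1 + 1 / b + 1 / b**2) / 6


def expected_beta(b: int) -> float:
    """E(B) = log(b) / (b - 1) from Remark 3 (i), natural logarithm."""
    return math.log(b) / (b - 1)


def estimate_two_reduction_share(b: int, samples: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate of Pr(A + B > 1) with X ~ U[1/b, 1) and Y ~ U[0, 1)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        x = rng.uniform(1 / b, 1.0)
        y = rng.random()
        if x * x * y + 1 / (b * x) > 1:
            hits += 1
    return hits / samples
```

For \(b = 2\), `expected_alpha(2)` and `expected_beta(2)` evaluate to roughly 0.29 and 0.69, in line with the values quoted above.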

2.2 Modular exponentiation (square and multiply algorithms)

Now we consider the left-to-right binary exponentiation algorithm (see Algorithm 2), where modular squarings and multiplications are performed using Barrett’s algorithm (see Algorithm 1). Our goal is to define and analyse a stochastic process which allows us to study the stochastic behaviour of the execution time of Algorithm 2. This subsection provides a sequence of technical lemmata which will be needed later.

Definition 3

Let \(d \in \mathbb {N}_{>0}\). The binary representation of d is denoted by \((d_{\ell -1},\dotsc ,d_0)_2\) with \(d_{\ell -1}=1\) and \(\ell \) is called bit-length of d. The Hamming weight of d is defined as \({{\,\mathrm{ham}\,}}(d) := d_0 + \cdots + d_{\ell -1}\).

Let \(y \in \mathbb {Z}_M\) be an input basis of Algorithm 2. We denote the intermediate values computed in the course of Algorithm 2 by \(x_0, x_1, \dotsc \in \mathbb {Z}_M\) and associate the sequence of squaring and multiplication operations with a string \(\mathtt {O}_1\mathtt {O}_2 \cdots \) over the alphabet \(\{\mathtt {S},\mathtt {M}\}\). For the sake of defining an infinite stochastic process, we assume that Algorithm 2 may run forever; hence, \(x_0, x_1, \dotsc \) is an infinite sequence and \(\mathtt {O}_1\mathtt {O}_2 \cdots \in \{\mathtt {S},\mathtt {M}\}^{\omega }\). Consequently, we have \(x_0 = y\) and

$$x_{n+1} = {\left\{ \begin{array}{ll} x_n^2 \bmod {M} , &{} \text {if } \mathtt {O}_{n+1} = \mathtt {S} , \\ x_n y \bmod {M} , &{} \text {if } \mathtt {O}_{n+1} = \mathtt {M} , \end{array}\right. }$$

for all \(n \in \mathbb {N}\). Note that \(\mathtt {O}_1\mathtt {O}_2 \cdots \) does not contain the substring \(\mathtt {MM}\). We will refer to strings \(\mathtt {O}_1\mathtt {O}_2 \cdots \) without substring \(\mathtt {MM}\) as operation sequences.

Note that the square and multiply algorithm applied to an exponent d corresponds to a particular finite (d-specific) operation sequence \(\mathtt {O}_1\mathtt {O}_2\cdots \mathtt {O}_{\ell +{{\,\mathrm{ham}\,}}(d)-2}\), where \(\ell \) is the bit-length of d.
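The correspondence between an exponent d and its finite operation sequence can be made concrete with a short helper. The sketch assumes, consistently with \(x_0 = y\) and the stated length \(\ell + {{\,\mathrm{ham}\,}}(d) - 2\), that the leading exponent bit only initializes the accumulator and triggers no operation. The function name `operation_sequence` is hypothetical.

```python
def operation_sequence(d: int) -> str:
    """Return the S/M string of left-to-right square and multiply for d >= 1."""
    if d < 1:
        raise ValueError("exponent must be positive")
    bits = bin(d)[2:]            # binary digits d_{l-1} ... d_0 with d_{l-1} = 1
    ops = []
    for bit in bits[1:]:         # the leading bit needs no operation (x_0 = y)
        ops.append("S")          # every remaining bit causes a squaring
        if bit == "1":
            ops.append("M")      # a set bit additionally causes a multiplication by y
    return "".join(ops)
```

Since every `M` is preceded by an `S`, the returned string never contains the substring `MM`, as required of an operation sequence.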

Stochastic Model 2

Let \(t \in [0,1)\) and let \(\mathtt {O}_1 \mathtt {O}_2 \cdots \in \{\mathtt {S},\mathtt {M}\}^{\omega }\) be an operation sequence. We define a stochastic process \((S_n, R_n)_{n \in \mathbb {N}}\) on the state space \(\mathscr {S} := [0, 1) \times \mathbb {Z}_3\) as follows. Let \(S_0, S_1, \dotsc \) be independent random variables on [0, 1). The random variable \(S_0\) is uniformly distributed in \(N(s_0)\cap M^{-1}\mathbb {Z}_M\), where \(N(s_0)\) is a small neighbourhood of \(s_0=y/M\). Further, the random variables \(S_1, S_2, \dotsc \) are uniformly distributed random variables on [0, 1), while \(R_0\) is arbitrary (e.g. \(R_0=0\)), and for \(n \in \mathbb {N}\) we define

$$R_{n+1} := {\left\{ \begin{array}{ll} \lceil \alpha S_n^2 + \beta U_{n+1} - S_{n+1} \rceil , &{} \text {if } \mathtt {O}_{n+1} = \mathtt {S} , \\ \lceil \alpha S_n t + \beta U_{n+1} - S_{n+1} \rceil , &{} \text {if } \mathtt {O}_{n+1} = \mathtt {M} , \end{array}\right. } \tag{3}$$

where \(U_1, U_2, \dotsc \) are independent, uniformly distributed random variables on [0, 1).

The value t represents a normalized input y/M of Algorithm 2, realizations \(s_0, s_1, \dotsc \) of \(S_0, S_1, \dotsc \) represent the normalized intermediate values \(x_0/M, x_1/M, \dotsc \in M^{-1}\mathbb {Z}_M\), and a realization \(r_{n+1}\) of \(R_{n+1}\) represents the number of extra reductions which are necessary to compute \(x_{n+1}\) from \(x_n\) (and, additionally, from y if \(\mathtt {O}_{n+1} = \mathtt {M}\)).

Justification of Stochastic Model 2: This follows from the justification of Stochastic Model 1 by observing that, for inputs \(x, y \in \mathbb {Z}_M\) of Algorithm 1, the term \(\{xy/M\}\) in (1) equals the normalized value \(\bigl ( (xy)\bmod {M} \bigr ) / M\) of the product modulo M. As in Stochastic Model 1, we first conclude that \(U_1\) and \(S_1\) are uniformly distributed on [0, 1). It follows by induction that \(S_2,S_3,\ldots \) are uniformly distributed on [0, 1), and \(S_0,S_1,S_2,\dotsc \) are independent. \(\square \)
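To illustrate how the model relates to an actual computation, the following sketch runs left-to-right square and multiply with a basic Barrett multiplication, records the number of extra reductions per operation, and compares the observed mean for squarings with the model value \(\alpha /3 + \beta /2\). It is a simulation under stated assumptions, not the experimental setup of this paper: base \(b = 2\), a randomly drawn odd 256-bit modulus (not necessarily prime), a fixed seed, and all function names are chosen only for this example.

```python
import random


def barrett_setup(M: int, b: int = 2) -> tuple[int, int]:
    """Return (k, mu): the base-b length of M and the integer reciprocal of M."""
    k = 1
    while b**k <= M:
        k += 1
    return k, b ** (2 * k) // M


def barrett_mul(x: int, y: int, M: int, k: int, mu: int, b: int = 2) -> tuple[int, int]:
    """Return ((x*y) mod M, number of extra reductions)."""
    z = x * y
    q_tilde = ((z // b ** (k - 1)) * mu) // b ** (k + 1)
    r = z - q_tilde * M
    extra = 0
    while r >= M:
        r -= M
        extra += 1
    return r, extra


def square_and_multiply(y: int, d: int, M: int, k: int, mu: int):
    """Left-to-right binary exponentiation; returns (y**d mod M, [(op, extra), ...])."""
    x = y                                   # x_0 = y
    trace = []
    for bit in bin(d)[3:]:                  # skip '0b' and the leading 1 bit
        x, extra = barrett_mul(x, x, M, k, mu)
        trace.append(("S", extra))
        if bit == "1":
            x, extra = barrett_mul(x, y, M, k, mu)
            trace.append(("M", extra))
    return x, trace


def simulate_squarings(bits: int = 256, bases: int = 100, seed: int = 2021):
    """Return (observed, predicted) mean number of extra reductions per squaring."""
    rng = random.Random(seed)
    M = rng.getrandbits(bits) | (1 << (bits - 1)) | 1   # odd modulus of full length
    d = rng.getrandbits(bits) | (1 << (bits - 1))       # exponent of full length
    k, mu = barrett_setup(M)
    alpha = (M * M / 2 ** (2 * k)) * ((2 ** (2 * k) % M) / M)
    beta = mu / 2 ** (k + 1)
    counts = []
    for _ in range(bases):
        y = rng.randrange(2, M)
        _, trace = square_and_multiply(y, d, M, k, mu)
        counts.extend(extra for op, extra in trace if op == "S")
    return sum(counts) / len(counts), alpha / 3 + beta / 2
```

Calling `simulate_squarings()` returns the empirical and the predicted mean side by side; the two values should agree up to statistical fluctuation if the uniformity assumptions of the model hold for the chosen modulus.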

Remark 4

Remark 2 (ii) applies to Stochastic Model 2 as well. In Sect. 4.3, we will adjust the stochastic process \((S_n,R_n)_{n\in \mathbb {N}}\) to a table-based exponentiation algorithm (fixed window exponentiation), where multiplications by 1 occur frequently. Multiplications by 1 will therefore be handled separately.

Lemma 5

Let \(\lambda \) be the Lebesgue measure on the Borel \(\sigma \)-algebra \(\mathscr {B}([0,1))\), let \(\eta \) be the counting measure on the power set \(\mathscr {P}(\mathbb {Z}_3)\) (i.e. \(\eta (0) = \eta (1) = \eta (2) = 1\)), and let \(\lambda \otimes \eta \) be the product measure on the product \(\sigma \)-algebra \(\mathscr {B}([0,1)) \otimes \mathscr {P}(\mathbb {Z}_3)\).

(i) The stochastic process \((S_n, R_n)_{n\in \mathbb {N}}\) is a non-homogeneous Markov process on \(\mathscr {S}\).

(ii) The random vector \((S_n, R_n)\) has a density \(f_n(s_n, r_n)\) with respect to \(\lambda \otimes \eta \).

(iii) For \(B \in \mathscr {B}([0,1))\), \(r_{n+1} \in \mathbb {Z}_3\), and fixed \((s_n, r_n) \in \mathscr {S}\), we have

$$\begin{aligned} \Pr ( S_{n+1} \in B, R_{n+1}&= r_{n+1} \mid S_n = s_n, R_n = r_n) \\&= \int _{B \times \{r_{n+1}\}} h_{n+1}(s_{n+1}, r \mid s_n) \, \mathrm{d}s_{n+1} \, \mathrm{d}\eta (r) \\&= \int _B h_{n+1}(s_{n+1}, r_{n+1} \mid s_n) \, \mathrm{d}s_{n+1} , \end{aligned}$$

where the conditional density is given by

$$\begin{aligned}&h_{n+1}(s_{n+1}, r_{n+1} \mid s_n) \\&\quad = \beta ^{-1} \bigl ( (r_{n+1} - \alpha s_n^2 + s_{n+1})_+ \\&\qquad - (r_{n+1} - \alpha s_n^2 + s_{n+1} - \beta )_+ \\&\qquad - (r_{n+1} - \alpha s_n^2 + s_{n+1} - 1)_+ \\&\qquad + (r_{n+1} - \alpha s_n^2 + s_{n+1} - \beta - 1)_+ \bigr ) \end{aligned}$$

if \(\mathtt {O}_{n+1} = \mathtt {S}\) (i.e. the \((n+1)\)-th operation is squaring) and

$$\begin{aligned}&h_{n+1}(s_{n+1}, r_{n+1} \mid s_n) \\&\quad = \beta ^{-1} \bigl ( (r_{n+1} - \alpha s_n t + s_{n+1})_+ \\&\qquad - (r_{n+1} - \alpha s_n t + s_{n+1} - \beta )_+ \\&\qquad - (r_{n+1} - \alpha s_n t + s_{n+1} - 1)_+ \\&\qquad + (r_{n+1} - \alpha s_n t + s_{n+1} - \beta - 1)_+ \bigr ) \end{aligned}$$

if \(\mathtt {O}_{n+1} = \mathtt {M}\) (i.e. the \((n+1)\)-th operation is multiplication by y). In particular, the conditional density \(h_{n+1}(s_{n+1}, r_{n+1} \mid s_n)\) does not depend on \(r_n\). (This justifies the omission of \(r_n\) from the list of arguments.)

Proof

Assertion (i) is an immediate consequence of the definition of Stochastic Model 2. To show (ii), let \(C\subseteq [0,1)\times \mathbb {Z}_3\) be a \((\lambda \otimes \eta )\)-zero set. Then, \(C=\bigcup _{j\in \mathbb {Z}_3} B_j\times \{j\}\) (disjoint union) for measurable \(\lambda \)-zero sets \(B_0,B_1\) and \(B_2\). Hence, \(\lambda (B_u)=0\) for \(B_u:=B_0\cup B_1 \cup B_2\). Finally, we get

This shows that the pushforward measure of \((S_n, R_n)\) is absolutely continuous with respect to \(\lambda \otimes \eta \); therefore, assertion (ii) follows from the Radon–Nikodym theorem. Assertion (iii) follows from Lemma 2 (ii) with \((v,r)=(s_{n+1},r_{n+1})\) and \((s,t)=(s_n,s_n)\) (if \(\mathtt {O}_{n+1} = \mathtt {S}\)) or \((s,t)=(s_n,t)\) (if \(\mathtt {O}_{n+1} = \mathtt {M}\)) and \(V=S_{n+1}\). \(\square \)

Lemma 6

(i) For \(r_{n+1} \in \mathbb {Z}_3\), we have

$$\begin{aligned} \Pr (R_{n+1} = r_{n+1})&= \int _{[0,1)\times \mathbb {Z}_3} \biggl ( \int _0^1 h_{n+1}(s_{n+1}, r_{n+1} \mid s_n) \, \mathrm{d}s_{n+1} \biggr ) f_n(s_n, r_n) \, \mathrm{d}s_n \, \mathrm{d}\eta (r_n) \\&= \int _0^1 \biggl ( \int _0^1 h_{n+1}(s_{n+1}, r_{n+1} \mid s_n) \, \mathrm{d}s_{n+1} \biggr ) \mathrm{d}s_n . \end{aligned}$$

In particular, the distribution of \(R_{n+1}\) does not depend on n but only on the operation type \(\mathtt {O}_{n+1} \in \{\mathtt {S},\mathtt {M}\}\).

(ii) There exist real numbers \(\mu _{\mathtt {S}}, \mu _{\mathtt {M}}, \sigma _{\mathtt {S}}^2, \sigma _{\mathtt {M}}^2 \in \mathbb {R}\) such that \({{\,\mathrm{E}\,}}(R_{n+1}) = \mu _{\mathtt {O}_{n+1}}\) and \({{\,\mathrm{Var}\,}}(R_{n+1}) = \sigma _{\mathtt {O}_{n+1}}^2\) for all \(n \in \mathbb {N}\). In particular, we have

$$\begin{aligned} \mu _{\mathtt {S}}&= \alpha /3 + \beta /2 , \\ \mu _{\mathtt {M}}&= \alpha t/2 + \beta /2 , \end{aligned} \tag{4}$$

$$\begin{aligned} \sigma _{\mathtt {S}}^2 ={}&\alpha /3 + \beta /2 - (\alpha /3 + \beta /2)^2 , \quad \text {if}\,\alpha + \beta \le 1 , \\ \sigma _{\mathtt {S}}^2 ={}&\alpha /3 + \beta /2 - (\alpha /3 + \beta /2)^2 \\&+ (15\beta )^{-1}\bigl ( 15(1-\beta )^2 -10\alpha (1-\beta ) + 3\alpha ^2 \bigr ) \\&- 8 (15\alpha ^{1/2}\beta )^{-1} (1-\beta )^{5/2} , \quad \text {if}\, \alpha + \beta > 1 , \end{aligned} \tag{5}$$

$$\begin{aligned} \sigma _{\mathtt {M}}^2 ={}&\alpha t/2 + \beta /2 - (\alpha t/2 + \beta /2)^2 \\&+ (3\alpha \beta t)^{-1}(\alpha t + \beta - 1)_+^3 . \end{aligned} \tag{6}$$

The expectation \(\mu _{\mathtt {M}}\) is strictly monotonically increasing in \(t=y/M\).

Proof

Let \(r_{n+1} \in \mathbb {Z}_3\). Since

the first equation of assertion (i) follows from Lemma 5. The second equation of (i) follows from the fact that

because \(S_n\) is uniformly distributed on [0, 1). Assertion (ii) is an immediate consequence of (i). The formulae (4), (5), and (6) follow from Lemma 4 (ii), Lemma 3 (ii), Lemma 4 (iii), and Lemma 3 (iii). The final assertion of (ii) is obvious since \(\alpha >0\). \(\square \)
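Formulae (4), (5) and (6) translate directly into code. The sketch below implements them and, using only linearity of expectation, adds the expected total number of extra reductions for a square and multiply exponentiation with exponent d, namely \((\ell - 1)\mu _{\mathtt {S}} + ({{\,\mathrm{ham}\,}}(d) - 1)\mu _{\mathtt {M}}\). The function names are hypothetical, and the total is an illustration derived from the lemma rather than a formula quoted from this section.

```python
def mu_square(alpha: float, beta: float) -> float:
    """Expected number of extra reductions of a squaring, formula (4)."""
    return alpha / 3 + beta / 2


def mu_multiply(alpha: float, beta: float, t: float) -> float:
    """Expected number of extra reductions of a multiplication by y, t = y/M, formula (4)."""
    return alpha * t / 2 + beta / 2


def var_square(alpha: float, beta: float) -> float:
    """Variance for a squaring, formula (5)."""
    m = mu_square(alpha, beta)
    base = m - m**2
    if alpha + beta <= 1:
        return base
    return (base
            + (15 * (1 - beta) ** 2 - 10 * alpha * (1 - beta) + 3 * alpha**2) / (15 * beta)
            - 8 * (1 - beta) ** 2.5 / (15 * alpha**0.5 * beta))


def var_multiply(alpha: float, beta: float, t: float) -> float:
    """Variance for a multiplication by y with 0 < t < 1, formula (6)."""
    m = mu_multiply(alpha, beta, t)
    return m - m**2 + max(0.0, alpha * t + beta - 1) ** 3 / (3 * alpha * beta * t)


def expected_total_extra_reductions(d: int, t: float, alpha: float, beta: float) -> float:
    """(l - 1) squarings and (ham(d) - 1) multiplications, by linearity of expectation."""
    squarings = d.bit_length() - 1
    multiplications = bin(d).count("1") - 1
    return squarings * mu_square(alpha, beta) + multiplications * mu_multiply(alpha, beta, t)
```

Because `mu_multiply` grows linearly in `t` while `mu_square` does not depend on it, the expected total depends on the basis only through \(t = y/M\), weighted by the number of multiplications.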

Definition 4

A sequence \(X_1, X_2, \dotsc \) of random variables is called m-dependent if the random vectors \((X_1, \dotsc , X_u)\) and \((X_v, \dotsc , X_n)\) are independent for all \(1 \le u < v \le n\) with \(v-u > m\).

Lemma 7

(i) For \(r_{n+1}, \dotsc , r_{n+u} \in \mathbb {Z}_3\), we have

$$\begin{aligned}&\Pr (R_{n+1} = r_{n+1}, \dotsc , R_{n+u} = r_{n+u}) \\&\begin{aligned} ={}&\int _{[0,1) \times \mathbb {Z}_3} \biggl ( \int _0^1 \biggl ( \cdots \\&\int _0^1 h_{n+u}(s_{n+u}, r_{n+u} \mid s_{n+u-1}) \, \mathrm{d}s_{n+u} \cdots \biggr ) \\&h_{n+1}(s_{n+1}, r_{n+1} \mid s_n) \, \mathrm{d}s_{n+1} \biggr ) f_n(s_n, r_n) \, \mathrm{d}s_n \, \mathrm{d}\eta (r_n) \end{aligned} \\&\begin{aligned} ={}&\int _0^1 \biggl ( \int _0^1 \biggl ( \cdots \\&\int _0^1 h_{n+u}(s_{n+u}, r_{n+u} \mid s_{n+u-1}) \, \mathrm{d}s_{n+u} \cdots \biggr ) \\&h_{n+1}(s_{n+1}, r_{n+1} \mid s_n) \, \mathrm{d}s_{n+1} \biggr ) \mathrm{d}s_n . \end{aligned} \end{aligned}$$

In particular, the joint distribution of \(R_{n+1}, \dotsc , R_{n+u}\) does not depend on n but only on the operation types \(\mathtt {O}_{n+1}, \dotsc , \mathtt {O}_{n+u} \in \{\mathtt {S},\mathtt {M}\}\).

(ii) There exist real numbers \({{\,\mathrm{cov}\,}}_{\mathtt {SS}}, {{\,\mathrm{cov}\,}}_{\mathtt {SM}}, {{\,\mathrm{cov}\,}}_{\mathtt {MS}} \in \mathbb {R}\) such that \({{\,\mathrm{Cov}\,}}(R_n, R_{n+1}) = {{\,\mathrm{cov}\,}}_{\mathtt {O}_n\mathtt {O}_{n+1}}\) for all \(n \in \mathbb {N}_{>0}\).

(iii) The sequence \(R_1, R_2, \dotsc \) is 1-dependent. In particular, we have \({{\,\mathrm{Cov}\,}}(R_n, R_{n+s}) = 0\) for all \(s \ge 2\).

Proof

Let \(r_{n+1}, \dotsc , r_{n+u} \in \mathbb {Z}_3\). Then,

and assertion (i) follows from Lemma 5, Lemma 6 (i), and the Ionescu–Tulcea theorem. Assertion (ii) is an immediate consequence of (i). To prove (iii), let \(1 \le u < v \le n\) such that \(v-u>1\), let \(r_1, \dotsc , r_u, r_v, \dotsc , r_n \in \mathbb {Z}_3\), and define the events

Using the Markov property of \((S_n, R_n)_{n \in \mathbb {N}}\) and (i), we obtain

hence, \((R_1, \dotsc , R_u)\) and \((R_v, \dotsc , R_n)\) are independent. We conclude that \(R_1, R_2, \dotsc \) is a 1-dependent sequence. \(\square \)

Definition 5

The normal distribution with mean \(\mu \) and variance \(\sigma ^2\) is denoted by \(\mathscr {N}(\mu , \sigma ^2)\), and

is the cumulative distribution function of \(\mathscr {N}(0,1)\).

For strings \(x, y \in \{\mathtt {S},\mathtt {M}\}^*\), we denote by \(\#_x(y) \in \mathbb {N}\) the number of occurrences of x in y.

Below we will use the following version of the central limit theorem for m-dependent random variables due to Hoeffding & Robbins.

Lemma 8

(Cf. [17, Theorem 1]) Let \(X_1, X_2, \dotsc \) be an m-dependent sequence of random variables such that \({{\,\mathrm{E}\,}}(X_i) = 0\) and \({{\,\mathrm{E}\,}}(|X_i |^3)\) is uniformly bounded for all \(i \in \mathbb {N}_{>0}\). For \(i \in \mathbb {N}_{>0}\) define \(A_i := {{\,\mathrm{Var}\,}}(X_{i+m}) + 2 \sum _{j=1}^m {{\,\mathrm{Cov}\,}}(X_{i+m-j},X_{i+m})\). If the limit \(A := \lim _{u\rightarrow \infty } u^{-1} \sum _{h=1}^u A_{i+h}\) exists uniformly for all \(i \in \mathbb {N}\), then \((X_1 + \cdots + X_s)/\sqrt{s}\) has the limiting distribution \(\mathscr {N}(0, A)\) as \(s \rightarrow \infty \).

Lemma 9

Let \(\mathtt {O}_1\mathtt {O}_2\cdots \in \{\mathtt {S},\mathtt {M}\}^{\omega }\) be an operation sequence such that the limit

exists uniformly for all \(i \in \mathbb {N}\) and define

Then, \(\lim _{s \rightarrow \infty } {{\,\mathrm{Var}\,}}(R_{n+1} + \cdots + R_{n+s})/s = A\) and

has the limiting distribution \(\mathscr {N}(0, A)\).

Proof

Let \(R'_i := R_i - {{\,\mathrm{E}\,}}(R_i)\) for all \(i \in \mathbb {N}_{>0}\). Then, \(R'_1, R'_2, \dotsc \) is a 1-dependent sequence of random variables with \({{\,\mathrm{E}\,}}(R'_i) = 0\) and \({{\,\mathrm{E}\,}}(|R'_i |^3) \le 2^3 = 8\) for all i. Define \(A_i := {{\,\mathrm{Var}\,}}(R'_{i+1}) + 2 {{\,\mathrm{Cov}\,}}(R'_{i}, R'_{i+1})\). For \(x \in \{\mathtt {S},\mathtt {M}\}^*\) and \(0 \le i \le j\), we set \(\#_x^{i, j} := \#_x(\mathtt {O}_i\cdots \mathtt {O}_j)\). With this notation, we have

Using the identities

we obtain

As \(u \rightarrow \infty \), the ratio \(\#_\mathtt {M}^{i+1, i+u}/u\) converges to \(\rho \) uniformly for all i by assumption; therefore, \(u^{-1} \sum _{h=1}^u A_{i+h}\) converges to A uniformly for all i. Since

\({{\,\mathrm{Var}\,}}(R'_{n+1} + \cdots + R'_{n+s})/s\) converges to A as \(s \rightarrow \infty \). Finally, \((R'_{n+1} + \cdots + R'_{n+s})/\sqrt{s}\) has the limiting distribution \(\mathscr {N}(0, A)\) by Lemma 8. \(\square \)

We note that for random operation sequences \(\mathtt {O}_1\mathtt {O}_2\cdots \) (corresponding to random exponents with independent and unbiased bits), the convergence of (7) is not uniform with probability 1. However, for any given finite operation sequence \(\mathtt {O}_{n+1}\cdots \mathtt {O}_{n+s}\) we may construct an infinite sequence \(\mathtt {O}_1\mathtt {O}_2\cdots \) with subsequence \(\mathtt {O}_{n+1}\cdots \mathtt {O}_{n+s}\) for which convergence of (7) is uniform and \(\rho \approx \#_{\mathtt {M}}(\mathtt {O}_{n+1} \cdots \mathtt {O}_{n+s})/s\). Therefore, if s is sufficiently large, it is reasonable to assume that the normal approximation

is appropriate. We mention that in our experiments in Sect. 5.1 approximation (8) is applied and leads to successful attacks.

2.3 Summary of the relevant facts

In this section, we studied the random behaviour of the number of extra reductions when Barrett’s modular multiplication algorithm is used within the square and multiply exponentiation algorithm. In Sect. 4.3, we generalize this approach to table-based exponentiation algorithms.

We defined a stochastic process \((S_n,R_n)_{n\in \mathbb {N}}\). The random variable \(S_n\) represents the (random) normalized intermediate value (= intermediate value divided by the modulus M) in Algorithm 2 after the n-th Barrett operation, and the random variable \(R_n\) represents the (random) number of extra reductions needed for the n-th Barrett operation.

Algorithm 1 needs 0, 1 or 2 extra reductions. The stochastic process \((S_n,R_n)_{n\in \mathbb {N}}\) is a non-homogeneous Markov chain on the state space \(\mathcal{{S}}=[0,1)\times \mathbb {Z}_3\). The projection onto the first component gives independent random variables \(S_1,S_2,\ldots \), which are uniformly distributed on the unit interval [0, 1). However, we are interested in the stochastic process \(R_1,R_2,\ldots \) on \(\mathbb {Z}_3\), which is more difficult to analyse. In particular, \({{\,\mathrm{E}\,}}(R_n)\) and \({{\,\mathrm{Var}\,}}(R_n)\) depend on the operation type \(\mathtt {O}_{n}\) of the n-th Barrett operation (multiplication \(\mathtt {M}\) or squaring \(\mathtt {S}\)), while the covariances \({{\,\mathrm{Cov}\,}}(R_n,R_{n+1})\) depend on the operation types of the n-th and the \((n+1)\)-th Barrett operation (\(\mathtt {SM}\), \(\mathtt {MS}\) or \(\mathtt {SS}\)). The formulae (4), (5) and (6) provide explicit formulae for the expectations and the variances, while Lemma 7 (i), (ii) explains how to compute the covariances. Further, the stochastic process \((R_n)_{n\in \mathbb {N}_{\ge 1}}\) is 1-dependent. In particular, a version of the central limit theorem for dependent random variables can be applied to approximate the distribution of standardized finite sums (cf. (8)).

3 Montgomery multiplication versus Barrett multiplication

In Sect. 3.1, we briefly treat Montgomery’s multiplication algorithm (MM) [21] and summarize relevant stochastic properties. This is because in Sect. 4 we consider the question whether the (known) pure and local timing attacks against Montgomery’s multiplication algorithm can be transferred to implementations that apply Barrett’s algorithm.

3.1 Montgomery’s multiplication algorithm in a nutshell

Montgomery’s multiplication algorithm is widely used to compute modular exponentiations because it transfers modulo operations and divisions to moduli and divisors that are powers of 2.

For an odd modulus M (e.g. an RSA modulus or a prime), the integer \(R:=2^t>M\) is called Montgomery’s constant, and \(R^{-1}\in \mathbb {Z}_M\) denotes its multiplicative inverse modulo M. Moreover, \(M^*\in \mathbb {Z}_R\) satisfies the integer equation \(RR^{-1}-MM^*=1\). Montgomery’s algorithm computes

with a version of Algorithm 3. Here, \(\mathsf {ws}\) denotes the word size of the arithmetic operations (typically, depending on the platform, \(\mathsf {ws}\in \{8,16,32,64\}\)), which divides the exponent t. Further, \(r=2^{\mathsf {ws}}\), so that \(R=r^v\) with \(v=t/\mathsf {ws}\). In Algorithm 3, the operands x, y and s are expressed in the r-adic representation. That is, \(x = (x_{v-1},...,x_0)_r\), \(y = (y_{v-1},...,y_0)_r\) and \(s = (s_{v-1},...,s_0)_r\). Finally, \(m^* = M^* \bmod {r}\). After Step 3, the intermediate value s satisfies \(s\equiv abR^{-1} \pmod {M}\) and \(s\in [0,2M)\). The instruction \(s:=s-M\) in Step 4, called ‘extra reduction’ (ER), is carried out iff \(s\in [M,2M)\). This conditional integer subtraction is responsible for timing differences and is thus the source of side-channel attacks.
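The extra reduction can be illustrated with a minimal Python sketch for the single-word case \(\mathsf {ws}=t\) (i.e. \(v=1\)). The function name `montgomery_multiply` and the toy parameters are hypothetical and not taken from Algorithm 3. The sketch derives \(M^*\) from the relation \(RR^{-1}-MM^*=1\), which gives \(M^* \equiv -M^{-1} \pmod {R}\), and it assumes Python 3.8 or later for modular inverses via `pow`.

```python
def montgomery_multiply(a, b, M, t):
    """Return (s, extra_reduction) with s = a*b*R^{-1} mod M for R = 2**t.

    Illustrative single-word variant (ws = t, v = 1); requires M odd and M < R.
    """
    R = 1 << t
    if M % 2 == 0 or M >= R:
        raise ValueError("M must be odd and smaller than R = 2**t")
    # M* satisfies R*R^{-1} - M*M* = 1, hence M* = -M^{-1} mod R.
    m_star = (-pow(M, -1, R)) % R
    z = a * b
    u = (z * m_star) % R
    # z + u*M is divisible by R, so the shift is an exact division.
    s = (z + u * M) >> t
    # For a, b in Z_M we have s in [0, 2M); subtract M iff s lies in [M, 2M).
    extra_reduction = s >= M
    if extra_reduction:
        s -= M
    return s, extra_reduction


if __name__ == "__main__":
    M, t = 101, 7  # toy parameters, R = 128
    s, er = montgomery_multiply(77, 93, M, t)
    print(s, er)
```

The returned flag corresponds to the value \(w_i\) used below: it is set exactly when the conditional subtraction is executed.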

Assumption 1

(Montgomery modular multiplication) For fixed modulus M and fixed Montgomery constant R,

which means that an MM operation costs time c if no ER is needed, and \(c_{\text {ER}}\) equals the time for an ER. (The constants c and \(c_{\text {ER}}\) depend on the concrete implementation.)

Justification of Assumption 1: (See [26], Remark 1, for a comprehensive analysis.) For known-input attacks (with more or less randomly chosen inputs), Assumption 1 should usually be valid. An exception is pure timing attacks on RSA with CRT implementations in old versions of OpenSSL [3, 7], cf. Sect. 4.2. The reason is that OpenSSL applies different subroutines to compute the for-loop in Algorithm 3, depending on whether x and y have identical word size or not. The aforementioned timing attacks on RSA with CRT are adaptive chosen input attacks, and during the attack certain MM-operands become smaller and smaller. This feature makes the attack to some degree more complicated but does not prevent it because new sources for timing differences occur. RSA implementations on smart cards and microcontrollers usually should not care about word lengths (and meet Assumption 1 in any case) because in normal use operands with different word size rarely occur so that an optimization of this case seems to be useless. \(\square \)

In the following, we summarize some well-known fundamental stochastic properties of Montgomery’s multiplication algorithm, or more precisely, of the distribution of random extra reductions within a modular exponentiation algorithm. Knowledge of these properties is needed to develop (effective and efficient) pure or local timing attacks [3, 7, 22,23,24,25,26,27,28].

We interpret the normalized intermediate values of Algorithm 4 as realizations of random variables \(S_0,S_1,\ldots \). With the same arguments as in Sect. 2.2 (for Barrett’s multiplication), one concludes that for Algorithm 4 the random variables \(S_{1},S_2,\ldots \) are iid uniformly distributed on [0, 1). We set \(w_i=1\) if the i-th Montgomery operation requires an ER and \(w_i=0\) otherwise. We interpret the values \(w_1,w_2,\ldots \) as realizations of \(\{0,1\}\)-valued random variables \(W_1,W_2,\ldots \).

Interestingly, it does not depend on the word size \(\mathsf {ws}\) whether an ER is necessary but only on (a, b, M, R). This allows us to consider the case \(\mathsf {ws}= t\) (i.e. \(v=1\)) when analysing the stochastic behaviour of the random variables \(W_i\) in modular exponentiation algorithms. In particular, the computation of \({{\,\mathrm{MM}\,}}(a,b;M)\) requires an ER iff

This observation allows us to express the random variable \(W_i\) in terms of \(S_{i-1}\) and \(S_i\). For Algorithm 4, this implies

The random variables \(W_1,W_2,\ldots \) have interesting properties which are similar to those of \(R_1,R_2,\ldots \). In particular, they are neither stationary nor independent but 1-dependent, and under weak assumptions they fulfil a version of the central limit theorem for dependent random variables. Relation (11) allows one to represent joint probabilities \(\Pr (W_{i}=w_i,\ldots ,W_{i+k-1}=w_{i+k-1})\) as integrals over the \((k+1)\)-dimensional unit cube. We just note that

3.2 A closer look at Barrett’s multiplication algorithm

In this subsection, we develop and justify equivalents to Assumption 1 for two (different) Barrett multiplication algorithms (Algorithm 1 and Algorithm 5). Therefrom, we deduce stochastic representations, which describe the random timing behaviour of modular exponentiations \(y\mapsto y^d\bmod M\).

3.2.1 Modular exponentiation with Algorithm 1

At first we formulate an equivalent to Assumption 1.

Assumption 2

(Barrett modular multiplication) For fixed modulus M,

for all \(a,b\in \mathbb {Z}_M\), which means that a Barrett multiplication (BM) costs time c if no ER is needed, and \(c_{\text {ER}}\) equals the time for one ER. (The constants c and \(c_{\text {ER}}\) depend on the concrete implementation.)

Justification of Assumption 2: The justification of Assumption 2 is rather similar to the justification of Assumption 1 in Sect. 3.1. In Line 1 of Algorithm 1, \(x,y\in \mathbb {Z}_M\) are multiplied in \(\mathbb {Z}\), and in Line 2 the floor operations \(\lfloor \cdot \rfloor \) reduce to simple shift operations if b equals 2 or a suitable power of two (e.g. \(b=2^{\mathsf {ws}}\), where \(\mathsf {ws}\) denotes the word size of the underlying arithmetic). For known-input attacks (with more or less randomly chosen inputs), and for smart cards and microcontrollers in general, it is reasonable to assume that Lines 1–3 cost identical time for all \(x,y\in \mathbb {Z}_M\) and, consequently, that Assumption 2 is valid. Exceptions may exist for adaptive chosen input timing attacks on RSA implementations (cf. Sect. 4.2) on PCs, which use large general libraries. Even then it seems very likely that (as for Montgomery’s multiplication algorithm) such optimizations allow timing attacks anyway. \(\square \)

This leads to the following stochastic representation of the (random) timing of a modular exponentiation \(y^d \bmod {M}\) if it is calculated with Algorithm 2.

Here, the ‘setup-time’ \(t_{\text {set}}\) summarizes the time needed for all operations that are not part of Algorithm 1, e.g. the time needed for input and output and maybe for the computation of the constant \(\mu \) (if not stored). The random variable N quantifies the ‘timing noise’. This includes measurement errors and possible deviations from Assumption 2. We assume that \(N\sim \mathscr {N}(0,\sigma ^2)\). We allow \(\sigma ^2=0\), which means ‘no noise’ (i.e. \(N\equiv 0\)), while a nonzero expectation \({{\,\mathrm{E}\,}}(N)\) is ‘moved’ to \(t_{\text {set}}\). The data-dependent timing differences are quantified by the stochastic process \(R_1,R_2,\ldots ,R_{\ell +{{\,\mathrm{ham}\,}}(d)-2}\), which is thoroughly analysed in Sect. 2. Recall that the distribution of this stochastic process depends on the secret exponent d and on the ratio \(t=y/M\).
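The structure of this timing model can be sketched in Python for the noise-free case \(N\equiv 0\). The sketch rests on several assumptions that are not spelled out in this section: Algorithm 2 is taken to be left-to-right square and multiply, Barrett’s multiplication is taken in its textbook form with \(\mu = \lfloor b^{2k}/M\rfloor \) and \(k=\lfloor \log _b M\rfloor +1\), and all function names and timing constants are hypothetical.

```python
def barrett_setup(M, b):
    """Return (k, mu) with k = floor(log_b M) + 1 and mu = floor(b^(2k) / M)."""
    k = 1
    while b ** k <= M:
        k += 1
    return k, b ** (2 * k) // M


def barrett_multiply(x, y, M, b, k, mu):
    """Return (x*y mod M, number of extra reductions) for x, y in Z_M."""
    z = x * y
    q = ((z // b ** (k - 1)) * mu) // b ** (k + 1)  # estimate of floor(z / M)
    r = z - q * M
    ers = 0
    while r >= M:  # conditional subtractions, at most two
        r -= M
        ers += 1
    return r, ers


def exponentiate(y, d, M, b):
    """Left-to-right square and multiply (assumed shape of Algorithm 2), d >= 1.

    Returns (y^d mod M, number of Barrett operations, total extra reductions).
    """
    k, mu = barrett_setup(M, b)
    x, ops, total_ers = y, 0, 0
    for bit in bin(d)[3:]:  # skip the '0b' prefix and the leading 1 bit
        x, e = barrett_multiply(x, x, M, b, k, mu)  # squaring
        ops, total_ers = ops + 1, total_ers + e
        if bit == "1":
            x, e = barrett_multiply(x, y, M, b, k, mu)  # multiplication by y
            ops, total_ers = ops + 1, total_ers + e
    return x, ops, total_ers


def model_time(ops, total_ers, t_set, c, c_er):
    """Noise-free execution time: setup + ops * c + total_ers * c_ER."""
    return t_set + ops * c + total_ers * c_er


if __name__ == "__main__":
    x, ops, ers = exponentiate(123456, 0b1011011, 1000003, 16)
    print(x, ops, ers, model_time(ops, ers, t_set=50.0, c=100.0, c_er=7.0))
```

In this sketch the number of Barrett operations is \(\ell +{{\,\mathrm{ham}\,}}(d)-2\), namely \(\ell -1\) squarings and \({{\,\mathrm{ham}\,}}(d)-1\) multiplications, so only the total number of extra reductions varies with the basis.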

Remark 5

(i) Without blinding mechanisms \({{\,\mathrm{Time}\,}}(y^d \bmod M)\) is identical for repeated executions with the same basis y if we neglect possible measurement errors. At first sight, the stochastic representation (14) may be surprising but the stochastic process \(R_1,R_2,\ldots \) describes the random timing behaviour for bases \(y'\in \mathbb {Z}_M\) whose ratio \(y'/M\) is close to \(t=y/M\) (cf. Stochastic Model 1).

(ii) A similar stochastic representation exists for table-based exponentiation algorithms, see Sect. 4.3.

3.2.2 Modular exponentiation with Algorithm 5

Algorithm 5 is a modification of Algorithm 1 containing an optimization which was already proposed by Barrett [4]. Its Lines 3–6 replace Line 3 of Algorithm 1. We may assume \(b=2^\mathsf {ws}>2\), where \(\mathsf {ws}\) is the word size of the integer arithmetic (typically, \(\mathsf {ws}\in \{8,16,32,64\}\)). Line 3 of Algorithm 1 computes a multiplication \(\widetilde{q} \cdot M\) of two integers, which are in the order of \(b^k\), and a subtraction of two integers, which are in the order of \(b^{2k}\). In contrast, Line 3 of Algorithm 5 only requires a modular multiplication \((\widetilde{q} \cdot M)\bmod {2^{\mathsf {ws}(k+1)}}\) and the subtraction of two integers in the order of \(b^{k+1}\), and Line 5 possibly requires one addition of \(b^{k+1}\).

After Line 6 of Algorithm 5, \(r \equiv z - (\widetilde{q} \cdot M) \pmod {b^{k+1}}\), and further \(0\le r < b^{k+1}\). Since \(\lfloor \log _b M \rfloor \le \log _b M < k = \lfloor \log _b M \rfloor + 1\), we have \(b^{k-1}\le M < b^k\), hence \(3M < 3b^k \le b^{k+1}\). By Lemma 1, the values r after Line 3 of Algorithm 1 and r after Line 6 of Algorithm 5 thus coincide, which means that the number of extra reductions is the same for both algorithms. If \(c_{\text {add}}\) denotes the time needed for an addition of \(b^{k+1}\) in Line 5 of Algorithm 5, this leads to an equivalent of Assumption 2.
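The coincidence of the two remainders can be checked numerically with a small Python sketch. It assumes the textbook form of both variants (the algorithm listings are not part of this section), and the function names `barrett_basic` and `barrett_optimized` are hypothetical. The optimized variant works modulo \(b^{k+1}\) and reports whether the extra addition of \(b^{k+1}\) was needed.

```python
def _setup(M, b):
    """Return (k, mu) with k = floor(log_b M) + 1 and mu = floor(b^(2k) / M)."""
    k = 1
    while b ** k <= M:
        k += 1
    return k, b ** (2 * k) // M


def _quotient_estimate(z, b, k, mu):
    """Barrett's estimate of floor(z / M)."""
    return ((z // b ** (k - 1)) * mu) // b ** (k + 1)


def _reduce(r, M):
    """Apply the conditional subtractions; return (r mod M, extra reductions)."""
    ers = 0
    while r >= M:
        r -= M
        ers += 1
    return r, ers


def barrett_basic(x, y, M, b):
    """Full-width subtraction z - q*M, followed by extra reductions."""
    k, mu = _setup(M, b)
    z = x * y
    r = z - _quotient_estimate(z, b, k, mu) * M
    return _reduce(r, M)


def barrett_optimized(x, y, M, b):
    """Subtraction modulo b^(k+1); returns (result, extra reductions, extra additions)."""
    k, mu = _setup(M, b)
    z = x * y
    modulus = b ** (k + 1)
    r = (z % modulus) - ((_quotient_estimate(z, b, k, mu) * M) % modulus)
    extra_add = 0
    if r < 0:  # correct the wrap-around by adding b^(k+1)
        r += modulus
        extra_add = 1
    result, ers = _reduce(r, M)
    return result, ers, extra_add


if __name__ == "__main__":
    M, b = 1000003, 16  # toy parameters with b > 2
    print(barrett_basic(999999, 876543, M, b))
    print(barrett_optimized(999999, 876543, M, b))
```

Because \(3M < b^{k+1}\) for \(b>2\), the value before the conditional subtractions is recovered exactly from its residue modulo \(b^{k+1}\), so both functions should report the same number of extra reductions.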

Assumption 3

(Barrett modular multiplication, optimized) For fixed modulus M,

for all \(a,b \in \mathbb {Z}_M\), which means that a Barrett multiplication (BM) with Algorithm 5 (without extra reductions or an extra addition by \(b^{k+1}\)) costs time c, while \(c_{\text {ER}}\) and \(c_{\text {add}}\) equal the time for one ER or for an addition by \(b^{k+1}\), respectively. (The constants c, \(c_{\text {ER}}\) and \(c_{\text {add}}\) depend on the concrete implementation.) When implemented on the same platform, the constant c in (15) should be smaller than in (13).

Justification of Assumption 3: The justification is rather similar to that of Assumption 2. The relevant arguments have already been discussed at the beginning of this subsection. This also concerns the general remarks on the impact of possible optimizations of the integer multiplication algorithm. \(\square \)

The if-condition in Line 4 of Algorithm 5 introduces an additional source of timing variability, which has to be analysed. We have already explained that this if-condition does not affect the number of extra reductions. Next, we determine the probability of an extra addition by \(b^{k+1}\).

Let \(x, y \in \mathbb {Z}_M\) and let \(z = x \cdot y\). We denote by \(r_a\) the number of extra additions by \(b^{k+1}\) in the computation of \(z \bmod {M}\). Obviously, \(r_{a} = 1\) iff \(z \bmod {b^{k+1}}<\widetilde{q}M\bmod {b^{k+1}}\) (and \(r_{a} = 0\) otherwise), or equivalently, when dividing both terms by \(b^{k+1}\),

Next, we derive a more convenient characterization of (16). Let \(\gamma := M/b^{k+1} \in (0, b^{-1})\). We have

Since \(0 \le \alpha \gamma (z/M^2) + \beta \gamma \{z/b^{k-1}\} + \gamma \{\beta \lfloor z/b^{k-1} \rfloor \} \le 3\gamma < 3/b \le 1\), we can rewrite characterization (16) as

Our aim is to develop a stochastic model for the extra addition. We start with a closer inspection of the right-hand side of (17). Let \(z = (z_{2k-1},\ldots ,z_0)_b\) be the b-ary representation of z, where leading zero digits are permitted. Then,

We now assume that Algorithm 5 is applied in the modular exponentiation algorithm Algorithm 2. In analogy to the extra reductions, we interpret the number of extra additions by \(b^{k+1}\) in the \((n+1)\)-th Barrett operation, denoted by \(r_{a;n+1}\), as a realization of a \(\{0,1\}\)-valued random variable \(R_{a;n+1}\). In particular, \((x \cdot y) \bmod {M}\) either represents a squaring (\(x = y\)) or a multiplication of the intermediate value x by the basis y, respectively.

As in Stochastic Model 2, we model \(v_1 = (xy)/M^2\) as a realization of \(S_n^2\) (squaring) or \(S_n t\) (multiplication by the basis y) with \(t=y/M\), respectively, and \(v_2\) as a realization of the random variable \(U_{n+1}\) which is uniformly distributed on [0, 1). With the same argumentation as for \(v_2\), we model \(u'_{n+1} := u' =\{xy/b^{k+1}\}\) as a realization of a random variable \(U'_{n+1}\) that is uniformly distributed on [0, 1).

It remains to analyse \(v_3\). Let for the moment \((a_k, \dotsc , a_0)_b\) be the b-ary representation of \(\lfloor b^{2k}/M\rfloor \) (note that we have \(b^k \le \lfloor b^{2k}/M\rfloor < b^{k+1}\) since we excluded the corner case \(M=b^{k-1}\)) and \(a_{-1} = a_{-2} = 0\). Then,

We model \(v_{n+1} := v_3\) as a realization of a random variable \(V_{n+1}\) that is uniformly distributed on [0, 1).

By (18) and (19), the values \(u', v_1,v_2\) and \(v_3\) essentially depend on \(z_{k}\) (resp., on \((z_k,z_{k-1})\) if \(\mathsf {ws}\) is small), on the most significant digits of z in the b-ary representation, on \(z_{k-2}\) (resp. on \((z_{k-2},z_{k-3})\) if \(\mathsf {ws}\) is small) and on the weighted sum of the b-ary digits \(z_{2k-1},\ldots ,z_{k-1}\), respectively. This justifies the assumption that the random variables \(U'_{n+1}, S_n, U_{n+1}, V_{n+1}\) (essentially) behave as if they were independent.

We could extend the non-homogeneous Markov chain \((S_n,R_n)_{n\in \mathbb {N}}\) on the state space \([0,1)\times \mathbb {Z}_3\) from Sect. 2 to a non-homogeneous Markov chain \((S_n,R_n,R_{a;n})_{n\in \mathbb {N}}\) on the state space \([0,1)\times \mathbb {Z}_3\times \mathbb {Z}_2\). Its analysis is analogous to that in Sect. 2 but more complicated in detail. Since for typical word sizes \(\mathsf {ws}\) the impact of the extra additions on the execution time is by an order of magnitude smaller than the impact of the extra reductions (due to the factor \(\gamma \), cf. (20) and (21)), we do not elaborate on this issue. We only mention that

and analogously

For the reason mentioned above, we treat the extra additions as noise. Equation (22) is the equivalent to (14) for the modified version of Barrett’s multiplication algorithm. We use the same notation as in (14).

with \(t_{\text {set}}^* = t_{\text {set}} + (\mu _{a,\mathtt {S}}(\ell -1) + \mu _{a,\mathtt {M}}({{\,\mathrm{ham}\,}}(d)-1)) c_{\text {add}}\) and \(N^*\sim \mathscr {N}(0,(\mu _{a,\mathtt {S}}(1-\mu _{a,\mathtt {S}})(\ell -1) + \mu _{a,\mathtt {M}}(1-\mu _{a,\mathtt {M}})({{\,\mathrm{ham}\,}}(d)-1))c_{\text {add}}^2+\sigma ^2)\). The expected time for all extra additions has been moved to the setup time, and the variance became part of \(N^*\). While the formula for the expectation is exact, we used a coarse approximation for the variance which neglects any dependencies. We just mention that \(R_{n+1}\) and \(R_{a;n+1}\) are positively correlated. This follows from the fact that apart from the factor \(\gamma \) the terms ‘\(\alpha \gamma S_n^2\)’, ‘\(\alpha \gamma S_n t\)’, and ‘\(\beta \gamma U_{n+1}\)’ in (20) and (21) coincide with terms in (3) and since \(R_{a;n+1}\) and \(R_{n+1}\) are both ‘large’ if the corresponding terms in (20), (21) and (3) are ‘large’.

Remark 6

(i) The stochastic representations (14) and (22) are essentially identical although (22) has slightly larger noise.

(ii) The ratio \(c_{\text {add}}/c_{\text {ER}}\) depends on the implementation.

3.2.3 Special values for \(\alpha \) and \(\beta \)

The stochastic behaviour of the Barrett multiplication algorithm depends on \(\alpha \) and \(\beta \). In particular, \(\alpha \) has significant impact on most of the attacks discussed in Sect. 4. In this subsection, we briefly analyse the extreme cases \(\alpha \approx 0\) and \(\beta \approx 0\).

The condition \(k=\lfloor \log _b M \rfloor +1\) (cf. Algorithm 1 and Algorithm 5) implies \(b^{k-1}\le M < b^k\). Now assume that \(b^k/2< M < b^k\), which is typically fulfilled if the modulus M has ‘maximum length’ (e.g. 1024 or 2048 bits). Then,

If \(b=2^\mathsf {ws}\) with \(\mathsf {ws}\gg 1\) (e.g. \(\mathsf {ws}\ge 16\)), then \(\beta \approx 0\). In this case, one may neglect the term ‘\(\beta U\)’ in (2), accepting a slight inaccuracy of the stochastic model.

Going one step further, cancelling ‘\(\beta U_{n+1}\)’ in (3) simplifies Stochastic Model 2, as (3) can be rewritten as \(R_{n+1}=1_{\{S_{n+1} < \alpha S_n^2\}}\) and \(R_{n+1}=1_{\{S_{n+1} < \alpha S_n t\}}\), respectively. This representation is equivalent to the Montgomery case (11), simplifying the computation of the variances, covariances and the probabilities in Lemma 7 (i) considerably (as for the Montgomery multiplication). The Barrett-specific features and difficulties nevertheless remain.
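The simplified representation for squarings lends itself to a quick Monte Carlo check of the 1-dependence stated in Lemma 7 (iii). The following Python sketch assumes \(0<\alpha \le 1\) and a sequence consisting of squarings only; the function names, the seed and the sample size are arbitrary illustrative choices.

```python
import random


def simulate_extra_reductions(alpha, n, seed=1):
    """Simulate R_{i+1} = 1{S_{i+1} < alpha * S_i^2} with iid uniform S_i on [0, 1)."""
    rng = random.Random(seed)
    s_prev = rng.random()
    rs = []
    for _ in range(n):
        s_next = rng.random()
        rs.append(1 if s_next < alpha * s_prev * s_prev else 0)
        s_prev = s_next
    return rs


def sample_cov(rs, lag):
    """Empirical covariance between R_i and R_{i+lag}."""
    n = len(rs) - lag
    mean_a = sum(rs[:n]) / n
    mean_b = sum(rs[lag:]) / n
    mixed = sum(rs[i] * rs[i + lag] for i in range(n)) / n
    return mixed - mean_a * mean_b


if __name__ == "__main__":
    rs = simulate_extra_reductions(alpha=0.9, n=200_000)
    print("mean      :", sum(rs) / len(rs))
    print("cov, lag 1:", sample_cov(rs, 1))
    print("cov, lag 2:", sample_cov(rs, 2))
```

Under this simplified model a direct calculation gives \({{\,\mathrm{E}\,}}(R_{n+1}) = \alpha /3\) and, for two consecutive squarings, \({{\,\mathrm{Cov}\,}}(R_n,R_{n+1}) = \alpha ^4/21 - \alpha ^2/9 < 0\), so the lag-1 estimate should be clearly negative while the lag-2 estimate should be close to zero.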

Now assume that \(b^{k-1} \le M < 2b^{k-1}\). Then,

If again \(b=2^\mathsf {ws}\) with \(\mathsf {ws}\gg 1\), e.g. \(\mathsf {ws}\ge 8\), then \(\alpha \approx 0\). As above we may neglect the terms ‘\(\alpha st\)’ in (2) and analogously ‘\(\alpha S_n^2\)’, resp. ‘\(\alpha S_n t\)’, in (3), which yields the representation \(R_{n+1} = 1_{\{S_{n+1} < \beta U_{n+1}\}}\). Consequently, \(R_1,R_2,\dotsc \) are iid \(\{0,1\}\)-valued random variables with \(\Pr (R_j=1)=\beta /2\). While \(\beta \approx 0\) is quite likely, the case \(\alpha \approx 0\) should occur rarely because the modulus would have to be only slightly larger than a power of \(b = 2^{\mathsf {ws}}\).

More generally, let us assume that \(b^{k-1}\le 2^{w'}b^{k-1}\le M < 2^{w'+1}b^{k-1}\le b^k\) for some \(w'\in \{0,\ldots ,\mathsf {ws}-1\}\). With the same strategy as in (23) and (24), we conclude

The impact of \(\alpha \approx 0\) and \(\beta \approx 0\) on the attacks in Sect. 4 will be discussed in Sect. 4.4.

3.3 A short summary

The stochastic process \(R_1,R_2,\ldots \) is the equivalent to \(W_1,W_2,\ldots \) (Montgomery multiplication). Both stochastic processes are 1-dependent. Hence, it is reasonable to assume that attacks on Montgomery’s multiplication algorithm can be transferred to implementations which use Barrett’s multiplication algorithm. In Sect. 4, we will see that this is indeed the case.

However, for Barrett’s multiplication algorithm additional problems arise. In particular, there is no equivalent to the characterization (10), which allows one to directly analyse the stochastic process \(W_1,W_2,\ldots \). For Barrett’s algorithm, a ‘detour’ to the two-dimensional Markov process \((S_i,R_i)_{i\in \mathbb {N}}\) is necessary. Moreover, for Montgomery’s multiplication algorithm, the respective integrals can be computed much more easily than for Barrett’s algorithm since simple closed formulae exist. If \(\beta \approx 0\), the evaluation of the integrals becomes easier (as for Montgomery’s algorithm), and if \(\alpha \approx 0\), the computations become very simple. For CRT implementations, the parameter estimation is more difficult for Barrett’s multiplication algorithm than for Montgomery’s algorithm. We return to these issues in Sect. 4.

4 Timing attacks against Barrett’s modular multiplication

The conditional extra reduction in Montgomery’s multiplication algorithm is the source of many timing attacks and local timing attacks [2, 3, 7, 13, 14, 22,23,24, 26,27,28,29]. Some of them even work in the presence of particular blinding mechanisms. When applied to modular exponentiation, the stochastic representations of the execution times are similar for Montgomery’s and Barrett’s multiplication algorithms. The analysis of Barrett’s algorithm, however, is mathematically more challenging as explained in Sects. 2 and 3. In Sects. 4.1 to 4.3, we transfer attacks on Montgomery’s multiplication algorithm to attacks on Barrett’s multiplication algorithm, where we assume that the ‘basic’ Algorithm 1 is applied. We point out that our attacks can be adjusted to Algorithm 5 (cf. Sect. 3.2.2).

4.1 Timing attacks on RSA without CRT and on DH

In this subsection, we assume that M is an RSA modulus or the modulus of a DH-group (i.e. a subgroup of \(\mathbb {F}_M^*\)) and that \(y^d \bmod {M}\) is computed with Algorithm 2, where \(d=(d_{\ell -1},\ldots ,d_0)_2\) is a secret exponent. Blinding techniques are not applied. We transfer the attack from Sect. 6 in [24] to Barrett’s multiplication algorithm and extend it by a look-ahead strategy.

The attacker (or evaluator) measures the execution times \(t_j={{\,\mathrm{Time}\,}}(y_j^d\bmod {M})\) for \(j=1,\ldots ,N\) for known bases \(y_j\). The \(t_j\) may be noisy (cf. (14)). Moreover, we assume that the attacker knows (or has estimated) c and \(c_{\text {ER}}\). (Sect. 6 in [29] explains a guessing procedure for Montgomery’s multiplication algorithm.) In a pre-step, the sum \(\ell +{{\,\mathrm{ham}\,}}(d)\) can be estimated in a straightforward way. We may assume that the attacker knows \(\ell \) and thus also \({{\,\mathrm{ham}\,}}(d)\). (If necessary the attack could be restarted with different candidates for \(\ell \). However, apart from its end the attack is robust against small deviations from the correct value \(\ell \).) At the beginning, the attacker subtracts the data-independent terms \(t_{\text {set}}\) and \((\ell +{{\,\mathrm{ham}\,}}(d)-2)c\) from the timings \(t_j\) and divides the differences by \(c_{\text {ER}}\), yielding the ‘discretized’ execution times \(t_{\text {d},1},\ldots ,t_{\text {d},N}\).
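The discretization step described above amounts to a simple affine transformation of the measured timings. A minimal Python sketch follows; the function name `discretize_timings` and the numerical constants are hypothetical, and the constants \(t_{\text {set}}\), c, \(c_{\text {ER}}\), \(\ell \) and \({{\,\mathrm{ham}\,}}(d)\) are assumed to be known or already estimated.

```python
def discretize_timings(timings, t_set, c, c_er, ell, ham_d):
    """Map raw timings t_j to discretized timings t_{d,j}.

    Subtracts the data-independent part t_set + (ell + ham_d - 2) * c and
    divides by c_ER. Without noise the result is the total number of extra
    reductions in the exponentiation.
    """
    data_independent = t_set + (ell + ham_d - 2) * c
    return [(t - data_independent) / c_er for t in timings]


if __name__ == "__main__":
    # Illustrative constants only: 10 Barrett operations costing 100 time
    # units each, 7 time units per extra reduction, setup time 50.
    raw = [1071.0, 1085.0, 1050.0]
    print(discretize_timings(raw, t_set=50.0, c=100.0, c_er=7.0, ell=8, ham_d=4))
```

With noisy measurements the discretized values are no longer integers; the deviation then corresponds to the rescaled noise term with variance \(\sigma ^2/c_{\text {ER}}^2\).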

The attack strategy is to guess the exponent bits \(d_{\ell -1}=1,d_{\ell -2},\ldots \) successively. For the moment we assume that the guesses \(\widetilde{d}_{\ell -1}=1, \widetilde{d}_{\ell -2}, \dotsc , \widetilde{d}_{k+1}\) have been correct. Now we focus on the guessing procedure of the exponent bit \(d_k\). Currently, Algorithm 2 ‘halts’ before the if-statement (for \(i=k\)) so that k squarings and m (calculated from \({{\,\mathrm{ham}\,}}(d)\) and the guesses \(\widetilde{d}_{\ell -1}, \dotsc , \widetilde{d}_{k+1}\)) multiplications still have to be carried out. On the basis of the previous guesses, the attacker computes the intermediate values \(x_j\), and the numbers of extra reductions needed for the squarings and multiplications executed so far are subtracted from the \(t_{\text {d},j}\), yielding the discretized remaining execution times \(t_{\text {drem},j}\). In terms of random variables, this reads

for \(1 \le j \le N\) with \(N_{\text {d},j} \sim \mathscr {N}(0,\sigma ^2/c_{\text {ER}}^2)\). Recall that the distribution of those \(R_i\), which belong to multiplications, depends on the basis \(y_j\); see, for example, the stochastic representation (3).

To optimize our guessing strategy, we apply statistical decision theory. We point the interested reader to [25], Sect. 2, where statistical decision theory is introduced in a nutshell and the presented results are tailored to side-channel analysis. In the following, \(\Theta := \{0,1\}\) denotes the parameter space, where \(\theta \in \Theta \) corresponds to the hypothesis \(d_k=\theta \).

We may assume that the probability that the exponent bit \(d_k\) equals \(\theta \) is approximately 0.5. (In the case of RSA, \(d_0=1\) and for indices k close to \(\lceil \log _2(n)\rceil \) the exponent bits may be biased.) More formally, if we view \(d_k,d_{k-1},\ldots \) as realizations of iid uniformly \(\{0,1\}\)-distributed random variables \(Z_k,Z_{k-1},\ldots \) we obtain the a priori distribution

To guess the next exponent bit \(d_k\), we employ a look-ahead strategy. For look-ahead depth \(\lambda \in \mathbb {N}_{\ge 1}\), the decision for exponent bit \(d_k\) is based on information obtained from the next \(\lambda \) exponent bits. As the attacker knows the intermediate value \(x_j\) (of sample j), he is able to determine the number of extra reductions needed to process the \(\lambda \) exponent bits \(d_k,\ldots ,d_{k-\lambda +1}\) for each of the \(2^{\lambda }\) admissible values \(\varvec{\rho }= (\rho _0,\ldots ,\rho _{\lambda -1})\in \{0,1\}^\lambda \). This yields the discretized time needed to process the left-over exponent bits \(d_{k-\lambda },\ldots ,d_0\), which is fictional except for the correct vector \(\varvec{\rho }\).

In this subsection, \(t_{\varvec{\rho }, j}\) denotes the number of extra reductions required to process the next \(\lambda \) exponent bits for the basis \(y_j\) if \((d_k,\ldots ,d_{k-\lambda +1}) = \varvec{\rho }\). For these computations, \(\lambda \) modular squarings and \({{\,\mathrm{ham}\,}}(\varvec{\rho })\) modular multiplications by \(y_j\) are performed. Furthermore, \(t_{\text {drem}, j} - t_{\varvec{\rho }, j}\) may be viewed as a realization of

By (8), Lemma 6 (ii), and Lemma 7 (ii), (iii), this random variable is approximately \(\mathscr {N}(e_{\varvec{\rho }, j}, v_{\varvec{\rho }, j})\)-distributed where

We define the observation space \(\varOmega := (\varOmega ')^N\) consisting of vectors \(\varvec{\omega }= (\omega _1,\dotsc ,\omega _N)\) of timing observations

for \(1 \le j \le N\). For the remainder of this subsection, we denote the Lebesgue density of \(\mathscr {N}(e_{\varvec{\rho }, j}, v_{\varvec{\rho }, j})\) by \(f_{\varvec{\rho },j}(\cdot )\). The joint distribution of all N traces is given by the product density \(f_{\varvec{\rho },1}(\cdot ) \cdots f_{\varvec{\rho },N}(\cdot )\) with the arguments \(t_{\text {drem}, 1}- t_{\varvec{\rho }, 1}, \ldots ,\) \(t_{\text {drem}, N}- t_{\varvec{\rho }, N}\). For \(\lambda =1\) we have \(\varvec{\rho }= \rho _0=\theta \), and for hypothesis \(d_k=\theta \) the distribution of the discretized computation time needed for the left-over exponent bits \(d_{k-\lambda },d_{k-\lambda -1},\ldots \) has the product density \(\prod _{j=1}^N f_{\theta ,j}(\cdot )\).

If \(\lambda > 1\), the situation is more complicated. More precisely, for hypothesis \(\theta \in \Theta \) the distribution of the left-over time is given by a convex combination of normal distributions with density \(\overline{f}_{\theta }:\varOmega \rightarrow \mathbb {R}\) given by

where the coefficients \(\mu _{\varvec{\rho }}\) are given by

Finally, we choose \(A = \Theta \) as the set of alternatives and consider the loss function

i.e. we penalize the wrong decisions (‘0’ instead of ‘1’, ‘1’ instead of ‘0’) equally since all subsequent guesses are then useless. We obtain the following optimal decision strategy for look-ahead depth \(\lambda \).

Decision Strategy 1

(Guessing \(d_k\)) Let \(\varvec{\omega }= (\omega _1,\ldots ,\omega _N)\) be a vector of N timing observations \(\omega _j\in \varOmega '\) as in (27). Then, the indicator function

is an optimal strategy (Bayes strategy) against the a priori distribution \(\eta \).

Proof

We interpret \(\omega _1,\omega _2,\ldots ,\omega _N\) as realizations of independent random vectors \(X_j := (T_{\text {drem}, j}, (T_{\varvec{\rho }, j})_{\varvec{\rho }\in \{0,1\}^{\lambda }})\) with values in \(\varOmega '\) for \(1 \le j \le N\); this independence was already assumed when (28) was developed. We denote by \(\mu \) the product of the Lebesgue measure on \(\mathbb {R}\) and the counting measure on \(\mathbb {N}^{2^\lambda }\). We equip \(\varOmega '\) with the product \(\sigma \)-algebra \(\mathscr {B}(\mathbb {R}) \otimes \mathscr {P}(\mathbb {N}^{2^\lambda })\). Then, \(\mu \) is a \(\sigma \)-finite measure on \(\varOmega '\), and the N-fold product measure \(\mu ^N = \mu \otimes \cdots \otimes \mu \) is \(\sigma \)-finite on the observation space \(\varOmega = \varOmega ' \times \cdots \times \varOmega '\). Note that \(\Pr ((T_{\varvec{\rho }, j})_{\varvec{\rho }\in \{0,1\}^{\lambda }} = (t_{\varvec{\rho }, j})_{\varvec{\rho }\in \{0,1\}^{\lambda }})\) is independent of \((d_k, \dotsc , d_{k-\lambda +1})\) and thus in particular independent of \(d_k\). Furthermore, this probability is \(>0\) because all alternatives \(\varvec{\rho }\) are possible in principle. Define \(C_j := \mathbb {R}\times \prod _{\varvec{\rho }\in \{0,1\}^\lambda } \{t_{\varvec{\rho }, j}\}\) and \(C := \prod _{j=1}^N C_j\subseteq \varOmega \). (Here, \(\prod \) denotes the Cartesian product of sets.) If \(d_k = \theta \), then the conditional probability distribution of \((X_1, \dotsc , X_N)\) given C is \(\overline{f}_{\theta }\cdot \mu ^N\). Thus, all conditions of Theorem 1 (iii) in [25] are fulfilled, and this completes the proof. \(\square \)

For look-ahead depth \(\lambda =1\), Decision Strategy 1 is essentially equivalent to Theorem 6.5 (i) in [24].

Remark 7

All decisions after a wrong bit guess are useless because the attacker then computes wrong intermediate values \(x'_1,\ldots ,x'_N\) and therefore values \(t'_{\varvec{\rho }, j}\) that are not correlated with the correct number of extra reductions \(t_{\varvec{\rho }, j}\). However, the situation is not symmetric in 0 and 1 because for \(d_k=0\) one uncorrelated term and for \(d_k=1\) two uncorrelated terms are subtracted. In [28] (look-ahead depth \(\lambda =1\)) an efficient three-option error detection and correction strategy was developed for Montgomery’s multiplication algorithm, which reduced the number of attack traces by \(\approx 40 \%\). We do not develop an equivalent strategy for Barrett’s multiplication algorithm but apply a dynamic look-ahead strategy. This is much more efficient, as we will see in Sect. 5.1. To the best of our knowledge, this look-ahead strategy is new, apart from the fact that the idea was very roughly sketched in [24], Remark 4.1.

4.2 Timing attacks on RSA with CRT

The references [3, 7, 22] introduce, analyse, or improve timing attacks on RSA implementations which use the CRT and Montgomery’s multiplication algorithm, including the square & multiply exponentiation algorithm and table-based exponentiation algorithms. Moreover, these attacks can be extended to implementations which are protected by exponent blinding [26, 27].

Unless stated otherwise, we assume in this subsection that RSA with CRT applies Algorithm 6 with Barrett’s multiplication (Algorithm 1). Let \(n=p_1p_2\) be an RSA modulus, let d be a secret exponent, and let \(y \in \mathbb {Z}_n\) be a basis. We set \(y_{(i)} := y \bmod {p_i}\) and \(d_{(i)} := d \bmod {(p_i-1)}\) for \(i=1,2\). For \(y \in \mathbb {Z}_n\) let \(T(y):={{\,\mathrm{Time}\,}}(y^d \bmod {n})\).

Let \(\nu := \lfloor \log _2 n \rfloor + 1\) be the bit-length of n. We may assume that \(p_1, p_2\) have bit-length \(\approx \nu /2\) and that \(d_{(1)}, d_{(2)}\) have Hamming weight \(\approx \nu /4\). From (4), we obtain

where \(t_i := y_{(i)}/p_i\). Now assume that \(0<u_1<u_2<n\) with \(u_2-u_1 \ll p_1,p_2\). Three cases are possible:

- Case A: \(\{u_1+1,\ldots ,u_2\}\) does not contain a multiple of \(p_1\) or \(p_2\).

- Case B\({}_{i}\): \(\{u_1+1,\ldots ,u_2\}\) contains a multiple of \(p_i\), but not of \(p_{3-i}\).

- Case C: \(\{u_1+1,\ldots ,u_2\}\) contains a multiple of both \(p_1\) and \(p_2\).
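The three cases can be made concrete with a small helper that is useful when simulating the attack (the attacker, of course, does not know \(p_1\) and \(p_2\)). This is only an illustrative sketch; the function names `contains_multiple` and `classify_interval` are hypothetical.

```python
def contains_multiple(u1, u2, p):
    """True iff the set {u1+1, ..., u2} contains a multiple of p."""
    return u2 // p > u1 // p


def classify_interval(u1, u2, p1, p2):
    """Return 'A', 'B1', 'B2' or 'C' for the set {u1+1, ..., u2}."""
    hit1 = contains_multiple(u1, u2, p1)
    hit2 = contains_multiple(u1, u2, p2)
    if hit1 and hit2:
        return "C"
    if hit1:
        return "B1"
    if hit2:
        return "B2"
    return "A"
```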

By (31), we conclude

because if \(p_i\) is in \(\{u_1+1,\ldots ,u_2\}\), then

For RSA without CRT, the parameters \(\alpha \) and \(\beta \) can easily be calculated, while for RSA with CRT, the parameters \(\alpha _1,\alpha _2,\beta _1,\beta _2\) are unknown and thus need to be estimated.

We note that

The parameter \(\beta _i\) is not sensitive to small deviations of \(p_i\) and can be approximated by \(\lfloor b^{2k}/p_i\rfloor / b^{k+1}\approx b^{k-1}/p_i\approx b^{k-1}/\sqrt{n}\in (0,1)\). However, this estimate can be improved at the end of attack phase 1 below because more precise information on \(p_1\) and \(p_2\) is then available. We mention that in the context of this timing attack the knowledge of \(\beta _1\) and \(\beta _2\) is only relevant for estimating \({{\,\mathrm{Var}\,}}(T(y))\), which allows one to determine an appropriate sample size for the attack steps. Unlike \(\beta _i\), the second factor \(\{b^{2k}/p_i\}\) of \(\alpha _i=(p_i^2/b^{2k})\{b^{2k}/p_i\}\), and thus \(\alpha _i\) itself, is very sensitive to deviations of \(p_i\) since \(p_i\ll b^{2k}\).
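The different sensitivity of \(\alpha _i\) and \(\beta _i\) can be checked numerically. The following sketch evaluates \(\alpha =(p^2/b^{2k})\{b^{2k}/p\}\) and \(\beta =\lfloor b^{2k}/p\rfloor /b^{k+1}\) with exact rational arithmetic; the function name `barrett_parameters`, the default base \(b=2^8\), and the choice \(k=\lfloor \log _b p\rfloor +1\) are assumptions made for illustration.

```python
from fractions import Fraction


def barrett_parameters(p, b=2**8):
    """Return (alpha, beta) for modulus p as floats.

    alpha = (p^2 / b^(2k)) * frac(b^(2k) / p)
    beta  = floor(b^(2k) / p) / b^(k+1)
    where k is chosen such that b^(k-1) <= p < b^k (assumption).
    """
    k = 1
    while b**k <= p:
        k += 1
    big = b**(2 * k)
    ratio = Fraction(big, p)
    frac_part = ratio - (big // p)            # fractional part {b^(2k)/p}
    alpha = Fraction(p * p, big) * frac_part
    beta = Fraction(big // p, b**(k + 1))
    return float(alpha), float(beta)
```

Evaluating `barrett_parameters` for a few neighbouring moduli typically shows \(\beta \) changing only in its low-order digits, while \(\alpha \) may vary over its whole range.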

In the remainder of this subsection, we assume \(p_1< p_2 < 2 p_1\), i.e. that \(p_1, p_2\) have bit-length \(\ell \approx \nu /2\). It follows that the interval \(I_1 := \bigl (\sqrt{n/2}, \sqrt{n}\bigr )\) contains \(p_1\) but no multiple of \(p_2\) and the interval \(I_2 := \bigl (\sqrt{n}, \sqrt{2n}\bigr )\) contains \(p_2\) but no multiple of \(p_1\). (In the general case, we would have to guess \(r \in \mathbb {N}_{\ge 2}\) such that \((r-1) p_1< p_2 < r p_1\). Then, \(I_1 := \bigl (\sqrt{n/r}, \sqrt{n/(r-1)}\bigr )\) contains \(p_1\) but no multiple of \(p_2\) and \(I_2 := \bigl (\sqrt{(r-1)n}, \sqrt{rn}\bigr )\) contains \(p_2\) but no multiple of \(p_1\).) Let \(u'_0 := \lceil \sqrt{n/2}\rceil< u'_1< \dotsc < u'_h := \lfloor \sqrt{n}\rfloor \) be approximately equidistant integers in \(I_1\) and let \(u''_0 := \lceil \sqrt{n}\rceil< u''_1< \dotsc < u''_h := \lfloor \sqrt{2n}\rfloor \) be approximately equidistant integers in \(I_2\), where \(h \in \mathbb {N}\) is a small constant (say \(h=4\)). Further, define

The goal of attack phase 1 is to identify \(j', j''\) such that \(p_1 \in [u'_{j'-1}, u'_{j'}]\) and \(p_2 \in [u''_{j''-1}, u''_{j''}]\). The selection of \(j'\) and \(j''\) follows from the quantitative interpretation of (32). If \(\alpha _i\) is small but \(\alpha _{3-i}\) is significantly larger, the decision (for \(j'\), resp., for \(j''\)) in attack phase 1 might be incorrect, but this is of minor importance since attack phase 2 searches for \(p_{3-i}\) anyway. If both \(\alpha _1\) and \(\alpha _2\) are small, the efficiency of the attack is low anyway. To be on the safe side, one may then repeat phase 1 with a larger sample size \(N_1\). Moreover, (33) allows one to check the selection of \(j'\) and \(j''\).
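The grid points \(u'_0,\dotsc ,u'_h\) and \(u''_0,\dotsc ,u''_h\) for the case \(p_1<p_2<2p_1\) could be computed as in the following sketch. It assumes that n is odd and not a perfect square (so that each ceiling equals the corresponding floor plus one); the function names `grid` and `phase1_grids` are hypothetical.

```python
from math import isqrt


def grid(lo, hi, h):
    """h+1 approximately equidistant integers from lo to hi (inclusive)."""
    return [lo + (j * (hi - lo)) // h for j in range(h + 1)]


def phase1_grids(n, h=4):
    """Grid points in I_1 = (sqrt(n/2), sqrt(n)) and I_2 = (sqrt(n), sqrt(2n)).

    Assumes n = p1*p2 is odd and not a perfect square, hence
    ceil(sqrt(n/2)) = isqrt(n // 2) + 1 and ceil(sqrt(n)) = isqrt(n) + 1.
    """
    lower = grid(isqrt(n // 2) + 1, isqrt(n), h)    # u'_0, ..., u'_h
    upper = grid(isqrt(n) + 1, isqrt(2 * n), h)     # u''_0, ..., u''_h
    return lower, upper
```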

Attack Phase 1

(1) Select an appropriate integer \(N_1\).

(2) For \(j = 1, \dotsc , h\), compute

$$\begin{aligned} \delta '_j&\leftarrow {{\,\mathrm{MeanTime}\,}}(u'_{j-1}, N_1) - {{\,\mathrm{MeanTime}\,}}(u'_j, N_1) \quad \text {and} \\ \delta ''_j&\leftarrow {{\,\mathrm{MeanTime}\,}}(u''_{j-1}, N_1) - {{\,\mathrm{MeanTime}\,}}(u''_j, N_1) . \end{aligned}$$

(3) Set \(j' \leftarrow {\mathop {\hbox {arg max}}\limits }_{1 \le j \le h} \{\delta '_j\}\) and \(j'' \leftarrow {\mathop {\hbox {arg max}}\limits }_{1 \le j \le h} \{\delta ''_j\}\). (The attacker believes that \(p_1 \in [u'_{j'-1}, u'_{j'}]\) and \(p_2 \in [u''_{j''-1}, u''_{j''}]\).)

(4) Set \(\widetilde{\alpha }_1 \leftarrow 8 \delta '_{j'}/(\nu c_{\text {ER}})\) and \(\widetilde{\alpha }_2 \leftarrow 8 \delta ''_{j''}/(\nu c_{\text {ER}})\) (estimates for \(\alpha _1\) and \(\alpha _2\)).

(5) Set \(\widetilde{\beta }_1 \leftarrow 2 b^{k-1}/(u'_{j'-1}+u'_{j'})\) and \(\widetilde{\beta }_2 \leftarrow 2 b^{k-1}/(u''_{j''-1}+u''_{j''})\) (estimates for \(\beta _1\) and \(\beta _2\)).
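Steps (2) to (5) of attack phase 1 can be sketched as follows. The timing measurements are abstracted into a callable `mean_time(u, N)` supplied by the caller, which stands in for \({{\,\mathrm{MeanTime}\,}}(u,N)\); this callable, the function name `attack_phase1`, and the parameter names are assumptions of this sketch.

```python
def attack_phase1(lower, upper, mean_time, n1, nu, c_er, b, k):
    """Sketch of attack phase 1.

    lower, upper: grid points u'_0..u'_h and u''_0..u''_h
    mean_time:    callable (u, N) -> mean execution time (assumed oracle)
    Returns ((j', alpha1_est, beta1_est), (j'', alpha2_est, beta2_est)).
    """
    def one_side(points):
        h = len(points) - 1
        # delta_j = MeanTime(u_{j-1}, N1) - MeanTime(u_j, N1) for j = 1..h
        deltas = [mean_time(points[j - 1], n1) - mean_time(points[j], n1)
                  for j in range(1, h + 1)]
        j_best = max(range(1, h + 1), key=lambda j: deltas[j - 1])
        alpha_est = 8 * deltas[j_best - 1] / (nu * c_er)
        beta_est = 2 * b**(k - 1) / (points[j_best - 1] + points[j_best])
        return j_best, alpha_est, beta_est

    return one_side(lower), one_side(upper)
```

In a simulation, `mean_time` may be replaced by a noiseless model in which the expected time is affine in \((u \bmod p_i)/p_i\).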

Attack Phase 2

(1) If \(\widetilde{\alpha }_1 > \widetilde{\alpha }_2\), then set \(i \leftarrow 1\), \(u_1 \leftarrow u'_{j'-1}\), and \(u_2 \leftarrow u'_{j'}\); else set \(i \leftarrow 2\), \(u_1 \leftarrow u''_{j''-1}\), and \(u_2 \leftarrow u''_{j''}\). (Attack phase 2 searches for \(p_i\) iff \(\widetilde{\alpha }_i > \widetilde{\alpha }_{3-i}\). This prime is assumed to be contained in \([u_1,u_2]\).)

(2) Select \(N_2\) (depending on \(\widetilde{\alpha }_i\) and \({{\,\mathrm{Var}\,}}_{\widetilde{\alpha }_1,\widetilde{\alpha }_2,\widetilde{\beta }_1,\widetilde{\beta }_2}(T(u))\)).

(3) While \(\log _2(u_2-u_1) > \ell /2 - 6\), do the following:

(a) Set \(u_3 \leftarrow \lfloor (u_1+u_2)/2 \rfloor \).

(b) If

$$\begin{aligned}&{{\,\mathrm{MeanTime}\,}}(u_2,N_2)-{{\,\mathrm{MeanTime}\,}}(u_3,N_2) \\&> -\tfrac{1}{16} \nu \widetilde{\alpha }_i c_{\text {ER}}, \end{aligned}$$

then set \(u_2 \leftarrow u_3\) (the attacker believes that Case A is correct); else set \(u_1 \leftarrow u_3\) (the attacker believes that Case B\({}_i\) is correct).

The decision rule follows from (32) (Case A vs. Case B\({}_i\)). After phase 2 more than half of the upper bits of \(u_1\) and \(u_2\) coincide, which yields more than half of the upper bits of \(p_i\) (more precisely, \(\approx \ell /2+6\)). This enables attack phase 3.
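The interval bisection of attack phase 2, step (3), can be sketched as follows. As before, the timing measurements are abstracted into a caller-supplied callable `mean_time(u, N)` standing in for \({{\,\mathrm{MeanTime}\,}}(u,N)\); this callable, the function name `attack_phase2`, and the additional guard `u2 - u1 > 1` (which merely prevents a degenerate interval) are assumptions of this sketch. The verification of confirmed intervals is not included.

```python
from math import log2


def attack_phase2(u1, u2, mean_time, n2, nu, alpha_est, c_er, ell):
    """Sketch of the bisection in attack phase 2.

    Narrows [u1, u2] until log2(u2 - u1) <= ell/2 - 6, where ell is the
    bit-length of the targeted prime.
    mean_time: callable (u, N) -> mean execution time (assumed oracle)
    """
    threshold = -nu * alpha_est * c_er / 16
    while u2 - u1 > 1 and log2(u2 - u1) > ell / 2 - 6:
        u3 = (u1 + u2) // 2
        if mean_time(u2, n2) - mean_time(u3, n2) > threshold:
            u2 = u3  # Case A believed: no prime in the upper half
        else:
            u1 = u3  # Case B_i believed: prime in the upper half
    return u1, u2
```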

Attack Phase 3

(1) Compute \(p_i\) with Coppersmith’s algorithm [11].

Of course, all decisions in attack phase 2 (including the initial choice of \(u_1\) and \(u_2\)) need to be correct. However, it is very easy to verify from time to time whether all decisions in attack phase 2 have been correct so far, or equivalently, whether the current interval \((u_1,u_2)\) indeed contains \(p_i\). If

this confirms the assumption that \((u_1,u_2)\) contains \(p_i\), and \((u_1,u_2)\) is called a ‘confirmed interval’, but if not, one computes \({{\,\mathrm{MeanTime}\,}}(u_2+2N_2,N_2)-{{\,\mathrm{MeanTime}\,}}(u_3+2N_2,N_2)\). If this difference is \(<-\frac{1}{16} \nu \widetilde{\alpha }_i c_{\text {ER}}\), then \((u_1,u_2)\) becomes a confirmed interval. Otherwise, the attack goes back to the preceding confirmed interval \((u_{1;c},u_{2;c})\) and restarts with values in the neighbourhood of \(u_{1;c}\) and \(u_{2;c}\) that were not used when the attack was previously at this stage.

Remark 8

(i) Similarities to Montgomery’s multiplication algorithm. By (4), the expected number of extra reductions needed for a multiplication by \(y_i:=y \bmod \,p_i\) is an affine function in \(t_i=y_i/p_i\). (For Montgomery’s multiplication algorithm, it is a linear function in \((yR \bmod \,p_i)/p_i\), cf. (12).) As for Montgomery’s multiplication algorithm, (31) allows one to decide whether an interval contains a prime \(p_1\) or \(p_2\) and finally to factorize the RSA modulus n.

(ii) Differences to Montgomery’s multiplication algorithm. If \(y_1<p_i<y_2\), the expectation \({{\,\mathrm{E}\,}}(T(y_1)-T(y_2))\) is linear in \(\alpha _i\), which is very sensitive to variations in \(p_i\). Consequently, the attack efficiency may differ considerably depending on whether the attacker targets \(p_1\) or \(p_2\). This is unlike Montgomery’s multiplication algorithm, where the corresponding expectation is linear in \(p_i/R\approx \sqrt{n}/R\). As a consequence, attack phase 1 differs substantially depending on whether the targeted implementation applies Barrett’s or Montgomery’s multiplication algorithm.

It should be noted that this timing attack against Barrett’s multiplication algorithm can be adapted to fixed window exponentiation and sliding window exponentiation and also works against exponent blinding. For table-based methods, the timing difference in (32) gets smaller, while exponent blinding causes large algorithmic noise. In both cases, the parameters \(N_1\) and \(N_2\) must be selected considerably larger, which of course lowers the efficiency of the timing attack. This is rather similar to timing attacks on Montgomery’s multiplication algorithm [26, 27].

4.3 Local timing attacks

Unlike for the ‘pure’ timing attacks discussed in Sects. 4.1 and 4.2, we assume that a potential attacker is able to measure not only the overall execution time but also the timing of each squaring and multiplication, which means that he knows the number of extra reductions. This may be achieved by power measurements. In [2], an instruction cache attack was applied against Montgomery’s multiplication algorithm. The task of a spy process was to detect when a particular routine from the BIGNUM library is called, which is only used to calculate the extra reduction. This approach may not be applicable against Barrett’s multiplication algorithm because here more than one extra reduction is possible.