Abstract

In this paper, we introduce a proximal point-type viscosity iterative method with a double implicit midpoint rule comprising a nonexpansive mapping and the resolvents of a monotone operator and a bifunction. Furthermore, we establish that the sequence generated by our proposed algorithm converges strongly to an element in the intersection of the solution sets of a monotone inclusion problem, an equilibrium problem and a fixed point problem for a nonexpansive mapping in complete CAT(0) spaces. In addition, we give numerical examples of our method, one in a finite-dimensional Euclidean space and one in a non-Hilbert space setting, to show its applicability. Our results complement many recent results in the literature.

1 Introduction

Let (X, d) be a metric space and \(x,y\in X\). A geodesic path c joining x to y is an isometry \(c:I=[0,d(x,y)] \rightarrow X\) such that \(c(0)=x\), \(c(d(x,y))=y\) and \(d(c(t),c(t'))=|t-t'|\) for all \(t,t'\in I\). The image of a geodesic path is called a geodesic segment, which is denoted by [x, y] whenever it is unique. We say that a metric space X is a geodesic space if for every pair of points \(x,y \in X\), there is a minimal geodesic from x to y. A geodesic triangle \(\Delta (x_1,x_2,x_3)\) in a geodesic metric space (X, d) consists of three vertices (points in X) with an unparameterized geodesic segment between each pair of vertices. For any geodesic triangle, there is a comparison (Alexandrov) triangle \(\bar{\Delta }\subset \mathbb {R}^2\) such that \(d(x_i,x_j)=d_{\mathbb {R}^2}(\bar{x}_i,\bar{x}_j)\) for \(i,j\in \{1,2,3\}\). A geodesic space X is a CAT(0) space if the distance between an arbitrary pair of points on a geodesic triangle \(\Delta \) does not exceed the distance between the corresponding pair of points on its comparison triangle \(\bar{\Delta }\). If \(\Delta \) is a geodesic triangle and \(\bar{\Delta }\) is its comparison triangle in \(\mathbb {R}^2\), then \(\Delta \) is said to satisfy the CAT(0) inequality if, for all points x, y of \(\Delta \) and the corresponding points \(\bar{x},\bar{y}\) of \(\bar{\Delta }\),

$$\begin{aligned} d(x,y)\le d_{\mathbb {R}^2}(\bar{x},\bar{y}). \qquad (1.1) \end{aligned}$$

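Let x, y, z be points in X and let \(y_0\) be the midpoint of the segment [y, z]. Then the CAT(0) inequality implies

$$\begin{aligned} d^2(x,y_0)\le \frac{1}{2}d^2(x,y)+\frac{1}{2}d^2(x,z)-\frac{1}{4}d^2(y,z). \qquad (1.2) \end{aligned}$$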

Inequality (1.2) is known as the CN inequality of Bruhat and Tits [12]. A geodesic space X is said to be a CAT(0) space if all geodesic triangles satisfy the CAT(0) inequality. Equivalently, X is a CAT(0) space if and only if it satisfies the CN inequality. Examples of CAT(0) spaces include Hilbert spaces, Hadamard manifolds, \(\mathbb {R}\)-trees [28], pre-Hilbert spaces [11], hyperbolic spaces [42], and the Hilbert ball [21].
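As a quick sanity check of the CN inequality, the sketch below evaluates both sides of \(d^2(x,y_0)\le \frac{1}{2}d^2(x,y)+\frac{1}{2}d^2(x,z)-\frac{1}{4}d^2(y,z)\) for random points of the Euclidean plane, where \(y_0\) is the midpoint of [y, z]. In a Hilbert space the inequality holds with equality, so the gap should vanish up to rounding. The helper names `dist` and `cn_gap` are illustrative and not part of the paper.

```python
import math
import random


def dist(p, q):
    """Euclidean distance between two points of R^2."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def cn_gap(x, y, z):
    """Right-hand side minus left-hand side of the CN inequality."""
    y0 = ((y[0] + z[0]) / 2.0, (y[1] + z[1]) / 2.0)  # midpoint of [y, z]
    lhs = dist(x, y0) ** 2
    rhs = 0.5 * dist(x, y) ** 2 + 0.5 * dist(x, z) ** 2 - 0.25 * dist(y, z) ** 2
    return rhs - lhs


def random_point():
    return (random.uniform(-5.0, 5.0), random.uniform(-5.0, 5.0))


random.seed(0)
gaps = [cn_gap(random_point(), random_point(), random_point()) for _ in range(1000)]
max_abs_gap = max(abs(g) for g in gaps)
print("largest |gap| over 1000 random triples:", max_abs_gap)
```

In a CAT(0) space that is not a Hilbert space, such as an \(\mathbb {R}\)-tree, the gap can be strictly positive.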

Monotone operator theory remains one of the most important aspects of nonlinear and convex analysis. It plays an essential role in optimization, variational inequalities, semigroup theory and evolution equations. One of the vital problems in monotone operator theory is the following nonlinear stationary problem:

$$\begin{aligned} \text {find}~x\in \mathbb {D}(A)~\text {such that}~0\in Ax, \qquad (1.3) \end{aligned}$$

where A is a monotone operator and \(\mathbb {D}(A)\) is the domain of A defined by \(\mathbb {D}(A) = \{x \in X : Ax \ne \emptyset \}.\) Problem (1.3) is called the Monotone Inclusion Problem (MIP), and its solution set, denoted by \(A^{-1}(0),\) is closed and convex. The MIP is known to be very useful in solving well-known problems such as the minimization problem and the variational inequality problem. Therefore, it is of great importance in convex and nonlinear analysis and in optimization theory.

An equally significant optimization problem is the Equilibrium Problem (EP), which also extends and unifies other optimization problems such as minimization problems, variational inequality problems, Nash equilibrium problems, complementarity problems and fixed point problems, among others (see [2, 3, 38, 39, 48] and the references therein). Thus, EPs are of high importance in optimization theory. Let D be a nonempty subset of X and \(f : D \times D \rightarrow \mathbb {R}\) be a bifunction. The EP for f is to find \(x^*\in D\) such that

$$\begin{aligned} f(x^*,y)\ge 0~~\forall ~y\in D. \qquad (1.4) \end{aligned}$$

The point \(x^*\) for which (1.4) is satisfied is called an equilibrium point of f and we denote the solution set of problem (1.4) by EP(f, D). Several types of bifunctions for EPs have been studied extensively in Hilbert, Banach and topological vector spaces, as well as in Hadamard manifolds by many researchers (see [10, 13, 14, 22, 35, 40, 49] and other references therein). In an attempt to study the EP in complete CAT(0) spaces, Kumam and Chaipunya [30] introduced the resolvent of the bifunction f associated with the EP (1.4) (see also [23]). Define a perturbed bifunction \(\bar{f}_{\bar{x}}: D \times D \rightarrow \mathbb {R}\) of f by

The perturbed bifunction \(\bar{f}_{\bar{x}}\) has a unique equilibrium called the resolvent operator \(J^f : X \rightarrow 2^D\) defined by

It was established in [30] that \(F(J^f) = EP(f, D),\) \(J^f\) is well defined, single valued and \(\mathbb {D}(J^f) = X,\) under some assumptions.

The Proximal Point Algorithm (PPA) is one of the most effective methods for finding solutions of optimization problems. The PPA was introduced by Martinet [33] in Hilbert spaces. Later, Rockafellar [43] developed it and proved that it converges weakly to a zero of a monotone operator. The PPA was first studied by Bačák [7] in complete CAT(0) spaces to find minimizers of proper, convex and lower semicontinuous functionals and he established the \(\Delta \)-convergence of the PPA. Khatibzadeh and Ranjbar [27] also studied the PPA in complete CAT(0) spaces for approximating solutions of (1.3). They established that the PPA involving a monotone operator \(\Delta \)-converges to a zero of the monotone operator in complete CAT(0) spaces. Very recently, Dehghan et al. [15] proposed a Halpern-type PPA for approximating a common solution of a finite family of MIPs. They proved that the proposed PPA converges strongly to an element in the set of solutions of the MIPs. The PPA was also studied by Kumam and Chaipunya [30] for approximating solutions of (1.4) in CAT(0) spaces.
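To make the PPA concrete, here is a minimal sketch on the real line, assuming the classical Hilbert-space form \(x_{n+1} = (I + \lambda A)^{-1}x_n\) of Martinet and Rockafellar. The operator \(A(x) = x - c\), the constant `c` and the function names are illustrative choices, not taken from the paper.

```python
def resolvent(x, lam, c=3.0):
    """Resolvent (I + lam*A)^{-1} of the monotone operator A(x) = x - c.

    Solving z + lam * (z - c) = x for z gives the closed form below.
    """
    return (x + lam * c) / (1.0 + lam)


def proximal_point(x0, lam=1.0, steps=60):
    """Iterate x_{n+1} = J_lam(x_n); the iterates approach the zero of A."""
    x = x0
    for _ in range(steps):
        x = resolvent(x, lam)
    return x


limit = proximal_point(10.0)
print("PPA iterate after 60 steps:", limit)  # the unique zero of A is c = 3
```

Each step only requires evaluating the resolvent, which is why the properties of resolvents recalled in Section 2 drive the convergence analysis.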

The Viscosity Iterative Method (VIM) is another reliable iterative method because of its advantages over other iterative methods. In fact, the Halpern iterative method is a particular case of the VIM. In 2000, the VIM was introduced by Moudafi [34] in a real Hilbert space, where he used a strict contraction to regularize a nonexpansive mapping for the sole aim of obtaining a fixed point of the nonexpansive mapping. Since then, many researchers have obtained convergence results using the VIM in spaces more general than Hilbert spaces (see for example [24, 26, 31, 56] and other references therein). The VIM has also been studied extensively in the framework of complete CAT(0) spaces (see [5, 23, 46, 53, 54]). Like other types of iterative methods, the PPA type of VIM has also been studied in the setting of CAT(0) spaces. For instance, in [19], Eskandani and Raeisi studied the PPA type of VIM associated with a product of finitely many resolvents of monotone operators to find a common zero of a finite family of monotone operators. They obtained the strong convergence of the PPA under appropriate conditions. Also, in [23], Izuchukwu et al. introduced a PPA type of VIM which comprises a nonexpansive mapping and a finite sum of resolvent operators associated with monotone bifunctions. They proved a strong convergence theorem for approximating solutions of a finite family of equilibrium problems and a fixed point problem for a nonexpansive mapping in a complete CAT(0) space.

In the same vein, the Implicit Midpoint Rule (IMR) is one of the most potent techniques for solving differential algebraic equations and Ordinary Differential Equations (ODEs) due to its ability to eliminate stability errors in systems of ODEs (see [6, 8, 44, 47, 52] for details). The IMR improves a numerical method by evaluating at a midpoint within each step, which increases the accuracy by one order. Consider an ODE

$$\begin{aligned} x'(t)=g(x(t)),~~x(0)=x_0, \qquad (1.6) \end{aligned}$$

where \(g:\mathbb {R}^N \rightarrow \mathbb {R}^N\) is a continuous function. The IMR (see [4]) is a recursion procedure that generates the sequence \(\{x_n\}\) by

$$\begin{aligned} x_{n+1}=x_n+hg\left( \frac{x_n+x_{n+1}}{2}\right) , \qquad (1.7) \end{aligned}$$

where \(h > 0\) is the step size. It is generally known that if g is Lipschitz continuous and sufficiently smooth, then the sequence \(\{x_n\}\) converges to an exact solution of (1.6) as \(h\rightarrow 0\) uniformly over \(t\in [0,T]\) for any fixed \(T > 0.\) In 2015, Xu et al. [57] introduced a unification of the VIM and IMR associated with a nonexpansive mapping in a real Hilbert space. They established that the sequence generated from the unification converges to a fixed point of the nonexpansive mapping which is also a unique solution of some variational inequality problem. Based on the work of Xu et al. [57], Zhao et al. [58] proposed a VIM for IMR in complete CAT(0) spaces as follows:

where g is a contraction, \(\alpha _n \in (0, 1)\) and T is a nonexpansive mapping on D. They established that (1.8) converges to a fixed point of the nonexpansive mapping T. Ahmad and Ahmad [1] also proposed a VIM type of IMR as follows: For arbitrary initial point \(x_1\in D,\) the sequence \(\{x_n\}\) is generated by

where \(\{\alpha _n\}, \{\beta _n\}\) and \(\{\gamma _n\}\) are sequences in (0, 1), g is a contraction with a coefficient \(\theta \in [0, 1)\) and T is a nonexpansive mapping on D. They also obtained that (1.9) converges strongly to a fixed point of the nonexpansive mapping which is also the unique solution of some variational inequality.
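The implicit character of the midpoint rule recalled above can be illustrated on a scalar ODE. The sketch below applies the recursion \(x_{n+1} = x_n + h\,g\big (\frac{x_n + x_{n+1}}{2}\big )\) to the test problem \(x' = -x\), \(x(0) = 1\), and resolves each implicit step by an inner fixed-point iteration. The test problem, the inner solver and all names are illustrative assumptions; the inner iteration is a contraction only when h times the Lipschitz constant of g is less than 2.

```python
import math


def implicit_midpoint_step(g, x, h, inner_iters=50):
    """Solve z = x + h * g((x + z) / 2) for z by fixed-point iteration."""
    z = x
    for _ in range(inner_iters):
        z = x + h * g((x + z) / 2.0)
    return z


def integrate(g, x0, h, n_steps):
    """Advance the implicit midpoint rule n_steps times from x0."""
    x = x0
    for _ in range(n_steps):
        x = implicit_midpoint_step(g, x, h)
    return x


approx = integrate(lambda x: -x, 1.0, 0.01, 100)  # approximates x(1)
error = abs(approx - math.exp(-1.0))
print("IMR approximation of exp(-1):", approx, "error:", error)
```

The viscosity variants (1.8) and (1.9) share the same structure: the unknown \(x_{n+1}\) appears on both sides, and nonexpansivity of T keeps the implicit step well defined.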

Motivated by the results of Khatibzadeh and Ranjbar [27], Kumam and Chaipunya [30], Izuchukwu et al. [23], Zhao et al. [58], and Ahmad and Ahmad [1], we introduce a PPA-type VIM with a double IMR comprising a nonexpansive mapping and the resolvents of a monotone operator and a bifunction. With a double midpoint, our method tends to have better accuracy than (1.8) and (1.9). We establish that the sequence generated by our proposed algorithm converges strongly to an element in the intersection of the solution sets of the MIP, the EP and the fixed point problem for a nonexpansive mapping in complete CAT(0) spaces. In addition, we give numerical examples of our method, one in a finite-dimensional Euclidean space and one in a non-Hilbert space setting, to show its applicability. Our results complement many recent results in the literature.

2 Preliminaries

We state some known and useful results which will be needed in the proof of our main results (see [36, 51] for details). In the sequel, we denote strong and \(\Delta \)-convergence by “\(\rightarrow \)” and “\(\rightharpoonup \)” respectively.

Definition 2.1

Let \(\{x_n\}\) be a bounded sequence in X and \(r(\cdot , \{x_n\}): X\rightarrow [0, \infty )\) be a continuous functional defined by \(r(x, \{x_n\}) = \limsup \limits _{n\rightarrow \infty }d(x, x_n).\) The asymptotic radius of \(\{x_n\}\) is given by \(r(\{x_n\}):= \inf \{r(x, \{x_n\}): x\in X\},\) while the asymptotic center of \(\{x_n\}\) is the set \(A(\{x_n\}) = \{x\in X : r(x, \{x_n\})= r(\{x_n\})\}.\) It is well known that in an Hadamard space X, \(A(\{x_n\})\) consists of exactly one point. A sequence \(\{x_n\}\) in X is said to be \(\Delta -\)convergent to a point \(x\in X\) if \(A(\{x_{n_k}\}) = \{x\}\) for every subsequence \(\{x_{n_k}\}\) of \(\{x_n\}.\) In this case, we write \(\Delta -\lim \limits _{n\rightarrow \infty }x_n = x.\)
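A small numerical illustration of these notions, on the real line with the sequence \(x_n = (-1)^n\) (an illustrative choice): the asymptotic center of the full sequence is \(\{0\}\), while the subsequence of even-indexed terms has asymptotic center \(\{1\}\), so the sequence is not \(\Delta \)-convergent. Because the sequence is periodic, the maximum over a late tail equals the limit superior exactly. The grid search and function names are assumptions made for the sketch.

```python
def radius_at(x, tail):
    """limsup_n d(x, x_n), computed as a maximum over a late tail."""
    return max(abs(x - t) for t in tail)


seq = [(-1) ** n for n in range(1, 2001)]  # x_1, ..., x_2000
tail_full = seq[1000:]
tail_even = [s for i, s in enumerate(seq, start=1) if i % 2 == 0][500:]

grid = [k / 100.0 for k in range(-300, 301)]  # candidate centers in [-3, 3]
center_full = min(grid, key=lambda x: radius_at(x, tail_full))
center_even = min(grid, key=lambda x: radius_at(x, tail_even))
radius_full = radius_at(center_full, tail_full)

print("asymptotic center of (x_n):   ", center_full, "radius:", radius_full)
print("asymptotic center of (x_{2k}):", center_even)
```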

Remark 2.2

The notion of \(\Delta \)-convergence is weaker than the usual metric convergence, but it coincides with weak convergence in Hilbert spaces.

Definition 2.3

[9] A pair \((a,b)\in X\times X\), denoted by \(\overrightarrow{ab},\) is called a vector in \(X\times X\). The quasilinearization map \(\langle .,.\rangle :(X\times X)\times (X\times X)\rightarrow \mathbb {R}\) is defined by

It is easy to see that \(\langle \overrightarrow{ba}, \overrightarrow{cd}\rangle =-\langle \overrightarrow{ab}, \overrightarrow{cd}\rangle ,~\langle \overrightarrow{ab}, \overrightarrow{cd}\rangle =\langle \overrightarrow{ae}, \overrightarrow{cd}\rangle +\langle \overrightarrow{eb}, \overrightarrow{cd}\rangle \) and \(\langle \overrightarrow{ab}, \overrightarrow{cd}\rangle =\langle \overrightarrow{cd}, \overrightarrow{ab}\rangle \) for all \(a,b,c,d,e\in X\). Furthermore, a geodesic space X is said to satisfy the Cauchy-Schwarz inequality if

It is known from [18] that a geodesically connected space is a CAT(0) space if and only if it satisfies the Cauchy-Schwarz inequality.
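For intuition, the sketch below uses the standard Berg and Nikolaev formula \(\langle \overrightarrow{ab}, \overrightarrow{cd}\rangle = \frac{1}{2}\big (d^2(a,d)+d^2(b,c)-d^2(a,c)-d^2(b,d)\big )\) and checks numerically that, in the Euclidean plane, it reduces to the inner product of \(b-a\) and \(d-c\) and satisfies the Cauchy-Schwarz inequality \(\langle \overrightarrow{ab}, \overrightarrow{cd}\rangle \le d(a,b)\,d(c,d)\). The function names and the random test points are illustrative.

```python
import math
import random


def d2(p, q):
    """Squared Euclidean distance in R^2."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def quasi(a, b, c, d):
    """Quasilinearization <ab, cd> written purely in terms of distances."""
    return 0.5 * (d2(a, d) + d2(b, c) - d2(a, c) - d2(b, d))


def inner(a, b, c, d):
    """Euclidean inner product of the vectors b - a and d - c."""
    return (b[0] - a[0]) * (d[0] - c[0]) + (b[1] - a[1]) * (d[1] - c[1])


random.seed(1)
max_diff = 0.0
cauchy_schwarz_ok = True
for _ in range(1000):
    a, b, c, d = [(random.uniform(-5, 5), random.uniform(-5, 5)) for _ in range(4)]
    q = quasi(a, b, c, d)
    max_diff = max(max_diff, abs(q - inner(a, b, c, d)))
    if q > math.sqrt(d2(a, b)) * math.sqrt(d2(c, d)) + 1e-9:
        cauchy_schwarz_ok = False

print("max |quasi - inner|:", max_diff, "Cauchy-Schwarz holds:", cauchy_schwarz_ok)
```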

The notion of duality in CAT(0) space was introduced by Kakavandi and Amini [25] as follows:

Definition 2.4

Let (X, d) be a complete CAT(0) space. Consider the map \(\Theta :\mathbb {R}\times X\times X\rightarrow C(X, \mathbb {R})\) defined by

where \(C(X,\mathbb {R})\) is the space of all continuous real-valued functions on X. The pseudometric space \((\mathbb {R}\times X\times X, \mathcal {D})\) is a subspace of the pseudometric space \((Lip(X, \mathbb {R}),L)\) of all real-valued Lipschitz functions. Also, \(\mathcal {D}\) defines an equivalence relation on \((\mathbb {R}\times X\times X)\), where the equivalence class of (t, a, b) is

The set \(X^*=\{[t\overrightarrow{ab}]:(t,a,b)\in \mathbb {R}\times X\times X \}\) is a metric space with metric \(\mathcal {D}\), and the pair \((X^*,\mathcal {D})\) is the dual space of X.

Definition 2.5

Let (X, d) be a metric space and D be a nonempty closed and convex subset of X. Let T be a nonlinear mapping on D into itself. Denote by \(F(T) = \{x \in D: Tx = x\}\) the set of fixed points of T. The mapping T is said to be nonexpansive, if

and firmly nonexpansive (see [27]), if

Definition 2.6

Let X be a complete CAT(0) space and \(X^*\) be its dual space. A multivalued operator \(A:X\rightarrow 2^{X^*}\) is monotone, if for all \(x,y\in \mathbb {D}(A)\) with \(x\ne y,\) we have

The graph of the operator \(A:X\rightarrow 2^{X^*}\) is the set

The monotone operator A is called a maximal monotone operator if Gr(A) is not properly contained in the graph of any other monotone operator.

Definition 2.7

[41] Let X be a complete CAT(0) space and \(X^*\) be its dual space. The resolvent of a monotone operator A of order \(\lambda >0\) is the multivalued mapping \(J^A_{\lambda }: X\rightarrow 2^X\) defined by

The multivalued operator A is said to satisfy the range condition if \(\mathbb {D}(J_{\lambda }^A)=X,\) for every \(\lambda >0.\)

The following results depict the relationship between monotone operators and their resolvents in the settings of CAT(0) spaces.

Lemma 2.8

[27] Let X be a CAT(0) space and \(J^A_\lambda \) be the resolvent of a multivalued mapping A of order \(\lambda .\) Then

(i) for any \(\lambda > 0,~R(J^A_\lambda ) \subset \mathbb {D}(A)\) and \(F(J^A_\lambda ) = A^{-1}(0),\) where \(R(J^A_\lambda )\) is the range of \(J^A_\lambda ,\)

(ii) if A is monotone, then \(J^A_\lambda \) is a single-valued and firmly nonexpansive mapping,

(iii) if \(0 < \lambda _1 \le \lambda _2,\) then \(d(J^A_{\lambda _2} x, J^A_{\lambda _1} x) \le \frac{\lambda _2 - \lambda _1}{\lambda _2 + \lambda _1}~d(x, J^A_{\lambda _2} x),\) which implies that \(d(x, J^A_{\lambda _1} x) \le 2d(x, J^A_{\lambda _2} x)~~\forall ~x \in X.\)

Remark 2.9

If X is a CAT(0) space and \(A : X \rightarrow 2^{X^*}\) is a multivalued monotone mapping, then

for all \(v \in A^{-1}(0), ~x \in \mathbb {D}(J^{A}_\lambda )\) and \(\lambda > 0\) (see [50]);

and

Definition 2.10

Let X be a CAT(0) space and D be a nonempty closed and convex subset of X. A function \(f : D \times D \rightarrow \mathbb {R}\) is called monotone if

Lemma 2.11

[30] Let D be a nonempty closed and convex subset of a CAT(0) space X. Suppose that f is monotone and \(\mathbb {D}(J^f) \ne \emptyset .\) Then, the following properties hold:

(i) \(J^f\) is single-valued.

(ii) If \(\mathbb {D}(J^f) \supset D,\) then \(J^f\) is nonexpansive restricted to D.

(iii) If \(\mathbb {D}(J^f) \supset D,\) then \(F(J^f) = EP(f, D).\)

Remark 2.12

[23] It follows easily from (1.5) that the resolvent \(J^f_\mu \) of the bifunction f and order \(\mu > 0\) is given as

where \(\bar{f}\) is defined in this case as

Lemma 2.13

[23] Let D be a nonempty, closed and convex subset of a complete CAT(0) space X and \(f : D \times D \rightarrow \mathbb {R}\) be a monotone bifunction such that \(D \subset \mathbb {D}(J^f_\mu )\) for \(\mu > 0.\) Then, the following hold:

(i) \(J^f_\mu \) is firmly nonexpansive restricted to D.

(ii) If \(F(J^f_\mu ) \ne \emptyset ,\) then

$$\begin{aligned} d^2(J^f_\mu x, x) \le d^2(x, v) - d^2(J^f_\mu x, v) ~\forall ~x \in D,~v \in F(J^f_\mu ). \end{aligned}$$

(iii) If \(0 < \mu _1 \le \mu _2,\) then \(d(J^f_{\mu _2} x, J^f_{\mu _1} x) \le \sqrt{1 - \frac{\mu _1}{\mu _2}}d(x, J^f_{\mu _2} x),\) which implies that \(d(x, J^f_{\mu _1} x) \le 2d(x, J^f_{\mu _2} x)~~\forall ~x \in D.\)

Theorem 2.14

[30, Theorem 5.2] Let D be a nonempty, closed and convex subset of a complete CAT(0) space X. Suppose that f has the following properties

(i) \(f(x, x) = 0\) for all \(x \in D,\)

(ii) f is monotone,

(iii) for each \(x \in D,\) \(y \mapsto f(x, y)\) is convex and lower semicontinuous,

(iv) for each \(y \in D,\) \(f(x, y) \ge \limsup _{t\downarrow 0} f((1 - t)x \oplus tz, y)\) for all \(x, z \in D.\)

Then \(\mathbb {D}(J^f) = X\) and \(J^f\) is single-valued.

Remark 2.15

[23] If the bifunction f satisfies assumptions (i)-(iv) of Theorem 2.14, then the conclusions of Lemma 2.13 hold in the whole of X.

Definition 2.16

Let D be a nonempty closed and convex subset of a CAT(0) space X. The metric projection is a mapping \(P_D:X\rightarrow D\) which assigns to each \(x\in X,\) the unique point \(P_Dx\in D\) such that

Lemma 2.17

[18, 36] Let X be a CAT(0) space. Then for all \(x,y,z \in X\) and all \(t, s \in [0,1],\) we have

(i) \(d(tx\oplus (1-t)y,z)\le td(x,z)+(1-t)d(y,z),\)

(ii) \(d^2(tx\oplus (1-t)y,z)\le td^2(x,z)+(1-t)d^2(y,z)-t(1-t)d^2(x,y),\)

(iii) \(d^2(z, t x \oplus (1-t) y)\le t^2 d^2(z, x)+(1-t)^2 d^2(z, y)+2t (1-t)\langle \overrightarrow{zx}, \overrightarrow{zy}\rangle ,\)

(iv) \(d(tw \oplus (1 - t)x, ty \oplus (1 - t)z) \le td(w, y) + (1 - t)d(x, z),\)

(v) \(d(tx \oplus (1 - t)y, sx \oplus (1 - s)y) \le |t - s|d(x, y).\)

Lemma 2.18

[36] Every bounded sequence in a complete CAT(0) space has a \(\Delta \)-convergent subsequence.

Lemma 2.19

[16] Let D be a nonempty, closed and convex subset of a CAT(0) space X, \(x\in X\) and \(u\in D.\) Then \(u=P_{D}x\) if and only if \(\langle \overrightarrow{xu},\overrightarrow{yu}\rangle \le 0\) for all \(y\in D.\)

Definition 2.20

Let D be a nonempty, closed and convex subset of a complete CAT(0) space X. A mapping \(T : D \rightarrow D\) is said to be \(\Delta \)-demiclosed if, for any bounded sequence \(\{x_n\}\) in D such that \(\Delta -\lim \limits _{n\rightarrow \infty }x_n = x\) and \(\lim \limits _{n\rightarrow \infty }d(x_n, Tx_n) = 0,\) we have \(x = Tx\).

Lemma 2.21

[17] Let X be a complete CAT(0) space and \(T:X\rightarrow X\) be a nonexpansive mapping. Then T is \(\Delta \)-demiclosed.

Lemma 2.22

[55] Let \(\{a_{n}\}\) be a sequence of non-negative real numbers satisfying

where \(\{\alpha _{n}\}\) and \(\{\delta _{n}\}\) satisfy the following conditions:

(i) \(\{\alpha _n\}\subset [0,1],~\Sigma _{n=0}^{\infty }\alpha _{n}=\infty \),

(ii) \(\limsup _{n\rightarrow \infty }\frac{\delta _{n}}{\alpha _n}\le 0\) or \(\Sigma _{n=0}^{\infty }|\delta _{n}|<\infty .\)

Then \(\lim _{n\rightarrow \infty }a_{n}=0.\)
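The following sketch illustrates the conclusion of Lemma 2.22 numerically. It assumes the recursion has the usual form \(a_{n+1}\le (1-\alpha _n)a_n+\delta _n\) (the displayed inequality is not reproduced above, so this form is an assumption) and runs the equality case with the illustrative choices \(\alpha _n = \frac{1}{n+1}\) and \(\delta _n = \frac{\alpha _n}{n+1}\), which satisfy conditions (i) and (ii).

```python
def run_recursion(a1=5.0, n_max=100000):
    """Return a_{n_max} for a_{n+1} = (1 - alpha_n) * a_n + delta_n, n >= 1."""
    a = a1
    for n in range(1, n_max):
        alpha = 1.0 / (n + 1)          # alpha_n -> 0 and sum(alpha_n) diverges
        delta = alpha / (n + 1)        # delta_n / alpha_n = 1 / (n + 1) -> 0
        a = (1.0 - alpha) * a + delta  # equality case of the assumed recursion
    return a


a_early = run_recursion(n_max=1000)
a_late = run_recursion(n_max=100000)
print("a_1000 =", a_early, " a_100000 =", a_late)
```

The decay is slow (of order \(\frac{\log n}{n}\) for these choices), which is typical when \(\alpha _n\) is only required to be non-summable.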

3 Main results

We begin with the following lemma, which is crucial in establishing our main result.

Lemma 3.1

Let D be a nonempty, closed and convex subset of a complete CAT(0) space X. Let \(X^*\) be the dual space of X and \(A : X \rightarrow 2^{X^*}\) be a multivalued monotone operator which satisfies the range condition. Let \(f : D \times D \rightarrow \mathbb {R}\) be a bifunction satisfying assumptions (i)-(iv) of Theorem 2.14 and \(T: X \rightarrow X\) be a nonexpansive mapping. If \(F(T) \cap F(J^A_{\lambda _2}) \cap F(J^f_{\mu _2}) \ne \emptyset ,\) then for \(0 < \lambda _1 \le \lambda _2\) and \(0 < \mu _1 \le \mu _2,\) we have that \(F(T\circ J^A_{\lambda _2}\circ J^f_{\mu _2}) = F(T) \cap F(J^A_{\lambda _1}) \cap F(J^f_{\mu _1}).\)

Proof

It is obvious that \( F(T) \cap F(J^A_{\lambda _1}) \cap F(J^f_{\mu _1}) \subseteq F(T\circ J^A_{\lambda _2}\circ J^f_{\mu _2}) .\) We only need to show that \(F(T\circ J^A_{\lambda _2}\circ J^f_{\mu _2}) \subseteq F(T) \cap F(J^A_{\lambda _1}) \cap F(J^f_{\mu _1}).\) Let \(x \in F(T\circ J^A_{\lambda _2}\circ J^f_{\mu _2})\) and \(y \in F(T) \cap F(J^A_{\lambda _2}) \cap F(J^f_{\mu _2}).\) Then by nonexpansivity of T, we have

which implies

Similarly, from Lemma 2.13(ii), (3.1) and (3.2) we have

which implies

From (3.2) and (3.3), we obtain that

which implies that

Furthermore, from (2.8) and Lemma 2.11 we have

and

Adding (3.6) and (3.7), and using the fact that f is monotone, we obtain

Using the quasilinearization properties on (3.8), we obtain

Since \(\frac{\mu _1}{\mu _2} \le 1,\) we have that

which implies that

By triangle inequality and (3.9), we obtain that

Therefore from (3.3), we obtain that \(x \in F(J^f_{\mu _1}).\) By similar argument as in (3.6)–(3.10), we obtain that \(x \in F(J^A_{\lambda _1})\) and then we have that \(F(T\circ J^A_{\lambda _2}\circ J^f_{\mu _2}) \subseteq F(T) \cap F(J^A_{\lambda _1}) \cap F(J^f_{\mu _1}).\) Hence, this completes the proof. \(\square \)

Theorem 3.2

Let D be a nonempty, closed and convex subset of a complete CAT(0) space X, \(X^*\) be the dual space of X and \(A : X \rightarrow 2^{X^*}\) be a multivalued monotone operator satisfying the range condition. Let \(f: D \times D \rightarrow \mathbb {R}\) be a bifunction satisfying assumptions (i)-(iv) in Theorem 2.14. Let \(T: X \rightarrow X\) be a nonexpansive mapping and \(g: X \rightarrow X\) be a contraction mapping with coefficient \(\theta \in (0, 1).\) Suppose that \(\Gamma : = F(T) \cap A^{-1}(0) \cap EP(f, D) \ne \emptyset \) and for arbitrary \(x_1 \in X,\) the sequence \(\{x_n\}\) is generated by

where \(\{\alpha _n\} \subset (0,1)\) and \(\{\lambda _n\},~\{\mu _n\}\) are sequences in \((0,\infty )\) such that the following conditions are satisfied:

(A1) \(\lim \limits _{n \rightarrow \infty } \alpha _n = 0\) and \(\sum \limits ^{\infty }_{n=1}\alpha _n=\infty ,\)

(A2) \(\sum \limits _{n=1}^{\infty }|\alpha _{n+1}-\alpha _n| <\infty ,\)

(A3) \(0< \lambda _{n-1} \le \lambda _n,~\sum \limits _{n=1}^\infty \left( \sqrt{1-\frac{\lambda _{n-1}}{\lambda _n}}\right) <\infty \) and \(0< \mu _{n-1} \le \mu _n, ~\sum \limits _{n=1}^\infty \left( \sqrt{1-\frac{\mu _{n-1}}{\mu _n}}\right) <\infty ~\forall ~n \ge 1.\)

Then, the sequence \(\{x_n\}\) converges strongly to a point \(\bar{x}\) in \(\Gamma \) which is also the unique solution of the following variational inequality

Remark 3.3

With a midpoint at each step of our method, it tends to have better accuracy than (1.8), (1.9) and other methods with single or no midpoint rule.

Proof

STEP 1: We show that \(\{x_n\}\) is bounded. Let \(p\in \Gamma ,\) then by Lemma 2.17(i) and (3.11) we have

This implies that

which implies by mathematical induction that

Thus, \(\{x_n\}\) is bounded. Consequently \(\{y_n\},\) \(\{Ty_n\}\) and \(\left\{ g\left( \frac{x_n \oplus x_{n+1}}{2}\right) \right\} \) are also bounded.

STEP 2: We show that \(\lim \limits _{n \rightarrow \infty }d(x_{n+1},x_n)=0.\) From Lemma 2.17(iv),(v) and (3.11) we have

Again, from (2.7) and (3.9) we obtain that

Substituting (3.13) in (3.12) we have

where

It implies from (3.15) that

Then, by Lemma 2.22, conditions (A2) and (A3), we obtain that

STEP 3: We show that \(\lim \limits _{n \rightarrow \infty }d(\bar{x}_n,T\bar{x}_n)=0=\lim \limits _{n \rightarrow \infty }d(\bar{x}_n,Ty_n),\) where \(\bar{x}_n=\frac{x_n\oplus x_{n+1}}{2}.\) From Lemma 2.17(ii) we have

Also, from (2.6), we have that

Substituting (3.18) in (3.17), we obtain

which implies

Then from (3.16) and condition (A1), we obtain that

Similarly, from Lemma 2.13(ii) we have that

Again, substituting (3.22) in (3.19), we have

which also implies

By the same argument as in (3.20), we obtain that

Hence, from (3.21) and (3.25) we obtain that

Also, from (3.16), we have that

Then from (3.16), (3.26), (3.27) and condition (A1), we obtain that

Also, from (3.26) and (3.28) we have

STEP 4: We show that \(\limsup \limits _{n \rightarrow \infty }\langle \overrightarrow{g(x^{*})x^{*}},\overrightarrow{x_nx^{*}}\rangle \le 0,\) where \(x^{*}=P_{\Gamma }g(x^{*}).\)

Since \(\{x_n\}\) is bounded and X is a complete CAT(0) space, we obtain from Lemma 2.18 that there exists a subsequence \(\{x_{n_i}\}\) of \(\{x_n\}\) such that \(\Delta -\lim \limits _{i\rightarrow \infty }x_{n_i}=\bar{z}.\) Thus, by (3.27), we obtain that \(\Delta -\lim \limits _{i\rightarrow \infty }\bar{x}_{n_i}=\bar{z}.\) Also, since \(T\circ J^A_{\lambda _n}\circ J^f_{\mu _n}\) is nonexpansive, it follows from Lemma 2.21 that it is \(\Delta \)-demiclosed. Therefore, from Lemma 2.11(iii), Lemma 2.8(i), Lemma 3.1 and (3.29) we obtain that \(\bar{z}\in F(T\circ J^A_{\lambda _n}\circ J^f_{\mu _n})\subseteq F(T)\cap F(J^A_{\lambda })\cap F(J^f_{\mu })=\Gamma .\) Since \(\{x_n\}\) is bounded, we can choose, without loss of generality, a subsequence \(\{x_{n_i}\}\) of \(\{x_n\}\) such that

Now, using this and Lemma 2.19 we obtain that

STEP 5: Finally, we show that \(x_n\rightarrow x^{*}~~ \text {as}~~n \rightarrow \infty \) and then \(x^{*}\) is also the unique fixed point of the contraction \(P_{\Gamma }\circ g.\) From Lemma 2.17(iii) and (3.11), we have

where

Then

which implies that

where

Let \(k:(0,\infty )\rightarrow \mathbb {R}\) be a function defined by

Then \(\lim \limits _{t \rightarrow 0}k(t)=4(1-\theta ).\) Let \(\delta >0\) such that \(0<t<\delta \) and \(\epsilon =4(1-\theta )>0.\) Then

which implies that

Since \(\alpha _n \rightarrow 0\) as \( n \rightarrow \infty ,\) there exists an integer \(N\in \mathbb {N}\) such that \(\alpha _n <\delta \) for all \(n\ge N.\)

From (3.15) and (3.16) we obtain

From (3.31) we have

Then by (3.30) and condition (A1) we obtain that

Similarly, from (3.30) and condition (A1) we obtain that

By Lemma 2.22, (3.35), and condition (A1) we obtain that

Hence, (3.36) implies that \(x_n\rightarrow x^{*}\) as \(n \rightarrow \infty .\) Therefore \(\{x_n\}\) converges to \(x^{*} \in \Gamma .\) \(\square \)

By setting \(J^f_{\mu _n} \equiv I\) (where I is the identity mapping) in Theorem 3.2, we obtain the following result:

Corollary 3.4

Let X be a complete CAT(0) space, \(X^*\) be the dual space of X and \(A : X \rightarrow 2^{X^*}\) be a multivalued monotone operator satisfying the range condition. Let \(T: X \rightarrow X\) be a nonexpansive mapping and \(g: X \rightarrow X\) be a contraction mapping with coefficient \(\theta \in (0, 1).\) Suppose that \(\Gamma : = F(T) \cap A^{-1}(0)\ne \emptyset \) and for arbitrary \(x_1 \in X,\) the sequence \(\{x_n\}\) is generated by

where \(\{\alpha _n\} \subset (0,1)\) and \(\{\lambda _n\}\) is a sequence in \((0,\infty )\) such that the following conditions are satisfied:

(A1) \(\lim \limits _{n \rightarrow \infty } \alpha _n = 0\) and \(\sum \limits ^{\infty }_{n=1}\alpha _n=\infty ,\)

(A2) \(\sum \limits _{n=1}^{\infty }|\alpha _{n+1}-\alpha _n| <\infty ,\)

(A3) \(0 < \lambda _{n-1} \le \lambda _n\) and \(\sum \limits _{n=1}^\infty \left( \sqrt{1-\frac{\lambda _{n-1}}{\lambda _n}}\right) <\infty ~\forall ~n \ge 1.\)

Then, the sequence \(\{x_n\}\) converges to a point \(\bar{x}\) in \(\Gamma \) which is also a unique solution of the following variational inequality

The following corollaries are single and non-implicit midpoint rule cases of Theorem 3.2.

Corollary 3.5

Let D be a nonempty, closed and convex subset of a complete CAT(0) space X, \(X^*\) be the dual space of X and \(A : X \rightarrow 2^{X^*}\) be a multivalued monotone operator satisfying the range condition. Let \(f: D \times D \rightarrow \mathbb {R}\) be a bifunction satisfying assumptions (i)-(iv) in Theorem 2.14. Let \(T: X \rightarrow X\) be a nonexpansive mapping and \(g: X \rightarrow X\) be a contraction mapping with coefficient \(\theta \in (0, 1).\) Suppose that \(\Gamma : = F(T) \cap A^{-1}(0) \cap EP(f, D) \ne \emptyset \) and for arbitrary \(x_1 \in X,\) the sequence \(\{x_n\}\) is generated by

where \(\{\alpha _n\} \subset (0,1)\) and \(\{\lambda _n\},~\{\mu _n\}\) are sequences in \((0,\infty )\) such that the conditions (A1)-(A3) of Theorem 3.2 are satisfied. Then, the sequence \(\{x_n\}\) converges to a point \(\bar{x}\) in \(\Gamma \) which is also the unique solution of the following variational inequality

Corollary 3.6

Let D be a nonempty, closed and convex subset of a complete CAT(0) space X, \(X^*\) be the dual space of X and \(A : X \rightarrow 2^{X^*}\) be a multivalued monotone operator satisfying the range condition. Let \(f: D \times D \rightarrow \mathbb {R}\) be a bifunction satisfying assumptions (i)-(iv) in Theorem 2.14. Let \(T: X \rightarrow X\) be a nonexpansive mapping and \(g: X \rightarrow X\) be a contraction mapping with coefficient \(\theta \in (0, 1).\) Suppose that \(\Gamma : = F(T) \cap A^{-1}(0) \cap EP(f, D) \ne \emptyset \) and for arbitrary \(x_1 \in X,\) the sequence \(\{x_n\}\) is generated by

where \(\{\alpha _n\} \subset (0,1)\) and \(\{\lambda _n\},~\{\mu _n\}\) are sequences in \((0,\infty )\) such that the conditions (A1)-(A3) of Theorem 3.2 are satisfied. Then, the sequence \(\{x_n\}\) converges to a point \(\bar{x}\) in \(\Gamma \) which is also the unique solution of the following variational inequality

Let X be a CAT(0) space. A function \(h : X \rightarrow (-\infty , \infty ]\) is called convex, if

h is proper, if \(\mathbb {D}(h) := \{x \in X : h(x) < +\infty \} \ne \emptyset .\) The function \(h:\mathbb {D}(h)\subseteq X \rightarrow (-\infty , \infty ]\) is said to be lower semicontinuous at a point \(x \in \mathbb {D}(h),\) if

for each sequence \(\{x_n\} \subset \mathbb {D}(h)\) such that \(\lim \limits _{n\rightarrow \infty }x_n = x.\) h is said to be lower semicontinuous on \(\mathbb {D}(h)\) if it is lower semicontinuous at every point in \(\mathbb {D}(h).\) For any \(\mu > 0,\) the resolvent of a proper, convex and lower semicontinuous function h in X is defined as (see [7]) \(J^h_{\mu } (x) = \arg \min \limits _{y \in X} \big [h(y) + \frac{1}{2\mu }d^2(y, x)\big ].\)

A minimization problem is to find \(x \in X\) such that \(h(x) = \min \limits _{y\in X} h(y).\) The solution set of such problem is denoted by \(\arg \min \limits _{y\in X}h (y).\)
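As an illustration of the resolvent \(J^h_\mu \), the sketch below takes \(X = \mathbb {R}\) and \(h(y) = |y|\) (an illustrative choice), computes \(J^h_\mu (x)\) by minimizing \(h(y) + \frac{1}{2\mu }(y-x)^2\) with a ternary search, and compares the result with the well-known soft-thresholding formula. The search bracket and the function names are assumptions of the sketch.

```python
def objective(y, x, mu):
    """h(y) + d^2(y, x) / (2 * mu) with h(y) = |y| on the real line."""
    return abs(y) + (y - x) ** 2 / (2.0 * mu)


def resolvent_numeric(x, mu, iters=200):
    """Minimize the strictly convex objective by ternary search."""
    lo, hi = -abs(x) - 1.0, abs(x) + 1.0  # the minimizer lies in [-|x|, |x|]
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if objective(m1, x, mu) < objective(m2, x, mu):
            hi = m2
        else:
            lo = m1
    return 0.5 * (lo + hi)


def resolvent_closed_form(x, mu):
    """Soft-thresholding: the known minimizer when h(y) = |y|."""
    sign = 1.0 if x > 0 else -1.0
    return sign * max(abs(x) - mu, 0.0)


for x, mu in [(2.5, 1.0), (0.3, 1.0), (-4.0, 0.5)]:
    print(x, mu, resolvent_numeric(x, mu), resolvent_closed_form(x, mu))
```

The fixed points of \(J^h_\mu \) are exactly the minimizers of h; here \(J^h_\mu (0) = 0\), the unique minimizer of \(|y|\).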

If, in Corollary 3.6, we replace the bifunction f with a proper, convex and lower semicontinuous function h and \(g(x_n)\) with u for a fixed \(u\in X,\) we obtain the following result as a consequence.

Corollary 3.7

Let X be a complete CAT(0) space, \(X^*\) be the dual space of X and \(A : X \rightarrow 2^{X^*}\) be a multivalued monotone operator satisfying the range condition. Let \(h:X\rightarrow (-\infty , +\infty ]\) be a proper, convex and lower semicontinuous function and \(T: X \rightarrow X\) be a nonexpansive mapping. Suppose that \(\Gamma : = F(T) \cap A^{-1}(0) \cap \arg \min \limits _{y\in X}h (y) \ne \emptyset \) and for arbitrary \(x_1 \in X,\) the sequence \(\{x_n\}\) is generated by

where \(\{\alpha _n\} \subset (0,1)\) and \(\{\lambda _n\},~\{\mu _n\}\) are sequences in \((0,\infty )\) such that the conditions (A1)-(A3) of Theorem 3.2 are satisfied. Then, the sequence \(\{x_n\}\) converges to an element x in \(\Gamma .\)

4 Numerical example

In this section, we present some numerical experiments to illustrate the applicability of the proposed algorithms.

Example 4.1

Let \(X = \mathbb {R}^2,\) endowed with the Euclidean norm. For each \(\bar{x} \in X,\) we define a mapping T as follows:

Then, T is nonexpansive. Let \(A : \mathbb {R}^2 \rightarrow \mathbb {R}^2\) be defined by

Then A is monotone. We note from [16] that \([t\overrightarrow{ab}] \equiv t(b - a)\) for all \(t \in \mathbb {R}\) and \(a, b \in \mathbb {R}^2\) (see also [25]). Therefore, for each \(\bar{x} \in \mathbb {R}^2\) we have

Computing \(\bar{z} = J^A_{\lambda _n}(\bar{x})\) for (4.1), we have

Thus

Also, let \(f : D \times D \rightarrow \mathbb {R}\) be a bifunction defined by

Then, (4.2) satisfies assumptions (i)-(iv) in Theorem 2.14. Let \(\mu _n = 1\) for all \(n\ge 1;\) then

Computing \(\bar{z} = J^f_{1}(\bar{x})\) for (4.2), we have

which implies that \(\bar{z} = \frac{1}{2}(\bar{x} - 8).\) Hence, \(J^f_{1}(\bar{x}) = \frac{1}{2}(x_1 - 8,~x_2 - 8).\)

Let \(g : \mathbb {R}^2 \rightarrow \mathbb {R}^2\) be defined by \(g(\bar{x}) = \frac{3}{5}\bar{x}.\) We choose \(\alpha _n = \frac{1}{100n+1};\) then conditions (A1)-(A3) of Theorem 3.2 are satisfied. Hence, for \(x_1 \in \mathbb {R}^2,\) Algorithm 3.11 becomes

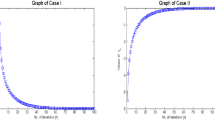

Case 1: Take \(x_1=[0.5,~0.25]^T\) and \(\lambda _n=1.\)

Case 2: Take \(x_1=[2,~-3]^T\) and \(\lambda _n=1.\)

Case 3: Take \(x_1=[0.5,~0.25]^T\) and \(\lambda _n=\frac{4n+1}{n+4}.\)

Case 4: Take \(x_1=[2,~-3]^T\) and \(\lambda _n=\frac{4n+1}{n+4}.\)

MATLAB R2019a is used to obtain the graphs of errors against the number of iterations.

Remark 4.2

Using different choices of the initial point \(x_1\) and the sequence \(\{\lambda _n\}\) (that is, Cases 1-4), we obtain the above numerical results (Fig. 1). In every case the error values converge to 0, which suggests that the sequence \(\{x_n\}\) converges to an element of the solution set \(\Gamma \) regardless of the starting point chosen.
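To give a feel for how error curves of this kind can be produced, the following sketch runs the classical viscosity implicit midpoint rule \(x_{n+1} = \alpha _n g(x_n) + (1-\alpha _n)T\big (\frac{x_n + x_{n+1}}{2}\big )\) in \(\mathbb {R}^2.\) It is not Algorithm 3.11: the resolvent steps \(J^A_{\lambda _n}\) and \(J^f_{\mu _n}\) are omitted, and the mapping T used here (a rotation by 90 degrees) is a hypothetical nonexpansive map chosen only for illustration. The contraction \(g(\bar{x}) = \frac{3}{5}\bar{x},\) the sequence \(\alpha _n = \frac{1}{100n+1}\) and the starting point of Case 2 are taken from Example 4.1. The implicit equation is solved by an inner Picard iteration, which is an assumption of this sketch about how one might handle the implicit step.

```python
import math


def g(x):
    # Contraction from Example 4.1: g(x) = (3/5) x.
    return (0.6 * x[0], 0.6 * x[1])


def T(x):
    # HYPOTHETICAL nonexpansive map (rotation by 90 degrees); not the paper's T.
    return (-x[1], x[0])


def alpha(n):
    # Control sequence from Example 4.1.
    return 1.0 / (100 * n + 1)


def midpoint_step(x, a, inner_tol=1e-15, max_inner=200):
    """Solve y = a*g(x) + (1-a)*T((x+y)/2) for y by Picard iteration.

    Since T is nonexpansive, the right-hand side is a contraction in y
    with constant (1-a)/2 < 1, so the inner iteration converges.
    """
    gx = g(x)
    y = x
    for _ in range(max_inner):
        t = T(((x[0] + y[0]) / 2.0, (x[1] + y[1]) / 2.0))
        y_new = (a * gx[0] + (1 - a) * t[0], a * gx[1] + (1 - a) * t[1])
        if math.dist(y, y_new) < inner_tol:
            return y_new
        y = y_new
    return y


def run(x1, n_iter=50):
    """Return the final iterate and the errors ||x_{n+1} - x_n||."""
    x, errors = x1, []
    for n in range(1, n_iter + 1):
        x_next = midpoint_step(x, alpha(n))
        errors.append(math.dist(x, x_next))
        x = x_next
    return x, errors


if __name__ == "__main__":
    x_final, errs = run((2.0, -3.0))  # starting point of Case 2
    print(x_final, errs[0], errs[-1])
```

For this particular T the only fixed point is the origin, so the iterates shrink towards \((0, 0)\) and the recorded errors decay; with the paper's T, A and f the limit would instead lie in \(\Gamma .\)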

In the following example, we consider a numerical example of our method in a non-Hilbert space setting.

Example 4.3

[15] Let \(Y =\mathbb {R}^2\) be an \(\mathbb {R}-\)tree with the radial metric \(d_r,\) where \(d_r(x, y) = d(x, y)\) if x and y are situated on a Euclidean straight line passing through the origin and \(d_r(x, y) = d(x, \textbf{0}) + d(y, \textbf{0}) := \Vert x\Vert + \Vert y\Vert \) otherwise. Let \(p = (1, 0)\) and \(X = B \cup C,\) where

Then X is an Hadamard space. Thus, for each \([t\overrightarrow{ab}]\in X^*,\) we obtain that

Now, define \(A : X \rightarrow 2^{X^*}\) by

Then A is a multivalued monotone operator and

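The radial metric \(d_r\) on \(\mathbb {R}^2\) used in Example 4.3 can be sketched in a few lines. The function name `radial_distance` and the tolerance `tol` used for the collinearity test are assumptions of this sketch; two points are treated as lying on a common Euclidean line through the origin when their cross product vanishes up to `tol`.

```python
import math


def radial_distance(x, y, tol=1e-12):
    """Radial metric d_r on R^2.

    d_r(x, y) is the Euclidean distance when x and y lie on a common
    straight line through the origin, and ||x|| + ||y|| otherwise.
    """
    cross = x[0] * y[1] - x[1] * y[0]  # zero iff x, y, origin are collinear
    if abs(cross) <= tol:
        return math.dist(x, y)
    return math.hypot(x[0], x[1]) + math.hypot(y[0], y[1])


if __name__ == "__main__":
    print(radial_distance((1.0, 0.0), (3.0, 0.0)))  # same ray through the origin
    print(radial_distance((1.0, 0.0), (0.0, 1.0)))  # different lines
```

For points on different lines through the origin, every path must pass through the origin, which is why, for example, the distance between \((1, 0)\) and \((0, 1)\) is 2 rather than the Euclidean value \(\sqrt{2}.\) The absolute tolerance is adequate for points of moderate size; a scale-aware test would be preferable for very large or very small coordinates.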
5 Conclusion

In this paper, we introduced a proximal point-type viscosity iterative method with a double implicit midpoint rule, comprising a nonexpansive mapping and the resolvents of a monotone operator and a bifunction, in Hadamard spaces. Furthermore, we proved a strong convergence result under some mild conditions and provided numerical experiments to illustrate the applicability of the proposed method in a finite dimensional space and in a non-Hilbert space. This improves some existing methods based on the implicit midpoint rule in the literature.

References

Ahmad, I., Ahmad, M.: An implicit viscosity technique of nonexpansive mapping in CAT(0) spaces. Open J. Math. Sci. 1(1), 1–12 (2017)

Alakoya, T.O., Jolaoso, L.O., Taiwo, A., Mewomo, O.T.: Inertial algorithm with self-adaptive stepsize for split common null point and common fixed point problems for multivalued mappings in Banach spaces. Optimization (2021). https://doi.org/10.1080/02331934.2021.1895154

Alakoya, T.O., Taiwo, A., Mewomo, O.T., Cho, Y.J.: An iterative algorithm for solving variational inequality, generalized mixed equilibrium, convex minimization and zeros problems for a class of nonexpansive-type mappings. Ann. Univ. Ferrara Sez. VII Sci. Mat. 67(1), 1–31 (2021)

Alghamdi, M.A., Alghamdi, M.A., Shahzad, N., Xu, H.K.: The implicit midpoint rule for nonexpansive mappings. Fixed Point Theory Appl. 2014, 9 (2014)

Aremu, K.O., Izuchukwu, C., Ogwo, G.N., Mewomo, O.T.: Multi-step Iterative algorithm for minimization and fixed point problems in p-uniformly convex metric spaces. J. Ind. Manag. Optim. 17(4), 2161–2180 (2021)

Auzinger, W., Frank, R.: Asymptotic error expansions for stiff equations: an analysis for the implicit midpoint and trapezoidal rules in the strongly stiff case. Numer. Math. 56, 469–499 (1989)

Bacak, M.: The proximal point algorithm in metric spaces. Israel J. Math. 194, 689–701 (2013)

Bader, G., Deuflhard, P.: A semi-implicit midpoint rule for stiff systems of ordinary differential equations. Numer. Math. 41, 373–398 (1983)

Berg, I.D., Nikolaev, I.G.: Quasilinearization and curvature of Alexandrov spaces. Geom. Dedicata 133, 195–218 (2008)

Bianchi, M., Schaible, S.: Generalized monotone bifunctions and equilibrium problems. J. Optim Theory Appl. 90, 31–43 (1996)

Bridson, M.R., Haefliger, A.: Metric Spaces of Non-Positive Curvature, Fundamental Principles of Mathematical Sciences, vol. 319. Springer, Berlin, Germany (1999)

Bruhat, F., Tits, J.: Groupes réductifs sur un corps local, I. Données radicielles valuées, Institut des Hautes Études Scientifiques, 41, (1972)

Colao, V., López, G., Marino, G., Martín-Márquez, V.: Equilibrium problems in Hadamard manifolds. J. Math. Anal. Appl. 388, 61–77 (2012)

Combettes, P.L., Hirstoaga, S.A.: Equilibrium programming in Hilbert spaces. J. Nonlinear Convex Anal. 6, 117–136 (2005)

Dehghan, H., Izuchukwu, C., Mewomo, O.T., Taba, D.A., Ugwunnadi, G.C.: Iterative algorithm for a family of monotone inclusion problems in CAT(0) spaces. Quaest. Math. 43, 975–998 (2020)

Dehghan, H., Rooin, J.: Metric projection and convergence theorems for nonexpansive mapping in Hadamard spaces, arXiv:1410.1137v1 [math.FA], 5 Oct. (2014)

Dhompongsa, S., Kirk, W.A., Panyanak, B.: Nonexpansive set-valued mappings in metric and Banach spaces. J. Nonlinear Convex Anal. 8, 35–45 (2007)

Dhompongsa, S., Panyanak, B.: On \(\Delta \)-convergence theorems in CAT(0) spaces. Comp. Math. Appl. 56, 2572–2579 (2008)

Eskandani, G.Z., Raeisi, M.: On the zero point problem of monotone operators in Hadamard spaces. Numer. Algorithms 80(4), 1155–1179 (2019)

Espinola, R., Kirk, W.A.: Fixed point theorems in \(\mathbb{R} -\)trees with applications to graph theory. Topol. Appl. 153(7), 1046–1055 (2006)

Goebel, K., Reich, S.: Uniform Convexity. Hyperbolic Geometry and Nonexpansive Mappings. Marcel Dekker, New York (1984)

Iusem, A.N., Kassay, G., Sosa, W.: On certain conditions for the existence of solutions of equilibrium problems. Math. Program. Ser. B 116, 259–273 (2009)

Izuchukwu, C., Aremu, K.O., Mebawondu, A.A., Mewomo, O.T.: A viscosity iterative technique for equilibrium and fixed point problems in a Hadamard space. Appl. Gen. Topol. 20(1), 193–210 (2019)

Jung, J.S.: Iterative approaches to a common fixed points of nonexpansive mappings in Banach spaces. J. Math. Anal. Appl. 116, 509–520 (2005)

Kakavandi, B.A., Amini, M.: Duality and subdifferential for convex functions on complete CAT(0) metric spaces. Nonlinear Anal. 73, 3450–3455 (2010)

Kesornprom, S., Cholamjiak, P.: Proximal type algorithms involving linesearch and inertial technique for split variational inclusion problem in Hilbert spaces with applications. Optimization 68, 2365–2391 (2019)

Khatibzadeh, H., Ranjbar, S.: Monotone operators and the proximal point algorithm in complete CAT(0) metric spaces. J. Aust. Math. Soc. 103, 70–90 (2017)

Kirk, W.A.: Fixed point theorems in CAT(0) spaces and \(\mathbb{R} -\)trees. Fixed Point Theory Appl. 2004(4), 309–316 (2004)

Kirk, W.A.: Some recent results in metric fixed point theory. Fixed Point Theory Appl. 2007(2), 195–207 (2007)

Kumam, P., Chaipunya, P.: Equilibrium problems and proximal algorithms in Hadamard spaces, arXiv:1807.10900v1 [math.oc]

Kunrada, K., Pholasa, N., Cholamjiak, P.: On convergence and complexity of the modified forward-backward method involving new linesearches for convex minimization. Math. Meth. Appl. Sci. 42, 1352–1362 (2019)

Laowang, W., Panyanak, B.: Strong and \(\Delta -\)convergence theorems for multivalued mappings in CAT(0) spaces. J. Inequal. Appl. 2009, 16 (2009)

Martinet, B.: Régularisation d'inéquations variationnelles par approximations successives. Rev. Fr. Inform. Rec. Oper. 4, 154–158 (1970)

Moudafi, A.: Viscosity approximation methods for fixed point problems. J. Math. Anal. Appl. 241, 46–55 (2000)

Noor, M.A., Noor, K.I.: Some algorithms for equilibrium problems on Hadamard manifolds. J. Inequal. Appl. 230, 8 (2012)

Ogwo, G.N., Izuchukwu, C., Aremu, K.O., Mewomo, O.T.: A viscosity iterative algorithm for a family of monotone inclusion problems in an Hadamard space. Bull. Belg. Math. Soc. Simon Stevin 27(1), 127–152 (2020)

Ogwo, G.N., Izuchukwu, C., Aremu, K.O., Mewomo, O.T.: On \(\theta \)-generalized demimetric mappings and monotone operators in Hadamard spaces. Demonstr. Math. 53(1), 95–111 (2020)

Ogwo, G.N., Izuchukwu, C., Mewomo, O.T.: A modified extragradient algorithm for a certain class of split pseudo-monotone variational inequality problem. Numer. Algebra Control Optim. (2021). https://doi.org/10.3934/naco.2021011

Ogwo, G.N., Izuchukwu, C., Mewomo, O.T.: Inertial methods for finding minimum-norm solutions of the split variational inequality problem beyond monotonicity. Numer. Algorithms (2021). https://doi.org/10.1007/s11075-021-01081-1

Olona, M.A., Alakoya, T.O., Owolabi, A.O.-E., Mewomo, O.T.: Inertial shrinking projection algorithm with self-adaptive step size for split generalized equilibrium and fixed point problems for a countable family of nonexpansive multivalued mappings. Demonstr. Math. 54(1), 47–67 (2021)

Ranjbar, S., Khatibzadeh, H.: Strong and \(\Delta -\)convergence to a zero of a monotone operator in CAT(0) spaces. Mediterr. J. Math. 14, 15 (2017)

Reich, S., Shafrir, I.: Nonexpansive iterations in hyperbolic spaces. Nonlinear Anal. 15, 537–558 (1990)

Rockafellar, R.T.: Monotone Operators and the proximal point algorithm. SIAM J. Control Optim. 14, 877–898 (1976)

Schneider, C.: Analysis of the linearly implicit mid-point rule for differential-algebraic equations. Electron. Trans. Numer. Anal. 1, 1–10 (1993)

Shahzad, N.: Fixed points for multimaps in CAT(0) spaces. Topol. Appl. 156(5), 997–1001 (2009)

Suparatulatorn, R., Cholamjiak, P., Suantai, S.: On solving the minimization problem and the fixed point problem for nonexpansive mappings in CAT(0) spaces. Optim. Meth. Softw. 3(2), 182–192 (2017)

Somali, S.: Implicit midpoint rule to the nonlinear degenerate boundary value problems. Int. J. Comput. Math. 79, 327–332 (2002)

Taiwo, A., Alakoya, T.O., Mewomo, O.T.: Halpern-type iterative process for solving split common fixed point and monotone variational inclusion problem between Banach spaces. Numer. Algorithms 86(4), 1359–1389 (2021)

Taiwo, A., Alakoya, T.O., Mewomo, O.T.: Strong convergence theorem for solving equilibrium problem and fixed point of relatively nonexpansive multi-valued mappings in a Banach space with applications. Asian Eur. J. Math. (2021). https://doi.org/10.1142/S1793557121501370

Ugwunnadi, G.C., Izuchukwu, C., Mewomo, O.T.: Strong convergence theorem for monotone inclusion problem in CAT(0) spaces. Afr. Mat. 30(1–2), 151–169 (2019)

Ugwunnadi, G.C., Izuchukwu, C., Mewomo, O.T.: On nonspreading-type mappings in Hadamard spaces. Bol. Soc. Parana. Mat. 39(3), 175–197 (2021)

Veldhuizen, M.V.: Asymptotic expansions of the global error for the implicit midpoint rule (stiff case). Computing 33, 185–192 (1984)

Wangkeeree, R., Preechasilp, P.: Viscosity approximation methods for nonexpansive mappings in CAT(0) spaces. J. Inequal. Appl. 2013, 15 (2013)

Wangkeeree, R., Preechasilp, P.: Viscosity approximation methods for nonexpansive semigroups in CAT(0) spaces. Fixed Point Theory Appl. 2013, 16 (2013)

Xu, H.K.: Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 66, 240–256 (2002)

Xu, H.K.: Viscosity approximation methods for nonexpansive mappings. J. Math. Anal. Appl. 298, 279–291 (2004)

Xu, H.K., Alghamdi, M.A., Shahzad, N.: The viscosity technique for the implicit midpoint rule of nonexpansive mappings in Hilbert spaces. Fixed Point Theory Appl. 2015, 12 (2015)

Zhao, L.C., Chang, S.S., Wang, L., Wang, G.: Viscosity approximation methods for the implicit midpoint rule of nonexpansive mappings in CAT (0) Spaces. J. Nonlinear Sci. Appl. 10, 386–394 (2017)

Acknowledgements

The authors sincerely thank the reviewers for their careful reading, constructive comments and fruitful suggestions that improved the manuscript. The second author acknowledges with thanks the bursary and financial support from Department of Science and Innovation and National Research Foundation, Republic of South Africa Center of Excellence in Mathematical and Statistical Sciences (DST-NRF COE-MaSS) Doctoral Bursary. The third author acknowledges with thanks the bursary and financial support from African Institute for Mathematical Sciences (AIMS), South Africa. The fourth author is supported by the National Research Foundation (NRF) of South Africa Incentive Funding for Rated Researchers (Grant Number 119903). Opinions expressed and conclusions arrived at are those of the authors and are not necessarily to be attributed to the COE-MaSS, AIMS and NRF.

Funding

Open access funding provided by University of KwaZulu-Natal.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aremu, K.O., Izuchukwu, C., Ogwo, G.N. et al. A modified viscosity iterative method for implicit midpoint rule for optimization and fixed point problems in CAT(0) spaces. Afr. Mat. 34, 23 (2023). https://doi.org/10.1007/s13370-023-01040-0

DOI: https://doi.org/10.1007/s13370-023-01040-0

Keywords

- Viscosity iterative method

- Implicit midpoint rule

- Monotone inclusion problem

- Equilibrium problem

- Fixed point problem

- Hadamard space