Abstract

We introduce and study coordinate-wise powers of subvarieties of \({\mathbb {P}}^n\), i.e. varieties arising from raising all points in a given subvariety of \({\mathbb {P}}^n\) to the r-th power, coordinate by coordinate. This corresponds to studying the image of a subvariety of \({\mathbb {P}}^n\) under the quotient of \({\mathbb {P}}^n\) by the action of the finite group \({\mathbb {Z}}_r^{n+1}\). We determine the degree of coordinate-wise powers and study their defining equations, in particular for hypersurfaces and linear spaces. Applying these results, we compute the degree of the variety of orthostochastic matrices and determine iterated dual and reciprocal varieties of power sum hypersurfaces. We also establish a link between coordinate-wise squares of linear spaces and the study of real symmetric matrices with a degenerate eigenspectrum.

1 Introduction

Recently, Hadamard products of algebraic varieties have been attracting the attention of geometers. These are subvarieties \(X \star Y\) of projective space \({\mathbb {P}}^n\) that arise from multiplying coordinate-by-coordinate any two points \(x \in X\), \(y \in Y\) in given subvarieties X, Y of \({\mathbb {P}}^n\). In applications, they first appeared in Cueto et al. (2010), where the variety associated to the restricted Boltzmann machine was described as a repeated Hadamard product of the secant variety of \({\mathbb {P}}^1 \times \cdots \times {\mathbb {P}}^1 \subset {\mathbb {P}}^{2^n-1}\) with itself. Further study in Bocci et al. (2016, 2018), Friedenberg et al. (2017), Calussi et al. (2018) made progress towards understanding Hadamard products.

Of particular interest are the r-th Hadamard powers \(X^{\star r} \,{:}{=}\, X \star \cdots \star X\) of an algebraic variety \(X \subset {\mathbb {P}}^n\). They are the multiplicative analogue of secant varieties, which play a central role in classical projective geometry: the r-th secant variety \(\sigma _r(X)\) is the closure of the set of coordinate-wise sums of r points in X. Its subvariety corresponding to sums of r equal points is the original variety X. In the multiplicative setting, the Hadamard power \(X^{\star r}\) replaces \(\sigma _r(X)\), but it typically does not contain X if \([1:\ldots :1] \notin X\). As a multiplicative substitute for the inclusion \(X \subset \sigma _r(X)\), it is natural to study the subvariety of \(X^{\star r}\) given by coordinate-wise products of r equal points in X.

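Formally, for a projective variety \(X \subset {\mathbb {P}}^n\) and an integer \(r\in {\mathbb {Z}}\) (possibly negative), we are interested in studying its image under the rational map

$$\begin{aligned} \varphi _r :{\mathbb {P}}^n \dashrightarrow {\mathbb {P}}^n, \quad [x_0:\ldots :x_n] \mapsto [x_0^r:\ldots :x_n^r]. \end{aligned}$$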

We call the image, \(X^{\circ r}\), of X under \(\varphi _r\) the r-th coordinate-wise power of \(X \subset {\mathbb {P}}^n\).

In this article, we investigate these coordinate-wise powers \(X^{\circ r}\) with a main focus on the case \(r>0\). These varieties show up naturally in many applications. For the Grassmannian variety \({{\,\mathrm{Gr}\,}}(k,{\mathbb {P}}^n)\) in its Plücker embedding, the intersection with its r-th coordinate-wise power \({{\,\mathrm{Gr}\,}}(k,{\mathbb {P}}^n) \cap {{\,\mathrm{Gr}\,}}(k,{\mathbb {P}}^n)^{\circ r}\) was described combinatorially in terms of matroids in Lenz (2020) for even r. In Bonnafé (2018), highly singular surfaces in \({\mathbb {P}}^3\) have been constructed as preimages of a specific singular surface under the morphism \(\varphi _r\) for \(r>0\). In the case \(r=-\,1\), the map \(\varphi _r\) is a classical Cremona transformation and images of varieties under this transformation are called reciprocal varieties whose study has received particular attention in the case of linear spaces, see De Loera et al. (2012), Kummer and Vinzant (2019) and Fink et al. (2018).

For \(r > 0\), the coordinate-wise powers \(X^{\circ r}\) of a variety \(X \subset {\mathbb {P}}^n\) have the following natural interpretation: The quotient of \({\mathbb {P}}^n\) by the finite subgroup \({\mathbb {Z}}_r^{n+1}\) of the torus \(({\mathbb {C}}^*)^{n+1}\) is again a projective space. The image of a variety \(X \subset {\mathbb {P}}^n\) in \({\mathbb {P}}^n/{\mathbb {Z}}_r^{n+1} \cong {\mathbb {P}}^n\) is the variety \(X^{\circ r}\), since \(\varphi _r :{\mathbb {P}}^n \rightarrow {\mathbb {P}}^n\) is the geometric quotient of \({\mathbb {P}}^n\) by \({\mathbb {Z}}_r^{n+1}\). In other words, coordinate-wise powers of algebraic varieties are images of subvarieties of \({\mathbb {P}}^n\) under the quotient by a certain finite group. The case \(r=2\) has the special geometric significance of quotienting by the group generated by reflections at the coordinate hyperplanes of \({\mathbb {P}}^n\). We are, therefore, especially interested in coordinate-wise squares of varieties.

A particular application of interest is the variety of orthostochastic matrices. An orthostochastic matrix is a matrix obtained by squaring each entry of an orthogonal matrix; in other words, orthostochastic matrices are points in the coordinate-wise square of the variety of orthogonal matrices. Orthostochastic matrices play a central role in the theory of majorization (Marshall et al. 2011) and are closely linked to finding real symmetric matrices with prescribed eigenvalues and diagonal entries, see Horn (1954) and Mirsky (1963). Recently, it has also been shown that the variety of orthostochastic matrices is central to deciding whether bivariate polynomials admit determinantal representations and to computing such representations, see Dey (2018).

As a further application, we show that coordinate-wise squares of linear spaces show up naturally in the study of symmetric matrices with a degenerate spectrum of eigenvalues.

The article is structured as follows. As is customary when studying any variety, we first compute the degree of \(X^{\circ r}\) in Sect. 2, and we use this to derive the degree of the variety of orthostochastic matrices. In Sect. 3, we dig a little deeper and find explicitly the defining equations of the coordinate-wise powers of hypersurfaces. We define generalised power sum hypersurfaces and give relations between their dual and reciprocal varieties.

We study coordinate-wise powers of linear spaces in more detail in the final section. We show how the degree of the coordinate-wise powers of a linear space depends on the combinatorial information captured by the corresponding linear matroid. Particular attention is paid to the case of coordinate-wise squares of linear spaces. For low-dimensional linear spaces we give a complete classification. We also describe the defining ideal for the coordinate-wise square of general linear spaces of arbitrary dimension in a high-dimensional ambient space, and we link this question to the study of symmetric matrices with a codimension 1 eigenspace.

2 Degree formula

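Throughout this article, we work over \({\mathbb {C}}\). We denote the homogeneous coordinate ring of \({\mathbb {P}}^n\) by \({\mathbb {C}}[{\mathbf {x}}]\, {:}{=}\, {\mathbb {C}}[x_0,\ldots ,x_n]\). For any integer \(r \in {\mathbb {Z}}\), we consider the rational map

$$\begin{aligned} \varphi _r :{\mathbb {P}}^n \dashrightarrow {\mathbb {P}}^n, \quad [x_0:\ldots :x_n] \mapsto [x_0^r:\ldots :x_n^r]. \end{aligned}$$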

For \(r \ge 0\), the rational map \(\varphi _r\) is a morphism. Throughout, let \(X \subset {\mathbb {P}}^n\) be a projective variety, not necessarily irreducible. We denote by \(X^{\circ r} \subset {\mathbb {P}}^n\) the image of X under the rational map \(\varphi _r\). More explicitly, for \(r \ge 0\),

$$\begin{aligned} X^{\circ r} = \{[x_0^r:\ldots :x_n^r] \mid [x_0:\ldots :x_n] \in X\}. \end{aligned}$$

For \(r < 0\), we will only consider the case that no irreducible component of X is contained in any coordinate hyperplane of \({\mathbb {P}}^n\). We call the closure \(X^{\circ r} \subset {\mathbb {P}}^n\) of the image of X under \(\varphi _r\) the r-th coordinate-wise power of X. In the case \(r=-\,1\), the variety \(X^{\circ (-\,1)}\) is called the reciprocal variety of X. We primarily focus on positive coordinate-wise powers in this article, and therefore we will from now on always assume \(r > 0\) unless explicitly stated otherwise. Observe that \(\varphi _r :{\mathbb {P}}^n \rightarrow {\mathbb {P}}^n\) is a finite morphism, and hence the image \(X^{\circ r}\) of X under \(\varphi _r\) has the same dimension as X.

The cyclic group \({\mathbb {Z}}_r\) of order r is identified with the group of r-th roots of unity \(\{\xi \in {\mathbb {C}}\mid \xi ^r = 1\}\). We consider the action of the \((n+1)\)-fold product \({\mathbb {Z}}_r^{n+1} \,{:}{=}\, {\mathbb {Z}}_r\times \cdots \times {\mathbb {Z}}_r\) on \({\mathbb {C}}[{\mathbf {x}}]\) given by rescaling the variables \(x_0, \ldots , x_n\) with r-th roots of unity. We denote the quotient of \({\mathbb {Z}}_r^{n+1}\) by the subgroup \(\{(\xi ,\xi ,\ldots ,\xi ) \in {\mathbb {C}}^{n+1} \mid \xi ^r = 1\} \subset {\mathbb {Z}}_r^{n+1}\) as \({\mathcal {G}}_{r}\,{:}{=}\, {\mathbb {Z}}_r^{n+1}/{\mathbb {Z}}_r\). The group action of \({\mathbb {Z}}_r^{n+1}\) on \({\mathbb {C}}[{\mathbf {x}}]\) determines a linear action of \({\mathcal {G}}_{r}\) on \({\mathbb {P}}^n\). In this way, we can also view \({\mathcal {G}}_{r}\) as a subgroup of \({{\,\mathrm{Aut}\,}}({\mathbb {P}}^n)\). For \(r=2\), this has the geometric interpretation of being the linear group action generated by reflections in the coordinate hyperplanes. Note that \({\mathcal {G}}_{r}\) does not act on the vector space \({\mathbb {C}}[{\mathbf {x}}]_d\) of homogeneous polynomials of degree d; it only acts on \({\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\).

Given a projective variety, the following proposition describes set-theoretically the preimage under \(\varphi _r\) of its coordinate-wise r-th power.

Proposition 2.1

(Preimages of coordinate-wise powers) Let \(X \subset {\mathbb {P}}^n\) be a variety and let \(X^{\circ r} \subset {\mathbb {P}}^n\) be its coordinate-wise r-th power. The preimage \(\varphi _r^{-1}(X^{\circ r})\) is given by \(\bigcup _{\tau \in {\mathcal {G}}_{r}}\tau \cdot X\).

Proof

This follows from \(X^{\circ r} = \varphi _r(X)\) and the fact that \(\varphi _r^{-1}(\varphi _r(p))=\{\tau \cdot p \mid \tau \in {\mathcal {G}}_{r}\}\) for all \(p\in X\). \(\square \)
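Proposition 2.1 is easy to test numerically on examples. The Python sketch below is our own illustration, and the helper names `phi`, `key`, `orbit` and `fibre_contains_orbit` are hypothetical. It enumerates \(\tau \in {\mathbb {Z}}_r^{n+1}\) as tuples of r-th roots of unity, identifies projective points by rescaling the first nonzero coordinate to 1 and rounding (an assumption that is only reliable for coordinates of moderate size), and checks that every point of the \({\mathcal {G}}_{r}\)-orbit of p has the same image as p under \(\varphi _r\). It only tests that the orbit lies in the fibre, not the reverse inclusion; it also lets one observe that a point with no zero coordinate has \(|{\mathcal {G}}_{r}| = r^n\) distinct orbit points.

```python
import cmath
import itertools


def phi(p, r):
    """The coordinate-wise power map phi_r on homogeneous coordinates."""
    return tuple(x ** r for x in p)


def key(p, digits=8):
    """Hashable label of the projective point [p]: scale the first nonzero
    coordinate to 1, then round real and imaginary parts."""
    lead = next(x for x in p if abs(x) > 1e-12)
    return tuple((round((x / lead).real, digits), round((x / lead).imag, digits))
                 for x in p)


def orbit(p, r):
    """One representative for each distinct point tau . [p] with tau in Z_r^{n+1}.
    Diagonal (scalar) elements fix [p], so this is the G_r-orbit of [p]."""
    roots = [cmath.exp(2j * cmath.pi * k / r) for k in range(r)]
    reps = {}
    for tau in itertools.product(roots, repeat=len(p)):
        q = [t * x for t, x in zip(tau, p)]
        reps.setdefault(key(q), q)
    return list(reps.values())


def fibre_contains_orbit(p, r):
    """True if every point of the G_r-orbit of [p] has the same image as [p]."""
    target = key(phi(p, r))
    return all(key(phi(q, r)) == target for q in orbit(p, r))
```

For instance, `orbit((1, 2, 3), 3)` has nine points, all mapped to \([1:8:27]\), while a point with a zero coordinate such as `(1, 0, 2)` has a smaller orbit.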

In particular, for \(r=2\), we obtain the following geometric description.

Corollary 2.2

The preimage of \(X^{\circ 2}\) under \(\varphi _2 :{\mathbb {P}}^n \rightarrow {\mathbb {P}}^n\) is the union over the orbit of X under the subgroup of \({{\,\mathrm{Aut}\,}}({\mathbb {P}}^n)\) generated by the reflections in the coordinate hyperplanes.

In the following theorem, we give a degree formula for the coordinate-wise powers of an irreducible variety.

Theorem 2.3

(Degree formula) Let \(X \subset {\mathbb {P}}^n\) be an irreducible projective variety. Let \({{\,\mathrm{Stab}\,}}_r(X) \,{:}{=}\, \{\tau \in {\mathcal {G}}_{r}\mid \tau \cdot X = X\}\) and \({{\,\mathrm{Fix}\,}}_r(X) \,{:}{=}\, \{\tau \in {\mathcal {G}}_{r}\mid {\left. \tau \right| _{X}} = {{\,\mathrm{id}\,}}_X\}\). Then the degree of the r-th coordinate-wise power of X is

$$\begin{aligned} \deg X^{\circ r} = \frac{|{{\,\mathrm{Fix}\,}}_r(X)|}{|{{\,\mathrm{Stab}\,}}_r(X)|}\, r^{\dim X} \deg X. \end{aligned}$$

Proof

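Let \(H_1, \ldots , H_k \subset {\mathbb {P}}^n\) for \(k \,{:}{=}\, \dim X^{\circ r} = \dim X\) be general hyperplanes whose common intersection with \(X^{\circ r}\) consists of finitely many reduced points. We want to determine \(|X^{\circ r} \cap \bigcap _{i=1}^k H_i|\). By Proposition 2.1, we have

$$\begin{aligned} \varphi _r^{-1}\left( X^{\circ r} \cap \bigcap _{i=1}^k H_i\right) = \left( \bigcup _{\tau \in {\mathcal {G}}_{r}} \tau \cdot X\right) \cap \bigcap _{i=1}^k \varphi _r^{-1} H_i. \end{aligned}$$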

Note that each \(\varphi _r^{-1} H_i\) is a hypersurface of degree r fixed under the \({\mathcal {G}}_{r}\)-action, and their common intersection with X consists of finitely many reduced points by Bertini’s theorem (as in Flenner et al. 1999, 3.4.8). By Bézout’s theorem,

$$\begin{aligned} \left| X \cap \bigcap _{i=1}^k \varphi _r^{-1} H_i\right| = r^k \deg X. \end{aligned}$$

We note that \(Z \,{:}{=}\, X \cap \left( \bigcup _{\tau \in {\mathcal {G}}_{r}{\setminus } {{\,\mathrm{Stab}\,}}_r(X)} \tau \cdot X \right) \) is of dimension \({<}k\) by irreducibility of X. Therefore, the common intersection of k general hyperplanes \(H_i\) with \(\varphi _r(Z)\) is empty. This implies that the intersection of \(\tau \cdot X\) and \(\tau ' \cdot X\) does not meet \(\bigcap _{i=1}^k \varphi _r^{-1} H_i\) for all \(\tau , \tau ' \in {\mathcal {G}}_{r}\) with \(\tau ' \cdot \tau ^{-1} \notin {{\,\mathrm{Stab}\,}}_r(X)\).

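Hence, the above can be written as a disjoint union

$$\begin{aligned} \left( \bigcup _{\tau \in {\mathcal {G}}_{r}} \tau \cdot X\right) \cap \bigcap _{i=1}^k \varphi _r^{-1} H_i = \bigsqcup _{j=1}^{s} \tau _j \cdot \left( X \cap \bigcap _{i=1}^k \varphi _r^{-1} H_i\right) , \end{aligned}$$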

where \(\tau _1, \ldots , \tau _s \in {\mathcal {G}}_{r}\) for \(s = |{\mathcal {G}}_{r}|/|{{\,\mathrm{Stab}\,}}_r(X)|\) represent the cosets of \({{\,\mathrm{Stab}\,}}_r(X)\) in \({\mathcal {G}}_{r}\).

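In particular,

$$\begin{aligned} \left| \varphi _r^{-1}\left( X^{\circ r} \cap \bigcap _{i=1}^k H_i\right) \right| = \frac{|{\mathcal {G}}_{r}|}{|{{\,\mathrm{Stab}\,}}_r(X)|}\, r^k \deg X. \end{aligned}$$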

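For a general point \(p \in X\), we have \(\{\tau \in {\mathcal {G}}_{r}\mid \tau \cdot p = p\} = {{\,\mathrm{Fix}\,}}_r(X)\). Then Proposition 2.1 shows that a general point of \(X^{\circ r} = \varphi _r(X)\) has \(|{\mathcal {G}}_{r}|/|{{\,\mathrm{Fix}\,}}_r(X)|\) preimages under \(\varphi _r\), so for general hyperplanes \(H_i\) we conclude

$$\begin{aligned} \deg X^{\circ r} = \left| X^{\circ r} \cap \bigcap _{i=1}^k H_i\right| = \frac{|{{\,\mathrm{Fix}\,}}_r(X)|}{|{\mathcal {G}}_{r}|} \left| \varphi _r^{-1}\left( X^{\circ r} \cap \bigcap _{i=1}^k H_i\right) \right| = \frac{|{{\,\mathrm{Fix}\,}}_r(X)|}{|{{\,\mathrm{Stab}\,}}_r(X)|}\, r^k \deg X. \end{aligned}$$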

\(\square \)
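As a quick sanity check of Theorem 2.3 (a worked instance added here for illustration, not part of the argument above), let \(X = V(x_0) \subset {\mathbb {P}}^n\), so that \(X^{\circ r} = X\). Every \(\tau = [\xi _0:\ldots :\xi _n] \in {\mathcal {G}}_{r}\) maps X to itself, and \(\tau \) restricts to the identity on X exactly when \(\xi _1 = \cdots = \xi _n\). Hence \(|{{\,\mathrm{Stab}\,}}_r(X)| = |{\mathcal {G}}_{r}| = r^n\) and \(|{{\,\mathrm{Fix}\,}}_r(X)| = r\), and the degree formula gives

$$\begin{aligned} \deg X^{\circ r} = \frac{|{{\,\mathrm{Fix}\,}}_r(X)|}{|{{\,\mathrm{Stab}\,}}_r(X)|}\, r^{\dim X} \deg X = \frac{r}{r^n}\, r^{n-1} \cdot 1 = 1, \end{aligned}$$

as it must be for a hyperplane.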

2.1 Orthostochastic matrices

We use Theorem 2.3 to compute the degree of the variety of orthostochastic matrices. By \({\mathbb {O}}(m) \subset {\mathbb {P}}^{m^2}\) (resp. \({\mathbb {SO}}(m) \subset {\mathbb {P}}^{m^2}\)) we mean the projective closure of the affine variety of orthogonal (resp. special orthogonal) matrices in \({\mathbb {A}}^{m^2}\). It was shown in Dey (2018) that the problem of deciding whether a bivariate polynomial can be expressed as the determinant of a definite/monic symmetric linear matrix polynomial (a determinantal representation) is closely linked to the problem of finding the defining equations of the variety \({\mathbb {O}}(m)^{\circ 2}\). In the case \(m=3\), the defining equations of \({\mathbb {O}}(3)^{\circ 2}\) are known (Chterental and Đoković 2008, Proposition 3.1), and based on this knowledge, it was shown in (Dey 2017, Section 4.2) how to compute a determinantal representation for a cubic bivariate polynomial or decide that none exists. For arbitrary m, the ideal of defining equations may be very complicated, but we are still able to compute its degree:

Proposition 2.4

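(Degree of \({\mathbb {O}}(m)^{\circ 2}\)) We have \({\mathbb {O}}(m)^{\circ 2} = {\mathbb {SO}}(m)^{\circ 2}\) and its degree is

$$\begin{aligned} \deg {\mathbb {O}}(m)^{\circ 2} = 2^{\left( {\begin{array}{c}m\\ 2\end{array}}\right) -2m+2} \deg {\mathbb {SO}}(m) \le 2^{(m-1)^2}. \end{aligned}$$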

Proof

The variety \({\mathbb {O}}(m)\) consists of two connected components that are isomorphic to \({\mathbb {SO}}(m).\) The images of these components under \(\varphi _2 :{\mathbb {P}}^{m^2} \rightarrow {\mathbb {P}}^{m^2}\) coincide. In particular, \({\mathbb {O}}(m)^{\circ 2} = {\mathbb {SO}}(m)^{\circ 2}\) and \(\deg {\mathbb {O}}(m) = 2 \deg {\mathbb {SO}}(m)\). We determine \({{\,\mathrm{Fix}\,}}_2({\mathbb {SO}}(m))\) and \({{\,\mathrm{Stab}\,}}_2({\mathbb {SO}}(m))\).

Identify elements of \({\mathcal {G}}_{2}\) with \(m\times m\)-matrices whose entries are \(\pm 1\). Then a group element \(S \in {\mathcal {G}}_{2} = \{\pm 1\}^{m \times m}\) acts on the affine open subset \({\mathbb {A}}^{m^2} \subset {\mathbb {P}}^{m^2}\) corresponding to \(m \times m\)-matrices \(M \in {\mathbb {C}}^{m\times m}\) as \(S \circ M\), where \(S \circ M\) denotes the Hadamard product (i.e. entry-wise product) of matrices. Clearly, \({{\,\mathrm{Fix}\,}}_2({\mathbb {SO}}(m))\) is trivial, or else every special orthogonal matrix would need to have a zero entry at a certain position.

We claim that \({{\,\mathrm{Stab}\,}}_2({\mathbb {SO}}(m)) \subset \{S \in \{\pm 1\}^{m \times m} \mid {{\,\mathrm{rk}\,}}S = 1\}\). Indeed, assume that \(S \in \{\pm 1\}^{m \times m}\) lies in \({{\,\mathrm{Stab}\,}}_2({\mathbb {SO}}(m))\), but is not of rank 1. Then \(m \ge 2\) and we may assume that the first two columns of S are linearly independent. Consider the vectors \(u,v \in {\mathbb {C}}^m\) given by

Since u and v are orthogonal, we can find a special orthogonal matrix \(M \in {\mathbb {C}}^{m \times m}\) whose first two columns are \(M_{\bullet 1} = u/\Vert u \Vert _2\) and \(M_{\bullet 2} = v/\Vert v \Vert _2\). But \(S \in {{\,\mathrm{Stab}\,}}_2({\mathbb {SO}}(m))\), so the matrix \(S \circ M\) must be a special orthogonal matrix. In particular, the first two columns of \(S \circ M\) must be orthogonal, i.e.

Since \(S_{i1} S_{i2} = \pm 1\) for all i, we have \(|\sum _{i=1}^{m-1} (S_{i1} S_{i2}) 2^{i-1}| \le 2^{m-1}-1\), and equality in (2.1) holds if and only if \(S_{i1} S_{i2} = S_{j1} S_{j2}\) for all \(i,j \in \{1,\ldots ,m\}\). However, this contradicts the linear independence of the first two columns of S. Hence, the claim follows.

Any rank 1 matrix in \(\{\pm 1\}^{m\times m}\) can be uniquely written as \(u v^T\) with \(u,v \in \{\pm 1\}^m\) and \(u_1=1\). Such a rank 1 matrix \(S = uv^T\) lies in \({{\,\mathrm{Stab}\,}}_2({\mathbb {SO}}(m))\) if and only if for each special orthogonal matrix \(M \in {\mathbb {C}}^{m \times m}\) the matrix \(S \circ M\)

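is again a special orthogonal matrix. Since \(S \circ M = {{\,\mathrm{diag}\,}}(u)\, M\, {{\,\mathrm{diag}\,}}(v)\), where \({{\,\mathrm{diag}\,}}(u)\) and \({{\,\mathrm{diag}\,}}(v)\) are orthogonal with determinants \(\prod _{i=1}^m u_i\) and \(\prod _{i=1}^m v_i\), this is true if and only if \(\prod _{i=1}^m u_i = \prod _{i=1}^m v_i\). Therefore,

$$\begin{aligned} {{\,\mathrm{Stab}\,}}_2({\mathbb {SO}}(m)) = \left\{ uv^T \,\Big |\, u,v \in \{\pm 1\}^m,\ u_1 = 1,\ \prod _{i=1}^m u_i = \prod _{i=1}^m v_i\right\} , \end{aligned}$$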

and, thus, \(|{{\,\mathrm{Stab}\,}}_2({\mathbb {SO}}(m))| = 2^{2m-2}\).
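The count \(|{{\,\mathrm{Stab}\,}}_2({\mathbb {SO}}(m))| = 2^{2m-2}\) can be confirmed by brute force for small m. The Python sketch below is not part of the proof and its function names are hypothetical. It enumerates all \(2^{m^2}\) sign matrices S and keeps those for which \(S \circ M\) is again special orthogonal for one randomly generated \(M \in {\mathbb {SO}}(m)\). Since \({\mathbb {SO}}(m)\) is irreducible, a single generic M should suffice; we assume that the random product of Givens rotations is generic enough and that a floating-point tolerance of `1e-9` separates the two cases.

```python
import itertools
import math
import random


def random_rotation(m, seed=0):
    """A generic special orthogonal m x m matrix: a product of Givens rotations
    with random angles (each rotation has determinant 1)."""
    rng = random.Random(seed)
    M = [[float(i == j) for j in range(m)] for i in range(m)]
    for i in range(m):
        for j in range(i + 1, m):
            theta = rng.uniform(0.0, 2.0 * math.pi)
            c, s = math.cos(theta), math.sin(theta)
            row_i, row_j = M[i], M[j]
            M[i] = [c * a - s * b for a, b in zip(row_i, row_j)]
            M[j] = [s * a + c * b for a, b in zip(row_i, row_j)]
    return M


def det(A):
    """Determinant by cofactor expansion along the first row (fine for small m)."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det([row[:j] + row[j + 1:] for row in A[1:]])
               for j in range(len(A)))


def is_special_orthogonal(A, tol=1e-9):
    """Check A^T A = id and det A = 1 up to the tolerance tol."""
    m = len(A)
    gram_ok = all(abs(sum(A[k][i] * A[k][j] for k in range(m)) - (i == j)) < tol
                  for i in range(m) for j in range(m))
    return gram_ok and abs(det(A) - 1.0) < tol


def stabiliser_SO(m, seed=0):
    """Sign matrices S such that the Hadamard product S o M lies in SO(m)
    for one generic M in SO(m)."""
    M = random_rotation(m, seed)
    stab = []
    for signs in itertools.product((1, -1), repeat=m * m):
        S = [list(signs[i * m:(i + 1) * m]) for i in range(m)]
        SM = [[S[i][j] * M[i][j] for j in range(m)] for i in range(m)]
        if is_special_orthogonal(SM):
            stab.append(S)
    return stab


def is_rank_one(S):
    """A +-1 matrix has rank 1 iff every row equals +- the first row."""
    return all(row == S[0] or row == [-x for x in S[0]] for row in S)
```

For m = 3 the search runs over 512 sign matrices; the enumeration grows like \(2^{m^2}\), so it is only practical for very small m.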

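Since \({\mathbb {SO}}(m) \subset {\mathbb {P}}^{m^2}\) is irreducible of dimension \(\smash {\left( {\begin{array}{c}m\\ 2\end{array}}\right) }\) and \({{\,\mathrm{Fix}\,}}_2({\mathbb {SO}}(m))\) is trivial, applying Theorem 2.3 gives

$$\begin{aligned} \deg {\mathbb {SO}}(m)^{\circ 2} = \frac{1}{2^{2m-2}}\, 2^{\left( {\begin{array}{c}m\\ 2\end{array}}\right) } \deg {\mathbb {SO}}(m) = 2^{\left( {\begin{array}{c}m\\ 2\end{array}}\right) -2m+2} \deg {\mathbb {SO}}(m). \end{aligned}$$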

Finally, we observe that the affine variety of orthogonal matrices in \({\mathbb {A}}^{m^2}\) is an intersection of \(\smash {\left( {\begin{array}{c}m+1\\ 2\end{array}}\right) }\) quadrics, which correspond to the polynomial equations given by \(M^T M = {{\,\mathrm{id}\,}}\) for orthogonal matrices \(M \in {\mathbb {C}}^{m \times m}\). Therefore, its projective closure \({\mathbb {O}}(m) \subset {\mathbb {P}}^{m^2}\) must satisfy \(\smash {\deg {\mathbb {O}}(m) \le 2^{\left( {\begin{array}{c}m+1\\ 2\end{array}}\right) }}\). Since \(\deg {\mathbb {SO}}(m) = \frac{1}{2}\deg {\mathbb {O}}(m)\) and \(\smash {\left( {\begin{array}{c}m\\ 2\end{array}}\right) + \left( {\begin{array}{c}m+1\\ 2\end{array}}\right) = m^2}\), this shows \(\deg {\mathbb {O}}(m)^{\circ 2} \le 2^{m^2-2m+2-1} = 2^{(m-1)^2}\). \(\square \)

Remark 2.5

The degree of \({\mathbb {O}}(m)\) (resp. \({\mathbb {SO}}(m)\)) is known for all m by Brandt et al. (2017), namely

Table 1 shows the resulting degrees of \({\mathbb {O}}(m)^{\circ 2} = {\mathbb {SO}}(m)^{\circ 2}\) for some values of m.

2.2 Linear spaces

We now determine the degree of coordinate-wise powers \(L^{\circ r}\) for a linear space \(L \subset {\mathbb {P}}^n\), based on Theorem 2.3. It can be expressed in terms of the combinatorics captured by the matroid of \(L \subset {\mathbb {P}}^n\). We briefly recall some basic definitions for matroids associated to linear spaces in \({\mathbb {P}}^n\). We refer to Oxley (2011) for a detailed introduction to matroid theory.

Let \(L \subset {\mathbb {P}}^n\) be a linear space. The combinatorial information about the intersection of L with the linear coordinate spaces in \({\mathbb {P}}^n\) is captured in the linear matroid \(\mathcal {M}_L\). It is the collection of index sets \(I \subset \{0,1,\ldots ,n\}\) such that L does not intersect \(V(\{x_i \mid i \notin I\})\). Formally,

$$\begin{aligned} \mathcal {M}_L = \{I \subset \{0,1,\ldots ,n\} \mid L \cap V(\{x_i \mid i \notin I\}) = \emptyset \}. \end{aligned}$$

Different conventions about linear matroids exist in the literature, and some authors take a dual definition for the linear matroid of L.

The set \(\{0,1,\ldots ,n\}\) is the ground set of the matroid. Index sets \(I \in \mathcal {M}_L\) are called independent, while index sets \(I \in {{\,\mathrm{Pow}\,}}(\{0,1,\ldots ,n\}) {\setminus } \mathcal {M}_L\) are called dependent. An index \(i \in \{0,1,\ldots ,n\}\) is called a coloop of \(\mathcal {M}_L\) if, for all \(I \subset \{0,1,\ldots ,n\}\), the condition \(I \in \mathcal {M}_L\) holds if and only if \(I \cup \{i\} \in \mathcal {M}_L\) holds. Geometrically, an index \(i \in \{0,1,\ldots ,n\}\) is a coloop of \(\mathcal {M}_L\) if and only if \(L \subset V(x_i)\).

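A subset \(E \subset \{0,1,\ldots ,n\}\) is called irreducible if there is no non-trivial partition \(E = E_1 \sqcup E_2\) with

$$\begin{aligned} \{I \in \mathcal {M}_L \mid I \subset E\} = \{I_1 \cup I_2 \mid I_1, I_2 \in \mathcal {M}_L,\ I_1 \subset E_1,\ I_2 \subset E_2\}. \end{aligned}$$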

The maximal irreducible subsets of \(\{0,1,\ldots ,n\}\) are called components of \(\mathcal {M}_L\), and they form a partition of \(\{0,1,\ldots ,n\}\). Geometrically, a component of \(\mathcal {M}_L\) is a minimal non-empty subset \(I \subset \{0,1,\ldots ,n\}\) with the property that \(L \cap V(x_i \mid i \in I)\) and \(L \cap V(x_i \mid i \notin I)\) together span the linear space L.

In the following result, we determine the degree of \(L^{\circ r} \subset {\mathbb {P}}^n\) as an invariant of the linear matroid \(\mathcal {M}_L\).

Theorem 2.6

Let \(L \subset {\mathbb {P}}^n\) be a linear space of dimension k. Let s be the number of coloops and t the number of components of the associated linear matroid \(\mathcal {M}_L\). Then

$$\begin{aligned} \deg L^{\circ r} = r^{k-t+s+1}. \end{aligned}$$

Proof

By Theorem 2.3, we need to determine the cardinalities of the groups \({{\,\mathrm{Fix}\,}}_r(L)\) and \({{\,\mathrm{Stab}\,}}_r(L)\).

Consider the affine cone over L, which is a \((k+1)\)-dimensional subspace \(W \subset {\mathbb {C}}^{n+1}\). We denote the canonical basis of \({\mathbb {C}}^{n+1}\) by \(e_0, \ldots , e_n\).

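We observe that \(|{{\,\mathrm{Fix}\,}}_r(L)| = |\{\tau \in {\mathbb {Z}}_r^{n+1}\mid {\left. \tau \right| _{W}} = {{\,\mathrm{id}\,}}\}|\). For \(\tau = (\tau _0, \ldots , \tau _n) \in {\mathbb {Z}}_r^{n+1}\), we have

$$\begin{aligned} {\left. \tau \right| _{W}} = {{\,\mathrm{id}\,}} \iff \tau _i = 1 \text { for every } i \in \{0,1,\ldots ,n\} \text { that is not a coloop of } \mathcal {M}_L, \end{aligned}$$

since i is a coloop of \(\mathcal {M}_L\) if and only if \(L \subset V(x_i)\).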

From this, we see that \(|{{\,\mathrm{Fix}\,}}_r(L)| = r^s\).

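For the stabiliser of L, we have \(|{{\,\mathrm{Stab}\,}}_r(L)| = \frac{1}{r}{\,} |\{\tau \in {\mathbb {Z}}_r^{n+1}\mid \tau \cdot W = W\}|\). If \(\tau = (\tau _0, \ldots , \tau _n) \in {\mathbb {Z}}_r^{n+1}\), then

$$\begin{aligned} \tau \cdot W = W \iff \tau _i = \tau _j \text { whenever } i \text { and } j \text { lie in the same component of } \mathcal {M}_L. \end{aligned}$$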

In particular, there are precisely \(r^t\) elements \(\tau \in {\mathbb {Z}}_r^{n+1}\) with \(\tau \cdot W = W\). We deduce that \(|{{\,\mathrm{Stab}\,}}_r(L)| = r^{t-1}\), which concludes the proof by Theorem 2.3. \(\square \)
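Theorem 2.6 reduces the degree of \(L^{\circ r}\) to matroid data, which can be computed directly for small examples. The following Python sketch is our own, and all function names are hypothetical. Its input is a matrix A whose rows are assumed to be linearly independent with rational entries and to span the affine cone W over L, so that exact arithmetic with `Fraction` can be used. A set I is tested for independence through the criterion that W meets the span of \(e_i\), \(i \in I\), only in 0, i.e. that the columns of A outside I still have full rank. Coloops are read off from the definition, and components are obtained as classes of elements lying on a common circuit, which is the standard characterisation of matroid components (see Oxley 2011). Because it enumerates all subsets of the ground set, it is only meant for small n.

```python
from fractions import Fraction
from itertools import combinations


def rank(rows):
    """Rank over Q of a matrix given as a list of rows (exact Gaussian elimination)."""
    M = [[Fraction(x) for x in row] for row in rows]
    ncols = len(M[0]) if M else 0
    rk = 0
    for col in range(ncols):
        pivot = next((i for i in range(rk, len(M)) if M[i][col] != 0), None)
        if pivot is None:
            continue
        M[rk], M[pivot] = M[pivot], M[rk]
        for i in range(len(M)):
            if i != rk and M[i][col] != 0:
                factor = M[i][col] / M[rk][col]
                M[i] = [a - factor * b for a, b in zip(M[i], M[rk])]
        rk += 1
    return rk


def linear_matroid(A):
    """Independent sets I of M_L: the columns of A outside I have full rank,
    i.e. W = rowspan(A) meets span{e_i : i in I} only in 0."""
    n1 = len(A[0])
    indep = set()
    for size in range(n1 + 1):
        for I in combinations(range(n1), size):
            rest = [j for j in range(n1) if j not in I]
            if rank([[row[j] for j in rest] for row in A]) == len(A):
                indep.add(frozenset(I))
    return indep


def coloops(indep, n1):
    """i is a coloop iff adding i to any independent set keeps it independent."""
    return [i for i in range(n1) if all((I | {i}) in indep for I in indep)]


def num_components(indep, n1):
    """Number of components: classes of elements joined by circuits (union-find)."""
    parent = list(range(n1))

    def find(x):
        while parent[x] != x:
            x = parent[x]
        return x

    for size in range(1, n1 + 1):
        for C in map(frozenset, combinations(range(n1), size)):
            # C is a circuit: dependent, but every proper subset is independent.
            if C not in indep and all((C - {x}) in indep for x in C):
                root = find(min(C))
                for x in C:
                    parent[find(x)] = root
    return len({find(x) for x in range(n1)})


def degree_of_power(A, r):
    """deg L^{o r} = r^(k - t + s + 1), where k = dim L (Theorem 2.6)."""
    n1, k = len(A[0]), len(A) - 1
    indep = linear_matroid(A)
    s = len(coloops(indep, n1))
    t = num_components(indep, n1)
    return r ** (k - t + s + 1)
```

For example, the rows \(e_0-e_1, e_0-e_2, e_0-e_3\) span the cone over the hyperplane \(V(x_0+x_1+x_2+x_3) \subset {\mathbb {P}}^3\), and `degree_of_power` returns 4 for r = 2, in line with Example 2.9.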

Corollary 2.7

The degree of the coordinate-wise r-th power of a linear space only depends on the associated linear matroid. If \(L_1, L_2 \subset {\mathbb {P}}^n\) are linear spaces such that the linear matroids \(\mathcal {M}_{L_1}\) and \(\mathcal {M}_{L_2}\) are isomorphic (i.e. they only differ by a permutation of \(\{0,1,\ldots ,n\}\)), then \(L_1^{\circ r} \subset {\mathbb {P}}^n\) and \(L_2^{\circ r} \subset {\mathbb {P}}^n\) have the same degree.

Corollary 2.8

Let \(L \subset {\mathbb {P}}^n\) be a linear space of dimension k. Then \(\deg L^{\circ r} \le r^k\). For general k-dimensional linear spaces in \({\mathbb {P}}^n\), equality holds.

Proof

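Every coloop of \(\mathcal {M}_L\) forms a component of \(\mathcal {M}_L\), and the set \(\{0,1,\ldots ,n\}{\setminus } \{\text {coloops}\}\) is a union of components. This set is non-empty, since the non-empty linear space L is not contained in every coordinate hyperplane, so it contains at least one component. Hence \(t\ge s+1\), and therefore, by Theorem 2.6, \(\deg L^{\circ r} = r^{k-t+s+1} \le r^k\). A general linear space \(L \in {{\,\mathrm{Gr}\,}}(k,{\mathbb {P}}^n)\) intersects only those linear coordinate spaces in \({\mathbb {P}}^n\) of dimension at least \(n-k\). Therefore, the linear matroid of a general linear space is the uniform matroid:

$$\begin{aligned} \mathcal {M}_L = \{I \subset \{0,1,\ldots ,n\} \mid |I| \le n-k\}. \end{aligned}$$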

It is easily checked from the definitions that this matroid has no coloops and only one component. \(\square \)

Example 2.9

We illustrate Theorem 2.6 for hyperplanes. Up to permuting and rescaling the coordinates of \({\mathbb {P}}^n\), each hyperplane is given by \(L = V(f)\) with \(f = x_0+\cdots +x_m\) for some \(m \in \{0,1,\ldots ,n\}\). Its linear matroid is

$$\begin{aligned} \mathcal {M}_L = \{\emptyset , \{0\}, \{1\}, \ldots , \{m\}\}. \end{aligned}$$

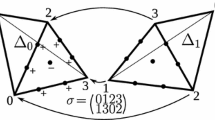

The components of this matroid are the set \(\{0,1,\ldots ,m\}\) and the singletons \(\{i\}\) for \(i \ge m+1\). The matroid \(\mathcal {M}_L\) has no coloops for \(m \ge 1\) and the unique coloop 0 if \(m = 0\). Then Theorem 2.6 shows \(\deg L^{\circ r} = r^{m-1}\) for \(m \ge 1\), and \(\deg L^{\circ r} = 1\) for \(m = 0\). For \(m = 3\), \(n = 3\) and \(r=2\), we obtain a quartic surface, which we illustrate in Fig. 1.

3 Hypersurfaces

In this section, we study the coordinate-wise powers of hypersurfaces. Here, by a hypersurface, we mean a pure codimension 1 variety. In particular, hypersurfaces are assumed to be reduced, but are allowed to have multiple irreducible components. We describe a way to find the explicit equation describing the image of the given hypersurface under the morphism \(\varphi _r\). We define generalised power sum symmetric polynomials and we give a relation between duality and reciprocity of hypersurfaces defined by them. Finally, we raise the question whether and how the explicit description of coordinate-wise powers of hypersurfaces may lead to results on the coordinate-wise powers for arbitrary varieties.

3.1 The defining equation

The defining equation of a degree d hypersurface is a square-free (i.e. reduced) polynomial unique up to scaling, corresponding to a unique \(f \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\). We work with points in \({\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\), i.e. polynomials up to scaling. We do not always make explicit which degree d we are talking about if it is irrelevant to the discussion. The product of \(f \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\) and \(g \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_{d'})\) is well-defined up to scaling, i.e. as an element \(fg \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_{d+d'})\). Similarly, we speak of irreducible factors, etc., of elements of \({\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\).

Since the finite morphism \(\varphi _r \) preserves dimensions, the coordinate-wise r-th power of a hypersurface is again a hypersurface, leading to the following definition.

Definition 3.1

Let \(f\in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\) be square-free and \(V(f) \subset {\mathbb {P}}^n\) be the corresponding hypersurface. We denote by \(f^{\circ r} \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_{d'})\) the defining equation of the hypersurface \(V(f)^{\circ r}\), i.e.

$$\begin{aligned} V(f^{\circ r}) = V(f)^{\circ r} \subset {\mathbb {P}}^n. \end{aligned}$$

For a given square-free polynomial f, we want to compute \(f^{\circ r}\). To this end, we introduce the following auxiliary notion.

Definition 3.2

Let \(f \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\) be square-free. We define \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f) \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_{d'})\) as follows:

(i) If f is irreducible and \(f \ne x_i\) for all \(i \in \{0,1,\ldots ,n\}\), then we define \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f) \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_{d'})\) to be the product over the orbit \({\mathcal {G}}_{r}\cdot f \subset {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\). For \(f=x_i\), we define \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)\,{:}{=}\,x_i^r.\)

(ii) If \(f=f_1 f_2 \ldots f_m\), where \(f_1, \ldots , f_m\) are the irreducible factors of f, then we define

$$\begin{aligned} {{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)\,{:}{=}\,{{\,\mathrm{lcm}\,}}\{{{\,\mathrm{{\mathfrak {s}}}\,}}_r(f_1), {{\,\mathrm{{\mathfrak {s}}}\,}}_r(f_2), \ldots , {{\,\mathrm{{\mathfrak {s}}}\,}}_r(f_m)\}. \end{aligned}$$

Observe that in case (ii), determining \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)={{\,\mathrm{lcm}\,}}\{{{\,\mathrm{{\mathfrak {s}}}\,}}_r(f_1), {{\,\mathrm{{\mathfrak {s}}}\,}}_r(f_2), \ldots , {{\,\mathrm{{\mathfrak {s}}}\,}}_r(f_m)\}\) is straightforward, assuming the decomposition of f into irreducible factors \(f_1, \ldots , f_m\) is known. Indeed, the irreducible factors of each \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f_i)\) are immediate from case (i) of the definition, so determining the least common multiple does not require any additional factorisation.

Lemma 3.3

Let \(f\in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\) be square-free. Then \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)\in {\mathbb {P}}({\mathbb {C}}[x_0^r,\ldots ,x_n^r]_{d'})\), and the principal ideal generated by \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)\) in the subring \({\mathbb {C}}[x_0^r,\ldots ,x_n^r] \subset {\mathbb {C}}[{\mathbf {x}}]\) is \((f) \cap {\mathbb {C}}[x_0^r,\ldots ,x_n^r]\).

Proof

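It is enough to show the claim for irreducible f, since the general case follows from it. Indeed, if f factors into irreducible factors as \(f = f_1 f_2 \ldots f_m\), then, since f is square-free,

$$\begin{aligned} (f) \cap {\mathbb {C}}[x_0^r,\ldots ,x_n^r] = \bigcap _{j=1}^m \left( (f_j) \cap {\mathbb {C}}[x_0^r,\ldots ,x_n^r]\right) = \bigcap _{j=1}^m ({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f_j)) = ({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)), \end{aligned}$$

where the last equality holds because \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)\) is the least common multiple of the \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f_j)\).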

We now assume that f is irreducible. If \(f = x_i\) for some \(i \in \{0,1,\ldots ,n\}\), then the claim holds trivially by the definition of \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)\). Let \(f \ne x_i\) for all i, and let g be a polynomial representing \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f) \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_{d'})\). By definition, \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)\) is fixed under the action of \({\mathcal {G}}_{r}\), hence \(\tau \cdot g\) is a scalar multiple of g for all \(\tau \in {\mathbb {Z}}_r^{n+1}\). Since no irreducible factor \(\tau \cdot f\) of g is a multiple of \(x_i\), g is not divisible by \(x_i\), so it must contain a monomial not divisible by \(x_i\). This monomial is unchanged by \(\tau ^{\smash [t]{(i)}} = (1, \ldots , 1, \zeta , 1, \ldots , 1) \in {\mathbb {Z}}_r^{n+1}\), where the i-th position of \(\tau ^{\smash [t]{(i)}}\) is a primitive r-th root of unity \(\zeta \). Comparing the coefficients of this monomial in g and in the scalar multiple \(\tau ^{\smash [t]{(i)}} \cdot g\) shows that g is fixed by \(\tau ^{\smash [t]{(i)}}\). Since \(\tau ^{\smash [t]{(0)}}, \tau ^{\smash [t]{(1)}}, \ldots , \tau ^{\smash [t]{(n)}}\) generate the group \({\mathbb {Z}}_r^{n+1}\), we have \(\tau \cdot g = g\) for all \(\tau \in {\mathbb {Z}}_r^{n+1}\). Hence, g lies in the invariant ring \({\mathbb {C}}[{\mathbf {x}}]^{{\mathbb {Z}}_r^{n+1}} = {\mathbb {C}}[x_0^r,\ldots ,x_n^r]\), i.e. \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f) \in {\mathbb {P}}({\mathbb {C}}[x_0^r,\ldots ,x_n^r]_{d^{\prime }})\).

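If \(h \in (f)\) is a polynomial in \({\mathbb {C}}[x_0^r,\ldots ,x_n^r]\), then h is invariant under the action of \({\mathbb {Z}}_r^{n+1}\) on \({\mathbb {C}}[{\mathbf {x}}]\), so \(h \in (\tau \cdot f)\) for all \(\tau \in {\mathcal {G}}_{r}\). Since the distinct elements of the orbit \({\mathcal {G}}_{r}\cdot f\) are irreducible and pairwise non-proportional, their product \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)\) divides h, and the quotient is again invariant, i.e. it lies in \({\mathbb {C}}[x_0^r,\ldots ,x_n^r]\). Hence \(h \in ({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f))\). Conversely, \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)\) is divisible by f and lies in \({\mathbb {C}}[x_0^r,\ldots ,x_n^r]\). We conclude \((f) \cap {\mathbb {C}}[x_0^r,\ldots ,x_n^r] = ({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f))\). \(\square \)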

Based on Definition 3.2 and Lemma 3.3, the following proposition gives a method to find the equation of the coordinate-wise power of a hypersurface.

Proposition 3.4

(Powers of hypersurfaces) Let \(V(f) \subset {\mathbb {P}}^n\) be a hypersurface. The defining equation \(f^{\circ r}\) of its coordinate-wise r-th power is given by replacing each occurrence of \(x_i^r\) in \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)\) by \(x_i\) for all \(i \in \{0,1,\ldots ,n\}\).

Proof

Since \(V(f)^{\circ r} \subset {\mathbb {P}}^n\) is the image of V(f) under \(\varphi _r :{\mathbb {P}}^n \rightarrow {\mathbb {P}}^n\), its ideal \((f^{\circ r}) \subset {\mathbb {C}}[{\mathbf {x}}]\) is the preimage under the ring homomorphism \(\psi :{\mathbb {C}}[{\mathbf {x}}]\rightarrow {\mathbb {C}}[{\mathbf {x}}]\), \(x_i \mapsto x_i^r\) of the ideal \((f) \subset {\mathbb {C}}[{\mathbf {x}}]\). The claim is therefore an immediate consequence of Lemma 3.3. \(\square \)
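Proposition 3.4 translates directly into a small algorithm. The Python sketch below is restricted to r = 2 and to rational coefficients, so that all arithmetic is exact; all names are hypothetical. It stores polynomials as dictionaries from exponent vectors to coefficients, forms the orbit \({\mathcal {G}}_{2}\cdot f\) by sign changes of the variables, identifies polynomials up to scaling by normalising the coefficient of the lexicographically largest monomial to 1, multiplies the orbit to obtain \({{\,\mathrm{{\mathfrak {s}}}\,}}_2(f)\) as in Definition 3.2 (i), and finally halves all exponents. It assumes that f is irreducible and not a coordinate \(x_i\); a general square-free f would additionally require a factorisation and the lcm of Definition 3.2 (ii).

```python
from fractions import Fraction
from itertools import product
from math import prod


def poly_mul(f, g):
    """Product of polynomials stored as {exponent tuple: coefficient}."""
    h = {}
    for ea, ca in f.items():
        for eb, cb in g.items():
            e = tuple(a + b for a, b in zip(ea, eb))
            h[e] = h.get(e, 0) + ca * cb
    return {e: c for e, c in h.items() if c != 0}


def act(signs, f):
    """tau . f for tau = signs in {+1, -1}^{n+1}: substitute x_i -> signs[i] * x_i."""
    return {e: c * prod(s ** k for s, k in zip(signs, e)) for e, c in f.items()}


def normalize(f):
    """Representative of f up to scaling: the lex-largest monomial gets coefficient 1."""
    lead = f[max(f)]
    return {e: Fraction(c) / lead for e, c in f.items()}


def orbit(f):
    """The orbit G_2 . f in P(C[x]_d), as a list of normalized polynomials."""
    n1 = len(next(iter(f)))
    seen = {}
    for signs in product((1, -1), repeat=n1):
        g = normalize(act(signs, f))
        seen[frozenset(g.items())] = g
    return list(seen.values())


def s2(f):
    """s_2(f) for irreducible f that is not a coordinate: the product over the orbit."""
    result = {tuple(0 for _ in next(iter(f))): Fraction(1)}
    for g in orbit(f):
        result = poly_mul(result, g)
    return result


def coordinatewise_square(f):
    """f^{o2}: replace every x_i^2 in s_2(f) by x_i (Proposition 3.4)."""
    h = s2(f)
    if any(k % 2 for e in h for k in e):
        raise ValueError("s_2(f) should lie in C[x_0^2, ..., x_n^2] (Lemma 3.3)")
    return normalize({tuple(k // 2 for k in e): c for e, c in h.items()})


def evaluate(f, point):
    """Exact evaluation of f at a point with rational coordinates."""
    return sum(c * prod(Fraction(x) ** k for x, k in zip(point, e)) for e, c in f.items())
```

Applied to \(f = x_0+x_1+x_2+x_3\), this returns a homogeneous quartic that vanishes at \(\varphi _2(1,2,-4,1) = (1,4,16,1)\), in line with Example 3.5; applied to the circles of Example 3.6 with, for instance, \(a = 0\), \(b = 2\), \(c = 1\), it reproduces the conic of case (ii) up to scaling.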

For clarity, we illustrate the above results for a hyperplane in \({\mathbb {P}}^3\).

Example 3.5

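For \(n=3\) and \(f \,{:}{=}\, x_0+x_1+x_2+x_3 \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_1)\), we have

$$\begin{aligned} {{\,\mathrm{{\mathfrak {s}}}\,}}_2(f) = \prod _{\epsilon _1, \epsilon _2, \epsilon _3 \in \{\pm 1\}} (x_0 + \epsilon _1 x_1 + \epsilon _2 x_2 + \epsilon _3 x_3). \end{aligned}$$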

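Expanding this expression, we obtain a polynomial in \({\mathbb {C}}[x_0^2,x_1^2,x_2^2,x_3^2]\) and, replacing each \(x_i^2\) by \(x_i\), we obtain by Proposition 3.4 that the coordinate-wise square \(V(f)^{\circ 2} \subset {\mathbb {P}}^3\) is the vanishing set of

$$\begin{aligned} f^{\circ 2} = \left( \sum _{i=0}^{3} x_i^2 - 2\sum _{0 \le i < j \le 3} x_i x_j\right) ^2 - 64\, x_0 x_1 x_2 x_3. \end{aligned}$$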

This rational quartic surface is illustrated in Fig. 1. It is a Steiner surface with three singular lines forming the branch locus of \({\left. \varphi _2\right| _{V(f)}} :V(f) \rightarrow V(f)^{\circ 2}\).

Example 3.6

(Squaring the circle) Consider the plane conic \(C = V(f) \subset {\mathbb {P}}^2\) given by \(f \,{:}{=}\, (x_1-ax_0)^2+(x_2-bx_0)^2-(c x_0)^2\) for some \(a,b,c \in {\mathbb {R}}\) with \(c > 0\). In the affine chart \(x_0=1\), this corresponds over the real numbers to the circle with center (a, b) and radius c. Using Proposition 3.4, we show that the coordinate-wise square of the circle \(C \subset {\mathbb {P}}^2\) can be a line, a parabola or a singular quartic curve. See Fig. 2 for an illustration of the following three cases:

-

(i)

If the circle C is centered at the origin (i.e. \(a=b=0\)), then \({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f) = f\) and \(C^{\circ 2} \subset {\mathbb {P}}^2\) is the line defined by the equation \(f^{\circ 2} = x_1+x_2-c^2x_0.\)

-

(ii)

If the center of the circle lies on a coordinate-axis and is not the origin (i.e. \(ab=0\), but \((a,b) \ne (0,0)\)), then \(C^{\circ 2} \subset {\mathbb {P}}^2\) is a conic. Say \(a=0\), then \(C^{\circ 2}\) is defined by the equation \(f^{\circ 2} = (x_1+x_2)^2 + 2(b^2-c^2)x_0x_1-2(b^2+c^2)x_0x_2 + (b^2-c^2)^2x_0^2.\) In the affine chart \(x_0 = 1\), C is a circle and \(C^{\circ 2}\) is a parabola.

-

(iii)

If the center of the circle does not lie on a coordinate-axis, then \(|{\mathcal {G}}_{2}\cdot f| = 4\). Therefore, by Proposition 3.4, \(C^{\circ 2}\) is a quartic plane curve, and its equation can be computed explicitly from the product of the four quadrics in \({\mathcal {G}}_{2}\cdot f\). Being the image of a conic, the quartic curve \(C^{\circ 2}\) is rational, hence it cannot be smooth, since a smooth plane quartic has genus 3. In fact, its singularities are the two points \([0:1:-\,1]\) and \([a^2+b^2:b^2(c^2-a^2-b^2):a^2(c^2-a^2-b^2)]\) in \({\mathbb {P}}^2\). They form the branch locus of \({\left. \varphi _2\right| _{C}} :C \rightarrow C^{\circ 2}\). The point \([0:1:-\,1] \in {\mathbb {P}}^2\) is the image of the two complex points \([0:1:\pm i]\) at infinity lying on all of the four conics \(\tau \cdot C\) for \(\tau \in {\mathcal {G}}_{2}\). The other singular point of \(C^{\circ 2}\) is the image under \(\varphi _2\) of the two intersection points of the two circles C and \(\tau \cdot C\) for \(\tau = [1:-\,1:-\,1] \in {\mathcal {G}}_{2}\) inside the affine chart \(x_0=1.\)
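The computations in Examples 3.5 and 3.6 can be reproduced with a short script. The following Python sketch is our own illustration, not code accompanying this article. It is restricted to \(r=2\), where \({\mathcal {G}}_{2}\) acts by sign changes of the coordinates, and it assumes, as we read Definition 3.2, that \({{\,\mathrm{{\mathfrak {s}}}\,}}_2(f)\) is the product of the distinct elements (up to scaling) of the orbit \({\mathcal {G}}_{2}\cdot f\), with f irreducible and different from every \(x_i\). Polynomials are stored as dictionaries from exponent tuples to rational coefficients; the helper names (sign_orbit, coordinatewise_square, circle) are hypothetical.

```python
from fractions import Fraction
from itertools import product

# A polynomial in x_0, ..., x_n is stored as {exponent tuple: coefficient}.

def mul(p, q):
    """Product of two polynomials."""
    out = {}
    for ea, ca in p.items():
        for eb, cb in q.items():
            e = tuple(i + j for i, j in zip(ea, eb))
            out[e] = out.get(e, 0) + ca * cb
    return {e: c for e, c in out.items() if c != 0}

def act(p, signs):
    """Apply tau in G_2 = {+1, -1}^(n+1): substitute x_i -> signs[i] * x_i."""
    out = {}
    for e, c in p.items():
        odd = sum(ei for ei, si in zip(e, signs) if si < 0)
        out[e] = -c if odd % 2 else c
    return out

def normalize(p):
    """Fix the scaling: the lexicographically smallest monomial gets coefficient 1."""
    lead = Fraction(p[min(p)])
    return {e: Fraction(c) / lead for e, c in p.items()}

def sign_orbit(f):
    """The distinct polynomials (up to scaling) in the orbit G_2 . f."""
    n_vars = len(next(iter(f)))
    orbit = {}
    for signs in product((1, -1), repeat=n_vars):
        g = normalize(act(f, signs))
        orbit[tuple(sorted(g.items()))] = g
    return list(orbit.values())

def coordinatewise_square(f):
    """f^{o2}: multiply the orbit, then substitute x_i^2 -> x_i (r = 2 only)."""
    n_vars = len(next(iter(f)))
    s = {(0,) * n_vars: Fraction(1)}
    for g in sign_orbit(f):
        s = mul(s, g)
    # The product lies in C[x_0^2, ..., x_n^2], so every exponent is even.
    assert all(ei % 2 == 0 for e in s for ei in e)
    return normalize({tuple(ei // 2 for ei in e): c for e, c in s.items()})

def evaluate(p, point):
    """Evaluate p exactly at an integer or rational point."""
    total = Fraction(0)
    for e, c in p.items():
        term = Fraction(c)
        for xi, ei in zip(point, e):
            term *= xi ** ei
        total += term
    return total

def circle(a, b, c):
    """f = (x_1 - a x_0)^2 + (x_2 - b x_0)^2 - (c x_0)^2 from Example 3.6."""
    f = {(0, 2, 0): 1, (0, 0, 2): 1, (1, 1, 0): -2 * a,
         (1, 0, 1): -2 * b, (2, 0, 0): a * a + b * b - c * c}
    return {e: v for e, v in f.items() if v != 0}

# Example 3.5: the plane V(x_0 + x_1 + x_2 + x_3) in P^3.
plane = {(1, 0, 0, 0): 1, (0, 1, 0, 0): 1, (0, 0, 1, 0): 1, (0, 0, 0, 1): 1}
quartic = coordinatewise_square(plane)
print(len(sign_orbit(plane)), max(sum(e) for e in quartic))  # 8 4
```

For the plane of Example 3.5, expanding \(\prod (\sqrt{y_0} \pm \sqrt{y_1} \pm \sqrt{y_2} \pm \sqrt{y_3})\) by hand gives the closed form \((\sum _i y_i^2 - 2\sum _{i<j} y_iy_j)^2 - 64\,y_0y_1y_2y_3\), which can be compared with the output at a few points. For the circles, calling coordinatewise_square(circle(a, b, c)) reproduces the three cases (i)–(iii). Exact rational arithmetic is used so that there is no doubt about whether a coefficient vanishes.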

Remark 3.7

(Newton polytope of \(f^{\circ r}\)) Let \(f \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\) be irreducible and \(f \ne x_i\). Then the Newton polytope of \(f^{\circ r} \) arises from the Newton polytope of f by rescaling according to the cardinality of the orbit \({\mathcal {G}}_{r}\cdot f \subset {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\):

\[{{\,\mathrm{Newt}\,}}(f^{\circ r}) = \frac{|{\mathcal {G}}_{r}\cdot f|}{r}\cdot {{\,\mathrm{Newt}\,}}(f).\]

Indeed, we have \({{\,\mathrm{Newt}\,}}(\tau \cdot f) = {{\,\mathrm{Newt}\,}}(f)\) for all \(\tau \in {\mathcal {G}}_{r}\), and since \({{\,\mathrm{Newt}\,}}(g h) = {{\,\mathrm{Newt}\,}}(g) + {{\,\mathrm{Newt}\,}}(h)\) holds for all polynomials g, h, we have \({{\,\mathrm{Newt}\,}}({{\,\mathrm{{\mathfrak {s}}}\,}}_r(f)) = |{\mathcal {G}}_{r}\cdot f| \cdot {{\,\mathrm{Newt}\,}}(f)\) by Definition 3.2. Replacing \(x_i^r\) by \(x_i\) rescales the Newton polytope with the factor \(\frac{1}{r}\), so the claim follows.
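As a worked instance of this rescaling (our own check), consider the plane from Example 3.5, \(f = x_0+x_1+x_2+x_3\), with \(r=2\). The orbit \({\mathcal {G}}_{2}\cdot f\) consists of the eight forms \(x_0 \pm x_1 \pm x_2 \pm x_3\), and \({{\,\mathrm{Newt}\,}}(f)\) is the standard simplex \(\Delta _3 = \mathrm{conv}(e_0,\ldots ,e_3) \subset {\mathbb {R}}^4\), so

\[{{\,\mathrm{Newt}\,}}(f^{\circ 2}) = \frac{|{\mathcal {G}}_{2}\cdot f|}{2}\cdot {{\,\mathrm{Newt}\,}}(f) = \frac{8}{2}\, \Delta _3 = 4\,\Delta _3 .\]

This is consistent with \(V(f)^{\circ 2}\) being a quartic surface: the vertices \(4e_i\) correspond to the monomials \(x_i^4\), which do occur, since in the product of the eight forms the monomial \(x_i^8\) has coefficient \(\pm 1\).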

3.2 Duals and reciprocals of power sum hypersurfaces

We now highlight the interactions between coordinate-wise powers, dual and reciprocal varieties for the case of power sum hypersurfaces \(V(x_0^p+\cdots +x_n^p) \subset {\mathbb {P}}^n\). Specifically, we explicitly determine all hypersurfaces that arise from power sum hypersurfaces by repeatedly taking duals and reciprocals, and we describe each of them as the coordinate-wise r-th power of some hypersurface. In this subsection, we also allow r to take negative integer values.

Recall that the reciprocal variety \(V(f)^{\circ (-1)}\) of a hypersurface \(V(f) \subset {\mathbb {P}}^n\) not containing any coordinate hyperplane of \({\mathbb {P}}^n\) is defined as the closure of \(\varphi _{-1}(V(f){\setminus } V(x_0 x_1 \ldots x_n))\) in \({\mathbb {P}}^n\). We denote it also by \({{\,\mathrm{{\mathcal {R}}}\,}}V(f)\). For linear spaces, the reciprocal variety and its Chow form have been studied in detail in Kummer and Vinzant (2019).

We also recall the definition of the dual variety of \(V(f) \subset {\mathbb {P}}^n\). Consider the set of hyperplanes in \({\mathbb {P}}^n\) that arise as the projective tangent space at a smooth point of V(f). This is a subset of the dual projective space \(({\mathbb {P}}^n)^*\) and its Zariski closure is the dual variety of V(f), which we denote by \(V(f)^*\) or \({{\,\mathrm{{\mathcal {D}}}\,}}V(f)\). We identify \(({\mathbb {C}}^{n+1})^*\) with \({\mathbb {C}}^{n+1}\) via the standard bilinear form and therefore identify \(({\mathbb {P}}^n)^*\) with \({\mathbb {P}}^n\).

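Consider the power sum polynomial \({\mathfrak {f}}_{p} \,{:}{=}\, x_0^p+\cdots +x_n^p \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_p)\) for \(p \in {\mathbb {N}}\). As before, we regard polynomials only up to scaling. For power sums with negative exponents, we consider the numerator of the rational function:

\[{\mathfrak {f}}_{-p} \,{:}{=}\, (x_0x_1\cdots x_n)^p\,(x_0^{-p}+\cdots +x_n^{-p}) = \sum _{i=0}^{n} \prod _{j \ne i} x_j^p \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_{np}) \quad \text {for } p \in {\mathbb {N}}.\]
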
In particular, \({\mathfrak {f}}_{-1} \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_n)\) is the elementary symmetric polynomial of degree n.

Recall that the morphism \(\varphi _r :{\mathbb {P}}^n \rightarrow {\mathbb {P}}^n\) for \(r > 0\) is finite, hence preserves dimension. Since \(\varphi _{-1} :{\mathbb {P}}^n \dashrightarrow {\mathbb {P}}^n\) is a birational map, the rational map \(\varphi _{-r} = \varphi _{-1} \circ \varphi _r\) also preserves dimensions: \(\dim V({\mathfrak {f}}_{p})^{\circ (-r)} = \dim V({\mathfrak {f}}_{p})\). We therefore extend Definition 3.1 by denoting the defining equation of the hypersurface \(V({\mathfrak {f}}_{p})^{\circ r}\) by \({\mathfrak {f}}_{p}^{\circ r}\) for all \(p,r \in {\mathbb {Z}}{\setminus } \{0\}\). For the constant polynomial \({\mathfrak {f}}_{0} = 1 \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_0) \), we define \({\mathfrak {f}}_{0}^{\circ r} \,{:}{=}\, 1 \) for all \(r \in {\mathbb {Z}}{\setminus } \{0\}\).

Lemma 3.8

For all \(s \in {\mathbb {Z}}\) and \(r, \lambda \in {\mathbb {Z}}{\setminus } \{0\}\), we have \({\mathfrak {f}}_{\lambda s}^{\circ (\lambda r)} = {\mathfrak {f}}_{s}^{\circ r}\).

Proof

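For \(\lambda > 0\), we have \(\varphi _{\lambda }^{-1} (V({\mathfrak {f}}_{s})) = V({\mathfrak {f}}_{\lambda s})\), hence

\[V({\mathfrak {f}}_{\lambda s})^{\circ (\lambda r)} = \varphi _r\big (\varphi _\lambda (\varphi _\lambda ^{-1}(V({\mathfrak {f}}_{s})))\big ) = \varphi _r(V({\mathfrak {f}}_{s})) = V({\mathfrak {f}}_{s})^{\circ r},\]

where we have used the surjectivity of \(\varphi _\lambda :{\mathbb {P}}^n \rightarrow {\mathbb {P}}^n\). For \(\lambda < 0\), we use the above to see

\[V({\mathfrak {f}}_{\lambda s})^{\circ (\lambda r)} = V({\mathfrak {f}}_{(-\lambda )(-s)})^{\circ ((-\lambda )(-r))} = V({\mathfrak {f}}_{-s})^{\circ (-r)} = \big ({{\,\mathrm{{\mathcal {R}}}\,}}V({\mathfrak {f}}_{-s})\big )^{\circ r}.\]
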
The reciprocal variety of \(V({\mathfrak {f}}_{-s})\) is \(V({\mathfrak {f}}_{s})\) for all \(s \in {\mathbb {Z}}\). Hence, \(V({\mathfrak {f}}_{\lambda s}^{\circ (\lambda r)}) = V({\mathfrak {f}}_{s})^{\circ r}\). \(\square \)

This naturally leads us to our next definition.

Definition 3.9

(Generalised power sum polynomial) For any rational number \(p = \frac{s}{r} \in {\mathbb {Q}}\) (\(r,s \in {\mathbb {Z}}\), \(r \ne 0\)), we define the generalised power sum polynomial \({\mathfrak {f}}_{p} \,{:}{=}\, {\mathfrak {f}}_{s}^{\circ r} \in {\mathbb {P}}({\mathbb {C}}[{\mathbf {x}}]_d)\).

By Lemma 3.8, the generalised power sum polynomial \({\mathfrak {f}}_{p}\) is well-defined. With this definition, we get the following duality result for hypersurfaces generalising Example 4.16 in Gelfand et al. (1994).

Proposition 3.10

(Duality of generalised power sum hypersurfaces) Let \(p, q \in {\mathbb {Q}}{\setminus } \{0\}\) be such that \(\frac{1}{p}+\frac{1}{q} = 1\). Then \(V({\mathfrak {f}}_{p})^* = V({\mathfrak {f}}_{q})\).

Proof

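Write \(p = \frac{s}{r}\) with \(r \in {\mathbb {Z}}{\setminus } \{0\}\), \(s \in {\mathbb {Z}}_{>0}\). Let \(b \in V({\mathfrak {f}}_{p}) = \varphi _r(V({\mathfrak {f}}_{s}))\) be a smooth point of \(V({\mathfrak {f}}_{p}) {\setminus } V(x_0 x_1 \ldots x_n)\), and let \(a \in V({\mathfrak {f}}_{s}) {\setminus } V(x_0 x_1 \ldots x_n)\) be such that \(b = \varphi _r(a)\). The morphism \(\varphi _r :{\mathbb {P}}^n {\setminus } V(x_0 x_1 \ldots x_n) \rightarrow {\mathbb {P}}^n {\setminus } V(x_0 x_1 \ldots x_n)\) induces a linear isomorphism on projective tangent spaces \(\mathbb {T}_a {\mathbb {P}}^n = {\mathbb {P}}^n \rightarrow {\mathbb {P}}^n = \mathbb {T}_{b} {\mathbb {P}}^n\) given by \({{\,\mathrm{diag}\,}}(ra_0^{r-1}, ra_1^{r-1},\ldots , ra_n^{r-1})\). This maps

\[\mathbb {T}_a V({\mathfrak {f}}_{s}) = V\Big (\sum _{i=0}^n \partial _i{\mathfrak {f}}_{s}(a)\, x_i\Big ) \;\longmapsto \; \mathbb {T}_b V({\mathfrak {f}}_{p}) = V\Big (\sum _{i=0}^n a_i^{1-r}\,\partial _i{\mathfrak {f}}_{s}(a)\, x_i\Big ).\]

In particular, \(V({\mathfrak {f}}_{p})^* \subset {\mathbb {P}}^n\) is the image of the rational map

\[V({\mathfrak {f}}_{s}) \dashrightarrow ({\mathbb {P}}^n)^* = {\mathbb {P}}^n, \quad a \mapsto \big [a_0^{1-r}\,\partial _0{\mathfrak {f}}_{s}(a) : \ldots : a_n^{1-r}\,\partial _n{\mathfrak {f}}_{s}(a)\big ].\]
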
From \(\partial _i {\mathfrak {f}}_{s} = s x_i^{s-1}\) we conclude that \(V({\mathfrak {f}}_{p})^* = \varphi _{s-r}(V({\mathfrak {f}}_{s})) = V({\mathfrak {f}}_{s/(s-r)}) = V({\mathfrak {f}}_{q})\). \(\square \)

Remark 3.11

This statement can be understood as an algebraic analogue of the duality theory for \(\ell ^p\)-spaces \(({\mathbb {R}}^n, |\cdot |_p)\). Indeed, let \(p,q > 1\) be rational with \(\frac{1}{p}+\frac{1}{q} = 1\). The unit sphere in \(({\mathbb {R}}^n,|\cdot |_p)\) is \(U_p \,{:}{=}\, \{v \in {\mathbb {R}}^n \mid \sum _{i} |v_i|^p = 1\}\) and, by \(\ell ^p\)-duality, hyperplanes tangent to \(U_p\) correspond to the points on the unit sphere \(U_q\) of the dual normed vector space \(({\mathbb {R}}^n, |\cdot |_q)\). The complex projective analogues of the unit spheres \(U_p \subset {\mathbb {R}}^n\) are the generalised power sum hypersurfaces \(V({\mathfrak {f}}_{p}) \subset {\mathbb {P}}^n\), and Proposition 3.10 is the analogous duality statement in this setting.
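The real picture behind this remark can be checked numerically. The sketch below is our own illustration and is restricted to points with non-negative coordinates, so that \(|v_i|^p = v_i^p\). It takes a point v on \(U_p\), forms the vector \(w = (v_i^{p-1})_i\), which defines the tangent hyperplane \(\{y \mid \sum _i v_i^{p-1} y_i = 1\}\) at v, and confirms that w lies on \(U_q\); this uses \((p-1)q = p\), which is equivalent to \(\frac{1}{p}+\frac{1}{q} = 1\). The helper names are hypothetical.

```python
import math

def dual_point(v, p):
    """w = (v_i^(p-1)): the tangent hyperplane to U_p at v >= 0 is {y : <w, y> = 1}.

    The right-hand side is 1 because <w, v> = sum_i v_i^p = 1 on U_p.
    """
    return [x ** (p - 1) for x in v]

def on_sphere(w, q):
    """Is sum_i |w_i|^q equal to 1 (up to rounding)?"""
    return math.isclose(sum(abs(x) ** q for x in w), 1.0, rel_tol=1e-12)

p = 3.0
q = p / (p - 1)                          # 1/p + 1/q = 1, so q = 3/2
v = [0.5, (1 - 0.5 ** 3) ** (1 / 3)]     # a point of U_3 with non-negative coordinates
w = dual_point(v, p)
print(on_sphere(v, p), on_sphere(w, q))  # True True
```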

Using Proposition 3.4 we can compute \({\mathfrak {f}}_{p}\) for any \(p \in {\mathbb {Q}}\) explicitly. In particular, we make the following observation:

Lemma 3.12

Let \(s \in {\mathbb {N}}\) and \(r \in {\mathbb {Z}}{\setminus } \{0\}\) be relatively prime. Then \({\mathfrak {f}}_{s/r}\) arises from \({\mathfrak {f}}_{1/r}\) by substituting \(x_i \mapsto x_i^s\) for all \(i \in \{0,1,\ldots ,n\}\).

Proof

This follows from the explicit description of the polynomials \({\mathfrak {f}}_{s/r} = {\mathfrak {f}}_{s}^{\circ r}\) and \({\mathfrak {f}}_{1/r} = {\mathfrak {f}}_{1}^{\circ r}\) given by Proposition 3.4. \(\square \)

By Lemma 3.12, in order to determine the generalised power sum polynomials \({\mathfrak {f}}_{p}\), we may restrict our attention to \({\mathfrak {f}}_{1/r}\). These have a particular geometric interpretation as repeated dual-reciprocals of the linear space \(V(x_0+x_1+\cdots +x_n) \subset {\mathbb {P}}^n\) as in Corollary 3.14.

Theorem 3.13

The repeated dual-reciprocals of generalised power sum hypersurfaces \(V({\mathfrak {f}}_{p})\) are given by

Proof

We show the claim for \(V({\mathfrak {f}}_{p})\) by induction on k. For \(k=0\), the claim is trivial. For \(k > 0\), we get by induction hypothesis:

where \((*)\) holds by Lemma 3.8 and \((**)\) by Proposition 3.10. From this, we also see

concluding the proof. \(\square \)

Corollary 3.14

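For \(r > 0\), the repeated alternating reciprocals and duals of the linear space \(V({\mathfrak {f}}_{1}) \subset {\mathbb {P}}^n\) are the coordinate-wise powers of \(V({\mathfrak {f}}_{1})\) given as

\[({{\,\mathrm{{\mathcal {D}}}\,}}{{\,\mathrm{{\mathcal {R}}}\,}})^{r-1}\, V({\mathfrak {f}}_{1}) = V({\mathfrak {f}}_{1/r}) = V({\mathfrak {f}}_{1})^{\circ r}, \qquad {{\,\mathrm{{\mathcal {R}}}\,}}({{\,\mathrm{{\mathcal {D}}}\,}}{{\,\mathrm{{\mathcal {R}}}\,}})^{r-1}\, V({\mathfrak {f}}_{1}) = V({\mathfrak {f}}_{-1/r}) = V({\mathfrak {f}}_{1})^{\circ (-r)}.\]
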
Example 3.15

Let \(n = 3\) and \(f\,{:}{=}\,x_0+x_1+x_2+x_3\). The reciprocal variety of the plane \(V(f)\subset {\mathbb {P}}^3\) is given by \({\mathfrak {f}}_{-1} = x_1 x_2 x_3+x_0x_2x_3+x_0x_1x_3+x_0x_1x_2\). By Proposition 3.10, its dual is \(V({\mathfrak {f}}_{1/2}) = V({\mathfrak {f}}_{1})^{\circ 2} \subset {\mathbb {P}}^3\), which is the quartic surface from Example 3.5. Higher iterated dual-reciprocal varieties of V(f) can be computed explicitly, analogously to Example 3.5, via Corollary 3.14. For instance, the surface \({{\,\mathrm{{\mathcal {D}}}\,}}{{\,\mathrm{{\mathcal {R}}}\,}}{{\,\mathrm{{\mathcal {D}}}\,}}{{\,\mathrm{{\mathcal {R}}}\,}}V(f) \subset {\mathbb {P}}^3\) is the coordinate-wise cube of V(f), which is the degree 9 surface illustrated in Fig. 3.
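Proposition 3.10, together with the relation \({{\,\mathrm{{\mathcal {R}}}\,}}V({\mathfrak {f}}_{p}) = V({\mathfrak {f}}_{-p})\) (which follows from Definition 3.9, since \(\varphi _{-1} \circ \varphi _r = \varphi _{-r}\)), reduces iterated duals and reciprocals of generalised power sum hypersurfaces to arithmetic on the exponent p. A minimal Python sketch of this bookkeeping with exact fractions; the function names are our own:

```python
from fractions import Fraction

def reciprocal(p):
    """R V(f_p) = V(f_{-p}): coordinate-wise inversion negates the exponent."""
    return -Fraction(p)

def dual(p):
    """Proposition 3.10: V(f_p)^* = V(f_q) with 1/p + 1/q = 1, i.e. q = p/(p-1)."""
    p = Fraction(p)
    if p in (0, 1):
        raise ValueError("Proposition 3.10 requires p and q to be nonzero")
    return p / (p - 1)

def apply_word(word, p):
    """Apply a word in 'D' and 'R' to V(f_p); as for composed maps, the rightmost letter acts first."""
    p = Fraction(p)
    for letter in reversed(word):
        if letter == "D":
            p = dual(p)
        elif letter == "R":
            p = reciprocal(p)
        else:
            raise ValueError(f"unknown operator {letter!r}")
    return p

# Example 3.15: D R D R V(f_1) = V(f_{1/3}), the coordinate-wise cube of V(f_1).
print(apply_word("DRDR", 1))  # 1/3
```

Each step \({{\,\mathrm{{\mathcal {D}}}\,}}{{\,\mathrm{{\mathcal {R}}}\,}}\) sends \(\frac{1}{p}\) to \(\frac{1}{p}+1\), which is why \(({{\,\mathrm{{\mathcal {D}}}\,}}{{\,\mathrm{{\mathcal {R}}}\,}})^k V({\mathfrak {f}}_{1}) = V({\mathfrak {f}}_{1/(k+1)})\). The case \(p = 1\) is excluded because the dual of the hyperplane \(V({\mathfrak {f}}_{1})\) is a point rather than a hypersurface.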

Remark 3.16

(Coordinate-wise rational powers) The construction of the generalised power sum hypersurfaces \(V({\mathfrak {f}}_{p})\) may be understood in a broader context of coordinate-wise powers with rational exponents: For a subvariety \(X \subset {\mathbb {P}}^n\), and a rational number \(p = r/s\) with \(r \in {\mathbb {Z}}\) and \(s \in {\mathbb {Z}}_{>0}\) relatively prime, we may define the coordinate-wise p-th power \(X^{\circ p} \,{:}{=}\, \varphi _s^{-1}(X^{\circ r}) = (\varphi _s^{-1}(X))^{\circ r}\). This is a natural generalisation of the coordinate-wise integer powers \(X^{\circ r}\). With this definition, the generalised power sum hypersurface \(V({\mathfrak {f}}_{p})\) is the 1/p-th coordinate-wise power of \(V({\mathfrak {f}}_{1})\). While we focus on coordinate-wise powers with integral exponents in this article, many results easily transfer to the case of rational exponents. For instance, the defining ideal of \(X^{\circ (r/s)}\) is obtained by substituting \(x_i \mapsto x_i^s\) in each of the generators of the vanishing ideal of \(X^{\circ r}\). In particular, the numbers of minimal generators of these two ideals agree.

3.3 From hypersurfaces to arbitrary varieties?

We briefly discuss to what extent Proposition 3.4 can be used to determine coordinate-wise powers of arbitrary varieties, and mention the difficulties involved in this approach.

Let \(r > 0\) and let \(f_1, \ldots , f_m\) be homogeneous polynomials vanishing on a variety \(X \subset {\mathbb {P}}^n\). Their coordinate-wise r-th powers give rise to the inclusion \(X^{\circ r} \subset V(f_1^{\circ r}, \ldots , f_m^{\circ r})\). We may ask when equality holds, which leads us to the following definition, reminiscent of the notion of tropical bases in tropical geometry (Maclagan and Sturmfels 2015, Section 2.6).

Definition 3.17

(Power basis) A set of homogeneous polynomials \(\{f_1, \ldots , f_m\} \subset {\mathbb {C}}[{\mathbf {x}}]\) is an r-th power basis of the ideal \(I = (f_1, \ldots , f_m)\) if the following equality of sets holds:

\[V(I)^{\circ r} = V(f_1^{\circ r}, \ldots , f_m^{\circ r}).\]

We show the existence of such power bases for a given ideal in the following proposition.

Proposition 3.18

(Existence of power bases) Let \(I \subset {\mathbb {C}}[{\mathbf {x}}]\) be a homogeneous ideal. Then for each \(r > 0\), there exists an r-th power basis of I.

Proof

Let J denote the defining ideal of \(V(I)^{\circ r} \subset {\mathbb {P}}^n\). If J is generated by homogeneous polynomials \(g_1, \ldots , g_m \in {\mathbb {C}}[{\mathbf {x}}]\), we define \(f_1, \ldots , f_m \in {\mathbb {C}}[{\mathbf {x}}]\) to be their images under the ring homomorphism \({\mathbb {C}}[{\mathbf {x}}]\rightarrow {\mathbb {C}}[{\mathbf {x}}]\), \(x_i \mapsto x_i^r\). Then \(f_i\in I\), since

On the other hand, we have \(f_i^{\circ r} = g_i\), since \(V(f_i)^{\circ r} = \varphi _r(\varphi _r^{-1}(V(g_i))) = V(g_i)\) by surjectivity of \(\varphi _r\). Therefore, \(f_1^{\circ r}, \ldots , f_m^{\circ r}\) generate J. Enlarging \(f_1, \ldots , f_m\) to a generating set of I gives an r-th power basis of I. \(\square \)

Remark 3.19

Proposition 3.18 shows the existence of r-th power bases, but determining one explicitly a priori is nontrivial. For the variety of orthostochastic matrices \({\mathbb {O}}(m)^{\circ 2}\) as in Sect. 2.1, it is natural to suspect that the quadratic equations defining the variety of orthogonal matrices form a power basis for \(r = 2\). This is the question discussed in (Chterental and Đoković 2008, Section 3), where it was shown that this is true for \(m = 3\), but not for \(m \ge 6\). The cases \(m = 4,5\) are an open problem (Chterental and Đoković 2008, Problem 6.2). Our results on the degree of \({\mathbb {O}}(m)^{\circ 2}\) reduce this open problem to checking whether explicitly given polynomials \(f_1^{\circ 2}, \ldots , f_k^{\circ 2}\) describe an irreducible variety of the correct dimension and degree. Straightforward implementations of this computation seem to be beyond the reach of current computer algebra software.

In the following two examples, we will see that even in the case of squaring codimension 2 linear spaces, obvious candidates for \(f_1, \ldots , f_m\) do not form a power basis.

Example 3.20

Let \(I \,{:}{=}\, (f_1, f_2) \subset {\mathbb {C}}[{\mathbf {x}}]\) be the ideal defining the line in \({\mathbb {P}}^3\) that is given by \(f_1 \,{:}{=}\, x_0+x_1+x_2+x_3\) and \(f_2 \,{:}{=}\, x_1+2x_2+3x_3\).

The polynomials \(f_1^{\circ 2}\) and \(f_2^{\circ 2}\) have degrees 4 and 2, respectively, by Proposition 3.4. Note that the polynomial \(f_3 \,{:}{=}\, 3x_0^2-x_1^2+x_2^2-3x_3^2 = 3(x_0-x_1-x_2-x_3)f_1+2(x_1+x_2)f_2\) also lies in I, so the ideal of \(V(I)^{\circ 2}\) contains the linear form \(f_3^{\circ 2} = 3x_0-x_1+x_2-3x_3\). The polynomials \(f_1,f_2\) do not form a power basis of I. In fact, one can check that \(V(f_1^{\circ 2}, f_2^{\circ 2}) \subset {\mathbb {P}}^3\) is the union of four rational quadratic curves, one of which is \(V(I)^{\circ 2}\), see Fig. 4 for an illustration. A power basis of I is given by \(f_1,f_2,f_3\).
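The claims in this example are easy to test with exact integer arithmetic. The following Python sketch is our own illustration, and all names in it are hypothetical. It checks the stated identity for \(f_3\) on a grid of integer points, verifies that the line V(I), parametrised as \((s,t) \mapsto (s+2t, -2s-3t, s, t)\), squares into the plane \(V(f_3^{\circ 2})\), and exhibits the point \(y = [9:1:1:1]\). This point is the square of \([-3:1:1:1] \in V(f_1)\) and of \([-3:1:1:-1] \in V(f_2)\), hence lies in \(V(f_1^{\circ 2}, f_2^{\circ 2})\) by Proposition 3.4, but \(f_3^{\circ 2}(y) = 24 \ne 0\), so \(y \notin V(I)^{\circ 2}\).

```python
from itertools import product

def f1(x):
    return x[0] + x[1] + x[2] + x[3]

def f2(x):
    return x[1] + 2 * x[2] + 3 * x[3]

def f3(x):
    return 3 * x[0] ** 2 - x[1] ** 2 + x[2] ** 2 - 3 * x[3] ** 2

def f3_squared(y):
    """f_3^{o2}: f_3 only involves the x_i^2, so substitute x_i^2 -> y_i."""
    return 3 * y[0] - y[1] + y[2] - 3 * y[3]

def square(x):
    """The coordinate-wise squaring map phi_2 on affine representatives."""
    return tuple(t * t for t in x)

def line_point(s, t):
    """Parametrisation of the line V(f_1, f_2)."""
    return (s + 2 * t, -2 * s - 3 * t, s, t)

# f_3 lies in I: check the identity of the example on a grid of integer points.
identity_holds = all(
    f3(x) == 3 * (x[0] - x[1] - x[2] - x[3]) * f1(x) + 2 * (x[1] + x[2]) * f2(x)
    for x in product(range(-2, 3), repeat=4))

# A point of V(f_1^{o2}, f_2^{o2}) outside V(I)^{o2}.
x = (-3, 1, 1, 1)          # lies on V(f_1)
x_signed = (-3, 1, 1, -1)  # a sign change of x lying on V(f_2)
y = square(x)              # equals square(x_signed) = (9, 1, 1, 1)
print(identity_holds, f1(x), f2(x_signed), f3_squared(y))  # True 0 0 24
```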

Example 3.21

Another natural choice for polynomials \(f_1, \ldots , f_m\) in the ideal of a linear space \(X \subset {\mathbb {P}}^n\) consists of the circuit forms, i.e. linear forms vanishing on X that are minimal with respect to the set of occurring variables. However, for

these circuit forms are

and one can check that the point \([16:16:1:36:9] \in {\mathbb {P}}^4\) lies in \(V(f_1^{\circ 2}, \ldots , f_5^{\circ 2})\), but not in \(X^{\circ 2}\). In particular, \(f_1, \ldots , f_5\) is not an r-th power basis for \(r = 2\).

We have seen in Examples 3.20 and 3.21 that even for the case of linear spaces of codimension 2 it is not an easy task to a priori identify an r-th power basis.

The following proposition shows how one can straightforwardly find a very large r-th power basis of an ideal I, without first computing the ideal of \(V(I)^{\circ r}\).

Proposition 3.22

If \(g_1, \ldots , g_k \in {\mathbb {C}}[{\mathbf {x}}]_d\) are forms of equal degree d, then taking \((k-1)r^n+1\) general linear combinations of \(g_1, \ldots , g_k\) produces an r-th power basis of \((g_1, \ldots , g_k)\).

Proof

We assume that \(g_1, \ldots , g_k\) are linearly independent, or else we can replace them with a linearly independent subset. For \(m \,{:}{=}\, (k-1)r^n+1\), let \(f_1, \ldots , f_m \in \langle g_1, \ldots , g_k \rangle \) be such that no k of them are linearly dependent.

For \(X \,{:}{=}\, V(g_1, \ldots , g_k)\), we will show that \(V(f_1^{\circ r}, \ldots , f_m^{\circ r}) = X^{\circ r}\) by comparing the preimages of both sides under \(\varphi _r :{\mathbb {P}}^n \rightarrow {\mathbb {P}}^n\).

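By Proposition 2.1, we have \(\varphi _r^{-1}(X^{\circ r}) = \bigcup _{\tau \in {\mathcal {G}}_{r}} \tau \cdot X\) and

\[\varphi _r^{-1}\big (V(f_1^{\circ r}, \ldots , f_m^{\circ r})\big ) = \bigcap _{i=1}^{m} \varphi _r^{-1}\big (V(f_i)^{\circ r}\big ) = \bigcap _{i=1}^{m} \bigcup _{\tau \in {\mathcal {G}}_{r}} \tau \cdot V(f_i).\]
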
Let \(p \in \varphi _r^{-1} (V(f_1^{\circ r}, \ldots , f_m^{\circ r})) \subset {\mathbb {P}}^n\). By the last equality above, for each \(i \in \{1,\ldots ,m\}\) there exists some \(\tau \in {\mathcal {G}}_{r}\) with \(p \in \tau \cdot V(f_i)\). Since \(m > (k-1)|{\mathcal {G}}_{r}|\), by the pigeonhole principle there exist \(\tau \in {\mathcal {G}}_{r}\) and distinct \(i_1, i_2,\ldots , i_k \in \{1,\ldots ,m\}\) with \(p \in \bigcap _{j=1}^k \tau \cdot V(f_{i_j}) = \tau \cdot V(f_{i_1},\ldots ,f_{i_k})\). Since no k of the \(f_i\) are linearly dependent by assumption, \(f_{i_1}, \ldots , f_{i_k}\) span \(\langle g_1, \ldots , g_k\rangle \). Therefore, \(V(f_{i_1},\ldots ,f_{i_k}) = X\), and hence \(p \in \tau \cdot X \subset \varphi _r^{-1}(X^{\circ r})\). This shows \(\varphi _r^{-1} (V(f_1^{\circ r}, \ldots , f_m^{\circ r})) \subset \varphi _r^{-1}(X^{\circ r})\). The reverse inclusion is trivial. Since \(\varphi _r\) is surjective, equality of the preimages implies \(V(f_1^{\circ r}, \ldots , f_m^{\circ r}) = X^{\circ r}\). \(\square \)

In particular, Proposition 3.22 shows that for a subvariety of \({\mathbb {P}}^n\) defined by k forms of degree d, its coordinate-wise r-th power can be described set-theoretically by the vanishing of \((k-1)r^n+1\) forms of degree \(\le d r^{n-1}\). However, we will see in Sect. 4 that for linear spaces this bound is rather weak in many cases and should be expected to allow dramatic refinement in general. We raise the following as a broad open question:

Question 3.23

When does a set of homogeneous polynomials form an r-th power basis? For a given ideal I, do there exist polynomials \(f_1, \ldots , f_m \in I\) that simultaneously form an r-th power basis for all r?
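To get a sense of the size of the power basis produced by Proposition 3.22, consider again the line of Example 3.20, which is cut out by \(k=2\) linear forms (\(d=1\)) in \({\mathbb {P}}^3\) (\(n=3\)), and take \(r=2\). The proposition then provides

\[(k-1)r^n+1 = 1\cdot 2^3+1 = 9 \ \text {forms, whose coordinate-wise squares have degree at most } d\,r^{n-1} = 4,\]

whereas Example 3.20 exhibits a power basis consisting of only the three polynomials \(f_1, f_2, f_3\).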

4 Linear spaces

In this section, we specialise to linear spaces \(L \subset {\mathbb {P}}^n\) and investigate their coordinate-wise powers \(L^{\circ r}\). First, we highlight the dependence of \(L^{\circ r}\) on the geometry of a finite point configuration associated to \(L \subset {\mathbb {P}}^n\). For \(r=2\), we point out its relation to symmetric matrices with degenerate eigenvalues. Based on this, we classify the coordinate-wise squares of lines and planes. Finally, we turn to the case of squaring linear spaces in high-dimensional ambient space.

4.1 Point configurations

We study the defining ideal of \(L^{\circ r}\) for a k-dimensional linear space \(L \subset {\mathbb {P}}^n\). The degrees of its minimal generators do not change under rescaling and permuting coordinates of \({\mathbb {P}}^n\), i.e. under the actions of the algebraic torus \(\mathbb {G}_m^{n+1} = ({\mathbb {C}}^*)^{n+1}\) and the symmetric group \(\mathfrak {S}_{n+1}\). Fixing a \((k+1)\)-dimensional vector space W, we have the identification

Hence, we may express coordinate-wise powers of a linear space L in terms of the corresponding finite multi-set \(Z \subset {\mathbb {P}}W^*\). In fact, it is easy to check that the degrees of the minimal generators of the defining ideal only depend on the underlying set Z, forgetting repetitions in the multi-set. We study coordinate-wise powers of a linear space in terms of the corresponding non-degenerate finite point configuration.

For the entirety of Sect. 4, we establish the following notation: Let \(L\subset {\mathbb {P}}^n\) be a linear space of dimension k. We understand L as the image of a chosen linear embedding \(\iota :{\mathbb {P}}W \hookrightarrow {\mathbb {P}}^n\), \([w] \mapsto [\ell _0(w):\ldots :\ell _n(w)]\), where W is a \((k+1)\)-dimensional vector space and \(\ell _0, \ldots , \ell _n \in W^*\) are linear forms defining \(\iota \). Consider the finite set of points \(Z \subset {\mathbb {P}}W^*\) given by

\[Z \,{:}{=}\, \{[\ell _i] \mid i \in \{0,1,\ldots ,n\},\ \ell _i \ne 0\} \subset {\mathbb {P}}W^*.\]

Since \(\ell _0, \ell _1, \ldots , \ell _n \in W^*\) define the linear embedding \(\iota \), they cannot have a common zero in W. Hence, the linear span of Z is the whole space \({\mathbb {P}}W^*\). We denote by \(I(Z) \subset {{\,\mathrm{Sym}\,}}^\bullet W\) the defining ideal of \(Z \subset {\mathbb {P}}W^*\). The subspace of degree r forms vanishing on Z is written as \(I(Z)_r \subset {{\,\mathrm{Sym}\,}}^r W\).

The main technical tool is the following observation that \(L^{\circ r} \subset {\mathbb {P}}^n\) equals (up to a linear re-embedding) the image of the r-th Veronese variety \(\nu _r({\mathbb {P}}W)\subset {\mathbb {P}}{{\,\mathrm{Sym}\,}}^r W\) under the projection from the linear space \({\mathbb {P}}(I(Z)_r) \subset {\mathbb {P}}{{\,\mathrm{Sym}\,}}^r W\).

Lemma 4.1

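The diagram

\[\begin{array}{ccc} {\mathbb {P}}W & \xrightarrow {\ \iota \ } & {\mathbb {P}}^n \\ {\scriptstyle \psi }\big \downarrow & & \big \downarrow {\scriptstyle \varphi _r} \\ {\mathbb {P}}({{\,\mathrm{Sym}\,}}^r W/I(Z)_r) & \xrightarrow {\ \vartheta \ } & {\mathbb {P}}^n \end{array} \qquad \text {with} \qquad \psi :{\mathbb {P}}W \xrightarrow {\ \nu _r\ } \nu _r({\mathbb {P}}W) \xrightarrow {\ \pi \ } {\mathbb {P}}({{\,\mathrm{Sym}\,}}^r W/I(Z)_r)\]

commutes, where \(\nu _r\) is the r-th Veronese embedding, \(\pi \) is the linear projection of \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^r W\) from the linear space \({\mathbb {P}}(I(Z)_r)\), \(\psi \) is a morphism and \(\vartheta \) is a linear embedding.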

Proof

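We observe that the morphism \(\varphi _r \circ \iota \) is given by

\[\varphi _r \circ \iota :{\mathbb {P}}W \rightarrow {\mathbb {P}}^n, \quad [w] \mapsto [\ell _0(w)^r : \ell _1(w)^r : \cdots : \ell _n(w)^r].\]
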
The \(n+1\) elements \(\ell _i^r \in {{\,\mathrm{Sym}\,}}^r W^*\) correspond to a linear map \(\chi :{{\,\mathrm{Sym}\,}}^r W \rightarrow {\mathbb {C}}^{n+1}\) via the natural identification \(({{\,\mathrm{Sym}\,}}^r W^*)^{n+1} = {{\,\mathrm{Hom}\,}}_{\mathbb {C}}({{\,\mathrm{Sym}\,}}^r W, {\mathbb {C}}^{n+1})\).

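The rational map \(\bar{\chi }\) between projective spaces corresponding to the linear map \(\chi \) gives the following commuting diagram:

\[\begin{array}{ccccc} {\mathbb {P}}W & \xrightarrow {\ \nu _r\ } & {\mathbb {P}}{{\,\mathrm{Sym}\,}}^r W & \overset{\pi }{\dashrightarrow } & {\mathbb {P}}({{\,\mathrm{Sym}\,}}^r W/\ker \chi ) \\ {\scriptstyle \iota }\big \downarrow & & {\scriptstyle \bar{\chi }}\big \downarrow & \swarrow {\scriptstyle \vartheta } & \\ {\mathbb {P}}^n & \xrightarrow {\ \varphi _r\ } & {\mathbb {P}}^n & & \end{array}\]
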
where \(\vartheta \) is the linear embedding of projective spaces induced by factoring \(\chi \) over \({{\,\mathrm{Sym}\,}}^r W/\ker \chi \). In particular, \(\nu _r({\mathbb {P}}W) \cap {\mathbb {P}}(\ker \chi ) = \emptyset \), since \(\varphi _r \circ \iota \) is defined everywhere on \({\mathbb {P}}W\). Hence, \({\left. \pi \right| _{\nu _r({\mathbb {P}}W)}} :\nu _r({\mathbb {P}}W) \rightarrow {\mathbb {P}}({{\,\mathrm{Sym}\,}}^r W/\ker \chi )\) is a morphism.

Finally, we claim that \(\ker \chi = I(Z)_r\). Once we know this, defining \(\psi \,{:}{=}\, {\left. \pi \right| _{\nu _r({\mathbb {P}}W)}} \circ \nu _r\) completes the claimed diagram.

Let \(f \in {{\,\mathrm{Sym}\,}}^r W\). Naturally identifying W and \(W^{**}\), we may view f as a form of degree r on \(W^*\). Then the condition \(f \in I(Z)_r\) translates to \(f(\ell _i) = 0\) for all i. Viewing f as a symmetric r-linear form \(W^* \times \cdots \times W^* \rightarrow {\mathbb {C}}\), this means \(f(\ell _i, \ldots , \ell _i) = 0\) for all i, and, when f is considered as a linear form on \({{\,\mathrm{Sym}\,}}^r W^*\), it means \(f(\ell _i^r) = 0\) for all i. The latter condition is equivalent to \(f \in \ker \chi \), via the identification of W and \(W^{**}\). We conclude \(I(Z)_r = \ker \chi \). \(\square \)

In particular, we deduce the following:

Proposition 4.2

Let L be a linear space such that the finite set of points Z does not lie on a degree r hypersurface. Then the ideal of \(L^{\circ r}\) is generated by linear and quadratic forms.

Proof

Since \(I(Z)_r = 0\), we deduce from Lemma 4.1 that \(L^{\circ r} = \varphi _r(L)\) is a linear re-embedding of the k-dimensional r-th Veronese variety \(\nu _r({\mathbb {P}}W) \subset {\mathbb {P}}{{\,\mathrm{Sym}\,}}^r W\). The ideal of this Veronese variety is generated by quadrics. Since \(\dim {{\,\mathrm{Sym}\,}}^r W = \binom{k+r}{r}\), the linear re-embedding \(\vartheta :{\mathbb {P}}{{\,\mathrm{Sym}\,}}^r W \hookrightarrow {\mathbb {P}}^n\) adds \(n-\binom{k+r}{r}+1\) linear forms to the ideal. \(\square \)
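The count in Proposition 4.2 is easy to tabulate. The Python sketch below is our own illustration with a hypothetical function name. Besides Proposition 4.2, it assumes the standard fact that the ideal of the Veronese variety \(\nu _r({\mathbb {P}}W)\) is minimally generated by \(\dim {{\,\mathrm{Sym}\,}}^2({{\,\mathrm{Sym}\,}}^r W) - \dim {{\,\mathrm{Sym}\,}}^{2r} W\) quadrics (projective normality of the Veronese embedding). For lines (\(k=1\), \(r=2\)) it returns \(n-2\) linear forms and one quadric, matching Theorem 4.6(ii), and for planes (\(k=2\), \(r=2\)) it returns \(n-5\) linear forms and 6 quadrics, matching Theorem 4.9(i).

```python
from math import comb

def generators_prop_4_2(n, k, r):
    """(number of linear forms, number of quadrics) minimally generating the
    ideal of L^{o r}, for a k-dimensional L in P^n whose point configuration Z
    lies on no hypersurface of degree r (Proposition 4.2).

    Assumes the ideal of the Veronese variety nu_r(P^k) is minimally generated
    by its quadrics, counted as dim Sym^2(Sym^r W) - dim Sym^{2r} W.
    """
    N = comb(k + r, r)                   # dim Sym^r W, with dim W = k + 1
    if n + 1 < N:
        # Z has at most n + 1 points, fewer than dim Sym^r W conditions.
        raise ValueError("Z lies on a degree-r hypersurface in this case")
    linear = n + 1 - N                   # n - binom(k + r, r) + 1
    quadrics = comb(N + 1, 2) - comb(k + 2 * r, 2 * r)
    return linear, quadrics

print(generators_prop_4_2(6, 2, 2))      # (1, 6): a plane in P^6, cf. Theorem 4.9(i)
```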

4.2 Degenerate eigenvalues and squaring

We now specialise to the case of coordinate-wise squaring, i.e. \(r = 2\). This case has special geometric importance, since it corresponds to computing the image of a linear space under the quotient of \({\mathbb {P}}^n\) by the reflection group generated by the reflections in the coordinate hyperplanes. In this subsection, via Proposition 4.3, we point out that the coordinate-wise square of a linear space is closely related to symmetric matrices with a degenerate spectrum of eigenvalues. Here, we interpret \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 {\mathbb {F}}^{k+1}\) (for \({\mathbb {F}}= {\mathbb {R}}\) or \({\mathbb {C}}\)) as the projective space consisting of symmetric \((k+1) \times (k+1)\)-matrices up to scaling with entries in \({\mathbb {F}}\).

Proposition 4.3

Let \(X \subset {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 {\mathbb {R}}^{k+1}\) be the set of real symmetric \((k+1)\times (k+1)\)-matrices with an eigenvalue of multiplicity \(\ge k\). Then the Zariski closure of X in \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 {\mathbb {C}}^{k+1}\) is projectively equivalent to the projective cone over the coordinate-wise square \(L^{\circ 2}\) of any k-dimensional linear space L whose point configuration \(Z \subseteq {\mathbb {P}}W^*\) lies on a unique and smooth quadric.

Proof

Let \(L \subset {\mathbb {P}}^n\) be a k-dimensional linear space such that \(I(Z)_2\) is spanned by a smooth quadric \(q \in {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\). Choosing coordinates of \(W \cong {\mathbb {C}}^{k+1}\), we identify points in \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\) with complex symmetric \((k+1) \times (k+1)\)-matrices up to scaling and we can assume \(q = {{\,\mathrm{id}\,}}\in {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\). The second Veronese variety \(\nu _2({\mathbb {P}}W) \subset {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\) consists of rank 1 matrices. Let \(X_0 \subset {\mathbb {P}}({{\,\mathrm{Sym}\,}}^2 W/\langle q \rangle )\) be the image of \(\nu _2({\mathbb {P}}W)\) under the natural projection. By Lemma 4.1, \(X_0\) is the coordinate-wise square \(L^{\circ 2}\) up to a linear re-embedding.

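The projective cone over \(X_0 \cong L^{\circ 2}\) is the subvariety \(X_1 \subset {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\) consisting of complex symmetric matrices M such that the set \(M+\langle {{\,\mathrm{id}\,}}\rangle \) contains a matrix of rank \(\le 1\). We observe that the rank of \(M-\lambda {{\,\mathrm{id}\,}}\) is the codimension of the eigenspace of M with respect to \(\lambda \in {\mathbb {C}}\). Hence,

\[X_1 = \{M \in {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W \mid {{\,\mathrm{rank}\,}}(M-\lambda {{\,\mathrm{id}\,}}) \le 1 \text { for some } \lambda \in {\mathbb {C}}\} = \{M \in {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W \mid M \text { has an eigenspace of codimension } \le 1\}.\]
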
We are left to show that \(X_1\) is the Zariski closure in \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 {\mathbb {C}}^{k+1}\) of \(X \subset {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 {\mathbb {R}}^{k+1}\). Since real symmetric matrices are diagonalizable, the multiplicity of an eigenvalue is the dimension of the corresponding eigenspace. Hence, \(X_1 \cap {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 {\mathbb {R}}^{k+1}= X\). The set X is the orbit of the line \(V \,{:}{=}\, \{{{\,\mathrm{diag}\,}}(\lambda , \ldots , \lambda , \mu ) \mid [\lambda :\mu ] \in {\mathbb {P}}_{\mathbb {R}}^1\}\) under the action of \(O(k+1)\) by conjugation with orthogonal matrices, and the stabiliser of a general point of V is \(O(k) \times \{\pm 1\}\). Therefore, using \(\dim O(m) = \binom{m}{2}\), X has real dimension \(\dim V + \dim O(k+1) - \dim O(k) = 1 + k = k+1\). Also, \(X_1\) is the projective cone over \(X_0 \cong L^{\circ 2}\), so it is a \({(k+1)}\)-dimensional irreducible complex variety. Since the Zariski closure of X is contained in \(X_1\) and has dimension at least \(k+1\), we conclude that \(X_1\) is the Zariski closure of X in \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 {\mathbb {C}}^{k+1}\). \(\square \)

We illustrate Proposition 4.3 in the case of \(3 \times 3\)-matrices:

Example 4.4

Consider the set of real symmetric \(3 \times 3\)-matrices with a repeated eigenvalue. We denote its Zariski closure in \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 {\mathbb {C}}^3\) by Y. By Proposition 4.3, it can be understood in terms of the coordinate-wise square \(L^{\circ 2}\) for some plane L. We make this explicit as follows: Consider the planar point configuration

lying only on the conic \(V(x^2+y^2+z^2)\). Let L be the corresponding plane in \({\mathbb {P}}^4\), given as the image of

Under the linear embedding

the coordinate-wise square \(L^{\circ 2}\) gets mapped into Y. Indeed, it is easily checked that a point [x : y : z] gets mapped to the matrix \(-4(x^2+y^2+z^2){{\,\mathrm{id}\,}}+12(x,y,z)^T(x,y,z)\) under the composition \(\psi \circ \varphi _2 \circ \iota :{\mathbb {P}}^2 \rightarrow {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 {\mathbb {C}}^3\); note that this matrix has the repeated eigenvalue \(-4(x^2+y^2+z^2)\), since subtracting this multiple of the identity leaves a matrix of rank at most 1. More precisely, Proposition 4.3 shows that Y is the projective cone over \(\psi (L^{\circ 2})\) with the vertex \({{\,\mathrm{id}\,}}\).
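The rank criterion from the proof of Proposition 4.3 can be tested directly on the matrices of Example 4.4. The following Python sketch is our own illustration with hypothetical helper names. It builds \(M = -4(x^2+y^2+z^2){{\,\mathrm{id}\,}} + 12(x,y,z)^T(x,y,z)\) for an integer point and checks that \(M-\lambda {{\,\mathrm{id}\,}}\) has rank at most 1 for \(\lambda = -4(x^2+y^2+z^2)\), i.e. that \(\lambda \) is an eigenvalue whose eigenspace has codimension at most 1; the remaining eigenvalue is \(8(x^2+y^2+z^2)\), with eigenvector \((x,y,z)\).

```python
def example_4_4_matrix(v):
    """M = -4|v|^2 id + 12 v^T v for v = (x, y, z), as in Example 4.4."""
    n2 = sum(t * t for t in v)
    return [[12 * v[i] * v[j] - (4 * n2 if i == j else 0) for j in range(3)]
            for i in range(3)]

def minus_scalar(M, lam):
    """M - lam * id."""
    return [[M[i][j] - (lam if i == j else 0) for j in range(len(M))]
            for i in range(len(M))]

def rank_at_most_one(A):
    """True iff all 2x2 minors of the square matrix A vanish."""
    m = len(A)
    return all(A[i][k] * A[j][l] - A[i][l] * A[j][k] == 0
               for i in range(m) for j in range(i + 1, m)
               for k in range(m) for l in range(k + 1, m))

def mat_vec(M, v):
    """Matrix-vector product."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]

v = (1, 2, 3)
M = example_4_4_matrix(v)
lam = -4 * sum(t * t for t in v)   # the repeated eigenvalue
print(rank_at_most_one(minus_scalar(M, lam)), rank_at_most_one(M))  # True False
```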

In Sect. 4.4 we give an explicit set-theoretic description of the coordinate-wise square of a linear space in high-dimensional ambient space. We will show the following result as a special case of Theorem 4.11. Given a matrix \(A \in {\mathbb {C}}^{s \times s}\), we denote a \(2\times 2\) minor of A by \(A_{ij|k\ell }\) where i, j are the rows and \(k,\ell \) are the columns of the minor.

Corollary 4.5

Let \(s \ge 4\). A symmetric matrix \(A \in {\mathbb {C}}^{s \times s}\) has an eigenspace of codimension \(\le 1\) if and only if its \(2\times 2\)-minors

satisfy the following for \(i,j,k,\ell \le s\) distinct:

These equations describe the Zariski closure in the complex vector space \({{\,\mathrm{Sym}\,}}^2 {\mathbb {C}}^s\) of the set of real symmetric matrices with an eigenvalue of multiplicity \(\ge s-1\).

4.3 Squaring lines and planes

In this subsection we consider the low-dimensional cases and classify the coordinate-wise squares of lines and planes in arbitrary ambient spaces.

Theorem 4.6

(Squaring lines) Let L be a line in \({\mathbb {P}}^n\).

-

(i)

If \(|Z| = 2\), then \(L^{\circ 2}\) is a line in \({\mathbb {P}}^n\).

-

(ii)

If \(|Z| > 2\), then \(L^{\circ 2}\) is a smooth conic in \({\mathbb {P}}^n\).

Proof

Since \(Z \subset {\mathbb {P}}W^*\) spans the projective line \({\mathbb {P}}W^*\), we must have \(|Z| \ge 2\).

If \(|Z| > 2\), then \(I(Z)_2 = 0\), since no non-zero quadratic form on the projective line \({\mathbb {P}}W^*\) vanishes on all points of Z. Then Lemma 4.1 implies that \(L^{\circ 2} = (\varphi _2 \circ \iota )({\mathbb {P}}W) \) is a linear re-embedding of \(\nu _2({\mathbb {P}}W) \), which is a smooth conic in the plane \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W \cong {\mathbb {P}}^2\).

If \(|Z| = 2\), then \(\dim I(Z)_2 = 1\), since up to scaling there is a unique quadric vanishing on the points Z. By Lemma 4.1, the image \(\varphi _2(L)\) lies in a projective line \({\mathbb {P}}^1 \cong \vartheta ({\mathbb {P}}({{\,\mathrm{Sym}\,}}^2 W/I(Z)_2)) \subset {\mathbb {P}}^n\). On the other hand \(\dim L^{\circ 2} = \dim L = 1\). Hence, \(L^{\circ 2} = \varphi _2(L)\) is a line in \({\mathbb {P}}^n\). \(\square \)

Remark 4.7

We observe that the two possibilities in Theorem 4.6 for the coordinate-wise square of a line L differ in degree. In particular, Corollary 2.7 shows that it only depends on the linear matroid \(\mathcal {M}_L\) whether \(L^{\circ 2} \) is a line or a (re-embedded) plane conic.

Remark 4.8

In the Grassmannian of lines \({{\,\mathrm{Gr}\,}}(1, {\mathbb {P}}^n)\), consider the locus \(\Gamma \subset {{\,\mathrm{Gr}\,}}(1, {\mathbb {P}}^n)\) of those lines L whose coordinate-wise square \(L^{\circ 2}\) is a line. In terms of the Plücker coordinates \(p_{ij}\) on \({{\,\mathrm{Gr}\,}}(1, {\mathbb {P}}^n)\), the locus \(\Gamma \) is the subvariety given by the vanishing of \(p_{ij} p_{jk} p_{ki}\) for all distinct \(i,j,k \in \{0,1, \ldots , n\}\):

$$\begin{aligned} \Gamma = V\big (p_{ij}\, p_{jk}\, p_{ki} \;\big |\; i,j,k \in \{0,1,\ldots ,n\} \text { distinct}\big ) \subset {{\,\mathrm{Gr}\,}}(1, {\mathbb {P}}^n). \end{aligned}$$

Indeed, if L is the image of an embedding \({\mathbb {P}}^1 \hookrightarrow {\mathbb {P}}^n\), \(a \mapsto Ba\), given by a chosen rank 2 matrix \(B \in {\mathbb {C}}^{(n+1)\times 2}\), then \(Z \subset ({\mathbb {P}}^1)^*\) is the set of points corresponding to the non-zero rows of B. Then \(|Z|=2\) if and only if among any three distinct rows of B there always exist two linearly dependent rows. In terms of the Plücker coordinates, which are given by the \(2 \times 2\)-minors of B, this translates into the vanishing condition above.
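The equivalence in Remark 4.8 can be tested on small examples. The Python sketch below (exact arithmetic via `fractions`) counts the points of Z as the distinct projective classes of the non-zero rows of B and evaluates the products \(p_{ij}p_{jk}p_{ki}\); the helper names `points_Z`, `plucker` and `in_Gamma` and the three matrices are hypothetical illustrations, each assumed to have rank 2.

```python
from fractions import Fraction
from itertools import combinations

def points_Z(B):
    """Distinct points of P^1 given by the non-zero rows of B (hypothetical helper)."""
    pts = set()
    for row in B:
        row = [Fraction(v) for v in row]
        pivot = next((v for v in row if v != 0), None)
        if pivot is None:
            continue                                  # zero rows contribute no point to Z
        pts.add(tuple(v / pivot for v in row))        # normalize the projective class
    return pts

def plucker(B, i, j):
    """Plücker coordinate p_ij: the 2x2-minor of B with rows i and j."""
    return Fraction(B[i][0]) * B[j][1] - Fraction(B[i][1]) * B[j][0]

def in_Gamma(B):
    """Check p_ij * p_jk * p_ki = 0 for all distinct i, j, k.

    Permuting i, j, k only changes the sign of the product,
    so it suffices to run over i < j < k."""
    return all(plucker(B, i, j) * plucker(B, j, k) * plucker(B, k, i) == 0
               for i, j, k in combinations(range(len(B)), 3))

examples = {
    "two points": [[1, 0], [2, 0], [0, 1], [0, 3]],
    "three points": [[1, 0], [0, 1], [1, 1]],
    "zero row": [[1, 0], [0, 0], [0, 1]],
}
for name, B in examples.items():
    print(name, len(points_Z(B)), in_Gamma(B))
```

In each example, `in_Gamma(B)` is `True` exactly when `len(points_Z(B)) == 2`, as the remark predicts.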


Theorem 4.9

(Squaring planes) Let L be a plane in \({\mathbb {P}}^n\). The defining ideal \(I \subset {\mathbb {C}}[{\mathbf {x}}]\) of \(L^{\circ 2}\) depends on the geometry of the planar configuration of \(Z\subset {\mathbb {P}}W^*\) as follows (see Fig. 5):

(i) If Z is not contained in any conic, then I is minimally generated by \(n-5\) linear forms and 6 quadratic forms.

(ii) If Z is contained in a unique conic \(Q \subset {\mathbb {P}}W^*\), we distinguish two cases:

(a) If Q is irreducible, then I is minimally generated by \(n-4\) linear forms and 7 cubic forms.

(b) If Q is reducible, then \(L^{\circ 2}\) is the complete intersection of \(n-4\) hyperplanes and 2 quadrics.

(iii) If Z is contained in several conics, we distinguish three cases:

(a) If \(|Z| = 3\), then I is minimally generated by \(n-2\) linear forms.

(b) If \(|Z| = 4\) and no three points of Z are collinear, then I is minimally generated by \(n-3\) linear forms and one quartic form.

(c) If \(|Z| \ge 4\) and all but one of the points of Z lie on a line, then I is minimally generated by \(n-3\) linear forms and one quadratic form.

Proof

Notice that \(k=2\), so \(\dim W = 3\).

(i) If \(I(Z)_2 = 0\), then by Lemma 4.1, \(L^{\circ 2} \subset {\mathbb {P}}^n\) is a linear re-embedding of the Veronese surface \(\nu _2({\mathbb {P}}W) \subset {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\). The ideal of \(\nu _2({\mathbb {P}}W)\) is minimally generated by six quadrics. Indeed, choosing a basis for W, we may regard points in \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\) as symmetric \(3\times 3\)-matrices up to scaling. Then \(\nu _2({\mathbb {P}}W)\) is the subvariety of symmetric rank 1 matrices, which is the vanishing set of the six distinct \(2 \times 2\)-minors. Since \(\dim {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W = 5\), the linear re-embedding \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W \hookrightarrow {\mathbb {P}}^n\) adds \(n-5\) linear forms to I.

(ii) We can choose a basis \(\{z_0,z_1,z_2\}\) of W such that the unique reduced plane conic through \(Z \subset {\mathbb {P}}W^*\) is, with respect to these coordinates, given by the vanishing of either \(q_1 \,{:}{=}\, z_0^2-2z_1 z_2 \in {{\,\mathrm{Sym}\,}}^2 W\) (if it is irreducible) or \(q_2 \,{:}{=}\, z_1 z_2 \in {{\,\mathrm{Sym}\,}}^2 W\) (if it is reducible). We consider the basis \(\{z_1^2, z_2^2, 2z_0 z_1, 2z_0 z_2, 2z_1 z_2\}\) of \({{\,\mathrm{Sym}\,}}^2 W/\langle q_1\rangle \) and the basis \(\{z_0^2, z_1^2, z_2^2, 2z_0 z_1, 2z_0 z_2\}\) of \({{\,\mathrm{Sym}\,}}^2 W/\langle q_2\rangle \). With respect to these choices of bases, the morphism \(\psi :{\mathbb {P}}W \rightarrow {\mathbb {P}}({{\,\mathrm{Sym}\,}}^2 W/I(Z)_2)\) is given as

$$\begin{aligned}&\psi :{\mathbb {P}}^2 \rightarrow {\mathbb {P}}^4, \quad [a_0:a_1:a_2] \mapsto [a_1^2 : a_2^2 : a_0 a_1 : a_0 a_2 : a_0^2+a_1a_2] \\ \text {or} \qquad&\psi :{\mathbb {P}}^2 \rightarrow {\mathbb {P}}^4, \quad [a_0:a_1:a_2] \mapsto [a_0^2 : a_1^2 : a_2^2 : a_0 a_1 : a_0 a_2]. \end{aligned}$$

In the first case, we checked computationally with Macaulay2 (Grayson and Stillman 2018) that the ideal is minimally generated by seven cubics. A structural description of these quadrics and cubics will be given in the proof of Theorem 4.11. The image of the second morphism is a complete intersection of two binomial quadrics; in coordinates \([u_0:\ldots :u_4]\) of \({\mathbb {P}}^4\), these are \(u_3^2-u_0u_1\) and \(u_4^2-u_0u_2\). By Lemma 4.1, the coordinate-wise square \(L^{\circ 2}\) arises from the image of \(\psi \) via a linear re-embedding \({\mathbb {P}}^4 \hookrightarrow {\mathbb {P}}^n\), which contributes \(n-4\) additional linear forms to I.

(iii) In case (a), the set Z consists of three points spanning the projective plane \({\mathbb {P}}W^*\), so \(\dim {{\,\mathrm{Sym}\,}}^2 W/I(Z)_2 = 3\). Then by Lemma 4.1, the coordinate-wise square \(L^{\circ 2}\) is contained in a plane \({\mathbb {P}}^2 \cong \vartheta ({\mathbb {P}}({{\,\mathrm{Sym}\,}}^2 W/I(Z)_2)) \subset {\mathbb {P}}^n\). On the other hand, \(\dim L^{\circ 2} = \dim L = 2\), so \(L^{\circ 2} \subset {\mathbb {P}}^n\) must be a plane in \({\mathbb {P}}^n\). For case (b), we may assume that

$$\begin{aligned} Z = \{[1:0:0],[0:1:0],[0:0:1],[-\,1:-\,1:-\,1]\} \end{aligned}$$

for a suitably chosen basis \(\{\ell _0,\ell _1,\ell _2\}\) of \(W^*\). By Lemma 4.1, \(L^{\circ 2} \subset {\mathbb {P}}^n\) is a linear re-embedding of the image of \(\psi :{\mathbb {P}}W \rightarrow {\mathbb {P}}({{\,\mathrm{Sym}\,}}^2 W/I(Z)_2)\). On the other hand, the plane \(L' \,{:}{=}\, V(x_0+x_1+x_2+x_3) \subset {\mathbb {P}}^3\) is the image of the linear embedding \({\mathbb {P}}^2 \hookrightarrow {\mathbb {P}}^3\), \([a_0:a_1:a_2] \mapsto [a_0:a_1:a_2:-a_0-a_1-a_2]\), so Z can also be viewed as the finite set of points associated to \(L'\). Applying Lemma 4.1 to \(L' \subset {\mathbb {P}}^3\) shows that the image of \(\psi :{\mathbb {P}}W \rightarrow {\mathbb {P}}({{\,\mathrm{Sym}\,}}^2 W/I(Z)_2)\) is the coordinate-wise square \({L'}^{\circ 2} \subset {\mathbb {P}}^3\). Hence, \(L^{\circ 2} \subset {\mathbb {P}}^n\) is a linear re-embedding of the quartic surface from Example 3.5 into higher dimension.

Finally, we consider case (c). Consider three points \(p_1,p_2,p_3 \in Z\) lying on a line \(T \subset {\mathbb {P}}W^*\). A conic meeting T in three points contains T, so T must be an irreducible component of each conic through Z. Since Z spans the projective plane \({\mathbb {P}}W^*\), there must also be a point \(p_0 \in Z\) outside of T. All points in \(Z {\setminus } \{p_0\}\) must lie on the line T, as otherwise there could be at most one conic passing through Z. For \(Z' \,{:}{=}\, \{p_0,p_1,p_2,p_3\} \subset Z\), each conic passing through \(Z'\) also passes through Z, i.e. \(I(Z)_2 = I(Z')_2\). We may choose a basis \(z_0,z_1,z_2\) of W such that \(Z' \subset {\mathbb {P}}W^*\) with respect to these coordinates is given by

$$\begin{aligned} Z' = \{[1:0:0],[0:1:0],[0:0:1],[0:1:1]\}. \end{aligned}$$

The plane \(L' \,{:}{=}\, V(x_1+x_2-x_3) \subset {\mathbb {P}}^3\) is the image of the linear embedding \({\mathbb {P}}^2 \hookrightarrow {\mathbb {P}}^3\), \([a_0:a_1:a_2] \mapsto [a_0:a_1:a_2:a_1+a_2]\), so \(Z'\) can be viewed as the finite set of points associated to \(L'\). Lemma 4.1 shows that \({L'}^{\circ 2} \subset {\mathbb {P}}^3\) coincides with the image of the morphism \(\psi :{\mathbb {P}}W \rightarrow {\mathbb {P}}({{\,\mathrm{Sym}\,}}^2 W/I(Z')_2)\). On the other hand, Lemma 4.1 shows that \(L^{\circ 2} \subset {\mathbb {P}}^n\) is a linear re-embedding of the image of \({\mathbb {P}}W \rightarrow {\mathbb {P}}({{\,\mathrm{Sym}\,}}^2 W/I(Z)_2)\). From \(I(Z)_2 = I(Z')_2\), we deduce that \(L^{\circ 2} \subset {\mathbb {P}}^n\) is a linear re-embedding of the quadric surface

$$\begin{aligned} {L'}^{\circ 2} = V(x_1+x_2-x_3)^{\circ 2} = V(x_1^2+x_2^2+x_3^2-2x_1 x_2-2x_2 x_3 -2x_3x_1) \subset {\mathbb {P}}^3, \end{aligned}$$

as we compute from Proposition 3.4. \(\square \)
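Several explicit formulas in the proof of Theorem 4.9 can be double-checked symbolically. The SymPy sketch below (i) recomputes the coordinates of \(\psi \) by expanding \((a_0z_0+a_1z_1+a_2z_2)^2\) and reducing modulo \(q_1\) or \(q_2\) in the stated bases, (ii) confirms the two binomial quadrics in case (ii)(b), and (iii) verifies that the squares of the planes used in cases (iii)(b) and (iii)(c) satisfy a quartic and the quadric above. The quartic was derived by us by repeated squaring of \(\sqrt{x_0}+\sqrt{x_1}+\sqrt{x_2}+\sqrt{x_3}=0\); we assume, without having reproduced Example 3.5, that it agrees with the quartic there up to a scalar.

```python
import sympy as sp
from itertools import combinations

a0, a1, a2, z0, z1, z2 = sp.symbols("a0 a1 a2 z0 z1 z2")
x0, x1, x2, x3 = sp.symbols("x0 x1 x2 x3")

# Case (ii): w^2 for w = a0*z0 + a1*z1 + a2*z2, as a polynomial in z0, z1, z2.
c = sp.Poly(sp.expand((a0*z0 + a1*z1 + a2*z2)**2), z0, z1, z2).coeff_monomial
# Modulo q1 = z0^2 - 2 z1 z2 (so z0^2 = 2 z1 z2); basis z1^2, z2^2, 2z0z1, 2z0z2, 2z1z2.
psi1 = [c(z1**2), c(z2**2), c(z0*z1) / 2, c(z0*z2) / 2, c(z0**2) + c(z1*z2) / 2]
# Modulo q2 = z1 z2; basis z0^2, z1^2, z2^2, 2z0z1, 2z0z2.
psi2 = [c(z0**2), c(z1**2), c(z2**2), c(z0*z1) / 2, c(z0*z2) / 2]
expected1 = [a1**2, a2**2, a0*a1, a0*a2, a0**2 + a1*a2]
expected2 = [a0**2, a1**2, a2**2, a0*a1, a0*a2]
assert all(sp.expand(p - e) == 0 for p, e in zip(psi1, expected1))
assert all(sp.expand(p - e) == 0 for p, e in zip(psi2, expected2))

# The image of the second morphism lies on two binomial quadrics.
u = psi2
assert sp.expand(u[3]**2 - u[0]*u[1]) == 0 and sp.expand(u[4]**2 - u[0]*u[2]) == 0

# Case (iii)(b): square of [a0:a1:a2] -> [a0:a1:a2:-a0-a1-a2].
xs = [x0, x1, x2, x3]
e2 = sum(p*q for p, q in combinations(xs, 2))
quartic = (sum(x**2 for x in xs) - 2*e2)**2 - 64*x0*x1*x2*x3
square_b = {x0: a0**2, x1: a1**2, x2: a2**2, x3: (a0 + a1 + a2)**2}
assert sp.expand(quartic.subs(square_b)) == 0

# Case (iii)(c): square of [a0:a1:a2] -> [a0:a1:a2:a1+a2].
quadric = x1**2 + x2**2 + x3**2 - 2*x1*x2 - 2*x2*x3 - 2*x3*x1
square_c = {x0: a0**2, x1: a1**2, x2: a2**2, x3: (a1 + a2)**2}
assert sp.expand(quadric.subs(square_c)) == 0
print("all identities hold")
```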

Remark 4.10

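In contrast to Remark 4.7, the structure of the coordinate-wise square of a plane \(L \subset {\mathbb {P}}^n\) does not depend only on the linear matroid of L: for \(n=5\), the uniform matroid \(\mathcal {M}_L = \{I \subset \{0,1,\ldots ,5\} \mid |I| \le 3\}\) occurs both in case (i) and in case (ii)(a) of Theorem 4.9. For instance, if Z consists of six points with no three collinear, this matroid arises regardless of whether or not the six points lie on a conic.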
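The two configurations in Remark 4.10 can be made concrete with exact linear algebra. The Python sketch below takes six points on the smooth conic \(xz=y^2\) and a second configuration in which one point is moved off that conic (both choices are our own). It checks that every three points are linearly independent, so the row matroid is uniform of rank 3 in both cases, and computes \(\dim I(Z)_2\) as 6 minus the rank of the matrix of the six quadratic monomials evaluated at the points. The helpers `rank`, `dim_conics_through` and `uniform_rank3` are hypothetical names; we assume, as in Remark 4.8, that L is the image of the \(6\times 3\) matrix with these rows.

```python
from fractions import Fraction
from itertools import combinations

def rank(rows):
    """Exact rank of a list-of-lists matrix by Gaussian elimination over Q."""
    M = [[Fraction(v) for v in r] for r in rows]
    r = 0
    for col in range(len(M[0])):
        piv = next((i for i in range(r, len(M)) if M[i][col] != 0), None)
        if piv is None:
            continue
        M[r], M[piv] = M[piv], M[r]
        for i in range(len(M)):
            if i != r and M[i][col] != 0:
                f = M[i][col] / M[r][col]
                M[i] = [x - f * y for x, y in zip(M[i], M[r])]
        r += 1
    return r

def dim_conics_through(Z):
    """dim I(Z)_2 = 6 - rank of the evaluation of x^2, y^2, z^2, xy, xz, yz at Z."""
    return 6 - rank([[x*x, y*y, z*z, x*y, x*z, y*z] for x, y, z in Z])

def uniform_rank3(Z):
    """The row matroid is uniform of rank 3 iff any three rows are independent."""
    return all(rank(list(T)) == 3 for T in combinations(Z, 3))

on_conic = [(1, t, t*t) for t in (0, 1, 2, 3, -1, -2)]   # six points on xz = y^2
off_conic = on_conic[:5] + [(1, -2, 5)]                   # last point moved off the conic

for Z in (on_conic, off_conic):
    print(uniform_rank3(Z), dim_conics_through(Z))
```

The first configuration lies on a unique (irreducible) conic, i.e. case (ii)(a), while the second lies on no conic, i.e. case (i); both have the same uniform matroid.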

4.4 Squaring in high ambient dimensions

Consider the case of k-dimensional linear spaces in \({\mathbb {P}}^n\) for \(n \gg k\). For a general linear space \(L \in {{\,\mathrm{Gr}\,}}(k,{\mathbb {P}}^n)\), the finite set of points Z does not lie on a quadric, and we know from Proposition 4.2 that the coordinate-wise square \(L^{\circ 2}\) is then a linear re-embedding of the second Veronese variety of \({\mathbb {P}}^k\). In this subsection, we investigate the first degenerate case, where the point configuration Z lies on a unique quadric.
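The numbers of linear forms appearing in Proposition 4.2 and Theorems 4.6, 4.9 and 4.11 follow a single count, assuming (as the proofs above use via Lemma 4.1) that the linear span of \(L^{\circ 2}\) is \(\vartheta ({\mathbb {P}}({{\,\mathrm{Sym}\,}}^2 W/I(Z)_2))\) with \(\dim W = k+1\): the number of independent linear forms is \(n+1-\binom{k+2}{2}+\dim I(Z)_2\). The short Python sketch below encodes this count (the function name is hypothetical) and checks it against the statements.

```python
from math import comb

def num_linear_forms(n, k, dim_IZ2):
    """n + 1 - dim Sym^2 W + dim I(Z)_2 with dim W = k + 1 (hypothetical helper)."""
    return n + 1 - comb(k + 2, 2) + dim_IZ2

n = 10
# Theorem 4.6 (k = 1): a smooth conic spans a plane, a line spans a line.
assert num_linear_forms(n, 1, 0) == n - 2
assert num_linear_forms(n, 1, 1) == n - 1
# Theorem 4.9 (k = 2): case (i), case (ii), cases (iii)(b)/(c), case (iii)(a).
assert [num_linear_forms(n, 2, d) for d in (0, 1, 2, 3)] == [n - 5, n - 4, n - 3, n - 2]
# Proposition 4.2 (no quadric) and Theorem 4.11 (a unique quadric).
for k in range(1, 4):
    assert num_linear_forms(n, k, 0) == n - comb(k + 2, 2) + 1
    assert num_linear_forms(n, k, 1) == n - comb(k + 2, 2) + 2
print("counts agree")
```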

The following theorem describes the structure of coordinate-wise squares such as the one appearing in Proposition 4.3. We will also prove Corollary 4.5 by deriving the polynomials vanishing on the set of symmetric matrices with an eigenvalue of comultiplicity 1. Proposition 4.3 shows that Corollary 4.5 is a special case of the theorem stated below.

Theorem 4.11

Let \(L \subset {\mathbb {P}}^n\) be a linear space of dimension k. If the point configuration Z lies on a unique quadric of rank s, then \(L^{\circ 2}\) can set-theoretically be described as the vanishing set of \(n-\left( {\begin{array}{c}k+2\\ 2\end{array}}\right) +2\) linear forms and

For \(s \ge 3\), we show that the claim even holds scheme-theoretically; see Remark 4.17. We believe that for arbitrary s the claim is even true ideal-theoretically.

The remainder of this subsection is dedicated to the proof of Theorem 4.11. It reduces to the following elimination problem. Let \(k \ge 1\) and \(s \ge 2\). Consider a symmetric \((k+1)\times (k+1)\)-matrix of variables \(Y \,{:}{=}\, (y_{ij})_{1\le i,j \le k+1}\) and the corresponding polynomial ring \({\mathbb {C}}[{\mathbf {y}}]\,{:}{=}\, {\mathbb {C}}[y_{ij} ]/(y_{ij}-y_{ji}).\) Over the polynomial ring \({\mathbb {C}}[{\mathbf {y}},t]\), we consider the matrix \(M \,{:}{=}\, Y+tI_s\), where we define the matrix

$$\begin{aligned} I_s \,{:}{=}\, \begin{pmatrix} \mathrm {Id}_s & 0 \\ 0 & 0 \end{pmatrix} \in {\mathbb {C}}^{(k+1)\times (k+1)}, \end{aligned}$$

i.e. the diagonal matrix whose first s diagonal entries are 1 and whose remaining entries are 0.

Henceforth, we denote the \(2\times 2\)-minors of Y with rows \(i\ne j\) and columns \(\ell \ne m\) by \(Y_{ij|\ell m} \,{:}{=}\, y_{i\ell } y_{j m} - y_{im} y_{j \ell } \in {\mathbb {C}}[{\mathbf {y}}],\) and correspondingly \(M_{ij|\ell m} \in {\mathbb {C}}[{\mathbf {y}},t]\) for the \(2 \times 2\)-minors of M. Let \(J_0 \subset {\mathbb {C}}[{\mathbf {y}},t]\) denote the ideal generated by the \(2 \times 2\)-minors of M. By \(J \,{:}{=}\, J_0 \cap {\mathbb {C}}[{\mathbf {y}}]\) we denote the ideal in \({\mathbb {C}}[{\mathbf {y}}]\) obtained by eliminating t from \(J_0\). We explicitly describe the elimination ideal J for all values of k and s.
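The elimination problem can be set up directly in a computer algebra system. The SymPy sketch below builds Y, the matrix \(M = Y+tI_s\) and the \(2\times 2\)-minors generating \(J_0\), and then eliminates t with a lexicographic Gröbner basis in which t is the largest variable; the basis elements free of t generate J. This is a generic elimination sketch of our own (the function names are hypothetical), not the method used to prove Proposition 4.12.

```python
import sympy as sp
from itertools import combinations

def elimination_setup(k, s):
    """Variables y_ij (i <= j), t, and the non-zero 2x2-minors of M = Y + t*I_s."""
    n = k + 1
    assert 1 <= s <= n
    ys = {(i, j): sp.Symbol(f"y{i+1}{j+1}") for i in range(n) for j in range(i, n)}
    Y = sp.Matrix(n, n, lambda i, j: ys[(min(i, j), max(i, j))])   # symmetric matrix
    t = sp.Symbol("t")
    I_s = sp.diag(*([1] * s + [0] * (n - s)))
    M = Y + t * I_s
    minors = {sp.expand(M.extract(list(r), list(c)).det())
              for r in combinations(range(n), 2) for c in combinations(range(n), 2)}
    minors = sorted((m for m in minors if m != 0), key=sp.default_sort_key)
    return list(ys.values()), t, minors

def eliminate_t(k, s):
    """Generators of J = J_0 ∩ C[y]: elements of a lex Groebner basis (t > y) without t."""
    ys, t, minors = elimination_setup(k, s)
    G = sp.groebner(minors, t, *ys, order="lex")
    return [g for g in G.exprs if not g.has(t)]

print(eliminate_t(1, 2))   # []: for a line with |Z| = 2, J = 0 and V(J) is all of P^2
```

For \(k=1\), \(s=2\), the ideal \(J_0\) is generated by the single polynomial \(\det M\), which genuinely involves t, so J is zero; after the hyperplane section in the proof of Theorem 4.11 this yields a line, in line with Theorem 4.6(i). Calls such as `eliminate_t(2, 3)` run the same computation for planes, but lexicographic Gröbner bases can become slow as k grows.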

Proposition 4.12

The vanishing set \(V(J) \subset {\mathbb {P}}^{\left( {\begin{array}{c}k+2\\ 2\end{array}}\right) -1}\) can set-theoretically be described as the zero set of

First, we observe that Theorem 4.11 follows directly from Proposition 4.12.

Proof of Theorem 4.11

Analogously to the proof of Proposition 4.3, we identify \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\) with \({\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 {\mathbb {C}}^{k+1}\) such that \(q = I_s\). By Lemma 4.1, the coordinate-wise square \(L^{\circ 2} \) is a linear re-embedding of the variety obtained by the projection of \(\nu _2({\mathbb {P}}W)\) from the point \(q=I_s \in {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\). Note that V(J) describes the set of points \(Y\in {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\) lying on the line joining q with some point in \(\nu _2({\mathbb {P}}W)\): indeed, \(Y \in V(J)\) if and only if \(Y+tI_s\) has rank at most 1 for some \(t \in {\mathbb {C}}\). Hence, the projection from q is given by intersecting V(J) with a hyperplane \(H \subset {\mathbb {P}}{{\,\mathrm{Sym}\,}}^2 W\) not containing \(q = I_s\).
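A quick numerical sanity check of the dimension count behind this argument: V(J) is (the projectivization of) the image of \((v,t) \mapsto vv^T - tI_s\), so its affine cone should have dimension \(k+2\), and intersecting with H leaves a variety of dimension \(k = \dim L\). The NumPy sketch below computes the rank of the Jacobian of this parametrization at a random real point. The function name, seed and tolerance are our own choices, and a numerical rank at one random point only indicates, but does not prove, the generic rank.

```python
import numpy as np

def join_cone_dimension(k, s, seed=0, tol=1e-8):
    """Numerical rank of the Jacobian of (v, t) -> v v^T - t I_s at a random point."""
    n = k + 1
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(n)
    I_s = np.diag([1.0] * s + [0.0] * (n - s))
    iu = np.triu_indices(n)              # coordinates y_ij with i <= j
    cols = []
    for i in range(n):                   # derivative in v_i: e_i v^T + v e_i^T
        E = np.zeros((n, n))
        E[i, :] += v
        E[:, i] += v
        cols.append(E[iu])
    cols.append(-I_s[iu])                # derivative in t
    jac = np.array(cols).T
    sv = np.linalg.svd(jac, compute_uv=False)
    return int(np.sum(sv > tol * sv[0]))

for k, s in [(1, 2), (2, 3), (3, 2), (3, 4)]:
    print(k, s, join_cone_dimension(k, s))   # expected k + 2 in each case
```

For \(s \ge 2\) the direction \(-I_s\) is not of the form \(wv^T+vw^T\) at a general real v, which is why the rank is \(k+2\) rather than \(k+1\).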