Abstract

As the prevalence of dementia and Alzheimer’s disease (AD) increases worldwide, it is imperative to reflect on the major clinical trials in the prevention of dementia and the challenges that surround them. The pharmaceutical industry has focused on developing drugs that primarily affect the Aβ cascade and tau proteinopathy, while academics have focused on repurposed therapeutics and multi-domain interventions for prevention studies. This paper highlights significant primary, secondary, and tertiary prevention trials for dementia and AD, along with their overall design, methods, and systematic issues, to better understand the current landscape of prevention trials. We included 32 pharmacologic intervention trials and 9 multi-domain trials. Of the pharmacologic trials, 14 could be considered primary prevention and 18 secondary or tertiary prevention. Major categories were Aβ vaccines, Aβ antibodies, tau antibodies, anti-inflammatories, sex hormones, and Ginkgo biloba extract. The 9 multi-domain studies mainly focused on lifestyle modifications such as blood pressure management, socialization, and physical activity. The lack of validated drug targets and the complexity of the diagnostic frameworks, eligibility criteria, and outcome measurements for trials make it difficult to show efficacy for both pharmacological and multi-domain interventions. We hope that this summative analysis of trials will stimulate discussion among scientists and clinicians interested in reviewing and developing preventative interventions for AD.

Introduction

Dementia and Alzheimer’s disease (AD) may affect more than 5 million people in the USA, a number likely to exceed 13 million by 2050. Worldwide, an estimated 50 million people live with dementia, a figure projected to reach 152 million by 2050 [1]. For AD, there is general consensus in academic medicine that the pathology develops decades before symptoms appear. Since the amyloid cascade hypothesis was advanced in the early 1990s, positing that deposition of amyloid-β peptide in the brain sets in motion a series of events that lead to cognitive impairment, dozens of potential therapeutics intended to reduce amyloid-β production or aggregation have been proposed [2]. Most of these candidates did not advance to efficacy trials. Of those that did, we are not aware of any that succeeded on their primary outcome in phase 2 or 3 clinical trials [3]. A prevalent view in the academic community is that treatment after symptom onset comes too late in a presumed ongoing neurodegenerative process to be impactful. Treatment before symptom onset is considered preferable but requires that people with presymptomatic or preclinical illness can be identified. In this sense, primary prevention in its purest form can be considered as interventions taken before the appearance of canonical Alzheimer pathology: neuronal loss, amyloid-β plaques, neurofibrillary tangles, and granulovacuolar degeneration [4]. Alternatively, and more pragmatically, because mild cognitive impairment (MCI) must precede the dementia syndrome, it may be taken as the earliest clinical expression of Alzheimer pathology; it might then serve both as an eligibility criterion for secondary prevention trials and as an essential clinical outcome in primary prevention studies. Yet the definitions of these standards are themselves subjective and vary across research groups and funders [5].

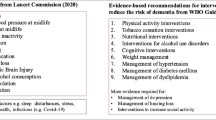

The 2020 Lancet Commission on dementia prevention, intervention, and care identified many potentially modifiable risk factors for dementia, including low childhood education, hypertension, hearing impairment, smoking, obesity, depression, physical inactivity, diabetes, and low social contact [6]. Dementia in late life is most commonly characterized by Alzheimer pathology but is often associated with other pathologies, such as cerebrovascular disease, ischemia, Lewy bodies, and TAR-DNA-binding protein 43 (TDP-43) proteinopathy [7].

The current standard pharmacological treatment for clinically diagnosed AD is a cholinesterase inhibitor such as donepezil, galantamine, or rivastigmine. These small molecules cross the blood–brain barrier and might improve symptoms or slow clinical progression for a time, but are not considered to otherwise modify the expression or effects of the illness [8]. Seeking disease modification, the pharmaceutical industry and many academics have focused on developing drugs that target the amyloid-β cascade and, more recently, tau proteinopathy. Other academics have focused on repurposed therapeutics and multi-domain interventions, usually including psychosocial, nutritional, and metabolic components, for prevention studies. Here, we present a selected review of the current landscape, the methods and outcomes of dementia prevention trials, and the barriers to their success.

Prevention of Dementia

Prevention of dementia and Alzheimer’s disease can be considered within the three traditional categories of prevention: primary, secondary, and tertiary. Primary prevention intends to prevent the disease before it manifests. Secondary prevention aims to prevent further expression of illness in people who are in the earliest stages of cognitive impairment and still functionally independent in daily activities. Tertiary prevention focuses on delaying progression or improving symptoms in patients who have early clinical signs of the illness, with cognitive or functional impairment. These definitions, however, are somewhat fluid; their respective patient populations are summarized in Table 1.

Primary, secondary, and tertiary prevention trials involve different considerations and vary in how they are designed. True primary prevention trials are more formidable endeavors because they require earlier and generally longer interventions and follow-up of larger numbers of participants, usually with less frequent clinical and outcome assessments than shorter symptomatic treatment trials require. Some degree of sample stratification is also needed to define various risk levels and to minimize confounding by indication.

Sample sizes and durations of primary prevention trials, compared with symptomatic treatment studies, vary greatly across interventions. Sample size is substantially influenced by the expected outcome, the magnitude of the effect to be detected, the study duration, and the ability of participants and their study partners to adhere to the intervention. Durations of treatment and follow-up also vary, from a brief 1-year treatment period to more than 7 years of follow-up. Scalability and administrative burden therefore become important for prevention studies, and electronic or e-health interventions may prove essential for future trials because they scale more readily. Other considerations include the choice of biomarkers for screening, their relevance to the intervention or to overall health status, and whether they can substitute for clinical outcomes.
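As a rough illustration of how the detectable effect size drives sample size, the sketch below applies the standard normal-approximation formula for a two-arm, 1:1 comparison of a continuous outcome, n per arm = 2(z₁₋α/₂ + z₁₋β)² / d², where d is the standardized effect size, and then inflates n for an assumed dropout rate. The function name `n_per_arm`, the defaults (α = 0.05, 80% power), and the dropout value are illustrative assumptions, not parameters of any trial discussed here.

```python
import math
from statistics import NormalDist


def n_per_arm(effect_size: float, alpha: float = 0.05, power: float = 0.80,
              dropout: float = 0.0) -> int:
    """Approximate participants per arm for a two-arm, 1:1 comparison of means.

    effect_size: standardized difference (Cohen's d) the trial should detect.
    dropout: anticipated fraction lost to follow-up (an assumption).
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    n = 2 * (z_alpha + z_beta) ** 2 / effect_size ** 2
    # Inflate so the expected number of completers still gives the target power.
    return math.ceil(n / (1 - dropout))


for d in (0.5, 0.25, 0.10):
    print(f"d = {d:.2f}: {n_per_arm(d, dropout=0.2)} per arm with 20% dropout")
```

Because n scales with 1/d², halving the detectable effect roughly quadruples the required sample; a standardized effect of 0.10 already calls for about 1,570 participants per arm before any allowance for attrition.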

Potentially Modifiable Risks Associated with Late-life Dementia

A growing body of evidence suggests that several potentially modifiable risk factors increase the risk of developing dementia in later life, with the proportion of dementia that is potentially avoidable estimated at about 40%. The 2020 Lancet Commission organized these risks by life stage: early life (< 18 years), midlife (45 to 65 years), and later life (> 65 years) [6]. Table 2 outlines these potentially modifiable risk factors, together with their population attributable fractions, by life stage.
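Population attributable fractions (PAFs) such as those in Table 2 are typically derived with Levin’s formula, PAF = p(RR − 1) / [1 + p(RR − 1)], where p is the prevalence of the risk factor and RR its relative risk. The sketch below implements that formula and a multiplicative combination across factors. The optional weights are a simplified stand-in for the communality weighting the Lancet Commission used to account for overlap among risk factors, and the example inputs are hypothetical, not the Commission’s estimates.

```python
from math import prod


def levin_paf(prevalence: float, relative_risk: float) -> float:
    """Levin's population attributable fraction for a single risk factor."""
    excess = prevalence * (relative_risk - 1)
    return excess / (1 + excess)


def combined_paf(pafs, weights=None) -> float:
    """Combine PAFs multiplicatively: 1 - prod(1 - w_i * PAF_i).

    Without weights this assumes the factors act independently; weights
    between 0 and 1 (an illustrative simplification) shrink overlapping factors.
    """
    if weights is None:
        weights = [1.0] * len(pafs)
    return 1 - prod(1 - w * p for w, p in zip(weights, pafs))


# Hypothetical inputs: a factor present in 30% of people that doubles risk.
single = levin_paf(prevalence=0.30, relative_risk=2.0)
print(f"single-factor PAF: {single:.1%}")
print(f"two such factors combined: {combined_paf([single, single]):.1%}")
```

The combined fraction is smaller than the sum of the individual fractions, because each factor acts only on the risk not already attributed to the others.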

The best-defined potentially modifiable risk factor in early life is educational attainment in childhood, with higher educational level associated with a lower risk of later dementia [9]. Several risk factors arise in midlife, including lifestyle-related factors such as obesity and excessive alcohol consumption, as well as hypertension, traumatic brain injury, and hearing loss. Hearing loss has the highest population attributable fraction of the midlife risk factors and is common: unilateral hearing loss affects about 20.3% of Americans older than 12 years, and bilateral hearing loss about 12.7% [10]. Dementia risk appears to increase with the severity of hearing loss and is potentially mitigated by hearing aids [11, 12]. Later-life risks include smoking, depression, social isolation, and type 2 diabetes, as well as environmental exposures such as air pollution. A systematic review of 13 published studies of air pollutants and dementia risk found an association between AD and pollutants such as ozone (O3), NO2, NO, CO, and PM2.5 (particulate matter less than 2.5 µm in diameter) [13]. Interventions to modify these risk factors form the overarching hypothesis of modern prevention trials and have influenced both pharmacological and non-pharmacological approaches.

Biological and Pathophysiological Rationales for Prevention

Multiple altered biochemical processes are implicated in the pathophysiology of AD, such as amyloid precursor protein (APP) metabolism, tau hyperphosphorylation, oxidative stress, and mitochondrial dysfunction. The amyloid cascade hypothesis proposes that the accumulation of neurotoxic amyloid-β (Aβ) species drives the neurodegeneration seen in AD [2]. APP is aberrantly cleaved by beta- and gamma-secretase enzymes, producing soluble Aβ peptides, including Aβ42, a form of Aβ used as a biomarker in many AD studies. These small peptides may aggregate into oligomers and then fibrils, finally forming extracellular amyloid plaques. Among the best-known single-gene causes of AD are autosomal dominant mutations in the APP gene. The amyloid cascade hypothesis has provided the rationale for several interventional drug targets.

Intraneuronal neurofibrillary tangles composed of tau protein are another pathological hallmark of AD. Tau is a microtubule-associated protein (MAP) that participates in the assembly and stabilization of microtubules, part of the axonal cytoskeleton [14]. In the disease state, excess phosphorylation can cause tau to detach from microtubules and aggregate with other tau molecules, leading to impaired axonal transport and synaptic disruption [15, 16].

Oxidative stress and damage by reactive oxygen species are implicated in Alzheimer pathophysiology. This is evidenced by increased lipid peroxidation, protein and DNA/RNA oxidation, as well as decreased antioxidant levels and antioxidant enzyme activity seen in AD brains [17]. Oxidative stress mechanisms are of particular interest when considering preventative therapies for AD as some of the changes indicative of brain oxidative stress were shown to predate the onset of symptomatic dementia in individuals with MCI [18]. Other potential mechanisms include inflammation, neurotransmitter dysfunction, and lipid metabolism disorders.

Dominantly inherited Alzheimer’s disease (DIAD) is a rare disorder that accounts for less than 1% of people with Alzheimer’s disease [19], yet it is an important population for prevention studies. People with DIAD carry mutations in the APP, presenilin 1 (PSEN1), or presenilin 2 (PSEN2) genes, which have roles in the production and processing of amyloid-β. Because these mutations carry a near-100% probability of causing dementia, and the age of onset can be predicted from family history and the specific mutation, prevention trials with DIAD participants can be designed efficiently [20]. Mutation carriers are also relatively young and have fewer comorbidities than people with late-onset sporadic AD, which allows clearer insight into disease progression and biomarkers.

The Dominantly Inherited Alzheimer Network (DIAN) and the DIAN Trials Unit (DIAN-TU) were established to study the safety and efficacy of interventions for DIAD and to develop biomarkers. The estimated years to symptom onset (EYO), derived from mutation, family history, and age, is an important metric in studying DIAD [21]. The shorter the estimated time to onset, the closer the participant is to developing functional impairment and dementia. The use of EYO in the DIAN-TU prevention trials was a major methodological advance because time to symptom onset could be better predicted and planned for. A metric based only on age, for example, would have been confounded by regional, environmental, and individual differences in access to the medical care needed even to receive a diagnosis, whereas accounting for family history captures variation in disease course among families with DIAD. However, because EYO is calculated partly from the parental age at illness onset, it cannot be applied consistently in studies of sporadic, late-onset dementia [21].
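A minimal sketch of the EYO calculation, assuming EYO is simply the participant’s current age minus an expected age at symptom onset, taken from the affected parent when known and otherwise from a mutation-specific mean. The function name and this fallback order are illustrative assumptions; DIAN’s operational definitions may differ in detail.

```python
from typing import Optional


def estimated_years_to_onset(age: float,
                             parental_onset_age: Optional[float] = None,
                             mutation_mean_onset_age: Optional[float] = None) -> float:
    """EYO = current age minus expected age at symptom onset.

    Assumption: the parent's age at onset is preferred, with a
    mutation-specific mean as fallback. Negative values mean the
    participant is that many years before expected onset.
    """
    expected = (parental_onset_age if parental_onset_age is not None
                else mutation_mean_onset_age)
    if expected is None:
        raise ValueError("need a parental or mutation-specific age at onset")
    return age - expected


# A 40-year-old whose affected parent developed symptoms at 48.
print(estimated_years_to_onset(40, parental_onset_age=48))  # -8: eight years before expected onset
```

Because EYO anchors each participant to a family-specific expected onset rather than to chronological age alone, participants of different ages can be aligned on a common disease timeline.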

In addition to autosomal dominant mutations that directly cause Alzheimer’s disease, several genetic variants are associated with increased risk of dementia. The best-known variants are in the gene for apolipoprotein E (APOE), which has important functions in lipid and cholesterol transport and metabolism. The APOE4 allele not only increases the risk of AD; APOE4 carriers also tend to have an earlier age of onset, and homozygous E4/E4 carriers are more likely to be affected than heterozygotes [22]. Although the mechanism underlying APOE4 risk is not fully understood, APOE4 appears to play an important role in Aβ metabolism, with carriers showing increased Aβ deposition in the brain [23]. As a risk and timing gene, APOE may affect both eligibility for and outcomes of prevention trials.

Other genetic modifiers implicated in late-onset AD and identified from genome-wide association studies (GWAS) include the catechol-O-methyltransferase (COMT), triggering receptor expressed on myeloid cells 2 (TREM2), and translocase of outer mitochondrial membrane 40 (TOMM40) genes [24]. COMT is an enzyme involved in dopamine metabolism, and some of its polymorphisms are linked to reduced cognitive performance [25]. COMT is also implicated in other psychiatric and cognitive disorders, such as schizophrenia. TREM2 is a transmembrane receptor on myeloid-lineage cells and is associated with inflammatory pathways in AD. AD brains with high expression of mutant TREM2 appear to show reduced phagocytosis of Aβ plaques as well as increased microglial clustering around these plaques [26]. The mechanisms by which TREM2 may augment AD pathology are not well understood.

TOMM40 encodes a translocase that is essential for protein transport into mitochondria. It is in strong linkage disequilibrium with the APOE genomic region, which makes its effects difficult to disentangle from those of APOE variants. Several studies of APOE E3/E3 carriers provide evidence that TOMM40 variants, including the TOMM40 ’523 polymorphism, are independently associated with hippocampal and cortical abnormalities and an increased likelihood of AD pathology [27,28,29]. Although the mechanism is not well elucidated, TOMM40 may contribute to the mitochondrial dysfunction associated with AD pathophysiology.

These genetic associations provide further clues to modifiers of dementia pathophysiology, drug targets for prevention, and target populations for more “personalized” therapy. Indeed, inclusion criteria based on both TOMM40 and APOE4 genotype were used to enrich sample selection in the TOMMORROW prevention trial, which assessed whether low-dose pioglitazone delays the onset of MCI due to AD [29]. It is also important to account for the increased risk in these populations, for example by genotyping and stratifying at randomization, to keep genetic risk balanced across treatment arms and minimize confounding.
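The stratification mentioned above can be sketched as permuted-block randomization run separately within strata defined by the number of APOE4 alleles (0, 1, or 2). This is a generic, hypothetical illustration: the class and function names, the two-arm 1:1 allocation, the block size of 4, and the “E3/E4” genotype notation are assumptions, not the procedure of TOMMORROW or any other trial discussed here.

```python
import random
from collections import defaultdict


def apoe4_allele_count(genotype: str) -> int:
    """Count APOE4 alleles in a genotype written like 'E3/E4' (assumed notation)."""
    return genotype.upper().split("/").count("E4")


class StratifiedBlockRandomizer:
    """Permuted-block randomization performed separately within each APOE4 stratum."""

    def __init__(self, arms=("active", "placebo"), block_size=4, seed=None):
        if block_size % len(arms) != 0:
            raise ValueError("block_size must be a multiple of the number of arms")
        self.arms = tuple(arms)
        self.block_size = block_size
        self.rng = random.Random(seed)
        # Assignments not yet used from the current block of each stratum.
        self.pending = defaultdict(list)

    def _new_block(self):
        # Each block holds every arm equally often, in random order.
        block = list(self.arms) * (self.block_size // len(self.arms))
        self.rng.shuffle(block)
        return block

    def assign(self, genotype: str) -> str:
        stratum = apoe4_allele_count(genotype)
        if not self.pending[stratum]:
            self.pending[stratum] = self._new_block()
        return self.pending[stratum].pop()


# Usage: arms are balanced within each stratum after every completed block.
randomizer = StratifiedBlockRandomizer(seed=2024)
for genotype in ["E3/E4", "E4/E4", "E3/E3", "E3/E4", "E4/E4"]:
    print(genotype, randomizer.assign(genotype))
```

Balancing within strata keeps, for example, APOE4 homozygotes from being concentrated in one arm by chance, which matters when genotype itself predicts both eligibility and the outcome.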

A range of other pathological targets are associated with cognitive impairment. Dementia with Lewy bodies (DLB) is characterized by neuronal cytoplasmic inclusion bodies of alpha-synuclein, associated with neuronal loss that leads particularly to dopaminergic and cholinergic deficits [30, 31]. In addition to forming the hyperphosphorylated tangles of AD, tau is implicated in the pathophysiology of several neurodegenerative conditions, including frontotemporal dementia (FTD), progressive supranuclear palsy, and corticobasal degeneration; autosomal dominant mutations of the microtubule-associated protein tau gene (MAPT) are an established cause of some chromosome 17-linked FTDs. In other forms of FTD, TAR-DNA-binding protein 43 (TDP-43) has been implicated, although the mechanisms by which it contributes to neurodegeneration remain unclear. Importantly, many of these pathologies may co-exist in any given type of dementia and may influence its neuropathological and clinical presentation.

Pharmacological Interventions in Primary and Secondary Prevention Trials

Ongoing research extends across a wide range of potential therapies, from anti-amyloid and anti-tau treatments to agents proposed to have anti-neuroinflammatory, neuroprotective, and neurorestorative effects, and to neurotransmitter modifiers and cognitive enhancers [32]. Many of these interventions derive from the current understanding of AD pathophysiology. This principle underlay the FDA marketing approval of acetylcholinesterase inhibitors in the 1990s, whose use derived from observations of cholinergic neuron loss and depletion of acetylcholine and its precursors in the brains of patients with dementia. The original phase 3 licensing trials of the four cholinesterase inhibitors that received marketing approval were notable for producing modest clinical improvements in patients with mild-to-moderate AD. Clinical trial evidence later emerged for modest benefit in both more severely and more mildly impaired AD patients, extending into the early-stage AD range [33,34,35]. Although symptomatic improvements delay the clinical signs of disease progression, they are, perhaps paradoxically, dismissed as lacking disease-modifying properties because they do not change the underlying pathophysiology of AD. The modest effects of acetylcholinesterase inhibitors have spurred efforts to find more effective therapies, with many prevention trials focusing on slowing the progression of dementia after onset.

In this section, we discuss selected interventions that have been used in prevention, and Table 3 gives an overview of selected trials. It has been difficult to prove the efficacy of any intervention for prevention, disease modification, or symptomatic improvement in dementia. Barriers include a relative dearth of druggable agents, many potential but few validated drug targets, and the complexity of planning and executing large trials. One strategy for prevention trials has been to investigate repurposed products, i.e., drugs already approved by the FDA or products marketed as food supplements. Examples include antihypertensive medications, estrogen and progesterone for postmenopausal women, vitamin E and selenium, omega-3 fatty acids, and nonsteroidal anti-inflammatory drugs [36,37,38,39]. These investigations did not show beneficial effects on cognitive function or dementia onset, and in some cases outcomes were worse with the intervention than with placebo [40].

A notable early prevention trial was the study of a standardized Ginkgo biloba extract conducted by DeKosky and colleagues [41]. Extracts of G. biloba have been used in traditional Chinese medicine for centuries and are taken in some parts of the world to preserve memory [41]. EGb-761, a standardized extract of G. biloba leaves, increases antioxidant enzyme activity in the hippocampus, striatum, and substantia nigra of rats; together with the finding of oxidative stress in the brains of AD patients, this provides the translational rationale for prevention trials [42]. The DeKosky trial concluded that EGb-761 at 120 mg twice a day was not effective in reducing the overall incidence of dementia or AD in elderly participants with normal cognition or MCI, nor did it slow cognitive decline in participants with MCI [41].

Another primary prevention strategy is the development of novel therapeutics against specific pathophysiological targets. Aβ vaccination introduces an Aβ antigen to activate an adaptive immune response, in theory leveraging the immune system to generate antibodies against amyloid-β in AD brains. Depending on the antigen used, these antibodies could interact with the pathological protein at several points during aggregation, leading to clearance at all stages from monomers to extracellular plaques. Another potential Aβ-lowering approach is to block production of pathological Aβ. Inhibition of β-site amyloid precursor protein cleaving enzyme 1 (BACE-1) aims to block the rate-limiting step of Aβ production: the initial cleavage of APP that, followed by gamma-secretase cleavage, ultimately yields neurotoxic species such as Aβ42. Primary and secondary prevention trials of BACE-1 inhibitors were stopped early because of cognitive toxicity, possibly involving unknown off-target effects.

The Generation Study enrolled cognitively unimpaired APOE4 homozygous carriers, at high risk of developing dementia before age 80, to investigate two anti-amyloid agents. Participants were genotyped and randomized to receive the anti-amyloid-β vaccine CAD106, the BACE-1 inhibitor umibecestat, or both. Differences in Aβ42 and Aβ40 levels were of interest. Neither agent was successful: off-target effects of umibecestat led to cognitive toxicity, and CAD106 produced low antibody titers and lacked efficacy.

Secondary and tertiary prevention trials are undertaken in patients with prodromal AD, MCI, or mild-to-moderate AD, with the aim of slowing progression or improving symptoms by altering the underlying pathophysiology (Table 4). Most phase 3 secondary prevention trials of monoclonal antibodies (mAbs) against Aβ require participants to be amyloid-positive on PET. Bapineuzumab, one of the first anti-Aβ antibodies to be tested, was designed to clear both soluble Aβ and aggregated amyloid fibrils. It failed to show effectiveness on its primary and secondary outcomes in both its phase 2 and phase 3 trials, although in a subset of participants with pre- and post-treatment Aβ-PET there was evidence that it cleared plaques [46]. Evidence emerged early, in an ascending-dose trial, that patients were developing MRI abnormalities, then interpreted as vasogenic edema, as well as confusion, cognitive worsening, or encephalopathy. This led to the concept of “amyloid-related imaging abnormalities” (ARIA) to describe MRI changes associated with amyloid-reducing therapies, most commonly vasogenic edema or effusion (ARIA-E) and microhemorrhages and hemosiderosis (ARIA-H) [47]. ARIA has proven an ongoing challenge in trials of Aβ antibodies, accounting for over a third of significant adverse events in most reported Aβ-fibril antibody therapies, particularly at doses that lower plaque more effectively.

Solanezumab is a monoclonal antibody designed to bind the mid-domain of Aβ and clear soluble monomers. Although it showed a favorable safety profile in three phase 3 trials, including the third trial limited to participants with mild AD (EXPEDITION 3), solanezumab failed to show clinical benefit on its primary outcomes after 80 weeks of treatment [48]. As a result, efforts to use it as therapy for patients with diagnosed AD dementia were abandoned. Solanezumab also showed no evidence of effectiveness in preventing symptom progression in trial participants at risk for DIAD [45]. There is, however, a large ongoing trial of solanezumab, the “A4” trial, in preclinical AD, defined as no or borderline cognitive impairment and a positive Aβ-plaque PET scan (see Tables 3 and 7).

The secondary prevention trials with the most notoriety are the two truncated phase 3, randomized, placebo-controlled trials of aducanumab, an anti-Aβ fibril antibody, in early-stage AD. These trials, stopped for futility, were the basis on which the FDA granted aducanumab accelerated marketing approval in June 2021. The trials, ENGAGE and EMERGE, each recruited about 1650 patients with prodromal AD or mild AD dementia. When the trials were stopped for futility, only about 55% of participants had had the opportunity to be treated for the planned 18 months. Post hoc efficacy analyses showed discrepant outcomes: one trial showed a slight effect in favor of placebo, and the other a small drug-placebo difference of −0.39 on the CDR-SB primary outcome. As expected, both trials showed markedly lowered amyloid plaque in the treatment groups. The inconsistent and, if present, very small effect in one of the trials did not warrant marketing approval. Nevertheless, in a controversial decision, the FDA granted aducanumab accelerated approval, which does not require a demonstrated clinical benefit, only an effect on a biomarker thought reasonably likely to predict clinical benefit. Following this decision, the Centers for Medicare & Medicaid Services decided not to provide reimbursement coverage to Medicare beneficiaries who might be prescribed aducanumab, reasoning that it was not a beneficial, safe, “reasonable and necessary” treatment. With the future of aducanumab uncertain, the FDA approval raises the question of whether treatments that alter amyloid-β plaques but show no clinical benefit can be considered successful treatments or secondary prevention measures.

Research appears to have shifted toward using anti-amyloid-β antibodies earlier in the disease course. Several trials over the past decade have investigated their role in primary and secondary prevention, including A4 (solanezumab), AHEAD 3–45 (lecanemab), and TRAILBLAZER-ALZ-3 (donanemab), in “preclinical AD” (see Table 7), pragmatically defined as a positive amyloid-β biomarker with no or very slight memory impairment (Table 4).

Non-Pharmacological Interventions for Prevention

Non-pharmacological interventions can be used in tandem with drug treatments for prevention, using similar clinical trial approaches. Multi-domain intervention trials aim to mitigate modifiable risk factors by promoting lifestyle changes through over-the-counter products, food supplements, nutrition, physical activity, and blood pressure management. Some of these interventions and trials are summarized in Table 5. Prevention trials that involve multiple interventions can be complicated: their design considerations differ from those of single-drug prevention trials, and they often have less stringent eligibility criteria. Non-pharmacological interventions are also attractive for prevention because multi-domain lifestyle modifications are widely available to patients worldwide and are hypothesized to act synergistically to reduce the risk of AD.

One of the early trials to take a more holistic approach to risk modification and AD prevention was the Multidomain Alzheimer Preventive Trial (MAPT). At sites across France, patients from general medical practices were randomized into four groups: docosahexaenoic acid (DHA) alone, placebo alone, DHA plus a multi-domain intervention, or placebo plus a multi-domain intervention. The multi-domain intervention consisted of physical and cognitive training, detailed nutritional advice, and close monitoring of comorbid medical conditions. Participants were followed for 3 years, with the primary outcome being memory decline as measured by the free and cued selective reminding test (FCSRT). The trial failed to show significant differences between any of the groups [53]. It also highlighted some challenges of multi-domain interventional trials, such as the difficulty of double-blinding when participants undergo intensive, supervised activity and training as part of a lifestyle intervention. Another consideration, particularly when investigating food supplements, is variation in participants’ background dietary intake, as participants may obtain DHA from food sources not controlled in the trial.

Another important multi-domain prevention trial is the Finnish Geriatric Intervention Study to Prevent Cognitive Impairment and Disability (FINGER), a randomized-controlled trial showing the potential of multi-domain lifestyle interventions to prevent cognitive decline in at-risk individuals. Here, “at-risk” was defined as a Cardiovascular Risk Factors, Aging, and Incidence of Dementia (CAIDE) Dementia Risk Score of 6 points or more, with cognitive performance at the mean or slightly lower than expected for age on selected items of the CERAD neuropsychological battery (CERAD-NB) [54, 55]. The intervention group received specific nutrition, exercise, and cognitive training programs, totaling several hours per week, together with close management of metabolic and vascular risk factors; the control group received general health advice. The primary outcome was change in cognition on a neuropsychological battery at 2 years, a short duration in the context of primary prevention. FINGER may be the only such trial to report significant improvement on its primary neuropsychological battery endpoint, but with a very small standardized effect size of 0.10 [56].
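To show how a composite score such as CAIDE turns risk factors into an eligibility threshold, here is a sketch of the basic CAIDE Dementia Risk Score (the model without APOE, range 0 to 15) combined with FINGER’s cut-off of 6 points. The point values reflect our reading of the original CAIDE publication and should be checked against it before any real use; the function names are hypothetical, and the sketch omits FINGER’s additional CERAD-based cognitive criteria.

```python
def caide_score(age: float, education_years: int, male: bool,
                systolic_bp: float, bmi: float, total_cholesterol_mmol: float,
                physically_active: bool) -> int:
    """Basic CAIDE Dementia Risk Score (0-15), model without APOE.

    Point values are assumptions to be verified against the original publication.
    """
    score = 0
    # Age: <47 -> 0, 47-53 -> 3, >53 -> 4 points.
    if age > 53:
        score += 4
    elif age >= 47:
        score += 3
    # Education in whole years: >=10 -> 0, 7-9 -> 2, 0-6 -> 3 points.
    if education_years <= 6:
        score += 3
    elif education_years <= 9:
        score += 2
    score += 1 if male else 0                           # sex
    score += 2 if systolic_bp > 140 else 0              # systolic BP in mmHg
    score += 2 if bmi > 30 else 0                       # body mass index in kg/m^2
    score += 2 if total_cholesterol_mmol > 6.5 else 0   # total cholesterol in mmol/L
    score += 0 if physically_active else 1              # physical activity
    return score


def meets_caide_threshold(score: int, threshold: int = 6) -> bool:
    """FINGER-style risk criterion (cognitive criteria not included)."""
    return score >= threshold


s = caide_score(age=60, education_years=8, male=True, systolic_bp=150,
                bmi=28, total_cholesterol_mmol=5.0, physically_active=False)
print(s, meets_caide_threshold(s))
```

A simple additive score like this makes eligibility reproducible across sites, but it also shows why such trials enroll heterogeneous participants: many different combinations of risk factors reach the same threshold.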

Some non-pharmacological trials focus not on multi-domain interventions but on specific modifiable risk factors. The Systolic Blood Pressure Intervention Trial (SPRINT) was a large, multicenter randomized-controlled trial comparing intensive (systolic blood pressure [SBP] < 120 mmHg) with standard (SBP < 140 mmHg) blood pressure control for its effect on cardiovascular disease risk. Embedded in the study was the SPRINT Memory and Cognition IN Decreased Hypertension (SPRINT-MIND) trial, which asked whether more intensive blood pressure control would affect the risk of MCI or dementia. Participants were eligible if they were 50 years or older, hypertensive, and at increased cardiovascular risk, but were excluded if they had type 2 diabetes or a history of stroke; these exclusions raise some concerns about external validity. Antihypertensive medications were used to achieve the blood pressure targets, but notably the study did not mandate any particular drug as long as SBP was in the target range. SPRINT was terminated early because intensive control (SBP < 120 mmHg) significantly reduced cardiovascular events, potentially leaving SPRINT-MIND underpowered [57]. SPRINT-MIND failed to show a significant difference on its primary outcome, onset of probable dementia, but reported modest reductions in MCI (hazard ratio [HR] = 0.8) and in the combined rate of MCI and probable dementia (HR = 0.9) in the intensive control group; these were secondary outcomes [58].

Although the evidence from SPRINT-MIND, as well as other studies, shows only small and uncertain effects of antihypertensives on risk reduction, the World Health Organization recommended antihypertensive treatment for hypertensive patients in its 2019 guidelines on reducing the risk of cognitive decline and dementia [59].
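Early termination matters because the power of a time-to-event comparison depends mainly on the number of outcome events observed. A minimal sketch using Schoenfeld’s approximation for a two-sided log-rank test, D = (z₁₋α/₂ + z₁₋β)² / [π(1 − π)(ln HR)²], where π is the fraction allocated to one arm, shows how many events are needed to detect hazard ratios of the size reported above. The function name and defaults (α = 0.05, 80% power, 1:1 allocation) are assumptions for illustration, not SPRINT-MIND’s actual design parameters.

```python
import math
from statistics import NormalDist


def required_events(hazard_ratio: float, alpha: float = 0.05,
                    power: float = 0.80, allocation: float = 0.5) -> int:
    """Schoenfeld approximation: events needed for a two-sided log-rank test."""
    z = NormalDist()  # standard normal distribution
    z_total = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    events = z_total ** 2 / (allocation * (1 - allocation) * math.log(hazard_ratio) ** 2)
    return math.ceil(events)


for hr in (0.8, 0.9):
    print(f"HR {hr}: about {required_events(hr)} events needed")
```

Detecting a hazard ratio of 0.9 requires roughly four and a half times as many events as detecting 0.8, so a trial whose follow-up is cut short can easily fall well short of the events needed for a dementia outcome.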

A number of trials have investigated exercise, particularly aerobic exercise, as a non-pharmacological primary prevention strategy for dementia. Several small trials in participants with MCI, with sample sizes of 50 to 86, showed modest cognitive benefit, and on that basis the American Academy of Neurology updated its practice guidelines to recommend regular exercise for this population [60, 61, 62]. There is also evidence in MCI of benefit from both aerobic exercise training and a less intensive “stretching and toning” comparison program, with both groups showing marginal improvement on neuropsychological testing, albeit with no significant change in imaging biomarkers [63]. However, two multicenter randomized-controlled trials with larger samples and longer interventions failed to show improved cognitive performance with aerobic exercise compared with control groups receiving health education in one study or usual care in the other [64, 65]. One of these, the NeuroExercise trial, did find improved cardiovascular fitness in the exercise group, which may confer uncertain but potential long-term benefits on the progression of dementia [65]. Despite the inconclusive evidence, these trials highlight exercise as a potentially modifiable risk factor that requires further exploration.

Diagnostic Frameworks for Eligibility Criteria in Prevention Trials

Eligibility criteria define the populations under investigation, and a standardized language for describing those populations is critical for investigators. Clinical presentations span a wide spectrum, from preclinical AD or MCI, which can be difficult to define, through to severe AD with significant cognitive and functional impairment. Prevention trials preferably recruit from cognitively normal but at-risk, MCI, or mild AD populations, so frameworks have been needed to describe these individuals consistently. Because it is almost impossible to identify cognitively unimpaired but potentially at-risk participants on clinical grounds, biomarkers have emerged in the research context and form key components of many diagnostic frameworks. Table 6 compares commonly used diagnostic criteria by disease state. Heterogeneity in eligibility criteria makes treatment trials difficult to interpret and compare.

The National Institute on Aging and the Alzheimer’s Association (NIA-AA) produced a diagnostic framework for AD in 2011 for use in either clinical or research settings. Before this, the 1984 National Institute of Neurological and Communicative Disorders and Stroke and the Alzheimer’s Disease and Related Disorders Association (NINCDS-ADRDA) criteria were the mainstay of diagnostic definition, though they were limited to the categories of “definite,” “probable,” “possible,” and “unlikely” Alzheimer’s disease dementia and required histopathological evidence for “definite” AD [70]. The 2011 NIA-AA framework aimed to account for advances in the understanding of the underlying biological processes and to include the preclinical and MCI stages of AD. Clinical history and neuropsychological testing formed the basis of this framework, with genetic and biomarker status increasing the certainty of an AD diagnosis. While adding the MCI due to AD and preclinical stages, these 2011 guidelines retained the classifications of “probable AD dementia,” “possible AD dementia,” “probable or possible AD dementia with evidence of the AD pathophysiological process,” and “dementia unlikely to be due to AD,” and were not intended to stage AD dementia once diagnosed [70, 71].

In 2018, an “NIA-AA research framework” was created, intended for use in research rather than clinical contexts. This marked a shift away from clinical history and signs and symptoms toward a biologically based definition, using the presence of amyloid-β (A), pathologic tau (T), and neurodegeneration (N) biomarkers in an “ATN” classification system [73]. The ATN framework creates a precise language to describe the pathology represented by imaging and fluid biomarkers obtained from study populations, and it potentially allows biomarkers to serve as surrogates for clinical ratings of illness severity in place of cognitive, behavioral, or functional assessment. Furthermore, it is easy to use and adaptable to future research landscapes, allowing any novel contributory pathophysiological process or biomarker to be added to the framework. Compared with other frameworks, however, ATN is reductionist, simplifying Alzheimer diagnosis to a checklist of biomarkers and clinical staging in which the biomarkers are presumed to be valid reflections of disease state. For example, a cognitively unimpaired individual found to be Aβ positive on a PET scan would qualify for a diagnosis of “preclinical AD,” even though there is a high likelihood that they will never develop cognitive or functional decline. This biological definition has been adopted by the FDA and incorporated into its 2018 AD guidelines, and it has the potential to change what is considered a clinical outcome and how clinical benefit is determined for regulatory and drug approval purposes [74].
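The “checklist” character of ATN can be made concrete by mapping binary biomarker status to the biomarker categories of the 2018 framework. This is a minimal sketch. It assumes each biomarker has already been dichotomized (the framework does not fix cut-points), and it ignores the separate cognitive staging axis. `atn_category` is a hypothetical helper name.

```python
def atn_category(a: bool, t: bool, n: bool) -> str:
    """Map binary A/T/N biomarker status to a 2018 NIA-AA biomarker category.

    Assumes each biomarker is already dichotomized as positive or negative.
    Any A+ profile falls on what the framework calls the Alzheimer's continuum.
    """
    if a:
        if t:
            return "Alzheimer's disease"  # A+T+N- or A+T+N+
        if n:
            return ("Alzheimer's and concomitant suspected "
                    "non-Alzheimer's pathologic change")  # A+T-N+
        return "Alzheimer's pathologic change"  # A+T-N-
    if t or n:
        return "non-Alzheimer's pathologic change"  # A- with T+ and/or N+
    return "normal AD biomarkers"  # A-T-N-


# A cognitively unimpaired person who is amyloid positive only:
print(atn_category(a=True, t=False, n=False))
```

Nothing in the mapping refers to symptoms or prognosis, which is why an amyloid-positive but cognitively unimpaired person is placed on the Alzheimer's continuum regardless of whether they will ever decline.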

The International Working Group (IWG) research criteria for the diagnosis of AD, first devised in 2007 and updated in 2014 (IWG-2), offer a more practical approach that uses both phenotypic and biomarker status and reserves diagnosis until clinical symptoms appear [72]. The IWG describes distinct categories along the spectrum of AD, from preclinical to prodromal AD (i.e., MCI due to AD) and finally to AD dementia. Notably, the IWG criteria split preclinical AD into two discrete groups, reflecting the uncertain predictive value of positive biomarkers in cognitively unimpaired individuals. The first, “asymptomatic at-risk for AD,” describes individuals with positive biomarkers but no certainty that they will go on to develop AD; the second, “presymptomatic AD,” describes those with known autosomal-dominant mutations, who are virtually certain to develop AD. This “new lexicon” for AD aimed to provide a frame of reference that bridges clinical and research settings and supports the distinction between “Alzheimer’s disease” and “Alzheimer’s pathology” [75].
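The IWG split of the preclinical stage can be expressed as a short decision rule. The sketch below is a deliberate simplification. It omits atypical and mixed presentations and the specific biomarker criteria. It treats a proven autosomal-dominant mutation as evidence of AD pathology, and it uses the label “no AD diagnosis” as a hypothetical placeholder for everyone not classified. `Cognition` and `iwg2_category` are illustrative names, not part of the criteria.

```python
from enum import Enum


class Cognition(Enum):
    UNIMPAIRED = "cognitively unimpaired"
    MCI = "mild cognitive impairment"
    DEMENTIA = "dementia"


def iwg2_category(cognition: Cognition, ad_biomarker_positive: bool,
                  autosomal_dominant_mutation: bool) -> str:
    """Simplified IWG-2 style placement along the AD spectrum."""
    if cognition is Cognition.UNIMPAIRED:
        if autosomal_dominant_mutation:
            return "presymptomatic AD"            # progression virtually certain
        if ad_biomarker_positive:
            return "asymptomatic at-risk for AD"  # progression not assured
        return "no AD diagnosis"                  # placeholder label
    # Symptomatic: diagnosis needs in vivo evidence of AD pathology.
    if not (ad_biomarker_positive or autosomal_dominant_mutation):
        return "no AD diagnosis"                  # placeholder label
    if cognition is Cognition.MCI:
        return "prodromal AD"
    return "AD dementia"


print(iwg2_category(Cognition.UNIMPAIRED, ad_biomarker_positive=True,
                    autosomal_dominant_mutation=False))
```

In contrast to the ATN mapping, the same positive biomarker leads to different labels depending on genetic status and symptoms.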

Methods and Outcomes in Prevention Trials

A key consideration for any clinical trial is its methodology and measurable outcomes. Individual clinical rating scales such as the ADAS-cog and CDR-sb have served as common standards for quantifying cognitive improvement or decline in cohort studies such as the Alzheimer’s Disease Neuroimaging Initiative and in the Uniform Data Set shared among the 38 AD Research Centers. Yet these scales have been criticized for not capturing the range of potential treatment-induced outcomes in trials. Composite scores such as the iADRS, ADCOMS, and PACC attempt to address these shortcomings by using weighted averages of selected scores [76]. Clinical endpoints such as the onset of MCI or dementia are also used, as in the TOMMORROW trial. These require more holistic clinical judgment and are often overseen by an adjudication committee [77, 80].
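The general idea behind such composites can be sketched as a weighted mean of standardized component scores. This is a generic illustration, not the published construction or weighting of the iADRS, ADCOMS, or PACC. The component names, data, and equal default weights are hypothetical, and each component is standardized against the supplied sample as a stand-in for a baseline reference group.

```python
from __future__ import annotations

from statistics import mean, stdev


def composite_scores(components: dict[str, list[float]],
                     higher_is_better: dict[str, bool],
                     weights: dict[str, float] | None = None) -> list[float]:
    """Weighted mean of z-scores across components, one value per participant.

    Components where a higher raw score is worse (e.g. error counts) are
    sign-flipped so that a higher composite always means better performance.
    """
    names = list(components)
    weights = weights or {name: 1.0 for name in names}
    total_weight = sum(weights[name] for name in names)
    n_participants = len(components[names[0]])
    standardized = {}
    for name in names:
        values = components[name]
        mu, sd = mean(values), stdev(values)
        sign = 1.0 if higher_is_better[name] else -1.0
        standardized[name] = [sign * (v - mu) / sd for v in values]
    return [
        sum(weights[name] * standardized[name][i] for name in names) / total_weight
        for i in range(n_participants)
    ]


# Hypothetical scores for four participants on two components.
scores = {
    "memory_recall": [10, 12, 8, 14],  # higher is better
    "error_count": [5, 3, 7, 1],       # higher is worse
}
direction = {"memory_recall": True, "error_count": False}
print(composite_scores(scores, direction))
```

Because the composite is built only from its parts, it inherits their measurement properties, including any non-interval scaling of the component scores.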

It is remarkable that the outcomes used in earlier symptomatic trials, for example the cholinesterase inhibitor trials of the 1990s, continue to be used in long-term, early-stage, and prevention trials. These include the ADAS-cog, in both the 11-item version and the extended 13- or 14-item versions that add delayed memory and executive function tasks; activities of daily living scales, including the ADCS-ADL; and more comprehensive or global assessments such as the Clinical Dementia Rating scale and the clinician’s global impression of change (CGIC). The ADAS-cog, first introduced in the 1980s, remains the primary neuropsychological outcome for most trials and is the backbone of the newer composite scales. Other neurocognitive scales and subscales may be added.

The ADAS-cog was used in the first clinical trials of cholinesterase inhibitors, which generally showed a drug-placebo difference of about 2 to 3 points in trials lasting 12 to 30 weeks [76]. Although considered an uncertain and trivial effect at the time, this difference is now considered large compared with the effects seen with amyloid antibodies and other putative disease-modifying drugs. The ADAS-cog proved sufficient to show symptomatic effects in acetylcholinesterase inhibitor trials, while showing no overall significant effect in the memantine trials in mild-to-moderate AD. However, its metrics, mode of administration, and limitations need to be understood. For example, its error scores are not interval-scaled, ordinal, or hierarchical: a change of a given number of points at one end of the scale does not mean the same as an equal change in the middle, and a patient could score better on the ADAS-cog than another patient and yet be more cognitively impaired. Errors in test administration are common and often systematic, since certain errors are correlated with specific sites. This raises real questions about what drug-placebo differences in scores mean; for example, it is unclear whether a 3-point mean difference between drug and placebo is clinically meaningful. Other neuropsychological batteries have been offered to replace the ADAS-cog, also without adequate consideration of the characteristics or clinical meaning of their scoring; they have not been fully accepted, although they may be more sensitive to change in mild AD.

Table 7 summarizes trial design features. CSF tau changes have been shown to occur around 15 years before symptom onset [21, 78]. Eligibility ranges from asymptomatic, preclinical participants in primary prevention studies (who are either amyloid biomarker positive or, where amyloid biomarkers are not required, considered “at risk”) to participants with mild dementia in secondary or tertiary prevention. All secondary and tertiary prevention trials rely on amyloid biomarkers for eligibility, and some also require tau markers. As above, interventions range from psychosocial and multi-domain approaches to nutritional supplements, repurposed drugs, and newly developed experimental medications and antibodies. Sample sizes are much larger for preclinical trials, ranging from 1000 to over 5000, and proportionately smaller for secondary and tertiary prevention trials in prodromal and mild dementia. Trial durations are similarly much longer for preclinical than for prodromal trials.

Why Most Prevention Clinical Trials are Challenging

Reasons that primary and secondary prevention trials fail include, first and foremost, that the intervention is ineffective. Other reasons concern scientific inference, trial design, outcomes, assumptions, and execution. A main consideration is that the observations informing prevention approaches are made in cohort, population-based, or epidemiologic samples. The risks, pathology, and pathological progression observed there will likely not translate to controlled treatment trials or experiments, in which study samples are highly selective [79]. Moreover, most of these findings or observations are correlative, not causative. Observing plaques several years before symptoms, or observing that no one develops Alzheimer’s disease without plaques, does not mean that plaques are causative or valid disease targets.

Imprecision in estimating the intervention and its dosage also undermines the ability to detect effects on outcomes, and it is compounded by measurement errors that can misclassify participants’ eligibility or risk. There is a dearth of large, long-term RCTs that could serve as models for dementia prevention trials, and it may be unrealistic to expect that an RCT could be implemented to address the most important therapeutic questions. The closest models are large trials intended for other illnesses and outcomes, such as cancer and cardiovascular disease, in which a cognitive or dementia outcome sub-study is nested. In these, participants are selected for their risk of the other illness, not for dementia or Alzheimer’s disease. As there is some evidence that people with cancer, or those taking certain antineoplastic or anti-inflammatory drugs, may have some protection against late-life cognitive impairment, it is easy to see how dementia trials nested in very large trials for other conditions can be misleading (Table 3). Furthermore, once an experimental treatment has failed to prove effective, there is a gray area between trying the medication at even earlier time points and giving up altogether.

Randomized clinical trials in which the outcomes are clinical endpoints or stages, such as MCI or dementia, are relatively uncommon. The TOMMORROW trial (Table 3) is an example in which the outcome was the onset of “MCI due to AD,” adjudicated by committee. Discrete clinical endpoints, stages, or diagnoses are often not used as outcomes because such trials require a large scale and investment. The TOMMORROW trial originally planned to enroll 5800 participants but was terminated early after only 3494 had been enrolled. Far fewer MCI endpoints than expected appeared to occur early in follow-up, possibly disheartening the investigators [80]. Although cognitively unimpaired people in their 70s are at higher risk of developing dementia, only a small proportion will develop it [81], and this proportion is generally lower when actual clinical trials are undertaken. The research community may obtain more meaningful results by designing prevention trials informed by pooled data from previous clinical trials.
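The arithmetic behind an endpoint shortfall is simple: expected endpoints scale with enrollment, annual conversion risk, and follow-up time. The sketch below assumes a constant, purely hypothetical 2% annual risk of conversion over 5 years and ignores dropout and death. It does not reproduce the TOMMORROW trial's actual planning assumptions; only the two enrollment figures come from the text.

```python
def cumulative_incidence(annual_rate: float, years: float) -> float:
    """Probability of reaching the endpoint within `years`, assuming a
    constant annual risk and no competing risks or dropout."""
    return 1.0 - (1.0 - annual_rate) ** years


def expected_events(enrolled: int, annual_rate: float, years: float) -> float:
    """Expected number of participants reaching the endpoint."""
    return enrolled * cumulative_incidence(annual_rate, years)


RATE, YEARS = 0.02, 5  # hypothetical annual conversion rate and follow-up
for enrolled in (5800, 3494):  # planned vs. actual TOMMORROW enrollment
    events = expected_events(enrolled, RATE, YEARS)
    print(f"{enrolled} enrolled -> about {events:.0f} expected endpoints")
```

If the true conversion rate in trial volunteers is lower than the rate assumed from cohort data, the shortfall from reduced enrollment compounds with the shortfall from fewer conversions.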

Designers of prevention trials use a range of information to help plan trials and to determine the extent of linkage between an intervention and its effect on a dementia-related outcome. Sources include animal models, pilot trials with small samples and short durations, longer-term tertiary prevention trials, and observational cohort studies such as the Alzheimer’s Disease Neuroimaging Initiative (ADNI) or the National Health and Nutrition Examination Survey [82]. These observational data can contribute to planning dose–response relationships, proposed interventions, and clinically relevant outcomes. Cohort studies can provide information over a longer period than any planned prevention trial and can suggest outcomes that may not have been realizable in previous clinical trial designs. They may also reveal different information on progression and outcomes than randomized interventional trials. However, community-dwelling people at risk for dementia, passively observed over many years in non-regimented environments, may not be equivalent to clinical trial volunteers who agree to follow trial rules and are followed for shorter periods. Studies that exemplify this include Brookmeyer’s national estimates of Alzheimer’s disease and the Rotterdam study’s rates of dementia by age or by microhemorrhages [83, 84]. In clinical trials, ages are likely to be constrained, and participants tend to be healthier than the overall at-risk population because of restrictive inclusion criteria.

Targeted trials may control population eligibility criteria and identify priorities and opportunities for interventions in order to define specific hypotheses [85]. A potential threat to the assumption that a cohort’s outcome (for example, in the ADNI cohort) predicts a randomized trial’s outcome is that cohorts are relatively unconstrained and shaped by choice, environment, participant preference, and convenience, whereas trial populations are more restricted and interventions are randomly assigned. For example, an RCT may include only participants with a positive amyloid PET scan and later find that fewer participants than expected from observational data meet study criteria or progress to the trial endpoint. Prevention trials will require adjustment for environmental factors to obtain a valid estimate of the treatment effect on outcomes such as time to dementia.

Poor study design, such as non-specific endpoints, inappropriate or overly constrained eligibility criteria, and small sample sizes, contributes to the challenge of prevention trials. Endpoint selection is difficult, and poor selection makes the findings hard to interpret. Moreover, non-specific endpoints have been associated with increased costs of biomedical research [86]. In a standard clinical trial, a single endpoint may not capture much of an intervention’s clinically meaningful or beneficial effects. It is therefore remarkable that the endpoints for prevention trials are, by and large, the same as those for the generally shorter symptomatic trials and have not changed over 30 years. The ADAS-cog, MMSE, ADL scales, CDR, and global scales have remained the same. The only subtle change is that the ADAS-cog or the CDR might be designated as the primary outcome, with the other as a secondary outcome. More recently, these scales have been combined into “composite” scales that join the ADAS-cog with the ADLs, or elements of the CDR with items of the ADAS-cog and MMSE, sometimes with an added memory test bolted on. These sum-of-the-parts outcomes are, of course, no different from the parts themselves and are questionable advances [76].

Yet setting up primary, secondary, and exploratory outcomes often leads to confusion and multiplicity when the primary endpoint is negative and fails to support the main hypothesis. In this circumstance, secondary or tertiary outcomes often misdirect rather than support novel research questions. To be of value, an endpoint should capture the clinical outcome accurately and be easily measured as part of routine clinical care, which is usually not the case in Alzheimer’s or dementia trials [87].
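One common way to pre-empt this ambiguity is a fixed-sequence (hierarchical) testing procedure; we offer it as an illustration, not as a method the trials discussed here used. Endpoints are ranked before unblinding, each is tested at the full α, and testing stops at the first non-significant result. This keeps the family-wise error rate at α and makes explicit that a negative primary outcome ends confirmatory claims. The function name and p-values below are hypothetical.

```python
from __future__ import annotations


def fixed_sequence_test(ordered_pvalues: list[tuple[str, float]],
                        alpha: float = 0.05) -> list[str]:
    """Return the endpoints that can be declared significant under
    fixed-sequence testing, given (name, p-value) pairs in pre-specified order."""
    rejected = []
    for name, p in ordered_pvalues:
        if p > alpha:
            break  # first non-significant endpoint: all later ones are untestable
        rejected.append(name)
    return rejected


# Hypothetical results: negative primary, nominally "significant" secondary.
results = [("primary: dementia onset", 0.21), ("secondary: MCI onset", 0.01)]
print(fixed_sequence_test(results))
```

Under this rule the nominally significant secondary outcome supports no claim. It can only generate a hypothesis for a future trial.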

With regard to eligibility criteria, a balance must be struck: criteria need to be specific enough to select a population in which a treatment effect can be expected and detected, but not so strict as to hamper enrollment or limit the external validity of the trial. If the sample size is too small, the study will be underpowered. If it is too large, recruitment may take too long, unnecessarily extending the experiment and delaying evidence synthesis. Including known risk factors for dementia and MCI in the eligibility criteria for prevention studies may also support more efficient sample sizes and shorter follow-up periods while still capturing the proposed treatment effect. An effective design lays the groundwork for stronger statistical tests when analyzing the results.
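How strongly sample size depends on the expected effect can be shown with the usual normal-approximation formula for comparing two means, inflated for anticipated dropout. The standard deviation of 6 points and the differences of 3 and 1.5 points are hypothetical values chosen to echo the 2- to 3-point ADAS-cog differences described earlier. They are not taken from any trial, and `n_per_arm` is an illustrative helper name.

```python
import math
from statistics import NormalDist


def n_per_arm(difference: float, sd: float, alpha: float = 0.05,
              power: float = 0.80, dropout: float = 0.0) -> int:
    """Participants per arm for a two-sided comparison of two means
    (normal approximation), inflated for an anticipated dropout fraction."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    n = 2 * ((z_alpha + z_beta) * sd / difference) ** 2
    return math.ceil(n / (1.0 - dropout))


for diff in (3.0, 1.5):  # hypothetical drug-placebo differences in points
    print(f"difference {diff}: {n_per_arm(diff, sd=6.0)} per arm, "
          f"{n_per_arm(diff, sd=6.0, dropout=0.2)} with 20% dropout")
```

Halving the expected difference roughly quadruples the required sample. Enrolling participants more likely to progress (a larger expected difference) is one way to keep trials feasible.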

Participant recruitment for dementia trials is challenging. In many trials, recruitment is prolonged over years and sites fail to meet enrollment goals [88, 89]. Recruitment methods are often ad hoc, left to individual sites or delegated to centralized advertising or a recruitment agency. Finally, it is underappreciated that the eligibility criteria used in dementia trials inherently tend to limit prospective participants to those who are more highly educated, healthier, wealthier, and less culturally heterogeneous [90, 91]. This is a problem for both the internal and external validity of a trial and for the ultimate acceptance of its outcomes.

Future Considerations

Moving forward, we need to advance prevention trial design more directly than we do currently. Drugs and targets need some degree of validation before they are employed in large, long-duration trials: it is necessary to know that the drug engages its target and induces an effect. Some very large trials have been launched without knowing whether the drug or intervention being tested actually exerts a biological effect. In other trials, doses were increased midway because it became apparent that the initially assumed dosing was suboptimal. Changing a trial that is under way risks substantially undermining its validity unless the change is carefully pre-planned as part of an adaptive design. An over-reliance on past development programs and trial methods, even though they have mostly been negative and have shown methodological limitations, tends to recapitulate previous errors. The abundance of so-far unsuccessful secondary prevention trials, mainly with amyloid antibodies, demonstrates this. Small phase 2 proof-of-concept trials employing biomarkers as intermediate outcomes may also help to filter out unsuccessful interventions before time and resources are invested in large phase 3 and 4 trials.

Simplified diagnostic frameworks and eligibility criteria are needed. Prevention trials are intended as the equivalent of phase 3 or 4 trials, so in principle participants are expected to represent typical patients with the illness. The substantial majority of cognitively unimpaired, at-risk participants will not progress over the duration of a primary prevention trial. Similarly, most participants with mild cognitive impairment, with or without an amyloid or tau biomarker, will not meaningfully progress over the typical 1.5 years of treatment and follow-up in secondary prevention trials. Applying complex eligibility criteria tends to further limit or skew the sample toward relatively healthier participants and further lowers the proportion who will progress. Elaborate eligibility criteria often result in the inclusion of a relatively healthier, socially advantaged, and non-diverse group in preference to a more representative one. Recognizing this, less weight ought to be placed on bracketing eligible participants within narrow ranges of cognitive function (not too high, not too low), on requiring an educational threshold, or on imposing performance demands.

Improved understanding of the causes and disease processes of dementia is associated with an expansion in novel potential therapeutics. Future trials may therefore employ treatments that are less widely used and understood than the many repurposed treatments tested previously (Tables 3, 4, and 5 above). It is important to know their clinical pharmacology, potential therapeutic levels, tolerability, and longer-term safety before launching large and long trials.

Simpler, more relevant outcomes than many of the newer composite scores need to be utilized [76]. Observational studies are already underway for the validation of some of these ratings, defining inter-test reliability and correlation with disease symptom progression [92]. It is important to ensure that statistically significant changes in these ratings correlate with clinically meaningful differences in the participants.

Current designs do not necessarily need to be replaced, because, empirically, it would take a significant amount of time and effort to find the balance between generalizability and size. A sustainable approach would typically involve considering several trial designs: targeted trials, platform studies, cluster randomized trials, stepped wedge trials, and futility trials. Targeted trials might be considered when a characteristic, perhaps a biomarker, predicts which potential participants would respond better to the intervention than to a control or placebo treatment. A simple historical example is trials that select only APOE4 carriers when the intervention is expected to preferentially affect such participants. With the right treatment and the right target, targeted designs might be relatively more efficient than non-targeted designs, but their efficiency depends on the prevalence of the subgroup that responds preferentially and on the distribution of the treatment effect across subgroups.
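The dependence on subgroup prevalence can be illustrated with a simple sample size comparison. The sketch assumes that a marker (such as APOE4 status in the example above) perfectly identifies responders and that the outcome SD is the same for everyone. It also assumes the effect in non-responders is zero unless specified. The prevalence of 25%, effect of 3 points, and SD of 6 are hypothetical, and the function names are illustrative.

```python
import math
from statistics import NormalDist


def n_per_arm(effect: float, sd: float, alpha: float = 0.05,
              power: float = 0.80) -> int:
    """Per-arm size for a two-sided comparison of two means (normal approximation)."""
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    return math.ceil(2 * (z * sd / effect) ** 2)


def compare_designs(prevalence: float, effect_in_responders: float, sd: float,
                    effect_in_others: float = 0.0) -> dict:
    """Randomized and screened totals for targeted vs. untargeted designs."""
    # Average effect that an all-comers (untargeted) trial would estimate;
    # must be nonzero for the untargeted trial to be sized at all.
    diluted = (prevalence * effect_in_responders
               + (1 - prevalence) * effect_in_others)
    untargeted = 2 * n_per_arm(diluted, sd)
    targeted = 2 * n_per_arm(effect_in_responders, sd)
    return {
        "untargeted_randomized": untargeted,
        "targeted_randomized": targeted,
        # Only a `prevalence` fraction of screened people are marker positive.
        "targeted_screened": math.ceil(targeted / prevalence),
    }


print(compare_designs(prevalence=0.25, effect_in_responders=3.0, sd=6.0))
```

Under these assumptions the untargeted trial needs roughly 1/prevalence² times as many randomized participants, while the targeted trial must screen about 1/prevalence times as many people as it randomizes. A nonzero effect in non-responders narrows the gap.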

Platform trials are a form of adaptive trial design in which multiple interventions can be compared simultaneously under one protocol. These designs may share control and placebo groups. They might employ sequential interim analyses to help identify ineffective treatments so that those arms can be stopped and new treatments started. Recruitment may be more efficient because the several treatments are conducted under the same protocol. Along with the several interim analyses, however, come various amendments needed to adjust the interventions or informed consent documents for the various treatments.
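Part of the recruitment efficiency of a shared control arm is simple counting. The sketch compares total enrollment for separate two-arm trials with one platform trial in which all treatments share a single control arm. It assumes equal allocation, the same per-arm size everywhere, and concurrent enrollment. It ignores multiplicity adjustments and the common practice of enlarging the shared control arm. The per-arm size and number of treatments are hypothetical.

```python
def total_enrollment(n_per_arm: int, n_treatments: int) -> dict:
    """Total participants for `n_treatments` separate two-arm trials versus
    one platform trial whose treatments share a single control arm."""
    separate = n_treatments * 2 * n_per_arm    # every trial recruits its own controls
    platform = (n_treatments + 1) * n_per_arm  # one control arm serves all treatments
    return {"separate_trials": separate, "platform": platform}


# Hypothetical: three candidate treatments, 200 participants per arm.
print(total_enrollment(n_per_arm=200, n_treatments=3))
```

The saving grows with the number of treatments, since each additional arm adds only its own participants rather than a new control group.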

Cluster randomized trials forgo the randomization of individual patients, instead identifying and randomizing existing groups (for example, residents of a specific assisted living facility or patients of a clinical practice) to treatment or control. These approaches can be substantially more efficient. Risks of bias can be mitigated by using outcome assessors who are blinded to the clinic group or even to the trial itself. Stepped wedge cluster randomized-controlled trials employ cluster randomization but, rather than using parallel group assignment, cross clusters over from control to intervention at fixed intervals, or “steps.” A primary or secondary prevention trial might then progress from an initial period in which few or none of the clusters receive the intervention (a delayed start to treatment) to more clusters crossing over to the intervention with each step. In this way, clusters contribute outcomes under both control and intervention conditions.
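Any efficiency gained by randomizing clusters is partly offset by a statistical cost. Outcomes within a cluster are correlated, so the variance of the treatment estimate is inflated by the design effect 1 + (m − 1)ρ, where m is the cluster size and ρ the intracluster correlation (ICC). The sketch computes that factor under equal cluster sizes and lays out a stepped wedge schedule as a clusters × periods matrix. The cluster size, ICC, number of clusters and steps, and random seed are all hypothetical.

```python
from __future__ import annotations

import random


def design_effect(cluster_size: int, icc: float) -> float:
    """Variance inflation from randomizing clusters rather than individuals
    (equal cluster sizes assumed)."""
    return 1.0 + (cluster_size - 1) * icc


def stepped_wedge_schedule(n_clusters: int, n_steps: int,
                           seed: int = 0) -> list[list[int]]:
    """Clusters x periods matrix of assignments (0 = control, 1 = intervention).

    Period 0 is an all-control baseline. Clusters are put in random order and
    split as evenly as possible across `n_steps` crossover periods; once a
    cluster crosses over it stays on the intervention.
    """
    order = list(range(n_clusters))
    random.Random(seed).shuffle(order)
    schedule = [[0] * (n_steps + 1) for _ in range(n_clusters)]
    for rank, cluster in enumerate(order):
        crossover = 1 + rank * n_steps // n_clusters  # a period in 1..n_steps
        for period in range(crossover, n_steps + 1):
            schedule[cluster][period] = 1
    return schedule


# Hypothetical: 20 residents per facility and an ICC of 0.05.
print(f"design effect: {design_effect(20, 0.05):.2f}")
for row in stepped_wedge_schedule(n_clusters=6, n_steps=3, seed=1):
    print(row)
```

Each row starts under control and ends under intervention, which is how every cluster contributes outcomes under both conditions. An individually randomized sample size would be multiplied by the design effect to obtain the cluster trial's requirement.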

Trials need to be rigorous in maintaining randomized treatment allocation and minimizing the potential for bias. As discussed above, the large, traditional trials usually conducted by industry and large academic groups can have a big impact; however, as we have seen, negative results are the rule and positive outcomes are controversial. The controversy often stems from planning for small, clinically unimportant effects and from not accepting the result of the test on the primary outcome. Outcomes of big trials may be less certain in part because expected effect sizes are small, dropouts are numerous, compliance demands go unmet, and p values exceed 0.05. Moreover, increasing sample size and using double-blinded treatment allocation do not necessarily ensure rigor.

Conclusion

Most prevention trials for dementia or Alzheimer’s disease are single pharmacologic interventions targeting a point on the amyloid cascade. Several multi-domain trials have considered more complex interventions, such as controlling lifestyle factors, blood pressure, diet and weight, and activity. The main challenges for both kinds of trials are the variation in and validity of diagnostic frameworks, trial methods, eligibility criteria, and measured outcomes. In addition, it is difficult to design trials of adequate duration to modify risk factors, since most people in the demographic of interest do not develop dementia within any reasonable time frame, which makes it challenging to recruit at the scale of large RCTs. We hope this summary of the current state of prevention trials will stimulate discussion about the future of AD treatment.

References

Patterson C. World Alzheimer report. Alzheimers Dis Int. 2018.

Hardy JA, Higgins GA. Alzheimer’s disease: the amyloid cascade hypothesis. Science. 1992;256:184–5. https://doi.org/10.1126/science.1566067.

Karran E, Mercken M, De Strooper B. The amyloid cascade hypothesis for Alzheimer’s disease: an appraisal for the development of therapeutics. Nat Rev Drug Discov. 2011;10:698–712. https://doi.org/10.1038/nrd3505.

Blessed G, Tomlinson BE, Roth M. The association between quantitative measures of dementia and of senile change in the cerebral grey matter of elderly subjects. Br J Psychiatry J Ment Sci. 1968;114:797–811. https://doi.org/10.1192/bjp.114.512.797.

Brookmeyer R, Evans DA, Hebert L, Langa KM, Heeringa SG, Plassman BL, et al. National estimates of the prevalence of Alzheimer’s disease in the United States. Alzheimers Dement J Alzheimers Assoc. 2011;7:61–73. https://doi.org/10.1016/j.jalz.2010.11.007.

Livingston G, Huntley J, Sommerlad A, Ames D, Ballard C, Banerjee S, et al. Dementia prevention, intervention, and care: 2020 report of the Lancet Commission. Lancet Lond Engl. 2020;396:413–46. https://doi.org/10.1016/S0140-6736(20)30367-6.

Raz L, Knoefel J, Bhaskar K. The neuropathology and cerebrovascular mechanisms of dementia. J Cereb Blood Flow Metab. 2016;36:172–86. https://doi.org/10.1038/jcbfm.2015.164.

Oliver DMA, Reddy PH. Small molecules as therapeutic drugs for Alzheimer’s disease. Mol Cell Neurosci. 2019;96:47–62. https://doi.org/10.1016/j.mcn.2019.03.001.

Norton S, Matthews FE, Barnes DE, Yaffe K, Brayne C. Potential for primary prevention of Alzheimer’s disease: an analysis of population-based data. Lancet Neurol. 2014;13:788–94. https://doi.org/10.1016/S1474-4422(14)70136-X.

Lin FR, Niparko JK, Ferrucci L. Hearing loss prevalence in the United States. Arch Intern Med. 2011;171:1851–3. https://doi.org/10.1001/archinternmed.2011.506.

Loughrey DG, Kelly ME, Kelley GA, Brennan S, Lawlor BA. Association of age-related hearing loss with cognitive function, cognitive impairment, and dementia: a systematic review and meta-analysis. JAMA Otolaryngol Head Neck Surg. 2018;144:115–26. https://doi.org/10.1001/jamaoto.2017.2513.

Ray J, Popli G, Fell G. Association of cognition and age-related hearing impairment in the English longitudinal study of ageing. JAMA Otolaryngol Head Neck Surg. 2018;144:876–82. https://doi.org/10.1001/jamaoto.2018.1656.

Peters R, Ee N, Peters J, Booth A, Mudway I, Anstey KJ. Air pollution and dementia: a systematic review. J Alzheimers Dis JAD. 2019;70:S145–63. https://doi.org/10.3233/JAD-180631.

Weingarten MD, Lockwood AH, Hwo SY, Kirschner MW. A protein factor essential for microtubule assembly. Proc Natl Acad Sci U S A. 1975;72:1858–62. https://doi.org/10.1073/pnas.72.5.1858.

Alonso AC, Grundke-Iqbal I, Iqbal K. Alzheimer’s disease hyperphosphorylated tau sequesters normal tau into tangles of filaments and disassembles microtubules. Nat Med. 1996;2:783–7. https://doi.org/10.1038/nm0796-783.

Mandelkow E-M, Stamer K, Vogel R, Thies E, Mandelkow E. Clogging of axons by tau, inhibition of axonal traffic and starvation of synapses. Neurobiol Aging. 2003;24:1079–85. https://doi.org/10.1016/j.neurobiolaging.2003.04.007.

Wang X, Wang W, Li L, Perry G, Lee H, Zhu X. Oxidative stress and mitochondrial dysfunction in Alzheimer’s disease. Biochim Biophys Acta. 2014;1842:1240–7. https://doi.org/10.1016/j.bbadis.2013.10.015.

Praticò D, Clark CM, Liun F, Rokach J, Lee VY-M, Trojanowski JQ. Increase of brain oxidative stress in mild cognitive impairment: a possible predictor of Alzheimer disease. Arch Neurol. 2002;59:972–6. https://doi.org/10.1001/archneur.59.6.972.

Van Cauwenberghe C, Van Broeckhoven C, Sleegers K. The genetic landscape of Alzheimer disease: clinical implications and perspectives. Genet Med. 2016;18:421–30. https://doi.org/10.1038/gim.2015.117.

Ryman DC, Acosta-Baena N, Aisen PS, Bird T, Danek A, Fox NC, et al. Symptom onset in autosomal dominant Alzheimer disease: a systematic review and meta-analysis. Neurology. 2014;83:253–60. https://doi.org/10.1212/WNL.0000000000000596.

Bateman RJ, Xiong C, Benzinger TLS, Fagan AM, Goate A, Fox NC, et al. Clinical and biomarker changes in dominantly inherited Alzheimer’s disease. N Engl J Med. 2012;367:795–804. https://doi.org/10.1056/NEJMoa1202753.

Corder EH, Saunders AM, Strittmatter WJ, Schmechel DE, Gaskell PC, Small GW, et al. Gene dose of apolipoprotein E type 4 allele and the risk of Alzheimer’s disease in late onset families. Science. 1993;261:921–3. https://doi.org/10.1126/science.8346443.

Polvikoski T, Sulkava R, Haltia M, Kainulainen K, Vuorio A, Verkkoniemi A, et al. Apolipoprotein E, dementia, and cortical deposition of beta-amyloid protein. N Engl J Med. 1995;333:1242–7. https://doi.org/10.1056/NEJM199511093331902.

Grupe A, Abraham R, Li Y, Rowland C, Hollingworth P, Morgan A, et al. Evidence for novel susceptibility genes for late-onset Alzheimer’s disease from a genome-wide association study of putative functional variants. Hum Mol Genet. 2007;16:865–73. https://doi.org/10.1093/hmg/ddm031.

Malhotra AK, Kestler LJ, Mazzanti C, Bates JA, Goldberg T, Goldman D. A functional polymorphism in the COMT gene and performance on a test of prefrontal cognition. Am J Psychiatry. 2002;159:652–4. https://doi.org/10.1176/appi.ajp.159.4.652.

Ulrich JD, Ulland TK, Colonna M, Holtzman DM. Elucidating the role of TREM2 in Alzheimer’s disease. Neuron. 2017;94:237–48. https://doi.org/10.1016/j.neuron.2017.02.042.

Cruchaga C, Nowotny P, Kauwe JSK, Ridge PG, Mayo K, Bertelsen S, et al. Association and expression analyses with single-nucleotide polymorphisms in TOMM40 in Alzheimer disease. Arch Neurol. 2011;68:1013–9. https://doi.org/10.1001/archneurol.2011.155.

Chiba-Falek O, Gottschalk WK, Lutz MW. The effects of the TOMM40 poly-T alleles on Alzheimer’s disease phenotypes. Alzheimers Dement J Alzheimers Assoc. 2018;14:692–8. https://doi.org/10.1016/j.jalz.2018.01.015.

Roses A, Sundseth S, Saunders A, Gottschalk W, Burns D, Lutz M. Understanding the genetics of APOE and TOMM40 and role of mitochondrial structure and function in clinical pharmacology of Alzheimer’s disease. Alzheimers Dement J Alzheimers Assoc. 2016;12:687–94. https://doi.org/10.1016/j.jalz.2016.03.015.

Tiraboschi P, Hansen LA, Alford M, Sabbagh MN, Schoos B, Masliah E, et al. Cholinergic dysfunction in diseases with Lewy bodies. Neurology. 2000;54:407–11. https://doi.org/10.1212/wnl.54.2.407.

Piggott MA, Marshall EF, Thomas N, Lloyd S, Court JA, Jaros E, et al. Striatal dopaminergic markers in dementia with Lewy bodies, Alzheimer’s and Parkinson’s diseases: rostrocaudal distribution. Brain J Neurol. 1999;122(Pt 8):1449–68. https://doi.org/10.1093/brain/122.8.1449.

Huang L-K, Chao S-P, Hu C-J. Clinical trials of new drugs for Alzheimer disease. J Biomed Sci. 2020;27:18. https://doi.org/10.1186/s12929-019-0609-7.

Winblad B, Kilander L, Eriksson S, Minthon L, Båtsman S, Wetterholm A-L, et al. Donepezil in patients with severe Alzheimer’s disease: double-blind, parallel-group, placebo-controlled study. Lancet Lond Engl. 2006;367:1057–65. https://doi.org/10.1016/S0140-6736(06)68350-5.

Seltzer B, Zolnouni P, Nunez M, Goldman R, Kumar D, Ieni J, et al. Efficacy of donepezil in early-stage Alzheimer disease: a randomized placebo-controlled trial. Arch Neurol. 2004;61:1852–6. https://doi.org/10.1001/archneur.61.12.1852.

Petersen RC, Smith GE, Waring SC, Ivnik RJ, Tangalos EG, Kokmen E. Mild cognitive impairment: clinical characterization and outcome. Arch Neurol. 1999;56:303–8. https://doi.org/10.1001/archneur.56.3.303.

Kryscio RJ, Abner EL, Schmitt FA, Goodman PJ, Mendiondo M, Caban-Holt A, et al. A randomized controlled Alzheimer’s disease prevention trial’s evolution into an exposure trial: the PREADViSE Trial. J Nutr Health Aging. 2013;17:72–5. https://doi.org/10.1007/s12603-012-0083-3.

Peters R, Beckett N, Forette F, Tuomilehto J, Clarke R, Ritchie C, et al. Incident dementia and blood pressure lowering in the Hypertension in the Very Elderly Trial cognitive function assessment (HYVET-COG): a double-blind, placebo controlled trial. Lancet Neurol. 2008;7:683–9. https://doi.org/10.1016/S1474-4422(08)70143-1.

Alzheimer’s Disease Anti-inflammatory Prevention Trial Research Group. Results of a follow-up study to the randomized Alzheimer’s Disease Anti-inflammatory Prevention Trial (ADAPT). Alzheimers Dement J Alzheimers Assoc. 2013;9:714–23. https://doi.org/10.1016/j.jalz.2012.11.012.

Craig MC, Maki PM, Murphy DGM. The Women’s Health Initiative Memory Study: findings and implications for treatment. Lancet Neurol. 2005;4:190–4. https://doi.org/10.1016/S1474-4422(05)01016-1.

Schneider LS. Prevention therapeutics of dementia. Alzheimers Dement J Alzheimers Assoc. 2008;4:S122–30. https://doi.org/10.1016/j.jalz.2007.11.005.

DeKosky ST, Williamson JD, Fitzpatrick AL, Kronmal RA, Ives DG, Saxton JA, et al. Ginkgo biloba for prevention of dementia: a randomized controlled trial. JAMA. 2008;300:2253–62. https://doi.org/10.1001/jama.2008.683.

Bridi R, Crossetti FP, Steffen VM, Henriques AT. The antioxidant activity of standardized extract of Ginkgo biloba (EGb 761) in rats. Phytother Res PTR. 2001;15:449–51. https://doi.org/10.1002/ptr.814.

ADAPT Research Group, Martin BK, Szekely C, Brandt J, Piantadosi S, Breitner JCS, et al. Cognitive function over time in the Alzheimer’s Disease Anti-inflammatory Prevention Trial (ADAPT): results of a randomized, controlled trial of naproxen and celecoxib. Arch Neurol. 2008;65:896–905. https://doi.org/10.1001/archneur.2008.65.7.nct70006.

Vellas B, Coley N, Ousset PJ, Berrut G, Dartigues JF, Dubois B, et al.; GuidAge Study Group. Long-term use of standardised Ginkgo biloba extract for the prevention of Alzheimer’s disease (GuidAge): a randomised placebo-controlled trial. Lancet Neurol. 2012;11:851–9. https://doi.org/10.1016/S1474-4422(12)70206-5.

Salloway S, Farlow M, McDade E, Clifford DB, Wang G, Llibre-Guerra JJ, et al.; Dominantly Inherited Alzheimer Network-Trials Unit. A trial of gantenerumab or solanezumab in dominantly inherited Alzheimer’s disease. Nat Med. 2021;27:1187–96. https://doi.org/10.1038/s41591-021-01369-8.

Rinne JO, Brooks DJ, Rossor MN, Fox NC, Bullock R, Klunk WE, et al. 11C-PiB PET assessment of change in fibrillar amyloid-beta load in patients with Alzheimer’s disease treated with bapineuzumab: a phase 2, double-blind, placebo-controlled, ascending-dose study. Lancet Neurol. 2010;9:363–72. https://doi.org/10.1016/S1474-4422(10)70043-0.

Sperling RA, Jack CR, Black SE, Frosch MP, Greenberg SM, Hyman BT, et al. Amyloid-related imaging abnormalities in amyloid-modifying therapeutic trials: recommendations from the Alzheimer’s Association Research Roundtable Workgroup. Alzheimers Dement. 2011;7:367–85. https://doi.org/10.1016/j.jalz.2011.05.2351.

Honig LS, Vellas B, Woodward M, Boada M, Bullock R, Borrie M, et al. Trial of solanezumab for mild dementia due to Alzheimer’s disease. N Engl J Med. 2018;378:321–30. https://doi.org/10.1056/NEJMoa1705971.

Petersen RC, Thomas RG, Grundman M, Bennett D, Doody R, Ferris S, et al. Vitamin E and donepezil for the treatment of mild cognitive impairment. N Engl J Med. 2005;352:2379–88. https://doi.org/10.1056/NEJMoa050151.

Gilman S, Koller M, Black RS, Jenkins L, Griffith SG, Fox NC, et al. Clinical effects of Abeta immunization (AN1792) in patients with AD in an interrupted trial. Neurology. 2005;64:1553–62. https://doi.org/10.1212/01.WNL.0000159740.16984.3C.

Salloway S, Sperling R, Fox NC, Blennow K, Klunk W, Raskind M, et al. Two phase 3 trials of bapineuzumab in mild-to-moderate Alzheimer’s disease. N Engl J Med. 2014;370:322–33. https://doi.org/10.1056/NEJMoa1304839.

Mintun MA, Lo AC, Duggan Evans C, Wessels AM, Ardayfio PA, Andersen SW, et al. Donanemab in early Alzheimer’s disease. N Engl J Med. 2021;384:1691–704. https://doi.org/10.1056/NEJMoa2100708.

Andrieu S, Guyonnet S, Coley N, Cantet C, Bonnefoy M, Bordes S, et al. Effect of long-term omega 3 polyunsaturated fatty acid supplementation with or without multidomain intervention on cognitive function in elderly adults with memory complaints (MAPT): a randomised, placebo-controlled trial. Lancet Neurol. 2017;16:377–89. https://doi.org/10.1016/S1474-4422(17)30040-6.

Chandler MJ, Lacritz LH, Hynan LS, Barnard HD, Allen G, Deschner M, et al. A total score for the CERAD neuropsychological battery. Neurology. 2005;65:102–6. https://doi.org/10.1212/01.wnl.0000167607.63000.38.

Rossetti HC, Munro Cullum C, Hynan LS, Lacritz L. The CERAD Neuropsychological Battery total score and the progression of Alzheimer’s disease. Alzheimer Dis Assoc Disord. 2010;24:138–42. https://doi.org/10.1097/WAD.0b013e3181b76415.

Ngandu T, Lehtisalo J, Solomon A, Levälahti E, Ahtiluoto S, Antikainen R, et al. A 2 year multidomain intervention of diet, exercise, cognitive training, and vascular risk monitoring versus control to prevent cognitive decline in at-risk elderly people (FINGER): a randomised controlled trial. Lancet Lond Engl. 2015;385:2255–63. https://doi.org/10.1016/S0140-6736(15)60461-5.

SPRINT Research Group, Wright JT, Williamson JD, Whelton PK, Snyder JK, Sink KM, et al. A randomized trial of intensive versus standard blood-pressure control. N Engl J Med. 2015;373:2103–16. https://doi.org/10.1056/NEJMoa1511939.

SPRINT MIND Investigators for the SPRINT Research Group, Williamson JD, Pajewski NM, Auchus AP, Bryan RN, Chelune G, et al. Effect of intensive vs standard blood pressure control on probable dementia: a randomized clinical trial. JAMA. 2019;321:553–61. https://doi.org/10.1001/jama.2018.21442.

World Health Organization. Risk reduction of cognitive decline and dementia. 2019. https://www.who.int/publications-detail-redirect/risk-reduction-of-cognitive-decline-and-dementia. Accessed 28 Jan 2022.

Suzuki T, Shimada H, Makizako H, Doi T, Yoshida D, Tsutsumimoto K, et al. Effects of multicomponent exercise on cognitive function in older adults with amnestic mild cognitive impairment: a randomized controlled trial. BMC Neurol. 2012;12:128. https://doi.org/10.1186/1471-2377-12-128.

Nagamatsu LS, Handy TC, Hsu CL, Voss M, Liu-Ambrose T. Resistance training promotes cognitive and functional brain plasticity in seniors with probable mild cognitive impairment. Arch Intern Med. 2012;172:666–8. https://doi.org/10.1001/archinternmed.2012.379.

Petersen RC, Lopez O, Armstrong MJ, Getchius TSD, Ganguli M, Gloss D, et al. Practice guideline update summary: mild cognitive impairment: report of the Guideline Development, Dissemination, and Implementation Subcommittee of the American Academy of Neurology. Neurology. 2018;90:126–35. https://doi.org/10.1212/WNL.0000000000004826.

Tarumi T, Rossetti H, Thomas BP, Harris T, Tseng BY, Turner M, et al. Exercise training in amnestic mild cognitive impairment: a one-year randomized controlled trial. J Alzheimers Dis JAD. 2019;71:421–33. https://doi.org/10.3233/JAD-181175.

Sink KM, Espeland MA, Castro CM, Church T, Cohen R, Dodson JA, et al. Effect of a 24-Month physical activity intervention vs health education on cognitive outcomes in sedentary older adults: the LIFE randomized trial. JAMA. 2015;314:781–90. https://doi.org/10.1001/jama.2015.9617.

Stuckenschneider T, Sanders ML, Devenney KE, Aaronson JA, Abeln V, Claassen JAHR, et al. NeuroExercise: the effect of a 12-month exercise intervention on cognition in mild cognitive impairment—a multicenter randomized controlled trial. Front Aging Neurosci. 2021;12.

Kivipelto M, Solomon A, Ahtiluoto S, Ngandu T, Lehtisalo J, Antikainen R, et al. The Finnish geriatric intervention study to prevent cognitive impairment and disability (FINGER): study design and progress. Alzheimers Dement J Alzheimers Assoc. 2013;9:657–65. https://doi.org/10.1016/j.jalz.2012.09.012.

van Charante EPM, Richard E, Eurelings LS, van Dalen J-W, Ligthart SA, van Bussel EF, et al. Effectiveness of a 6-year multidomain vascular care intervention to prevent dementia (preDIVA): a cluster-randomised controlled trial. Lancet Lond Engl. 2016;388:797–805. https://doi.org/10.1016/S0140-6736(16)30950-3.

Richard E, van Charante EPM, Hoevenaar-Blom MP, Coley N, Barbera M, van der Groep A, et al. Healthy ageing through internet counselling in the elderly (HATICE): a multinational, randomised controlled trial. Lancet Digit Health. 2019;1:e424–34. https://doi.org/10.1016/S2589-7500(19)30153-0.

Kivipelto M, Mangialasche F, Snyder HM, Allegri R, Andrieu S, Arai H, et al. World-Wide FINGERS Network: a global approach to risk reduction and prevention of dementia. Alzheimers Dement. 2020;16:1078–94. https://doi.org/10.1002/alz.12123.

McKhann GM, Knopman DS, Chertkow H, Hyman BT, Jack CR, Kawas CH, et al. The diagnosis of dementia due to Alzheimer’s disease: recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement J Alzheimers Assoc. 2011;7:263–9. https://doi.org/10.1016/j.jalz.2011.03.005.

Albert MS, DeKosky ST, Dickson D, Dubois B, Feldman HH, Fox NC, et al. The diagnosis of mild cognitive impairment due to Alzheimer’s disease: recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement J Alzheimers Assoc. 2011;7:270–9. https://doi.org/10.1016/j.jalz.2011.03.008.

Dubois B, Feldman HH, Jacova C, Hampel H, Molinuevo JL, Blennow K, et al. Advancing research diagnostic criteria for Alzheimer’s disease: the IWG-2 criteria. Lancet Neurol. 2014;13:614–29. https://doi.org/10.1016/S1474-4422(14)70090-0.

Jack CR, Bennett DA, Blennow K, Carrillo MC, Dunn B, Haeberlein SB, et al. NIA-AA Research Framework: toward a biological definition of Alzheimer’s disease. Alzheimers Dement J Alzheimers Assoc. 2018;14:535–62. https://doi.org/10.1016/j.jalz.2018.02.018.

Center for Drug Evaluation and Research. Alzheimer’s disease: developing drugs for treatment guidance for industry. US Food Drug Adm. 2020. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/alzheimers-disease-developing-drugs-treatment-guidance-industy. Accessed 21 Feb 2018.

Dubois B, Feldman HH, Jacova C, Cummings JL, Dekosky ST, Barberger-Gateau P, et al. Revising the definition of Alzheimer’s disease: a new lexicon. Lancet Neurol. 2010;9:1118–27. https://doi.org/10.1016/S1474-4422(10)70223-4.

Schneider LS, Goldberg TE. Composite cognitive and functional measures for early stage Alzheimer’s disease trials. Alzheimers Dement Diagn Assess Dis Monit. 2020;12:e12017. https://doi.org/10.1002/dad2.12017.

Burns DK, Chiang C, Welsh-Bohmer KA, Brannan SK, Culp M, O’Neil J, et al. The TOMMORROW study: design of an Alzheimer’s disease delay-of-onset clinical trial. Alzheimers Dement Transl Res Clin Interv. 2019;5:661–70. https://doi.org/10.1016/j.trci.2019.09.010.

Dubois B, Hampel H, Feldman HH, Scheltens P, Aisen P, Andrieu S, et al. Preclinical Alzheimer’s disease: definition, natural history, and diagnostic criteria. Alzheimers Dement J Alzheimers Assoc. 2016;12:292–323. https://doi.org/10.1016/j.jalz.2016.02.002.

Ioannidis JPA. Why most published research findings are false. PLoS Med. 2005;2:e124. https://doi.org/10.1371/journal.pmed.0020124.

Burns DK, Alexander RC, Welsh-Bohmer KA, Culp M, Chiang C, O’Neil J, et al. Safety and efficacy of pioglitazone for the delay of cognitive impairment in people at risk of Alzheimer’s disease (TOMMORROW): a prognostic biomarker study and a phase 3, randomised, double-blind, placebo-controlled trial. Lancet Neurol. 2021;20:537–47. https://doi.org/10.1016/S1474-4422(21)00043-0.