Abstract

The dissemination of disinformation has become a formidable weapon, with nation-states exploiting social media platforms to engineer narratives favorable to their geopolitical interests. This study examines Russia’s orchestrated disinformation campaign in three time periods of the 2022 Russian-Ukraine War: the invasion, its midpoint, and the Ukrainian Kherson counteroffensive. These periods are marked by a sophisticated blend of bot-driven strategies to mold online discourse. Using a dataset derived from Twitter, the research examines how Russia leveraged automated agents to advance its political narrative, shedding light on the global implications of such digital warfare and on the swift emergence of counter-narratives to thwart the disinformation campaign. This paper introduces a methodological framework that combines multiple analyses, beginning with unsupervised learning techniques, including TweetBERT for topic modeling, to dissect disinformation dissemination within the dataset. Drawing on Moral Foundations Theory and the BEND framework, the paper dissects social-cyber interactions in maneuver warfare to understand the evolution of bot tactics employed by Russia and its counterparts in the Russian-Ukraine crisis. The findings highlight the instrumental role of bots in amplifying political narratives and manipulating public opinion, with distinct strategies in narrative and community maneuvers identified through the BEND framework. Moral Foundations Theory reveals how moral justifications were embedded in these narratives, showcasing the complexity of digital propaganda and its impact on public perception and geopolitical dynamics. The study shows how pro-Russian bots were used to foster a narrative of protection and necessity, seeking to legitimize Russia’s actions in Ukraine whilst disparaging the actions of both NATO and Ukraine.
Simultaneously, the study explores the resilient counter-narratives of pro-Ukraine forces, revealing their strategic use of social media platforms to counteract Russian disinformation, foster global solidarity, and uphold democratic narratives. These efforts highlight the emerging role of social media as a digital battleground for narrative supremacy, where both sides leverage information warfare tactics to sway public opinion.


1 Introduction

In today’s age of social media, state actors use digital propaganda and manipulation to shape narratives and foster discord in pursuit of their geopolitical agendas. This form of propaganda is a calculated and coordinated process designed to disseminate and amplify deceptive content across multiple social media platforms (Jowett and O’Donnell 2012). The Russian state’s persistent efforts to create confusion and chaos on social media platforms are a testament to the systematic manipulation it employs (Council 2022). For instance, during the Crimea crisis in 2014, the Russian state employed an extensive network of bots and trolls to flood social media platforms with pro-Russian stances, spreading misinformation about the situation in Ukraine and creating a narrative that justified its annexation of Crimea (Helmus et al. 2018). Such strategies fall squarely within the scope of social cybersecurity, which focuses on understanding and forecasting cyber-mediated changes in human behavior, as well as social, cultural, and political outcomes (Carley 2020).

Facing diminishing international prestige, the Russian state takes a situational and methodical approach, meticulously exploiting vulnerabilities to its advantage (Berls 2019). This approach is exemplified by Russia’s Internet Research Agency (IRA), known for its role in online influence operations. The IRA carefully crafts messages tailored to specific audiences and uses data analytics to maximize the impact of its campaigns (Robert S. Mueller 2019), leaving the kind of digital fingerprints of state-led propaganda that social cybersecurity methods are designed to analyze (Carley 2020). Syria offers a further example: Russian forces used disinformation campaigns to weaken opposition forces and shape international perception of the conflict, including by spreading false narratives about the actions of rebel groups and the humanitarian situation (Paul and Matthews 2016).

The information operations that Putin’s regime has waged against Ukraine mark a significant development in its warfare strategy, with disinformation campaigns becoming an integral part of military operations. This shift is marked by the development of Russia’s “Gerasimov Doctrine,” which emphasizes psychological warfare, cyber operations, and disinformation campaigns (Fridmam 2019). The doctrine stresses the importance of non-military tactics, such as disinformation campaigns, in achieving strategic military objectives. The Russian state has steadily increased its use of cyber warfare in pursuit of its military objectives, using social media platforms to run disinformation campaigns that curate public opinion. Through bot-driven strategies, the Russian state aims to polarize communities and nations, undermining political stability and democratic values under the cover of disinformation operations (Schwartz 2017).

The escalation of Russian disinformation operations following the February 2022 invasion of Ukraine was met with a robust Ukrainian response. Ukraine has strengthened its information and media resilience by establishing countermeasures against Russian narratives, including disseminating accurate information and regulating known Russian-affiliated media outlets (Paul and Matthews 2016).

To understand the complexities of information warfare campaigns and the counter-narratives they provoke, this research studies the impact of bot-driven strategies on social media platforms. The study combines stance analysis, topic modeling, network dynamics, and information warfare analysis, integrated with principles of social cybersecurity, to identify the prominent themes on platforms like Twitter and the influence that bot communities exert there, while also exposing the most influential actors and communication patterns.

Research Question: The central question of this investigation is: How have bot-driven strategies influenced the landscape of digital propaganda and counter-narratives? As a follow-up, we ask: What are the implications of these strategies for Ukraine’s political and democratic landscape and for the broader geopolitical arena?

This paper seeks to understand the role that bot communities have in the propagation and amplification of these narratives that nation-states are pushing. By examining only bot-driven ecosystems and their effectiveness in promoting Russia’s political agenda through narrative manipulation, we aim to assess the global impact that these bot communities have in achieving their overall strategic objectives.

It is important to note that when referring to “Russia” in this paper, we distinguish between the “Russian state,” “Putin’s regime,” and the broader population of Russia. The term “Russian state” refers to the governmental and institutional structures of Russia. “Putin’s regime” specifically refers to the current administration and its policies under President Vladimir Putin. Meanwhile, “Russia” encompasses a diverse populace, many of whom may not align with Putin’s strategies or values but are unable to protest due to political repression. This distinction is crucial for understanding the multifaceted nature of Russian involvement in information warfare and the varied perspectives within the country.

The study also extends this research to analyze the counter-narrative of strong support for Ukraine, which is likewise prominent within these bot communities. Using BotHunter, a tool specifically designed to detect bot activity (Beskow and Carley 2018), this research identifies and analyzes the bot networks that have been central to propagating this support. This counter-narrative, emerging from the data and shaped by bot-driven dialogues, represents a significant aspect of the overall response to the conflict.

By integrating the BEND framework for analyzing information warfare strategies, TweetBERT’s domain-specific language modeling, and the moral lens provided by Moral Foundations Theory with insights from social cybersecurity, this study aims to provide a comprehensive understanding of the strategic narratives and counter-narratives in the wake of the conflict. The goal is to contribute a detailed analysis of the effects these narratives have on geopolitical stability and to delineate the ways in which narratives can serve both as a weapon of division and as a shield of unity.

2 Literature review

Russian disinformation tactics

The Russian state’s disinformation tactics have substantially evolved over the last several years, especially since the 2008 incursion into Georgia. Their tactics intensified during the 2014 annexation of Crimea and have continued vigorously throughout the ongoing conflicts in Ukraine and Syria. These tactics are not only a continuation of Cold War-era methods but also leverage the vast capabilities of modern technology and media (Paul and Matthews 2016). The digital landscape has become a fertile ground for Russia to deploy an array of propaganda tools, including the strategic use of bots, which create noise and spread disinformation at an unprecedented scale (Politico 2023).

The modus operandi of Russian disinformation has been aptly termed “the firehose of falsehood,” characterized by high-volume and multichannel distribution (Paul and Matthews 2016). This approach capitalizes on the sheer quantity of messages and uses bots and paid trolls to amplify their reach, not only disrupting the information space but also posing a significant challenge to geopolitical stability (Paul and Matthews 2016). By flooding social media platforms with a barrage of narratives, the Russian state ensures that some of its messaging sticks, even when the messages are contradictory or lack a commitment to objective reality (Organisation for Economic Co-operation and Development 2023). This relentless stream of content is designed not just to persuade but to confuse and overpower the audience, making it difficult to discern fact from fiction and demonstrating how narratives can be weaponized to create division.

Moreover, these tactics exploit the psychological foundations of belief and perception. The frequency with which a message is encountered increases its perceived credibility, regardless of factual accuracy (Paul and Matthews 2016). Russian bots contribute to this effect by continuously posting, re-posting, and amplifying content, thereby creating an illusion of consensus or support for viewpoints. This strategy has the potential to influence public opinion, thereby extending the reach of disinformation campaigns (Politico 2023). This demonstrates how narratives may undermine democratic values and geopolitical stability.

Recent research has expanded on these findings, highlighting the sophisticated nature of bot-driven propaganda. Chen and Ferrara (2023) present a comprehensive dataset of tweets related to the Russia-Ukraine conflict, demonstrating how social media platforms like Twitter have become critical battlegrounds for influence campaigns (Chen and Ferrara 2023). Their work highlights the significant engagement with state-sponsored media and unreliable information sources, particularly in the early stages of the conflict, which saw spikes in activity coinciding with major events like the invasion and subsequent military escalations (Chen and Ferrara 2023). The use of bots in this military campaign is notable for their ability to operate around the clock, mimic human behavior, and engage with real users (Politico 2023). These bots are programmed to push Russian narratives, attack opposing viewpoints, and inflate the appearance of grassroots support (Paul and Matthews 2016). These bots are a key component in Russia’s strategy to structure public opinion and influence political outcomes. Note that we use the terms the “Russian state” and “Putin’s regime” in this article to indicate the group of people who align with the political values of the regime.

Russian disinformation efforts have shown a lack of commitment to consistency, often broadcasting contradictory messages that may seem counter-intuitive to effective communication (Paul and Matthews 2016). However, this inconsistency can be a tactic, as it can lead to uncertainty and ambiguity, ultimately challenging trust in reliable information sources. By constantly shifting narratives, Russian propagandists keep their opponents off-balance and create a fog of war that masks the truth (Organisation for Economic Co-operation and Development 2023). This strategy emphasizes the dual role of narratives in geopolitical conflicts, serving as a shield of unity for one’s own political agenda whilst being a weapon of division against adversaries.

The advancement of Russian disinformation tactics represents a complex blend of traditional influence strategies and modern technological tools. Russia has crafted a formidable approach to pushing propaganda narratives by leveraging bots, social media platforms, and the vulnerabilities of human psychology (Alieva et al. 2022). In seeking to counter these tactics, the international community needs to understand the threat they pose and develop comprehensive strategies to defend against the flood of disinformation that undermines democratic processes and geopolitical stability (Organisation for Economic Co-operation and Development 2023).

Information warfare analysis

Information warfare on social media is the strategic use of social-cyber maneuvers to influence, manipulate, and control narratives and communities online (Blane 2023). It is used to manipulate public opinion, spread disinformation, and create divisive discourse. This form of warfare employs sophisticated strategies that exploit the interconnected nature of social networks and users’ tendency to consume and share content that aligns with their existing beliefs (Prier 2017). The strategy of “commanding the trend” on social media involves leveraging platform algorithms to amplify specific messages or narratives (Prier 2017). This is achieved by tapping into existing online networks, using bot accounts to create a trend around a message, and then rapidly disseminating that narrative. The approach exploits homophily, the tendency of individuals to associate and bond with others who share their interests and views (Prier 2017). Social media platforms enable this by creating echo chambers in which like-minded users share and reinforce each other’s views. Consequently, when a disinformation narrative aligns with a user’s pre-existing beliefs, it is more likely to be accepted and propagated within these networks (Prier 2017).

Peng (2023) adds to this understanding by conducting a cross-platform semantic analysis of the Russia-Ukraine war on Weibo and Twitter, showing how platform-specific factors and geopolitical contexts shape the discourse (Peng 2023). The study found that Weibo posts often reflect the Chinese government’s stance, portraying Russia more favorably and criticizing Western involvement, while Twitter hosts a more diverse range of opinions (Peng 2023). This comparative analysis highlights the role of different social media environments in influencing public perception and the spread of narratives, emphasizing the multifaceted nature of information warfare across platforms.

The Russian state illustrates the effective use of social media in information warfare, using it to spread propaganda, sow discord and confusion, and manipulate both supporters and adversaries through targeted messaging (Brown 2023). The goal is to exploit existing social and political divisions, amplifying and spreading false narratives to manipulate public opinion and discredit established institutions and people (Alieva et al. 2022).

Social cybersecurity, integrating social and behavioral sciences research with cybersecurity, aims to understand and counteract these cyber-mediated threats, including the manipulation of information for nefarious purposes (National Academies of Sciences, Engineering, and Medicine 2019). The emergence of social cybersecurity science, focusing on the development of scientific methods and operational tools to enhance security in cyberspace, highlights the need for multidisciplinary approaches to identify and mitigate cyber threats effectively.

There are many methods and frameworks to analyze information warfare strategies and techniques. One such is SCOTCH, a methodology for rapidly assessing influence operations (Blazek 2023). It comprises six elements: source, channel, objective, target, composition, and hook. Each plays a crucial role in the overall strategy of an influence campaign. Source identifies the originator of the campaign, Channel refers to the platforms and features used to spread the narrative, Objective is the goal of the operation, Target defines the intended audience, Composition is the specific language used, and Hook is the tactics utilized to exploit the technical mechanisms.
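To make the six SCOTCH elements concrete, the sketch below models one assessment as a small record type. The class name `ScotchAssessment` and its `summary` method are hypothetical, and the field values in the example are placeholders rather than findings from this study.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ScotchAssessment:
    """Hypothetical record of one SCOTCH characterization of an influence operation."""

    source: str       # originator of the campaign
    channel: str      # platforms and platform features used to spread the narrative
    objective: str    # goal of the operation
    target: str       # intended audience
    composition: str  # specific language used
    hook: str         # tactics used to exploit technical mechanisms

    def summary(self) -> str:
        # One "Element: value" line per SCOTCH element, in S-C-O-T-C-H order
        # (dataclasses.fields returns fields in definition order).
        return "\n".join(
            f"{f.name.capitalize()}: {getattr(self, f.name)}" for f in fields(self)
        )


example = ScotchAssessment(
    source="placeholder: suspected state-linked accounts",
    channel="placeholder: hashtags and retweets",
    objective="placeholder: erode support for the adversary",
    target="placeholder: English-speaking audiences",
    composition="placeholder: emotive, moralized wording",
    hook="placeholder: coordinated posting to trend a hashtag",
)
print(example.summary())
```

A frozen dataclass keeps each assessment immutable once recorded, which suits a checklist-style methodology in which all six elements must be filled in.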

While SCOTCH provides a structured approach to characterizing influence operations, focusing on the operational aspects of campaigns, the BEND framework offers a more nuanced interpretation of social-cyber maneuvers. BEND categorizes maneuvers into community and narrative types, each with positive and negative aspects, providing a comprehensive view of how online actors manipulate social networks and narratives (Blane 2023). This framework is particularly effective for analyzing the subtle dynamics of influence operations within social media networks, where communication is complex and multi-layered (Ng and Carley 2023b). Therefore, while SCOTCH excels at operational assessment, BEND offers greater insight into the social and narrative aspects of influence operations, making it the more suitable choice for analyzing the elaborate nature of social media-based information warfare.
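The two-by-two structure of BEND (community versus narrative, positive versus negative) can be encoded as a lookup table. The sketch below uses the sixteen maneuver names as we understand them from the BEND literature; treat the transcription as an assumption to check against the framework’s sources. The `maneuvers` helper is hypothetical and only illustrates how labels from a BEND classifier could be grouped by quadrant.

```python
from enum import Enum


class Target(Enum):
    COMMUNITY = "community"
    NARRATIVE = "narrative"


class Polarity(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"


C, N = Target.COMMUNITY, Target.NARRATIVE
POS, NEG = Polarity.POSITIVE, Polarity.NEGATIVE

# Sixteen BEND maneuvers (our transcription; verify against the framework's sources).
BEND = {
    "back": (C, POS), "build": (C, POS), "bridge": (C, POS), "boost": (C, POS),
    "neutralize": (C, NEG), "nuke": (C, NEG), "narrow": (C, NEG), "neglect": (C, NEG),
    "engage": (N, POS), "explain": (N, POS), "excite": (N, POS), "enhance": (N, POS),
    "dismiss": (N, NEG), "distort": (N, NEG), "dismay": (N, NEG), "distract": (N, NEG),
}


def maneuvers(target: Target, polarity: Polarity) -> list[str]:
    """Names of the maneuvers in one quadrant of the BEND grid, sorted alphabetically."""
    return sorted(name for name, (t, p) in BEND.items() if t is target and p is polarity)


print(maneuvers(Target.NARRATIVE, Polarity.NEGATIVE))
# → ['dismay', 'dismiss', 'distort', 'distract']
```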

Moral Realism Analysis

Moral realism emphasizes the complex interplay between moral and political beliefs and holds that political and propaganda narratives are not only policy tools but also reflect and shape societal moral beliefs (Kreutz 2021). From this perspective, the moral justifications embedded in the narratives that countries disseminate, both Russian and Ukrainian, are likely shaped by deeper political ideologies, which influence how these narratives are constructed and perceived on the global stage (Hatemi et al. 2019). Similar findings appear in research showing that political ideology significantly influenced the framing of COVID-19 vaccines (Borghouts et al. 2023); this understanding becomes essential in a geopolitical context, particularly in the Russian-Ukraine conflict.

Developed by social psychologists, Moral Foundations Theory divides human moral reasoning into six foundational values: Care/Harm, Fairness/Cheating, Loyalty/Betrayal, Authority/Subversion, Sanctity/Degradation, and Liberty/Oppression (Theory 2023). The theory has been applied to understand the moral reasoning behind political narratives and public opinion. For instance, it helps explain the moral reasoning behind major political movements and policy decisions, emphasizing how different groups may prioritize certain moral values over others (Kumankov 2023). Applying the theory to attitudes toward the COVID-19 vaccine revealed that liberals and conservatives expressed different sets of moral values in their discourse (Borghouts et al. 2023).
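One common way to operationalize Moral Foundations Theory on text is dictionary-based scoring: count words associated with each foundation and normalize by text length. The sketch below illustrates that technique only. The `TOY_LEXICON` is a hypothetical handful of words per foundation, not a validated dictionary, and this is not necessarily how the present study assigned moral foundations.

```python
import re
from collections import Counter

# Hypothetical toy lexicon for illustration; real analyses rely on validated dictionaries.
TOY_LEXICON = {
    "care/harm": {"protect", "suffer", "harm", "kill"},
    "fairness/cheating": {"fair", "justice", "cheat"},
    "loyalty/betrayal": {"loyal", "betray", "homeland", "nation"},
    "authority/subversion": {"obey", "law", "order"},
    "sanctity/degradation": {"sacred", "pure", "disgust"},
    "liberty/oppression": {"freedom", "free", "oppress", "tyranny"},
}


def foundation_scores(text, lexicon=TOY_LEXICON):
    """Share of tokens in `text` that match each foundation's word list."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for tok in tokens:
        for foundation, words in lexicon.items():
            if tok in words:
                counts[foundation] += 1
    n = len(tokens) or 1  # avoid division by zero on empty text
    return {foundation: counts[foundation] / n for foundation in lexicon}


scores = foundation_scores("We must protect our homeland and defend freedom")
print(scores["care/harm"], scores["loyalty/betrayal"], scores["liberty/oppression"])
# → 0.125 0.125 0.125
```

Normalizing by token count lets short tweets and longer posts be compared on the same scale, at the cost of noisy scores for very short texts.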

In the context of the Russian-Ukraine War, moral realism provides an essential lens for understanding international politics. Russia’s narrative often emphasizes the protection of Russian speakers in Ukraine, which can be interpreted as an appeal to the Loyalty/Betrayal foundation (Dill 2022). Ukraine’s emphasis on self-determination and resistance to aggression, on the other hand, may resonate more with the Care/Harm and Fairness/Cheating foundations (Polinder 2022).

While moral realism has not been directly applied to analyzing information warfare discourse, the COVID-19 case study shows the value of understanding the moral reasoning behind political narratives (Kumankov 2023). In the context of this conflict, moral realism provides a way to analyze the moral justifications and narratives used by Russia and Ukraine. We can then identify the ethical implications and the underlying values that each side is trying to promote. By integrating moral realism, we can begin to understand how effectively these narratives shape public opinion and influence the international response to the Russian-Ukraine conflict.

Topic Modeling

Topic modeling is a machine learning technique used to discover hidden thematic structures within document collections, or “corpora” (Hong and Davison 2011). This technique allows researchers to extract and analyze dominant themes from large datasets, such as millions of tweets, to understand public discourse and the spread of propaganda or counter-propaganda narratives. It is particularly useful for examining social media data, where bots often attempt to control narratives (Hong and Davison 2011).

The topic modeling process involves several steps:

1. Data Collection: Gathering tweets related to key events and statements.
2. Pre-processing: Cleaning the data by removing noise, such as stop words, URLs, and user mentions, to focus on relevant content.
3. Vectorization: Transforming the pre-processed text into a numerical form usable by statistical models (Ramage et al. 2009).
4. Algorithm Application: Using methods like Latent Dirichlet Allocation (LDA) to identify topics (Ramage et al. 2009). Each topic is characterized by a distribution of words, highlighting topics relevant to our research.
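Steps 3 and 4 can be illustrated with a dependency-free LDA fitted by collapsed Gibbs sampling, one standard way to estimate LDA. This is a teaching sketch of the classic pipeline, not the TweetBERT approach the study ultimately adopted; the function names, hyperparameters (`alpha`, `beta`, `iters`), and the toy token lists are all assumptions for illustration.

```python
import random


def lda_gibbs(docs, k, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Fit LDA to pre-processed token lists by collapsed Gibbs sampling.

    Returns (vocab, phi), where phi[t][v] estimates P(word vocab[v] | topic t).
    """
    rng = random.Random(seed)
    # Vectorization: map each distinct token to an integer id (bag of words).
    vocab = sorted({w for doc in docs for w in doc})
    w_id = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    ndk = [[0] * k for _ in docs]      # tokens in doc d assigned to topic t
    nkw = [[0] * V for _ in range(k)]  # times word v is assigned to topic t
    nk = [0] * k                       # total tokens assigned to topic t
    z = []                             # current topic of every token
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            t = rng.randrange(k)       # random initial assignment
            z[d].append(t)
            ndk[d][t] += 1
            nkw[t][w_id[w]] += 1
            nk[t] += 1
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                t, v = z[d][i], w_id[w]
                # Remove the token's current assignment from the counts.
                ndk[d][t] -= 1
                nkw[t][v] -= 1
                nk[t] -= 1
                # Unnormalized full conditional P(z = j | all other assignments).
                weights = [
                    (ndk[d][j] + alpha) * (nkw[j][v] + beta) / (nk[j] + V * beta)
                    for j in range(k)
                ]
                t = rng.choices(range(k), weights=weights)[0]
                z[d][i] = t
                ndk[d][t] += 1
                nkw[t][v] += 1
                nk[t] += 1
    # Smoothed estimate of each topic's word distribution.
    phi = [[(nkw[t][v] + beta) / (nk[t] + V * beta) for v in range(V)] for t in range(k)]
    return vocab, phi


def top_words(vocab, phi, n=3):
    """The n highest-probability words of every topic."""
    return [[vocab[v] for v in sorted(range(len(vocab)), key=lambda v: -row[v])[:n]] for row in phi]


# Toy, already pre-processed token lists (illustrative only).
docs = [
    ["sanctions", "ruble", "economy", "sanctions"],
    ["tanks", "kyiv", "shelling", "tanks"],
    ["ruble", "economy", "banks"],
    ["shelling", "kyiv", "frontline"],
]
vocab, phi = lda_gibbs(docs, k=2)
print(top_words(vocab, phi))
```

On a corpus of millions of tweets, a library implementation would be far faster; the point here is only to show what "a distribution of words per topic" means operationally.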

For Twitter data, topic modeling faces unique challenges due to the platform’s character limit and its use of non-standard language such as hashtags and abbreviations. This requires models that can capture the concise and often informal nature of tweets. Conflict-specific jargon, war-related hashtags, and the multilingual nature of the involved parties make traditional representations and models, such as the Document-Term Matrix (DTM), Term Frequency-Inverse Document Frequency (TF-IDF) weighting, and LDA, less effective (Qudar and Mago 2020).

Ultimately, we chose TweetBERT, a variant of the BERT (Bidirectional Encoder Representations from Transformers) model pre-trained on Twitter data, which is designed to handle the peculiarities of Twitter’s text (Qudar and Mago 2020).

3 Data and methodology

3.1 Data collection

The data for this study was sourced from Twitter, a widely used social media platform known for its role in news consumption across global regions, including Western, African, and Asian countries (Orellana-Rodriguez and Keane 2018). Unlike platforms with narrower user bases, Twitter’s widespread popularity enabled us to explore multiple sources expressing either pro-Ukraine or pro-Russian support.

We utilized a pre-existing curated Twitter dataset that focused on English-language content featuring specific keywords: “Russian invasion,” “Russian military,” “military buildup,” and “invasion of Ukraine.” The dataset covered the period from January 2022 to November 2022, aligning with critical events including the Russian invasion of Ukraine in February 2022, the Russian advancement into Ukraine in May 2022, and the Ukrainian Kherson counteroffensive in August 2022.

Our dataset consisted of 4.5 million tweets. Our analysis concentrated on 1.6 million of these tweets, forming a refined social-network discourse centered exclusively on bot-generated content. This focus stems from the increasing recognition of the role that bot communities play in information warfare, which often surpasses that of human interactions. Bots, programmed to amplify specific narratives and disinformation, can operate continuously, creating an echo chamber effect that significantly distorts public perception (Smith et al. 2021). Excluding human conversations enabled us to investigate interactions within bot communities thoroughly.

We employed temporal segmentation to focus on specific high-impact timeframes: (1) the Russian Invasion (08 February–15 March 2022), (2) the Mid-point (15 May–15 June 2022), and (3) the Kherson counteroffensive (20 July–30 August 2022).
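Temporal segmentation amounts to assigning each tweet’s date to one of the three windows and discarding tweets outside them. The sketch below encodes the three timeframes listed in this paragraph; treating the end dates as inclusive is our assumption, and the names `WINDOWS` and `window_for` are hypothetical.

```python
from datetime import date

# The study's three timeframes; end dates treated as inclusive (assumption).
WINDOWS = {
    "invasion": (date(2022, 2, 8), date(2022, 3, 15)),
    "midpoint": (date(2022, 5, 15), date(2022, 6, 15)),
    "kherson": (date(2022, 7, 20), date(2022, 8, 30)),
}


def window_for(day):
    """Name of the window containing `day`, or None if the tweet falls outside all windows."""
    for name, (start, end) in WINDOWS.items():
        if start <= day <= end:
            return name
    return None


print(window_for(date(2022, 2, 24)))  # → invasion
print(window_for(date(2022, 4, 1)))   # → None (between windows, excluded)
```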

The Russian invasion (08 February–15 March 2022): this marked the initial phase of the invasion, characterized by the rapid advance of Russian forces into Ukraine, including the capture of key cities and regions (Meduza 2022). This timeframe marked the beginning of intense fighting, particularly around Kyiv and in the Donbas region, and significant civilian displacement and casualties (TASS 2022). International responses included widespread condemnation and the imposition of sanctions against Russia.

The mid-point of the escalation of the war (15 May–15 June 2022): during this period, the conflict transitioned into a prolonged war of attrition. Russian forces focused on consolidating control in the east and south (News 2022), facing stiff resistance from Ukrainian forces (Axe 2022). This timeframe saw significant urban warfare and efforts by Russia to absorb occupied territories. The international community continued to respond with humanitarian aid to Ukraine and further sanctions on Russia (Desk 2024).

The Ukrainian Kherson counteroffensive (20 July–30 August 2022): this phase was marked by a strategic shift with Ukraine launching successful counteroffensives, particularly in the Kherson region (Blair 2022). Ukrainian forces made significant territorial gains, reversing some of Russia’s earlier advances (Sands and Lukov 2022). This period highlighted Ukraine’s resilience and the effectiveness of its strategy, significantly impacting the course of the war.

3.2 Impact of Twitter policies on data collection and propaganda campaigns

Our analysis considers the influence of Twitter’s content moderation policies on the visibility and spread of propaganda during the 2022 Russian-Ukraine War. Initially, Twitter’s policies aimed to curb misinformation, using automated algorithms and human reviewers to filter out harmful content (de Keulenaar et al. 2023).

However, significant shifts occurred with Twitter’s change in ownership in late 2022. Under Elon Musk’s management, the platform adopted a more lenient approach to misinformation, emphasizing “free speech” and ceasing strict enforcement against misleading information (Kern 2022). This included stopping the enforcement of its COVID-19 misinformation policy, which had previously led to many account suspensions and content removals.

These policy changes affected our dataset. During data collection, tweets with overt disinformation, hate speech, or content inciting violence were more likely to be blocked, while tweets with subtle propaganda or opinion-based misinformation were more likely to remain. This selective enforcement likely skewed our data, with more pro-Ukraine content observed early in 2022 and a rise in pro-Russian content later in the year. Fewer pro-Russian hashtags appeared early in 2022 than later.

Examining these policy shifts and their impacts helps us understand how moderation influenced narrative visibility. Initial strict policies likely contributed to the dominance of pro-Ukraine stances early on, while later leniency allowed for increased pro-Russian content. This context is critical for interpreting temporal changes in propaganda and narrative dominance within our dataset.

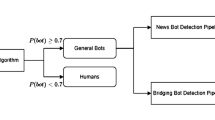

3.3 Data pre-processing

In this section, we describe our data pre-processing framework, which involves extracting bot tweets, removing duplicate tweets, data cleaning, and data processing (i.e., stance detection, topic modeling, and stance analysis). Figure 1 illustrates the data processing framework used in this paper.

The data originally collected through the Twitter API contained text not needed for further analysis. To remove it, we applied comprehensive pre-processing methods. As depicted in Fig. 1, our pipeline began with a bot detector to ensure that only bot tweets were analyzed, reflecting our focus on automated accounts as a significant component in the spread of digital propaganda. We then conducted a temporal analysis of bot behavior over three specific timeframes, which allowed us to track the evolution of narratives alongside the development of the conflict. A critical step was the use of the NLTK library, known for its text-handling features. Pre-processing transformed tweets into a structured format via tokenization, stop-word removal, and lemmatization.

Tokenization, a key process in our methodology, breaks textual content into discrete tokens such as words, terms, and sentences. This turns unstructured text into a structured form suitable for focused stance analysis. NLTK’s ability to filter out irrelevant words and stop words kept our focus on the significant textual elements of the dataset. Additionally, RegexpTokenizer, part of the NLTK library, allowed us to customize the tokenization process by defining specific tokenization patterns, which is particularly useful for handling the unique characteristics of social media text.
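The sketch below illustrates the kind of pattern-based tokenization and cleaning described here, using only Python’s `re` module so that it runs without NLTK downloads. The pattern, the tiny `STOP_WORDS` set, and the helper names are assumptions; the same pattern could be passed to NLTK as `RegexpTokenizer(TOKEN_PATTERN).tokenize(text)`. Lemmatization is omitted because it requires WordNet data. A simple exact-duplicate filter is included for the duplicate-removal step.

```python
import re

# Keep URLs, hashtags, and @mentions as single tokens; otherwise match words
# (optionally with an internal apostrophe). Illustrative pattern, not the study's.
TOKEN_PATTERN = r"https?://\S+|[#@]\w+|\w+(?:'\w+)?"
# Tiny illustrative stop-word list; NLTK's English list is much longer.
STOP_WORDS = {"the", "a", "an", "of", "to", "in", "and", "is", "are", "rt"}


def is_noise(tok):
    """URLs, user mentions, and stop words carry no topical content here."""
    return tok.startswith("@") or tok.startswith(("http://", "https://")) or tok in STOP_WORDS


def preprocess(tweet):
    tokens = re.findall(TOKEN_PATTERN, tweet.lower())
    return [t for t in tokens if not is_noise(t)]


def dedupe(tweets):
    """Drop tweets whose lower-cased, whitespace-normalized text was already seen."""
    seen, unique = set(), []
    for t in tweets:
        key = " ".join(t.lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(t)
    return unique


print(preprocess("RT @user The invasion of #Ukraine is escalating https://t.co/abc"))
# → ['invasion', '#ukraine', 'escalating']
```

Keeping hashtags intact as tokens matters for this study, since the later stance detection step depends on them.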

3.4 Bot detection

Bot detection algorithms use various methods to identify automated accounts on social media platforms. One simple approach is analyzing temporal features, which involves examining patterns in the timing and frequency of posts (Chavoshi et al. 2016). Bots often post at higher rates and at more regular intervals than human users. A more advanced method involves deep-learning-based algorithms that assess complex patterns in account behavior, language use, and network interactions (Ng and Carley 2023a). These algorithms distinguish bots from human users by learning from large datasets of known bot and human behaviors.
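As a minimal illustration of temporal features, the sketch below computes an account’s posting rate and the coefficient of variation of its inter-post gaps; a value near zero means near-clockwork posting. This is a simple heuristic of our own for illustration, not the method of Chavoshi et al. and not BotHunter; the function name `timing_features` is hypothetical.

```python
from datetime import datetime, timedelta
from statistics import mean, pstdev


def timing_features(timestamps):
    """Posting rate (posts/hour) and coefficient of variation of inter-post gaps.

    A low coefficient of variation means very regular posting, one possible bot signal.
    Returns None when there are too few posts, or no elapsed time, to measure.
    """
    ts = sorted(timestamps)
    if len(ts) < 3:
        return None
    gaps = [(b - a).total_seconds() for a, b in zip(ts, ts[1:])]
    span_hours = (ts[-1] - ts[0]).total_seconds() / 3600
    if span_hours == 0:
        return None
    return len(ts) / span_hours, pstdev(gaps) / mean(gaps)


base = datetime(2022, 3, 1, 12, 0)
regular = [base + timedelta(minutes=10 * i) for i in range(7)]            # every 10 minutes
irregular = [base + timedelta(minutes=m) for m in (0, 2, 45, 50, 160)]     # bursty
print(timing_features(regular))    # → (7.0, 0.0)
print(timing_features(irregular))  # coefficient of variation well above 1
```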

To further refine the dataset for this study, we used a bot detector to parse the collection, identifying and isolating accounts likely to be automated bots. BotHunter, a hierarchical supervised machine learning algorithm (Beskow and Carley 2018), differentiates automated bot agents from human users using features such as post text, user metadata, and friendship networks. The algorithm can be run on pre-collected data rather than requiring live input, and its efficiency on large-scale runs makes it a valuable tool for bot detection.

We refined our initial dataset of 4.5 million tweets down to a more manageable subset of over 1.5 million tweets. Within this subset, we categorized accounts as probable bots if they achieved a BotHunter score of 0.7 or higher, a threshold established based on a previous systematic study that determined optimal values for bot detection algorithms. We used both original tweets and retweets because, together, these two types of tweets represent the content disseminated by bots, thereby enhancing the precision of our study’s insights into automated activity on Twitter (Ng et al. 2022). By focusing on accounts that met this threshold, we concentrated our analysis on the influence and behavior of bots within the discourse of the Russian-Ukraine conflict.
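Applying the 0.7 threshold is a simple filter once per-account scores are available. In the sketch below the score dictionary stands in for BotHunter output, and the tweet field name `user_id` is a hypothetical schema; accounts with no score are excluded.

```python
BOT_THRESHOLD = 0.7  # accounts scoring 0.7 or higher are treated as probable bots


def bot_tweets(tweets, scores, threshold=BOT_THRESHOLD):
    """Keep tweets (originals and retweets alike) whose author scored >= threshold.

    `tweets`: list of dicts with a hypothetical "user_id" key.
    `scores`: mapping user_id -> bot score (stand-in for BotHunter output).
    """
    bots = {user for user, score in scores.items() if score >= threshold}
    return [t for t in tweets if t["user_id"] in bots]


scores = {"a": 0.91, "b": 0.7, "c": 0.4}
tweets = [
    {"user_id": "a", "is_retweet": False},
    {"user_id": "b", "is_retweet": True},
    {"user_id": "c", "is_retweet": False},
    {"user_id": "d", "is_retweet": False},  # no score available: excluded
]
print([t["user_id"] for t in bot_tweets(tweets, scores)])  # → ['a', 'b']
```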

3.5 Stance detection

After pre-processing, the dataset was processed with the NetMapper software to enhance narrative understanding. This software contains lexicons of language traits in over 40 languages, including those studied in this work. These lexicons provide insight into stance dynamics that informs the later stance analyses.

A major step of our stance analysis involved classifying tweets by the stances indicated through specific hashtags. We adopted the hashtag propagation method, identifying and categorizing the 130,000 tweets according to their use of pro-Russian (including anti-Ukraine) and pro-Ukraine (including anti-Russian) hashtags. Hashtag propagation tracks and analyzes network interactions among Twitter users based on specific hashtags (Darwish et al. 2023). The method identifies clusters of users who share similar hashtags, revealing their stances and the hashtags associated with them. Examining these networks allows us to understand the predominant stances and interactions within each group (Darwish et al. 2023).
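A minimal sketch of the labeling step, assuming two seed sets of lowercase stance hashtags: a tweet is assigned a stance when it uses hashtags from exactly one pole. Leaving tweets with no stance hashtag, or with hashtags from both poles, unlabeled is our assumption; the seed sets and the function names are hypothetical.

```python
import re

# \w matches Unicode letters in Python 3, so non-Latin hashtags are captured too.
HASHTAG_RE = re.compile(r"#(\w+)")


def hashtags(text):
    """Lowercased set of hashtags in a tweet."""
    return {h.lower() for h in HASHTAG_RE.findall(text)}


def label_stance(text, pro_ukraine, pro_russia):
    tags = hashtags(text)
    uk = bool(tags & pro_ukraine)
    ru = bool(tags & pro_russia)
    if uk and not ru:
        return "pro-Ukraine"
    if ru and not uk:
        return "pro-Russia"
    return None  # no stance hashtag, or conflicting ones


PRO_UKRAINE = {"standwithukraine", "putinwarcriminal"}
PRO_RUSSIA = {"istandwithrussia", "abolishnato", "nonato"}
print(label_stance("We #StandWithUkraine today", PRO_UKRAINE, PRO_RUSSIA))
print(label_stance("#IStandWithRussia #noNATO", PRO_UKRAINE, PRO_RUSSIA))
```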

To select hashtags representing pro-Russian and pro-Ukraine stances, we applied two criteria: first, the hashtag’s exclusivity to a single stance pole, and second, its prevalence, to ensure reliable stance detection for each agent. Initially, we sorted hashtags by frequency to identify probable pro-Russian and pro-Ukraine candidates. We then examined the selected hashtags further using network analysis to confirm their exclusive association with the intended stance and to uncover additional related hashtags. We manually reviewed a few hundred tweets for specific hashtags within each timeframe, identifying over 2,000 unique hashtags with which to categorize tweets by stance.
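The two selection criteria (prevalence and exclusivity) can be approximated automatically with co-occurrence counts, sketched below as a hypothetical stand-in for the network-based confirmation; the manual review described above is not modeled. A candidate hashtag joins a pole if it co-occurs with that pole's seeds at least `min_count` times and at least `exclusivity` of its stance-bearing co-occurrences fall on that pole. Both parameter values are assumptions.

```python
from collections import Counter


def expand_hashtags(tweet_tags, seeds, min_count=2, exclusivity=0.9):
    """tweet_tags: list of hashtag sets; seeds: dict pole -> set of seed hashtags."""
    cooc = {pole: Counter() for pole in seeds}
    for tags in tweet_tags:
        poles = [p for p, s in seeds.items() if tags & s]
        if len(poles) != 1:
            continue  # skip tweets with no seed, or seeds from both poles
        pole = poles[0]
        for tag in tags - seeds[pole]:
            cooc[pole][tag] += 1
    all_seeds = set().union(*seeds.values())
    candidates = set().union(*(c.keys() for c in cooc.values())) - all_seeds
    expanded = {pole: set() for pole in seeds}
    for tag in candidates:
        counts = {p: cooc[p][tag] for p in seeds}
        total = sum(counts.values())  # prevalence
        best = max(counts, key=counts.get)
        if total >= min_count and counts[best] / total >= exclusivity:  # exclusivity
            expanded[best].add(tag)
    return expanded


seeds = {"ukraine": {"standwithukraine"}, "russia": {"istandwithrussia"}}
tweet_tags = [
    {"standwithukraine", "stopputinnow"},
    {"standwithukraine", "stopputinnow", "news"},
    {"istandwithrussia", "nonato"},
    {"istandwithrussia", "nonato", "news"},
    {"istandwithrussia", "nonato"},
    {"standwithukraine", "istandwithrussia", "stopputinnow"},  # both poles: skipped
    {"news"},  # no seed: skipped
]
print(expand_hashtags(tweet_tags, seeds))
```

Here `news` co-occurs equally with both poles and is rejected as non-exclusive, which is the behavior the exclusivity criterion is meant to enforce.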

By accurately classifying tweets as pro-Russian or pro-Ukraine, we can track shifts in public opinion, identify misinformation campaigns, and understand how digital solidarity or opposition manifests. Stance detection also reveals bots’ influence in shaping narratives, providing a more comprehensive view of the conflict.

3.6 Validation of stance analysis and bot detection

To ensure the reliability and accuracy of our stance analysis, we utilized the TweetBERT model, pre-trained on a large dataset of tweets annotated by human coders. This pre-training involved rigorous manual annotations to capture the nuances of stance, as recommended by Song et al. (2020). The human annotations provided a robust foundation for training the model, allowing it to accurately classify stances in the large dataset of tweets related to the Russia-Ukraine conflict.

Our validation approach involved several key steps to ensure the robustness of the stance analysis:

Human Annotations. The stance analysis model was initially trained on manually annotated tweets. We reviewed a few hundred tweets for specific hashtags within each timeframe, identifying over 2,000 unique hashtags to categorize stances. This manual validation supported accurate stance detection and provided a solid foundation for sentiment analysis, with examples of positive, negative, and neutral stances.

Sample Size and Sampling Methods. Our dataset comprised over 1.6 million tweets. We used stratified random sampling to select a representative subset for manual annotation, ensuring the sample reflected the diversity of stances and topics in the full dataset.
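A minimal sketch of stratified random sampling, assuming strata are defined by a preliminary stance label (the stratification key, the 10% fraction, and the toy items are hypothetical). Drawing the same fraction from every stratum keeps minority stances represented in proportion; the `max(1, ...)` guard ensures even tiny strata contribute at least one item.

```python
import random
from collections import defaultdict


def stratified_sample(items, key, fraction, seed=0):
    """Draw the same fraction (at least one item) from every stratum defined by key(item)."""
    rng = random.Random(seed)  # fixed seed for a reproducible annotation sample
    strata = defaultdict(list)
    for item in items:
        strata[key(item)].append(item)
    sample = []
    for group in strata.values():
        k = max(1, round(len(group) * fraction))
        sample.extend(rng.sample(group, k))  # sampling without replacement
    return sample


items = [("pro-Ukraine", i) for i in range(80)] + [("pro-Russia", i) for i in range(20)]
sample = stratified_sample(items, key=lambda t: t[0], fraction=0.1)
print(len(sample))  # 10
```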

Quality Assurance in Pre-processing. During data pre-processing, we used the NLTK library for tokenization, stop word removal, and lemmatization, producing clean and consistent text data for accurate sentiment analysis. Removing stop words such as ‘the’, ‘is’, and ‘in’ streamlined the dataset, allowing algorithms to focus on words with substantial emotional or contextual weight. Lemmatization ensured that different forms of a word were analyzed as a single entity, improving the consistency of sentiment assessment.

Validation of Stance and Bot Analysis. We drew a random subset of tweets, labeled them manually as pro-Russia, pro-Ukraine, or Neutral, and compared these labels with the model’s predictions to check that our analysis reliably reflected the sentiments expressed in the tweets. For stance detection, we achieved an accuracy of 91.28% for pro-Ukraine stances and 84.78% for pro-Russian stances, indicating that the model identifies the stance of tweets related to the Russia-Ukraine conflict with high accuracy. We also manually reviewed a subset of accounts labeled as bots by the model, examining activity patterns, content, and other indicators to confirm their classification. We analyzed only accounts with a bot probability score of 0.7 or higher, ensuring high confidence in the bot detection results. The results of this validation, including the comparison between human annotations and model predictions for both stance and bot detection, are reported in Appendices A and B.
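The text reports a separate accuracy for each stance. One plausible reading, assumed here, is per-class accuracy: the share of tweets that humans labeled with a given stance which the model labeled identically (equivalent to per-class recall). The toy labels below are invented for illustration.

```python
def per_class_accuracy(human, predicted):
    """Share of items carrying each human label that the model labeled identically."""
    if len(human) != len(predicted):
        raise ValueError("label lists must be the same length")
    totals, correct = {}, {}
    for h, p in zip(human, predicted):
        totals[h] = totals.get(h, 0) + 1
        correct[h] = correct.get(h, 0) + (h == p)  # bool counts as 0 or 1
    return {label: correct[label] / totals[label] for label in totals}


human = ["pro-Ukraine"] * 4 + ["pro-Russia"] * 2 + ["Neutral"]
predicted = ["pro-Ukraine", "pro-Ukraine", "pro-Ukraine", "Neutral",
             "pro-Russia", "pro-Ukraine", "Neutral"]
print(per_class_accuracy(human, predicted))
# {'pro-Ukraine': 0.75, 'pro-Russia': 0.5, 'Neutral': 1.0}
```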

3.7 Topic modeling

Topic modeling uses algorithms to sift through large text datasets, such as social media posts, to identify recurring themes or topics. In our research, it is crucial for understanding the narratives, sentiments, and discussions on Twitter during the Russian-Ukraine conflict, because it pinpoints the prominent themes, phrases, and words that characterize the war’s narratives.

In this context, topic modeling shows how different actors within the bot community shape the conversation. By tracking topics or hashtags over time, we can identify misinformation campaigns, state-sponsored propaganda, and grassroots movements, whose activity often correlates with events such as military escalations or diplomatic negotiations.

Twitter’s informal language and brevity pose challenges for NLP models trained on standard text, such as BERT and BioBERT (Qudar and Mago 2020). TweetBERT, designed for large Twitter datasets, addresses these challenges by handling text that deviates from standard grammar and includes colloquial expressions. This makes it well suited to detecting trends and movements in diverse datasets (Qudar and Mago 2020).

TweetBERT’s capabilities are central to this study of Russia’s bot-driven campaigns and Ukraine’s counter-narratives. Using TweetBERT, we can uncover narrative manipulation attempts and assess the impact of bot-driven campaigns on public opinion and geopolitical dynamics. We also used word clouds to explore how bots strategically deployed themes and terms during key conflict phases.
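Word clouds are driven by term frequencies within each period. A minimal sketch, assuming tweets arrive as (date, text) pairs, tallies lowercase hashtags per conflict phase using the date windows given in Sect. 4.1; the function names and sample tweets are hypothetical, and rendering the cloud itself is left to a plotting library.

```python
import re
from collections import Counter
from datetime import date

# Phase windows as stated in the results section.
PHASES = {
    "invasion": (date(2022, 2, 8), date(2022, 3, 15)),
    "mid-point": (date(2022, 5, 15), date(2022, 6, 15)),
    "counteroffensive": (date(2022, 7, 20), date(2022, 8, 30)),
}


def phase_of(day):
    """Name of the phase containing `day`, or None if it falls outside all windows."""
    for name, (start, end) in PHASES.items():
        if start <= day <= end:
            return name
    return None


def phase_hashtag_counts(tweets):
    """tweets: iterable of (date, text); returns phase -> Counter of lowercase hashtags."""
    counts = {name: Counter() for name in PHASES}
    for day, text in tweets:
        phase = phase_of(day)
        if phase is not None:
            counts[phase].update(h.lower() for h in re.findall(r"#(\w+)", text))
    return counts


sample_tweets = [
    (date(2022, 2, 24), "#StopRussianAggression #SupportUkraine"),
    (date(2022, 2, 25), "#supportukraine"),
    (date(2022, 6, 1), "#StopPutin"),
    (date(2022, 4, 1), "#ignored"),  # outside every phase window
]
counts = phase_hashtag_counts(sample_tweets)
print(counts["invasion"].most_common(2))
```

`Counter.most_common` yields the (term, weight) pairs a word-cloud renderer typically consumes.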

This approach shows how bots exploit social media algorithms that favor content generating interaction, and in doing so create and perpetuate echo chambers. Topic modeling with TweetBERT provides insight into how information warfare campaigns are conducted, demonstrating how bots systematically disseminate polarizing content to influence public opinion.

3.8 BEND framework and moral foundations theory integration

The BEND framework offers a robust method for interpreting social-cyber maneuvers in information warfare, distinguishing between community and narrative maneuvers with positive and negative stances (Blane 2023). This framework enables a detailed examination of social media dynamics, addressing content, intent, and network effects. By employing BEND, we can identify strategies to build or dismantle communities, engage with or distort narratives, and enhance or discredit messages, which is critical for understanding the impact of these campaigns.

The BEND maneuvers are categorized as follows:

- Community Maneuvers
  - Positive ('B'): Back, Build, Bridge, Boost.
  - Negative ('N'): Neutralize, Negate, Narrow, Neglect.
- Narrative Maneuvers
  - Positive ('E'): Engage, Explain, Excite, Enhance.
  - Negative ('D'): Dismiss, Distort, Dismay, Distract.

For instance, the “build” maneuver creates groups by mentioning other users, while the “neutralize” maneuver discredits opposing opinions. Narrative maneuvers like “excite” elicit positive emotions, whereas “distort” alters perspectives through repeated messaging (Blane 2023).
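The BEND taxonomy above is effectively a two-by-two lookup table (community or narrative, positive or negative). The sketch below encodes it so that maneuver counts can be aggregated into the four quadrants. How individual maneuvers are detected in tweets is not shown here, and the counts in the example are hypothetical.

```python
# BEND taxonomy as listed above: (target, polarity) -> maneuver names.
BEND = {
    ("community", "positive"): ("Back", "Build", "Bridge", "Boost"),        # 'B'
    ("community", "negative"): ("Neutralize", "Negate", "Narrow", "Neglect"),  # 'N'
    ("narrative", "positive"): ("Engage", "Explain", "Excite", "Enhance"),  # 'E'
    ("narrative", "negative"): ("Dismiss", "Distort", "Dismay", "Distract"),  # 'D'
}

# Reverse index: lowercase maneuver name -> (target, polarity).
MANEUVER_INDEX = {m.lower(): key for key, names in BEND.items() for m in names}


def classify_maneuver(name):
    """Return (target, polarity) for a BEND maneuver name, e.g. 'build'."""
    return MANEUVER_INDEX[name.lower()]


def summarize(maneuver_counts):
    """Aggregate per-maneuver counts into the four BEND quadrants."""
    totals = dict.fromkeys(BEND, 0)
    for name, count in maneuver_counts.items():
        totals[classify_maneuver(name)] += count
    return totals


print(summarize({"build": 3, "boost": 2, "distort": 4}))
```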

To further contextualize these maneuvers, we integrate Moral Foundations Theory, which segments moral reasoning into core values: Care/Harm, Fairness/Cheating, Loyalty/Betrayal, Authority/Subversion, Sanctity/Degradation, and Liberty/Oppression (Graham et al. 2013). This theory provides insights into the stances and narratives in our dataset, revealing moral undertones that resonate with and mobilize individuals on an ethical level. For example, Care/Harm is evident in protective rhetoric in pro-Russian narratives and victimization themes in Ukrainian messaging. Fairness/Cheating surfaces in accusations of deception, while Loyalty/Betrayal, Authority/Subversion, and Sanctity/Degradation are present in discussions of national allegiance and cultural institutions.
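This section does not state how tweets were mapped to moral foundations. One common approach is dictionary-based counting, sketched below under that assumption with a tiny hypothetical lexicon; a real analysis would rely on a validated resource such as a Moral Foundations Dictionary rather than these illustrative word lists.

```python
import re
from collections import Counter

# Toy lexicon for illustration only; not a validated moral foundations resource.
TOY_LEXICON = {
    "care/harm": {"protect", "suffer", "kill", "victims", "safe"},
    "fairness/cheating": {"lies", "fraud", "justice", "unfair"},
    "loyalty/betrayal": {"traitor", "solidarity", "nation", "ally"},
    "authority/subversion": {"law", "order", "obey", "illegal"},
    "sanctity/degradation": {"sacred", "disgusting", "pure"},
    "liberty/oppression": {"freedom", "tyranny", "oppression", "free"},
}


def foundation_counts(text, lexicon=TOY_LEXICON):
    """Count lexicon hits per moral foundation in a lowercased, letter-only tokenization."""
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = Counter()
    for token in tokens:
        for foundation, words in lexicon.items():
            if token in words:
                counts[foundation] += 1
    return counts


print(foundation_counts("We must protect the victims and defend freedom"))
```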

Integrating the BEND framework with Moral Foundations Theory offers a multi-dimensional approach to sentiment analysis, mapping out the emotional and ethical dimensions of the narratives. This analysis uncovers how orchestrated maneuvers resonate with foundational moral values, magnifying their impact. The dual-framework application explains the strategies and moral appeals used to shape public perception and international responses, highlighting the potency of sentiment as a weapon in modern information warfare.

4 Results and discussion

4.1 Dominance of pro-Ukraine stance versus pro-Russian stance

While we cannot be certain whether this content was created by bots or created by humans and propagated by bots, we know that it has a high probability of being bot-communicated. Studying bot-communicated content is important because it reveals the content and extent of the information that bots prioritized communicating to general readers during the study period. This distinction is critical for understanding the mechanisms of influence and narrative control within the digital warfare domain, especially given the temporal segmentation used to focus on specific high-impact timeframes.

It is also important to note that, while there is significant concern about the potential of bots to manipulate public opinion, the evidence remains inconclusive. For example, Eady et al. (2023) found minimal and statistically insignificant relationships between exposure to posts from Russian foreign influence accounts on Twitter and changes in voting behavior in the 2016 U.S. election. This suggests that while attempts to manipulate public opinion are evident, their actual success may be far lower than often assumed. It is therefore important to distinguish between the presence of such attempts and their effectiveness in achieving the intended outcomes.

During the initial invasion phase (08 February–15 March 2022), these bots disseminated misleading information that justified Russian state military actions and downplayed the severity of the invasion. During the mid-point (15 May–15 June 2022), a period marked by heavy fighting, particularly in the Donbas region, bot activity intensified, mirroring the escalation in military engagement and aiming to influence international opinion. During the Kherson counteroffensive (20 July–30 August 2022), bots supporting Putin’s regime attempted to counter the narrative of Ukrainian resilience, highlighting their strategic use in narrative control and the manipulation of public opinion.

Figure 2 represents the normalized weekly bot tweet volume from January to October 2022. The normalization rescales each week’s number of bot tweets relative to the minimum and maximum weekly volumes observed in the dataset (min-max scaling), so that the peak week takes a value of 1.0 for relative comparison. This process allows us to observe the proportional intensity of bot activity over time, offering a clear visual representation of bot-driven disinformation campaigns during key phases of the conflict.
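The description of Fig. 2 mentions both the minimum weekly volume and a peak value of 1.0, which is consistent with min-max scaling; we assume that form in the sketch below. The weekly counts are invented for illustration.

```python
def min_max_normalize(weekly_counts):
    """Rescale counts so the quietest week maps to 0.0 and the busiest to 1.0."""
    lo, hi = min(weekly_counts), max(weekly_counts)
    if hi == lo:
        # Flat series: every week is the peak.
        return [1.0 for _ in weekly_counts]
    return [(c - lo) / (hi - lo) for c in weekly_counts]


# Hypothetical weekly bot-tweet counts.
weekly = [120, 480, 300, 240]
print(min_max_normalize(weekly))  # [0.0, 1.0, 0.5, 0.333...]
```

If only division by the peak were intended (c / max), the quietest week would keep a nonzero value; the choice affects how pronounced the surges appear.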

The graph shows a surge in bot activity at the onset of the invasion by Putin’s regime, a noticeable uptick around the mid-point period from May to June, and another increase during the Ukrainian counteroffensive in Kherson. These surges align with the strategic timing of narrative manipulation and with state actors’ heightened need to control the information flow through automated means.

Figure 3 shows the normalized patterns of the top hashtag usage from January to September 2022 by scaling the frequency of each hashtag against the peak usage observed within the dataset. The timeframes of interest – marked by purple overlays – correspond to the periods of the Russian invasion, the mid-point of intensified military engagement, and the Ukrainian Kherson counteroffensive.

The visualization shows that pro-Ukraine hashtags, outlined in blue boxes, dominated the conversation. This dominance is consistent across the entire timeline but peaks notably during key conflict events. The prevalence of pro-Ukraine hashtags reveals a significant trend in bot sentiment on Twitter, reflecting widespread global support for Ukraine during these periods. We explore this trend further below, including how bots were programmed and used during this period, and examine how these hashtags can be interpreted as a form of digital “solidarity” with Ukraine and as a means of countering pro-Russian narratives on the platform, and vice versa.

The results in Figs. 4 and 5 provide a visual representation of the stance associations and stances expressed using specific hashtags on Twitter during the Russian-Ukraine conflict.

Figures 4 and 5 depict the ego network of the most popular hashtag for each stance. An ego network is centered on a single node (the “ego”) and includes all of the ego’s direct connections or interactions with other nodes (the “alters”), as well as the connections among those alters.
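The definition above can be made concrete with a hashtag co-occurrence graph. The sketch assumes an edge joins two hashtags whenever they appear in the same tweet (an assumption; the section does not specify edge weighting) and extracts the ego, its alters, and the ties among alters. The function names and toy data are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def cooccurrence_graph(tweet_tags):
    """Undirected hashtag co-occurrence graph as an adjacency dict of sets."""
    adj = defaultdict(set)
    for tags in tweet_tags:
        for a, b in combinations(sorted(set(tags)), 2):
            adj[a].add(b)
            adj[b].add(a)
    return adj


def ego_network(adj, ego):
    """Return (nodes, edges): the ego, its alters, and all ties among them."""
    alters = adj.get(ego, set())
    nodes = {ego} | alters
    # Keep every edge whose endpoints are both inside the ego network.
    edges = {tuple(sorted((u, v))) for u in nodes for v in adj.get(u, ()) if v in nodes}
    return nodes, edges


tweet_tags = [
    {"standwithukraine", "stopputinnow"},
    {"standwithukraine", "prayingforukraine"},
    {"stopputinnow", "prayingforukraine"},
    {"istandwithrussia", "nonato"},
    {"stopputinnow", "news"},
]
nodes, edges = ego_network(cooccurrence_graph(tweet_tags), "standwithukraine")
print(sorted(nodes))
print(sorted(edges))
```

Here `news` is excluded because it is a neighbor of an alter but not of the ego itself.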

Figure 4 depicts the ego network for a pro-Ukraine stance, centered around the hashtag #StandWithUkraine. The orange node represents the selected hashtag (the ego), which serves as the focal point of the network. The blue nodes indicate hashtags associated with a positive stance towards Ukraine, suggesting support and solidarity with the Ukrainian cause. Red nodes, on the other hand, signify negative stance but, in this context, they are against Russia, thereby reinforcing the pro-Ukraine stance. Green nodes denote neutral hashtags that are neither exclusively pro-Ukraine nor pro-Russian but are possibly used in discussions that involve a broader or neutral perspective on the conflict.

The prevalence of blue nodes surrounding the ego, #StandWithUkraine, highlights the strong positive stance and support for Ukraine within the Twitter discourse. The interconnectedness of these nodes suggests a cohesive community of Twitter bots programmed to express support for Ukraine and opposition to Russia. This network structure implies digital solidarity, in which bots and users rally around common hashtags to express support, spread awareness, and potentially counteract pro-Russian narratives. However, the presence of these coordinated bot activities does not necessarily equate to effective manipulation of public opinion.

Figure 5 illustrates the ego network for the pro-Russian stance, with #IstandwithRussia as the ego node. As in Fig. 4, the colors denote the stance associated with each hashtag: blue nodes reflect a positive stance towards Russia, red nodes represent a negative stance that, mirroring the pro-Ukraine network, is directed against Ukraine, and green nodes are neutral. The hashtags cluster less densely around the central node than in the pro-Ukraine network. This implies a less cohesive or smaller group of Twitter bot accounts sharing pro-Russian sentiment, although it could also reflect a strategic use of varied hashtags to spread pro-Russian messages across different Twitter communities. The prevalence of green (neutral) nodes suggests a strategy to engage a wider audience or to introduce pro-Russian messages into broader, potentially unrelated discussions.
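"Less dense" can be quantified with the standard density of an undirected graph, the share of possible ties that are present, 2E / (n(n - 1)). The node and edge counts in the sketch are hypothetical, not values read from Figs. 4 and 5.

```python
def density(num_nodes, num_edges):
    """Share of possible undirected ties that are present: 2E / (n(n-1))."""
    if num_nodes < 2:
        return 0.0
    return 2 * num_edges / (num_nodes * (num_nodes - 1))


# Hypothetical ego networks of equal size but different connectivity.
print(density(10, 30))  # 0.666...
print(density(10, 15))  # 0.333...
```

Because density depends on network size, comparing ego networks with very different numbers of alters is more informative when paired with the raw node counts.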

These networks were built over multiple iterations. Their structure and composition revealed not only the sentiments and stances of Twitter bots but also how these stances are presented and propagated. By analyzing the connections between hashtags, we can infer the strategic use of language and digital behavior that defines these online events (Figure 6).

Through this process, we created a list of 2,000 hashtags for stance detection containing both pro-Russian (including anti-Ukraine) and pro-Ukraine (including anti-Russian) stances, which we used for further sentiment analysis. As shown in Fig. 7, the heatmap visually represents some of the stance occurrences over time. The top portion of the heatmap, delimited by the black horizontal line, comprises pro-Ukraine stances. These are characterized by support for Ukraine, opposition to Putin and Russia, and criticism of the Russian regime. The lower portion represents pro-Russian stances, which predominantly consist of support for Russia, antagonism towards NATO, and pejorative references to Ukraine as a Nazi state.

The most notable pro-Ukraine hashtags, visible throughout all three timeframes, include #StandWithUkraine, #istandwithzelensky, #PrayingforUkraine, #standwithNATO, #PutinWarCrimes, #PutinWarCriminal, and #StopPutinNow.

Hashtags such as #StandWithUkraine and #istandwithzelensky are clear rallying calls for solidarity with Ukraine. Their prominence in the heatmap during all three phases of the conflict demonstrates sustained and widespread digital mobilization in support of Ukraine. #PrayingforUkraine reflects the global community’s concern and hope for the welfare of the Ukrainian people, while #standwithNATO suggests alignment with Western military alliances, which are seen as protectors and allies in this conflict.

The frequency of hashtags like #PutinWarCrimes and #PutinWarCriminal indicates an accusatory stance against the Russian leadership, branding their military actions as criminal. This not only conveys condemnation but also echoes calls for international legal action.

The most notable pro-Russian hashtags, visible throughout all three timeframes, include #istandwithrussia, #istandwithPutin, #abolishNATO, #noNATO, #UkroNazi, #WarCrimesUkraine, #StopNATOExpansion, and #NATOterrorists.

The hashtags #istandwithrussia and #istandwithPutin represent a digital front of support for Russian actions and policies. These hashtags demonstrate support for Russian narratives and reject Western critiques. #abolishNATO and #noNATO indicate a stance against NATO, suggesting that it is viewed as an aggressor within the conflict, aligning with Russian claims of being threatened by NATO expansion to their borders. #UkroNazi and #WarCrimesUkraine are utilized to delegitimize the Ukrainian government and its actions, employing historical references to vilify Ukraine’s position and justify Russia’s actions.

Figure 8 depicts a sample of pro-Ukraine and pro-Russian hashtags discovered through stance detection, showing how the narratives progressed during the three major phases of the conflict. Their usage patterns across these timeframes show how both sides leverage social media to build and maintain support, counteract opposing narratives, and potentially influence undecided or neutral observers.

Across the three timeframes, these hashtags reflect each side’s efforts to rally communities to its cause. Their repeated use, particularly during critical events, suggests a bot-centric strategy to amplify certain narratives. The predominance of specific hashtags and their close variants in the heatmap indicates where bot activity is concentrated, representing an attempt to shape discourse around key narrative points.

These hashtags and their distribution over time provide quantitative backing for the qualitative observations derived from the ego networks. Tracking the rise and fall of these hashtags offers insight into the role and reach of these bots, which is critical for both sides of the conflict because it can significantly affect international perception and policy decisions. The network analysis in Figs. 4 and 5 shows how bots are interconnected, Fig. 8 measures the intensity and prevalence of opinions during critical events of the Russian-Ukraine conflict, and Fig. 6 shows the frequency at which these bots post.

Figure 6 depicts the frequency at which bots posted across all three timeframes. Its delineation of pro-Ukraine and pro-Russian hashtags shows a pronounced skew towards pro-Ukraine stances, especially during key moments of the conflict. This skew is not just indicative of public opinion but also reflects a concerted effort by bot networks to amplify the Ukrainian narrative. The persistently dominant use of pro-Ukraine hashtags suggests strong and continuous bot engagement by those operating automated accounts in support of Ukraine’s cause. The sharp fluctuations in pro-Ukraine hashtag frequency, particularly at the points marked “Russian Invasion”, “Mid-Point”, and “Counteroffensive”, suggest that key military or political events trigger significant spikes in bot activity.

Content moderation policies also played a significant role: pro-Ukraine stances were more prevalent, and pro-Russian stances less prevalent, at the beginning of the period. However, as the year progressed and new policies were established, pro-Russian sentiment grew, particularly towards the end of 2022. This shift is evidenced by the increased use of hashtags such as “istandwithrussia”, “UkraineNazis”, “NATOized”, and “StopNaziUkraine” during the counteroffensive period, suggesting that changes in content moderation policies increased bot activity promoting pro-Russian messaging.

The relatively stable line of pro-Russian hashtag usage in Fig. 6 suggests consistent, albeit less pronounced, use of these hashtags by bots. This pattern admits several explanations: a more restrained approach to social media, a less mobilized base of support, or countermeasures taken by platforms to limit the reach of pro-Russian messaging, which several social media platforms adopted as policy.

The normalization in Fig. 6 is critical because it allows comparison of relative changes over time, controlling for the absolute number of messages sent. It highlights the relative intensity of information warfare campaigns rather than raw volumes, providing insight into how the conflict is being fought on social media platforms. Together with the heatmap (Fig. 8) and the ego networks (Figs. 4 and 5), this figure provides a comprehensive picture of how information warfare campaigns unfold, deepening our understanding of these themes.

4.2 Operational narratives: the role of bots in conflict storytelling

When performing stance analysis, TweetBERT processes tweets by considering their content, language style, and structure. Its underlying architecture, pre-trained on millions of tweets, captures the informal and idiosyncratic language of Twitter (Qudar and Mago 2020). The model classifies tweets as positive, negative, or neutral based on learned patterns from its training data, encompassing a wide spectrum of expressions, from straightforward statements to subtle or sarcastic comments. This sentiment analysis was applied to our dataset, covering a range of emotions, opinions, and perspectives conveyed through informal language and hashtags.

In the sentiment analysis of Fig. 7, pro-Russian tweets are more inclined to convey very negative sentiment, with an average probability of 35%, whereas pro-Ukraine tweets are more predisposed to express very positive sentiment, with a higher average probability of 40%. This stark disparity in sentiment distribution points to a deliberate strategy, in which negative sentiment serves to undermine or vilify the opposition and positive sentiment serves to promote unity and garner support.
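Figures such as "an average probability of 35%" can arise from averaging per-tweet class probabilities within a stance group. The sketch assumes the sentiment model emits a probability per sentiment class for every tweet (the class names and toy values are hypothetical) and computes that group mean.

```python
from statistics import mean


def mean_class_probability(rows, stance, label):
    """Average model probability for `label` over tweets with the given stance.

    rows: iterable of (stance, {label: probability}) pairs, one per tweet.
    Raises statistics.StatisticsError if no tweet has the requested stance.
    """
    probs = [p[label] for s, p in rows if s == stance]
    return mean(probs)


rows = [
    ("pro-Russia", {"very negative": 0.5, "very positive": 0.1}),
    ("pro-Russia", {"very negative": 0.2, "very positive": 0.1}),
    ("pro-Ukraine", {"very negative": 0.1, "very positive": 0.6}),
]
print(mean_class_probability(rows, "pro-Russia", "very negative"))  # about 0.35
```

Averaging probabilities, rather than counting argmax labels, preserves the model's uncertainty on ambiguous tweets.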

Specifically, the 35% probability of very negative sentiments in pro-Russian tweets suggests a calculated effort to sow discord and foster a hostile perception of Ukraine and its allies, aiming to erode international support by casting their efforts in a negative light. Conversely, the 40% probability of very positive sentiments in pro-Ukraine tweets likely aims to bolster the legitimacy and morale of the Ukrainian cause, seeking to strengthen and consolidate global backing for their stance.

Figure 9, generated from this analysis, visualizes the tweet embeddings reduced to two principal components through principal component analysis (PCA). The two-dimensional plot provides a simplified yet informative view of the high-dimensional data, highlighting the distinction between the pro-Ukraine and pro-Russian clusters formed by the K-means algorithm from the semantic content of the tweets as encoded by TweetBERT.
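The pipeline behind a figure like Fig. 9 can be sketched with NumPy alone: K-means groups the full embedding vectors, and PCA (computed via SVD) projects them to two dimensions for plotting. Synthetic Gaussian vectors stand in for TweetBERT embeddings here, and the deterministic farthest-point initialization is our choice for reproducibility, not necessarily the one used in the study.

```python
import numpy as np


def pca_2d(X):
    """Project rows of X onto their first two principal components via SVD."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ vt[:2].T


def kmeans(X, k=2, iters=100):
    """Lloyd's k-means with deterministic farthest-point initialization.

    Empty clusters are not handled; fine for well-separated demo data.
    """
    centers = [X[0]]
    for _ in range(1, k):
        # Next center: the point farthest from all centers chosen so far.
        d = np.min([np.linalg.norm(X - c, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d)])
    centers = np.array(centers, dtype=float)
    for _ in range(iters):
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        new = np.array([X[labels == j].mean(axis=0) for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels, centers


# Synthetic stand-ins for two groups of tweet embeddings (64 dimensions).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(1.0, 0.1, (50, 64)), rng.normal(-1.0, 0.1, (50, 64))])
labels, _ = kmeans(X, k=2)  # cluster in the full embedding space
coords = pca_2d(X)          # 2-D coordinates for plotting only
print(np.bincount(labels), coords.shape)
```

Clustering before projecting keeps cluster assignments independent of the information PCA discards.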

Figure 9 illustrates two clusters with a clear dichotomy in the narrative propagated by bots: one distinctly pro-Ukraine and the other pro-Russian. The plot shows not only the presence of two opposing narratives but also the degree to which these narratives are being propagated, as evident from the clustering patterns. The spatial distribution suggests minimal crossover or ambiguity in the messaging of these bots; they are programmed with specific narratives and do not typically engage with or share content from the opposing viewpoint.

The distribution of points within each cluster may indicate the strength and coherence of the messaging. For example, the tight clustering within both the pro-Ukraine and pro-Russian groups indicates a highly focused and consistent narrative push. Some dispersion within each cluster suggests variability in sub-narratives within each messaging strategy, but these mostly follow the same main narrative for each viewpoint.
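"Tight clustering" and "some dispersion" can be quantified, for example, as the mean Euclidean distance from each point to its cluster centroid. This metric is our illustrative choice, not one reported in the study; it could be applied to the PCA coordinates or to the full embeddings, and the toy points below are invented.

```python
from math import dist
from statistics import mean


def dispersion(points, labels):
    """Mean Euclidean distance from each point to its cluster centroid, per cluster."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    result = {}
    for lab, pts in clusters.items():
        # Centroid: coordinate-wise mean of the cluster's points.
        centroid = [mean(coord) for coord in zip(*pts)]
        result[lab] = mean(dist(p, centroid) for p in pts)
    return result


points = [(0, 0), (0, 2), (10, 10), (10, 14)]
labels = ["a", "a", "b", "b"]
print(dispersion(points, labels))  # {'a': 1.0, 'b': 2.0}
```

Lower values indicate a more uniform narrative within a cluster.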

Figure 9 reveals a level of sophistication in the narrative warfare at play. Bots are not merely spreading information; they are curating a specific emotional sentiment aligned with their programmed objectives. This emotional manipulation can have a profound impact on human users who encounter these tweets, potentially swaying public opinion and influencing the social media discourse surrounding the war.

The analysis of bot activity, as visualized in Fig. 9, again shows a stark division between pro-Ukraine and pro-Russian clusters, with no apparent overlap. This segregation is characteristic of echo chambers, which are typically strengthened by the absence of dissenting or alternative viewpoints. Our findings are supported by recent research, which highlights the significance of bipartisan users in bridging divided communities and disrupting the formation of such echo chambers (Zhang et al. 2023). When human bipartisan interactions are present, these users can introduce variance and mitigate the polarizing effects of echo chambers by connecting different narrative threads (Zhang et al. 2023).

However, in our bot-only dataset, the lack of this bipartisanship shows the potential for echo chambers to thrive unchecked. Without the moderating influence of bipartisan users, be they human or bots programmed with diverse narratives, the clusters formed are highly polarized and exhibit a high degree of narrative consistency. This raises important questions about the design and intention behind these bots. If bots were programmed to mimic the bipartisan behavior observed in human users, could they serve a similar function in diluting the echo chamber effect? Or would their artificial nature render such efforts ineffective or even counterproductive?

It is not surprising that the two groups are disjoint, as most bots do not interact, indicating that simple bots, which relay messages but don’t engage in adaptation, are being used. This absence of interaction among bots highlights the use of a more rudimentary form of artificial intelligence in these information campaigns, focusing on message distribution rather than engaging in complex conversations or altering strategies based on audience response. Such an approach reveals a deliberate choice to prioritize volume and consistency over adaptability and engagement, hinting at a strategic emphasis on shaping narratives and controlling discourse rather than fostering genuine interaction.

The absence of human users in this dataset allows a unique observation of how bots alone create and sustain narrative echo chambers. The homogeneity within each cluster indicates a sophisticated level of narrative control, likely intended to shape public discourse. This highlights the potential for bots to be used in information warfare, significantly influencing social media in the absence of human counter-narratives. Zhang et al. (2023) suggest that the inclusion of bipartisan elements could introduce complexity and interaction, preventing such insulated informational environments. Integrating these insights could inform future strategies for digital platform governance and algorithm design to detect and mitigate polarized content.

The stark separation of sentiment and narrative between pro-Ukraine and pro-Russian bots reveals a deliberate effort to not only inform but also emotionally influence public opinion. By highlighting the operational simplicity and strategic focus of the bots used in these campaigns, we can better understand the dynamics at play in digital propaganda efforts and the critical role of human engagement in countering the formation of narrative echo chambers. However, it is important to recognize that while these findings show the potential for bots to shape narratives and control discourse, the overall influence of these echo chambers and coordinated disinformation efforts on public opinion remains limited. This nuanced understanding can guide future efforts in digital platform governance and the development of strategies to foster more diverse and interactive online environments.

Narrative Dynamics Across Conflict Phases

Applying topic analysis to our dataset, we trace how bot-driven narratives evolve in these information warfare efforts. Our results examine how bots strategically broadcast pro-Ukraine and pro-Russian stances during key events of the conflict.

Russian Invasion Analysis

Figure 10 shows the narratives propagated by bot-driven communities during the Russian invasion, from 08 February to 15 March 2022. The pro-Ukraine word cloud centers on rallying cries such as #supportukraine and calls to action such as #stoprussianaggression, reflecting a clear call for international support and immediate action to counter Russian aggression. The emphasis on war-crimes hashtags such as #putinwarcriminal and #warcrimesofrussia aligns with efforts to draw global attention to the invasion’s humanitarian impact on the Ukrainian people.

The hashtags #putinwarcriminal and #arrestputin suggest a concerted effort to personalize the conflict, concentrating the narrative on the single figure of Vladimir Putin and simplifying a complex geopolitical situation into a clear-cut story about who is the main aggressor behind Russia’s military actions.

Conversely, the pro-Russian word cloud is dominated by terms such as #stopnato and #nazis, which appear to be part of a broader strategy to undermine the legitimacy of NATO’s involvement and to cast aspersions on the motivations behind Ukraine’s defense efforts. The repeated use of #nazi in conjunction with various entities (#kosovoinnato, #germannazis, and #ukronazis) is a provocative attempt to invoke historical animosities and paint the opposition as not just wrong, but morally reprehensible.

The presence of terms like #zelenkylies and #fuckbiden suggests an aggressive stance against international figures who are critical of Russia’s actions. This aggressive language is likely intended to resonate with and amplify existing anti-Western sentiment, rallying support by tapping into such strong emotions.

The word clouds in Fig. 10 demonstrate the capacity of bot-driven communities to disseminate targeted messages that can influence public discourse. The strategic repetition of these specific terms and the emotional weight they carry can significantly affect public perception of the conflict. For example, the repeated association of Ukraine with Nazism by pro-Russian bots could, over time, lead on-the-fence observers to view the Ukrainian resistance with skepticism. Similarly, the pro-Ukraine bots’ focus on war crimes and heroism can bolster a narrative of moral high ground and rightful resistance, influencing international opinion and potentially swaying public policy.
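Word clouds of this kind typically size each term by how often it recurs within a group. The paper does not detail how its clouds were generated, so the following is only a minimal sketch, assuming each tweet already carries a stance label from the stance detection step. The function name `hashtag_weights`, the regular expression, and the toy tweets are hypothetical illustrations, not the study's pipeline or data.

```python
import re
from collections import Counter

# Simplifying assumption: a hashtag is '#' followed by word characters.
HASHTAG_RE = re.compile(r"#(\w+)")


def hashtag_weights(tweets, stance, top_n=10):
    """Count lowercased hashtags in tweets labeled with the given stance."""
    counts = Counter()
    for text, label in tweets:
        if label == stance:
            counts.update(tag.lower() for tag in HASHTAG_RE.findall(text))
    return counts.most_common(top_n)


# Hypothetical toy tweets as (text, stance label); not drawn from the study.
sample = [
    ("We must act #StopRussianAggression #SupportUkraine", "pro-ukraine"),
    ("#SupportUkraine now", "pro-ukraine"),
    ("#StopNATO expansion", "pro-russia"),
]

print(hashtag_weights(sample, "pro-ukraine"))
# [('supportukraine', 2), ('stoprussianaggression', 1)]
```

Lowercasing merges case variants of the same hashtag, which matters because the same tag often appears with inconsistent capitalization. The resulting frequencies could then be handed to a word-cloud renderer; for example, the `wordcloud` Python package accepts a frequency dictionary through `WordCloud.generate_from_frequencies`.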

Mid-Point Analysis

Figure 11 shows the intensified narratives pushed by bot-driven communities at the midpoint of the conflict, highlighting how these automated accounts adapted their messaging to the evolving circumstances of the Russian-Ukraine conflict.

The pro-Ukraine bots, during the midpoint timeframe, focus sharply on the characterization of Russian leadership as criminal, with #putinwarcrimes and #putinisaterrorist featuring prominently. This indicates a strategic push to hold the Russian state accountable for its actions and to appeal to the international community’s sense of justice. The word cloud also shows a call for solidarity and support for Ukraine, with terms like #saveukraine and #standwithukraine appearing frequently. The repeated use of #stopputin and #stoprussianaggression serves as a rallying cry to mobilize international pressure against the Russian military campaign.

The pro-Russian word cloud exhibits a significant focus on terms that escalate the dehumanization of Ukraine and its allies. The prominent display of #nazi related terms in conjunction with #ukronazis and #stopnato suggests a continuation and intensification of the strategy to vilify Ukraine by associating it with historical evils. The use of such charged terms is a common tactic in information warfare, aimed at delegitimizing an opponent and swaying public sentiment by drawing on emotional and historical connotations.

This phase also shows an increased effort to reinforce narratives that support Russian actions, with terms like #isupportputin and #russianato, which indicate a defensive posture in response to global criticism of the invasion.

The word clouds suggest a battle for the narrative high ground, in which each side’s bots work continuously to sway public opinion and influence international perception. The focus on emotionally charged and historically weighted language demonstrates the bots’ role in amplifying existing narratives.

Bots are used not only to disseminate information but also to engage in psychological operations. The stark contrast between the two sets of word clouds highlights the polarized nature of the conflict as perceived through social media, with bots acting as catalysts for these divisive narratives. As the war progresses, these automated agents continue to play a crucial role in the information warfare that accompanies the physical fighting on the ground.

Kherson Counteroffensive Analysis

In Fig. 12, the word cloud from the Kherson Counteroffensive shows how bot-driven communities continued to target and amplify specific narratives. The strategies and thematic focus of bot activity persisted as the conflict reached its turning point with the Ukrainian counteroffensive.

The pro-Ukraine word cloud responds to the pro-Russian narrative with a heightened focus on justice and accountability. Terms like #putinwarcriminal and #warcrimainlputin dominate, suggesting a strategic emphasis on the legal and ethical implications of the conflict. This shift towards highlighting war crimes and their criminal nature serves to underscore the illegitimacy of Russian military actions and to bolster international support for Ukraine’s counteroffensive.

The recurrent mention of #stoprussianaggression and the calls to #standforukraine reflect a continued urgency in the pro-Ukraine narrative. They serve not only as a plea for support but also as a means of reinforcing the identity of Ukraine as a nation under unjust attack, striving to defend its sovereignty and people.

The pro-Russian bots during the Kherson Counteroffensive continue to push a narrative that is heavily laden with historical and nationalistic sentiments. The use of #nazis in conjunction with #ukronazis and #nato persists, emphasizing an attempt to paint the Ukrainian defense efforts and their Western allies in a negative light. This continued usage of such incendiary terminology suggests a relentless drive to cast the conflict not just as a territorial dispute but as a moral battle against perceived fascism, as viewed from a Russian standpoint.

The terms #traitorsofukraine and #standwithrussia indicate a dual strategy of internal division and external solidarity. By labeling opposition elements as traitors, these bots aim to sow discord and delegitimize the Ukrainian resistance while simultaneously calling for unity among pro-Russian supporters.

The thematic content of these word clouds during the Kherson Counteroffensive highlights how bots can adapt their messaging to the changing dynamics of war. As Ukraine takes a more offensive stance, the pro-Russian bots ramp up their use of divisive language, likely to counteract the rallying effect of Ukrainian advances. Similarly, pro-Ukraine bots amplify their calls for justice and international support, aiming to capitalize on the momentum of the counteroffensive.

These bot-driven narratives are engineered to provoke specific emotional responses and attempt to manipulate the perception of the conflict. The use of emotionally charged language and polarizing terms highlights the sophistication of these information warfare campaigns, designed not just to report on the conflict but to actively shape the discourse around it.

Throughout the various stages of the Russia-Ukraine conflict, bot-driven narratives strategically attempted to influence public perception and opinions. During the invasion phase, pro-Russian bots discredited NATO and rallied support for Russia, while pro-Ukraine bots highlighted the urgency of resisting Russian aggression and focused on humanitarian issues. As the conflict escalated, pro-Russian narratives intensified the vilification of Ukraine using historical antagonisms. In contrast, pro-Ukraine bots condemned Russian leadership and emphasized accountability. During the Kherson Counteroffensive, pro-Russian bots amplified divisive rhetoric to undermine Ukrainian unity, whereas pro-Ukraine bots stressed criminal accountability and valor.

4.3 Analyzing strategic information warfare tactics in Russia–Ukraine Twitter bot networks

Information warfare, particularly on social media, has become a defining strategy of modern warfare. It leverages the interconnectedness and immediacy of social media platforms to spread disinformation, manipulate narratives, and sow discord among target populations. Its tactics can shape public opinion, influence political processes, and destabilize entire nation-states by eroding trust in institutions and democratic processes. The normalization of disinformation and the exploitation of existing social platforms further amplify the potency of such campaigns.

Figures 13 and 14 map the stance detection results for thousands of tweets against the overall BEND analysis, separately for the pro-Russian and pro-Ukraine stances, across the three selected timeframes.

We performed the BEND analysis separately on communities segregated by stance detection. The analysis was also used to focus the topic analysis and to show how these maneuvers manifest across all three timeframes and how they affect narrative and community dynamics. This aligns with social cybersecurity’s focus on understanding the digital manipulation of community and narrative dynamics, highlighting the strategic use of social media in modern conflicts. We aggregated and normalized the data so that the timeframes could be compared directly, showing how the prevalence of each maneuver changed within each stance.
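The aggregation and normalization step described above can be sketched as follows. The text does not specify the exact scheme, so this minimal sketch assumes one plausible choice: each (stance, timeframe) group's maneuver counts are divided by that group's total, so the shares within a group sum to 1 and groups with different tweet volumes become comparable. The record layout and the name `normalize_maneuvers` are hypothetical, and each record is assumed to be one detected maneuver (a tweet exhibiting several maneuvers contributes several records).

```python
from collections import Counter, defaultdict


def normalize_maneuvers(records):
    """Turn (stance, timeframe, maneuver) records into per-group proportions.

    Assumption: each record is one detected maneuver. Within each
    (stance, timeframe) group the returned shares sum to 1.
    """
    counts = defaultdict(Counter)
    for stance, timeframe, maneuver in records:
        counts[(stance, timeframe)][maneuver] += 1

    shares = {}
    for group, counter in counts.items():
        total = sum(counter.values())
        shares[group] = {m: n / total for m, n in counter.items()}
    return shares


# Hypothetical records for illustration only.
records = [
    ("pro-ukraine", "invasion", "Boost"),
    ("pro-ukraine", "invasion", "Build"),
    ("pro-ukraine", "invasion", "Boost"),
    ("pro-ukraine", "invasion", "Excite"),
    ("pro-russia", "invasion", "Neutralize"),
]

shares = normalize_maneuvers(records)
print(shares[("pro-ukraine", "invasion")]["Boost"])  # 0.5
```

Normalizing within each group, rather than against the whole dataset, keeps a high-volume timeframe from dominating the comparison; other schemes (for example, per-tweet rates) would be equally defensible.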

Figure 13 illustrates the evolution of pro-Ukraine narratives within the Twitter bot community. During the Russian invasion, there was a significant Boost and Build effort, using hashtags like #westandforukraine and #istandwithzelensky to foster solidarity and support for Ukraine, reflecting the BEND framework’s principles of community building (Carley 2020). As Russian activities escalated at the mid-point, Engage and Excite maneuvers increased, aiming to make the conflict more globally relevant and counter Russian disinformation. During the Ukrainian counteroffensive, Dismay, Distort, and Distract maneuvers surged, with hashtags like #putinisawarcriminal, #russiaisaterroriststate, and #PutinGenocide challenging pro-Russian narratives and diverting attention from Russian messaging.

Figure 14 illustrates the evolution of pro-Russian narratives within the Twitter bot community. During the Russian invasion, there was a focus on Negate and Neutralize maneuvers, using hashtags like #abolishNATO and #endNATO to diminish opposing narratives. Increased Russian military activity saw Distort and Dismiss maneuvers, skewing the narrative in favor of Russia by highlighting negative aspects of Ukraine, such as Nazi associations. In response to the Ukrainian counteroffensive, Dismay and Distort efforts surged, with hashtags like #westandwithrussia and #naziNATO, aiming to cause fear and discredit Ukraine while garnering support for Russia. This reflects the social cybersecurity concept of narrative manipulation, where digital platforms reshape public perception.

The BEND framework shows that pro-Ukraine efforts predominantly utilize the ‘B’ (Boost and Build) and ‘E’ (Engage and Excite) maneuvers, focusing on community building and positive narrative development. Conversely, pro-Russian bot activities rely heavily on the ‘N’ (Negate and Neutralize) and ‘D’ (Distort and Dismay) maneuvers, aiming for community disruption and negative emotional influence.
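In the BEND framework as described by Carley (2020), each maneuver name begins with the letter of its category (Back, Build, Bridge, Boost; Engage, Explain, Excite, Enhance; Neutralize, Nuke, Narrow, Neglect; Dismiss, Distort, Dismay, Distract). As a result, per-maneuver counts can be rolled up into the four categories by their initial letter. The minimal sketch below assumes counts are already available per maneuver; the function names and the toy counts are hypothetical and do not reproduce the values in Figs. 13 and 14.

```python
from collections import Counter

# Initial letter of a BEND maneuver -> category it belongs to.
CATEGORY = {
    "B": "positive community",  # Back, Build, Bridge, Boost
    "E": "positive narrative",  # Engage, Explain, Excite, Enhance
    "N": "negative community",  # Neutralize, Nuke, Narrow, Neglect
    "D": "negative narrative",  # Dismiss, Distort, Dismay, Distract
}


def bend_categories(maneuver_counts):
    """Sum per-maneuver counts into B/E/N/D totals using the initial letter."""
    totals = Counter()
    for maneuver, n in maneuver_counts.items():
        letter = maneuver[:1].upper()
        if letter in CATEGORY:  # skip labels outside the BEND naming scheme
            totals[letter] += n
    return totals


def dominant_category(maneuver_counts):
    """Return (letter, description) of the largest category, or None if empty."""
    totals = bend_categories(maneuver_counts)
    if not totals:
        return None
    letter, _ = totals.most_common(1)[0]
    return letter, CATEGORY[letter]


# Hypothetical counts for illustration only.
pro_ukraine = {"Boost": 40, "Build": 25, "Excite": 30, "Dismay": 10}
pro_russia = {"Neutralize": 20, "Distort": 35, "Dismay": 15, "Excite": 5}
print(dominant_category(pro_ukraine))  # ('B', 'positive community')
print(dominant_category(pro_russia))   # ('D', 'negative narrative')
```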

Applying the BEND framework shows the contrasting online strategies employed by both sides. Pro-Ukraine bots emphasize strengthening solidarity and support, using the Back maneuver to amplify pro-Ukraine voices and the Engage maneuver to foster online camaraderie. This proactive stance aims to rally support and create a collective pro-Ukraine sentiment. In contrast, pro-Russian bots supporting Putin’s regime focus on disrupting communities and spreading negative sentiment to weaken opposition and influence perceptions negatively.

These strategies extend beyond bot communities and are designed to influence the perceptions and behaviors of human users. To better understand the impact of these hashtags, we investigated selected hashtags across the three timeframes to identify the maneuvers each stance represented in bot activity and community engagement on Twitter during the key phases of the Russia-Ukraine conflict.
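Selecting the tweets that carry a given hashtag and tallying their maneuver labels per stance and timeframe can be sketched as below. This is a hypothetical illustration, not the study's code. It assumes each tweet record already holds its hashtags, a stance label, a timeframe label, and a list of BEND maneuvers from an upstream classifier. The field names and the function `maneuvers_for_hashtag` are invented for the example.

```python
from collections import Counter


def maneuvers_for_hashtag(tweets, hashtag):
    """Tally BEND maneuvers per (stance, timeframe) among tweets using a hashtag.

    Matching is case-insensitive and ignores a leading '#'.
    """
    target = hashtag.lower().lstrip("#")
    tallies = {}
    for tweet in tweets:
        tags = {t.lower().lstrip("#") for t in tweet["hashtags"]}
        if target in tags:
            key = (tweet["stance"], tweet["timeframe"])
            tallies.setdefault(key, Counter()).update(tweet["maneuvers"])
    return tallies


# Hypothetical tweet records; field names are illustrative assumptions.
tweets = [
    {"hashtags": ["#IStandWithUkraine"], "stance": "pro-ukraine",
     "timeframe": "invasion", "maneuvers": ["Excite", "Engage"]},
    {"hashtags": ["#istandwithukraine", "#StandWithUkraine"], "stance": "pro-ukraine",
     "timeframe": "invasion", "maneuvers": ["Excite"]},
    {"hashtags": ["#IStandWithRussia"], "stance": "pro-russia",
     "timeframe": "invasion", "maneuvers": ["Dismay"]},
]

result = maneuvers_for_hashtag(tweets, "#istandwithukraine")
print(result[("pro-ukraine", "invasion")].most_common(1))  # [('Excite', 2)]
```

Keying the tallies by (stance, timeframe) keeps the two stances separate even when both sides use the same hashtag, which is how the per-hashtag comparisons in the following subsections are organized.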

Russian Invasion Analysis

Figure 15 shows the predominant themes during the Russian invasion through the #istandwithukraine and #istandwithrussia hashtags.

For the pro-Ukraine stance, the Excite maneuver is prominent, suggesting a focus on eliciting positive emotions like joy and happiness to boost morale and support. The Engage maneuver follows, indicating efforts to increase the topic’s relevance, share impactful stories, and suggest ways for the audience to contribute to the cause.

The Dismay maneuver is also significant for the pro-Ukraine stance, indicating a strategy to invoke worry or sadness about the invasion’s impacts. This humanizes the conflict, attracting global empathy and support for Ukraine by highlighting the gravity of the situation and the suffering of the Ukrainian people.

For the pro-Russian stance, the dominant maneuver is Dismay, aiming to evoke negative emotions like worry or despair, potentially to demoralize or create a sense of hopelessness regarding the situation in Ukraine. The Distort maneuver suggests an attempt to manipulate perceptions, promoting pro-Russian viewpoints while questioning the legitimacy of Ukrainian narratives. The Excite maneuver is also prevalent, indicating efforts to rally support by justifying Russia’s actions and uniting pro-Russian communities under patriotic or anti-Western sentiment.

The strategic deployment of these maneuvers highlights the sophisticated use of social media as a battleground for psychological and narrative warfare. Pro-Ukraine bots focus on building support and maintaining positive sentiment, while pro-Russian bots concentrate on narrative manipulation and fostering negative emotions to influence opinions.

Mid-Point Analysis

During the mid-point of the war, when Russia escalated its military efforts as shown in Fig. 16, there was a notable shift in topics from both pro-Ukraine and pro-Russian standpoints. Key themes include NATO engagement by the Russian state and criticisms of Vladimir Putin by Ukraine. We analyzed the hashtags #putinwarcriminal and #stopnato.