Abstract

Scientists often collect samples on characteristics of different observation units and wonder whether those characteristics have similar distributional structure. We consider methods to find homogeneous subpopulations in a multidimensional space using regression tree and clustering methods for distributions of a population characteristic. We present a new methodology to estimate a standardized measure of distance between clusters of distributions, together with a hierarchical testing procedure to find the minimal homogeneous or near-homogeneous tree structure. In addition, we introduce hierarchical clustering with adjacency constraints, which is useful for clustering georeferenced distributions. We conduct simulation studies to compare clustering performance with three measures, the Modified Jensen–Shannon divergence (MJS), the Earth Mover’s distance and the Cramér–von Mises distance, and to validate the proposed testing procedure for homogeneity. As a motivating example, we introduce georeferenced yellowfin tuna fork length data collected from the catch of purse-seine vessels that operated in the eastern Pacific Ocean. Hierarchical clustering, with and without spatial adjacency constraints, and regression tree methods were applied to the density estimates of length. While the results from the two methods showed some similarities, hierarchical clustering with spatial adjacency produced a more flexible partition structure, without requiring additional covariate information. Clustering with MJS produced more stable results than clustering with the other measures.

1 Introduction

Classification and regression tree (CART, Breiman et al. 1984) and hierarchical clustering (Gordon 1999) are commonly used methods, not only to find homogeneous groups of observations in a data set, but also to describe the distributional structure of population characteristics. The data of interest in this study are samples of individuals, where each sample is large enough to allow estimation of the sample-specific density of a population characteristic. Several authors have previously proposed regression tree methods for distributions, mostly frequency tables. De’ath (2002) proposed a multivariate regression tree method and applied it to histograms by simply treating frequencies as numerical vectors. Lennert-Cody et al. (2010) and Lennert-Cody et al. (2013) proposed a regression tree method for frequency tables using a Kullback–Leibler divergence-based dissimilarity measure.

For clustering of samples from populations, Dhillon et al. (2002) and Dhillon et al. (2003) proposed a divisive algorithm for word clustering applied to text classification using generalized Jensen–Shannon divergence and presented the properties of the divergence. Cha (2007) gave an exhaustive list of distance/similarity measures between distributions. Vo-Van and Pham-Gia (2010) defined a measure using \(L^1\) distance and proposed a clustering method based on it. To analyze patent citation data, Jiang et al. (2013) categorized the clustering methods for distributions into three classes and showed that the method with the Modified Kullback–Leibler divergence performs better than the one with geometric distance. Nielsen et al. (2014) investigated the use of \(\alpha \)-divergence for clustering histograms. The Earth Mover’s distance has become popular in the machine learning literature. Henderson et al. (2015) proposed a k-means method using the Earth Mover’s distance and applied it to airline route distances to classify airline company and IP traffic data. Phamtoan and Vovan (2022) proposed a fuzzy clustering method for density functions using the \(L^1\) distance that they defined, and Nguyen-Trang et al. (2023) proposed an algorithm for globally automatic fuzzy clustering for density functions and applied it to image data. Regarding statistical inference for clustering, Gao and Bien (2022) discussed selective inference for hierarchical clustering.

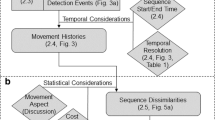

In this paper, we present a unified approach for regression tree analysis and hierarchical clustering of distributions, which may be georeferenced. In Sect. 2, we introduce the length composition data for yellowfin tuna as a motivating example. In Sect. 3, a regression tree method for distributions and a clustering method for distributions using the Modified Jensen–Shannon divergence (MJS) are presented. A standardization method for MJS, a testing procedure for homogeneity for the cluster analysis, and a method to find the minimal homogeneous tree structure and a near-homogeneous tree structure are proposed in Sect. 4. In Sect. 5, these methods are applied to the tuna length composition data, and results from simulation experiments that compared clustering performance using MJS with that of other distance measures are presented. Section 6 provides a discussion. All analyses presented in this paper were conducted using the statistical freeware R (R Core Team 2023).

2 Yellowfin Tuna Fork Length Data

The yellowfin tuna length composition data (fork length; the length from the tip of the fish’s snout to the deepest part of the fork in the tail) were collected from the catch of purse-seine vessels that operated in the eastern Pacific Ocean from 2003 to 2007. The data were collected by the port sampling program (Suter 2010) of the Inter-American Tropical Tuna Commission (IATTC; www.iattc.org), which is the regional tuna fisheries management agency responsible for the conservation of tunas and other marine resources in the eastern Pacific Ocean. A total of 797 samples were available for analysis, where each sample contains the fork lengths (cm) of about 50 yellowfin tuna, and the date and the location of the fishing operations associated with the tuna catch. The samples were collected from individual storage compartments (wells) aboard tuna vessels when they returned to port to unload their catch. Data on the area and time period of the fishing operations that produced the catch in each well were obtained from data recorded by onboard observers or from fishermen’s logbooks.

For the assessment of population status of yellowfin tuna, it is important to study the spatial distribution of the length composition of the catch because this can provide insights into population structure, as well as the selectivity of the fishing method. Thus, we would like to find subregions within the eastern Pacific Ocean within which the length composition of the catch is as homogeneous as possible. The fork length data were aggregated by location over time into \(5^{\circ }\) latitude by \(5^{\circ }\) longitude cells so that 797 samples were combined into 60 spatial cells (Fig. 1).

It is observed that the shapes of histograms differ by cell, but there is some similarity between adjacent cells in some regions. For example, the largest fish tended to be found in samples from fishing operations that took place south of about \(5^{\circ }\)N, and dominated the samples from fishing operations in that area that occurred west of about \(95^{\circ }\)W. The smallest fish tended to be found in samples from fishing operations closest to the coast and north of about \(5^{\circ }\)N. It should be noted that the number of samples in each cell varies spatially.

3 Regression Trees and Hierarchical Clustering for Distributions

This section begins with a brief review of CART, hierarchical clustering and MJS. Then, regression tree and clustering methods for distributions using the MJS are presented.

3.1 CART and Hierarchical Clustering

The CART methodology (Breiman et al. 1984) starts from the set of all observations and repeatedly subdivides that set using binary partitions, which are defined by the values of explanatory variables. The partitions are selected to provide the greatest decrease in a measure of impurity of the values of the response variable, such as the Gini index or the deviance for a categorical response variable and the within-group sum of squares for a numerical response variable.

On the other hand, hierarchical clustering is an agglomerative approach where each observation starts in its own cluster, and the method repeatedly combines the two closest clusters, as measured by some distance among observations, until all observations form one large cluster. One of the simplest and most commonly used measures of distance between two clusters is the squared distance between their centroids. Another commonly used measure is the incremental sum of squares, also known as Ward’s method (Ward 1963). Because hierarchical clustering does not involve a response variable, it is generally regarded as an unsupervised method.

3.2 The Modified Jensen–Shannon Divergence

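The MJS between two distributions with probability/density functions \(f_1\) and \(f_2\) and sample sizes, i.e., numbers of observations that follow these distributions, \(n_1\) and \(n_2\) (\(n_1,n_2>0\)), respectively, is defined as:

$$\begin{aligned} D_{\textrm{JS}}\left( (f_1, n_1), (f_2, n_2)\right) = n_1\, \textrm{KL}(f_1|{\bar{f}}_{1,2})+n_2\, \textrm{KL}(f_2|{\bar{f}}_{1,2}), \end{aligned}$$

where \({\bar{f}}_{1,2}(x)= n_1/(n_1+n_2)\cdot f_1(x)+ n_2/(n_1+n_2)\cdot f_2(x)\) and KL represents the Kullback–Leibler divergence, defined generically by

$$\begin{aligned} \textrm{KL}(g|h) = \int _{\Omega } g(x) \log \frac{g(x)}{h(x)}\, dx, \end{aligned}$$

where \(\Omega \) is the support of the probability/density function \(g\).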
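As a small numerical illustration, the following Python sketch evaluates the MJS, \(n_1\, \textrm{KL}(f_1|{\bar{f}}_{1,2})+n_2\, \textrm{KL}(f_2|{\bar{f}}_{1,2})\), for two probability functions on a common set of bins. It is not the R code used for the analyses in this paper; the function names `kl` and `mjs`, the bin probabilities and the sample sizes are all hypothetical choices made for the example.

```python
import math


def kl(g, h):
    """Kullback-Leibler divergence KL(g|h) for discrete probability vectors.

    Terms with g_j = 0 contribute nothing, so the sum runs over the support of g.
    """
    return sum(gj * math.log(gj / hj) for gj, hj in zip(g, h) if gj > 0)


def mjs(f1, n1, f2, n2):
    """Modified Jensen-Shannon divergence between (f1, n1) and (f2, n2)."""
    w1 = n1 / (n1 + n2)
    w2 = n2 / (n1 + n2)
    # Sample-size-weighted mean distribution of the two inputs.
    mean = [w1 * a + w2 * b for a, b in zip(f1, f2)]
    return n1 * kl(f1, mean) + n2 * kl(f2, mean)


# Hypothetical probability functions on four bins, with hypothetical sample sizes.
f1 = [0.1, 0.2, 0.4, 0.3]
f2 = [0.4, 0.3, 0.2, 0.1]

d_same = mjs(f1, 50, f1, 80)   # identical distributions
d_diff = mjs(f1, 50, f2, 80)   # different distributions
d_swap = mjs(f2, 80, f1, 50)   # same pair, arguments exchanged
```

In this sketch the divergence is zero (up to rounding) for identical distributions, positive otherwise, and unchanged when the two arguments are exchanged together with their sample sizes.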

3.3 Regression Tree for Distributions

Now we consider a regression tree method for distributions, that is, the response variable corresponding to each sample can be represented as a frequency table, a probability function or a density. In the case of a sample of numerical values, a frequency table and a histogram are useful tools to summarize data. However, the interpretation of such summaries can be influenced by the choice of bins used to produce the table or histogram. This implies that the result of a regression tree analysis also might be influenced by the choice of bins if frequency tables are used to represent distributions. One possible way to avoid this or reduce the magnitude of the problem is to use the data for each sample to estimate the sample-specific density. For example, a kernel density estimate with a Gaussian kernel (Silverman 1986) for a sample consisting of a particular characteristic from n individuals, \(\varvec{x}= \{x_1, x_2,\ldots , x_n\}, x_l\in {\mathbb {R}},l=1,\ldots ,n,\) with bandwidth h is given by

$$\begin{aligned} {\hat{f}}(x) = \frac{1}{nh}\sum _{l=1}^{n} \phi \left( \frac{x-x_l}{h}\right) , \qquad \phi (u)=\frac{1}{\sqrt{2\pi }}\exp \left( -\frac{u^2}{2}\right) . \end{aligned}$$
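A Gaussian kernel density estimate of this kind can be sketched in a few lines of Python. This is only an illustration, not the R code used in this paper; the function name `gaussian_kde`, the fork length values, the bandwidth and the evaluation grid are hypothetical.

```python
import math


def gaussian_kde(sample, h):
    """Return the Gaussian kernel density estimate of `sample` with bandwidth h."""
    n = len(sample)
    norm = 1.0 / (n * h * math.sqrt(2.0 * math.pi))

    def f_hat(x):
        # Average of Gaussian bumps centred at the observations.
        return norm * sum(math.exp(-0.5 * ((x - xl) / h) ** 2) for xl in sample)

    return f_hat


# Hypothetical fork lengths (cm) for one sample.
lengths = [52.0, 55.5, 61.0, 63.5, 70.0, 88.0, 91.5, 120.0]
f_hat = gaussian_kde(lengths, h=5.0)

# Evaluate on a regular grid from 0 to 200 cm and check that the estimate
# integrates to approximately one (simple Riemann sum).
step = 0.5
grid = [step * j for j in range(401)]
area = sum(f_hat(x) for x in grid) * step
```

Evaluating every sample on the same grid, as here, gives discretized densities that can be compared directly across samples.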

Suppose now that there are M individual distributions. Let \(\mathcal{G}_0\) denote the index set of all individual distributions; \(\mathcal{G}_0 =\left\{ 1,2,\ldots , M\right\} .\) For each individual distribution i in \(\mathcal{G}_0\), we assume that the response is given as a density or probability function \(f_i(x)\) with its sample size \(n_i\) (i.e., a sample-specific density or probability function based on data from \(n_i\) individuals). For simplicity, we express \(f_i(x)\) as if it is a density function in the following equations and formulae, but it can be a probability function and the integral replaced with summation.

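First, we define an impurity measure for a group of distributions. For a group of distributions denoted by the index set \(\mathcal{G}\), we define its impurity as the weighted sum of Kullback–Leibler divergences from the weighted mean distribution to each distribution:

$$\begin{aligned} \textrm{Imp}_{\textrm{KL}}(\mathcal{G}) = \sum _{i\in \mathcal{G}} n_i\, \textrm{KL}\left( f_i|{\bar{f}}(\mathcal{G})\right) , \qquad {\bar{f}}(\mathcal{G}) = \frac{1}{n_{\mathcal{G}}}\sum _{i\in \mathcal{G}} n_i f_i, \end{aligned}$$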

where \(n_{\mathcal{G}}\) is the sample size for the mean distribution \({\bar{f}}(\mathcal{G})\) defined by \(n_{\mathcal{G}} = \sum _{i \in \mathcal{G}} n_i\). Note here that since \(f_i\) is absolutely continuous with respect to \({\bar{f}}(\mathcal{G})\), \(\textrm{KL}(f_i|{\bar{f}}(\mathcal{G}))\) is always defined, and thus, so is \(\textrm{Imp}_{\textrm{KL}}( \mathcal{G})\). A simple calculation shows that the impurity can be expressed with the information entropy as

$$\begin{aligned} \textrm{Imp}_{\textrm{KL}}(\mathcal{G}) = n_{\mathcal{G}}\, \textrm{H}\left( {\bar{f}}(\mathcal{G})\right) - \sum _{i\in \mathcal{G}} n_i\, \textrm{H}(f_i), \end{aligned}$$

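where \(\displaystyle \textrm{H}(f)=- \int f(x) \log f(x) dx.\) The decrease in impurity by a partition \(\mathcal{G}\rightarrow (\mathcal{G}_L, \mathcal{G}_R)\) can be simplified as

$$\begin{aligned} \nabla \textrm{Imp}_{\textrm{KL}}(\mathcal{G}_L, \mathcal{G}_R) &= \textrm{Imp}_{\textrm{KL}}(\mathcal{G}) - \textrm{Imp}_{\textrm{KL}}(\mathcal{G}_L) - \textrm{Imp}_{\textrm{KL}}(\mathcal{G}_R)\\ &= n_{\mathcal{G}_L}\, \textrm{KL}\left( {\bar{f}}(\mathcal{G}_L)|{\bar{f}}(\mathcal{G})\right) + n_{\mathcal{G}_R}\, \textrm{KL}\left( {\bar{f}}(\mathcal{G}_R)|{\bar{f}}(\mathcal{G})\right) . \end{aligned}$$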

That is, the decrease in impurity \(\nabla \textrm{Imp}_{\textrm{KL}}(\mathcal{G}_L, \mathcal{G}_R)\) is the MJS between the average distributions of the two child nodes, \(\mathcal{G}_L\) and \(\mathcal{G}_R\). We note here that the decrease in impurity can be computed from the average distributions of the child nodes alone. The regression tree algorithm for distributions starts from \(\mathcal{G}_0\) and repeatedly selects the binary partition of the index set of distributions that provides the greatest decrease in impurity given by (3) until a stopping rule is satisfied. It should also be mentioned that a binary partition that provides the largest decrease in the KL impurity also provides the largest decrease in the overall information entropy for the partition, where the overall entropy for a partition \(\Pi =\left( \mathcal{G}_1,\mathcal{G}_2,\ldots \right) \) is defined by

3.4 Clustering of Distributions

Here we consider a hierarchical clustering method that is applicable when the data from a population are in the form of samples, where each sample is represented by a frequency table or an unaggregated collection of individual measurements. That is, we assume that there are M samples and each sample is summarized by a density or a probability function \(f_i(x)\) with sample size \(n_i\). Let us now define a distance between two clusters \(\mathcal{G}_1\) and \(\mathcal{G}_2\) of distributions as the MJS between the mean densities of two clusters \({\bar{f}}_{1}\) and \({\bar{f}}_{2}\) with sample sizes \(n_{\mathcal{G}_1}\) and \(n_{\mathcal{G}_2}\):

$$\begin{aligned} D_{\textrm{JS}}(\mathcal{G}_1, \mathcal{G}_2) = D_{\textrm{JS}}\left( ({\bar{f}}_1, n_{\mathcal{G}_1}), ({\bar{f}}_2,n_{\mathcal{G}_2})\right) = n_{\mathcal{G}_1}\, \textrm{KL}({\bar{f}}_1|{\bar{f}}_{1,2})+n_{\mathcal{G}_2}\, \textrm{KL}({\bar{f}}_2|{\bar{f}}_{1,2}). \end{aligned}$$

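As shown in (2), it holds that

$$\begin{aligned} D_{\textrm{JS}}(\mathcal{G}_1, \mathcal{G}_2) = \textrm{Imp}_{\textrm{KL}}(\mathcal{G}_1\cup \mathcal{G}_2) - \textrm{Imp}_{\textrm{KL}}(\mathcal{G}_1) - \textrm{Imp}_{\textrm{KL}}(\mathcal{G}_2). \end{aligned}$$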

This distance measure represents the distance between the weighted mean distributions, that is, “centroids” of clusters, but the above equation shows that it is also analogous to Ward’s method. Thus, regression trees and clustering for the distributions, as defined above, are based on the same impurity measure (2). The regression tree method repeatedly partitions the data set into smaller groups of distributions to maximize the decrease in the impurity, while clustering repeatedly merges individual distributions, or small groups of distributions, to minimize the increase in the impurity.
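The link between the MJS distance and the impurity can be checked numerically. The Python sketch below, which is an illustration and not the R code used in this paper, computes the KL impurity of a merged group directly and through the entropy form \(n_{\mathcal{G}}\textrm{H}({\bar{f}}(\mathcal{G}))-\sum _{i\in \mathcal{G}} n_i \textrm{H}(f_i)\), and compares the increase in impurity caused by a merge with the MJS between the two cluster means. All function names and the example frequency tables are hypothetical.

```python
import math


def kl(g, h):
    """KL(g|h) for discrete probability vectors."""
    return sum(gj * math.log(gj / hj) for gj, hj in zip(g, h) if gj > 0)


def entropy(f):
    """Information entropy H(f) of a discrete probability vector."""
    return -sum(p * math.log(p) for p in f if p > 0)


def weighted_mean(group):
    """Sample-size-weighted mean distribution and total size of a group."""
    n_tot = sum(n for _, n in group)
    bins = range(len(group[0][0]))
    return [sum(n * f[j] for f, n in group) / n_tot for j in bins], n_tot


def impurity(group):
    """Weighted sum of KL divergences from each member to the group mean."""
    mean, _ = weighted_mean(group)
    return sum(n * kl(f, mean) for f, n in group)


def mjs(f1, n1, f2, n2):
    mean = [(n1 * a + n2 * b) / (n1 + n2) for a, b in zip(f1, f2)]
    return n1 * kl(f1, mean) + n2 * kl(f2, mean)


# Two hypothetical clusters, each a list of (probability function, sample size).
cluster_1 = [([0.5, 0.3, 0.2], 40), ([0.6, 0.3, 0.1], 60)]
cluster_2 = [([0.1, 0.3, 0.6], 50), ([0.2, 0.2, 0.6], 30)]
merged = cluster_1 + cluster_2

increase = impurity(merged) - impurity(cluster_1) - impurity(cluster_2)
mean_1, n_1 = weighted_mean(cluster_1)
mean_2, n_2 = weighted_mean(cluster_2)
d_js = mjs(mean_1, n_1, mean_2, n_2)

mean_m, n_m = weighted_mean(merged)
imp_entropy = n_m * entropy(mean_m) - sum(n * entropy(f) for f, n in merged)
```

Both `increase` and `d_js`, and both `impurity(merged)` and `imp_entropy`, agree up to rounding error in this example, which is the Ward-like property described above.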

When ancillary data, such as the spatial locations associated with each sample, are available, constraints based on those data can be imposed on the clusters to be merged.
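A minimal Python sketch of agglomerative clustering with the MJS, with an optional adjacency constraint, is given below. It is an illustration under simplifying assumptions rather than the implementation used for the analyses: distributions are probability functions on common bins, two clusters are treated as adjacent when any pair of their members is adjacent, and the function `cluster`, the `adjacency` set of index pairs and the four example cells are hypothetical.

```python
import math
from itertools import combinations


def kl(g, h):
    return sum(gj * math.log(gj / hj) for gj, hj in zip(g, h) if gj > 0)


def mjs(f1, n1, f2, n2):
    mean = [(n1 * a + n2 * b) / (n1 + n2) for a, b in zip(f1, f2)]
    return n1 * kl(f1, mean) + n2 * kl(f2, mean)


def cluster(dists, sizes, adjacency=None):
    """Return the merge history as (members_a, members_b, distance) tuples.

    If `adjacency` (a set of index pairs) is given, only clusters containing
    at least one adjacent pair of members may be merged.
    """
    clusters = {i: (frozenset([i]), list(dists[i]), sizes[i]) for i in range(len(dists))}
    merges, next_id = [], len(dists)
    while len(clusters) > 1:
        best = None
        for a, b in combinations(sorted(clusters), 2):
            (ma, fa, na), (mb, fb, nb) = clusters[a], clusters[b]
            if adjacency is not None and not any(
                (i, j) in adjacency or (j, i) in adjacency for i in ma for j in mb
            ):
                continue
            d = mjs(fa, na, fb, nb)
            if best is None or d < best[0]:
                best = (d, a, b)
        if best is None:  # no adjacent pair of clusters remains
            break
        d, a, b = best
        (ma, fa, na), (mb, fb, nb) = clusters.pop(a), clusters.pop(b)
        mean = [(na * x + nb * y) / (na + nb) for x, y in zip(fa, fb)]
        clusters[next_id] = (ma | mb, mean, na + nb)
        merges.append((sorted(ma), sorted(mb), d))
        next_id += 1
    return merges


# Four hypothetical cells on a line (0-1-2-3); cells 0 and 2 have identical shapes.
dists = [[0.7, 0.2, 0.1], [0.1, 0.2, 0.7], [0.7, 0.2, 0.1], [0.1, 0.3, 0.6]]
sizes = [50, 50, 50, 50]
unconstrained = cluster(dists, sizes)
constrained = cluster(dists, sizes, adjacency={(0, 1), (1, 2), (2, 3)})
```

Without the constraint the first merge joins the two identical but non-adjacent cells 0 and 2; with the constraint that merge is not allowed, and the closest adjacent pair is merged first.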

4 Standardized Distance for a Merge and Testing Procedure for Homogeneity

In practical situations, the number of clusters is often determined visually from the dendrogram or from the magnitude of the distances between merging clusters. However, distance measures between clusters are not absolute measures, nor do they take into account that two merging clusters were each obtained from a series of previous merges. Moreover, we are often interested in whether each cluster is homogeneous or what the minimal tree structure would be such that each of its terminal clusters is homogeneous.

In this section, we propose a method to provide a standardized distance between merging clusters, each of which was obtained after a series of previous merges, as well as a randomization test for homogeneity of a cluster and a hierarchical testing procedure to find the minimal homogeneous tree structure. We note that the standardized distance can also be used to define “near homogeneity” and to find a minimal near-homogeneous tree structure. As in Sect. 3.3, in this section, we assume that \({\hat{f}}_i\) represents the distribution estimate for the \(i\)th sample, such as a frequency table or a kernel density estimate, based on a sample of size \(n_i\).

4.1 Standardized Distance Between Merging Clusters

Suppose that \(\mathcal{G}\) is an index set for a group of distribution estimates \({\hat{f}}_i\) with sample size \(n_i\), \(i \in \mathcal{G}\) and that \(\mathcal{G}\) is obtained by combining \(\mathcal{G}_L\) and \(\mathcal{G}_R\) at some step of hierarchical clustering, thus, \(\mathcal{G}= \mathcal{G}_L \cup \mathcal{G}_R\). Let d be the distance between \(\mathcal{G}_L\) and \(\mathcal{G}_R\) based on some measure. We are interested in standardizing distance d to quantify how different the two merging clusters are compared to those that would be obtained if the combined cluster were homogeneous, that is, if the samples used to estimate individual distributions \({\hat{f}}_i\) were all from the same distribution.

To standardize the distance d for a merge, we employ a randomization testing procedure. Let \(\overline{f}_{\mathcal{G}}\) be the mean distribution of \(\mathcal{G}\), \(\displaystyle \overline{f}_{\mathcal{G}}= {\sum _{i\in \mathcal{G}} n_i {\hat{f}}_i}\big /\sum _{i\in \mathcal{G}}n_i\). We generate K randomization samples from \(\overline{f}_{\mathcal{G}}\) and, with each randomization sample, perform clustering and compute the distance between the last merged clusters. Using these values of the distance, we can estimate the mean and variance of the distance between last merged clusters when the observations are from a homogeneous distribution. The details of the standardization algorithm are as follows.

Repeat the following steps for \(k=1,\ldots , K\):

1. Generate a sample \(\varvec{x}_i^{(k)}\) of size \(n_i\) from \(\overline{f}_{\mathcal{G}}\) and compute density estimate \({\hat{g}}_i^{(k)}\) from \(\varvec{x}_i^{(k)}\) for all \(i \in \mathcal{G}\).

2. Perform clustering with \(({\hat{g}}_i^{(k)}, n_i), i\in \mathcal{G}\) and compute the distance \(t^{(k)}\) between the last merged clusters.

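Then, compute the sample average \({\hat{\mu }}\) and sample variance v of \(t^{(1)},t^{(2)},\ldots , t^{(K)}\):

$$\begin{aligned} {\hat{\mu }} = \frac{1}{K}\sum _{k=1}^{K} t^{(k)}, \qquad v = \frac{1}{K-1}\sum _{k=1}^{K} \left( t^{(k)}-{\hat{\mu }}\right) ^2. \end{aligned}$$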

These are unbiased estimates for the mean and variance of the distributional distance between the last merging clusters when the merged cluster is homogeneous. The standardized distance \(d^*\) is obtained by

$$\begin{aligned} d^* = \frac{d-{\hat{\mu }}}{\sqrt{v}}. \end{aligned}$$

Under the assumption of homogeneity of the merged cluster, \(d^*\) is asymptotically standard normal. This statistic can be used for testing homogeneity of the merged cluster and for finding the minimal homogeneous or near-homogeneous tree given a cutoff value used to define near homogeneity.
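The standardization can be sketched in Python as follows. This is a simplified illustration, not the R code used in this paper: distributions are frequency tables on common bins instead of kernel density estimates, clustering is unconstrained, and the helper names (`last_merge_distance`, `standardize`), the number of randomization samples and the example data are hypothetical.

```python
import math
import random
from itertools import combinations


def kl(g, h):
    return sum(gj * math.log(gj / hj) for gj, hj in zip(g, h) if gj > 0)


def mjs(f1, n1, f2, n2):
    mean = [(n1 * a + n2 * b) / (n1 + n2) for a, b in zip(f1, f2)]
    return n1 * kl(f1, mean) + n2 * kl(f2, mean)


def last_merge_distance(dists, sizes):
    """Cluster agglomeratively with the MJS; return the distance of the final merge."""
    clusters = [(list(f), n) for f, n in zip(dists, sizes)]
    d = 0.0
    while len(clusters) > 1:
        d, a, b = min(
            (mjs(*clusters[a], *clusters[b]), a, b)
            for a, b in combinations(range(len(clusters)), 2)
        )
        (fa, na), (fb, nb) = clusters[a], clusters[b]
        merged = ([(na * x + nb * y) / (na + nb) for x, y in zip(fa, fb)], na + nb)
        clusters = [c for i, c in enumerate(clusters) if i not in (a, b)] + [merged]
    return d


def standardize(d, dists, sizes, K=200, seed=0):
    """Return (d_star, mu_hat, v) from K randomization samples of the mean distribution."""
    rng = random.Random(seed)
    bins = range(len(dists[0]))
    n_tot = sum(sizes)
    mean = [sum(n * f[j] for f, n in zip(dists, sizes)) / n_tot for j in bins]
    t = []
    for _ in range(K):
        sim = []
        for n in sizes:
            draws = rng.choices(bins, weights=mean, k=n)   # sample of size n_i
            sim.append([draws.count(j) / n for j in bins])  # re-estimated distribution
        t.append(last_merge_distance(sim, sizes))
    mu_hat = sum(t) / K
    v = sum((x - mu_hat) ** 2 for x in t) / (K - 1)
    return (d - mu_hat) / math.sqrt(v), mu_hat, v


# Hypothetical data: two pairs of clearly different frequency tables.
dists = [[0.7, 0.2, 0.1], [0.65, 0.25, 0.1], [0.1, 0.2, 0.7], [0.1, 0.3, 0.6]]
sizes = [50, 50, 50, 50]
d = last_merge_distance(dists, sizes)
d_star, mu_hat, v = standardize(d, dists, sizes)
```

For these deliberately heterogeneous data the observed distance of the last merge lies far above the distances obtained from the randomization samples, so the standardized distance is large.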

4.2 A Randomization Test for Homogeneity of a Cluster

Now, we propose a two-step testing procedure for the hypothesis \(H_0:\) cluster \(\mathcal{G}\) is homogeneous, or more precisely, all samples in \(\mathcal{G}\) are from the same population. A conceptual overview of the procedure is as follows. Generate a relatively small number of randomization samples from \(\mathcal{G}\). If the p-value for distance d between \(\mathcal{G}_L\) and \(\mathcal{G}_R\), computed using randomization samples, is sufficiently large or small, the procedure stops and this p-value is taken as the outcome of the test. Otherwise, generate a second, much larger number of randomization samples and estimate the p-value for distance d between \(\mathcal{G}_L\) and \(\mathcal{G}_R\). This p-value is considered the final outcome of the test. A detailed description of the procedure is as follows.

Let the null hypothesis be \(H_0: \mathcal{G}\) is homogeneous, and let \(\alpha \) denote the significance level. We start by setting four values: the relatively small number of randomization samples in the first step, \(K_1\) (e.g., 100); a count \(N_{d1}\) such that \(N_{d1}/K_1 \gg \alpha \); a small value \(\epsilon \ll \alpha \); and the relatively large number of randomization samples in the second step, \(K_2\) (e.g., 1000). The following steps are then implemented:

1. Generate \(K_1\) randomization samples, perform clustering, and compute distances \(t^{(k)} (k=1,2,\ldots , K_1)\) as described in Sect. 4.1. Then,

   (a) If the number of \(t^{(k)} (k=1,2,\ldots , K_1)\) that are larger than d is greater than \(N_{d1}\), then we conclude that the p-value of d is greater than \(N_{d1}/K_1\), and do not reject \(H_0\).

   (b) If not, compute Chebyshev’s upper bound \(v/(d-{\hat{\mu }})^2\left( =(d^*)^{-2}\right) \) of the probability \(\textrm{P}(X \ge d)\), where \({\hat{\mu }}\) and v are the sample average and sample variance of \(t^{(k)} (k=1,2,\ldots , K_1)\), respectively, and X is a random variable that follows the same distribution as \(t^{(k)} (k=1,2,\ldots , K_1)\). If the upper bound is less than \(\epsilon \), then we conclude that the p-value for d is less than \(v/(d-{\hat{\mu }})^2\) and reject \(H_0\).

2. If neither the condition in 1-(a) nor the condition in 1-(b) is satisfied, then generate another \(K_2\) randomization samples, perform clustering, and compute distances \(t^{\prime (k)} (k=1,2,\ldots , K_2)\). Let \(n_{d2}\) be the number of \(t^{\prime (k)} (k=1,2,\ldots , K_2)\) that are larger than d. We conclude that the p-value for d is \(\min \left\{ p\, |\, \textrm{P}(X \le n_{d2}) \le 0.05 \right. \) where \(\left. X \sim \textrm{Bin}(1000, p)\right\} \).
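The decision logic of the two-step procedure can be sketched in Python as below. This is an illustration only. The callable `simulate(k)`, which should return k distances between the last merged clusters of randomization samples, is replaced here by a hypothetical stand-in that draws exponential variates; the default settings and the final comparison of the step-2 value with \(\alpha \) are assumptions made for the example.

```python
import math
import random


def binom_cdf(x, n, p):
    """P(X <= x) for X ~ Bin(n, p), computed in log space for stability."""
    return sum(
        math.exp(math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                 + k * math.log(p) + (n - k) * math.log1p(-p))
        for k in range(x + 1)
    )


def upper_p(n_exceed, n_sim, level=0.05):
    """Smallest p with P(X <= n_exceed) <= level for X ~ Bin(n_sim, p), by bisection."""
    if n_exceed >= n_sim:
        return 1.0
    lo, hi = 0.0, 1.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if binom_cdf(n_exceed, n_sim, mid) <= level:
            hi = mid
        else:
            lo = mid
    return hi


def two_step_test(d, simulate, alpha=0.05, K1=100, N_d1=20, eps=1e-3, K2=1000):
    t = simulate(K1)
    if sum(x > d for x in t) > N_d1:                      # step 1-(a)
        return {"step": "1(a)", "reject": False, "p_lower_bound": N_d1 / K1}
    mu = sum(t) / K1
    v = sum((x - mu) ** 2 for x in t) / (K1 - 1)
    if d > mu and v / (d - mu) ** 2 < eps:                # step 1-(b), Chebyshev bound
        return {"step": "1(b)", "reject": True, "p_upper_bound": v / (d - mu) ** 2}
    t2 = simulate(K2)                                     # step 2
    p = upper_p(sum(x > d for x in t2), K2)
    return {"step": "2", "reject": p <= alpha, "p": p}   # comparison with alpha is an assumption


rng = random.Random(1)


def simulate(k):
    # Hypothetical stand-in for "generate k randomization samples and re-cluster".
    return [rng.expovariate(1.0) for _ in range(k)]


res_small = two_step_test(0.5, simulate)    # d well inside the null distribution
res_huge = two_step_test(200.0, simulate)   # d far beyond the null distribution
res_mid = two_step_test(4.0, simulate)      # inconclusive first step
```

The design saves computation: clearly homogeneous or clearly heterogeneous merges are settled with \(K_1\) randomization samples, and only the intermediate cases require the larger second set.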

One might wonder whether the test remains valid when the cluster \(\mathcal{G}\) is an intermediate cluster from some step of hierarchical clustering rather than the whole collection of distributions of interest. This does not cause a problem. Because the two clusters were merged at some step of hierarchical clustering, their distance d is the minimum among the distances between all pairs of uncombined clusters at that step, so d satisfies an extra condition in addition to the one that \(t^{(k)}\) of a randomization sample satisfies. Because of this additional condition that d is “the minimum” distance among the candidates, it holds that \(\textrm{P}(D> d_1) \le \textrm{P}(T_k > d_1)\) for any \(d_1>0\), where D and \(T_k\) denote d and \(t^{(k)}\) under \(H_0\) as random variables, respectively. Thus, \(\textrm{P}(T_k > d) \le \alpha \) implies \(\textrm{P}(D > d) \le \alpha \), and the test is valid although it might be conservative.

4.3 A Hierarchical Testing Procedure for Clustering

We now consider a testing procedure to find the minimal homogeneous tree structure of distributions from the results of hierarchical clustering such that observations in each terminal cluster are homogeneous.

The tests of homogeneity proceed in the reverse order to the merging process of clustering. The null hypotheses “\(H_{0j}:\) cluster \(\mathcal{G}_j\) is homogeneous” have the same hierarchical structure as the dendrogram because \(\mathcal{G}_{j1} \supset \mathcal{G}_{j2}\) implies \(H_{0j1} \Rightarrow H_{0j2}\). Thus, the hierarchical testing procedure (Marcus et al. 1976) to control familywise error rate (FWER) can be applied to this problem. Let \(\alpha \) denote the significance level. The hierarchical testing procedure for finding the minimal homogeneous tree structure is summarized as follows:

- The hypothesis test starts from the cluster of all samples, the top of the dendrogram.

- If the significance of the test for the hypothesis of homogeneity is less than or equal to \(\alpha \), then the hypothesis is rejected and the test proceeds to lower clusters. If the significance is greater than \(\alpha \), then the hypothesis of homogeneity for the cluster is not rejected, and the tests for the lower clusters are not performed.

- The procedure ends if there is no cluster to test.

With this procedure, the FWER is controlled to be less than \(\alpha \) when the error rate of each test is less than \(\alpha \).
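The top-down traversal can be sketched in Python as follows. The dendrogram is represented as a nested dictionary in which each internal node carries the p-value of its homogeneity test; this data structure, the function name and the p-values are hypothetical and serve only to illustrate the order in which the tests are performed.

```python
def minimal_homogeneous_clusters(node, alpha):
    """Return the terminal clusters of the minimal homogeneous tree.

    A node is tested only if all of its ancestors were rejected. Single
    samples (nodes without children) are terminal by definition.
    """
    children = node.get("children", [])
    if not children or node["p"] > alpha:
        # Homogeneity not rejected: stop here; lower clusters are not tested.
        return [node["members"]]
    terminal = []
    for child in children:
        terminal.extend(minimal_homogeneous_clusters(child, alpha))
    return terminal


# Hypothetical dendrogram for five samples, with hypothetical p-values.
tree = {
    "members": [0, 1, 2, 3, 4], "p": 0.001,
    "children": [
        {"members": [0, 1], "p": 0.40,
         "children": [{"members": [0]}, {"members": [1]}]},
        {"members": [2, 3, 4], "p": 0.004,
         "children": [
             {"members": [2]},
             {"members": [3, 4], "p": 0.20,
              "children": [{"members": [3]}, {"members": [4]}]},
         ]},
    ],
}

clusters = minimal_homogeneous_clusters(tree, alpha=0.01)
# clusters == [[0, 1], [2], [3, 4]]
```

In the example, the root and the node {2, 3, 4} are rejected, whereas {0, 1} and {3, 4} are not, so the latter two become terminal clusters together with the single sample 2.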

4.4 Near Homogeneity and a Minimal Near-Homogeneous Tree Structure

In some applications, homogeneity might be too strong a condition to find useful partitions of a population. In such cases, partitions for a desired number of clusters can be obtained by choosing a cutoff value for the standardized MJS. We can define a cluster obtained by a merge as “near homogeneous” if its standardized MJS is less than the cutoff value and define the minimal tree structure with only near-homogeneous nodes as “a minimal near-homogeneous tree structure” for a given cutoff value. Note that the number of terminal nodes (i.e., the number of clusters) in a minimal near-homogeneous tree structure will depend on the cutoff value used for the standardized MJS.
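The dependence of the number of clusters on the cutoff value can be illustrated with a short Python sketch. It assumes, as a simplification, that the tree is searched from the top and that a branch is cut at the first node whose standardized MJS is below the cutoff; the nested-dictionary representation and the standardized distances are hypothetical.

```python
def near_homogeneous_clusters(node, cutoff):
    """Terminal clusters when nodes with standardized MJS below `cutoff` are not split."""
    children = node.get("children", [])
    if not children or node["d_star"] < cutoff:
        return [node["members"]]
    return [c for child in children for c in near_homogeneous_clusters(child, cutoff)]


# Hypothetical dendrogram with hypothetical standardized MJS values.
tree = {
    "members": [0, 1, 2, 3, 4], "d_star": 40.0,
    "children": [
        {"members": [0, 1], "d_star": 1.2,
         "children": [{"members": [0]}, {"members": [1]}]},
        {"members": [2, 3, 4], "d_star": 6.0,
         "children": [
             {"members": [2]},
             {"members": [3, 4], "d_star": 0.8,
              "children": [{"members": [3]}, {"members": [4]}]},
         ]},
    ],
}

# Number of terminal clusters for several cutoff values.
n_clusters = {c: len(near_homogeneous_clusters(tree, c)) for c in (100, 10, 3, 1)}
```

Lowering the cutoff splits more nodes, so the number of terminal clusters increases in steps as the cutoff passes the standardized distances of the nodes.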

5 Analysis of the Tuna Fork Length Data and Simulation Experiment

We applied the methods presented in the previous sections to the yellowfin tuna fork length data and performed a simulation experiment to compare clustering performance of the method with MJS to that of other distance measures.

5.1 Regression Tree

We applied the regression tree method to the yellowfin tuna fork length data. These data were previously analyzed by Lennert-Cody et al. (2010) where the response variable for each sample was a frequency table with 11 fork length bins, and the predictors considered were the \(5^{\circ }\) latitude, \(5^{\circ }\) longitude and season associated with the catch. Here, to facilitate the comparison of results among methods, we chose to work with density estimates instead of frequency tables; however, we note that our results are very similar to those of Lennert-Cody et al. (2010). For each of the 60 spatial cells (Sect. 2), we computed the Gaussian kernel density estimate \({\hat{f}}_i, i=1,2,\ldots , 60\) with the same bandwidth for all cells. We employed a stopping rule which considers a node as a terminal node when its impurity is less than \(\epsilon \) times the root node’s impurity or its number of samples is less than some value m. For this analysis, we set \(\epsilon =0.1\) and \(m=20\). Table 1 shows the partition rules and information about each node of the resulting regression tree, and Fig. 2 shows the tree structure and spatial partitions. The first partition is at \(5^{\circ }\) N latitude. It reduces the impurity by \(91.8/206.74\times 100=44.4\%\). We observe that to the north of \(5^{\circ }\) N, particularly in the area corresponding to node N15, the peaks of the densities are at smaller lengths than those estimated in other areas. To the west of \(115^{\circ }\) W and north of \(5^{\circ }\) N (N6), the distributions are flatter than those in the eastern parts (N14 and N15), even though the modes in the three areas are at somewhat similar fork length values.

Fig. 3 Clustering results without an adjacency constraint: The first row: dendrograms of \(5^{\circ }\) by \(5^{\circ }\) cells. The second and the fourth rows: color-coded \(5^{\circ }\) by \(5^{\circ }\) cells in 4 clusters and 5 clusters, respectively. The third and fifth rows: density estimates for 4 clusters and 5 clusters, respectively

5.2 Hierarchical Clustering

We applied hierarchical clustering with three different distance measures for distributions to the aggregated tuna fork length data (Sect. 2). The three distance measures used to quantify distance between two clusters \(\mathcal{G}_1\) and \(\mathcal{G}_2\) of distributions, with sample sizes \(n_{\mathcal{G}_1}\) and \(n_{\mathcal{G}_2}\), respectively, are as follows:

1. MJS

$$\begin{aligned} \displaystyle D_{\textrm{JS}}(\mathcal{G}_1, \mathcal{G}_2) = D_{\textrm{JS}}\left( (\overline{f}_1, n_{\mathcal{G}_1}), (\overline{f}_2,n_{\mathcal{G}_2})\right) = n_{\mathcal{G}_1}\, \textrm{KL}(\overline{f}_1|\overline{f}_{1,2})+n_{\mathcal{G}_2}\, \textrm{KL}(\overline{f}_2|\overline{f}_{1,2}) \end{aligned}$$

2. The Earth Mover’s distance (EMD, Henderson et al. 2015)

$$\begin{aligned} \displaystyle D_{\textrm{EM}}(\mathcal{G}_1, \mathcal{G}_2)= \int _{0}^{1}\left| \overline{F}_1^{-1}(y)-\overline{F}_2^{-1}(y)\right| dy =\int _{-\infty }^{+\infty }\left| \overline{F}_1(x)-\overline{F}_2(x)\right| dx \end{aligned}$$

3. Cramér–von Mises type distance (CVM, Baringhaus and Henze 2017)

$$\begin{aligned} \displaystyle D_{\textrm{CVM}}= & {} \displaystyle \frac{ n_{\mathcal{G}_1}\cdot n_{\mathcal{G}_2}}{n_{\mathcal{G}_1\cup \mathcal{G}_2}} \int _{-\infty }^{+\infty }\left( \overline{F}_1(x)-\overline{F}_2(x)\right) ^2 dF^b(x)\\= & {} n_{\mathcal{G}_1}\int _{-\infty }^{+\infty }\left( \overline{F}_1(x)-\overline{F}_{1,2}(x)\right) ^2 dF^b(x) +n_{\mathcal{G}_2}\int _{-\infty }^{+\infty }\left( \overline{F}_2(x)-\overline{F}_{1,2}(x)\right) ^2 dF^b(x) \end{aligned}$$

where \(\overline{f}_j\) and \(\overline{f}_{1,2}\) are weighted average density functions of clusters \(\mathcal{G}_j (j=1,2)\) and \(\mathcal{G}_1\cup \mathcal{G}_2\), respectively, whereas \(\overline{F}_j\) and \(\overline{F}_{1,2}\) are weighted average distribution functions of clusters \(\mathcal{G}_j (j=1,2)\) and \(\mathcal{G}_1\cup \mathcal{G}_2\), respectively.
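For distributions discretized on a common regular grid, the EMD and the CVM type distance can be sketched in Python as below. This is an illustration, not the R code used in this paper. In particular, the weighting distribution \(F^b\) is taken here to be the pooled distribution of the two clusters, which is an assumption made only for this example, and the function names and probability vectors are hypothetical.

```python
from itertools import accumulate


def cdf(f):
    """Cumulative distribution function of a probability vector on a regular grid."""
    return list(accumulate(f))


def emd(f1, f2, dx=1.0):
    """Earth Mover's distance as the integral of |F1 - F2| (grid spacing dx)."""
    return dx * sum(abs(a - b) for a, b in zip(cdf(f1), cdf(f2)))


def cvm(f1, n1, f2, n2, weight):
    """CVM type distance: n1*n2/(n1+n2) * sum of weight * (F1 - F2)^2."""
    return n1 * n2 / (n1 + n2) * sum(
        w * (a - b) ** 2 for a, b, w in zip(cdf(f1), cdf(f2), weight)
    )


def cvm_ward_form(f1, n1, f2, n2, weight):
    """Same distance written as weighted squared deviations from the pooled CDF."""
    pooled = [(n1 * a + n2 * b) / (n1 + n2) for a, b in zip(f1, f2)]
    F1, F2, F12 = cdf(f1), cdf(f2), cdf(pooled)
    return (n1 * sum(w * (a - c) ** 2 for a, c, w in zip(F1, F12, weight))
            + n2 * sum(w * (b - c) ** 2 for b, c, w in zip(F2, F12, weight)))


# Moving all mass from the first to the third bin costs two grid steps.
emd_point_masses = emd([1.0, 0.0, 0.0], [0.0, 0.0, 1.0])

# Hypothetical cluster means and sample sizes.
f1, n1 = [0.1, 0.2, 0.4, 0.3], 120
f2, n2 = [0.4, 0.3, 0.2, 0.1], 40
pooled = [(n1 * a + n2 * b) / (n1 + n2) for a, b in zip(f1, f2)]  # assumed F^b
d_cvm = cvm(f1, n1, f2, n2, pooled)
d_cvm_ward = cvm_ward_form(f1, n1, f2, n2, pooled)
```

The two expressions for the CVM type distance agree for any choice of weighting distribution, and, unlike the MJS and CVM, the `emd` function does not take the sample sizes as arguments at all.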

Because we have spatial information on each cell, we also applied the hierarchical clustering with each of the three distance measures above under the constraint that only clusters that are adjacent to one another can be combined.

Without the adjacency constraint, the clusters obtained using MJS and CVM are more similar to each other than to the clusters obtained using EMD (Fig. 3). Based on visual inspection of the dendrograms, one might consider four as a possible choice for the number of clusters. A notable difference among the clusters from these distance measures is that EMD produced a cluster with a small sample size (Table 2). Cluster 2 contains only 18 well samples, just \(2.2\%\) of the 797 wells sampled. This is probably because EMD is defined only in terms of distribution functions and does not take sample sizes into account.

The results under the adjacency constraint are markedly different among the three methods (Fig. 4), in contrast with the results without the adjacency constraint (Fig. 3). Cell No. 42 forms a single-cell cluster with both EMD and CVM, for both 4 and 5 clusters, while with MJS, there is no cluster based on a single cell. With EMD for the case of 4 clusters, there are two single-cell clusters, one cluster with two cells and one big cluster containing all the other cells.

Comparing the results of the cluster analysis (Figs. 3 and 4) with the results of the regression tree analysis (Fig. 2), all based on MJS, it is seen that the cluster analysis method allows more flexibility in the partition structure than the regression tree method with simple latitude and longitude covariates. In particular, in the region north of \(5^{\circ }\) N, the clustering structure appears to be related to distance from the coast as well as latitude, whereas for the regression tree structure the partitions are purely on latitude. On the other hand, the regression tree provides rectangular partitions that might be more convenient, in some situations, from a resource management perspective. Of course, with other covariates such as the distance from the coastline, the regression tree approach might yield a structure more similar to that of the cluster analysis. South of \(5^{\circ }\) N, both methods indicate a homogeneous region to the west of \(90^{\circ }\) W–\(95^{\circ }\) W, whereas to the east of \(95^{\circ }\) W for the regression tree structure and \(90^{\circ }\) W for the cluster analysis, there is more heterogeneity. The hierarchical clustering under the adjacency constraint appears to be a flexible methodology that produces ecologically plausible results when considering the oceanography and biogeochemical provinces of the eastern Pacific Ocean (Kessler and Gourdeau 2006; Kessler 2006; Longhurst 2007; Reygondeau et al. 2013).

Fig. 4 Clustering results with an adjacency constraint: The first row: dendrograms of \(5^{\circ }\) by \(5^{\circ }\) cells. The second and the fourth rows: color-coded \(5^{\circ }\) by \(5^{\circ }\) cells in 4 clusters and 5 clusters, respectively. The third and fifth rows: density estimates for 4 clusters and 5 clusters, respectively

Fig. 5 Boxplot of MJS and the minimal homogeneous structure: The top left: the dendrogram for the tuna fork length data, the top right: the boxplots of randomization samples and the MJS of the observed data in red squares, the bottom: clusters in the terminal nodes of the minimal homogeneous tree. Note that the scales for the boxplots differ among the frames

5.3 Minimal Homogeneous/Near-Homogeneous Tree Structure

For the yellowfin tuna fork length data, we computed the standardized MJS and applied the hierarchical randomization test of homogeneity to the clustering results obtained without an adjacency constraint.

The minimal homogeneous tree structure at the significance level 0.01 had 6 multi-component clusters and 18 single-component clusters (Fig. 5). The first four nodes in the testing procedure (IDs 59–56) had very large standardized distances (Table 3(a)). The boxplots in Fig. 5 show that, for these four merges, the red squares of the observed data lie far from the MJS distances of the randomization samples. The next three nodes (IDs 55–53) had considerably large standardized MJS values, and the p-values of the first seven nodes were small enough to conclude at the first step that these nodes are not homogeneous. The following nodes, with the exception of ID 47, had mid-range values of the standardized MJS, so the first step was not conclusive, and the second step was performed.
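As an illustration of how a standardized distance and a randomization p-value for a single merge might be computed, the following is a minimal sketch under stated assumptions and not the procedure used in this paper. The function names are hypothetical; a sample-size-weighted Jensen–Shannon divergence on histogram counts is used as a stand-in for MJS; the randomization is assumed to reassign cells at random to the two child clusters while keeping the cluster sizes fixed; and the standardized distance is assumed to be the observed distance centered and scaled by the mean and standard deviation of the randomization distances.

```python
import numpy as np

def weighted_js(counts_a, counts_b):
    """Sample-size-weighted Jensen-Shannon divergence between two count histograms.

    Stand-in for MJS (assumption): n_a * KL(p_a || p_pool) + n_b * KL(p_b || p_pool).
    """
    pooled = counts_a + counts_b
    total = 0.0
    for c in (counts_a, counts_b):
        mask = c > 0  # empty bins contribute zero
        ratio = (c[mask] / c.sum()) / (pooled[mask] / pooled.sum())
        total += np.sum(c[mask] * np.log(ratio))
    return float(total)

def standardized_distance(hists_a, hists_b, n_perm=999, seed=0):
    """hists_a, hists_b: (cells x bins) count arrays for the two child clusters."""
    rng = np.random.default_rng(seed)
    observed = weighted_js(hists_a.sum(axis=0), hists_b.sum(axis=0))
    cells = np.vstack([hists_a, hists_b])
    n_a = hists_a.shape[0]
    null = np.empty(n_perm)
    for i in range(n_perm):
        # Assumed randomization scheme: shuffle cells between the two clusters.
        perm = rng.permutation(cells.shape[0])
        null[i] = weighted_js(cells[perm[:n_a]].sum(axis=0),
                              cells[perm[n_a:]].sum(axis=0))
    d_star = (observed - null.mean()) / null.std(ddof=1)
    p_value = (1 + np.sum(null >= observed)) / (n_perm + 1)
    return d_star, p_value

# Toy example: two clusters of 10 cells each, 200 observations per cell.
rng = np.random.default_rng(1)

def cell_hist(a, b):
    return np.histogram(rng.beta(a, b, size=200), bins=20, range=(0.0, 1.0))[0]

cluster_a = np.array([cell_hist(15, 8) for _ in range(10)])
cluster_b = np.array([cell_hist(6, 10) for _ in range(10)])
d_star, p_value = standardized_distance(cluster_a, cluster_b)
print(round(d_star, 1), p_value)
```

In this toy setting the two clusters come from clearly different distributions, so the observed distance should fall far in the upper tail of the randomization distribution, analogous to the red squares lying far from the boxplots in Fig. 5.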

Table 3(b) shows the cutoff value intervals of the standardized MJS and the terminal nodes of the corresponding minimal near-homogeneous trees. Interestingly, the minimal near-homogeneous tree with five clusters is the same as that obtained from the dendrogram, whereas the minimal near-homogeneous tree with three clusters differs from that obtained from the dendrogram, and a minimal near-homogeneous tree with four clusters does not exist.

5.4 Simulation Experiment

To evaluate the performance of the proposed method, we conducted simulation experiments using a collection of four probability density functions with characteristics similar to those of the tuna fork length data. We generated several types of datasets. Each dataset is based on a collection of 117 cells that make up the four clusters (see below). The sample for each cell consists of 200 observations. The distributions and the numbers of cells of the four clusters are as follows.

Cluster 1 Beta\((\alpha = 15+\epsilon _1,\ \beta =8+\epsilon _2)\), 34 cells.

Cluster 2 Beta\((\alpha = 6+\epsilon _3,\ \beta =10+\epsilon _4)\), 35 cells.

Cluster 3 Beta\((\alpha = 5+\epsilon _5,\ \beta =5+\epsilon _6)\), 30 cells.

Cluster 4 \((0.6+\epsilon _7)\times \)Beta\((15,\ 8)\ + \ (0.4-\epsilon _7)\times \)Beta(10, 4), 17 cells.

No noise was added in Experiment 1 (Fig. 6 shows the density functions for the four clusters). The smallest MJS was 211.7, between clusters 3 and 4; the others were 6080.6 between clusters 1 and 2, 3275.6 between clusters 1 and 3, 1059.3 between clusters 1 and 4, 1339.2 between clusters 2 and 3, and 1693.6 between clusters 2 and 4.

In Experiments 2 and 3, the noise terms added to the parameters are \(\epsilon _1,\ldots , \epsilon _6 \sim N(0, \theta _1^2)\) and \(\epsilon _7 \sim N(0, \theta _2^2)\) with \(\theta _1 = 0.5, \theta _2 = 0.05\) for Experiment 2 and \(\theta _1 = 1, \theta _2 = 0.1\) for Experiment 3. In Experiments 4, 5 and 6, the noise terms added to the parameters are \(\epsilon _1,\ldots , \epsilon _6 \sim \textrm{Uniform}(-\theta _1, \theta _1)\) and \(\epsilon _7 \sim \textrm{Uniform}(-\theta _2, \theta _2)\) with \(\theta _1 = 1, \theta _2 = 0.1\) for Experiment 4, \(\theta _1 = 1.5, \theta _2 = 0.15\) for Experiment 5 and \(\theta _1 =2, \theta _2 = 0.2\) for Experiment 6. All \(\epsilon \) were generated independently.
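The data-generating scheme described above can be sketched as follows. This is a hedged illustration, not the authors' R code: the function names are hypothetical, the noise terms are assumed to be drawn independently for every cell, the per-cluster cell counts are taken as listed, and the guards that keep the Beta parameters positive and the mixture weight in \([0, 1]\) are additions of this sketch.

```python
import numpy as np

# (alpha, beta, number of cells) for clusters 1-3, as listed in the text.
BETA_CLUSTERS = [(15.0, 8.0, 34), (6.0, 10.0, 35), (5.0, 5.0, 30)]
# Cluster 4: mixture w * Beta(15, 8) + (1 - w) * Beta(10, 4) with w = 0.6.
MIXTURE = {"w": 0.6, "a": (15.0, 8.0), "b": (10.0, 4.0), "cells": 17}
N_OBS = 200  # observations per cell

def draw_noise(rng, scale, kind):
    """One noise term: N(0, scale^2) or Uniform(-scale, scale); 0 when scale == 0."""
    if scale == 0:
        return 0.0
    if kind == "normal":
        return rng.normal(0.0, scale)
    if kind == "uniform":
        return rng.uniform(-scale, scale)
    raise ValueError(f"unknown noise kind: {kind}")

def simulate_dataset(theta1, theta2, kind="normal", seed=0):
    """Return (samples, labels): one row of N_OBS draws per cell, and cluster labels."""
    rng = np.random.default_rng(seed)
    samples, labels = [], []
    for k, (alpha, beta, n_cells) in enumerate(BETA_CLUSTERS, start=1):
        for _ in range(n_cells):
            # Per-cell perturbation (assumption); lower bound is a guard of this sketch.
            a = max(alpha + draw_noise(rng, theta1, kind), 1e-3)
            b = max(beta + draw_noise(rng, theta1, kind), 1e-3)
            samples.append(rng.beta(a, b, size=N_OBS))
            labels.append(k)
    for _ in range(MIXTURE["cells"]):
        w = float(np.clip(MIXTURE["w"] + draw_noise(rng, theta2, kind), 0.0, 1.0))
        from_a = rng.random(N_OBS) < w  # component indicator for each observation
        x = np.where(from_a,
                     rng.beta(*MIXTURE["a"], size=N_OBS),
                     rng.beta(*MIXTURE["b"], size=N_OBS))
        samples.append(x)
        labels.append(4)
    return np.vstack(samples), np.array(labels)

# Normal noise with theta1 = 0.5, theta2 = 0.05; theta1 = theta2 = 0 gives the no-noise case.
samples, labels = simulate_dataset(theta1=0.5, theta2=0.05, kind="normal")
print(samples.shape, np.bincount(labels)[1:])
```

Each row of `samples` would then be converted to a density estimate before clustering; that step is outside the scope of this sketch.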

For each experiment, 100 datasets were generated, and clustering was performed using the three methods of Sect. 5. To compare the clustering performance of these methods, we computed the Normalized Mutual Information (NMI, Kvalseth 1987) and the Adjusted Rand Index (ARI, Hubert and Arabie 1985), chosen from among the possible measures (Vinh et al. 2010).
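For concreteness, both indices can be computed from the contingency table of true and estimated cluster labels. The sketch below is illustrative only: the function names are hypothetical, and NMI is normalized by the arithmetic mean of the two entropies, which is one of several normalizations discussed in the literature and is an assumption here rather than a statement of the variant used in this study.

```python
from math import comb

import numpy as np

def contingency(true, pred):
    """Contingency table of two label vectors (rows: true, columns: predicted)."""
    t_vals, t_idx = np.unique(np.asarray(true), return_inverse=True)
    p_vals, p_idx = np.unique(np.asarray(pred), return_inverse=True)
    table = np.zeros((t_vals.size, p_vals.size), dtype=np.int64)
    np.add.at(table, (t_idx, p_idx), 1)
    return table

def adjusted_rand_index(true, pred):
    """ARI of Hubert and Arabie (1985): pair-counting agreement corrected for chance."""
    table = contingency(true, pred)
    n = int(table.sum())
    sum_cells = sum(comb(int(v), 2) for v in table.ravel())
    sum_rows = sum(comb(int(v), 2) for v in table.sum(axis=1))
    sum_cols = sum(comb(int(v), 2) for v in table.sum(axis=0))
    expected = sum_rows * sum_cols / comb(n, 2)
    max_index = 0.5 * (sum_rows + sum_cols)
    if max_index == expected:  # degenerate case, e.g. a single cluster in both
        return 1.0
    return (sum_cells - expected) / (max_index - expected)

def entropy(counts):
    """Shannon entropy (natural log) of a vector of counts."""
    counts = np.asarray(counts, dtype=float)
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log(p)).sum())

def normalized_mutual_information(true, pred):
    """Mutual information divided by the mean of the two entropies (assumed variant)."""
    table = contingency(true, pred)
    h_true = entropy(table.sum(axis=1))
    h_pred = entropy(table.sum(axis=0))
    mutual_info = h_true + h_pred - entropy(table.ravel())
    denom = 0.5 * (h_true + h_pred)
    return 1.0 if denom == 0 else mutual_info / denom

true_labels = [1, 1, 1, 2, 2, 2, 3, 3]
est_labels = [2, 2, 2, 1, 1, 3, 3, 3]
print(adjusted_rand_index(true_labels, est_labels),
      normalized_mutual_information(true_labels, est_labels))
```

Both indices are invariant to relabeling of the clusters, so an estimated partition that matches the true one up to a permutation of labels attains the maximum value of 1.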

In all experiments, for four clusters, the MJS method performed best according to both indices, followed by the CVM method, and the EMD method performed worst (Table 4). This result may be understood by looking at the dendrograms of the results from the three methods (Fig. 6). They indicate that the method with EMD is prone to misclassification: the branches between observations within a cluster are relatively long, since a small difference in distribution results in a relatively large change in EMD.

The hierarchical testing procedure proposed in Sect. 5 found the correct number of clusters, 4, for the datasets of Experiment 1, in which each of the four clusters is homogeneous. For example, for one dataset of Experiment 1, the standardized distances \(d^*\) were 39.5, 36.9 and 4.47 for the last three merges and \(-0.30\) for the fourth-from-last merge, which led to the choice of 4 as the number of clusters.
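The arithmetic behind this example can be written out as a short sketch. It is a simplification made for illustration: the merges are scanned as a single sequence starting from the root, whereas the actual procedure tests nodes of a tree; the function name and the cutoff value are hypothetical and are not taken from this paper.

```python
# Standardized distances d* quoted in the text, from the last merge backwards.
d_star = [39.5, 36.9, 4.47, -0.30]
CUTOFF = 2.0  # hypothetical threshold for declaring a merge non-homogeneous

def n_clusters(d_star_from_root, cutoff):
    """Count merges, starting at the root, whose d* exceeds the cutoff.

    Every such merge is undone (the node is split), so the number of
    clusters is the number of splits plus one.
    """
    splits = 0
    for d in d_star_from_root:
        if d <= cutoff:
            break  # this merge is judged homogeneous; stop splitting
        splits += 1
    return splits + 1

print(n_clusters(d_star, CUTOFF))  # 4
```

Any cutoff between \(-0.30\) and 4.47 gives the same answer for these four values, which is why the gap between the third and fourth standardized distances points to four clusters.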

6 Discussion

In this paper, we discussed methods for finding homogeneous subpopulations, based on regression tree and hierarchical clustering methods for distributions in a multidimensional space using MJS. We proposed a hierarchical testing procedure to find the minimal homogeneous tree structure and a method to find minimal near-homogeneous tree structures.

Results of applying the proposed methods to yellowfin tuna fork length data showed that while the regression tree produced simple and clear spatial partitions, the clustering method for distributions allowed more flexibility in the partition structure, which may be more consistent with the underlying structure of the environment and ecology of the region. As for clustering, MJS and EMD produced quite different partitions of distributions, both with and without an adjacency constraint. All clusters produced by MJS contained reasonable numbers of original distributions, whereas EMD produced a cluster with only a single original distribution.

In the application to yellowfin tuna fork length data, the MJS results were more useful than the EMD results, since we are more interested in the partitioning of the eastern Pacific Ocean based on distributions rather than distribution forms themselves. In the experiments with simulated data, MJS led to better classification performance in terms of NMI and ARI. However, if one is more interested in classifying the functional forms of distributions, or the data are such that the numbers of samples in each cluster are very different and there are clusters with a small number of samples, EMD may be more appropriate.

In this study, we proposed a method to control the number of clusters by determining the cutoff value for the standardized MJS to obtain a near-homogeneous tree structure. However, since merging by MJS is equivalent to minimizing the overall entropy, it is also possible to control the number of clusters by giving a prior distribution to the partitions and using a Bayesian clustering procedure. We consider this a topic for future research. We note that estimating a standardized distance among clusters is only possible when clustering distributions; a standardized distance cannot be computed for clusters of individual observations (values).

Data Availability

The yellowfin tuna length composition data used in this paper are the property of the Inter-American Tropical Tuna Commission (IATTC), La Jolla, California, USA. Data sharing restrictions and confidentiality criteria apply. Data inquiries should be made directly to IATTC at www.iattc.org.

References

Baringhaus L, Henze N (2017) Cramér–Von Mises distance: probabilistic interpretation, confidence intervals, and neighbourhood-of-model validation. J Nonparametric Stat 29(2):1–12

Breiman L, Friedman JH, Olshen RA, Stone CJ (1984) Classification and regression trees. Wadsworth and Brooks, Monterey

Cha S-H (2007) Comprehensive survey on distance/similarity measures between probability density functions. Int J Math Model Meth Appl Sci 1:300–307

De’ath G (2002) Multivariate regression trees: a new technique for modeling species-environment relationships. Ecology 83(4):1105–1117

Dhillon IS, Mallela S, Kumar R (2002) Enhanced word clustering for hierarchical text classification. In: Proceedings of the Eighth ACM SIGKDD international conference on knowledge discovery and data mining, KDD ’02, ACM, New York, pp 191–200. https://doi.org/10.1145/775047.775076

Dhillon IS, Mallela S, Kumar R (2003) A divisive information-theoretic feature clustering algorithm for text classification. J Mach Learn Res 3:1265–1287

Gao LL, Bien J, Witten D (2022) Selective inference for hierarchical clustering. J Am Stat Assoc 0:1–11

Gordon A (1999) Classification, 2nd edn. Chapman & Hall/CRC monographs on statistics & applied probability. CRC Press, Boca Raton

Henderson K, Gallagher B, Eliassi-Rad T (2015) EP-MEANS: an efficient nonparametric clustering of empirical probability distributions. In: Proceedings of the 30th annual ACM symposium on applied computing. ACM, pp 893–900

Hubert L, Arabie P (1985) Comparing partitions. J Classif 2:193–218

Jiang B, Pei J, Tao Y, Lin X (2013) Clustering uncertain data based on probability distribution similarity. IEEE Trans Knowl Data Eng 25(4):751–763

Kessler WS (2006) The circulation of the eastern tropical Pacific: a review. Prog Oceanogr. https://doi.org/10.1016/j.pocean.2006.03.009

Kessler WS, Gourdeau L (2006) Wind-driven zonal jets in the South Pacific Ocean. Geophys Res Lett. https://doi.org/10.1029/2005GL025084

Kvalseth TO (1987) Entropy and correlation: some comments. IEEE Trans Syst Man Cybern 17(3):517–519

Lennert-Cody C, Maunder M, da Silva AA, Minami M (2013) Defining population spatial units: simultaneous analysis of frequency distributions and time series. Fish Res 139:85–92

Lennert-Cody C, Minami M, Tomlinson P, Maunder M (2010) Exploratory analysis of spatial-temporal patterns in length-frequency data: an example of distributional regression trees. Fish Res 102(3):323–326

Longhurst A (2007) Ecological geography of the sea, 2nd edn. Academic Press, Burlington

Marcus R, Peritz E, Gabriel KR (1976) On closed testing procedures with special reference to ordered analysis of variance. Biometrika 63(3):655–660. https://doi.org/10.1093/biomet/63.3.655

Nguyen-Trang T, Nguyen-Thoi T, Vo-Van T (2023) Globally automatic fuzzy clustering for probability density functions and its application for image data. Appl Intell 53:18381–18397. https://doi.org/10.1007/s10489-023-04470-2

Nielsen F, Nock R, Amari S (2014) On clustering histograms with k-means by using mixed \(\alpha \)-divergences. Entropy 16:3273–3301

Phamtoan D, Vovan T (2022) Automatic fuzzy clustering for probability density functions using the genetic algorithm. Neural Comput Appl 34:14609–14625. https://doi.org/10.1007/s00521-022-07265-7

R Core Team (2023) R: A Language and Environment for Statistical Computing, R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Reygondeau G, Longhurst A, Martinez E, Beaugrand G, Antoine D, Maury O (2013) Dynamic biogeochemical provinces in the global ocean. Glob Biogeochem Cycles 27(4):1046–1058. https://doi.org/10.1002/gbc.20089

Silverman BW (1986) Density estimation for statistics and data analysis. Chapman & Hall, London

Suter JM (2010) An Evaluation of the area stratification used for sampling tunas in the eastern Pacific Ocean and implications for estimating total annual catches. http://hdl.handle.net/1834/23952

Vinh N, Epps J, Bailey J (2010) Information theoretic measures for clusterings comparison: variants, properties, normalization and correction for chance. J Mach Learn Res 11:2837–2854

Vo-Van T, Pham-Gia T (2010) Clustering probability distributions. J Appl Stat 37(11):1891–1910. https://doi.org/10.1080/02664760903186049

Ward J Jr (1963) Hierarchical grouping to optimize an objective function. J Am Stat Assoc 58:236–244

Ethics declarations

Conflict of interest

The authors have no conflict of interest to declare.

R programs

R programs and the command sequence for the simulation experiments are available at: https://keio.box.com/s/p27b4tq0uuyvm50drbcjfpf4i7v3mr2s+

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Minami, M., Lennert-Cody, C.E. Regression Tree and Clustering for Distributions, and Homogeneous Structure of Population Characteristics. JABES (2024). https://doi.org/10.1007/s13253-024-00631-z

DOI: https://doi.org/10.1007/s13253-024-00631-z