Abstract

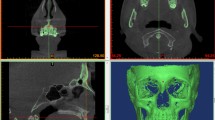

Consistent cross-sectional imaging is desirable in head computed tomography (CT) to detect lesions accurately and to facilitate follow-up. However, manual reformation introduces image variation among technologists and requires additional time. We therefore developed a system that reformats head CT images at the orbitomeatal (OM) line and evaluated its performance using real-world clinical data. Retrospective data were obtained for 681 consecutive patients who underwent non-contrast head CT. The datasets were randomly divided into training, validation, and testing sets. Four landmarks (bilateral eyes and external auditory canals) were detected with a trained You Only Look Once (YOLO) v5 model, and the head CT images were reformatted at the OM line. The precision, recall, and mean average precision at an intersection over union threshold of 0.5 were computed on the validation set. The reformation quality on the testing set was evaluated by three radiological technologists on a qualitative 4-point scale. The precision, recall, and mean average precision of the trained YOLOv5 model for all categories were 0.688, 0.949, and 0.827, respectively. In our environment, the mean processing time was 23.5 ± 2.4 s per case. The qualitative evaluation on the testing set showed that the automatically reformatted images had clinically useful quality, with scores of 3 or 4 in 86.8%, 91.2%, and 94.1% of cases for observers 1, 2, and 3, respectively. Our system reformatted head CT images at the OM line with acceptable quality using an object detection algorithm and was highly time efficient.
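The detection metrics reported above rest on the intersection over union (IoU) between a predicted and a ground-truth bounding box. The following is a minimal sketch of how precision and recall at an IoU threshold of 0.5 can be computed for one landmark category. It is not the authors' evaluation code: the box format `(x_min, y_min, x_max, y_max)`, the greedy matching rule, and the function names `iou` and `precision_recall` are assumptions made for illustration, and predictions are assumed to be sorted by descending confidence.

```python
from typing import List, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(
    preds: List[Box], truths: List[Box], threshold: float = 0.5
) -> Tuple[float, float]:
    """Greedily match each prediction to its best unmatched ground truth.

    A prediction counts as a true positive when its best IoU with a
    still-unmatched ground-truth box reaches the threshold.
    """
    matched = set()
    true_pos = 0
    for pred in preds:  # assumed sorted by descending confidence
        best_idx, best_iou = -1, 0.0
        for idx, truth in enumerate(truths):
            if idx in matched:
                continue
            value = iou(pred, truth)
            if value > best_iou:
                best_idx, best_iou = idx, value
        if best_idx >= 0 and best_iou >= threshold:
            matched.add(best_idx)
            true_pos += 1
    precision = true_pos / len(preds) if preds else 0.0
    recall = true_pos / len(truths) if truths else 0.0
    return precision, recall


if __name__ == "__main__":
    # Toy example with made-up boxes, not study data.
    truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(0, 0, 10, 10), (21, 21, 31, 31), (50, 50, 60, 60)]
    print(precision_recall(preds, truths))
```

In this toy example, two of the three predictions overlap a ground-truth box with IoU of at least 0.5, so recall is high while the extra false-positive box lowers precision. That is the same pattern as the reported recall (0.949) exceeding precision (0.688). Mean average precision additionally averages precision over recall levels and categories, which this sketch does not cover.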

Acknowledgements

The authors would like to thank Enago (www.enago.jp) for the English language review.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

SI contributed to the study design, data collection, algorithm construction, image evaluation, and the writing and editing of the article; HI carried out the data collection, image evaluation, and the reviewing and editing of the article; HS performed supervision, project administration, image evaluation, and reviewing and editing of the article. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethical approval

This study was performed in line with the principles of the Declaration of Helsinki. Approval was granted by the institutional review board of Kurashiki Central Hospital (Approval Number 3766). The requirement for individual informed consent was waived due to the retrospective nature of the study.

About this article

Cite this article

Ichikawa, S., Itadani, H. & Sugimori, H. Toward automatic reformation at the orbitomeatal line in head computed tomography using object detection algorithm. Phys Eng Sci Med 45, 835–845 (2022). https://doi.org/10.1007/s13246-022-01153-z

DOI: https://doi.org/10.1007/s13246-022-01153-z