Abstract

This paper is devoted to a theoretical and numerical investigation of Nash equilibria and Nash bargaining problems governed by bilinear (input-affine) differential models. These systems with a bilinear state-control structure arise in many applications, e.g., in biology, economics, and physics, where competition between different species, agents, and forces needs to be modelled. For this purpose, the concept of Nash equilibria (NE) appears appropriate, and the building blocks of the resulting differential Nash games are different control functions associated with different players that pursue different non-cooperative objectives. In this framework, the existence of Nash equilibria is proved, and the equilibria are computed with a semi-smooth Newton scheme combined with a relaxation method. Further, a related Nash bargaining (NB) problem is discussed, which aims at determining an improvement of all players’ objectives with respect to the Nash equilibria. Results of numerical experiments demonstrate the effectiveness of the proposed NE and NB computational framework.

1 Introduction

This paper is devoted to a theoretical and numerical investigation of a class of differential (dynamical) Nash games and related Nash bargaining problems governed by differential models with bilinear state-control (input-affine) structures.

Since their appearance [24, 25], Nash games have attracted the attention of many scientists as they provide a convenient mathematical framework to investigate problems of competition and cooperation. A competition game can be interpreted as a coupled optimization problem for which the Nash equilibrium (NE) defines the solution concept. In this framework, non-cooperative differential Nash games were introduced in [18]. In this case, differential (dynamical) models govern the state of the system, which is subject to the action of different controls representing the strategies of the players in the game, and each player is associated with an objective (pay-off) functional. Differential games have received much attention in economics and marketing [11, 19], and they are well investigated in the case of linear differential models with a linear control mechanism and quadratic objectives; see, e.g., [5, 13, 15, 28]. On the other hand, much less is known in the case of nonlinear models, especially with a nonlinear control structure and, in particular, with a bilinear structure, where a function of the state variable multiplies the controls. For an earlier review of works on differential Nash games, we refer to [27], and for more recent and detailed discussions and references, see [13, 16, 19]. We also remark that nonlinear differential NE games have been investigated in the framework of Young measures; see, e.g., [3, 27]. However, the numerical implementation of the latter framework is in its infancy and requires further development that is beyond the scope of our present work.

In the general framework of differential Nash games, we focus on a bilinear control structure; we remark that bilinear control problems play a central role in many applications [26]. In particular, they are omnipresent in quantum control [4]. However, the additional theoretical and numerical difficulties due to the bilinear structure hinder the application of the NE framework to many envisioned problems. Furthermore, Nash games are closely related to multi-objective optimization with nonlinear control structure, and therefore we believe that a study of bilinear NE games would benefit the further development and application of the differential NE framework and related fields. This is particularly true for new problems involving quantum systems [17] and biological systems [6]; for this reason, we show results with two related models that can both be put in the following general bilinear form

$$\begin{aligned} {\dot{x}}(t) = f^0(x(t)) + \sum _{j=1}^{N} u_j(t)\, F_j(x(t)), \qquad t \in (0,T], \qquad x(0)=x_0 , \end{aligned}$$(1)

where \(x(t) \in {\mathbb {R}}^n\) represents the n-dimensional state of the system at the time t, \(x_0\) is the initial condition and \(u=(u_1,\ldots ,u_N)\) represents the vector control function. The function \(f^0\) governs the free dynamics of the system, and the function F denotes the interaction coupling between the control and the system.

We present a theoretical and numerical investigation of Nash games governed by (1), where the control components \(u_j\), \(j=1,\ldots , N\), represent N players choosing their strategies in an admissible set, and with each player we associate a different cost functional such that a non-cooperative problem is defined. Our first purpose is to prove the existence of a NE in this framework; to this end, we consider the theoretical framework in [28], which we extend to our nonlinear case. The result is that a NE exists if a sufficiently short time horizon \(T < T_0\) is considered. Moreover, we provide an estimate of \(T_0\).

With this knowledge, we turn our attention to the numerical realisation of the NE solution. For this purpose, we implement and analyse a relaxation scheme [20] combined with a semi-smooth Newton method [7,8,9], where the latter choice is motivated by the presence of constraints on the players’ actions that make our game problem non-smooth.

The second aim of our work is to address the fact that a Nash equilibrium does not provide an efficient solution with respect to the payoffs that the players could achieve by agreeing to cooperate and negotiating the surplus of payoff that they can jointly generate. For this purpose, J. F. Nash proposed in [23] a bargaining strategy that jointly improves efficiency while staying close to the strategy of a NE point. The idea is to find a point that is Pareto optimal and symmetric (the labelling of the players should not matter) and that maximizes the surplus of payoff for both players. In this way, we obtain controls that bring the system closer to the desired targets while keeping their costs as small as possible.

In order to implement this goal, we use the Nash characterization of a bargaining solution to construct an efficient method to explore the Pareto frontier and converge to the solution sought. For this purpose, we compute a Pareto point based on its characterization as a solution of a bilinear optimal control problem for u and a cost functional resulting from a composition of the players’ objectives. Further, we consider the framework in [12], see also [13], to reformulate the characterization of a Nash bargaining (NB) solution and use this characterization to construct an iterative scheme that converges to this solution on the Pareto frontier.

In the following section, we formulate a class of bilinear optimal control problems as the starting point for the formulation of our differential Nash game. Correspondingly, we define the NE concept and the related NB solution. In Sect. 3, we prove existence of NE for our game. For this purpose, preparatory results are presented addressing the Fréchet differentiability of our differential model and other functional properties of the components of the dynamic Nash game. In Sect. 4, we illustrate our numerical framework for solving a NE differential game. This requires introducing the first-order optimality conditions that must be satisfied by the NE solution and discussing a semi-smooth Newton scheme that is applied to this system so as to obtain partial updates of the game strategies sought. Thereafter, we show how these updates are combined in a relaxation scheme in order to construct an iterative procedure that converges to the NE point. The convergence properties of this relaxation scheme are also discussed. Section 5 is devoted to the analysis of our Nash bargaining problem, where we prove a characterization of a solution to this problem and correspondingly define a solution procedure for its calculation. In Sect. 6, we present results of numerical experiments that validate our NE differential game framework on a quantum model of two spins and on a model of population dynamics with two competing species. A concluding section completes this work.

2 From Bilinear Control to Bilinear Games

In many applications with (1), the aim of the controls \(u_{j}\) is to drive the system from an initial state \(x(0)=x_{0}\) to a neighbourhood of a given target state \(x_{T}\) at a given time T. For this purpose, the following bilinear optimal control problem is formulated

where \(x \in X\) is referred to as the state of the system in the set

where \(H^{1}\) is the usual Sobolev space of \(L^2\) functions whose first-order weak derivatives also belong to \(L^2\); see, e.g., [1]. Further, \(x_{T} \in {\mathbb {R}}^{n}\) is a given target and the functions \(f^0, F_j : {\mathbb {R}}^n \rightarrow {\mathbb {R}}^n\) are assumed to be Lipschitz and twice Fréchet differentiable. Whenever necessary, we denote with \(F(x) \in {\mathbb {R}}^{n \times N}\) the matrix with columns \(F_j(x)\), \(j=1, \dots , N\). The functions \(u_{j} : [0,T] \rightarrow {\mathbb {R}}\), \(j=1,\dots ,N\), represent the controls belonging to the following admissible sets

where \(M \in (0, \infty ]\) is a given positive constant and N is the number of controls. We denote \(\mathcal {U}(M)= \mathcal {U}_1(M) \times \cdots \times \mathcal {U}_N(M)\).

Now, turning to the framework of differential games, we can consider each scalar function \(u_j\) in (2) as the control strategy of a player that we label with j. In the present setting, all players have the same purpose: to come as close as possible to \(x_T\) at the final time while minimizing the \(L^2\) cost of their action. Within the game setting, we can now associate different control objectives with different players and thus attempt to drive the bilinear system to perform different and even competing tasks.

Once each player has chosen a control strategy, these choices determine a unique solution \(x \in X\) of problem (1). We call x(t), \(t \in [0,T]\), the trajectory corresponding to the controls \(u_1, \dots , u_N\). For the jth player, each choice of controls has a cost, \( J_j(u_1, \dots , u_N): ={\widetilde{J}}_j (x(u_1, \dots , u_N), u_1, \dots , u_N)\), defined as follows

We call \(J_j\) the objective (reduced cost functional) of player j. The \(J_j\), \(j=1,\ldots ,N\), represent the objectives of the game.

The purpose of each player is to minimize its own cost functional. However, since each objective depends on the strategies of all players and the game is non-cooperative, separate minimization does not by itself provide a suitable solution concept. Instead, we consider a Nash equilibrium, which is an outcome in which every player is performing the optimal strategy given the other players’ choices: if each player has chosen a strategy and no player can benefit by changing strategies while the other players keep theirs unchanged, then the current set of strategy choices and the corresponding objectives’ values constitute a Nash equilibrium (NE). A NE is defined as follows.

Definition 1

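Let \(M \in (0, \infty ]\) be fixed. The controls \(u_i \in \mathcal {U}_i (M)\), \(i=1,\ldots ,N\), are said to form a Nash equilibrium strategy for the game \(G=(J_1, \dots , J_N; M)\) if for each \(i= 1, \dots , N\) it holds

$$\begin{aligned} J_i(u_1, \dots , u_{i-1}, u_i, u_{i+1}, \dots , u_N) \le J_i(u_1, \dots , u_{i-1}, v_i, u_{i+1}, \dots , u_N) \end{aligned}$$

for all \(v_i \in \mathcal {U}_i(M)\).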

Whenever necessary, we denote with \(u^{\mathrm{NE}}=(u_1^{\mathrm{NE}}, \dots , u_N^{\mathrm{NE}})\) a NE strategy.

It is well known that the NE solution concept is inefficient in the sense that, in many cases, the players can jointly generate and share an improvement in their objectives by choosing to cooperate. This is a so-called bargaining problem, and we pursue the Nash bargaining (NB) solution concept. In particular, the NB solution \(u^{\mathrm{NB}}=(u_1^{\mathrm{NB}}, \dots , u_N^{\mathrm{NB}})\) requires that the strategies of the players are Pareto optimal and satisfy the following criterion

$$\begin{aligned} u^{\mathrm{NB}} = {{\,\mathrm{arg\,max}\,}}_{u \in P} \prod _{i=1}^{N} \big ( J_i(u^{\mathrm{NE}}) - J_i(u) \big ), \end{aligned}$$(6)

where P is the subset of the Pareto frontier consisting of the strategies u with \(J_i(u^{\mathrm{NE}}) \ge J_i(u)\), \(i=1,\ldots ,N\). In this formulation, we choose \(u^{\mathrm{NE}}\) as the disagreement outcome, and \(J_i(u^{\mathrm{NE}})\), \(i=1,\ldots ,N\), are the values of the objectives of the game if no bargaining takes place (status quo).

Based on (6), the players act in order to maximize the Nash product of the excesses (or defects) with respect to the solution corresponding to disagreement. Since the NB solution concept requires Pareto optimality, the NB solution is sought in the Pareto frontier.

In this case, under the assumption that the players cooperate in trying to minimize their objectives, a set of control actions u is sought such that the resulting individual objectives cannot be improved upon by all players simultaneously. This is a so-called Pareto efficient solution. A common way to find Pareto solutions is to solve a parameterized optimal control problem as follows

$$\begin{aligned} \min _{u \in \mathcal {U}(M)} \sum _{i=1}^{N} \mu _i J_i(u), \end{aligned}$$(7)

where \(u=(u_1, \dots , u_N)\), \(\mu _i \in [0,1]\) with \(\sum _{i=1}^N \mu _i =1\).

Notice that not all Pareto solutions can be found in this way; see [14]. Nevertheless, we assume that \(u^{\mathrm{NB}}\) can be computed with (7) for a specific choice of \(\mu =(\mu _1,\ldots , \mu _N)\).
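The weighted-sum scalarization (7) and the set P in (6) can be illustrated on a hypothetical static two-player game that replaces the differential model by the scalar "state" \(x = u_1 + u_2\), with objectives \(J_i(u) = \frac{1}{2}(x-a_i)^2 + \frac{\nu }{2}u_i^2\) and opposing targets \(a_1, a_2\). This surrogate, its parameters, and all function names are assumptions for illustration only; because everything is quadratic, both the NE and the minimizers of (7) reduce to 2×2 linear systems.

```python
import numpy as np

A_TARGETS, NU = (1.0, -1.0), 1.0   # hypothetical targets a_1, a_2 and weight nu

def objectives(u):
    """J_i(u) = 0.5*(x - a_i)^2 + 0.5*nu*u_i^2 with the static 'state' x = u_1 + u_2."""
    x = u[0] + u[1]
    return np.array([0.5 * (x - A_TARGETS[0]) ** 2 + 0.5 * NU * u[0] ** 2,
                     0.5 * (x - A_TARGETS[1]) ** 2 + 0.5 * NU * u[1] ** 2])

def nash_equilibrium():
    # dJ_i/du_i = 0  <=>  (1 + nu) u_i + u_j = a_i
    K = np.array([[1.0 + NU, 1.0], [1.0, 1.0 + NU]])
    return np.linalg.solve(K, np.array(A_TARGETS))

def weighted_sum_minimizer(mu):
    # The gradient of mu*J_1 + (1 - mu)*J_2 vanishes  <=>  K(mu) u = (c, c),
    # with c = mu*a_1 + (1 - mu)*a_2.
    c = mu * A_TARGETS[0] + (1.0 - mu) * A_TARGETS[1]
    K = np.array([[1.0 + mu * NU, 1.0], [1.0, 1.0 + (1.0 - mu) * NU]])
    return np.linalg.solve(K, np.array([c, c]))

d = objectives(nash_equilibrium())                  # disagreement point J(u^NE)
mus = np.linspace(0.0, 1.0, 101)
front = np.array([objectives(weighted_sum_minimizer(m)) for m in mus])
in_P = np.all(front <= d, axis=1)                   # Pareto points not worse than the NE for any player
```

With \(a=(1,-1)\) and \(\nu =1\), the NE is \(u=(1,-1)\) with \(J=(1,1)\), whereas \(\mu =(1/2,1/2)\) gives \(u=0\) and \(J=(1/2,1/2)\), a strict improvement for both players, in line with the inefficiency of the NE noted above.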

3 Existence of Nash Equilibria

In this section, after discussing some preliminary results, we prove a theorem stating existence of a NE strategy for our bilinear-control problem. We closely follow the approach in [28]; see [27] for a review of this approach and the discussion of an alternative method in the framework of Young measures.

We start this section by showing some properties of the solution to (1). Clearly, this solution depends on the initial condition, and since we focus on open-loop NE games, some of the constants obtained in the following estimates depend on the initial state \(x_0\) of the system.

Lemma 3.1

For any \(u \in \mathcal {U}(M)\) the solution x of (1) is bounded in \(\left[ 0,T\right] \), i.e. there exists a constant \(\mathcal {K}\) such that \(\Vert x(t) \Vert _2 \le \mathcal {K}\) \(\forall t \in [0, T]\).

Proof

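It is well known that \(x : [0,T] \rightarrow {\mathbb {R}}^n\) satisfies the integral equation

$$\begin{aligned} x(t) = x_0 + \int _0^t \Big ( f^0(x(s)) + F(x(s))\, u(s) \Big )\, \mathrm{d}s , \qquad t \in [0,T]. \end{aligned}$$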

For any \(t\in \left[ 0,T \right] \) one can compute

which then allows us to estimate

where the Lipschitz continuity of \(f^0\) and F is used and \(L_{f^0}\) and \(L_F\) denote the corresponding Lipschitz constants. From estimate (11) we obtain

where

With the Gronwall theorem we get

Therefore the Lemma is proved with \(\mathcal {K}(T) := \alpha _T \exp \Big (\int _0^T\beta (s)\mathrm{d}s\Big )\) which is a monotonically increasing function of T. \(\square \)
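The constants in this proof can be made explicit. The following is one admissible choice, offered as a sketch under the stated Lipschitz assumptions, with \(|u(s)|\) denoting the Euclidean norm of \(u(s)\in {\mathbb {R}}^N\) and \(\Vert F(x)\Vert \) an induced matrix norm; it need not coincide with the \(\alpha _T\) and \(\beta \) used above. From \(\Vert f^0(x)\Vert _2 \le \Vert f^0(0)\Vert _2 + L_{f^0}\Vert x\Vert _2\), \(\Vert F(x)\Vert \le \Vert F(0)\Vert + L_F\Vert x\Vert _2\) and the Cauchy–Schwarz inequality \(\int _0^T |u(s)|\,\mathrm{d}s \le \sqrt{T}\,\Vert u\Vert _{L^2}\), one gets

$$\begin{aligned} \Vert x(t)\Vert _2&\le \underbrace{\Vert x_0\Vert _2 + T\,\Vert f^0(0)\Vert _2 + \sqrt{T}\,\Vert F(0)\Vert \,\Vert u\Vert _{L^2}}_{=:\,\alpha _T} + \int _0^t \underbrace{\big (L_{f^0} + L_F\,|u(s)|\big )}_{=:\,\beta (s)} \Vert x(s)\Vert _2\,\mathrm{d}s ,\\ \Vert x(t)\Vert _2&\le \alpha _T \exp \Big (\int _0^T \beta (s)\,\mathrm{d}s\Big ) \le \alpha _T \exp \big (L_{f^0}T + L_F\sqrt{T}\,\Vert u\Vert _{L^2}\big ). \end{aligned}$$

For \(M<\infty \) and \(u \in \mathcal {U}(M)\), \(\Vert u\Vert _{L^2}\) is bounded in terms of M and T, so this bound is uniform over the admissible controls and increases monotonically with T.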

Lemma 3.2

The solution x is a Lipschitz function of u, for all \(u \in \mathcal {U}(M)\), \(M \in (0, \infty )\).

Proof

For any \(u_1, u_2 \in \mathcal {U}(M)\), consider the corresponding state equations given by

Then, let \(z := x_1 - x_2\). We get

Hence,

and

where K(T) monotonically increases with T because of the continuity of F and the properties of x. Finally, the Cauchy–Schwarz inequality and the Gronwall theorem imply

Therefore x is a Lipschitz function in u. \(\square \)

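Let us consider the linearized problem related to (1) for a general reference pair (x, u). We have

$$\begin{aligned} \delta {\dot{x}} = \left( f^0 \right) '(x)\, \delta x + \sum _{j=1}^{N} u_j \left( F_j \right) '(x)\, \delta x + \sum _{j=1}^{N} \delta u_j\, F_j(x), \qquad \delta x(0)=0 , \end{aligned}$$(17)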

where \( \left( f^0 \right) '\) and \( \left( F_j \right) '\) denote the Jacobian matrices of \(f^0\) and \(F_j, j=1,\dots , N\), respectively. Then, the following properties hold.

Lemma 3.3

Let \(\delta u \in L^2(0,T; {\mathbb {R}}^N)\) and let \(\delta x=\delta x(\delta u)\) be the corresponding unique solution to (17). Then the following estimate holds

in (0, T).

Proof

Consider the linearized problem (17). It holds

As in the previous Lemma, applying the Cauchy–Schwarz inequality and the Gronwall theorem, we get

in (0, T), where \({\tilde{C}}(T)\) monotonically increases with T. \(\square \)

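Next, consider the operator \(c(\cdot , \cdot ) : H^1(0,T;{\mathbb {R}}^n) \times L^2(0,T;{\mathbb {R}}^N) \rightarrow L^2(0,T;{\mathbb {R}}^n) \times {\mathbb {R}}^n \) defined as follows

$$\begin{aligned} c(x,u) := \Big ( {\dot{x}} - f^0(x) - F(x)\, u ,\; x(0) - x_0 \Big ). \end{aligned}$$

In this way (1) and (17) can be written respectively as

$$\begin{aligned} c(x,u) = 0, \qquad D c(x,u)(\delta x, \delta u) = 0, \end{aligned}$$

where \(( \delta x, \delta u) \mapsto D c(x,u) ( \delta x, \delta u) \) is given by

$$\begin{aligned} D c(x,u)(\delta x, \delta u) = \Big ( \delta {\dot{x}} - \left( f^0 \right) '(x)\, \delta x - \sum _{j=1}^{N} u_j \left( F_j \right) '(x)\, \delta x - F(x)\, \delta u ,\; \delta x(0) \Big ). \end{aligned}$$

The operator c is Fréchet differentiable and its partial Fréchet derivative \(D_x c(x,u)\) is invertible. Hence the equation

$$\begin{aligned} D_x c(x(u),u)\, \delta x + D_u c(x(u),u)\, \delta u = 0 \end{aligned}$$

and the implicit function theorem (see [10]) imply that \(D_u x(u)(\delta u)= \delta x \) is the solution to the linearized problem (17).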

Moreover, the assumptions on \(f^0, F\), together with the implicit function theorem, imply that the control-to-state map \(u \mapsto x(u)\), where \(x(u) \in H^1(0,T;{\mathbb {R}}^n)\) is the unique solution to (1) corresponding to u, is twice Fréchet differentiable. Therefore, denoting by \( \delta x(u,\delta u) \in H^1(0,T;{\mathbb {R}}^n)\) the unique solution to (17) corresponding to \(\delta u\) and u, the following expansion holds

$$\begin{aligned} x(u + \delta u) = x(u) + \delta x(u, \delta u) + \frac{1}{2}\, \theta (u, \delta u) + R(u, \delta u), \end{aligned}$$

where \(\theta (u, \delta u): = x''(u)(\delta u, \delta u)\) and \(R(u, \delta u) = o (\Vert \delta u\Vert _{L^2}^2)\).
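A cheap consistency check of the statement that \(D_u x(u)(\delta u)= \delta x\) solves the linearized problem (17) is to compare \(\delta x\) with a finite-difference quotient of the control-to-state map. The sketch below assumes a hypothetical scalar model with \(f^0(x)=ax\) and \(F(x)=bx\), and uses forward Euler for both problems, so that the discrete linearization is the exact derivative of the discrete map and the discrepancy is of order \(\varepsilon \), consistent with the second-order term in the expansion above.

```python
import numpy as np

# Hypothetical scalar bilinear model: f^0(x) = a*x, F(x) = b*x (n = N = 1).
a, b, x0, T, nt = -0.5, 1.0, 1.0, 1.0, 200
h = T / nt

def state(u):
    """Forward-Euler solution of x' = a*x + u*b*x, x(0) = x0."""
    x = np.empty(nt + 1)
    x[0] = x0
    for k in range(nt):
        x[k + 1] = x[k] + h * (a * x[k] + u[k] * b * x[k])
    return x

def linearized(u, x, du):
    """Forward-Euler solution of dx' = (a + b*u) dx + du*b*x, dx(0) = 0 (cf. (17))."""
    dx = np.zeros(nt + 1)
    for k in range(nt):
        dx[k + 1] = dx[k] + h * ((a + b * u[k]) * dx[k] + du[k] * b * x[k])
    return dx

t = np.linspace(0.0, T, nt + 1)[:-1]       # left end points of the time steps
u = np.sin(2.0 * np.pi * t)                 # reference control (assumed)
du = np.cos(3.0 * t)                        # perturbation direction (assumed)
x = state(u)
dx = linearized(u, x, du)
eps = 1e-6
fd = (state(u + eps * du) - x) / eps        # finite-difference quotient of u -> x(u)
rel_err = np.max(np.abs(fd - dx)) / np.max(np.abs(dx))
```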

For our discussion, we need to estimate \(\theta (u, \delta u)\). Therefore, we prove the following property.

Lemma 3.4

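In the above setting, for any \(h \in L^2(0,T;{\mathbb {R}}^N)\), \( \theta (u,h) \in H^1(0,T;{\mathbb {R}}^n)\) solves the following problem

$$\begin{aligned} {\dot{\theta }} = \left( f^0 \right) '(x)\, \theta + \sum _{j=1}^{N} u_j \left( F_j \right) '(x)\, \theta + \left( f^0 \right) ''(x)(\delta x, \delta x) + \sum _{j=1}^{N} u_j \left( F_j \right) ''(x)(\delta x, \delta x) + 2 \sum _{j=1}^{N} h_j \left( F_j \right) '(x)\, \delta x , \qquad \theta (0)=0, \end{aligned}$$(20)

with \(x = x(u)\) and \(\delta x = \delta x(u,h)\),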

and

Proof

Consider the operator \(c(\cdot , \cdot )\) and compute its second derivative. We get

and hence

If x(u) is the solution of (1) corresponding to the control u, then \(c(x(u),u) = 0\). Therefore \(D c(x(u),u)(h) = 0\) and \(D^2 c(x(u),u)(h,h)=0\). Computing term-by-term, we get

Substituting (22)–(26) into \(D^2 c(x(u),u)(h,h)=0\), we find that \(\theta \) solves (20).

The estimate can be proved similarly to the previous Lemma. In fact, integrating (20) over (0, t), we get

Hence,

Defining \(\big (M_i(x)\big )_{kl} := \partial _{x_l} (\partial _{x_k} f_i^0(x))\), \(i,k,l= 1, \dots , n\), and \(\big (N_{ij}(x)\big )_{kl}:= \partial _{x_l}(\partial _{x_k}F_{ij}(x))\), \(i,k,l=1, \dots ,n\), \(j=1,\dots ,N\), it follows

Using Lemma 3.3,

Applying the Gronwall theorem we get

with \(\mathcal {C}(T)\) monotonically increasing with T. \(\square \)

Next, we introduce some functions used in the proof of the main result.

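Define \(\psi : \mathcal {U}(M) \times \mathcal {U}(M) \rightarrow {\mathbb {R}} \) by

$$\begin{aligned} \psi (u,v) := \sum _{i=1}^{N} J_i(u_1, \dots , u_{i-1}, v_i, u_{i+1}, \dots , u_N). \end{aligned}$$(27)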

Lemma 3.5

The function \(u^* = (u_1^*,\ldots ,u_N ^*)\) is an equilibrium strategy for the game G if and only if

$$\begin{aligned} \psi (u^*, u^*) \le \psi (u^*, v) \end{aligned}$$(28)

for all \(v \in \mathcal {U}(M).\)

Proof

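Suppose \(u^*=(u_1^*,\ldots ,u_N^*)\) is an equilibrium strategy and let \(v=(v_1,\ldots ,v_N) \in \mathcal {U}(M)\). Then

$$\begin{aligned} J_i(u_1^*, \dots , u_N^*) \le J_i(u_1^*, \dots , u_{i-1}^*, v_i, u_{i+1}^*, \dots , u_N^*) \end{aligned}$$(29)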

for \(i=1,\ldots ,N\). Adding these inequalities for \(i=1,\ldots ,N\), we obtain (28). Conversely, suppose (28) holds. Substitution of \(v= (u_1^*,\ldots ,u_{i-1}^*, v_i, u_{i+1}^*,\ldots , u_N^*)\) in (28) gives (29). \(\square \)
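The function \(\psi \) turns the N coupled inequalities of Definition 1 into a single inequality. As a sketch, assume \(\psi (u,v) = \sum _i J_i(u_1,\ldots ,u_{i-1},v_i,u_{i+1},\ldots ,u_N)\), the form that makes the proof of Lemma 3.5 work, and take a hypothetical static two-player game (\(x = u_1+u_2\), quadratic objectives) in place of the differential one. Sampling random deviations v checks (28) at the equilibrium and exhibits a violating v for a non-equilibrium point; sampling is only a heuristic check, not a proof.

```python
import numpy as np

A_TARGETS, NU = (1.0, -1.0), 1.0   # hypothetical static game: x = u1 + u2

def J(i, u):
    x = u[0] + u[1]
    return 0.5 * (x - A_TARGETS[i]) ** 2 + 0.5 * NU * u[i] ** 2

def psi(u, v):
    """psi(u, v) = sum_i J_i(u_1, ..., u_{i-1}, v_i, u_{i+1}, ..., u_N)."""
    total = 0.0
    for i in range(len(u)):
        w = np.array(u, dtype=float)
        w[i] = v[i]                  # only player i deviates
        total += J(i, w)
    return total

# Nash equilibrium from the stationarity conditions (1 + nu) u_i + u_j = a_i.
u_ne = np.linalg.solve([[1.0 + NU, 1.0], [1.0, 1.0 + NU]], A_TARGETS)
rng = np.random.default_rng(0)
trials = rng.normal(scale=2.0, size=(1000, 2))

# Lemma 3.5: u* is an equilibrium iff psi(u*, u*) <= psi(u*, v) for all v.
ne_ok = all(psi(u_ne, u_ne) <= psi(u_ne, v) + 1e-12 for v in trials)
u_bad = np.zeros(2)   # not an equilibrium: some v gives psi(u, u) > psi(u, v)
violated = any(psi(u_bad, u_bad) > psi(u_bad, v) for v in trials)
```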

Let \(v= (v_1, \ldots , v_N) \in \mathcal {U}(M)\) be fixed. Consider the function \(\sigma : \mathcal {U}(M) \rightarrow {\mathbb {R}}\) defined by

where \(x_T^{(i)}\) are the desired players’ targets and \(x(t), x^i(t)\), for \(t \in [0,T]\), are the trajectories of (1) corresponding to the controls \(u :=(u_1,\ldots ,u_N)\) and \(u^i := (u_1,\ldots , u_{i-1}, v_i, u_{i+1},\ldots ,u_N)\), respectively.

After this preparatory work, we can state the following result, which is similar to that of [28] for the linear case and concerns our NE game with bilinear structure.

Lemma 3.6

There exists \(T_0 >0\) such that for all \(T \in (0, T_0)\) it holds that

(i) \(\sigma \) is weakly lower semicontinuous,

(ii) \(\sigma \) is convex,

(iii) \(\sigma (u) \rightarrow \infty \) as \(\Vert u\Vert _{L^2} \rightarrow \infty \).

Proof

Since the functions that constitute \(\sigma \) are continuous, \(\sigma : L^2(0,T;{\mathbb {R}}) \times C([0,T];{\mathbb {R}})\rightarrow {\mathbb {R}} \) is also continuous. Next, we prove that there exist \(T_0 >0\) and \( \epsilon _0 (T_0)>0\) such that

for any \(0<T<T_0\).

For the proof, let \(u\in \mathcal {U}(M), w\in L^2(0,T)\) be fixed and compute the derivatives of \(\sigma \). We have

and

Then, we get

where \(\delta x(u,w)\) and \(\delta x^i (u^i,w)\) are the solutions of (17) with controls \((u_1, \dots , u_N)\) and \((u_1, \dots ,u_{i-1}, w_i, u_{i+1}, \dots , u_N)\).

Therefore, we obtain

where we used Lemmas 3.3 and 3.4 and \(k_1(T) :=2 (\mathcal {K}(T) + \Vert x_T^{(i)} \Vert _2) \mathcal {C}(T) \), \(k_2(T) := ( {\tilde{C}}(T) N ) ^2\).

The coercivity of \(\sigma \) is then guaranteed if we require

Therefore, it is possible to choose \( \epsilon _0\) and \(T_0\) such that (30) holds and \(\sigma \) is convex [29, Corollary 42.8, p. 248]. Moreover, since \(\sigma \) is convex and continuous, it is weakly lower semicontinuous. \(\square \)

Let \(v \in \mathcal {U}(M) \) be fixed. Define \(\psi _v : \mathcal {U}(M) \rightarrow {\mathbb {R}} \) by

$$\begin{aligned} \psi _v(u) := \psi (u,u) - \psi (u,v). \end{aligned}$$

Also let \(U_v := \{ u : \psi _v(u) > 0 \}\).

Lemma 3.7

There exists a \(T_0 >0 \) such that if \(T< T_0\) then \(U_v\) is weakly open and \(\psi _v(u) \rightarrow \infty \) as \( \Vert u\Vert _{L^2} \rightarrow \infty \).

Proof

We have

In this expression, the first sum is continuous and the third sum is constant. By Lemma 3.6, there is a \(T_0 >0\) such that if \(T<T_0\), then the first sum plus the second sum is weakly lower semicontinuous and tends to infinity as \(\Vert u\Vert _{L^2} \rightarrow \infty \). Therefore \(\psi _v \) is weakly lower semicontinuous, so that the set \( U_v^c = \{ u : \psi _v(u) \le 0\}\) is weakly closed and its complement \( U_v\) is weakly open. \(\square \)

Next, we prove existence of a NE for our differential game.

Theorem 3.8

Let \(J_1, \dots , J_N\) be cost functions and \(M \in (0, \infty ]\). There is a \(T_0 > 0\) such that for any \(T < T_0\) there exists at least one equilibrium strategy for the game \(G = (J_1,\dots ,J_N;M)\).

Proof

Suppose the theorem is false. Then, by Lemma 3.5, for each \(u \in \mathcal {U}(M)\) there is a \(v \in \mathcal {U}(M)\) such that \(\psi _v (u) :=\psi (u,u) - \psi (u,v) > 0\), i.e. \(u \in U_v\), where \(U_v\) is defined above. Therefore \(\mathcal {U}(M)\) has the following weakly open cover

$$\begin{aligned} \mathcal {U}(M) \subset \bigcup _{v \in \mathcal {U}(M)} U_v . \end{aligned}$$(32)

Next, we show that there is a finite subset of vector functions \(\{ v^1,\ldots , v^p \}\) of \(\mathcal {U}(M)\) such that

$$\begin{aligned} \mathcal {U}(M) \subset \bigcup _{i=1}^{p} U_{v^i} . \end{aligned}$$(33)

First suppose \(M < \infty \). Then \(\mathcal {U}(M)\) is a convex, bounded and closed subset of \(L^2(0,T; {\mathbb {R}}^N)\) so that it is weakly compact. Then (32) must have a finite subcover (33).

Now, suppose \(M = \infty \). Let \(v^1 \in \mathcal {U}(M)\). Then by Lemma 3.7, \(\psi _{v^1}(u) \rightarrow \infty \) as \(\Vert u\Vert _{L^2} \rightarrow \infty \). Hence, there is \(M_1 < \infty \) such that \(\psi _{v^1}(u) > 0\) whenever \(\Vert u\Vert _{L^2} > M_1\). That is, \( \{ u : \Vert u\Vert _{L^2} > M_1 \} \subset U_{v^1}.\) Now, since \(\mathcal {U}(M_1)\) is weakly compact, there exist \(v^2,\ldots ,v^p \in \mathcal {U}(M)\) such that \(\mathcal {U}(M_1) \subset \cup _{i=2}^p U_{v^i}\). Thus, we have

$$\begin{aligned} \mathcal {U}(M) \subset U_{v^1} \cup \mathcal {U}(M_1) \subset \bigcup _{i=1}^{p} U_{v^i}, \end{aligned}$$

so that once again (33) holds. Note that (33) implies that for each \(u \in \mathcal {U}(M)\) there is a \(j \in \{1,\ldots ,p\}\) such that \(\psi _{v^j}(u) > 0\).

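Now, let V be the convex hull of \(\{ v^1, \ldots , v^p\}\), i.e.,

$$\begin{aligned} V := \Big \{ \sum _{j=1}^{p} \lambda _j v^j : \lambda _j \ge 0, \; \sum _{j=1}^{p} \lambda _j = 1 \Big \}. \end{aligned}$$

Notice that \(V \subset \mathcal {U}(M)\). Define the functions \(\gamma _j : V \rightarrow {\mathbb {R}}\) by

$$\begin{aligned} \gamma _j(v) := \max \{ \psi _{v^j}(v), 0 \}, \qquad j=1,\ldots ,p. \end{aligned}$$

Since V is finite-dimensional, the weak and strong topologies coincide on V. Notice that \(\psi _{v^j}\) is strongly continuous on \(\mathcal {U}(M)\), hence it is continuous on V, and so \(\gamma _j\) is continuous on V. Finally, since we have seen that \( \forall v \in V\) there is at least one \(j \in \{1,\ldots ,p\}\) such that \(\psi _{v^j} (v)>0\), the function \(\sum _{j=1}^{p} \gamma _j\) satisfies the condition

$$\begin{aligned} \sum _{j=1}^{p} \gamma _j(v) > 0 \qquad \forall v \in V. \end{aligned}$$(34)

Now, define the function \(\eta : V \rightarrow V\) by

$$\begin{aligned} \eta (v) := \frac{\sum _{j=1}^{p} \gamma _j(v)\, v^j}{\sum _{j=1}^{p} \gamma _j(v)} . \end{aligned}$$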

Then \(\eta \) is continuous and we can apply the Brouwer fixed-point theorem to get the existence of a point \(v^* \in V\) such that \(\eta (v^*)=v^*\). Suppose \(\gamma _j(v^*) > 0\), \(j=1,\ldots ,\ell \) and \(\gamma _j(v^*) = 0\), \(j >\ell \). Then

and the fixed-point condition becomes

Since \(\gamma _j(v^*) >0\) is equivalent to \(\psi (v^*, v^*)> \psi (v^*,v^j)\), we have

Finally, it is possible to prove the convexity of \(\psi (v^*,v)\) in v as in Lemma 3.6, defining \(\sigma (v_1, \dots , v_N):= \sum _{i=1}^N \left\{ \frac{\nu }{2} \Vert v_i \Vert _{L^2}^2 + \frac{1}{2} \Vert x^i(T)- x_T^{(i)} \Vert _2^2\right\} \), under the condition \(\nu \ge k_1(T) N T^{3}\), with \(k_1\) defined in Lemma 3.6.

Hence, if \(\nu \ge \max \left\{ k_1(T) N T^3 +k_2(T) N T^{3} ,k_1(T) N T^{3} \right\} \), then

which contradicts (34). Therefore, the theorem is proved. \(\square \)

As already remarked at the beginning of this section, our results concern open-loop NE games. Thus, in particular, the value of the time horizon \(T_0\) specified in Theorem 3.8 may depend on the choice of the initial condition.

4 A Numerical NE Game Framework

In this section, we illustrate a numerical procedure for solving our differential NE game. For this purpose, let us consider the case of two players, and suppose that \(u^*=(u_1^*,u_2^*)\) is a Nash equilibrium for the game. Then the following holds [5].

1. The control \(u_{1}^*\) is optimal for Player 1, in the sense that it solves the following optimal control problem

$$\begin{aligned}&\min _{u_1} {\widetilde{J}}_1(x,u_1, u_{2}^*) \nonumber \\&\hbox {s.t.} \,\,\, {\dot{x}} = f^0(x) + u_1 F_1(x) + u_2^* F_2(x) ,\,\qquad x(0)=x_0 , \end{aligned}$$(35)

2. The control \(u_{2}^*\) is optimal for Player 2, that is, it is a solution of the following optimal control problem

$$\begin{aligned}&\min _{u_2} {\widetilde{J}}_2(x,u_{1}^*, u_{2}) \nonumber \\&\hbox {s.t.} \,\,\, {\dot{x}} = f^0(x) + u_1^* F_1(x) + u_2 F_2(x),\,\qquad x(0)=x_0 . \end{aligned}$$(36)

Therefore \(u^*=(u_1^*,u_2^*)\) solves simultaneously two optimal control problems whose solutions are characterized by the following optimality system

where \(x_{T}^{(n)}\), \(n=1,2\), are the final targets of the players and \(\mathcal {P}_{\mathcal {U}_n (M)} \) denotes the \(L^2\) projection operator onto \(\mathcal {U}_n (M)\); see [9] for all details.

Now, referring to the two optimal control problems above, and as in Lemma 3.4, we can consider the control-to-state maps \(u_1 \mapsto x(u_1,u_2^*)\) and \(u_2 \mapsto x(u_1^* ,u_2)\), where \(x(u_1,u_2)\) is the unique solution to our governing model, with given initial condition \(x(0)=x_0\), corresponding to given \(u_1,u_2\). Correspondingly, we can introduce the reduced cost functionals \({J}_1(u_1,u_2):={\widetilde{J}}_1(x(u_1,u_2),u_1,u_2)\) and \({J}_2(u_1,u_2):={\widetilde{J}}_2(x(u_1,u_2),u_1,u_2)\). Therefore the solution to (35) can be written as \(u_1^*={{\,\mathrm{arg\,min}\,}}_{u_1} {J}_1(u_1,u_2^*)\), and the solution to (36) can be written as \(u_2^*={{\,\mathrm{arg\,min}\,}}_{u_2} {J}_2(u_1^*,u_2)\). In this framework, a classical iterative method for solving our NE problem is the relaxation scheme discussed in [20] and implemented in the following algorithm.

Algorithm 4.1

(Relaxation scheme)

In this algorithm, \(\tau \) is a relaxation factor that we specify in our numerical experiments. In general, no a priori rule for choosing \(\tau \) is available; however, in our numerical experiments we always observe convergence of this scheme with a moderate choice of the relaxation factor. A proof of the convergence of Algorithm 4.1 is given at the end of this section.
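Since the steps of Algorithm 4.1 are not reproduced above, the following sketch assumes the common relaxation update \(u^{k+1} = (1-\tau )u^k + \tau \,{\bar{u}}(u^k)\), where \({\bar{u}}\) collects the players’ best responses to \(u^k\). It applies this update to a hypothetical static quadratic game whose best responses have closed forms; in the differential game, each best response would instead be obtained by solving the optimality system (38) or (39).

```python
import numpy as np

A_TARGETS, NU = (1.0, -1.0), 1.0   # hypothetical static game: x = u1 + u2

def best_response_1(u2):
    # argmin_{u1} 0.5*(u1 + u2 - a1)^2 + 0.5*nu*u1^2
    return (A_TARGETS[0] - u2) / (1.0 + NU)

def best_response_2(u1):
    # argmin_{u2} 0.5*(u1 + u2 - a2)^2 + 0.5*nu*u2^2
    return (A_TARGETS[1] - u1) / (1.0 + NU)

def relaxation(u0, tau=0.5, tol=1e-12, max_iter=500):
    """u^{k+1} = (1 - tau) u^k + tau * ubar(u^k). The two best responses
    depend only on u^k, so they could be computed in parallel."""
    u = np.asarray(u0, dtype=float)
    for k in range(max_iter):
        ubar = np.array([best_response_1(u[1]), best_response_2(u[0])])
        u_new = (1.0 - tau) * u + tau * ubar
        if np.linalg.norm(u_new - u) < tol:
            return u_new, k + 1
        u = u_new
    return u, max_iter

u_star, iters = relaxation([0.0, 0.0])
```

For this toy game, the relaxed map is affine with eigenvalues \((1-\tau ) \pm \tau /(1+\nu )\), so it contracts for every \(\tau \in (0,1]\) and the iterates approach the NE \((1,-1)\); for the differential game, as remarked above, no such a priori range for \(\tau \) is available.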

The main advantage of the above algorithm is that we can compute \({\bar{u}}_1\) and \({\bar{u}}_2\) separately (in parallel) using an efficient optimization scheme. Specifically, given \(u_2^k\), we compute \({\bar{u}}_1\) by solving the optimality system given by

In a similar way, given \(u_1^k\), we can compute \({\bar{u}}_2\) by solving the following optimality system

Next, we give a short description of the semi-smooth Newton scheme that we implement for solving (separately) the optimality systems (38) and (39); for more details, see [8, 9]. Denote \(\eta :=(x,u_1,p_1)\) and define the map \(\mathcal {F}(\eta ) := \Bigl ( \mathcal {F}_{1}(\eta ) , \mathcal {F}_{2}(\eta ) , \mathcal {F}_{3}(\eta ) \Bigr )^{T}\), which represents the residuals of the adjoint, state, and optimality condition equations. Therefore, the solution to our optimality systems corresponds to the root of \(\mathcal {F}(\eta ) = 0\), which can be determined by a Newton procedure. However, for this purpose we need the Jacobian of \(\mathcal {F}\), and \(\mathcal {F}\) is not differentiable in the classical sense with respect to \(u_n\) because of the projection operator.

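On the other hand, by sub-differential calculus, it is possible to construct a generalized Jacobian \(\nabla _{\eta } \mathcal {F}\), such that the following Newton equation is obtained [9]

$$\begin{aligned} \nabla _{\eta } \mathcal {F}(\eta ^k)\, \delta \eta = - \mathcal {F}(\eta ^k), \qquad \eta ^{k+1} = \eta ^k + \delta \eta . \end{aligned}$$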

This equation can be solved by a Krylov method, which only requires the action of the Jacobian on a given vector; this avoids assembling \(\nabla _{\eta } \mathcal {F}\) and results in a matrix-free semi-smooth Newton–Krylov procedure. This is the method that we use in Steps 1 and 2 of Algorithm 4.1, and for computing the Pareto points discussed in the following section.
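The matrix-free Newton–Krylov idea can be sketched on a finite-dimensional stand-in. The optimality systems (38)–(39) are not reproduced here, so the example below assumes a hypothetical box-constrained quadratic problem, \(\min \frac{\nu }{2}|u|^2 + \frac{1}{2}u^{\top }Ku - c^{\top }u\) subject to \(lo \le u \le hi\), whose optimality condition has the same projection form \(u = \mathcal {P}_{[lo,hi]}((c-Ku)/\nu )\) as the projected control updates. The generalized Jacobian \(v \mapsto v + D K v/\nu \), with D the indicator of the inactive set, is only applied inside SciPy's LinearOperator and gmres, never assembled. The function name and the test data are illustrative assumptions.

```python
import numpy as np
from scipy.sparse.linalg import LinearOperator, gmres

def semismooth_newton(K_apply, c, nu, lo, hi, n, tol=1e-10, max_iter=50):
    """Semi-smooth Newton for the projected optimality condition
        u = P_[lo,hi]((c - K u) / nu),
    i.e. for min nu/2 |u|^2 + 1/2 u^T K u - c^T u subject to lo <= u <= hi.
    K_apply(v) must return K @ v; the generalized Jacobian is only applied."""
    u = np.zeros(n)
    for it in range(max_iter):
        z = (c - K_apply(u)) / nu
        F = u - np.clip(z, lo, hi)                   # residual of the optimality condition
        if np.linalg.norm(F) < tol:
            return u, it
        d = ((z > lo) & (z < hi)).astype(float)      # generalized derivative of the projection
        # Action of the generalized Jacobian: v -> v + D K v / nu (never assembled).
        G = LinearOperator((n, n), dtype=float,
                           matvec=lambda v, d=d: np.ravel(v) + d * K_apply(np.ravel(v)) / nu)
        step, info = gmres(G, -F)                    # matrix-free Krylov solve
        u = u + step
    return u, max_iter

# Hypothetical test problem: tridiagonal SPD K, sine-shaped data, box [-0.5, 0.5].
n = 50
K = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
c = 3.0 * np.sin(np.linspace(0.0, np.pi, n))
u, iters = semismooth_newton(lambda v: K @ v, c, nu=1.0, lo=-0.5, hi=0.5, n=n)
```

For a matrix K of this type (positive diagonal, nonpositive off-diagonal entries), the iteration behaves like a primal-dual active-set method and typically stops after a few steps once the active set is identified; the inexact GMRES solves only slow down the final steps slightly.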

Notice that we have discussed our solution methodology at a functional level. However, its numerical realisation requires approximating the optimality system by appropriate numerical schemes. In particular, we approximate our model using the so-called modified Crank–Nicolson (MCN) scheme. For this purpose, the time domain [0, T] is subdivided into uniform intervals of size h with \(N_{t}\) grid points, such that \(t^{j}=(j-1)h\) and \(0=t^{1}< \cdots <t^{N_{t}}=T\).
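As an illustration of Crank–Nicolson-type time stepping on this grid, the sketch below treats the special case \(f^0(x)=Ax\), \(F_j(x)=B_jx\), so that each step requires only one linear solve. On each interval \([t^k,t^{k+1}]\), the coefficient matrix uses the averaged control values. This averaging is an assumption for illustration; it is not necessarily how the MCN scheme used in this paper evaluates the controls, and the matrices and controls in the usage lines are hypothetical.

```python
import numpy as np

def cn_bilinear(A, Bs, us, x0, T):
    """Crank-Nicolson-type stepping for x' = (A + sum_j u_j(t) B_j) x, x(0) = x0.
    us[j, k] holds u_j(t^k) on the uniform grid 0 = t^1 < ... < t^{N_t} = T.
    On [t^k, t^{k+1}] the coefficient matrix uses the averaged control (assumption)."""
    us = np.atleast_2d(us)
    n_t, n = us.shape[1], len(x0)
    h = T / (n_t - 1)
    I = np.eye(n)
    xs = np.empty((n_t, n))
    xs[0] = x0
    for k in range(n_t - 1):
        u_mid = 0.5 * (us[:, k] + us[:, k + 1])
        M = A + sum(uj * Bj for uj, Bj in zip(u_mid, Bs))
        # (I - h/2 M) x^{k+1} = (I + h/2 M) x^k
        xs[k + 1] = np.linalg.solve(I - 0.5 * h * M, (I + 0.5 * h * M) @ xs[k])
    return xs

# Hypothetical skew-symmetric example (norm-conserving dynamics).
A = np.array([[0.0, 1.0], [-1.0, 0.0]])
B = np.array([[0.0, 2.0], [-2.0, 0.0]])
t = np.linspace(0.0, 1.0, 201)
xs = cn_bilinear(A, [B], np.sin(5.0 * t)[None, :], np.array([1.0, 0.0]), 1.0)
```

For skew-symmetric A and \(B_j\), each step is a Cayley transform and therefore preserves the Euclidean norm of the state up to round-off, which is relevant for norm-conserving (e.g., quantum) dynamics; the trapezoidal rule is second-order accurate in h.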

To conclude this section we prove that Algorithm 4.1 is convergent. For this purpose, we need the following result.

Lemma 4.1

The maps \(u \mapsto p_i(u)\), \(i=1,2\), are bounded in [0, T] and Lipschitz continuous in \(u\in \mathcal {U}(M), M\in (0, \infty )\), i.e.,

where \(\mathcal {K}_{p_i}, i=1,2,\) are monotonically increasing with T.

The proof is similar to those given in Lemmas 3.1 and 3.2, hence omitted.

Theorem 4.2

Let \(\mathcal {B}(v^*)\) be a closed ball of \(\mathcal {U}(M)\), \(M \in (0,\infty )\), centered at \(v^*\), a NE for the game. Assume that for all \(v \in \mathcal {B}(v^*)\) the optimality systems (38)–(39) are uniquely solvable.

Then, the relaxation scheme proposed in Algorithm 4.1 is convergent in \(\mathcal {B}(v^*)\).

Proof

In the following we omit the dependence on t of x and \(p_i\), \(i=1,2.\)

Consider \(\mathcal {B}(v^*)\), a closed ball of \(\mathcal {U}(M)\) centered at \(v^*\), a NE solution for the game. By our assumption, the optimality systems (38)–(39) are uniquely solvable in \(\mathcal {B}(v^*)\).

We want to show that the map \(A : \mathcal {B}(v^*) \rightarrow \mathcal {B}(v^*) \), defined as

is a contraction, where \(\bar{u}(v)\) is the solution of (38)–(39), i.e.,

with \(v:= (v_1, v_2)\) and \(\bar{v}_1 := \bar{u}_1(v), \bar{v}_2 := \bar{u}_2(v)\).

Let now \(v \in \mathcal {B}(v^*)\). By the assumption on \(\mathcal {B}(v^*)\), the map A is well defined.

Next, we prove that A is a contraction in \(\mathcal {B}(v^*)\).

Let \(v:=(v_{1}, v_{2}),w:=(w_{1}, w_{2}) \in \mathcal {B}(v^*)\) and compute \(\Vert A(v) - A(w) \Vert _{L^2}\). Since

we first show the Lipschitz continuity of the map \(v \mapsto \bar{u}(v)\), to get

For this purpose and recalling (43), we need to estimate

and

We estimate (44) and the same holds for (45). The aim is to use the boundedness and Lipschitz continuity of the functions x and \(p_1\) proved in Lemmas 3.1, 3.2, 4.1, and of \(F_1\). Adding and subtracting the following quantities from (44),

we get,

Since x, \(p_1\) are Lipschitz continuous in u and bounded, and \(F_1\) is Lipschitz in x and bounded, it follows

where \(C:= C(T)\) is the maximum of the bounds of \(p_1\) and \(F_1\), and \(L_{F,x,p} := L_{F,x,p} (T)\) is the maximum of the Lipschitz constants of \(F_1, x, p_1\). Note that the bound of \(F_1\) depends on \(\mathcal {K}(T)\) defined in Lemma 3.1. Moreover, C and \(L_{F,x,p}\) are monotonically increasing functions of T.

Repeating the same calculations for (45), we obtain

and hence

where \(\hat{C}:= 2 C L_{F,x,p}\) is a monotonically increasing function of T. Hence, the map \(v \mapsto \bar{u}(v)\) is Lipschitz continuous with constant

Therefore A is a contraction if

Since \(\mathcal {B}(v^*)\) is a closed subset of the complete space \(L^2 (0,T)\), it is itself complete. Hence, choosing the parameters \(\tau , \nu \) and T such that (48) holds, the Banach fixed-point theorem implies that A admits a unique fixed point in \(\mathcal {B}(v^*)\), and Algorithm 4.1 converges. \(\square \)
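To illustrate the contraction argument, the following Python sketch runs a relaxation iteration on a toy two-player problem. It assumes that A has the classical relaxation form of Krawczyk and Uryasev [20], \(A(v) = (1-\tau )\,v + \tau \,\bar{u}(v)\), and it replaces the solution of the optimality systems (38)–(39) by a hypothetical best-response map `best_response` with explicit linear coupling, projected pointwise onto \([-M, M]\) to mimic \(\mathcal {U}(M)\). Under this assumption, if \(\bar{u}\) is Lipschitz continuous with constant L, then \(\Vert A(v)-A(w)\Vert \le (|1-\tau | + \tau L)\Vert v-w\Vert \), so the iteration contracts whenever \(|1-\tau | + \tau L < 1\); in the toy problem L = 0.5 and \(\tau = 0.5\) give the factor 0.75.

```python
import math


def best_response(v, t, M):
    """Hypothetical best-response map u_bar(v) for two players.

    Stand-in for solving the optimality systems (38)-(39): player 1 reacts to
    v2 and player 2 reacts to v1 through a linear rule with coupling 0.5, and
    each result is projected pointwise onto [-M, M]. The map is therefore
    Lipschitz continuous with constant L = 0.5.
    """
    def clip(s):
        return max(-M, min(M, s))

    v1, v2 = v
    u1 = [clip(math.sin(tk) + 0.5 * b) for tk, b in zip(t, v2)]
    u2 = [clip(-1.0 + 0.5 * a) for a in v1]
    return u1, u2


def l2_norm(f, dt):
    """Riemann-sum approximation of the L^2(0, T) norm of grid values f."""
    return math.sqrt(dt * sum(x * x for x in f))


def relaxation(v0, t, tau=0.5, tol_u=1e-3, M=60.0, max_iter=200):
    """Iterate v <- (1 - tau) v + tau u_bar(v) until the update is below tol_u."""
    dt = t[1] - t[0]
    v = v0
    for k in range(1, max_iter + 1):
        ub = best_response(v, t, M)
        v_new = tuple([(1 - tau) * a + tau * b for a, b in zip(vi, ui)]
                      for vi, ui in zip(v, ub))
        # L^2 norm of the update of both controls together
        diff = math.sqrt(sum(l2_norm([a - b for a, b in zip(vn, vi)], dt) ** 2
                             for vn, vi in zip(v_new, v)))
        v = v_new
        if diff < tol_u:
            return v, k
    return v, max_iter


T, Nt = 1.0, 101
t = [T * k / (Nt - 1) for k in range(Nt)]
v, iters = relaxation(([0.0] * Nt, [0.0] * Nt), t)
```

The stopping rule mirrors the control-update tolerance \(tol_u\) of Algorithm 4.1; the toy best response, the grid and the norm approximation are illustrative choices only. For this toy map the fixed point is known in closed form, \(u_1 = (\sin t - 0.5)/0.75\), \(u_2 = -1 + 0.5\,u_1\), which makes it easy to check the result.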

5 Bargaining Solution

Consider the case \(N=2\), and assume that the players agree to follow Nash’s bargaining scheme. We define the set S of all values of the functionals that can be achieved with admissible controls as follows

Hence, we consider the following Nash bargaining (NB) problem:

where \(d_i=J_i(u^{\mathrm{NE}})\) is the disagreement outcome for Player i. We assume that there exists at least one point \(s \in S\) such that \(d_i > s_i\) for \(i=1,2\), and that the NB problem has a solution \(u^* \in \mathcal {U}(M)\).

We recall that the best achievable outcomes of cooperation are given by the Pareto points [14], which form the so-called Pareto frontier. If this curve is convex, then it is characterized by the set of all solutions of (7). Therefore, the solution of the NB problem is sought on the Pareto frontier, as discussed by J. Nash in [23].

Next, we present a theorem that provides a characterisation of the solution to the NB problem on the Pareto frontier. This result is essential to formulate the algorithm for the computation of the NB point; see also [12].

Theorem 5.1

Let \(u^* \in \mathcal {U}(M)\) be such that

for all \(u \in \mathcal {U}(M)\), where

and

Then

Proof

Let us consider the following equation

Adding it term by term to (51), it follows that

Replacing the values of \(\mu _1, \mu _2\), we get

Multiplying both sides by \((d_2 - J_2(u^*))+(d_1 - J_1(u^*)) > 0\), it follows that

Then, dividing by \((d_2 - J_2(u^*))(d_1 - J_1(u^*)) > 0\), the following holds

and hence

Therefore we obtain

\(\square \)

Notice that in (52) and (53), \(\mu _1, \mu _2 \in (0,1) \) and \(\mu _1+\mu _2 = 1\). Theorem 5.1 states that it is possible to find a point on the Pareto frontier that maximizes the product \(\prod _{i=1}^2 \Big ( d_i - J_i (u)\Big )\).
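The characterization in Theorem 5.1 can be illustrated on a scalar toy problem. For two players, setting the derivative of \(\log (d_1 - J_1(u)) + \log (d_2 - J_2(u))\) to zero gives \((d_2 - J_2)J_1' + (d_1 - J_1)J_2' = 0\), i.e. stationarity of \(\mu _1 J_1 + \mu _2 J_2\) with \(\mu _1 \propto d_2 - J_2\) and \(\mu _2 \propto d_1 - J_1\). The Python sketch below uses these weights with the hypothetical objectives \(J_1(u) = (u-1)^2\), \(J_2(u) = (u+1)^2\) and a hypothetical disagreement point \(d = (4, 2)\). Pareto points are computed from the weighted problem in closed form, and the weight is updated by a damped fixed-point step of size \(\alpha \); this damped update is an illustrative choice and is not claimed to be the update rule of Algorithm 5.1.

```python
def J1(u):
    """Hypothetical objective of player 1."""
    return (u - 1.0) ** 2


def J2(u):
    """Hypothetical objective of player 2."""
    return (u + 1.0) ** 2


def pareto_point(mu1):
    """Minimizer of mu1*J1 + (1 - mu1)*J2, a point on the Pareto frontier.

    For these quadratics the minimizer is the weighted mean of the two
    unconstrained minimizers +1 and -1.
    """
    mu2 = 1.0 - mu1
    return mu1 * 1.0 + mu2 * (-1.0)


def nb_weight(u, d):
    """Weight mu1 that makes u stationary for log(d1 - J1) + log(d2 - J2)."""
    g1, g2 = d[0] - J1(u), d[1] - J2(u)
    return g2 / (g1 + g2)


def bargaining(d, mu1=0.5, alpha=0.01, tol=1e-10, max_iter=100_000):
    """Damped fixed-point iteration on mu1 (illustrative, hypothetical rule)."""
    for k in range(max_iter):
        u = pareto_point(mu1)
        target = nb_weight(u, d)
        if abs(target - mu1) < tol:
            return mu1, u, k
        mu1 += alpha * (target - mu1)
    return mu1, pareto_point(mu1), max_iter


d = (4.0, 2.0)
mu1, u_star, iters = bargaining(d)
```

At convergence, the computed Pareto point is a local maximizer of the Nash product \((d_1 - J_1)(d_2 - J_2)\), which is the behaviour Theorem 5.1 describes; for this toy data \(\mu _1 \approx 0.373\).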

Based on this result, we can introduce a computational method to obtain a solution for the bargaining problem; see also [12, 13]. It works as follows

Algorithm 5.1

(Bargaining solution)

In the above algorithm, we need to solve the optimization problem for the Pareto points in order to get the bargaining solution to our model. To this end, the following optimal control problem is considered

where \(\mu _1, \mu _2 \in (0,1)\), with \(\mu _1 + \mu _2 = 1\).

A solution to (57) is characterized by the following optimality system

Introducing the control-to-state map \((u_1, u_2) \mapsto x(u_1, u_2)\) as in the previous section and the reduced cost functional \(J(u_1, u_2) :={\widetilde{J}} (x(u_1, u_2), u_1, u_2)\), the solution to (57) can be written as

This problem is solved utilizing the semismooth Newton method discussed in Sect. 4.
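The semismooth Newton method of Sect. 4 is not reproduced here. As an illustration of the technique only, the Python sketch below applies a semismooth Newton iteration to a finite-dimensional model problem, \(\min _u \tfrac{1}{2}u^T Q u - g^T u\) subject to \(-M \le u \le M\), which stands in for a discretized reduced problem such as the reduced form of (57). The optimality condition is written as the nonsmooth equation \(F(u) = u - P_{[-M,M]}\big (u - \sigma (Qu - g)\big ) = 0\); a generalized Jacobian uses identity rows on the active set and \(\sigma Q\) rows on the inactive set, so that each step is a primal-dual active-set update. The tridiagonal matrix Q, the vector g and \(\sigma = 1/\nu \) are hypothetical choices, and the code uses NumPy.

```python
import numpy as np


def semismooth_newton(Q, g, M, sigma, tol=1e-10, max_iter=50):
    """Semismooth Newton for min 0.5 u^T Q u - g^T u subject to -M <= u <= M."""
    n = len(g)
    u = np.zeros(n)
    for k in range(max_iter):
        z = u - sigma * (Q @ u - g)
        F = u - np.clip(z, -M, M)      # nonsmooth residual of the optimality condition
        if np.linalg.norm(F) < tol:
            return u, k
        inactive = np.abs(z) < M       # where the projection acts as the identity
        # Generalized Jacobian: identity rows on the active set, sigma*Q rows elsewhere
        jac = np.eye(n)
        jac[inactive] = sigma * Q[inactive]
        u = u + np.linalg.solve(jac, -F)
    return u, max_iter


# Hypothetical test data: strictly diagonally dominant tridiagonal Q (an M-matrix)
n, nu, c, M = 50, 1.0, 0.25, 1.0
Q = (nu + 2 * c) * np.eye(n) - c * (np.eye(n, k=1) + np.eye(n, k=-1))
x = np.arange(1, n + 1) / (n + 1)
g = 3.0 * np.sin(2 * np.pi * x)
u, iters = semismooth_newton(Q, g, M, sigma=1.0 / nu)
```

Because F is piecewise linear here, once the active set is identified correctly the next Newton step solves the problem exactly, which is the finite-dimensional analogue of the fast local convergence reported for the semismooth Newton scheme.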

6 Numerical Experiments

In this section, we present results of numerical experiments to validate the theoretical discussion and the proposed algorithms. We start by considering a model of two uncoupled spin-1/2 particles, whose state configuration represents the density matrix operator and whose dynamics is governed by the Liouville–von Neumann master equation. This is a basic model of importance in nuclear magnetic resonance (NMR) spectroscopy. We remark that, for quantum dynamics constrained to the energy ground state, only transitions between magnetic (spin) states are possible. In this case, as discussed in detail in [4], the Pauli–Schrödinger equation represented in a basis of spherical harmonics becomes

where the complex-valued vector a(t) represents the time-dependent coefficients of the spectral discretization, and, in the Hamiltonian defined by the operator in square brackets, \({\tilde{H}}_0\) and \({\tilde{H}}_x\) are Hermitian matrices and \({\tilde{H}}_y\) is a skew-symmetric matrix. In an experimental setting, the z-component of the magnetic field, \(B_z\), may be fixed, and one would like to manipulate the spin orientation of the particle by acting with the transversal magnetic fields \(B_x\) and \(B_y\), which we identify with \(u_1\) and \(u_2\), respectively. In this case, the controlled dynamics of each spin-1/2 particle is described by the Hamiltonian

where \({\hat{\nu }}\) is the Larmor frequency, u is the control, and \(I_x\), \(I_y\) and \(I_z\) are the Pauli matrices. To represent this model, it is very convenient to choose a frame rotating with the Larmor frequency and to use the so-called real-matrix representation [4]. We obtain the following model

where

with \(c=483\) and

Let \(x_0= (0,0,1,0,0,1)^T\) be the initial state of the system and consider the following targets for the two players \(x_T^{(1)} = (1,0,0,1,0,0)^T\) and \(x_T^{(2)}= \big (0,\frac{1}{\sqrt{2}},-\frac{1}{\sqrt{2}},0,\frac{1}{\sqrt{2}},-\frac{1}{\sqrt{2}} \big )^T\). As in [2], we choose \(T=0.008\), and based on our estimate (31), we take \(\nu =2 \cdot 10^{-1}\).

Let \(u^0 :=(u_1^0, u_2^0)\). We choose \(u^0(t)=(0.1, 0.1)\), \(t \in [0,T]\). Our problem is to find \(u_1 \in \mathcal {U}_1(M)\), with \(M=60\), that steers the system from the initial state \(x_0\), where both spins point in the z-direction, towards the target state \(x_T^{(1)}\), where both spins point in the x-direction, and to find \(u_2 \in \mathcal {U}_2(M)\) that drives the system towards \(x_T^{(2)}\), where an inversion of orientation is desired.

To solve this NE problem, we use the relaxation scheme in Algorithm 4.1 with \(\tau = 0.5\) and \(tol_u = 10^{-3}\). In this method, the semi-smooth Newton scheme [7, 9] is employed to solve the optimality systems corresponding to the two controls. In this implementation, the differential equations are approximated by the MCN scheme with \(N_t=1000\).
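The MCN scheme named above is not reproduced here. As a rough, hypothetical illustration of time-stepping a bilinear model \(\dot{x} = (u_1(t) B_1 + u_2(t) B_2)\,x\), the Python sketch below uses a plain Crank–Nicolson step for a single spin-1/2 Bloch vector in the rotating frame, with skew-symmetric rotation generators \(B_1\) (about x) and \(B_2\) (about y). These matrices, the constant controls and T = 1 are illustrative assumptions and are not the matrices of the model above. Because the generator is skew-symmetric, each Crank–Nicolson step is a Cayley transform, so the Euclidean norm of the state is preserved up to rounding.

```python
import numpy as np

# Hypothetical rotation generators for one Bloch vector (x, y, z components)
B1 = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]])  # about x
B2 = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, 0.0], [-1.0, 0.0, 0.0]])  # about y


def crank_nicolson(x0, u1, u2, T, Nt):
    """Integrate x' = (u1(t) B1 + u2(t) B2) x with Crank-Nicolson on Nt steps."""
    dt = T / Nt
    I = np.eye(len(x0))
    x = np.array(x0, dtype=float)
    for k in range(Nt):
        tm = (k + 0.5) * dt                  # controls sampled at the step midpoint
        A = u1(tm) * B1 + u2(tm) * B2
        x = np.linalg.solve(I - 0.5 * dt * A, (I + 0.5 * dt * A) @ x)
    return x


# Rotate the spin from the z-direction to the x-direction with a constant u2
T, Nt = 1.0, 1000
xT = crank_nicolson([0.0, 0.0, 1.0], u1=lambda t: 0.0,
                    u2=lambda t: np.pi / (2 * T), T=T, Nt=Nt)
```

The transition from z to x mirrors, for one spin, the first player's target in this experiment; with \(N_t = 1000\) steps the second-order phase error of the Cayley transform is far below the tolerances used above.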

The tolerances for the convergence of the semi-smooth Newton method and of the Krylov linear solver are \(10^{-7}\) and \(10^{-8}\), respectively. These tolerances are always met before the maximum number of allowed iterations, which is set to 100, is reached. With this procedure, we obtain the NE controls depicted in Fig. 1, which give the NE point shown in Fig. 2 as a ‘*’-point. At the NE solution, \(\Vert x(T) - x_T^{(1)}\Vert _2 = 0.4967\) and \(\Vert x(T) - x_T^{(2)}\Vert _2 = 0.5996\).

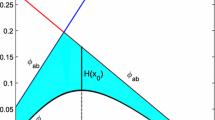

Starting from this point, we consider the Nash bargaining problem, which assumes cooperation in order to improve the players’ objectives. We solve the bargaining problem using Algorithm 5.1 with \(\mu _1^{(0)}=0.6\) and \(\alpha = 0.01\). We obtain \(\mu ^* = 0.47\), and the corresponding solution is also depicted in Fig. 2 as a \(\circ \)-point. This figure also shows our computed Pareto frontier, to which this point belongs.

In the second experiment with our quantum model, we replace the target of the second player with \(x_T^{(2)}=(0,1,0,0,1,0)^T\) and set \(M=15\). All other parameters are as in the previous experiment. In this case, the targets of the two players present similar difficulty. With this lower value of M, the constraints on the NE controls become active, as shown in Fig. 3.

With this solution, \(\Vert x(T) - x_T^{(1)}\Vert _2 = 0.4155\) and \(\Vert x(T) - x_T^{(2)}\Vert _2 = 0.4155\).

Furthermore, we consider the Nash bargaining problem. In this case, Algorithm 5.1 provides the solution \(\mu ^* = 0.5\), which is plotted in Fig. 4.

The results of the two experiments can be conveniently compared using Figs. 2 and 4. In the second experiment, the two targets lie on the same hemisphere, and therefore the game is more balanced than in the first experiment. This is also reflected in the positions of the NE and NB points relative to the Pareto frontier.

Next, to provide more insight into the performance of our algorithms, we report some results concerning the relaxation procedure and the semi-smooth Newton scheme. For the relaxation scheme in Algorithm 4.1, Tables 1 and 2 give its convergence history towards a NE point for the first and second experiment, respectively. In both tables, we see that initially both functionals are reduced, and thereafter the norms of the two gradients decrease until both vanish to high accuracy. Algorithm 4.1 stops when the updates of the controls become smaller than the given tolerance \(tol_u\). In some sense, these tables show the history of the game up to reaching the Nash equilibrium. A similar behaviour has already been reported in [20].

To conclude this experiment, we show that our semi-smooth Newton method exhibits quadratic convergence to the solution of the given optimality system. For this purpose, we consider solving (57) in the setting of the two experiments above with \(\mu =\mu ^*\). The resulting convergence behaviour is shown in Table 3.
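Quadratic convergence of the kind reported in Table 3 can be checked from a sequence of residual (or error) norms \(r_k\) through the empirical order \(q_k = \log (r_{k+1}/r_k)/\log (r_k/r_{k-1})\), which approaches 2 for quadratically convergent iterations and 1 for linearly convergent ones. The Python helper below is a generic sketch; the residual sequences in the example are synthetic and are not data from Table 3.

```python
import math


def empirical_orders(residuals):
    """Estimate q_k = log(r_{k+1}/r_k) / log(r_k/r_{k-1}) for consecutive residuals."""
    return [
        math.log(residuals[k + 1] / residuals[k])
        / math.log(residuals[k] / residuals[k - 1])
        for k in range(1, len(residuals) - 1)
    ]


# Synthetic, exactly quadratic sequence: r_{k+1} = r_k**2
r = [0.5]
for _ in range(4):
    r.append(r[-1] ** 2)
orders = empirical_orders(r)

# Synthetic, linearly convergent sequence for comparison: r_{k+1} = 0.5 * r_k
lin = [0.5 ** k for k in range(1, 6)]
orders_lin = empirical_orders(lin)
```

In practice the last one or two residuals are often polluted by rounding or by the inner Krylov tolerance, so the estimate is most meaningful in the middle of the history.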

Next, we present an application to the so-called competitive Lotka–Volterra equations; see [21] for more details. This model describes two species competing for the same limited food resources; for example, the species may compete for territory, which is directly related to food resources. In particular, each species grows logistically in the absence of the other. We focus on the following system

where \(b_i \;(>0)\), \(i=1,2\), are the birth rates of the two species and \(a_{ij}\;(>0)\), \(i,j=1,2\), are the competition efficiencies.

Suppose \(a_{ii}\) and \(a_{ji}\) can be controlled by player i, i.e. \(a_{ij} = a_{ij}^* + u_i\), with \(a_{ij}^* >0\). Then, the system (60) can be written as (1) in the following way

Note that in (61) there is no restriction on the sign of \(a_{ij}\); that is, mutualism and symbiosis are also admitted.

In the dynamical system (61), the function \(f^0(x)\) has the steady states \((0,0)^T, (0, \frac{b_2}{a_{22}^*})^T, (\frac{b_1}{a_{11}^*}, 0)^T\) and

(The latter representing co-existence.)
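For reference, the co-existence steady state can be written explicitly under the assumption, consistent with the steady states listed above, that (60) is the standard competitive Lotka–Volterra system \(\dot{x}_1 = x_1(b_1 - a_{11}x_1 - a_{12}x_2)\), \(\dot{x}_2 = x_2(b_2 - a_{21}x_1 - a_{22}x_2)\), evaluated with the starred coefficients. Setting both brackets to zero gives a 2×2 linear system, which Cramer's rule solves whenever \(a_{11}^* a_{22}^* \ne a_{12}^* a_{21}^*\):

```latex
a_{11}^* x_1 + a_{12}^* x_2 = b_1, \qquad a_{21}^* x_1 + a_{22}^* x_2 = b_2
\quad\Longrightarrow\quad
x_1 = \frac{b_1 a_{22}^* - b_2 a_{12}^*}{a_{11}^* a_{22}^* - a_{12}^* a_{21}^*}, \qquad
x_2 = \frac{b_2 a_{11}^* - b_1 a_{21}^*}{a_{11}^* a_{22}^* - a_{12}^* a_{21}^*}.
```

With the parameter values used below (\(b_1=b_2=1\), \(a_{11}^*=a_{22}^*=2\), \(a_{12}^*=a_{21}^*=1\)), this gives \((\tfrac{1}{3}, \tfrac{1}{3})^T\), which lies in the positive quadrant.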

Now, in our NE setting with the players’ objectives given in (4), we choose \(x_T^{(1)} = (\frac{b_1}{a_{11}^*}, 0)^T\) and \(x_T^{(2)} = (0, \frac{b_2}{a_{22}^*})^T\); that is, each species aims at the extinction of the other.

In our numerical simulations, the parameters are chosen as in [22], i.e. \(b_1=1, b_2=1, a_{11}^*=2, a_{22}^*=2, a_{12}^*=1, a_{21}^*=1\). Therefore \(x_T^{(1)} = (\frac{1}{2}, 0)^T\) and \(x_T^{(2)} = (0, \frac{1}{2})^T\). Furthermore, let \(x_0 = (1.5, 1)^T\), \(\nu = 0.1\), \(T= 0.25\) and \(u_1^0 (t)=0, u_2^0(t)=0, t \in [0,T]\).
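To make the setting concrete, the Python sketch below integrates the uncontrolled dynamics \(f^0\) (\(u_1 = u_2 = 0\)) from \(x_0 = (1.5, 1)^T\) over [0, T] with T = 0.25, using the classical fourth-order Runge–Kutta scheme. It assumes that (60) is the standard competitive Lotka–Volterra system \(\dot{x}_1 = x_1(b_1 - a_{11}^*x_1 - a_{12}^*x_2)\), \(\dot{x}_2 = x_2(b_2 - a_{21}^*x_1 - a_{22}^*x_2)\) with the parameter values above; the integrator and the step count are illustrative choices, and the result is not the NE trajectory reported below.

```python
def f0(x, b=(1.0, 1.0), a=((2.0, 1.0), (1.0, 2.0))):
    """Assumed uncontrolled competitive Lotka-Volterra right-hand side."""
    x1, x2 = x
    return (
        x1 * (b[0] - a[0][0] * x1 - a[0][1] * x2),
        x2 * (b[1] - a[1][0] * x1 - a[1][1] * x2),
    )


def rk4(f, x0, T, Nt):
    """Classical fourth-order Runge-Kutta integration of x' = f(x) on [0, T]."""
    dt = T / Nt
    x = tuple(x0)
    for _ in range(Nt):
        k1 = f(x)
        k2 = f(tuple(xi + 0.5 * dt * ki for xi, ki in zip(x, k1)))
        k3 = f(tuple(xi + 0.5 * dt * ki for xi, ki in zip(x, k2)))
        k4 = f(tuple(xi + dt * ki for xi, ki in zip(x, k3)))
        x = tuple(xi + dt / 6.0 * (p + 2 * q + 2 * r + s)
                  for xi, p, q, r, s in zip(x, k1, k2, k3, k4))
    return x


xT = rk4(f0, (1.5, 1.0), T=0.25, Nt=1000)
```

Starting above both nullclines, both populations decrease over [0, 0.25] but remain far from the single-species states \(x_T^{(1)}\) and \(x_T^{(2)}\), which is why the players' controls are needed to push the system towards their targets.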

To solve this NE problem, we use Algorithm 4.1 with \(\tau = 0.5\) and \(tol_u = 10^{-3}\). All the parameters in the semi-smooth Newton method and in the Krylov linear solver are as in the previous experiments.

We obtain the NE controls depicted in Fig. 5, which give the NE point shown in Fig. 6, as a ‘*’-point. At the Nash equilibrium solution, we get \(\Vert x(T) - x_T^{(1)} \Vert _2 = 0.6875\) and \(\Vert x(T) - x_T^{(2)} \Vert _2 = 0.8374\) with \(x(T)= (0.8311, 0.6026)^T\), and \(J_1(u^{\mathrm{NE}}) = 0.2456\), \(J_2(u^{\mathrm{NE}}) = 0.3569\).

Concerning the convergence behaviour of our algorithms, we obtain results very similar to those shown in the previous experiments. Therefore they are omitted.

Starting from this point, we compute the bargaining solution using Algorithm 5.1 with \(\mu _1^{(0)} = 0.75\) and \(\alpha =0.01\). The corresponding point, obtained for \(\mu ^* = 0.57\), is drawn as a \(\circ \)-point in Fig. 6.

At the bargaining solution, we get \(\Vert x(T) - x_T^{(1)} \Vert _2 = 0.6441\), \(\Vert x(T) - x_T^{(2)} \Vert _2 = 0.7959\) with \(x(T) = (0.7925, 0.5739)^T\), and \(J_1(u^{\mathrm{NB}}) = 0.2297\), \(J_2(u^{\mathrm{NB}}) = 0.3300\).
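The reported distances can be cross-checked from the reported final states and targets, and the improvement of each player with respect to the disagreement point \(d_i = J_i(u^{\mathrm{NE}})\) follows directly from the reported objective values. The Python snippet below only recomputes these quantities from numbers stated in the text.

```python
import math

targets = [(0.5, 0.0), (0.0, 0.5)]                 # x_T^(1), x_T^(2)
x_ne, x_nb = (0.8311, 0.6026), (0.7925, 0.5739)    # reported x(T) at NE and NB
J_ne, J_nb = (0.2456, 0.3569), (0.2297, 0.3300)    # reported (J_1, J_2) at NE and NB


def dist(x, y):
    """Euclidean distance between two 2-vectors."""
    return math.hypot(x[0] - y[0], x[1] - y[1])


d_ne = [dist(x_ne, xt) for xt in targets]
d_nb = [dist(x_nb, xt) for xt in targets]
# Relative improvement of each objective and the Nash product of the gains
rel_gain = [(a - b) / a for a, b in zip(J_ne, J_nb)]
nash_product = (J_ne[0] - J_nb[0]) * (J_ne[1] - J_nb[1])
```

The recomputed distances agree with 0.6875, 0.8374, 0.6441 and 0.7959 up to rounding, and the bargaining solution improves \(J_1\) by roughly 6.5% and \(J_2\) by roughly 7.5% relative to the Nash equilibrium.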

7 Conclusion

A theoretical and numerical investigation of Nash equilibria (NE) and Nash bargaining (NB) problems governed by bilinear differential models was presented. In this setting, the existence of Nash equilibria was proved, and the equilibria were computed with a semi-smooth Newton scheme combined with a relaxation method. A related Nash bargaining problem was discussed, and a computational procedure for its solution was presented. Results of numerical experiments demonstrated the effectiveness of the proposed NE and NB computational framework.

Change history

21 July 2021

A Correction to this paper has been published: https://doi.org/10.1007/s13235-021-00394-z

References

Adams RA, Fournier J (2003) Sobolev spaces, 2nd edn. Elsevier/Academic Press, Amsterdam

Assémat E, Lapert M, Zhang Y, Braun M, Glaser SJ, Sugny D (2010) Simultaneous time-optimal control of the inversion of two spin-\(\frac{1}{2}\) particles. Phys Rev A 82:013415

Balder EJ (1997) A unifying approach to existence of Nash equilibria. Int J Game Theory 24:79–94

Borzì A, Ciaramella G, Sprengel M (2017) Formulation and numerical solution of quantum control problems. Society for Industrial and Applied Mathematics, Philadelphia

Bressan A (2011) Noncooperative differential games. Milan J Math 79(2):357–427

Broom M, Rychtar J (2013) Game-theoretical models in biology. Mathematical and computational biology. Chapman & Hall/CRC Press, Boca Raton

Ciaramella G, Borzì A (2015) SKRYN: a fast semismooth-Krylov–Newton method for controlling Ising spin systems. Comput Phys Commun 190:213–223

Ciaramella G, Borzì A (2016) A LONE code for the sparse control of quantum systems. Comput Phys Commun 200:312–323

Ciaramella G, Borzì A, Dirr G, Wachsmuth D (2015) Newton methods for the optimal control of closed quantum spin systems. SIAM J Sci Comput 37(1):A319–A346

Ciarlet P (2013) Linear and nonlinear functional analysis with applications. Society for Industrial and Applied Mathematics, Philadelphia

Dockner E, Jorgensen S, Van Long N, Sorger G (2000) Differential games in economics and management science. Cambridge University Press, Cambridge

Ehtamo H, Ruusunen J, Kaitala V, Hämäläinen RP (1988) Solution for a dynamic bargaining problem with an application to resource management. J Optim Theory Appl 59(3):391–405

Engwerda J (2005) LQ dynamic optimization and differential games. Wiley, Chichester

Engwerda J (2010) Necessary and sufficient conditions for Pareto optimal solutions of cooperative differential games. SIAM J Control Optim 48(6):3859–3881

Friedman A (1971) Differential games. Wiley-Interscience, Hoboken

Friesz T (2010) Dynamic optimization and differential games. International series in operations research & management science. Springer, New York

Ge X, Ding H, Rabitz H, Wu R (2019) Robust quantum control in games: an adversarial learning approach. arXiv e-prints. arXiv:1909.02296

Isaacs R (1965) Differential games: a mathematical theory with applications to warfare and pursuit, control and optimization. Wiley, Hoboken

Jørgensen S, Zaccour G (2003) Differential games in marketing. International series in quantitative marketing. Springer, New York

Krawczyk JB, Uryasev S (2000) Relaxation algorithms to find Nash equilibria with economic applications. Environ Model Assess 5(1):63–73

Murray J (1989) Mathematical biology. Biomathematics texts, vol 19. Springer, Berlin

Mylvaganam T, Sassano M, Astolfi A (2015) Constructive \(\epsilon \)-Nash equilibria for nonzero-sum differential games. IEEE Trans Autom Control 60(4):950–965

Nash JF (1950) The bargaining problem. Econometrica 18(2):155–162

Nash JF (1950) Equilibrium points in n-person games. Proc Natl Acad Sci 36(1):48–49

Nash JF (1951) Non-cooperative games. Ann Math 54(2):286–295

Pardalos P, Yatsenko V (2010) Optimization and control of bilinear systems: theory, algorithms, and applications. Springer optimization and its applications. Springer, New York

Tolwinski B (1978) On the existence of Nash equilibrium points for differential games with linear and non-linear dynamics. Control Cybern 7(3):57–69

Varaiya P (1970) N-person nonzero sum differential games with linear dynamics. SIAM J Control 8(4):441–449

Zeidler E (1985) Nonlinear functional analysis and its application III: variational methods and optimization. Nonlinear functional analysis and its applications. Springer, New York

Acknowledgements

We are grateful to the Bayerisch-Französisches Hochschulzentrum (BFHZ) for partial support within the project ‘Multi-agent Fokker-Planck Nash games’ (Project Nr. FK32_2017), and to Abderrahmane Habbal (University of Nice Sophia Antipolis and INRIA) for many helpful discussions.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised due to a retrospective Open Access order.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Calà Campana, F., Ciaramella, G. & Borzì, A. Nash Equilibria and Bargaining Solutions of Differential Bilinear Games. Dyn Games Appl 11, 1–28 (2021). https://doi.org/10.1007/s13235-020-00351-2

DOI: https://doi.org/10.1007/s13235-020-00351-2

Keywords

- Bilinear evolution models

- Nash equilibria

- Nash bargaining problem

- Optimal control theory

- Quantum evolution models

- Lotka–Volterra models

- Newton methods