Abstract

We use census data on external assessments in primary and secondary schools in the Basque Country (Spain) to estimate learning losses due to the COVID-19 pandemic in March 2021, 1 year after school closures, which lasted from March to June 2020. Differences-in-differences with student and school-by-grade fixed effects show an average learning loss of 0.045 standard deviations, an effect that is smaller than short-run effects estimated by previous papers, and estimated after 6 months of one of the most successful school reopening campaigns among OECD countries. The effect is larger in Mathematics, moderate in Basque language, and none in Spanish language. Controlling for socioeconomic differences, learning losses are especially large in public schools, and also in private schools with a high percentage of low-performing students. On the other hand, we find a regression to the mean within schools, possibly due to a compressed curriculum during the whole period. Finally, and more importantly, we use unique novel data on student socio-emotional well-being and show for the first time that students with higher learning losses self-report significantly worse levels of socio-emotional well-being due to the pandemic.

1 Introduction

The COVID-19 outbreak has been a very large and sudden shock, which stopped face-to-face learning through school closures for several weeks or months, all over the world. One of the main concerns about the long-lasting effects of the pandemic is about learning and human capital. For instance, Hanushek and Woessmann (2020) estimate that students in grades 1–12 affected by school closures might expect some 3 per cent lower income over their entire lifetimes. For nations, the lower long-term growth related to such losses might yield an average of 1.5 per cent lower annual GDP for the remainder of the century. Hence, the extent and the persistence of the effects of the pandemic on students’ learning is a relevant question worldwide, and understanding and characterizing these effects, including their implications for inequality, is a crucial step towards designing effective policy responses.

In this paper, we use census data on external assessments of primary and lower secondary school students in the Spanish region of the Basque Country to study the effect of the pandemic on students’ learning. We observe students’ academic outcomes in primary and lower secondary school for two cohorts. On the one hand, the COVID-19 cohort (affected by school closures) took the primary school external assessment before the pandemic (in 2017) and the lower secondary school external assessment in March 2021. On the other hand, we observe a control cohort, which took both assessments before the pandemic (in 2015 and 2019). Hence, we estimate the learning effect of the pandemic by differences-in-differences, including student and grade fixed effects.

Our results show significant learning losses by March 2021, 1 year after the COVID-19 outbreak, and hence, 1 year after a period of school closures and remote learning of 3 months (March to June 2020), a summer break (June to September 2020) and 6 months of successful school reopening (September 2020 to March 2021). For instance, we find a learning loss in Mathematics of around 0.075 standard deviations (s.d.), and in Basque language, of 0.05 s.d. The average magnitude of our estimates is equivalent to 13% of the usual learning which would take place in a regular year. This is smaller (50% less) than the loss documented in other countries where learning was measured between June and September 2020, relative to baseline measurements in previous years or cohorts. This could be explained by two reasons: (1) a measurement effect, such that learning losses are over-estimated when measured immediately after a break (as in the summer learning loss; von Hippel and Hamrock 2019; von Hippel 2019); (2) an actual catch-up following the reopening of schools. The learning loss is largest for Mathematics (20% of a regular year's learning) and Basque language (11%). We do not find any learning loss in Spanish language. This is interesting because, in the Basque Country, education is organized through three language models and the most prevalent model gives high importance to the Basque language, a difficult language with no similarities with any other European language.

Furthermore, we study what type of students and schools have been most affected by the pandemic. We find that observed differences are driven mostly by school variables, as opposed to student variables, although we cannot rule out that omitted student variables may be playing a role. For instance, we find that learning losses are significantly larger in public schools, which represent approximately 50% of the school network. On the other hand, the effects are rather small in private schools. In this setting, private schools are publicly funded (although they often charge additional fees to parents) and privately managed, and represent the remaining 50% of the school network.

At the same time, we find that the learning loss varies significantly within the private school network. Indeed, we find that overall the pandemic has increased the gap between schools with high and low-performing pupils (based on pre-treatment performance, i.e. in primary school tests), and that this happens mostly within private schools. While high-performing private schools show no learning loss due to the pandemic, low-performing private schools feature significant learning losses. These differences also hold after accounting for students’ and schools’ socio-economic status, as well as other characteristics (such as gender, language spoken at home or migrant status) which we do not find to be significantly related to learning losses. These results suggest that schools’ reaction to the sudden shock has been a crucial moderator of the effect of the pandemic on learning.

While the pandemic increases the gap between schools with previously high and low-performing students, we also find a regression to the mean within schools. More precisely, we observe that students with higher scores in primary school are those with the largest learning losses, compared to similar students in the control cohort. This could reflect a compressed curriculum following the COVID-19 outbreak and the school reopening campaign in 2020/21.Footnote 1 On average, we do not find significantly different effects of the pandemic by students’ socio-economic status.

Finally, we study the relationship between learning and the deterioration in students’ attitudes towards school and socio-emotional well-being due to the pandemic. Importantly, we retrieve self-reported changes in socio-emotional well-being and attitudes towards school due to the pandemic from a unique survey completed by all COVID-19 cohort students during the external assessments. This allows us, for the first time, to simultaneously identify learning loss and socio-emotional outcomes during the COVID-19 outbreak and school closures. We find that the most disadvantaged students (both academically and socio-economically) report larger negative effects of the pandemic on well-being. We also find that students reporting larger well-being deterioration due to the pandemic feature significantly larger learning losses, both unconditionally and within groups (i.e. within schools, socio-economic status, and other characteristics). Besides their novelty, these results are policy-relevant because they show that students are very much aware of the effects of the pandemic on themselves, and highlight the large overlap between educational and socio-emotional well-being challenges due to the COVID-19 pandemic.

This paper makes three contributions to the growing literature on the learning and human capital effects of the pandemic, which we further develop below. First, we provide estimates of the learning loss 1 year after the beginning of the pandemic, using a differences-in-differences within-student design that allows us to address biases from cohort effects, and using validated external assessments to measure learning. Second, we characterize the learning loss across schools and students, documenting the importance of school effects. This is important to understand the mechanisms behind the learning loss due to the pandemic, and necessary to understand what are the best policies to address it. Third, and more importantly, we jointly study for the first time the relationship between learning loss due to the pandemic and students’ socio-emotional well-being deterioration. We show that these problems largely overlap and that students are aware of them, which is important for targeting policy responses.

1.1 Existing evidence on learning losses due to the pandemic

As outlined by Werner and Woessmann (2021), the crucial methodological challenge for measuring learning losses due to the pandemic is addressing bias from cohort effects. This requires individual-level longitudinal data, to observe how the students tested after the school closures had performed on tests before the school closures, and to compare them to earlier cohorts. As of October 2021, the only study that had access to that type of data was Engzell et al. (2021). They exploit that national assessments take place twice a year in The Netherlands to study outcomes of students just before and after the first nationwide school closures that lasted 8 weeks and compare progress during this period to the same period in the 3 previous years. They find a learning loss of about 3 percentile points or 0.08 standard deviations. The effect is equivalent to one-fifth of a school year, the same period that schools remained closed, and it is larger among students from less-educated homes. Another important early study by Maldonado and De Witte (2021) uses school-level achievement data in a large sample of Catholic schools in Flanders (Belgium), where full closures continued for 2 months followed by partial openings. Controlling for a vector of school characteristics, they find large learning losses (0.17 s.d. in Math), and that schools with larger shares of disadvantaged students show larger losses.

An open and important question is whether the learning losses documented immediately after school closures will persist over time. Our first contribution is to provide a design resembling Engzell et al. (2021) (i.e. a differences-in-differences design addressing bias from cohort effect) that measures learning outcomes 1 year after the beginning of the pandemic (i.e. after school closures and remote learning for 3 months, between March and June 2020), a summer break (June–September 2020), and 6 months of successful school reopening (September 2020–March 2021). We compare the progress made by students before and during the pandemic between primary and secondary school, and compare such progress with the same period, for the previous cohort. We find significant learning losses, suggesting that this is a persistent effect, although nevertheless smaller in magnitude, implying that there may be room for some catching up over the medium and long run.

Other studies have estimated learning losses at different points in time. Contini et al. (2021) designed an examination for 2000 primary school children in Torino, and matched the sample with test scores from a pre-pandemic national standardized assessment. They find a large learning loss in mathematics (−0.19 s.d.), larger for well-performing children of low-educated parents. This is measured in October 2020, shortly after school closures and the summer break. In Denmark, Birkelund and Karlson (2021) estimate the learning loss in mid-2021, 14 months after the start of the pandemic (and including two school closures and two large reopening campaigns): they find that compared to the trend implied by pre-covid cohorts and grades, there is no major learning loss nor significant differences according to the economic level of the student. This is interesting because it suggests an attenuation of the learning loss. Our results imply some attenuation, but still some persistence within a similar time frame. Using a differences-in-differences design similar to that of this paper, Birkelund and Karlson (2021) examine heterogeneous effects, finding little evidence of widening learning gaps by family background, as in our results. A report by the UK’s Education Department assessing learning throughout 2020/2021 suggests that periods of school closure (March–June 2020, and January–March 2021) display losses, while periods of reopening (Autumn 2020 and Spring 2021) display a catch up.Footnote 2

Other relevant contributions include Schult and Lindner (2021), who compare standardized means across cohorts in Baden-Württemberg; Tomasik et al. (2021), who use latent growth models comparing learning in Switzerland in the eight weeks of confinement versus the previous eight weeks; and Lichand et al. (2021), who show a 36% increase in dropout risk and a 0.32 s.d. decline in test scores under remote learning in São Paulo, finding evidence that school reopenings significantly attenuated the learning loss, consistent with our results.

Other papers have focussed on time use. For instance, Grewenig et al. (2021) collected detailed information on students before and during school closures in a survey of 1099 parents in Germany. They find that students significantly reduced their daily learning time, especially low achievers. This pattern is interesting because we observe an opposite effect for learning (i.e. a regression to the mean). We find that the regression to the mean is mostly driven by a subset of schools (low-performing and public schools). Hence, this emphasizes the importance of school effects, which may act on top of individual-level time-use effects. Werner and Woessmann (2021) combine a review of the emerging international literature with new evidence from German longitudinal time-use surveys. They find that children’s learning time decreased severely during the first school closures, particularly for low-achieving students, and increased only slightly 1 year later.

1.2 Learning losses and socio-emotional well-being

The study of the effect of the pandemic on children’s socio-emotional well-being has received slightly less attention. Newlove-Delgado et al. (2021) show a deterioration in the mental health of young people aged 5–16 years in the UK. Likewise, Blanden et al. (2021) find significant behavioural and emotional difficulties for children due to the pandemic, using data from the UK Household Longitudinal Study. The magnitude of their effects is at least as large as the immediate impacts of school closures on learning implied by other studies.

Ravens-Sieberer et al. (2021a,b) compare mental health and well-being measures of children in Germany in the spring of 2020 with those of previous cohorts, finding significantly lower scores and a further deterioration in a second wave in early 2021. Again, they document substantial heterogeneity, with children from disadvantaged families experiencing more negative effects. Werner and Woessmann (2021) also present survey evidence that the socio-emotional well-being of students in Germany declined in the short run. Overall, their survey provides a mixed picture. They find a huge psychological burden for many children and families, but the majority of children eventually proved quite resilient to the situation, with most parents reporting no change in most dimensions of their child’s socio-emotional well-being during the school closures and some even reporting improvements. Nevertheless, they find substantial heterogeneity across families, consistent with our results. In Spain, Pizarro-Ruiz and Ordóñez-Camblor (2021) survey 590 confined Spanish children and teenagers between 8 and 18 years old, finding emotional and behavioural alterations during confinement.

To the best of our knowledge, ours is the first paper that brings together measures of learning loss with socio-emotional well-being problems due to the pandemic, showing their large overlap and outlining students’ awareness.

2 Institutional setting and data

2.1 The Basque school system

The Basque school system is self-managed, and financed through its tax revenues. It is subject to Spanish regulation for key issues regarding the structure of the system and levels, selection and training of the teaching profession, curriculum design, grade promotion or title expedition. The most recent data (2019/2020) show that the system enrolled 376,104 students between kindergarten and upper secondary (both general and vocational) education. Compulsory education consists of 6 years of primary education and 4 grades of lower secondary education.

The system is organized around two distinct school networks of equal size. Both of them are publicly funded, but one is state-owned (public schools), whereas the other is privately owned (private schools), run by religious entities or cooperatives which operate under a non-profit scheme.Footnote 3 These networks differ in their budgetary autonomy (private schools can use their resources for personnel and management more freely), student composition (private schools disproportionately enrol native students and students from higher socioeconomic backgrounds) and funding formula (the lack of sufficient public funding creates the need for those schools to obtain alternative sources of funding through voluntary parental co-payments).Footnote 4 In addition, the system is organized in three different streams regarding language of instruction: the A model (Spanish is the main language of instruction and Basque is taught as a single subject), the B model (which balances the weight of both languages in terms of hours of instruction) and the D model (Basque is the main language of instruction and Spanish is taught as a single subject). While about 34% of the population aged 5–24 has Basque as its mother tongue (some of whom share it with Spanish), around 75% of students attend the D language model.Footnote 5 Hence, Basque is a language that is largely learnt at school, rather than at home.

2.2 External assessments, data collection, and school selection

The Basque Institute for Research and Evaluation in Education (ISEI-IVEI) is a public agency dependent on the Department for Education of the Basque Country. The ISEI-IVEI is responsible for the design, development and reporting of external assessments; and is also in charge of producing and promoting education research knowledge. Since 2009, the ISEI-IVEI has been effectively implementing external diagnosis evaluations (Evaluaciones de Diagnóstico, or EDs), of a census nature. These evaluations take place every 2 years at mid-stage grades in primary (4th grade) and lower secondary (the equivalent to 8th grade), usually at the beginning of the third academic term. This means that in practice, with minor exceptions (students that repeat grade, newcomers or drop-outs), most students from cohorts which participate in the 4th grade test end up participating again 4 years later, in the grade 8 assessment, allowing for a longitudinal analysis. In recent years, these assessments took place in February and March 2015, March 2017, May 2019, and March 2021.

EDs are used for formative purposes: students and their families as well as school principals receive an individualized report about their individual or school performance, contextualized by the student or school’s socio-economic status. Specific student or school improvement plans are set through the support of schools and teachers (for students) and the inspectorate and teacher training centres (for schools). Student and school results cannot be made public by law, avoiding any sort of rankings or school indicators.

EDs are competency-based assessments where student competency is estimated through an Item Response Theory model based on students’ responses to various items: given the multiple-choice nature of the test, ISEI-IVEI implements a two-parameter model (Birnbaum 1968) for dichotomously scored responses and the generalized partial credit model (Muraki 1992) for items with more than two ordered correct response categories. Common items allow results to be scaled so that they are comparable with previous years on the same scale.
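
For reference, the two models named above are usually written as follows. This is the standard textbook form, not necessarily the exact parameterization used by ISEI-IVEI, which is not described here. The symbols are assumptions introduced for illustration: $\theta_i$ is the competency of student $i$, $a_j$ the discrimination of item $j$, $b_j$ its difficulty, and $b_{jv}$ the step parameters of a polytomous item with categories $k = 0, \dots, m_j$ (an empty sum is taken to be zero). Any scaling constant is omitted.

```latex
% Two-parameter logistic model for a dichotomously scored item j
\[
P(X_{ij} = 1 \mid \theta_i)
  = \frac{\exp\left[a_j(\theta_i - b_j)\right]}
         {1 + \exp\left[a_j(\theta_i - b_j)\right]}
\]

% Generalized partial credit model for an item j with ordered categories k = 0, ..., m_j
\[
P(X_{ij} = k \mid \theta_i)
  = \frac{\exp\left[\sum_{v=1}^{k} a_j(\theta_i - b_{jv})\right]}
         {\sum_{c=0}^{m_j} \exp\left[\sum_{v=1}^{c} a_j(\theta_i - b_{jv})\right]}
\]
```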

EDs focus on three key domains which all students have to take: Mathematics, Basque language and Spanish language. Additionally, other competencies are assessed, but not for all students and not necessarily every year, such as Science, English language competency, Social and Citizen competency or Learning to Learn competency. In our study, we compare the cohort of students mostly born in 2005 (and hence taking the 4th-grade assessment in 2015 and the 8th-grade assessment in 2019) as the control group with the cohort of students mostly born in 2007 (taking the 4th-grade assessment in 2017 and the 8th-grade assessment in 2021) as the treated group, that is, the cohort affected by COVID-19.

In Spain, education external assessments are partially transferred to regions (Autonomous Communities), although the Ministry of Education is theoretically responsible for organizing national assessments. While other regions in Spain also run external assessments, the ISEI-IVEI’s EDs have three fundamental advantages, especially when it comes to the comparability of results and its usage of data for research. First, its aforementioned longitudinal structure. Second, as in PISA, EDs have common items throughout the years to compare students’ learning over time and across cohorts. Third, the ISEI-IVEI EDs are the only regional evaluations that are applied by an external party outside the schools and the system, and therefore, the reliability of the data is much higher.

The 2021 edition was conditioned by the circumstances regarding school reopening while COVID-19 pandemic outbreaks were still relevant and the incidence of the virus was prevalent and high. Like all schools in Spain, the Basque Country closed its schools between March 12 and the end of the school year 2019/2020. During the school year 2020/21, all schools reopened successfully, but many schools operated in the morning shift to reduce the risk of contagion during lunch breaks in indoor spaces. Schools were pressed to provide regular services in a compressed schedule, whereas, at the same time, they had to attend individual needs of the students most harmed by the school closures between March and June 2020. OECD data suggest that the Spanish education system was successful regarding the school reopening campaign in 2020/21: while Spain stood 10th among OECD countries with fewer school days lost by December 31, the system advanced to the 4th position by May 31.Footnote 6

Data from the Basque Education system shows that the proportion of classes opened in the school year 2020/21 was always above 98.4% and on average near 99%, with January to March (before the EDs) witnessing participation always over 98.6%, even with a COVID-19 outbreak taking place in January 2021.Footnote 7 This figure is no different from the national figure in Spain, which stood between 98.6 and 99.7% in January–March 2021. These numbers were similar from September to December 2020.Footnote 8

Given the difficulties faced by schools regarding reopening, ISEI-IVEI allowed schools, for the first time, to participate in the EDs voluntarily. Once schools made their decisions, students within participating schools were not allowed to refuse to participate. Whereas in regular years the participation rate of schools and students was consistently very high (above 95% of schools), the 2021 edition of EDs took place with a reduced sample of schools (130 schools, compared to 326 schools in the control cohort), which enrol around 38% of students.

2.3 Data

We use data from two cohorts of students. The first one, the control cohort, took the primary school examination in 2015, and the lower secondary school examination in 2019. The second one, which we name the COVID or Treatment cohort, took the primary school examination in 2017, and the lower secondary school examination in 2021.

We focus on three learning outcomes, namely test scores in Mathematics, Spanish language and Basque language. We observe the students’ gender, socio-economic status, language spoken at home, immigrant status and basic school characteristics (including indicators on school ownership—private or public-, language model followed and average socio-economic status of students in the school of attendance). We classify students and schools as of either high (above median) or low (below median) socioeconomic status, following an SES index computed by ISEI-IVEI, which aggregates information from various measures, similarly to PISA (i.e. parental education, occupation, ownership of certain goods, time spent at home, or holidays). For the control cohort, we observe the test scores and individual covariates of all students, both in primary and secondary education. For the COVID cohort, we observe all students in primary education, and all students from participating schools in secondary education. We focus only on students whom we observe twice (i.e. in primary and secondary education).

2.3.1 Selection into exam-taking

One challenge in identifying the effect of the pandemic is that exam-taking is not compulsory for the COVID-19 cohort. Schools’ results cannot be made public by law, to avoid rankings, meaning that schools do not have incentives to participate (or not participate). Nonetheless, this may be a problem if exam-taking decisions are correlated with determinants of test scores. Selection into exam-taking depends on schools, not on individual students’ decisions. In schools which agreed to participate, only the classes which were confined at home due to positive COVID-19 cases were exempted from taking the tests.

The longitudinal nature of the data allows us to measure and address some selection concerns. The data contain information on the primary school performance of all students, both for the COVID cohort (i.e. the cohort taking the secondary school examination in March 2021) and for the control cohort. Hence, we can measure an important dimension of selection into exam-taking by looking at the primary school performance of the COVID cohort, compared to the control cohort.

In regular years, educational factors (i.e. grade repetition) are the main reason for not observing students in the secondary school examinations which were observed in the primary school exams. In the COVID cohort, however, many students who would normally participate do not take the secondary school test for new reasons. As a result, we expect the population of non-test-takers in the COVID cohort to have a higher level of past performance than previous cohorts. However, it is less clear whether the population of test-takers in the COVID cohort will be different, on average, compared to a regular year. For instance, if schools which do not take the test in 2021 tend to be high-performing schools, the COVID cohort participating in the 2021 test will be negatively selected. Instead, if schools which do not take exams in 2021 tend to be below-average schools, the COVID cohort will be positively selected.

Figure 1 displays the distribution of primary school test scores (average of Math, Spanish and Basque), comparing students who took and who did not take the secondary school test, for the COVID and the control cohort. The left panel of the figure shows that, as expected, non-takers in the COVID cohort have on average higher performance in primary school than non-takers from the control cohort. The right panel, however, shows that test-takers from the COVID cohort are rather similar (in terms of primary school past performance) to test-takers from the control cohort. We run Kolmogorov–Smirnov (K–S) tests for equality of the distributions. For non-test takers, the test statistic for the control cohort having lower test scores than the COVID cohort is 0.340, which measures the largest difference between the CDFs, with a p-value smaller than 0.0005. For test-takers, the test statistic for the COVID cohort having lower test scores than the control cohort is −0.0314 (the largest difference between the CDFs), with a p-value smaller than 0.0005.
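
For intuition, the one-sided K–S statistics quoted above are the largest signed gaps between two empirical CDFs. The Python sketch below computes them on toy data. The function name `ks_one_sided`, the sign convention (first sample minus second) and the scores are assumptions made for illustration; the sketch does not compute p-values and is not the code used for the paper.

```python
from bisect import bisect_right


def ks_one_sided(sample_a, sample_b):
    """Return (d_plus, d_minus) for the signed gap F_a(x) - F_b(x).

    d_plus is the largest positive gap (evidence that sample_a holds
    smaller values than sample_b); d_minus is the most negative gap.
    """
    a = sorted(sample_a)
    b = sorted(sample_b)
    d_plus, d_minus = 0.0, 0.0
    # The empirical CDFs only change at observed values, so it is
    # enough to evaluate the gap at every distinct data point.
    for x in sorted(set(a) | set(b)):
        gap = bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b)
        d_plus = max(d_plus, gap)
        d_minus = min(d_minus, gap)
    return d_plus, d_minus


# Hypothetical primary school scores, for illustration only.
control_scores = [1, 2, 3, 4]
covid_scores = [3, 4, 5, 6]
print(ks_one_sided(control_scores, covid_scores))
```

A large positive first element indicates that the first sample sits to the left of the second, which is the pattern described for non-test-takers.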

Hence, this pattern suggests that schools participating in the secondary school test in the Covid cohort are academically similar to the average school from the Control cohort. Table A1 in the Online Appendix reports further descriptives across cohorts. On top of primary school test scores, the gender, migrant, and student SES composition is rather similar. The main difference is that the Covid cohort features a lower share of public schools, and also a higher share of schools with low average performance and SES. To account for these differences, in all model regressions, we control for grade-by-school fixed effects, which account for any school-specific differences in performance across grades, and also present specifications which control for primary school test outcomes.

3 Effects on learning

We estimate learning losses by differences-in-differences. We use test scores as the outcome variable. As explained in Sect. 2.2, following Item Response Theory, test scores are scaled to the baseline year (2009) average performance using common items (items that are repeated in every wave) for Math, Basque and Spanish: the baseline year distribution of learning outcomes has its mean approximately at 250 points, with a standard deviation of 50 points. ISEI-IVEI experts provided the benchmark of 20 points as the equivalent of what a student learns per year.Footnote 9

We regress the test scores of student i in grade g on individual fixed effects (αi), grade fixed effects (δg) and an interaction between a COVID cohort dummy and a secondary school (2nd of ESO, or 8th grade) dummy. We also control for school-by-grade fixed effects, which allow each school to have a different intercept for primary and secondary school test scores. Hence, the identification assumption is that changes in test scores between primary and secondary education would be constant across cohorts in the absence of the pandemic. Note that the inclusion of individual fixed effects rules out bias due to students obtaining better or worse average results cohort after cohort. However, if students’ changes in test scores between primary and secondary school grow cohort after cohort, this would bias our results towards finding no effect. On the other hand, if students’ changes in test scores between primary and secondary school decline cohort after cohort, the results could be biased in the direction of confounding cohort trends with the effect of the pandemic. Unfortunately, we do not have data on earlier cohorts to test the plausibility of this assumption. Another important aspect is the interpretation of our pandemic effect estimate, which is drawn from a subsample of schools, in comparison with the average treatment effect that would arise for the universe of schools. Although the last section shows that the Covid cohort sample of schools is similar to the average across many dimensions, if schools with smaller learning losses are more eager to participate, or if participation correlates with an underlying resilience to learning activities under the pandemic, our estimate would capture a local treatment effect which would be a lower bound of the average treatment effect.
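
The specification described in this paragraph can be written compactly as below. This is a reconstruction from the verbal description, not a formula reproduced from the source. The symbols $\alpha_i$ and $\delta_g$ follow the text; $\gamma_{s(i,g),g}$ (school-by-grade effects), $\beta$ (the learning-loss coefficient), the indicator names and $\varepsilon_{ig}$ are notation assumed here.

```latex
\[
y_{ig} = \alpha_i + \delta_g + \gamma_{s(i,g),\,g}
       + \beta \,\bigl(\mathrm{COVID}_i \times \mathrm{Sec}_g\bigr)
       + \varepsilon_{ig}
\]
% y_{ig}: test score of student i in grade g (primary or secondary)
% COVID_i: 1 if student i belongs to the COVID cohort, 0 otherwise
% Sec_g: 1 for the secondary school (8th grade) assessment, 0 otherwise
```

Since each student is observed exactly twice, including $\alpha_i$ is equivalent to working with each student's change in score between primary and secondary school, so $\beta$ compares those changes across the two cohorts.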

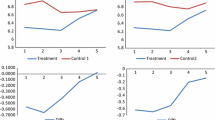

The left panel of Fig. 2 reports point estimates and 95% confidence intervals of the baseline differences-in-differences model, where we cluster the standard errors at the student level. We also report point estimates, standard errors, and further descriptives in Table A2 in the Online Appendix. The results show statistically significant learning losses by March 2021, 1 year after the COVID-19 outbreak, and hence, 1 year after school closures. The largest learning loss is in Mathematics. The magnitude of the estimate (3.8 points on the test scale) corresponds to 0.075 standard deviations (s.d.), equivalent to 20% of the learning in a regular school year. We also find a sizable effect for Basque, of about 2.2 points on the test scale (0.05 s.d. and 11% of the usual learning which would take place in a regular year). Finally, we find no significant learning losses for Spanish. Overall, the average learning loss is 0.043 standard deviations.
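
The conversions between scale points, standard deviations and year-equivalents can be approximated with a few lines of Python. This is a rough sketch that assumes the baseline standard deviation of 50 points and the 20-points-per-year benchmark stated in this section; the function name `convert_loss` is hypothetical. Because it starts from the rounded point estimates, its output can differ slightly from the reported figures, which presumably rely on unrounded estimates or on a sample standard deviation.

```python
SCALE_SD = 50.0         # s.d. of the baseline-year score distribution (from the text)
POINTS_PER_YEAR = 20.0  # ISEI-IVEI benchmark for one year of learning (from the text)


def convert_loss(points):
    """Convert a loss in scale points to (standard deviations, share of a school year)."""
    return points / SCALE_SD, points / POINTS_PER_YEAR


# Rounded point estimates reported in the text.
for subject, points in {"Mathematics": 3.8, "Basque": 2.2}.items():
    in_sd, year_share = convert_loss(points)
    print(f"{subject}: {in_sd:.3f} s.d., {year_share:.0%} of a school year")
```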

The pattern in the results of Fig. 2 across subjects is not surprising. Mathematics and Basque are complex subjects, which may have made the periods of online teaching especially challenging. On the other hand, students are more exposed to Spanish in their daily lives (e.g. in the media). Moreover, it is interesting to note that the magnitude of these learning loss estimates is smaller than that documented by the literature in the early months of the pandemic (Engzell et al. 2021; Maldonado and De Witte 2021). This could be explained by two reasons: (1) a measurement effect, due to measuring learning immediately after a break, as in the summer learning loss literature (von Hippel and Hamrock 2019; Von Hippel 2019); (2) an actual catch-up following an efficient school reopening. Regarding the latter, it is interesting to recall that the reopening campaign in the Basque Country and Spain was one of the most successful among OECD countries, as explained in Sect. 2.2.

We also estimate lagged-dependent-variable specifications, in which we regress the secondary school test score of student i on the student’s primary school test scores in the three subjects, school fixed effects αs(i), and a COVID cohort indicator.
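A lagged-dependent-variable specification of this kind could be sketched as follows for subject k. Only αs(i) is named in the text; the superscripts, the coefficients \(\beta\) and \(\rho_m\), and the error term \(\varepsilon_i\) are assumed notation.

\[
y^{k,\text{sec}}_{i} = \alpha_{s(i)} + \beta \,\text{COVID}_i + \sum_{m \in \{\text{Math},\, \text{Basque},\, \text{Spanish}\}} \rho_m \, y^{m,\text{prim}}_{i} + \varepsilon_i
\]

Here \(\beta\) again captures the learning loss, now conditional on prior achievement in all three subjects rather than on a student fixed effect.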

The right panel of Fig. 2 shows the learning loss estimates from lagged-dependent-variable models, which are very similar to the baseline estimates. One concern about these results is whether they come from a population with particularly low learning losses due to the pandemic. For instance, this could be the case if schools’ selection into exam taking is based on their perceived learning losses (Werner and Woessmann 2021). As a robustness check, we estimate the learning loss by differences-in-differences as in the baseline specification, but reweighting the COVID cohort sample such that the distribution of schools’ and students’ characteristics matches that of the control cohort (i.e. that of a full regular cohort), using entropy balancing (Hainmueller 2012). Entropy balancing relies on a maximum entropy reweighting scheme that calibrates unit weights so that the reweighted treatment and control groups satisfy a potentially large set of prespecified balance conditions, which incorporate information about known sample moments. In this case, we reweight the COVID cohort so that the reweighted sample features the same fraction of public schools, high-performing schools, high SES schools, high-performing students, high SES students, females, migrants, Basque speakers at home, and linguistic model types. The results in the bottom panel of Table A2 in the Online Appendix show estimates that are slightly larger but very similar. This suggests that heterogeneous attrition across groups is not significantly related to treatment effect heterogeneity.
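To illustrate the reweighting idea, the sketch below applies entropy balancing to a single binary covariate (for example, a public school indicator) with uniform base weights. In that special case the weights are proportional to exp(λ·x) and λ can be found by bisection. The data, the target share and the function names are hypothetical; the application in the text balances many characteristics jointly and would normally rely on a dedicated implementation.

```python
import math


def tilted_weights(x, lam):
    """Normalized weights proportional to exp(lam * x_i)."""
    raw = [math.exp(lam * xi) for xi in x]
    total = sum(raw)
    return [r / total for r in raw]


def weighted_mean(x, w):
    return sum(xi * wi for xi, wi in zip(x, w))


def entropy_balance_one_moment(x, target, lo=-20.0, hi=20.0, tol=1e-10):
    """Weights closest to uniform (in Kullback-Leibler divergence) whose
    weighted mean of x equals `target`. The weighted mean is increasing
    in lam, so a simple bisection on lam is enough."""
    for _ in range(200):
        mid = (lo + hi) / 2.0
        m = weighted_mean(x, tilted_weights(x, mid))
        if abs(m - target) < tol:
            break
        if m < target:
            lo = mid
        else:
            hi = mid
    return tilted_weights(x, mid)


# Hypothetical COVID cohort sample with 30% public school students,
# reweighted to match a hypothetical 50% share in the control cohort.
public = [1] * 3 + [0] * 7
weights = entropy_balance_one_moment(public, target=0.5)
print(round(weighted_mean(public, weights), 6))  # reweighted public school share
print(round(sum(weights), 6))                    # weights sum to one
```

With several balance conditions the same exponential form holds, with one multiplier per condition, and the multipliers are found by numerical optimization rather than bisection.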

3.1 Heterogeneous effects

The concern about the long-lasting effects of the pandemic on learning goes beyond its average effects on the student population. We next study heterogeneous effects across students and schools and their implications for inequality. We estimate the heterogeneous effect of a pre-determined covariate Zi (which may vary at the student or the school level) by interacting it with the learning loss term of the baseline specification.
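As a sketch in assumed notation (only Zi is named in the text), a specification of this kind could be written as:

\[
y_{ig} = \alpha_i + \delta_g + \gamma_{s(i),g} + \beta \,\bigl(\text{COVID}_i \times \text{Secondary}_g\bigr) + \theta \,\bigl(\text{COVID}_i \times \text{Secondary}_g \times Z_i\bigr) + \kappa \,\bigl(\text{Secondary}_g \times Z_i\bigr) + \varepsilon_{ig}
\]

Here \(\theta\) measures how the learning loss differs with Zi, and the term with \(\kappa\) lets the usual primary-to-secondary change differ by group in the absence of the pandemic. If Zi is fixed within student, Zi itself and its interaction with the COVID cohort indicator are absorbed by αi.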

We use a triple-difference estimator, in which we compare the learning loss (a difference-in-differences) across groups (Z). Table 1 reports the learning loss estimates across students’ and schools’ demographic and academic characteristics; results are presented for the average of the three subjects’ test scores as the dependent variable.
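As a minimal illustration of this comparison, and ignoring the fixed effects of the full model, the triple difference in a balanced two-period panel reduces to the difference between two group-specific differences-in-differences of mean score changes. The records and field names below are hypothetical.

```python
from statistics import mean

# Hypothetical records, one per student: change in test score between primary
# and secondary school, a COVID cohort flag, and a binary group indicator Z.
students = [
    {"covid": 0, "z": 0, "change": 10.0},
    {"covid": 0, "z": 0, "change": 12.0},
    {"covid": 1, "z": 0, "change": 8.0},
    {"covid": 1, "z": 0, "change": 9.0},
    {"covid": 0, "z": 1, "change": 11.0},
    {"covid": 0, "z": 1, "change": 13.0},
    {"covid": 1, "z": 1, "change": 6.0},
    {"covid": 1, "z": 1, "change": 8.0},
]


def mean_change(rows, covid, z):
    return mean(r["change"] for r in rows if r["covid"] == covid and r["z"] == z)


def diff_in_diff(rows, z):
    """Learning loss for group z: COVID cohort change minus control cohort change."""
    return mean_change(rows, 1, z) - mean_change(rows, 0, z)


def triple_diff(rows):
    """Difference in the learning loss between the Z = 1 and Z = 0 groups."""
    return diff_in_diff(rows, 1) - diff_in_diff(rows, 0)


print(diff_in_diff(students, 0))  # -2.5
print(diff_in_diff(students, 1))  # -5.0
print(triple_diff(students))      # -2.5
```

In this toy example the Z = 1 group loses 2.5 points more than the Z = 0 group, which is the quantity that the interaction coefficients in Table 1 are meant to capture.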

Column (1) shows that females and migrants have slightly larger learning losses, although these differences are not significant. It also shows no differences by language spoken at home. Column (2) shows that, on average, there is no learning loss difference between students of low and high socio-economic status (SES).

Column (3), however, shows that students with high learning outcomes back in primary school tend to have higher learning losses (we classify students as high or low performers depending on whether they scored above or below average). This means that the pandemic led to a regression to the mean in learning outcomes. Column (4) considers all individual covariates together in the same model, with similar findings.

Columns (5) to (7) focus on heterogeneous effects across schools. Column (5) shows that the learning loss was significantly larger for public schools than for private schools, which on average feature a learning loss close to zero. Column (6) shows that there are no significant learning loss differences between schools mostly enrolling students of high or low SES. Column (7), instead, shows that learning losses are significantly larger for schools which enrol pupils who on average had lower grades in elementary school, compared to those schools enrolling pupils with above-average grades in elementary school (High Perf. Schools). These results are interesting because they show that there is an important divergence in learning outcomes between schools due to the pandemic, mainly depending on school ownership and average past academic performance. Importantly, column (9) shows that these differences are not driven by differences in SES across public and private schools or high-performing versus low-performing schools. Column (9) summarizes the main result of this section, which is that the pandemic leads to unequal learning losses between schools, while at the same time, it leads to regression to the mean (concerning past academic performance) within schools. The within-school regression to the mean is emphasized in column (10), which reports a very similar coefficient after accounting for school-by-cohort-by-grade fixed effects.

In columns (11) to (14), we examine whether these heterogeneity patterns are driven by specific subsets of schools. Columns (11) and (12) split the sample by school ownership. The results show that the unequal learning loss between schools takes place mostly within private schools, and not at all in public schools. This means that the learning loss was rather evenly distributed across public schools, but very unequally distributed across private schools: private schools with high-performing pupils display no learning loss, or even learning gains, whereas private schools with previously low-performing pupils display significant learning losses. On the other hand, we observe regression to the mean within both groups.

Columns (13) and (14) split the sample by the average student’s academic performance in primary education (i.e. above and below average). We observe that regression to the mean happens mostly within schools which tend to enrol students with lower scores in primary education. In those schools, we also observe very small differences between private and public schools. On the other hand, this pattern reverses for schools with high-performing students. In those schools, we see no regression to the mean, but large differences between public and private schools in learning outcomes due to the pandemic. This reinforces the idea that schools were a crucial moderator of the effect of the pandemic on learning. Finally, in column (15), we show heterogeneous effects by linguistic model: the effects were concentrated in the Basque (model D, enrolling 65% of students) and Spanish (model A, enrolling 7.5% of students) lines, with little effect in the mixed model (model B, enrolling 27.5% of students).

The result that low-SES students are not more affected seems at odds with the evidence that public schools and low-performing schools are more affected, given that low-SES students are likely to attend these schools (indeed, Table A7 in the Online Appendix shows that low-SES students are 21 pp more likely to attend a public school or a low-performing school). Although they are more likely to attend these schools, Table A8 shows that, within them, they have slightly smaller learning losses than the average (especially in low-performing schools, where the difference is statistically significant). This ends up compensating for the public school effect. As a robustness check, we also examine whether learning losses differ by parental education, as an alternative measure of SES. The results in Table A9 suggest that this is not (significantly) the case.

We also report estimates of heterogeneous learning effects on each of the subjects (Mathematics, Basque, Spanish) in Tables A4, A5 and A6 in the Online Appendix. The main message is rather similar, especially for Basque and Spanish. We observe more negative effects in Basque and Spanish for those who speak the respective language at home, which is consistent with regression to the mean (they also tend to have higher scores in those subjects). For Mathematics, the heterogeneity patterns are slightly different. We still find very large learning losses for public schools and very small effects for private schools. However, we find that it is high SES schools rather than high-performing schools which exhibit smaller learning losses. On the other hand, we still find regression to the mean within schools, and again, that this is especially strong within low-performing schools.

Overall, these heterogeneous effects across schools, especially regarding public versus private schools, are consistent with existing survey evidence for Spain. Bonal and González (2020) fielded an online survey in March 2020 to examine variation in learning opportunities in Spain. They show that students enrolled in private schools, both independent and private subsidized ones, had significantly higher opportunity-to-learn scores than those in public schools. The authors argue that this could be because public schools did not develop school tasks at the very beginning of the lockdown while waiting for new instructions from the Government. However, private subsidized and independent schools did not stop their teaching activity. One of the plausible explanations for this difference lies in the economic dependency of private schools on fees, which means that they need to keep providing a service to users despite the exceptional circumstances.

Finally, we quantify the explanatory power of schools versus individual observed characteristics. To this aim, we construct an individual-level measure of learning loss due to the pandemic. In the first step, we regress \(\Delta_{\text{Test Scores}}^{\text{Secondary}-\text{Primary}}\) on a vector of school fixed effects and individual characteristics (female, migrant, language at home, SES, and performance in primary school), for the control cohort. Then, we study, for the COVID cohort, how much of the deviation from the prediction of the first-step regression \(\left(\Delta_{\text{Test Scores}}^{\text{Secondary}-\text{Primary}} - \hat{\Delta}_{\text{Test Scores}}^{\text{Secondary}-\text{Primary}}\right)\) is explained by school fixed effects, compared to individual characteristics. The results in Table 2 show that school effects indeed explain a much larger percentage of the sample variation in learning loss than individual characteristics, although we cannot rule out that other individual variables not included in the sample play a role, given that the unexplained part of the model remains substantial (88 per cent). This exercise is done on an estimate of the individual learning loss due to the pandemic (which we cannot perfectly observe, by construction, but simply approximate by comparison with the control cohort), which implies that these results are noisy. Nevertheless, the difference between the variation explained by school characteristics vis-à-vis individual characteristics is clear and favours the school effect hypothesis.
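The two-step logic can be sketched as follows. For brevity, the first step here predicts the score change with control cohort school means only (the first step described above also includes individual characteristics), and the second step reports the share of the variance of the COVID cohort deviations accounted for by school means, which equals the R-squared of a regression on school dummies. All data and names are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def school_means(rows):
    """Mean value per school from (school, value) pairs."""
    by_school = defaultdict(list)
    for school, value in rows:
        by_school[school].append(value)
    return {s: mean(v) for s, v in by_school.items()}


def share_explained_by_school(rows):
    """R-squared of a regression of value on school dummies:
    between-school sum of squares over total sum of squares."""
    means = school_means(rows)
    grand = mean(v for _, v in rows)
    total_ss = sum((v - grand) ** 2 for _, v in rows)
    between_ss = sum((means[s] - grand) ** 2 for s, _ in rows)
    return between_ss / total_ss


# Step 1: predicted score change per school, from a hypothetical control cohort.
control = [("A", 10.0), ("A", 12.0), ("B", 9.0), ("B", 11.0)]
predicted = school_means(control)

# Step 2: deviation of each COVID cohort student from the prediction.
covid = [("A", 9.0), ("A", 11.0), ("B", 5.0), ("B", 7.0)]
deviations = [(s, change - predicted[s]) for s, change in covid]

print(deviations)
print(share_explained_by_school(deviations))
```

The same between-over-total calculation could be repeated for groups defined by individual characteristics to compare their explanatory power with that of schools.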

3.2 Socio-emotional well-being and its link to learning loss

A unique feature of our dataset is that it combines cognitive outcomes with survey responses about students’ deterioration of socio-emotional well-being and attitudes towards school due to the pandemic; the survey was conducted during the external assessments. The survey questions answered by students are the following. On emotional well-being, (1) I have more anxiety and stress, (2) I want the pandemic to be over and live like before, (3) I rely on food to feel better, (4) Lately, I feel more attacked on social media. On social and family well-being, (5) I get along worse with my classmates, (6) I get along worse with my family. On attitudes towards school, (7) I behave worse in the classroom, (8) I’m not motivated to study.

Students reply on a scale from 1 to 4 with their degree of agreement with the given statements, with the answer taking a value of 1 if they fully disagree and a value of 4 if they fully agree. We measure socio-emotional well-being problems by constructing an average of the survey responses to all these questions. The average response is around 1.9, with a s.d. of 0.5, indicating that students tend to disagree with those statements (for reference, a student fully agreeing with 50% of the questions and fully disagreeing with the other 50% would score 2.5).
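The construction of the index and the 2.5 benchmark can be checked with a short sketch; the function name and the example responses are illustrative.

```python
from statistics import mean

N_ITEMS = 8          # eight survey statements
SCALE = range(1, 5)  # 1 = fully disagree, 4 = fully agree


def wellbeing_problem_index(responses):
    """Average agreement across the eight items (higher = more reported problems)."""
    if len(responses) != N_ITEMS:
        raise ValueError("expected one response per survey item")
    if any(r not in SCALE for r in responses):
        raise ValueError("responses must be integers from 1 to 4")
    return mean(responses)


# A student who fully agrees with half of the items and fully disagrees with the rest.
half_and_half = [4, 4, 4, 4, 1, 1, 1, 1]
print(wellbeing_problem_index(half_and_half))  # 2.5
```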

Panel A of Table 3 presents a descriptive analysis of the prevalence of well-being deterioration due to the pandemic across students and schools. It shows that the groups doing worse due to the pandemic are male students, students enrolled in public schools and schools with lower SES, and schools with prior lower performance in primary school. Overall, the results show substantial heterogeneity in well-being deterioration across groups, consistent with previous findings and the evidence that lower income comes along with worse access to healthcare (Werner and Woessmann 2021; Aubert et al. 2022).

In panel B of Table 3, we study the relationship between self-reported well-being deterioration and the test score difference between secondary and primary school. In column (1), we regress within-student changes in academic performance on our measure of well-being. The results show that students self-reporting more deterioration due to the pandemic are those performing worse in secondary school (compared to primary school). Nevertheless, this result could be simply capturing that students who typically perform worse in secondary school (compared to primary school) are those with more socio-emotional well-being issues.

In column (2), we relate socio-emotional well-being challenges to the learning losses which arise due to the pandemic. The dependent variable is now the difference between the student’s actual change in test scores between primary and secondary education and her predicted change. This predicted change is estimated with the control cohort, with a regression of learning differences between secondary and primary education on school FE and individual characteristics (gender, migrant status, language at home, SES, and elementary school performance). Hence, this outcome variable excludes variation coming from students who typically perform worse in secondary school than in primary school in any case. The results show that socio-emotional well-being issues are indeed significantly associated with learning losses that arise due to COVID-19 and school closures.

Finally, in column (3), we study whether the relationship between well-being challenges and learning losses due to the pandemic is driven by cross-group variation in learning losses, or whether it also takes place within groups (i.e. after controlling for school and individual characteristics). The results show that it also holds within groups: the association between learning losses and well-being challenges is very similar when focusing on within-group variation. In Table A10 in the Online Appendix, we show that this pattern is very similar across each of the subjects.

To sum up, these results show that students’ socio-emotional well-being challenges due to the pandemic largely overlap with learning losses, which suggests a role for more targeted and holistic policy responses. Moreover, the results also indicate that students are aware of these challenges, which could facilitate policy responses.

4 Conclusion and policy discussion

The findings of the study are policy-relevant for various reasons. First, we present evidence of learning loss 1 year after the start of the COVID-19 pandemic in March 2020, hence measuring the composite effect of 3 months of school closures and remote learning (March to June 2020), the 2020 summer break, and 6 months of reopened schools (September 2020 to March 2021). Our case study covers one of the most successful school reopening campaigns (in terms of school closure days) among OECD countries. The fact that the learning loss found is lower than in other countries’ experiences is consistent with the findings of Lichand et al. (2021) and of the UK Department for Education reports regarding the effect of school reopening on learning loss alleviation.

Regarding heterogeneous effects, we find no major learning loss gaps between students by socio-economic status. This suggests that the reopening campaign was successful in mitigating inequality and that there is no trade-off between a safe reopening and catch-up interventions. A safe reopening which focuses on all students could be, in the short term, the most efficient catch-up strategy for the pandemic’s learning loss.

However, we find that factors linked to schools are crucial mediators driving learning loss differences. The results show that the learning effects of the pandemic are mostly explained by between-school differences: we observe a large decline in learning for public schools, as well as a learning loss in private schools with prior low performance. One possible explanation lies in the differences in school autonomy between public and private schools, which are notable in Spain, especially when it comes to human and financial resource management (OECD 2016): greater autonomy would entail an important advantage when managing remote teaching (while schools are closed) as well as school reopening campaigns.

While the pandemic increases differences between schools, we also find a regression to the mean within schools. We observe that students with higher scores in primary school are those with the largest learning losses, which could reflect a compressed curriculum following the COVID-19 outbreak.

We also find heterogeneous effects across subjects, with a higher learning loss in Mathematics and Basque language. One possible explanation for this is that these are complex subjects, which may have made the periods of online teaching especially challenging. On the other hand, students are more exposed to Spanish in their daily lives (e.g. in the media). Mathematics and Basque are both taught in the Basque language for the vast majority of students, including many who do not speak it as their primary language at home. However, if anything, and contrary to what would be expected, those who speak Basque at home feature larger learning losses in those subjects: this would be consistent with the regression to the mean, as Basque speakers at home tend to have higher scores in both subjects.

Moreover, to the best of our knowledge, this is the first paper showing a link between learning losses and socio-emotional well-being deterioration during the pandemic, which suggests strong complementarities between the two. This is possible thanks to unique data on student socio-emotional well-being during the pandemic that can be paired with learning losses. The finding is relevant for early detection and assessment, as socio-emotional well-being surveys (easier to implement than external assessments) can serve as optimal instruments to simultaneously detect students’ socio-emotional and academic challenges. It is also relevant for policy: responses that address students’ learning loss should incorporate socio-emotional and psycho-social support strategies to address both challenges.

Finally, our results lead to several interesting questions, which we hope will be addressed by future research. First, we would like to keep monitoring the learning loss over time. We believe studying the persistence of this shock can be broadly informative about the dynamics of human capital accumulation and learning. Second, following the results on the overlap between learning losses and socio-emotional well-being, it will be interesting to study how other measures of cognitive and non-cognitive skills, beyond test scores, are affected by the pandemic. Finally, it will be crucial to evaluate the effectiveness of the upcoming policy interventions aimed at mitigating the learning loss due to the pandemic.

Data availability

The datasets generated during and/or analysed during the current study are not publicly available due to privacy reasons. Stata do-files will be made available. It is possible to apply for data access to ISEI-IVEI (https://isei-ivei.euskadi.eus/es/contactocentro).

Notes

A compressed curriculum was, for instance, one of the measures proposed by UNESCO to deal with the pandemic.

Moreover, a minority of 0.8% of students attend privately funded schools, which represents the lowest share among all Spanish Autonomous Communities.

According to EUSTAT (2018), parents paid an average annual fee of €1156.6 for basic education services (without considering complementary activities or services) to private schools (publicly funded) in the Basque education system.

Source: Basque government.

Sources: OECD, “The state of school education: One year into the COVID pandemic”; OECD, “The state of Global Education 18 months into the pandemic”.

Link to source (ISEI-IVEI).

Link to source 1 (Spanish Government), link to source 2 (Spanish Government).

Other papers use one-third of a standard deviation as an equivalent to one year of learning (Werner and Woessmann 2021).

References

Aubert C, Dang HA, Nguyen MH (2022) The unequal impact of COVID-19: health, wealth and behaviors by income groups. Revue Française D’économie 37(2):43–77

Birkelund JF, Karlson KB (2021) No evidence of a major learning slide 14 months into the COVID-19 pandemic in Denmark. Eur Soc 25:468–488

Birnbaum AL (1968) Some latent trait models and their use in inferring an examinee’s ability. Stat Theor Ment Test Scores

Blanden J, Crawford C, Fumagalli L, Rabe B (2021) School closures and children’s emotional and behavioural difficulties. In: Institute for Social and Economic Research, University of Essex

Bonal X, González S (2020) The impact of lockdown on the learning gap: family and school divisions in times of crisis. Int Rev Educ 66(5):635–655

Contini D, Di Tommaso ML, Muratori C, Piazzalunga D, Schiavon L et al (2021) The COVID-19 pandemic and school closure: learning loss in mathematics in primary education. Tech. Rep., Collegio Carlo Alberto

Engzell P, Frey A, Verhagen MD (2021) Learning loss due to school closures during the COVID-19 pandemic. Proc Natl Acad Sci 118(17):e2022376118

Grewenig E, Lergetporer P, Werner K, Woessmann L, Zierow L (2021) COVID-19 and educational inequality: how school closures affect low- and high-achieving students. Eur Econ Rev 140:103920

Hainmueller J (2012) Entropy balancing for causal effects: a multivariate reweighting method to produce balanced samples in observational studies. Polit Anal 20(1):25–46

Hanushek EA, Woessmann L (2020) The economic impacts of learning losses. OECD Education Working Papers, No. 225. OECD Publishing

Lichand G, Dória CA, Neto OL, Cossi J (2021) The impacts of remote learning in secondary education: evidence from Brazil during the pandemic

Maldonado JE, De Witte K (2021) The effect of school closures on standardised student test outcomes. Br Educ Res J 48:49–94

Muraki E (1992) A generalized partial credit model: application of an EM algorithm. ETS Res Rep Ser 1992(1):i–30

Newlove-Delgado T, McManus S, Sadler K, Thandi S, Vizard T, Cartwright C, Ford T (2021) Child mental health in England before and during the COVID-19 lockdown. Lancet Psychiatry 8(5):353–354

OECD (2016) PISA 2015 results (Volume II): policies and practices for successful schools. OECD Publishing

Pizarro-Ruiz JP, Ordóñez-Camblor N (2021) Effects of COVID-19 confinement on the mental health of children and adolescents in Spain. Sci Rep 11(1):1–10

Ravens-Sieberer U, Kaman A, Erhart M, Devine J, Schlack R, Otto C (2021a) Impact of the COVID-19 pandemic on quality of life and mental health in children and adolescents in Germany. Eur Child Adolesc Psychiatry, pp 1–11

Ravens-Sieberer U, Kaman A, Erhart M, Devine J, Schlack R, Otto C, Devine J, Löffler C, Hurrelmann K, Bullinger M, Barkmann C, Siegel NA et al (2021b) Quality of life and mental health in children and adolescents during the first year of the COVID-19 pandemic: results of a two-wave nationwide population-based study. Eur Child Adolesc Psychiatry, pp 1–14

Schult J, Lindner MA (2021) Did students learn less during the COVID-19 pandemic? Reading and mathematics competencies before and after the first pandemic wave. School Effectiveness School Improv 33:544–563

Tomasik MJ, Helbling LA, Moser U (2021) Educational gains of in-person versus distance learning in primary and secondary schools: a natural experiment during the COVID-19 pandemic school closures in Switzerland. Int J Psychol 56(4):566–576

Von Hippel PT (2019) Is summer learning loss real? How I lost faith in one of education research’s classic results. Educ Next 19(4):8–15

von Hippel PT, Hamrock C (2019) Do test score gaps grow before, during, or between the school years? Measurement artifacts and what we can know in spite of them. Sociol Sci 6:43–80

Werner K, Woessmann L (2021) The legacy of COVID-19 in education. IZA Discussion Papers 14796, Institute of Labor Economics (IZA)

Funding

Arenas acknowledges funding by the Ministry of Science and Innovation, Proyectos de I + D + i 2021–2023, PID2020-120359RA-I00.

Ethics declarations

Conflict of interest

Both authors declare no conflicts of interest.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

We thank Juan Manuel Moreno, David Martinez de Lafuente, Caterina Calsamiglia and Ainara Zubillaga for their comments and suggestions. We are grateful to Angel Martinez (ESADE EcPol) for his excellent research assistance. We thank ISEI-IVEI and the Department for Education of the Basque Country for providing the data and relevant information for the research project. EsadeEcPol and Fundación COTEC para la Innovación funded this research project.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Arenas, A., Gortazar, L. Learning loss one year after school closures: evidence from the Basque Country. SERIEs (2024). https://doi.org/10.1007/s13209-024-00296-4

DOI: https://doi.org/10.1007/s13209-024-00296-4