Abstract

Pipelines have traditionally been recognized as the most cost-effective and safe mode for transporting natural gas. However, since a tremendous amount of gas is transported through pipelines, a massive investment is required to construct and operate pipeline networks. The oil-and-gas sector has embraced pipeline optimization because of its potential to cut pipeline costs significantly. However, the large number of variables, single or multiple objectives, and intricate linear and nonlinear equality and inequality constraints make pipeline optimization a significant challenge. In recent years, the natural gas industry has seen a surge in the parameters and techniques used in pipeline optimization to lower pipeline cost. Numerous researchers have focused on developing algorithmic modifications that enhance particular search capabilities. However, very few review papers have been published, even though such reviews are critical for engineering solution providers. This paper tries to fill this gap by detailing the gas pipeline optimization parameters, fourteen in our case, that are tuned to obtain the greatest pipeline operating advantages. In addition, the six most widely accepted pipeline optimization techniques, viz. the Ant colony, Genetic algorithm, Differential evolution, Particle swarm, Simulated annealing, and Whale optimization algorithms, are also detailed. Furthermore, a potential solution approach for pipeline optimization problems is presented to support application. The findings of this study are intended to enhance the understanding of the methodology, techniques, and advantages of applying optimization in the pipeline industry, allowing for maximum operational benefits in a period of diminishing fossil fuel supplies.

Introduction

Problem background

The advent of the industrial era signaled the beginning of a slew of rewards for millions worldwide, ranging from the capacity to utilize various energy sources to unprecedented levels of comfort and mobility. For most of the past two centuries, wealth and opportunity have been directly related to increasing energy use. However, since society's energy requirements have increased dramatically, humankind is confronted with a major energy crisis. According to Alves et al. (2016), total primary energy use worldwide has risen by about 50 percent since the mid-1990s. In 2013, fossil energy accounted for 25% of total energy consumption in Europe (Eurostat 2013). BP's energy demand projection predicts that fossil fuel demand will increase by 41% by 2035, growing by just 1.5% each year from 2012 onward. In 2001, fossil fuels accounted for 86.7% of total primary energy consumption, with oil and coal contributing 32.9 and 30.1%, respectively, and natural gas 23.7% (Calderón et al. 2017). The rapid growth in fossil fuel consumption has become a global problem; it contributes to pollution, thus negatively impacting environmental quality worldwide. Under these critical conditions, natural gas is seen as a promising, vital, relatively low-carbon, clean, and reliable energy source (Mikolajková et al. 2017).

The advantages of using natural gas as a fuel to reduce greenhouse gas emissions are immense. For example, carbon dioxide emissions from US power plants in 2012 were 23% lower than in 1977 after many older fossil fuel units were replaced with gas-fired facilities (Hu et al. 2016). Gas-fired power production has continued to increase in recent years due to its many benefits, including low cost, low carbon emissions, and quick response. Natural gas has traditionally been used in industry, heating, and gas-powered vehicles. Natural gas also has a low energy cost relative to other options, making it ideal as a bridge fuel during the transition to a future energy mix where most energy is generated from renewable sources (Rose et al. 2016).

In 2014, natural gas consumption in Europe was about 14,400,000 TJ, and usage was expected to rise by 1.9% per year globally (Guerra et al. 2016). Figure 1 depicts the amount of natural gas anticipated to be used in major sectors in the USA over the next couple of decades. According to World Energy Outlook 2010, global natural gas use in the power industry is projected to rise from 4,303 terawatt-hours (TWh) in 2008 to 7,600 TWh by 2035 (Tanaka 2010).

Fig. 1 Natural gas consumption projection in the USA (AEO 2021)

Unambiguously, the natural gas industry will see significant growth in the years ahead (Jiao et al. 2021). Gas exploration, extraction, production, storage, and transportation require significant financial resources, with transmission costs accounting for up to 30% of total costs. Therefore, prioritizing efficient and cost-effective ways to meet customer demand is critical (Azadeh et al. 2015). Given its many benefits, we may infer from the discussion that natural gas will continue to play an important role in meeting global energy demand over the next several years. However, natural gas reserves are limited, and if they are not used wisely and efficiently, depletion is bound to occur more quickly than anticipated. Efficient transportation is one area that can help prolong the availability of natural gas. Pipelines are considered the most efficient mode of transporting natural gas. In the next section, we detail the usage of pipelines for gas transportation worldwide.

Pipelines for transporting natural gas

The natural gas industry often relies on pipeline transportation since it is a low-cost and safe mode of transporting gas (Mokhatab and Poe 2012; Gupta and Arya 2019). A huge network of pipelines is used for gas transportation. According to one estimate, pipelines transport about 93 percent of the world's natural gas. In 2013, about 188,108 km of pipelines were planned or under construction, of which 53,180 km were under construction and the rest were planned. This was 11.4% more than the 47,732 km under construction in 2012 (Zhou et al. 2017). According to one estimate, about 100,000 km of natural gas pipeline networks were in place across Europe (Rose et al. 2016).

China has been one of the major countries investing heavily in pipeline construction. According to the International Energy Agency, China extracted 277 billion cubic meters of natural gas in 2018, while the country's natural gas pipeline network was about 80,000 km long. In its "Thirteenth Five-Year Plan," China planned the construction of 40,000 km of natural gas pipelines. According to Chinese predictions, the total length of China's pipelines would surpass 104,000 km by 2020, while the annual gas supply capacity would reach 400 billion cubic meters (Liu et al. 2019a, b, c). The construction of oil-and-gas pipelines continues to be profitable throughout the world despite the overall economy being in a state of recession. However, operating such vast pipeline networks adequately is a serious challenge.

Additionally, since pipelines are used to transport and store natural gas concurrently, it is critical to balance the quantity of gas in the system against demand and supply. With increased competition among pipeline companies to provide gas to consumers at the lowest feasible price, distributors must learn to operate the pipeline efficiently to minimize losses. Optimization is one of the tools that help pipeline managers operate the pipeline effectively. The next section explores the fundamentals of pipeline optimization and outlines the paper's scope and limitations.

Optimizing pipelines: scope and limitations of the paper

From the discussion presented in Sects. 1.1 and 1.2, it is clear that pipelines are the backbone of gas transportation. However, since pipelines transport huge amounts of gas, design and operating costs are also immense. Optimization is expected to play a major role in lowering the design and operating costs of gas pipeline networks. Typically, in gas pipeline optimization, the objective is to maximize a specific economic measure while satisfying the performance equations that describe the physical behavior of the system and any other constraints that have been set out beforehand. Except for extremely basic applications, this can usually be expressed mathematically as a well-defined problem that requires a numerical solution. Even though many general-purpose optimization algorithms have been published, applying them to pipeline transportation is often difficult, for several reasons. Firstly, the many algebraic and differential equations required to model gas pipeline transport are difficult to incorporate into optimization algorithms. Secondly, many pipeline design and operation problems involve both continuous and discrete parameters, which makes the optimization nontrivial. Thirdly, it is often necessary to consider the uncertainty associated with the parameters of the underlying pipeline models. As a result, one of the most important aspects of pipeline optimization is the development of appropriate strategies that take advantage of the unique characteristics of pipeline transportation while also allowing efficient use of, and interfacing with, general-purpose optimization algorithms. The significance of these strategies cannot be overstated, since they often decide whether a particular problem or set of problems can be handled acceptably and realistically. The pipeline industry has a wide range of optimization problems at every level, from design to operation. The paper discusses the most critical and promising areas of study in this discipline.
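
As an illustrative sketch only, the generic pipeline optimization problem described in this section can be written as a mixed-integer nonlinear program. The symbols below are assumptions introduced here for illustration and are not notation taken from the cited works.

```latex
\[
\begin{aligned}
\min_{x,\,y}\quad & f(x, y) \\
\text{subject to}\quad & h(x, y) = 0, \\
& g(x, y) \le 0, \\
& x \in \mathbb{R}^{n},\quad y \in \mathbb{Z}^{m}.
\end{aligned}
\]
```

Here \(x\) would collect continuous quantities such as node pressures and flow rates, \(y\) discrete choices such as the number of running compressors or a commercial pipe diameter, \(f\) the economic measure (with its sign reversed when it is to be maximized), \(h\) the flow and mass-balance equations, and \(g\) the operating limits.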

Although the literature on natural gas transmission system challenges is rather vast, this is, to our knowledge, the first complete survey that addresses the recent advances in pipeline optimization. Most studies have focused on algorithms that improve particular aspects of search capability. However, few review papers have been published, even though they are critical for engineering solution providers. Another significant gap is that no research has provided a detailed strategy for implementing optimization techniques to obtain maximum profitability from gas pipeline operations. The paper proposes a mechanism for applying optimization to pipeline activities that we hope will fill this need. The paper attempts to provide a clear snapshot of many of the recent methodologies used by researchers for gas pipeline optimization. Rather than going into depth on the numerical methods, we focus on the fundamentals of the techniques that have been presented for a variety of key pipeline optimization problems.

Additionally, the paper does not intend to discuss all pipeline optimization algorithms, only the most popular techniques. Pipeline operators may benefit greatly from this research since it offers a methodical framework for improving pipeline design and operation. The article may be used to gain insight into the many real-world applications and the most recent improvements in solution approaches that have resulted from the study of this fascinating and complex field of decision-making problems.

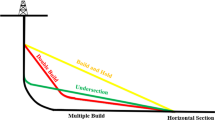

The paper first conducts an in-depth critical examination of the different pipeline optimization parameters used to increase the profitability of gas pipelines. It then discusses the most effective optimization approaches and algorithms used to optimize gas pipeline attributes. Additionally, the paper discusses the issues, prospective remedies, and future possibilities for improving pipeline parameters. Finally, the most noteworthy results are summarized. Figure 2 shows a general sketch of the problem under study.

Natural gas pipeline optimization parameters

Research has shown that optimizing pipeline operations can significantly reduce the cost of pipeline networks. The considerable economic benefit that pipeline network optimization provides has inspired pipeline managers to improve the network's characteristics and reduce its cost. Optimization methods are used to find the most efficient strategies for operating natural gas transmission pipelines (Chen et al. 2021; Arya et al. 2021). This section discusses some contemporary parameters that investigators have explored to optimize the operational advantages of pipeline networks. The parameters used by researchers to maximize profitability in the pipeline sector are shown in Fig. 3. The techniques used in optimizing the pipeline parameters are presented in the next section.

Reduce the risk of a pipeline gas supply shortage

A natural gas distribution system with several suppliers, users, and a substantial infrastructure may be hard to operate. Seasonal and geographical supply/demand mismatches compound the difficulty of natural gas distribution and infrastructure expansion planning. Inadequate pre-planning may lead to a natural gas supply shortfall, while too much pre-planning could result in a supply surplus. Several pipeline managers have relied on quantitative analytic tools to support effective decision-making amid system disruptions (Li et al. 2019; Shan et al. 2017). A few multi-objective studies have aimed at a dependable gas supply and a minimal risk of gas supply shortfall while also keeping gas consumption low. During the optimization process, the steady-state performance of the pipeline network is examined, with consideration given to the natural gas supply and customers' use. When there is a potential danger of gas supply constraint, the multi-objective optimization must consider power demand, and a limit function is developed from the mass conservation equation (Su et al. 2019). Once the interruption risk has been determined, the uncertainty is evaluated using the interruption likelihood and the interruption effects. Other authors have produced comparable work (McCarthy et al. 2007; Praks et al. 2015; Su et al. 2018).

Maximizing gas throughput from the pipeline network

Determining the maximum amount of natural gas that can be delivered under different conditions, while respecting operational constraints and client requirements, is a well-established task. The majority of gas transmission projects encounter difficulties at the local level. The gas flow rate is taken as the objective function, and maximizing it ensures that the transmission capacity of the pipeline network is fully exploited (Fasihizadeh et al. 2014; Arya and Honwad 2016; Demissie et al. 2017).

Maximizing net profit of gas pipeline network

Ruan et al. (2009) developed an optimization algorithm to design and operate a gas transmission infrastructure. The objective function included the construction and operating cost of pipelines and compressors (Tabkhi et al. 2010). Further, an algorithm for minimizing a high-pressure gas network's investment and operating expenses was implemented by Amir and Reza (2014). The goal was to reduce yearly costs to a bare minimum under the assumption of a 10-year investment depreciation. The primary drawback of this research work was that pipeline diameter was considered a continuous variable.

Finding the optimum location of the compressor station

As the pipeline's length and number of sections increase, running the pipeline efficiently becomes complicated and requires the installation of compressor stations along the pipeline's route. If there are significant changes in pipeline diameter, the best location for the compressor station must be determined, which involves determining the optimal size for each pipeline section. The Belgrade–Valjevo distribution gas pipeline was designed and tested to find its most cost-effective features. The model effectively identified the optimal settings (Danilovic et al. 2011).

Lowering the power consumption in compressor stations

Gas transmission lines have been in operation for decades. Compressors are used to repressurize the vast amounts of natural gas flowing through these networks over enormous distances. Natural gas-driven compressor stations are placed at regular intervals along pipelines to compensate for pressure loss. The compressors use natural gas as their energy source, thus increasing fuel consumption. Therefore, improving fuel efficiency is critical in compressor station operations. This optimization problem is challenging for several reasons. Firstly, compressor stations are complex systems with many compressor units, various designs, and nonlinear properties. Secondly, the full system of nonlinear constraints, which includes the compressors' working conditions and the pipelines' conditions, is exceptionally complicated. Mak et al. (2019), Zhang et al. (2016), Liu et al. (2019a, b, c), Baumrucker and Biegler (2010), and Mahlke et al. (2007) used compressor power consumption as an optimization parameter in their studies.
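
To make the nonlinearity of compressor power concrete, the following is a minimal sketch based on the textbook isentropic compression relation for a gas with a constant compressibility factor. It is not the model used in any of the cited studies; the function name and the default values for molar mass, heat capacity ratio `k`, compressibility factor `z`, and efficiency are assumptions chosen only for illustration.

```python
R_UNIVERSAL = 8.314462618  # universal gas constant, J/(mol*K)


def isentropic_compressor_power(mass_flow, p_in, p_out, t_in,
                                molar_mass=0.016043, k=1.3, z=0.9,
                                efficiency=0.8):
    """Shaft power in W for compressing gas from p_in to p_out (Pa).

    mass_flow is in kg/s and t_in in K. The defaults (methane molar mass
    in kg/mol, k, z, efficiency) are illustrative assumptions.
    """
    if p_out < p_in:
        raise ValueError("discharge pressure must not be below suction pressure")
    r_specific = R_UNIVERSAL / molar_mass        # J/(kg*K)
    exponent = (k - 1.0) / k
    # Isentropic head (J/kg): grows nonlinearly with the pressure ratio.
    head = z * r_specific * t_in / exponent * ((p_out / p_in) ** exponent - 1.0)
    return mass_flow * head / efficiency


if __name__ == "__main__":
    for ratio in (1.2, 1.4, 1.6):
        power = isentropic_compressor_power(100.0, 5.0e6, ratio * 5.0e6, 300.0)
        print(f"pressure ratio {ratio:.1f}: {power / 1e6:.2f} MW")
```

Because the power depends on the pressure ratio through a fractional exponent, an optimizer that distributes the pressure boost among several stations faces a nonlinear objective even in this simplified setting.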

Minimizing the total cost of the pipeline network

The primary motivation for optimizing gas pipelines and associated infrastructure is to reduce operational costs. It is essential to consider many variables while operating a gas distribution network. Expenses include compressor fuel or power costs, repair and maintenance of compressor station equipment, and yearly or recurring environmental and permitting costs. Certain expenses will stay constant depending on the business's circumstances, while others will fluctuate. Variable operational expenditures, including the fuel costs for the turbo-compressors, are a priority for a pipeline network (Menon 2005). Ruan et al. (2009) presented an optimization model used to minimize the investment cost of the pipeline network. The model included factors that affected the investment cost, such as pressure at pipe nodes, diameter, pipeline thickness, and compressor ratio.

Finding the optimum number of compressors in the pipeline network

Compressor operation is critical to the overall performance of gas transportation. Fuel prices, service life, and environmental impact are essential considerations in selecting compressor equipment (Sun et al. 2000). A compressor station design must be customized to meet the facility's unique requirements. The criteria encompass the number of units needed, whether standby units are required, and the kinds of drivers to employ. The capital cost includes the cost of the project and the installation cost. When making compressor purchasing decisions, it is essential to consider the personnel operating the compressor, the machine on which it will be installed, and the auxiliary equipment required for operation, such as valves, cooling systems, instruments, and pulsation bottles.

Additionally, pipeline companies have to account for capital, operational, and start-up/commissioning spares. The maintenance cost is determined by the components and labor required to keep the equipment operating at or above a specific power output level. The cost covers regular maintenance, such as changing the lubricating oil and spark plugs, as well as major overhauls. Kurz et al. (2012) and Ohanian and Kurz (2002) used the number of compressors as their objective function.

Minimizing electric energy consumption in the pipeline network

Combined cycle power plants have helped Spain and many other countries greatly expand their energy output. Over time, the energy and gas networks foster economic growth. Therefore, the dependability of the gas supply system should be taken into consideration when evaluating power system dependability. With the introduction of new models, reliability assessments should cover both electrical and gas systems. Munoz et al. (2003) developed a model to optimize combined cycle power plant production. Compressors are studied for their effect on the growth of network transmission capacity. The model was incorporated into a much larger model to measure the combined dependability of electricity and natural gas distribution networks. A comprehensive study on the usage of the Belgian high-caloric gas network is presented in Mansell (1991).

Minimizing the cost of fuel consumption in the pipeline network

Over the years, as the industry has grown, the gas pipeline network has become very complex, and it will continue to expand for years to come. Modern networks have thousands of pipes, hundreds of stations, and other devices such as valves and regulators. Different compressor models may be found at each station, as the system's capacity has grown over time. Three to five percent of the transported gas is consumed by compressor stations. As a result, lowering fuel consumption costs is critical. Operational optimization may reduce fuel use significantly. Tabkhi et al. (2008) utilized the generalized reduced gradient algorithm to minimize the cost of the gas pipeline network.

Maximizing the storage capacity or line pack volume of the gas network

Pipelines, apart from transportation, can also be used for storage. The amount of gas stored in the pipeline, expressed at standard conditions, is called the line pack (Sun et al. 2000). Natural gas pipelines thus have a dual purpose: transporting gas from producers to consumers and providing an energy-storage reserve. Additionally, a sufficient line pack enables pipeline operators to deal with brief periods of excess or deficiency in supply and demand. The higher the line pack level, the higher the energy consumption may be. Total line pack optimization as a primary goal is also found in the literature (Ernst et al. 2011).
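
As a rough illustration of how line pack can be estimated for a single pipe segment, the sketch below applies the real-gas law to the geometric pipe volume, using the commonly used two-thirds average-pressure formula. The function names, the reference conditions (101.325 kPa and 288.15 K), and the default compressibility factor are assumptions made here, not values taken from the cited works.

```python
import math

P_STD = 101_325.0  # assumed standard pressure, Pa
T_STD = 288.15     # assumed standard temperature, K


def average_pressure(p_in, p_out):
    """Average pressure (Pa) along a segment with nonlinear pressure drop."""
    return (2.0 / 3.0) * (p_in + p_out - p_in * p_out / (p_in + p_out))


def line_pack(diameter, length, p_in, p_out, t_avg, z_avg=0.9):
    """Gas stored in a segment, in standard cubic meters.

    diameter and length are in m, pressures in Pa, t_avg in K. z_avg is an
    assumed average compressibility factor; the factor at standard
    conditions is taken as 1.
    """
    geometric_volume = math.pi / 4.0 * diameter ** 2 * length
    p_avg = average_pressure(p_in, p_out)
    return geometric_volume * (p_avg / P_STD) * (T_STD / t_avg) / z_avg


if __name__ == "__main__":
    for p_in, p_out in ((6.0e6, 4.0e6), (7.0e6, 5.0e6)):
        volume = line_pack(0.9, 100_000.0, p_in, p_out, 288.15)
        print(f"{p_in / 1e6:.0f} -> {p_out / 1e6:.0f} MPa: {volume / 1e6:.2f} million std m3")
```

Raising the operating pressures increases the stored volume, which is consistent with the trade-off noted above: a higher line pack generally requires more compression energy.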

Increasing the hydrogen-carrying capacity of pipelines

Renewable energy curtailment has encouraged innovative waste-reduction solutions. One potential strategy is converting renewable energy to hydrogen and using natural gas pipelines for its transport. Tabkhi et al. (2008) provide a mathematical model and a quantitative evaluation of natural gas pipeline networks to improve the overall operation of compressor stations under hydrogen injection. Han et al. (2012) suggest a novel coordination control for integrated offshore wind farms and hydrogen management.

Finding the optimal sizing of pipeline network

Kurz et al. (2010) assessed the parameters of a long-distance pipeline for various pipe sizes, pressure ratings, and station spacings. A 2000-mile (3220-km) buried pipeline for natural gas transmission was considered and optimized for size.

Minimizing greenhouse gas emissions

Increasing the energy conversion efficiency and lowering fuel consumption are effective strategies for reducing environmental impact, particularly emissions of carbon dioxide, a significant greenhouse gas (Ahmadi and Dincer 2011). Sustainability problems have risen on the political agenda in recent years, and in many countries, governments are taking a more integrated approach to economics and the environment. CO2 production is taxed in certain nations, and other governments may charge CO2 avoidance. Efficiency and emissions play an important role, but since the total efficiency only affects CO2 emissions from specific fuel gas, the relationship between efficiency and emissions is relatively weak. Environmental impact reduction is an essential goal of pipeline optimization studies, and because it results in a reduction in carbon emissions, the price of carbon emissions is included in the total operating cost (Munksgaarda et al. 2007; Kurz et al. 2012).

Optimizing pipeline routes

A crucial element of every conventional oil-and-gas exploration and transportation operation is selecting a suitable pipeline route. Faulty route selection may result in pipe failures, oil spills, and environmental catastrophes. Pipelines laid offshore must rest on the seabed. The seabed's complex topography requires identifying feasible routes with lower installation and material costs. Meisingset et al. (2004) developed an optimization tool that helped find possible pipeline routes on the seabed. A free-spanning pipeline can vibrate, eventually leading to catastrophic failure. The pipeline cost and the number of free spans were reduced by finding the optimal route length. Balogun et al. (2017) developed a spatial hybrid decision support system that used fuzzy logic and GIS integration to find the optimal route on the seabed. A case study in Malaysia was used to test the effective implementation of the support system.

The present section discussed a vast number of parameters that the researchers have optimized to maximize the benefits of pipeline transportation. The following section discusses some prevalent techniques used to optimize these parameters.

Natural gas pipeline optimization techniques

Numerous optimization techniques have been developed over the past few decades. The common optimization techniques are listed in Fig. 4. Pipeline optimization techniques are divided into classical (deterministic) and stochastic (evolutionary) techniques. Dynamic programming, generalized reduced gradient, and linear programming are the classical techniques that have been popular in pipeline optimization (Jamshidifar et al. 2009; Carter 1998). Dynamic programming techniques have the distinctive characteristic of not getting trapped in local optima while searching for the global optimum. However, they have the drawback of very high computational time. Sometimes the computational effort needed to reach the global optimum is so high for complex pipeline grids that using dynamic programming becomes almost impossible. With complicated pipeline grids, the generalized reduced gradient method performs well. However, since this approach relies on gradient search, it cannot handle nondifferentiable and discontinuous functions. In certain situations, the solution obtained using the generalized reduced gradient method gets stuck in a local optimum, rendering it incapable of further grid optimization. While linear programming techniques can find the global optimum, they are limited to situations in which the objective function is approximately linear. The final result of conventional methods depends strongly on the initial value chosen.
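
The dependence of gradient-based search on the starting point can be seen in a toy example. The sketch below applies plain gradient descent, used here as a simple stand-in for gradient-based methods in general and not as the generalized reduced gradient algorithm, to a hypothetical one-dimensional function with two minima. The function, step size, and starting points are assumptions chosen for illustration.

```python
def f(x):
    """Toy objective with a global minimum near x = -1.30 and a local one near x = 1.13."""
    return x ** 4 - 3 * x ** 2 + x


def grad_f(x):
    """Analytical derivative of f."""
    return 4 * x ** 3 - 6 * x + 1


def gradient_descent(x0, step=0.01, iterations=2000):
    """Follow the negative gradient from x0 with a fixed step size."""
    x = x0
    for _ in range(iterations):
        x -= step * grad_f(x)
    return x


if __name__ == "__main__":
    for start in (2.0, -2.0):
        x_final = gradient_descent(start)
        print(f"start {start:+.1f} -> x = {x_final:+.3f}, f(x) = {f(x_final):+.3f}")
```

Starting from the right, the search settles in the shallower minimum and never reaches the deeper one on the left, which is the behavior that motivates the stochastic techniques discussed next.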

Additionally, all traditional methods have difficulty when confronted with mixed-integer nonlinear programming (MINLP) problems. Researchers are currently concentrating on stochastic or evolutionary algorithms to address these problems. These algorithms have the benefit of handling large-scale problems and MINLP difficulties. Stochastic methods are inspired by the evolution and social behavior of biological entities (Elbeltagi et al. 2005). Rather than gradient information, these methods use individual sample solutions to search for the optimum. As a result, they are not gradient-dependent.

Additionally, the stochastic techniques are easily adaptable to discrete variables. Numerous stochastic methods are described in the literature. Ant colony, Genetic algorithm, Differential evolution, Particle swarm optimization, Whale optimization, and Simulated annealing techniques have become extremely popular for optimizing pipeline operations. The present section does not intend to discuss all the methods for pipeline optimization, only the most popular ones.

Ant colony optimization

The technique is modeled on the foraging activity of natural ants. The ant species known as "Linepithema humile" has a natural capacity to emit pheromones while moving between the colony and a food source. The release of pheromones assists other ants in locating the source of food. The shorter route is favored over longer pathways, since the pheromone released by the ants dissipates quickly and is reinforced more often on the shorter route. The ants are drawn to the shorter path by stigmergy. According to Dorigo, this behavior of ants was an early contributor to the creation of the Ant Colony Algorithm. Researchers in various disciplines have built on this basic concept to create artificial ants that solve real optimization problems (Socha and Blum 2006; Maniezzo et al. 1994; Iredi and Merkle 2001; Stutzle and Hoos 2000). Figures 5 and 6 show the working model of the ant colony optimization algorithm. The approach is further explained in the following steps.

- Step I: Break down the twofold problem into a single-objective one.
- Step II: Establish the solution matrix framework.
- Step III: Assign a simple random solution to matrix initialization.
- Step IV: Search for a more refined solution by using a statistical approach.
- Step V: Update the solution.

Fig. 6 Implementing ant colony optimization technique to gas pipeline (Arya and Honwad 2018)

Step I: Break down the twofold problem into a single-objective one

The adaptive problem-solving technique converts a multi-objective problem into a single objective. The approach in Eq. (1) illustrates the method to transform a multi-objective problem into a single-objective one.

where \(F_{MO}^{1} = F_{1}\).

Step II: Establish the solution matrix framework

Ants keep track of their routes while on the prowl for food. They leave behind pheromones that other ants use to find the same food. The solution matrix is produced similarly. The discovered solutions are initially saved in the matrix at a random location, providing a roadmap to continue improving the solution. The matrix holds 'k' solutions, each recording the values of the variables and the corresponding objectives. Figure 5 shows a sample solution matrix.

Step III: A simple random solution to matrix initialization

The multi-objective ant colony optimization (MOACO) technique provides a set of solutions by initializing the solution matrix with initial guesses for the 'n' variables, giving 'k' solutions. At each stage, MOACO creates 'l' new solutions. Then, the fittest 'k' of the (k + l) solutions are stored in the matrix, and the rest are discarded.

Step IV: Search for a finer solution by using a statistical approach

MOACO's algorithm begins with a random estimate of the variables, which leads to many solutions. Gaussian probability distribution, represented in Eq. (2), is used to locate the set of solutions from the second step onward.

The three factors utilized in Eq. (2) that assist the MOACO in generating new solutions are the solution weight (w), the mean of solution (µ), and the standard deviation (σ).

The solution weight is calculated using Eq. (3). It represents the solution's attractiveness, that is, how strongly the solution is favored to be carried into the following iteration.

where \(rank(j)\) is the rank of solution \(j\) in the solution matrix, the weight being a Gaussian function of the rank with its mean set equal to 1, and \(q\) and \(k\) are the parameters used in MOACO.

The weight 'w' indicates how suitable a solution is. It is used in Eq. (4) to calculate the likelihood of a solution being carried forward to the next generation.

The mean of the solution \(\mu\) and the standard deviation \(\sigma\) are calculated from Eqs. (5) and (6).

The terms \(d_{l}^{i}\) and \(d_{j}^{i}\) are the two solutions in the solution matrix, and '\(\xi\)' is a factor that allocates the convergence speed to the solution. A lower value \(\xi\) is given for a higher rate of convergence.
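
Equations (3), (4), and (6) are not reproduced in this text. For orientation only, the widely used continuous ant colony formulation of Socha and Dorigo, which the description above closely resembles, defines the corresponding quantities as shown below. Whether MOACO uses exactly these forms is an assumption made here.

```latex
\[
w_{j} = \frac{1}{q k \sqrt{2\pi}}
        \exp\!\left(-\frac{\left(rank(j) - 1\right)^{2}}{2 q^{2} k^{2}}\right),
\qquad
p_{j} = \frac{w_{j}}{\sum_{r=1}^{k} w_{r}},
\]
\[
\mu_{j}^{i} = d_{j}^{i},
\qquad
\sigma_{j}^{i} = \xi \sum_{l=1}^{k} \frac{\left| d_{l}^{i} - d_{j}^{i} \right|}{k - 1}.
\]
```

In this form the best-ranked solution receives the largest weight, and a smaller \(\xi\) narrows the sampling distribution around the selected solution, which matches the remark that a lower \(\xi\) gives faster convergence.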

Step V: Update the solution

The three parameters (weight, mean, and standard deviation) are used to recalculate the solution matrix in each iteration.

The ACO works according to the steps discussed. A methodology adapted to combine the ACO with the pipeline simulation model is shown in Fig. 6.
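As a rough illustration of Steps III to V, the following Python sketch performs one iteration for a single-objective minimization problem. The weight, mean, and standard deviation formulas are assumptions borrowed from the common continuous-domain ant colony formulation and may differ in detail from Eqs. (2) to (6); the function names are hypothetical, and l, q, and xi are passed in as plain arguments.

```python
import math
import random


def rank_weights(k, q):
    """Gaussian weight for ranks 1..k; rank 1 (the best solution) weighs most."""
    spread = q * k
    return [
        math.exp(-((rank - 1) ** 2) / (2 * spread ** 2)) / (spread * math.sqrt(2 * math.pi))
        for rank in range(1, k + 1)
    ]


def sample_solution(archive, weights, xi, rng):
    """Sample one new solution around a guide chosen in proportion to its weight."""
    k = len(archive)
    guide = rng.choices(archive, weights=weights)[0]
    new_solution = []
    for i, mu in enumerate(guide):
        # Standard deviation (assumed form): xi times the mean distance from
        # the guide to the other stored solutions in dimension i.
        sigma = xi * sum(abs(other[i] - mu) for other in archive) / (k - 1)
        new_solution.append(rng.gauss(mu, sigma))
    return new_solution


def aco_iteration(archive, objective, l, q, xi, rng):
    """Create l new solutions, then keep the best k of the k + l candidates."""
    archive = sorted(archive, key=objective)  # row j now has rank j + 1
    weights = rank_weights(len(archive), q)
    new = [sample_solution(archive, weights, xi, rng) for _ in range(l)]
    return sorted(archive + new, key=objective)[: len(archive)]
```

Calling aco_iteration repeatedly on a randomly initialized matrix of at least two solutions never worsens the best stored solution, because the best k of the k + l candidates are always retained.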

Application of ACO to pipeline industry

Arya and Honwad (2016), Arya and Honwad (2018), and Chebouba et al. (2006) utilized the ACO techniques extensively to optimize the pipeline operations.

Genetic algorithm

The genetic algorithm (GA) is one of the most popular pipeline optimization techniques. Goldberg did the first genetic algorithm implementation in 1987 (Goldberg 1987a). The fundamental concept of the genetic algorithm is based on Darwin's theory of evolution, which holds that evolution proceeds through the survival of the fittest and reproduction. In the early stages of a genetic algorithm, an initial population of solutions to a problem is generated and allowed to evolve toward the optimal answer. Rather than remaining trapped with poor choices, the population evolves so that the better individuals win out over the worse ones, much as in natural evolution.

A new population is created by applying genetic operators to each chromosome in the population. GA computations rely on chromosomal representations, selection, crossover, mutation, and a fitness function. This section briefly explains the working of GA and the benefits it has over other established methods. The fundamental steps in GA are as follows.

- Step 1: Initialization of population.

- Step 2: Calculation of fitness function.

- Step 3: Selection.

- Step 4: Crossover and mutation.

- Step 5: Termination.

Step 1: Initialization of population

The process starts with a set of individuals that together form a population. Each individual is a chromosome, which is in turn a set of genes. The genes are the variables that together define a candidate solution, referred to as a chromosome in GA. The genes are represented by letters or binary values (0 and 1). This process is called the encoding of genes. Figure 7 shows the components of genetic algorithms (genes, chromosomes, and population).

Step 2: Calculation of fitness function

The fitness function, also called the evaluation function, evaluates the closeness of a solution to the optimum solution. It shows the ability of an individual (chromosome) to compete with other individuals. The fitness value determines the probability of an individual being selected for reproduction.

Step 3: Selection

The selection phase picks the fittest individuals (chromosomes), whose genes are passed to the next generation. In the process, pairs of individuals (called parents) are chosen according to their fitness values. Individuals with higher fitness values are more likely to be chosen for reproduction.
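One common way to make the selection probability follow the fitness value is roulette-wheel selection. The sketch below is only one possible choice, since the text does not prescribe a selection scheme; it assumes non-negative fitness values for a maximization problem, and the function name is hypothetical.

```python
import random


def roulette_select(population, fitness_values, rng):
    """Pick one individual with probability proportional to its fitness."""
    total = sum(fitness_values)
    pick = rng.uniform(0, total)
    running = 0.0
    for individual, fitness in zip(population, fitness_values):
        running += fitness
        if running > pick:
            return individual
    return population[-1]  # guard against floating-point round-off
```

Calling the function twice yields a pair of parents for the crossover step.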

Step 4: Crossover and mutation

Crossover

In genetic algorithms, crossover is a means of recombining the genetic coding of chromosomes, which is subsequently passed on to the next generation. In contrast to self-fertilization, crossover exchanges genetic material between two parents. Two strings are chosen randomly from the mating pool and crossed in the hope of breeding better offspring. The type of crossover depends on the encoding selected.

Three types of crossover can be chosen in GA. These are single-point crossover, two-point crossover, and uniform crossover.

Single-point crossover

In single-point crossover, a crossover point is selected on the parent chromosome strings. All the data beyond that point are swapped between the two parent chromosomes to produce two offspring. The resulting strings are characterized by positional bias. Figure 8 represents the single-point crossover methodology.

Two-point crossover

Two points are chosen randomly on the parent chromosomes, and the genetic material between these two points is exchanged between the parents. Figure 9 represents the two-point crossover methodology.

Uniform crossover

All the genes are chosen randomly from their parents' chromosomes. The uniform crossover methodology is shown in Fig. 10. The procedure is quite similar to the coin toss method.
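The three crossover types can be sketched in Python as follows. This is a minimal illustration that assumes the chromosomes are equal-length lists of at least three genes; the function names are hypothetical.

```python
import random


def single_point_crossover(p1, p2, rng):
    """Swap everything after one random cut point."""
    cut = rng.randint(1, len(p1) - 1)
    return p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]


def two_point_crossover(p1, p2, rng):
    """Swap the segment between two random cut points."""
    a, b = sorted(rng.sample(range(1, len(p1)), 2))
    return p1[:a] + p2[a:b] + p1[b:], p2[:a] + p1[a:b] + p2[b:]


def uniform_crossover(p1, p2, rng):
    """Decide gene by gene, as in a coin toss, which parent each child copies."""
    c1, c2 = [], []
    for g1, g2 in zip(p1, p2):
        if rng.random() < 0.5:
            g1, g2 = g2, g1
        c1.append(g1)
        c2.append(g2)
    return c1, c2
```

In every case the two children together contain exactly the genes of the two parents, position by position.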

Mutation

Mutation is a genetic operator that maintains genetic variety in genetic algorithms. It is analogous to biological mutation. One or more genes inside a chromosome may change after a mutation, so the solution may differ entirely from the prior solution. Using mutation, therefore, GA may reach better solutions. Mutation occurs according to a user-defined mutation probability. It is essential to keep this probability low: setting it too high turns the search into a primitive, unguided random search.

GAs use mutation to diversify the population and avoid local minima. Mutation operators prevent the chromosomes in the population from becoming too similar to one another, which slows down or even stops premature convergence. Furthermore, GAs avoid selecting just the fittest individuals of the population when generating the next generation, instead selecting a random (or largely random) sample with a bias toward the fitter individuals.
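For a binary-encoded chromosome, mutation is often implemented as an independent bit flip per gene. The sketch below assumes that encoding; the function name and the rate argument are illustrative.

```python
import random


def bit_flip_mutation(chromosome, rate, rng):
    """Flip each binary gene independently with probability `rate`."""
    return [1 - gene if rng.random() < rate else gene for gene in chromosome]
```

A rate of 0 leaves the chromosome unchanged, while a rate close to 1 destroys almost all inherited information, which is why small values are normally used.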

Step 5: Termination

If the offspring are not significantly different from the previous generation, the population may have converged. The process is continued until the desired convergence is achieved.

A more detailed working description of GA can be found in Katoch et al. (2021). Figure 11 shows the detailed working of GA.

Genetic algorithm (Riazi 2019)

Advantages of GA over other traditional optimization methods

Genetic algorithms (GA) are among the most popular techniques in pipeline optimization. Their computational cost compares favorably with that of conventional methods, such as the generalized gradient technique. The main advantages of using genetic algorithms are listed below.

- Instead of using derivatives, genetic algorithms use only the value of the function evaluated for the parameters encoded in the string.

- The genetic algorithm uses a coding of the parameter set to direct the search, not the individual parameter values.

- A genetic algorithm examines several possible solutions to a problem at once rather than focusing on a single option.

- Instead of using deterministic rules to guide the solutions in a population from one generation to the next, genetic algorithms employ probabilistic rules.

- The genetic algorithm maps the design space into an encoded genetic space. Working in the encoded space makes it possible to convert a large continuous design space into discrete regions in which the optimization problem is solved.

- Most optimization techniques work from a single point, while the genetic algorithm works with a population of points. Essentially, genetic algorithms evaluate several potential answers and then identify the one that gives the best outcome.

- Genetic algorithms are guided by randomized search; therefore, they are less likely to get stuck in a local optimum.

Application of GA to the pipeline industry

Numerous applications of genetic algorithms to pipeline optimization have been reported. Goldberg (1987a), for example, used the GA method to reduce the overall power consumption in turbo-run compressors. In 1987, Goldberg also utilized GA to optimize a transient pipeline network (Goldberg 1987b). Li et al. (2011) utilized the technique to increase the efficiency of gas pipeline networks without the usage of compressors. Sanaye and Mahmoudimehr (2012) used the method to reduce the cost of fuel usage in compressors. Botros et al. (2006) extended the technique to a multi-objective GA technique and successfully applied it to the TransCanada Pipeline network. The application first included a single-objective function of minimizing fuel consumption, which was further extended to a multi-objective problem of reducing compressor fuel consumption, maximizing the line pack volume, and maximizing gas delivery. According to Malhotra et al. (2011), the genetic algorithm is a process-control optimization tool that combines genetic concepts with an iterative design method. In gas turbines, genetic algorithms are used to adjust the speed controls and other control parameters. Habibvand and Behbahani (2012) have shown that genetic algorithms may successfully be utilized to reduce the fuel consumption of a gas compressor station. Numerous other authors have used GA to optimize pipelines. Notable studies on pipeline optimization include the work of EI-Mahdy et al. (2010), Jichen and Changhong (1999), Singh and Nain (2012), Simpson and Goldberg (1994), Wang and Duan (2006), Peipei et al. (2006), Knowles and Corne (1999), Rodriguez et al. (2010), and Kashani and Molaei (2014).

Differential evolution

Differential evolution (DE) has recently risen in popularity as a way to find optimal solutions to many diverse problems in science and engineering. Additionally, it is one of the most frequently utilized continuous pipeline optimization techniques. The original DE technique for minimizing nondifferentiable and potentially nonlinear continuous functions was developed by Storn and Price (1997). Interestingly, while DE is an evolutionary algorithm, it does not follow any known biological paradigm. DE has shown excellent optimization performance in several scientific areas. It is a population-based evolutionary algorithm that explores the design space by applying random mutation, crossover, and selection operators to candidate solutions.

In contrast to its more advanced variants, standard DE has a simple set of just three control parameters. As with other optimization strategies, the choice of trial vector generation method and control parameters affects the DE performance for a given application. First, a decision must be made on the trial vector generation method. Then the control parameters must be adjusted to provide optimal results. Getting the appropriate control settings for complex problems is time-consuming and complicated. This difficulty has inspired researchers to develop newer and more sophisticated varieties of DE with adaptive and self-adaptive control settings, in which the settings are modified based on feedback from the search process (Eiben et al. 1999). Shukla et al. (2017) introduced a new methodology for mutation vector production for the basic DE that eliminates the stagnation issue. The authors developed a novel variation of DE, and a series of 24 benchmark functions were used to assess its performance. The study suggested that parents be selected using a tournament-based variation of the DE method to increase searching capacity and improve convergence time. The following section discusses the steps in DE.

Step 1: Initialization

The DE starts with the initialization of parameter vectors. A set of these vectors is referred to as a population. Each parameter should be selected between the upper and lower bounds given in Eq. (7).

As a rule of thumb, all the random choices for the initial population follow a uniform probability distribution, i.e., any vector is equally likely to be chosen.

Step 2: Mutation

Each vector in the population now undergoes mutation, recombination, and selection. In the mutation process, a donor vector \(\overrightarrow {{V_{d} }} \left( t \right)\) is generated for a given vector X using the randomly selected vectors \(\overrightarrow {{X_{rand1} }} \left( t \right)\), \(\overrightarrow {{X_{rand2} }} \left( t \right)\), and \(\overrightarrow {{X_{rand3} }} \left( t \right)\). The weighted difference of the two vectors \(\overrightarrow {{X_{rand2} }} \left( t \right)\) and \(\overrightarrow {{X_{rand3} }} \left( t \right)\) is added to the third vector \(\overrightarrow {{X_{rand1} }} \left( t \right)\). Equation (8) represents the methodology.

F is the mutation factor, which lies in the interval [0, 2].
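The mutation step described above can be sketched in a few lines of Python. The sketch assumes the three random vectors are distinct from each other and from the target vector, which is the usual convention; the function name is hypothetical.

```python
import random


def donor_vector(population, target_index, F, rng):
    """Return X_rand1 + F * (X_rand2 - X_rand3) for three distinct random vectors."""
    candidates = [i for i in range(len(population)) if i != target_index]
    r1, r2, r3 = rng.sample(candidates, 3)
    x1, x2, x3 = population[r1], population[r2], population[r3]
    return [a + F * (b - c) for a, b, c in zip(x1, x2, x3)]
```

The population therefore needs at least four vectors for the sampling to succeed.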

Step 3: Recombination

The process incorporates the successful solutions from the previous generation created through mutation. The trial vector \(\overrightarrow {{U_{d} }} \left( {t + 1} \right)\) is generated from the target vector \(\overrightarrow {{X_{d} }} \left( t \right)\) and donor vector \(\overrightarrow {{V_{d} }} \left( t \right)\). Equation (9) represents the trial vector calculations.

The subscript d represents the dth dimension, and Co represents the crossover rate. \(d_{rand}\) represents a randomly selected integer from the set {1, 2, …, D}.
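A minimal sketch of the recombination step follows, assuming the common binomial form in which each component is taken from the donor with probability Co and the component at index d_rand is always taken from the donor. Indices run from 0 in the code rather than from 1, and the function name is hypothetical.

```python
import random


def trial_vector(target, donor, Co, rng):
    """Mix target and donor component by component (binomial crossover)."""
    D = len(target)
    d_rand = rng.randrange(D)  # this component always comes from the donor
    return [
        donor[d] if (rng.random() < Co or d == d_rand) else target[d]
        for d in range(D)
    ]
```

The forced component guarantees that the trial vector differs from the target vector in at least one dimension whenever the donor does.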

Step 4: Selection

The selection is made between the trial vector \(\overrightarrow {{U_{d} }} \left( {t + 1} \right)\) and the target vector \(\overrightarrow {{X_{d} }} \left( t \right)\) using Eq. (10). The one with the lower objective function value is admitted to the next generation.

Step 5: Termination

Mutation, recombination, and selection continue until some stopping criterion is reached.
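Putting the five steps together, a compact DE loop might look like the sketch below. It is an illustration under stated assumptions rather than a reference implementation: the default values of pop_size, F, Co, and generations, the clipping of components to the bounds, and the fixed generation count used as the stopping criterion are all choices made here.

```python
import random


def differential_evolution(objective, bounds, pop_size=20, F=0.5, Co=0.9,
                           generations=200, seed=0):
    """Minimize `objective` over box `bounds` with a basic DE loop."""
    rng = random.Random(seed)
    D = len(bounds)
    # Step 1: uniform random initialization within the bounds.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    cost = [objective(x) for x in pop]
    for _ in range(generations):  # Step 5: stop after a fixed number of generations
        for i in range(pop_size):
            r1, r2, r3 = rng.sample([j for j in range(pop_size) if j != i], 3)
            d_rand = rng.randrange(D)
            trial = []
            for d in range(D):
                if rng.random() < Co or d == d_rand:
                    # Steps 2 and 3: donor component, clipped to the bounds.
                    value = pop[r1][d] + F * (pop[r2][d] - pop[r3][d])
                    lo, hi = bounds[d]
                    value = min(max(value, lo), hi)
                else:
                    value = pop[i][d]
                trial.append(value)
            # Step 4: keep whichever of trial and target has the lower value.
            trial_cost = objective(trial)
            if trial_cost <= cost[i]:
                pop[i], cost[i] = trial, trial_cost
    best = min(range(pop_size), key=cost.__getitem__)
    return pop[best], cost[best]
```

For example, differential_evolution(lambda x: sum(v * v for v in x), [(-5, 5)] * 3) searches for the minimum of a three-variable sphere function.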

Figure 12 shows the methodology of DE working through a flow chart.

Differential evolution algorithm (Fu et al. 2017)

Application of DE to the pipeline industry

The technique is widely used for optimizing water distribution networks. Suribabu (2010) and Babu et al. (2003) utilized the method to optimize the cross-country gas pipeline network design and found that the technique was robust and took less computational time. Krause et al. (2015) presented two different variants of DE that used binary and continuous coding for scheduling in pipeline networks. Biju et al. (2015) applied DE to a project scheduling problem.

Particle swarm optimization

PSO was originally proposed by Eberhart and Kennedy (1995) and was meant to simulate social behavior by depicting the movement of creatures in a bird flock or fish school in a stylized manner. The foundation of the PSO technique is to allow information to be shared among the group members so that the group's movement in the solution space evolves from disorder to order. For each optimization problem, a candidate solution is represented by a particle whose velocity and position are updated through Eqs. (11) and (12). Of the three terms in Eq. (11), the first reflects the particle's prior velocity, the second its own past experience, and the third the best particle found by the population (Yu et al. 2021). This means that the particle's next move is determined by its own experience and that of its companions:

where \(V_{id}^{k}\) is the dth component of the particle velocity vector; \(X_{id}^{k}\) indicates the dth element of the position vector; c1 and c2 represent the learning factors, which are used to adjust the maximum step size of learning; r1 and r2 are two random numbers ranging from 0 to 1, which are used to increase the randomness of the search process; and ω is the inertia weight, which adjusts the search ability over the solution space. The fundamental algorithm of PSO works in the following five steps.

- Step 1: Initialize all particles. Set the population size to S and the dimension of the search space to D. Provide a random starting location and initial velocity for each particle; record each particle's best location as Pi and the best of all Pi as Pg.

- Step 2: Update each particle's velocity and location according to Eqs. (11) and (12). Verify that the particle velocity lies within [−Vmax, Vmax] and that the particle position lies within [−Xmax, Xmax].

- Step 3: Calculate each particle's adaptation value in terms of the objective function.

- Step 4: Compare the adaptation value obtained in step 3 for each particle with that of the individual's best location, Pi. If it is superior, make the current location the new Pi. Furthermore, compare the adaptation value with the group's best adaptation value at Pg. If it is superior, make the current location the new Pg.

- Step 5: Determine whether the algorithm's termination criteria have been met. If so, output the pertinent findings; otherwise, go to step 2.
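The five steps can be sketched as the following Python loop for a minimization problem. The update rule is assumed to be the standard inertia-weight form, since Eqs. (11) and (12) are not restated here; the default parameter values, the per-dimension bounds used in place of [−Xmax, Xmax], and the choice of Vmax as half the bound width are illustrative assumptions.

```python
import random


def pso(objective, bounds, swarm_size=20, iterations=100,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` over box `bounds` with a basic particle swarm."""
    rng = random.Random(seed)
    D = len(bounds)
    v_max = [0.5 * (hi - lo) for lo, hi in bounds]
    # Step 1: random positions and velocities; personal bests Pi, global best Pg.
    X = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(swarm_size)]
    V = [[rng.uniform(-vm, vm) for vm in v_max] for _ in range(swarm_size)]
    P = [x[:] for x in X]
    P_cost = [objective(x) for x in X]
    g = min(range(swarm_size), key=P_cost.__getitem__)
    Pg, Pg_cost = P[g][:], P_cost[g]
    for _ in range(iterations):  # Step 5: fixed iteration budget
        for i in range(swarm_size):
            for d in range(D):
                # Step 2: inertia + own experience + swarm experience.
                r1, r2 = rng.random(), rng.random()
                V[i][d] = (w * V[i][d]
                           + c1 * r1 * (P[i][d] - X[i][d])
                           + c2 * r2 * (Pg[d] - X[i][d]))
                V[i][d] = min(max(V[i][d], -v_max[d]), v_max[d])
                lo, hi = bounds[d]
                X[i][d] = min(max(X[i][d] + V[i][d], lo), hi)
            cost = objective(X[i])  # Step 3: adaptation value
            if cost < P_cost[i]:    # Step 4: update Pi, then Pg
                P[i], P_cost[i] = X[i][:], cost
                if cost < Pg_cost:
                    Pg, Pg_cost = X[i][:], cost
    return Pg, Pg_cost
```

The function returns the best location found and its objective value.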

The PSO can be implemented quickly, has only a few parameters to tune, and performs well in path planning. However, its local search ability is limited: it can settle into a local optimum and lose particle diversity, which reduces precision and accuracy in path planning. Figure 13 shows the working of the PSO technique.

Application of PSO to the pipeline industry

There have been quite a few particle swarm optimization applications in the pipeline industry. For example, the technique was used to minimize the power consumption in compressors (Zheng and Wu 2012). It was found that the PSO technique gave results within a 1% deviation of those from ACO and DP but was faster. Shi et al. (2019) utilized the technique to solve transient gas pipeline network problems. Moghadam et al. (2020) analyzed scouring around subsea pipelines using an ANFIS network combined with PSO. Madoliat et al. (2017) used the PSO method for the transient study of gas pipeline networks. Wu et al. (2014) used the method to maximize trunk gas pipeline performance.

Whale optimization algorithm

Mirjalili and Lewis (2016) put forward the whale optimization algorithm (WOA) to solve complicated numerical problems. The algorithm mimics humpback whale intelligence by simulating the bubble-net feeding behavior unique to this species. The humpback whales dive down approximately 12 m, encircle their prey with a spiral of bubbles, thus creating a bubble net, and then rise to the top following the bubbles. The mathematical model of encircling prey, the bubble-net attacking method, and the search for prey is described in the following steps.

Step 1: Encircling prey (exploitation phase)

Humpback whales can find the location of prey and encircle them. The WOA algorithm considers the current best search agent position to be the target prey or close to the optimum point, and the other search agents try to update their positions toward the best search agent. Equations (13) and (14) represent this behavior.

where (t) indicates the current iteration; \(\overrightarrow {{X_{p} }}\) is the position vector of the prey; \(\overrightarrow {X}\) denotes the position vector of the whale; \(\overrightarrow {A}\) and \(\overrightarrow {C}\) are coefficient vectors and are calculated from Eqs. (15) and (16).

where \(\overrightarrow {a}\) is a parameter whose value decreases linearly from 2 to 0 as the iteration proceeds.

\(\overrightarrow {{r_{1} }}\) and \(\overrightarrow {{r_{2} }}\) are random vectors whose components lie in the interval [0, 1].
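The encircling update can be sketched as follows. Because Eqs. (13) to (16) are not restated here, the sketch assumes the forms from the original WOA formulation, namely D = |C·Xp − X|, X(t+1) = Xp − A·D, A = 2a·r1 − a, and C = 2·r2, with fresh random numbers drawn for every component; the function name is hypothetical.

```python
import random


def encircle_prey(position, best, a, rng):
    """Move a whale toward the current best position (assumed Eqs. 13-16)."""
    new_position = []
    for x, x_best in zip(position, best):
        A = 2 * a * rng.random() - a      # coefficient in [-a, a)
        C = 2 * rng.random()              # coefficient in [0, 2)
        D = abs(C * x_best - x)           # distance to the (scaled) prey
        new_position.append(x_best - A * D)
    return new_position
```

When a has decayed to 0, every A is 0 and the whale lands exactly on the best position.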

Step 2: Bubble-net attacking method (exploitation phase)

The bubble-net method is a combination of two strategies that may be quantitatively described in the following two mechanisms:

Mechanism 1: Shrinking encircling mechanism

This mechanism is achieved by decreasing the value of \(\overrightarrow {a}\) from 2 to 0 over subsequent iterations, which reduces the fluctuation range of \(\overrightarrow {A}\); the values of \(\overrightarrow {A}\) lie in [−a, a]. By setting random values for \(\overrightarrow {A}\) in [−1, 1], the new location of a search agent may be anywhere between the agent's original position and the current best agent's position.

Mechanism 2: Spiral updating position

This technique starts by calculating the distance between the whale and the prey, located at (X, Y) and (X*, Y*). A spiral connection is established as per Eq. (17) between the whale's position and its prey to simulate the humpback whale's helix-shaped movement.

where \(\overrightarrow {D} = \left| {\overrightarrow {{X^{*} }} \left( t \right) - \overrightarrow {X} \left( t \right)} \right|\) represents the distance between the whale and the prey. 'b' indicates the shape of a logarithmic spiral, and t represents a random number in the range [−1, 1].

The humpback whales circle their prey in an ever-shrinking circle while simultaneously following a spiral-shaped path. To model this concurrent behavior, each whale is assumed to have a 50% chance of being updated using either the shrinking encircling method or the spiral model. The mathematical equation is given in Eq. (18).

where p is a random number in [0, 1].
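A minimal sketch of the bubble-net update follows. It assumes the spiral form X(t+1) = D·e^(bl)·cos(2πl) + X*(t) from the original WOA formulation and the encircling form X* − A·|C·X* − X|; the random number in [−1, 1] is named l in the code to keep it apart from the iteration counter, and the function names are hypothetical.

```python
import math
import random


def spiral_update(position, best, b, rng):
    """Move along a logarithmic spiral around the best position (assumed Eq. 17)."""
    l = rng.uniform(-1, 1)
    return [
        abs(x_best - x) * math.exp(b * l) * math.cos(2 * math.pi * l) + x_best
        for x, x_best in zip(position, best)
    ]


def shrinking_encircle(position, best, a, rng):
    """Shrinking encircling step with A drawn from [-a, a) per component."""
    new_position = []
    for x, x_best in zip(position, best):
        A = 2 * a * rng.random() - a
        C = 2 * rng.random()
        new_position.append(x_best - A * abs(C * x_best - x))
    return new_position


def bubble_net_update(position, best, a, b, rng):
    """Choose between the two mechanisms with equal probability (Eq. 18)."""
    if rng.random() < 0.5:
        return shrinking_encircle(position, best, a, rng)
    return spiral_update(position, best, b, rng)
```

A whale that already sits on the best position stays there under the spiral update, since its distance D is zero.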

Almost all meta-heuristic methods use random selection to discover the optimal solution. Because the location of the optimum design is unknown in the bubble-net technique, humpback whales hunt for food at random. The WOA algorithm begins with a collection of randomly generated solutions. After each cycle, search agents update their positions following the preceding explanations. WOA is a global optimization algorithm. The WOA algorithm's adaptive modification of the search vector A (\(\overrightarrow {A}\)) enables it to transition between exploration and exploitation seamlessly. WOA's superior exploring capability results from the whale's location update system.

Step 3: Search for prey (exploration phase)

A similar technique based on the variation of the vector \(\overrightarrow {A}\) is used to search for prey (exploration step). In reality, humpback whales search randomly according to the location of each other. WOA uses values of \(\overrightarrow {A}\) that are less than −1 or greater than 1. This forces the search agent to move far away from the reference whale. The exploration phase uses a randomly chosen search agent, in contrast to the exploitation phase, where the best search agent is used. Equations (19) and (20) show the mathematical formulation.

where \(\overrightarrow {{X_{rand} }}\) is a random position vector (a random whale) chosen from the current population.
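The switch between exploration and exploitation can be sketched as below. For readability the sketch treats A and C as scalars, which is a simplification of the vector form, and assumes Eqs. (19) and (20) mirror the encircling equations with the random whale in place of the best one; both function names are hypothetical.

```python
import random


def choose_reference(A, best, population, rng):
    """|A| >= 1: explore around a random whale; otherwise exploit the best one."""
    return rng.choice(population) if abs(A) >= 1 else best


def move_towards(position, reference, A, C):
    """Assumed form of Eqs. (19)-(20): X(t+1) = X_ref - A * |C * X_ref - X|."""
    return [ref - A * abs(C * ref - x) for x, ref in zip(position, reference)]
```

With |A| > 1 the new position overshoots the reference whale, which is what pushes the search agent away from it.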

Figure 14 depicts the working of WOA algorithms.

Whale optimization algorithm (Jiang et al. 2020)

Application of WOA to the pipeline industry

WOA is a newly developed algorithm. Recently the algorithm was used to predict short-term natural gas consumption (Qiao et al. 2020). Some applications of WOA have been seen in water supply networks (Ezzeldin and Djebedjian 2020).

Simulated annealing

In metallurgy, annealing is a process that involves heating and cooling a material to modify its crystal structure and eliminate defects. According to thermodynamic theory, raising the temperature of the metal increases its thermodynamic free energy, whereas lowering its temperature decreases it. The free energy decreases to a global minimum as the material nears equilibrium. By analogy, the simulated annealing (SA) algorithm proposed by Khachaturian et al. (1981) uses this kind of probabilistic search to approximate the global optimum of a given function. The objective function to be optimized plays the role of the thermodynamic free energy.

As the cooling process proceeds, a "neighbor" solution may replace the present solution even if the "neighbor" solution is worse, with an acceptance probability that falls as the temperature decreases. This feature strengthens the ability of SA to perform stochastic search and global optimization. Figure 15 depicts the methodology of the SA technique.
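The acceptance rule can be illustrated with a short Python sketch. It assumes the Metropolis criterion exp(−Δ/T) and a geometric cooling schedule, neither of which is specified in the text; the neighbor move, the default parameter values, and the function name are likewise illustrative.

```python
import math
import random


def simulated_annealing(objective, x0, step=0.5, t0=1.0, cooling=0.95,
                        iterations=500, seed=0):
    """Minimize `objective` starting from x0 with a basic annealing loop."""
    rng = random.Random(seed)
    current, current_cost = list(x0), objective(x0)
    best, best_cost = current[:], current_cost
    temperature = t0
    for _ in range(iterations):
        # Neighbor: small random perturbation of the current solution.
        neighbor = [x + rng.uniform(-step, step) for x in current]
        neighbor_cost = objective(neighbor)
        delta = neighbor_cost - current_cost
        # Always accept improvements; accept worse moves with prob. exp(-delta/T).
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_cost = neighbor, neighbor_cost
            if current_cost < best_cost:
                best, best_cost = current[:], current_cost
        temperature *= cooling  # geometric cooling (assumed schedule)
    return best, best_cost
```

Early on, when the temperature is high, worse neighbors are accepted often, which lets the search escape local minima; late in the run the loop behaves almost like a pure descent.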

Simulated annealing (Zhan et al. 2016)

Application of SA to the pipeline industry

Kirkpatrick et al. (1983) first proposed a general framework for large and complex combinatorial optimization. A hybrid GA and SA (GASA) method (Zhang and Liu 2017) was used to determine the maximum operational benefit of a large-scale gas pipeline network. Coli and Palazzari (1996) utilized the technique for a real-time pipeline system design.

The hybrid GASA, which incorporates an enhanced complicated algorithm, has a higher performance than the standard method. In Rodríguez et al. (2013), simulated annealing was used in conjunction with the GAMS algorithm to optimize the design of long-distance hydrocarbon pipeline networks. The combined approach (SAG) provided near-optimal solutions. Numerous studies have used SA to optimize gas pipeline networks (Chen et al. 2017; Samora et al. 2016; Zhou et al. 2014; Mahlke et al. 2007; Zhan et al. 2016).

This section discussed the optimization fundamentals and the optimization strategy used to maximize the pipeline's operational benefits. Six contemporary optimization algorithms were discussed in detail. The following section discusses the challenges the industry currently faces in implementing these techniques in pipeline operations.

Future scope and challenges

Application of optimization in the pipeline business is unquestionably beneficial in attaining financial gains. However, researchers must consider various issues to reap the possible operational advantages of pipeline transportation. This section discusses some of the current concerns that need to be solved. Additionally, various solutions are offered.

The risk associated with pipelines

Pipeline safety has lately garnered more attention due to the potential for catastrophic accidents and economic damage in the event of a pipeline failure. Including a pipeline reliability objective, such as the risk associated with pipe rupture, corrosion, or the formation of gas hydrates, in current models makes it possible to simultaneously optimize the economic, environmental, and safety aspects of pipeline operation. Further extensive study is needed on quantitative risk assessment, failure probability prediction, and failure consequence calculations. Since the safety objective has a different dimension than current objective functions, a suitable decision technique should be created to pick the optimal answer from the Pareto front if a multi-objective function is employed.
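To make the idea of picking an answer from the Pareto front concrete, the sketch below filters non-dominated points and then selects the one closest to the ideal point after normalizing each objective. This distance-based rule is only one possible decision technique, chosen here for illustration; all objectives are assumed to be minimized, and the (cost, risk) pairs in the usage note are hypothetical.

```python
def dominates(a, b):
    """a dominates b if it is no worse in every objective and better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


def closest_to_ideal(front):
    """Pick the front member nearest the ideal point after min-max scaling."""
    n = len(front[0])
    lows = [min(p[i] for p in front) for i in range(n)]
    highs = [max(p[i] for p in front) for i in range(n)]

    def score(p):
        return sum(
            ((v - lo) / (hi - lo)) ** 2 if hi > lo else 0.0
            for v, lo, hi in zip(p, lows, highs)
        )

    return min(front, key=score)
```

For the hypothetical (cost, risk) pairs (1, 5), (2, 2), (5, 1), (3, 3), and (4, 4), the front consists of the first three points, and the compromise chosen by this rule is (2, 2). The scaling step is what allows objectives with different dimensions to be compared.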

Steady state vs. transient state

The natural gas business has been changing, and daily transport operations have become more flexible. While this provides tremendous possibilities for development, the natural gas infrastructure needs analytical techniques to support its operation. With the technical advancements of the past several decades, these techniques are now used and improved by the scientific community. There are still many areas where the scientific community confronts challenges in terms of optimization. One of the most significant challenges in the natural gas transportation industry is incorporating and solving time-dependent models in the analysis. Research shows that, in contrast to steady-state optimization, the number of constraint equations and decision variables rises with transient optimization. The changing gas flow, velocity, density, pressure, and temperature may be accounted for using partial differential equations in transient optimization. Although quasi-steady optimization techniques have been utilized in the literature to approximate the transient outcomes, they do not accurately portray the transient process. The optimization problem is more intricate as a consequence. Despite this, stochastic optimization methods are better suited than traditional techniques to transient problems. In the framework of ModeFRONTIER (design optimization software developed by the Italian company ESTECO Spa), Chebouba et al. (2006) first used the NSGA-II solver to maximize a pipeline's line pack capacity and then minimized the pipeline's power consumption over a period of 24 h. They asserted that the technique would reduce carbon dioxide emissions since these correlate with the compressor's power. Mahlke et al. (2007) utilized simulated annealing (SA) to solve the fuel consumption minimization problem for a transient gas pipeline. They crafted an energy function that included the objective function and all the constraints with a penalty function. The methods for SA were explained in great depth, including the neighborhood structure design, the selection of step size, the creation of the first viable solution, and the cooling scheme. One important tool was the use of piecewise linear functions, which were utilized to approximate the nonlinear equations of the optimization model. Following these specific methods, the combination of SA and an industry algorithm was developed and evaluated in three real-world scenarios with 11–31 pipelines and 3–15 compressor stations supplied by the German gas provider E.ON Ruhrgas AG (Sinn 2002). Liu et al. (2019a, b, c) created a transient state model for the West–East gas pipeline network to reduce fuel usage. Heuristic techniques were used to optimize the model. A comparison of the steady-state and the transient state models was also carried out.
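The idea of folding constraints into an energy function can be sketched with a generic quadratic penalty. This is not the formulation used in the cited study, only a common textbook form; constraints are assumed to be written as g(x) ≤ 0, and the function name and penalty weight are hypothetical.

```python
def penalized_energy(objective, constraints, penalty_weight):
    """Build E(x) = f(x) + weight * sum(max(0, g_i(x))^2) for g_i(x) <= 0."""
    def energy(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + penalty_weight * violation
    return energy
```

For instance, with a hypothetical fuel term f(x) = x[0] and a minimum-pressure constraint written as 2 − x[0] ≤ 0, a feasible point keeps its original objective value, while an infeasible one is charged in proportion to the squared violation.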

Oversimplification of model

When optimizing the objective functions, the constraints must be adjusted to reflect pipeline operating conditions. Because some constraints use simplified versions of the gas flow governing equations, they cannot portray the pipe flow accurately. Consequently, the solutions will not completely satisfy the pipeline's hydraulic and thermal limits. The original governing equations must be included in the optimization model to optimize the pipeline's operation properly, which makes the model more complicated. It is possible to resolve this problem by combining the pipeline simulation and the optimization models.

The steady-state assumption implies that the flow decision variables in the system are time-independent. It is then feasible to describe the flow of natural gas across a pipeline network by using algebraic equations. In the transient case, partial differential equations express the continuity, energy, and momentum balances that relate the decision variables, such as gas flow, velocity, density, pressure, and temperature, over time. Due to the difficulty of this situation, the number of variables involved, and the intricacy of the problem itself, research in this field is still preliminary. Moreover, despite the problem being transient in practice, it is commonly assumed to be steady-state. A pipeline's long-term operational period also influences the mathematical model's output.

Stochastic vs. deterministic methods

Deterministic techniques can provide an initial solution for stochastic algorithms. The challenge is to develop optimization methods that are both efficient and robust. The capacity to solve complex optimization problems with high computing efficiency and the ability to find global optima are two of the most significant advantages of stochastic optimization methods. Among the GA, ACO, PSO, SA, and DE algorithms, only GA is used significantly in the industry. Although more study is being carried out on the other algorithms, they have not yet gained mainstream acceptance (Wu et al. 2018).

Solution quality

Distribution pipeline design is also a strategic optimization challenge, with the fundamental criterion being solution quality. Algorithms derived from natural phenomena can perform this task efficiently. The operational optimization problem requires a balance between timeliness and solution accuracy. The solution quality is critical since the application duration is often several days to several months, much longer than the optimization algorithm's execution time. Additionally, due to the complexity of the constraints, conventional optimization methods have a difficult time locating the actual global optimum. A modern population-based approach is an excellent option in this situation. The batch scheduling problem is an operational-level optimization task whose primary stumbling block is the large number of constraints.

So far, only a few effective implementations of the population-based method have been published. It has the potential to become an active research topic in the future.

Sensitivity analysis

It is critical to conduct a sensitivity analysis of relevant parameters based on many possible scenarios. General principles for optimum parameters should be derived from the anticipated accomplishments. Then, it is necessary to determine the effectiveness of each optimization method to discover the parameter ranges of each, which will offer advice for selecting an optimization technique for a particular instance.

Blend of different optimization techniques

Enhancement strategies should be studied to address the limitations of current optimization algorithms, based on sensitivity analyses and algorithm comparisons. Hybrid SA-GA and DE-GA algorithms may significantly improve on the current algorithms since they integrate the strengths of several techniques. Although the literature on the subject is limited, some published work exists. Hybrid algorithms such as GA-PSO (Manasrah and Ba Ali 2018) and GA-ACO (Fidanova et al. 2014) have not yet been applied to pipeline optimization. Most existing research has addressed optimization models for gun-barrel or branching pipeline systems. Recently, Li et al. (2021) used DWOA, a blend of the differential evolution and whale optimization algorithms, to optimize load sharing between compressor stations. The technique was shown to outperform DE and WOA in accuracy and stability. Fettaka and Thibault (2013) proposed the FP-NSGA-II algorithm, which combines GA with front prediction to minimize the power consumption of compressors.
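To make the idea of hybridization concrete, the sketch below combines two of the techniques named in this review in one simple way. It is not DWOA, FP-NSGA-II, or any other cited algorithm; it is an illustrative assumption that pairs DE mutation and crossover (mutation factor F, crossover rate, donor, trial, and target vectors) with an SA-style acceptance rule under a cooling temperature. The function name `de_sa_minimize`, the toy `sphere` objective, and all default parameter values are hypothetical.

```python
import math
import random


def sphere(x):
    """Toy objective standing in for a pipeline cost model (assumption)."""
    return sum(v * v for v in x)


def de_sa_minimize(objective, dim, lower, upper, F=0.5, CR=0.9,
                   pop_size=20, generations=80, t0=1.0, cooling=0.95, seed=0):
    """DE/rand/1/bin search with SA-style acceptance of trial vectors."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lower, upper) for _ in range(dim)]
           for _ in range(pop_size)]
    cost = [objective(x) for x in pop]
    best_i = min(range(pop_size), key=cost.__getitem__)
    best_x, best_cost = pop[best_i][:], cost[best_i]
    temperature = t0
    for _ in range(generations):
        for i in range(pop_size):
            # Three distinct random vectors, all different from target i.
            others = [j for j in range(pop_size) if j != i]
            r1, r2, r3 = rng.sample(others, 3)
            d_rand = rng.randrange(dim)  # forces at least one donor component
            trial = []
            for d in range(dim):
                if rng.random() < CR or d == d_rand:
                    donor = pop[r1][d] + F * (pop[r2][d] - pop[r3][d])
                    trial.append(min(max(donor, lower), upper))
                else:
                    trial.append(pop[i][d])
            trial_cost = objective(trial)
            delta = trial_cost - cost[i]
            # SA step: always accept improvements, sometimes accept worse
            # trials, with a probability that shrinks as temperature falls.
            if delta <= 0 or rng.random() < math.exp(-delta / temperature):
                pop[i], cost[i] = trial, trial_cost
                if trial_cost < best_cost:
                    best_x, best_cost = trial[:], trial_cost
        temperature *= cooling
    return best_x, best_cost


if __name__ == "__main__":
    x, c = de_sa_minimize(sphere, dim=3, lower=-5.0, upper=5.0)
    print("best cost:", c)
```

The best solution is tracked separately from the population because the SA acceptance rule can replace a good vector with a worse one. Whether such a combination helps on a real pipeline model would have to be checked case by case.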

Role of online software

More investigation is needed into improving the performance of more complicated cyclic pipeline systems. It is also essential to study online optimization technologies. Pipelines are already equipped with SCADA systems. A potential way to make full use of optimization results is to combine online simulation technology with pipeline operation optimization technology. With online optimization in place, initial solutions can be chosen from the accurate pipeline operating data gathered by the SCADA system. The optimization results may then provide valuable information for operating the pipeline. Additionally, a decision-making system will decide whether to implement the proposed optimum operating scheme. One of the key questions in developing an online optimization technology is how to link the simulation model, optimization model, and SCADA system (Wu et al. 2018).

Surplus capacity

Surplus pipeline capacity that arises from contractual obligations while transportation is still being managed is a significant issue for the natural gas industry. Models that include pipeline capacity optimization are needed for analysis. Operating natural gas compressor stations also presents a host of configuration difficulties because of volume fluctuations.

Unidirectional gas flow

In addition, most papers focus on steady-state, one-way gas flow. Most researchers appear to assume that valves exist solely to prevent flow in one direction. However, steady-state flow models that include reversible flows may contribute significantly to the analysis of pipeline networks whose main lines connect to storage facilities and allow flow in either direction.
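One common way to let a steady-state pipe model carry flow in either direction is to write the pressure-drop relation with a signed flow term. The sketch below assumes the generic quadratic form \(p_i^2 - p_j^2 = K\,Q\,|Q|\), where K is a lumped pipe resistance. The function name `signed_flow` and the coefficient `resistance` are hypothetical and are not taken from any of the reviewed models.

```python
import math


def signed_flow(p_i, p_j, resistance):
    """Flow from node i to node j for p_i**2 - p_j**2 = resistance * Q * |Q|.

    A negative result means the gas moves from j to i. `resistance` is a
    lumped, positive pipe coefficient (assumption).
    """
    delta = p_i ** 2 - p_j ** 2
    return math.copysign(math.sqrt(abs(delta) / resistance), delta)


if __name__ == "__main__":
    print(signed_flow(50.0, 40.0, 9.0))   # forward flow
    print(signed_flow(40.0, 50.0, 9.0))   # reversed flow
```

Because the sign of the flow follows the sign of the squared-pressure difference, the same equation covers injection into and withdrawal from a storage facility without a separate model for each direction.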

Heat transfer to surrounding and elevation

Steady-state models assume that the gas is isothermal at the intake temperature. In addition, researchers assume that heat transfer with the surroundings of the pipeline system keeps the temperature constant. The transmission lines are also assumed to be horizontal in these works. In reality, these systems experience regular variations in elevation. Additional variables are therefore needed to account for elevation changes.
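As an illustration of such additional variables, a widely used textbook correction for a single uniform slope introduces a dimensionless elevation parameter \(s = 2 g M \Delta h / (Z R T)\) and an equivalent length \(L_e = L (e^{s} - 1)/s\), so that the isothermal relation becomes \(p_1^2 - e^{s} p_2^2 = K L_e Q^2\). The sketch below only evaluates these two quantities. It is an assumption added for illustration, not a formula from the reviewed papers, and the function names and sample inputs are hypothetical.

```python
import math

G_ACCEL = 9.80665      # gravitational acceleration, m/s^2
R_GAS = 8.314462618    # universal gas constant, J/(mol K)
M_AIR = 0.0289647      # molar mass of air, kg/mol


def elevation_parameter(gas_gravity, delta_h, temperature, z_factor):
    """Dimensionless s = 2 g M dh / (Z R T), with M = gas_gravity * M_AIR.

    delta_h is outlet elevation minus inlet elevation in metres and
    temperature is the mean flowing temperature in kelvin.
    """
    molar_mass = gas_gravity * M_AIR
    return 2.0 * G_ACCEL * molar_mass * delta_h / (z_factor * R_GAS * temperature)


def equivalent_length(length, s):
    """Equivalent length L_e = L (e^s - 1) / s; equals L for a level pipe."""
    if abs(s) < 1e-12:
        return length
    return length * math.expm1(s) / s


if __name__ == "__main__":
    s = elevation_parameter(gas_gravity=0.6, delta_h=100.0,
                            temperature=293.15, z_factor=0.9)
    print("s =", s, " L_e =", equivalent_length(1000.0, s))
```

For a pipeline with several slopes, the correction is usually applied segment by segment. That extension is left out here to keep the sketch short.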

Problems due to corrosion

Applying optimization to the pipeline industry improves customer satisfaction with gas quality and brings considerable benefits to the pipeline system. However, optimization results can sometimes contain discrepancies. For example, optimization may lead to inaccurate pipeline corrosion predictions based on sulfur compound production in acidic gas (Thakur et al. 2020, 2021a, b). Gas constituents may change seasonally or even more often, which creates difficulties. For the natural gas transit sector, safety and dependability are important considerations.

Electricity and gas networks in combination

Researchers have suggested a new concept known as the energy network, which encompasses the electrical and gas networks. Electricity and gas production are treated as sources, and all energy users draw their energy from energy hubs. Converting between energy forms (electricity and natural gas) increases the flexibility of the energy network and enables full use of all available energy sources. Researchers use the term "energy flow" to cover both "current flow" and "gas flow."

In short, the energy network optimization model is a nonconvex, nondifferentiable, high-dimensional, and highly nonlinear optimal energy flow model, and solving it is a serious challenge. Beigvand et al. (2016) showed that the gravitational search algorithm, PSO, GA, and DE can be used to solve the operational optimization of combined electricity and gas networks. Wang et al. (2019) used the fruit fly optimization algorithm to optimize integrated electric-natural gas networks. A variety of hybrid and extended stochastic optimization methods have already been shown to improve natural gas pipeline operation optimization. They are expected to have an even greater impact on energy network operation optimization in the future.

New optimization algorithms

In recent years, various new optimization algorithms have been developed that are not yet mature enough to be implemented in pipeline operations. Contemporary population-based optimization approaches have shown remarkable results in designing large-scale distribution pipelines, while traditional optimization techniques are limited to relatively simple problems. Algorithms such as cuckoo search (Maliki 2020), bat algorithms (Fister 2013), intelligent systems (MohamadiBaghmolaei et al. 2014), firefly algorithms (Banati and Bajaj 2011), gravitational search methods (Rashedi et al. 2009), fuzzy logic (Jana et al. 2017), fruit fly (Hu et al. 2021), and memetic algorithms (Banos et al. 2010) should be applied to pipelines to achieve operational benefits.

Summary and conclusions

The gas pipeline industry faces an extensive list of optimization difficulties. The paper discussed the methodology adopted to improve the profitability of gas pipelines using a variety of widely accepted criteria. Complexity, scalability, and algorithmic performance in optimizing pipeline operations were discussed. The methods suggested for overcoming these issues were also examined. The following conclusions can be drawn.

- Traditional optimization of the gas pipeline network is computationally difficult and time-consuming. Thus, intelligent optimization techniques with good global search ability and robustness have drawn extensive attention and are widely used.

- The gas pipeline optimization model is an MINLP model with a nonconvex feasible region and continuous, discrete, and integer optimization variables. Today's optimization models concentrate on fuel consumption, line pack, compressor costs, and greenhouse gas emissions, while pipeline safety gets less attention. Introducing a pipeline safety objective function would allow economic, environmental, and safety aspects of pipeline operation to be optimized together.

- The most significant impediment to using natural gas reserves efficiently is that many optimization approaches are theoretically sound but difficult to apply in practice because of their various assumptions. Numerous sophisticated technologies capable of responding rationally to changing conditions are required to stimulate demand. Increased efficiency in using conventional natural gas pipelines would bring significant economic benefits to the natural gas industry, exceeding original estimates. The scientific community's continued contributions are expected to speed this trend.

- Since 1985, stochastic optimization methods have been applied to the MINLP problem. GA and its extensions have been used successfully in many pipeline transportation problems of varying sizes and topologies, such as gun-barrel, tree, and cyclic networks. While the PSO, ACO, and SA algorithms are less common, PSO has proven quicker than GA and ACO. However, these stochastic optimization techniques have yet to overcome the problem of premature convergence.

- Hybrid algorithms help overcome the limitations of existing algorithms by combining the advantages of two or more stochastic algorithms, but applications to gas pipeline operation optimization remain rare.

- Integrating optimization software, simulation software, decision-making software, and SCADA software into one system makes effective use of optimization results. The data obtained from pipeline operation, which form the foundation of the optimization model, may be used to fine-tune pipeline control.

- The transient and operational optimization of the integrated energy network is also discussed in the study.

- We investigated stochastic algorithms including GA, PSO, ACO, SA, DE, and others to improve pipeline operational stability.

- The article reviews the application of optimization techniques in pipeline design. It shows that many subjects were researched during the last decade. New mathematical programming techniques are continuously developed and used to improve optimization results. Using optimization techniques in pipeline design may offer significant advantages.

Finally, because this study offers a distinct perspective on the development of natural gas pipeline optimization, we expect it to be of substantial benefit to the technical, scientific, and industrial sectors. In response to pipeline optimization concerns, this extensive literature review gives an overview of the subject's current state and proposes future research directions. Research in this area is still in its infancy, and this work uses a systematic review to outline the obstacles that need to be addressed and possible solutions.

Abbreviations

- \(F_{\text{MO}}\): Multi-objective function
- \(w\): Weight factor
- \(f\): Number of functions (f = 1, 2, 3…)
- \(F_{f}\): Function value (f = 1, 2, 3…)
- \(P^{i}(x)\): Gaussian probability distribution
- \(p_{l}^{i}(x)\): Probability function for individual functions
- \(c\): Value stored in the matrix
- \(X_{j}^{L}\), \(X_{j}^{U}\): Lower and upper bounds of variables
- \(\overrightarrow{X}(t)\): Vector
- \(F\): Mutation factor (differential evolution)
- \(\overrightarrow{U_{d}}(t + 1)\): Trial vector
- \(V_{id}^{k}\): dth component of the particle velocity vector
- \(X_{id}^{k}\): dth element of the position vector
- \(\omega\), \(c_{1}\), \(c_{2}\): Learning factor
- \(r_{1}\) and \(r_{2}\): Random functions ranging from 0 to 1
- \(\overrightarrow{D}\): Distance between the whale and the prey
- \(\overrightarrow{A}\), \(\overrightarrow{C}\): Position vectors
- \(C_{O}\): Crossover rate
- \(p\): Random number in [0, 1]
- \(\mu\): Mean of solution
- \(x\): Variable
- \(q\) and \(k\): Parameters
- \(\sigma\): Standard deviation
- \(\pi\): 3.14
- \(d\): Solution in the matrix
- \(\xi\): Convergence speed
- \(\overrightarrow{V_{d}}(t)\): Donor vector
- \(\overrightarrow{X_{rand1}}(t)\), \(\overrightarrow{X_{rand2}}(t)\), \(\overrightarrow{X_{rand3}}(t)\): Randomly selected vectors
- \(d_{rand}\): Randomly selected integer
- \(\overrightarrow{X_{d}}(t)\): Target vector
- \(P_{i}\): Individual's best adaptation value
- \(P_{g}\): Group's optimal adaptation value
- \([-V_{\max}, V_{\max}]\): Lower and upper bounds for velocity of particle
- \([-X_{\max}, X_{\max}]\): Lower and upper bounds for the position of particle
- \(\overrightarrow{r_{1}}\) and \(\overrightarrow{r_{2}}\): Random numbers in the interval [0, 1]
- \(\overrightarrow{a}\): 2 to 0
- \(b\): Shape of a logarithmic spiral
- \(\overrightarrow{X_{rand}}\): Random position vector

References

AEO (2021) https://www.aeo.org.uk/covid-19

Ahmadi P, Dincer I (2011) Thermodynamic and exergoenvironmental analyses and multi-objective optimization of a gas turbine power plant. Appl Therm Eng 31:2529–2540

Amir H, Reza M (2014) Techno-economic and environmental optimization of natural gas network operation. Chem Eng Res Des 92(11):2106–2122

Arya AK, Honwad S (2016) Modeling, simulation, and optimization of a high-pressure cross-country natural gas pipeline: application of an ant colony optimization technique. J Pipeline Syst Eng Pract 7(1):04015008. https://doi.org/10.1061/(asce)ps.1949-1204.0000206

Arya AK, Honwad S (2018) Optimal operation of a multi-source multi delivery natural gas transmission pipeline network. Chem Prod Process Model 13(3):1–17. https://doi.org/10.1515/cppm-2017-0046

Arya AK, Jain R, Yadav S, Bisht S, Gautam S (2021) Recent trends in gas pipeline optimization. Mater Today: Proc

Azadeh A, Raoofi Z, Zarrin M (2015) A multi-objective fuzzy linear programming model for optimization of natural gas supply chain through a greenhouse gas reduction approach. J Nat Gas Sci Eng 26:702–710. https://doi.org/10.1016/j.jngse.2015.05.039

Babu BV, Angira R, Chakole PG, Syed Mubeen JH (2003) Optimal design of gas transmission network using differential evolution. Department of Chemical Engineering, Birla Institute of Technology & Science, Pilani, India

Balogun AL, Matori AN, Hamid-Mosaku AI, Umar Lawal D, Ahmed Chandio I (2017) Fuzzy MCDM-based GIS model for subsea oil pipeline route optimization: an integrated approach. Mar Georesour Geotechnol 35(7):961–969

Banati H, Bajaj M (2011) Fire fly based feature selection approach. Int J Comput Sci Issues (IJCSI) 8(4):473