Abstract

The prediction of production volumes from shale gas wells is important in reservoir development. The physical parameters of a reservoir are uncertain and complex, and therefore, it is very difficult to predict the production capability of a shale gas well. An improved GM(1, N) model for shale gas well productivity prediction, focused upon the causes of prediction errors from the existing traditional GM(1, N) method, was established. By processing a data series related to the predicted data, the improved GM(1, N) model takes into account the fluctuations of the original production data, reflects the trend of the original data under the influence of relevant factors, and hence predicts more accurately the fluctuation amplitude and direction of the original data. Additionally, the proposed method has higher accuracy than the conventional GM(1, N), GM(1, 1), and MEP models. The prediction accuracy increases gradually and the relative error decreases gradually from bottom data (casing pressure at well start-up, etc.) to top data (shale gas production). Accordingly, a step-by-step prediction method could be effective in improving prediction accuracy and reflects the typical fluctuation characteristics of shale gas production.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The prediction of shale gas production rates and volumes is an important part of oilfield development (Elmabrouk et al. 2014). Whether the production of shale gas wells can be predicted effectively in the future, based on the historical data, is related to the real-time adjustment of the shale gas well working schedule, thus playing the role of an assistant during decision-making (Mohammadpoor and Torabi 2018).

There are three methods to predict production rate. First is the reservoir engineering method based on basic percolation theory, for example, production decline analysis (Bahadori 2012; Miao et al. 2020; Wang 2017). This method takes into account the effects of reservoir properties, well conditions, and production control parameters on shale gas production. It is a common mathematical statistics method for predicting and analysing reservoir production performance and is one of the typical representations used in shale gas field. However, the reservoir engineering criteria are obtained for an ideal percolation environment, which will not reflect fully the phenomena and laws of actual shale gas field percolation. Furthermore, during operation, shale gas production is constantly being interfered with by various stimulation technologies (Luo et al. 2019), such as fracturing and acidizing, and the production decline analysis has certain limitations.

The second prediction approach includes the numerical simulation methods (Ado et al. 2019). This approach has high reliability, but it needs a clear understanding of geological conditions and the reservoir condition. From model building, to historical fitting, to development prediction, the whole process is long and complex, and the procedure is difficult. A third option utilizes machine learning methods based on data mining, such as grey system theory (Hu et al. 2018; Hu 2020; Julong 1982; Qiao et al. 2019; Yang et al. 2015; Ye et al. 2020; Zhou et al. 2019b), and artificial neural networks (Khan et al. 2020; Seyyedattar et al. 2020; Xiong and Lee 2020; Yang et al. 2005). Compared with the traditional methods, machine learning has a lower reliance upon the hypothetical data. It mines the actual data deeply by setting training sets and verifies the generalization ability of the model through independent verification sets. Therefore, especially in the case of large amounts of data, machine learning often has excellent prediction performance (Goebel et al. 2020; Ma 2019).

As the physical parameters of a reservoir are uncertain and complex, and such geological and engineering parameters are difficult to obtain (Luo et al. 2020), it is very difficult to predict shale gas well production by the use of reservoir engineering methods and numerical simulation methods. However, machine learning methods, especially grey system theory, have played an important role in predicting shale gas production (Ma and Liu 2016). Although grey system theory has good performance in solving small sample problems, it still has some limitations in application; for example, the calculation of background values has errors. In order to solve these problems, some researchers have deduced the accurate background value of the GM(1, 1) model by using the solution for non-homogeneous forms (Truong and Ahn 2012a, b; Zhou et al. 2019a). Because of the validity of the background value transformation method, many scholars have begun to transform the background value of other grey system models, such as the grey power model, the grey Verhulst model (Rajesh 2019), the discrete non-homogeneous model (Cui et al. 2013), and the multivariable grey prediction model (Zhi et al. 2017). These models have developed forecasting theory to a certain extent, but there are still some problems. For example, the optimization of background values is unreasonable, and the data are not processed smoothly.

The present investigation focused upon the cause of error in the existing conventional GM(1, N) model, taking the production of shale gas wells as the research object and establishing an improved GM(1, N) model-based shale gas well productivity prediction model. To achieve this, firstly the original data were smoothed. Secondly, the improved GM(1, N) model was established, based on improving the background value of the existing conventional GM(1, N) model. Finally, the model established during the present study was compared and analysed through the example calculation.

Methodology

GM(1, N) is one of the main methods of grey system theory and is a first-order differential equation composed of multivariables. It mainly fits and predicts the dominant factors and related variables in some complex systems under the condition of “small sample and poor information”.

The principles of GM(1, N)

The feature series (or output series) is (Julong 1982):

The sequence of related factors is (Tien 2011):

\(X_{i}^{\left( 1 \right)}\) is the 1-AGO sequence of \(X_{i}^{\left( 0 \right)}\), and

The equal weight mean value sequence of \(X_{1}^{\left( 1 \right)}\), \(Z_{1}^{\left( 1 \right)}\), is:

where,

The grey differential equation is:

where \(x_{1}^{(0)} \left( k \right)\) is the grey derivative, \(z_{1}^{(1)} \left( k \right)\) is the background value, \(a\) is the developing coefficient, and \(b\) is the grey action quantity.

Then, the whitening differential equation of GM(1, N) is:

If the range of \(x_{i}^{(1)} \, \left( {i = 1,2, \ldots n} \right)\) is not too wide and \(\sum\limits_{i = 2}^{n} {b_{i} } x_{i}^{(1)} \left( k \right)\) is a grey constant, then:

Finally, the predicted value of the feature series is obtained by cumulative subtraction:

The least-square estimation of the parameters of GM(1, N), \(\left[ {a,b_{2} , \cdot \cdot \cdot ,b_{n} } \right]^{T}\), satisfies:

where

Parameter optimization

Data smoothness processing

As the production of shale gas wells is affected by stimulation treatment, interference from adjacent wells, equipment maintenance, and other factors, there must be some volatility. However, the traditional GM(1, N) method has some limitations in dealing with volatile data series. The reason is that the original data did not meet the smoothness requirements of the prediction model (Kung and Yu 2008). Therefore, in order to predict shale gas well production more accurately, the screened raw data are pre-processed to make the processed data satisfy the smoothness requirement of the prediction model.

If the original data sequence that has been smoothed is defined to be \(X_{i} ^{\prime}\left( 0 \right)\), then:

where

The processed data then are used as the original modelling data for prediction, and the prediction results are restored exponentially after the prediction is finished:

Background value optimization

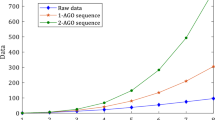

The accuracy of GM(1, N) is directly related to its time response function Eq. (8). The parameters that determine the time response function are the developing coefficient \(a\) and the grey action quantity \(b\). However, the values of \(a\) and \(b\) are related directly to the background value. Therefore, the key to improving the accuracy of GM(1, N) is to construct better formulae for calculating the background value. It can also be seen from Eq. (5) that the background value is the average value of \(x_{1}^{\left( 1 \right)} \left( k \right)\) and \(x_{1}^{\left( 1 \right)} \left( {k - 1} \right)\) in Fig. 1. Apparently, the use of the two-point average formula to calculate the integral value of the nonlinear function will bring about great errors. The accuracy of G(1, n) can be improved by using a more reasonable numerical integration method to calculate background value (Shen et al. 2012).

In the present study, the background value optimization is proposed to improve the accuracy of G(1, n).

For the interval [− 1,1], the Gauss–Legendre quadrature formula is (Sidi 2009):

The expression of the Nth Legendre polynomial in [− 1,1] is:

Nth Legendre polynomial is an orthogonal polynomial with the interval [− 1,1]. Therefore, the zero point of the Legendre polynomial is the Gauss point of Eq. (15) and \(x_{k} \, \left( {0,1, \ldots ,n} \right)\) is the zero point of the \(N{ + }1\) Legendre polynomial.

Let \(x = \frac{b - a}{2}t + \frac{b + a}{2}\), and transform interval [a,b] to [− 1,1]:

The Gauss–Legendre formula for N = 5 is used, so the new background value calculation method is:

The background value can be calculated more accurately by using Eq. (18) instead of Eq. (5). \(x_{1}^{(1)} \left( k \right)\) is the discrete data in a discrete sequence. The values of \(x_{1}^{(1)} \left( {k + 0.0469} \right)\), \(x_{1}^{(1)} \left( {k + 0.2307} \right)\), \(x_{1}^{(1)} \left( {k + 0.5} \right)\), \(x_{1}^{(1)} \left( {k + 0.7692} \right)\), and \(x_{1}^{(1)} \left( {k + 0.9531} \right)\) cannot be obtained accurately. To solve this problem, the expression \(x_{1}^{(1)} \left( k \right)\) is given by exponential function (Jiang et al. 2014; Ma et al. 2014):

where

The values of \(x_{1}^{(1)} \left( {k + 0.0469} \right)\), \(x_{1}^{(1)} \left( {k + 0.2307} \right)\), \(x_{1}^{(1)} \left( {k + 0.5} \right)\), \(x_{1}^{(1)} \left( {k + 0.7692} \right)\), and \(x_{1}^{(1)} \left( {k + 0.9531} \right)\) can be obtained accurately from Eqs. (19) and (20).

Solution process

For the present application, the solution process for the model can be summarized as follows:

-

1.

Smooth the original data \(X_{i}^{(0)}\) logarithmically, and the processed data \(X_{i} ^{\prime}\left( 0 \right)\) are taken as the original modelling data sequence.

-

2.

Accumulate the original modelling data sequence \(X_{i} ^{\prime}\left( 0 \right)\) once by Eq. (3) to generate the accumulated sequence \(X_{i}^{(1)}\).

-

3.

Calculate the background value \(z_{1}^{(1)} \left( k \right)\) using Eq. (18), and the improved GM(1, N) model is established.

-

4.

Obtain the value of \(B\) using the optimized background value \(z_{1}^{(1)} \left( k \right)\) according to the least-squares method Eq. (11), then the value of \(a\),\(b_{2}\),\(\ldots\),\(b_{n}\) is obtained from Eq. (10), and the value of \(\widehat{x}_{1}^{(1)} \left( {k + 1} \right)\) is obtained from Eq. (8).

-

5.

Finally, the predicted value \(\widehat{x}_{1}^{(0)} \left( {k + 1} \right)\) of the feature series is obtained using Eqs. (9) and (14).

Results and discussion

The production data were obtained for Well W1 for 20 days (Table 1). Data from the first 16 days were used for model building, and data from the last 4 days were used to test the prediction results. In addition, the model was evaluated by comparison with results from the mean square error [MSE, Eq. (19)], mean relative percentage error [MRPE, Eq. (20)], relative mean squares [RMS, Eq. (21)], and the linear correlative coefficient [R, Eq. (22)].

The model established in the present study was compared with MEP (Ma and Liu 2016; Oltean and Dumitrescu 2002), conventional GM(1, N) (Ma et al. 2014), and traditional GM(1, 1) (Ikram et al. 2019; Julong 1982; Liu and Deng 1996). The production rate is simulated using the data shown in Table 1. Figure 2 shows the results as simulated by the different methods; Table 2 shows the evaluation indices results.

As shown in Fig. 2, GM(1, 1) can be applied only to progressive or decreasing data prediction; it cannot be applied for the prediction of fluctuating series. Conventional GM(1, N), MEP, and the improved GM(1, N) model can be used to predict fluctuating production data, which can reflect the direction and amplitude of fluctuation in the production data and has a good prediction capability. As shown in Table 2, the evaluation results indicate that the model established in the present study had the smallest covariance and higher prediction accuracy. The linear correlation coefficient R shows that the calculated results of GM(1, 1) were almost fixed, leading to an R-value for its predicted results that was very close to zero, and the prediction effect was the worst. The trend for the MEP prediction results was contrary to the trend of the actual production data. However, the improved GM(1, N) model had a slight advantage over the conventional GM(1, N) on data relevance.

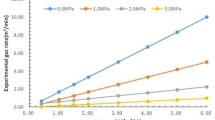

As the improved GM(1, N) model mimics the fluctuations of the original data by processing a series of data related to the simulated data, it reflects the trends of the original data under the influence of relevant factors and can more accurately predict the fluctuation amplitude and direction of the original data. Figure 3 shows the trend of the predicted results of the relevant parameters and the relative errors between the predicted values and the original values. The improved GM(1, N) model first predicts the casing pressure at well start-up through GM(1, 1). Then, based on the trend of casing pressure at well start-up, it predicts the tubing pressure at well start-up. Next, based on the casing pressure and the tubing pressure at well start-up, it predicts the tubing pressure at shut-in and so on and finally estimates the overall shale gas production rate with fluctuating characteristics. From the variation trend of Fig. 3a, e and the relative error shown in Fig. 3f, the prediction accuracy increases gradually and the relative error decreases gradually according to the sequence of casing pressure at well start-up, tubing pressure at well start-up, tubing pressure at shut-in, water production, and shale gas production. Therefore, such a step-by-step prediction method can effectively improve the accuracy of production rate/volume predictions and accurately reflects the fluctuation characteristics of shale gas production.

Conclusions

The present investigation focused upon identification of the causes of the error of the conventional GM(1, N) shale gas production estimation method. Taking the production of shale gas wells as the research object, an improved GM(1, N) model-based shale gas well productivity prediction model has been proposed. After verifying the accuracy of the improved model, the following conclusions were reached:

-

1.

The improved G(1, n) model mimics the fluctuation of the original data by the processing of a series of data related to the predicted data. In consequence, it can better reflect the trends exhibited in the original data under the influence of relevant factors pertaining to the reservoir and more accurately predicts the fluctuation amplitude and direction from the original data.

-

2.

For the data in the present example, the improved GM(1, N) method had a slight advantage over the conventional GM(1, N) method on data relevance and had significant advantages over the GM(1, 1) method and the MEP method. Additionally, the improved GM(1, N) method had the smallest covariance and exhibited greater predictive accuracy.

-

3.

Prediction accuracy gradually increased, and the relative error gradually decreased from bottom data (e.g. casing pressure at well start-up, etc.) to top data (shale gas production volume/rate). A step-by-step prediction method such as this can improve prediction accuracy effectively and can reflect accurately the fluctuation characteristics of shale gas and shale gas production.

References

Ado MR, Greaves M, Rigby SP (2019) Numerical simulation of the impact of geological heterogeneity on performance and safety of THAI heavy oil production process. J Petrol Sci Eng 173:1130–1148

Bahadori A (2012) Analysing gas well production data using a simplified decline curve analysis method. Chem Eng Res Des 90(4):541–547

Cui J, Liu S-F, Zeng B, Xie N-M (2013) A novel grey forecasting model and its optimization. Appl Math Model 37(6):4399–4406

Elmabrouk S, Shirif E, Mayorga R (2014) Artificial neural network modeling for the prediction of oil production. Pet Sci Technol 32(9):1123–1130

Goebel R, Glaser T, Skiborowski M (2020) Machine-based learning of predictive models in organic solvent nanofiltration: solute rejection in pure and mixed solvents. Sep Purif Technol, p 248

Hu N, Ye Y, Lu Y, Luo J, Cao K (2018) Improved unequal-interval grey Verhulst model and its application. J Grey Syst 30:175–185

Hu Y-C (2020) A multivariate grey prediction model with grey relational analysis for bankruptcy prediction problems. Soft Comput 24(6):4259–4268

Ikram M, Mahmoudi A, Shah S, Mohsin M (2019) Forecasting number of ISO 14001 certifications of selected countries: application of even GM (1,1), DGM, and NDGM models. Environ Sci Pollut Res 26(12):12505–12521

Jiang SQ, Liu S, Zhou XC (2014) Optimization of background value in GM(1,1) based on compound trapezoid formula. Control Decis 29:2221–2225

Julong D (1982) Control problems of grey systems. Syst Control Lett 1(5):288–294

Khan MR, Tariq Z, Abdulraheem A (2020) Application of artificial intelligence to estimate oil flow rate in gas-lift wells. Nat Res Res, pp 1–13

Kung L-M, Yu S-W (2008) Prediction of index futures returns and the analysis of financial spillovers—a comparison between GARCH and the grey theorem. Eur J Oper Res 186(3):1184–1200

Liu S, Deng JL (1996) The range suitable for GM(1,1). J Grey Syst 11:131–138

Luo Z et al (2019) Thermoresponsive in situ generated proppant based on liquid-solid transition of a supramolecular self-propping fracturing fluid. Energy Fuels 33(11):10659–10666

Luo Z et al (2020) Interaction of a hydraulic fracture with a hole in poroelasticity medium based on extended finite element method. Eng Anal Boundary Elem 115:108–119

Ma X (2019) A brief introduction to the grey machine learning. J Grey Syst 31(1):1–12

Ma X, Liu Z-B (2016) Predicting the oil well production based on multi expression programming. Open Pet Eng J 9:21–32

Ma X, Liu Z, Chen Y (2014) Optimizing the grey gm(1, n) model by rebuilding all the back ground values. J Syst Sci Inf 2:543–552

Miao Y, Zhao C, Zhou G (2020) New rate-decline forecast approach for low-permeability gas reservoirs with hydraulic fracturing treatments. J Petrol Sci Eng 190:107112

Mohammadpoor M, Torabi F (2018) A new soft computing-based approach to predict oil production rate for vapour extraction (vapex) process in heavy oil reservoirs. Can J Chem Eng 96(6):1273–1283

Oltean M, Dumitrescu D (2002) Multi expression programming. Technical report, UBB-01-2002. Babeș-Bolyai University, ClujNapoca

Qiao X, Zhang Z, Jiang X, He Y, Li X (2019) Application of grey theory in pollution prediction on insulator surface in power systems. Eng Fail Anal 106:104153

Rajesh R (2019) Social and environmental risk management in resilient supply chains: a periodical study by the Grey-Verhulst model. Int J Prod Res 57(11):3748–3765

Seyyedattar M, Zendehboudi S, Butt S (2020) Technical and non-technical challenges of development of offshore petroleum reservoirs: characterization and production. Nat Resour Res 29(3):2147–2189

Shen Y, Sun H, Bao L (2012) Optimization of GM(1, N) model based on numerical analysis and its application. In: 2012 8th international conference on computing and networking technology, pp 184–188

Sidi A (2009) Asymptotic expansions of Gauss-Legendre quadrature rules for integrals with endpoint singularities. Math Comput 78(265):241–253

Tien T-L (2011) The indirect measurement of tensile strength by the new model FGMC (1, n). Measurement 44(10):1884–1897

Truong DQ, Ahn KK (2012a) An accurate signal estimator using a novel smart adaptive grey model SAGM(1,1). Expert Syst Appl 39(9):7611–7620

Truong DQ, Ahn KK (2012b) Wave prediction based on a modified grey model MGM(1,1) for real-time control of wave energy converters in irregular waves. Renew Energy 43:242–255

Wang H (2017) What factors control shale-gas production and production-decline trend in fractured systems: a comprehensive analysis and investigation. Spe J 22(2):562–581

Xiong X, Lee KJ (2020) Data-driven modeling to optimize the injection well placement for waterflooding in heterogeneous reservoirs applying artificial neural networks and reducing observation cost. Energy Explor Exploit. https://doi.org/10.1177/0144598720927470

Yang G, Li X, Wang J, Lian L, Ma T (2015) Modeling oil production based on symbolic regression. Energy Policy 82:48–61

Yang Q, Zhang S, Qi FJLFT (2005) Integrating neural network and numerical simulation for production performance prediction of low permeability reservoir. Pet Sci Technol 23(5–6):579–590

Ye J, Dang Y, Yang Y (2020) Forecasting the multifactorial interval grey number sequences using grey relational model and GM (1, N) model based on effective information transformation. Soft Comput 24(7):5255–5269

Zhi Q, Song J, Guo Z (2017) Prediction of soil creep deformation using unequal interval multivariable grey model. Appl Mech Mater 864:341–345

Zhou Q, Lin L, Chen G, Du Z (2019a) Prediction and optimization of electrospun polyacrylonitrile fiber diameter based on grey system theory. Materials 12(14):2237

Zhou Q et al (2019b) Prediction and optimization of chemical fiber spinning tension based on grey system theory. Text Res J 89(15):3067–3079

Acknowledgements

This work was supported by the Soft Science Project from the Ministry of Science and Technology of China (Grant No. 2013GXS3K054-6) and the Humanities and Social Sciences Cultivation Project of Ningbo University (Grant No. XPYB15008).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Luo, X., Yan, X., Chen, Y. et al. The prediction of shale gas well production rate based on grey system theory dynamic model GM(1, N). J Petrol Explor Prod Technol 10, 3601–3607 (2020). https://doi.org/10.1007/s13202-020-00954-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13202-020-00954-w