Abstract

Pressure transient data from downhole gauges are one of the key parameters in characterizing reservoir properties and forecasting future reservoir performance. Reservoir pressure is usually measured under dynamic changes. The collected data usually contain different levels of noise, particularly due to imperfections in measuring instruments and imperfect calibrations. The latter is due to changes between the laboratory environment and reservoir conditions. To have accurate descriptions of reservoir, it is essential to smooth the pressure data. Most related studies have employed the wavelet transform to reduce noise. However, there appears to be little research addressing the use of other smoothing techniques for pressure transient data. This paper, therefore, evaluates and compares the performance of three types of smoothing and noise removal methods, namely wavelet transform as a widely used filtering technique, regression-based smoothers, and autoregressive smoothing methods to reduce artificial noise added to simulated dual-porosity pressure data. Particularly, noise is more pronounced in pressure derivative, and so denoising of pressure derivative requires more effective tools. The effectiveness of the noise removing methods was compared using mean square error. The results show that the regression-based methods lead to the same or even better reduction in the noise level as compared to the wavelet domain filter, while the employed autoregressive method results in a moderate performance. We also test the performance of various combinations of the different smoothing methods to filter the same noisy data. It is shown that the combined locally weighted scatterplot smooth (LOESS) and autoregressive moving average (ARMA) gives the best smoothing performance for pressure derivative data. Application of the combined LOESS–ARMA to real field data shows promising results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The production of hydrocarbons from underground reservoirs is associated with many challenging issues, due particularly to the complex characteristics of reservoirs. As more information regarding the reservoir properties is collected, the uncertainty associated with the recovery of hydrocarbons decreased. Reservoir characteristics have long been studied by means of a variety of techniques such as well log data (Dashtian et al. 2011), well test data (Braester and Zeitoun 1993; Vaferi et al. 2011), seismic data (Arora and Tomar 2010), and well drilling data (Weber 1993). Among these methods, recording transient well pressures created by flow rate alteration is a useful measure to obtain reservoir properties (Athichanagorn and Horne 1995; Gringarten 1987; Sanni and Gringarten 2008; Vaferi and Eslamloueyan 2015). Reservoir pressure is one of the key variables that is used to obtain reservoir properties, monitor reservoir condition, and forecast reservoir performance (Athichanagorn et al. 2002). In well pressure testing, wellbore pressure and flow rate are monitored to evaluate reservoir characteristics by matching a simplified reservoir model on pressure responses (Blasingame et al. 1989; Bourdet and Gringarten 1980; Ghaffarian et al. 2014; Horne and Reyner 1995; Jeirani and Mohebbi 2006).

Since 1990s, most of wells have been installed with permanent downhole gauges. These gauges are equipment that measures well pressure at small time interval, e.g., every second. As a result, the pressure monitoring generates large volumes of data. The recorded data are always associated with measurement errors due to various reasons such as sudden alteration in flowing temperature and problems in gauge that occur by physical changes in reservoir. Measurement errors are due to systematic and random deviations from the true value of the measured (recorded) data. While systematic errors are introduced by an inaccuracy inherent in the system such as imperfect performance (or calibration) of measurement gauges, random errors are caused by unpredictable fluctuations in the reading of gauges. The collected data are usually associated with noise. There is not a unified definition for noise since this term has been used in various disciplines. On one hand, noise is clearly related to measurement errors. Hence, it is possible to have systematic and random noises, although the latter is more common. Horne (1994) defined noise as a type of measurement error during a test which may be either unbiased (random noise) or biased (drift error). On the other hand, there are some anomalies in the recorded data which are distinguished from noise. For example, outliers are data points that lie away from the trend of data, while noise is considered as data points around the trend of overall data and in the same neighborhood as the true data (Athichanagorn et al. 2002). Either noise or other artifacts such as outliers may cause high degree of uncertainty in well test interpretations, and hence, it is essential to remove noise and other artifacts or reduce them to acceptable levels before further analysis.

In well test practice, the amount of collected pressure data is tremendous. Practically it is impossible to consider all data points in the interpretation due to limitation in computational resources. In addition, noisy pressure data may lead to wrong interpretation, and hence, large uncertainties would be expected in parameter estimation. As a result, noise removing is an essential part of analyzing well test data. In this regard, denoising of downhole gauge data has been the subject of several research papers. Osman and Stewart (1997) employed the Butterworth digital filter method to subtract noise in the data. Kikani and He (1998) showed that the Butterworth filter results in poor performance for some cases. Instead, they used a wavelet analysis to remove noise. Unneland et al. (1998) presented the use of decline curve analysis to describe production from a simple reservoir by pressure and rate measurement. The well flow rates were calculated by resolving the rate convolution integral. The rates that determined by convolution integral are rates which happen if pressure stays constant during production. Athichanagorn et al. (2002) proposed a seven-step procedure for processing of permanent downhole gauge data as follows: (1) outlier removal, (2) denoising, (3) transient identification, (4) data reduction, (5) flow rate history reconstruction, (6) behavioral filtering, and (7) data interpretation. They indicated that the wavelet thresholding method is one of the most effective methods to denoise data. An important application of wavelet in well testing is permuting a pressure signal to compress parameters that named wavelet coefficients (Daubechies 1990). The wavelet transform was also applied by Olsen and Nordtvedt (2005) to denoise pressure data. They proposed automatic filtering methods to estimate appropriate thresholds and pressure data reduction. In another study, Nomura (2006) described a smoothing algorithm based on derivative constraints suitable for pressure transient data. He compared the constraint smoother and unconstrained regression splines and concluded that the former can have better performance when the order of derivative constraints increases.

The aim of this paper is to evaluate the performance of regressive and autoregressive methods as well as various combinations of regressive/autoregressive methods for denoising pressure and pressure derivative transient data in comparison to the wavelet transform method which has widely been used in the petroleum industry. The paper is organized as follows: First, the basic concepts of the denoising/smoothing methods used in this study are described. Next, we explain how to generate clean and noisy dual-porosity model pressure data. Different levels of noise are added to the clean signal. Then, the smoothing techniques are applied to the noisy pressure and pressure derivative data, and the smoothing performance is evaluated using the mean square error (MSE) associated with each method for different levels of noise. The effect of time span as the most important parameter on smoothing is then investigated. Finally, the performance of noise reduction for various combinations of smoothing techniques is examined, and the most effective method is applied to the model and field noisy pressure derivative data.

Preprocessing techniques for enhancement of downhole pressure data

Reduction in massive data sets

With advances in pressure gauge technology, current gauges can measure pressure with small time interval (e.g., every second, ten seconds, …). In most instances, extremely large pressure data sets should be processed and analyzed. It is expected to use appropriate techniques to reduce large data sets to manageable size. Pressure thresholding is one of the most common methods to reduce the number of pressure data. Regarding noisy data, it was recommended to denoise data before reduction in their size (Athichanagorn 1999). In this study, we have used small data sets (with a few thousands data points), so data reduction was not needed.

Outlier removal

Outliers are defined those data that are far away from the main part or trend of a data set (Becker and Gather 1999). They are more obvious and easier to detect in comparison with noise. Outliers demonstrate a problem with the measurement equipment, human error or the data recording process (Hodge and Austin 2004). There are several methods to remove outliers from true data including statistical methods such as univariate and multivariate methods, Minkowski error, and signal processing methods such as wavelet, transform (Aggarwal 2013). If a data set contains a considerable amount of outliers, a combination of these methods should be tested. In this study, we focus on noise removal methods since noise is more common and difficult to detect. Besides, since we have added Gaussian noise to synthetic data, it is not needed to deal with outliers. In case of real field data, outliers should be removed from data set before noise removal techniques are applied. In this paper, we used an outlier-free field data to assess the effects of denoising methods on smoothing of pressure derivative data.

Denoising techniques

Regression-based smoothing methods

The smoothness of the acquired data is crucial for estimating the reservoir properties in well test analysis. In general, smoothing is a technique to create an approximate function that attempts to capture main patterns or trends in the data set. A variety of regression-based smoothing methods have been used among which four types of linear smoothers are considered in this study.

Simple moving average

Simple moving average (SMA) is a low-pass filter which replaces every point by unweighted mean of around points in a span (Johnston et al. 1999; Kirchgassner and Jurgen 2007; Mac Berthouex and Brown 1994; Wei 1994). The smoothing signal is given by:

where \(\bar{x}(i)\) is the smoothed value, N denotes number of neighboring points on each side, and 2N + 1 represents the span. The (window) span is the time axis length over which the average is to be calculated. So, the span essentially determines the number of data points contributing to the moving average calculations. Obviously, the span controls the performance of the moving average method. SMA is the simplest smoother that has jagged appearance in some points such as boundaries.

Savitzky–Golay filter

Savitzky–Golay (S–G) filter is a least squares filter used to smooth a set of digital data points. This filter acts by fitting a polynomial of a low degree on a subset of data into a span by applying unweighted linear least squares. This method is based on mathematical technique published by Savitzky and Golay in 1964 (Luo et al. 2005; Savitzky and Golay 1964). A remarkable advantage of this filter is that it tends to keep features of the distribution such as extrema which are often flattened by other smoothing methods such as SMA (Azami et al. 2012).

The data consist of a set of n pairs (xi, yi) where xi is an independent variable (here is time) and yi is an observed value (here is pressure). The polynomial used to fit the data is given by:

The S–G filter required that the sum of the squares of the discrepancies between the yi and pn(xi) be a minimum.

where ei and et are the local error at xi and total error, respectively, and N + 1 is the total number of data points. In this work, various low-degree polynomials were tested and it was found that the polynomial of the second degree resulted in the best fit.

LOWESS and LOESS

The methods of “locally weighted scatterplot smooth,” known as “LOWESS” and “LOESS” employ locally weighted linear regressions to data smoothing. The difference between them is that LOWESS uses a first-degree polynomial to fit, whereas in the LOESS the second-degree polynomial is used. These methods, similar to the SMA and S–G filters, use neighboring data points to estimate smoothed value, and hence, they are local smoothing methods. In addition, a regression weight function is defined for each point inside the span, so the methods are weighted (Cleveland 1979, 1981; Cleveland and Devlin 1988; Cleveland and Loader 1996; Fan and Gijbels 1996; Hardle 1990).

In the LOWESS and LOESS methods, the least square criterion is modified by multiplication of the squared error at xi by a nonnegative weight factor, wi, before the sum of the squared error is minimized.

The weight function for each point in the span is calculated using Eq. (5) (Cleveland and Devlin 1988):

where x is the observed value we intend to be smoothed, xi is the nearest neighbor of x which is defined within the span, and d(x) is the maximum distance between x and xi. One of the characteristics of the weight function is that the target point to be smoothed has the maximum weight. Outside the span, data points have no impact on fitting, i.e., have zero weights. The regression weight function might not be symmetric about the target point. For end points and data points around the boundary for which the number of neighboring points are not symmetric, the weight function is also not symmetric. If the same numbers of neighboring data points on either side of the target point are available, then the weight function is symmetric (Cleveland 1979). After computation of the weight functions for each point within the span, a weighted linear least square regression is performed. The regression employs a linear polynomial, for LOWESS and a quadratic polynomial for LOESS. Span is a parameter that deeply affects the accuracy of smoothing. The chosen span should not change during the smoothing process.

Autoregressive moving average

A time series is a set of observations collected over evenly spaced intervals of some quantity of interest. Forecasting is a process that the value of a variable (or set of variables) is estimated at some future point in time. There are various models to time series forecasting. Three basic time series models are autoregressive (AR), moving average (MA), and a combined autoregressive moving average (ARMA). These methods have long been used to filter noisy data (Box et al. 2015; Huang and Shih 2003). In this paper, we use the ARMA model which includes the advantages of both AR and MA methods.

The ARMA model consists of an autoregressive (AR) and a moving average (MA) parts. AR describes time series as a linear function of previous observation values. The name autoregressive refers to the regression on self. AR (p) refers to autoregressive model of order p (Hannan and Deistler 1988). Given a time series of data xt, the autoregressive model of order p is written as:

where φ1, φ2, …, φp are model parameters, εt is the white noise. The white noise refers to uncorrelated observations with zero mean and finite constant variance which indicates a weakly stationary condition (Montgomery et al. 2008, 1990). In addition, the noises (εt, εt−1,…) are supposed to be random variables in time and had normal distribution. It can be seen from Eq. (6) that the AR model is such a regression model in which xt is regressed on its past values.

The MA model regarded time series as a moving average (unevenly weighted) of a white noise series (εt). The moving average of order q is given by:

where θ1, θ2, …, θq are the model parameters, and εt, εt−1,… are the white noise terms.

The ARMA (p, q) is a model contains AR (p) and MA (q). The ARMA (p, q) has p terms of AR and q terms of MA models as expressed by Eq. 8 (Box et al. 2015):

The ARMA model was first developed by Whitle (1951). He employed the Laurent series and Fourier analysis along with statistical inference.

Wavelet transform

The wavelet transform (WT) is one of the main tools that is used to catch smooth functions (Donoho and Duncan 2000). In this study, we employ WT for denoising as a base method to compare with other smoothing methods. In this work, the WT noise removal algorithm proposed by Kopsinis and McLaughlin (2009) is used. This technique employs wavelet thresholding principle and empirical mode decomposition (EMD) for denoising signals. A sampled noisy signal can be shown as:

where x(t) is the noisy signal, \(\bar{x}\left( t \right)\) is the denoised signal, σ represents a noise variance, and m(t) denotes an independent random variable with Gaussian distribution. Discrete wavelet transform described below uses proper wavelet basis on the noisy signal. The wavelength coefficients are given by:

where, x = [x(1), x(2), …, x(N)], W represents an orthogonal N × N matrix (Theodoridis and Koutroumbas 2009), and x = [c1, c2, …, cN] is the resultant wavelet coefficients. Because of orthogonality of wavelet components (W), wavelet coefficients (ci) have normal distribution with variance σ. The denoised signal is obtained by taking an average of wavelet coefficients.

Wavelet transform (WT) of noisy signal has small number of high signal-to-noise ratio (SNR) coefficients and large number of low SNR coefficients. Hence, signal energy is concentrated in a small number of coefficients. After removing low SNR coefficients, signal is reconstructed by taking inverse of WT. Figure 1 shows the procedure of wavelet transform denoising.

To remove low SNR coefficient, wavelet-based thresholding is used by setting to zero all the coefficients that are lower than a threshold related to the noise level expressed by (Kopsinis and McLaughlin 2009):

where T is wavelet coefficients and C is a constant value. We then construct the denoised signal using the high amplitude coefficients. The common thresholding operators used in practice are hard thresholding and soft thresholding (Kopsinis and McLaughlin 2009) which are expressed by Eqs. (12) and (13), respectively.

where sgn stands for the sign function. The above process can be applied for each level of wavelet transform which is called the interval-dependent thresholding method (Zhang et al. 2006). The high and low frequency parts of a signal may have different characteristics such as mean and variance. Hence, the threshold is obtained for each level of this method, and denoising process is applied for each interval.

Generation of synthetic well test data

Noisy pressure data

We used the PanSystem® well test analysis software to generate transient pressure data in a dual-porosity reservoir. The reservoir is assumed to be infinite acting. Table 1 shows the numerical values of reservoir fluid and rock properties, wellbore parameters, and operating conditions used in generating pressure data. Once the pressure transient data were generated, we added Gaussian noise with zero mean and unit variance to the synthetic data sets. To have a noise at an acceptable level, we added the random noise as small fractions of ± 2, ± 4, ± 6, and ± 8% to the original pressure signal. Figure 2 illustrates the original non-noisy (clean) pressure data and the simulated noisy pressure data generated by adding 2% noise to the clean pressure signal.

Pressure derivative analysis

Using only pressure data for well test analysis may be insufficient and even may lead to misleading results. For example, the pressure data of Figure. 2 may be obtained from a homogeneous reservoir or a dual-porosity reservoir. Pressure derivative is hence useful in well test analysis, including for identification of reservoir model in particular. Pressure derivative curve is a graph in which the logarithm of the pressure derivative is plotted against the logarithm of the elapsed time. Using pressure derivative data is advantageous for accurate reservoir model recognitions (Onur and Reynolds 2001). While pressure versus time plot usually cannot provide enough information, derivative curves show useful information about reservoir and also reservoir characteristics (Booth et al. 2012). In this work, pressure derivative is calculated by the method proposed by Bourdet et al. (1989). This method, called three-consecutive points method, uses one point before and one point after the point of interest, point i, computes the corresponding derivatives, and calculates their weighted mean at the point considered. The formula is given by:

Essentially, Eq. (14) is a weighted average of slopes calculated from data points on either side of data point i. A three-stage procedure for assessing the effectiveness of different smoothing techniques on pressure derivative data is adopted in this work as follows. First, a noisy pressure signal is created by adding a certain amount of Gaussian noise. Next, various single or combined smoothing techniques are employed to smooth pressure data. Finally, pressure derivative is calculated using Eq. (14).

Analysis of smoothing error

The criterion used in this study to compare the performance of different smoothing techniques is the mean square error (MSE) between the clean (non-noisy) and smoothed signals which is given by:

where n represents the numbers of data points of the bottom-hole pressure, pclean and psmoothed are the clean and smoothed pressure signals, respectively.

Results and discussion

To smooth data using this model, it is necessary for the data to be stationary or can be transformed to achieve stationarity. A stationary data has a constant mean with no temporal trend. A quadratic trend in the generated data (Fig. 2) was found when analyzing the data. We removed the trend by first applying a cubic spline fit on the data and second subtracting the original data from the fitted values. All the detrending process was performed using the ITSM time series analysis software (Brockwell and Davis 2013).

Pressure smoothing with the ARMA model

The first step in producing an accurate smoothing by the ARMA model is to find the best orders of the model for the pressure data. Autocorrelation function (ACF) and partial autocorrelation function (PACF) analyses are commonly used to determine the appropriate orders of ARMA. The ACF defines the correlation between series of data at different times. The general formula for ACF is given by Eq. 16 (Brockwell and Davis 2013):

where Rit is time series data at time level i and Ri,t-k is series data shifted back by a lag of k. The PACF describes as the partial autocorrelation of a time series that is the amount of correlation between a variable and a lag of itself which is not explained by correlations at all lower-order lags. The PACF is defined by Eq. 17 (Box et al. 2011; Enders 2008):

where ρ denotes the autocorrelation and π is partial autocorrelation. The pair plot of autocorrelation and partial autocorrelation versus time lag is named correlogram. Correlogram is a useful tool to provide optimum order of the model. The ITSM software was used to find the best degree of the ARMA model (Brockwell and Davis 2012) as illustrated in Fig. 3. The number of points with large spikes which cross the dashed lines in ACF and PACF plots is used to identify the order of AR and MA parts of the ARMA model, respectively (Friendly 2002). As can be seen from Figure. 3, there are two spikes for ACF and two spikes for PACF, and hence, the best degree for the ARMA model is ARMA (2, 2).

The coefficients of the ARMA model were estimated by the least square method. The resulting ARMA model was given by the ITSM as:

Figure 4 shows the smoothed data points given by the ARMA model and the original data corrupted by 2% noise. It is evident from this figure that the main trend of the original data was kept using this model.

Pressure smoothing with nonparametric regressive smoothers

Four local regressive smoothers (i.e., SMA, S–G, LOWESS, and LOESS) are considered in this study. Figure 5 shows the noisy and the smoothed pressure data obtained by the methods for 2% noise addition. The calculated MSE for each regressive method is also reported in Fig. 5. The LOESS shows the least MSE. Span also controls the performance of regressive smoothing techniques. We calculated MSE for each smoothing technique at different spans. Figure 6 shows the calculated MSE as a function of span for different noise levels. The common trend which can be seen from all plots in Fig. 6 is that MSE decreases at first which implies that the performance gets better. MSE for SMA and S–G filters decreases with increasing span until they reach a minimum, then MSE starts to increase when span grows up. SMA gives equal weights to all the surrounding points, and error increases with span because data points that are far from the predicted point affect the estimation as much as the closer points. Regarding the LOWESS and LOESS methods, MSE decreases and then almost levels off. Because these two methods are weighted and also fit a polynomial on data points to estimate smoothed values, so as span increases, the number of data points which participate in fitting increases and yields better polynomial fit. As a result, the corresponding MSE decreases and better performance is acquired. From Figure. 6, one can conclude that the LOESS method is more efficient in smoothing than the other local methods.

As can be seen from Figure. 6, an optimum span can be considered for each method and noise level. In this paper, the optimum span is one which minimizes MSE. The calculated optimum spans are given in Table 2 for each local smoother method for various noise levels. Figure 7 illustrates the optimum span as a function of noise levels for various local smoothing methods. The S–G filter shows small changes in optimum span with increasing noise levels. In other words, the S–G filter has a constant optimum span with different noises, and hence, this method is not affected by noise levels. For the LOESS method, a specific trend is not observed, while there is an approximately increasing trend for the LOWESS and SMA.

Pressure smoothing with wavelet-based methods

In Figure. 8, denoised data for the soft and hard interval thresholding are illustrated. It is evident that the soft thresholding shows better performance with respect to the hard thresholding. In order to improve noise removal performance by WT, a number of denoised versions of the signal are obtained iteratively. Since the variance estimation in the first pass of the thresholding operation is based on the observed noisy coefficients Y (m, n), a considerable amount of unreliable estimates leads to visually annoying noise specks in the reconstruction. Hence, it is proposed to add some additional steps of thresholding, each based on a local variance estimation that uses already denoised coefficients of the previous step. Suppose that the denoised coefficients of the (i − 1)th iteration step is represented by x̂(i−1) (m, n), then the local variance of the ith iteration step and threshold is determined (Beck and Teboulle 2009; Bioucas-Dias and Figueiredo 2007; Daubechies et al. 2003; Fornasier and Rauhut 2008). Figure 9 illustrates the calculated MSE values for different WT methods. The iterative thresholding methods exhibit better performance than the interval thresholding ones. Overall, the soft iterative thresholding illustrates the minimum MSE.

Figure 10 shows the smoothed data points given by the hard and soft iterative thresholding methods for 5 and 25 iterations. Denoising performance for hard and soft thresholding for different iteration number is shown in Fig. 11, in terms of MSE for different iteration numbers.

Comparisons of different smoothing methods at different noise levels

Figure 12 compares MSE values of different smoothing methods used in this study for different levels of noise. It is evident that the lower the MSE, the more efficient the smoothing technique. Overall, the best results correspond to the WT and LOESS. For simulated pressure with 2% artificial noise, it can be seen from the results that the LOESS has minimum MSE and therefore best performance. For this noise level, the MSE of LOESS is lower than the MSE of WT by 21.5%. In addition, the maximum MSE was obtained for the SMA method. As more noise was added to the clean simulated signal, slight changes in the MSE values are observed. The SMA shows better performance at low noise level. For other methods, the results may alter by adding more noise to the pure signal. For 4% noise level, the WT shows better performance than the LOESS method by a small margin. SMA shows maximum MSE for all noise levels, and hence it is not recommended for denoising. For 6% noise level, LOESS has the best performance and its MSE is lower than the MSE of WT by 17.5%. When the clean pressure signal is corrupted by 8% noise level, the LOESS can reduce the noise level as much as the wavelet domain filter does.

Performance of combination of smoothing techniques for noise reduction

After having assessed the smoothing effects of various methods for different levels of noise, it may be of interest in this context to evaluate noise reduction using combination of smoothing methods. Table 3 reports the calculated MSE of the combined methods for the simulated pressure signal corrupted by adding 2% noise. From Table 3, it is concluded that the combined LOESS and ARMA method gives the best performance, namely a nearly 50% reduction in MSE. In this work, various combinations of the studied smoothing techniques have been examined for noise reduction. Unfortunately, a specific combination of smoothing techniques that works for all levels of noise was not found. However, this study shows that combination of smoothing methods can reduce MSE significantly in comparison with the results obtained by single smoothers.

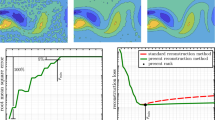

Evaluation of smoothing efficiency for pressure derivative data

In the case of a noisy pressure signal with 2% added Gaussian noise, it was shown that the LOESS method led to the highest smoothing performance (Fig. 12). Figures 13 and 14 illustrate the noisy pressure derivative, calculated by adding 2 and 6% noise to the original non-noisy (clean) pressure signal, respectively, and the smoothed pressure derivatives, calculated by applying the LOESS method to the noisy pressure data before calculating the derivatives. The clean pressure derivatives calculated from the noise-free pressure data were also shown for comparison purposes. It is evident from Figures. 13 and 14 that, for the original pressure derivative data, the noise is so overwhelming that no pattern or trend can be observed. In contrast, the smoothed derivative data using the LOESS clearly shows a dual-porosity flow model in an infinite acting reservoir for 2% noisy pressure data (Fig. 13). However, the performance of the LOESS filter deteriorates when the level of noise increases as evident from Fig. 14. In a similar way, noise reduction from pressure derivative data was examined using various combined methods of smoothing. In all of these methods, denoising techniques were applied to pressure data and then the pressure derivative was calculated using the Bourdet pressure derivative formula (Eq. 14). In this work, it was not feasible to denoise pressure derivative data after its calculation by the Bourdet method since they did not show any trend. The smoothed pressure derivatives calculated using the combined ARMA and LOESS method are shown in Figs. 13 and 14. The most striking results to emerge from Figs. 13 and 14 are that the combined LOESS–ARMA makes the noisy signal much smoother, particularly for higher noise levels as evidenced by comparing the results shown in Fig. 13 with those in Fig. 14.

(Red) the calculated pressure derivatives from clean noise-free pressure data; (green) the calculated noisy pressure derivatives from 2% noisy pressure data; (magenta) the smoothed pressure derivatives, calculated by applying the LOESS filter; and (blue) the smoothed pressure derivatives, calculated by applying the combined LOESS and ARMA filters to the noisy pressure data

(Red) the calculated pressure derivatives from clean noise-free pressure data; (green) the calculated noisy pressure derivatives from 6% noisy pressure data; (magenta) the smoothed pressure derivatives, calculated by applying the LOESS filter; and (blue) the smoothed pressure derivatives, calculated by applying the combined LOESS and ARMA filters to the noisy pressure data

Application of the proposed denoising method to real field data

To demonstrate the reliability and applicability of the methods discussed in this paper for real field pressure data, we applied the denoising methods discussed in this paper to one of the pressure test data sets published by Bourdet et al. (1984). The available pressure transient test data in a naturally fractured reservoir were chosen for which the important reservoir and well properties are listed in Table 4. The pressure derivative versus time plot of the used data is shown in Fig. 15 by green color. As shown before, we have found that the combined LOESS–ARMA method showed the best performance for noise reduction and pattern recognition. As a result, this method was applied for the field data. The smoothed pressure derivatives are shown in blue in Fig. 15. It is evident from Fig. 15 that the applied method effectively smoothed the noisy field data and recovered the appropriate pressure derivative pattern for fractured reservoirs.

(Green) the pressure transient test data published by Bourdet et al. (1984); (blue) the smoothed pressure derivatives using the combined LOESS–ARMA method

Conclusions

In this paper, we have compared the performances of regressive, autoregressive, and wavelet smoothing methods as well as various combinations of them to reduce noise from the synthetic well test data of a dual-porosity infinite reservoir. WT technique has been used in most previous studies. We show that the LOESS method can lead to the same or even better results than the widely used WT. Several key findings can be summarized as:

-

1.

The LOESS and WT have shown the best performances in reducing noise. In 6% noise level, the MSE of the LOESS is less than the MSE of the WT by 17.5%.

-

2.

SMA acts as a low-pass filter and the accuracy of this method decreases significantly by increasing the noise level.

-

3.

In regression-based method, MSE decreases as span increases. Particularly, in the S–G and SMA methods, MSE first decreases, then reaches a minimum value and after that begins to increase. When time span increases in local regression, MSE is decreased. In LOWESS and LOESS methods, the same trend is observed with the difference that MSE remains unchanged when reaching the minimum point.

-

4.

WT with soft thresholding shows better results than hard thresholding. It is found that iterative interval thresholding results in the minimum MSE.

-

5.

The ARMA method significantly improves the reduction in noise as compared to the simple moving average.

-

6.

The amount of noise added to the clean pressure signal affects the performance of smoothing techniques.

-

7.

Regarding various combinations of smoothing filters, it was found that the combined LOESS–ARMA scheme yields a significantly better noise reduction performance. The combined method is more efficient when it is applied for smoothing the pressure derivative data.

-

8.

The combined LOESS–ARMA method efficiently smoothed the field data with moderate noise levels and recovered the ideal pattern in the log–log diagnostic plot.

Abbreviations

- B o :

-

Oil formation volume factor

- c :

-

Compressibility

- h L :

-

Formation thickness

- k :

-

Permeability

- N :

-

Number of recorded pressure data

- p :

-

Pressure

- p 0 :

-

Initial pressure

- q :

-

Flow rate

- r :

-

Radius

- t :

-

Time

- t p :

-

Production time

- T :

-

Vector of the wavelet coefficients

- t i :

-

Wavelet coefficients

- W :

-

Orthogonal N × N matrix

- w s :

-

Wellbore storage

- x(t):

-

Noisy signal

- \(\overline{x} \left( t \right)\) :

-

Denoised signal

- α :

-

Diffusivity coefficient

- φ :

-

Porosity

- λ :

-

Interporosity flow coefficient

- μ :

-

Viscosity

- σ :

-

Noise variance

- ω :

-

Storativity ratio

- T :

-

Total

- w :

-

Wellbore

- AR:

-

Autoregressive

- ARMA:

-

Autoregressive moving average

- ACF:

-

Autocorrelation function

- EMD:

-

Empirical mode decomposition

- LOESS:

-

Locally weighted smoothed scatter gram (second-degree polynomial)

- LOWESS:

-

Locally weighted smoothed scatter gram (first-degree polynomial)

- MA:

-

Moving average

- SMA:

-

Simple moving average

- PACF:

-

Partial autocorrelation function

- PDE:

-

Partial differential equation

- WT:

-

Wavelet transform

References

Aggarwal CC (2013) Outlier analysis. Springer, New York

Arora A, Tomar S (2010) Seismic reflection from an interface between an elastic solid and a fractured porous medium with partial saturation. Transp Porous Media 85(2):375–396

Athichanagorn S (1999) Development of an interpretation methodology for long-term pressure data from permanent downhole gauges, Stanford University

Athichanagorn S, Horne RN (1995) Automatic parameter estimation from well test data using artificial neural network. In: SPE annual technical conference and exhibition. Society of Petroleum Engineers

Athichanagorn S, Horne RN, Kikani J (2002) Processing and interpretation of long-term data acquired from permanent pressure gauges. SPE Reserv Eval Eng 5(5):384–391

Azami H, Mohammadi K, Bozorgtabar B (2012) An improved signal segmentation using moving average and Savitzky–Golay filter. J Signal Inf Process 3(1):39–44

Beck A, Teboulle M (2009) A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J Imaging Sci 2(1):183–202

Becker C, Gather U (1999) The masking breakdown point of multivariate outlier identification rules. J Am Stat Assoc 94(447):947–955

Bioucas-Dias JM, Figueiredo MA (2007) A new TwIST: two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans Image Process 16(12):2992–3004

Blasingame T, Johnston J, Lee W (1989) Type-curve analysis using the pressure integral method. In: SPE California regional meeting. Society of Petroleum Engineers

Booth R, Morton K, Onur M, Kuchuk F (2012) Grid-based inversion of pressure transient test data with stochastic gradient techniques. Int J Uncertain Quantif 2(4):323–339

Bourdet D, Gringarten AC (1980) Determination of fissure volume and block size in fractured reservoirs by type-curve analysis. Paper SPE 9293, presented at the SPE annual technical conference and exhibition, 21–24 September, Dallas, Texas

Bourdet D, Alagoa A, Ayoub JA, Pirard YM (1984) New type curves aid analysis of fissured zone well tests. World Oil 198:111–124

Bourdet D, Ayoub JA, Pirard YM (1989) Use of pressure derivative in well test interpretation. SPE Form Eval 4(2):293–302

Box GE, Jenkins GM, Reinsel GC (2011) Time series analysis: forecasting and control. Wiley, Hoboken, p 734

Box GE, Jenkins GM, Reinsel GC, Ljung GM (2015) Time series analysis: forecasting and control. Wiley, Hoboken

Braester C, Zeitoun DG (1993) Pressure transient response of stochastically heterogeneous fractured reservoirs. Transp Porous Media 11(3):263–280

Brockwell PJ, Davis RA (2012) ITSM for windows: a user’s guide to time series modelling and forecasting. Springer, Berlin

Brockwell PJ, Davis RA (2013) Time series: theory and methods. Springer Science & Business Media, New York

Cleveland WS (1979) Robust locally weighted regression and smoothing scatterplots. J Am Stat Assoc 74(368):829–836

Cleveland WS (1981) LOWESS: a program for smoothing scatterplots by robust locally weighted regression. Am Stat 35(1):54

Cleveland WS, Devlin SJ (1988) Locally weighted regression: an approach to regression analysis by local fitting. J Am Stat Assoc 83(403):596–610

Cleveland WS, Loader C (1996) Smoothing by local regression: principles and methods. In: Härdle W, Schimek MG (eds) Statistical theory and computational aspects of smoothing. Springer, Heidelberg, pp 10–49

Dashtian H, Jafari GR, Lai ZK, Masihi M, Sahimi M (2011) Analysis of cross correlations between well logs of hydrocarbon reservoirs. Transp Porous Media 90(2):445–464

Daubechies I (1990) The wavelet transform, time-frequency localization and signal analysis. IEEE Trans Inf Theory 36(5):961–1005

Daubechies I, Defrise M, De Mol C (2003) An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. arXiv:math/0307152

Donoho DL, Duncan MR (2000) Digital curvelet transform: strategy, implementation, and experiments. In: AeroSense 2000. International Society for Optics and Photonics, pp 12–30

Enders W (2008) Applied econometric time series. Wiley, New York

Fan J, Gijbels I (1996) Local polynomial modelling and its applications: monographs on statistics and applied probability, vol 66. CRC Press, London

Fornasier M, Rauhut H (2008) Iterative thresholding algorithms. Appl Comput Harmon Anal 25(2):187–208

Friendly M (2002) Corrgrams: exploratory displays for correlation matrices. Am Stat 56(4):316–324

Ghaffarian N, Eslamloueyan R, Vaferi B (2014) Model identification for gas condensate reservoirs by using ANN method based on well test data. J Pet Sci Eng 123:20–29

Gringarten AC (1987) How to recognize” double-porosity” systems from well tests. J Pet Technol 39(06):631–633

Hannan EJ, Deistler M (1988) The statistical theory of linear systems. Wiley

Hardle W (1990) Applied nonparametric regression. Cambridge University Press, New York

Hodge V, Austin J (2004) A survey of outlier detection methodologies. Artif Intell Rev 22(2):85–126

Horne RN (1994) Uncertainty in well test interpretation. Paper SPE 27972, presented at the University of Tulsa centennial petroleum engineering symposium, Society of Petroleum Engineers, 29–31 August, Tulsa, Oklahoma

Horne JA, Reyner LA (1995) Sleep related vehicle accidents. BMJ 310(6979):565–567

Huang S-J, Shih K-R (2003) Short-term load forecasting via ARMA model identification including non-Gaussian process considerations. IEEE Trans Power Syst 18(2):673–679

Jeirani Z, Mohebbi A (2006) Estimating the initial pressure, permeability and skin factor of oil reservoirs using artificial neural networks. J Pet Sci Eng 50(1):11–20

Johnston F, Boyland J, Meadows M, Shale E (1999) Some properties of a simple moving average when applied to forecasting a time series. J Oper Res Soc 50(12):1267–1271

Kikani J, He M (1998) Multi-resolution analysis of long-term pressure transient data using wavelet methods. Paper 48966, presented at the SPE annual technical conference and exhibition, 27–30 September, New Orleans, Louisiana

Kirchgassner G, Jurgen W (2007) Introduction to modern time series analysis. Springer, Berlin

Kopsinis Y, McLaughlin S (2009) Development of EMD-based denoising methods inspired by wavelet thresholding. IEEE Trans Signal Process 57(4):1351–1362

Luo J, Ying K, Bai J (2005) Savitzky–Golay smoothing and differentiation filter for even number data. Signal Process 85(7):1429–1434

Mac Berthouex P, Brown LC (1994) Statistics for environmental engineers. Lewis Publishers, Boca Raton

Montgomery DC, Johnson LA, Gardiner JS (1990) Forecasting and time series analysis. McGraw-Hill Companies, New York

Montgomery DC, Jennings CL, Kulahci M (2008) Introduction to time series analaysis and forecasting. Wiley, Hoboken

Nomura M (2006) Processing and interpretation of pressure transient data from permanent downhole gauges. Ph.D. Thesis, Stanford University, Stanford, CA

Olsen S, Nordtvedt J-E (2005) Automatic filtering and monitoring of real-time reservoir and production data. Paper SPE 96553, presented at the SPE annual technical conference and exhibition, 9–12 October, Dallas, Texas

Onur M, Reynolds AC (2001) Nonlinear regression: the information content of pressure and pressure-derivative data. In: SPE annual technical conference and exhibition. Society of Petroleum Engineers

Osman MS, Stewart G (1997) Pressure data filtering and horizontal well test analysis case study. Paper SPE 37802, presented at the middle east oil show and conference, 15–18 March, Bahrain

Sanni MO, Gringarten AC (2008) Well test analysis in volatile oil reservoirs. Paper SPE 116239, SPE annual technical conference and exhibition, 21–24 September, Denver, Colorado, USA

Savitzky A, Golay MJ (1964) Smoothing and differentiation of data by simplified least squares procedures. Anal Chem 36(8):1627–1639

Theodoridis S, Koutroumbas K (2009) Pattern recognition. Elsevier, Amsterdam

Unneland T, Manin Y, Kuchuk F (1998) Permanent gauge pressure and rate measurements for reservoir description and well monitoring: field cases. SPE Reserv Eval Eng 1(03):224–230

Vaferi B, Eslamloueyan R (2015) Hydrocarbon reservoirs characterization by co-interpretation of pressure and flow rate data of the multi-rate well testing. J Pet Sci Eng 135:59–72

Vaferi B, Eslamloueyan R, Ayatollahi S (2011) Automatic recognition of oil reservoir models from well testing data by using multi-layer perceptron networks. J Pet Sci Eng 77(3–4):254–262

Weber K (1993) Enhanced reservoir definition for increased production. AAPG Bull 77(2):354–355

Wei WW-S (1994) Time series analysis. Addison-Wesley, Boston

Whitle P (1951) Hypothesis testing in time series analysis. Almqvist & Wiksells, Uppsala

Zhang Q, Aliaga-Rossel R, Choi P (2006) Denoising of gamma-ray signals by interval-dependent thresholds of wavelet analysis. Meas Sci Technol 17(4):731

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Moosavi, S.R., Qajar, J. & Riazi, M. A comparison of methods for denoising of well test pressure data. J Petrol Explor Prod Technol 8, 1519–1534 (2018). https://doi.org/10.1007/s13202-017-0427-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13202-017-0427-y