Abstract

Hydropower is a clean and efficient technology for producing renewable energy. Assessment and forecasting of hydropower production are important for strategic decision-making. This study aimed to use machine learning models, including adaptive neuro-fuzzy inference system (ANFIS), gene expression programming, random forest (RF), and least square support vector regression (LSSVR), for predicting hydroelectric energy production. A total of eight input scenarios was defined with a combination of various observed variables, including evaporation, precipitation, inflow, and outflow to the reservoir, to predict the hydroelectric energy produced during the experimental period. The Mahabad reservoir near Lake Urmia in the northwest of Iran was selected as a study object. The results showed that a combination of hydroelectric energy produced in the previous month, evaporation, and outflow from the dam resulted in the highest prediction performance using the RF model. A scenario that included all input variables except the precipitation outperformed other scenarios using the LSSVR model. Among the models, LSSVR exerted the highest prediction performance for which RMSE, MAPE, and NSE were 442.7 (MWH), 328.3 (MWH), and 0.85, respectively. The results showed that Harris hawks optimization (HHO) (RMSE = 0.2 WMH, MAPE = 10 WMH, NSE = 0.90) was better than particle swarm optimization (PSO) (RMSE = 0.2 WMH, MAPE = 10 WMH, NSE = 0.90) in optimizing ANFIS during the prediction. The results of Taylor’s diagram indicated that the ANFIS-HHO model had the highest accuracy. The findings of this study showed that machine learning models can be used as an essential tool for decision-making in sustainable hydropower production.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Today, the increasing demand for electricity requires more production and better predictions of output (Hammid et al. 2018). Developing countries, where the need to receive and supply sufficient energy is important, need to stabilize the power system by better tracking of demand and necessary output (Hammid et al. 2018). Considering the adverse effects of fossil fuels, using renewable energy sources such as wind or solar energy has recently attracted much attention. However, they are still somewhat expensive compared to conventional energy sources such as hydropower (Elliot et al. 1998). This means to use the economic and environmental potentials of hydroelectricity to its fullest capacity (Linsley et al. 1992). Hydroelectricity production is usually one of the goals of dam construction, and the amount of electricity produced depends on the height of the dam and the inflow to the dam (Dehghani et al. 2019). Hydroelectricity does not emit carbon dioxide, so in addition to producing clean, renewable energy, it also helps to reach a low-carbon society (Li et al. 2016).

Less developed or developing countries face various challenges in managing electricity demand and supply. The gap between the demand and supply of electricity has a great impact on the economic growth of the countries. Energy production forecasting is vital for policymakers to timely identify the sudden change in electricity demand in certain conditions. The effectiveness of hydropower plants is affected by several factors, such as river flow, reservoir volume, temperature, and electricity price. These factors make it a difficult challenge to predict and manage the operational output of a plant. Therefore, reliable and accurate forecasts of energy production are vital for capacity planning and operation of energy systems (Barzola-Monteses et al. 2022). In addition, since electricity consumption varies remarkably throughout the year, foresight about the value of electricity is an important tool in the management of the electricity distribution network and the operation of the dam. As a result, one of the main components in the operation of the dam is forecasting the production of hydroelectricity (Dehghani et al. 2019).

The amount of electricity produced is affected by various parameters, thus, there is a relationship between the amount of hydropower produced and the influencing parameters nonlinear and complex. It is necessary to use models to recognize the existing relationships to solve such relationships. In recent years, machine learning models to solve nonlinear relationships have become popular (Arya Azar et al. 2021a; Allawi and El-Shafie 2016). These models recognize nonlinear patterns in the system and use them to predict future status (Zare and Koch 2018; Zhang et al. 2019).

Machine learning models have already to some extent been used in the field of hydropower production (e.g., Hammid et al. 2018; Choubin et al. 2019; Dehghani et al. 2019). A summary of studies related to hydropower production forecasting is presented in Table 1. According to the table, during 2008 and 2022, methods including artificial neural networks (ANNs) have received much attention from researchers. On the other hand, hydrological models, such as the soil and water assessment tool (SWAT) to predict hydropower production, are also important (Tamm et al. 2016). Table 1 shows that hydroelectric energy is usually predicted using hydrometeorological variables such as precipitation, temperature, evaporation, and flow entering the reservoir. As well, some researchers have predicted the energy production considering the produced energy in previous periods (Dehghani et al. 2019; Hanoon et al. 2022).

Adaptive neuro-fuzzy inference system (ANFIS) is a machine learning model that is obtained from the combination of ANNs and fuzzy inference systems. The learning ability of ANNs and the capability of fuzzy logic reasoning create a favorable model to determine the complex relationships between input and output variables (e.g., Firat and Güngör, 2007). Despite the advantages of this algorithm, ANFIS sometimes does not perform well since its training algorithms may be trapped in local minima. Therefore, this problem has prompted researchers to use evolutionary optimization algorithms to improve the prediction results of ANFIS (e.g., Milan et al. 2021; Kayhomayoon et al. 2021; Qasem et al. 2019; Seifi and Riahi-Madvar 2019). An algorithm that can improve the performance of ANFIS is the Harris hawks optimization (HHO; Kayhomayoon et al. 2021; Milan et al. 2021). HHO is an algorithm based on swarm intelligence that mimics the collective behavior of the Harris hawks. Harris hawks chase and hunt in groups. This algorithm has been successfully used in engineering problems (Malik et al. 2021; Moayedi et al. 2021a, 2021b). Particle swarm optimization (PSO) is another evolutionary optimization algorithm. The algorithm defines a set of candidate solutions for the optimization problem as a particle swarm. This swarm determines paths through the variable space that are guided by the best performance of itself and its neighbors (Marini and Walczak 2015). In the current study, HHO and PSO algorithms were used to optimize the ANFIS parameters.

Another model used in this study is least square support vector regression (LSSVR), an advanced version of the standard SVRs with lower computational and time complexities and higher performance (Suykens et al. 2002). Mirabbasi et al. (2019) compared this algorithm with other algorithms for the prediction of monthly rainfall, and the results showed that LSSVR performed with higher accuracy. As an evolutionary algorithm, gene expression programming (GEP) is inspired by the theory of Darwinian evolution and imitates the mechanism of living organisms. In this algorithm, unlike ANNs, firstly, the input variables, target, and location function are determined, and then, the optimal structure of the model and its coefficients are determined (Bahmani et al. 2020). The random forest (RF) algorithm is an ensemble method that combines multiple decision tree algorithms to generate an iterative decision-making procedure. A regression tree (RT) represents a set of constraints or conditions that are hierarchically organized and applied sequentially from the root to the leaf of the tree (Breiman et al. 1984; Quinlan 1993). RF is one of the popular models among researchers due to its high prediction accuracy, the ability to learn nonlinear relationships, and the ability to determine essential variables in prediction (Norouzi and Moghaddam 2020).

Considering the importance of hydropower production, this study aims to evaluate the efficiency of GEP, RF, LSSVM, and ANFIS algorithms and then use two evolutionary optimization algorithms, i.e., HHO and PSO, in optimizing the ANFIS model to predict hydroelectric energy production. Each of these models had an acceptable performance in predicting time series in past researches. Also, the approach to achieve the appropriate prediction accuracy varies in each model. Therefore, comparing their results and choosing the most suitable model for predicting hydroelectric energy production seems necessary.

The Mahabad dam in West Azerbaijan province, located in the northwest of Iran, was considered for testing the models. Since the combination of ANFIS with evolutionary optimization algorithms has rarely been applied in the prediction of hydroelectric energy production, the main innovation of this study relies on using two algorithms to optimize the parameter values of the ANFIS model. Moreover, in this study, a combination of different variables was used as model scenarios. This means that, at first, the variables affecting hydroelectric energy production were identified and then used in the modeling in the second stage.

Materials and methods

Study area and data preparation

The Mahabad river catchment is located in northwest of Iran. The catchment is part of the main basin of Lake Urmia (Fig. 1). Its catchment area is 1524.53 km2 and about 3% of the Lake Urmia basin. The Mahabad river is formed by the joining of the Bitas branch in the east and the Kouter branch in the west. On this river, a reservoir dam was constructed at the junction of the two branches of Bitas and Kouter in 1967. At the same time, another diversion dam was constructed downstream of the Mahabad dam to divert water to irrigation canals and control floods that occur downstream of the basin every few years. Also, another goal of the Mahabad dam is to produce hydroelectric energy. Mahabad Dam is one of ten water-filled dams in the country, with an annual water input volume of 339 MCM. The lake of this dam is a permanent wetland, which is located one kilometer from Mahabad city, which is supplied by water supply and agricultural water by the regional water organization the area of the lake behind the dam is about 360 ha.

Various variables, including precipitation, evaporation from the dam reservoir, inflow to the dam, outflow from the dam, and amount of hydroelectric energy production in the previous month, were used to predict the hydroelectric energy production of Mahabad dam (Table 2). The number of data was 193 samples during the years 2000–2020 According to the table, the maximum hydroelectric energy produced is 4464 WMH, with an average of 775.4 WMH. On average, the inflow to the dam and outflow from the dam are 18.02 (MCM) and 17.39 (MCM), respectively.

An important aspect of machine learning models is choosing appropriate input data and variables for the modeling. The selection of these variables should depend on two characteristics, a statistical relationship with the output variable and depending on time. For this purpose, to predict the hydroelectric energy production (HEP) from the Mahabad dam, various variables were used to create the model, including precipitation (P), inflow (Qin), inflow during the previous month (Qin(t − 1)), outflow (Qout), evaporation from the reservoir (E), hydropower produced the previous month (HEP(t − 1)), hydropower produced two months ago (HEP(t − 2)), and hydropower produced three months ago (HEP(t − 3)).

Combining appropriate variables to achieve the highest prediction performance requires creating reliable input scenarios where various input variables add to the quality of output. For this purpose, the correlation between input and output variables was calculated. According to Table 3, HEP(t − 1) had the highest correlation (0.71) with the output variable. After that, E and Qout, with correlation of 0.64 and 0.62, respectively, had the highest correlation with HEP. Among the input variables, P and Qin had the lowest correlation with the target variable.

According to Table 4, the first scenario was defined by only having HEP(t − 1). The second scenario included HEP(t − 1) and E as inputs. The third scenario was defined by three inputs, HEP(t − 1), E, and Qout. Therefore, the scenarios were created based on the correlation coefficient of independent variables with the output, where in each scenario, according to the correlation coefficient of the variables, one variable is added to the previous scenario (Milan et al. 2021). Therefore, eight scenarios were introduced, in which the first scenario had the lowest number of input variables and the eighth included all independent input variables (Table 4).

Hydroelectric energy production

Hydropower is a renewable energy produced from water stored in dams. Water flow turns the blades of a turbine to produce electrical energy from potential energy (Fig. 2). As the water flows through the transmission channels, its speed and pressure reach an optimal level so that the maximum energy can be transferred to the turbines by falling on the turbine blades. In hydropower plants, speed governors and excitation systems are used to control the frequency. These power plants can be started quickly and increasing or decreasing power output can be performed fast.

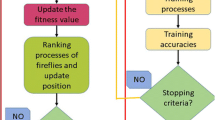

Methodological flowchart

The methodological flowchart is shown in Fig. 3. Hydro meteorological variables, inflow and outflow of the dam, and hydroelectric energy produced in previous periods were identified as factors affecting the present production of hydroelectric energy. Input scenarios were defined by combining these variables to predict hydroelectric energy production. The results of the models were compared using error evaluation criteria and graphical charts such as scatterplots, ridgeline plots, Taylor’s diagrams, and time series diagrams. The model scenario that provided the highest performance was selected for the prediction of hydroelectric energy production (Fig. 3).

Machine learning methods

Random forest

RF is a group method that combines several tree algorithms to produce an iterative procedure for prediction tasks and is one of the efficient tools for classifying patterns and regression. RF can learn complex patterns and consider nonlinear relationships between input variables and output. Presented by Breiman et al. (1984), RF combines several individual algorithms using rule-based methods. The general principles of group training techniques assume that their overall performance is higher than individual training algorithms.

To create an RT, recursive partitioning and multiple regressions are used. The decision process is repeated from the root node to each internal node according to the tree rule until the previously determined stopping condition is reached. Each of the final nodes or leaves is connected to a regression model that is used only in the node. Pruning is used to improve the generalization capacity of the trees by reducing the complexity of the structure. The number of samples in the nodes can be considered as a pruning criterion. To avoid different RTs, RF reduces the diversity of the trees by creating various subsets of the training data, which is called bagging (Breiman 1996). Bagging is a method of randomly resampling the data set from the original data to create training data. In this step, none of the selected data is removed from the input samples to generate the next subset, and thus the variance is reduced. Hence, some data might be used once in the training process, while some other data that are not effective in the modeling might never be used (Schoppa et al. 2020). This makes the model more reliable against slight changes in the input data, and the prediction performance increases.

Gene expression programming

Introduced by Ferreira (2006), GEP is a generalized technique of genetic algorithm (GA) and genetic programming (GP) (Fig. 4). It is an evolutionary algorithm inspired by Darwinian evolution theory and works based on mimicking the mechanism of living organisms (Bahmani et al. 2020). First, the input variables, the objective function, and the positions are defined in this model. Then, the optimal structure of the model and coefficients are determined during the training process. In this method, individuals are nonlinear entities with different sizes and shapes called expression trees (Mehdizadeh et al. 2020). These complex organisms are encoded as simple strings of fixed length called chromosomes (Ferreira 2006). Such a model can be successfully used to identify the relationships between complex variables where common mathematical methods cannot solve the problem and the number and length of data sets are very large (Banzhaf et al. 1998). Before implementing the GEP model, there are steps to be taken, including defining variables of the problem and random fixed numbers, the set of mathematical operators used in the formulas, choosing the appropriate objective function to evaluate the fit of the formulas, determining the parameters controlling the program execution, and the stop criteria and presenting the results of the program (Ferreira 2006). The procedure of modeling is as follows:

By combining the set of functions and terminals, an initial population is created. Each individual is evaluated using the objective function, followed by producing a new population of formulas using one of the three main genetic operations of cross-over, mutation, and selection. The new population is then evaluated by the objective function to determine the desired model with the highest fitness. This continues until the stop criterion is satisfied. GeneXproTools 0.4 was used in this study to implement the GEP algorithm.

Least squares support vector regression

Given a set of training data such as \(\left\{ {x_{k} , y_{k} } \right\}_{K = 1}^{N}\), with \(x_{k} \in R^{N}\) as inputs and \(y_{k} \in R\) as output, Eq. (1) indicates the nonlinear regression function in the initial weighting (Suykens et al. 2002)

where T, b, and W are weight, regression bias, and transpose operator, respectively. φ (x) is considered for mapping inputs to a high dimensional feature space, which can then be solved utilizing an optimization equation (Eq. 2)

with the constraint

where γ is the regulation term for the error e and controls the approximation function. Solving this optimization equation using the Lagrangian form of the objective function results. αi is the Lagrangian coefficient. LSSVR equation is written for the approximation function as Eq. (4) based on the Karush–Kuhn–Tucker condition (Mellit et al. 2013; Arya Azar et al. 2021)

where K (x, xk) is the kernel function. Various types of kernel functions can be defined where the Gaussian type was used in this study (Mellit et al. 2013).

Adaptive neuro-fuzzy inference system

The main purpose of the ANFIS model is to optimize the parameters of the fuzzy inference systems using input–output data sets through a learning algorithm (Jang 1993). In this structure, the nodes in the first and last layers represent the input and output data, while the nodes in the hidden layers are identified as fuzzy membership functions and linguistic rules (Fig. 5). The Sugeno model, which is widely used in the ANFIS structure because of its interpretability and high computational capability, was utilized in this study.

ANFIS suffers from several drawbacks such as trapping in local minima and slow convergence of training, especially for wide search spaces. Therefore, there is a need to use hybrid methods that use optimization techniques to optimize the parameters of the ANFIS. In this study, along with the ANFIS model, HHO and PSO evolutionary algorithms were used to improve the ANFIS model. According to the literature, the HHO algorithm has unique features that can significantly improve the traditional ANFIS model. The ANFIS is improved with evolutionary algorithms to achieve better results. In this structure, the objective function is to minimize the error of the predicted values.

Optimization methods

Harris hawks optimization

HHO is a nature-inspired optimization technique, inspired from rabbit hunting by Harris hawks (Heidari et al. 2019). Similar to other optimization methods, it includes exploration and exploitation steps (Fig. 6). In this method, the hawks, which are the candidate solutions, perch randomly and their position is obtained using Eq. (6)

where X(t) is the current position vector of hawks, X(t + 1) is their position in the next iteration, Xrabbit(t) is the position of rabbit, r1, r2, r3, r4, and q are random values between 0 and 1, which are updated in each iteration, LB and UB are the upper and lower bounds of variables, Xrand(t) is the current position of a randomly selected hawk, and Xm is the average position of the current population of hawks. The average position of hawks is obtained using Eq. (7)

where Xi(t) indicates the location of the i-th hawk in iteration t and N denotes the total number of hawks. The escaping energy of the rabbit is modeled using Eq. (8)

where E indicates the escaping energy of the prey, T is the maximum number of iterations, and E0 is the initial energy. The hawks intensify the besiege process to catch the exhausted prey effortlessly. Defining the escaping energy in this algorithm enables it to switch between soft besiege (|E|≥ 0.5) and hard besiege (|E|< 0.5). If r ≥ 0.5 and |E|≥ 0.5, the rabbit still has enough energy to escape by some random misleading jumps. During these attempts, the hawks encircle it softly to make the rabbit more exhausted to perform the surprise pounce (Eqs. 9 and 10)

where ΔX(t) is the difference between the position vector of the rabbit and the current location of the hawks in iteration t, r5 is a random number between 0 and 1, and J is the random jump strength of the rabbit throughout the escaping procedure. If r ≥ 0.5 and |E|< 0.5, the prey is exhausted, and the hawks hardly encircle the prey to perform the surprise pounce. In this situation, the current positions are updated using Eq. (11)

To perform a soft besiege, it is supposed that the hawks could decide their next move based on Eq. (12).

They would dive based on the levy flight (LF)-based patterns using the following rule.

where D is the dimension of the problem, S is a random vector, and LF is calculated using Eq. (14)

where u and v are random values between 0 and 1, and β is a defau constant set to 1.5. The final strategy for updating the positions of hawks in the soft besiege is brought in Eq. (15).

where Y and Z are obtained using Eqs. (12) and (13), respectively. If r < 0.5 and |E|< 0.5, the rabbit has not enough energy to escape, and there is a hard besiege before the surprise pounce to catch the prey. In this situation, Y and Z in Eq. (15) are obtained using Eqs. (16) and (17).

Particle swarm optimization

PSO is one of the nature-inspired optimization methods first introduced by Kennedy and Eberhart (1995). The PSO algorithm starts with creating a random population. Each component in nature is a different set of decision variables whose optimal values should be provided. Each component represents a vector in the problem-solving space. The algorithm includes a velocity vector in addition to the position vector, which forces the population to change their positions in the search space. The velocity consists of two vectors called p and pg. p is the best position that a particle has ever reached and pg is the best position that another particle in its neighborhood has ever reached. In this algorithm, each particle provides a solution in each iteration. In the search for a d-dimensional space, the position of the particle i is represented by a D-dimensional vector called Xi = (Xi1, Xi2, …, XiD). The velocity of each particle is shown by a D-dimensional velocity vector called Vi = (Vi1, Vi2, …, ViD). Finally, the population moves to the optimum point using Eqs. (18) and (19).

where ω is the shrinkage factor used for convergence rate determination, r1 and r2 are random numbers between 0 and 1 with uniform distribution, N is the number of iterations, c1 is the best solution obtained by a particle, and c2 is the best solution identified by the whole population. In this study, to train ANFIS using the PSO algorithm, a number of N random vectors with the initial Xi position were created. The ANFIS was then implemented with the particle positions, and the PSO objective function error was considered. The particles were then moved to find better positions and new parameters are used to construct the ANFIS. This process was repeated until convergence with the least prediction error was achieved.

Performance evaluation criteria

The selection of the training and test data was performed randomly. About 70% of the data was used for training, while the rest was for testing the models. Root mean square error (RMSE), mean absolute percentage error (MAPE), and coefficient of determination (R2) were used to evaluate the performance of scenarios and machine learning methods

where xo is the observed (measured) value, xp is the predicted value, and n is the number of samples. The lower the RMSE, MAPE, and higher R2 values, the better the performance of the model is.

Results

In this study, machine learning models optimized by sophisticated optimization algorithms were used to predict hydroelectric energy production. Table 5 brings the optimized values of the machine learning parameters and the details of the optimization methods. The radial basis function (RBF) kernel was used in the LS-SVR model for prediction (Sarlaki et al. 2021; Massah et al. 2021), with the optimal value of the kernel parameter was obtained 1206.7. The number of neurons in the learning layer of neural-based algorithms is an important parameter (Hashemi et al. 2014). A Gaussian membership function was selected in the ANFIS structure (Esmaili et al. 2021; Milan et al. 2021). ANFIS uses the Sugeno type fuzzy system, and the best performance was observed when using a linear function as the membership function of the output. The population and the maximum number of iterations in the HHO algorithm were 30 and 2500, respectively. Other optimized parameters and specifications of the models are shown in Table 5. The size of initial population of PSO was considered 30 since higher values did not improve the model’s performance. Furthermore, after 2500 iterations, no changes were observed in the objective function’s value, and therefore, the maximum iteration was considered 2500.

Table 6 shows the results of ANFIS, ANFIS-HHO, ANFIS-PSO, LSSVR, RF, and GEP models in predicting hydroelectric energy production using the various input scenarios. The superiority of the models in prediction is determined based on their performance on the test data. If the values of error evaluation criteria are close to each other for the test data, the results obtained for the training are also used. Among the models, the ANFIS-HHO model, with the evaluation criteria of MAPE, RMSE, and NSE equal to 225.5 WMH, 348 WMH, and 0.88 for the test data, performed the best in predicting hydropower production. The results of this model were obtained using Scenario S2 as input. For the training data, these values were very close to the values of the test data (NSE = 0.93, RMSE = 288.8 WMH, and MAPE = 165.6 WMH). ANFIS-PSO exerted similar results to those of the ANFIS-HHO model so that the values of MAPE, RMSE, and NSE for the training data were 225 WMH, 348.2 WMH, and 0.90, respectively, and 214.8 WMH, 379 WMH, and 0.86, respectively for the test data. Like the ANFIS-HHO model, optimal results of the ANFIS-PSO model were obtained using Scenario S3 and with the input of HEP(t − 1), E, and Qout. Comparing the results of these two models with the basic ANFIS model shows that the optimized hybrid models improved the results. Although ANFIS exerted reliable results for the training data, especially using scenarios S3–S8, it showed notable errors for the test data.

The best performance of the ANFIS model was obtained for Scenario S2, where the MAPE, RMSE, and NSE values were 477.1 WMH, 633.5 WMH, and 0.62, respectively, for test data. The best performance of this model for the training data was observed for Scenario S5 (MAPE = 101.7 WMH, RMSE = 57.2 WMH, and NSE = 0.99). According to the results of the basic ANFIS model and its hybrid models, ANFIS required more data to perform reliably. The basic model was trapped in local minima and could not recognize the behavior of the target parameter, thus, it predicted a model output with significant error. The optimization algorithms greatly improved the shortcomings of this basic model and improved the accuracy of the model based on MAPE, RMSE, and NSE values. Among the other three proposed models, LSSVR and RF models estimated the training data with relatively less error, like ANFIS. In both models, Scenario S1, in which the number of input variables is less, provided the worst results (MAPE = 477.3 WMH, NSE = 0.64, and RMSE = 699.6 WMH for LSSVR, and MAPE = 470 WMH, RMSE = 647.7 WMH, and NSE = 0.65 for RF). The best results of the LSSVR model were obtained using Scenario S7, with more input variables (MAPE = 328.3 WMH, RMSE = 442.7 WMH, and NSE = 0.0.85). For the RF model, using Scenario S3, which included a lower number of input variables, better results were achieved (MAPE = 389.3 WMH, RMSE = 573.1 WMH, and NSE = 0.78). Compared to ANFIS-HHO, ANFIS-PSO, LSSVR, and RF models, the GEP model exerted a lower ability to predict hydroelectric energy production. Compared to ANFIS, although it has lower accuracy than this model for the training data, it performed well for the test data in Scenarios S4–S8. The best results for this model were obtained for Scenario S6, where MAPE, RMSE, and NSE were 305 WMH, 489.5 WMH, and 0.84, respectively.

A comparison of the observed and predicted hydroelectric energy production using ANFIS, ANFIS-HHO, ANFIS-PSO, and LSSVR, RF, and GEP models for test data is presented in Fig. 7. According to the figure, the ANFIS model predicted the test data with a greater error. This error is primarily observed for peak values. As can be seen, in these peaks (i.e., points 3, 15, 20, 22, 37, 44, 45, 48, and 49), the model estimated the amount of hydroelectric energy production either higher or lower than the observed value (Fig. 7). The prediction error reached between 1500 and 2000 in some points and below − 1000 in others. Using the PSO and HHO algorithms improved the results of the ANFIS model to a great extent. In the diagrams related to the ANFIS-HHO and ANFIS-PSO models, the hybrid algorithms better recognized the behavior of the peak points and compensated for the weaknesses of the ANFIS model. This improvement is more evident in the ANFIS-HHO than in the ANFIS-PSO model (Fig. 7). The two hybrid models reduced the error range in the respective graphs to between − 1000 and 1000. Although the LSSVR model predicted the peak values somewhat correctly, at some points, it can be seen that the observations and predictions are relatively far apart. Both RF and GEP models predicted hydroelectric energy production with a relatively large error. The prediction error reached more than 2000 at some points for the RF model while it was lower than −1500 for the GEP model (Fig. 7).

The scatterplot of the selected scenario of each model for the test data is presented in Fig. 8. These plots indicate the trends and intensity of the changes in the predictions compared to observations. The plots show that there is an acceptable agreement between the observed and predicted values. In the ANFIS-HHO, ANFIS-PSO, and LSSVR models, the data are denser around the regression line, and the R2 values for these models were 0.89, 0.87 and 0.85, respectively, indicating a high correlation between observations and predictions. Although the R2 values were within the acceptable range among the three models of GEP, RF, and ANFIS, the dispersion around the regression line is higher, especially for the ANFIS model.

A comparison of models was made using Taylor’s diagram (Fig. 9). This diagram is essential since it compares the models by considering the three criteria of standard deviation (vertical axis), RMSD (arcs inside the quarter circle), and correlation coefficient (arc of the quarter circle). According to the figure, a model with a position closer to the position of the observation data has a better estimate of hydroelectric energy production. The correlation in almost all models was between 0.9 and 0.95, indicating appropriate efficiency of the models in predictions. Among the models, the location of the ANFIS-HHO and ANFIS-PSO models is closer to that of the observations. On the other hand, the RMSD value of the total data for the four ANFIS-PSO, ANFIS-HHO, RF, and GEP models is about 400 or less, while these values for the ANFIS and LSSVR models are more than 400 and close to 500. According to Taylor’s diagram, the three models, ANFIS-HHO, ANFIS-PSO, and LSSVR, performed best in predicting hydroelectric energy production, respectively.

The ridgeline plot was used to compare the statistical distribution of observed and predicted power for the models under the selected scenarios (Fig. 10). The shape of prediction distribution for lower values is closer to the observational data. For the LSSVR model, in the range between 1000 and 2000 (horizontal axis), the distribution is slightly higher than for other models and observations. In the graphs related to RF and GEP, this decreased to the range between 2000 and 4000, and for maximum values, the shape of the graph of these two models is more elongated. However, in the diagrams related to the ANFIS, ANFIS-HHO, and ANFIS-PSO models, the shape of the diagram is closer to the observations. Of course, since this diagram is drawn for the entire data and the ANFIS model performed well for the higher share training data, the shape of its distribution is closer to the observations.

Discussion

In this study, the performance of ANFIS, LSSVR, RF, and GEP models in predicting the hydroelectric energy production of the Mahabad reservoir in northwest Iran was evaluated. For this purpose, various input variables were considered for predictions, among which HEP(t − 1) and P had the highest and lowest correlation with the produced hydroelectric energy, respectively. To implement the models, eight input scenarios were developed based on the correlation between the input and output variables. Three models, LSSVR, RF, and GEP provided acceptable results both for the training and test data. The LSSVR and GEP models achieved acceptable results using S6 and S7 scenarios, while the RF model provided promising results using Scenario S3. Therefore, the LSSVR and GEP models needed more input variables, while the RF model required fewer ones, making this model useful in situations of lack of data or data with high uncertainty. Although the ANFIS had the best performance among the models in predicting the training data, it had a poor performance in estimating the test data. It seems that ANFIS requires more data to provide reliable results. In such a situation, one of the most effective solutions is using evolutionary optimization algorithms to improve the results of basic models, which has been reported previously in the literature (Tikhamarine et al. 2020; Kayhomayoon et al. 2022; Azar et al. 2021b). Therefore, two algorithms, HHO and PSO, were used in this study to optimize the parameters of the ANFIS model. Although these two optimization algorithms reduced the accuracy of the model for the training data, they significantly improved the results for the test data. As the model structure becomes more complicated, the convergence time of the model using the optimization methods also increases. Another advantage that was observed from the two hybrid models in this research was that the two models achieved good results with fewer input variables, i.e., Scenario S3.

The ANFIS-HHO hybrid model generally provided the most relevant results in predicting hydroelectric energy production. After that, the ANFIS-PSO hybrid model with results close to the ANFIS-HHO model is suggested as a reliable tool to predict hydroelectric energy production. The results showed that evolutionary algorithms can improve the results of basic machine learning-based regression models (Dehghani et al. 2019). In terms of the structure of the models, the two hybrid models require a complex structure to make predictions with appropriate accuracy, while the RF model uses a simpler structure, but its accuracy is less than the hybrid models.

The results also showed the importance of using independent variables such as evaporation and inflow and outflow to the reservoir to predict hydroelectric energy production. Therefore, according to the obtained results, in order to predict the hydroelectric energy production from the dam reservoirs, meteorological variables and the flows of the dam are also required. This corresponds to the actual operation of the reservoirs of the dams. The results of the models in this research showed that their performance is different using each prediction scenario. The role of machine learning models in predicting energy production time-series was showed to be positive in this research, which can be an effective step for the management of multi-purpose dam reservoirs.

Conclusion

The performance of HHO and PSO algorithms in improving the results of the ANFIS model to predict the hydroelectric energy production of the Mahabad reservoir was evaluated in this study. Moreover, the performance of ANFIS, ANFIS-HHO, ANFIS-PSO, LSSVR, RF, and GEP was compared in the prediction of the power output. At first, the parameters affecting hydroelectric energy production were identified, and then, eight input scenarios were defined by combining the different variables. About 70% of the total data were used for training the models and the remaining for the testing. The performance of the models was compared using error evaluation criteria (MAPE, RMSE, and NSE) and graphical methods, including scatterplots, time series and error plots, Taylor’s diagrams, and ridgeline plots.

The results showed that even though the ANFIS model estimated the training data with a low error and a high NSE, it performed poorly for the test data. For this purpose, two optimization models, PSO and HHO, were combined with the ANFIS model to prepare hybrid ANFIS-HHO and ANFIS-PSO models, which exerted more accurate results for the test data than the basic ANFIS model. The accuracy of the ANFIS-HHO model was slightly higher than that of the ANFIS-PSO. The hybrid models provided the best results using Scenario S3. This scenario included a low number of input variables; therefore, these two models can provide reliable results when there is a lack of data. LSSVR and RF provided acceptable results in predicting hydroelectric energy production, but their accuracy was lower than those of the two hybrid models. Although, the LSSVR model used more input variables (Scenario S7) to achieve the highest accuracy. The GEP model had an acceptable performance for five scenarios.

Scatterplots, time series and error plots, and Taylor’s diagrams showed that the results of the ANFIS-HHO model for the test data were closer to the observational data, and after that, the ANFIS-PSO model provided results close to the ANFIS-HHO model. Finally, a ridgeline diagram was drawn for the entire data. Based on these diagrams, the most distribution of data was in the range of 0–1000, and the models recognized the behavior of the function well for these points. However, the shape of the diagram for the ANFIS and LSSVR models, especially at peak points, was more similar to the shape of the observations, while in other models, at the peak points, the shape of the diagram was more elongated.

The results show that machine learning models are reliable tools for predicting hydroelectric energy production. Since all the models achieved relatively good results without using all the variables affecting the production of hydroelectric energy, it can be said that these models can provide promising performance in the conditions of lacking input data. For future research, it is recommended that in addition to the input variables used in this research, variables such as temperature, water height behind the dam, and the volume of water stored behind the dam should be considered. In addition, today, the phenomenon of climate change is a well-known phenomenon that affects various sectors. Investigating the effects of climate change on the amount of hydroelectric energy produced can provide exciting results.

Data availability

The data of this research cannot be published.

References

Allawi MF, El-Shafie A (2016) Utilizing RBF-NN and ANFIS methods for multi-lead ahead prediction model of evaporation from reservoir. Water Resour Manage 30(13):4773–4788

Arya Azar N, Ghordoyee Milan S, Kayhomayoon Z (2021) Predicting monthly evaporation from dam reservoirs using LS-SVR and ANFIS optimized by Harris hawks optimization algorithm. Environ Monit Assess 193(11):1–14

Azar NA, Milan SG, Kayhomayoon Z (2021) The prediction of longitudinal dispersion coefficient in natural streams using LS-SVM and ANFIS optimized by Harris hawk optimization algorithm. J Contam Hydrol 240:103781

Bahmani R, Solgi A, Ouarda TB (2020) Groundwater level simulation using gene expression programming and M5 model tree combined with wavelet transform. Hydrol Sci J 65(8):1430–1442

Banzhaf W, Nordin P, Keller R, Francone F (1998) Genetic programming: an introduction: on the automatic evolution of computer programs and its applications, vol 340. Morgan Kaufmann, Burlington, pp 94104–3205

Barzola-Monteses J, Gomez-Romero J, Espinoza-Andaluz M, Fajardo W (2022) Hydropower production prediction using artificial neural networks: an Ecuadorian application case. Neural Comput Appl 34(16):13253–13266

Breiman L (1996) Bagging predictors. Mach Learn 24(2):23–40

Breiman L, Friedman JH, Olshen RA, Stone CJ (1984) Classification and regression trees. Chapman and Hall/CRC, New York, p 744

Choubin B, Moradi E, Golshan M, Adamowski J, Sajedi-Hosseini F, Mosavi A (2019) An Ensemble prediction of flood susceptibility using multivariate discriminant analysis, classification and regression trees, and support vector machines. Sci Total Environ 651:2087–2096

Cobaner M, Haktanir T, Kisi O (2008) Prediction of hydropower energy using ANN for the feasibility of hydropower plant installation to an existing irrigation dam. Water Resour Manage 22(6):757–774

Dehghani M, Riahi-Madvar H, Hooshyaripor F, Mosavi A, Shamshirband S, Zavadskas EK, Chau KW (2019) Prediction of hydropower generation using grey wolf optimization adaptive neuro-fuzzy inference system. Energies 12(2):289

Elliot TC, Chen K, Swanekamp RC (1998) Standard handbook of power plant engineering, 2nd edn. McGraw-Hill

Esmaili M, Aliniaeifard S, Mashal M, Asefpour Vakilian K, Ghorbanzadeh P, Azadegan B, Seif M, Didaran F (2021) Assessment of adaptive neuro-fuzzy inference system (ANFIS) to predict production and water productivity of lettuce in response to different light intensities and CO2 concentrations. Agric Water Manag 258:107201

Ferreira C (2006) Gene expression programming mathematical modeling by an artificial intelligence. Springer, Berlin

Firat M, Güngör M (2007) River flow estimation using adaptive neuro fuzzy inference system. Math Comput Simul 75(3–4):87–96

Hammid AT, Sulaiman MHB, Abdalla AN (2018) Prediction of small hydropower plant power production in Himreen Lake dam (HLD) using artificial neural network. Alex Eng J 57(1):211–221

Hanoon MS, Ahmed AN, Razzaq A, Oudah AY, Alkhayyat A, Huang YF, El-Shafie A (2022) Prediction of hydropower generation via machine learning algorithms at three Gorges Dam. China. Ain Shams Eng J 14:101919

Hashemi A, Asefpour Vakilian K, Khazaei J, Massah J (2014) An artificial neural network modeling for force control system of a robotic pruning machine. J Inf Organ Sci 38(1):35–41

Heidari AA, Mirjalili S, Faris H, Aljarah I, Mafarja M, Chen H (2019) Harris hawks optimization: algorithm and applications. Futur Gener Comput Syst 97:849–872

Huangpeng Q, Huang W, Gholinia F (2021) Forecast of the hydropower generation under influence of climate change based on RCPs and developed crow search optimization algorithm. Energy Rep 7:385–397

Jang JS (1993) ANFIS: adaptive-network-based fuzzy inference system. IEEE Trans Syst Man Cybern 23(3):665–685

Jung J, Han H, Kim K, Kim HS (2021) Machine learning-based small hydropower potential prediction under climate change. Energies 14:3643

Kayhomayoon Z, Azar NA, Milan SG, Moghaddam HK, Berndtsson R (2021) Novel approach for predicting groundwater storage loss using machine learning. J Environ Manage 296:113237

Kayhomayoon Z, Naghizadeh F, Malekpoor M, Arya-Azar N, Ball J, Ghordoyee-Milan S (2022) Prediction of evaporation from dam reservoirs under climate change using soft computing techniques. Environ Sci Pollut Res 30:27912–27935

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of ICNN'95-international conference on neural networks, vol. 4. IEEE, pp 1942–1948

Kostić S, Stojković M, Prohaska S (2016) Hydrological flow rate estimation using artificial neural networks: model development and potential applications. Appl Math Comput 291:373–385

Li GD, Masuda S, Nagai M (2016) Prediction of hydroelectric power generation in Japan. Energy Sources Part B 11(3):288–294

Linsley RK, Franzini JB, Freyberg DL, Tchobanouglous G (1992) Water resources engineering, 4th edn. McGraw-Hill, New York

Lopes MNG, Da Rocha BRP, Vieira AC et al (2019) artificial neural networks approaches for predicting the potential for hydropower generation: a case study for Amazon region. J Intell Fuzzy Syst 36:5757–5772

Malik A, Tikhamarine Y, Sammen SS, Abba SI, Shahid S (2021) Prediction of meteorological drought by using hybrid support vector regression optimized with HHO versus PSO algorithms. Environ Sci Pollut Res 28(29):39139–39158

Marini F, Walczak B (2015) Particle swarm optimization (PSO). a tutorial. Chemom Intell Lab Syst 149:153–165

Massah J, Vakilian KA, Shabanian M, Shariatmadari SM (2021) Design, development, and performance evaluation of a robot for yield estimation of kiwifruit. Comput Electron Agric 185:106132

Mehdizadeh S, Ahmadi F, Mehr AD, Safari MJS (2020) Drought modeling using classic time series and hybrid wavelet-gene expression programming models. J Hydrol 587:125017

Mellit A, Pavan AM, Benghanem M (2013) Least squares support vector machine for short-term prediction of meteorological time series. Theor Appl Climatol 111(1–2):297–307

Milan SG, Roozbahani A, Azar NA, Javadi S (2021) Development of adaptive neuro fuzzy inference system–evolutionary algorithms hybrid models (ANFIS-EA) for prediction of optimal groundwater exploitation. J Hydrol 598:126258

Mirabbasi R, Kisi O, Sanikhani H, Meshram SG (2019) Monthly long-term rainfall estimation in Central India using M5Tree, MARS, LSSVR, ANN and GEP models. Neural Comput Appl 31(10):6843–6862

Moayedi H, Abdullahi MAM, Nguyen H, Rashid ASA (2021a) Comparison of dragonfly algorithm and Harris hawks optimization evolutionary data mining techniques for the assessment of bearing capacity of footings over two-layer foundation soils. Eng Comput 37(1):437–447

Moayedi H, Osouli A, Nguyen H, Rashid ASA (2021b) A novel Harris hawks’ optimization and k-fold cross-validation predicting slope stability. Eng Comput 37(1):369–379

Norouzi H, Moghaddam AA (2020) Groundwater quality assessment using random forest method based on groundwater quality indices (case study: Miandoab plain aquifer, NW of Iran). Arab J Geosci 13(18):1–13

Qasem SN, Samadianfard S, Kheshtgar S, Jarhan S, Kisi O, Shamshirband S, Chau KW (2019) Modeling monthly pan evaporation using wavelet support vector regression and wavelet artificial neural networks in arid and humid climates. Eng Appl Comput Fluid Mech 13(1):177–187

Quinlan JR (1993) C4.5 programs for machine learning. Morgan Kaurmann, San Mateo

Sarlaki E, Paghaleh AS, Kianmehr MH, Asefpour Vakilian K (2021) Valorization of lignite wastes into humic acids: process optimization, energy efficiency and structural features analysis. Renew Energy 163:105–122

Schoppa L, Disse M, Bachmair S (2020) Evaluating the performance of random forest for large-scale flood discharge simulation. J Hydrol 590:125531

Seifi A, Riahi-Madvar H (2019) Improving one-dimensional pollution dispersion modeling in rivers using ANFIS and ANN-based GA optimized models. Environ Sci Pollut Res 26(1):867–885

Suykens J, De Brabanter J, De Moor B, Vandewalle JAK, Van Gestel T (2002) Least squares support vector machines, vol 4. World Scientific, Singapore

Tamm O, Luhamaa A, Tamm T (2016) Modeling future changesinthe north-estonian hydropower production by using SWAT. Hydrol Res 47:835–846

Tikhamarine Y, Souag-Gamane D, Ahmed AN, Sammen SS, Kisi O, Huang YF, El-Shafie A (2020) Rainfall-runoff modelling using improved machine learning methods: Harris hawks optimizer vs. particle swarm optimization. J Hydrol 589:125133

Zare M, Koch M (2018) Groundwater level fluctuations simulation and prediction by ANFIS-and hybrid Wavelet-ANFIS/Fuzzy C-Means (FCM) clustering models: application to the Miandarband plain. J Hydro-Environ Res 18:63–76

Zhang X, Peng Y, Xu W, Wang B (2019) An optimal operation model for hydropower stations considering inflow forecasts with different lead-times. Water Resour Manage 33(1):173–188

Zhou, F., Li, L., Zhang, K., Trajcevski, G., Yao, F., Huang, Y., et al. (2020) Forecasting the evolution of hydropower generation. In: Proceedings of the 26th ACM SIGKDD international conference on knowledge discovery and data mining. pp 2861–2870

Zolfaghari M, Golabi MR (2021) Modeling and predicting the electricity production in hydropower using conjunction of wavelet transform, long short-term memory and random forest models. Renew Energy 170:1367–1381

Funding

This research is funded independently and supported by the authors.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Ethical approval

There is no ethical issues.

Consent to participate

The full consent of all authors is confirmed.

Consent to publish

All authors agree to publish.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kayhomayoon, Z., Arya Azar, N., Ghordoyee Milan, S. et al. Application of soft computing and evolutionary algorithms to estimate hydropower potential in multi-purpose reservoirs. Appl Water Sci 13, 183 (2023). https://doi.org/10.1007/s13201-023-02001-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s13201-023-02001-5