Abstract

In this paper, we develop a video surveillance system to detect incidents in heavy traffic in tunnels. Incidents in tunnels may cause secondary accidents or fires that can result in fatal disasters. Therefore, it is important to detect incidents as soon as possible so that officers can manage the traffic inside the tunnels. Generally, video images in tunnels suffer from heavy occlusion because the cameras are mounted at low positions. The occlusion problem is more serious in urban tunnels because of their heavy traffic. We developed a tracking algorithm that segments vehicles and estimates precise vehicle trajectories despite heavy occlusion. Using this tracking algorithm, we developed a dedicated algorithm to detect incidents from traffic images. For the experiments, 6 months of video streams from three cameras were investigated, and 32 incidents were examined to evaluate the developed algorithm.


1 Introduction

There is a large body of previous work on vehicle tracking. Early work includes the following. The method of Kollnig and Nagel [1] is widely known for its success using a vehicle shape model consisting of horizontal and vertical edges. Peterfreund [2] developed a boundary extraction method based on 'Snakes' [3] for vehicle tracking. Smith [4] and Grimson [5] employed statistical analysis and clustering of optical flows for the tracking problem. Those works do not deal with the occlusion problem, but some others do. Leuck [6] and Gardner [7] employed 3D models of vehicle shape and developed a dedicated algorithm for matching the models to vehicle images. However, since this method requires models of many kinds of vehicles, it is difficult to apply to a scene containing various kinds of vehicles traveling in various directions. Malik [8] tried to resolve the occlusion problem with a dedicated reasoning method. Although those methods achieved good performance in sparse traffic with simple motion, they cannot deal with complicated traffic.

In recent years, tracking algorithms have been steadily improved [9–13]. Among them, Kanhere [10] tried to solve the occlusion problem at low camera angles by employing a 3-D perspective mapping from the scene to the image along with a plumb line projection. Zhang [12] applied a multilevel framework to the occlusion problem. In that work, occlusion is detected by evaluating the compactness ratio and interior distance ratio of vehicles, and the detected occlusion is handled by removing a “cutting region” of the occluded vehicles. Ambai [13] developed an occlusion-robust tracking method consisting of feature point detection and feature point clustering. A gradient-based feature detector was used for the feature point detection, and a graph cut method was used to separate the occluding objects.

Much research on incident detection has also been performed. Cucchiara [14] applied traffic rule-based reasoning to images for simple traffic conditions on a single-lane straight road. Jung [15] tracked vehicles in traffic images for precise traffic monitoring. Zhang [16], Srinivasan [17], and Byun [18] employed Bayesian networks and neural networks to distinguish incidents from ordinary traffic. None of the above algorithms considers the effect of occlusion. Thus, they are difficult to apply to complicated traffic situations such as congestion. Thomas [19] and Zheng [20] tried to find outliers in traffic flow statistics for incident detection. Although their methods were successful, they require a longer time for detection than video analysis. Shehata [21], Versavel [22], and Austin [23] employed video surveillance for incident detection. Although their methods were successful, they focus on relatively easy cases such as the detection of vehicles stalled in prohibited areas.

Incident detection in heavy traffic is quite challenging because of heavy occlusion. Incident detection in tunnels is even more difficult because the cameras are installed at low angles. In this paper, we resolve the heavy occlusion problem with an interlayer feedback strategy and develop an algorithm that detects incidents successfully even in congested tunnel traffic. The algorithm was examined using video images recorded in a tunnel on the Tokyo Metropolitan Expressway.

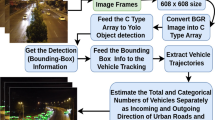

2 Algorithm Hierarchy for Scene Understanding

A semantic hierarchy is a popular idea for constructing cognitive systems. In this section, an algorithm hierarchy for traffic scene understanding is discussed in detail. In particular, this paper develops the idea of an interlayer feedback algorithm, which is described in the last part of this section. Although the discussion uses traffic scene understanding as its example, the idea should be useful for cognitive systems in general.

2.1 Overview of Semantic Hierarchy

Generally, the algorithm hierarchy for cognitive systems can be separated into two groups of layers, the Signal Processing Layer and the Semantics Layer (Fig. 1). The Signal Processing Layer is responsible for transforming electronic signals such as image data into data that has significance in the real world, such as vehicle trajectories. The Semantics Layer is responsible for transforming the data obtained from the Signal Processing Layer into semantic symbols that humans can recognize verbally.

The Physics Layer in the Signal Processing Layer should contain algorithms that refer only to information intrinsic to the images themselves. In other words, the algorithms in the Physics Layer should not refer to information such as the shapes of vehicles and humans, the coordinate geometry between the image and the real world, or the topology of vehicle lanes. Typically, methods for background image acquisition or edge extraction are considered Physics Layer algorithms. Such algorithms can therefore be applied generally to a variety of objects and scenes. On the other hand, the algorithms in the Morphology Layer refer to external information that cannot be obtained from the images themselves. Models of vehicle shapes or human bodies, real-world geometry, camera parameters, and vehicle lane topology are examples of such external information.

Previous work on object tracking does not usually distinguish the Physics Layer from the Morphology Layer. However, it is important to clarify the difference between the two layers and to combine them appropriately, in order to retain both the generality of the Physics Layer and the specificity of the Morphology Layer. The idea of interlayer feedback is discussed in detail in Section 3.

In the Behavior Layer of the Semantics Layer, vehicle trajectories obtained from the Signal Processing Layer are transformed into verbally significant symbols that represent each vehicle’s behavior, such as stalling, slowing, and changing lanes. Finally, algorithms in the Event Layer determine the event that best represents the traffic scene by combining the behaviors of vehicles obtained from the Behavior Layer.

As an example, when the system detects a stalled vehicle and the following vehicles frequently change lanes to avoid it, the scene should be determined to be an incident. On the other hand, even when the system detects a stalled vehicle, the scene should be determined to be congestion if the following vehicles do not change lanes in that way.

2.2 The S-T MRF Model as a Physics Layer Algorithm

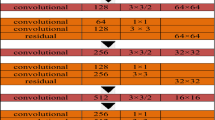

The Spatio-Temporal Markov Random Field (S-T MRF) model [24, 25] was developed to segment spatio-temporal images by referring only to information intrinsic to the image sequences. It divides an image into blocks, each a group of pixels, and optimizes the labeling of those blocks by referring to the texture and labeling correlations among them, in combination with their motion vectors. Combined with a stochastic relaxation method, the S-T MRF model optimizes object boundaries precisely despite occlusion.

For example, an Object-map, which represents the segmentation result as a map of the object ID distribution, is obtained as shown in Fig. 2. This result was obtained by applying the S-T MRF model to images taken from a very low angle at merging traffic on an expressway. In the tracking images of Fig. 2a, the bounding boxes overlap each other. However, the vehicles were segmented correctly, as the Object-map in Fig. 2b shows; the overlapping rectangles are only an artifact of the visualization.

Since the S-T MRF model is defined as a Physics Layer algorithm, it cannot segment occluded objects that move with similar motions throughout their travel. The interlayer feedback strategy is effective for solving such occlusion problems with similar motions, as described in the following section.

3 Interlayer Feedback Algorithm

Occlusion occurs frequently in heavy tunnel traffic, and its degree is severe because the cameras are installed at low angles. In addition, occluded vehicles in tunnel traffic travel in the same direction at similar velocities, as shown in Fig. 3a. The S-T MRF model cannot segment occluded vehicles with similar motions.

In such cases, algorithms in the Morphology Layer should segment the vehicles that were not segmented by the S-T MRF model. For that purpose, we developed a dedicated algorithm that segments occluding vehicles by referring to the horizontal edge patterns of vehicles. Vehicle images are rich in horizontal edges, and the patterns differ among vehicle types such as sedans, wagons, trucks, and buses, as shown in Fig. 3b. Therefore, occluding vehicles can be segmented by finding the gaps between horizontal edge patterns. To find the gap, an edge image must first be obtained from the original image. This is a binary edge image, defined as a binarized version of the illumination-invariant edge image used in the S-T MRF model [25]. The coordination among the S-T MRF model, edge extraction, and this algorithm (Interface-split) is represented in Fig. 4. The Interface-split algorithm is applied to a rectangle that is judged to contain two vehicles straddling two lanes. The algorithm is described as follows.

[Interface-split: detecting gaps in horizontal edge patterns]

- [Step 1] A scanning line is set up in the vertical direction of the rectangular image, and the presence of an edge in each block on the scanning line is inspected.

- [Step 2] A one-dimensional pattern is generated along the scanning line. Each element of this pattern is a binary value indicating whether an edge exists in the corresponding block.

- [Step 3] The scanning line is slid in the horizontal direction of the rectangle. The line with the maximum change in pattern between itself and its neighboring line is detected as the splitting line. The Hamming distance is used as the measure of the amount of change.

- [Step 4] If a splitting line exists and its amount of change exceeds a threshold, the object IDs on the Object-map are changed according to the splitting line. Otherwise, it is judged that only one vehicle (such as a heavy vehicle) exists in the rectangle extracted by the S-T MRF model, and the Object-map is not changed.

- [Step 5] In the next frame, the S-T MRF model revises the Object-map based on the result of Step 4.

[End of the algorithm]
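Steps 1 to 4 can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `find_splitting_line`, the layout of the block-level edge map (rows by columns of blocks, `True` where a block contains an edge), and the threshold value are all assumptions made for the example. The relabeling of object IDs on the Object-map is left out.

```python
import numpy as np

def find_splitting_line(edge_blocks, threshold):
    """Return the index c of the boundary between block columns c and c+1
    with the largest Hamming distance, or None if no boundary exceeds
    the threshold (hypothetical helper, not from the paper).

    edge_blocks: 2-D boolean array, one entry per block of the rectangle.
    """
    edge_blocks = np.asarray(edge_blocks, dtype=bool)
    if edge_blocks.ndim != 2 or edge_blocks.shape[1] < 2:
        return None  # nothing to compare
    # Steps 1-2: each column is the binary pattern on one vertical scanning line.
    # Step 3: Hamming distance between every column and its right neighbour.
    distances = np.sum(edge_blocks[:, :-1] != edge_blocks[:, 1:], axis=0)
    best = int(np.argmax(distances))
    # Step 4: accept the split only if the change is large enough.
    if distances[best] > threshold:
        return best
    return None

# Two synthetic vehicles with different horizontal edge patterns side by side.
left = np.array([[1], [0], [1], [0]], dtype=bool).repeat(3, axis=1)
right = np.array([[0], [1], [0], [1]], dtype=bool).repeat(3, axis=1)
two_vehicles = np.hstack([left, right])
split = find_splitting_line(two_vehicles, threshold=2)
```

In this synthetic case the largest change lies between the third and fourth block columns, so `split` is 2. A rectangle with a uniform edge pattern yields `None`, which corresponds to the single-vehicle branch of Step 4.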

In this paper, our original illumination-invariant preprocessing method was applied to the original images to cancel the effect of headlights, as in Fig. 3b, c and d and in the tracking results in the following sections. The existence of an edge is examined block by block, using the blocks defined in the S-T MRF model, in order to reduce the computation time and maintain the robustness of the gap-finding process.

It is notable that once the S-T MRF model has been given the segmentation result from the Morphology Layer algorithm, it is able to segment the vehicles in the following image frames. Generally, a Morphology Layer algorithm cannot perform segmentation and tracking so robustly by itself. Since the S-T MRF model accepts any algorithm in the Morphology Layer, the interlayer feedback strategy is promising for application to various kinds of objects.

4 Occlusion Robust Estimation of Vehicle Trajectories

For incident detection in the tunnel, the slowdown and lane-changing behaviors of vehicles must be evaluated. Spatio-temporal estimation of trajectories enables the evaluation of vehicle behaviors and scene understanding such as incident detection.

In the images of low-angle cameras in the tunnel, the majority of vehicles are not occluded when they come into view beneath the camera. Therefore, the grounding position of the vehicle tail can be estimated from the vehicle segmentation result at the moment the vehicle travels beneath the camera. However, as the vehicles travel downstream in the camera view, the tail of a preceding vehicle is often occluded by the following vehicle, which causes the estimation of the grounding position of the preceding vehicle to fail. This section describes a dedicated method we developed to estimate the grounding position of the vehicle tail.

The cameras were originally installed at a height of about 2.5 m in the tunnel with their optical axes horizontal, and we used them in these original settings. In Fig. 5, \( y \) represents the vertical image coordinate measured from the vanishing point, and it must be transformed into real-world coordinates to estimate the vehicle velocity in the real world. When the tail of the preceding vehicle is occluded by the following vehicle, \( y_{grnd} \) of the preceding vehicle cannot be observed directly. Instead, \( y_{grnd} \) can be estimated from \( y_{top} \) and \( h_{app}(y_{grnd}) \), where \( y_{top} \) represents the position of the vehicle roof in image coordinates, and \( h_{app}(y_{grnd}) \) represents the apparent height of the vehicle in image coordinates when the vehicle is at the grounding position \( y_{grnd} \). Since \( y_{grnd} \) is estimated from \( y_{grnd} \) itself in Eq. 1, a feedback optimization process must be employed for the estimation.

The problem now is to estimate \( h_{app}(y_{grnd}) \). In Eq. 2, \( h_{true}(y_{grnd}) \) represents the true height of the vehicle in image coordinates, and \( h_{app}(y_{grnd}) \) represents the apparent height of the vehicle, which consists of the roof length and the true height of the vehicle as shown in Fig. 6. Figure 6 sketches a side view of the road infrastructure, the camera setting, and the vehicle. As Fig. 6 shows, the correction from \( h_{app}(y_{grnd}) \) to \( h_{true}(y_{grnd}) \) is made using the correction factor \( \Omega(y_{grnd}) \), as represented in Eq. 2.

By definition, \( \Omega(w) \) in Eq. 3 is a function of \( w \), the position in real-world coordinates. Since \( \Omega(y_{grnd}) \), a function of the position \( y_{grnd} \) in image coordinates, is used for the transformation from \( h_{app}(y_{grnd}) \) to \( h_{true}(y_{grnd}) \), calibration between \( w \) and \( y_{grnd} \) is required. In this paper, we used cameras that are already in practical operation by officers. Therefore, the geometrical conditions of the camera setup are not rigid, and it is difficult to calibrate \( w \) against \( y_{grnd} \) from those conditions. In addition, since the image resolution declines steeply with depth toward the vanishing point, it is difficult to perform the calibration accurately. Consequently, we decided to calibrate \( \Omega(y_{grnd}) \) directly from the traffic images, as described in the experimental results in Section 6.1.

Equation 4 assumes that the true height of the vehicle in image coordinates is proportional to the distance from the vanishing point in image coordinates. Consequently, Eqs. 5 and 6 are obtained from Eqs. 1 to 4. As in Eq. 2, \( y_{grnd} \) is estimated from \( y_{grnd} \) itself in Eq. 6, so a feedback optimization process is necessary. The remainder of this section describes the procedure for estimating \( y_{grnd} \) of a preceding vehicle occluded by the following vehicle.
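The proportionality assumed in Eq. 4 can be motivated by a short pinhole-camera argument. The symbols below are introduced only for this illustration and are assumptions, not notation from the paper: \( f \) is the focal length in pixels, \( H \) the camera height above the road, \( h \) the real height of the vehicle's rear end, \( Z \) the distance from the camera to the vehicle tail along the horizontal optical axis, and \( y_{rear} \) the image position of the top of the rear end. With the image coordinate \( y \) measured downward from the vanishing point:

\[
y_{grnd} = \frac{fH}{Z}, \qquad
y_{rear} = \frac{f(H-h)}{Z}, \qquad
h_{true} = y_{grnd} - y_{rear} = \frac{fh}{Z} = \frac{h}{H}\, y_{grnd}.
\]

Under these assumptions the true height in the image is proportional to \( y_{grnd} \), with a constant \( h/H \) that depends only on the vehicle height and the camera height.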

In the first step, \( h_{app}(y_{grnd}) \) of a vehicle is measured from the image, and the vehicle is classified into one of two categories: large vehicles such as trucks, or small vehicles such as passenger cars. Vehicle regions are obtained from the segmentation results of the S-T MRF model, and most vehicles are not occluded in the entrance area of the camera view. When the vehicle belongs to the large vehicle category, \( \Omega(y_{grnd}) = 1 \) is assumed. Since large vehicles such as trucks are taller than 2.5 m, the height of the camera position, their roofs do not appear in the image. When the vehicle belongs to the small vehicle category, \( \Omega(y_{grnd}) \) is obtained from the calibration results.

In the second step, \( y_{top} \) of the preceding vehicle is obtained by extracting the edge at the top of the vehicle region. At this moment, the preceding vehicle and the following vehicle are segmented by the S-T MRF model despite the occlusion, and the top edge of the preceding vehicle appears in the image while the bottom edge is occluded. Finally, \( y_{grnd} \) is obtained from Eq. 6 by substituting the value of \( y_{top} \). However, the right side of Eq. 6 contains \( y_{grnd} \) as a variable. Therefore, an iterative procedure is performed in which \( y_{grnd} \) on the left side is substituted into \( y_{grnd} \) on the right side of Eq. 6 for the next loop of the iteration. In the first loop, the value of \( y_{top} \) is substituted into \( y_{grnd} \) on the right side. In our experiments, a single loop of the iteration was sufficient to obtain a precise value of \( y_{grnd} \).
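The iteration described above can be sketched as a fixed-point loop. Eqs. 1 to 6 are not reproduced in this text, so the update rule below is only one form consistent with the description, and the paper's own Eq. 6 may differ. The sketch assumes \( h_{app} = \Omega \cdot h_{true} \), \( h_{true} = \alpha \cdot y_{grnd} \), and \( y_{grnd} = y_{top} + h_{app} \), which gives \( y_{grnd} = y_{top} / (1 - \alpha\,\Omega(y_{grnd})) \). The constant `alpha`, the callable `omega`, and the function name are hypothetical.

```python
def estimate_y_grnd(y_top, omega, alpha, iterations=1):
    """Estimate the grounding position of an occluded vehicle tail.

    Assumed model (not taken verbatim from the paper):
        y_grnd = y_top / (1 - alpha * omega(y_grnd))
    y_top      : image position of the vehicle roof, measured from the vanishing point
    omega      : callable giving the calibrated correction factor at a position
    alpha      : hypothetical proportionality constant of Eq. 4
    iterations : number of feedback loops; the text reports one as sufficient
    """
    y_grnd = y_top  # first loop: substitute y_top on the right-hand side
    for _ in range(iterations):
        denominator = 1.0 - alpha * omega(y_grnd)
        if denominator <= 0.0:
            raise ValueError("alpha * omega must stay below 1 for this model")
        y_grnd = y_top / denominator
    return y_grnd

# Large vehicle: the text sets the correction factor to 1.
large_vehicle = estimate_y_grnd(y_top=100.0, omega=lambda y: 1.0, alpha=0.5)
```

With a constant correction factor the right-hand side no longer depends on \( y_{grnd} \), so one loop already returns the fixed point (200.0 in this example). When \( \Omega \) varies slowly with position, further loops change the estimate only slightly, which is consistent with the observation that a single loop suffices.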

The trajectory of each vehicle is obtained by sequencing its grounding points in image coordinates, and the trajectory makes it possible to estimate the vehicle velocity. The velocity estimated in this way must then be transformed into a velocity in real-world coordinates. Usually, the transformation parameters are calibrated by solving for the camera parameters and setting conditions. However, since the cameras used in this paper are already in practical operation beside heavy traffic, such calibration is quite difficult. Therefore, we adopted a more practical calibration method. Ultrasonic wave sensors are installed at the roadside in our experimental field. On the expressway studied in this paper, the ultrasonic wave sensors are specified to measure velocity with an error of less than 5% of the true value. The calibration between image coordinates and real-world coordinates was performed so that the average velocities from the image sensors are consistent with those from the ultrasonic wave sensors.

5 Implementation of Semantic Layer for Incident Detection

Generally, it is quite difficult to detect the moment of a collision from an image. Therefore, algorithms that detect abnormally slowing vehicles and stalled vehicles have been developed to detect incidents in which vehicles are stalled because of accidents or their own troubles. Slowing and stalled vehicles are easily detected in sparse traffic. However, they are difficult to detect in congested or jammed traffic, because the majority of vehicles move at similar velocities. In this paper, we designed semantic layer algorithms that classify the behavior of each vehicle and detect incidents by analyzing the behaviors of interacting vehicles.

5.1 Behavior-class Functions

The method in the previous section yields the spatio-temporal trajectory of each vehicle. By classifying the trajectories, vehicle behaviors can be determined as slowdown, stalling, or lane changing. The following are examples of functions representing vehicle behaviors such as slowdown, stalling, changing lanes, and vacant space ahead of the vehicle.

- typeOfSpTrajectory(V.i, V.j, t): Returns 'Changing lanes' when vehicle V.i changes its lane to avoid vehicle V.j. Otherwise, returns 'Ordinary'. 'Changing lanes' covers six types of lane change: 'left-to-right', 'right-to-left', 'center-to-left', 'left-to-center', 'center-to-right', and 'right-to-center'. The six types of 'Changing lanes' are discriminated by evaluating the similarity of a vehicle trajectory to the clustered trajectories of each type.

- \( typeOfSpTrajectory\left( \mathrm{V}.i,\, \mathrm{L}_m^k,\, t \right) \): Returns 'Changing lanes' when vehicle V.i changes its lane to avoid the m-th discretized location along the k-th lane. Otherwise, returns 'Ordinary'.

- typeOfTpTrajectory(V.i, t): Returns 'True' when vehicle V.i is stalled at time t, and 'False' otherwise. The threshold between stalled and not stalled is determined by statistical analysis of the vehicle behaviors.

- isSpace(V.i, t): Returns 'True' when there is vacant space ahead of vehicle V.i along its traveling lane at time t, and 'False' otherwise. Note that when there is no traffic flow in any lane, this function returns 'False'.

5.2 Event-class Functions

Event-class functions combine behavior functions to determine the types of events. The logic used for incident detection is as follows.

- Logic 1: If the average velocity of the vehicles is less than \( TH_{ave\_vel} \), the traffic is determined to be congested; otherwise, it is determined to be free flow.

- Logic 2: If a vehicle is stalled in a prohibited area for longer than \( TH_{stall\_time} \), the scene is determined to be an incident.

- Logic 3: If vacant space appears ahead of a stalled vehicle while the traffic is free flow, the scene is determined to be an incident.

- Logic 4: If lane-changing behaviors around a stalled vehicle occur more than \( TH_{lchange} \) times, the scene is determined to be an incident.
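The four logics can be sketched as a small rule set in Python. The threshold values are those quoted in this section (20 km/h, 10 s, 3 lane changes). Everything else is an assumption for illustration: the `SceneState` container, its field names, and the choice to combine Logics 2 to 4 with a simple "any rule fires" policy, which the text does not specify.

```python
from dataclasses import dataclass

# Threshold values quoted in the text.
TH_AVE_VEL = 20.0     # km/h
TH_STALL_TIME = 10.0  # seconds
TH_LCHANGE = 3        # lane changes around the stalled vehicle

@dataclass
class SceneState:
    """Hypothetical summary of Behavior Layer outputs for one scene."""
    average_velocity: float               # km/h
    stalled_vehicle_present: bool
    stall_time_in_prohibited_area: float  # seconds, 0 if none
    space_ahead_of_stalled_vehicle: bool
    lane_changes_around_stalled_vehicle: int

def traffic_state(scene: SceneState) -> str:
    # Logic 1: congested below the average-velocity threshold.
    return "congested" if scene.average_velocity < TH_AVE_VEL else "free flow"

def is_incident(scene: SceneState) -> bool:
    # Logic 2: long stall in a prohibited area.
    if scene.stall_time_in_prohibited_area > TH_STALL_TIME:
        return True
    if not scene.stalled_vehicle_present:
        return False
    # Logic 3: vacant space ahead of a stalled vehicle in free flow.
    if scene.space_ahead_of_stalled_vehicle and traffic_state(scene) == "free flow":
        return True
    # Logic 4: frequent lane changes around the stalled vehicle.
    return scene.lane_changes_around_stalled_vehicle > TH_LCHANGE

congestion_only = SceneState(15.0, True, 0.0, False, 1)
incident_in_congestion = SceneState(15.0, True, 0.0, False, 4)
```

Here `is_incident(congestion_only)` is `False`, while `is_incident(incident_in_congestion)` is `True`, because only the second scene shows frequent lane changes around the stalled vehicle.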

The above algorithms for incident detection were developed and shown to be successful at a relatively high camera angle on an open-air highway [26]. In this paper, they are applied to the tracking results obtained by applying the interlayer feedback algorithm to low-angle images in the tunnel. Following [26], we adopted \( TH_{stall\_time} = 10 \) s and \( TH_{lchange} = 3 \) in the following experiments, and \( TH_{ave\_vel} = 20 \) km/h was defined according to the operational rules of the highway.

6 Experimental Results

Experiments were performed using video images recorded by cameras at three different locations inside the tunnel. The three locations cover straight, separating, and merging traffic. This section describes the experimental results for vehicle segmentation and incident detection.

6.1 Calibration of \( \Omega(y_{grnd}) \)

Figure 7 shows the calibration results for \( \Omega(y_{grnd}) \). The plots were obtained by measuring the ratio between the true height and the apparent height of vehicles directly from the images. None of the vehicles used for the calibration was occluded by a following vehicle, so that \( y_{grnd} \) could be obtained directly from the images. Each point corresponds to one measured vehicle. The horizontal axis represents \( y_{grnd} \) of the vehicle, and the vertical axis represents \( \Omega(y_{grnd}) \). The spread of \( \Omega(y_{grnd}) \) is due to variations in vehicle type and in the shapes of the rear and top of the vehicles. Consequently, \( \Omega(y_{grnd}) \) was estimated as an average over the vehicles. In Fig. 7, a smaller \( y_{grnd} \) means a position closer to the vanishing point, and a smaller \( \Omega(y_{grnd}) \) means that the true height of the vehicle is closer to its apparent height.

6.2 Segmentation and Trajectory Estimation

Figure 8a and b show an example of segmentation results by the S-T MRF model and by the interlayer feedback algorithm. The S-T MRF model failed to segment vehicles that traveled at similar velocities and remained occluded all the way through the view, as shown in Fig. 8a. The interlayer feedback algorithm succeeded in segmenting the two vehicles, and their trajectories were obtained correctly, as shown in Fig. 8b.

Fig. 8 Segmentation results and trajectory estimations by the interlayer feedback process. (a) By the S-T MRF model alone. (b) By the interlayer feedback algorithm. Bottom of the vehicle in the right lane is not occluded. (c) Vehicle ID75 entered the view. (d) Vehicle ID75 was occluded by Vehicle ID76. Bottom of the vehicle in the right lane is occluded

Segmentation performance was evaluated using 70-min video recorded by the three cameras in the tunnel. In total, 8,563 vehicles passed through the views of the three cameras. As a result, 6,577 segments were obtained by the S-T MRF model, and 8,864 segments were obtained by the interlayer feedback algorithm. Of the 8,864 segments obtained by the interlayer feedback algorithm, 7,856 correctly contained a single vehicle, 107 contained multiple vehicles, and 901 were excessively divided. Consequently, as a rough description, about 77% of the vehicle segmentation under heavy occlusion comes from the S-T MRF model (6,577 segments for 8,563 vehicles) and about 27% from the interlayer feedback (the additional 2,287 segments). The two shares sum to slightly more than 100% because the number of segments exceeds the number of vehicles.
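The rough shares quoted above can be checked with a few lines of arithmetic. The sketch takes the interlayer total as 8,864 segments, the value that matches the breakdown 7,856 + 107 + 901, and treats each share as a number of segments divided by the number of vehicles. That reading of "contribution" is an assumption, and the function name is hypothetical.

```python
def segmentation_shares():
    """Recompute the rough contribution shares from the reported counts."""
    vehicles = 8563             # vehicles that passed the three camera views
    st_mrf_segments = 6577      # segments from the S-T MRF model alone
    correct, merged, over_divided = 7856, 107, 901
    interlayer_segments = correct + merged + over_divided  # 8864

    # Share attributed to the S-T MRF model alone.
    st_mrf_share = st_mrf_segments / vehicles
    # Share attributed to the additional segments from interlayer feedback.
    feedback_share = (interlayer_segments - st_mrf_segments) / vehicles
    return interlayer_segments, st_mrf_share, feedback_share

total, st_mrf_share, feedback_share = segmentation_shares()
print(f"{total} segments: {st_mrf_share:.0%} S-T MRF, {feedback_share:.0%} feedback")
```

Rounded to whole percentages, the two shares are 77% and 27%, matching the figures in the text.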

Figure 8c and d show an example of trajectory estimation for a preceding vehicle occluded by a following vehicle. Although the preceding vehicle ID75 in the right lane was occluded by the following vehicle ID76 in the left lane, the trajectory of vehicle ID75 was estimated precisely. Usually, the grounding position of the vehicle tail appears inside the bounding box of the vehicle segment. However, the grounding position must lie outside the bounding box when the bottom part of the vehicle is occluded by the following vehicle. In Fig. 8d, each line trailing from the bottom of a vehicle connects its grounding positions \( y_{grnd} \) over the past five image frames. The latest grounding point of vehicle ID75 lies outside its bounding box. Since the bottom part of vehicle ID75 was occluded by vehicle ID76, this is the expected result.

6.3 Incident Detection Results

Figure 9 shows a result of stalled vehicle detection. Figure 9a shows the scene soon after the vehicle stalled at the roadside. The algorithm determined that the vehicle was stalled in the prohibited area over the emergency lane. After the stalling duration exceeded the threshold, the state of the algorithm turned to ‘incident’, as in Fig. 9b. Even where there is no emergency lane, the algorithm can conclude that the scene is an ‘incident’ by detecting a stalled vehicle and passing vehicles in the same lane, as in Fig. 10a and b.

Figure 10 shows image sequences of the incident detection process by the S-T MRF model alone and by the interlayer feedback algorithm. The incident could not be detected by the S-T MRF model alone, since the vehicles could not be segmented because of heavy occlusion, as shown in Fig. 10a. However, the incident was detected successfully by the interlayer feedback algorithm with edge pattern classification, since the vehicles were segmented precisely despite heavy occlusion, as shown in Fig. 10b. In this case, the algorithm detected the incident by detecting frequent lane-changing behaviors around a stalled vehicle. Such behavior analysis in congested traffic was enabled by vehicle segmentation using the interlayer algorithm and by trajectory estimation under occlusion.

The experimental results are summarized in Table 1 in terms of the success rates of incident detection. For the experiments, 32 incidents were extracted from the 6-month video streams of the cameras. Videos of 16 incidents were used as learning data to determine the thresholds for detecting slowdowns, stalling, and frequent lane changing at the same location to avoid the incident vehicles. Videos of the other 16 incidents were used as test data to examine the performance of the algorithm. The interlayer feedback algorithm improved the success rates of incident detection to 88% for the test data and 91% for the learning and test data in total, whereas the rates were 44% and 53%, respectively, with the S-T MRF model alone.

7 Conclusions

In this paper, an algorithm for incident detection in tunnels was developed. We developed the interlayer algorithm, which combines the S-T MRF model with an algorithm in the Morphology Layer, in order to resolve heavy occlusion in heavy traffic viewed at low camera angles. The algorithm was shown to be effective for segmenting vehicles in heavily occluded images through examinations using such images. Consequently, the algorithm could obtain precise vehicle trajectories, and it contributed to incident detection in congested tunnel traffic.

Since incidents in tunnels may cause secondary accidents or fires that can result in fatal disasters, it is important to detect them as soon as possible. Such disasters would be especially serious in the heavy traffic of urban tunnels. The technology in this paper is expected to enhance the safety of tunnel traffic and the efficiency of tunnel operations.

References

Kollnig, H., Nagel, H.: 3D pose estimation by directly matching polyhedral models to gray value gradients. IJCV 23(3), 283–302 (1997)

Peterfreund, N.: Robust tracking of position and velocity with Kalman Snakes. IEEE Trans. Pattern Analysis and Machine Intelligence (PAMI), vol. 21, no. 6, pp. 564–569, 1999

Kass, M., Witkin, A., Terzopoulos, D.: Snakes: active contour models. Int. J. Comput. Vis. 1, 321–331 (1988)

Smith, S.M., Brady, J.M.: ASSET-2: real-time motion segmentation and shape tracking. IEEE Trans. Pattern Analysis and Machine Intelligence (PAMI), vol. 17, no. 8, pp. 814–820, 1995

Stauffer, C., Grimson, W.E.L.: Adaptive background mixture models for real-time tracking. Proc. of CVPR, 1999, vol. II, pp. 246–252, Jun 1999

Leuck, H., Nagel, H.: Automatic differentiation facilitates OF-integration into steering-angle-based road vehicle tracking. IEEE CVPR’99, pp. 360–365

Gardner, W.F., Lawton, D.T.: Interactive model-based vehicle tracking. IEEE Trans. PAMI, vol. 18, no. 11, pp. 1115–1121, 1996

Weber, K., Malik, J.: Robust multiple car tracking with occlusion reasoning. Proc. of ECCV’94, Vol. I, Lecture Notes in Computer Science 800, pp. 189–196. Springer-Verlag

Hsieh, J.-W., Yu, S.-H., Chen, Y.-S., Hu, W.-F.: Automatic traffic surveillance system for vehicle tracking and classification. IEEE Transactions On Intelligent Transportation Systems, vol. 7, no. 2, pp. 175–187, June 2006

Kanhere, N.K., Birchfield, S.T.: Real-time incremental segmentation and tracking of vehicles at low camera angles using stable features. IEEE Transactions On Intelligent Transportation Systems, vol. 9, no. 1, pp. 148–160, March 2008

Wang, C.-C.R., Lien, J.-J.J.: Automatic vehicle detection using local features - a statistical approach. IEEE Transactions On Intelligent Transportation Systems, vol. 9, no. 1, pp. 83–96, March 2008

Zhang, W., Wu, Q.M.J., Yang, X., Fang, X.: Multilevel framework to detect and handle vehicle occlusion. IEEE Transactions on Intelligent Transportation Systems, vol. 9, no. 1, pp. 161–174, March 2008

Ambai, M., Ozawa, S.: Vision-based traffic monitoring system for various traffic situations using monocular camera. 13th World Congress on ITS, London, October 2006

Cucchiara, R., Piccardi, M., Mello, P.: Image analysis and rule-based reasoning for a traffic monitoring system. IEEE/IEEJ/JSAI ITS conf., Tokyo, pp. 758–763, Oct. 1999

Jung, Y., Lee, K., Ho, Y.: Content-based event retrieval using semantic scene interpretation for automated traffic surveillance. IEEE Trans. on ITS, Sep. 2001

Zhang, K., Taylor, M.: A new method for incident detection on urban arterial roads. 11th World Congress on ITS, Nagoya, Oct 2004

Srinivasan, D., Jin, X., Cheu, R.L.: Evaluation of adaptive neural network models for freeway incident detection. IEEE Trans. ITS, vol. 5, no. 1, March 2004

Byun, S.C., Choi, D.B., Ahn, B.H., Ko, H.: Traffic incident detection using evidential reasoning based data fusion. Proceedings of ITS 1999, 6th World Congress on ITS

Thomas, T., van Berkum, E.C.: Detection of incidents and events in urban networks. IET Intell. Transp. Syst. 3(2), 198–205 (2009)

Zheng, P., McDonald, M., Jeffery, D.: Event detection based on loop and journey time data. IET Intell. Transp. Syst. 2(2), 113–119 (2008)

Shehata, M.S., Cai, J., Badawy, W.M., Burr, T.W., Pervez, M.S., Johannesson, R.J., Radmanesh, A.: Video-based automatic incident detection for smart roads: the outdoor environmental challenges regarding false alarms. IEEE Transactions on Intelligent Transportation Systems, vol. 9, no. 2, pp. 349–359, June 2008

Versavel, J.: Improving road and tunnel safety via incident management: implementing a video image processing system. 16th World Congress on ITS, Stockholm, September 2009

Austin, M., Brock, M., Carter, E., Metcalfe, F., Orme, L.: Implementation and evaluation of an adaptive CCTV display system for incident detection for the highways agency. 16th World Congress on ITS, Stockholm, September 2009

Kamijo, S., Matsushita, Y., Ikeuchi, K., Sakauchi, M.: Traffic monitoring and accident detection at intersections. IEEE trans. ITS, vol. 1, no. 2, pp. 108–118, June 2000

Kamijo, S., Nishida, T., Sakauchi, M.: Occlusion robust and illumination invariant vehicle tracking for acquiring detailed statistics from traffic images. To appear in IEICE Transactions on Information and Systems, vol. E85-D, no. 11, 2002

Kamijo, S., Harada, M., Sakauchi, M.: An incident detection system based on semantic hierarchy. Proceedings of ITSC 2004, pp. 853–858, Oct. 2004

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Kamijo, S., Fujimura, K. Incident Detection in Heavy Traffics in Tunnels by the Interlayer Feedback Algorithm. Int. J. ITS Res. 8, 121–130 (2010). https://doi.org/10.1007/s13177-010-0018-5


DOI: https://doi.org/10.1007/s13177-010-0018-5