Abstract

This work is aimed at illustrating the strict relationship between a general definition of concentration function appeared quite some time ago on this journal and a widely used measure of the diagnostic strength of a family of binary classifiers indexed by a threshold parameter, the so-called ROC curve. The ROC curve is a common work tool in Statistics, Machine Learning and Artificial Intelligence, appearing in many applications where a binary classification (diagnosis) procedure is of interest. Hence, it is worth remarking that diagnostic strength and concentration are two sides of the same coin: the higher the concentration of one probability measure with respect to another, the higher the diagnostic strength of the likelihood ratio classification rule.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

More than a hundred years ago Corrado Gini started his elaboration on the notion of concentration, with particular application to transferable characteristics such as wealth. The products of his work, e.g. the Gini mean difference, the Gini concentration coefficient and the Lorenz-Gini concentration curve, are part of the toolbox of any data analyst.

More recently, on this journal, Cifarelli and Regazzini (1987) extended the notion of concentration to become a relationship between two probability measures, rather than a one-dimensional concept, giving at the same time solid measure-theoretical justifications.

Parallely, in a variety of literature scattered across many disciplines such as Signal Processing, Medical Diagnosis and Artifical Intelligence, the ROC (Receiver Operating Characteristic) curve was developed as a tool to measure the diagnostic strength of a family of classification rules indexed by some threshold parameter. The importance of the ROC curve in applied work can not be overstated, since it commonly appears in all applications where a binary classification (or diagnosis) procedure is of interest. See the recent textbooks by Krzanowski and Hand (2009), Pepe (2003), and Zou et al. (2011). It was soon realized in the earlier literature on ROC (see e.g. Egan 1975), that, an optimal decision rule for binary classification exists, as long as the two probability measures compared are completely specified: such optimal rule is based on the likelihood ratio (LR from now on), as proven by the Neyman-Pearson lemma, another milestone in the development of Statistics in the last century.

Remarkably, the ROC curve of the LR classifier can also be viewed as an application of the notion of concentration: the higher the concentration of one probability measure with respect to another, the higher the diagnostic strength of the optimal decision rule. The aim of this work is to illustrate this relationship, clarifying the theoretical and interpretational advantages given by a model based approach.

2 The ROC Curve of the Likelihood Ratio Based Classification Rule

From a mathematical point of view we can describe the binary classification problem as a competition between two populations, represented as probability measures. An object, on which one or more random variables are observed, is to be assigned to one of the two populations, based on some classification rule. We focus here on the situation where the two probability measures are completely known, hence avoiding the statistical problems of estimation or, as they say in the Machine Learning literature, learning.

Assume therefore the two alternative probability measures P+ and P− are absolutely continuous with respect to one another and have densities f+ and f−, respectively, with respect to a common dominating measure. Without loss of generality, f− can be taken to be positive, so that the Likelihood Ratio (LR)

is a well defined non negative random variable and, as such, has distribution functions under P− and P+, which are denoted by H− and H+ respectively. More precisely, for each \(\ell \in \mathcal {R}\):

and

Next, define the quantile function associated with H− in the usual way as follows:

and recall that, for any real number ℓ, qt ≤ ℓ if and only if H−(ℓ) ≥ t. For any given value t ∈ (0,1), it may or may not happen that t = H−(qt), depending on whether t does not correspond or does correspond to a jump of H−. More specifically, if t≠H−(qt), then \(H_{-}(q_{t}^{-}) \leq t < H_{-}(q_{t})\), where the notation − indicates left limits (nothing to do with P−), a particularly relevant occurrence for the discussion below. H− and H+ may have jumps, even though P− and P+ are absolutely continuous laws on the real line. This happens, for example, if P+ and P− have piecewise constant densities as provided by an example in Section 4. In this paper we focus on the following definition of LR based classification rule:

DEFINITION 1 (LR based classification rule)

Given two alternative probability laws P− or P+ mutually absolutely continuous with densities f− and f+ respectively, define the likelihood ratio L = f+/f−, its respective distribution functions H− and H+ and the following classification rule. For any given 0 < t < 1:

-

if L > qt, declare positive;

-

if L < qt, declare negative;

-

if L = qt, then perform an auxiliary independent randomization and declare positive with probability

$$ r(t) = \frac{H_{-}(q_{t})-t}{H_{-}(q_{t}) - H_{-}(q_{t}^{-})} $$and negative otherwise.

The LR based classification rule is optimal because it is nothing else than the Neyman-Pearson lemma, enriched with the possibility of randomization (as in Lehmann (1986), for example).

In the classification literature, it is generally recognized that the LR based classification rule is optimal, although for reasons related to the statistical estimation of P− and P+ in the presence of data and the computational problems with highly dimensional observations, other kinds of classification rules are often considered. As mentioned above, we focus here on the no-data situation and assume P− and P+ are known.

Whatever the classification rule, it is typically indexed by a real-valued threshold parameter \(t \in \mathcal {R}\), like the LR based rule is. By varying t, the associated ROC is generated: it is defined as the parametric two-dimensional locus

where the false positive rate FPR is the probability the classification rule assigns the object to population P+ given the object comes from population P− and the true positive rate TPR is the probability the classification rule assigns the object to population P+ given the object comes from population P+. A variety of other names exist, in particular sensitivity for the TPR and specificity for 1-FPR.

Theorem 1.

The ROC function of the classification rule of Definition 1 is

As usual, we can complete the result by setting ROC(0) = 0 and ROC(1) = 1.

Proof

First of all, the FPR and the TPR are computed separately.

Notice that if t = H−(qt) then \(H_{-}(q_{t}^{-})- H_{-}(q_{t})=0\); in other words the expression simplifies for points which are not H−-atoms.

since, P+ and P− being mutually absolutely continuous, they will both have or not have an atom in qt and their LR in qt will be exactly \((H_{+}(q_{t}) - H_{+}(q_{t}^{-}))/(H_{-}(q_{t}) - H_{-}(q_{t}^{-}))\), i.e. qt itself. Next, set FPR = x, i.e. t = 1 − x, to eliminate the parameter t and obtain the explicit form of the ROC curve:

□

Expression (3) may seem cumbersome when compared to simpler expressions for special cases contained in popular textbooks, but one should consider that is the ROC curve of the optimal LR based classification rule, covering discrete, continuous and mixed cases. Still greater generality could be achieved by considering the case where P− and P+ are not absolutely continuous with respect to one another, in which case we would have ROC curves starting at (0, y0), with y0 > 0, or ending at (x0,1), with x0 < 1, although that is usually of little interest.

3 Relationship with a General Concentration Function

Another important reason justifying the generality of expression (3) is that it is strictly related to a definition of concentration function given on this journal by Cifarelli and Regazzini (1987). Such definition was given with the aim of extending the classical concepts of concentration developed by Gini at the beginning of the XX-th century and was further expanded by Regazzini (1992).

It is recalled here for the case in which P+ and P− are mutually absolutely continuous (see the discussion at the end of last section):

DEFINITION 2 (Concentration function by Regazzini and Cifarelli)

Let P+ and P− be mutually absolutely continuous probability measures, let f+ and f− be their respective derivatives with respect to a common dominating measure μ, let their LR be defined as the real-valued random variable L = f+/f−, let H− be its distribution function under P− and let qx be its quantile function. Then Cifarelli and Regazzini (1987) define the concentration function of P+ with respect to P− as φ(0) = 0, φ(1) = 1 and

The easy connection between this definition and the ROC curve of the LR based classification rule of the previous section is established in the next Theorem.

Theorem 2.

Under the hypotheses described in Definition 1,

where φ(⋅) is the concentration function of P+ with respect to P−.

Proof

The equivalent relationship

can be verified directly for x = 0,1 and as follows for 0 < x < 1:

□

Corollary 1.

Under the assumptions described in Definition 1, ROC(⋅) is a nondecreasing, continuous and concave function on [0,1]. In particular, ROC(⋅) is proper.

Proof

This is a consequence of Theorem 2.3 in Cifarelli and Regazzini (1987). In particular, φ(x) is always convex over its domain, i.e. ∀x1, x2 and ν ∈ [0, 1], φ(νx1 + (1 − ν)x2) ≤ νφ(x1) + (1 − ν)φ(x2). By Theorem 2:

The left hand side of the previous equality becomes:

while the right hand side can be rewritten as:

Therefore:

where t1 = 1 − x1, t2 = 1 − x2. □

It is not the first time a relationship between ROC curves and Lorenz-Gini concentration curves is noticed (see for example (Lee, 1999; Schechtman and Schechtman, 2019) and the critical appraisal (Gasparini and Sacchetto, 2020)), but Definitions 1 and 2 allow for great generality and clarify many misunderstandings present in the literature. In particular, we emphasize that, unless the classification rule is based on the LR or on a monotone transformation of it, it can not sensibly be related to a concentration curve.

Properness of ROC curves has been discussed since the early literature, since there are cases where classification rules which are not based on the LR are used and may give rise to non-concave ROC functions. The binormal heteroschedastic case is a notable well-known example. Suboptimal rules are often used since the LR rule may not be easily calculated or not even estimated, but the fact that the LR based classification rule gives automatically a proper ROC curve is an important foundational result.

At the same foundational level, it finally appears clear that the diagnostic strength of the LR based classification rule is equivalent to the mutual concentration of the two competing probability laws: the more P+ is concentrated with respect to P− the higher the diagnostic strength of the LR based classification rule, since one can tell the two probability laws better apart.

4 Examples

This section contains some examples. The first one is a well known case in which both P+ and P− are discrete, since they are given by the empirical frequencies of an ordinal diagnosis. The usual practice of connecting the dots obtained at the thresholds in order to draw a concave ROC curve is fully justified by Definition 1. The second example is instead based on an observable variable which is absolutely continuous both under P+ and under P− and has nonetheless LR distributions with discrete components, resulting in a mixed ROC curve (partially linear and partially curvilinear). The third and fourth examples are multivariate and they are based on the normality assumption, leading to a rediscovery of Fisher’s discriminant functions.

4.1 Example 1: Ordinal Diagnosis

The following example is taken from the Encyclopedia of Biostatistics (Colton and Armitage, 2005). Suppose 109 patients have been classified as diseased (P+) or not diseased (P−), based on a gold standard such as biopsy or autopsy. On the basis of radiological exams, they have also been classified over five ordinal levels

Here are the results:

– – | – | +– | + | ++ | total | |

P− | 33 | 6 | 6 | 11 | 2 | 58 |

P+ | 3 | 2 | 2 | 11 | 33 | 51 |

In particular, P+ and P− are two empirical measures, relative to the diseased and not diseased population respectively, derived from data. There are four possible values for the LR:

which give rise to four empirical ROC points { (25/58, 48/51); (19/58, 46/51); (13/58, 44/51); (2/58, 33/51)}, shown in Fig. 1. Now, thanks to the randomization device, it is possible to ... connect the dots! This is so since the distribution functions of L under P− and P+ are

and

Therefore, the ROC curve can be calculated using Eq. 3:

The continuous ROC curve interpolates the empirical ROC points, as shown in Fig. 1.

4.2 Example 2: Absolutely Continuous Measures with Discrete/continuous LR

Let P− be uniform between 0 and 3 (an absolutely continuous probability measure on the real line) and let P+ have density f+ defined as follows:

Suppose S is a real random variable with density f− under P− and f+ under P+. It is easy to see that the LR L = f+/f− has mixed components and it is not monotone in S, being:

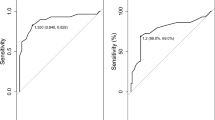

A naive classification rule based solely on S gives rise to the ROC curve

which is not concave, shown as dashed line in Fig. 2. Using instead the LR based classification rule, the ROC curve is:

where \(x_{1} = \frac {1}{3}-\frac {1}{3}(\frac {1}{3})^{1/5}\) and \( x_{2} = \frac {2}{3}-\frac {1}{3}(\frac {1}{3})^{1/5}\). This curve is concave and dominates the previous one as shown in Fig. 2.

This example deals with absolutely continuous densities which, nonetheless, have a likelihood ratio - often called score in classification - with mixed components (partly discrete and partly continuous): it is an exquisitely theoretical exercise, but it addresses a case particularly difficult for the usual approach to ROC curves (which emphasizes a continuous score is necessary).

4.3 Example 3: Two Multivariate Normal Measures

Assume P− is multivariate normal with mean μ− and variance Σ− and P+ is multivariate normal with mean μ+ and variance Σ+ and both densities exist. By taking the logarithmic transformation of the LR, it can easily be seen that for the normal case the LR based classification rule in Definition 1 declares positive if the quadratic score

is large. This is the well known Fisher’s Quadratic Discriminant Analysis (QDA) rule (Fisher, 1936), which reduces to linear – hence the corresponding Linear Discriminant Analysis (LDA) – in the case Σ− = Σ+ (homoschedasticity). The original work by Fisher did not actually focus on the normality assumption, but QDA and LDA are well established terminology in the literature. Being based on the LR, QDA has a proper ROC curve: the score in Eq. 4 is a continuous random variable and no randomization device is needed.

4.4 Example 4: Fisher Versus Best Linear Rules

In the multivariate normal case, insisting on a linear classifier leads to suboptimal procedures in the case of heteroschedasticity. The classifier which is optimal within the class of linear classifiers is considered in Su and Liu (1993) and it declares positive if

is large. If Σ−≠Σ+ it gives an improper ROC curve, which has a “hook” and is dominated by the ROC curve of the corresponding quadratic score in Expression (4). It is worth considering a numerical example in this case, since the optimality of the quadratic score in the normal case is being continuously rediscovered (see e.g. Metz and Pan 1999 and Hillis 2016), but it actually boils down to Fisher (1936).

Consider a bivariate normal vector (X, Y ) which in population P− has a bivariate standard normal distribution, whereas in population P+ has independent components X distributed normally with mean μx > 0 and variance \({\sigma _{x}^{2}}\) and Y distributed normally with mean μy > 0 and variance \({\sigma _{y}^{2}}\not ={\sigma _{x}^{2}}\). According to Eq. 4, the QDA classifier declares positive if

where c is an arbitrary threshold. By varying c and calculating the appropriate probabilities under P− and P+, we can obtain the ROC curve, by simulation or, if greater precision is needed, by using non-central chi-square distributions. The ROC curve for the case μx = 1, μy = 2, σx = 2, σy = 4 is plotted as a solid line in Fig. 3.

The best linear classifier according to Expression (5) is instead

S has normal distributions under P− and P+ and by a well-known result its ROC is

where ϕ(⋅) is the standard normal distribution function,

and

This ROC curve for the case μx = 1, μy = 2, σx = 2, σy = 4 is plotted as a dashed line in Fig. 3. We can easily see that the QDA ROC curve is concave and dominates the best linear ROC curve.

5 Conclusions

The brief historical overview given in the Introduction can be completed with a look into the present and future times.

Nowadays the model based approach - namely the centrality of two competing probability measures P+ and P− - is considered out fashioned by many researchers, solely interested in algorithms for classification. The reason is a certain degree of success obtained in the presence of high dimensional data by methods apparently unrelated to probability: Support Vector Machines, Deep Learning and alike. Focus is on obtaining viable computational methods addressed to minimizing the empirical risk or prediction error; the resulting ROC curves are often not proper and the distinction between population and sample estimates of many objects - including ROC curves themselves - is often blurred or simply ignored.

Contrarily to this trend - which has undoubtedly many advantages, including challenging statisticians with new computing intensive ideas - we have shown that the diagnostic strength of the theoretically optimal LR based classification rule is strictly related to how concentrated a certain probability measure is with respect to another. We have also demonstrated how this relationship is deeply rooted in a few fundamental concepts from classical Statistics.

References

Cifarelli, D.M. and Regazzini, E. (1987). On a general definition of concentration function. Sankhya: Ind. J. Stat., Series B 49, 307–319.

Colton, T. and Armitage, P. (2005). Encyclopedia of biostatistics. John Wiley & Sons.

Egan, J.P. (1975). Signal Detection Theory and ROC Analysis. Academic Press, London.

Fisher, R.A. (1936). The use of multiple measurements in taxonomic problems. Ann. Eugen. 7, 179–188.

Gasparini, M. and Sacchetto, L. (2020). On the definition of a concentration function relevant to the ROC curve. Metron 78, 271–277.

Hillis, S.L. (2016). Equivalence of binormal likelihood-ratio and bi-chi-squared ROC curve models. Stat. Med. 35, 2031–2057.

Krzanowski, W.J. and Hand, D.J. (2009). ROC Curves for continuous data. Chapman and hall/CRC.

Lee, W.C. (1999). Probabilistic analysis of global performances of diagnostic tests: interpreting the lorenz curve-based summary measures. Stat. Med. 18, 455–471.

Lehmann, E.L. (1986). Testing statistical hypotheses. Springer.

Metz, C.E. and Pan, X. (1999). “Proper” binormal ROC curves: theory and maximum-likelihood estimation. J. Math. Psych. 43, 1–33.

Pepe, M.S. (2003). The statistical evaluation of medical tests for classification and prediction. Oxford University Press.

Regazzini, E. (1992). Concentration comparisons between probability measures. Sankhya: Ind. J. Stat., Series B 54, 129–149.

Schechtman, E. and Schechtman, G. (2019). The relationship between Gini terminology and the ROC curve. Metron 77, 171–178.

Su, J.Q. and Liu, J.S. (1993). Linear combinations of multiple diagnostic markers. J. the Am. Stat. Assoc. 88, 1350–1355.

Zou, K.H., Liu, A., Bandos, A.I., Ohno-Machado, L. and Rockette, H.E. (2011). Statistical evaluation of diagnostic performance: topics in ROC analysis. CRC Press.

Funding

MG funded by the Italian Ministry of Education, University and Research, MIUR, grant Dipartimenti di Eccellenza 2018–2022 (E11G18000350001). Open access funding provided by Politecnico di Torino within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interests

The authors declare that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gasparini, M., Sacchetto, L. Concentration and ROC Curves, Revisited. Sankhya A 85, 292–305 (2023). https://doi.org/10.1007/s13171-021-00244-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13171-021-00244-5