Abstract

We propose a verification method for specifying homoclinic orbits, as an application of our previous work on constructing local Lyapunov functions by verified numerics. Our goal is to specify parameters appearing in given systems of ordinary differential equations (ODEs) for which the systems admit homoclinic orbits to equilibria. Here we restrict ourselves to the case where each equilibrium is independent of the parameters. Our method combines Lyapunov functions, integration of ODEs by verified numerics, and Brouwer’s coincidence theorem on continuous mappings. We show several techniques for constructing continuous mappings from a domain of parameter vectors to a region of the phase space, and present numerical examples for 3- and 4-dimensional problems.


1 Introduction

Recently, numerical verification has been widely applied to the study of dynamical systems, with many applications (e.g. [2, 3, 5, 7, 8, 9, 12, 16, 24, 25, 26, 27, 29]). The second and third authors have also proposed methods for constructing local Lyapunov functions by numerical verification as tools for analyzing dynamical systems [16].

In the study of Lyapunov functions, it is common to construct global Lyapunov functions or Lyapunov functions with wide domains, both in theoretical studies and in studies applying numerical verification [8, 9]. Nevertheless, we assert that local Lyapunov functions with small domains, combined with numerical verification methods, are important tools for analyzing dynamical systems, for the following reasons.

- It is possible to construct local Lyapunov functions for a wide class of dynamical systems which have hyperbolic equilibria or fixed points.
- Domains of the local Lyapunov functions can be specified by numerical verification.
- There are several numerical verification methods to specify trajectories in continuous dynamical systems and sequences in discrete dynamical systems, e.g. Lohner method, Taylor model and power series arithmetic [10, 11, 13, 14, 20]. It is possible to analyze global dynamics by information of trajectories or sequences together with some features around equilibria or fixed points obtained by local Lyapunov functions.

To demonstrate the usefulness of local Lyapunov functions as tools of analysis, we propose a verification method for specifying homoclinic orbits based on a combination of verified numerics and local Lyapunov functions.

Early works on connecting orbits, including homoclinic orbits, for systems of ordinary differential equations (ODEs) by verified numerics are those of Zgliczyński and/or Wilczak [26, 27], followed by many subsequent works. On the other hand, Arioli, Koch, Barker, Oishi and others gave results on existence proofs of connecting orbits and their stability [2, 3, 19]. Around 2011, Lessard and others succeeded in capturing connecting orbits by analytical methods, which led to many results including bifurcation of connecting orbits and further topics on partial differential equations and delay differential equations [5, 7, 12].

These methods fall roughly into two groups: topological methods and analytical methods. A representative topological method is the covering relation by Arioli [4], including application of the mapping degree. As a typical analytical method, we mention the method using parameterization and radii polynomials by Lessard and others, where contraction mappings are explicitly constructed. From this point of view, the method proposed in this paper has both aspects, since the mapping degree is adopted as a topological tool and the Lyapunov function is explicitly constructed in an analytical way.

Our method for proving the existence of connecting orbits consists of Lyapunov functions, integration of ODEs by verified numerics, and Brouwer’s coincidence theorem on continuous mappings. We present some techniques for constructing a continuous mapping from a domain of parameter vectors to a region of phase space. Once such a continuous mapping is specified, one can apply either Brouwer’s fixed point theorem or Brouwer’s coincidence theorem to prove the existence of a parameter vector admitting a connecting orbit. In our case we adopt Brouwer’s coincidence theorem, with the degree of a continuous mapping calculated by verified numerics. These ideas are based on the Master’s thesis of Yamano [28], supervised by the second author and advised by the third author.

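We deal with autonomous systems of ODEs with a parameter vector \({{\varvec{p}}}\):

$$\begin{aligned} \frac{d{\varvec{x}}}{dt} = {\varvec{f}}({\varvec{x}};\,{\varvec{p}}),\quad {\varvec{x}} \in {\mathbb {R}}^{n},\quad {\varvec{p}} \in D, \end{aligned} \tag{1.1}$$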

where D is a closed domain in \({\mathbb {R}}^{n-\nu }\) with a positive integer \(\nu\) which is specified below. Throughout this paper, we assume the following:

Assumption 1.1

- For each \({{\varvec{p}}} \in D\), (1.1) has a hyperbolic equilibrium \({{\varvec{x}}}^*({{\varvec{p}}})\) that is a saddle with an \((n - \nu )\)-dimensional unstable manifold and a \(\nu\)-dimensional stable manifold.
- The equilibrium \({{\varvec{x}}}^*({{\varvec{p}}})\) depends continuously on \({{\varvec{p}}}\).

In this article, we restrict ourselves to the system (1.1) with an equilibrium \({{\varvec{x}}}^*\) which is independent of the parameter vector \({{\varvec{p}}}\). This simplification allows us to avoid complicated mathematical arguments and to explain our method more clearly, though we believe that our method can be extended to cases where equilibria depend on \({{\varvec{p}}}\).

Let \(\varphi :{\mathbb {R}} \times {\mathbb {R}}^{n} \times {\mathbb {R}}^{n-\nu } \rightarrow {\mathbb {R}}^{n}\) be a flow generated by (1.1), namely \(\varphi (t, {{\varvec{x}}};\, {{\varvec{p}}})\) is the solution of (1.1) with an initial point \({\varvec{x}} \in {\mathbb {R}}^{n}\) for a parameter vector \({\varvec{p}} \in {\mathbb {R}}^{n-\nu }\). The definition of our Lyapunov functions is as follows.

Definition 1.2

((local) Lyapunov function) Let \(D_L \subset {\mathbb {R}}^n\) be a closed subset. If a \(C^1\)-function \(L:D_L \times D \rightarrow {\mathbb {R}}\) satisfies the following conditions, it is called a (local) Lyapunov function.

1. \((dL/dt)(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}),\,{\varvec{p}})|_{t=0} \le 0\) holds for each solution orbit \(\{\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}})\}\) through \({\varvec{x}} \in D_L\).
2. \((dL/dt)(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}),\,{\varvec{p}})|_{t=0} = 0\) implies \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) = {\varvec{x}}^*\) for all \(t \in {\mathbb {R}}\).

We call the domain \(D_{L}\) a Lyapunov domain.

By virtue of the numerical verification methods proposed in [16], a Lyapunov function can be defined for each fixed \({\varvec{p}}\) with a local domain including only one equilibrium \({\varvec{x}}^*\), and takes both positive and negative values reflecting the property that \({\varvec{x}}^*\) is a saddle point.

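Fix a parameter vector \({\varvec{p}} \in D\). Since \({\varvec{x}}^*\) is a hyperbolic equilibrium of (1.1), we can construct a local Lyapunov function in a quadratic form

$$\begin{aligned} L({\varvec{x}};\,{\varvec{p}}) := ({\varvec{x}}-{\varvec{x}}^*)^T Y({\varvec{p}}) ({\varvec{x}}-{\varvec{x}}^*) \end{aligned}$$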

within a domain \(D_{L}({\varvec{p}})\) which is specified by numerical verification [16]. We calculate \(Y({\varvec{p}}) \in {\mathbb {R}}^{n \times n}\) as a real symmetric matrix from the information of eigenvalues of the Jacobian matrix \(D{\varvec{f}}^{*}({\varvec{p}}) = D_{{\varvec{x}}}{\varvec{f}} ({\varvec{x}}^*,\,{\varvec{p}})\) with respect to \({\varvec{x}}\) at the equilibrium \({\varvec{x}}^{*}\). Our method employs the local Lyapunov functions with relatively small domains in order to construct a continuous mapping F from the parameter set D to the phase space and apply Brouwer’s coincidence theorem.

The rest of the present paper is organized as follows. In the next section, we outline our method and define the mappings \(F_{1}\) and \(F_{2}\) from which F is composed. In Sect. 3, the construction of Lyapunov functions and its validity based on [16] are explained. Note that the method in [16] is slightly modified since our ODEs have parameters. We state Brouwer’s coincidence theorem and verification methods for computing the mapping degree in Sect. 4. In Sect. 5, we prove two lemmas on properties of our Lyapunov functions and one theorem concerning continuous mappings, which are used in Sect. 8, in preparation for the mathematical arguments in the subsequent sections. In Sect. 6, we consider a mapping called Lyapunov Tracing, which plays an important role in our framework, and prove its continuity. In Sect. 7, our numerical procedure with verified computation for specifying homoclinic orbits is shown; it precedes the mathematical arguments on the construction of the continuous mappings \(F_{1}\), \(F_{2}\) and F. We prove that \(F_{1}\) is well-defined and continuous in Sect. 8, where the unstable manifold theorem plays a central role. In Sect. 9, we describe how to construct the continuous mappings \(F_{2}\) and F, and explain why our method verifies the existence of parameters which admit a homoclinic orbit. Numerical examples are shown in Sect. 10, including a 3-dimensional system of ODEs illustrating our method and a 4-dimensional system of ODEs whose equilibrium has a 2-dimensional unstable manifold and a 2-dimensional stable manifold.

2 Outline of our method

We describe an outline of our approach before detailed explanation for verification of homoclinic orbits. Let \(\tilde{{\varvec{p}}} \in D\) be a parameter vector which is calculated as an approximation to a parameter vector admitting a homoclinic orbit. Take a small closed domain \(D_p \subset D\) including \(\tilde{{\varvec{p}}}\) as an interior point. Note that \(D_p\) is taken to be homeomorphic to a closed ball in \({\mathbb {R}}^{n-\nu }\) in order to apply Brouwer’s coincidence theorem.

Our goal is to prove that there exists a parameter vector in \(D_p\) which admits a homoclinic orbit. First we construct Lyapunov functions with respect to \({\varvec{x}}^*\). The domain \(D_{p}\) should be sufficiently small so that we can take a common matrix \(Y \in {\mathbb {R}}^{n\times n}\) as \(Y({\varvec{p}})\) independently of \({\varvec{p}} \in D_{p}\). In particular, our Lyapunov function has the form

$$\begin{aligned} L({\varvec{x}}) := ({\varvec{x}}-{\varvec{x}}^*)^T Y ({\varvec{x}}-{\varvec{x}}^*). \end{aligned}$$

Note that the form of the Lyapunov function no longer depends on the parameter vector \({\varvec{p}}\), but the domain of the Lyapunov function still depends on \({\varvec{p}}\).

As is mentioned in Sect. 3, the domain \(D_{L}({\varvec{p}})\) of \(L({\varvec{x}})\) for each \({\varvec{p}}\) can be defined to be compact and convex. Moreover we define the compact and convex set \(D_L\) by

$$\begin{aligned} D_L := \bigcap _{{\varvec{p}} \in D_{p}} D_L({\varvec{p}}), \end{aligned}$$

which is called a Lyapunov domain.

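The subsets \(L^0\), \(L^+\) and \(L^-\) of \(D_L\) are defined as follows:

$$\begin{aligned} L^0&:= \left\{ {\varvec{x}} \in D_L\,|\,L({\varvec{x}}) = 0\right\} ,\\ L^+&:= \left\{ {\varvec{x}} \in D_L\,|\,L({\varvec{x}}) > 0\right\} ,\\ L^-&:= \left\{ {\varvec{x}} \in D_L\,|\,L({\varvec{x}}) < 0\right\} . \end{aligned}$$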

In order to apply Brouwer’s coincidence theorem in our framework, we take a hyperplane \(\Gamma \subset {\mathbb {R}}^{n-\nu }\) and define the projection \(P: {\mathbb {R}}^{n} \rightarrow \Gamma\). The projection P is supposed to satisfy the following:

- For any \({\varvec{x}} \in L^0\cup L^{-}\), if

$$\begin{aligned} P({\varvec{x}}) = P({\varvec{x}}^{*}) \end{aligned}$$

  holds, then \({\varvec{x}} = {\varvec{x}}^{*}\).

Construction of the projection P with the above feature is described in Sect. 7.

Let \(W^s({\varvec{x}}^*;\,{\varvec{p}})\) and \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) be the stable and the unstable manifolds of \({\varvec{x}}^*\), respectively, which depend on a parameter vector \({\varvec{p}} \in D_{p}\). Let \(W^s_{c.c.}({\varvec{x}}^*;\,{\varvec{p}})\) and \(W^u_{c.c.}({\varvec{x}}^*;\,{\varvec{p}})\) be the connected components of \(W^s({\varvec{x}}^*;\,{\varvec{p}})\) and \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) in \(D_L\) including \({\varvec{x}}^*\), respectively. Here \(W^s_{c.c.}({\varvec{x}}^*;\,{\varvec{p}})\) is the collection of points \({\varvec{x}} \in W^s({\varvec{x}}^*;\,{\varvec{p}}) \cap D_{L}\) connected directly with \({\varvec{x}}^*\) through a curve within \(W^s({\varvec{x}}^*;\,{\varvec{p}}) \cap D_{L}\), and \(W^u_{c.c.}({\varvec{x}}^*;\,{\varvec{p}})\) is defined in a similar manner. Since we only consider these connected components throughout this paper, we write \(W^s_{c.c.}({\varvec{x}}^*;\,{\varvec{p}})\) as \(W^s({\varvec{x}}^*;\,{\varvec{p}})\) and \(W^u_{c.c.}({\varvec{x}}^*;\,{\varvec{p}})\) as \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) for simplicity.

Consider two continuous mappings

$$\begin{aligned} F_{1}: D_{p} \rightarrow B_{0} \times D_{p},\quad F_{2}: B_{0} \times D_{p} \rightarrow L^{0}, \end{aligned}$$

where \(B_{0} \subset \bigcup _{{\varvec{p}}\in D_{p}}W^u({\varvec{x}}^*;\,{\varvec{p}})\) and \(F_{1}({\varvec{p}})= ({\varvec{f}}_{1}({\varvec{p}}),\,{\varvec{p}})\) with a continuous mapping \({\varvec{f}}_{1}: D_{p} \rightarrow B_{0}\) satisfying \({\varvec{f}}_{1}({\varvec{p}}) \in B_{0} \cap W^u({\varvec{x}}^*;\,{\varvec{p}})\). The set \(B_{0}\) should be defined so that there exists a certain \(T > 0\) with \(\varphi (T,\,{\varvec{x}};\,{\varvec{p}}) \in L^{+}\) for any \({\varvec{x}} \in B_{0}\) and \({\varvec{p}} \in D_{p}\). The mapping \(F_{2}({\varvec{x}},\,{\varvec{p}})\) is defined by using the flow \({\varvec{y}}=\varphi (T,\,{\varvec{x}};\,{\varvec{p}})\) from the domain \(B_{0}\), followed by \(\varphi (t_{0}({\varvec{y}}),\,{\varvec{y}};\,{\varvec{p}}) \in L^{0}\) with a positive time \(t_{0}({\varvec{y}})\) for each \({\varvec{y}} \in L^{+}\). Thus \(F_2({\varvec{x}},\,{\varvec{p}}) \in L^0\) holds for every \({\varvec{x}} \in B_{0}\). Details are described in Sects. 7, 8 and 9.

Now we define the continuous mappings \(F, H: D_{p} \rightarrow \Gamma\) by

$$\begin{aligned} F({\varvec{p}}) := P \circ F_{2} \circ F_{1}({\varvec{p}}),\quad H({\varvec{p}}) := P({\varvec{x}}^{*}), \end{aligned}$$

and apply Brouwer’s coincidence theorem with numerical verification of the mapping degree of F. This proves that F and H have a coincidence point, that is, \(F({\varvec{p}}^{*}) = H({\varvec{p}}^{*}) \in \Gamma\) holds for some \({\varvec{p}}^{*} \in D_{p}\). We obtain \(F_{2} \circ F_{1}({\varvec{p}}^{*}) = {\varvec{x}}^{*}\) using the feature of the projection P, which implies that there exists a trajectory starting from \({\varvec{f}}_{1}({\varvec{p}}^{*}) \in W^u({\varvec{x}}^*;\,{\varvec{p}}^{*})\) satisfying

$$\begin{aligned} \lim _{t \rightarrow \infty } \varphi (t,\,{\varvec{f}}_{1}({\varvec{p}}^{*});\,{\varvec{p}}^{*}) = {\varvec{x}}^{*}. \end{aligned} \tag{2.1}$$

Namely there exists a parameter vector \({\varvec{p}}={\varvec{p}}^{*} \in D_{p}\) which admits a homoclinic orbit. The expression (2.1) indicates that difficulty in the computation may be caused by taking the limit \(t\rightarrow \infty\) in the definition of the continuous mapping \(F_{2}\). In order to avoid this difficulty, we adopt a tool called Lyapunov Tracing explained in Sect. 6.

Remark 2.1

There is a possibility of adopting a fixed point theorem to prove the existence of \({\varvec{p}}^{*}\) instead of the coincidence theorem. For example, one may apply a nonlinear solver of verified numerics based on a fixed point theorem to the nonlinear equation \({\mathcal {F}}({\varvec{p}}) = \varvec{0}\) with \({\mathcal {F}}({\varvec{p}}):= F({\varvec{p}}) - H({\varvec{p}})\). However, the computation of \({\mathcal {F}}({\varvec{p}})\) for \({\varvec{p}}\) in a neighborhood of \({\varvec{p}}^{*}\) may be difficult and make the verification process fail, since obtaining the image \(F({\varvec{p}})\) for \({\varvec{p}}\) very close to \({\varvec{p}}^{*}\) requires long-time integration, which causes large computational errors. Note that we can avoid such difficulty around \({\varvec{p}}^{*}\) by using the coincidence theorem and Lyapunov Tracing.

3 Lyapunov functions

In this section, we show the procedure to construct Lyapunov functions and to specify the domains of Lyapunov functions based on [16].

3.1 Construction procedure of Lyapunov functions

Consider the autonomous system (1.1). For each \({\varvec{p}} \in D\), it is possible to construct a quadratic form as a Lyapunov function by the following steps.

1. Let \(D{\varvec{f}}^*({\varvec{p}})\) be the Jacobian matrix of \({\varvec{f}}({\varvec{x}} ;\,{\varvec{p}})\) with respect to \({\varvec{x}}\) at \({\varvec{x}} = {\varvec{x}}^*\). For simplicity, assume that \(D{\varvec{f}}^*({\varvec{p}})\) is diagonalizable by a nonsingular matrix \(X({\varvec{p}})\), which is generically valid, and we have

$$\begin{aligned} \Lambda ({\varvec{p}}) = X^{-1}({\varvec{p}}) D{\varvec{f}}^*({\varvec{p}}) X({\varvec{p}}), \end{aligned}$$

   where \(\Lambda ({\varvec{p}}) = \mathrm{diag}(\lambda _1({\varvec{p}}),\lambda _2({\varvec{p}}),\ldots ,\lambda _n({\varvec{p}}))\) with eigenvalues \(\lambda _k({\varvec{p}})\). Note that it suffices to compute \(\Lambda ({\varvec{p}})\) and \(X({\varvec{p}})\) by floating-point arithmetic.

2. Let \(I^*({\varvec{p}})\) be the diagonal matrix \(I^*({\varvec{p}}) = \mathrm{diag}(i_1({\varvec{p}}),i_2({\varvec{p}}),\ldots ,i_n({\varvec{p}}))\), where

$$\begin{aligned} i_k({\varvec{p}}) = \left\{ \begin{array}{ll} 1,&{}\quad \mathrm{if~ Re}(\lambda _k({\varvec{p}})) <0, \\ -1,&{}\quad \mathrm{if~ Re}(\lambda _k({\varvec{p}})) >0. \end{array} \right. \end{aligned}$$

   Note that \(\mathrm{Re}(\lambda _k({\varvec{p}}))\) is not 0 for \(k=1,\ldots ,n\) since \({\varvec{x}}^*\) is assumed to be hyperbolic.

3. Compute a real symmetric matrix \(Y({\varvec{p}}) = (Y_{ij}({\varvec{p}}))_{i,j=1}^n\) as follows:

$$\begin{aligned} {\hat{Y}}({\varvec{p}}) := X^{-H}({\varvec{p}}) I^*({\varvec{p}}) X^{-1}({\varvec{p}}),\quad Y({\varvec{p}}) := \mathrm{Re}({\hat{Y}}({\varvec{p}})), \end{aligned}$$

   where \(X^{-H}({\varvec{p}})\) denotes the inverse matrix of the Hermitian transpose \(X^H({\varvec{p}})\) of \(X({\varvec{p}})\). It also suffices to compute this matrix by floating-point arithmetic.

4. Define the quadratic form by

$$\begin{aligned} L({\varvec{x}};\,\,{\varvec{p}}) := ({\varvec{x}}-{\varvec{x}}^*)^T Y({\varvec{p}}) ({\varvec{x}}-{\varvec{x}}^*), \end{aligned}$$

   which is our candidate Lyapunov function around \({\varvec{x}}^*\).
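The four steps above can be sketched in ordinary floating-point arithmetic as follows. This is only an illustration under stated assumptions: the function names `lyapunov_matrix` and `lyapunov_value` and the sample Jacobian `J` are hypothetical and do not come from [16], and the code performs no verified computation.

```python
import numpy as np


def lyapunov_matrix(jacobian):
    """Return Y = Re(X^{-H} I* X^{-1}) for a diagonalizable hyperbolic Jacobian."""
    jacobian = np.asarray(jacobian, dtype=float)
    eigvals, X = np.linalg.eig(jacobian)            # Step 1: Lambda = X^{-1} Df* X
    if np.any(np.abs(eigvals.real) < 1e-12):
        raise ValueError("the equilibrium does not look hyperbolic")
    # Step 2: +1 for stable directions, -1 for unstable directions.
    i_star = np.diag(np.where(eigvals.real < 0.0, 1.0, -1.0))
    X_inv = np.linalg.inv(X)
    Y_hat = X_inv.conj().T @ i_star @ X_inv         # Step 3: X^{-H} I* X^{-1}
    Y = Y_hat.real
    return 0.5 * (Y + Y.T)                          # remove rounding asymmetry


def lyapunov_value(x, x_star, Y):
    """Step 4: L(x) = (x - x*)^T Y (x - x*)."""
    d = np.asarray(x, dtype=float) - np.asarray(x_star, dtype=float)
    return float(d @ Y @ d)


# Hypothetical saddle Jacobian with eigenvalues 1, -1, -2 (illustration only).
J = np.array([[1.0, 2.0, 0.0],
              [0.0, -1.0, 1.0],
              [0.0, 0.0, -2.0]])
Y = lyapunov_matrix(J)
A = J.T @ Y + Y @ J   # expected to be negative definite at the equilibrium
```

At the equilibrium itself the matrix `A` is negative definite by construction; how far this persists away from the equilibrium is exactly what the verification of the Lyapunov domain has to establish.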

In our verification method for homoclinic orbits, we take \(\tilde{{\varvec{p}}} \in D\) which admits an approximate homoclinic orbit and put \(Y := Y(\tilde{{\varvec{p}}})\). If a parameter domain \(D_p\) is a sufficiently small neighborhood of \(\tilde{{\varvec{p}}}\), the quadratic form

$$\begin{aligned} L({\varvec{x}}) := ({\varvec{x}}-{\varvec{x}}^*)^T Y ({\varvec{x}}-{\varvec{x}}^*) \end{aligned}$$

is also a candidate Lyapunov function for each \({\varvec{p}} \in D_p\). We therefore define the domain \(D_{p}\) as a closed ball centered at \(\tilde{{\varvec{p}}}\) with a radius small enough that the above \(L({\varvec{x}})\) is expected to be a Lyapunov function with a common Y.

3.2 Verification of Lyapunov domains

We define the Lyapunov domain as follows.

Definition 3.1

(Lyapunov domain) Take a compact and convex domain \(D_L({\varvec{p}})\) including the equilibrium \({\varvec{x}}^*\) of (1.1) as an interior point. If a Lyapunov function \(L({\varvec{x}},\,{\varvec{p}})\) in the sense of Definition 1.2 exists in \(D_L({\varvec{p}})\), then the domain is called a Lyapunov domain.

In order to ensure that \(D_L({\varvec{p}})\) is a Lyapunov domain, a verification procedure consisting of two stages is proposed in [16]. The following steps are referred to as Stage 1 in [16], while the present argument contains the parameter dependence of objects.

- Define a matrix \(A({\varvec{x}};\,\,{\varvec{p}})\) for \({\varvec{x}}\in D_{L}({\varvec{p}})\), \({\varvec{p}} \in D_{p}\) by

$$\begin{aligned} A({\varvec{x}};\,\,{\varvec{p}}) = D{\varvec{f}}({\varvec{x}};\,\,{\varvec{p}})^T Y + Y D{\varvec{f}}({\varvec{x}};\,\,{\varvec{p}}). \end{aligned}$$

  Divide the domain \(D_L({\varvec{p}})\) into subdomains \(D_{k}\), \(k=1, 2, \ldots , K\), and enclose each \(D_{k}\) by an interval vector \([{\varvec{x}}_{k}] \subset {\mathbb {R}}^{n}\). Then verify the negative definiteness of the interval matrix \(A([{\varvec{x}}_{k}];\,{\varvec{p}})\). An example of such a procedure is the following:

  1. Calculate a matrix \(A({\varvec{x}}_{k};\tilde{{\varvec{p}}})\) for an interior point \({\varvec{x}}_{k}\) in \([{\varvec{x}}_{k}]\) by floating-point arithmetic. Diagonalize \(A({\varvec{x}}_{k};\tilde{{\varvec{p}}})\) approximately, namely calculate a matrix \(X_{k}\) such that

$$\begin{aligned} \Lambda _{k} = (X_{k})^{-1} A({\varvec{x}}_{k};\tilde{{\varvec{p}}}) X_{k} \end{aligned}$$

     is an approximately diagonal matrix. Note that \(X_{k}\) can be chosen as an orthogonal matrix since \(A({\varvec{x}}_{k};\tilde{{\varvec{p}}})\) is real and symmetric.

  2. Calculate the interval matrix \((X_{k})^{-1} A([{\varvec{x}}_{k}];\,{\varvec{p}})X_{k}\) by interval arithmetic with verified numerics. Let \([a_{k}]_{ij}\) denote the (i, j)th entry of this matrix, which takes an interval value.

  3. Verify

$$\begin{aligned}{}[a_{k}]_{ii} + \sum _{j \ne i} |[a_{k}]_{ij}| < 0 \end{aligned}$$

     with interval arithmetic for \(i = 1,2,\ldots ,n\), and apply the Gershgorin circle theorem to the interval matrix to conclude that \(A([{\varvec{x}}_{k}];\,{\varvec{p}})\) is negative definite.

Note that if the above verification succeeds for all \(k=1, 2, \ldots , K\), then

1. The domain \(D_{L}({\varvec{p}})\) is verified to be a Lyapunov domain.
2. The cone condition holds within \(D_{L}({\varvec{p}})\). See Sect. 5 for an explanation of the cone condition.
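The Gershgorin test in step 3 can be sketched as follows, assuming the interval matrix is supplied as two arrays of entrywise lower and upper bounds. The function name `gershgorin_negative` and this representation are hypothetical choices for illustration. The sketch uses plain floating-point arithmetic without directed rounding, so it is not a verified computation; a rigorous implementation would rely on an interval arithmetic library.

```python
import numpy as np


def gershgorin_negative(lower, upper):
    """Check sup([a]_ii) + sum_{j != i} mag([a]_ij) < 0 for every row i.

    `lower` and `upper` hold entrywise bounds of an interval matrix.
    Rounding errors are NOT controlled here (illustration only).
    """
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    if lower.shape != upper.shape or lower.ndim != 2 or lower.shape[0] != lower.shape[1]:
        raise ValueError("lower and upper must be square matrices of equal shape")
    if np.any(lower > upper):
        raise ValueError("each lower bound must not exceed its upper bound")
    # Magnitude of an interval: the largest absolute value it contains.
    mag = np.maximum(np.abs(lower), np.abs(upper))
    off_diag = mag.sum(axis=1) - np.diag(mag)
    # Worst case of the diagonal entry is its upper bound.
    return bool(np.all(np.diag(upper) + off_diag < 0.0))
```

For example, with diagonal entries enclosed in \([-3, -2.5]\) and \([-2, -1.5]\) and off-diagonal entries in \([-0.5, 0.5]\), every Gershgorin disc lies in the open left half-plane and the function returns `True`.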

Define the compact and convex set \(D_L\) by

$$\begin{aligned} D_L := \bigcap _{{\varvec{p}} \in D_{p}} D_L({\varvec{p}}). \end{aligned}$$

We also call \(D_L\) a Lyapunov domain. In actual computation, the parameter vector \({\varvec{p}} \in D_{p}\) is treated by an interval vector \([{\varvec{p}}] \subset D_{p}\) with a small radius.
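The link between negative definiteness of \(A({\varvec{x}};\,{\varvec{p}})\) and the conditions of Definition 1.2 can be made explicit by a short calculation. The following is a sketch, assuming that \({\varvec{f}}\) is \(C^1\) in \({\varvec{x}}\), that \({\varvec{f}}({\varvec{x}}^*;\,{\varvec{p}}) = \varvec{0}\), and that the segment from \({\varvec{x}}^*\) to \({\varvec{x}}\) lies in the convex domain \(D_L({\varvec{p}})\); the symbols \({\varvec{v}}\) and M are introduced here only for illustration.

```latex
\begin{aligned}
{\varvec{v}} &:= {\varvec{x}} - {\varvec{x}}^*, \qquad
M := \int_0^1 D{\varvec{f}}({\varvec{x}}^* + s{\varvec{v}};\,{\varvec{p}})\,ds, \qquad
{\varvec{f}}({\varvec{x}};\,{\varvec{p}}) = M{\varvec{v}}, \\
\frac{d}{dt} L(\varphi(t,\,{\varvec{x}};\,{\varvec{p}}))\Big|_{t=0}
 &= {\varvec{f}}({\varvec{x}};\,{\varvec{p}})^T Y {\varvec{v}} + {\varvec{v}}^T Y {\varvec{f}}({\varvec{x}};\,{\varvec{p}})
  = {\varvec{v}}^T \left( M^T Y + Y M \right) {\varvec{v}} \\
 &= \int_0^1 {\varvec{v}}^T A({\varvec{x}}^* + s{\varvec{v}};\,{\varvec{p}})\,{\varvec{v}}\,ds \;<\; 0
 \qquad ({\varvec{v}} \ne \varvec{0}).
\end{aligned}
```

Under these assumptions the derivative of L along the flow is negative at every point of the domain other than \({\varvec{x}}^*\), which is consistent with both conditions of Definition 1.2.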

4 Brouwer’s coincidence theorem and verification methods for mapping degree

We apply Brouwer’s coincidence theorem to prove the existence of homoclinic orbits, and numerical verification of the mapping degree of continuous mappings is the essential technique for applying the theorem. In this section, we state the definition of the mapping degree and two theorems about the mapping degree which are applied in our verification method.

The following theorem is known as Brouwer’s coincidence theorem.

Theorem 4.1

(e.g. [23]) Let \(B^n\) be the closed unit ball in \({\mathbb {R}}^n\), and let \(S^{n-1}\) be its boundary. Consider a continuous mapping F : \(B^n \rightarrow B^n\) and let \(F|_{S^{n-1}}\) be the restriction of F to \(S^{n-1}\). The mapping degree of F is denoted by \(\mathrm{deg} F\).

If \(F(S^{n-1}) \subset S^{n-1}\) and \(\mathrm{deg} F|_{S^{n-1}} \ne 0\) hold, then for an arbitrary continuous mapping G : \(B^n \rightarrow B^n\), there exists a point \({\varvec{x}} \in B^n\) such that \(F({\varvec{x}}) = G({\varvec{x}})\).

When \(n=2\), the mapping degree can be defined as follows according to [23].

Definition 4.2

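(Mapping degree of maps on \(S^1\)) Identify \(S^1 \subset {\mathbb {R}}^2\) with the unit circle in the complex plane and let f : \(S^1 \rightarrow S^1\) be a continuous mapping. Fix an arbitrary pair \((t_0,x_0) \in {\mathbb {R}} \times [0,1]\) and consider the continuous mappings \(e, {\hat{f}}\) and \({\tilde{f}}\) given below:

$$\begin{aligned}&e: {\mathbb {R}} \rightarrow S^1,\quad e(t) = \exp (2\pi i t),\\&{\hat{f}}: [0,1] \rightarrow S^1,\quad {\hat{f}}(x) = f(e(x)),\\&{\tilde{f}}: [0,1] \rightarrow {\mathbb {R}},\quad e \circ {\tilde{f}} = {\hat{f}}. \end{aligned}$$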

Note that \({\tilde{f}}\) is uniquely defined under the condition \({\tilde{f}}(x_0) = t_0\) (see [23]).

The mapping degree of f is then defined as \(\mathrm{deg} f = {\tilde{f}}(1) - {\tilde{f}}(0)\).
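As an illustration of Definition 4.2, the degree can be approximated numerically by sampling \({\hat{f}}\) and unwrapping its phase, which gives a discrete stand-in for the lift \({\tilde{f}}\). This is a minimal floating-point sketch, not the verified procedure used in this paper; the function name `mapping_degree` and the number of samples are assumptions, and the result is reliable only when the sampling is fine enough that consecutive phases differ by less than \(\pi\).

```python
import numpy as np


def mapping_degree(f, samples=4096):
    """Approximate deg f = f_tilde(1) - f_tilde(0) for f: S^1 -> S^1.

    f takes and returns complex numbers of modulus one.
    Non-rigorous: relies on sufficiently fine sampling.
    """
    x = np.linspace(0.0, 1.0, samples + 1)
    z = np.exp(2j * np.pi * x)                       # e(x)
    w = np.array([f(zk) for zk in z])                # f_hat(x) = f(e(x))
    # Unwrapped phase in units of full turns approximates the lift f_tilde.
    lift = np.unwrap(np.angle(w)) / (2.0 * np.pi)
    return int(round(lift[-1] - lift[0]))
```

For instance, the squaring map on the unit circle winds twice around the circle, and complex conjugation reverses the orientation.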

The following theorem is called the Interval Simplex Theorem which gives a verification process to check the mapping degree not to be 0 in case of \(n=2\) [28].

Theorem 4.3

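(Interval Simplex Theorem) Let \(f,e,{\hat{f}},{\tilde{f}}\) be given in Definition 4.2. For \(0< s_1< s_2 < 1\), take closed intervals \(V_1, V_2,V_3 \subset S^1\) satisfying

$$\begin{aligned} {\hat{f}}([0,s_1]) \subset V_1,\quad {\hat{f}}([s_1,s_2]) \subset V_2,\quad {\hat{f}}([s_2,1]) \subset V_3. \end{aligned}$$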

If \(V_1 \cap V_2 \cap V_3 = \emptyset\) holds, then \(\mathrm{deg} f = 1\) or \(-1\).

Proof

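Take \(t_0,t_1,t_2\) as

$$\begin{aligned} t_0 = {\tilde{f}}(0),\quad t_1 = {\tilde{f}}(s_1),\quad t_2 = {\tilde{f}}(s_2). \end{aligned}$$

From the definition of the mapping degree, if \(\mathrm{deg} f = k \in {\mathbb {Z}}\), then \({\tilde{f}}(1) = t_0 + \mathrm{deg} f = t_0 + k.\)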

Note that, \(e(t_0) = e(t_0 + k)~(=e \circ {\tilde{f}}(1))\), and

holds. From these relations, each condition of

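is in conflict with \(V_1 \cap V_2 \cap V_3 = \emptyset\). This fact implies that the order of \(t_0,t_1,t_2\) should be

$$\begin{aligned} t_0 \le t_1 \le t_2 \end{aligned} \tag{4.1}$$

or

$$\begin{aligned} t_2 \le t_1 \le t_0 \end{aligned} \tag{4.2}$$

regardless of k. Indeed, assuming \(t_1 \le t_0 \le t_2\), then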

holds, which contradicts \(V_1 \cap V_2 \cap V_3 = \emptyset\). Similarly, the other orders \(t_0 \le t_2 \le t_1\), \(t_1 \le t_2 \le t_0\) and \(t_2 \le t_0 \le t_1\) also lead to contradictions.

Now assume that \(k = 0\). Since \(e(t_0) = e \circ {\tilde{f}}(1)\), we have

for (4.1) and

for (4.2). Therefore the case of \(k = 0\) is in conflict with \(V_1 \cap V_2 \cap V_3 = \emptyset\) and \(\mathrm{deg} f\) is not 0.

For cases of \(k \ge 1\), the relation \(t_2 \le t_1 \le t_0 \le t_0 + k\) derives

which contradicts \(V_1 \cap V_2 \cap V_3 = \emptyset\), and thus, (4.2) does not hold.

Similarly, the relations \(t_0 \le t_1 \le t_0 + k \le t_2\) and \(t_0 \le t_0 + k \le t_1 \le t_2\) lead to contradiction since

and

holds, respectively. Therefore, \(t_0 \le t_1 \le t_2 \le t_0 + k\) is the only possible relation compatible with \(V_1 \cap V_2 \cap V_3 = \emptyset\).

On the other hand, if \(k \ge 2\) then \(t_0 + 1 \le t_1 + 1 \le t_0 + k\) derives \(e(t_1) = e(t_1 + 1) \in e([t_2,t_0 + k]) = e \circ {\tilde{f}}([s_2,1]) \subset V_3\). Therefore among the cases of \(k \ge 1\), \(k = 1\) is the only case compatible with \(V_1 \cap V_2 \cap V_3 = \emptyset\).

Similar arguments hold for the cases of \(k \le -1\). Consequently, if \(V_1 \cap V_2 \cap V_3 = \emptyset\) is satisfied, then deg\(f = 1\) or \(-1\). \(\square\)

The assumptions of Theorem 4.3 can be checked by interval arithmetic with verified computation, which is what we use in our numerical examples. Note that we do not have to specify the mapping \({\tilde{f}}\) in the verification process. In the case of \(|\mathrm{deg} f| > 1\) or \(n>2\), Aberth’s method [1] can be applied as a numerical verification method for the mapping degree, though it is more complicated than the Interval Simplex Theorem.
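The emptiness condition \(V_1 \cap V_2 \cap V_3 = \emptyset\) of Theorem 4.3 can be tested with a few comparisons once the three arcs are known. The sketch below assumes a hypothetical representation in which each closed arc is a pair `(start, length)` measured in full turns and traversed counterclockwise, with `0 <= length < 1`. It uses the fact that closed arcs with a common point must share the start point of at least one of them. The arcs are taken as given; in a verified setting their endpoints would come from interval enclosures of \({\hat{f}}\), which this sketch does not compute.

```python
def in_arc(x, arc):
    """Return True if angle x (in turns) lies on the closed arc (start, length)."""
    start, length = arc
    return (x - start) % 1.0 <= length


def triple_intersection_empty(v1, v2, v3):
    """Return True if the three closed arcs have no common point."""
    arcs = (v1, v2, v3)
    for _, length in arcs:
        if not 0.0 <= length < 1.0:
            raise ValueError("arc lengths must satisfy 0 <= length < 1")
    # A nonempty intersection always contains the start point of some arc.
    for start, _ in arcs:
        if all(in_arc(start, arc) for arc in arcs):
            return False
    return True
```

For the identity map with \(s_1 = 0.35\) and \(s_2 = 0.65\), the arcs `(0.0, 0.4)`, `(0.3, 0.4)` and `(0.6, 0.45)` enclose the three image pieces and have no common point, in agreement with a degree of 1.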

5 Mathematical preliminaries

In this section, we show two lemmas on properties of our Lyapunov functions and one theorem about continuous mappings which are used in Sect. 8.

Lemma 5.1

Let \(D_{L}\) be a Lyapunov domain. Then

holds for any \({\varvec{x}}_1,\,{\varvec{x}}_2 \in D_L\) with \({\varvec{x}}_1 \ne {\varvec{x}}_2\) and any \({\varvec{p}} \in D_p\).

Proof

From the definition of L, we have

Consider a function \({\varvec{g}}:[0,1] \rightarrow {\mathbb {R}}^n\) given by

and the differential

Integrating \(\frac{d}{ds}{\varvec{g}}(s)\) over \(s \in [0,1]\), we obtain

Substituting it in (5.1), we have

From the negative definiteness of \(Df^T({\varvec{x}};\,\,{\varvec{p}}) Y + Y Df({\varvec{x}};\,\,{\varvec{p}})\) and convexity of \(D_{L}\) in our assumptions,

holds.\(\square\)

Remark 5.2

Lemma 5.1 leads to the cone condition

which is described in [30]. Here Q is a quadratic form and N is a given set with special properties called an h-set. Indeed, regarding Q and N as \(-L\) and \(D_L\) respectively, the cone condition is satisfied from Lemma 5.1.

Lemma 5.3

Let \(D_{L}\) be a Lyapunov domain. Then for any \({\varvec{x}}_1,\,{\varvec{x}}_2 \in D_L \cap W^u({\varvec{x}}^*;\,{\varvec{p}})\) with \({\varvec{x}}_1 \ne {\varvec{x}}_2\),

holds.

Proof

Put \({\varvec{y}} = {\varvec{x}}_2 - {\varvec{x}}_1 + {\varvec{x}}^*\). We shall prove

From \({\varvec{x}}_1,\,{\varvec{x}}_2 \in D_{L} \cap W^u({\varvec{x}}^*;\,{\varvec{p}})\)

holds for any negative time \(t < 0\). Therefore, Lemma 5.1 ensures

for any \(t<0\). Since \({\varvec{x}}_1,\,{\varvec{x}}_2\) are points on \(W^u({\varvec{x}}^*;\,{\varvec{p}})\), we have

and

Now assume that \(L({\varvec{y}})\) is 0, then some negative time \(t_1<0\) exists such that

holds. Let \({\varvec{y}}_1 = \varphi (t_1,\,{\varvec{x}}_2;\,{\varvec{p}}) - \varphi (t_1,\,{\varvec{x}}_1;\,{\varvec{p}}) + {\varvec{x}}^*\). Applying the above discussion with \({\varvec{y}} = {\varvec{y}}_1\), we have

which is in conflict with \(L({\varvec{y}}_1) > 0\). Therefore,

holds.\(\square\)

The following theorem is used in Sect. 8 to prove continuous dependence of the intersection point of a \(\nu\)-dimensional ball and \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) with respect to \({\varvec{p}} \in D_{p}\).

Theorem 5.4

Consider bounded closed sets \(D \subset {\mathbb {R}}^n\) and \(P \subset {\mathbb {R}}^m\) and a continuous function \({\varvec{f}} : D \times P \rightarrow {\mathbb {R}}^n\). Assume that for each \({\varvec{p}} \in P\) there exists a unique \(\hat{{\varvec{x}}}({\varvec{p}}) \in D\) such that \({\varvec{f}}(\hat{{\varvec{x}}}({\varvec{p}}),\,{\varvec{p}}) = \varvec{0}\) holds. Then \(\hat{{\varvec{x}}}({\varvec{p}})\) is continuous with respect to \({\varvec{p}} \in P\).

Proof

Fix \({\varvec{p}} \in P\) and consider an arbitrary sequence \(\{{\varvec{p}}_j\} \subset P\) which satisfies \({\varvec{p}}_j \rightarrow {\varvec{p}}~(j \rightarrow \infty )\). From the assumption, \(\hat{{\varvec{x}}}({\varvec{p}}_j) \in D\) uniquely exists for each \({\varvec{p}}_j\). We should prove \(\hat{{\varvec{x}}}({\varvec{p}}_j) \rightarrow \hat{{\varvec{x}}}({\varvec{p}})~(j \rightarrow \infty )\).

Note that the zero set

$$\begin{aligned} \left\{ ({\varvec{x}},\,{\varvec{p}}) \in D \times P\,|\,{\varvec{f}}({\varvec{x}},\,{\varvec{p}}) = \varvec{0}\right\} \end{aligned}$$

of the function \({\varvec{f}}\) is a bounded closed set. Then the sequence \(\{ \hat{{\varvec{x}}}({\varvec{p}}_j)\}\) has convergent subsequences by virtue of the Bolzano–Weierstrass theorem. Let \(\{{\varvec{x}}_{j_k} \} \subset \{ \hat{{\varvec{x}}}({\varvec{p}}_j) \}\) be such a subsequence. Since D is closed, \({\varvec{x}}_{\infty } = \lim _{k \rightarrow \infty } {\varvec{x}}_{j_k} \in D\) and

$$\begin{aligned} {\varvec{f}}({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k}) = \varvec{0} \end{aligned}$$

holds for every k. Letting \(k \rightarrow \infty\), we obtain \({\varvec{f}}({\varvec{x}}_{\infty },\,{\varvec{p}}) = \varvec{0}\) by virtue of continuity of \({\varvec{f}}\). On the other hand, the point \(\hat{{\varvec{x}}}({\varvec{p}}) \in D\) such that \({\varvec{f}}(\hat{{\varvec{x}}}({\varvec{p}}),\,{\varvec{p}}) = \varvec{0}\) is unique from the assumption. Therefore, \({\varvec{x}}_{\infty } = \hat{{\varvec{x}}}({\varvec{p}})\) holds. Since the sequence \(\{ \hat{{\varvec{x}}}({\varvec{p}}_j)\}\) is bounded and every convergent subsequence has the same limit \(\hat{{\varvec{x}}}({\varvec{p}})\), the whole sequence converges to \(\hat{{\varvec{x}}}({\varvec{p}})\).\(\square\)

6 Lyapunov tracing: definition and properties

Lyapunov Tracing, which we shall use later, is a mapping from a subset of the Lyapunov domain to the surface \(L^0 = \left\{ {\varvec{x}} \in D_L\,|\,L({\varvec{x}}) = 0\right\}\). It is defined using the flow \(\varphi (t,\,{\varvec{x}};\,{\varvec{p}})\) and the time T such that \(\varphi (T,\,{\varvec{x}};\,{\varvec{p}}) \in L^0\) holds. In this section, we refer to the arguments in [17] and show that Lyapunov Tracing is continuous with respect to \({\varvec{x}}\) and \({\varvec{p}}\) under the following assumption:

Assumption 6.1

There exists a closed ball \(B^+ \subset D_L\) including \({\varvec{x}}^*\) as an interior point such that either of the following statements holds for any \({\varvec{p}} \in D_p\) and any \({\varvec{x}} \in B^+ \cap L^+\).

1. There is a finite positive time \(T_0\) such that \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) \in B^+ \cap L^+\) for all \(t \in [0,T_0)\) and \(\varphi (T_0,\,{\varvec{x}};\,\,{\varvec{p}}) \in B^+ \cap L^0\).
2. \(\varphi (t,\,{\varvec{x}};\,{\varvec{p}}) \in L^+ \cap B^+\) for all \(t > 0\).

Hereafter let \(T_{0}({\varvec{x}},\,{\varvec{p}})\) denote \(T_{0}\) for \(({\varvec{x}},\,{\varvec{p}})\). Note that we treat the case when \(T_{0}\) is positive in this section, but similar arguments hold for the case when \(T_{0}\) is negative. See Sect. 8.

Remark 6.2

Roughly speaking, Lyapunov Tracing defined below is the mapping from points of a set onto the boundary (resp. a subset) along trajectories, where the flow immediately (resp. eventually) leaves the set. Continuity of such mappings is well understood in the context of isolating blocks or index pairs (e.g. [6, 22]), in which case the boundary or the subset mentioned above, the so-called exit of blocks, contains neither inner tangential points of trajectories nor invariant sets. Continuity of mappings onto the exit in such situations is characterized by means of strong deformation retracts, and the idea is based on the implicit construction of Lyapunov functions of invariant sets. The spirit of the present argument is similar to the above situation, while our present interest is an exit-like boundary that includes equilibria. In particular, if \({\varvec{x}}\) is on stable or unstable manifolds of equilibria on the exit, the passage time T takes the value \(\pm \infty\), which is a nontrivial point in guaranteeing the continuity. Nevertheless, explicitly validated Lyapunov functions around equilibria can be applied to the continuity of mappings attaining the boundary points through solution trajectories. Here the mapping onto the boundary through trajectories is defined by means of level sets of Lyapunov functions, which is the origin of the name Lyapunov Tracing, and properties of Lyapunov functions are used to prove the continuity. When the parameter vector \({\varvec{p}} \in D_{p}\) is fixed, the continuity with respect to \({\varvec{x}}\) is proved in [17].

6.1 Setting and the definition

We consider the autonomous system (1.1) and assume that Assumptions 1.1 and 6.1 hold. Fix \({\varvec{p}} \in D_p\) and \({\varvec{x}} \in B^+ \cap L^+\). By the definition of \(L^+\), \(L({\varvec{x}})\) is positive. By Assumption 6.1 and the monotonicity of Lyapunov functions along trajectories, for any \(L \in (0, L({\varvec{x}})]\) there exists a unique time \(t \ge 0\) such that \(L = L(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}))\) holds. Moreover, by virtue of the implicit function theorem, we regard the time t as a differentiable function of L and write \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) = \varphi (t(L),\,{\varvec{x}};\,\,{\varvec{p}})\).

Define the mapping \(R:(B^+ \cap L^+) \times D_p \ni ({\varvec{x}},\,{\varvec{p}}) \mapsto R({\varvec{x}},\,{\varvec{p}}) \in B^+ \cap L^0\) by

Note that

holds for any \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) \in D_L \backslash \{ {\varvec{x}}^*\}\). As long as \(L_{\epsilon } \in (\,0\,,\, L({\varvec{x}})\,]\) there is a positive time \(t_{\epsilon } > 0\) with \(L_{\epsilon } = L(\varphi (t_{\epsilon },\,{\varvec{x}};\,\,{\varvec{p}}))\) such that

We write the last line of (6.2) as \(\varphi (t(L_{\epsilon }),\,{\varvec{x}};\,\,{\varvec{p}})\).

If \({\varvec{x}}\) is outside \(W^s({\varvec{x}}^*;\,{\varvec{p}})\), then the limit of (6.2) exists since

by \(T_{0} = T_0({\varvec{x}},\,{\varvec{p}})\) in Case 1 of Assumption 6.1. On the other hand, if \({\varvec{x}}\) is in \(W^s({\varvec{x}}^*;\,{\varvec{p}})\), the limit of (6.2) also exists since

holds. Summarizing, for each \(({\varvec{x}},\,{\varvec{p}}) \in (B^+ \cap L^+) \times D_p\), the mapping R given in (6.1) is well-defined.

Definition 6.3

(Lyapunov Tracing) We call the mapping \(R: (B^+ \cap L^+) \times D_p \rightarrow B^+ \cap L^0\) given in (6.1) a Lyapunov Tracing.

Continuity of Lyapunov Tracing is discussed in the next subsection.
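The definition can be illustrated by a minimal floating-point sketch. It is not verified computation and not the authors' implementation: the vector field `f` (a planar linear saddle with a hypothetical parameter `p`), the quadratic function `L` with \(Y = \mathrm{diag}(1,-1)\), the step size and the helper names are all assumptions made for illustration. The sketch follows a trajectory from a point with \(L > 0\) until the level set \(L = 0\) is reached and returns the attained point together with the passage time.

```python
import math

def f(x, p):
    """Toy vector field (an assumption): x1' = -p*x1, x2' = x2, equilibrium at 0."""
    return (-p * x[0], x[1])

def L(x):
    """Quadratic function L(x) = x^T Y x with Y = diag(1, -1); dL/dt < 0 away from 0."""
    return x[0] ** 2 - x[1] ** 2

def rk4_step(x, p, h):
    """One classical Runge-Kutta step of size h (plain floating point)."""
    k1 = f(x, p)
    k2 = f((x[0] + 0.5 * h * k1[0], x[1] + 0.5 * h * k1[1]), p)
    k3 = f((x[0] + 0.5 * h * k2[0], x[1] + 0.5 * h * k2[1]), p)
    k4 = f((x[0] + h * k3[0], x[1] + h * k3[1]), p)
    return (x[0] + h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6,
            x[1] + h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6)

def lyapunov_tracing(x, p, h=1e-3, t_max=50.0):
    """Follow the flow from x (with L(x) > 0) until L = 0; return (point, time)."""
    t = 0.0
    while t < t_max:
        y = rk4_step(x, p, h)
        if L(y) <= 0.0:
            # L changes sign inside this step: bisect on the step length.
            lo, hi = 0.0, h
            for _ in range(60):
                mid = 0.5 * (lo + hi)
                if L(rk4_step(x, p, mid)) > 0.0:
                    lo = mid
                else:
                    hi = mid
            return rk4_step(x, p, hi), t + hi
        x, t = y, t + h
    # L stayed positive up to t_max: numerically, x behaves like a point of W^s.
    return x, math.inf

point, T0 = lyapunov_tracing((0.5, 0.01), 1.2)
print(point, T0)
```

For this toy field the passage time is available in closed form, \(T_0 = \ln |x_1/x_2| / (1+p)\), which gives a simple check of the sketch. A rigorous version would replace the Runge-Kutta step by a verified integrator such as the Lohner method.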

6.2 The continuity of Lyapunov tracing R

Theorem 6.4

The Lyapunov Tracing R given in (6.1) is continuous on \((B^+ \cap L^+) \times D_p\).

Our proof is based on Theorem 4.1 in [17] and consists of two parts.

6.2.1 Continuity of R on \((B^+ \cap L^+) \backslash \overline{\bigcup _{{\varvec{p}} \in D_p}W^s({\varvec{x}}^*;\,{\varvec{p}})} \times D_p\)

For each \(l \in (0,L_{\max })\) with \(L_{\max } = \min _{{\varvec{p}} \in D_p}\max _{{\varvec{x}} \in L^+ \cap B^+} L({\varvec{x}})\), we set

and define the mapping \(R_l :B_l \times D_p \rightarrow X_l\) by

with \(T_l({\varvec{x}},\,{\varvec{p}}) \in (0,T_0({\varvec{x}},\,{\varvec{p}}))\) such that \(\varphi (T_l({\varvec{x}},\,{\varvec{p}}),\,{\varvec{x}};\,\,{\varvec{p}}) \in X_l({\varvec{p}})\) and \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) \in B_l({\varvec{p}})\) for any \(t \in [0,T_l({\varvec{x}},\,{\varvec{p}}))\).

Note that \(T_l\) gives a continuous function \(T_l: B_l \times D_p \rightarrow {\mathbb {R}}^+\) and that \(R_l({\varvec{x}},\,{\varvec{p}})\) is continuous with respect to \(({\varvec{x}},\,{\varvec{p}}) \in B_l \times D_p\); both facts are proved in Lemma 6.5 below.

Lemma 6.5

The function \(T_l({\varvec{x}},\,{\varvec{p}})\) is continuous on \(B_l \times D_p\). Moreover the mapping \(R_l({\varvec{x}},\,{\varvec{p}})\) is also continuous on \(B_l \times D_p\).

Proof

Take an arbitrary point \(({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }) \in B_l \times D_p\) and any sequence of points \(({\varvec{x}}_i,\,{\varvec{p}}_i) \in B_l \times D_p\), which converges to \(({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty })\). Let us divide the sequence into two subsequences.

-

Subsequence 1. \(\{ ({\varvec{x}}_{i_k},\,{\varvec{p}}_{i_k}) \}_{k = 1,2,\ldots } \subset \{ ({\varvec{x}}_i,\,{\varvec{p}}_i) \}_{i = 1,2,\ldots }\) such that \(T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }) < T_l({\varvec{x}}_{i_k},\,{\varvec{p}}_{i_k})\) holds.

-

Subsequence 2. \(\{ ({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k}) \}_{k = 1,2,\ldots } \subset \{ ({\varvec{x}}_i,\,{\varvec{p}}_i) \}_{i = 1,2,\ldots }\) such that \(T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }) \ge T_l({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k})\) holds.

We prove this lemma using the continuous dependence of solutions of initial value problems for ordinary differential equations on initial values and parameters, namely for any fixed \(T \in {\mathbb {R}}\),

holds as \(\Vert ({\varvec{x}}',\,{\varvec{p}}') - ({\varvec{x}},\,{\varvec{p}}) \Vert \rightarrow 0\), where \(\Vert ({\varvec{x}},\,{\varvec{p}}) \Vert := \Vert {\varvec{x}}\Vert + \Vert {\varvec{p}}\Vert\).

-

Subsequence 1: \(T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }) < T_l({\varvec{x}}_{i_k},\,{\varvec{p}}_{i_k})\).

By the definition of \(T_l({\varvec{x}}_{i_k},\,{\varvec{p}}_{i_k})\), \(\varphi (T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }),\,{\varvec{x}}_{i_k};\,\,{\varvec{p}}_{i_k}) \in B_l({\varvec{p}}_{i_k})\) holds. Let \(L_k = L(\varphi (T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }),\,{\varvec{x}}_{i_k};\,\,{\varvec{p}}_{i_k});\,{\varvec{p}}_{i_k})\). We have \(L_k > l\) and

By (6.3), \(L_k\) converges to l as \(\Vert ({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }) - ({\varvec{x}}_{i_k},\,{\varvec{p}}_{i_k})\Vert \rightarrow 0\). Noting that the integrand in the above integral is finite for \(l > 0\), we obtain \(\varphi (T_l({\varvec{x}}_{i_k},\,{\varvec{p}}_{i_k}),\,{\varvec{x}}_{i_k};\,\,{\varvec{p}}_{i_k}) \rightarrow \varphi (T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }),\,{\varvec{x}}_{i_k};\,\,{\varvec{p}}_{i_k})\) as \(L_k \rightarrow l\). Since solution trajectories of autonomous systems do not intersect, this implies that \(T_l({\varvec{x}}_{i_k},\,{\varvec{p}}_{i_k})\) converges to \(T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty })\) as \(\Vert ({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }) - ({\varvec{x}}_{i_k},\,{\varvec{p}}_{i_k})\Vert \rightarrow 0\).

-

Subsequence 2: \(T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }) \ge T_l({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k})\).

By Assumption 6.1, \(\varphi (T_l({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k}),\,{\varvec{x}}_{\infty };\,\,{\varvec{p}}_{\infty }) \in B^+ \cap L^+\) and \(0 < T_l({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k}) \le T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty })\) hold. Since \(T_l({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k})\) is bounded, its upper limit and its lower limit are finite and

holds. Let \(T_l^{\infty } := \varliminf _{k \rightarrow \infty } T_l({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k})\). From the property of lower limit, there exists a subsequence \(\left\{ \left( {\varvec{x}}_{j_{k_m}},\,{\varvec{p}}_{j_{k_m}} \right) \right\} _{m = 1,2,\ldots } \subset \{ ({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k}) \}_{k = 1,2,\ldots }\) such that \(T_{l}({\varvec{x}}_{j_{k_m}},\,{\varvec{p}}_{j_{k_m}})\) converges to \(T_l^{\infty }\). On the other hand we have

for \(m \rightarrow \infty\) by (6.3). These yield

which implies that \(L(\varphi (T_l^{\infty },\,{\varvec{x}}_{\infty };\,\,{\varvec{p}}_{\infty }))\) must be l and then \(T_l^{\infty } = T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty })\) from the definition of \(T_l({\varvec{x}},\,{\varvec{p}})\) and \(T_l^{\infty } \le T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty })\). This means \(\varlimsup _{k \rightarrow \infty } T_l({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k}) = \varliminf _{k \rightarrow \infty } T_l({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k}) = T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty })\), and we conclude \(T_l({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k})\) converges to \(T_l({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty })\) as \(\Vert ({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty }) - ({\varvec{x}}_{j_k},\,{\varvec{p}}_{j_k})\Vert \rightarrow 0\).

It is obvious that the continuity of \(T_{l}\) ensures continuity of \(R_l({\varvec{x}},\,{\varvec{p}})\) on \(B_l \times D_p\) from the definition of \(R_{l}\). \(\square\)

Similarly, continuity of \(T_0({\varvec{x}},\,{\varvec{p}})\) leads to continuity of R on \((B^+ \cap L^+) \backslash \overline{\bigcup _{{\varvec{p}} \in D_p}W^s({\varvec{x}}^*;\,{\varvec{p}})} \times D_{p}\). If we fix \({\varvec{p}} \in D_{p}\), the continuity of \(T_0({\varvec{x}},\,{\varvec{p}})\) with respect to \({\varvec{x}} \in (B^+ \cap L^+) \backslash \overline{W^s({\varvec{x}}^*;\,{\varvec{p}})}\) can be proved in almost the same manner as in Lemma 6.5, noting that the integrand in the right-hand side of (6.4) is still finite for \(l=0\). In order to prove the continuity of \(T_0({\varvec{x}},\,{\varvec{p}})\) with respect to \(\left( {\varvec{x}},\,{\varvec{p}} \right) \in (B^+ \cap L^+) \backslash \overline{\bigcup _{{\varvec{p}} \in D_p}W^s({\varvec{x}}^*;\,{\varvec{p}})} \times D_{p}\), we need the following lemma.

Lemma 6.6

For an arbitrary \({\varvec{p}} \in D_p\) and an arbitrary \({\varvec{x}} \in (L^+ \cap B^+) \backslash W^s({\varvec{x}}^*;\,{\varvec{p}})\) there exist \(r > 0\) and \(\delta > 0\) such that \({\varvec{x}}' \notin W^s({\varvec{x}}^*;\,{\varvec{p}}')\) holds for any \({\varvec{x}}'\) and \({\varvec{p}}'\) within \(\Vert {\varvec{x}}' - {\varvec{x}}\Vert < r\), \(\Vert {\varvec{p}}' - {\varvec{p}}\Vert < \delta\).

Proof

We derive a contradiction by assuming that, for any \(r>0\) and \(\delta > 0\), there exist \({\varvec{x}}' \in (L^+ \cap B^+)\) and \({\varvec{p}}' \in D_p\) such that

holds. Since the equilibrium point \({\varvec{x}}^*\) is a saddle, there are the stable eigenspace \(E^{\nu } = \text {span}\{ {\varvec{u}}_1, \ldots ,\,{\varvec{u}}_{\nu } \}\) and the unstable eigenspace \(E^{n - \nu } = \text {span}\{ {\varvec{u}}_{\nu + 1} ,\ldots ,\,{\varvec{u}}_n \}\) of \(D{\varvec{f}}^*(\tilde{\varvec{p}})\). Note that \({\mathbb {R}}^n = E^{\nu } \oplus E^{n - \nu }\) holds since \({\varvec{x}}^*\) is hyperbolic, which indicates the \(n \times n\) matrix \(U = ({\varvec{u}}_1,\,{\varvec{u}}_2,\ldots ,\,{\varvec{u}}_n)\) is invertible. We can write an arbitrary \({\varvec{x}} \in {\mathbb {R}}^n\) as

From Lemma 5.1, the cone condition is satisfied in \(D_L\) ([16]). Thus there exists a function \(\varvec{h}_s:E^{\nu } \times D_p \rightarrow E^{n - \nu }\) (cf. [15, 30]) such that

holds for an arbitrary \({\varvec{q}} \in D_{p}\). Moreover \(\varvec{h}_s\) is Lipschitz continuous with respect to \(\varvec{\eta }\) and \({\varvec{q}}\), respectively.

Write \({\varvec{x}} = (\varvec{\eta },\varvec{\zeta })\) and \({\varvec{x}}' = (\varvec{\eta }',\varvec{\zeta }')\). From the assumptions, \(\varvec{\zeta } \ne \varvec{h}_s(\varvec{\eta },\,{\varvec{p}})\) and \(\varvec{\zeta }' = \varvec{h}_s(\varvec{\eta }',\,{\varvec{p}}')\) hold. Let \(\hat{{\varvec{x}}} = (\varvec{\eta },\varvec{h}_s(\varvec{\eta },\,{\varvec{p}})) \in W^s({\varvec{x}}^*;\,{\varvec{p}})\) and \(\epsilon _0 = \Vert \hat{{\varvec{x}}} - {\varvec{x}}\Vert\). Since \(\hat{{\varvec{x}}} \ne {\varvec{x}}\), \(\epsilon _0\) is positive.

Take r in (6.5) so that \(r < \frac{1}{4}\epsilon _0\) holds, and we have

On the other hand, from the continuity of \(\varvec{h}_s\), there exist \(r' > 0\) and \(\delta ' > 0\) for \(\frac{1}{4}\epsilon _0\) such that

holds.

Take r and \(\delta\) in (6.5) to be small enough so that \(({\varvec{x}},\,{\varvec{p}})\) and \(({\varvec{x}}',\,{\varvec{p}}')\) satisfy (6.8). Then the left-hand side of (6.7) is estimated from below as

which yields a contradiction.\(\square\)

Lemma 6.7

The function \(T_0({\varvec{x}},\,{\varvec{p}})\) is continuous on \((B^+ \cap L^+) \backslash \overline{\bigcup _{{\varvec{p}} \in D_p}W^s({\varvec{x}}^*;\,{\varvec{p}})} \times D_p\).

Proof

Take arbitrary \({\varvec{p}}_{\infty } \in D_p\) and \({\varvec{x}}_{\infty } \in (B^+ \cap L^+) \backslash \overline{W^s({\varvec{x}}^*;\,{\varvec{p}}_{\infty })}\). By Lemma 6.6 there exist \(r > 0\) and \(\delta > 0\), depending on \({\varvec{p}}_{\infty }\) and \({\varvec{x}}_{\infty }\), such that \({\varvec{x}}' \notin W^s({\varvec{x}}^*;\,{\varvec{p}}')\) holds whenever \(\Vert {\varvec{x}}' - {\varvec{x}}_{\infty }\Vert < r\) and \(\Vert {\varvec{p}}' - {\varvec{p}}_{\infty }\Vert < \delta\). Let

Take any sequence of points \(({\varvec{x}}_i,\,{\varvec{p}}_i) \in B_{r,\delta }\), \(i = 1,2,\ldots\), which converges to \(({\varvec{x}}_{\infty },\,{\varvec{p}}_{\infty })\). Since \({\varvec{x}}_{i} \notin W^s({\varvec{x}}^*;\,{\varvec{p}}_{i})\), the integrand in the right-hand side of (6.4) is finite with \(l = 0\) for each \(({\varvec{x}}_{i},\,{\varvec{p}}_{i}) \in B_{r,\delta }\). Then an argument similar to the proof of Lemma 6.5 yields the conclusion. \(\square\)

Lemma 6.7 leads to the continuity of \(R({\varvec{x}},\,{\varvec{p}})\) on \((B^+ \cap L^+) \backslash \overline{\bigcup _{{\varvec{p}} \in D_p} W^s({\varvec{x}}^*;\,{\varvec{p}})} \times D_p\) by the definition of R; namely, we have the following proposition.

Proposition 6.8

The mapping \(R({\varvec{x}},\,{\varvec{p}})\) is continuous on \(\left( (B^+ \cap L^+) \backslash \overline{\bigcup _{{\varvec{p}} \in D_p} W^s({\varvec{x}}^*;\,{\varvec{p}})} \right) \times D_p\).

6.2.2 Continuity of R on \(\overline{\bigcup _{{\varvec{p}} \in D_p} W^s({\varvec{x}}^*;\,{\varvec{p}})} \times D_p\)

For a given \(r > 0\), consider a compact set \(D_r\) defined by

Also define

From the property of Lyapunov functions, \(\nabla _{x} L({\varvec{x}}) \cdot {\varvec{f}}({\varvec{x}};\,\,{\varvec{p}}) = \frac{d}{dt}L(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}))\) is negative within \(D_r\) and \({\varvec{f}}({\varvec{x}};\,\,{\varvec{p}}) \ne \varvec{0}\) since \({\varvec{x}} \in D_r\). Then \(\alpha (r)\) takes a positive value by the continuity of \(\nabla _{x} L({\varvec{x}}) \cdot {\varvec{f}}({\varvec{x}};\,\,{\varvec{p}})\) with respect to \({\varvec{p}}\) and the compactness of \(D_p\). From the definition we have

as long as \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) \in D_r\). Moreover the following lemma holds.

Lemma 6.9

Fix \({\varvec{p}} \in D_p\) and take any \(l \in (0,L_{\text {max}})\) and \({\varvec{x}} \in X_{l}\). Let \(r = \Vert {\varvec{x}} - {\varvec{x}}^*\Vert\) and assume that \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) \in D_r\) holds for \(0 \le t \le T_0({\varvec{x}},\,{\varvec{p}})\). Then we have

Proof

Note that \({\varvec{x}} \in B^+ \backslash W^s({\varvec{x}}^*;\,{\varvec{p}})\) from the assumption \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) \in D_r\) for \(0 \le t \le T_0({\varvec{x}},\,{\varvec{p}})\), which means \(T_0({\varvec{x}},\,{\varvec{p}})\) takes a finite positive value. Then using (6.9) we have

which implies (6.10). \(\square\)

Now we prove the following proposition.

Proposition 6.10

The mapping \(R({\varvec{x}},\,{\varvec{p}})\) is continuous on \(\left( B^+ \cap \overline{\bigcup _{{\varvec{p}} \in D_p}W^s ({\varvec{x}}^*;\,{\varvec{p}})} \right) \times D_p\).

Proof

Note that \(B^+ \cap \overline{W^s ({\varvec{x}}^*;\,{\varvec{p}})} = B^+ \cap W^s ({\varvec{x}}^*;\,{\varvec{p}})\) for each \({\varvec{p}} \in D_p\) ([17]). Moreover \(W^s ({\varvec{x}}^*;\,{\varvec{p}})\) is represented as the graph of a Lipschitz function \(\varvec{h}_s(\varvec{\eta },\,{\varvec{p}})\). Then \({B^+ \bigcap (\bigcup _{{\varvec{p}} \in D_p}W^s ({\varvec{x}}^*;\,{\varvec{p}}))}\) is bounded and closed since \(B^+\) and \(D_p\) are compact. Therefore, \(B^+ \cap \overline{\bigcup _{{\varvec{p}} \in D_p}W^s ({\varvec{x}}^*;\,{\varvec{p}})} = B^+ \cap (\bigcup _{{\varvec{p}} \in D_p}W^s ({\varvec{x}}^*;\,{\varvec{p}}))\). Take an arbitrary \(({\varvec{z}},\,{\varvec{p}}) \in (B^+ \cap W^s ({\varvec{x}}^*;\,{\varvec{p}})) \times D_p\) and an arbitrary sequence \(\{({\varvec{x}}_i,\,{\varvec{p}}_i)\}_{i=1}^{\infty } \subset (B^+ \cap L^+) \times D_p\) which converges to \(({\varvec{z}},\,{\varvec{p}})\). We show that \(\lim _{i \rightarrow \infty } R({\varvec{x}}_i,\,{\varvec{p}}_i) = R({\varvec{z}},\,{\varvec{p}}) = {\varvec{x}}^*\) by contradiction.

Let \({\varvec{y}}_i = R({\varvec{x}}_i,\,{\varvec{p}}_i) \in B^+ \cap L^0\). Since \(B^+ \cap L^0\) is bounded and closed, the sequence \(\{ {\varvec{y}}_i \}\) has a subsequence \(\{ {\varvec{y}}_{i_{j}} \}_{j = 1}^{\infty }\) which converges to some \({\varvec{y}}^*\in B^+ \cap L^0\). We derive a contradiction by assuming that \({\varvec{y}}^*\ne {\varvec{x}}^*\). Define a positive value r by \(r := \frac{1}{2} \Vert {\varvec{y}}^*- {\varvec{x}}^*\Vert\). Take a sufficiently large finite time \(t_{l_0}\) such that \(\Vert \varphi (t_{l_0},\,{\varvec{z}};\,\,{\varvec{p}}) - {\varvec{x}}^*\Vert < r\) holds, which is possible since \({\varvec{z}} \in W^s({\varvec{x}}^*;\,{\varvec{p}})\). Let \(l_0 = L(\varphi (t_{l_0},\,{\varvec{z}};\,\,{\varvec{p}});\,{\varvec{p}})\) and note \(R_{l_0}({\varvec{z}},\,{\varvec{p}}) = \varphi (t_{l_0},\,{\varvec{z}};\,\,{\varvec{p}})\). By virtue of the continuity of \(R_{l_0}\) at \(({\varvec{z}},\,{\varvec{p}})\) from Lemma 6.5, \(\Vert R_{l_0}({\varvec{x}}_{i_j},\,{\varvec{p}}_{i_j}) - {\varvec{x}}^*\Vert < r\) holds for sufficiently large j. Then take some large J such that \(\Vert R_{l_0}({\varvec{x}}_{i_j},\,{\varvec{p}}_{i_j}) - {\varvec{x}}^*\Vert < r\) and \(\Vert {\varvec{y}}^*- {\varvec{y}}_{i_j}\Vert < \frac{1}{2}r\) hold for any \(j \ge J\).

Let \({\varvec{x}}_{l_0} = R_{l_0}({\varvec{x}}_{i_j},\,{\varvec{p}}_{i_j})\) for \(j \ge J\). Since \(\Vert {\varvec{x}}_{l_0} - {\varvec{x}}^*\Vert < r\) and \(\varphi (T_0({\varvec{x}}_{l_0},\,{\varvec{p}}_{i_j}),\,{\varvec{x}}_{l_0};\,\,{\varvec{p}}_{i_j}) = {\varvec{y}}_{i_j} \in D_r({\varvec{p}})\) from \(\Vert {\varvec{y}}^*- {\varvec{y}}_{i_j}\Vert < \frac{1}{2}r\), there exists a time \(t_l \in (0,T_0({\varvec{x}}_{l_0},\,{\varvec{p}}_{i_j}))\) such that \(\Vert \varphi (t_l,\,{\varvec{x}}_{l_0};\,\,{\varvec{p}}_{i_j}) - {\varvec{x}}^*\Vert = r\) and \(\varphi (t,\,{\varvec{x}}_{l_0};\,\,{\varvec{p}}_{i_j}) \in D_r\) hold for any \(t \in [t_l,T_0({\varvec{x}}_{l_0},\,{\varvec{p}}_{i_j})]\).

Let \({\varvec{x}}_l := \varphi (t_l,\,{\varvec{x}}_{l_0};\,{\varvec{p}}_{i_j})\) and \(l := L({\varvec{x}}_l,\,{\varvec{p}}_{i_j}) < l_0\). Applying Lemma 6.9 to \({\varvec{x}} = {\varvec{x}}_l\), we have

and

This inequality and \(\Vert {\varvec{y}}^*- {\varvec{x}}^*\Vert = 2r\) imply

On the other hand, we can take an arbitrarily large time \(t_{l_0}\) while r is fixed. Therefore \(l_0\) and \(l < l_0\) can be taken arbitrarily small and it is possible to have \(\frac{l}{\alpha (r)} < \frac{1}{2}r\). This contradicts (6.11).Footnote 2\(\square\)
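As a simple illustration of why the continuity at the stable manifold is plausible even though the passage time diverges, consider the following toy example, which is our own assumption and is not one of the numerical examples of this paper: the planar linear saddle \(\dot{x}_1 = -x_1\), \(\dot{x}_2 = x_2\) with \({\varvec{x}}^* = \varvec{0}\) and \(L({\varvec{x}}) = x_1^2 - x_2^2\), for which \(W^s({\varvec{x}}^*)\) is the \(x_1\)-axis. For \({\varvec{x}} = (x_1, x_2)\) with \(|x_1|> |x_2| > 0\), a direct computation gives

$$\begin{aligned} \varphi (t,\,{\varvec{x}})&= \left( x_1 e^{-t},\, x_2 e^{t} \right) ,\\ T_0({\varvec{x}})&= \frac{1}{2} \ln \frac{|x_1|}{|x_2|},\\ R({\varvec{x}})&= \left( \mathrm {sgn}(x_1) \sqrt{|x_1 x_2|},\, \mathrm {sgn}(x_2) \sqrt{|x_1 x_2|} \right) . \end{aligned}$$

Hence \(T_0({\varvec{x}}) \rightarrow \infty\) as \(x_2 \rightarrow 0\), while \(\Vert R({\varvec{x}}) \Vert = \sqrt{2 |x_1 x_2|} \rightarrow 0\), which agrees with \(R({\varvec{x}}) = {\varvec{x}}^*\) on the stable manifold.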

7 Numerical procedure

Before describing mathematical arguments for the existence of homoclinic orbits, we explain our numerical procedures so that readers can use our method as a tool for analysis of dynamical systems. Several figures to illustrate our procedures are shown in Sect. 10 with numerical examples. Through the procedures we use verified computation as necessary together with interval arithmetic [18].

The first procedure shows how to specify the points on the unstable manifolds and gives sufficient conditions to construct the continuous mapping \(F_{1}\) mentioned in Sect. 1.

Procedure 1

-

1.

Find a parameter vector \(\tilde{{\varvec{p}}}\) and a point \({\varvec{x}}_{0} \in {\mathbb {R}}^{n}\) which admit an approximate homoclinic orbit \(\{ \varphi (t,\,{\varvec{x}}_{0};\tilde{{\varvec{p}}}) ~|~t \in {\mathbb {R}} \}\) by floating-point arithmetic. See Fig. 3 in Sect. 10. Take \(D_p \subset {\mathbb {R}}^{n-\nu }\) as a box-shaped closed domain defined by an interval vector \([D_p] \subset {\mathbb {R}}^{n-\nu }\) including \(\tilde{{\varvec{p}}}\) as an interior point. We assume the radius of each interval element of \([D_p]\) is small enough. Hereafter we use \(D_{p}\) in mathematical expressions and \([D_{p}]\) in verified computation with intervals.

-

2.

Construct the symmetric matrix Y which gives the Lyapunov function \(L({\varvec{x}})\) as described in Sect. 3. Verify that \(L({\varvec{x}}) = ({\varvec{x}} - {\varvec{x}}^*)^T Y ({\varvec{x}} - {\varvec{x}}^*)\) is a Lyapunov function around the equilibrium \({\varvec{x}}^*\) by specifying the domain \(D_L\) of \(L({\varvec{x}})\) by verified computation. Specification of \(D_{L}\) is carried out by repetitive computation to obtain the closed convex domain \(D_{L}\) as large as possible such that the interval matrix \(Df({\varvec{x}}; [D_{p}])^T Y + Y\,Df({\varvec{x}};[D_{p}])\) is negative definite for an arbitrary \({\varvec{x}} \in D_{L}\). See Fig. 4 in Sect. 10.

-

3.

Compute interval vectors \([\varvec{v}_i] \subset {\mathbb {R}}^{n}, i=1,\ldots ,n\) by verified computation which contain orthonormal eigenvectors \(\varvec{v}_i, i=1,\ldots ,n\) of the symmetric matrix Y, namely \(Y \varvec{v}_i = \lambda _i\varvec{v}_i, i = 1,\ldots ,n,\) for \(\lambda _1 \ge \lambda _2 \ge \ldots \ge \lambda _{\nu }> 0 > \lambda _{\nu +1} \ge \ldots \ge \lambda _n\), and \(\Vert \varvec{v}_i\Vert = 1,~i = 1,2,\ldots ,n\) with \(\varvec{v}_i^T \varvec{v}_j = 0\) for \(i \ne j\). Computer programs for the verified computation of eigenvectors can be found in INTLAB, for example [21]. Put matrices

$$\begin{aligned} A^{+}&:= \left( \varvec{v}_{1}~ \varvec{v}_{2}~\ldots ~\varvec{v}_{\nu }\right) \in {\mathbb {R}}^{n\times \nu },\\ A^{-}&:= \left( \varvec{v}_{\nu +1}~ \varvec{v}_{\nu +2}~\ldots ~\varvec{v}_n \right) \in {\mathbb {R}}^{n\times ( n - \nu )} \end{aligned}$$

and interval matrices

$$\begin{aligned}{}[A^{+}]&:= \left( [\varvec{v}_{1}]~ [\varvec{v}_{2}]~\ldots ~[\varvec{v}_{\nu }]\right) \subset {\mathbb {R}}^{n\times \nu },\\ [A^{-}]&:= \left( [\varvec{v}_{\nu +1}]~ [\varvec{v}_{\nu +2}]~\ldots ~[\varvec{v}_n] \right) \subset {\mathbb {R}}^{n\times ( n - \nu )}. \end{aligned}$$

Note that the positive definiteness of \((A^{+})^T Y A^{+}\) and the negative definiteness of \((A^{-})^T Y A^{-}\) are ensured by numerical verification of \([A^{+}]^T Y [A^{+}]\) and \([A^{-}]^T Y [A^{-}]\), respectively.

-

4.

Find \(r^- > 0\) such that a closed ball \(B^- = \{ {\varvec{x}} \in D_L ~|~ \Vert {\varvec{x}} - {\varvec{x}}^*\Vert \le r^- \}\) satisfies the following requirements (see also Assumption 8.1 below). Let \(\partial B^-\) denote the boundary of \(B^-\). Divide \(\partial B^- \cap L^{-}\) into subdomains enclosed by interval vectors \([B^-]_k \subset {\mathbb {R}}^n,k = 1,2,\ldots ,K_{0}\). Then verify that either of the following conditions holds for each k:

-

(I)

\({\varvec{n}}({\varvec{x}})^T {\varvec{f}}({\varvec{x}};\,{\varvec{p}}) > 0\) for arbitrary \({\varvec{x}} \in \partial B^{-}\cap [B^-]_{k}\) and \({\varvec{p}} \in D_p\), where \({\varvec{n}}({\varvec{x}})\) denotes the outward normal vector of \(\partial B^-\) at \({\varvec{x}}\).

-

(II)

There exists a negative time \(T^-_{k}\) such that \(L(\varphi (T^-_{k},\,{\varvec{x}};\,\,{\varvec{p}})) > 0\) holds for arbitrary \({\varvec{x}} \in [B^-]_{k}\) and \({\varvec{p}} \in D_p\) and \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) \in D_L\) holds for any \(t \in [T^-_{k}, 0]\).

These conditions are checked by verified numerics. Interval computation of \({\varvec{n}}([B^-]_k)^T {\varvec{f}}([B^-]_k; [D_p])\) can be used for the condition (I). If the verification does not succeed, adjust \(r^-\) by trial and error until an appropriate value is found.


-

5.

Choose a point \(\tilde{{\varvec{x}}} = \sum _{i=1}^n {\tilde{x}}_i \varvec{v}_i \in \{ \varphi (t,\,{\varvec{x}}_{0};\tilde{{\varvec{p}}}) ~|~ t \in {\mathbb {R}} \} \cap \left( B^{-}\cap L^{-} \right)\) and define the \(\nu\)-dimensional subset \(\Sigma \subset {\mathbb {R}}^n\) by

$$\begin{aligned} \Sigma = \left\{ {\varvec{x}} \in {\mathbb {R}}^n \,|\,\,{\varvec{x}} = \sum _{i=1}^n x_i \varvec{v}_i, x_{\nu +1} = {\tilde{x}}_{\nu + 1},\ldots , x_n = {\tilde{x}}_n \right\} . \end{aligned}$$

-

6.

Take a \(\nu\)-dimensional ball \(B_0(\tilde{{\varvec{x}}},r) = \left\{ \,\,{\varvec{x}} \in \Sigma \,|\,~ \Vert {\varvec{x}} - \tilde{{\varvec{x}}}\Vert \le r \, \right\} \subset (\Sigma \cap B^- \cap L^-)\) as a candidate set which should include a point of \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) for any \({\varvec{p}} \in D_p\). See Fig. 5 in Sect. 10. Divide the boundary \(\partial B_0(\tilde{{\varvec{x}}},r)\) of the ball \(B_0(\tilde{{\varvec{x}}},r)\) into subdomains enclosed by interval vectors \([{\varvec{b}}]_k \subset {\mathbb {R}}^n\), \(k = 1,2,\ldots ,K\). Verify that there exists a negative time \(T_1({\varvec{x}}_{b},\,{\varvec{p}})\) such that \(L(\varphi (T_1({\varvec{x}}_{b},\,{\varvec{p}}),\,{\varvec{x}}_{b};\,{\varvec{p}})) = 0\) for every \({\varvec{x}}_{b} \in [{\varvec{b}}]_{k}\), \({\varvec{p}} \in D_{p}\) and k (\(k = 1,\ldots ,K\)). Moreover specify an interval vector \([{\varvec{x}}]_{k}\) for each k (\(k = 1,\ldots ,K\)), which satisfies \(\varphi (T_1({\varvec{x}}_{b},\,{\varvec{p}}),\,{\varvec{x}}_{b};\,{\varvec{p}}) \in [{\varvec{x}}]_{k}\) for every \({\varvec{x}}_{b} \in [{\varvec{b}}]_{k}\) and \({\varvec{p}} \in D_{p}\). This can be carried out by finding times \(0> T_{k}^{-} > T_{k}^{+}\) such that

$$\begin{aligned} L(\varphi (T_k^{-}, [{\varvec{b}}]_{k}; [D_p]))< 0 < L(\varphi (T_k^{+}, [{\varvec{b}}]_{k}; [D_p])) \end{aligned}$$

holds and by using interval Lohner’s method, for example.

-

7.

Define the subspace \(\Gamma ^{-} \subset {\mathbb {R}}^n\) by

$$\begin{aligned} \Gamma ^{-} = \{ {\varvec{x}} \in {\mathbb {R}}^n \,|\, (A^{-})^T {\varvec{x}} = \varvec{0} \} \qquad (7.1) \end{aligned}$$

and the projection \(P^{-}:{\mathbb {R}}^n \rightarrow \Gamma ^{-}\) by \(P^{-} = I - A^{-}(A^{-})^T\), where I is the identity mapping on \({\mathbb {R}}^n\). Calculate an interval matrix \([P^{-}] \subset {\mathbb {R}}^{n \times n}\) by \([P^{-}] = I - [A^{-}][A^{-}]^T\).

-

8.

Verify the following conditions using \([P^{-}]\).

-

The set \(\bigcup _{k=1,\ldots ,K}[P^{-}][{\varvec{x}}]_{k}\) contains a closed surface \(S^{-} \subset \Gamma ^{-}\) which is homeomorphic to a sphere in \(\Gamma ^{-}\).

-

The domain \(C^{-} \subset \Gamma ^{-}\) surrounded by the closed surface \(S^{-}\) includes \([P^{-}]{\varvec{x}}^*\).

-

\([P^{-}]{\varvec{x}}^*\notin \bigcup _{k=1,\ldots ,K}[P^{-} ][{\varvec{x}}]_{k}\).

See Fig. 6 for the 3-dimensional case and Fig. 10 for the 4-dimensional case in Sect. 10.

-

9.

Verify that deg(\(P^{-} \circ \varphi (T_1({\varvec{x}}_{b},\,{\varvec{p}}),\,{\varvec{x}}_{b};\,\,{\varvec{p}})\)) with respect to \({\varvec{x}}_{b} \in \partial B_0(\tilde{{\varvec{x}}}, r)\) is not 0 for each fixed \({\varvec{p}} \in D_p\) by using Theorem 4.3 or Aberth’s method and interval arithmetic with respect to \([P^{-}]\), \([{\varvec{b}}]_k\) and \([D_p]\). Then the ball \(B_0(\tilde{{\varvec{x}}},r)\) includes a point of \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) for each \({\varvec{p}} \in D_p\), which is proved in the next section. Note: Trial and error may be necessary to find an appropriate value of r in Step 6 so that the conditions in Steps 7, 8 and 9 are satisfied.
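The negative definiteness check in Step 2 of Procedure 1 can be sketched as follows. This is only an illustration under stated assumptions: the `Interval` class is a naive interval type without outward rounding, so the result is not a rigorous verification; the Jacobian in `jacobian_box` belongs to a hypothetical planar system \(\dot{x}_1 = -x_1 + p x_2^2\), \(\dot{x}_2 = x_2 + x_1^2\); and Gershgorin's circle theorem is used here as one possible sufficient criterion, which is not necessarily the criterion used by the authors.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Interval:
    """Naive closed interval [lo, hi]; no directed rounding (not rigorous)."""
    lo: float
    hi: float

    def __add__(self, other):
        o = as_interval(other)
        return Interval(self.lo + o.lo, self.hi + o.hi)
    __radd__ = __add__

    def __mul__(self, other):
        o = as_interval(other)
        c = [self.lo * o.lo, self.lo * o.hi, self.hi * o.lo, self.hi * o.hi]
        return Interval(min(c), max(c))
    __rmul__ = __mul__

    def mag(self):
        """Largest absolute value attained in the interval."""
        return max(abs(self.lo), abs(self.hi))

def as_interval(v):
    return v if isinstance(v, Interval) else Interval(float(v), float(v))

def lyapunov_matrix(Df, Y):
    """Interval enclosure of Df^T Y + Y Df for an interval matrix Df and point matrix Y."""
    n = len(Y)
    M = [[Interval(0.0, 0.0) for _ in range(n)] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                M[i][j] = M[i][j] + Df[k][i] * Y[k][j] + Y[i][k] * Df[k][j]
    return M

def gershgorin_negative_definite(M):
    """Sufficient test: every Gershgorin disc of every symmetric matrix in M lies in Re < 0."""
    n = len(M)
    for i in range(n):
        off = sum(max(M[i][j].mag(), M[j][i].mag()) for j in range(n) if j != i)
        if M[i][i].hi + off >= 0.0:
            return False
    return True

def jacobian_box(radius):
    """Jacobian of the hypothetical system over |x1|, |x2| <= radius and p in [0.9, 1.1]."""
    x1 = Interval(-radius, radius)
    x2 = Interval(-radius, radius)
    p = Interval(0.9, 1.1)
    return [[Interval(-1.0, -1.0), 2 * p * x2],
            [2 * x1, Interval(1.0, 1.0)]]

Y = [[1.0, 0.0], [0.0, -1.0]]
print(gershgorin_negative_definite(lyapunov_matrix(jacobian_box(0.2), Y)))  # True
print(gershgorin_negative_definite(lyapunov_matrix(jacobian_box(1.0), Y)))  # False
```

The second call fails because the candidate domain is too large, which mirrors the repetitive search for a domain \(D_L\) that is as large as possible. In actual verified computation, an interval library with directed rounding such as INTLAB would take the place of the naive `Interval` type.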

Procedure 2 below confirms sufficient conditions for constructing the continuous mappings \(F_{2}\) and F introduced in Sect. 1.

Procedure 2

-

1.

Find \(r^+ > 0\) such that a closed ball \(B^+ = \{ {\varvec{x}} \in D_L ~|~ \Vert {\varvec{x}} - {\varvec{x}}^*\Vert \le r^+ \}\) mentioned in Assumption 6.1 satisfies the following. Let \(\partial B^+\) denote the boundary of \(B^+\). Divide \(\partial B^+ \cap L^{+}\) into subdomains enclosed by interval vectors \([B^+]_k \subset {\mathbb {R}}^n,k = 1,2,\ldots ,K'_{0}.\) Verify that either of the following holds for each k.

- (I)\('\):

-

\({\varvec{n}}({\varvec{x}})^T {\varvec{f}}({\varvec{x}};\,{\varvec{p}}) < 0\) for arbitrary \({\varvec{x}} \in [B^+]_{k}\) and \({\varvec{p}} \in D_p\), where \({\varvec{n}}({\varvec{x}})\) denotes the outward normal vector of \(\partial B^+\) at \({\varvec{x}}\). Interval computation of \({\varvec{n}}([B^+]_k)^T {\varvec{f}}([B^+]_k; [D_p])\) can be used for the verification.

- (II)\('\):

-

There exists a positive time \(T^+_{k}\) such that \(L(\varphi (T^+_{k},\,{\varvec{x}};\,\,{\varvec{p}})) < 0\) holds for arbitrary \({\varvec{x}} \in [B^+]_{k}\) and \({\varvec{p}} \in D_p\) and \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) \in D_L\) holds for any \(t \in [0, T^+_{k}]\).

If the verification does not succeed, adjust \(r^+\) by trial and error until an appropriate value is found.

-

2.

Verify that there exists a positive time \(T_2\) such that \(\varphi (T_2,[B_0(\tilde{{\varvec{x}}},r)] ;[D_p]) \subset B^{+}\cap L^{+}\) holds, where \([B_0(\tilde{{\varvec{x}}},r)]\) is an interval vector or a union of interval vectors which includes \(B_0(\tilde{{\varvec{x}}},r)\). See Fig. 7 in Sect. 10.

-

3.

Divide the boundary \(\partial D_p\) of \(D_p\) into subdomains enclosed by interval vectors \([{\varvec{d}}]_k \subset {\mathbb {R}}^{n-\nu }\), \(k = 1,2,\ldots ,K'\). Verify that there exists a positive time \(T_3({\varvec{x}},\,{\varvec{p}}_{b}) > T_{2}\) such that \(L(\varphi (T_3({\varvec{x}},\,{\varvec{p}}_{b}),\,{\varvec{x}};\,{\varvec{p}}_{b})) = 0\) for every \({\varvec{x}} \in B_0(\tilde{{\varvec{x}}},r)\), \({\varvec{p}}_{b} \in [{\varvec{d}}]_k\) and k (\(k = 1,\ldots ,K'\)). Moreover specify an interval vector \([{\varvec{x}}']_{k}\) for each k (\(k = 1,\ldots ,K'\)), which satisfies \(\varphi (T_3({\varvec{x}},\,{\varvec{p}}_{b}),\,{\varvec{x}};\,{\varvec{p}}_{b}) \in [{\varvec{x}}']_{k}\) for every \({\varvec{x}} \in B_0(\tilde{{\varvec{x}}},r)\) and \({\varvec{p}}_{b} \in [{\varvec{d}}]_{k}\). See Fig. 8 in Sect. 10.

-

4.

Define the subspace \(\Gamma ^{+} \subset {\mathbb {R}}^n\) by

$$\begin{aligned} \Gamma ^{+} = \{ {\varvec{x}} \in {\mathbb {R}}^n \,|\, (A^{+})^T {\varvec{x}} = \varvec{0} \} \end{aligned}$$

and the projection \(P^{+}:{\mathbb {R}}^n \rightarrow \Gamma ^{+}\) by \(P^{+} = I - A^{+}(A^{+})^T\) and an interval matrix \([P^{+}] = I - [A^{+}][A^{+}]^{T}\), where I is the identity mapping on \({\mathbb {R}}^n\).

-

5.

Verify the following conditions using \([P^{+}]\).

-

The set \(\bigcup _{k=1,\ldots ,K'}[P^+][{\varvec{x}}']_{k}\) contains a closed surface \(S^{+} \subset \Gamma ^{+}\) which is homeomorphic to a sphere in \(\Gamma ^{+}\).

-

The domain \(C^{+} \subset \Gamma ^{+}\) surrounded by the closed surface \(S^{+}\) includes \([P^+]{\varvec{x}}^*\).

-

\([P^+]{\varvec{x}}^*\notin \bigcup _{k=1,\ldots ,K'}[P^+] [{\varvec{x}}']_{k}\).

See Fig. 9 for the 3-dimensional case and Fig. 11 for the 4-dimensional case in Sect. 10.

-

6.

Verify that deg(\(P^+ \circ \varphi (T_3({\varvec{x}},\,{\varvec{p}}_{b}),\,{\varvec{x}};\,{\varvec{p}}_{b}))\) with respect to \({\varvec{p}}_{b} \in \partial D_p\) is not 0 for each fixed \({\varvec{x}} \in B_0(\tilde{{\varvec{x}}},r)\) using Theorem 4.3 or Aberth’s method and interval arithmetic with respect to \([P^{+}]\), \([B_0(\tilde{{\varvec{x}}},r)]\) and \([{\varvec{d}}]_k\). Then \(D_p\) includes a parameter vector \({\varvec{p}}^{*} \in D_{p}\) which admits a homoclinic orbit from \({\varvec{x}}^{*}\) to itself.
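When \(D_p\) is two-dimensional, the degree condition in Step 6 has a simple geometric meaning: the image of \(\partial D_p\) must wind around the projected equilibrium a nonzero number of times. The following sketch illustrates this with plain floating-point arithmetic. It is not the verified procedure based on Theorem 4.3 or Aberth’s method, and the map `g`, which stands in for \(P^+ \circ \varphi (T_3,\,\cdot \,;\,{\varvec{p}}_{b})\) written in coordinates of \(\Gamma ^{+}\), as well as the helper names and the sampling density are hypothetical choices.

```python
import math

def square_boundary(center, half_width, m):
    """Sample the boundary of a square counterclockwise, m points per edge."""
    cx, cy = center
    corners = [(cx - half_width, cy - half_width), (cx + half_width, cy - half_width),
               (cx + half_width, cy + half_width), (cx - half_width, cy + half_width)]
    points = []
    for k in range(4):
        (ax, ay), (bx, by) = corners[k], corners[(k + 1) % 4]
        for s in range(m):
            t = s / m
            points.append((ax + t * (bx - ax), ay + t * (by - ay)))
    return points

def winding_number(curve, target):
    """Winding number of a closed polygonal curve around target (target not on the curve)."""
    tx, ty = target
    total = 0.0
    for (x0, y0), (x1, y1) in zip(curve, curve[1:] + curve[:1]):
        ux, uy = x0 - tx, y0 - ty
        vx, vy = x1 - tx, y1 - ty
        # Signed angle between consecutive vectors seen from the target.
        total += math.atan2(ux * vy - uy * vx, ux * vx + uy * vy)
    return round(total / (2.0 * math.pi))

def g(p):
    """Hypothetical stand-in for the projected boundary map."""
    return (p[0] + 0.1 * p[1] ** 2, p[1] - 0.1 * p[0])

boundary = square_boundary((0.0, 0.0), 1.0, 50)
image = [g(p) for p in boundary]
print(winding_number(image, (0.0, 0.0)))  # 1: the target is encircled once
print(winding_number(image, (5.0, 5.0)))  # 0: the target lies outside the image curve
```

A nonzero winding number corresponds to a nonzero degree, so the map has a zero inside the square. The verified procedure replaces the sampled points by the interval enclosures \([P^+][{\varvec{x}}']_{k}\), so that the conclusion holds for the whole boundary and not only for finitely many samples.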

8 Construction of the mapping \(F_1\)

To see that the above numerical procedures indeed guarantee the existence of a homoclinic orbit, we begin with constructing the mapping

where \(F_{1}({\varvec{p}})= ({\varvec{f}}_{1}({\varvec{p}}),\,{\varvec{p}})\) with \({\varvec{f}}_{1}({\varvec{p}}) \in W^u({\varvec{x}}^*;\,{\varvec{p}})\), which is shown to be well-defined by Theorems 8.4 and 8.6. Suppose that the Lyapunov function \(L({\varvec{x}})\) is defined and the Lyapunov domain \(D_L\) is specified. Note that for each fixed \({\varvec{p}} \in D_p\), the point \({\varvec{x}}^*\) is the unique equilibrium of (1.1) in \(D_L\) [16]. Furthermore, we impose the following assumption, which is the counterpart of Assumption 6.1 in the unstable direction.

Assumption 8.1

There exists a closed ball \(B^- \subset D_L\) including \({\varvec{x}}^*\) as an interior point such that either of the following statements holds for any \({\varvec{p}} \in D_p\) and any \({\varvec{x}} \in B^- \cap L^-\).

-

1.

There is a finite negative time \(T_1\) such that \(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) \in B^- \cap L^-\) for all \(t \in (T_1, 0]\) and \(\varphi (T_1,\,{\varvec{x}};\,\,{\varvec{p}}) \in B^- \cap L^0\).

-

2.

\(\varphi (t,\,{\varvec{x}};\,\,{\varvec{p}}) \in L^- \cap B^-\) for all \(t < 0\).

Let \(T_{1}({\varvec{x}},\,{\varvec{p}})\) denote \(T_{1}\) for \(({\varvec{x}},\,{\varvec{p}})\).

Assumption 8.1 should be checked by numerical verification. Note that Step 4 of Procedure 1 ensures Assumption 8.1.
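The dichotomy in Assumption 8.1 can be illustrated on a toy problem. The sketch below integrates backward in time with a classical Runge-Kutta step and reports the first time at which the quadratic form changes sign. Everything in it is an assumption made for illustration: the linear saddle `f`, the quadratic form `lyap` with \(Y = \mathrm{diag}(-1, 1, 1)\), the step size, and the time horizon. It runs in ordinary floating point, so it is not a verified computation.

```python
import math

def f(x):
    # Hypothetical linear saddle: x[0] is unstable, x[1] and x[2] are stable.
    return [x[0], -x[1], -2.0 * x[2]]

def lyap(x):
    # Hypothetical quadratic form L(x) = x^T Y x with Y = diag(-1, 1, 1);
    # L decreases along forward trajectories of f.
    return -x[0] ** 2 + x[1] ** 2 + x[2] ** 2

def rk4_step(x, h):
    k1 = f(x)
    k2 = f([xi + 0.5 * h * ki for xi, ki in zip(x, k1)])
    k3 = f([xi + 0.5 * h * ki for xi, ki in zip(x, k2)])
    k4 = f([xi + h * ki for xi, ki in zip(x, k3)])
    return [xi + h * (a + 2.0 * b + 2.0 * c + d) / 6.0
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]

def lyapunov_tracing_backward(x, h=1e-3, t_min=-50.0):
    """Return (T1, y) with L(y) close to 0, or (None, x_end) if L stays negative."""
    t = 0.0
    while t > t_min:
        x_new = rk4_step(x, -h)
        if lyap(x_new) >= 0.0:
            # Linear interpolation of the sign change inside the last step.
            l0, l1 = lyap(x), lyap(x_new)
            s = l0 / (l0 - l1)
            y = [a + s * (b - a) for a, b in zip(x, x_new)]
            return t - s * h, y
        x, t = x_new, t - h
    return None, x

# Statement 1: a point of L^- off the unstable manifold reaches L^0 in finite time.
T1, y = lyapunov_tracing_backward([1.0, 0.5, 0.0])
# Statement 2: a point on the unstable manifold stays in L^- up to the horizon.
T1_on_manifold, _ = lyapunov_tracing_backward([1.0, 0.0, 0.0])
```

For this toy system the crossing time can be computed by hand as \(\tfrac{1}{4}\ln \tfrac{1}{4}\), which gives a simple sanity check on the integrator.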

The following steps prepare the conditions to specify the points of \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) for \({\varvec{p}} \in D_p\).

-

(i)

Let \(\varvec{v}_i, i=1,\ldots ,n\) be the orthonormal eigenvectors of the symmetric matrix Y, and define the matrices \(A^{+} \in {\mathbb {R}}^{n\times \nu }\) and \(A^{-} \in {\mathbb {R}}^{n\times ( n - \nu )}\) in the same way as in Step 3 of Procedure 1.

-

(ii)

Let \(\{ \varphi (t,\,{\varvec{x}}_{0};\tilde{{\varvec{p}}}) ~|~ t \in {\mathbb {R}} \}\) be an approximate homoclinic orbit. Choose a point \(\tilde{{\varvec{x}}} \in \{ \varphi (t,\,{\varvec{x}}_{0};\tilde{{\varvec{p}}}) ~|~ t \in {\mathbb {R}} \} \cap (B^- \cap L^-)\) and represent it by \(\tilde{{\varvec{x}}} = \sum _{i=1}^{n}{\tilde{x}}_{i}\varvec{v}_{i}\). Define the \(\nu\)-dimensional subset \(\Sigma \subset {\mathbb {R}}^n\) by

$$\begin{aligned}&\Sigma = \left\{ \,\,{\varvec{x}} \in {\mathbb {R}}^{n}\,\left| \,\,{\varvec{x}} = \sum _{i=1}^{n} x_{i}\varvec{v}_{i}, \; x_{j}={\tilde{x}}_{j} \text{ for } j=\nu +1, \ldots , n \right. \, \right\} . \end{aligned}$$

Take a \(\nu\)-dimensional ball \(B_0(\tilde{{\varvec{x}}},r) = \left\{ {\varvec{x}} \in \Sigma \,|\,~ \Vert {\varvec{x}} - \tilde{{\varvec{x}}}\Vert \le r \right\}\) on \(\Sigma \cap L^-\), which is expected to have an intersection point with \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) for any \({\varvec{p}} \in D_p\). These are specified in Steps 5 and 6 of Procedure 1.

-

(iii)

Let \(R^{-} : B_0(\tilde{{\varvec{x}}},r) \times D_p \ni ({\varvec{x}},\,{\varvec{p}}) \mapsto {\varvec{y}} \in L^0({\varvec{p}})\) be the Lyapunov Tracing \(R^{-}({\varvec{x}};\,\,{\varvec{p}}) = \varphi (T_1({\varvec{x}},\,{\varvec{p}}),\,{\varvec{x}};\,\,{\varvec{p}})\), where \(T_1({\varvec{x}},\,{\varvec{p}}) \in [-\infty ,0)\) is a time such that \(\varphi (T_1({\varvec{x}},\,{\varvec{p}}),\,{\varvec{x}};\,\,{\varvec{p}}) \in L^0({\varvec{p}})\) holds (see Footnote 3). Note that \(T_{1}({\varvec{x}}_{b},\,{\varvec{p}})\) is estimated for each \({\varvec{x}}_{b} \in \partial B_0(\tilde{{\varvec{x}}},r)\) and \({\varvec{p}} \in D_{p}\) in Step 6 of Procedure 1.

-

(iv)

Define the subspace \(\Gamma ^{-} \subset {\mathbb {R}}^n\) by \(\Gamma ^{-} = \{ {\varvec{x}} \in {\mathbb {R}}^n \,|\, (A^{-})^T {\varvec{x}} = \varvec{0} \}\) and the projection \(P^{-}:{\mathbb {R}}^n \rightarrow \Gamma ^{-}\) by \(P^{-} = I - A^{-}(A^{-})^T\) as in Step 7 of Procedure 1. Then the following lemma holds.

Lemma 8.2

If \(P^{-}({\varvec{x}}) = P^{-}({\varvec{x}}^*)\) for \({\varvec{x}} \in L^0 \cup L^+\), then \({\varvec{x}} = {\varvec{x}}^*\).

Proof

From the definition of \(L({\varvec{x}})\),

holds for \({\varvec{x}} \in L^0 \cup L^+\). Moreover the definition of \(P^{-}\) indicates

and the left-hand side is equal to \(P^{-}({\varvec{x}} - {\varvec{x}}^*) = P^{-}{\varvec{x}} - P^{-}{\varvec{x}}^*= \varvec{0}\) from the assumption of the lemma, which yield \({\varvec{x}} - {\varvec{x}}^*= A^{-}(A^{-})^T ({\varvec{x}} - {\varvec{x}}^*)\). Substituting it in (8.1), we have

On the other hand, as mentioned in Step 3 of Procedure 1, \((A^{-})^T Y A^{-}\) is negative definite and this implies \((A^{-})^T({\varvec{x}} - {\varvec{x}}^*) = \varvec{0}\). Then

which indicates the conclusion. \(\square\)
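The chain of relations used in this proof can be sketched as follows. The sketch assumes that the Lyapunov function is the quadratic form \(L({\varvec{x}}) = ({\varvec{x}} - {\varvec{x}}^*)^T Y ({\varvec{x}} - {\varvec{x}}^*)\) constructed in [16], that \(L^0 \cup L^+\) is the set where \(L({\varvec{x}}) \ge 0\), and that the columns of \(A^{-}\) are orthonormal; the exact form of the displayed formulas in the proof may differ.

$$\begin{aligned} ({\varvec{x}} - {\varvec{x}}^*)^T Y ({\varvec{x}} - {\varvec{x}}^*)&\ge 0, \\ ({\varvec{x}} - {\varvec{x}}^*) - A^{-}(A^{-})^T ({\varvec{x}} - {\varvec{x}}^*)&= P^{-}({\varvec{x}} - {\varvec{x}}^*) = \varvec{0}, \\ \left( (A^{-})^T({\varvec{x}} - {\varvec{x}}^*)\right) ^T \left( (A^{-})^T Y A^{-}\right) \left( (A^{-})^T({\varvec{x}} - {\varvec{x}}^*)\right)&\ge 0, \\ {\varvec{x}} - {\varvec{x}}^* = A^{-}(A^{-})^T({\varvec{x}} - {\varvec{x}}^*)&= \varvec{0}. \end{aligned}$$

The third relation is the first one after substituting \({\varvec{x}} - {\varvec{x}}^*= A^{-}(A^{-})^T ({\varvec{x}} - {\varvec{x}}^*)\). Because \((A^{-})^T Y A^{-}\) is negative definite, it can hold only if \((A^{-})^T({\varvec{x}} - {\varvec{x}}^*) = \varvec{0}\), which gives the last line.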

-

(v)

Put \({\varvec{y}}^*= P^{-}({\varvec{x}}^*)\). Take a \(\nu\)-dimensional ball \(B^-({\varvec{y}}^*,\epsilon )\) on \(\Gamma ^{-}\) as \(B^-({\varvec{y}}^*,\epsilon ) = \{ {\varvec{y}} \in \Gamma ^{-} \,|\, ~ \Vert {\varvec{y}} - {\varvec{y}}^*\Vert \le \epsilon \}\) for \(\epsilon > 0\). The radius \(\epsilon\) can be taken arbitrarily small. Finally define the continuous mapping \(Q^{-}: \Gamma ^{-} \rightarrow \Gamma ^{-}\) by

$$\begin{aligned} Q^{-}({\varvec{y}}) = {\left\{ \begin{array}{ll} {\varvec{y}}^* + \epsilon \frac{{\varvec{y}} - {\varvec{y}}^*}{\Vert {\varvec{y}} - {\varvec{y}}^*\Vert } &{} \text {if }{\varvec{y}} \notin B^-({\varvec{y}}^*,\epsilon ),\\ {\varvec{y}} &{} \text {if }{\varvec{y}} \in B^-({\varvec{y}}^*,\epsilon ), \end{array}\right. } \end{aligned} \tag{8.2}$$

where \({\varvec{y}}^* + \epsilon \frac{{\varvec{y}} - {\varvec{y}}^*}{\Vert {\varvec{y}} - {\varvec{y}}^*\Vert }\) is the intersection point of the line segment between \({\varvec{y}}^*\) and \({\varvec{y}} \in \Gamma ^{-}\) with the boundary \(\partial B^-({\varvec{y}}^*,\epsilon )\) of \(B^-({\varvec{y}}^*,\epsilon )\).
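The projection of step (iv) and the retraction of the last step can be sketched in a few lines of Python. This is an illustration only: the function names `project` and `retract`, the choice of \(A^{-}\), and the sample points are hypothetical. The retraction is written as a radial projection centred at \({\varvec{y}}^*\), that is, with \({\varvec{y}}^*\) added back to the scaled direction; this is an assumption about the intended map, made so that it is continuous across \(\partial B^-({\varvec{y}}^*,\epsilon )\) when \({\varvec{y}}^* \ne \varvec{0}\).

```python
import math

def project(a_minus_cols, x):
    """P^- x = x - A^- (A^-)^T x, assuming the columns of A^- are orthonormal."""
    y = list(x)
    for a in a_minus_cols:
        c = sum(ai * xi for ai, xi in zip(a, x))
        y = [yi - c * ai for yi, ai in zip(y, a)]
    return y

def retract(y, y_star, eps):
    """Radial retraction onto the closed ball of radius eps around y_star."""
    d = [yi - si for yi, si in zip(y, y_star)]
    norm = math.sqrt(sum(di * di for di in d))
    if norm <= eps:
        return list(y)  # points of the ball are left fixed
    return [si + eps * di / norm for si, di in zip(y_star, d)]

# Hypothetical data: n = 3, one unstable direction along the first axis.
a_minus_cols = [[1.0, 0.0, 0.0]]
x_star = [1.0, 2.0, 3.0]
y_star = project(a_minus_cols, x_star)
y = project(a_minus_cols, [5.0, 2.0, 7.0])
q_outside = retract(y, y_star, 0.5)                # pulled back to the sphere
q_inside = retract([0.0, 2.0, 3.1], y_star, 0.5)   # left unchanged
```

Since the retraction only rescales the distance to \({\varvec{y}}^*\), it never changes whether a projected point coincides with \({\varvec{y}}^*\).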

Remark 8.3

Note that \(Q^{-}\) is a retraction from \(\Gamma ^{-}\) to \(B^-({\varvec{y}}^*,\epsilon )\) which clarifies correspondence between our method and Brouwer’s coincidence theorem. Since \(Q^{-}\) is introduced from theoretical reason, one can omit the construction of \(Q^{-}\) in practical verification process. See examples in Sect. 10.

Now we derive the conditions to prove that the ball \(B_0(\tilde{{\varvec{x}}},r)\) includes points of \(W^u({\varvec{x}}^*;\,{\varvec{p}})\).

Theorem 8.4

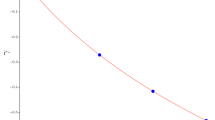

Define the continuous mapping \(G^{-} = Q^{-} \circ P^{-} \circ R^{-} : B_0(\tilde{{\varvec{x}}},r) \times D_p \rightarrow B^-({\varvec{y}}^*,\epsilon ) \subset \Gamma ^{-}\). Fix a parameter vector \({\varvec{p}} \in D_p\). Define the mapping \(G^{-}_S : \partial B_0(\tilde{{\varvec{x}}},r) \rightarrow B^-({\varvec{y}}^*,\epsilon )\) by the restriction \(G^{-}_S = G^{-}|_{\partial B_0(\tilde{{\varvec{x}}},r)}\) (see Fig. 1), and assume that the following conditions hold.

-

\(G^{-}_S(\partial B_0(\tilde{{\varvec{x}}},r)) \subset \partial B^-({\varvec{y}}^*,\epsilon )\),

-

\(\mathrm{deg} G^{-}_S \ne 0\).

Then the ball \(B_0(\tilde{{\varvec{x}}},r)\) includes at least one point of \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) for the fixed \({\varvec{p}} \in D_p\).

Fig. 1 A schematic illustration of the mapping \(G^-_S = (Q^{-} \circ P^{-} \circ R^{-})|_{\partial B_0(\tilde{{\varvec{x}}},r)}\). (a) The mapping \(P^- \circ R^-(\partial B_0(\tilde{{\varvec{x}}},r))\) in the case of \(n=3\) and \(\nu = 2\). The cone in this figure is \(L^0\) and its interior is \(L^-\). The green disk is \(B_0(\tilde{{\varvec{x}}},r)\). The closed curve on \(L^0\) is the image \(R^-(\partial B_0(\tilde{{\varvec{x}}},r))\) and the closed curve on the plane \(\Gamma ^-\) is the image \(P^- \circ R^-(\partial B_0(\tilde{{\varvec{x}}},r))\). (b) The retraction \(Q^-\). The red circle is \(B^-({\varvec{y}}^*,\epsilon )\) and the closed curve is the image \(P^-\circ R^-(\partial B_0(\tilde{{\varvec{x}}},r))\) (color figure online)

Proof

From the assumptions of the theorem and Brouwer’s coincidence theorem (Theorem 4.1), for an arbitrary continuous mapping \(H({\varvec{x}}):B_0(\tilde{{\varvec{x}}},r) \rightarrow B^-({\varvec{y}}^*,\epsilon )\) there exists a point \({\varvec{x}}_0 \in B_0(\tilde{{\varvec{x}}},r)\) such that

holds. When we take H as a constant mapping \(H({\varvec{x}}) = P^{-}({\varvec{x}}^*)\), the point \({\varvec{x}}_0\) satisfies the following equation:

Combining this with the definition of \(Q^{-}\) and Lemma 8.2, we have

namely,

This implies that \({\varvec{x}}_0\) is a point of \(W^u({\varvec{x}}^*;\,{\varvec{p}})\). Therefore, \(B_0(\tilde{{\varvec{x}}},r)\) includes the intersection point \({\varvec{x}}_0\) of \(\Sigma ^{-}\) and \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) for the fixed parameter vector \({\varvec{p}} \in D_p\). \(\square\)
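The steps of this proof can be summarized by the following chain, written here as a sketch under the definitions of \(G^{-}\), \(Q^{-}\), \(P^{-}\) and \(R^{-}\) given above; the exact form of the displayed formulas in the proof may differ.

$$\begin{aligned} G^{-}({\varvec{x}}_0;\,{\varvec{p}})&= H({\varvec{x}}_0), \\ Q^{-} \circ P^{-} \circ R^{-}({\varvec{x}}_0;\,{\varvec{p}})&= P^{-}({\varvec{x}}^*) = {\varvec{y}}^*, \\ P^{-} \circ R^{-}({\varvec{x}}_0;\,{\varvec{p}})&= P^{-}({\varvec{x}}^*), \\ R^{-}({\varvec{x}}_0;\,{\varvec{p}}) = \varphi (T_1({\varvec{x}}_0,\,{\varvec{p}}),\,{\varvec{x}}_0;\,{\varvec{p}})&= {\varvec{x}}^*. \end{aligned}$$

The third line follows from the second because \(Q^{-}\) maps every point outside \(B^-({\varvec{y}}^*,\epsilon )\) to the boundary \(\partial B^-({\varvec{y}}^*,\epsilon )\), so only \({\varvec{y}}^*\) itself is mapped to the centre \({\varvec{y}}^*\). The fourth line follows from Lemma 8.2, since \(R^{-}\) takes values in \(L^0\). Because \({\varvec{x}}^*\) is an equilibrium, it cannot be reached in finite time from \({\varvec{x}}_0 \ne {\varvec{x}}^*\), so the last line means \(T_1({\varvec{x}}_0,\,{\varvec{p}}) = -\infty\), that is, \(\varphi (t,\,{\varvec{x}}_0;\,{\varvec{p}}) \rightarrow {\varvec{x}}^*\) as \(t \rightarrow -\infty\).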

Corollary 8.5

If the conditions in Steps 8 and 9 of Procedure 1 are verified, then the ball \(B_0(\tilde{{\varvec{x}}},r)\) includes points of \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) for all \({\varvec{p}} \in D_{p}\).

Proof

Put \({\varvec{y}}^*= P^{-}({\varvec{x}}^*)\) and consider a \(\nu\)-dimensional ball \(B^-({\varvec{y}}^*,\epsilon )\) on \(\Gamma ^{-}\) as \(B^-({\varvec{y}}^*,\epsilon ) = \{ {\varvec{y}} \in \Gamma ^{-} \,|\, ~ \Vert {\varvec{y}} - {\varvec{y}}^*\Vert \le \epsilon \}\) with a positive constant \(\epsilon\). From the conditions of Step 8, it is possible to take a sufficiently small \(\epsilon > 0\) such that the set \(\bigcup _{{\varvec{x}}_{b} \in \partial B_0(\tilde{{\varvec{x}}}, r)}P^{-} \circ \varphi (T_1({\varvec{x}}_{b},\,{\varvec{p}}),\,{\varvec{x}}_{b} ;\,{\varvec{p}})\) lies in the exterior of the ball \(B^-({\varvec{y}}^*,\epsilon )\) for any \({\varvec{p}} \in D_p\). This leads to

from the definition of \(Q^{-}\). Moreover Step 9 of Procedure 1 verifies

and hence all assumptions of Theorem 8.4 are satisfied. \(\square\)

Since \(D_{L}\) is a Lyapunov domain, we can apply Lemma 5.3 to show the uniqueness of the intersection point of \(B_0(\tilde{{\varvec{x}}},r)\) and \(W^u({\varvec{x}}^*;\,{\varvec{p}})\).

Theorem 8.6

If an intersection point of \(B_0(\tilde{{\varvec{x}}},r)\) and \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) exists, then it is unique for each \({\varvec{p}} \in D_p\).

Proof

By virtue of Lemma 5.3, it is sufficient to show

for any \({\varvec{p}} \in D_p\) and any \({\varvec{x}}_1,\,{\varvec{x}}_2 \in B_0(\tilde{{\varvec{x}}},r)\). From the definition of \(\Sigma\), \({\varvec{x}}_2 - {\varvec{x}}_1\) is written as

Thus,

holds. \(\square\)

Theorems 8.4 and 8.6 show that, for each \({\varvec{p}} \in D_{p}\), there exists a unique point of \(B_{0}(\tilde{{\varvec{x}}} , r) \cap W^u({\varvec{x}}^*;\,{\varvec{p}})\), which is denoted by \({\varvec{f}}_{1}({\varvec{p}})\). Then \({\varvec{f}}_{1}\) gives a mapping from \(D_{p}\) to \(B_{0}(\tilde{{\varvec{x}}} , r) \cap \left( \bigcup _{{\varvec{p}}\in D_{p}}W^u({\varvec{x}}^*;\,{\varvec{p}}) \right)\). The mapping \(F_{1}\) is defined by \(F_{1}({\varvec{p}})= ({\varvec{f}}_{1}({\varvec{p}}),\,{\varvec{p}})\). To prove the continuity of the mapping \(F_1\), we apply Theorem 5.4. In Sect. 6, we defined the stable eigenspace \(E^{\nu } = \text {span}\{ {\varvec{u}}_1, \ldots ,\,{\varvec{u}}_{\nu } \}\) and the unstable eigenspace \(E^{n - \nu } = \text {span}\{ {\varvec{u}}_{\nu + 1} ,\ldots ,\,{\varvec{u}}_n \}\) of \(D{\varvec{f}^*}(\tilde{{\varvec{p}}})\), and pointed out that the \(n \times n\) matrix \(U = ({\varvec{u}}_1,\,{\varvec{u}}_2,\ldots ,\,{\varvec{u}}_n)\) is invertible. By a discussion similar to that of Sect. 6, there exists a Lipschitz function \(\varvec{h}_u:E^{n-\nu } \times D_p \rightarrow E^{\nu }\), and the unstable manifold \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) is represented as

Let

be an \((n - \nu ) \times n\) matrix. Then we can write \(\varvec{\zeta } = A U^{-1} {\varvec{x}}\).

We define the continuous mapping \({\varvec{g}}:{\mathbb {R}}^n \times {\mathbb {R}}^{n-\nu } \rightarrow {\mathbb {R}}^n\) by

For \({\varvec{x}} \in B_0(\tilde{{\varvec{x}}},r)\), if \({\varvec{g}}({\varvec{x}},\,{\varvec{p}}) = 0\), then \({\varvec{x}}\) is in \(W^u({\varvec{x}}^*;\,{\varvec{p}})\). By virtue of Theorem 8.6, the intersection point of \(B_0(\tilde{{\varvec{x}}},r)\) and \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) is unique for each \({\varvec{p}} \in D_p\). Therefore, taking \(D_p\), \(B_0(\tilde{{\varvec{x}}},r)\) and \({\varvec{g}}\) for P, D and \({\varvec{f}}\), respectively, in Theorem 5.4, we conclude that the intersection point of \(B_0(\tilde{{\varvec{x}}},r)\) and \(W^u({\varvec{x}}^*;\,{\varvec{p}})\) depends continuously on \({\varvec{p}} \in D_p\). This leads to the continuity of \(F_1\) on \(D_p\).

9 Verification method of homoclinic orbits

Let us prove the existence of homoclinic orbits supposing that \(F_1\) is defined in the manner of the previous section. We assume Assumption 6.1, which is verified by Step 1 in Procedure 2, and the following in addition.

Assumption 9.1

There exists a positive time \(T_{2}\) \((0< T_{2} < \infty )\) such that \(\varphi (T_{2},\,{\varvec{x}};\,\,{\varvec{p}}) \in B^+\cap L^+\) holds for any \({\varvec{x}} \in B_0(\tilde{{\varvec{x}}},r)\), where \(B_0(\tilde{{\varvec{x}}},r)\) is defined in the step (ii) in Sect. 8.

Here \(B^{+}\) is the set appearing in Assumption 6.1. Note that Step 2 of Procedure 2 ensures Assumption 9.1.

We construct the continuous mappings \(F_{2}\) and F by the following steps.

-

(i)