Abstract

A foundation for gathering and interpreting data is the empirical law of large numbers (eLLN). The eLLN has multiple aspects and can be regarded and used with multiple foci. However, it generally relates relative frequencies and probabilities or samples and the corresponding populations. Unfortunately, research has repeatedly revealed that students have problems with tasks including certain foci on the eLLN, particularly regarding their sensitivity to sample size when comparing smaller and larger samples.

We first outline the eLLN with its central aspects and provide an overview of corresponding empirical findings. Subsequently, we use Stanovich’s (2018) framework of human processing in heuristics and biases tasks to (re-)interpret theoretical descriptions and prior empirical results to better understand and describe students’ problems with the eLLN. Subsequently, we present three main approaches to support students derived from prior research: A static-contrast approach, a dynamic approach, and a boundary-example approach.

As currently no systematic and comparative evidence exists regarding the effectiveness of these approaches, we conducted a quasi-experimental intervention study (N = 256, 6th grade) which empirically compared three implementations of these approaches to a control group. Results underline significant positive short-term effects of each approach. However, the boundary-example intervention showed the highest pre-post effect, the only significant long-term effect, and also effectively reduced the common equal-ratio bias.

Results are promising from a research perspective, as Stanovich’s framework proved very helpful and is a promising foundation for future research, and from an educational perspective, as the boundary-example approach is lightweight and easy to implement in classrooms.

Zusammenfassung

Das Erfassen und Interpretieren von Daten stellen Kompetenzen dar, denen zunehmende Bedeutung beigemessen wird. Eine Grundlage dieser Kompetenzen ist das empirische Gesetz der großen Zahlen (eGdgZ), welches verschiedene Aspekte besitzt und entsprechend mit unterschiedlichen Foki betrachtet und verwendet werden kann. Im Allgemeinen setzt es jedoch relative Häufigkeiten und Wahrscheinlichkeiten bzw. Stichproben und die entsprechenden Grundgesamtheiten in Beziehung. Forschungsergebnisse zeigen, dass Schülerinnen und Schüler Probleme mit verschiedenen Foki des eGdgZ haben, insbesondere bei der Berücksichtigung der Stichprobengröße beim Vergleich der Variabilität von kleinen und großen Stichproben.

Wir skizzieren zunächst das eGdgZ mit seinen zentralen Aspekten und geben einen Überblick über entsprechende empirische Befunde. Anschließend werden basierend auf Stanovich’s (2018) kognitivem Verarbeitungsmodell bei „Heuristics and Biases“-Aufgaben theoretische Beschreibungen und Befunde empirischer Forschung in Bezug auf das eGdgZ (re-)interpretiert, um so Schwierigkeiten von Lernenden besser verstehen und beschreiben zu können. Aufbauend werden drei zentrale Förderansätze aus der Vorforschung extrahiert und vergleichend dargestellt: ein statisch-komparativer Ansatz, ein dynamischer Ansatz sowie ein neuartiger Ansatz basierend auf Extrembeispielen.

Zum Vergleich der Ansätze wurde eine quasi-experimentelle Interventionsstudie (N = 256, 6. Jahrgangsstufe) durchgeführt, um systematische, vergleichende Evidenz zur Wirksamkeit dreier den Ansätzen entsprechenden Interventionen zu generieren. Es zeigten sich signifikant positive, kurzfristige Effekte aller Interventionen. Jedoch wies der Ansatz der Extrembeispiele den größten Prä-Post-Effekt auf, den einzigen signifikanten längerfristigen Effekt, und war auch in der Lage, den wesentlichen Fehlschluss im Kontext des eGdgZ effektiv zu reduzieren.

Die Ergebnisse belegen die Anwendbarkeit und den Mehrwert des Verarbeitungsmodells von Stanovich (2018) und zeigen darüber hinaus Implikationen hinsichtlich der Verwendung von Extrembeispielen im Unterricht auf.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Twenty-first century skills are ubiquitous in ongoing societal discussions about school and education as well as in educational research (e.g., Pellegrino and Hilton 2013). In this context, concepts such as scientific reasoning and argumentation, statistical literacy, or data-based reasoning are heavily discussed (e.g., Ben-Zvi and Garfield 2004; Fischer et al. 2018). The empirical law of large numbers (eLLN) is an integral foundation for many of these concepts, as it can be used to relate relative frequencies and probabilities of specific events or samples and the corresponding populations. For example, it can be used to estimate an unknown population parameter based on a relative frequency observed in a sample. In this regard, Sedlmeier and Gigerenzer (1997) state that the eLLN in its basic form states that a larger sample is better suited to estimate an unknown parameter than a small sample. Accordingly, scientific experiments containing more observations, polls based on more participants, or evidence based on more sources can generally be regarded as more reliable than evidence based on fewer observations, participants, or sources—a finding highly important for the aforementioned concepts.

However, despite its important role in many aspects of 21st century lives, the eLLN appears difficult to understand and use. Empirical studies suggest that not only school students (e.g., Fischbein and Schnarch 1997; J. M. Watson and Moritz 2000b) but also college students (e.g., Kahneman and Tversky 1972) and even pre-service teachers (e.g., J. M. Watson 2000) have problems adhering to the eLLN and being sensitive to sample size.

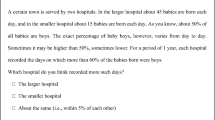

Prior research has repeatedly used the so-called “hospital problem” (or “maternity ward problem”) to examine a person’s sensitivity to sample size in the context of the eLLN, the processing of empirical data, the use of intuitions and heuristics in corresponding situations, and the cognitive processing involved in a person’s judgments (for a literature review see Lem et al. 2011). However, the name “hospital problem” does not refer to one specific task but is rather an umbrella term for multiple closely related types of tasks that focus on the application of the eLLN in the context of childbirths.

Based on the general interest in the eLLN and the repeatedly reported shortcomings of learners, multiple research projects have explored the effects of general statistics instruction as well as more specific approaches to support students in handling tasks involving the eLLN (e.g., Biehler and Prömmel 2013; Dinov et al. 2009; Prömmel 2013; Reaburn 2008; Schnell 2018; J. M. Watson 2000). These specific approaches usually include multiple foci on the eLLN, that is they combine different aspects of the eLLN, such as the prediction and estimation aspect or the static-contrast and dynamic view, to reach positive learning effects. While some of the approaches are empirically validated, the approaches are usually also time-consuming and often go beyond the time allotted to the eLLN in educational standards, so that their value for actual teaching is limited. Moreover, little research has focused on the systematic analysis and comparison of different educational approaches that may help students increase their sensitivity to sample size in the context of the eLLN and to better cope with corresponding tasks—covering different foci of the eLLN—and demands in general. In particular, a study by Weixler et al. (2019), which disentangled partially conflicting evidence regarding the eLLN by using a systematic approach to investigating different task variants of the hospital problem, suggests a new approach based on so-called “maximum-deviation tasks.” These tasks follow an extreme-value heuristic or “Extremalprinzip” (e.g., Grieser 2017) and are similar to boundary examples or boundary problems (see also A. Watson and Mason 2002) for situations based on the eLLN. These boundary examples use high deviations such as ‘1000 of 1000 children born in a hospital are boys’ from usual or expected ratios such as ‘500 of 1000 children’, which may result in different cognitive processing of these tasks. Corresponding examples appear to make the unsuitability of misleading strategies and the conflict between an erroneous answer and one’s knowledge concerning the eLLN very salient, thus possibly leading to high learning gains with comparatively short interventions. The idea of such short and “simplified instructions” regarding the eLLN already exists in early research concerning the hospital problem (e.g., Evans and Dusoir 1977), however, mathematics education research has yet to systematically address it.

The current article first describes the eLLN with its central aspects as well as corresponding research on the eLLN and (re)interprets prior findings using different stages of cognitive processing suggested by Stanovich (2018) to create evidence on the effectiveness of three central approaches to support students’ sensitivity to sample size in the context of the eLLN. It then gives a summary of different approaches to support students’ sensitivity to sample size mentioned in (mathematics) education research and explicates theoretical assumptions why and for which aspects of the eLLN they may be especially well-suited. The paper then focuses on an empirical study with N = 256 students that compares the effectiveness of three specific implementations of the three prototypical approaches to support students derived from theory to a control group, both regarding short- and long-term effects on the successful use of the eLLN in tasks including multiple foci. In conclusion, the results from the empirical study are interpreted in light of prior research on possible approaches to support students in handling tasks involving the eLLN.

2 Background

2.1 The Empirical Law of Large Numbers (eLLN)

Stating the eLLN is far from trivial, as the eLLN has multiple aspects and can be regarded and used with multiple foci that lead to different interpretations of the eLLN. However, the eLLN can be understood as a phenomenon or empirical fact related to the weak law of large numbers (Khinchin 1929). In this regard, Sedlmeier and Gigerenzer (1997) explicitly point out that the eLLN is “not a mathematical theorem like the (mathematical) law of large numbers” (p. 35).

Prior research underlines that students of all ages have difficulties applying the eLLN and being sensitive to sample size. In this regard, prior research implemented multiple different versions of the eLLN—each with a specific focus based on central aspects of the eLLN—, leading to vastly different results regarding students’ solution rates and abilities (see Sect. 2.2 for a detailed description). From a theoretical perspective, one can distinguish five main aspects of the eLLN that lead to different foci of the eLLN (see Table 1).

First, it can be differentiated between the “prediction” aspect of the eLLN and the “estimation” aspect. The prediction aspect assumes that the probability of a specific event or the value of a parameter in the population is given, and predictions are to be made about the relative frequency of the event in a random experiment respectively the value of the parameter in a sample. For example, the hospital problem by Kahneman and Tversky (1972) (see Fig. 1) focuses on the prediction aspect, as the reader of the task is informed that the probability for a boy is about 50%. Similarly, the usual formulations of the mathematical laws of large numbers (e.g., weak law of large numbers) focus on an inference from a mathematical model with known probabilities or distributions to relative frequencies (in particular a high probability that a relative frequency is close to the expected value based on a sufficiently large sample). In contrast, the estimation aspect refers to the estimation of an unknown probability from the observed relative frequency or the inference from the value of a parameter in a given sample to the value of the parameter in the population. For example, based on a test series of 1000 throws of a random object (e.g., a possibly biased die) with 315 “successes” one might conclude that 31.5% is a good estimator for the probability of success.

Task variant of the hospital problem used by Kahneman and Tversky (1972)

Second, some researchers (e.g., Freudenthal 1972; Schnell and Prediger 2012) differentiate between a “static-contrast view” and a “dynamic view” of the eLLN. For example, based on a static-contrast view, the eLLN expresses that the variability of the relative frequency of a specific event in a random experiment is smaller in case of a larger number of trials than in case of a smaller number of trials. However, based on a dynamic view, the eLLN not only contrasts two fixed numbers of trials, but turns the number of trials into a variable, and thus expresses that i) the relative frequency of a specific event in a random experiment stabilizes with an increasing number of trials (see Fig. 5, horizontal arrow in the scatterplot) and that ii) the variability of the relative frequency of the event decreases with an increasing number of trials (see Fig. 5, vertical arrows in the scatterplot).

Third, some researchers (e.g., Well et al. 1990) differentiate between an “accuracy” and a “tail” focus of the eLLN (see also Table 1). In the hospital problem by Kahneman and Tversky (1972) (see Fig. 1) the focus is on the likeliness of an observed relative frequency deviating from the expected relative frequency by a certain degree (i.e., more than 60% boys). This type of problem is called a “tail” problem (e.g., Well et al. 1990), as it focuses on the “tail” of a distribution and hence, how likely a certain sample is to deviate from a certain value by a certain degree. In contrast, some problem variants focus on the likeliness of an observed relative frequency to be close to an expected relative frequency. This type of problem is called an “accuracy” problem (e.g., Well et al. 1990).

Fourth, some researchers (e.g., Evans and Dusoir 1977; Sedlmeier and Gigerenzer 1997) differentiate between ‘one sample’ and ‘multiple samples’Footnote 1. In this regard, the eLLN can both refer to the distribution of values from one sample (see Fig. 2 top task for an example) as well as to the distribution of sample means from multiple samples (see Fig. 1 for an example).

Fifth, it can be differentiated (Weixler et al. 2019) between formulations of the eLLN that focus on (one or multiple) ‘samples’ from a population or that focus on (one or multiple) ‘test series’ (see Fig. 2 for examples).

Concerning the five aspects of the eLLN described above, the task variant of the hospital problem used by Kahneman and Tversky (1972) (see Fig. 1) can be characterized as ‘prediction, static-contrast, tail, multiple, sample’. However, for example, Evans and Dusoir (1977) additionally used other task variants, one of them was a ‘one day with a percentage of baby boys higher than 60%’ variant one can characterize as ‘prediction, static-contrast, tail, one, sample’ (see further Sedlmeier and Gigerenzer 1997). In both cases, the normatively correct response is ‘The smaller hospital’: when the sample size is larger, it is less likely to observe a percentage of baby boys higher than 60%.

Finally, understanding the eLLN with a focus on estimation is central for learners to acquire the frequentist aspect of probability (e.g., Batanero et al. 2005): the observed relative frequency of an event can be used to estimate the probability of this event. Due to its importance and despite students’ repeatedly documented problems, the eLLN is part of school curricula worldwide (e.g., Australian Curriculum, Assessment and Reporting Authority n.d.; Bavarian State Ministry for Education and Culture n.d.; Ministry of Education Singapore 2015; Ontario Ministry of Education n.d.) and often introduced in early secondary education. While many curricula do not provide sufficient detail to capture the exact foci of the eLLN that should be dealt with in school, for example, the bavarian curriculum (Bavarian State Ministry for Education and Culture n.d.) explicitly mentions the estimation aspect in lower secondary education.

2.2 Research Findings

Prior research has generally reported very poor performance for students in grades 5 to 11 when dealing with a task variant of the hospital problem or an analog problem (Engel and Sedlmeier 2005; Fischbein and Schnarch 1997; Rubel 2009; J. M. Watson and Moritz 2000a). This appears to also be the case for college students with no background in statistics or probability (e.g., Kahneman and Tversky 1972). More precisely, corresponding results are mostly based on task variants of the hospital problem one can characterize as ‘prediction’ and ‘static-contrast’. However, besides these common aspects, multiple different formulations of the hospital problem have been used and it has, for example, also been transformed into other contexts, e.g., coin tosses. This has led to partially conflicting evidence (see e.g., Lem 2015; Weixler et al. 2019), as the different possible foci of the eLLN have not been systematically considered. Regarding differences in solution rates, tasks one can characterize as ‘one’ seem to lead to higher solution rates than tasks one can characterize as ‘multiple’ (e.g., Evans and Dusoir 1977; Sedlmeier and Gigerenzer 1997) and tasks one can characterize as ‘accuracy’ seem to lead to higher solution rates than tasks one can characterize as ‘tail’ (e.g., Well et al. 1990). Sedlmeier and Gigerenzer (1997) proposed that statistically naïve people’s everyday life experience is only sufficient for understanding the influence of sample size when one sample is focused, but not for understanding the influence of sample size when multiple samples are focused. Well et al. (1990) proposed that statistically naïve people do not have an in-depth but rather a partial understanding of the eLLN, which corresponds with the application of a “bigger is better” heuristic, thus leading to the answer ‘the larger hospital’, which is the correct answer for tasks one can characterize as ‘accuracy’, but the wrong answer for tasks one can characterize as ‘tail’. Regarding task variants focusing on a ‘static-contrast’ vs a ‘dynamic’ view, prior research has reported problems mostly relating to the ‘static-contrast’ view of the eLLN (e.g., Batanero et al. 1996; Rasfeld 2004), while a ‘dynamic’ view appears to be less problematic. In contrast to the three aforementioned aspects, which appear to be important for the difficulty of corresponding items based on prior research, Weixler et al. (2019) produced evidence that the aspect sample (e.g., childbirths) vs. test series (e.g., coin tosses) plays only a subordinate role regarding item difficulty. Finally, while the differentiation between a ‘prediction’ and an ‘estimation’ focus on the eLLN is an important distinction from a theoretical point of view, there is to our knowledge no conclusive empirical evidence concerning these two foci.

Results of the same study by Weixler et al. (2019), which focused on problem variants one can characterize as ‘prediction, static-contrast, tail, one’, support the hypothesis that differences in prior research, even when using the same type of task based on the aspects described above, may be due to the salience of relevant task features, which heavily impacts performance. In the study by Weixler et al. (2019), university students’ performance increased with a bigger ratio between the larger and smaller sample size and a larger deviation of the observed relative frequency from the expected relative frequency. In particular, results reveal that students were especially successful when answering boundary-example tasks, which are tasks with an observed relative frequency of 0% or 100%. Even more important, tasks including an extreme relative frequency appeared to trigger increased performance in subsequent tasks in this study, even when relevant task features were less salient in these subsequent tasks. Weixler et al. (2019) concluded that it might be a promising instructional approach to proceed from cases of an observed relative frequency of 0% or 100% to other less salient cases and use the strategies applied by the students in these boundary cases as a springboard to strengthen strategies and conceptual knowledge for the general case.

2.3 An Intuition for Solving the Hospital Problem?

Intuition is a concept previously used very differently in the scientific literature (e.g., Hogarth 2001; Rasfeld 2004). Within mathematics education, the work of Fischbein has a great influence; he characterized intuition as a “direct, self-evident” (Fischbein 1999), intrinsically certain, and global cognition. He also introduced various types and differentiations of intuitions, for example, primary and secondary intuitions (e.g., Fischbein 1975; Fischbein et al. 1971). Primary intuitions are those that “are formed before, and independently of, systematic instruction” (Fischbein 1975), whereas secondary intuitions “are formed after a systematic process of instruction” (Fischbein 1975). Concerning the relation between the eLLN and the frequentist approach to probability, Fischbein (1975) states:

The intuition expressed by the so-called ‘law of large numbers’ anticipates the fact that relative frequencies approach their theoretical probability as the number of trials increases. (p. 13)

In numerous papers concerning the hospital problem or analog problems, intuition is an integral part of the theoretical background and/or the discussion of results (e.g., Fischbein and Schnarch 1997; Rasfeld 2004; Sedlmeier and Gigerenzer 1997). For example, concerning school students instructed based on a frequentist approach to probability, Rasfeld (2004) terms the intuition that a fixed deviation of the observed relative frequency from the expected probability becomes more and more unlikely with an increasing number of trials or increasing sample size, as the relative frequency stabilizes, a secondary (in the sense above) and affirmatory (i.e., based on facts accepted as certain or self-evident) intuition.

Concerning the hospital problem, based on low solution rates combined with high rates of the response ‘about the same’ (erroneous answer for ‘tail’ variants of the hospital problem), it has been proposed (e.g., Fischbein and Schnarch 1997; Kahneman and Tversky 1972) that people have an equal-ratio bias, which is an intuition that prohibits them from correctly applying the eLLN and being sensitive to sample size: the identification of equal ratios for both hospitals (e.g., 60% of the babies were boys) imposes itself as the key feature for solving the problem and thus people automatically respond ‘about the same’ using what is called “system 1” by Kahneman (2011), a mode of thought that “operates automatically and quickly, with little or no effort and no sense of voluntary control” (see further Kahneman 2011), without even taking the difference in sample sizes into account. In contrast, based on high solution rates in other studies (see Sect. 2.2: higher solution rates for tasks characterized as ‘one’ than for tasks characterized as ‘multiple’), Sedlmeier and Gigerenzer (1997) proposed the hypothesis that (statistically naïve) people have an intuition (i.e., a primary intuition) for the eLLN.

Today, there is a growing amount of research on people’s sensitivity/insensitivity to sample size, possible biases, their reasons and consequences, as well as humans’ (ir)rational behavior in contexts with statistical data in general (e.g., Hertwig and Pleskac 2010; Jacobs and Narloch 2001; Reaburn 2008), which cannot be briefly described in this context. However, the initially promising concept of intuition for describing people’s reasoning in the context of the eLLN has emerged as a problematic concept from a research perspective: different hypotheses regarding intuitions concerning the eLLN cannot be separated clearly (even when closely distinguishing different foci of the eLLN), and accordingly, researchers have interpreted empirical data in different ways.

2.4 Different Stages of Processing the eLLN

According to a theoretical framework of human processing in heuristics and biases tasks by Stanovich (2018), the hospital problem suggests ‘about the same’ in the sense of a compelling intuitive and effortless response, which however is wrong for the (most often used) ‘tail’ variants of the problem. For example, 72% of N = 173 grade 5–11 students in the study by Rubel (2009) gave this response and “[m]ost of these students offered justifications for their choice based on equal ratios, equal fractions, or equal percentages” (p. 639). In another study, Reagan (1989) demonstrated that the rate of normatively correct responses increased remarkably (from 43% to 75%) for students who were not trained in statistical theory when the ‘about the same’ response (originally 49%) was eliminated to force students to choose either ‘the larger hospital’ or ‘the smaller hospital’.

In his framework, Stanovich (2018) stresses the importance of knowledge structures—termed “mindware”—for successful performance:

This means that how well the relevant mindware is instantiated in memory will affect the performance observed on the task. The task will not just be an indicator of miserly processing but will also be an indicator of the depth of learning of the relevant mindware as well. (p. 430)

The eLLN with its multiple foci is the required knowledge structure (or “mindware”) to solve a variant of the hospital problem or an analog eLLN problem. For example, to solve the variant of the hospital problem shown in Fig. 1, adopting a static-contrast view of the eLLN is required, that is that the variability of the observed percentage of baby boys in a given sample is bigger in case of a smaller sample and smaller in case of a larger sample, respectively.

Five processing stages can be specified on the ‘eLLN instantiation continuum’ (Fig. 3) based on Stanovich (2018). Initially, when the relevant knowledge is absent or has not been learned, the probability of a correct answer is low and close to zero (Fig. 3, far left). However, with an increasing instantiation of the knowledge, the probability of a correct answer increases. At Stages 2–3 (Fig. 3, middle), the knowledge has been acquired, but partially fails to be applied as no conflict to the incorrect answer is realized or because its activation is unlikely. However, with an increasing instantiation, an override of an erroneous answer is increasingly likely (at Stage 4). Finally, at Stage 5, the knowledge has been automatized and the probability of a correct answer is high. In particular, at Stage 5 the knowledge is sufficiently instantiated so that it can be retrieved effortless using system 1 processing, whereas the use of knowledge at Stages 2–4 requires a certain amount of effort and is based on system 2 processing, which is a slower, more deliberative, and more logical mode of thought (see further Kahneman 2011).

Processing stages on the mindware instantiation continuum specified by Stanovich (2018)

The theoretical framework by Stanovich (2018) is particularly suitable for a theoretical revision of the controversy concerning the existence of an intuition for the eLLN.

The hypothesis proposed by Sedlmeier and Gigerenzer (1997) that people possess an intuition for the eLLN (see above) corresponds to Stage 5, that is a high probability of the correct answer in the framework by Stanovich (2018). At this stage, the relevant knowledge for solving the hospital problem or an analog eLLN problem has been automatized and is thus activated directly and automatically (i.e., activation of System 1). Whether the intuition is primary or secondary is irrelevant in this respect.

Contrary, the hypothesis proposed by Fischbein and Schnarch (1997) and Kahneman and Tversky (1972) that people have an intuition that prohibits them from correctly applying the eLLN implies that people have not acquired Stage 5 regarding the eLLN, but rather can be positioned at Stages 1–3 in the framework by Stanovich (2018). If people, for example, the students in grades 5–11 in the study by Fischbein and Schnarch (1997) and the 14-year-olds in the study by Batanero et al. (1996), have not received formal education concerning the eLLN, it is likely that they do not possess the relevant knowledge, i.e. regarding the multiple foci of the eLLN, for solving a variant of the hospital problem or an analog eLLN problem, which corresponds to Stage 1, that is a very low probability of the correct answer in the framework by Stanovich (2018). The corresponding rates of normatively correct responses are between 0 and 35% in Fischbein and Schnarch (1997) and 23.8% in Batanero et al. (1996).

If people, for example, the 18-year-olds in the study by Batanero et al. (1996), have received formal education concerning the eLLN, but respond ‘about the same’ to the hospital problem or an analog eLLN problem, it is likely that the relevant knowledge was learned but either i) the conflict between the intuitive ‘about the same’ response and the learned knowledge was not realized, which corresponds with Stage 2 and a low probability of the correct answer in the framework by Stanovich (2018), or ii) activation of the relevant knowledge was unlikely, which corresponds with Stage 3 and a medium probability of the correct answer in the framework by Stanovich (2018). The corresponding rate of normatively correct responses is 26.9% in the study by Batanero et al. (1996).

Besides low probabilities of a correct answer, also the hypothesis that people have an intuition that prohibits them from correctly applying the eLLN can be explained by using the framework by Stanovich (2018). The intuition is based on the fact that (for the respective foci of the eLLN) inappropriate knowledge (equal ratios, equal fractions, or equal percentages) was automatized and thus is activated directly and automatically in the sense of an activation of system 1, which corresponds with a high probability of the ‘about the same’ response. The corresponding rates of ‘about the same’ responses are up to 80% in the study by Fischbein and Schnarch (1997) and about 60% in the study by Batanero et al. (1996).

To sum up, the framework by Stanovich (2018) describes a continuum ranging from absent to automatized relevant knowledge and is more tangible than the vague concept of an intuition, which can be explained within the framework by Stanovich (2018) based on Stage 5 and system 1 processing. From an educational point of view, the framework and especially the continuum from Stage 1 to 5 is encouraging, as it implies that students can be supported to reach higher stages regarding the instantiation of the eLLN and its multiple foci (and to reach an adequate instantiation of knowledge regarding equal ratios, preventing its use for solving a variant of the hospital problem or an analog eLLN problem). However, up to now, it is unclear if this is possible, and which educational approaches are how effective in this regard.

2.5 Educational Approaches to the eLLN

Prior research (e.g., Biehler and Prömmel 2013; Schnell 2018; J. M. Watson 2000; Weixler et al. 2019) has focused on different types of instructional concepts to support students in handling the eLLN. These often combine different approaches to the eLLN to address it from multiple perspectives. For example, Biehler and Prömmel (2013) describe a concept that involves (at least) seven learning environments focusing on static-contrast comparisons between different sample sizes, simulations that can be used to focus on a dynamic view of the eLLN and stabilizing relative frequencies for a growing number of trials, as well as on more abstract contents such as the \(1/\sqrt{n}\)-law (see Prömmel 2013 for corresponding research results), the latter which is however not applicable in lower secondary education, where the empirical law of large numbers is often introduced. In her approach, Schnell (2018) describes a teaching-learning arrangement called “Betting King”, that allows covering both the static-contrast and the dynamic view of the eLLN in the context of a dice game that is implemented via an excel simulation.

Analyzing approaches to the eLLN from a theoretical perspective, different fundamental types of approaches appear feasible for early secondary mathematics education: Data-driven approaches that either focus on a static-contrast view of the eLLN, for example comparing birth data from a smaller and a larger city, or that focus on a dynamic view of the eLLN, for example on the effects of an increasing number of trials simulating 100, 1000, or 10,000 dice rolls. Additionally, based on the results by Weixler et al. (2019), specific boundary examples can be identified as yet another approach, which is not based on real or fictitious data, but on hypothetical cases with high deviations (e.g., ‘10 out of 10 babies born were boys’) from usual or expected ratios.

2.5.1 Data-driven Approaches

Static-contrast Approach

Educational approaches focusing on the static-contrast view of the eLLN often involve comparisons of contextualized simulated or (authentic) data from real life, for example monthly birthrates of boys and girls in a smaller city versus a bigger city, which can support students in handling the eLLN (e.g., Krüger et al. 2015). For example, the data from both cities illustrate that the observed relative frequency of boys respectively girls in a larger city is in general closer to the expected relative frequency than in a smaller city, as the variability in the smaller city is higher (Fig. 4). To focus on the static-contrast view of the eLLN, these approaches provide students with data for fixed numbers of trials or samples or allow them to generate such in simulations.

Dynamic Approach

To focus on a dynamic perspective of the eLLN, educational approaches usually either include the computer-based or real-life simulation of data, for example by repeatedly running a random experiment or rolling (a potentially biased) dice (Biehler and Prömmel 2013; J. M. Watson 2000). These approaches are close to the frequentist approach to probability and the estimation focus on the eLLN: if the relative frequency of a specific event is tracked during simulation, students can experience that the variability of the observed relative frequency decreases with a growing number of trials. This is illustrated in Fig. 5 using ten trajectories, one highlighted in black.

Over the last years, various corresponding tasks and simulations have been created by practitioners and researchers (e.g., Dinov et al. 2009; Schnell 2018); however, their effectiveness regarding students’ sensitivity to sample size has rarely been studied.

2.5.2 Boundary-example Approach

Specific boundary examples, so-called “maximum-deviation tasks” (Weixler et al. 2019) or boundary-example tasks, focusing on ‘all (or none)’ in a static-contrast view (e.g., ‘10 out of 10 babies born were boys’) were highlighted as alternative means to support students in their sensitivity to sample size in the context of the eLLN.

A positive effect of focusing on ‘all’ on the solution rate of eLLN problems has been shown in a variety of studies (e.g., Evans and Dusoir 1977; Weixler et al. 2019). Putting the focus on ‘all (or none)’ appears to enable the targeted activation of existing knowledge, along with the omittance of the intuitive identification of equal ratios for both hospitals (e.g., Weixler et al. 2019). However, even more importantly, Weixler et al. (2019) also used a within-subjects design to find out that boundary examples have a positive effect on solution rates of subsequent tasks on the eLLN, although those tasks were not boundary examples. Thus, boundary examples focusing on ‘all (or none)’ appear to be a promising starting point to approach the eLLN, in particular regarding the static-contrast view of the eLLN.

Using such tasks, the teacher can highlight characteristics of the task or situation in a way that relevant aspects become obvious (e.g., the effect of sample size) and other compelling aspects, in particular the identification of equal ratios for both hospitals, are omitted in the initial phase of the approach to the eLLN.

2.5.3 Summary of Similarities and Differences of the Approaches

The described data-driven approaches take different views of the eLLN, focusing either on a static-contrast view or on a dynamic view. Both of these approaches can thus be assumed to yield learning effects regarding the eLLN via different mechanisms and thus may lead to a difference in how well the corresponding knowledge is instantiated, which is the reason why many prior learning environments included both approaches (e.g., Biehler and Prömmel 2013; Schnell 2018).

In contrast to the described data-driven approaches, boundary examples use hypothetical cases focusing on an extreme case of deviation of the observed relative frequency from the expected relative frequency of a specific event (i.e., relative frequency 0% or 100%) to nudge students to adhere to the eLLN and be sensitive to sample size. Here, multiple reasons for the effectiveness of these tasks can be given: First, the boundary-example tasks may change a probabilistic task into an analytic task, as all or none of the children born are boys or girls. This may activate different knowledge when solving and answering the task. Second, in contrast to any other cases, the probability of 0 boys out of n children born, respectively n boys out of n children born, can be calculated straightforwardly, as it is 0.5n in both cases. Thus, it is relatively easy to see, that \(0.5^{n}> 0.5^{m}\) for \(n< m\) and that the answer thus must be “The smaller hospital”. Finally, as students can easily interpret the use of relative frequencies 0% or 100% as ‘never’ respectively ‘always’, equal-ratio answers may be questioned (see Evans and Dusoir 1977; Weixler et al. 2019) in the sense of a trigger for an override of the erroneous answer (Stage 4; Stanovich 2018). As the boundary-example approach reflects a static-contrast view of the eLLN, it appears reasonable to assume that the approach should lead to learning gains regarding this view of the eLLN. It is however currently unclear if the approach also yields learning gains regarding the dynamic view of the eLLN.

Finally, the use of an appropriate visual representation (e.g., a table or a plot), for example of the birthrates of boys and girls, may additionally facilitate each approach to the eLLN (see also Ainsworth 2006; Schnotz 2005) as it allows to make the low/high variation visible.

3 Research Questions

Although the eLLN and especially sensitivity to sample size are deemed important topics within school education on statistics and probability (e.g., Arbeitskreis Stochastik der GDM 2003; NCTM 2000) and adopting a dynamic view of the eLLN builds the basis of a frequentist understanding of probabilities (e.g., Batanero et al. 2005), problems have repeatedly been highlighted by mathematics education and psychology research (e.g., Fischbein and Schnarch 1997; Kahneman and Tversky 1972). Even after the systematic introduction of the eLLN, school students, college students, as well as adults appear not to use their knowledge and give incorrect responses to eLLN tasks (e.g., Engel and Sedlmeier 2005), thus representing Stages 2 and 3 in Stanovich’s framework.

Prior research has addressed this issue by focusing on several possible educational approaches, most of which rather focus on learning environments that address multiple foci of the eLLN at the same time (or sequentially) and are generally time-consuming, so that they often cannot be used for actual teaching purposes. Moreover, there is currently no research that systematically compared different approaches to support students and their effects on different foci of the eLLN. In particular, while each of the three approaches will generally help to better understand the eLLN and adhere to sample size, each of the approaches is likely to yield different learning outcomes regarding different foci of the eLLN (e.g., the static-contrast view will likely lead to higher learning gains for tasks that also require a static-contrast view, however, learning gains based on this approach for tasks that require a dynamic view are less clear). Approaches thus likely differ regarding their effectiveness on different foci of the eLLN and thus cannot be compared straightforwardly, when not also addressing these foci in the measurement.

The presented study thus evaluates the effectiveness of the three approaches to the eLLN suggested by prior research to improve the instantiation of relevant knowledge concerning the eLLN in early secondary education by focusing on three specific interventions: i) a data-driven intervention focusing on the static-contrast view, ii) a data-driven intervention focusing on the dynamic view, and iii) a boundary-example intervention in which a static-contrast view is taken. Based on Stanovich (2018), an increased instantiation should lead to a better conflict detection between students’ intuitive wrong responses and their relevant knowledge, a better override of intuitive wrong responses, a better automatization of normatively correct responses, and thus to an improved handling of eLLN problems. To better reflect the effects of the different approaches and corresponding learning mechanisms, different foci of the eLLN are systematically assessed to examine differential effectiveness, in particular regarding the aspects of ‘static-contrast’ vs. ‘dynamic’ and ‘tail’ vs. ‘accuracy’, which may reasonably show a differential development based on the three approaches.

The study was guided by the following research questions:

RQ 1

To what extent are students able to correctly answer mathematical tasks based on the eLLN after it has been introduced in school mathematics education?

Based on prior studies (e.g., J. M. Watson and Moritz 2000a), we expected low solution rates on eLLN tasks. In particular, we expected that students had not yet reached Stages 4 and 5 of Stanovich’s framework (2018), but still had problems realizing a conflict between their intuitive wrong response and their relevant knowledge (Stage 2) and activating their relevant knowledge (Stage 3). As students’ problems in adhering to the eLLN reported in prior research (e.g., Batanero et al. 1996; Rasfeld 2004) mainly relate to the static-contrast view of the eLLN, we hypothesized that students’ solution rates of tasks one can characterize as ‘dynamic’ would be better than the solution rates of tasks one can characterize as ‘static-contrast’. Additionally, we assumed that tasks one can characterize as ‘accuracy’ would be solved better than tasks one can characterize as ‘tail’, as reported by prior research (J. M. Watson and Moritz 2000a; Well et al. 1990).

RQ 2

Can three instructional interventions, which are based either on the data-driven instructional approaches or the boundary-example instructional approach, increase students’ solution rates on tasks requiring the eLLN?

Although empirical evidence is scarce, prior research and theoretical conceptions of the interventions suggest that they should be effective: The studies by Prömmel (2013) and Schnell (2018) suggest that the data-driven approaches yield positive learning gains (however based on longer interventions and interventions combining both approaches) and the study by Weixler et al. (2019) shows positive effects for the boundary examples (based on very short interventions that only consisted of working on corresponding tasks; however not within an educational setting). Based only on these partially fitting results, we still expected that also short-term interventions following the three approaches would be able to significantly support students to instantiate relevant knowledge in tasks requiring the eLLN so that students’ processing would improve to Stage 4 (or even 5).

RQ 3

Which of the three instructional interventions is most effective in increasing students’ solution rates on tasks requiring the eLLN?

Based on results by Weixler et al. (2019) on maximum-deviation tasks and the educational expectancies regarding boundary examples (A. Watson and Mason 2002), we expected the intervention focusing on boundary examples to be most effective. However, we were unsure if the intervention based on the dynamic approach might also be especially effective in improving students’ conflict detection, as corresponding conflicts could arise after each step during the simulation of the data (e.g., after five tosses, ten tosses, etc.) and be even more pronounced here than in the other interventions, where corresponding conflicts can arise only once for each task or comparison.

RQ 4

Do the three instructional interventions differ regarding their effectiveness on tasks one can characterize as ‘static-contrast, accuracy’, ‘static-contrast, tail’, or ‘dynamic’?

We expected that both data-driven interventions, which focus on different views of the eLLN, would also mostly have effects on tasks that require adopting the corresponding view of the eLLN for a successful solution. That is, the data-driven static-contrast intervention would yield high learning gains for tasks that require adopting a static-contrast view but only minor effects for tasks that require adopting a dynamic view. Similarly, we expected that the data-driven dynamic intervention would yield high learning gains for tasks that require adopting a dynamic view but only minor effects for tasks that require adopting a static-contrast view. Finally, we assumed that the boundary-example intervention would be most effective for tasks that require adopting a static-contrast view of the eLLN and less effective for tasks that require adopting a dynamic view.

Regarding the aspect of ‘tail’ vs. ‘accuracy’, we assumed that both data-driven interventions would be similarly effective for both types of items. However, we expected that the boundary-example intervention would be particularly effective for ‘tail’ tasks, as the approach should be particularly helpful to avoid the equal-ratio bias for ‘tail’ tasks.

RQ 5

Are the instructional interventions able to create long-term learning effects?

Based on prior findings regarding students’ and adults’ shortcomings concerning the eLLN (e.g., Engel and Sedlmeier 2005), we were rather skeptical regarding long-term learning effects. However, based on Stanovich’s framework (2018), we hoped that the interventions would be able to improve students’ processing to Stage 4 (possibly also 5 for some students), where ‘only’ the failure to sustain the initiated override process occurs, but the detection of the necessity to override would occur.

4 Method

4.1 Design of the Study

To answer the research questions, we employed a quasi-experimental study with school students comprising three intervention groups, which were based on the theoretical considerations above, and a control group:

-

Intervention group 1: Static-contrast approach (StatCont)

-

Intervention group 2: Dynamic approach (Dyn)

-

Intervention group 3: Boundary-example approach (Boundary)

-

Control group (ControlG)

The study was carried out by the third author during regular mathematics classes and lasted 45 minutes, including testing time. Each intervention had the same structure: introduction of the author, pretest (5 min), repetition of absolute and relative frequencies (5 min), actual intervention (25 min), posttest (5 min), farewell.

Pre- and posttest were administered as paper-and-pencil tests and answered individually by the students. Three weeks after the study, students received an invitation to participate online in a delayed posttest, including the chance to win 20 € as an incentive to participate.

4.2 Sample

The study was implemented in ten randomly sampled grade six classes in secondary schools (so-called “Gymnasium”) in Germany in the second half of the school year. According to the participating teachers, the eLLN had been introduced in these classes following the relevant curriculum around six months before the implementation of the study.

Overall, N = 256 (140 m, 114 f, 2 NA) students participated in the study (Table 2). Each of the three interventions was carried out with three different classes; one class served as a control group. The classes were assigned randomly to the experimental groups and control group.

As pre- and posttest were carried out in the same lesson, all participants took part in both tests. However, the delayed posttest could not be administered in regular lessons, so an online test was used. From a total of 76 students participating in the online test, only N = 64 could be matched to their pre-/posttest due to errors in their personal IDs.

4.3 Instruments

Three parallel sets of tasks were used for pre-, post-, and delayed posttest, each consisting of five tasks in a multiple-choice format. The structure of each set of tasks was identical and tasks only varied slightly. To safeguard that differences between pre- and posttest were not due to differences in their difficulty, Set 1 and Set 2 were counterbalanced and randomly assigned as pre- or posttest. Analysis showed no significant differences regarding their difficulty (t (254) = −0.652, p = 0.515). Set 3, which was used for the delayed posttest, was analog to Set 1, solely including slightly varied numbers for the tasks.

Based on the limited time for each test, we did not include items covering all possible foci of the eLLN based on the aspects from Table 1. In particular, we did not systematically vary the aspects of ‘estimation’ vs. ‘prediction’, ‘one’ vs. ‘multiple’, and ‘sample’ vs. ‘test series’, as (i) there was no a priori reason to assume any differential effectiveness of the interventions regarding these aspects, (ii) the variation of additional aspects might have led to unexplained variation and statistical noise covering the other effects, and (iii) for example items focusing on ‘one’ rather than on ‘multiple’ seemed more appropriate for grade six students based on prior research results (see Sedlmeier and Gigerenzer 1997).

Still, we included items characterized as ‘static-contrast, accuracy’, ‘static-contrast, tail’, and ‘dynamic’ (see Fig. 2 for example items) to cover those aspects of the eLLN which were assumed to be relevant regarding differential effectiveness of the interventions. Moreover, addressing these foci of the eLLN in our tests allowed us to not bias the results regarding the effectiveness of the interventions by using one specific type of item that may be better fitting for one of the interventions (e.g., only items characterized as ‘static-contrast’). As prior research showed large problems for students solving tasks one can characterize as ‘static-contrast’ (e.g., Fischbein and Schnarch 1997; Rasfeld 2004), and as tasks one can characterize as ‘accuracy’ had been reported as easier than tasks one can characterize as ‘tail’ (e.g., J. M. Watson and Moritz 2000a; Well et al. 1990), the main focus of each set of items was on the combination ‘static-contrast, tail’ with three items, whereas the combination ‘static-contrast, accuracy’ was only represented by one item. Concerning the dynamic view of the eLLN, one item characterized as ‘dynamic’ was included.

Finally, the focus on ‘static-contrast, tail’ was also done to better be able to assess participants’ use of the equal-ratio bias and to evaluate the effectiveness of the interventions regarding the framework by Stanovich (2018) not only based on the general solution rates but more specifically regarding the use of biases.

4.4 Design of the Interventions

In all three groups, as well as in the control group, the interventions were designed using a problem-based learning approach (for an overview see Savery 2006) to allow students to actively participate and work individually as well as in groups. Moreover, all interventions explicitly included multiple representations, which for example allowed to make important aspects of the data easily discernable (see for example Figs. 6 and 7). This was done to benefit from effects based on multiple representation learning (see also Ainsworth 2006; Schnotz 2005). Further, all materials were designed to be appealing and motivating for grade six students and at the same time challenging but not overwhelming. Finally, all content elements of the interventions had been aligned with the curriculum. Additionally, the concepts of absolute and relative frequency were repeated in all classes before the intervention to ensure that students had the necessary mathematical content knowledge at hand.

The main part of the interventions was a working phase focusing on the examination of the eLLN, which varied from group to group (see descriptions below). After the working phase, all groups shared their results with the entire class. Subsequently, important results were written down using similar formulations in all three intervention groups. Only the formulations used in the control group differed, as the control group did not work on the eLLN, but on the fairness of certain random games.

Throughout all three intervention groups, the design of the interventions was parallelized to keep the groups comparable. In particular, the amount of instruction, the time on task, as well as the roundup of what was learned in the interventions were controlled. Moreover, the main learning goals were identical in all intervention groups, as well as the formulation of the eLLN.

4.4.1 Static-Contrast Intervention

The instructional idea underlying the static-contrast intervention was based on the common idea (e.g., Biehler and Prömmel 2013; Krüger et al. 2015) to compare authentic data from a larger with a smaller sample, here more precisely monthly birth statistics. For this, a small city in the students’ region was compared with a larger city in the students’ region as well as with Germany as a whole. For the smaller city, relative frequencies of boys born varied between 20 and 67%, whereas they differed between 46 and 57% for the larger city and 51 to 52% for Germany as a whole. Based on the data, students were, amongst other things, asked to draw a scatterplot containing a month-relative frequency of boys for the smaller city and Germany (Fig. 6) and discuss the data and its variation. Thereby they were able to discover that the deviation of the observed relative frequency from the expected relative frequency is smaller for a bigger sample.

4.4.2 Dynamic Intervention

The intervention based on the dynamic approach used a simulation focusing on the random experiment of repeatedly rolling a die, which was introduced as an experiment structurally equivalent to births of children, that is each odd number represented a boy, every even number a girl. During the intervention, students were asked to roll five dice twenty times (equaling 100 rolls of a single die), write down their results for each roll, afterward calculate the relative frequency of boys regarding 5, 10, 15, …, 100 rolls, draw the respective number of births-relative frequency of boys scatterplot (Fig. 7) and finally compare and integrate them in a plenary discussion. The generation and comparison of the plot allowed students to discover that the deviation of the observed relative frequency from the expected relative frequency tends to decrease with a growing sample.

4.4.3 Boundary-example Intervention

The third intervention was based on the results by Weixler et al. (2019) regarding the longitudinal effects of maximum-deviation or boundary-example tasks. For this, well-known random experiments (rolling a die, tossing a coin) were introduced and students’ subjective evaluations of the likeliness of certain hypothetical events (e.g., rolling a die ten times and getting six 3 times; Fig. 8b) were gathered using a classroom response system and discussed subsequently. The presented events also included boundary examples (e.g., getting a six each time; Fig. 8a). After this initial work on the individual events, the students were also asked to first reflect individually and second discuss with a partner which of these events were most likely respectively most unlikely (Fig. 8b). The idea behind the various events was to introduce boundary-example tasks naturally within contexts students would know and to contrast them with other examples so that students could extract the relevant structural elements of the random experiments.

4.4.4 Control Group

Students in the control group worked on dice games throughout the intervention. The rule of the game was: ‘Two dice are rolled, and their values are summed up. Player A wins for the values 2 to 6 and player B for the values 7 to 11, value 12 equals a tie.’ Students were first asked to play this game for some time. Then the focus was put on the (un)fairness of this game variant and (later) on how the game could be changed to be fair. By doing so, Laplace experiments, possible outcomes, and the observed absolute and relative frequencies were discussed. The dice-games intervention was similarly activating as the dynamic intervention. Overall, no explicit connection to the eLLN was established within the control group.

4.5 Analyses

To address research question 1, a descriptive analysis of students’ pretest results was conducted, and all four groups were compared using a one-way ANOVA to determine any significant differences between groups. Moreover, students’ results regarding items characterized as ‘dynamic’, ‘static-contrast, tail’, and ‘static-contrast, accuracy’ were compared using descriptive data as well as a repeated-measures ANOVA. Finally, the frequency of answers based on an equal-ratio bias for items characterized as ‘static-contrast, tail’ was calculated.

For research question 2, a repeated-measures ANOVA was conducted, comparing the results from pre- and posttest with the groups as a between-subjects factor. Additionally, selected post-hoc tests were calculated to determine the effectiveness of each intervention. Research question 3 was answered based on the analysis from question 2 by comparing the pre- and posttest effect sizes, which were based on the marginal means.

To address research question 4, items characterized as ‘dynamic’, ‘static-contrast, tail’, and ‘static-contrast, accuracy’ were evaluated separately. For each type of item, the pre- and posttest scores were calculated and compared using a repeated-measures ANOVA with the groups as between-subjects factor. Additionally, selected post-hoc tests were calculated to determine the effectiveness of each intervention. Afterward, the frequency of answers based on an equal-ratio bias for items characterized as ‘static-contrast, tail’ was calculated and descriptively and statistically compared to the results from the pretest.

Finally, for research question 5, data from the delayed posttest was included and repeated-measures ANOVAs were calculated (i) to compare the differences between posttest and delayed posttest as well as (ii) the overall effects from pretest to delayed posttest.

5 Results

5.1 Students’ Success on eLLN Tasks Before the Intervention

The results from the pretest (Table 3) show that students had problems solving the items requiring the eLLN as the overall mean score was 31.64% (SD = 22.06%). However, results varied from 0 to 1, thus using the whole breadth of the scale. Descriptive statistics (Table 3) further show comparable results between all three experimental groups and the control group.

This finding is underlined by a one-way ANOVA on the pretest data using the groups as a between-subjects factor, which did not reveal significant differences (F (3,252) = 1.813, p = 0.150), thus highlighting the comparability of all groups before the intervention.

Pretest data was further analyzed regarding students’ success in items characterized as ‘dynamic’, ‘static-contrast, tail’, and ‘static-contrast, accuracy’. Results highlight (Table 4) that items characterized as ‘dynamic’ were much easier to solve than both types of items characterized as ‘static-contrast’. This is also supported by a highly significant repeated-measures ANOVA comparing the three types of items (F (2,510) = 84.466, p = 2.065e-32). Post-hoc tests showed significant pairwise differences between all types of items (p < 1.308e-3).

Besides the generally low solution rate for ‘static-contrast, tail’ items, data also show a high rate of answers based on the equal-ratio bias in the pretest as students selected the ‘is equally likely’-answer in 50.65% of all cases. A one-way ANOVA showed no significant differences between all four groups (F (3,252) = 1.302, p = 0.274) regarding the rate of equal-ratio bias.

5.2 Effectiveness of the Interventions

Across all groups (including the control group) the mean posttest score was 41.80% (SD = 26.71%) and thus approximately 10 percentage points higher than the pretest results. Descriptive results (Fig. 9) show that the mean score in all three intervention groups has increased; however, the increase for both data-driven intervention groups appears rather small compared to the increase in the boundary-example intervention group. Results for the control group show a slight decrease from pre- to posttest, which is not significant (t (26) = 0.593, p = 0.559).

Results from the repeated-measures ANOVA (Table 5) comparing pre- and posttest results with the different groups as a between-subjects factor show a significant main effect of time (F (1,252) = 0.700, p = 1.716e-6) with a medium to large effect size \({\eta }_{p}^{2}=0.087\), thus underlining the differences between pre- and posttest. More importantly, there is a significant interaction between group and time (F (3,252) = 9.812, p = 3.824e-6) with an even larger effect size \({\eta }_{p}^{2}=0.105\), thus showing the different effectiveness of the interventions.

Post-hoc comparisons were calculated to determine significant pre-post effects and estimate the effectiveness of each intervention. Results reveal a large effect of the boundary-example intervention (tBoundary = 7.983, p = 5.060e-14; dBoundary = 0.948) and small effects of the static-contrast intervention (tStatCont = 2.439, p = 0.015; dStatCont = 0.286) as well as the dynamic intervention (tDyn = 2.200, p = 0.029; dDyn = 0.263).

5.3 Differential Effectiveness Regarding Items Characterized as ‘Static-contrast’ and Items Characterized as ‘Dynamic’

To compare the differential effectiveness of each intervention regarding the different views of the eLLN, items characterized as ‘dynamic’, ‘static-contrast, tail’, and ‘static-contrast, accuracy’ were evaluated separately.

Results of a repeated-measures ANOVA on the item characterized as ‘dynamic’ neither reveal a significant main effect of time (F (1,252) = 0.090, p = 0.764, \({\eta }_{p}^{2}=0.000\)) nor a significant interaction of time and group (F (3,252) = 2.553, p = 0.056, \({\eta }_{p}^{2}=0.029\)). Post-hoc analyses further reveal insignificant pre-/posttest differences for all intervention groups with minimal to small effect sizes (t < 1.795, p > 0.061; d < 0.398).

Focusing on items characterized as ‘static-contrast, tail’, results (Fig. 10) reveal a significant main effect of time (F (1,252) = 22.365, p = 3.756e‑6, \({\eta }_{p}^{2}=0.082\)) and a significant interaction of time and group (F (3,252) = 16.695, p = 6.342e-10, \({\eta }_{p}^{2}=0.166\)). Post-hoc analyses reveal that differences can be mainly related to the boundary-example intervention, which shows a significant positive effect (tBoundary = 9.180, p = 1.624e-17; dBoundary = 1.068), whereas both data-driven interventions do not show a significant effect (tDyn = 0.886, p = 0.377; dDyn = 0.104 and tStatCont = 0.620, p = 0.536; dStatCont = 0.071).

Similarly, results for items characterized as ‘static-contrast, accuracy’ show a significant main effect of time (F (1,252) = 8.492, p = 0.004, \({\eta }_{p}^{2}=0.033\)); however, there is no significant interaction of time and group (F (3,252) = 2.184, p = 0.090, \({\eta }_{p}^{2}=0.025\)). Post-hoc analyses reveal no significant difference for the boundary-example intervention (tBoundary = 0.572, p = 0.568; dBoundary = 0.083). However, both data-driven interventions show a significant medium effect (tDyn = 3.453, p = 6.490e‑4; dDyn = 0.502 and tStatCont = 3.010, p = 2.876e‑3; dStatCont = 0.429).

Finally, an analysis of students’ use of the equal-ratio bias based on all students’ responses to items characterized as ‘static-contrast, tail’ reveals that the equal-ratio bias occurred less often in the posttest (38.02%) than in the pretest (50.65%). A repeated-measures ANOVA shows a significant main effect of time (F (1,252) = 18.776, p = 2.125e‑5, \({\eta }_{p}^{2}=0.069\)) as well as a significant interaction between time and group (F (3,252) = 7.644, p = 6.550e‑5, \({\eta }_{p}^{2}=0.083\)). Post-hoc analyses reveal a significant large effect (tBoundary = 7.018, p = 2.069e-11; dBoundary = 0.854) for the boundary-example intervention with only 18.86% of the answers in the posttest based on the equal-ratio bias. In comparison, effects for both data-driven interventions are insignificant or small (tDyn = 1.304, p = 0.193; dDyn = 0.160 and tStatCont = 2.132, p = 0.034; dStatCont = 0.256) and for both data-driven interventions more than 42.67% of the answers in the posttest were still based on the equal-ratio bias. For the control group, there was virtually no effect (tControlG = 0.001, p = 0.999; dControlG = 2.218e‑4,), underlining that there were no effects regarding the equal-ratio bias based on repeated testing.

5.4 Long-term Effectiveness of the Interventions

Descriptive results from the delayed posttest (N = 64) are comparable to those from the posttest with a mean of 46.61% (SD = 28.67); however, results vary heavily between the four groups, ranging from 58.06% (SD = 28.18) in the boundary-example group to 27.78% (SD = 9.62) in the control group (Fig. 11a).

For a more detailed analysis of the long-term effectiveness of the interventions a repeated-measures ANOVA comparing posttest to delayed posttest results was calculated based on the data of all participants of the delayed posttest (N = 64). Results do neither reveal a main effect of time (F (1,60) = 0.367, p = 0.547) nor a significant interaction effect of time and group (F (3,60) = 0.620, p = 0.605), showing a mostly constant and similar behavior of all four groups. Descriptively, the data reveals, that the boundary group stays on a high level, while both data-driven groups stay on a somewhat lower level, while the control group appears to decrease from posttest to delayed posttest.

Comparing the results from the pretest with the delayed posttest (Fig. 11b) to draw conclusions about the overall effectiveness of the interventions, a repeated-measures ANOVA reveals a significant main effect of time (F (1,60) = 4.184, p = 0.045), while there is no significant interaction effect of time and group (F (3,60) = 1.870, p = 0.144). Post-hoc tests reveal that only the boundary-example intervention shows a significant medium positive effect (tBoundary = 4.746, p = 1.329e‑5; dBoundary = 1.064), whereas the effects of both data-driven interventions are insignificant and lower (tStatCont = 1.274, p = 0.208; dStatCont = 0.375 and tDyn = 1.734, p = 0.088; dDyn = 0.625).

6 Discussion & Outlook

Successfully handling situations involving the empirical law of large numbers (eLLN), for example, when interpreting (statistical) data in daily life or when collecting data to create evidence for a claim, is an important learning goal within secondary school mathematics and an essential skill for responsible 21st century citizens in the age of fake news. However, research on the eLLN has repeatedly revealed that students of all ages have problems to successfully adhere to the eLLN, in particular regarding a sensitivity to sample size. So far, this has often been addressed by research using the hospital problem (see Kahneman and Tversky 1972) and the concept of intuition (Fischbein 1975, 1999). However, research has mostly focused on describing learners’ status quo; systematic empirical research that contrasts different approaches to support students in their sensitivity to sample size in the context of the eLLN has been scarce so far.

The presented study expands prior research regarding three key points: First, a framework by Stanovich (2018) for describing human cognition in the context of heuristics and biases tasks has been used instead of using the rather vague concept of intuition. Second, three interventions, each based on central approaches suggested by prior research to support students in the context of the eLLN, have been contrasted using an empirical study to examine their effectiveness in supporting students when handling tasks that require the eLLN and especially sensitivity to sample size. Third, instead of either (i) solely focusing on variants of the hospital problem one can characterize as ‘static-contrast, tail’, or (ii) unsystematically using tasks corresponding to different aspects of the eLLN (see Table 1) either in the examined interventions or in their evaluation (e.g., Biehler 2014; Schnell 2018), three distinct aspects (‘dynamic’, ‘static-contrast, tail’, ‘static-contrast, accuracy’) of the eLLN were explicitly investigated to cover different aspects of the eLLN and investigate the effectiveness of the approaches regarding each of these aspects specifically. While Table 1 also includes other aspects such as prediction vs. estimation, which would have been interesting to investigate and might in principle even lead to more systematic results, there are currently no hypotheses regarding differential effects of the intervention approaches regarding these aspects and the highly increased number of items might have had negative motivational and statistical effects.

The study is based on the data of N = 256 students from grade six of German “Gymnasium”, a rather high attaining type of secondary school. All students had covered the eLLN in their regular mathematics lessons and thus one can assume they at least partially possessed the relevant knowledge to handle eLLN problems. Still, pretest results show a rather low average score of about 32%. Moreover, the answer ‘equally likely’ was selected in items characterized as ‘static-contrast, tail’ by more than 50% of all students. Based on this information, research based on the concept of intuition would have likely concluded that there is an intuition prohibiting students from correctly answering tasks regarding the eLLN (judgment focusing on the ‘high’ number of ‘equally likely’ answers). Still, this would conflict with other studies showing high solution rates (e.g., Sedlmeier and Gigerenzer 1997) providing evidence for an intuition for the eLLN. Overall, data would be inconclusive.

However, based on the framework by Stanovich (2018), data can be interpreted more satisfactorily: In the pretest, students in our sample seem to be at Stages 2–3 of the eLLN instantiation continuum; they have learned the eLLN (thus are not at Stage 1) but either did not realize the conflict between their wrong answer and the eLLN or did not activate the relevant knowledge (although realizing the conflict). In particular, an automatized knowledge regarding equal ratios may have been easier at hand (system 1 processing) for approximately half of the sample than applying the relevant knowledge to the problem using system 2 processing.

The interpretation of the data using Stanovich’s framework is even more supported by the results of the posttest, which showed i) a significant medium to large effect of the approaches underlining that students can be supported in adhering to the eLLN, ii) an even larger interaction effect showing the different effectiveness of the interventions, and iii) a decrease in ‘equally likely’ answers, especially for the boundary intervention. In terms of intuitions, these results would be difficult to interpret, especially based on the conclusion from the pretest that students have an intuition prohibiting the successful use of the eLLN. However, in terms of Stanovich’s framework with multiple stages (rather than a binary existence of an intuition), the improvements show that a certain share of students has advanced to higher stages, hence making the activation of relevant knowledge and thus a correct answer more likely.

Comparing the three approaches, the boundary-example approach showed the highest pretest-posttest effect size dBoundary = 0.948, a large effect size, which is remarkable for an intervention of approximately 25 min. However, the result is in line with the results by Weixler et al. (2019) and the educational expectancies regarding boundary examples by A. Watson and Mason (2002).

Examining the effects more closely based on the different types of items, the effectiveness of the boundary-example approach can be mostly related to a vastly higher effectiveness regarding items characterized as ‘static-contrast, tail’ (see Fig. 10) compared to both data-driven approaches. As the boundary-example approach focused on corresponding tasks, this is not surprising. However, it is surprising that all three approaches showed comparable effects regarding items characterized as ‘dynamic’ as well as items characterized as ‘static-contrast, accuracy’, as there were no significant interactions regarding time and group for both types of items.

Finally, the rate of ‘equally likely’-answers in the posttest, indicating an equal-ratio bias, remained above 42% for both data-driven approaches. However, for the boundary-example approach, the rate of ‘equally likely’-answers decreased to below 19% and at the same time, the rate of normatively correct responses increased remarkably. Concerning the framework by Stanovich (2018), we conclude that many students in this group, which were initially at Stages 2–3, improved to Stage 4 (or even 5). Based on the boundary-example approach, students were able to override the ‘equally likely’-answer and use relevant knowledge to correctly answer the items. In contrast, the vast majority of the students in the data-driven approach groups remained at Stages 2–3 of the continuum regarding the instantiation of the eLLN.

These conclusions are further underpinned by the delayed posttest that was conducted approximately three weeks after the intervention. Although there is no significant interaction effect of time and group (which may be due to the reduced sample size in the delayed posttest and accordingly high variances), descriptive data and effect sizes from posttest to delayed posttest and pretest to delayed posttest give a clear indication of the effects: whereas both data-driven approaches only show nonsignificant positive effects from pretest to delayed posttest, the boundary-example approach shows a significant large effect and thus constitutes the only approach that created long(er) lasting effects. This may mirror the students’ improvement to Stage 4 (or even 5) based on the boundary-example approach, whereas students from the data-driven approaches rather stayed at Stages 2–3.

In summary, the framework by Stanovich (2018) appears very well suited to describe the data from this study and to interpret the effectiveness of the interventions. In particular, it is educationally more promising than focusing on intuitions, which appear less susceptible to teaching. In contrast, the eLLN instantiation continuum by Stanovich offers a clear educative perspective that—as data from this study has underlined—can also be tackled effectively with short interventions.

Analog to findings from prior studies (Rasfeld 2004; Well et al. 1990), in particular comparisons between studies, which are always hard to interpret, our direct comparison of three different types of tasks focusing on the eLLN has revealed significant differences in the pretest: the task characterized as ‘dynamic’ was answered significantly better than those characterized as ‘static-contrast’, where in turn tasks characterized as ‘accuracy’ were solved significantly better than tasks characterized as ‘tail’ (see Table 4). Accordingly, it is not surprising that, although all interventions had positive effects from pretest to posttest, effects mainly relate to the tasks characterized as ‘static-contrast’ and here mainly to the type characterized as ‘static-contrast, tail’. It is however surprising that, although both data-driven interventions focused on different views of the eLLN (StatCont: static-contrast view, Dyn: dynamic view), both appear to have mostly the same overall effectiveness as well as effectiveness regarding the three types of tasks. In contrast to the boundary-example intervention, both data-driven interventions also did not seem to be particularly effective regarding the equal-ratio bias. This questions both approaches, in particular as the boundary-example intervention is quite lightweight and easy to implement. But although the effectiveness of the boundary-example intervention parallels the results by Weixler et al. (2019), more research is needed to underpin the results from this study and replicate the effectiveness of the boundary-example approach, especially regarding long-term effectiveness and other implementations of the approach.

A corresponding independent replication study could also address the two main limitations of this study, which are (i) the specific implementation of the approaches in the interventions, which only tentatively allows conclusions from the specific interventions to the approaches in general, and (ii) the low participation in the delayed posttest, which makes the results regarding the long-term effectiveness of the approaches hard to interpret. In particular, a comparison of equal-ratio-based answers in the delayed posttest did not seem appropriate given the low number of participants in the control group, although that could have been very informative regarding the effectiveness of the approaches and the interpretation of results regarding the different stages corresponding to the framework by Stanovich (2018). Still, data from pre- and posttest show conclusive results in line with prior research, in particular the study by Weixler et al. (2019) and results regarding differences between the three types of items. Moreover, all main research questions could be reasonably addressed using the existing data. Also, effect sizes regarding the differences between pre-/posttest and delayed posttest may be able to provide first hints regarding long-term effectiveness until more data is available.