Abstract

Rough set theory is a powerful mathematical technique for decision making that can be applied to feature selection and rule induction from uncertain and ambiguous data. The attribute significance degree is one of the fundamental metrics used to measure the information contained in each attribute. However, in most existing rough set based methods, some attributes share identical or zero significance degrees, which does not reflect realistic scenarios. Motivated by this, we improve the attribute significance degree of rough sets. Based on the new attribute significance degree, we present a new method of supplier evaluation and selection that addresses the problems in current research, and we verify its soundness and effectiveness with a case study.

1 Introduction

The selection of suppliers is a vital part of the production and operation activities of enterprises. How to evaluate and select suppliers scientifically and effectively has become the key to the healthy operation and development of enterprises. Thus, the evaluation and selection of suppliers have been a matter of optimized decision-making of great interest to the academia and industrial sectors.

Currently, supplier evaluation and selection methods are broadly divided into three categories: qualitative, quantitative, and mixed qualitative and quantitative methodologies. Commonly adopted methods include the expert judgment method, cost method, mathematical programming approach, multivariate statistical analysis method, analytic hierarchy process method, neural network algorithm, genetic algorithm, and fuzzy evaluation method. These methods have two main problems. First, they are highly subjective: because of the evaluator's own subjective judgment, the evaluation contains many uncertainties that directly affect the accuracy of the results. Second, the evaluation indexes are vague and difficult to quantify, and they still need to be screened with more objective and scientific methods. Owing to these problems, a more scientific and effective method is needed for supplier evaluation and selection.

Rough set theory, proposed by Pawlak, is a powerful mathematical tool for processing incomplete and uncertain knowledge, which makes it a feasible way to select cooperating suppliers. By exploiting rough set techniques, many researchers have paid much attention to discovering the underlying knowledge, such as significant attributes [1,2,3]. The main difference between rough sets and other methods of dealing with uncertain problems is that rough sets do not require any prior information beyond the data being processed, and thus fully reflect the objectivity of data processing. The effectiveness of rough sets has been proven in many successful applications in science and engineering, and rough sets are one of the current research hotspots in artificial intelligence theory and its applications. In recent years, many scholars have taken advantage of the objectivity of rough set data processing and applied it to the comprehensive evaluation of multiple indicators [4,5,6,7]. Some studies adopting rough sets have also appeared in enterprise supplier evaluation and selection research [8,9,10,11]. In these studies, rough set theory is mainly used to calculate the weight of each evaluation index through the rough set attribute significance degree, which overcomes the subjective factors in determining index weights in traditional evaluation methods. However, such studies are few, and the existing ones are superficial, incomplete, and lacking in rigor.

The rough set attribute significance degree defined by Miao et al. [4] fails to consider the influence of the interaction between attributes on the attribute significance degree. In addition, unreasonable cases emerged in applications, in which calculated index weights were zero or several index weights were identical [8, 9]. Sun [12] analyzed a variety of existing rough set weight determination methods, improved the rough set attribute significance degree, and presented an attribute weight determination method based on it; Sun and An [13] applied this method to the determination of land grading factor weights and achieved more satisfactory results. However, in the improvement by Sun [12], assigning the sum of the increased part of the combined attribute significance degree to each attribute according to the mean value is not precise enough, and the degree to which other attributes influence the significance degree of a single attribute is not considered. This paper takes this factor into account and uses a weighted assignment method to further improve the rough set attribute significance degree of Sun [12]. It then applies the new attribute significance degree to supplier evaluation and selection to address the problems in [8, 9], so that the evaluation methodology is more scientific and effective.

This paper is organized as follows. First, it introduces the current research status of the attribute significance degree and its application in enterprise supplier evaluation and selection. Second, in view of the problems in current research, it improves the attribute significance degree and studies the properties of the new attribute significance degree. Third, it presents the method and steps for applying the new attribute significance degree to enterprise supplier evaluation and selection and provides an empirical analysis.

2 Basic concepts

2.1 The equivalence relation

Binary relation: Let U be a set and let R be a subset of \(U \times U\), i.e., \(R \subseteq U \times U\); then R is called a binary relation on U.

Equivalence relation: Let R be a binary relation on U. If R is reflexive, symmetric, and transitive, then R is called an equivalence relation on U.

Equivalence classes: Let R be an equivalence relation on U. The set of all elements of U related to an element x by R is called the equivalence class of x, denoted by \(\left[ x \right]_{R}\). When only one relation is considered, the subscript can be omitted and the class is abbreviated as \(\left[ x \right]\).

Cardinal number: The total number of elements in a set R is called the cardinal number of the set, denoted as \(\left| R \right|\).

2.2 Information systems

An information system is a tuple \(S = \left( {U,A,V,f} \right)\), where U denotes a finite nonempty set of objects, also called the domain; \(A = C \cup D\) is a set of attributes, with subsets C and D called the set of condition attributes and the set of decision attributes, respectively; \(V = \bigcup\limits_{a \in A} {V_{a} }\) is the union of the attribute value domains; and \(f:U \times A \to V\) is an information function that assigns an attribute value to each object x in U. For each attribute subset \(B \subseteq A\), an indiscernibility relation (which is an equivalence relation) \(IND\left( B \right)\) is defined as follows:

\[IND\left( B \right) = \left\{ {\left( {x,y} \right) \in U \times U:f\left( {x,b} \right) = f\left( {y,b} \right),\;\forall b \in B} \right\}\]

Clearly, the equivalence relation \(IND\left( B \right) = \bigcap\nolimits_{b \in B} {IND\left( {\left\{ b \right\}} \right)}\) constitutes a partition of U, denoted as \(U/IND\left( B \right)\), and abbreviated as \(U/B\). Thus \(S = \left( {U,A,V,f} \right)\) is also described as a decision system.
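The partition \(U/B\) can be illustrated with a minimal Python sketch. The function name `partition`, the dictionary layout of the table, and the toy data are assumptions made for illustration; they do not come from the paper. Objects are grouped by their tuple of values on the attributes in B, so two objects fall into the same block exactly when they agree on every attribute of B.

```python
from collections import defaultdict

def partition(table, attrs):
    """Return U/IND(attrs) as a list of sets of object ids."""
    blocks = defaultdict(set)
    for obj, row in table.items():
        # Objects with identical values on all attrs are indiscernible.
        key = tuple(row[a] for a in attrs)
        blocks[key].add(obj)
    return list(blocks.values())

# Hypothetical toy decision table (not taken from the paper).
table = {
    "x1": {"c1": 1, "c2": 0, "d": "yes"},
    "x2": {"c1": 1, "c2": 0, "d": "no"},
    "x3": {"c1": 0, "c2": 1, "d": "no"},
    "x4": {"c1": 0, "c2": 0, "d": "no"},
}

blocks_c1 = partition(table, ["c1"])
blocks_c1c2 = partition(table, ["c1", "c2"])
```

Here `blocks_c1` has two blocks and `blocks_c1c2` has three, which reflects that adding attributes can only refine the partition.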

2.3 Rough sets

Pawlak [1] gives the following definitions. Given a finite nonempty set U called the domain, an equivalence relation R on U, and any subset \(X \subseteq U\):

The set \(R_{*} \left( X \right) = \cup \left\{ {Y \in U/R:Y \subseteq X} \right\}\) is defined as the lower approximation set of X with respect to R, \(POS_{R} \left( X \right) = R_{*} \left( X \right)\) is also called R positive region of X; if \(x \in POS_{R} \left( X \right)\), then \(x\) is discernible on R.

The set \(R^{*} \left( X \right) = \cup \left\{ {Y \in U/R:Y \cap X \ne \emptyset } \right\}\) is defined as the upper approximation set of X with respect to R; \(NEG_{R} \left( X \right) = U - R^{ * } \left( X \right)\) is called the R negative region of X.

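The lower and upper approximation sets of X can also be written in the following equivalent forms:

\[R_{*} \left( X \right) = \left\{ {x \in U:\left[ x \right]_{R} \subseteq X} \right\},\quad R^{*} \left( X \right) = \left\{ {x \in U:\left[ x \right]_{R} \cap X \ne \emptyset } \right\}\]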

\(BN_{R} \left( X \right) = R^{ * } \left( X \right) - R_{ * } \left( X \right)\) is called the R-borderline region of X; X is called R-definable if and only if \(R^{ * } \left( X \right) = R_{ * } \left( X \right)\); otherwise, \(R^{ * } \left( X \right) \ne R_{ * } \left( X \right)\) and X is rough with respect to R.

Equivalently, \(BN_{R} \left( X \right)\) consists of the objects that, on the basis of R, can be classified neither as certainly belonging to X nor as certainly not belonging to X.
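The approximations and regions defined in this subsection can be sketched in a few lines of Python. The function name `lower_upper` and the example sets are hypothetical; the partition \(U/R\) is assumed to be given as a list of blocks.

```python
def lower_upper(blocks, X):
    """Lower and upper approximations of X for a partition U/R."""
    X = set(X)
    lower, upper = set(), set()
    for Y in blocks:
        if Y <= X:      # block entirely inside X
            lower |= Y
        if Y & X:       # block meets X
            upper |= Y
    return lower, upper

# Hypothetical example (not from the paper).
U = {"x1", "x2", "x3", "x4", "x5"}
blocks = [{"x1", "x2"}, {"x3"}, {"x4", "x5"}]
X = {"x1", "x3", "x4", "x5"}

lower, upper = lower_upper(blocks, X)
boundary = upper - lower   # BN_R(X)
negative = U - upper       # NEG_R(X)
```

In this example the block `{"x1", "x2"}` only partly overlaps X, so it ends up in the borderline region and X is rough with respect to R.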

3 On attribute significance degree

In this section, the existing significance degrees are discussed and analyzed. Then, to alleviate the weaknesses of the current significance degrees, we propose an improved significance degree. Finally, based on the proposed significance degree, an attribute reduction algorithm is proposed.

3.1 Discussions on attribute significance degree

In rough set theory, attribute significance has been defined from different perspectives according to the needs of real applications. We review these definitions and analyze their characteristics.

(1) Dependency degree-based significance degree

In the classical rough sets proposed by Pawlak, the significance degree of an attribute subset \(B \subseteq C\) measures the change in dependency degree after removing B from the original attribute set C, which can be expressed as:

where \(\gamma_{C} \left( D \right) = \frac{{\left| {POS_{C} \left( D \right)} \right|}}{\left| U \right|}\), \(\left| * \right|\) represents cardinal number of set \(*\), \(POS_{C} \left( D \right) = \cup_{X \in U/D} C_{ * } \left( X \right)\).

This definition indicates that the more samples become indiscernible after removing B, the more the dependency degree decreases, and hence the more important B is in C.
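A minimal Python sketch of this measure is given below. It assumes the common form \(\gamma_{C} \left( D \right) - \gamma_{C - B} \left( D \right)\) for the dependency-based significance degree; the function names and the toy decision table are hypothetical and only serve to illustrate the computation.

```python
from collections import defaultdict

def partition(table, attrs):
    """Blocks of U/IND(attrs); an empty attrs list gives the single block U."""
    blocks = defaultdict(set)
    for obj, row in table.items():
        blocks[tuple(row[a] for a in attrs)].add(obj)
    return list(blocks.values())

def gamma(table, cond, dec):
    """Dependency degree |POS_cond(dec)| / |U|."""
    dec_blocks = partition(table, dec)
    pos = sum(len(Y) for Y in partition(table, cond)
              if any(Y <= X for X in dec_blocks))
    return pos / len(table)

def sig_dependency(table, C, D, B):
    """Assumed form: gamma_C(D) - gamma_{C-B}(D)."""
    rest = [c for c in C if c not in B]
    return gamma(table, C, D) - gamma(table, rest, D)

# Hypothetical table in which c3 duplicates c2.
table = {
    "x1": {"c1": 0, "c2": 0, "c3": 0, "d": 0},
    "x2": {"c1": 0, "c2": 1, "c3": 1, "d": 1},
    "x3": {"c1": 1, "c2": 0, "c3": 0, "d": 1},
    "x4": {"c1": 1, "c2": 1, "c3": 1, "d": 1},
}
C, D = ["c1", "c2", "c3"], ["d"]
sig = {c: sig_dependency(table, C, D, [c]) for c in C}
```

On this table `c2` and `c3` both receive significance 0 because each can stand in for the other, which is the kind of tie that motivates the improved measure discussed later.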

Furthermore, based on the concept of dependency degree, Hu and Cercone [14] defined the significance degree of internal/external attribute sets. Specifically, the internal significance degree is used to discover core attributes and the external significance degree is used to rank the significance degree of attributes. Their definition is presented as follows:

Additionally, Chen et al. [15] improved the classical definition of significance degree with supplementary measure of binary granule of the finite set. The improved significance degree of attribute a is defined as follows:

The aforementioned attribute significance degrees, which are defined on the positive region and the dependency degree, have the following characteristics.

- They require a large amount of computation, because the computational complexity is \(O(n)\).

- With a dependency degree-based significance degree, multiple attributes may share the same significance degree value.

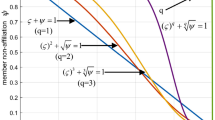

(2) Granularity based significance degree

In recent years, some researchers measure the significance degree by leveraging knowledge granularity.

Miao et al. [4] proposed a significance degree measure by leveraging the change of knowledge granularity. Their definition is presented as follows:

where \(U/C = \left\{ {X_{1} ,X_{2} , \ldots ,X_{m} } \right\}\), \(GP_{U} \left( C \right) = \sum\nolimits_{i = 1}^{m} {\frac{{\left| {X_{i} } \right|^{2} }}{{\left| U \right|^{2} }}}\) denotes the knowledge granularity of C, and \(GP_{U} \left( {\left. D \right|B} \right)\) denotes the relative knowledge granularity of D with respect to B \(\left( {B \subseteq C} \right)\).

This definition indicates that the finer the knowledge granularity becomes after adding an attribute, the more significant the attribute is. This definition shares its meaning with the definitions based on dependency degree, i.e., both measure the change that occurs when an attribute is added. However, here the significance degree is defined on granularity rather than on the positive region.
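The knowledge granularity \(GP_{U} \left( C \right) = \sum\nolimits_{i} {\left| {X_{i} } \right|^{2} /\left| U \right|^{2} }\) can be computed directly from the partition. The sketch below only evaluates this quantity, not the full significance measure; the function names and the toy table are assumptions, and exact fractions are used to avoid rounding.

```python
from collections import defaultdict
from fractions import Fraction

def partition(table, attrs):
    """Blocks of U/IND(attrs)."""
    blocks = defaultdict(set)
    for obj, row in table.items():
        blocks[tuple(row[a] for a in attrs)].add(obj)
    return list(blocks.values())

def granularity(table, attrs):
    """GP_U(attrs) = sum over blocks of |X_i|^2 / |U|^2."""
    n = len(table)
    return sum(Fraction(len(X) ** 2, n ** 2) for X in partition(table, attrs))

# Hypothetical toy table (not from the paper).
table = {
    "x1": {"c1": 0, "c2": 0},
    "x2": {"c1": 0, "c2": 1},
    "x3": {"c1": 1, "c2": 0},
    "x4": {"c1": 1, "c2": 1},
}
gp_none = granularity(table, [])            # coarsest knowledge
gp_c1 = granularity(table, ["c1"])
gp_c1c2 = granularity(table, ["c1", "c2"])  # finest knowledge here
```

The value drops from 1 to 1/2 to 1/4 as attributes are added, so a larger drop corresponds to a finer partition.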

(3) Entropy based significance degree

Besides dependency degree and knowledge granularity, entropy is also used to measure the information in information systems. Slezak and Wang et al. used the change of conditional entropy to measure the significance degree of an attribute with respect to an attribute subset [16,17,18], and exploited it in attribute reduction.

The entropy-based attribute significance degree is then presented as follows:

where B is a subset of condition attributes, D is a set of decision attributes, \(H\left( {\left. D \right|B} \right)\) denotes the conditional entropy.

Compared with the dependency degree-based attribute significance degree, the entropy-based one is more efficient to compute. Qian et al. [19] extended the concept of attribute significance degree in Formula (5) to measure the constructed granule order. They proposed the concept of positive region approximation and accelerated the process of positive region reduction.
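As a rough illustration of the quantity involved, the sketch below computes a conditional entropy \(H\left( {\left. D \right|B} \right)\) in the Shannon form with base-2 logarithms. This choice of entropy is an assumption, since several entropy variants are used in the rough set literature; the function name and the toy data are hypothetical as well.

```python
import math
from collections import Counter, defaultdict

def conditional_entropy(rows, B, D):
    """Shannon conditional entropy H(D | B) in bits (assumed form)."""
    n = len(rows)
    groups = defaultdict(Counter)
    for row in rows:
        b_key = tuple(row[a] for a in B)
        d_key = tuple(row[d] for d in D)
        groups[b_key][d_key] += 1
    h = 0.0
    for counts in groups.values():
        size = sum(counts.values())
        for k in counts.values():
            p = k / size  # p(decision class | block of U/B)
            h -= (size / n) * p * math.log2(p)
    return h

# Hypothetical toy data (not from the paper).
rows = [
    {"c1": 0, "c2": 0, "d": 0},
    {"c1": 0, "c2": 1, "d": 1},
    {"c1": 1, "c2": 0, "d": 1},
    {"c1": 1, "c2": 1, "d": 1},
]
h_empty = conditional_entropy(rows, [], ["d"])
h_c1 = conditional_entropy(rows, ["c1"], ["d"])
h_c1c2 = conditional_entropy(rows, ["c1", "c2"], ["d"])
```

The entropy decreases as attributes are added to B, and the size of each decrease is what an entropy-based significance degree would compare across attributes.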

(4) Equivalence relation-based significance degree

Some significance degrees are defined on equivalence relations and are then used in incremental attribute reduction. In [20], Jing et al. proposed an attribute significance degree based on the change of the equivalence relation after adding attributes, measured for example by the change in the values of the elements of the relation matrix.

For the information system \(S = \left( {U,A,V,f} \right)\), let the equivalence relation matrices based on different attribute sets be \(M_{U}^{{R_{C} }}\), \(M_{U}^{{R_{{\left( {C - \left\{ a \right\}} \right)}} }}\), \(M_{U}^{{R_{{\left( {C \cup D} \right)}} }}\) and \(M_{U}^{{R_{{\left( {\left( {C - \left\{ a \right\}} \right) \cup D} \right)}} }}\). For \(\forall a \in C\), the matrix-based internal significance degree of a in C is expressed as follows:

where \(\overline{{M_{U}^{{R_{C} }} }} = \sum\nolimits_{i = 1}^{n} {\sum\nolimits_{j = 1}^{n} {\frac{{m_{ij} }}{{n^{2} }}} } = \frac{{Sum\left( {M_{U}^{{R_{C} }} } \right)}}{{n^{2} }}\) is the mean value of the matrix \(M_{U}^{{R_{C} }}\), and \(\overline{{M_{U}^{{R_{{\left( {C - \left\{ a \right\}} \right)}} }} }}\) and \(\overline{{M_{U}^{{R_{{\left( {\left( {C - \left\{ a \right\}} \right) \cup D} \right)}} }} }}\) are the mean values of the matrices \(M_{U}^{{R_{{\left( {C - \left\{ a \right\}} \right)}} }}\) and \(M_{U}^{{R_{{\left( {\left( {C - \left\{ a \right\}} \right) \cup D} \right)}} }}\), respectively. If \(C_{0} \subseteq C\), the relation matrices are \(M_{U}^{{R_{{C_{0} }} }}\), \(M_{U}^{{R_{{\left( {C_{0} \cup \left\{ a \right\}} \right)}} }}\), \(M_{U}^{{R_{{\left( {C_{0} \cup D} \right)}} }}\) and \(M_{U}^{{R_{{\left( {\left( {C_{0} \cup \left\{ a \right\}} \right) \cup D} \right)}} }}\), respectively. For \(\forall a \in \left( {C - C_{0} } \right)\), the matrix-based external significance degree of a in C is expressed as follows:

where \(\overline{{M_{U}^{{R_{{C_{0} }} }} }}\), \(\overline{{M_{U}^{{R_{{\left( {C_{0} \cup D} \right)}} }} }}\), \(\overline{{M_{U}^{{R_{{\left( {C_{0} \cup \left\{ a \right\}} \right)}} }} }}\) and \(\overline{{M_{U}^{{R_{{\left( {\left( {C_{0} \cup \left\{ a \right\}} \right) \cup D} \right)}} }} }}\) are the mean values of the matrices \(M_{U}^{{R_{{C_{0} }} }}\), \(M_{U}^{{R_{{\left( {C_{0} \cup D} \right)}} }}\), \(M_{U}^{{R_{{\left( {C_{0} \cup \left\{ a \right\}} \right)}} }}\) and \(M_{U}^{{R_{{\left( {\left( {C_{0} \cup \left\{ a \right\}} \right) \cup D} \right)}} }}\), respectively.
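The matrix mean used in these definitions can be sketched as follows. The sketch assumes that the relation matrix has entry \(m_{ij} = 1\) when objects i and j are indiscernible on the given attributes and 0 otherwise; the function names and the data are hypothetical. Only the mean \(Sum\left( M \right)/n^{2}\) is computed, not the significance degrees themselves.

```python
def relation_matrix(rows, attrs):
    """0/1 matrix with m_ij = 1 iff objects i and j agree on all attrs."""
    keys = [tuple(r[a] for a in attrs) for r in rows]
    return [[1 if ki == kj else 0 for kj in keys] for ki in keys]

def matrix_mean(M):
    """Mean of all entries: Sum(M) / n^2."""
    n = len(M)
    return sum(map(sum, M)) / (n * n)

# Hypothetical toy data (not from the paper).
rows = [
    {"c1": 0, "c2": 0},
    {"c1": 0, "c2": 1},
    {"c1": 1, "c2": 0},
    {"c1": 1, "c2": 1},
]
mean_c1 = matrix_mean(relation_matrix(rows, ["c1"]))
mean_c1c2 = matrix_mean(relation_matrix(rows, ["c1", "c2"]))
```

Under this assumption the number of ones in the matrix equals \(\sum\nolimits_{i} {\left| {X_{i} } \right|^{2} }\) over the blocks of the partition, so the mean shrinks as the partition becomes finer.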

(5) Condition attribute-based significance degree

There is also a simple but effective way to define the significance degree. Miao et al. [4] proposed a definition of attribute significance based on condition attributes.

Given an information system \(S = \left( {U,A,V,f} \right)\), where \(A = C \cup D\), \(R \subseteq C\) is an attribute subset, and \(c \in C\) is a condition attribute, the attribute significance degree of c with respect to R is defined as follows:

where \(\left| R \right| = \left| {IND\left( R \right)} \right|\), and if \(U/IND\left( R \right) = U/R = \left\{ {X_{1} ,X_{2} , \ldots ,X_{n} } \right\}\), then \(\left| R \right| = \left| {IND\left( R \right)} \right| = \sum\limits_{i = 1}^{n} {\left| {X_{i} } \right|^{2} }\).

This definition considers the significance degree of c to R.

(6) Significance degree based on various rough set models

Most of the significance degrees reviewed above are defined and used in classical rough set models. Significance degrees have also been defined in various other rough set models. Jin et al. [21] extended the entropy-based significance degree to dominance-equivalence relation rough set models; they defined the attribute significance degree based on four evaluation functions and conducted attribute reduction in different types of inconsistent decision tables. In the neighborhood rough set, Hu et al. [22] proposed an attribute significance degree based on dependency degree in a form similar to Eq. (2), which is a direct extension of the dependency degree in classical rough set models. Wang et al. [23] used the basic form of the entropy-based external attribute significance degree under the neighborhood relation. This method only modifies the definition of conditional entropy and generates a reduction by adding attributes one by one, starting from the core attributes, which makes reduction more efficient.

3.2 An improved attribute significance degree

Considering the influence of attributes themselves and the interaction between attributes on attribute significance degree, Sun [12] proposed the following new definition of attribute significance degree in terms of formula (7).

Let \(S = \left( {U,A,V,f} \right)\) be an information system, where \(A = C \cup D\), \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{n} } \right\}\), \(R \subseteq C\) is an attribute subset, and \(c_{i} \in C\;\left( {1 \le i \le n} \right)\) is a condition attribute. The significance degree of \(c_{i}\) with respect to R is defined as follows:

This definition builds on the significance degree based on condition attributes and solves the problems that arise when applying the rough set attribute significance degree proposed by Miao et al. [4]. However, it still has limitations. Specifically, assigning the sum of the increased part of the pairwise combined attribute significance degree, i.e., \(Sig_{R} \left( {c_{i} ,c_{j} } \right)\), to each attribute according to the mean value is not precise enough. Additionally, the degree to which other attributes influence the significance of a single attribute is not considered. To narrow this gap, we improve the significance degree by using a weighted assignment method.

Definition 1

Let \(S = \left( {U,A,V,f} \right)\) be an information system, where \(A = C \cup D\), \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{n} } \right\}\), \(R \subseteq C\) is an attribute subset, and \(c_{i} \in C\;\left( {1 \le i \le n} \right)\) is a condition attribute. The significance degree of \(c_{i}\) with respect to R is defined as follows:

where

\(Sig_{R} \left( {c_{i} ,c_{j} } \right)\) represents the attribute significance degree \(Sig_{R} \left( {\left\{ {c_{i} ,c_{j} } \right\}} \right)\) of the attribute subset \(\left\{ {c_{i} ,c_{j} } \right\}\) with respect to the relation R.

According to Definition 1, if there exists a \(j_{0} \left( {j_{0} \ne i} \right)\) satisfying \(Sig_{R} \left( {c_{i} ,c_{{j_{0} }} } \right) - Sig_{R} \left( {c_{i} } \right) > 0\), then \(Sig^{\prime\prime}_{R} \left( {c_{i} } \right)\) is influenced by attribute \(c_{{j_{0} }}\); otherwise, \(Sig^{\prime\prime}_{R} \left( {c_{i} } \right)\) is not influenced by any other attribute \(c_{j}\). Hence, our improved significance degree is more reasonable.

In Definition 1, we fully consider the influence of the significance degree of pairwise combined attributes on the significance degree of a single attribute: the definition provides a way to assign to a single attribute its share of the increase in the significance degree of the combined attributes. We also consider the degree to which other attributes influence the significance of a single attribute: the weight coefficient \(\alpha_{ij}\) (\(0 \le \alpha_{ij} \le 1\)) is used to allocate and adjust this share, so that the calculation of the attribute significance degree is more scientific and reasonable.

In the following, we describe the properties of our improved significance degree.

Proposition 1

In an information system \(S = \left( {U,A,V,f} \right)\), \(A = C \cup D\), \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{n} } \right\}\) and \(R \subseteq C\) is an attribute subset, if there exists \(i_{0}\) (\(1 \le i_{0} \le n\)) satisfying \(Sig_{R} \left( {c_{{i_{0} }} } \right) = 0\), then

Proof

According to the given condition, \(Sig_{R} \left( {c_{{i_{0} }} } \right) = 0\) and \(\alpha_{ij} = \frac{{Sig_{R} \left( {c_{i} ,c_{j} } \right) - Sig_{R} \left( {c_{i} } \right)}}{{\sum\limits_{j = 1}^{n} {\left[ {Sig_{R} \left( {c_{i} ,c_{j} } \right) - Sig_{R} \left( {c_{i} } \right)} \right]} }}\;\left( {1 \le i \le n,\;1 \le j \le n} \right)\); then

Proposition 1 shows that when the value of the significance degree defined in Formula (7) is zero, our improved one is not. Hence, our improved significance degree can measure the inherent information contributed by adding an attribute, which helps to capture more informative and more significant attributes in dimension reduction. Furthermore, our improved significance degree has the following property.

Proposition 2

In an information system \(S = \left( {U,A,V,f} \right)\), \(A = C \cup D\), \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{n} } \right\}\) and \(R \subseteq C\) is an attribute subset. For \(c_{{i_{0} }} \in C\) satisfying \(Sig_{R} \left( {c_{{i_{0} }} } \right) = 0\), we have

If there exists \(j_{0}\) (\(1 \le j_{0} \le n\), \(j_{0} \ne i_{0}\)) such that \(Sig_{R} \left( {c_{{i_{0} }} ,c_{{j_{0} }} } \right) > 0\), then \(Sig^{\prime\prime}_{R} \left( {c_{{i_{0} }} } \right) > 0\).

Proof

then

Since

We have

□

By Proposition 2, we have the following results.

- In short, Proposition 2 shows that if \(Sig_{R} \left( {c_{{i_{0} }} } \right) = 0\) and \(Sig_{R} \left( {c_{{i_{0} }} ,c_{j} } \right) = 0\) for all \(j\left( {1 \le j \le n} \right)\), then \(Sig^{\prime\prime}_{R} \left( {c_{{i_{0} }} } \right) = 0\).

- This result reveals that the proposed significance degree can be used to remove redundant attributes in dimension reduction, since attributes with \(Sig^{\prime\prime}_{R} \left( {c_{{i_{0} }} } \right) = 0\) are redundant.

- This result shows that the proposed significance degree is more discriminating than formula (7). Accordingly, many attributes regarded as redundant by formula (7) are not redundant when measured by the improved significance degree. In other words, the proposed significance degree is more sensitive to the information contained in attributes.

In fact, this result gives us a criterion for attribute reduction: if \(Sig^{\prime\prime}_{R} \left( {c_{{i_{0} }} } \right) = 0\), then attribute \(c_{{i_{0} }}\) can be removed; otherwise, it cannot. In the following subsection, we introduce attribute reduction based on our improved significance degree.

3.3 Applications based on improved significance degree

In this subsection, we mainly discuss the applications based on significance degree. One application is to sort the attributes according to our improved significance degree. The other application is to reduce the redundant attributes from all the condition attributes.

(1) Attribute Rank

By Propositions 1 and 2, we have shown that our proposed significance degree is sensitive to the inherent information contained in the attributes. It is suitable for sorting attributes because it avoids multiple attributes with zero significance. In the following, we define the rank of attributes according to the improved significance degree.

Definition 2

(Attribute rank) Let \(S = \left( {U,A,V,f} \right)\) be an information system, where \(A = C \cup D\), \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{n} } \right\}\), and \(B \subseteq C\). For any \(1 \le i,j \le n\), if \(Sig^{\prime\prime}_{B} \left( {c_{i} } \right) > Sig^{\prime\prime}_{B} \left( {c_{j} } \right)\), then we say that the rank of attribute \(c_{i}\) is higher than that of \(c_{j}\), denoted by \(Q_{i} > Q_{j}\).

By Definition 2, an algorithm to sort all the attributes can be designed as follows.

By using Algorithm 1, all the attributes in an information system can be sorted. Note that if only part of the attributes are to be sorted, conditioned on a given subset R, the significance degree should be updated to \(Sig^{\prime\prime}_{R} \left( {c_{i} } \right)\).
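The ranking in Definition 2 amounts to sorting attributes by their significance values in descending order. The sketch below is not a reproduction of Algorithm 1; it assumes the values \(Sig^{\prime\prime}_{B} \left( {c_{i} } \right)\) have already been computed, and the function name, the numbers, and the tie-breaking by attribute name are all hypothetical.

```python
def rank_attributes(sig):
    """Attribute names sorted from highest to lowest significance.

    Ties are broken by attribute name so the order is deterministic.
    """
    return sorted(sig, key=lambda c: (-sig[c], c))

# Hypothetical significance values, standing in for Sig''_B(c_i).
sig_values = {"c1": 0.30, "c2": 0.10, "c3": 0.45, "c4": 0.15}
ranking = rank_attributes(sig_values)
```

With these values the ranking is `c3`, `c1`, `c4`, `c2`, which corresponds to \(Q_{3} > Q_{1} > Q_{4} > Q_{2}\).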

(2) Attribute reduction

It is another useful application of rough set technique to reduce redundant attributes by using significance degree. Our proposed significance degree is also suitable for attribute reduction.

We describe the definition of attribute reduction by leveraging significance degree as follows.

Definition 3

(Attribute reduction) Let \(S = \left( {U,A,V,f} \right)\) be an information system, where \(A = C \cup D\) and \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{n} } \right\}\). For \(B \subseteq C\), if

(1) \(Sig^{\prime\prime}_{B} \left( c \right) = 0\) for any \(c \in C - B\), and

(2) \(Sig^{\prime\prime}_{{B - \left\{ b \right\}}} \left( b \right) > 0\) for any \(b \in B\),

then B is called an attribute reduction of C.

Proposition 3

In an information system \(S = \left( {U,A,V,f} \right)\), where \(A = C \cup D\) and \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{n} } \right\}\), given \(P \subseteq C\), the following statements are equivalent.

(1) For any \(c \in C - P\), \(Sig^{\prime\prime}_{P} \left( c \right) = 0\) holds;

(2) P is a superset of a reduction.

Proof

This is a straightforward consequence of condition (1) of Definition 3.

According to Proposition 3, \(Sig^{\prime\prime}_{P} \left( c \right) = 0\) can be used as a stopping criterion of an attribute reduction algorithm. Additionally, Proposition 3 indicates that a subset satisfying \(Sig^{\prime\prime}_{P} \left( c \right) = 0\) may not be a minimal subset; it may still contain some redundant attributes.

Proposition 4

In an information system \(S = \left( {U,A,V,f} \right)\), where \(A = C \cup D\) and \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{n} } \right\}\), given \(P \subseteq C\), the following statements are equivalent.

(1) There exists \(c \in C - P\) satisfying \(Sig^{\prime\prime}_{P} \left( c \right) > 0\);

(2) P is a subset of a reduction.

Proof

This is a straightforward consequence of condition (2) of Definition 3.

By Proposition 4, a subset P of attributes for which some \(c \in C - P\) satisfies \(Sig^{\prime\prime}_{P} \left( c \right) > 0\) has not yet reached a reduction, because some important attributes are still not covered by it. Hence, more attributes should be added to P.

By Definition 3 and Propositions 3 and 4, an attribute reduction is a minimal subset of condition attributes that contains all the indiscernibility information in the information system. Moreover, Definition 3 shows that every attribute contained in a reduction is informative and cannot be removed.

Next, we design an algorithm of attribute reduction by leveraging the improved significance degree as follows.

Steps 4–7 get the informative attributes by exploiting a forward adding strategy. Steps 9–13 remove the redundant attributes by a backward deleting strategy according to the results in Proposition 3. Consequently, Algorithm 2 yields a reduction satisfying the requirements of Definition 3.
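The forward-adding and backward-deleting strategy can be sketched in Python as follows. This is not a reproduction of Algorithm 2: the significance function is passed in as a parameter, and the default `gain` uses the dependency-degree increase \(\gamma_{B \cup \left\{ c \right\}} \left( D \right) - \gamma_{B} \left( D \right)\) purely as a stand-in for \(Sig^{\prime\prime}_{B} \left( c \right)\). All names and the toy table are hypothetical.

```python
from collections import defaultdict

def partition(table, attrs):
    """Blocks of U/IND(attrs); an empty attrs list gives the single block U."""
    blocks = defaultdict(set)
    for obj, row in table.items():
        blocks[tuple(row[a] for a in attrs)].add(obj)
    return list(blocks.values())

def gamma(table, cond, dec):
    """Dependency degree |POS_cond(dec)| / |U|."""
    dec_blocks = partition(table, dec)
    pos = sum(len(Y) for Y in partition(table, cond)
              if any(Y <= X for X in dec_blocks))
    return pos / len(table)

def gain(table, B, c, D):
    """Stand-in for Sig''_B(c): dependency gained by adding c to B."""
    return gamma(table, B + [c], D) - gamma(table, B, D)

def reduce_attributes(table, C, D, sig=gain):
    B = []
    # Forward phase: add the most significant attribute until none helps.
    while True:
        scores = {c: sig(table, B, c, D) for c in C if c not in B}
        best = max(scores, key=scores.get, default=None)
        if best is None or scores[best] <= 0:
            break
        B.append(best)
    # Backward phase: drop attributes that are redundant given the rest.
    for b in list(B):
        rest = [x for x in B if x != b]
        if sig(table, rest, b, D) <= 0:
            B = rest
    return B

# Hypothetical table in which c3 duplicates c2.
table = {
    "x1": {"c1": 0, "c2": 0, "c3": 0, "d": 0},
    "x2": {"c1": 0, "c2": 1, "c3": 1, "d": 1},
    "x3": {"c1": 1, "c2": 0, "c3": 0, "d": 1},
    "x4": {"c1": 1, "c2": 1, "c3": 1, "d": 1},
}
reduct = reduce_attributes(table, ["c1", "c2", "c3"], ["d"])
```

The forward loop stops when every remaining attribute has zero significance, mirroring condition (1) of Definition 3, and the backward loop checks condition (2) for each selected attribute. Supplying a different `sig` function would let the same skeleton run with the improved significance degree.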

4 Supplier evaluation and selection indicator determination

4.1 Supplier evaluation selection criterion and principles

Supplier evaluation and selection are based on multiple objectives composed of both qualitative and quantitative factors. There are many criteria for supplier evaluation and selection, and the core elements are quality, cost, and delivery time. Short-term criteria include product quality, cost, price, delivery capability, and service level. Long-term criteria include production capacity, financial status, stability, and credibility. Enterprises should follow the principles of being systematic and comprehensive, scientific and practical, qualitative and quantitative, and expandable.

4.2 The supplier evaluation indicator system

Supplier evaluation and selection should be combined with the actual situation of the enterprise to determine the supplier evaluation indicator system. The following six factors should be considered for supplier selection.

(1) Price. The price of raw materials provided by corporate suppliers will influence the cost of corporate products, so the price is the main factor in supplier selection. High-quality and inexpensive products are pursued by all enterprises, but the price is not necessarily the lower the better.

(2) Quality. Quality is the most basic condition in the supply process, while whether the value is reciprocal is determined by whether the quality is up to par. The quality of raw materials and the corresponding R&D technical level constitute key conditions for the selection by purchasers. When the quality is not up to standard, the commodities will be less viable in the market and gradually eliminated from the market. The selection of quality is also determined by reality. The best quality is not necessarily the most appropriate. Different companies have different quality standards. Companies should purchase products of the most appropriate quality at the lowest price.

(3) On-time delivery. This means that the supplier will deliver the required products to the warehouse on time according to the company’s demand as specified in the contract. To improve efficiency, quite a few companies have implemented on-time production or zero inventory, so the delivery must be on time.

(4) Geographical location of suppliers. Geographic location is a direct factor in purchasing costs. The location of the supplier has an impact on delivery time, transportation costs, and response time for emergency orders and expedited services. In addition, from the perspective of the supply chain and zero inventory, suppliers that are geographically closer should be selected when other conditions are equal.

(5) After-sales service. After-sales service is a continuation of the procurement process and is an important element to ensure the continuity of procurement. In the selection of suppliers, after-sales service is a key factor. After-sales services include use instructions, technical consulting, tracing services, provision of components, and maintenance & repair. In the process of procurement, these services will play a vital role in the selection process.

(6) Other factors. Other influence factors include supply capacity, corporate reputation, technical level, financial status, internal management, and inventory levels.

The basis and standards for corporate evaluation and selection of suppliers is the integrated evaluation indicator system for supplier evaluation and selection. Thus, establishing a scientific, reasonable, and practical supplier comprehensive evaluation indicator system is the prerequisite for the scientific evaluation and selection of suppliers. However, companies in different industries vary in product demand, scale, and operation environment, so they also have different requirements on suppliers. Companies should determine their appropriate comprehensive evaluation indicator system for supplier selection.

The supplier evaluation indicator system of companies usually consists of product quality, pricing structure, on-time delivery, geographic location, after-sales services, technical capability, and supply capability. The evaluation indicators vary according to the difference in corporate development directions. For instance, if a company is dominant in the market of technology, technical capability is the main indicator for evaluating and selecting suppliers, followed by product quality, pricing structure, on-time delivery, and after-sales service. However, for companies that outperform in cost, price structure is the primary indicator, and a comprehensive balance of quality, technology, on-time delivery, and after-sales service is required.

5 Simulation and analysis

5.1 Basic steps of corporate supplier evaluation and selection based on rough sets

Basic steps of corporate supplier evaluation and selection based on rough sets are listed below:

Step 1: Determine the indicator system of supplier evaluation \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{n} } \right\}\).

Step 2: Determine candidate suppliers.

Step 3: Collect the historical evaluation sample data of each evaluation indicator of candidate suppliers in the supplier evaluation indicator system.

Step 4: Discretize the data of each evaluation indicator of the candidate suppliers and construct the information system decision table of the suppliers.

Step 5: Calculate the significance degree of each indicator \(c_{i}\) in the indicator system \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{n} } \right\}\) according to the formula of attribute significance degree (9).

Step 6: Perform attribute reduction according to algorithm 1 and algorithm 2. Then calculate the weight of each indicator of the evaluation system according to the following equation.

Step 7: Calculate the evaluation value of m candidate suppliers according to the following formula.

where \(w\left( {c_{i} } \right)\) denotes the weight of indicator i, \(V_{i}\) denotes the value of indicator i for supplier k, and \(Q_{k}\) denotes the overall evaluation value of supplier k.

The suppliers are ranked by their values of \(Q_{k}\). The supplier with the highest value is the best supplier to be selected.
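Steps 6 and 7 can be sketched in Python as follows. The equations for the weights and for \(Q_{k}\) are not reproduced in this text, so the sketch makes two assumptions that should be checked against the original formulas: weights are obtained by normalising the significance degrees so that they sum to 1, and \(Q_{k}\) is the weighted sum of the indicator values of supplier k. The function names and the toy data are hypothetical.

```python
from typing import Dict, List, Tuple


def normalise_weights(significance: Dict[str, float]) -> Dict[str, float]:
    """Scale significance degrees so the weights sum to 1 (assumed rule)."""
    total = sum(significance.values())
    if total <= 0:
        raise ValueError("at least one significance degree must be positive")
    return {c: s / total for c, s in significance.items()}


def evaluate(weights: Dict[str, float], values: Dict[str, float]) -> float:
    """Assumed form of Step 7: Q_k = sum over i of w(c_i) * V_i."""
    return sum(weights[c] * values[c] for c in weights)


def rank_suppliers(
    weights: Dict[str, float], suppliers: Dict[str, Dict[str, float]]
) -> List[Tuple[str, float]]:
    """Return (supplier, Q_k) pairs sorted from highest to lowest Q_k."""
    scores = {k: evaluate(weights, v) for k, v in suppliers.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical significance degrees and discretized indicator values.
significance = {"c1": 5, "c2": 3, "c3": 2}
suppliers = {
    "A": {"c1": 3, "c2": 1, "c3": 2},
    "B": {"c1": 2, "c2": 3, "c3": 3},
    "C": {"c1": 1, "c2": 2, "c3": 1},
}

weights = normalise_weights(significance)
ranking = rank_suppliers(weights, suppliers)
print([name for name, _ in ranking])  # ['B', 'A', 'C']
```

Keeping normalisation, scoring, and ranking in separate functions makes it easy to swap in a different weight rule without touching the ranking step.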

5.2 Empirical analysis

The study takes as its research object the eight most competitive candidates to become partners of an enterprise [8]. We use \(U = \left( {1,2,3,4,5,6,7,8} \right)\) to denote the research object (domain), where each of the eight numbers denotes a candidate company. \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{7} } \right\}\) is the supply chain partner selection indicator system, and \(c_{i}\) (\(1 \le i \le 7\)) denotes the i-th indicator attribute. Each \(c_{i}\) corresponds to exactly one indicator: \(c_{1}\), \(c_{2}\), \(c_{3}\), \(c_{4}\), \(c_{5}\), \(c_{6}\), and \(c_{7}\) represent delivery stability, quality compliance, product price, geographic location, technical capability, information system flexibility, and market response, respectively. C is the set of condition attributes, which represents the supply chain partner selection indicator system; D is the decision attribute, which represents the historical evaluation results of the candidate cooperative enterprises. The decision table of the information system after discretization in [8] is shown in Table 1.

In Table 1, candidates 1 and 3 have identical condition attribute values but different decision results, so they are treated as invalid data. Removing the invalid data gives Table 2.
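The check for invalid data described above can be sketched as follows: objects are grouped by their condition attribute values, and any group that contains more than one decision value is flagged. The table below is a hypothetical toy example, not the data of Table 1, and the function name is an assumption made for illustration.

```python
from collections import defaultdict
from typing import Dict, Hashable, Set, Tuple

Row = Tuple[Tuple[int, ...], int]  # (condition attribute values, decision value)


def inconsistent_objects(table: Dict[Hashable, Row]) -> Set[Hashable]:
    """Objects that share condition values with another object but differ in decision."""
    decisions_by_condition = defaultdict(set)
    objects_by_condition = defaultdict(list)
    for obj, (condition, decision) in table.items():
        decisions_by_condition[condition].add(decision)
        objects_by_condition[condition].append(obj)

    conflicted: Set[Hashable] = set()
    for condition, decisions in decisions_by_condition.items():
        if len(decisions) > 1:  # same conditions, different decisions
            conflicted.update(objects_by_condition[condition])
    return conflicted


# Hypothetical toy decision table.
table = {
    1: ((1, 2, 1), 1),
    2: ((2, 1, 1), 0),
    3: ((1, 2, 1), 0),  # same conditions as object 1, different decision
    4: ((2, 2, 3), 1),
}

invalid = inconsistent_objects(table)
cleaned = {obj: row for obj, row in table.items() if obj not in invalid}
print(sorted(invalid))  # [1, 3]
print(sorted(cleaned))  # [2, 4]
```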

In the decision table shown in Table 1, \(C = \left\{ {c_{1} ,c_{2} ,c_{3} ,c_{4} ,c_{5} ,c_{6} ,c_{7} } \right\}\) is the set of condition attributes, let

We can calculate the following conclusions (see Table 3).

Using new significance degree formula (9) below:

We can calculate the following results.

Applying algorithm 2, we find that no attribute can be reduced.

The weights of each indicator of the supplier evaluation selection indicator system \(C = \left\{ {c_{1} ,c_{2} , \ldots c_{7} } \right\}\) are calculated according to the formula

where

This gives \(w\left( {c_{1} } \right) = 0.40\), \(w\left( {c_{2} } \right) = 0.09\), \(w\left( {c_{3} } \right) = 0.09\), \(w\left( {c_{4} } \right) = 0.09\), \(w\left( {c_{5} } \right) = 0.09\), \(w\left( {c_{6} } \right) = 0.15\), \(w\left( {c_{7} } \right) = 0.10\).
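As a quick arithmetic check on the reported weights (added here as a sanity check, not part of the original derivation):

\[
\sum_{i=1}^{7} w\left( c_{i} \right) = 0.40 + 4 \times 0.09 + 0.15 + 0.10 = 1.01
\]

The total exceeds 1 by 0.01. This would be consistent with each weight having been rounded to two decimal places, under the assumption that the weights are meant to sum to 1; the weight formula is not reproduced in this text, so that assumption cannot be confirmed here.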

According to the following formula

We can obtain the evaluation value of candidate suppliers as follows

Then, we can rank \(Q_{k}\) (\(1 \le k \le 8\)) in descending order of value

Therefore, the best supplier for the enterprise is 8, followed by 4. The enterprise can choose a supplier based on the above sequence. This result is basically consistent with the actual decision made by the company (See Table 1).

Table 4 compares the new and original attribute significance degrees calculated above:

Table 4 shows that four attributes have a significance degree of zero when calculated with the original attribute significance degree. Yet these evaluation indicators were screened as necessary indicators, so a significance degree of zero is not in line with reality. With the new attribute significance degree, no attribute has a significance degree of zero. Moreover, the two attributes \(c_{1}\) and \(c_{6}\) receive the same significance degree under the original measure but different values under the new one, which avoids the unreasonable situation in which several attributes share an identical significance degree. The conclusions obtained from the simulation analysis are consistent with the actual results.
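To illustrate how a necessary attribute can receive a significance degree of zero, the sketch below uses the standard positive-region dependency from rough set theory, \(sig(c) = \gamma_{C}(D) - \gamma_{C - \{c\}}(D)\). It is assumed here that this corresponds to what the text calls the original attribute significance degree; the new formula (9) is not reproduced in this text and is not implemented. The toy table and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def dependency(rows: List[Dict[str, int]], attrs: Sequence[str], decision: str) -> float:
    """gamma_B(D): share of objects whose B-equivalence class has a single decision value."""
    classes = defaultdict(list)
    for row in rows:
        classes[tuple(row[a] for a in attrs)].append(row[decision])
    positive = sum(len(ds) for ds in classes.values() if len(set(ds)) == 1)
    return positive / len(rows)


def classical_significance(
    rows: List[Dict[str, int]], attrs: Sequence[str], decision: str
) -> Dict[str, float]:
    """sig(c) = gamma_C(D) - gamma_{C - {c}}(D) for every condition attribute c."""
    full = dependency(rows, attrs, decision)
    return {
        a: full - dependency(rows, [b for b in attrs if b != a], decision)
        for a in attrs
    }


# Hypothetical toy table: d depends on a and b jointly, and c duplicates a.
rows = [
    {"a": 0, "b": 0, "c": 0, "d": 0},
    {"a": 0, "b": 1, "c": 0, "d": 1},
    {"a": 1, "b": 0, "c": 1, "d": 1},
    {"a": 1, "b": 1, "c": 1, "d": 0},
]

sig = classical_significance(rows, ["a", "b", "c"], "d")
print(sig)  # {'a': 0.0, 'b': 1.0, 'c': 0.0}
```

In this toy table, a and c each score zero because the other can stand in for it when one is removed, even though the decision cannot be determined without at least one of them. A measure that looks only at single-attribute removal therefore understates their contribution.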

To address the problems that arise when the attribute significance degree is applied in practice, we have considered the influence of interactions between attributes on decision making and improved the attribute significance degree by using the combined attribute significance degree. The improved measure takes the degree of interaction between attributes into account through a weighted-average design. The supplier evaluation and selection method based on the new attribute significance degree avoids the situation in [8], where some necessary indicators receive zero weight, and addresses the irrationality in [9], where multiple indicators are equally weighted. The method relies on objective data, and in the simulation analysis its results were consistent with the company's actual decisions.

6 Conclusions

The improved definition of the attribute significance degree of rough sets proposed here considers only the influence of the double-attribute significance degree on the single-attribute significance degree. In fact, the significance degree of combinations of more than two attributes also affects the significance degree of a single attribute; this is a topic for our future research.

References

Pawlak Z (1982) Rough sets. Int J Comput Inform Sci 11:341–356

Qing L (2001) Rough sets and rough reasoning. Science Press, Beijing (in Chinese)

Zhang Xiuwen Wu, Weizhi LJ (2001) Rough set theory and methods. Science Press, Beijing (in Chinese)

Miao DQ, Fan SD (2002) The calculation of knowledge granulation and its application. Syst Eng-Theory Pract 22(1):48–56

Wang H (2003) The method of ascertaining weight based on rough sets theory. Comput Eng Appl 36:20–21

Cao XY, Liang JG (2002) The method of ascertaining attribute weight based on rough sets theory. Chin J Manag Sci 10(5):98–100

Bao X, Liu Y (2009) A new method of ascertaining attribute weight based on rough sets theory. Chin J Manag 6(6):507–510

Zhou AF, Chen ZY, Liu LC (2007) How to choose the sc partner based on rough set. Log Technol 26(8):178–181

Bao XZ, Sun Y (2010) Multi-attribute decision for selecting suppliers of spare parts in metallurgical enterprises based on the rough set theory. Beijing Keji Daxue Xuebao/J Univ Sci Technol Beijing 32(8):1078–1084

Jingui Z, Shizhou W, Jun Z (2020) Selection and evaluation of third-party logistics suppliers based on rough set of probability dominance relations. Log Eng Manag 42(11):10–12

Jiuhe W, Huan L, Hui G (2021) A study of cold chain logistics service provider selection based on rough PSO-BP neural network. Ind Eng J 24(2):10–18

Limin S (2014) Improved method of attribute weight based on rough sets theory. Comput Eng Appl 5(50):43–45

Limin S, Zhiyan An (2013) A new method to the determination of the land grading factors’ weight based on rough set. Sci Technol Eng 33(13):1098–10101

Hu XH, Cercone N (1995) Learning in relational databases: a rough set approach. Int J Comput Intell 11(03):323–338

Zhenyu Chen, Xiaohong Zhang (2017) An improved attribute significance measure based on rough set. In: ICNC-FSKD, vol 2017. pp 1203–1209

Slezak D (2002) Approximate entropy reducts. Fund Inf 53(3–4):365–439

Wang GY, Yu H, Yang DC (2002) Decision table reduction based on conditional information entropy. Chin J Comput 25(7):759–766

Liang JY, Chin KS, Dang CY, Yam Richid CM (2002) A new method for measuring uncertainty and fuzziness in rough set theory. Int J Gen Syst 31(4):331–342

Qian Y, Liang J, Pedrycz W, Dang C (2010) Positive approximation: an accelerator for attribute reduction in rough set theory. Artif Intell 174(9–10):597–618

Jing Y, Li T, Hamido F, Wang B, Cheng N (2018) An incremental attribute reduction method for dynamic data mining. Inf Sci 465:202–218

Jin Y, Yan L, Qiang H (2016) A fast positive-region reduction method based on dominance-equivalence relations. In: 2016 International Conference on machine learning and cybernetics (ICMLC), vol 1. IEEE, pp 152–157

Hu Q, Yu D, Liu J, Wu C (2008) Neighborhood rough set based heterogeneous feature subset selection. Inf Sci Int J 178(18):3577–3594

Wang C, Hu Q, Wang X, Chen D, Qian Y (2018) Feature selection based on neighborhood discrimination index. IEEE Trans Neural Netw Learn Syst 29(7):2986–2999

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sun, X., Sun, L. On attribute importance measure and its application to supplier selection. Int. J. Mach. Learn. & Cyber. 13, 1167–1178 (2022). https://doi.org/10.1007/s13042-022-01510-0

DOI: https://doi.org/10.1007/s13042-022-01510-0