Abstract

Sleep posture is closely related to sleep quality and can offer insights into an individual’s health. This relationship can potentially aid in the early detection of mental health disorders such as depression and anxiety. Current research focuses on embedding pressure sensors in bedsheets, attaching accelerometers to a subject’s chest, and installing cameras in bedrooms for sleep posture monitoring. However, such solutions sacrifice either the user's sleep comfort or privacy. This study explores the effectiveness of contactless ultra-wideband (UWB) sensors for sleep posture monitoring. We employed a UWB dataset composed of measurements from 12 volunteers during sleep. A stacking ensemble learning method with two levels of learning is introduced for monitoring sleep postural transitions. At the base-learner level, six transfer learning models (VGG16, ResNet50V2, MobileNetV2, DenseNet121, VGG19, and ResNet101V2) are trained on the training dataset to produce initial predictions. Logistic regression is then employed as a meta-learner, trained on the predictions of the base learners, to obtain the final sleep postural transitions. In addition, a sleep posture monitoring algorithm is presented that can give accurate statistics of total sleep postural transitions. Extensive experiments are conducted, achieving a highest accuracy of 86.7% for the classification of sleep postural transitions. Moreover, time-series data augmentation is employed, which improves the accuracy by 13%. The privacy-preserving sleep monitoring solution presented in this paper holds promise for applications in mental health research.


1 Introduction

During sleep, people adopt different sleep postures, i.e., lateral (right or left side), supine, or prone. Several studies have shown that body movements and postures during sleep are related to physical and mental health. In particular, sleep posture is a marker of disease progression: it can indicate the progression of Alzheimer’s disease (AD) and Parkinson’s disease (PD). For instance, sleep in the supine or prone position contributes to the accumulation of unwanted proteins (biochemical fluid) in the brain associated with AD (Brzecka et al. 2018). Similarly, fewer sleep postural transitions were found in patients diagnosed with PD (Uchino et al. 2017). In addition, improper sleep posture can be fatal; for example, sleep in the prone position can lead to the sudden death of epilepsy patients (Liebenthal et al. 2015). These examples show the importance of an automated sleep posture monitoring system. Such a system can prompt caregivers to adjust the sleep posture of a patient and provide overall sleep posture statistics to doctors for better patient management.

Technological advancements in the Internet of things (IoT) and machine learning (ML) have been used to create several sleep monitoring systems (Fallmann and Chen 2019). These systems can be divided into two main categories. The first category employs wearable and on-bed sensors, which cause discomfort while sleeping (Guillodo et al. 2020). The second category employs contactless vision-based sensors. Vision-based sensors use a camera and an ML algorithm to record videos and extract sleep postures. However, deploying cameras in users’ bedrooms is privacy-intrusive, and their accuracy in monitoring body posture can be poor. For example, vision-based sensors typically fail when the body is covered with a blanket or the lighting is low. An ideal system should be non-intrusive, contactless, and work under low illumination. Recent research in wireless signals has paved the way for the use of ultra-wideband (UWB) technology in sleep posture monitoring. UWB radar allows contactless patient monitoring: patients do not need to wear any sensor on their body.

In this research, we employ a UWB dataset and develop an ML model which outperforms the recently published framework (Piriyajitakonkij et al. 2020) for the classification of sleep postural transitions. Our ML model employs the processing of time-domain features through a pre-trained deep convolutional neural network (DCNN). We combine the predictions through stacking ensemble learning to optimize the results, which significantly enhances the accuracy of sleep postural transitions.

This paper provides the following contributions:

1. Investigation of the links between sleep postures and mental health.

2. Development of a state-of-the-art ML model for the classification of sleep postural transitions that outperforms a recently published framework (Piriyajitakonkij et al. 2020) by 13 percentage points in accuracy.

3. Development of a sleep posture monitoring algorithm that can give accurate statistics of total sleep postural transitions.

1.1 Sleep posture linkage with neurological and sleep disorders

The development of many neurological diseases, including Parkinson's disease (PD), Alzheimer's disease (AD), and dementia, is associated with aging, because the production of proteins and the removal of protein waste are impaired in the aging brain (Kress et al. 2014). The brain produces proteins for its functioning, and once they have done their work they need to be cleared from the brain. Abnormal deposition of amyloid-β (Aβ) (Murphy and LeVine 2010) and α-synuclein (Snyder and Wolozin 2004) in the brain triggers a cascade of events leading to neuroinflammation and neuronal cell death, which are associated with AD and PD, respectively. During the night, sleep serves a vital function in removing protein waste from the brain through the glymphatic system. Body posture contributes to the proper functioning of this system. The lateral position, which is the most common during sleep, was found to be the best for the removal of protein waste from the brain (Lee et al. 2015). In addition, sleep posture has been associated with sleep quality (Cary et al. 2021), and poor sleep quality is a common feature of sleep disorders such as sleep apnea (Sleep Quality: How to Determine if You’re Getting Poor Sleep, Sleep Foundation 2021). Accordingly, monitoring sleep postures may give useful insights into the progression of AD, PD, and sleep disorders.

1.2 Sleep posture linkage with mental health

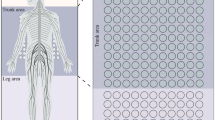

We have discussed how sleep postures can impact the progression of AD and PD. Both diseases affect physical and mental health. AD is known as the main cause of dementia (Brzecka et al. 2018), which can deteriorate mental health. People with early stages of AD are prone to depression, and with the progression of this disease, memory loss worsens, and decision-making becomes more difficult. Neuropsychiatric conditions such as depression, anxiety, etc., are the core features of AD, which are noticeable during the development, and throughout the span of illness (Lyketsos et al. 2011). Progression of AD is linked with cognitive decline, which eventually results in loss of independence and a shorter survival period. Moreover, Tori et al. (2020) studied the relationship between dementia and psychiatric disorders and found that subjects with dementia were more likely to suffer from at least one psychiatric disorder. As compared with the general population, depression and other mental illnesses are common in patients suffering from PD (Ishihara and Brayne 2006). Similarly, researchers observed that patients suffering from PD are also diagnosed with anxiety and major depressive disorder (Alamri 2015). In terms of sleep disorders, Pye et al. (2021) found an increased level of physical activity during sleep and a lower sleep regularity index in depressed subjects. Figure 1 shows the linkage of sleep postures with mental health.

Table 1 summarizes the linkage of sleep posture with mental and physical health conditions. On-bed sleep postures and sleep postural transitions can give useful insights into the progression of neurological disorders and can help diagnose potential mental illness in patients. The studies discussed above suggest that individuals who sleep in supine or prone positions may be more likely to suffer from mental health issues than those who sleep in lateral positions.

2 Related work

Research work on sleep posture monitoring is categorized into wearable posture monitoring and contactless posture monitoring methods.

2.1 Wearable posture monitoring

The wearable sleep monitoring methods mostly consist of smart band and smartwatch devices equipped with sensors to track breathing, heart rate, and oxygen saturation among others (Li et al. 2017). Some devices are also equipped with an accelerometer to estimate sleep postures. For example, Qian et al. (2015) and Chang and Liu (2011) used an accelerometer to monitor sleep postures. They attached an accelerometer to the subject’s chest and determined the person’s orientation by combining the acceleration along three different axes. These monitoring devices are however invasive and cumbersome as users need to wear a device on their bodies during sleep. Therefore, non-wearable sleep posture monitoring is preferred.

2.2 Contactless posture monitoring

Contactless posture monitoring methods are categorized as on-bed sensing, vision sensing, and wireless sensing. On-bed sensing consists of sensors that are embedded into the bed, such as pressure mats (Liu et al. 2014; Hsia et al. 2008; Ostadabbas et al. 2014; Pouyan et al. 2013; Xu et al. 2015). However, patients’ movement may shift the placement of pressure mat, which needs to be re-adjusted for accurate sleep posture monitoring. Vision-based sensing employs infrared or normal cameras for data collection. Cameras record videos to monitor users’ sleep for the prediction of posture (Grimm et al. 2016; Liu and Ostadabbas 2017; Akbarian et al. 2019). However, cameras are privacy intrusive. Normal cameras require ambient light for their efficient working. Infrared and normal cameras cannot monitor sleep while a blanket is on (Deng et al. 2018).

In contrast, radio frequency sensors can detect human movements even through walls owing to their high penetration ability (Adib et al. 2015). These sensors work without privacy breaches and do not require ambient light. A few studies have employed radar-based systems for sleep posture monitoring (Piriyajitakonkij et al. 2020; Yue et al. 2020; Barsocchi 2013; Liu et al. 2018). They analysed the collected data and processed them with ML algorithms to detect sleep postures and postural transitions. Moreover, UWB gave promising results and offers several benefits: it can work through a wall due to its high penetration properties, provides a high degree of security with a low probability of detection, and delivers greater signal strength in adverse situations (Introduction to Ultra-Wideband Communications 2022).

3 Proposed model

3.1 Overview of UWB sensor and dataset

The proposed radar-based model uses a dataset collected with the XeThru X4M03 UWB sensor from Novelda (XeThru X4M03 Development Kit—SensorLogic 2021) for sleep posture monitoring. A UWB radar transmits low-power signals and observes the reflections from the surrounding environment through a receiver. The received signal at the receiver can be represented by Piriyajitakonkij et al. (2020):

\[{z}_{k}\left(t\right)=\sum_{i=1}^{{N}_{path}}{s}_{ki}\,x\left(t-{\tau }_{ki}\right)+n\left(t\right) \qquad (1)\]

where \({z}_{k}\left(t\right)\) is the received signal at slow time index k, and t is the fast time index, which represents the time of arrival of each reflected signal. x is the original pulse sent from the transmitter and \({s}_{ki}\) is its scaling on the ith path, \({\tau }_{ki}\) is a time delay, and \(n\left(t\right)\) represents noise. Similarly, \({N}_{path}\) is the number of traveling paths and i denotes the ith path of the traveling pulses.

The UWB dataset utilized in this research was collected by Piriyajitakonkij et al. (2020), who collected two datasets with different numbers of subjects. We used the dataset that contains the more realistic scenarios. This dataset was collected from 12 subjects, and a detailed description of the subjects is given in Piriyajitakonkij et al. (2020). The dataset consists of over 816 samples from all subjects, which were labeled manually using video recordings, and each sample contains only one sleep postural transition. The dataset is further divided into two sessions: Session I was recorded in an environment where all objects remained motionless, while Session II contained a swinging fan. In addition, to replicate realistic settings, subjects were instructed to perform random actions such as moving their head, body, and limbs, using smartphones, and lying motionless. These samples form the background (BG) class, which can help distinguish between sleep and awake situations. In total, the dataset contains five classes: supine to side (SUSI), side to supine (SISU), supine to prone (SUPR), prone to supine (PRSU), and background (BG).

3.2 Data processing

(a) DC Suppression: UWB data are multivariate time series indexed by slow time and range bin. Range bins may unavoidably contain DC noise. It can be removed by subtracting from each range bin its average over all N slow time indices:

\[{\overline{R} }_{mn}={R}_{mn}-\frac{1}{N}\sum_{i=0}^{N-1}{R}_{mi} \qquad (2)\]

Each \({R}_{mn}\) has its mean along the slow time index subtracted before being stored in \({\overline{R} }_{mn}\), where m and n index the rows (range bins) and columns (slow time indices) of the matrix.

(b) Background Suppression: Each slow time index contains information about all objects present in the environment, not only the target of interest such as an active human. The contribution of the stationary objects is termed static clutter. To suppress it, the data in each slow time index have their average along fast time subtracted:

\[{Y}_{mn}={\overline{R} }_{mn}-\frac{1}{M}\sum_{i=0}^{M-1}{\overline{R} }_{in} \qquad (3)\]

Each \({\overline{R} }_{mn}\) has its mean along the fast time index subtracted before being stored in \({Y}_{mn}\), where M is the number of fast time indices (range bins).
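The two suppression steps can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the toy matrix size, and the constant offset used to imitate DC noise are all hypothetical, and rows are taken to be range bins and columns slow time indices.

```python
import numpy as np


def dc_suppression(R):
    """Remove DC noise: subtract each range bin's mean over slow time."""
    return R - R.mean(axis=1, keepdims=True)


def background_suppression(R_bar):
    """Remove static clutter: subtract each slow-time index's mean over fast time."""
    return R_bar - R_bar.mean(axis=0, keepdims=True)


# Hypothetical frame: M = 4 range bins (rows), N = 6 slow-time indices (columns).
rng = np.random.default_rng(0)
R = rng.normal(size=(4, 6)) + 5.0   # the constant offset stands in for DC noise

R_bar = dc_suppression(R)
Y = background_suppression(R_bar)

# After each step, the mean along the axis that was averaged is numerically zero.
print(np.round(R_bar.mean(axis=1), 12))
print(np.round(Y.mean(axis=0), 12))
```

Applying the two steps in sequence centers the matrix along both axes, so any component that is constant over slow time or over range is removed before feature extraction.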

3.3 Feature extraction

(a) Time Difference (TD): Typically, the objects in a bedroom-like environment are stationary. Therefore, differentiating along the slow time axis suppresses the information about static objects in the signal, and the remaining information, \({Y}^{d}\), represents a moving human:

\[{Y}_{mn}^{d}={Y}_{m,n+1}-{Y}_{mn} \qquad (4)\]

where every \({Y}_{mn}^{d}\) is the difference between \({Y}_{m,n+1}\) and \({Y}_{mn}\), i.e., between the pulses at consecutive slow time indices in range bin m.

(b) Range Selection: Typically, the range of UWB sensors is small. For this experiment, the step size between range bins is 5.14 cm, and 40 range bins were selected, covering about 2 m (40 × 5.14 cm ≈ 2.06 m), which contains the information about the human body in the environment. The selection algorithm finds the position of the cropping window at which the summed energy of the slow time difference is maximized:

\[\left(I,F\right)=\underset{I,\,F}{\mathrm{arg\,max}}\sum_{m=I}^{F}\sum_{n}{\left|{Y}_{mn}^{d}\right|}^{2},\quad F-I+1=40 \qquad (5)\]

where I and F are the initial and final range bins of the cropping window, respectively. The cropped signal is then stored in the (F − I + 1) × N matrix.
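The feature extraction steps can be illustrated with the following sketch. It assumes that "energy" means the sum of squared differences and that the window slides over all possible start bins; the function names and the synthetic scene (a static background with a moving target in bins 30 to 69) are hypothetical and only serve to make the example self-contained.

```python
import numpy as np


def time_difference(Y):
    """Difference along slow time: Y_d[m, n] = Y[m, n + 1] - Y[m, n]."""
    return np.diff(Y, axis=1)


def select_range(Y_d, window=40):
    """Return (I, F) for the block of `window` consecutive range bins with maximal energy."""
    energy_per_bin = np.sum(Y_d ** 2, axis=1)            # assumed energy: sum of squares
    # Sliding-window sum: entry k holds the energy of bins k .. k + window - 1.
    window_energy = np.convolve(energy_per_bin, np.ones(window), mode="valid")
    I = int(np.argmax(window_energy))
    return I, I + window - 1


# Hypothetical scene: M = 100 range bins, N = 50 slow-time indices.
rng = np.random.default_rng(1)
M, N = 100, 50
Y = np.tile(rng.normal(size=(M, 1)), (1, N))   # static scene: constant over slow time
Y[30:70] += rng.normal(size=(40, N))           # a moving target occupies bins 30..69

Y_d = time_difference(Y)
I, F = select_range(Y_d)
cropped = Y_d[I:F + 1]                         # differencing leaves N - 1 columns here
print(I, F, cropped.shape)
```

Because static range bins are constant over slow time, their differences vanish and the window settles on the bins that contain motion.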

3.4 Data augmentation

Deep learning models are prone to overfitting when the training dataset is small. In studies with human participants, the number of data points is usually limited by the small number of available subjects. To mitigate this problem, Range Shift (RS), Time Shift (TS), Magnitude-Warping (MW), and Time-Warping (TW) were applied to the training dataset; further details can be found in Piriyajitakonkij et al. (2020) and Um et al. (2017).
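As a rough illustration of the four augmentations, the sketch below applies each of them to one sample stored as a range-bin × slow-time array. It is a simplified stand-in rather than the procedure of the cited works: the shifts are circular, the smooth random curves are linearly interpolated between random knots, and all function names, shift limits, and the `sigma` values are assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(42)


def time_shift(x, max_shift=5):
    """Circularly shift the sample along the slow-time axis (columns)."""
    return np.roll(x, int(rng.integers(-max_shift, max_shift + 1)), axis=1)


def range_shift(x, max_shift=3):
    """Circularly shift the sample along the range axis (rows)."""
    return np.roll(x, int(rng.integers(-max_shift, max_shift + 1)), axis=0)


def smooth_curve(length, knots=4, sigma=0.2):
    """Random smooth curve around 1.0, interpolated between random knots."""
    knot_pos = np.linspace(0, length - 1, knots + 2)
    knot_val = rng.normal(loc=1.0, scale=sigma, size=knots + 2)
    return np.interp(np.arange(length), knot_pos, knot_val)


def magnitude_warp(x, sigma=0.2):
    """Scale every slow-time column by a smoothly varying random factor."""
    return x * smooth_curve(x.shape[1], sigma=sigma)[np.newaxis, :]


def time_warp(x, sigma=0.2):
    """Resample each range bin on smoothly distorted slow-time stamps."""
    n = x.shape[1]
    speed = np.clip(smooth_curve(n, sigma=sigma), 0.1, None)   # keeps time increasing
    warped_t = np.cumsum(speed)
    warped_t = (warped_t - warped_t[0]) / (warped_t[-1] - warped_t[0]) * (n - 1)
    return np.stack([np.interp(np.arange(n), warped_t, row) for row in x])


# Hypothetical sample: 40 range bins by 160 slow-time indices.
x = rng.normal(size=(40, 160))
augmented = [f(x) for f in (time_shift, range_shift, magnitude_warp, time_warp)]
print([a.shape for a in augmented])
```

Every transform preserves the input shape, so augmented samples can be appended directly to the training set while the validation and test sets stay untouched.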

3.5 Machine learning model

To extract significant features from the input data, our study uses pre-trained DCNN (transfer learning) models as base learners, and a stacking ensemble learning model then combines the predictions of all models to produce the final outcome. Transfer learning models are pre-trained on large collections of images and are therefore well suited to image recognition tasks. The dataset used in this study is multivariate time series data, which can be represented by a 2D array similar to an image. Therefore, transfer learning models are deemed appropriate for this classification task. The dataset contains 816 samples, which are split into 478, 272, and 68 samples for training, validation, and testing, respectively (Piriyajitakonkij et al. 2020). Data augmentation is applied only to the training data to prevent overfitting.

For the implementation of our model, we employed six ImageNet pre-trained DCNNs: VGG16 (Simonyan and Zisserman 2015), ResNet50V2 (He et al. 2016), MobileNetV2 (Howard et al. 2017), DenseNet121 (Huang et al. 2017), VGG19 (Wen et al. 2019), and ResNet101V2 (Benali Amjoud and Amrouch 2020). The fully connected layers are replaced with custom-built layers. For example, in VGG16 and VGG19, the last fully connected layer was replaced by a layer of 16 neurons with ReLU activation and a 0.5 dropout rate, which is connected to the output layer of five neurons responsible for the multi-class classification. The five classes are SUSI, SISU, SUPR, PRSU, and BG. Similarly, ResNet50V2 and MobileNetV2 have 32 neurons in the fully connected layer with ReLU activation, and DenseNet121 and ResNet101V2 have 64 and 16 neurons in the fully connected layer with ReLU activation, respectively. A dropout rate of 0.45 is used in these last four models.

All configurations of the classification layers were selected through repeated runs with different settings for the number of neurons in the layer and the dropout rate. We observed that models with more than two hidden layers are prone to overfitting. The dataset used in this study contains a small number of images, and data augmentation helps avoid overfitting. To further limit overfitting, we kept the number of neurons in the second-to-last layer as small as feasible without sacrificing model performance. Finally, we combined the outputs of the individual models in an average ensemble and a weighted average ensemble, and used them to create the dataset for the stacking ensemble model. Figure 2 shows an overview of the complete procedure of our proposed stacking ensemble model, from input to final predictions.

3.6 Ensemble model

Ensemble learning leverages the opportunity to combine the information from several classifiers to make the model more generalized and robust. In addition, variance and bias are also reduced, which results in minimizing the error. Moreover, another important aspect of the ensemble model is that the pattern learned by another classifier could be used for the correction of the feature space regions that may have been inaccurately learned by one of the classifiers. Hence, these attributes make ensemble models the best option for complex classification and regression problems (Géron 2019).

(a) Average Ensemble Model: After obtaining predictions from each separately trained model, we applied the average ensemble technique, which averages the predictions of all models for each sample. This achieved an accuracy of 69.6%.

(b) Weighted Average Ensemble Model: To increase the efficiency of the ensemble model, we used the weighted average technique, which assigns a weight to each model. In ensemble learning, different models are good at predicting particular features, and a weighted average lets each model contribute according to its strengths. We performed a grid search over the weights of all trained models and obtained a maximum accuracy of 76.3%.

(c) Stacking Ensemble Model: A stacking ensemble method is an effective approach because it combines multiple classification models through a meta-classifier. It has two levels of learning: base learning and meta-learning. The base learners are trained on the training dataset, while the meta-learner is trained on the outputs of the base learners; the trained meta-learner is then used to make predictions on the test dataset (Breiman 1996). Generally, a stacking ensemble model can obtain more accurate predictions than the best base learner (Wolpert 1992).
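The average and weighted average ensembles can be sketched as follows. The example assumes soft voting, i.e., that class probabilities rather than hard labels are averaged, and it replaces the six trained base learners with synthetic probability outputs; the weight grid, the `skill` values, and all function names are hypothetical.

```python
import itertools

import numpy as np


def average_ensemble(probs):
    """probs has shape (n_models, n_samples, n_classes); returns predicted labels."""
    return probs.mean(axis=0).argmax(axis=1)


def weighted_ensemble(probs, weights):
    """Weighted sum of the model probabilities, then argmax over classes."""
    combined = np.tensordot(np.asarray(weights, dtype=float), probs, axes=1)
    return combined.argmax(axis=1)


def grid_search_weights(probs, y_true, grid=(0.0, 0.5, 1.0)):
    """Try every weight combination on the grid and keep the most accurate one."""
    best_w, best_acc = None, -1.0
    for w in itertools.product(grid, repeat=probs.shape[0]):
        if not any(w):
            continue                                  # skip the all-zero combination
        acc = float(np.mean(weighted_ensemble(probs, w) == y_true))
        if acc > best_acc:
            best_w, best_acc = w, acc
    return best_w, best_acc


# Synthetic stand-in for six base learners of differing quality on five classes.
rng = np.random.default_rng(0)
n_models, n_samples, n_classes = 6, 200, 5
y_val = rng.integers(0, n_classes, size=n_samples)
probs_val = rng.random((n_models, n_samples, n_classes))
skill = np.linspace(0.1, 0.6, n_models)
for m in range(n_models):
    probs_val[m, np.arange(n_samples), y_val] += skill[m]
probs_val /= probs_val.sum(axis=2, keepdims=True)

avg_acc = float(np.mean(average_ensemble(probs_val) == y_val))
best_w, best_acc = grid_search_weights(probs_val, y_val)
print(round(avg_acc, 3), best_w, round(best_acc, 3))
```

In practice the weights would be searched on validation predictions and the chosen combination evaluated once on the test set, so that the reported accuracy is not inflated by the search itself.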

In this paper, we have applied the data pre-processing to obtain the time domain sleep postural transition data, which is used to train base classifiers. Then, the stacking ensemble method is implemented to integrate the results of base classifiers to improve the overall performance. The stacking ensemble model combined the outputs of multiple transfer learning models for the prediction of sleep postural transitions. The general algorithmic process of stacking ensemble method can be seen in Algorithm 1.
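The two-level procedure can be sketched as below. This is an illustrative approximation under several assumptions: the base-learner outputs are simulated instead of coming from the six DCNNs, the meta-features are taken to be the concatenated class probabilities (6 models × 5 classes = 30 features), and the logistic regression meta-learner is fitted with plain gradient descent. The split sizes mirror the 272 validation and 68 test samples mentioned above; every function name and hyperparameter is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N_MODELS, N_CLASSES = 6, 5


def simulate_base_outputs(y, skill=0.6):
    """Stand-in for the softmax outputs of the six trained base learners."""
    probs = rng.random((N_MODELS, len(y), N_CLASSES))
    probs[:, np.arange(len(y)), y] += skill          # make the true class more likely
    return probs / probs.sum(axis=2, keepdims=True)


def stack_features(probs):
    """(n_models, n_samples, n_classes) -> (n_samples, n_models * n_classes)."""
    return np.concatenate(list(probs), axis=1)


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)             # subtract the max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def fit_meta_learner(X, y, lr=0.5, epochs=500, l2=1e-3):
    """Multinomial logistic regression trained by batch gradient descent."""
    n, d = X.shape
    W, b = np.zeros((d, N_CLASSES)), np.zeros(N_CLASSES)
    Y = np.eye(N_CLASSES)[y]                         # one-hot targets
    for _ in range(epochs):
        grad = (softmax(X @ W + b) - Y) / n          # gradient of the cross-entropy
        W -= lr * (X.T @ grad + l2 * W)
        b -= lr * grad.sum(axis=0)
    return W, b


def predict(X, W, b):
    return (X @ W + b).argmax(axis=1)


# Level 1: base-learner predictions on held-out data (simulated here).
y_val = rng.integers(0, N_CLASSES, size=272)
y_test = rng.integers(0, N_CLASSES, size=68)
X_val = stack_features(simulate_base_outputs(y_val))
X_test = stack_features(simulate_base_outputs(y_test))

# Level 2: the meta-learner is fitted on those predictions, then applied to the test set.
W, b = fit_meta_learner(X_val, y_val)
test_accuracy = float(np.mean(predict(X_test, W, b) == y_test))
print(X_val.shape, round(test_accuracy, 3))
```

Fitting the meta-learner on predictions for data the base learners were not trained on is a common design choice, since predictions on the training set tend to be overconfident and would teach the meta-learner to trust them too much.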

A stacking generalization model is presented in Breiman (1996), which can be written as Eq. (6). The regression model requires a function \(f\) that maps an input vector \(x\in {P}^{d}\) onto the corresponding continuous label value \(y\in P\). Since a training data set \(\{({x}_{1},{y}_{1}),\ldots ,({x}_{n},{y}_{n})\}\) is used to find \(f\), the task falls into the category of supervised learning. The machine learning problem is formulated as a minimization problem of the form:
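A generic regularized objective consistent with this description can be written as follows, where the loss \(V\), the regularizer \(\Omega\), and the weight \(\lambda\) are notation assumed here for illustration rather than symbols taken from the original formulation:

\[\underset{f}{\mathrm{min}}\;\frac{1}{n}\sum_{i=1}^{n}V\left(f\left({x}_{i}\right),{y}_{i}\right)+\lambda \,\Omega \left(f\right)\]

For a logistic regression meta-learner, for example, \(V\) would typically be the cross-entropy loss and \(\Omega\) a squared norm of the coefficients.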

The first term of the objective calculates the quality of the function \(f\). The second term of the objective calculates the complexity or roughness of the function \(f\).

3.7 Sleep postural transitions monitoring algorithm

To show the effectiveness of our proposed model, we have developed a sleep posture monitoring algorithm that reports the total number of sleep postural transitions during a night, the initial and final sleep postures, and the total time spent in the most frequent sleep posture. This sleep analysis can give caregivers and physicians useful insights for tracking a patient's disease. As argued earlier, sleep postures may influence the progression of AD and PD (Brzecka et al. 2018; Uchino et al. 2017). Moreover, frequent changes of sleep posture are linked with poor sleep, and Pye et al. (2021) found that subjects with depression show higher on-bed activity. Research highlights the strong linkage of AD, PD, and sleep disorders with mental health, as summarized in Table 1.

Algorithm 2 gives the pseudo-code of our sleep postural transitions monitoring algorithm, where D denotes the random testing samples on which we tested the trained models. We ran the test multiple times and stored the results in an array L. For full-night sleep posture monitoring, we added a time delay so that the system captures the sleep posture every 60 s. For example, if a person sleeps for 8 h, the system captures the sleep posture 480 times (8 × 60) and reports the total sleeping time, the number of sleep postural transitions, the most frequent sleep posture, and the time spent in that posture.
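The bookkeeping behind such a report can be sketched as follows. This is not Algorithm 2 itself but a minimal illustration built on assumptions: one predicted class label is available per 60 s window, a BG label is treated as "no posture change", and the posture held after a transition is read off the class name (for instance, SUSI ends on the side). The dictionaries and the function name are hypothetical, and the sketch does not check that consecutive transitions are physically consistent.

```python
from collections import Counter

WINDOW_SECONDS = 60  # one prediction per 60 s window

# Posture before and after each transition class (assumed from the class names).
START_POSTURE = {"SUSI": "supine", "SISU": "side", "SUPR": "supine", "PRSU": "prone"}
END_POSTURE = {"SUSI": "side", "SISU": "supine", "SUPR": "prone", "PRSU": "supine"}


def summarize_night(labels):
    """Summarize a sequence of per-window class labels for one night."""
    if not labels:
        raise ValueError("at least one window is required")
    transitions = [label for label in labels if label != "BG"]
    # The first transition tells us which posture the night started in.
    posture = START_POSTURE[transitions[0]] if transitions else "unknown"
    initial_posture = posture
    seconds_in_posture = Counter()
    for label in labels:
        if label != "BG":
            posture = END_POSTURE[label]      # the window ends in the new posture
        seconds_in_posture[posture] += WINDOW_SECONDS
    most_frequent, seconds = seconds_in_posture.most_common(1)[0]
    return {
        "total_time_s": len(labels) * WINDOW_SECONDS,
        "n_transitions": len(transitions),
        "initial_posture": initial_posture,
        "final_posture": posture,
        "most_frequent_posture": most_frequent,
        "time_in_most_frequent_s": seconds,
    }


# Hypothetical 8 h night: 480 windows with three detected transitions.
labels = ["BG"] * 480
labels[120], labels[300], labels[400] = "SUSI", "SISU", "SUPR"
stats = summarize_night(labels)
print(stats)
```

In this toy night the sleeper spends 220 windows supine, 180 on the side, and 80 prone, so the report names supine as the most frequent posture.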

3.8 Complexity analysis of the proposed model

It is crucial to understand computational and spatial complexity, particularly given the practical implications of the proposed model in sleep postural transition monitoring. This analysis focuses on two main aspects: computational complexity, which addresses the algorithm's time efficiency, and space complexity, which relates to the memory requirement.

3.8.1 Computational complexity

(a) Preprocessing and Feature Extraction

The preprocessing and feature extraction stages of the proposed model consist of linear operations. DC suppression has a complexity of \(O(N)\) per range bin, where \(N\) is the number of slow-time indices, so its cost grows linearly with the length of the recording. Similarly, the background suppression step has a complexity of \(O(MN)\), where \(M\) and \(N\) are the numbers of fast-time and slow-time indices, respectively, which is linear in both. The time difference calculation also has linear complexity, \(O(MN)\). Additionally, the range-selection process has a linear complexity of \(O(F-I)\), where \(F\) and \(I\) represent the final and initial range bins, respectively, indicating that the complexity is directly proportional to the range-bin difference.

(b) Base learners

For our base learners, which are transfer-learning-based DCNN models, the training complexity of each network is denoted by \(O(T*{P}^{2}*D)\), where \(T\) is the total number of training iterations, \(P\) is the number of parameters in the network, and \(D\) is the dataset size. For all six base learners together, the total time complexity is six times that of a single learner, i.e., \(6*O(T*{P}^{2}*D)\). The ‘trainable’ attribute of every pre-trained base is set to ‘False’, so the primary computational load during training originates from the forward pass.

On top of the pre-trained base, each base learner employs a Flatten layer followed by a Dense layer with 16–64 neurons, a Dropout layer, and a final Dense layer with softmax activation. The computational cost of these layers is significantly lower than that of the pre-trained base. The Flatten layer reshapes the data and requires no complex computation. The Dense layers consist of matrix multiplications, and their complexity is proportional to the number of neurons and inputs. The Dropout layer does not significantly affect computational complexity, as it only sets randomly chosen input units to 0 at each update during training.

The cost of the pre-trained base depends on the architecture. For instance, the VGG16 and VGG19 models are known for their sequential layering, and their complexity depends on the input size, number of iterations, and filters. In contrast, architectures such as ResNet50V2 utilize skip connections, slightly reducing complexity despite the increased depth. Transfer learning models can be computationally intensive to train due to their depth; however, once trained, the saved models can be deployed without requiring further extensive computational resources. Our training was expedited by Google Colab Pro services, which provided fast GPUs and high RAM, keeping the process time-efficient.

(c) Stacking meta-learner

The stacked ensemble model combines the outputs from the base models and involves training a meta-classifier, which incurs additional computational costs. However, these are reduced by the parallelizable nature of ensemble methods, allowing efficient computation, particularly when dealing with high-dimensional data typical of sleep-posture datasets.

The prediction complexity of the stacked ensemble model is \(O(N*M)\), where \(N\) is the number of samples and \(M\) is the number of base learners. The training complexity of the meta-learner is \(O({T}^{\prime}*{P}^{\prime 2}*{N}^{\prime})\), where \({T}^{\prime}\) is the number of training iterations, \({P}^{\prime}\) the number of parameters in the meta-learner, and \({N}^{\prime}\) the size of the prediction dataset.

3.8.2 Spatial complexity analysis

(a) Data storage

For data storage, spatial complexity involves storing raw ultra-wideband (UWB) data and preprocessed data. Raw UWB data storage has a complexity of \(O(M*N)\), while the storage of preprocessed data, which is contingent upon the number of selected range bins, is \(O(F*N)\).

(b) Model parameters

The spatial complexity of the model is mainly due to the memory required to store the parameters of each DCNN model and the meta-classifier. Because of the complexity and sophistication of these networks, the parameter space is substantial. The space complexity of the model parameters includes those for the base learners and meta-learner. The maximum space required for the six DCNN base learners is \(O(6*P)\). The parameter space complexity of the meta-learner is denoted as \(O(P)\). Methods such as model tuning and efficient data storage formats have been utilized to effectively manage memory requirements. It is important to mention that the introduction of time-domain data augmentation techniques is beneficial for model performance but also contributes to the memory load, particularly in the training phase.
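To give a sense of scale for the meta-learner term, suppose (as an assumption, since the exact meta-features are not specified here) that each of the six base learners passes its five class probabilities to a multinomial logistic regression. The meta-learner then has 6 × 5 = 30 inputs and 5 outputs, so its number of weights and biases is

\[{P}^{\prime}=30\times 5+5=155\]

which is negligible next to the millions of parameters held by each DCNN base learner.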

4 Experiments

In this section, comparison methods are employed to evaluate the proposed model. The dataset contains a moving object, and subjects were instructed to perform different activities, which form the BG class. This class also indicates that the subject is not sleeping, which helps identify whether a person in bed is asleep. In other words, the overall sleeping time can also be calculated. Here, state-of-the-art methods based on multi-view learning (Piriyajitakonkij et al. 2020) and the weighted range-time frequency transform (WRTFT) (Ding et al. 2018; Chen et al. 2019) are used to evaluate the proposed technique. In addition, we implemented the average and weighted average ensemble models as baselines for the stacking ensemble model.

Model Evaluation: We implemented four additional models to evaluate the performance of our model: TD-CNN, WRTFT-CNN, a support vector machine (SVM), and CNN-LSTM. TD-CNN and WRTFT-CNN were trained separately to assess their individual performance. They used the same settings and differed only in the shape of the input data. Each consisted of three convolutional layers with 64, 32, and 16 filters, respectively, together with max pooling, batch normalization, and dropout layers. All filters had a size of 2 by 3 and were followed by ReLU activation, and the output layer contained five neurons with softmax activation. To prevent overfitting, both models were trained on augmented training data.

The SVM used a radial basis function kernel. Its best parameters were found by a grid search over C and gamma, each taking values in {0.01, 0.1, 1, 10, 100}. In addition, we implemented a CNN model with long short-term memory (LSTM) (Maitre et al. 2021). In this model, the bottom layers of the CNN are replaced by LSTM to assess the performance of CNN-LSTM on the UWB dataset. All evaluation models were run five times with different random states. To regularize the models, we applied dropout and batch normalization to the convolutional and hidden layers before the output layer. The dropout rate, batch size, and constant learning rate varied according to the fine-tuning requirements of each model.

5 Results

The initial settings of the models, including the number of epochs, optimization method, learning rate, and construction of the fully connected layer, were found through controlled iterations, and the final performance of the models on the validation and test datasets is reported. The metrics used to evaluate the performance of the proposed model were confusion matrices, F1 score, accuracy, precision, and recall. Accuracy, precision-recall (PR), and receiver operating characteristic (ROC) curves of the transfer learning models are presented in Figs. 3, 4, 5, 6, 7, and 8. These plots are used to assess overfitting in the base-level classifiers. For example, if the validation accuracy stops increasing at some point while the training accuracy continues to increase, this is a sign of overfitting. In the proposed model, we performed several iterations to prevent overfitting by fine-tuning the model (decreasing the model complexity and using an augmented dataset), which makes it more generalized.
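For reference, accuracy, precision, recall, and F1 score can all be derived from a confusion matrix, as the short sketch below shows. The 3 × 3 matrix is a made-up example rather than a result from this study, and the function name is hypothetical; rows are assumed to hold the true classes and columns the predicted classes.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of samples of true class i predicted as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    report = {}
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                          # class k samples predicted as others
        fp = sum(cm[i][k] for i in range(n)) - tp     # other samples predicted as class k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[k] = (precision, recall, f1)
    return accuracy, report


# Made-up confusion matrix for three classes (30 samples in total).
cm = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 1, 9],
]
accuracy, report = per_class_metrics(cm)
print(accuracy)
for k, (p, r, f1) in report.items():
    print(k, round(p, 3), round(r, 3), round(f1, 3))
```

A class with low recall, such as the second row here, is one whose samples are frequently assigned to other classes, which is how the per-class behaviour of the ensembles is read from the confusion matrices.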

The stacking ensemble accuracy of 86.7% is enhanced by the base-learner models, as demonstrated in their PR and ROC area under the curves (AUCs). VGG16 and VGG19 demonstrate strong class differentiation, with VGG19 showing better precision. ResNet variants provide high ROC AUCs, indicating robust classification despite potential precision deficits in distorted datasets. MobileNetV2, which is moderately accurate, provides an architectural advantage for mobile deployment. DenseNet121 demonstrated excellent predictive performance, but the potential limitation could be computational intensity. The ensemble benefits from the compensatory effects of model diversity, addressing individual model precision challenges and class imbalance, though attention to computational demands remains essential for practical deployment.

Accuracy Comparison and Significance of Results: Overall, the stacking ensemble model achieved an accuracy of 86.7%. This is not just a numerical achievement; it represents a substantial improvement in detecting and monitoring sleep postures, which relates directly to mental health predictions. Compared with the previously published framework on the same dataset (Piriyajitakonkij et al. 2020), our model shows a 13% improvement in accuracy, underscoring its potential in clinical applications. The weighted average ensemble model achieved an accuracy of 76.3%, followed by the average ensemble model at 69.6%. The classification reports and confusion matrices are presented in Figs. 9 and 10, respectively. The matrices show that, in all cases, the overall recall was lowest for the SUSI class and highest for the BG class. This means that the model tended to misclassify SUSI as SUPR and SISU.
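Per-class recall and the other reported metrics can be derived directly from a confusion matrix. The sketch below assumes rows are true classes and columns are predicted classes, and it uses made-up counts for three of the classes rather than the values shown in the figures.

```python
def per_class_metrics(confusion, labels):
    """Compute accuracy plus per-class precision, recall, and F1.

    confusion[i][j] counts samples whose true class is labels[i] and whose
    predicted class is labels[j].
    """
    n = len(labels)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    report = {}
    for i, label in enumerate(labels):
        tp = confusion[i][i]
        predicted = sum(confusion[r][i] for r in range(n))  # column sum
        actual = sum(confusion[i])  # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[label] = {"precision": precision, "recall": recall, "f1": f1}
    accuracy = correct / total if total else 0.0
    return accuracy, report


# Made-up counts for illustration only (not results from the paper).
labels = ["BG", "SUSI", "SUPR"]
confusion = [
    [9, 1, 0],
    [1, 6, 3],
    [0, 2, 8],
]
accuracy, report = per_class_metrics(confusion, labels)
for label in labels:
    print(label, round(report[label]["recall"], 3))
```

In this toy matrix the SUSI row spreads its errors over the other classes, so its recall is the lowest of the three, which is the kind of pattern described above.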

Fig. 10 Confusion matrices of (a) MLV (Piriyajitakonkij et al. 2020), (b) the average ensemble model, (c) the weighted average ensemble model, and (d) the stacking ensemble model

The performance of our model is compared with five existing models (see Fig. 11) to evaluate its effectiveness: (i) multi-view learning (Piriyajitakonkij et al. 2020), for which the authors reported an accuracy of 73.7% for monitoring sleep postural transitions; (ii) TD-CNN and (iii) WRTFT-CNN, which gave 67.9% and 59.4% accuracy, respectively, although both models suffered from overfitting; and (iv) SVM and (v) CNN-LSTM, which performed poorly, achieving accuracies of 29% and 32%, respectively. On the same dataset, the stacking ensemble model achieves higher accuracy than the previous methods, with improvements of 13% (Piriyajitakonkij et al. 2020) and 26% (Ding et al. 2018), respectively.

5.1 Contextualizing the problem with evidence

5.1.1 Evidence-based justifications

Recent studies have underscored the importance of sleep postures in mental health, particularly in relation to depression (Pye et al. 2021), anxiety (Alamri 2015), bipolar disorder (Drange et al. 2019), and neurological disorders such as Alzheimer's disease (Lyketsos et al. 2011) and Parkinson's disease (Tori et al. 2020). The high accuracy of our model in the classification of sleep postures (86.7%) is crucial in this context. It provides a non-invasive, privacy-preserving method for the early detection of potential mental health problems, which is a significant step beyond current monitoring methods. By accurately monitoring sleep postural transitions, we can potentially identify the early signs of sleep-related disorders that can lead to mental illness. The performance of our model is not only statistically significant, but also clinically relevant. For instance, the lateral sleep posture facilitates the glymphatic system of the brain and aids in protein waste removal (Lee et al. 2015), so the ability to monitor it accurately is relevant to preventing neurological disorders such as AD and PD. This opens new avenues for prevention and is a substantial step in early diagnosis and intervention strategies for these disorders.

5.2 Interpreting the results in mental health context

5.2.1 Clinical implications of results from precision, recall, and confusion matrices

The model's accuracy and recall rates demonstrate its ability to identify true sleep postural transitions, which is an essential feature of any tool used in a mental health diagnostic or therapeutic environment. The confusion matrices and accuracy comparisons (Figs. 10 and 11) demonstrate our model's ability to differentiate sleep postural transitions, a key factor in diagnosing and addressing sleep-related mental health issues.

6 Discussion

In this study, a stacking ensemble deep learning model was developed to recognize sleep postural transitions. The reliable results of our model support the use of an ensemble approach, which combines multiple models at the same time. The results show that our model outperformed the state-of-the-art frameworks. Our findings also support the feasibility and further implementation of UWB radar-based systems for contactless monitoring of patients with AD and PD. The dataset utilized in this study was collected with a Xethru UWB radar, which is cost-effective and user-friendly. Moreover, we developed two algorithms for monitoring sleep postural transitions. The first, the stacking ensemble learning algorithm, classifies each postural transition, and the second provides accurate statistics of total sleep postural transitions, including the initial and final sleeping postures. These can give doctors and caregivers useful information about the sleeping behavior of a patient. As research highlights, different sleep postures can increase the risk of AD and PD progression (Brzecka et al. 2018; Uchino et al. 2017), and frequent on-bed turnovers are linked with poor sleep quality (Pye et al. 2021). As Table 1 highlights the linkage of sleep postural transitions with mental health, this research not only provides an opportunity to identify AD and PD at earlier stages but may also help diagnose psychiatric comorbidities such as depression, bipolar disorder, and schizophrenia (Garcez et al. 2015). On average, a healthy person changes sleep posture around 40 to 50 times during the night, although this can vary in certain situations (Naitoh et al. 1973). A markedly higher number of sleep posture changes during the night may therefore indicate a sleep disorder. Similarly, if a patient diagnosed with AD or PD shows few or no sleep posture changes and sleeps for prolonged periods in a supine or prone posture, this suggests that the disease may be progressing faster.
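The kind of statistics described above can be derived from a sequence of classified transitions. The following is a minimal sketch under stated assumptions, not the authors' algorithm: SUSI, SUPR, SISU, and BG are labels used in this paper, whereas the PRSU label, the mapping of labels to start and end postures, the event format, and all function and field names are assumptions made for illustration.

```python
from collections import Counter

# Assumed meaning of the transition labels (start posture, end posture).
# PRSU and the posture names are assumptions made for this sketch.
TRANSITIONS = {
    "SUSI": ("supine", "side"),
    "SUPR": ("supine", "prone"),
    "SISU": ("side", "supine"),
    "PRSU": ("prone", "supine"),
}
TYPICAL_MAX_PER_NIGHT = 50  # upper end of the 40 to 50 range quoted in the text


def summarize_night(events):
    """Summarize one night from (timestamp_seconds, label) predictions.

    BG (background) windows carry no transition and are skipped.
    """
    moves = [(t, TRANSITIONS[label]) for t, label in events if label in TRANSITIONS]
    if not moves:
        return {"total_transitions": 0}
    time_in_posture = Counter()
    # Time in a posture is the gap between consecutive transitions; time
    # before the first and after the last transition is not counted here.
    for (t0, (_start, end)), (t1, _next) in zip(moves, moves[1:]):
        time_in_posture[end] += t1 - t0
    longest = time_in_posture.most_common(1)
    return {
        "total_transitions": len(moves),
        "initial_posture": moves[0][1][0],
        "final_posture": moves[-1][1][1],
        # Simplification: "most frequent" is taken as longest total time.
        "most_frequent_posture": longest[0][0] if longest else None,
        "seconds_in_posture": dict(time_in_posture),
        "above_typical_range": len(moves) > TYPICAL_MAX_PER_NIGHT,
    }


# Made-up classifier output for illustration only.
events = [(0, "BG"), (600, "SUSI"), (4200, "SISU"),
          (4500, "BG"), (9000, "SUPR"), (9600, "PRSU")]
summary = summarize_night(events)
print(summary)
```

A count above the typical range is only a flag for further review, since the number of posture changes varies between individuals and nights.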

Therefore, in the future, this model could be used as a contactless tool for monitoring patients with AD, PD, and sleep disorders in their own homes. This will help extract useful information about sleep and sleep postural transitions. In addition, it gives some insight into the mental health condition of a patient, as AD, PD, and sleep disorders are linked to a range of mental health disorders. For example, the findings of Tori et al. (2020) reveal that patients suffering from neurological disorders also suffer from at least one mental disorder.

6.1 Comparison with recent works

Our study introduces a novel approach in the field of sleep posture monitoring, particularly in terms of the technology and dataset used. This contrasts with the methods employed in recent research, which we discuss below with a focus on the uniqueness and benefits of our UWB radar-based methodology. Our work also stands out from recent studies because of its strong focus on clinical implications for mental and neurological disorders. Table 2 summarizes recent studies in the field, which include a camera-based monitoring method used by Li et al. (2022) that achieved 91.7% accuracy in classifying three sleep postures. However, this method raises significant privacy concerns, potentially incurs higher costs, involves setup complexity, and may not be suitable in various home settings. Another study by Mlynczak et al. (2020) reported 86% accuracy using a wearable tracheal audio device for binary sleep apnea classification. Wearable sensors can be uncomfortable and may hinder natural sleep patterns. Our contactless wireless monitoring system offers a significant advantage in preserving privacy and ensuring comfort and convenience, allowing for continuous and natural sleep.

Jeng et al. (2021) achieved 85% accuracy with a chest wearable device in classifying four sleep postures. However, a chest wearable device may alter natural sleep posture and behaviour, potentially affecting data accuracy. Islam and Lubecke (2022) also employed radar technology, specifically continuous-wave radar, achieving 85% accuracy; however, their study did not focus on the clinical aspects of sleep posture. Our research not only offers an improvement in accuracy (86.7%) for the classification of five sleeping postures, but also pioneers the application of radar technology in the prediction of mental illnesses from sleep postures. This focus on the clinical implications of sleep postures in relation to mental and neurological disorders is a unique and innovative aspect of our study. However, our study also has some limitations, which include the use of a relatively small dataset (12 subjects) compared with some other studies, and the use of UWB technology, which might be less established in the field of sleep monitoring than other methods. Despite these challenges, our work demonstrates competitive accuracy and a novel application in mental health research.

7 Conclusion

This paper presented a model for the monitoring of human sleep postural transitions. The proposed stacking ensemble learning model outperformed the previous state-of-the-art model by 13% and attained a maximum accuracy of 86.7%. This supports the utilization of UWB sensors for the contactless monitoring of patients in hospitals and in-home scenarios. Specifically, this model can be used to monitor the progression of AD, PD, and sleep disorders, as research demonstrates their strong link with sleep postures. In addition, we developed a sleep postural transition monitoring algorithm, which can provide accurate information on sleep postures, such as the total number of sleep postural transitions at night, the most frequent sleep posture, and the total time spent in the most frequent sleep posture. We believe that this work can serve as a solution for sleep posture monitoring, helping clinicians and patients to address this need. In summary, the implementation of this sleep postural transition monitoring model could support the early diagnosis of a range of mental health conditions in patients suffering from AD, PD, and sleep disorders.

Data availability

The datasets generated during and/or analysed during the current study are available in the IoBT-VISTEC/SleepPoseNet repository [https://github.com/IoBT-VISTEC/SleepPoseNet].

References

Adib F, Hsu CY, Mao H, Katabi D, Durand F (2015) Capturing the human figure through a wall. ACM Trans Graph. https://doi.org/10.1145/2816795.2818072

Akbarian S, Delfi G, Zhu K, Yadollahi A, Taati B (2019) Automated non-contact detection of head and body positions during sleep. IEEE Access 7:72826–72834. https://doi.org/10.1109/ACCESS.2019.2920025

Alamri YA (2015) Mental health and Parkinson’s disease: from the cradle to the grave. Br J Gen Pract 65(634):258–259. https://doi.org/10.3399/bjgp15X684985

Barsocchi P (2013) Position recognition to support bedsores prevention. IEEE J Biomed Health Inform 17(1):53–59. https://doi.org/10.1109/TITB.2012.2220374

Benali Amjoud A, Amrouch M (2020) Convolutional neural networks backbones for object detection. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), vol 12119 LNCS. Springer, Cham, pp 282–289. https://doi.org/10.1007/978-3-030-51935-3_30

Breiman L (1996) Stacked regressions. Mach Learn 24(1):49–64. https://doi.org/10.1007/BF00117832

Brzecka A et al (2018) Sleep disorders associated with Alzheimer’s disease: a perspective. Front Neurosci 12:324683. https://doi.org/10.3389/fnins.2018.00330

Cary D, Jacques A, Briffa K (2021) Examining relationships between sleep posture, waking spinal symptoms and quality of sleep: a cross sectional study. PLoS ONE 16(11):e0260582. https://doi.org/10.1371/journal.pone.0260582

Chang K-M, Liu S-H (2011) Wireless portable electrocardiogram and a tri-axis accelerometer implementation and application on sleep activity monitoring. Telemed e-Health 17(3):177–184. https://doi.org/10.1089/tmj.2010.0078

Chen W et al (2019) Non-contact human activity classification using DCNN based on UWB radar. In: 2019 IEEE MTT-S international microwave biomedical conference (IMBioC), IEEE, May 2019, pp 1–4. https://doi.org/10.1109/IMBIOC.2019.8777793

Contador-Castillo I, Fernández-Calvo B, Cacho-Gutiérrez LJ, Ramos-Campos F, Hernández-Martín L (2009) Depression in Alzheimer type-dementia: is there any effect on memory performance. Rev Neurol 49(10):505–510

Deng F et al (2018) Design and implementation of a noncontact sleep monitoring system using infrared cameras and motion sensor. IEEE Trans Instrum Meas 67(7):1555–1563. https://doi.org/10.1109/TIM.2017.2779358

Ding C et al (2018) Non-contact human motion recognition based on UWB radar. IEEE J Emerg Sel Top Circuits Syst 8(2):306–315. https://doi.org/10.1109/JETCAS.2018.2797313

Drange OK, Smeland OB, Shadrin AA, Finseth PI, Witoelar A, Frei O (2019) Genetic overlap between Alzheimer’s disease and bipolar disorder implicates the MARK2 and VAC14 genes. Front Neurosci. https://doi.org/10.3389/fnins.2019.00220

Fallmann S, Chen L (2019) Computational sleep behavior analysis: a survey. IEEE Access 7:142421–142440. https://doi.org/10.1109/ACCESS.2019.2944801

Garcez ML, Falchetti ACB, Mina F, Budni J (2015) Alzheimer´s disease associated with psychiatric comorbidities. An Acad Bras Cienc 87(2 suppl):1461–1473. https://doi.org/10.1590/0001-3765201520140716

Géron A (2019) Hands-on machine learning with Scikit-Learn, Keras, and TensorFlow: concepts, tools, and techniques to build intelligent systems

Grimm T, Martinez M, Benz A, Stiefelhagen R (2016) Sleep position classification from a depth camera using Bed Aligned Maps. In: Proceedings-international conference on pattern recognition, IEEE, pp 319–324. https://doi.org/10.1109/ICPR.2016.7899653

Guillodo E et al (2020) Clinical applications of mobile health wearable-based sleep monitoring: systematic review. JMIR Mhealth Uhealth 8(4):e10733. https://doi.org/10.2196/10733

He K, Zhang X, Ren S, Sun J (2016) Identity mappings in deep residual networks. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), vol 9908 LNCS, Springer Verlag, pp 630–645. https://doi.org/10.1007/978-3-319-46493-0_38

Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, Andreetto M, Adam H (2017) Mobilenets: efficient convolutional neural networks for mobile vision applications. arXiv:1704.04861

Hsia C-C, Hung Y-W, Chiu Y-H, Kang C-H (2008) Bayesian classification for bed posture detection based on kurtosis and skewness estimation. In: HealthCom 2008—10th international conference on e-health networking, applications and services, IEEE, pp 165–168. https://doi.org/10.1109/HEALTH.2008.4600129

Huang G, Liu Z, van der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 4700–4708

Richard IH (2005) Anxiety disorders in Parkinson’s disease. Adv Neurol 96:42–55

Introduction to ultra-wideband communications. Accessed 1 June 2022 from https://www.informit.com/articles/article.aspx?p=433381&seqNum=5

Ishihara L, Brayne C (2006) A systematic review of depression and mental illness preceding Parkinson’s disease. Acta Neurol Scand 113(4):211–220. https://doi.org/10.1111/j.1600-0404.2006.00579.x

Islam SMM, Lubecke VM (2022) Sleep posture recognition with a dual-frequency microwave doppler radar and machine learning classifiers. IEEE Sens Lett. https://doi.org/10.1109/LSENS.2022.3148378

Jeng P-Y, Wang L-C, Hu C-J, Wu D (2021) A wrist sensor sleep posture monitoring system: an automatic labeling approach. Sensors 21(1):258. https://doi.org/10.3390/s21010258

Kessing LV (2004) Does the risk of developing dementia increase with the number of episodes in patients with depressive disorder and in patients with bipolar disorder? J Neurol Neurosurg Psychiatry 75(12):1662–1666. https://doi.org/10.1136/jnnp.2003.031773

Kress BT et al (2014) Impairment of paravascular clearance pathways in the aging brain. Ann Neurol 76(6):845–861. https://doi.org/10.1002/ana.24271

Lee H et al (2015) The effect of body posture on brain glymphatic transport. J Neurosci 35(31):11034–11044. https://doi.org/10.1523/JNEUROSCI.1625-15.2015

Li Y, Gao H, Ma Y (2017) Evaluation of pulse oximeter derived photoplethysmographic signals for obstructive sleep apnea diagnosis. Medicine 96(18):e6755. https://doi.org/10.1097/MD.0000000000006755

Li Y-Y, Wang S-J, Hung Y-P (2022) A vision-based system for in-sleep upper-body and head pose classification. Sensors 22(5):2014. https://doi.org/10.3390/s22052014

Liebenthal JA, Wu S, Rose S, Ebersole JS, Tao JX (2015) Association of prone position with sudden unexpected death in epilepsy. Neurology 84(7):703–709. https://doi.org/10.1212/WNL.0000000000001260

Liu JJ et al (2014) Sleep posture analysis using a dense pressure sensitive bedsheet. Pervasive Mob Comput 10:34–50. https://doi.org/10.1016/j.pmcj.2013.10.008

Liu J, Chen Y, Wang Y, Chen X, Cheng J, Yang J (2018) Monitoring vital signs and postures during sleep using WiFi signals. IEEE Internet Things J 5(3):2071–2084. https://doi.org/10.1109/JIOT.2018.2822818

Liu S, Ostadabbas S (2017) A vision-based system for in-bed posture tracking. In: 2017 IEEE international conference on computer vision workshops, ICCVW, 2017, pp 1373–1382. https://doi.org/10.1109/ICCVW.2017.163

Lyketsos CG et al (2011) Neuropsychiatric symptoms in Alzheimer’s disease. Alzheimer’s Dementia 7(5):532–539. https://doi.org/10.1016/j.jalz.2011.05.2410

Maitre J, Bouchard K, Bertuglia C, Gaboury S (2021) Recognizing activities of daily living from UWB radars and deep learning. Expert Syst Appl. https://doi.org/10.1016/j.eswa.2020.113994

Mlynczak M, Valdez TA, Kukwa W (2020) Joint Apnea and body position analysis for home sleep studies using a wireless audio and motion sensor. IEEE Access 8:170579–170587. https://doi.org/10.1109/ACCESS.2020.3024122

Murphy MP, LeVine H (2010) Alzheimer’s disease and the amyloid-β peptide. J Alzheimer’s Disease 19(1):311–323. https://doi.org/10.3233/JAD-2010-1221

Naitoh P, Muzet A, Johnson C, Moses J (1973) Body movements during sleep after sleep loss. Psychophysiology 10(4):363–368. https://doi.org/10.1111/j.1469-8986.1973.tb00793.x

Ostadabbas S, Baran Pouyan M, Nourani M, Kehtarnavaz N (2014) In-bed posture classification and limb identification. In: 2014 IEEE biomedical circuits and systems conference (BioCAS) proceedings, IEEE, pp 133–136. https://doi.org/10.1109/BioCAS.2014.6981663

Piccinni A et al (2012) Plasma β-amyloid peptides levels: a pilot study in bipolar depressed patients. J Affect Disord 138(1–2):160–164. https://doi.org/10.1016/j.jad.2011.12.042

Piriyajitakonkij M et al (2020) Sleepposenet: multi-view multi-task learning for sleep postural transition recognition using UWB. IEEE J Biomed Health Inform 24(4):1305–1314. https://doi.org/10.1109/JBHI.2020.3025900

Pouyan MB, Ostadabbas S, Farshbaf M, Yousefi R, Nourani M, Pompeo MDM (2013) Continuous eight-posture classification for bed-bound patients. In: 2013 6th international conference on biomedical engineering and informatics, IEEE, pp 121–126. https://doi.org/10.1109/BMEI.2013.6746919

Pye J et al (2021) Irregular sleep-wake patterns in older adults with current or remitted depression. J Affect Disord 281:431–437. https://doi.org/10.1016/j.jad.2020.12.034

Qian X, Hao H, Chen Y, Li L (2015) Wake/sleep identification based on body movement for Parkinson’s disease patients. J Med Biol Eng 35(4):517–527. https://doi.org/10.1007/s40846-015-0065-0

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556

Snyder H, Wolozin B (2004) Pathological proteins in Parkinson’s disease: focus on the proteasome. J Mol Neurosci 24(3):425–442. https://doi.org/10.1385/JMN:24:3:425

Sleep quality: how to determine if you’re getting poor sleep, Sleep Foundation. Accessed 16 Dec 2021 from https://www.sleepfoundation.org/sleep-hygiene/how-to-determine-poor-quality-sleep

Steffens DC, Fisher GG, Langa KM, Potter GG, Plassman BL (2009) Prevalence of depression among older Americans: the aging, demographics and memory study. Int Psychogeriatr 21(05):879. https://doi.org/10.1017/S1041610209990044

Tandberg E (1996) The occurrence of depression in Parkinson’s disease. Arch Neurol 53(2):175. https://doi.org/10.1001/archneur.1996.00550020087019

Thielscher C, Thielscher S, Kostev K (2013) The risk of developing depression when suffering from neurological diseases. Ger Med Sci 11:Doc02. https://doi.org/10.3205/000170

Tori K et al (2020) Association between dementia and psychiatric disorders in long-term care residents. Medicine 99(31):e21412. https://doi.org/10.1097/MD.0000000000021412

Uchino K, Shiraishi M, Tanaka K, Akamatsu M, Hasegawa Y (2017) Impact of inability to turn in bed assessed by a wearable three-axis accelerometer on patients with Parkinson’s disease. PLoS ONE 12(11):e0187616. https://doi.org/10.1371/JOURNAL.PONE.0187616

Um TT et al (2017) Data augmentation of wearable sensor data for Parkinson’s disease monitoring using convolutional neural networks. In: Proceedings of the 19th ACM international conference on multimodal interaction, New York, NY, USA: ACM, pp 216–220. https://doi.org/10.1145/3136755.3136817

Wen L, Li X, Li X, Gao L (2019) A new transfer learning based on VGG-19 network for fault diagnosis. In: 2019 IEEE 23rd international conference on computer supported cooperative work in design (CSCWD), IEEE, May 2019, pp 205–209. https://doi.org/10.1109/CSCWD.2019.8791884

Wolpert DH (1992) Stacked generalization. Neural Netw 5(2):241–259. https://doi.org/10.1016/S0893-6080(05)80023-1

XeThru X4M03 Development Kit– SensorLogic. Accessed 30 July 2021 from https://www.sensorlogic.store/products/xethru-x4m03-development-kit

Xu X, Lin F, Wang A, Song C, Hu Y, Xu W (2015) On-bed sleep posture recognition based on body-earth mover’s distance. In: 2015 IEEE biomedical circuits and systems conference (BioCAS), IEEE, pp 1–4. https://doi.org/10.1109/BioCAS.2015.7348281

Yue S, Yang Y, Wang H, Rahul H, Katabi D (2020) BodyCompass: monitoring sleep posture with wireless signals. Proc ACM Interact Mob Wearable Ubiquitous Technol 4(2):1–25. https://doi.org/10.1145/3397311

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Ethics declarations

Conflict of interest

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nouman, M., Khoo, S.Y., Mahmud, M.A.P. et al. Advancing mental health predictions through sleep posture analysis: a stacking ensemble learning approach. J Ambient Intell Human Comput 15, 3493–3507 (2024). https://doi.org/10.1007/s12652-024-04827-6

DOI: https://doi.org/10.1007/s12652-024-04827-6