Abstract

Attitudes towards artificial intelligence (AI) and social robots are often depicted as different in Japan compared to western countries such as Sweden. Several reasons for these general differences in attitudes have been suggested. In this study, five hypotheses based on previous literature were investigated. Rather than attempting to establish general differences between groups, subjects were sampled from the respective populations, and correlations between the hypothesized confounding factors and attitudes were investigated between individuals within each group. The hypotheses in this exploratory study concerned: (H1) animistic beliefs in inanimate objects and phenomena, (H2) worry about unemployment due to AI deployment, (H3) perceived positive or negative portrayal of AI in popular culture, (H4) familiarity with AI, and (H5) relational closeness and privacy with AI. No clear correlations between attitudes and animistic belief (H1), or portrayal of AI in popular culture (H3), could be observed. For the other attributes, worry about unemployment (H2), familiarity with AI (H4), and relational closeness and privacy (H5), the correlations were similar for the individuals in both groups and in line with the hypotheses. Thus, the general picture following this exploratory study is that individuals in the two populations are more alike than different.


1 Introduction

Products using artificial intelligence (AI) are implemented all over the globe. From Tesla’s self-driving autopilot to Google’s and Apple’s services in our smartphones, AI technology is rapidly becoming ubiquitous in our everyday lives. How is this affecting people, how do people feel about this change, and are there any systematic differences in how it affects individuals, both practically and emotionally?

A psychological attitude can be defined as a characteristic of an individual, formed by prior experience, that becomes a predisposition for how the individual will value an attitude object. First impressions tend to be lasting and do not change considerably with more observations and considerations. They also affect subsequent judgments and evaluations of the object in question [1, 2]. Consequently, investigations of people’s attitudes towards AI are directly linked to how people will be able to greet and accept the usage of AI in their everyday life. Such investigations can inform how to implement AI, as well as enable the implementation of ethically considered AI systems.

The field of AI is booming, and studies explicitly focusing on AI usage are currently being published regularly, but they lack a unifying, common tradition. Social robotics shares many features with AI usage, both in terms of what is implemented in society and in that such robots are to a large extent anthropomorphized versions of AI and expected to interact as human actors. In this sense, social robots can be seen as essentially embodied AI.

In this closely related field of social robotics and human–robot interaction (HRI), there is a longer and more established tradition of research into attitudes towards the use of social robotics. Psychometric measurements of attitudes towards and acceptability of robots have mainly been performed since the early 2000s, so this tradition can at the same time be deemed fairly new. Krägloh et al. [3] review several instruments to measure attitudes towards robots within the field of HRI. Some instruments are proposed to measure the acceptance and intention of using robots as directly as possible, such as adaptations of the Technology Acceptance Model (TAM), which assumes that behavioral intention is based on perceived ease of use and usefulness [4]. Others are focused on ethical considerations, such as the Ethical Acceptability Scale, which has mainly been used to evaluate therapeutic robotic partners for children with autism [5].

The most commonly used instrument, and the earliest to be established, is the Negative Attitude towards Robots Scale (NARS), first developed by Nomura and colleagues [6]. NARS is a questionnaire sometimes used in experimental settings, but it has also often been used to investigate participants' general attitudes towards robots and has thus been used widely in cross-cultural studies. The NARS questionnaire consists of 14 statements that the participant is asked to rate their agreement with, for example: “I would feel uneasy if I was given a job where I had to use robots”. Analyses of the 14 items have identified three factors: (1) Situations of Interaction with Robots, (2) Social Influence of Robots, and (3) Emotions in Interactions with Robots. The NARS questionnaire has been criticized for being too negatively oriented [3], and for not addressing positive emotions, attitudes, and intention to use. While the critique is valid, some defense can be granted in that NARS has questions measuring both negative and positive aspects; specifically, the questions associated with factor 3 about emotions are positively worded and then reverse-scored in the analysis to conform to a consistently oriented scale.

When it comes to cross-cultural studies, Japan has frequently been studied and compared to other cultures. The perception is that Japan has a special kind of relationship with robots. Some even call that relationship a mania, a love, or both [7, 8]. The assumption is that Japanese citizens are more accepting of social robots, given their ubiquity in society, the push to incorporate them in everyday life and in areas such as elder care, and the frequency of their depiction in popular culture. Attributing spiritual value to objects is suggested to be part of Japanese philosophical or religious views [9]. This, in turn, is suggested to be the reason why Japanese citizens more easily accept, for instance, a shift in factory manufacturing towards more automation, even when humans are replaced by robots [10].

Some studies report results in line with a notion of robot love that would explain more positive attitudes in Japan, but others contradict it. For example, in a comparison of the UK and Japan (N = 200), participants from the UK had more negative attitudes [11]. In another cultural comparison, of participants from the Netherlands, Japan, and China (N = 96), the Japanese participants had the most negative attitudes [12]. In a further study with participants from seven cultural backgrounds (N = 467), the Japanese scored among the most negative [13]. And in a comparison of US and Japanese university students (N = 731), implicit association tests indicated similar associations of robots with danger and more pleasant associations with humans [8]. Thus, it remains unclear whether attitudes towards robots are generally more positive in Japan compared to other countries. More importantly, it remains unclear how individual attitudes within the groups differ. This last point is especially important when considering the difficulty of recruiting representative samples of populations. With the exception of MacDorman et al. [8], this challenge does not seem to have been adequately considered in the above-mentioned studies.

This is a general problem facing most investigations of cultural differences. The studies comparing cultural differences in attitudes towards robots have a varied number of participants. In the five referenced here, the number of participants representing one cultural group ranges from as few as 20 to 400, with a mean of 126 and a median of 68 [6, 8, 11, 12, 13]. The number of participants is, however, only one part of the problem when it comes to representativeness. To be representative, study participants need to be randomly sampled from the whole population. Furthermore, it is reasonable to suspect that other demographic variables are more relevant than cultural background. In the above-mentioned studies, there are suggestions for the relevance of gender, age, technical orientation, and familiarity with robots. Consequently, there are several general problems in conducting cross-cultural comparisons, or at least in drawing conclusions from them, which motivates a primary focus on individual differences.

NARS was developed to investigate attitudes towards social robots. This does not translate immediately to the more general problem of studying attitudes towards AI systems. Many of the sentiments and issues involved with AI deployment are likely to be similar or identical to those of robot deployment, but this cannot be guaranteed a priori. In the current study, we have had to make adjustments to the original NARS questionnaire. However, rather than developing a completely new instrument, we see value in paraphrasing an instrument that has proven useful in prior research. Tentatively, we call this iteration of the instrument the Negative Attitudes towards Artificial Intelligence Scale (NAAIS). Building on NARS means that, instead of going through the effort of validating a completely new instrument, possible differences between the results of NARS and NAAIS can be examined by cross-validation, using cases of AI with embodiments that do not defy the notion of a robot. Arguably, this would have been a suitable first step for us, and the lack of validation is a major weakness of this paper that warrants caution when interpreting the results. However, we argue that this is a chicken-or-egg problem: the present paper motivates the need for a more general instrument to measure attitudes towards AI systems, and the alternative, to first validate an instrument that nobody knew they needed, would be less interesting for our target audience.

1.1 Hypotheses

Because of the problem of representativeness, this study does not aim to compare general differences between cultures. Instead, it explores the individuals within the groups, regarding how various beliefs and attributes affect attitudes towards AI. The null hypothesis is that there are no systematic differences between individuals within their respective cultural contexts.

Five main hypotheses (H1–5) are formulated to investigate correlations between various attributes or beliefs and negative attitudes towards AI. If a hypothesis can be supported for both samples, it would mean that individuals in both samples are affected similarly. Note that the literature often assumes a causal relationship, while we here only investigate correlations.

1.1.1 Shintoism and Animism

The first hypothesis regards religious belief. Japan has a particular religious background with Shintoism. Unlike many prehistoric religions that have largely disappeared, such as the Norse mythology of Scandinavia, Shintoism has remained an important part of society, alongside the introduction of other religions such as Buddhism, Confucianism, and Christianity. Like many other prehistoric religions, Shintoism is a devotion to spirits in nature, or “yaoyorozu no kami” (八百万の神), which roughly translates to “all the deities”, or “eight million gods”, that are found in natural phenomena such as mountains, rivers, storms, and earthquakes. In modern life, it often also transfers to technological objects such as houses, and this is suggested to be a reason why Japanese people tend to consider robots and artificial entities as living, as having a spirit, a kami [7, 8, 9]. Animism is the belief that creatures and inanimate objects alike, such as rocks, rivers, or houses, possess some kind of spirit or are in some sense alive. Thus, the suggestion is that animistic beliefs that remain in Japanese culture from Shintoism affect attitudes towards AI and robots; in particular, the idea that inanimate objects can in some sense be considered to be living. Animistic beliefs have been studied extensively in children’s cognitive development by Jean Piaget, but they have also been found to a varying degree in adult populations, for example in college and university students in the US and the Near East [14, 15]. Thus, the hypothesis is as follows:

H1: People who hold animistic beliefs about inanimate objects are less negative towards AI.

1.1.2 Job Security

In many western countries, there is a prevalent threat, voiced from time to time in the media, that AI and automation will “steal” jobs from humans. While robots have previously replaced muscle power, AI has the prospect of replacing human cognitive abilities to a far greater extent [16]. Japanese people are perceived as having a different mindset when it comes to this kind of adoption of automation: “Automation has never been seen as a threat to jobs in Japan, because companies employing robots would retrain workers for other jobs rather than dismiss [them]” [8].

H2: People who believe the implementation of AI in society will lead to fewer job opportunities for them are more negative towards AI.

H2a: People who are afraid of losing their jobs and occupations, in general, are more negative towards AI.

1.1.3 Attitudes from Popular Culture

Robots and AI have gained a prominent place in the world of science fiction, and it seems undeniable that the images of robots and AI drawn in popular culture may influence people's attitudes towards AI. There are also studies showing how a single viewing of a science-fiction film can affect a viewer’s scientific understanding of a topic [17], which means that it could also affect a viewer's view of the potential use and consequences of AI and robots in society.

As already highlighted, humanoid robots and AI are often depicted as friendly and cooperative in Japanese popular culture, such as manga and anime (hand-drawn or computer-animated comic books or movies) [7, 8]. In Japanese pop culture works such as Astro Boy, humanoids have been portrayed as companions to be accepted in human society rather than as uncontrollable man-made objects. Also, a common theme in many animated cartoons is the idea that giant robots are morally neutral and should be used by their operators to help humanity. In western movies and culture, robots and AI are more often depicted in a dystopian future, in which humans are harmed by technology. The Terminator [18] from 1984 is a stereotypical example, to mention one among many. It is thought that the many opportunities to come into contact with such works may affect the attitude towards robots and AI, and it is hypothesized that this could explain a difference in the general attitude towards robots and AI [12].

Therefore, we formulated the following hypothesis about the relationship between attitudes towards AI and popular culture.

H3: People who have the impression that AI is portrayed mostly negatively in popular culture are more negative towards AI.

1.1.4 Knowledge and Familiarity

A well-established and well-researched psychological phenomenon is the “mere-exposure effect”. Simply put: people tend to have a stronger preference and warmer feelings for stimuli and things they are repeatedly exposed to [19]. This effect has been applied and investigated in contexts ranging from foreigners liking the food that reminds them most of their native cuisine [20], to robots being more accepted with more familiarity [21]. This phenomenon is also suggested to be of relevance in studies comparing cultural differences [6, 13]. It is also suggested that knowledge about modern AI technology can be of similar relevance. In a study by Pinto Dos Santos et al. [22], participants were asked to rate their knowledge about AI technology, which was correlated with their attitude towards the implementation of AI. This leads to split hypotheses to investigate the two aspects of familiarity: prior experience and knowledge.

H4: People who are more familiar with AI and AI technology are less negative towards AI.

H4a: People who rate their familiarity with terms related to AI technology, such as machine learning, higher are less negative towards AI.

H4b: People with more experience of using AI are less negative towards AI.

1.1.5 Privacy and Relational Closeness to AI

When it comes to AI and social robotics, there are added challenges to the concept of privacy. As Lutz et al. [23] put it: “Social robots challenge not only users’ informational privacy, but also affect their physical, psychological, and social privacy due to their autonomy and potential for social bonding”. That is, the human-likeness that AI and social robots often try to mimic, or the perception that these artifacts have some kind of autonomy and intentions, creates human-like social problems. This is in line with the claims of the Media equation theory, i.e., that people tend to interact with machines and computers in a fashion similar to interacting with humans [24]. However, when it comes to social robotics and AI, the interaction can be even more human-like when the agents are perceived to possess human qualities. Thus, not only physical distance but also social and relational closeness becomes relevant and mixed up in the notion of privacy [23]. Research into social robotics supports these mixed attributes, for example when people who rated robots as less human-like also held a more negative attitude towards robots, and the relationship with it was not perceived as close [25]. Altogether, prior work suggests that as the experienced social distance and need for social and informational privacy in relation to AI decreases, a positive attitude towards it increases.

H5: People who want more social and informational privacy in relation to AI are more negative towards AI.

H5a: People who would accept or desire relationally closer roles of AI are less negative towards AI.

H5b: People who would like more human-like AI are less negative towards AI.

2 Method

2.1 Participants

Altogether, N = 1966 participants from Japan and Sweden completed an online questionnaire. Eleven entries were removed due to unsatisfactory answers (e.g., the same response alternative was chosen on all questions). That left N = 1818 entries from Japan and N = 137 entries from Sweden.

The Japanese answers were gathered between July 24th and August 10th of 2020. Participants were recruited by email sent to employees and students at the AI Artificial Art Research Project of the Japan Society for Information Management, and the Faculty of Informatics of Kansai University, who are interested in AI education and research. At the same time, snowball sampling (a non-probabilistic sampling technique where existing participants recruit new participants among their acquaintances) was used to request answers from researchers, working people, and university students, which also resulted in responses from Japanese people living abroad. The median age group of the Japanese participants was 18–25 years. 66% of the respondents self-identified as male, and 34% as female.

Of the Swedish participants, 80 answers were collected from an online portal called Prolific (www.prolific.co). The site specializes in offering online participants the opportunity to take part in research tests and surveys online with reasonable pay for their time. The participants were rewarded £0.84 for the survey that took 8 min on average to answer. Fifty-four additional answers were collected using snowball sampling. Four more answers were gathered from students of an online course at the Department of Information Technology at Uppsala University, Sweden. The median age group of the Swedish sample was 25–35. 67% of the respondents self-identified as male and 33% as female.

2.2 Questionnaire

To investigate the participants' attitudes towards AI, the NARS questionnaire [6] was adapted to address AI instead of robots. Of the 14 questionnaire items, 11 statements concerning robots were altered merely by changing the object/subject, from “robot” to “AI”, and subsequently judged as unproblematic. Three statements were less directly applicable to AI and had to be adapted slightly. For example, item 8, concerning “standing in front of a robot”, was paraphrased as “being close to an AI device”. The 14 items used a five-point Likert scale, ranging from “Strongly disagree” to “Strongly agree”. The order of the items was randomized, but, due to technical constraints in the survey platform, had the same randomization for all participants; thus no randomization between subjects. The adapted NARS scale was evaluated using factor analysis; see Sect. 3.1 for further details.

Hypothesis 1 (H1) concerns the extent to which people have animistic beliefs about inanimate objects. A scale that has previously been used in studies on college and university students [14, 15] was applied here, with some updated items to represent modern artifacts and phenomena. Participants were presented with the statement “Being alive can be defined as being conscious, having feelings, thoughts, or intentions.”, and were then asked to rate which of the subsequent items could be considered alive. In the analysis, the 14 items were sub-categorized as: (1) animals: cat, mosquito, (2) organic: tree, flower, (3) natural phenomena: ocean, sun, fire, mountain, wind, galaxy, and (4) artificial constructions: car, mobile-phone, house. A final item asked if AI could be alive, but was treated separately and not included in the sub-categories. The order of the items was randomized, but with the same randomization for all participants.

Hypothesis 2 (H2) concerns the worry that the deployment of AI in society would limit job opportunities. Three questions were constructed: if the participant believes that (1) AI would hinder future work opportunities, or (2) increase opportunities, and (3) whether they generally are worried and concerned for their future work opportunities.

To investigate popular culture (H3), participants were asked to rate their impression of the portrayal of AI in popular culture on a five-point Likert scale, ranging from “Mostly negative” to “Mostly positive”. Second, the questionnaire investigated which movies featuring AI the participants had watched and considered relevant for their impression. A list of the most-watched Hollywood sci-fi movies was acquired from IMDb (Internet Movie Database; www.imdb.com), for both Japan and Europe (as the closest fit to the Swedish context). From this list, all movies concerning AI were selected. The list was found to be congruent between the two cultural contexts, and 10 feature movies, as well as three TV series, were extracted. They were as follows: The Matrix, Star Wars, Terminator, Wall-E, A.I. Artificial Intelligence, 2001: A Space Odyssey, Chappie, Her, The Iron Giant, Westworld, Battlestar Galactica, Star Trek. In Japan, about 40% of feature movies are currently produced in “western” countries, dominated by Hollywood productions. Thus, three additional items, the most popular AI movies/TV series in Japan, were added: Astro Boy, Ghost in the Shell, Doraemon. All movies were independently scored by three researchers as depicting AI either positively, negatively, or mixed and balanced. A valence score of watched movies was then calculated for each participant by counting the watched movies with a positive portrayal of AI and subtracting the number of watched movies with a negative portrayal.
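The valence score described above can be illustrated with a minimal Python sketch. The titles and their ratings below are placeholders, since the researchers' actual ratings are not listed here; the function names are likewise hypothetical, and treating unrated titles as neutral is an assumption.

```python
# Illustrative ratings only: +1 = positive portrayal, -1 = negative, 0 = mixed/balanced.
# These placeholder titles and values are assumptions, not the study's ratings.
RATINGS = {"film_a": 1, "film_b": -1, "film_c": 0, "film_d": -1}


def valence_score(watched, ratings=RATINGS):
    """Count of watched titles rated positive minus count rated negative."""
    seen = set(watched)  # a title counts once, however often it is listed
    positive = sum(1 for title in seen if ratings.get(title, 0) > 0)
    negative = sum(1 for title in seen if ratings.get(title, 0) < 0)
    return positive - negative


def exposure_count(watched, ratings=RATINGS):
    """Number of listed titles watched, regardless of valence."""
    return sum(1 for title in set(watched) if title in ratings)


if __name__ == "__main__":
    print(valence_score(["film_a", "film_b", "film_d"]))
    print(exposure_count(["film_a", "film_b", "film_d"]))
```

Mixed or balanced titles contribute nothing to the valence score but still count towards exposure, which is why the two measures are kept separate.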

To investigate familiarity (H4), a distinction was made between experience and knowledge. Regarding experience (H4b), participants were asked to rate how often they used AI systems, apps, or devices, such as Google Home or Apple’s AI assistant Siri, in their everyday life. To investigate knowledge (H4a), participants were asked to rate their familiarity with modern AI technology terms: artificial neural networks, and machine learning/deep learning. This was rated on a five-point Likert scale, ranging from “No knowledge” to “Deep knowledge”. Additionally, the number of watched AI movies was summed for each participant, regardless of the positive or negative valence of the movie, as a measure of familiarity through exposure via popular culture. Because of considerations in the analysis, Japanese and western movies were summed separately; see Sect. 3.2.3 for further details.

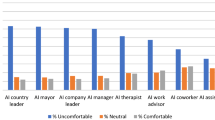

Finally, hypothesis 5 (H5) concerns privacy and relational closeness. Questions were adapted from previous research within social robotics examining physical closeness and proxemics, which is the study of physical space and its effect on humans. In proxemics, the egocentric space around a person is rated as (1) Intimate, (2) Personal, (3) Social, and (4) Public or distant [26]. This scale was adapted to informational privacy with AI, by asking participants what kind of information they would like to share with AI and how relationally close they would like their interaction with AI to be: (1) their most intimate details, (2) opinions and thoughts, (3) only errands and questions, or (4) no interaction at all. Participants were asked to choose the one statement that corresponded best to them, or none. Participants were also asked to rate how human-like they would like interactions with AI to be (H5b) on a five-point Likert scale. Finally, in a question adopted from Dautenhahn et al. [27], participants were asked to rate what kind of role they would like AI to have in the future. The six items were graded into an ordinal scale of relational closeness in three levels: (1) tool, or machine, (2) assistant, or servant, and (3) friend, or partner/mate.

Translations of the questionnaire into Japanese and Swedish, respectively, were based on English as the common ground. The Japanese translation of the NARS-inspired questions was supported by the fact that NARS was originally created in Japanese.

3 Results

The statistical analysis was made with R for Windows (4.0.2). Samples recruited mainly through snowball sampling are selective and must be assumed to be non-representative. Nor does this analysis correct for deviations from normality, to which statistical tests like ANOVA can be sensitive. Thus, the criteria for proper testing of null hypotheses are not met, and the results remain exploratory indications of what may or may not be the case in terms of relationships between variables. The relevance of the results is considered in the discussion section.

3.1 Exploratory Factor Analysis of Attitude

The first step is to analyze the main attitude measure used in this study, the transformed NARS questionnaire that will be called the “Negative Attitude towards Artificial Intelligence Scale” (NAAIS). Both datasets showed a decent and similar Cronbach’s alpha across the 14 NAAIS questions: Japan 0.79, Sweden 0.80. A limitation in this analysis is the smaller Swedish sample (N = 137) versus the much bigger Japanese sample (N = 1818). The Swedish sample is deemed too small for a meaningful comparison of findings from separate factor analyses of the 14 items. Therefore, an exploratory factor analysis (EFA) was executed on the Japanese dataset alone, to be able to establish reasonable factor loadings at this point of the analysis. Further statistical analysis, such as Monte Carlo simulations, is possible but also more complicated, and is discussed under limitations and future considerations in Sect. 4.1.
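For readers unfamiliar with the reliability measure, the following minimal Python sketch computes Cronbach's alpha from the standard formula, alpha = k/(k − 1) · (1 − Σ item variances / variance of the summed scores). The function name and the toy responses are assumptions for illustration; the study's own computation was done in R.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    columns = list(zip(*rows))  # one tuple of scores per item
    item_variance_sum = sum(variance(col) for col in columns)
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


if __name__ == "__main__":
    # Hypothetical responses from four participants on two Likert items.
    toy = [[1, 2], [2, 1], [3, 3], [4, 4]]
    print(round(cronbach_alpha(toy), 3))
```

Items that vary together inflate the variance of the summed score relative to the individual item variances, which pushes alpha towards 1.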

A principal component analysis was made on the 14 items to estimate the number of factors. This resulted in the scree plot in Fig. 1, which suggests three factors: beyond the third component, eigenvalues fall below the limit of 1 and further components stop adding explanatory value to the data.

A subsequent exploratory factor analysis (EFA) was conducted with three factors and Varimax rotation; the chi-square statistic was 315.19 on 52 degrees of freedom, with a p value of 2.4e−39. Table 1 shows mixed loadings on the three factors, contrary to the original NARS [6]. In the original questionnaire with robots, the three factors comprised the following questions: (FA1) 4, 7, 8, 9, 10, and 12, (FA2) 1, 2, 11, 13, and 14, and (FA3) 3, 5, and 6. Only the third factor was similar in our analysis. Given the large sample, even small loadings can indicate relevance, but in our analysis loadings smaller than 0.200 were deemed insignificant, in order to single out items loading on a single factor. These items are marked in bold in Table 1. The loadings of items 1 and 2 on factor 2, and of items 10 and 12 on factor 1, are similar to the original NARS. Where the NAAIS questionnaire deviates the most is with item 13, which loads on factor 1 instead of factor 2 as in the original. One item, no. 7 (“The words ‘artificial intelligence’/AI means nothing to me.”), stood out with high uniqueness (0.889), which means that it does not load well on any of the factors. This motivated the removal of item 7. The remaining items had uniqueness scores from 0.342 to 0.731.
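Two of the quantities reported above can be checked with a short Python sketch. It assumes a maximum-likelihood factor model with p items and m factors, for which the chi-square test has ((p − m)² − (p + m))/2 degrees of freedom, and standardized items with orthogonal factors (as under Varimax), for which uniqueness is 1 minus the sum of squared loadings. The function names and the example loadings are hypothetical.

```python
def efa_degrees_of_freedom(p, m):
    """Degrees of freedom of the factor-model chi-square test (p items, m factors)."""
    return ((p - m) ** 2 - (p + m)) // 2


def uniqueness(loadings):
    """1 minus communality; assumes standardized items and orthogonal factors."""
    return 1.0 - sum(value * value for value in loadings)


if __name__ == "__main__":
    print(efa_degrees_of_freedom(14, 3))  # 52, matching the reported value
    # Hypothetical loadings of one weakly loading item on three factors.
    print(round(uniqueness([0.2, 0.2, 0.1]), 2))
```

An item with uniqueness near 1, such as the removed item 7, shares almost none of its variance with the extracted factors.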

A reliability check using Cronbach’s alpha was performed with the eight selected NAAIS items on both datasets. Reliability dropped the most in the Japanese dataset, from 0.79 to 0.64, compared to the Swedish dataset, from 0.80 to 0.76. Considering the exploratory nature of this analysis, it was deemed marginally acceptable.

The altered factor loadings, compared to previous research on NARS, necessitate a new categorization of the three factors. Factor 3 has the same items loading on it as in the original NARS. Thus, it can be categorized similarly as concerning emotions; more specifically, it also concerns the relationship with the artificial agent. The three items loading on factor 1 concern being close to an AI device, talking with AI, and AI having a bad influence on children. This is categorized as concrete situations and interactions in which AI could affect an agent. The items loading on factor 2 concern what would happen if AI had emotions, or turned into living things. This, in turn, is categorized as more abstract and hypothetical situations, in which AI could affect life in a more distant future. The other questions that load similarly on factors 1 and 2, like questions 4 and 8, concern situations where you would get a job in which you had to use AI, and using AI in a way that would affect other people. Both of these could be viewed as something concrete, but could also be something more hypothetical in a potential future. Thus, the new categorization is deemed to be congruent with the questions that do not load on a single factor, but as will be discussed in the next part, this is subject to further investigation.

The three factors in NAAIS are thus summarized as follows: (NAAIS-1) Negative attitude towards concrete use of AI, (NAAIS-2) Negative attitude towards hypothetical use of AI, and (NAAIS-3) Negative attitude towards emotions and relationships with AI.

3.2 Correlations Between Attitude Factors and Hypothesis Variables

The main analysis of correlations was conducted using Spearman’s rank correlation coefficients (Spearman-rho), which are summarized in Table 2. Analysis of variance (ANOVA) was added where the trend of the two statistical measures differed. Effect size was calculated with Cohen’s d, which measures the standardized mean difference. To facilitate the latter, participants were split into two groups for each variable investigated. Note that the NAAIS-3 items are positively phrased in the questionnaire (see supplementary material or Appendix), just as they are in the original NARS questionnaire. Since the scale concerns negative attitudes, these items are reversed in the analysis for consistency between factors.
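As an illustration of the two measures used throughout this section, the sketch below implements Spearman's rho (Pearson correlation of average ranks, so tied Likert responses are handled) and Cohen's d with a pooled standard deviation. It is a minimal Python sketch with hypothetical function names and toy data, not the R code used in the study, and it leaves open how participants were split into the two groups.

```python
from math import sqrt
from statistics import mean, variance


def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample standard deviation."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


if __name__ == "__main__":
    # Hypothetical scores: worry rating vs. negative-attitude score.
    worry = [1, 2, 2, 4, 5]
    attitude = [2, 1, 3, 4, 5]
    print(round(spearman_rho(worry, attitude), 2))
    print(cohens_d([2, 4, 6], [1, 3, 5]))
```

Because rho depends only on rank order, it suits ordinal Likert data, whereas d expresses the gap between two group means in units of their pooled standard deviation.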

3.2.1 Animistic Beliefs (H1)

The proportion of participants holding animistic beliefs about either naturally occurring phenomena, such as lightning, or artificial things, such as a house, was 44% in Japan and 26% in Sweden. A factor analysis of the 13 items showed that organic things, such as trees and animals, were clustered together. Further analysis showed that more people attributed animistic beliefs to the naturally occurring phenomena than to the artificial things. These results were formulated into an ordinal scale where 0 = non-belief, 1 = natural-occurrence-belief, and 2 = artificial-things-belief. This scale reflects a distribution that is highly skewed towards the non-belief end. Each participant was assigned the highest level of animistic belief for which they had marked at least one item.
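The grouping rule can be sketched in Python as follows. The item sets follow the sub-categories listed in Sect. 2.2; treating animals and organic items as not counting towards animistic belief is an assumption inferred from the scale definition, and the function name is hypothetical.

```python
# Item sets taken from the questionnaire sub-categories in Sect. 2.2.
NATURAL = {"ocean", "sun", "fire", "mountain", "wind", "galaxy"}
ARTIFICIAL = {"car", "mobile-phone", "house"}


def animism_level(marked_alive):
    """Highest level marked: 0 = non-belief, 1 = natural phenomena, 2 = artificial things."""
    marked = set(marked_alive)
    if marked & ARTIFICIAL:
        return 2
    if marked & NATURAL:
        return 1
    # Assumption: marking only animals or organic items counts as non-belief.
    return 0


if __name__ == "__main__":
    print(animism_level(["cat", "tree"]))
    print(animism_level(["cat", "sun"]))
    print(animism_level(["sun", "house"]))
```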

The Japanese participants showed a small significant positive correlation between animistic beliefs and NAAIS-1 (0.1), but negative with NAAIS-3 (− 0.1). The effect size was negligible (0.19) for both correlations. In the Swedish sample, there were no significant correlations with the attitude measures, neither for Spearman-rho nor for ANOVA.

3.2.2 Job Security (H2)

In the Japanese group, there were significant and positive correlations between all attitude measures and worry about AI leading to less work for the participant (0.26, 0.25, and 0.06, respectively). Thus, the stronger the worry for jobs, the more negative the attitude towards AI. The effect sizes were in line with the correlations: medium with NAAIS-1 (0.55) and NAAIS-2 (0.56), and negligible with NAAIS-3 (0.13). General work worry showed similar trends in the correlations (0.34, 0.30, and 0.09, respectively), as well as in the effect sizes (0.72, 0.66, and 0.18).

Congruent with the results above, participants showed significant negative correlations between attitude measures and being positive towards AI leading to more work for them (− 0.12, − 0.18, and − 0.16, respectively). Thus, the more positive you were about AI leading to more work, the more positive you were towards AI. The correlations were in line with the effect sizes (0.28, 0.41, and 0.32 respectively).

In the Swedish sample, there was one significant positive Spearman-rho correlation between worry of AI leading to fewer jobs for the participant and NAAIS-1 (0.32), which had a medium (0.78) effect size, close to large at the 0.8 mark of Cohen’s D. Similarly, there was a positive correlation with general work worry and NAAIS-1 (0.35) and medium effect size (0.68).
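The verbal labels attached to the effect sizes in this section are consistent with the conventional cut-offs for Cohen’s D. The sketch below assumes cut-offs of 0.2, 0.5, 0.8, and 1.2; only the 0.8 mark for a large effect is stated in the text, so the remaining thresholds are an assumption.

```python
def effect_size_label(d):
    """Map Cohen's D to a verbal label using assumed conventional cut-offs."""
    magnitude = abs(d)
    if magnitude < 0.2:
        return "negligible"
    if magnitude < 0.5:
        return "small"
    if magnitude < 0.8:
        return "medium"
    if magnitude < 1.2:
        return "large"
    return "very large"


# Effect sizes reported in this section, labeled with the assumed cut-offs.
for d in (0.13, 0.28, 0.55, 0.78, 0.84, 1.57):
    print(d, effect_size_label(d))
```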

3.2.3 Popular Culture (H3)

In the Japanese sample, there were minor significant correlations between participants' perceived portrayal of AI in popular culture and NAAIS-2 (− 0.05) and NAAIS-3 (0.06). The effect size was similarly negligible in both cases (0.15 and 0.13, respectively). In the Swedish sample, there were no significant correlations.

The movie metric summarized the valence of the movies seen by participants. Contrary to our expectations, only about 65% of the Japanese participants had marked a western AI movie, compared to 93% of the Swedish participants. On average, the Swedish participants had marked 3.2 of the 13 western AI movies, and the Japanese participants 0.9. Of the three Japanese AI movies included, the Japanese participants had watched, on average, 0.3, compared to 0.02 for the Swedish participants. The number of Japanese movies was deemed too small to calculate a valence score, and the Japanese participants had not seen enough western movies for a meaningful measure. In the Swedish sample, there was a significant positive correlation between AI movie valence and portrayal of AI movies in popular culture (F = 4.1, p < 0.001), but neither of those two measures was significantly correlated with attitude.

3.2.4 Familiarity (H4)

In line with the hypothesis, familiarity with using AI systems/apps/devices was negatively correlated with all the attitude measures (− 0.08, − 0.08, and − 0.07, respectively) in the Japanese sample. Effect sizes were small with NAAIS-1 (0.21) and NAAIS-2 (0.29), and negligible with NAAIS-3 (0.17). There were no significant correlations in Sweden.

In the Japanese sample, knowledge and familiarity with terms used for AI technology were significantly and negatively correlated to the three attitude measures (− 0.16, − 0.19, and − 0.07, respectively). Similarly, they showed a small effect size to NAAIS-1 (0.25) and NAAIS-2 (0.40), but negligible to NAAIS-3 (0.14). In the Swedish sample, there was a significant negative ANOVA correlation between NAAIS-1 and knowledge (F = 5.4, p = 0.02), and small effect size (0.27).
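For readers unfamiliar with the F values reported here, a one-way ANOVA compares the variance between groups with the variance within groups. The sketch below computes only the F statistic and its degrees of freedom; the grouping and the scores are hypothetical, and the p-value is omitted because it requires the F distribution, which is not part of the Python standard library.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(v for g in groups for v in g)
    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: scores around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical attitude scores for low- and high-knowledge participants.
low_knowledge = [3.0, 3.5, 4.0, 3.5]
high_knowledge = [2.0, 2.5, 3.0, 2.5]
f_stat, df_b, df_w = one_way_anova_f([low_knowledge, high_knowledge])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

With exactly two groups, this F statistic equals the square of the pooled-variance t statistic, so the test carries no direction on its own; the sign of a relationship has to be read from the group means.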

Additionally, the number of AI movies watched was hypothesized to correlate with familiarity and a more positive attitude towards AI. As mentioned in Sect. 3.2.3, few of the Japanese participants had seen many western (Hollywood) movies, compared to the Swedish sample. Thus, a separate metric of summarized Japanese movies was constructed. While there were no significant correlations with western movies, there was a significant, negative correlation between Japanese movies watched and NAAIS-1 (− 0.6), as well as NAAIS-2 (− 0.10). In the Swedish sample, there was a large negative correlation between western AI movies and NAAIS-3 (− 0.26).

3.2.5 Privacy and Closeness (H5)

Both the Japanese and the Swedish participants showed similar trends, with significant, negative correlations between attitude measures and a desire for human-likeness of AI, the relational closeness of the roles of AI, as well as the level of relational closeness when it comes to informational privacy (− 0.08 to − 0.40 in the Japanese sample, and − 0.23 to − 0.55 in the Swedish). See Table 2 for a more detailed distribution. Similarly, the effect size was medium to large across the board, with the biggest effect sizes found between NAAIS-3 and the relational closeness in the role of AI: large (0.84) in the Japanese sample, and very large (1.57) in the Swedish sample.

3.3 Demographic Variables

There were four demographic variables included in the survey: age, gender, academic level, and being in an IT-academic field or job.

In the Japanese sample (N = 1686), there were small, significant Spearman-rho correlations between age and less of a negative attitude on NAAIS-1 and 2 (− 0.14 and − 0.13, respectively), and a small but positive correlation with NAAIS-3 (0.07). There were similar correlations between male gender and NAAIS-1 and 2 (− 0.07, − 0.14), but no correlation with factor 3. The level of academic degree was also correlated to less of a negative attitude to all factors in a similar fashion (− 0.11, − 0.09, and 0.08). Finally, participants coming from an IT-oriented field were associated with less of a negative attitude only to the third factor NAAIS-3, concerning emotions and relationship closeness (− 0.09). As an example, Cohen’s D of the two significant correlations was negligible and small, respectively (0.15 and 0.32).

In the Swedish sample, there were no significant correlations to the three factors of NAAIS.

Another consideration is that respondents could have different types of AI in mind when answering, be it general AI and highly social and autonomous artificial agents, or narrow AI and the type of everyday artifacts that are available today, like a robot-lawnmower or drones. As a minor check for this, respondents were asked what kind of AI they considered, with 13 alternatives grouped into three different levels of autonomy. No Spearman-rho correlation was found with animistic belief or other variables related to the hypotheses (H1–5), with one exception: in the Swedish sample, those who had seen more western movies considered higher levels of autonomy in AI (0.16). Similarly, in the Japanese sample, there was a correlation between more Japanese movies seen and higher levels of autonomy considered when answering (0.12). In turn, in the Japanese sample only, there was a similar correlation between higher levels of autonomy considered and NAAIS-1 (− 0.14), which is the attitude towards concrete use-cases of AI.

4 Discussion

This study aimed to investigate some propositions of cultural differences between individuals in Japan and Sweden as a western country, regarding attitudes towards AI. Measuring general differences between two cultural groups is difficult because of the problem of representativeness of the samples, and there are mixed results in previous cross-cultural comparisons of attitudes towards robots, as mentioned in the introduction. Thus, this study more specifically aimed to investigate individuals within populations, to see if their attitudes towards AI are correlated with various attributes and beliefs that are assumed and hypothesized in the previous literature to be the reason for general cultural differences.

When it comes to animistic beliefs (H1), there were small significant correlations in the Japanese sample, but where one was negatively correlated the other was positive. Likewise, the effect size was negligible in both cases. Thus, no clear relationship between animistic beliefs and attitudes towards AI can be observed.

It has been speculated that there is less of an issue with job security in Japanese companies when it comes to robotization and automation (H2). A reason for this is stated to be that rather than laying people off or downsizing personnel in automation processes, Japanese companies retrain their workforce or find other tasks for them. In this study, individuals from Sweden had a clear correlation between negative attitudes towards AI and fear of losing their job and an even clearer correlation for individuals from Japan. Thus, no clear differences between the groups can be seen in this regard.

The trends regarding popular culture (H3) were similar to the trends for animistic beliefs: significant but small correlations, both negative and positive. Likewise, the effect size was negligible and no clear relationship can be established. More importantly, the measure is of greater uncertainty as there is no internal validity of the different measures of popular culture impressions. An unexpected finding was that the Japanese sample, contrary to the popularity measures from IMDB, had watched only a few western-based AI movies. However, as expected, they had seen more Japanese AI movies. Thus, the measure requires further methodological development and considerations, which are brought up under the limitations below (Sect. 4.1).

Familiarity when it comes to one's own experience or knowledge of AI was expected to reduce negative attitudes (H4). There were clear indications of this in the Japanese sample, and similar trends in the Swedish sample, although more selective. Additionally, the more western AI movies that the Swedish participants had watched, the less negative their attitude towards AI. Among the Japanese participants, there were similar indications with the number of Japanese AI movies selected. The similarity in the trends indicates that this measure might be context-dependent and less generalizable between people with exposure to different sources of popular culture. Regardless, individuals from the two groups seem to correlate in a similar fashion.

Finally, relational closeness and privacy (H5) showed the strongest correlations, which were similar in both samples and in line with the hypothesis. That is, the closer the relationship participants would want with AI, the less privacy with AI they prefer, and the more human-like they would like AI to be, the less negative the attitude towards AI was. It also makes sense that the strongest correlation coefficients and effect sizes were observed in relation to NAAIS-3, which is the emotional and relational aspects of AI attitude.

4.1 Limitations

Spearman-rho, which is the main measure used to check for correlations in this study, is known to have difficulty capturing all relevant effects that, for example, ANOVA could capture. However, the latter is more sensitive to an assumption of normality, which has not been controlled for in this analysis. For this issue alone, further analysis of the data is warranted. Additional analyses are planned, using additional factor analysis, Structural Equation Modeling, and Monte Carlo-based methods. There are also plans to extend the data gathering to other cultural contexts, as well as gathering more data from Sweden to enable factor analysis of NAAIS.

There are also some methodological considerations for the measurement of attitude as well as for some of the variables for various attributes. First, as mentioned in the result section regarding impressions from popular culture (3.2.3), these measures might not be so strong, given the lack of consistency in the movies that participants have marked, as well as the lack of correlation between the two popular culture measures. Second, it can be questioned whether the animistic belief measure captures the relevant aspect of Shintoism in Japan. In a pilot study, an attempt was made to measure animistic belief by asking participants to rate their belief in ghosts, as well as their belief in spirits in various objects (or Kami in Japanese). The results from the 50 participants were inconclusive, and thus, the question about animistic belief was formed. For lack of better options, it is still deemed to be able to capture the relevant aspect of Shintoism and the belief of inanimate objects being alive. However, it needs to be validated with other measures.

As mentioned in Sect. 3.3, another consideration is that respondents could have different kinds of AI in mind when answering. While there were some correlations, with NAAIS-1 and concrete use-cases of AI, for example, there were only minor correlations to variables related to the investigated beliefs (H1-5). Thus, it can be argued to have played only a minor role as a possible confound. Another approach would be to expose the respondents to a specific AI device/application when doing the questionnaire, which perhaps could affect the responses.

There is also a general problem of translation, in two different ways. First, the original NARS questionnaire was created in Japanese and validated in a Japanese context. Since then, it has been translated to English and various other languages. As Krägeloh et al. [3] review, others have problematized the translation and application to other cultures and failed to find the same factors in the 14 items as the original studies on NARS. Thus, it is not certain that NARS, or NAAIS, is applicable to a Swedish population, and in this study there were too few participants to verify that. Second, the translation from robots (NARS) to AI (NAAIS) adds additional uncertainty. Even if it is validated by several translators, fluent in Japanese, English, and Swedish, there might be cultural differences that make the meaning difficult to translate to another culture. For example, item 7, which is noted by others [28], as well as us, seems problematic and was excluded in the analysis: “The word ‘robots’ mean nothing to me”.

Finally, there are alternative scales and psychological measures of attitude besides NARS that could be considered. As already mentioned in the introduction, TAM, or RoSAS, which seems to have a concrete theoretical foundation in human social cognition, is of interest (REF). It would be especially interesting to compare different scales on the same samples of people.

5 Conclusions

It remains important to restate that this study has not measured cultural differences between groups. Rather, the study has investigated some assumptions and hypotheses as to why there might be a general difference between cultural groups.

Contrary to the popular belief that the religious background of Shintoism with animistic beliefs as a foundation would play a big part in explaining the Japanese love for robots and artificial agents, no clear relationship could be found. Nor could a clear relationship be found with depictions of robots and AI in popular culture in either population sample.

There were more similarities than differences between the individuals in the two population samples. Familiarity with AI seems to reduce negative attitudes towards AI, and when it comes to worries about job security following automation with AI, both population samples had similar trends. Similarly, individuals who accept and would like closer, more intimate, and more human-like relationships with AI in the future, are less negative.

While this exploratory study does not attempt to compare cultures, indications are that differences between individuals within the populations are greater than the differences between them. Thus, Japanese and Swedish people are probably more alike than different, when it comes to the relationship between the investigated attributes and attitude towards AI. If this would remain the case in further analysis, then it could mean that greater emphasis should be put on individual attributes, desires, and needs, rather than differences between cultural groups, when it comes to implementing AI systems in the future.

The results of this study can also be related to a contemporary discussion about Nihonjinron (日本人論), which is a genre of texts concerning Japanese national and cultural uniqueness. It was perhaps most famously criticized by Peter Dale [29], who questions both the legitimacy of the valuation of national uniqueness and the internal logic of the concept, and instead points to universal commonalities. However, Dale [29] lacks empirical support for his critique, and perhaps these exploratory results offer some support for it.

References

Thorndike, E. L. (1920). A constant error in psychological ratings. Journal of Applied Psychology, 4, 25–29. https://doi.org/10.1037/h0071663

Ambady, N., & Rosenthal, R. (1992). Thin slices of expressive behavior as predictors of interpersonal consequences: A meta-analysis. Psychological Bulletin, 111, 256–274. https://doi.org/10.1037/0033-2909.111.2.256

Krägeloh, C. U., Bharatharaj, J., Kutty, S. K. S., Nirmala, P. R., & Huang, L. (2019). Questionnaires to measure acceptability of social robots: A critical review. Robotics, 8, 88. https://doi.org/10.3390/robotics8040088

Heerink, M., Kröse, B., Evers, V., & Wielinga, B. (2009). Measuring acceptance of an assistive social robot: a suggested toolkit. In RO-MAN 2009. The 18th IEEE International Symposium on Robot and Human Interactive Communication, 528–533. Toyama, Japan: IEEE. https://doi.org/10.1109/roman.2009.5326320

Peca, A., Coeckelbergh, M., Simut, R., Costescu, C., Pintea, S., David, D., & Vanderborght, B. (2016). Robot enhanced therapy for children with autism disorders: measuring ethical acceptability. IEEE Technology and Society Magazine, 35, 54–66. https://doi.org/10.1109/MTS.2016.2554701

Nomura, T., Suzuki, T., Kanda, T., & Kato, K. (2006). Measurement of negative attitudes toward robots. Interaction Studies, 7, 437–454. https://doi.org/10.1075/is.7.3.14nom

Hornyak, T. N. (2006). Loving the machine: the art and science of Japanese robots (1st ed.). Tokyo, New York: Kodansha International.

MacDorman, K. F., Vasudevan, S. K., & Ho, C.-C. (2009). Does Japan really have robot mania? Comparing attitudes by implicit and explicit measures. AI & Society, 23, 485–510. https://doi.org/10.1007/s00146-008-0181-2

Zeeberg, A. (2020). What we can learn about robots from Japan. BBC.com. January 24.

Why Westerners Fear Robots and the Japanese Do Not. 2018. Wired. July 30.

Nomura, T. (2017). Cultural differences in social acceptance of robots. In 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), 534–538. Lisbon: IEEE. https://doi.org/10.1109/ROMAN.2017.8172354

Bartneck, C., Nomura, T., Kanda, T., Suzuki, T., & Kato, K. (2005). A cross-cultural study on attitudes towards robots. In Proceedings of the HCI International. Las Vegas: Unpublished. https://doi.org/10.13140/RG.2.2.35929.11367

Bartneck, C., Suzuki, T., Kanda, T., & Nomura, T. (2006). The influence of people’s culture and prior experiences with Aibo on their attitude towards robots. AI & Society, 21, 217–230. https://doi.org/10.1007/s00146-006-0052-7

Dennis, W. (1957). Animistic thinking among college and high school students in the Near East. Journal of Educational Psychology, 48, 193–198.

Dennis, W. (1953). Animistic thinking among college and university students. The Scientific Monthly, 76, 247–249.

Brynjolfsson, E., & McAfee, A. (2014). The second machine age: work, progress, and prosperity in a time of brilliant technologies (1st ed.). New York: W. W. Norton & Company.

Barnett, M., Wagner, H., Gatling, A., Anderson, J., Houle, M., & Kafka, A. (2006). The impact of science fiction film on student understanding of science. Journal of Science Education and Technology, 15, 179–191. https://doi.org/10.1007/s10956-006-9001-y

Cameron, J., (1984). The Terminator. MGM.

Bornstein, R. F. (1989). Exposure and affect: Overview and meta-analysis of research, 1968–1987. Psychological Bulletin, 106, 265–289. https://doi.org/10.1037/0033-2909.106.2.265

Hong, J. H., Park, H. S., Chung, S. J., Chung, L., Cha, S. M., Lê, S., & Kim, K. O. (2014). Effect of familiarity on a cross-cultural acceptance of a sweet ethnic food: a case study with Korean Traditional Cookie (Yackwa): cross-cultural acceptance of Yackwa. Journal of Sensory Studies, 29, 110–125. https://doi.org/10.1111/joss.12087

Kim, A., Han, J., Jung, Y., & Lee, K. (2013). The effects of familiarity and robot gesture on user acceptance of information. In 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 159–160. Tokyo, Japan: IEEE. https://doi.org/10.1109/HRI.2013.6483550

Pinto dos Santos, D., Giese, D., Brodehl, S., Chon, S. H., Staab, W., Kleinert, R., Maintz, D., & Baeßler, B. (2019). Medical students’ attitude towards artificial intelligence: a multicentre survey. European Radiology, 29, 1640–1646. https://doi.org/10.1007/s00330-018-5601-1

Lutz, C., Schöttler, M., & Hoffmann, C. P. (2019). The privacy implications of social robots: Scoping review and expert interviews. Mobile Media & Communication, 7, 412–434. https://doi.org/10.1177/2050157919843961

Reeves, B., & Nass, C. I. (1996). The media equation: how people treat computers, television, and new media like real people and places. Cambridge University Press, CSLI Publications.

Cramer, H., Kemper, N., Amin, A., Wielinga, B., & Evers, V. (2009). ‘Give me a hug’: the effects of touch and autonomy on people’s responses to embodied social agents. Computer Animation and Virtual Worlds, 20, 437–445. https://doi.org/10.1002/cav.317

Hall, E. T. (1966). The hidden dimension. Garden City, Doubleday.

Dautenhahn, K., Woods, S., Kaouri, C., Walters, M.L., Koay, K.L., & Werry, I. (2005). What is a robot companion-friend, assistant or butler? In 2005 IEEE/RSJ international conference on intelligent robots and systems, 1192–1197. IEEE.

Syrdal, D. S., Dautenhahn, K., Koay, K. L., & Walters, M. L. (2009). The Negative Attitudes towards Robots Scale and Reactions to Robot Behaviour in a Live Human-Robot Interaction Study. In Proceedings of AISB09 Symposium on New Frontiers in Human-Robot Interaction 2009: 7.

Dale, P. N. (1986). The myth of Japanese uniqueness. St Martin’s Press.

Acknowledgements

We would like to express our gratitude towards Sumanth Pinnaka for helping with collecting Swedish participants, as well as towards the Swedish Foundation for International Cooperation in Research and Higher Education (STINT) and the Japan Society for the Promotion of Science (JSPS) for funding the project collaboration between Uppsala University, Sweden, and Meiji University, Tokyo Japan.

Funding

Open access funding provided by Uppsala University.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Persson, A., Laaksoharju, M. & Koga, H. We Mostly Think Alike: Individual Differences in Attitude Towards AI in Sweden and Japan. Rev Socionetwork Strat 15, 123–142 (2021). https://doi.org/10.1007/s12626-021-00071-y
