Abstract

The paper describes how the attitude of a sounding rocket, launch vehicle or satellite with respect to the Earth can be estimated from camera images of the Earth horizon. Details about detecting the horizon in the camera image, fitting hyperbolae or ellipses to the detected horizon curve and deriving the Earth nadir vector and the corresponding error covariance from the fitted conic section are given. The presented method works at low heights, where the projected horizon mostly appears to be hyperbolic, as well as at large heights, where the projected horizon mostly appears to be elliptic and it is irrelevant if the Earth is fully or only partially in the field of view of the camera. The method can be universally used to estimate the direction vectors and attitude with respect to any spherical celestial body such as the Sun or Moon. Using the example of a sounding rocket mission with two cameras aboard, it is illustrated how the estimates of the Earth nadir and the Sun direction vectors are fused with the measurements of a strapdown inertial measurement unit and a GPS receiver to obtain an accurate and continuous estimate of the three-dimensional orientation of the sounding rocket with respect to the Earth.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The paper presents a method for estimating the orientation of sounding rockets, launch vehicles and satellites with respect to the Earth by detecting the horizon of the Earth in the images of an optical camera, estimating the Earth nadir vector, i.e. the vector pointing from the vehicle to the center of the Earth and fusing this vector with the measurements of an inertial measurement unit (IMU) in an integrated navigation system. The position of the vehicle with respect to the Earth is assumed to be known, for example, from a global navigation satellite system (GNSS) receiver. The fundamentals of the image processing of the presented method have been first derived in [2] and [3].

The estimation of the Earth nadir vector works at very low heights, where the projected horizon of the Earth is a nearly linear curve in the image, at low heights, where the projected horizon appears as a hyperbola in the image, at medium heights, where the projected horizon appears as a hyperbola or a section of an ellipse in the image and at large heights, where the Earth is partly or fully visible in the image and the projected horizon appears as an ellipse, depending on the field of view (FOV) and the angle of view of the camera. The Earth nadir vector is directly estimated from pixels of the detected horizon in the image, without having to fit the detected horizon to a hyperbolic or an elliptic conic section at first.

Although the method was primarily developed to detect the horizon of the Earth and estimate the Earth nadir vector, the underlying geometric principles are generally applicable to projections of any spherical object onto an image plane – provided that the body is clearly different from the background so that the horizon is visible in the image of the camera. The image processing can therefore be adapted to detect the Sun and estimate the direction vector to the Sun. Similarly, the method could be used at night or during eclipse to detect the Moon and to estimate the direction vector to the Moon. If the celestial body is partially eclipsed however, the method has undefined behaviour.

By observing the Earth nadir vector, two of the three rotational degree of freedom (DOF) of the vehicle can be determined at the time when the image of the Earth’s horizon is taken by the camera. The third rotational DOF about the Earth nadir vector itself is not observable. If the Sun direction vector is additionally estimated, all three rotational DOF become simultaneously or sequentially observable, as the Earth nadir and Sun direction vectors usually point in different directions. When the estimates of the direction vectors are fused in an integrated navigation system with the solution of an inertial navigation system, an estimate of the three-dimensional orientation of the vehicle can be continuously provided.

The method is interesting for spinning sounding rockets, for example. To compensate for the effect of thrust vector misalignments and aerodynamic asymmetries on the trajectory during the propelled phase of the ascent, these unguided sounding rockets are usually spun up about the longitudinal axis to angular rates of up to 5 Hz. The angular rate is reduced again after the engine action time by means of a yo-yo system and roll control thrusters. If the orientation of the sounding rocket is determined by a strapdown inertial navigation system, especially the orientation error about the longitudinal axis may rapidly grow during the spinning phase due to the scaling factor error of the roll axis gyroscope. To avoid this problem, special inertial navigation systems are commonly used with the IMU being mounted on a rotatable gimbal, which isolates the roll axis gyroscope from the high roll rate. The roll rate measurement is then composed of the revolution speed of the gimbal, that is measured by an angle encoder, and the roll axis gyroscope measurement. These highly specific and mechanically complex platforms could be replaced by more robust and more readily available strapdown sensors if the accumulating orientation error were instead observed with the help of the Earth nadir and Sun direction vector measurements from a camera and image processing system.

The unguided spinning ATEK/MAPHEUS-8 sounding rocket, launched from Esrange, Sweden, in 2019, carried two cameras, three orthogonal fibre-optical gyros and accelerometers, and a GPS receiver. The mission was carried out by Mobile Rocket Base (MORABA), a department of the German Aerospace Center's (DLR) Space Operations institution. Amongst others, the DLR Institutes of Aerodynamics and Flow Technology, Materials Physics in Space and Aerospace Medicine, as well as the Microgravity User Support Center (MUSC) and the Technical University of Munich (TUM) were involved in the experiments.

Although the cameras and inertial sensors were not intended for navigation purposes, the collected image and sensor data set is ideal for demonstrating the functionality of the method step by step – starting from the raw distorted picture of one of the cameras, detecting potential horizon curves in the image, undistorting the found edges, estimating the Earth nadir vector and the corresponding error covariance, selecting the most likely horizon from the list of candidates by means of the residuals, and finally fusing the estimated Earth nadir and Sun direction measurements with the gyroscope, accelerometer and GPS receiver measurements.

It is favorable that there are optical cameras available in the market today which provide high-resolution images and have a highly responsive automatic exposure control. The latter is especially important for rapidly rotating vehicles to cope with the changing light conditions, which are challenging in terms of exposure due to the contrast between the dark space and the bright surface of the illuminated Earth. Furthermore, today’s embedded hardware has sufficient computing power to process the images and execute the algorithms of the integrated navigation system in real-time. Particular attention was paid to the computational efficiency of the algorithm.

Earth horizon sensors have been widely used on low Earth orbit (LEO) to geostationary orbit (GEO) satellites to measure the roll and pitch angles. Most of the conventional sensors work in the infrared spectrum, detecting the infrared radiation that is emitted and reflected by the Earth’s surface. They use either scanners that sample the Earth surface with an infrared sensor and rotating optics or an array of static infrared sensors. A prominent example of a scanning horizon sensor is Leonardo’s IRES device, which is designed for orbit heights between 15.300 km and 53.000 km. The accuracy of the roll and pitch angle is given with 0.05 deg random error and 0.02 deg bias error (\(3\sigma\)) [20].

CubeSpace offers the CubeSense sensor, which is a complementary metaloxide-semiconductor (CMOS)-based sensor with a very wide FOV and is available as Earth horizon and Sun sensor variant. The accuracy of the attitude is specified as \({0.2}\,{\hbox {deg}}\) (\(3\sigma\)) if the Earth is fully visible by the sensor [10]. The effective FOV is said to be 130 deg horizontally and vertically. Insight into the early development of the algorithm is provided in [1]. Meller et al. introduced a sensor system for low LEO satellites using multiple CMOS devices, each with a resolution of \(512 \times 512\) pixels and a FOV of 67 deg [21]. Nguyen et al. described a miniaturized horizon sensor that was developed for the MicroMAS CubeSat being deployed from the International Space Station (ISS) in 2015 [26]. It consists in total of four small thermopile sensors. Its accuracy is stated to be better than 0.2 deg (\(1\sigma\)). Kikuya et al. recently presented a method for estimating the attitude with respect to the Earth by first projecting the horizon visible on the image plane onto a unit sphere [19]. With regard to the underlying mathematical theory, the comprehensive work of Christian et al. in the area of optical navigation and attitude estimation from the projected horizon of a celestial body can be recommended, for example [6]- [8]. Although the main focus of their work is on estimating the relative position to a celestial body, they also show how the approaches can be adapted to estimate the relative orientation. Especially [9] is a recommendable tutorial on estimating the position and attitude of a vehicle relative to a spherical or ellipsoidal celestial body from camera images. Modenini et al. has recently published a method that takes the ellipsoidal shape of the celestial body into account [22, 23]. Mortari et al. presented a method for determining the relative position of a spacecraft with respect to an illuminated tri-axial ellipsoidal celestial body [25] from optical images, but also noted that the method can be used to obtain an estimate of the attitude of the camera [28].

The paper is organized as follows: in section 2, the geometric fundamentals are given. In section 3, the image processing algorithm and the estimation of the Earth nadir vector are detailed. In particular, the covariance of the estimation error is derived, which is required for the statistically correct fusion of the Earth nadir vector with the inertial navigation solution. In section 4, the sensitivity of the estimate of the Earth nadir vector with respect to the height above the Earth, the FOV of the camera and the image resolution is analyzed. In section 5, it is discussed how the image processing is adapted to detect the Sun in the image of the camera and to estimate the direction vector to the Sun. Section 6 deals with the aspects of the integrated navigation system that are specific to the data fusion of the Earth nadir and Sun direction vector measurements with the inertial navigation solution. Section 7 presents the results of the image processing and data fusion of the sensor ATEK sounding rocket. The conclusions are finally drawn in section 8.

2 Geometrical fundamentals

2.1 Pinhole camera model

The method is based on the assumption that the camera is an ideal pinhole camera without lenses [32, 37]. Optical effects such as distortion and blurring caused by real lenses are compensated for by intrinsic camera calibration, thoroughly explained in Section 3.2. In this ideal model the image is projected onto the image plane at a distance f from the aperture. Digital cameras usually provide the image already mirrored from left to right and from bottom to top. Therefore, an alternative representation with the image plane on the other side of the aperture of the camera is advantageous for the following derivation, as illustrated in Fig. 1. f is the focal length of the camera.

2.2 Coordinate systems

The required coordinate systems are illustrated in Fig. 2 and explained in the following.

2.2.1 Camera coordinate system (c) and image coordinate system (i)

The origin \(O_c\) of the camera coordinate system (c-frame) is located at the aperture of the pinhole camera at distance f to the image plane. The \(x_c\)- and \(y_c\)-axes are parallel to the image plane. The \(z_c\)-axis points along the optical axis in the viewing direction of the camera, the \(x_c\)-axis is directed to the right, and the \(y_c\)-axis completes the orthogonal, right-handed coordinate system. The pixel locations of the images taken by the camera are specified in the two-dimensional image coordinate system (i-frame) whose origin \(O_i\) lies in the cross point of the optical axis and the image plane and whose \(x_i\)- and \(y_i\)-axes are parallel to the \(x_c\)- and \(y_c\)-axes. The images are w pixels wide and h pixels high.

2.2.2 Principal axes coordinate system (p)

The principal axes coordinate system (p-frame) is a two-dimensional frame lying in the image plane. The \(x_p\)-axis coincides with the principal axis of the conic section projected onto the image plane. Why the projection is a conic section is thoroughly explained in Section 2.5. It is thus oriented along the major axis of ellipses and along the transverse axis of hyperbola or parabola, respectively. The \(y_p\)-axis is orthogonal to the \(x_p\)-axis and points in the direction of the minor axis of the conic section. The origin \(O_p\) is located in the center of the conic section. Using this frame, conic sections can be represented by a simple quadratic equation without linear and bilinear terms.

2.2.3 Earth-centered earth-fixed coordinate system (e)

The Earth-centered Earth-fixed (ECEF) coordinate system (e-frame) [27] serves as reference frame for the orientation of the camera with respect to the Earth. It is also the reference frame of the integrated navigation system which will be later used to fuse IMU measurements, GPS position and velocity measurements, Earth nadir vectors and Sun direction vectors.

2.3 Earth nadir vector

The purpose of the presented method is to estimate the attitude of the camera with respect to the Earth. For that, the normalized Earth nadir vector \({\mathbf {e}}_c\), pointing to the Earth’s center \(O_e\) and specified in the c-frame, is derived from the conic section detected in the image. The same vector is readily available in the e-frame if the position of the camera with respect to Earth’s center \({\mathbf {x}}_e\) is known, for example, from satellite navigation

By relating the vector in c-frame with the vector in e-frame with the transformation matrix \({\mathbf {R}}_{ec}\)

two rotational DOF of the camera with respect to the Earth can be determined. Only the rotational DOF about the vector itself is unobservable.

2.4 Earth cone

According to the pinhole camera model, all light rays creating the image in the image plane pass through the pinhole aperture of the camera. Then, if Earth is assumed to be spherical, all rays originating from Earth and its atmosphere form a solid cone with apex in the aperture, as illustrated in Fig. 3. The surface of this cone represents the boundary between Earth (more precisely the visible atmosphere) and space as seen by an observer at the location of the camera. The cross section of the cone with the geometrical definitions is shown in Fig. 4. r is the mean radius of the Earth, \(\alpha\) is the semi-opening angle of the cone, H describes the height of the camera above the spherical Earth, and d is the effective thickness of the atmosphere. In the following, the mathematical equation of the cone surface is derived. It will be later intersected with the image plane to find the equation of the projected curve which is the horizon line in the image.

The scalar product of the vector of an arbitrary point on the cone surface \({\mathbf {p}}_c\) with the Earth nadir vector \({\mathbf {e}}_c\) is

By squaring both sides, the equation of the double napped cone surface is obtained

\(\alpha\) is given from the geometry in Fig. 4 by

2.5 Conic section

The projection of the Earth’s horizon onto the image plane is the intersection of the Earth cone surface with the image plane \(z_c = f\), and thus forms a general conic section. As generally known, conic sections can be circles, ellipses, hyperbolas and even straight lines or points. The conic section equation is obtained by inserting \({\mathbf {p}}_c = \begin{bmatrix} p_{c,x}&p_{c,y}&f \end{bmatrix}^{\scriptscriptstyle \mathrm {T}}\) into (4), giving

with the coefficients

2.6 Classification of the conic section

In this application the projected conic section can only be a straight line if the image plane is a tangent to the cone while the optical axis points away from Earth. Since the Earth is not in the FOV of the camera in this scenario, it is impossible to observe a straight line and is therefore disregarded in the following. A point can only be observed if the aperture is in the image plane which is impossible and thus also disregarded.

Generally, the discriminant \(B^2 - 4 \, A \, C\) decides whether the conic section (6) is an ellipse, parabola or hyperbola. If \((B^2 - 4 \, A \, C) < 0\), it is an ellipse, if \(\left( B^2 - 4 \, A \, C\right) = 0\), it is a parabola, and if \(\left( B^2 - 4 \, A \, C\right) > 0\), it is a hyperbola. Inserting the coefficients A, B and C into the discriminant and canceling positive factors that do not alter the sign of the discriminant yields the specific discriminant for this application

Making use of the unit length of the Earth nadir vector and the Pythagorean trigonometric identity results in the discriminant

Defining the elevation of the Earth nadir vector above the image plane

the discriminant becomes

Consequently, this means that the conic section is an ellipse if \(\sin ^2 \alpha < \sin ^2 \beta\), is a parabola if \(\sin ^2 \alpha = \sin ^2 \beta\) and is a hyperbola if \(\sin ^2 \alpha > \sin ^2 \beta\). Considering that \(\alpha\) and \(\beta\) take only values between 0 and \(\pi /2\), it is also valid that the conic section is an ellipse if \(\sin \alpha < \sin \beta\) or \(\alpha < \beta\), is a parabola if \(\sin \alpha = \sin \beta\) or \(\alpha = \beta\), and is a hyperbola if \(\sin \alpha > \sin \beta\) or \(\alpha > \beta\). What does this geometrically mean? As illustrated in Fig. 5, if the distance f between focal point and image plane is neglected, one can say \(\alpha < \beta\) if the image plane does not intersect the Earth, \(\alpha = \beta\) if the image plane is just tangential to the Earth cone, and \(\alpha > \beta\) if the image plane intersects the Earth. The results are summarized in Table 1. Parabolic conic sections hardly occur in reality in this application and are therefore disregarded in the following. Circular conic section are just a special case of ellipses and occur only if \(z_c\) exactly intersects \(O_e\) and are therefore disregarded as well.

2.7 Visibility of conic sections

So far the image plane is assumed to be infinite in size but naturally, the actual sensor area of real cameras occupies only a small area of the image plane. The sensor area, together with the focal length f, defines the FOV of the camera. Obviously, the horizon of the Earth must be inside the FOV of the camera to project the horizon onto the actual image, either as ellipse or as hyperbola. Here arises the question whether both types of conic sections are always observable. The Earth may be fully seen in the image if \(\gamma > 2 \alpha\) holds for the FOV angle of the camera \(\gamma\). An elliptic horizon conic section may be partially or fully projected onto the image plane if \(\gamma > \pi - 4 \alpha\). A hyperbolic horizon conic section may be visible if \(\gamma > -\pi + 4 \alpha\). Fig. 6 illustrates if solely elliptic, solely hyperbolic or both elliptic or hyperbolic horizon conic sections are theoretically observable if the camera is appropriately oriented depending on the height of the camera above Earth H and the FOV of the camera. The hatched area marks combinations of H and FOV at which the Earth is completely seen by the camera. Fig. 7 shows the visibility for heights below 800 km. From this analysis one can conclude, that even for low altitude missions like sounding rockets, none of both possible conic section types can be disregarded if a wide angle optical system is used. For this mission with an apogee of 238 km and a FOV of 94.4 deg however, a focus on hyperbolae would have been sufficient. Further more, for missions below 800 km altitude using optical systems with FOV up to 120 deg it is impossible to have the Earth fully inside the image.

2.8 Effect of the spherical Earth approximation

The Earth is in reality ellipsoidal, but is approximated by a sphere with radius r, leading to erroneous estimates of the direction of the Earth nadir vector. To assess the rough magnitude of the orientation error that has to be expected when the ellipsoidal Earth is approximated by a spherical Earth, one can locally approximate the ellipsoid by a sphere that is tangent to the ellipsoid at the user’s location and whose radius r is the local radius of curvature of the ellipsoid normal to the viewing direction of the camera. The direction of the found Earth nadir vector will then be very close to the local normal direction of the ellipsoid, i.e. the direction of gravity. Advantageously, the estimation of the Earth nadir vector is insensitive to the scaling of the substitute sphere, as will be further elaborated in section 4, so that the local radius of curvature normal to the viewing direction of the camera does not need to be exactly known, but can be approximated.

Fig. 8 gives an expression of the orientation angle error about the East direction to be expected if the local normal vector is erroneously assumed as vector pointing to the center of the Earth. Its maximum is about 0.19 deg maximum at mid latitudes.

Following this reasoning, it would indeed be better not to assume the estimated Earth nadir vector toward the center of the Earth, but toward the local normal of the ellipsoid. Then the orientation error about the East direction, as shown in Fig. 8, error is reduced.

3 Image processing

Since the algorithm is intended to run on an embedded system onboard a spacecraft, particular emphasis was posed on the reduction of computational time although for this study the algorithm was applied post-flight on a desktop computer.

3.1 Binarization and edge detection

The presented method makes use of the significant difference in brightness between the illuminated Earth and its atmosphere and the dark space. Edge detection algorithms like the Canny edge detection algorithm [5] are therefore ideal for the identification of the Earth horizon in the camera images. [11] or [34], for example, provide an overview of the many existing edge detection techniques in computer vision. [38] is another good introduction to edge detection. The edge detection algorithm shall be capable of identifying both hyperbolic and elliptic projections of the horizon close to and far from the Earth on a typical embedded computer in real-time. The applied algorithm bases upon the topological search algorithm [33] and was adapted in [3] for horizon detection.

First, a monochrome copy of the image is generated by comparing the mean value of the RGB colors of each image pixel with a given threshold value. The comparison yields 0 (that is black) if the mean value is below the threshold and yields 1 (that is white) if the mean value is above the threshold. Next, all edges, are identified by an adapted version of a topological search algorithm [33]. An edge is a set of white pixels, with at least one adjacent black pixel, that form a continues string. The rough process of this algorithm is searching for edges row-by-row and following them through the image. These detected edges are potential candidates for the horizon curve. In contrast to classical edge detections like Canny edge detection [5], the original algorithm allows the distinction between individual edges, makes it possible to ignore edges inside edges and provides the edges as continuous lines of ordered pixels. All three of these features are key factors for the success of this method. In addition, the process is easily accelerated by taking into account that the Earth will occupy a large area in the image and thus not every row in the image must be searched. In fact, for this application, the Earth is never fully inside the image and thus must always touch the image border which allows to reduce the search for edges to the border pixels. Not only does this optimization reduce computational time considerably but also reduces clutter from sun flares and other objects that create edges. To support cases at high altitudes where the Earth is fully inside the image, additional search lines inside the image must be added. The proposed distance between the search lines is described in Section 5.

In Fig. 9 to 14, the image processing steps are exemplified using an image that was taken on the ATEK sounding rocket at T+220 s at a height of about 230 km. Fig. 9 shows the original unprocessed image as provided by the camera. The image is strongly distorted due to the wide angle lens of the camera. Fig. 10 is the binarized monochrome image. The threshold was set in such a way that the space and the cloud-free areas of the Earth’s surface are categorized as black and the atmosphere and the illuminated cloud cover as white. Only the blue pixels have been analyzed by the edge detection algorithm. The boundaries of the small enclosed white areas originating from clouds were discarded since they do not touch the image border. The area on the right where the payload is visible was set to be ignored by the algorithm. As illustrated in Fig. 11, the detected edges are formally divided into individual horizon edge candidates. In fact, the two long edges that both cross the lower and upper borders of the image are the most promising horizon candidates. One is the actual horizon and the other is the boundary between areas with dense and scattered cloud cover. In the following steps, Earth nadir vectors will be estimated for each candidate. In order to reduce further clutter inside the horizon edge, the Random Sample Consensus (RANSAC) algorithm by [12] is applied to each edge (see Sec. 3.6) using the model described in Sec. 3.3. To increase accuracy, it should finally be mentioned that it may be advisable to use a subpixel accurate edge detection algorithm [32, 30].

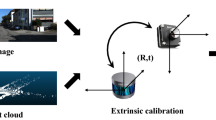

3.2 Calibration and undistortion

The ideal pinhole camera model disregards distortions that are caused by the optics of the camera. Lenses with a large FOV in particular cause large distortions outside the center of the image which are noticeable as nonlinear bending of the incident light rays [16]. The previously found edges must therefore be undistorted before they can be used for curve fitting and estimation of the Earth nadir vector, since the derived algorithm is based on the linear relationship of the simple pinhole camera model. Instead of first undistorting the camera image and then searching for edges, it is advantageous to carry out the undistortion after the edge detection because only the coordinates of the pixels of the detected edges and not all pixels of the image have to be transformed, which is a great reduction of computational time.

Fig. 12 illustrates a distorted nonlinear light ray and the corresponding undistorted linear light ray. The radial distance between the center of the image \(O_i\) and an image point of the undistorted ray \(P_u\) is given by the simple geometrical relation

where \(\theta\) is the angle between the incident light ray and the optical axis of the camera. According to the radially symmetric distortion model [18], the radial distance between the center of the image \(O_i\) and the image point of the distorted ray \(P_d\) can be adequately described by a ninth order polynomial containing only odd terms

The five coefficients \({\mathbf {k}} = \begin{bmatrix} k_1&k_2&k_3&k_4&k_5 \end{bmatrix}^{\scriptscriptstyle \mathrm {T}}\) are estimated during the calibration of the specific camera and lens. For this purpose, a series of images of a geometrically precisely defined and easily recognizable pattern with known dimensions (e.g. chessboard) is taken. In order for the five coefficients to be fully observable, the pose of the pattern relative to the camera must be changed successively. The coefficients are estimated by using the Levenberg-Marquardt algorithm, a method for solving non-linear least-squares problems.

Besides the distortion model coefficients \({\mathbf {k}}\), the intrinsic camera parameters are estimated during the calibration and used to correct the pixel coordinates of the detected edges [37]. These are the exact center of the image \(\begin{bmatrix} c_x&c_y \end{bmatrix}^{\scriptscriptstyle \mathrm {T}}\) as well as the horizontal and vertical focal lengths \(f_x\) and \(f_y\). Each pixel of the detected edges \({\tilde{\mathbf {p}}}_i\) is first transformed with

and then undistorted with

where the scaling factor s is

\(\theta\) is found by iteratively solving (13) for \(\theta\). The detected and undistorted horizon candidates of the example image are shown in Fig. 13.

3.3 Nadir vector estimation

Each of the m points of the detected horizon curve \({\mathbf {p}}_{i,j}\), \(j = 1 \ldots m\), fulfills the conic section equation (6). The searched coefficients are combined in the vector \({\mathbf {z}}\)

The problem at hand can be solved by linear least-squares approximation. However, in order for the solution to be either an ellipse or a hyperbola, the estimated coefficients \(A - F\) must meet the quadratic inequality constraint \(B^2 - 4 A C < 0\) for ellipses and \(B^2 - 4 A C > 0\) for hyperbolae. Given the expected kind of conic section, [13, 31] and [36], for example, describe methods how the detected edges can be best fitted to ellipses or hyperbolae by linear least-squares approximation with quadratic inequality constraint. After having estimated the conic section coefficients \(A - F\), the searched Earth nadir vector \({\mathbf {e}}_c\) is calculated with

Especially if only short sections of elliptic or hyperbolic horizon curves are seen in the image, the fitting may provide erroneous results. More sophisticated least-squares methods that are better adapted to the fitting problem at hand can be found in the literature, such as the hyper least-squares fitting method [17], which is particularly well suited for fitting circles and ellipses to noisy curves.

The main disadvantage of the general conic section fitting methods is that the specific constraints (7) which relate the conic section coefficients \(A - F\) to the Earth nadir vector \({\mathbf {e}}_c\) are not taken into account. Therefore, an adapted solution approach is to estimate the coefficients \(A - F\) by linear least-squares approximation, now with the three quadratic equality constraints

which are derived from (7). Unfortunately, there is no easy way to solve this estimation problem.

A third and more purposeful approach is to directly estimate the Earth nadir vector \({\mathbf {e}}_c\). Each of the m points of the detected horizon curve \({\mathbf {p}}_{i,j}\), \(j = 1 \ldots m\), fulfills the linear equation (3) with \({\mathbf {p}}_{c,j} = \begin{bmatrix} p_{x,i,j}&p_{y,i,j}&f \end{bmatrix}^{\scriptscriptstyle \mathrm {T}}\). \(\hat{{\mathbf {e}}}_c\) is found by least-squares approximation. Combining all \(1 \ldots m\) points in the matrix

and the right hand sides in the vector

the estimate of the Earth nadir vector is

Fig. 14 shows the edge that was selected as most likely horizon curve, and Fig. 15 finally illustrates the hyperbola that was fit to the edge. As sanity check it can be evaluated if the projected conic section lies inside the image, given the calculated Earth nadir vector \(\hat{{\mathbf {e}}}_c\) and the known altitude H. If not, the edge must be disregarded. The approach of estimating the Earth nadir vector directly from the detected curve is also described in [7] and [9] as Christian-Robinson algorithm, where it also proves to be the most powerful.

3.4 Residual

The residual is a measure for the quality of the curve fitting and consequently of the Earth nadir vector estimate. It is used to eventually decide which of the potential candidates is most likely the horizon conic section. It would be best to use the geometrical distance between each pixel of the detected edge and the estimated conic section, which, however, is costly to calculate. Therefore, both the pixels of the detected edge and the estimated conic section are first transformed to the p-frame, which is defined by the estimated conic section. At least in the case of flat hyperbolae, which mainly occur in sounding rocket and low Earth orbit satellite applications, the distance between the transformed curve and conic section in \(x_p\)-direction is a good approximation of the geometrical distance, which can be computed efficiently. Fig. 16 illustrates a hyperbola and an ellipse that are transformed to the p-frame. The parameterized conic section equation (6) transformed to the p-frame is

with the coefficients

The linear and bilinear terms are zero. The transformed conic section is symmetrical to the \(y_p\)-axis. The pixels of the detected edge \({\mathbf {p}}_i\) are first transformed to the p-frame by

The residual is then calculated from the m points of the transformed detected edge and estimated conic section

The horizon edge candidate with the smallest residual is chosen as the actual horizon edge. The absolute value in Eq. 28 ensures that the positive branch is used in the residual.

3.5 Error covariance

The covariance of the error of the Earth nadir vector estimate \(\delta {\mathbf {e}}_c\) is later required for the data fusion in the integrated navigation filter. (3) is linearized about the Earth nadir vector \(\tilde{{\mathbf {e}}}_c\) and the vector \(\tilde{{\mathbf {p}}}_c = \begin{bmatrix} \tilde{{\mathbf {p}}}_i^{\scriptscriptstyle \mathrm {T}}&f \end{bmatrix}^{\scriptscriptstyle \mathrm {T}}\)

where \({\mathbf {e}}_c = \tilde{{\mathbf {e}}}_c + \delta {\mathbf {e}}_c\) and \({\mathbf {p}}_c = \tilde{{\mathbf {p}}}_c + \delta {\mathbf {p}}_c\). With \(\delta {\mathbf {p}}_c = \begin{bmatrix} \delta {\mathbf {p}}_i^{\scriptscriptstyle \mathrm {T}}&0 \end{bmatrix}^{\scriptscriptstyle \mathrm {T}}\) and \(\delta {\mathbf {p}}_i\) being the pixel error. The right-hand side of the equations can be written as the scalar product of two two-dimensional vectors

By defining the (\(m \times 2m\))-sized matrix

with the row vectors

the covariance of the Earth nadir vector error \({\mathbf {P}}_c = \mathrm {E}\!\left[ \delta {\mathbf {e}}_c \, \delta {\mathbf {e}}_c^{\scriptscriptstyle \mathrm {T}}\right]\) is derived from the pixel error covariance \({\mathbf {R}}_i = \mathrm {E}\!\left[ \delta {\mathbf {p}}_i \, \delta {\mathbf {p}}_i^{\scriptscriptstyle \mathrm {T}}\right]\) by

It is assumed that the \(x_i\)- and \(y_i\)-coordinates of the pixel error \(\delta {\mathbf {p}}_i\) are each correlated between the corresponding \(x_i\)- and \(y_i\)-coordinates of the adjacent pixels but are statistically independent of each other. In its simplest form, this correlation may be described by a spatial \(1^{\hbox {st}}\)-order Gauss-Markov process. The pixel error covariance \({\mathbf {R}}_i\), which considers the correlation, then takes the form

with the correlation matrix

and R being the scalar variance of the pixel coordinate error and L being the characteristic distance of the Gauss-Markov process between the correlated pixels. For example, Fig. 17 shows the detected horizon curve in the image of camera 1 on the ATEK sounding rocket and the estimated hyperbola at T+220 s. The pixel error is composed of a component that is correlated over a distance of several hundred pixels and a sharply bounded, only little correlated noise component featuring characteristic patterns. This second component is quantization noise, which is due to the fact that the detected edge is discretized to integer pixels before the undistortion transformation. Taking into account the residuals of all detected horizons in the images of camera 1 and camera 2 on the ATEK sounding rocket, typical values for the variance R and the characteristic length L were estimated

Fig. 18 illustrates the estimated hyperbolic horizon in the image of camera 1 at T+220 s with superposed correlated noise that was generated by a white noise shaping filter (red dots) and additionally superposed violet noise (black dots) which represents the quantization noise. Violet noise is spatially differentiated white noise and is thus negatively correlated. It is largely filtered out by the regression and therefore does not need to be considered when calculating the error covariance that will be used for the integrated navigation error filter. The simulated noise in Fig. 18 is visually similar to the actual noise in Fig. 17.

The \(x_c\)-, \(y_c\)- and \(z_c\)-components of the covariance matrix \({\mathbf {P}}_c\) are highly correlated due to the specific geometry of the problem, and the corresponding covariance ellipsoid is strongly elongated in the direction of one semi-axis. Mathematically spoken, the covariance matrix \({\mathbf {P}}_c\) is not well conditioned. Therefore, before using it for data fusion in the integrated navigation system, the covariance matrix \({\mathbf {P}}_c\) is overbounded by assuming a spherical covariance

with \(\lambda _{max}\) being the maximum eigenvalue of \({\mathbf {P}}_c\).

3.6 Elimination of optical disturbances

Especially when the Sun is in the camera’s FOV, the Sun itself or lens flares may hinder or even falsify the edge detection. Outliers are removed by using the RANSAC algorithm of [12]. For that, the Earth nadir vector and the corresponding conic section are repeatedly estimated with three randomly selected pixels of the detected edge using the method described in Sec. 3.3. Each time, the edge pixels within a distance of certain threshold to the conic section are counted. The probability of detection of the correct subset of pixels increases with the number of repetitions. At the end, the largest subset is the clutter free output.

4 Sensitivity analysis

In this section, the sensitivity of the method with regard to the height of the camera above the Earth H, the FOV of the camera, the resolution of the image and the thickness of the atmosphere d is analyzed. The sensitivity analysis is done by evaluating the covariance equation (33) for different scenarios in which one parameter varies and the other parameters are kept constant. The covariance of the Earth nadir vector error \({\mathbf {P}}_c\) is transformed to a north-east-down coordinate system to obtain the roll, pitch, and yaw angle errors covariance.

First, the expected standard deviations of the roll and pitch angle errors are plotted in Fig. 19 as a function of the height H. For this sensitivity analysis, it is assumed that the vehicle is oriented horizontally and the camera is looking forward in a horizontal direction. The FOV is \({94.4}\,\mathrm{deg}\), the resolution is 1080 px, and the effective thickness of the atmosphere d is 0 km. The focus of the sensitivity analysis is on the height range between \({50}\,\mathrm{km}\) and \({500}\,\mathrm{km}\). The height H has only a minor influence on the accuracy of the roll and pitch angles. The standard deviation of the pitch angle error is more or less constant in the height range considered, and that of the roll angle decreases only slightly with increasing height H.

Next, Fig. 20 illustrates the sensitivity of the roll and pitch angle with respect to the FOV of the camera. Here, the height is set to \(H = {200}\,\mathrm{km}\). The standard deviation of the roll angle error is maximum when the FOV of the camera is small and decreases as the FOV increases, but increases again when the FOV is large. A FOV of about \({118}\,\mathrm{deg}\) would be optimal. Interestingly, the pitch angle is not affected by the FOV of the camera.

In Fig. 21, the dependency on the image resolution is shown. The height is \(H = {200}\,\mathrm{km}\), and the FOV is \({94.4}\,\mathrm{deg}\). As intuitively expected, the standard deviations of both the roll and pitch angle errors are maximum at low image resolutions and steadily decrease with higher image resolutions.

Finally, the sensitivity with respect to the effective thickness of the atmosphere d is analyzed in Fig. 22. It can be observed that neither the roll nor the pitch angle errors are influenced by d. So one gets the same result, regardless of whether one estimates the Earth nadir vector from the horizon of a spherical Earth without atmosphere (which is only theoretically possible) or from the actually visible horizon with atmosphere, as long as both horizons can be converted into one another by scaling. This is beneficial because the transition between the atmosphere and space can be fairly blurred depending on the height, viewing angle and solar radiation, and the course of the detected horizon edge depends much on the selected binarization threshold. The effective thickness of the atmosphere d can therefore be set to zero.

5 Sun sensor

The geometrical fundamentals of the presented method are, in general, valid for spherical (celestial) bodies. Thus, the algorithm can be used not only to estimate the Earth nadir vector, but also to estimate the direction vectors to the Sun and Moon. The projections of the Sun and Moon onto the image plane of the camera are ellipses. Because of the bright sunlight, the visible ellipse appears larger than the actual size of the Sun, but the shape and orientation of the ellipse remain unaffected. In the previous section, the sensitivity analysis showed that the method is insensitive to the scale and thus the size of the projection of the sphere on the image plane. It is advantageous if both the horizon of the Earth and the Sun are simultaneously or sequentially visible on the camera images. Since the directions of the Earth nadir vector and the vector to the Sun significantly differ from each other, all three rotational DOF of the camera with respect to the Earth can be determined from the two independent vector observations – either in one epoch or over a longer period of time if an integrated navigation filter is used. Fig. 23 shows again an example image of camera 1 on the ATEK sounding rocket at T+265 s. In addition to the Earth’s horizon, the camera image also shows the Sun. The sunlight is strongly reflected on the surface of the sounding rocket and causes glare on the image. First, the original image is binarized using a threshold value that is adapted to the brightness of the Sun. The result is illustrated in Fig. 24.

In Section 3 the optimization to only search for edge pixels would prevent the detection of the Earth at high altitudes, where its projected ellipse is fully inside the image. In this case it would also prevent the detection of the Sun, since its projection also does not intersect the image borders. Therefore, in addition to the border pixels also the pixels in an horizontal search line raster, as visualized in Fig. 24 as yellow lines, are searched. The distance between the lines is \(2l = 2 b^2 / a = 2f\tan \alpha\) where l is the semi-latus-rectum, b is the semi-minor axis and a is the semi-major axis of the potentially projected ellipse (c.f. Fig. 16). In contrast to the minor axis, the latus-rectum is not an exact measure of the size of the ellipse but it is a sufficiently high lower bound which can be determined without knowledge of the nadir vector.

Since only curves are sought that intersect one of the stripe borders at least once, only the boundaries of the two large white spots are detected as valid edges. The small white spot on the left of the elongated reflection spot does not intersect one of the search lines and is therefore discarded by the topological search algorithm. In fact, the elongated spot of the reflection on the surface of the sounding rocket would almost have been discarded if the search line had been shifted slightly upwards. The edge of the actual projection of the Sun, however, fulfills the search criteria very well. The pixels that are colored blue in Figure 24 and the yellow lines are the only pixels that have been analyzed by the topological search algorithm, all other pixels have not even been examined. In Fig. 25, all found edges are visualized in different colors. They are potential candidates for the Sun ellipse.

Both curves are undistorted and used to estimate the Sun direction vector \({\mathbf {e}}_c\). The solution is again the candidate with the smallest residual between the detected edge and the estimated ellipse. Fig. 26 shows the undistorted edge which was selected as most likely candidate. Finally, Fig. 27 shows the estimated ellipse which is plotted over the original undistorted camera image.

Another example is shown in Fig. 28. Contrary to intuition, the coordinates of the Sun direction vector are not in the center of the detected Sun ellipse. This is because the image plane is highly inclined with respect to the Sun cone. In fact, the coordinates of the Sun direction vector are neither in the center nor in one of the foci of the ellipse.

Specifically for the ATEK sounding rocket,

is assumed for the calculation of the covariance matrix (33).

6 Integrated navigation system

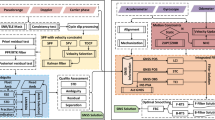

6.1 System design

The design of the integrated navigation system is illustrated in Fig. 29. It is the well-known design of an integrated navigation system (see for example [35] or [15]) which is aided with the position and velocity measurements of a GPS receiver and the Earth nadir and Sun direction vector measurements of the camera(s). The navigation error filter is an error state space Kalman filter. More precisely, it is implemented as a Schmidt-Kalman filter, which allows to distinguish between estimated error states and error states that are only considered statistically [14].

The acceleration and gyroscope measurements \(\tilde{{\mathbf {f}}}_b\) and \(\tilde{\varvec{\omega }}_{ib}\) of the IMU are first in-flight calibrated with the estimated sensor biases \({\mathbf {b}}_f\) and \({\mathbf {b}}_\omega\) and then integrated with time within the inertial navigation system (for example by means of the \(4^{\hbox {th}}\)-order Runge-Kutta integration scheme) to obtain the inertial navigation solution, that is the position \(\hat{{\mathbf {x}}}_{e}\), the velocity \(\hat{{\mathbf {v}}}_{e}\) and the orientation quaternion \(\breve{q}_{{\tilde{e}}b}\), beginning from the initial values \({\mathbf {x}}_{e,0}\), \({\mathbf {v}}_{e,0}\) and \(\breve{q}_{eb,0}\).

The position and velocity measurements \(\tilde{{\mathbf {x}}}_e\) and \(\tilde{{\mathbf {v}}}_e\) with the covariance matrices \({\mathbf {P}}_{e,pos}\) and \({\mathbf {P}}_{e,vel}\) and the Earth nadir vector \(\tilde{{\mathbf {e}}}_{c,hor}\) and the Sun direction vector \(\tilde{{\mathbf {e}}}_{c,sun}\) with the covariance matrices \({\mathbf {P}}_{c,hor}\) and \({\mathbf {P}}_{c,sun}\) are input into the navigation error filter to estimate the navigation and sensor error states. The estimated position error \(\delta {\mathbf {x}}_e\), velocity error \(\delta {\mathbf {v}}_e\) and orientation error \(\varvec{\psi }_{e{\tilde{e}}}\) are fed back to the inertial navigation system to correct the navigation state estimates. The navigation error filter also outputs the estimated covariance matrix \({\mathbf {P}}\) of all navigation and sensor state errors. In this paper, only accelerometer and gyroscope biases are accounted for as main IMU error representatives; other errors are omitted for clarity. If required, other IMU errors, such as scaling factor errors, bias instabilities and misalignments, can be additionally considered or estimated and used for in-flight calibration of the raw sensor values. In addition to the IMU errors, the misalignment of the camera is estimated or considered and the time-correlated measurement noise of the Earth nadir and Sun direction vector measurements is considered.

6.2 Earth nadir and Sun direction vector innovation

The nonlinear observation equations of the Earth nadir and Sun direction vector measurements which relate the vectors to the navigation states of the integrated navigation system are

where \({\mathbf {R}}_{b{\tilde{c}},k}\) is the transformation matrix between the body-fixed frame (b-frame) and the c-frame, representing the mounting orientation of the camera with respect to the body-fixed frame of the vehicle. \({\mathbf {R}}\left( \breve{q}_{{\tilde{e}}b,k}\right)\) is the transformation matrix between the e-frame and the b-frame and is derived from the orientation quaternion \(\breve{q}_{eb}\) at time \(t_k\). \({\mathbf {X}}_{e,k}\) is the position of the Sun with respect to the center of the Earth at time \(t_k\), specified in e-frame. An algorithm for the computation of the Sun’s position in the J2000 Earth-centered inertial frame and its transformation to the e-frame is, for example, given in [24]. The innovations that go into the Kalman filter are then

6.3 Earth nadir and Sun direction vector error model

In addition to the spatial correlation of the pixel errors of the Earth horizon curve, it is assumed that the errors of the estimated Earth nadir vector \(\delta {\mathbf {e}}_{c,hor}\) and the Sun direction vector \(\delta {\mathbf {e}}_{c,sun}\) are correlated with time. This temporal correlation is described in each case by a \(1^{\mathrm{st}}\)-order Gauss-Markov process which is characterized by the correlation length \(T_c\) [29]. The discrete-time propagation equation of a general \(1^{\mathrm{st}}\) Gauss-Markov process between two subsequent times \(t_{k-1}\) and \(t_k\) with \(t_k = t_{k-1} + \varDelta t\) is given with

with the matrices

Therein, \(\varvec{\zeta }_k\) is the state of the \(1^{\mathrm{st}}\)-order Gauss-Markov process, \(\varvec{\eta }_k\) is Gaussian white input noise whose covariance matrix is \({\mathbf {Q}}_k = \mathrm {E}\!\left[ \varvec{\eta }_k \, \varvec{\eta }_k^{\scriptscriptstyle \mathrm {T}}\right]\). The corresponding covariance propagation equation is

with \({\mathbf {P}}_{c,k} = \mathrm {E}\!\left[ \varvec{\zeta }_k\,\varvec{\zeta }_k^{\scriptscriptstyle \mathrm {T}}\right]\). \({\mathbf {P}}_c\) changes only slowly with time and can therefore be considered quasi-stationary in the time frame of the Gauss-Markov process characterized by the correlation length \(T_c\). The covariance matrix of the white Gaussian input noise \({\mathbf {Q}}\) can then be found at each time \(t_k\) by solving the discrete Lyapunov equation

for \({\mathbf {Q}}\),

using the covariance matrix \({\mathbf {P}}_{c,hor} = \mathrm {E}\!\left[ \varvec{\zeta }_{hor}\,\varvec{\zeta }_{hor}^{\scriptscriptstyle \mathrm {T}}\right]\), as given with (33), in case of the Earth nadir vector error and the covariance matrix \({\mathbf {P}}_{c,sun} = \mathrm {E}\!\left[ \varvec{\zeta }_{sun}\,\varvec{\zeta }_{sun}^{\scriptscriptstyle \mathrm {T}}\right]\) in case of the Sun direction vector error. For the ATEK application, the correlation length \(T_c\) was set to 20 s.

Furthermore, the Earth nadir and Sun direction vector measurements are affected by the misalignment of the camera \(\varvec{\psi }_{{\tilde{c}}c}\). It is modeled as a constant.

Defining the navigation state vector at time \(t_k\) as

the Earth nadir and Sun direction vector error equations are

with the matrices

where the operator \(\left( {\mathbf {a}} \times \right)\) forms the skew-symmetric matrix from the input vector \({\mathbf {a}}\). The dependency on the position error \(\delta {\mathbf {x}}_e\) is small and is neglected.

The innovation covariance matrices are consequently

The time-correlated errors of the Earth nadir and Sun direction vector measurements are only statistically considered by the Schmidt-Kalman filter. The entries of the Kalman gain \({\mathbf {K}}\) that correspond to the Gauss-Markov processes are therefore set to zero in the filter update step. For tuning purposes, additional white noise covariances \({\mathbf {Q}}_{hor,\mathrm {tuning}}\) and \({\mathbf {Q}}_{sun,\mathrm {tuning}}\) may be added to (49) to increase the measurement uncertainty if necessary. If the misalignment of the camera is estimated, the transformation matrix \({\mathbf {R}}_{b{\tilde{c}}}\) has to be corrected with the current estimate \(\varvec{\psi }_{{\tilde{c}}c}\) before use by

7 Flight experiment

7.1 ATEK/MAPHEUS-8 mission

The presented method was applied to estimate the orientation of a sounding rocket from real flight data collected on the ATEK/MAPHEUS-8 mission in 2019. ATEK was a sounding rocket campaign by DLR designed to test innovative materials and production methods for reusable rocket parts and to measure structural and thermal loads that act on the rocket during the various phases of flight. With the knowledge gained, the design of the sounding rocket parts shall be improved in terms of reusability in the future.

Lift-off was on June 13, 2019 at 4:21 am (CEST) from Esrange, Sweden. The two-stage launch vehicle VSB-30 accelerated the payload to a velocity of about 1.77 km/s at the beginning of the coasting phase and brought the payload to an apogee height of about 238 km, as illustrated in Fig. 30. In order to reduce the dispersion of the trajectory due to thrust vector deviations during the ascent, the rocket was spun up about its roll axis with its fins and spin-up thrusters shortly after lift-off and despun again by means of a yo-yo system after the burnout of the \(2^{\mathrm{nd}}\) stage. During the coasting phase, which began at T+60 s at a height of about 60 km, the rate control system (RCS) controlled the angular rate by means of nitrogen propelled cold gas thrusters. The roll, azimuth and elevation angles of the payload during the coasting phase are shown in Fig. 31. The payload landed successfully on the parachute about 920 s later, about 67 km north of the launch site. Important flight events are listed in Table 2.

The rocket in lift-off configuration is shown in Fig. 32, and the payload in reentry configuration after nose cone separation at T+56 s and motor separation at T+60 s is illustrated in Fig. 33. The payload was equipped with two GoPro HERO3 cameras, which were integrated into the service module and arranged on opposite sides of the payload, looking downwards at an angle of 20 deg. Furthermore, three orthogonal Honeywell Q-Flex accelerometers and three orthogonal Northrop Grumman LITEF \(\upmu\)FORS gyroscopes were aboard. Position and velocity measurements were provided by a DLR Phoenix GPS receiver. All measurements were recorded on board during the flight and were available for post-processing.

7.2 IMU measurements

Fig. 34 and Fig. 35 show the measured accelerations and angular rates during the coasting phase between T+60 s and T+430 s. The gray areas mark the periods in which the RCS was active and the pitch and/or yaw thrusters were firing. The measured accelerations are in the range of some milli-g and most of the time constant since no outer forces like thrust or aerodynamic forces acted on the rocket during the coasting phase. Only in the short periods with the RCS activated, small additional specific forces were measured on the corresponding axes. Even the roll axis accelerometer experienced some specific force because of the imperfect alignment of the thrusters and the accelerometer axes. The more or less constant offsets are due to the measurement biases of the accelerometers and can later be well estimated by the integrated navigation system. At the beginning and at the end of the coasting phase, the influence of small aerodynamic forces in the upper, low-density atmosphere can be observed.

The stabilizing roll rate is removed by the yo-yo despin at T+57 s. Subsequently, the angular rate of the payload slowly increases especially about the pitch and yaw axes due to moments generated by the payload experiments. As soon as the angular rate exceeds 0.9deg/s about an axis, the RCS counteracts the rate about this axis. As can be seen from the figure, the resolution of the angular rate measurements is with 0.03 deg/s quite low. Furthermore, the sample rate is only 20 Hz. It should be stressed that the accelerometers and the gyroscopes were not originally intended for navigation purposes, but only for monitoring micro-g conditions and for rate control during the coasting phase. For these tasks, it was not necessary to pay special attention to time synchronization, sensor axis alignment and measurement resolution. This fact has to be taken into account later when tuning the navigation error filter.

7.3 Earth nadir and Sun direction vector estimation

The GoPro HERO3 cameras took pictures with a resolution of \(1920 \times 1080\) pixels. The automatic exposure control of the cameras reacts very quickly, which was advantageous for this application, as there was a high contrast between the bright albedo of the Earth and the sunlight on the one hand and the dark space on the other. Like the IMU, the cameras were not originally intended for optical navigation, but for observing the general flight behavior and, in particular, the structural behavior of the \(1^{\mathrm{st}}\) and \(2^{\mathrm{nd}}\) stage fins during the ascent. Unfortunately, the cameras do not offer a way to synchronize the camera time with the system time. In addition, the cameras are not designed to be fixed to the structure, so that the mounting orientation error alone is assumed to be in the range of 1 deg (\(1\sigma\)) about each axis. However, it turned out that the quality of the images was sufficient to demonstrate at least the main functionality of the presented method in a post-processing study. The FOV of the cameras was 94.4 deg.

Fig. 15, Fig. 27 and Fig. 28 already showed examples of hyperbolae and ellipses that were fit to the detected Earth horizon and Sun curves in the images of the two cameras on the ATEK sounding rocket. A video, which is provided as electronic supplementary material (supplementary file 1), shows the undistorted images of the two cameras with the fitted hyperbolae and ellipses with residuals smaller than 0.025 and \(0.5 \cdot 10^{-5}\), respectively, between T+100 s and T+320 s. In addition, the corresponding x- and y-coordinates of the Earth nadir and Sun direction vectors are plotted.

The \(x_b\)-, \(y_b\)- and \(z_b\)-coordinates of the estimated Earth nadir and Sun direction vectors of cameras 1 and 2, transformed to the b-frame and normed to unit length, are plotted in Fig. 36. It can be observed that the Earth horizon and the Sun were visible at the same time over a longer period of time, either together in the same image of one or the other camera or each in one of the images of the two cameras. At T+185 s, there was a smooth uninterrupted transition of the Earth nadir vector from camera 2 to camera 1. Fig. 37 shows the corresponding isotropic standard deviations (\(3\sigma\)), as estimated with (33) and overbounded with (37), represented as angle errors. The angle errors are estimated to be below 0.9 deg (\(3\sigma\)). Due to the challenging light conditions, the horizon is difficult to detect until T+171 s in the image of camera 1, which is also noticeable in the large fluctuation of the standard deviation. These measurements are discarded and not used in the navigation error filter.

7.4 Initial values

Since the gyroscopes were not intended for navigation but for rate control, for which only small rates had to be captured during the coasting phase, their measurement ranges were limited to ± 1000 deg/s for the sake of a higher resolution (as already mentioned, the data was nevertheless only recorded in low resolution). However, the roll rate during the ascent was larger, which made it impossible to estimate the orientation by integrating the gyroscope measurements over time, starting from a known initial orientation at lift-off. Therefore, the Tri-Axial Attitude Determination (TRIAD) method [4] is used to estimate the initial orientation of the payload at the beginning of the coasting phase at T+60 s. The TRIAD method requires two non-parallel vector observations once in the b-frame and once in the navigation reference frame, here the e-frame, to estimate the initial orientation quaternion \(\breve{q}_{eb,0}\). It is a favorable circumstance that the Earth horizon and the Sun are simultaneously visible by camera 2 from T+160 s onwards. With that, the initial orientation is estimated at T+171 s with the TRIAD method and is then back-propagated to T+60 s by integrating the gyroscope measurements by means of the \(4^{\mathrm{th}}\)-order Runge-Kutta integration scheme. It is assumed that the accuracy of the initial orientation at T+60 s is 2 deg (\(1\sigma\)) about all three axes, considering the inaccuracy of the TRIAD method on the one hand and the accumulated orientation error of the back-propagation on the other hand. The initial position and velocity at T+60 s are provided by the GPS receiver.

7.5 Navigation error filter

The navigation solution (i.e. position, velocity, and orientation) is computed with the integrated navigation system design presented in Sect. 6. Table 3 lists the sensor errors that are included into the navigation error filter. For each error component, the number of involved states and the (initial) magnitude (\(1\sigma\)) are given, and it is specified if the error component is estimated (E) or solely statistically considered (C) by the navigation error filter. The accelerometer and gyroscope errors are composed of the velocity/angular random walk (that is white noise), constant turn-on biases and sensor axes misalignments. The misalignments and the gyroscope turn-on bias are small compared to the other errors and are thus unobservable. They are therefore only statistically considered. Only the accelerometer turn-on bias is well observable and is estimated. Other IMU errors like the bias instability are neglected. The large numbers in brackets that are added to the velocity/angular random walk values of the accelerometers and gyroscopes are noticeable. They represent the large amount of additional process noise that is necessary to take the large uncertainties of the IMU measurements and numerical integration errors into account and to tune the navigation error filter properly. The Earth nadir and Sun direction vector errors are composed of time-correlated noise with a characteristic correlation length of 20 s and white noise, which is required for tuning the navigation error filter. These noise-like errors are only statistically considered. Finally, the misalignments of the cameras 1 and 2 are modeled as constants and are estimated by the navigation error filter.

7.6 Orientation solution

The focus is here on the accuracy of the estimated orientation solution. The accuracy of the position and velocity is primarily driven by the accuracy of the GPS measurements. Fig. 38 exemplarily shows the three-dimensional orientation of the payload at T+175 s. The complete sequence between T+60 s and T+430 s can be viewed in the video, which is provided as electronic supplementary material (supplementary file 2). The red arrows represent the body-frame axes of the unaided inertial orientation solution, the blue arrows represent the body-frame axes of the aided integrated orientation solution. The gray ellipses with dotted edges at the tips of the blue arrows represent the \(3\sigma\) uncertainty ellipses of the tip location as predicted by the navigation error filter. Additionally, the estimated Earth nadir vectors (cyan: camera 1, green: camera 2) and estimated Sun direction vectors (magenta: camera 1, red: camera 2) are displayed. Only the vectors that are actually used in the integrated navigation solution are shown. Furthermore, the thrust vectors of the RCS thrusters are illustrated, when firing.

The analysis of the absolute accuracy is difficult in the absence of a highly accurate reference such as a roll-isolated inertial platform would provide. However, one can at least compare the unaided inertial orientation solution with the aided integrated orientation solution, and one can check the statistical consistency of the measurement innovations with regard to the corresponding predicted covariances. Furthermore, it is possible to compare the results with the standalone TRIAD orientation estimates, which can always be computed when an Earth nadir and Sun direction vector measurement are available at the same time.

In Fig. 39, the difference between the unaided inertial and aided integrated orientation solutions about the \(x_e\)-, \(y_e\)- and \(z_e\)-axes is plotted. The \(3\sigma\) standard deviation boundaries of the orientation error \(\varvec{\psi }_{e{\tilde{e}}}\), as predicted by the navigation error filter, are shown in Fig. 40. As soon as the first Earth nadir and Sun direction vector measurements of camera 2 are processed at T+171 s, the standard deviations of the orientation errors immediately decrease about all three axes. Only after T+300 s, they begin to grow again since aiding measurements are no longer available. At T+300 s, the orientation uncertainty has declined from initially 6 deg (\(3\sigma\)) to now 1.1 deg (\(3\sigma\)). Subsequently, it increases again to 1.8 deg (\(3\sigma\)).

The innovations of the Earth nadir and Sun direction vectors together with the corresponding \(3\sigma\) standard deviation boundaries are illustrated in Fig. 41. The innovations lie well in their \(3\sigma\) boundaries. In Fig. 42, the absolute angle differences between the measured and predicted Earth nadir and Sun direction vectors are displayed. (a) shows the angle between the measured vectors and the vectors that are predicted with the unaided inertial orientation solution, and (b) shows the angle between the measured vectors and the vectors that are predicted with the aided integrated orientation solution. In (a), the maximum angle error is 4.2 deg. These angle errors are owing to the numerical integration error of the inertial navigation algorithm and the orientation errors of the Earth nadir and Sun direction vectors. The tendency to grow with time, which can be observed for all four angle differences, is most likely due to the numerical integration error, which is typically increasing with time. In (b), the absolute angle differences are below 0.6 deg all the time. The steps of the angle differences between the Earth nadir and Sun direction vectors of camera 2 at T+170 s and of camera 1 at T+268 s are remarkable. In both cases, the two vector measurements are extracted from the same camera image, and the step occur at the moment the second of the two vectors becomes available for the first time. Since both vectors are affected by the same camera misalignment and inertial orientation errors, the differences are, at least qualitatively, a measure for the error between the Earth nadir and Sun direction vectors themselves. Though both errors cannot be separated, the errors of the individual direction vector measurements may be delimited upwards to 0.6 deg.

Fig. 43 shows the estimated camera misalignments and the corresponding \(3\sigma\) standard deviation boundaries, as predicted by the navigation error filter. All camera misalignments are initialized with zero in the beginning. The estimated errors and the predicted standard deviations seem to be consistent. The step of the misalignment of camera 1 at T+268 s goes hand in hand with the addressed step in Fig. 42.

Finally, it is worth to have a look at the TRIAD orientation solutions in Fig. 44. At each instant of time, at which an Earth nadir vector and a Sun direction vector are simultaneously visible by one of the cameras alone or by both cameras, a TRIAD orientation solution can be calculated. This TRIAD orientation solution is once compared to the unaided inertial orientation solution (a) and once to the aided integrated orientation solution (b). Fortunately, the horizon of the Earth and the Sun can be seen at the same time over a long period of time, and their projections on the images almost seamlessly pass from camera 2 to camera 1. There are three periods: first, the horizon of the Earth and the Sun are visible in the image of camera 2 from T+171 s until T+185 s, then the horizon of the Earth is visible in the image of camera 1 and the Sun is visible in the image of camera 2 until T+240 s, and finally, between T+260 s and T+280 s, the horizon of the Earth and the Sun can be seen in the image of camera 1. In (a), the orientation error continuously increases with time and is maximum at the end of the third period. The maximum orientation difference is 3.4 deg. As with the absolute angle differences, the growing difference is most likely due to the gyroscope measurement errors, which are integrated with time. In (b), the difference between the TRIAD and the aided solution about the \(x_e\)-, \(y_e\)- and \(z_e\)-axes is below 0.6 deg, independently from time, which is another indicator for a good statistical consistency of the integrated orientation solution.

8 Conclusion

Using sample data from a sounding rocket mission, the principle of estimating the Earth nadir and Sun direction vectors from camera images and their fusion with measurements from accelerometers, gyroscopes and a GPS receiver was illustrated. Even if the inertial sensors and cameras were not originally intended for navigation, the functionality of the method could be demonstrated and a consistent orientation solution estimated with the help of the integrated navigation system. For this specific application, the accuracy of the estimated azimuth and elevation angles of the Earth nadir and Sun direction vectors is assumed to be better than 0.6 deg. The accuracy of the orientation solution of the integrated navigation system is assumed to be better than 2 deg (\(3\sigma\)) after processing all Earth nadir and Sun direction vector aiding measurements. A more in-depth study of the achievable accuracy of the direction vector estimation depending on the height, angle of view and image resolution is still pending. On one of the next sounding rocket missions, which is expected to take place in 2021/2022, a roll-isolated inertial platform will be on board in addition to the camera and a strapdown IMU. Then, a highly accurate reference will be available and the accuracy of the estimated orientation method can be evaluated quantitatively.

Since it has been shown in principle that the method can compensate for the disadvantages of a strapdown IMU on a spinning sounding rocket, the development of the method into a robust, real-time method is being pursued. The expensive and hardly available inertial platform could then be replaced by cheaper components, accompanied by a greater algorithmic effort. The achievable accuracy is sufficient for many sounding rocket missions that do not depend on highly accurate orientation estimations. The next step will be to replace the action camera with a camera that can withstand the harsh conditions and that has, among other things, a real-time interface, a time synchronization capability, and can be mounted firmly and repeatably with low misalignment.

References

Dol Bahar, M. N., Effandi, M., Hassan, M., Hamzah, N., Arshad, A., Farr, X., Visagie, L., Steyn, W. H.: Modular CMOS horizon sensor for small satellite attitude determination and control subsystem. 20th Annual AIAA/USU Conference on Small Satellites (2006)

Barf, J., Rapp, T., Bergmann, M., Geiger, S., Scharf, A., Wolz, F.: Horizon acquisition for attitude determination using image processing algorithms – Results of HORACE on REXUS 16. Proceedings of the 22nd ESA Symposium on European Rocket and Balloon Programmes and Related Research (2015)

Barf, J.: Development and implementation of an image-processing-based horizon sensor for sounding rockets. Julius-Maximilians-Universität Würzburg (2017)

Black, H.D.: A passive system for determining the attitude of a satellite. AIAA J. 2(7), 1350–1351 (1964). https://doi.org/10.2514/3.2555

Canny, J.: A computational approach to edge detection. IEEE Transact. Pattern Anal. Mach. Intell. 8(6), 679–698 (1986). https://doi.org/10.1109/TPAMI.1986.4767851

Christian, J.A.: Optical navigation using iterative horizon reprojection. J. Guid. Control. Dyn. 39(5), 1092–1103 (2016). https://doi.org/10.2514/1.G001569

Christian, J.A., Robinson, S.B.: Noniterative horizon-based optical navigation by cholesky factorization. J. Guid. Control. Dyn. 39(12), 2755–2763 (2016). https://doi.org/10.2514/1.G000539

Christian, J.A.: Accurate planetary limb localization for image-based spacecraft navigation. J. Spacecr. Rocket. 54(3), 708–730 (2017). https://doi.org/10.2514/1.A33692

Christian, J.A.: A tutorial on horizon-based optical navigation and attitude determination with space imaging systems. IEEE Access 9, 19819–19853 (2021). https://doi.org/10.1109/ACCESS.2021.3051914

CubeSpace: CubeSense V3 – Interface Control Document. Version 1.3 (2020)

Davies, E. R.: Computer and Machine Vision: Theory, Algorithms, Practicalities. Academic Press, 4th ed. (2017). https://doi.org/10.1016/C2010-0-66926-4

Fischler, M.A., Bolles, R.C.: Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24(6), 381–395 (1981). https://doi.org/10.1145/358669.358692

Fitzgibbon, A., Pilu, M., Fisher, R.B.: Direct least square fitting of ellipses. IEEE Trans. Pattern Anal. Mach. Intell. 21(5), 476–480 (1999). https://doi.org/10.1109/34.765658

Grewal, M. S., Angus P. Andrews, A. P.: Kalman Filtering: Theory and Practice with MATLAB. Wiley, 4th ed. (2014)

Groves, P. D.: Principles of GNSS, inertial, and multisensor integrated navigation systems. Artech House, 2nd ed. (2013)

Heikkilá, J., Silven, O.: A four-step camera calibration procedure with implicit image correction. Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 1106-1112 (1997). https://doi.org/10.1109/CVPR.1997.609468

Kanatani, K., Rangarajan, P.: Hyper least squares fitting of circles and ellipses. Computat. Stat. Data Anal. 55(6), 2197–2208 (2011). https://doi.org/10.1016/j.csda.2010.12.012

Kannala, J., Brandt, S.S.: A generic camera model and calibration method for conventional, wide-angle, and fish-eye lenses. IEEE Trans. Pattern Anal. Mach. Intell. 28(8), 1335–1340 (2006). https://doi.org/10.1109/TPAMI.2006.153

Kikuya, Y., Iwasaki, Y., Yatsu, Y., Matunaga, S.: Attitude determination algorithm using Earth sensor images and image recognition. Trans. Japan Soc. Aero. Space Sci. 64(2), 82–90 (2021). https://doi.org/10.2322/tjsass.64.82

Leonardo: Infrared Earth Sensor (IRES). Datasheet MM07787 1-17 (2017)

Meller, D., Sripruetkiat, P.,Makovec, K.: Digital CMOS cameras for attitude determination. 14th Annual AIAA/USU Conference on Small Satellites (2000)

Modenini, D.: Attitude determination from ellipsoid observations: a modified orthogonal procrustes problem. J. Guid. Control. Dyn. 41(10), 2324–2326 (2018). https://doi.org/10.2514/1.G003610

Modenini, D., Locarini, A., Zannoni, M.: Attitude sensor from ellipsoid observations: a numerical and experimental validation. Sensors 20(2), 433 (2020). https://doi.org/10.3390/s20020433

Montenbruck, O., Pfleger, O: Astronomy on the Personal Computer. 4th edition, Springer (2000). https://doi.org/10.1007/978-3-642-03436-7

Mortari, D., D’Souza, C.N., Zanetti, R.: Image processing of illuminated ellipsoid. J. Spacecr. Rocket. 53(3), 448–456 (2016). https://doi.org/10.2514/1.A33342

Nguyen, T., Cahoy, K., Marinan, A.: Attitude determination for small satellites with infrared earth horizon sensors. J. Spacecr. Rocket. 55(6), 1466–1475 (2018). https://doi.org/10.2514/1.A34010

National Imagery and Mapping Agency: Department of Defense World Geodetic System 1984, Its Definition and Relationships With Local Geodetic Systems, Technical Report TR8350.2 (2004)

Park, K., Mortari, D.: Planet or Moon image processing for spacecraft attitude estimation. J. Electron. Imaging 17(2), 023020 (2008). https://doi.org/10.1117/1.2938999

Rassmusen, C.E., Williams, C.K.I.: Gaussian Processes for Machine Learning. The MIT Press (2005). https://doi.org/10.7551/mitpress/3206.001.0001

Renshaw, D.T., Christian, J.A.: Subpixel localization of isolated edges and streaks in digital images. J. Imaging 6(5), 33 (2020). https://doi.org/10.3390/jimaging6050033

Rosin, P.L.: A note on the least squares fitting of ellipses. Pattern Recogn. Lett. 14(10), 799–808 (1993). https://doi.org/10.1016/0167-8655(93)90062-I

Steger, C., Ulrich, M., Wiedemann, C.: Machine Vision Algorithms and Applications. Wiley-VCH, 2nd ed (2018)

Suzuki, S., Abe, K.: Topological structural analysis of digitized binary images by border following. Comput. Vision Graph. Image Proces. 30(1), 32–46 (1985). https://doi.org/10.1016/0734-189X(85)90016-7

Szeliski, R.: Computer Vision: Algorithms and Applications. Springer, 1st ed. (2011). https://doi.org/10.1007/978-1-84882-935-0

Titterton, D. H., Weston, J., L.: Strapdown inertial navigation technology. The Institution of Electrical Engineers, 2nd ed. (2004)

Zhang, Z.: Parameter estimation techniques: a tutorial with application to conic fitting. Image Vis. Comput. 15(1), 59–76 (1997). https://doi.org/10.1016/S0262-8856(96)01112-2

Zhang, Z.: A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 22(11), 1330–1334 (2000). https://doi.org/10.1109/34.888718

Ziou, D., Tabbone, S.: Edge detection techniques – an overview. Int. J. Pattern Recogn. Image Anal. 8, 537–559 (1998)

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

No funds, grants, or other support was received. The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary file 1 (mp4 131647 KB)

Supplementary file 2 (mp4 9638 KB)

Rights and permissions