Abstract

Correction for systematic measurement error in self-reported data is an important challenge in association studies of dietary intake and chronic disease risk. The regression calibration method has been used for this purpose when an objectively measured biomarker is available. However, a major limitation of regression calibration is that biomarkers have been developed for only a few dietary components. We propose new methods that use controlled feeding studies to develop valid biomarkers for many more dietary components and to estimate diet-disease associations. Asymptotic distribution theory for the proposed estimators is derived, and extensive simulations are performed to study their finite-sample performance. We apply our method to examine the associations between the sodium/potassium intake ratio and cardiovascular disease incidence using Women’s Health Initiative cohort data, and we find positive associations between the sodium/potassium ratio and the risks of coronary heart disease, nonfatal myocardial infarction, coronary death, ischemic stroke, and total cardiovascular disease.


Data Availability

The data that support the findings of this study are available from the Women’s Health Initiative (WHI), but restrictions apply to the availability of these data, which were used under license for the current study, and so they are not publicly available. Data are, however, available from the authors upon reasonable request, in a collaborative mode and with the permission of the WHI.


Acknowledgements

This work was partially supported by Grant R01 CA119171 from the U.S. National Cancer Institute and Grants R01 GM106177 and U54 GM115458 from the National Institute of General Medical Sciences. The WHI programs are funded by the National Heart, Lung, and Blood Institute, National Institutes of Health, U.S. Department of Health and Human Services through contracts HHSN268201600018C, HHSN268201600001C, HHSN268201600002C, HHSN268201600003C, and HHSN268201600004C. The authors acknowledge the following investigators in the Women’s Health Initiative (WHI) Program: Program Office: Jacques E. Rossouw, Shari Ludlam, Dale Burwen, Joan McGowan, Leslie Ford, and Nancy Geller, National Heart, Lung, and Blood Institute, Bethesda, Maryland; Clinical Coordinating Center, Women’s Health Initiative Clinical Coordinating Center: Garnet L. Anderson, Ross L. Prentice, Andrea Z. LaCroix, and Charles L. Kooperberg, Public Health Sciences, Fred Hutchinson Cancer Research Center, Seattle, Washington; Investigators and Academic Centers: JoAnn E. Manson, Brigham and Women’s Hospital, Harvard Medical School, Boston, Massachusetts; Barbara V. Howard, MedStar Health Research Institute/Howard University, Washington, DC; Marcia L. Stefanick, Stanford Prevention Research Center, Stanford, California; Rebecca Jackson, The Ohio State University, Columbus, Ohio; Cynthia A. Thomson, University of Arizona, Tucson/Phoenix, Arizona; Jean Wactawski-Wende, University at Buffalo, Buffalo, New York; Marian C. Limacher, University of Florida, Gainesville/Jacksonville, Florida; Robert M. Wallace, University of Iowa, Iowa City/Davenport, Iowa; Lewis H. Kuller, University of Pittsburgh, Pittsburgh, Pennsylvania; and Sally A. Shumaker, Wake Forest University School of Medicine, Winston-Salem, North Carolina; Women’s Health Initiative Memory Study: Sally A. Shumaker, Wake Forest University School of Medicine, Winston-Salem, North Carolina.
For a list of all the investigators who have contributed to WHI science, please visit: https://www.whi.org/researchers/SitePages/WHI

Decisions concerning study design, data collection and analysis, interpretation of the results, the preparation of the manuscript, and the decision to submit the manuscript for publication resided with committees that comprised WHI investigators and included National Heart, Lung, and Blood Institute representatives. The contents of the paper are solely the responsibility of the authors.

Funding

This research was partially supported by Grant R01 CA119171 from the U.S. National Cancer Institute and Grants U54 GM115458 and R01 GM106177 from the National Institute of General Medical Sciences.

Ethics declarations

Competing interests

All authors declare that there are no relevant financial or non-financial competing interests to report.

Appendices

Appendix A: Technical Details

A.1: Bias Factor Derivation

In this subsection, we derive the asymptotic bias in estimating \({\varvec{\theta }}\) with method 1 under the rare disease assumption by examining how \(E({\hat{X}}|Q,{\varvec{V}})\) deviates from \(E(X|Q,{\varvec{V}})\). For the first-step regression model of \({\tilde{X}}\) on \(({\varvec{W}}, {\varvec{V}})\), we have

where \(\Sigma _{{\varvec{W}}{\varvec{W}}|{\varvec{V}}}=Var({\varvec{W}}|{\varvec{V}})\) and \(\Sigma _{X{\varvec{W}}|{\varvec{V}}}=Cov(X,{\varvec{W}}|{\varvec{V}})\) are the conditional variance and covariance matrices. Plugging this into the second-step regression model, we have

When the bias factor \(\rho _{{\varvec{V}}}\) is constant over \({\varvec{V}}\), we denote it simply by \(\rho\), and we have \(\rho \theta _z^{*}=\theta _z\), or \(\theta _z^{*}=\rho ^{-1}\theta _z\), with appropriate adjustment for \({\varvec{V}}\). Explicitly, we have

If, in addition, \(E(X|{\varvec{V}})={\varvec{V}}\delta\) is a linear function of \({\varvec{V}}\), then \((1-\rho )\delta \theta _z^{*}+\theta _v^{*}=\theta _v\), or \(\theta _v^{*}=\theta _v-\frac{(1-\rho )\delta }{\rho }\theta _z\).
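A small simulation can illustrate this attenuation result. The sketch below is hypothetical and is not the authors' code. It drops \({\varvec{V}}\), uses a single biomarker \(W=b_1X+e\) with \(e\sim N(0,\sigma _w^2)\) independent of the self-report Q, and replaces the Cox model by a linear outcome model, so that no rare disease approximation is needed. Under these assumptions the first two steps give \(E({\hat{X}}|Q)-E(X)=\rho \{E(X|Q)-E(X)\}\) with \(\rho =b_1^2Var(X)/\{b_1^2Var(X)+\sigma _w^2\}\), so the third-step slope should be close to \(\theta _z/\rho\) rather than \(\theta _z\).

```python
import numpy as np


def ols(design: np.ndarray, response: np.ndarray) -> np.ndarray:
    """Least squares coefficients of response on design."""
    return np.linalg.lstsq(design, response, rcond=None)[0]


def with_intercept(*cols: np.ndarray) -> np.ndarray:
    """Stack an intercept column with the given predictor columns."""
    return np.column_stack([np.ones(cols[0].shape[0]), *cols])


def method1_slope(n=200_000, b1=1.0, sigma_w=1.0, sigma_q=1.0, theta=0.5, seed=0):
    """Run the three regression steps of method 1 on simulated data.

    Hypothetical data-generating model (an assumption of this sketch):
      X ~ N(0, 1) true intake, W = b1*X + N(0, sigma_w^2) biomarker,
      Q = X + N(0, sigma_q^2) self-report, Y = theta*X + N(0, 1) outcome.
    """
    rng = np.random.default_rng(seed)

    def draw(size):
        x = rng.normal(size=size)
        w = b1 * x + rng.normal(scale=sigma_w, size=size)
        q = x + rng.normal(scale=sigma_q, size=size)
        return x, w, q

    # Step 1 (feeding study): regress consumed intake X on the biomarker W.
    x1, w1, _ = draw(n)
    beta = ols(with_intercept(w1), x1)

    # Step 2 (biomarker substudy): regress biomarker-based intake X-hat on Q.
    _, w2, q2 = draw(n)
    xhat = with_intercept(w2) @ beta
    gamma = ols(with_intercept(q2), xhat)

    # Step 3 (cohort): regress the outcome on the calibrated intake Z-hat.
    x3, _, q3 = draw(n)
    y = theta * x3 + rng.normal(size=n)
    zhat = with_intercept(q3) @ gamma
    theta_hat = ols(with_intercept(zhat), y)[1]

    rho = b1**2 / (b1**2 + sigma_w**2)  # bias factor under this model, Var(X) = 1
    return theta_hat, rho


theta_hat, rho = method1_slope()
print(f"rho = {rho:.3f}, theta_hat = {theta_hat:.3f}, theta_z / rho = {0.5 / rho:.3f}")
```

With the defaults, \(\rho =0.5\), so the fitted slope should land near 1.0 instead of the true \(\theta _z=0.5\); increasing `b1` raises \(\rho\) and shrinks the distortion.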

A.2: Proof of Theorem 1

Before proving the main theorem (Theorem 1), we first prove the lemma below, which gives the asymptotic distribution of the third-step regression estimator given \({\hat{\gamma }}\) from the second step.

Lemma 1

Assume \(n_3/n_2\rightarrow C_{2}<\infty\) and \(\theta ^{*}\) is the unique solution to \(EU(\theta ,\gamma ^{*})=0\) for an estimating function \(U(\theta ,\gamma )\). If \({\hat{\theta }}\) solves the estimating equation \(0=n_3^{-1}\sum _{i=1}^{n_3} U_i(\theta ,{\hat{\gamma }})\), where \(\sqrt{n_2}({\hat{\gamma }}-\gamma ^{*})\rightarrow N(0,\Sigma _{\gamma })\) and \({\hat{\gamma }}\) is independent of \(U_i(\theta ,\gamma )\), then \(\sqrt{n_3}({\hat{\theta }}-\theta ^{*})\rightarrow N(0, \Sigma _{\theta })\), where

where \(I_{\theta }=E\left( -\frac{\partial U(\theta ,\gamma )}{\partial \theta }|_{\theta ^{*},\gamma ^{*}}\right)\), \(I_{\gamma }=E\left( -\frac{\partial U(\theta ,\gamma )}{\partial \gamma }|_{\theta ^{*},\gamma ^{*}}\right)\) and \(J_{\theta }=Var(U(\theta ^{*},\gamma ^{*}))\). This variance can be consistently estimated by

where \({\hat{I}}_{\theta }=n_3^{-1}\sum _{i=1}^{n_3}\left( -\frac{\partial U_i(\theta ,\gamma )}{\partial \theta }|_{{\hat{\theta }},{\hat{\gamma }}}\right)\), \({\hat{I}}_{\gamma }=n_3^{-1}\sum _{i=1}^{n_3}\left( -\frac{\partial U_i(\theta ,\gamma )}{\partial \gamma }|_{{\hat{\theta }},{\hat{\gamma }}}\right)\), \({\hat{J}}_{\theta }=n_3^{-1}\sum _{i=1}^{n_3}U_i({\hat{\theta }},{\hat{\gamma }})U^\top _i({\hat{\theta }},{\hat{\gamma }})\) and \({\hat{\Sigma }}_{\gamma }\) is a consistent estimator of \(\Sigma _{\gamma }\).

Proof

We derive the asymptotic distribution of \({\hat{\theta }}\) as follows:

By the uniform law of large numbers (ULLN), we have

Similarly, we have

Plugging in the Taylor expansion and noting that \(EU(\theta ^{*},\gamma ^{*})=0\), we have

So we have

By the central limit theorem (CLT), we have

So we have

By the ULLN and the continuous mapping theorem, \({\hat{I}}_{\theta }=I_{\theta }+o_p(1)\), \({\hat{I}}_{\gamma }=I_{\gamma }+o_p(1)\) and \({\hat{J}}_{\theta }=J_{\theta }+o_p(1)\). Since \({\hat{\Sigma }}_{\gamma }=\Sigma _{\gamma }+o_p(1)\) by assumption and \(n_3/n_2\rightarrow C_{2}<\infty\), the continuous mapping theorem gives \({\hat{\Sigma }}_{\theta }=\Sigma _{\theta }+o_p(1)\). \(\square\)
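The variance display of Lemma 1 is not reproduced in this version. Expanding the estimating equation to first order gives \(\sqrt{n_3}({\hat{\theta }}-\theta ^{*})\approx I_{\theta }^{-1}\{n_3^{-1/2}\sum _i U_i(\theta ^{*},\gamma ^{*})-I_{\gamma }\sqrt{n_3}({\hat{\gamma }}-\gamma ^{*})\}\), and independence of the two terms yields the sandwich form \(\Sigma _{\theta }=I_{\theta }^{-1}(J_{\theta }+C_2I_{\gamma }\Sigma _{\gamma }I_{\gamma }^\top )I_{\theta }^{-\top }\), which reduces to the expression in Theorem 2 when \(J_{\theta }=I_{\theta }\). Treating that form as an assumption about the missing display, a plug-in sketch of \({\hat{\Sigma }}_{\theta }\) follows; the function name and argument order are illustrative only.

```python
import numpy as np


def two_step_sandwich(I_theta, I_gamma, J_theta, Sigma_gamma, n3, n2):
    """Assumed Lemma 1 plug-in variance of sqrt(n3) * (theta_hat - theta*).

    I_theta:     (p, p) average of -dU_i/dtheta at (theta_hat, gamma_hat)
    I_gamma:     (p, q) average of -dU_i/dgamma at (theta_hat, gamma_hat)
    J_theta:     (p, p) average of U_i U_i^T at (theta_hat, gamma_hat)
    Sigma_gamma: (q, q) estimated variance of sqrt(n2) * (gamma_hat - gamma*),
                 where gamma_hat comes from an independent sample of size n2
    """
    I_theta = np.atleast_2d(I_theta)
    I_gamma = np.atleast_2d(I_gamma)
    # Middle term: noise of the score itself plus noise carried over from gamma_hat.
    meat = np.atleast_2d(J_theta) + (n3 / n2) * I_gamma @ np.atleast_2d(Sigma_gamma) @ I_gamma.T
    bread = np.linalg.inv(I_theta)
    return bread @ meat @ bread.T


# Scalar example: ignoring gamma_hat would give J / I^2 = 0.5; the correction doubles it.
print(two_step_sandwich([[2.0]], [[1.0]], [[2.0]], [[4.0]], n3=1000, n2=2000))
# → [[1.]]
```

The same function covers each method in Theorem 2 once the method-specific \({\hat{I}}_{\gamma }\) and \({\hat{\Sigma }}_{\gamma }\) are supplied.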

We now give the detailed forms of these quantities for the Cox regression model.

Lemma 2

Assume \(n_3/n_2\rightarrow C_{2}<\infty\) and \(\theta ^{*}\) is the unique solution to

where \(Z^{*}=\mathbb {X}\gamma ^{*}\). If \({\hat{\theta }}\) solves the estimating equation

where \({\hat{Z}}_i=\mathbb {X}_i{\hat{\gamma }}\) and \(\sqrt{n_2}({\hat{\gamma }}-\gamma ^{*})\rightarrow N(0,\Sigma _{\gamma })\), then we have \(\sqrt{n_3}({\hat{\theta }}-\theta ^{*})\rightarrow N(0, \Sigma _{\theta })\) where

where \(a^{\otimes 2}=aa^T\) and

This variance can be consistently estimated by

where

\({\hat{\Sigma }}_{\gamma }\) is a consistent estimator of \(\Sigma _{\gamma }\) and \(n_3/n_2 \rightarrow {C_2}\).

Proof

Denote \(U_i(\theta ,\gamma )=\int _0^{\tau }\left[ \left( \begin{array}{c} Z_{i}\\ {\varvec{V}}_i\end{array}\right) -\frac{E\left[ Y_j(t)\exp \left\{ (Z_{j},{\varvec{V}}_j^\top )\theta \right\} \left( \begin{array}{c} Z_{j}\\ {\varvec{V}}_j\end{array}\right) \right] }{E \left[ Y_k(t)\exp \left\{ (Z_{k},{\varvec{V}}_k^\top )\theta \right\} \right] }\right] dN_i(t)\) where \(Z_i=\mathbb {X}_i\gamma\). Then, by the definitions of \(I_{\theta }\), \(I_{\gamma }\) and \(J_{\theta }\) in Lemma 1, these terms take the forms stated above. The consistency of \({\hat{\theta }}\) and \({\hat{\gamma }}\), together with the ULLN and the continuous mapping theorem, ensures that

which leads to \({\hat{I}}_{\theta }=I_{\theta }+o_p(1)\), \({\hat{I}}_{\gamma }=I_{\gamma }+o_p(1)\) and \({\hat{J}}_{\theta }=J_{\theta }+o_p(1)\). Applying Lemma 1, we obtain the asymptotic distribution of \({\tilde{\theta }}\), the solution of \(0=n_3^{-1}\sum _{i=1}^{n_3}U_i(\theta ,{\hat{\gamma }})\). It remains to show that \({\tilde{\theta }}\) and \({\hat{\theta }}\) are asymptotically equivalent, which follows from the ULLN:

We note that, for methods 2–4, \(Z^{*}\) has the same expression \(E(Z|Q,{\varvec{V}})\), and thus \(I_{\theta }\) is the same across these methods, although the estimated versions \({\hat{I}}_{\theta }\) differ. \(\square\)
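To make Lemma 2 concrete, the sketch below replaces the expectations in \(U_i(\theta ,\gamma )\) by risk-set averages over the observed cohort (the usual partial-likelihood score, with \(Y_j(t_i)=1\) when \(time_j\ge time_i\), so ties are handled as in Breslow's approximation). It computes \({\hat{I}}_{\theta }\) in closed form and obtains \({\hat{I}}_{\gamma }\) by central finite differences of the averaged score, a numerical shortcut rather than the analytic expression. The argument names (`Xmat` for the rows \(\mathbb {X}_i\), `V`, `time`, `event`) and the simulated data are hypothetical. One property it exposes: adding a constant to every \(Z_i\) leaves the score unchanged, so the column of \({\hat{I}}_{\gamma }\) belonging to an intercept in \(\mathbb {X}_i\) is zero.

```python
import numpy as np


def _risk_weights(theta, gamma, Xmat, V):
    """Covariates (Z_i, V_i) with Z_i = Xmat_i @ gamma, and exp(linear predictor)."""
    cov = np.column_stack([Xmat @ gamma, V])
    eta = cov @ theta
    return cov, np.exp(eta - eta.max())  # common rescaling cancels in risk-set ratios


def cox_score(theta, gamma, Xmat, V, time, event):
    """n^{-1} sum_i U_i(theta, gamma) with expectations replaced by risk-set averages."""
    cov, w = _risk_weights(theta, gamma, Xmat, V)
    total = np.zeros(cov.shape[1])
    for i in np.flatnonzero(event):
        r = time >= time[i]  # at-risk indicator Y_j(t_i)
        total += cov[i] - w[r] @ cov[r] / w[r].sum()
    return total / len(time)


def cox_info_theta(theta, gamma, Xmat, V, time, event):
    """I_theta-hat: n^{-1} times the sum over events of the risk-set weighted covariance."""
    cov, w = _risk_weights(theta, gamma, Xmat, V)
    p = cov.shape[1]
    info = np.zeros((p, p))
    for i in np.flatnonzero(event):
        r = time >= time[i]
        s0 = w[r].sum()
        mean = w[r] @ cov[r] / s0
        info += (cov[r] * w[r][:, None]).T @ cov[r] / s0 - np.outer(mean, mean)
    return info / len(time)


def minus_jacobian(f, x, h=1e-6):
    """-(df/dx) by central finite differences."""
    x = np.asarray(x, dtype=float)
    cols = []
    for k in range(x.size):
        step = np.zeros_like(x)
        step[k] = h
        cols.append((f(x + step) - f(x - step)) / (2 * h))
    return -np.column_stack(cols)


# Hypothetical cohort data: Xmat rows are (1, Q_i); V is a single covariate.
rng = np.random.default_rng(1)
n = 300
Xmat = np.column_stack([np.ones(n), rng.normal(size=n)])
V = rng.normal(size=(n, 1))
time = rng.exponential(size=n)
event = rng.random(n) < 0.7
theta = np.array([0.4, -0.3])  # (theta_z, theta_v)
gamma = np.array([0.2, 0.8])

I_theta = cox_info_theta(theta, gamma, Xmat, V, time, event)
I_gamma = minus_jacobian(lambda g: cox_score(theta, g, Xmat, V, time, event), gamma)
print(I_theta, I_gamma, sep="\n")
```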

Now we handle the estimation of \(\gamma\) in the following lemma.

Lemma 3

Assume \(n_2/n_1\rightarrow C_{1}<\infty\) and \(\gamma ^{*}\) is the unique solution to \(EU(\gamma ,\beta ^{*})=0\) for an estimating function \(U(\gamma ,\beta )=[(Q_i,{\varvec{V}}_i^\top )^\top {\hat{X}}_i-(Q_i,{\varvec{V}}_i^\top )^\top (Q_i,{\varvec{V}}_i^\top )\gamma ]\). If \({\hat{\gamma }}\) solves the estimating equation \(0=n_2^{-1}\sum _{i=1}^{n_2} U_i(\gamma ,{\hat{\beta }})\), where \(\sqrt{n_1}({\hat{\beta }}-\beta ^{*})\rightarrow N(0,\Sigma _{\beta })\), then \(\sqrt{n_2}({\hat{\gamma }}-\gamma ^{*})\rightarrow N(0, \Sigma _{\gamma })\), where

where \(I_{\gamma }=E\left( -\frac{\partial U(\gamma ,\beta )}{\partial \gamma }|_{\gamma ^{*},\beta ^{*}}\right) =E[(Q_i,{\varvec{V}}_i^\top )^\top (Q_i,{\varvec{V}}_i^\top )]\) and \(I_{\beta }=E\left( -\frac{\partial U(\gamma ,\beta )}{\partial \beta }|_{\gamma ^{*},\beta ^{*}}\right)\). This variance can be consistently estimated by

where \({\hat{I}}_{\gamma }=n_2^{-1}\sum _{i=1}^{n_2}\left( -\frac{\partial U_i(\gamma ,\beta )}{\partial \gamma }|_{{\hat{\gamma }},{\hat{\beta }}}\right)\), \({\hat{I}}_{\beta }=n_2^{-1}\sum _{i=1}^{n_2}\left( -\frac{\partial U_i(\gamma ,\beta )}{\partial \beta }|_{{\hat{\gamma }},{\hat{\beta }}}\right)\) and \({\hat{\Sigma }}_{\beta }\) is a consistent estimator of \(\Sigma _{\beta }\).

Proof

We derive the asymptotic distribution of \({\hat{\gamma }}\) as follows:

By the ULLN,

and by the continuous mapping theorem, we have

So we have

Similarly, we have

Plugging in the Taylor expansion and noting that \(EU(\gamma ^{*},\beta ^{*})=0\), we have

So we have

By the CLT, we have

So we have

As shown above, \({\hat{I}}_{\gamma }=I_{\gamma }+o_p(1)\) and \({\hat{I}}_{\beta }=I_{\beta }+o_p(1)\). Since \({\hat{\Sigma }}_{\beta }=\Sigma _{\beta }+o_p(1)\) by assumption and \(n_2/n_1\rightarrow C_{1}<\infty\), the continuous mapping theorem gives \({\hat{\Sigma }}_{\gamma }=\Sigma _{\gamma }+o_p(1)\). \(\square\)
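In Lemma 3 the calibration step is a least squares regression, so both information matrices are explicit. Writing \(D_i=(Q_i,{\varvec{V}}_i^\top )\) and assuming, as in Lemma 4, that \({\hat{X}}_i=M_i{\hat{\beta }}\) with \(M_i=(1,{\varvec{W}}_i^\top ,{\varvec{V}}_i^\top )\), differentiation gives \({\hat{I}}_{\gamma }=n_2^{-1}\sum _iD_i^\top D_i\) and \({\hat{I}}_{\beta }=-n_2^{-1}\sum _iD_i^\top M_i\). The sketch below further assumes that the missing variance display has the same sandwich form as Lemma 1, with an empirical middle term \({\hat{J}}_{\gamma }=n_2^{-1}\sum _iU_iU_i^\top\) and \(n_2/n_1\) in place of \(n_3/n_2\). The symbols \(D_i\), \(M_i\) and the function `calibration_step` are hypothetical names introduced here.

```python
import numpy as np


def calibration_step(D, M, beta_hat, Sigma_beta, n1):
    """Second-step calibration fit and plug-in variance (sketch of Lemma 3).

    D          : (n2, p) calibration design with rows D_i = (Q_i, V_i^T)
    M          : (n2, k) biomarker design with rows M_i = (1, W_i^T, V_i^T)
    beta_hat   : (k,) first-step coefficients from the feeding study of size n1
    Sigma_beta : (k, k) estimated variance of sqrt(n1) * (beta_hat - beta*)
    Returns gamma_hat and an estimate of the variance of sqrt(n2) * (gamma_hat - gamma*).
    """
    n2 = D.shape[0]
    xhat = M @ beta_hat                     # biomarker-based intake X-hat_i
    gamma_hat = np.linalg.lstsq(D, xhat, rcond=None)[0]
    resid = xhat - D @ gamma_hat
    I_gamma = D.T @ D / n2                  # average of -dU_i/dgamma
    I_beta = -D.T @ M / n2                  # average of -dU_i/dbeta
    U = D * resid[:, None]                  # rows are U_i(gamma_hat, beta_hat)
    J_gamma = U.T @ U / n2
    meat = J_gamma + (n2 / n1) * I_beta @ Sigma_beta @ I_beta.T
    bread = np.linalg.inv(I_gamma)
    return gamma_hat, bread @ meat @ bread.T


# Hypothetical calibration-substudy data, with an intercept column in D.
rng = np.random.default_rng(2)
n1, n2 = 150, 400
x = rng.normal(size=n2)
q = x + rng.normal(size=n2)
w = x + 0.5 * rng.normal(size=n2)
D = np.column_stack([np.ones(n2), q])
M = np.column_stack([np.ones(n2), w])
beta_hat = np.array([0.0, 0.8])
Sigma_beta = np.array([[1.0, 0.0], [0.0, 0.5]])
gamma_hat, Sigma_gamma = calibration_step(D, M, beta_hat, Sigma_beta, n1)
print(gamma_hat, Sigma_gamma, sep="\n")
```

Setting `Sigma_beta` to zero recovers the variance that treats \({\hat{\beta }}\) as known; the difference is the price of estimating the biomarker equation in a feeding study of size \(n_1\).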

Applying the general form to each specific regression method, we can obtain the asymptotic results we need.

Lemma 4

Assume \(n_2/n_1\rightarrow C_{1}<\infty\) and \(\gamma ^{*}_1\) is the unique solution to \(EU(\gamma _1,\beta ^{*}_1)=0\) for an estimating function \(U(\gamma _1,\beta _1)\). If \({\hat{\gamma }}_1\) solves the estimating equation \(0=n_2^{-1}\sum _{i=1}^{n_2} U_i(\gamma _1,{\hat{\beta }}_1)\), where \(\sqrt{n_1}({\hat{\beta }}_1-\beta ^{*}_1)\rightarrow N(0,\Sigma _{\beta _1})\), then \(\sqrt{n_2}({\hat{\gamma }}_1-\gamma ^{*}_1)\rightarrow N(0, \Sigma _{\gamma _1})\), where

where \(I_{\gamma _1}=E\left( -\frac{\partial U(\gamma _1,\beta _1)}{\partial \gamma _1}|_{\gamma ^{*}_1,\beta ^{*}_1}\right)\) and \(I_{\beta _1}=E\left( -\frac{\partial U(\gamma _1,\beta _1)}{\partial \beta _1}|_{\gamma ^{*}_1,\beta ^{*}_1}\right)\). This variance can be consistently estimated by

where \({\hat{I}}_{\gamma _1}=n_2^{-1}\sum _{i=1}^{n_2}\left( -\frac{\partial U_i(\gamma _1,\beta _1)}{\partial \gamma _1}|_{\hat{\gamma _1},\hat{\beta _1}}\right)\), \({\hat{I}}_{\beta _1}=n_2^{-1}\sum _{i=1}^{n_2}\left( -\frac{\partial U_i(\gamma _1,\beta _1)}{\partial \beta _1}|_{\hat{\gamma _1},\hat{\beta _1}}\right)\) and \({\hat{\Sigma }}_{\beta _1}\) is a consistent estimator of \(\Sigma _{\beta _1}\).

Proof

We first derive the asymptotic distribution of \({\hat{\beta }}_1\) and then apply Lemma 3. The asymptotic distribution of \({\hat{\beta }}_1\) can be derived as follows:

where \(U_i(\beta _1^{*})=(1,{\varvec{W}}_i^\top ,{\varvec{V}}_i^\top )^\top X_i^{*}-(1,{\varvec{W}}_i^\top ,{\varvec{V}}_i^\top )^\top (1,{\varvec{W}}_i^\top ,{\varvec{V}}_i^\top )\beta _1^{*}.\) By the ULLN,

and by the continuous mapping theorem, we have

So we have

Plugging in the Taylor expansion and noting that \(EU(\beta ^{*}_1)=0\), we have

So we have

By the CLT, we have

So we have

By assumption, \({\hat{\Sigma }}_{\beta _1}=\Sigma _{\beta _1}+o_p(1)\), so \({\hat{\Sigma }}_{\beta _1}\) is a consistent estimator of \(\Sigma _{\beta _1}\). Applying Lemma 3, we then have

\(\square\)
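For Lemma 4 the first-step estimating function is the ordinary least squares score, so one standard choice of \({\hat{\Sigma }}_{\beta _1}\) is the heteroskedasticity-robust (HC0, "sandwich") estimator \({\hat{I}}^{-1}{\hat{J}}{\hat{I}}^{-1}\), with \({\hat{I}}=n_1^{-1}\sum _i\mathbb {X}_i\mathbb {X}_i^\top\), \({\hat{J}}=n_1^{-1}\sum _i\mathbb {X}_i\mathbb {X}_i^\top {\hat{e}}_i^2\) and \(\mathbb {X}_i=(1,{\varvec{W}}_i^\top ,{\varvec{V}}_i^\top )^\top\). This is offered as a sketch under that choice, not as the exact estimator used in the paper; its output can serve as the \({\hat{\Sigma }}_{\beta }\) input to Lemma 3. The function name and the simulated feeding-study data are hypothetical.

```python
import numpy as np


def ols_sandwich(design, response):
    """OLS fit with heteroskedasticity-robust (HC0) variance.

    Returns beta_hat and I^{-1} J I^{-1}, an estimate of the variance of
    sqrt(n) * (beta_hat - beta*), where I = n^{-1} sum x_i x_i^T and
    J = n^{-1} sum x_i x_i^T e_i^2 with OLS residuals e_i.
    """
    design = np.asarray(design, dtype=float)
    response = np.asarray(response, dtype=float)
    n = design.shape[0]
    beta_hat = np.linalg.lstsq(design, response, rcond=None)[0]
    resid = response - design @ beta_hat
    I = design.T @ design / n
    U = design * resid[:, None]  # rows are U_i(beta_hat)
    J = U.T @ U / n
    I_inv = np.linalg.inv(I)
    return beta_hat, I_inv @ J @ I_inv


# Hypothetical feeding-study data: consumed intake regressed on (1, W, V).
rng = np.random.default_rng(5)
n1 = 150
V = rng.normal(size=n1)
x_tilde = 0.3 * V + rng.normal(size=n1)
W = x_tilde + rng.normal(size=n1)
beta_1, Sigma_beta_1 = ols_sandwich(np.column_stack([np.ones(n1), W, V]), x_tilde)
print(beta_1, Sigma_beta_1, sep="\n")
```

For an intercept-only design the estimate reduces to the mean squared deviation of the response, for example 1.25 for the responses 1, 2, 3, 4.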

Lemma 5

Assume \(n_2/n_1\rightarrow C_{1}<\infty\) and \(\gamma ^{*}_2\) is the unique solution to \(EU(\gamma _2,\beta ^{*}_2)=0\) for an estimating function \(U(\gamma _2,\beta _2)\). If \({\hat{\gamma }}_2\) solves the estimating equation \(0=n_2^{-1}\sum _{i=1}^{n_2} U_i(\gamma _2,{\hat{\beta }}_2)\), where \(\sqrt{n_1}({\hat{\beta }}_2-\beta ^{*}_2)\rightarrow N(0,\Sigma _{\beta _2})\), then \(\sqrt{n_2}({\hat{\gamma }}_2-\gamma ^{*}_2)\rightarrow N(0, \Sigma _{\gamma _2})\), where

where \(I_{\gamma _2}=E\left( -\frac{\partial U(\gamma _2,\beta _2)}{\partial \gamma _2}|_{\gamma ^{*}_2,\beta ^{*}_2}\right)\) and \(I_{\beta _2}=E\left( -\frac{\partial U(\gamma _2,\beta _2)}{\partial \beta _2}|_{\gamma ^{*}_2,\beta ^{*}_2}\right)\). This variance can be consistently estimated by

where \({\hat{I}}_{\gamma _2}=n_2^{-1}\sum _{i=1}^{n_2}\left( -\frac{\partial U_i(\gamma _2,\beta _2)}{\partial \gamma _2}|_{\hat{\gamma _2},\hat{\beta _2}}\right)\), \({\hat{I}}_{\beta _2}=n_2^{-1}\sum _{i=1}^{n_2}\left( -\frac{\partial U_i(\gamma _2,\beta _2)}{\partial \beta _2}|_{\hat{\gamma _2},\hat{\beta _2}}\right)\) and \({\hat{\Sigma }}_{\beta _2}\) is a consistent estimator of \(\Sigma _{\beta _2}\).

Proof

Define \(\beta _2=\frac{\beta _1}{BF}\).

First, note that

where we let \(\Omega _{1i}=\left\{ {\tilde{X}}_i-(1,{\varvec{W}}_i^\top ,{\varvec{V}}_i^\top )\beta _1\right\} ^2\) and \(\Omega _{2i}=\left( {\tilde{X}}_i-(1,{\varvec{V}}_i^\top )\beta _t\right) ^2\).

Second, the estimating equations considered are

Third, the joint asymptotic normal distribution of \({\hat{\beta }}_1\), \({\hat{\beta }}_t\), \({\hat{\Omega }}_1\) and \({\hat{\Omega }}_2\) is

where J is the variance-covariance matrix of the four estimating equations above and I is the matrix of expected derivatives of each estimating equation with respect to \(\beta _1\), \(\beta _t\), \(\Omega _1\) and \(\Omega _2\), respectively. Specifically,

where \(\mathbb {X}_i=(1,{\varvec{W}}_i^\top ,{\varvec{V}}_i^\top )^\top\) and \(\mathbb {X}_{ti}=(1,{\varvec{V}}_i^\top )^\top\). Fourth, the joint asymptotic normal distribution of \({\hat{\beta }}_1\) and \({\widehat{BF}}\) can be derived using the delta method:

where C is the matrix of derivatives of \(\beta _1\) and BF with respect to \(\beta _1\), \(\beta _t\), \(\Omega _1\) and \(\Omega _2\), respectively. For example,

Fifth, the asymptotic distribution of \({\hat{\beta }}_2=\frac{{\hat{\beta }}_1}{{\widehat{BF}}}\) can be derived using the delta method:

where C’ is the matrix of derivatives of \(\beta _2\) with respect to \(\beta _1\) and BF, respectively. That is,

We then have the following estimating equation for \(\beta _2\):

By the ULLN,

and by the continuous mapping theorem, we have

So we have

Plugging in the Taylor expansion and noting that \(EU(\beta ^{*}_2)=0\), we have

So we have

By the CLT, we have

So we have

By assumption, \({\hat{\Sigma }}_{\beta _2}=\Sigma _{\beta _2}+o_p(1)\), so \({\hat{\Sigma }}_{\beta _2}\) is a consistent estimator of \(\Sigma _{\beta _2}\). Applying Lemma 3, we then have

\(\square\)
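The point estimates entering method 2's bias-factor step can be computed from two feeding-study regressions. The display that defines BF in terms of \(\Omega _1\) and \(\Omega _2\) is missing here, so the sketch below assumes that BF is the partial coefficient of determination \(1-\Omega _1/\Omega _2\), with \(\Omega _1\) and \(\Omega _2\) the mean squared residuals defined above (this agrees with the partial \(R^2_{{\tilde{X}}W|V}\) used in Appendix B, but it is an assumption). The function name and data are hypothetical, and the delta-method variance is not reproduced.

```python
import numpy as np


def bias_factor_adjust(x_tilde, W, V):
    """Method 2 biomarker coefficients under an ASSUMED BF = 1 - Omega_1 / Omega_2.

    Omega_1: mean squared residual of x_tilde on (1, W, V), coefficients beta_1
    Omega_2: mean squared residual of x_tilde on (1, V), coefficients beta_t
    Returns beta_1, BF-hat and beta_2 = beta_1 / BF-hat.
    """
    n = len(x_tilde)
    X_full = np.column_stack([np.ones(n), W, V])  # rows (1, W_i^T, V_i^T)
    X_t = np.column_stack([np.ones(n), V])        # rows (1, V_i^T)
    beta_1 = np.linalg.lstsq(X_full, x_tilde, rcond=None)[0]
    beta_t = np.linalg.lstsq(X_t, x_tilde, rcond=None)[0]
    omega_1 = np.mean((x_tilde - X_full @ beta_1) ** 2)
    omega_2 = np.mean((x_tilde - X_t @ beta_t) ** 2)
    bf = 1.0 - omega_1 / omega_2  # partial R^2 of x_tilde on W given V
    return beta_1, bf, beta_1 / bf


# Hypothetical data in which the partial R^2 of x_tilde on W given V is 0.5.
rng = np.random.default_rng(3)
n = 100_000
V = rng.normal(size=(n, 1))
e_x = rng.normal(size=n)
x_tilde = 0.5 * V[:, 0] + e_x
W = e_x + rng.normal(size=n)
beta_1, bf, beta_2 = bias_factor_adjust(x_tilde, W, V)
print(f"BF-hat = {bf:.3f}")
```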

Lemma 6

Assume \(n_2/n_1\rightarrow C_{1}<\infty\) and \(\gamma ^{*}_3\) is the unique solution to \(EU(\gamma _3,\beta ^{*}_3)=0\) for an estimating function \(U(\gamma _3,\beta _3)\). If \({\hat{\gamma }}_3\) solves the estimating equation \(0=n_2^{-1}\sum _{i=1}^{n_2} U_i(\gamma _3,{\hat{\beta }}_3)\), where \(\sqrt{n_1}({\hat{\beta }}_3-\beta ^{*}_3)\rightarrow N(0,\Sigma _{\beta _3})\), then \(\sqrt{n_2}({\hat{\gamma }}_3-\gamma ^{*}_3)\rightarrow N(0, \Sigma _{\gamma _3})\), where

where \(I_{\gamma _3}=E\left( -\frac{\partial U(\gamma _3,\beta _3)}{\partial \gamma _3}|_{\gamma ^{*}_3,\beta ^{*}_3}\right)\) and \(I_{\beta _3}=E\left( -\frac{\partial U(\gamma _3,\beta _3)}{\partial \beta _3}|_{\gamma ^{*}_3,\beta ^{*}_3}\right)\). This variance can be consistently estimated by

where \({\hat{I}}_{\gamma _3}=n_2^{-1}\sum _{i=1}^{n_2}\left( -\frac{\partial U_i(\gamma _3,\beta _3)}{\partial \gamma _3}|_{\hat{\gamma _3},\hat{\beta _3}}\right)\), \({\hat{I}}_{\beta _3}=n_2^{-1}\sum _{i=1}^{n_2}\left( -\frac{\partial U_i(\gamma _3,\beta _3)}{\partial \beta _3}|_{\hat{\gamma _3},\hat{\beta _3}}\right)\) and \({\hat{\Sigma }}_{\beta _3}\) is a consistent estimator of \(\Sigma _{\beta _3}\).

Proof

We derive the asymptotic distribution of \({\hat{\beta }}_3\) as follows:

where \(U_i(\beta _3^{*})=(1,{\varvec{W}}_i^\top ,{\varvec{V}}_i^\top ,{\varvec{Q}}_i)^\top {\tilde{X}}_i-(1,{\varvec{W}}_i^\top ,{\varvec{V}}_i^\top ,{\varvec{Q}}_i)^\top (1,{\varvec{W}}_i^\top ,{\varvec{V}}_i^\top ,{\varvec{Q}}_i)\beta _3^{*}.\) By the ULLN,

and by the continuous mapping theorem, we have

So we have

Plugging in the Taylor expansion and noting that \(EU(\beta ^{*}_3)=0\), we have

So we have

By the CLT, we have

So we have

By assumption, \({\hat{\Sigma }}_{\beta _3}=\Sigma _{\beta _3}+o_p(1)\), so \({\hat{\Sigma }}_{\beta _3}\) is a consistent estimator of \(\Sigma _{\beta _3}\). Applying Lemma 3, we then have

\(\square\)

Lemma 7

Assume \(\gamma ^{*}_4\) is the unique solution to \(EU(\gamma _4)=0\) for an estimating function \(U(\gamma _4)\). If \({\hat{\gamma }}_4\) solves the estimating equation \(0=n_1^{-1}\sum _{i=1}^{n_1} U_i(\gamma _4)\), then \(\sqrt{n_1}({\hat{\gamma }}_4-\gamma ^{*}_4)\rightarrow N(0, \Sigma _{\gamma _4})\), where

where \(I_{\gamma _4}=E\left( -\frac{\partial U(\gamma _4)}{\partial \gamma _4}|_{\gamma ^{*}_4}\right)\). This variance can be consistently estimated by

where \({\hat{I}}_{\gamma _4}=n_1^{-1}\sum _{i=1}^{n_1}\left( -\frac{\partial U_i(\gamma _4)}{\partial \gamma _4}|_{\hat{\gamma _4}}\right)\) and \({\hat{\Sigma }}_{\gamma _4}\) is a consistent estimator of \(\Sigma _{\gamma _4}\).

Proof

We derive the asymptotic distribution of \({\hat{\gamma }}_4\) for method 4 directly:

where \(U_i(\gamma _4^{*})=(1,{\varvec{V}}_i^\top ,{\varvec{Q}}_i)^\top {\tilde{X}}_i-(1,{\varvec{V}}_i^\top ,{\varvec{Q}}_i)^\top (1,{\varvec{V}}_i^\top ,{\varvec{Q}}_i)\gamma _4^{*}.\) By the ULLN,

and by the continuous mapping theorem, we have

So we have

Plugging in the Taylor expansion and noting that \(EU(\gamma ^{*}_4)=0\), we have

So we have

By the CLT, we have

So we have

By assumption, \({\hat{\Sigma }}_{\gamma _4}=\Sigma _{\gamma _4}+o_p(1)\), so \({\hat{\Sigma }}_{\gamma _4}\) is a consistent estimator of \(\Sigma _{\gamma _4}\). \(\square\)

Combining these steps, we obtain the final asymptotic results.

Theorem 2

Asymptotic distributions of the estimators from methods 1–4 under the Cox model.

With \(\frac{n_3}{n_2}\rightarrow C_2\) and \(\frac{n_2}{n_1}\rightarrow C_1\), we have \(\sqrt{n_3}({\hat{\theta }}_1-\theta _1^{*})\rightarrow N(0, \Sigma _{\theta _ 1})\) where \(\Sigma _{\theta _1}=I_{\theta _1}^{-1}(I_{\theta _1}+C_2I_{\gamma _1}\Sigma _{\gamma _1}I_{\gamma _1}^\top )I_{\theta _1}^{-\top }\) can be consistently estimated by \({\hat{\Sigma }}_{\theta _1}={\hat{I}}_{\theta _1}^{-1}({\hat{I}}_{\theta _ 1}+\frac{n_3}{n_2}{\hat{I}}_{\gamma _1}{\hat{\Sigma }}_{\gamma _1}{\hat{I}}_{\gamma _1}^\top ){\hat{I}}_{\theta _1}^{-\top }\) for method 1.

With \(\frac{n_3}{n_2}\rightarrow C_2\) and \(\frac{n_2}{n_1}\rightarrow C_1\), we have \(\sqrt{n_3}({\hat{\theta }}_2-\theta _2^{*})\rightarrow N(0, \Sigma _{\theta _ 2})\) where \(\Sigma _{\theta _2}=I_{\theta _2}^{-1}(I_{\theta _2}+C_2I_{\gamma _2}\Sigma _{\gamma _2}I_{\gamma _2}^\top )I_{\theta _2}^{-\top }\) can be consistently estimated by \({\hat{\Sigma }}_{\theta _2}={\hat{I}}_{\theta _2}^{-1}({\hat{I}}_{\theta _ 2}+\frac{n_3}{n_2}{\hat{I}}_{\gamma _2}{\hat{\Sigma }}_{\gamma _2}{\hat{I}}_{\gamma _2}^\top ){\hat{I}}_{\theta _2}^{-\top }\) for method 2.

With \(\frac{n_3}{n_2}\rightarrow C_2\) and \(\frac{n_2}{n_1}\rightarrow C_1\), we have \(\sqrt{n_3}({\hat{\theta }}_3-\theta _3^{*})\rightarrow N(0, \Sigma _{\theta _ 3})\) where \(\Sigma _{\theta _3}=I_{\theta _3}^{-1}(I_{\theta _3}+C_2I_{\gamma _3}\Sigma _{\gamma _3}I_{\gamma _3}^\top )I_{\theta _3}^{-\top }\) can be consistently estimated by \({\hat{\Sigma }}_{\theta _3}={\hat{I}}_{\theta _3}^{-1}({\hat{I}}_{\theta _ 3}+\frac{n_3}{n_2}{\hat{I}}_{\gamma _3}{\hat{\Sigma }}_{\gamma _3}{\hat{I}}_{\gamma _3}^\top ){\hat{I}}_{\theta _3}^{-\top }\) for method 3.

With \(\frac{n_3}{n_2}\rightarrow C_2\) and \(\frac{n_2}{n_1}\rightarrow C_1\), we have \(\sqrt{n_3}({\hat{\theta }}_4-\theta _4^{*})\rightarrow N(0, \Sigma _{\theta _ 4})\) where \(\Sigma _{\theta _4}=I_{\theta _4}^{-1}(I_{\theta _4}+C_2I_{\gamma _4}\Sigma _{\gamma _4}I_{\gamma _4}^\top )I_{\theta _4}^{-\top }\) can be consistently estimated by \({\hat{\Sigma }}_{\theta _4}={\hat{I}}_{\theta _4}^{-1}({\hat{I}}_{\theta _ 4}+\frac{n_3}{n_2}{\hat{I}}_{\gamma _4}{\hat{\Sigma }}_{\gamma _4}{\hat{I}}_{\gamma _4}^\top ){\hat{I}}_{\theta _4}^{-\top }\) for method 4.

Proof

Applying Lemmas 2 and 4 gives the asymptotic variance \({\Sigma }_{\theta _1}\), which is consistently estimated by \({\hat{\Sigma }}_{\theta _1}={\hat{I}}_{\theta _1}^{-1}({\hat{I}}_{\theta _ 1}+\frac{n_3}{n_2}{\hat{I}}_{\gamma _1}{\hat{\Sigma }}_{\gamma _1}{\hat{I}}_{\gamma _1}^\top ){\hat{I}}_{\theta _1}^{-\top }\).

Applying Lemmas 2 and 5 gives the asymptotic variance \({\Sigma }_{\theta _2}\), which is consistently estimated by \({\hat{\Sigma }}_{\theta _2}={\hat{I}}_{\theta _2}^{-1}({\hat{I}}_{\theta _ 2}+\frac{n_3}{n_2}{\hat{I}}_{\gamma _2}{\hat{\Sigma }}_{\gamma _2}{\hat{I}}_{\gamma _2}^\top ){\hat{I}}_{\theta _2}^{-\top }\).

Applying Lemmas 2 and 6 gives the asymptotic variance \({\Sigma }_{\theta _3}\), which is consistently estimated by \({\hat{\Sigma }}_{\theta _3}={\hat{I}}_{\theta _3}^{-1}({\hat{I}}_{\theta _ 3}+\frac{n_3}{n_2}{\hat{I}}_{\gamma _3}{\hat{\Sigma }}_{\gamma _3}{\hat{I}}_{\gamma _3}^\top ){\hat{I}}_{\theta _3}^{-\top }\).

Applying Lemmas 2 and 7 gives the asymptotic variance \({\Sigma }_{\theta _4}\), which is consistently estimated by \({\hat{\Sigma }}_{\theta _4}={\hat{I}}_{\theta _4}^{-1}({\hat{I}}_{\theta _ 4}+\frac{n_3}{n_2}{\hat{I}}_{\gamma _4}{\hat{\Sigma }}_{\gamma _4}{\hat{I}}_{\gamma _4}^\top ){\hat{I}}_{\theta _4}^{-\top }\). \(\square\)

A.3: Efficiency Comparison

Here we heuristically compare the efficiency of methods 3 and 4 through the average variability in the estimation of Z. Without loss of generality, we assume that all variables are centered, and we compare only the efficiency in estimating \({\widehat{Z}}\), since the variance of \({\widehat{\theta }}\) is a monotone function of the variance of \({\widehat{Z}}\). We compare the expected variances under a fixed design. For method 4,

and for method 3,

Appendix B: Details of Simulation Settings and Additional Results

B.1: Details of Simulation Settings

For each setting, we evaluate the strength of the biomarker and of the self-reported data, as well as the observed prediction strength at each stage. Specifically, we consider the following coefficients and partial coefficients of multiple determination:

for long-term dietary intake explained by the biomarker, \(R_{ZWV}^2=1-\frac{Var(Z|W,V)}{Var(Z)}\) and \(R_{ZW|V}^2=1-\frac{Var(Z|W,V)}{Var(Z|V)}\);

for long-term dietary intake explained by the self-reported data, \(R_{ZQV}^2=1-\frac{Var(Z|Q,V)}{Var(Z)}\) and \(R_{ZQ|V}^2=1-\frac{Var(Z|Q,V)}{Var(Z|V)}\);

for long-term dietary intake explained by the self-reported data and the biomarker, \(R_{ZQWV}^2=1-\frac{Var(Z|W,Q,V)}{Var(Z)}\) and \(R_{ZQW|V}^2=1-\frac{Var(Z|W,Q,V)}{Var(Z|V)}\);

for consumed dietary intake explained by the biomarker, \(R_{{{\tilde{X}}}WV}^2=1-\frac{Var({{\tilde{X}}}|W,V)}{Var({{\tilde{X}}})}\) and \(R_{{{\tilde{X}}}W|V}^2=1-\frac{Var({{\tilde{X}}}|W,V)}{Var({{\tilde{X}}}|V)}\);

for consumed dietary intake explained by the biomarker and the self-reported data, \(R_{{{\tilde{X}}}WQV}^2=1-\frac{Var({{\tilde{X}}}|W,Q,V)}{Var({{\tilde{X}}})}\) and \(R_{{{\tilde{X}}}WQ|V}^2=1-\frac{Var({{\tilde{X}}}|W,Q,V)}{Var({{\tilde{X}}}|V)}\);

for dietary intake estimated with method 2 explained by the self-reported data, \(R_{{\hat{X}}_2QV}^2=1-\frac{Var({\hat{X}}_2|Q,V)}{Var({\hat{X}}_2)}\) and \(R_{{\hat{X}}_2Q|V}^2=1-\frac{Var({\hat{X}}_2|Q,V)}{Var({\hat{X}}_2|V)}\).
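Under linear conditional means with homoscedastic errors, each conditional variance above can be estimated by the residual variance of an ordinary least squares fit, which gives a simple plug-in for every coefficient and partial coefficient of multiple determination. The sketch below assumes that linear setting; the helper names and the simulated data are hypothetical and are not the simulation design of this appendix.

```python
import numpy as np


def resid_var(y, *predictors):
    """Residual variance of y after OLS on an intercept plus the given predictors."""
    design = np.column_stack([np.ones(len(y)), *predictors])
    coef = np.linalg.lstsq(design, y, rcond=None)[0]
    return np.var(y - design @ coef)


def r2_pair(y, target, given=()):
    """Return (R^2, partial R^2) of y on `target`, adjusting for `given`:
    1 - Var(y|target,given)/Var(y) and 1 - Var(y|target,given)/Var(y|given)."""
    full = resid_var(y, *target, *given)
    return 1.0 - full / np.var(y), 1.0 - full / resid_var(y, *given)


# Hypothetical data: Var(Z) = 1.36, Var(Z|V) = 1, Var(Z|W,V) = 0.5.
rng = np.random.default_rng(4)
n = 200_000
V = rng.normal(size=n)
Z = 0.6 * V + rng.normal(size=n)
W = Z + rng.normal(size=n)
r2_ZWV, r2_ZW_given_V = r2_pair(Z, target=(W,), given=(V,))
print(f"R2_ZWV = {r2_ZWV:.3f}, R2_ZW|V = {r2_ZW_given_V:.3f}")
```

Here the population values are \(R^2_{ZWV}=1-0.5/1.36\approx 0.63\) and \(R^2_{ZW|V}=0.5\); the partial version is smaller because V alone already explains part of Z.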

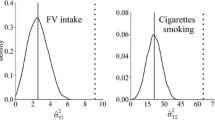

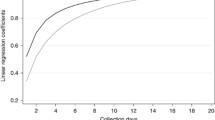

In settings 1, 2 and 3, we fixed the effect on Q by setting \(a_0=4\), \(a_1=1.5\) and \(\sigma _q=3\). In settings 4, 5 and 6, we set \(a_0=0.4\), \(a_1=2\) and \(\sigma _q=4\). In addition, we decreased the coefficient of X on W from 1.3 to 0.8 across the first three settings and from 1.1 to 0.5 across the last three settings. Table 5 displays all the \(R^2\) quantities defined above for the six settings. More specifically, with the strength of the self-reported data and the correlation between true dietary intake and personal characteristics held fixed, we gradually decreased the strength of the biomarker in the first three settings. In the last three settings, the correlation between true dietary intake and personal characteristics is set to 0, and the strength of the self-reported data is again fixed, but at a different level from the first three settings; we again gradually decreased the strength of the biomarker. The six settings and their varying parameters are listed below.

\(b_1=1.3\), \(\rho =0.6\), \(a_0=4\), \(a_1=1.5\), \(\sigma _q=3\) (Setting 1);

\(b_1=1.1\), \(\rho =0.6\), \(a_0=4\), \(a_1=1.5\), \(\sigma _q=3\) (Setting 2);

\(b_1=0.8\), \(\rho =0.6\), \(a_0=4\), \(a_1=1.5\), \(\sigma _q=3\) (Setting 3);

\(b_1=1.1\), \(\rho =0\), \(a_0=0.4\), \(a_1=2\), \(\sigma _q=4\) (Setting 4);

\(b_1=0.8\), \(\rho =0\), \(a_0=0.4\), \(a_1=2\), \(\sigma _q=4\) (Setting 5);

\(b_1=0.5\), \(\rho =0\), \(a_0=0.4\), \(a_1=2\), \(\sigma _q=4\) (Setting 6).

The values of the \(R^2\)s are listed in Table 5.

B.2: Additional Simulation Results

To better compare the robustness of these methods, we further conduct simulations under the setting where the relationship between the self-reported dietary intake and the short-term dietary intake differs before and after the controlled feeding study. Tables 6 and 7 summarize simulation results when the correlation structures between the FFQ (food frequency questionnaire) and the true dietary intakes differ between the controlled feeding study and the full cohort under settings 1 and 4. When these correlations differ, method 2 with the bias factor (BF) adjustment is more robust in controlling bias than methods 3 and 4. As the difference in the association between Q and Z increases from 10 to 50%, the bias of both methods 3 and 4 grows, while the estimator from method 2 controls bias consistently well in most cases. In addition, in most settings, method 2 has a smaller SD than methods 3 and 4. Method 2 performs adequately even when the partial \(R^2\)s (e.g., Setting 3: \(R^2_{{\tilde{X}}W|V}=0.21\); Setting 6: \(R^2_{{\tilde{X}}W|V}=0.16\)) from the biomarker construction step and the calibration equation building step are both low, which suggests that in real applications of method 2 one may not need to be too stringent about the partial \(R^2\) threshold.

Cite this article

Zheng, C., Zhang, Y., Huang, Y. et al. Using Controlled Feeding Study for Biomarker Development in Regression Calibration for Disease Association Estimation. Stat Biosci 15, 57–113 (2023). https://doi.org/10.1007/s12561-022-09349-3
