Abstract

Unsupervised feature learning refers to the problem of learning useful feature extraction functions from unlabeled data. Despite the great success of deep learning networks in this task in recent years, both for static and for sequential data, these systems still cannot, in general, compete with the high performance of our brain at learning to extract useful representations from its sensory input. We propose the Neocortex-Inspired Locally Recurrent Neural Network: a new neural network for unsupervised feature learning in sequential data that brings ideas from the structure and function of the neocortex to the well-established fields of machine learning and neural networks. By mimicking connection patterns in the feedforward circuits of the neocortex, our system tries to generalize some of the ideas behind the success of convolutional neural networks to types of data other than images. To evaluate the performance of our system at extracting useful features, we have trained different classifiers using those and other learnt features as input and we have compared the obtained accuracies. Our system has been shown to outperform other shallow feature learning systems in this task, both in terms of the accuracies achieved and in terms of how fast the classification task is learnt. The results obtained confirm our system as a state-of-the-art shallow feature learning system for sequential data, and suggest that extending it to or integrating it into deep architectures may lead to new successful networks that are competent at dealing with complex sequential tasks.

Introduction

We call feature extraction the process of extracting values of interest (features) from the input data with the intention of finding a new representation of the data that is easier to work with or to interpret. Feature extraction is commonly used, for instance, in machine learning applications, to find alternative representations of the input data from which it is easier to extract the desired information (e.g., in speech recognition, working with the frequency components instead of with the audio signal itself simplifies considerably the task). It is also often used to reduce the dimensionality of the input data, by removing the redundant information while keeping the relevant data (e.g., to compare videos in a large database, working directly at the pixel level may be overwhelming, and extracting the information from keypoints of interest can help make the problem manageable).

The feature extraction process can be either handcrafted or learned [1]. Handcrafted feature extraction systems (e.g., SIFT for images [2], MFCC for audio [3]) generally involve the work of domain experts who understand what features may be most relevant to best describe the input data, and are therefore application-specific, besides requiring expert knowledge for their design. Feature extraction learning systems (also known as feature learning or representation learning systems), on the other hand, automatically learn the feature extracting mechanism from the available data by finding patterns or correlations in the data. This way, they can be used in very different applications, and they don’t require the manual work of experts in the specific field. In addition, these learning systems often lead to features that give better results than those coming from handcrafted systems, since they are able to automatically find more complex or barely perceptible correlations in the data. Therefore, the use of these learning systems has become in recent years the main method to extract features in many fields and applications.

Feature learning systems are often classified into traditional systems (e.g., PCA [4], LDA [5]) and deep learning systems (e.g., autoencoders [6], deep belief networks [7]) [8]. Deep learning systems, while more demanding in terms of computational cost and of the amount of data required, have been shown to be able to find and extract more complex and useful features, which has resulted in an increase in their popularity in recent years. These systems appear to find representations of the input at different levels of abstraction, with the later layers learning more abstract and complex features.

Feature learning systems can also be classified in terms of whether the learning is performed in a supervised way (e.g., LDA, supervised neural networks [9]) or in an unsupervised way (e.g., PCA, autoencoders) [8]. Supervised feature learning systems count on the information about the desired output for each input sample, and therefore, can learn features that are better adapted to the specific task (e.g., triplet networks [10]). However, it is required that each training sample is labeled with its corresponding desired output. Unsupervised feature learning systems, on the other hand, learn feature representations using only the input data. These systems are very useful in tasks where there is a large amount of data available that has not been labeled, something that is very common in real-life applications (as the process of labeling the data usually requires considerable manual work). Since the learnt features can be considered general-purpose, they are also useful to be reused in different applications without the need of learning new ones (in fact, the features learnt through supervised learning can also often be considered general-purpose [9]).

Much of the data and many of the tasks that we deal with in our daily life have a temporal nature (e.g., speech recognition [11], action recognition [12]), and therefore, a large amount of work in feature learning has also been carried out for temporal (or sequential) data [1]. These systems typically rely either on taking as input all the samples in a time window around the current sample (and not just the current sample), or on having recurrent connections (e.g., through the use of LSTM or GRU modules [13]) or attention mechanisms (e.g., through the use of transformers [14]). The type of temporal data used by these systems generally takes the form of time series, with the samples taken at regular intervals in time (note that other forms of sequential data also exist, such as text or event data, which are processed in very different ways [15]).

While these sequential systems (e.g., LSTMs, GRUs) have shown very good results in the last years for different time-related problems, they are still far from the performance of the human brain in most daily-life tasks. Indeed, these systems present certain limitations in real-life scenarios when compared to the human brain, which is very good at adapting to, interpreting and reasoning over situations that it has never seen before, being able to apply its knowledge in more general ways [16]. This way, our brain can be considered the best-known general-purpose system at dealing with temporal data. However, no structures similar to LSTMs or GRUs have been found in our brain, and, therefore, the brain appears to deal with temporal information in a different way. Thus, it seems reasonable to search for new ways of adapting neural networks to temporal data by drawing inspiration from the functioning and structure of the brain. This may lead to the development of new architectures and systems that are able to deal better with temporal data, and that are closer to the high performance of the human brain.

In this study, we propose a system that tries to take a step in this direction, by bringing structures observed in the neocortex into the well-established artificial neural networks. This way, we propose the Neocortex-Inspired Locally Recurrent Neural Network (NILRNN), a recurrent neural network for unsupervised feature learning in sequential data that draws inspiration from connection patterns observed in the neocortex. This network relies on a novel 2D layer (the locally recurrent layer) with a pattern of connectivity able to develop semantic order along its neurons. This way, nearby neurons are expected to represent similar concepts and can be pooled together, achieving a form of low-level semantic pooling that can be seen as a generalization of the spatial pooling of convolutional neural networks (CNNs). The system proposed is actually a shallow network, and is not intended to compete in performance with more complex deep recurrent networks. Rather, it has been designed to explore a different direction on how to deal with temporal data, focusing on the problem of the unsupervised learning of features. It has also been designed with the idea in mind of being easily extendable to (or integrated into) deep architectures. We believe that the promising results obtained in this study already place NILRNN as a candidate for the task of feature extraction in simple temporal applications. In addition, we expect that its extension to deep systems will bring new successful results.

This article is organized as follows: “Background’’ introduces concepts about the human brain and machine learning systems and mechanisms upon which NILRNN has been designed. “Methods’’ describes the NILRNN architecture. “Results’’ presents a comparison of the results obtained for NILRNN and other similar systems and for different datasets. “Discussion’’ discusses NILRNN and the results obtained and proposes some possible future directions. Finally, “Conclusion’’ summarizes the results obtained and their implications and concludes the article.

Background

This section introduces some of the main concepts and mechanisms upon which NILRNN has been developed, including notions about the structure and function of our neocortex and about machine learning systems and techniques.

The Neocortex

The neocortex is a thin layered structure surrounding the brain that is essential for sensory perception, rational thought, voluntary motor control, language, and other high-level cognitive functions [17]. This region of the brain is divided into different areas, which perform different functions, and are organized in hierarchical structures, with areas higher in the hierarchies encoding more complex or abstract concepts [18]. For example, the ventral stream of the visual cortex, which is believed to be involved in object recognition, is often described as a hierarchy of neocortex areas, with areas lower in the hierarchy getting less processed visual input and encoding simple features such as edges in the image, and areas higher in the hierarchy using the features extracted by lower areas to encode more complex features, such as those describing whole objects [19] (in fact, within these hierarchies, besides those feedforward connections, feedback and horizontal connections also exist [20]). Still, the neocortex seems to be quite uniform along its areas in terms of structure and operation, with most of its areas organized in a relatively uniform six-layered structure [21]. This suggests a common underlying algorithm governing the different areas and functions [22]. On the other hand, similar to other regions of the brain, the strength of the connections between neocortical neurons evolves depending, among other factors, on the activation correlations between those neurons. These learning processes are often modeled through Hebbian rules, which state that connections between neurons with correlated activations get strengthened [23]. Homeostatic plasticity mechanisms also exist that maintain stability in the network while permitting changes to occur, e.g., regulating neuron activity so that neurons don’t spend too much time active or inactive [24].

Probably, the most-studied and best-known areas of the neocortex are those belonging to the ventral stream of the visual cortex, and, in particular, the primary visual cortex, which is the first area of the neocortex that is reached by the visual information coming from the eyes (i.e., the lowest area of the hierarchy). Within these areas, the most-studied connections, and the ones that are usually considered when designing models of this hierarchy, are the feedforward connections, which are responsible for the flow of visual information from the eyes to the higher levels of the hierarchy (backward and horizontal connections seem to be more related to performing top-down predictions [25] or to work as top-down attention mechanisms [26], but their function is less well understood). In general, feedforward connections mainly originate in layers 2 and 3 (L2/3) of the neocortex areas (or in the thalamus) and reach layer 4 (L4) of the next area in the hierarchy (which can be considered as the starting point of the feedforward information processing in the area). Then, the information propagates within the area through the cortical columns (transversally to the layers) to L2/3, to then go through the feedforward connections to the next area of the hierarchy [21, 27]. This way, models of the ventral stream that focus on the feedforward connections often model only L4 and L2/3, not considering the other layers of the neocortex.

Neurons in L4 and L2/3 of the primary visual cortex are sensitive to small regions of the input stimuli known as receptive fields, and are often classified as simple or complex cells: Simple cells tend to respond to edges in their receptive field with a specific orientation and position, and are mainly found in L4, while complex cells tend to respond to edges with a specific orientation, but are more position-invariant (i.e., small shifts in the image have little effect on their activity), and are mainly found in L2/3 [28]. To study this behavior, sine gratings such as those shown in Fig. 1 are typically used as visual stimuli, for which simple cells tend to fire in response to a particular orientation and phase, while complex cells fire in response to a particular orientation but are more phase-invariant. The fact that these neurons respond so selectively to certain patterns in the input seems to indicate that the neocortex represents information through a sparse coding scheme, with most activity at a given time occurring only in a small proportion of the neurons, something that is consistent with physiological evidence [29]. Neurons in L4 and L2/3, besides being connected to neurons in other layers of the same or different areas, are also connected to neurons in the same layer through short-range excitatory and long-range inhibitory lateral connections [30], with these connections being more numerous and relevant in L2/3 [31]. In addition, they are distributed in a way that neurons with similar receptive fields and orientation preferences (or other properties, e.g., ocular preference) are located close to each other, forming smooth ordered maps as shown in Fig. 2a [32], while no such organization seems to exist when it comes to phase preferences in L4 [33].

Many models have been designed with the purpose of describing the emergence of these properties in the primary visual cortex. The behavior of simple cells, which detect edge patterns from the input image, is usually achieved through Hebbian-like learning techniques. Sparse activities, on the other hand, can be obtained by implementing lateral inhibitory connections and homeostatic plasticity mechanisms. Regarding complex cells, their behavior is typically achieved by selectively pooling simple cells with similar receptive fields and orientations, but with shifts in the position of the edge, leading to a higher phase tolerance, as shown in Fig. 2b [34]. If L4 contains simple cells with similar orientations but different phases located together (as has been observed in the primary visual cortex), the behavior of complex cells can emerge by just pooling indiscriminately a region of L4. However, achieving this order in a biologically plausible way has been more challenging. Many models achieve the orientation maps by defining strong short-range excitatory lateral interactions in L4. However, this generally leads to neurons next to each other firing simultaneously, and therefore, having similar phases. This makes the emergence of complex cell behavior more problematic, as these cells would have to selectively pool simple cells with similar orientations but different phases, which would be located in separate positions. Antolik et al. [35] proposed a biologically plausible way of achieving this behavior by defining strong lateral interaction in L2/3, as well as a feedback connection back to L4. This brings a time delay in the coupling between nearby neurons in L4. This way, nearby neurons are no longer pushed to fire simultaneously and to learn the same input patterns. Instead, they are pushed to fire close in time, learning patterns that appear in the input with a certain delay. 
Input stimuli to our visual system generally shift smoothly in time, which implies that, for small regions and time windows, the orientation of the input edge patterns stays constant, and only the phase changes. This pushes nearby neurons in L4 to learn patterns with similar orientations but different phases, achieving a behavior analogous to that observed in the primary visual cortex. In fact, this interpretation of the emergence of orientation maps and complex cells in terms of time delays instead of in terms of image patterns may be also applicable to other areas of the neocortex (as we said before, the different areas of the neocortex appear to have a similar structure and operation, which makes some of the properties described for the primary visual cortex also valid to other areas). This would mean that neurons in L2/3 of other areas of the neocortex would be quite invariant to features in their input that change in short timescales, being mainly sensitive to slower-varying features (such as edges of a specific orientation for the case of the primary visual cortex).

Brain-Inspired Machine Learning Systems and Mechanisms

This section introduces other brain-inspired systems and mechanisms that have also served as inspiration to develop our system, or that may be useful to better understand its design.

Probably, the best-known and most popular brain-inspired machine learning systems are artificial neural networks. These networks are formed of artificial neurons that are organized in layers and stacked forming hierarchies, with the lowest-level layer processing the input to the system and representing it in terms of simple features, and the higher layers extracting more complex and abstract features [36]. This is similar to what has been observed in our brain, and in the neocortex in particular, as described in “The Neocortex’’. CNNs are a good example of brain-inspired neural networks, as they mimic to some extent the feedforward connections in the ventral stream of the visual cortex. Especially in the earlier convolutional layers of CNNs, neurons tend to behave in an analogous way to simple cells in L4 of the primary visual cortex, learning specific patterns from small regions of their input (e.g., edges with a particular orientation and phase). Neurons in the early max pooling layers, for their part, tend to behave in a similar way to complex cells in L2/3, pooling neurons that detect shifted versions of the same input pattern [37]. A common interpretation of why spatial pooling in CNNs works comes from assuming that slightly shifted versions of an edge in a region of an image contribute essentially the same information to its overall meaning, and therefore, those neurons detecting shifted edges can be grouped together, losing little relevant information and simplifying the representation.
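The analogy between convolution plus max pooling and simple plus complex cells can be illustrated with a one-dimensional toy example. The sketch below is only illustrative: the edge kernel, the two input signals and the pooling size are assumptions chosen for clarity, not values taken from any system discussed in this article.

```python
def correlate_valid(signal, kernel):
    """Slide the kernel over the signal (no padding) and return the responses."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def max_pool(values, size):
    """Non-overlapping max pooling; a trailing incomplete window is dropped."""
    return [max(values[i:i + size])
            for i in range(0, len(values) - size + 1, size)]


# Hypothetical edge detector: responds to a dark-to-bright step.
edge_kernel = [-1.0, 1.0]

# The same step edge at two slightly different positions.
edge_at_1 = [0, 0, 1, 1, 1, 1, 1, 1, 1]
edge_at_2 = [0, 0, 0, 1, 1, 1, 1, 1, 1]

# "Simple cell" responses: sensitive to the exact position of the edge.
simple_a = correlate_valid(edge_at_1, edge_kernel)
simple_b = correlate_valid(edge_at_2, edge_kernel)

# "Complex cell" responses: pooling makes the small shift invisible.
complex_a = max_pool(simple_a, 4)
complex_b = max_pool(simple_b, 4)

print(simple_a != simple_b)    # the convolutional responses differ
print(complex_a == complex_b)  # the pooled responses coincide
```

The pooled representation is shorter than the convolutional one and is unchanged by the one-position shift, which is the sense in which pooling loses little relevant information while simplifying the representation.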

This interpretation is related to the concept of slowness: The slowness principle, inspired by behavior observed in the neocortex, states that our sensory input varies on a faster timescale than the environment itself, from which that sensory input arises (e.g., pixels vs. objects in a video) [38]. This way, by learning feature extracting functions that vary slowly in time, we may get useful representations of the causes behind the input, i.e., of the environment. For example, Slow Feature Analysis (SFA) [38] is an algorithm based on this principle that learns functions whose output varies slowly in time while depending only on the input at each instant, thereby excluding functions such as low-pass filters. This algorithm has been able to achieve similar behaviors to those observed in the brain, such as those of complex cells when having natural image sequences as input, detecting edges with specific orientations independently of their position [39]. This idea has also been applied hierarchically, leading to different timescales at different levels of complexity or abstraction (e.g., MTRNN [40], applied for the learning of complex actions formed of concatenated simpler movements).
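The pixels-versus-objects example can be made concrete with a small numerical sketch. It assumes a toy one-dimensional "video" in which an object drifts across eight pixels and then disappears, and it measures slowness as the mean squared temporal difference of a signal normalized to zero mean and unit variance. The toy data and the function name `slowness` are illustrative assumptions.

```python
import math


def slowness(signal):
    """Mean squared temporal difference of the signal after normalizing it
    to zero mean and unit variance (lower values mean slower variation)."""
    n = len(signal)
    mean = sum(signal) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in signal) / n)
    z = [(v - mean) / std for v in signal]
    return sum((z[t + 1] - z[t]) ** 2 for t in range(n - 1)) / (n - 1)


# Toy video (assumption): for the first 16 frames a bright object moves
# one pixel per frame across an 8-pixel line, wrapping around; for the
# last 16 frames the scene is empty.
frames = [[1.0 if (t < 16 and t % 8 == p) else 0.0 for p in range(8)]
          for t in range(32)]

pixel_3 = [frame[3] for frame in frames]            # raw sensory value
object_present = [max(frame) for frame in frames]   # property of the scene

print(slowness(pixel_3) > slowness(object_present))
```

The single pixel switches on and off every time the object passes over it, whereas the "object present" feature changes only once, so the latter has the lower (slower) value.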

Sparse coding, already mentioned in “The Neocortex’’, is another brain-inspired mechanism that consists of building representations with only a small percentage of active neurons for each given input. This form of coding is often very appropriate to represent the observations of the real world, as these observations can usually be described in terms of the presence, at each instant, of a small number of features out of a considerably larger number of possible features (e.g., edges of specific orientations, the presence of certain objects). In addition, sparse coding has shown several advantages when applied to artificial systems. For instance, its inherent redundancy seems to contribute to robustness and fault-tolerance [41], as well as to dealing with partial information [42], and its highly separated representations are also very appropriate for applications requiring incremental or few-shot learning, avoiding catastrophic forgetting [43].

Self-organization, on the other hand, is a behavior that not only appears in the brain (e.g., with neurons in the primary visual cortex responding to similar orientations appearing close to each other, see Fig. 2a), but also in nature in many different forms. It consists of the spontaneous emergence of some form of global order in a system due to the local interactions among its components, without the need of intervention of an external agent. Since self-organization mechanisms in artificial systems can take many different forms, their advantages are also diverse. Still, a typical common advantage is that it contributes to both the adaptability and robustness of the system [44]. It also shows advantages for incremental and few-shot learning applications, as well as in terms of the interpretability of the system.

Finally, Hebbian learning is a model of how neurons in the brain learn. It is an unsupervised and online learning model that consists of strengthening the connections between neurons with a correlated activity (and usually also weakening the connections with no correlated activity) [23]. Hebbian learning, while popular among models of brain regions, has some limitations that make it not the preferred option in machine learning solutions: it is unstable by nature, and it requires supplementary processes or structures (e.g., homeostatic mechanisms, lateral inhibitory interactions, feedback circuits) to solve several of the typical learning problems, making the system design process more complex [45].
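The instability mentioned above can be seen in a minimal sketch of the plain Hebbian rule for a single linear neuron, where each weight grows in proportion to the product of input and output activity. The learning rate, the initial weights and the input patterns below are arbitrary assumptions made for illustration.

```python
import math


def hebbian_step(weights, x, lr):
    """Plain Hebbian update for one linear neuron: dw_i = lr * y * x_i."""
    y = sum(w * xi for w, xi in zip(weights, x))
    return [w + lr * y * xi for w, xi in zip(weights, x)]


def norm(v):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(c * c for c in v))


weights = [0.1, 0.1]
# Two strongly correlated input components, presented repeatedly.
inputs = [[1.0, 0.9], [0.9, 1.0]] * 50

norms = [norm(weights)]
for x in inputs:
    weights = hebbian_step(weights, x, lr=0.1)
    norms.append(norm(weights))

# Without any normalizing or homeostatic mechanism the weights keep growing.
print(norms[0], norms[-1])
```

Because correlated activity can only strengthen the connections, nothing in the rule itself bounds the weights, which is why supplementary mechanisms are needed in practice.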

Autoencoders

When it comes to unsupervised machine learning systems, autoencoders [36] are in most cases preferred to Hebbian learning, as their behavior is better explored and understood, relying on the better-established backpropagation learning technique. This way, these algorithms make it more straightforward to design systems with a certain desired behavior and performance.

An autoencoder [36] is a type of neural network that uses the backpropagation learning method (supervised) to learn new representations of the input data in an unsupervised way (i.e., it is a self-supervised feature learning system). This is done by setting as desired output a copy of the input data. This way, the network learns alternative representations of the input data in its hidden layer(s), keeping the main information of the input data to then be able to reconstruct it. To make the autoencoder learn new representations and extract useful features from the data, and prevent it from just learning the trivial solution (i.e., from just keeping a copy of the input in its hidden layer(s)), autoencoders include constraints in their hidden layer(s), such as having a smaller number of neurons in the hidden layer(s) than in the input/output layer. An autoencoder with such constraint is called undercomplete (see Fig. 3). Other popular types of autoencoders are sparse autoencoders, stacked autoencoders, denoising autoencoders and variational autoencoders [36].

Sparse autoencoders have the particularity that they enforce a sparse activity in their hidden layer(s), i.e., they implement a mechanism so that, for each possible input, only a small percentage of the neurons in their hidden layer(s) are active (or most of them have activities close to 0). This is typically done by adding a term to the cost function that penalizes activities in the hidden layer(s) that are different from those just described [36]. For example, it is common to approximate the activity in each neuron of the hidden layer(s) by a Bernoulli random variable and define a desired Bernoulli distribution for all those neurons. The cost term would then be given by the sum for all neurons of the Kullback-Leibler divergences (\(D_{KL}\)) between those two variables:

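\[J_{sparse} = \sum_{i=1}^{s_{hidden}} D_{KL}\left(\rho \,\Vert\, \hat{\rho }_i\right) = \sum_{i=1}^{s_{hidden}} \left[ \rho \log \frac{\rho }{\hat{\rho }_i} + (1-\rho ) \log \frac{1-\rho }{1-\hat{\rho }_i} \right]\]

where \(J_{sparse}\) is the sparsity cost term, \(s_{hidden}\) is the size (number of neurons) of the hidden layer(s), \(\rho\) is the desired sparsity (or desired average activity for each neuron), and \(\hat{\rho }_i\) is the estimated average activity of neuron i.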

This method allows the system to learn useful sparse representations of the input data (e.g., edge patterns at different positions and orientations when taking images as input [46]) without the need of other more complex techniques (as would be required, e.g., when using Hebbian learning).
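As a minimal sketch of how such a sparsity term could be computed, the function below estimates the average activity of each hidden neuron over a batch and sums the Kullback-Leibler divergences between Bernoulli variables with means \(\rho\) and \(\hat{\rho }_i\). The function names and the example batch are assumptions for illustration, and activities are assumed to lie strictly between 0 and 1 (as with sigmoid units).

```python
import math


def kl_bernoulli(rho, rho_hat):
    """KL divergence between Bernoulli(rho) and Bernoulli(rho_hat)."""
    return (rho * math.log(rho / rho_hat)
            + (1 - rho) * math.log((1 - rho) / (1 - rho_hat)))


def sparsity_cost(hidden_activations, rho):
    """Sum over hidden neurons of KL(rho || rho_hat_i).

    hidden_activations: list of samples, each a list with one activity
    per hidden neuron.
    """
    n_samples = len(hidden_activations)
    n_hidden = len(hidden_activations[0])
    rho_hat = [sum(sample[i] for sample in hidden_activations) / n_samples
               for i in range(n_hidden)]
    return sum(kl_bernoulli(rho, r) for r in rho_hat)


# Hypothetical batch of two samples and two hidden neurons: the first
# neuron has the desired average activity, the second is far too active.
batch = [[0.1, 0.9],
         [0.4, 0.7]]
print(sparsity_cost(batch, rho=0.25))
```

The term is zero only when every neuron's average activity equals the target, and it grows as the average activities move away from it, so only the second neuron contributes to the cost in this example.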

Methods

In this section, we describe the proposed NILRNN, which is a recurrent neural network for feature extraction of sequential data that draws inspiration from models of layers L4 and L2/3 of the primary visual cortex. Further, NILRNN relies on brain-related concepts such as sparseness, slowness or self-organization, which, as we saw in “Brain-Inspired Machine Learning Systems and Mechanisms’’, often bring computational benefits. On the other hand, to mitigate the issues related to the Hebbian learning methods often used in models of the visual cortex, NILRNN relies on a self-supervised learning method similar to the one used with autoencoders. We will first present the architecture of the feature extraction system, to then describe the system used for the unsupervised learning of the weights.

The Feature Extraction System

The NILRNN feature extraction system is to a large extent inspired by layers L4 and L2/3 of the neocortex, as well as by the convolutional and max pooling layers of CNNs. As we saw in “Brain-Inspired Machine Learning Systems and Mechanisms’’, a possible interpretation of why CNNs and their spatial pooling mechanism are so effective is that, in an image, slightly shifted versions of a pattern in a region of the image contribute very similar meanings, and can therefore be grouped together. This idea, however, while inspired by models of the primary visual cortex, does not seem to describe anything occurring in other regions of the neocortex, nor does it seem to be applicable to most domains other than vision (as shifting the elements of a generic sensory input or feature vector will in general completely change its meaning). As we mentioned in “The Neocortex’’, Antolik et al. [35] proposed a model of the primary visual cortex relying on a more general principle that may actually be also applicable to other regions of the neocortex: In this model, what is grouped together is input patterns tending to occur close in time (e.g., shifted edges). Following a similar reasoning to that of CNNs, we could argue that such a strategy works because input patterns that tend to occur close in time have very similar meanings, something that seems true for most domains dealing with sequential data. This way, a neural network that relies on this principle could be seen as a generalization of CNNs for sequential data other than images, making use of a form of (sub-symbolic) semantic pooling that is more general than the spatial pooling from CNNs. NILRNN has been designed based on this principle, pooling together neurons that tend to fire close in time. Drawing again inspiration from the neocortex, such behavior is achieved through a layer, which is to some extent analogous to L4 and to the convolutional layer, with its neurons arranged in two dimensions and in which neighbor neurons are connected in a recurrent fashion. 
We will refer to such a layer as a locally recurrent layer (note that this is different from the local recurrent connections as defined in [47]). This way, during the training phase, neurons active in a certain timestep contribute to the firing of neighbor neurons in the next timestep, pushing them to learn patterns that tend to appear successively in the input, and giving rise in this way to a self-organization mechanism that makes neurons with similar meanings be located close to each other (note that, to achieve such behavior, a sparse activity in the layer is also important, which will be enforced during the training phase). Then, these nearby neurons with similar meanings can be pooled together through a 2D max pooling layer (analogous to L2/3 and to the max pooling layer of CNNs), achieving the sought semantic pooling, which allows the system to simplify and reduce the dimensionality of the representation while keeping the relevant information, as well as its ordered distribution in two dimensions.

Coming back to the locally recurrent layer, its neurons will not only keep information on the current input, but also on the previous ones (e.g., with input images, a neuron could represent the input pattern as well as the direction and velocity in which it is moving). However, the max pooling layer will pool neurons representing those different previous inputs together, losing an important part of the recurrent information and behaving to some extent as a feedforward network (e.g., with input images, neurons in the recurrent layer detecting edges with similar orientations would tend to be located together, independently of the velocity of the edge). This behavior, while it may seem undesirable, can actually be seen as an emergence of the slowness principle, and is analogous to what occurs in the SFA algorithm in particular: During the training phase, a set of functions is learnt whose outputs change slowly in time while only depending on the input at each instant. On the other hand, the lack of a hidden layer between the input and the locally recurrent layer sets a limitation on the possible features that this layer can learn (as neural networks require at least one hidden layer to behave as universal approximators [48]). Therefore, sparse inputs with the main information relying on the neurons being active/inactive rather than on their precise level of activity are preferred.

In summary, the NILRNN feature extraction system consists of a 2D locally recurrent layer followed by a max pooling layer. The recurrent layer is characterized by its height h and width w (i.e., it has a size of \(s = h \cdot w\)), as well as by the number of neighbor neurons r to which each neuron is connected recurrently. The recurrent connectivity pattern (i.e., the kernel) takes a circular shape, with only neurons under a specific distance limit being connected to each other (this way, parameter r can only take values that correspond to “circular” shapes). Regarding the layer input, in this study we have used a fully connected input, but other connectivity patterns are also possible depending on the type of input (more on this in “Comparison to Other Systems’’). The max pooling layer, on the other hand, is defined by the number of neurons p that each neuron pools from a region of the recurrent layer, and by a stride t. Similar to the recurrent connection pattern, the pooling kernel also takes a circular shape. Since we are working with sparse representations for which the main information relies mainly on whether the neurons are active or not, rather than on their exact level of activation, all neurons in the recurrent layer make use of sigmoid activation functions. Figure 4 shows this feature extraction system applied over an input in a fully connected manner, and within a classification task that applies logistic regression (i.e., a single neural layer) over the extracted features.
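The structure summarized above can be sketched in a few lines of plain Python. This is a sketch under several assumptions that are not specified in the text: the circular kernels are defined through a radius, the recurrent kernel excludes the neuron itself while the pooling kernel includes it, neighbors falling outside the grid are ignored, each neuron has its own (unshared) weights, and the weights are random rather than learnt. All function names are hypothetical.

```python
import math
import random


def circular_offsets(radius):
    """Grid offsets (dy, dx) within `radius` of the centre, centre excluded."""
    k = int(radius)
    return [(dy, dx)
            for dy in range(-k, k + 1) for dx in range(-k, k + 1)
            if (dy, dx) != (0, 0) and dy * dy + dx * dx <= radius * radius]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def recurrent_step(x, prev, w_in, w_rec, bias, offsets):
    """One timestep of an h-by-w locally recurrent layer (fully connected input).

    prev is the layer activity at the previous timestep; w_rec[i][j][k] is
    the weight from the neighbour at offsets[k] to neuron (i, j).
    """
    h, w = len(prev), len(prev[0])
    new = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            z = bias[i][j] + sum(wi * xi for wi, xi in zip(w_in[i][j], x))
            for k, (dy, dx) in enumerate(offsets):
                ni, nj = i + dy, j + dx
                if 0 <= ni < h and 0 <= nj < w:  # assumption: no wrap-around
                    z += w_rec[i][j][k] * prev[ni][nj]
            new[i][j] = sigmoid(z)
    return new


def circular_max_pool(grid, offsets, stride):
    """Max pooling over a circular window (centre included) with a stride."""
    h, w = len(grid), len(grid[0])
    window = [(0, 0)] + offsets
    return [[max(grid[i + dy][j + dx] for dy, dx in window
                 if 0 <= i + dy < h and 0 <= j + dx < w)
             for j in range(0, w, stride)]
            for i in range(0, h, stride)]


random.seed(0)
height, width, n_in = 6, 6, 3
offsets = circular_offsets(1)  # 4 recurrent neighbours per neuron
w_in = [[[random.uniform(-1, 1) for _ in range(n_in)]
         for _ in range(width)] for _ in range(height)]
w_rec = [[[random.uniform(-1, 1) for _ in offsets]
          for _ in range(width)] for _ in range(height)]
bias = [[0.0] * width for _ in range(height)]

state = [[0.0] * width for _ in range(height)]
for x in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]):  # a two-step input sequence
    state = recurrent_step(x, state, w_in, w_rec, bias, offsets)
features = circular_max_pool(state, offsets, stride=2)
print(len(features), len(features[0]))
```

With a radius of 1 each neuron receives recurrent input from 4 neighbors and each pooling unit covers 5 neurons; a radius of 2 would give 12 and 13, respectively. These counts illustrate why only certain kernel sizes correspond to circular shapes.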

The Unsupervised Learning System

While the system just presented can of course be trained in a supervised way through backpropagation, in this study we are interested in the task of learning features in an unsupervised way. Therefore, in this section, we propose a system designed specifically for the unsupervised learning of the NILRNN weights. As we mentioned earlier, NILRNN relies on the backpropagation technique through a self-supervised approach similar to that used in autoencoders (see “Autoencoders’’). In principle, this could be achieved by adding a layer at the end of the system and training the system to reconstruct its input. However, the max pooling layer has no weights to learn, making it unnecessary at this stage. In fact, it would just introduce obstacles to the reconstruction of the input, since it groups together patterns that are different (e.g., shifted edges). Therefore, we decided not to include it in the learning system (i.e., the input is reconstructed directly from the locally recurrent layer). On the other hand, the locally recurrent layer is supposed to have information about the current input as well as the previous inputs. This way, it makes sense to design the self-supervised system so that, besides reconstructing the current input, it also reconstructs the previous inputs or predicts the next inputs. Preliminary tests, together with the idea that making the system predictive would push the system to learn features that represent the input within its context in a more meaningful way, led us to go for the second option. The influence of each of those predictions over the learning process can be weighted in the cost function (e.g., using the weighted Euclidean distance to calculate the squared-error cost term). 
This way, since counting on information about the previous inputs decreases the output error during training, this learning system pushes neighbor (connected) neurons to learn patterns occurring close in time, leading to the self-organization mechanism previously mentioned, as well as to the slowness phenomenon once the max pooling layer is added. Finally, to guarantee that the activity in the locally recurrent layer is sparse, a sparsity term is introduced in the cost function, as done with sparse autoencoders (see “Autoencoders’’).

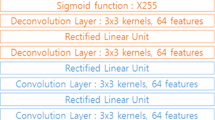

In summary, the NILRNN unsupervised learning system is a three-layer neural network like the one shown in Fig. 5, formed by an input layer, the same 2D locally recurrent layer as that of the feature extraction system, and an output layer of the size of the input times \(n_{\hat{x}}\), where \(n_{\hat{x}}\) is the number of predictions plus 1 (as it also reconstructs the current input). The neurons in the output layer also use sigmoid activation functions, which means that, for the input reconstruction to be successful, the input also needs to be normalized between 0 and 1 (which is in line with the preferred sparse inputs). The network is trained using truncated backpropagation through time (TBPTT), characterized by a truncation horizon k, to minimize the following cost function:

\[J(W,b) = J_{error} + \lambda \, J_{regularization} + \beta \, J_{sparse}\]

where W and b represent all the variable weights and bias units in the network, \(J_{error}\) is the weighted squared-error cost term, \(J_{regularization}\) is the \(L_2\) regularization term (or weight decay), \(J_{sparse}\) is the sparsity term as described in (1) applied to the locally recurrent layer, and \(\lambda\) and \(\beta\) are the \(L_2\) regularization term and sparsity term weights in the cost function:

\[J_{error} = \frac{1}{m} \sum _{i=1}^{m} \frac{1}{2} \left\Vert \sqrt{\omega _{\hat{x}}} \circ \left( h_{W,b}(x_i) - y_i \right) \right\Vert ^2\]

with m being the number of samples in the batch, \(x_i\) and \(y_i\) the input and target output corresponding to the i-th sample (with \(y_i\) being a concatenation of \(x_i\) and the next \(n_{\hat{x}}-1\) inputs), \(h_{W,b}(x_i)\) the output of the network for input \(x_i\), \(\omega _{\hat{x}}\) the vector of weights to control the influence of the different predictions (which appears within a square root because in the weighted Euclidean distance the weights multiply the terms being summed, i.e., the squares of the differences), \(\sqrt{\cdot }\) the square root of the vector elements, \(\circ\) the element-wise or Hadamard product, and \(\Vert \cdot \Vert\) the 2-norm operator (or Euclidean norm, i.e., the square root of the sum of squares of all elements in the vector or matrix).
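The terms of this cost function can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions rather than the code used in this study: the function names are hypothetical, the factors of 1/2 in the error and weight-decay terms are assumed, the sparsity term is assumed to be the KL-divergence penalty commonly used in sparse autoencoders, and one prediction weight per reconstructed or predicted frame is repeated over the input dimensions. TBPTT and the network forward pass are not shown.

```python
import numpy as np

def build_targets(X, n_pred):
    """X: (T, d) sequence. Returns inputs (T - n_pred, d) and targets of width
    d * (n_pred + 1): each x_t concatenated with the next n_pred inputs."""
    m = X.shape[0] - n_pred
    Y = np.concatenate([X[k:k + m] for k in range(n_pred + 1)], axis=1)
    return X[:m], Y

def weighted_error(H, Y, omega, d):
    """Batch mean of 0.5 * ||sqrt(omega) o (h - y)||^2 (the 0.5 factor is an assumption)."""
    w = np.repeat(np.sqrt(omega), d)  # one weight per frame, repeated over d inputs
    return np.mean(0.5 * np.sum((w * (H - Y)) ** 2, axis=1))

def l2_term(weights):
    """Weight decay over a list of weight matrices (biases excluded by assumption)."""
    return 0.5 * sum(np.sum(W ** 2) for W in weights)

def kl_sparsity(A, rho, eps=1e-8):
    """KL-divergence penalty between target sparsity rho and mean activations of A (m, s)."""
    rho_hat = np.clip(A.mean(axis=0), eps, 1 - eps)
    return np.sum(rho * np.log(rho / rho_hat)
                  + (1 - rho) * np.log((1 - rho) / (1 - rho_hat)))

def total_cost(H, Y, A, weights, omega, d, lam, beta, rho):
    """J = J_error + lambda * J_regularization + beta * J_sparse."""
    return (weighted_error(H, Y, omega, d)
            + lam * l2_term(weights)
            + beta * kl_sparsity(A, rho))

# Usage: targets for 2 predictions (n_x_hat = 3) on a toy sequence of 6 two-dimensional inputs.
X = np.arange(12.0).reshape(6, 2)
X_in, Y = build_targets(X, n_pred=2)
```

Setting an element of `omega` to zero removes the corresponding prediction from the error term, which is how the relative influence of the predictions can be controlled.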

Results

To evaluate the performance of NILRNN, we have opted to test its feature extraction ability for the task of classification over two typical domains involving sequential data: action recognition and speech recognition. We will use NILRNN configurations similar to those presented in Figs. 4 and 5, i.e., fully connected input, shallow systems (without building hierarchies) and unsupervised learning of features (with fully connected output). Consequently, we are not interested in overly complex data such as video data, which typically requires deep architectures. Instead, we have looked for free, popular, balanced sequential datasets that can be successfully processed by shallow systems. In particular, we have tested our system over WARD (Wearable Action Recognition Database) [49], containing human action data, and FSDD (Free Spoken Digit Dataset) [50], containing speech data. These datasets have been divided, using stratification, into a training set (\(\sim\)60% of the samples), a validation set (for model selection, \(\sim\)20% of the samples) and a test set (for evaluation, \(\sim\)20% of the samples). Since speech data is typically processed in the frequency domain, when working with the FSDD dataset we have used the spectrogram of the data as input (which is also a form of mimicking our auditory system [51]). This has the added advantage that the spectrogram can be considered to a certain extent as sparse data, which, as mentioned in “The Feature Extraction System”, is a preferable property of the input when working with NILRNN (the WARD data comes from inertial sensors, and therefore does not satisfy this property). All the input data coming from the datasets have been normalized through truncation to \(\pm 3\) standard deviations and through rescaling to the range [0.1, 0.9]. 
Finally, as we argued in “Introduction”, one of the advantages of learning in an unsupervised way is that the amount of unlabeled data is typically much larger than that of labeled data, allowing the system to learn from much more data, from which it is easier to generalize. However, when trained over the mentioned datasets, NILRNN does not take advantage of this characteristic, as their size is limited. In order to evaluate its performance also in a context with unlimited unlabeled data, we have added to the previous datasets an input stream of human action data generated synthetically and which takes a sparse form (see Appendix). The first columns of Table 1 show the main properties of these three datasets.
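The normalization step described above (truncation to \(\pm 3\) standard deviations followed by rescaling to [0.1, 0.9]) could be implemented along the following lines. This is a sketch with a hypothetical function name; computing the statistics per feature and reusing the training-set statistics for the other sets are assumptions, as the text does not specify them.

```python
import numpy as np

def normalize(X, stats=None, lo=0.1, hi=0.9, n_std=3.0):
    """Clip each feature to mean +/- n_std standard deviations, then rescale
    linearly so that the clipping limits map to lo and hi.
    X: (samples, features). Returns the normalized data and the statistics used."""
    if stats is None:
        stats = (X.mean(axis=0), X.std(axis=0))
    mean, std = stats
    std = np.where(std > 0, std, 1.0)  # avoid division by zero for constant features
    Z = np.clip((X - mean) / std, -n_std, n_std)
    return lo + (Z + n_std) / (2 * n_std) * (hi - lo), stats

# Usage: fit the statistics on the training data and reuse them elsewhere.
rng = np.random.default_rng(0)
X_train = rng.normal(size=(1000, 4))
X_test = rng.normal(size=(200, 4))
X_train_n, stats = normalize(X_train)
X_test_n, _ = normalize(X_test, stats)
```

With this mapping a value at the feature mean is sent to 0.5, and any value beyond three standard deviations is sent to 0.1 or 0.9, which keeps the data within the output range of the sigmoid units.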

Considering that we are working with sequential data, the classification task will be performed using both a logistic regression and a fully connected shallow vanilla recurrent neural network (RNN), i.e., a fully connected recurrent layer followed by a fully connected feedforward layer. Regarding the metrics for the evaluation of the classification performance, we have opted for accuracy, as it is a valid representative metric and one of the preferred ones when it comes to balanced datasets [52]. For the sake of comparison, the two classifiers will be applied over the features extracted by NILRNN and by other shallow feature learning systems, as well as over the input directly. In particular, we will compare the performance of our system to that of an undercomplete autoencoder and of a sparse autoencoder [36], as well as to that of a variant of NILRNN without the max pooling layer (to better understand how this layer contributes). All these neural networks have been initialized according to Xavier initialization and have been trained through backpropagation using the Adam optimization algorithm with step size \(\alpha\). A genetic algorithm has been used (together with the training and validation sets, randomly split with stratification in each iteration) to find the best hyperparameters for each system and each dataset. Still, to simplify the model selection process, the values of a few hyperparameters have been set before the application of the genetic algorithm: the batch size has been set to \(m = 1000\) samples, the 2D layers have been set to a square shape (i.e., equal height and width), and the prediction weights in the cost function have been set to 1 (i.e., \(\omega _{\hat{x}}=1\), all predictions have the same weight). 
The fitness function of the genetic algorithm is obtained as the estimated accuracy of the network over 100000 samples, after having trained the feature extraction system (if it exists) over 1000 batches (10000 batches for FSDD) and the classification system over a number of batches that is different depending on the dataset and that is indicated in the last column of Table 1 (these values have been defined manually according to the accuracies achieved for the different datasets and number of batches). The obtained hyperparameters for the different systems and datasets are shown in Tables 2, 3 and 4.

With the hyperparameters defined, the systems have been evaluated in terms of accuracy (over the test set) after different numbers of training batches (from the combined training and validation sets) to understand how fast they can learn as well as how high an accuracy they can reach. For each system, and with the feature extraction component already trained over 5000 batches (10000 batches for FSDD), the classification accuracy has been measured after every 50 batches of training of the classification system, up to 5000 batches. This process has been repeated 32 times per system, and the obtained results averaged, to reduce the influence of the variance between different executions. These averaged results for the different systems and datasets are shown in Figs. 6, 7 and 8.

The obtained results show that, in general, the features learnt by NILRNN, both with and without the max pooling layer, outperform the features learnt by the other systems and the raw input, in terms of the accuracies achieved with them as well as of the number of samples required to achieve specific accuracies. In principle, this could be attributed to the fact that NILRNN is a recurrent network and therefore also contains information about the previous samples. However, as we mentioned in “The Feature Extraction System”, the max pooling layer of NILRNN makes it behave to some extent as a feedforward network. This is consistent with the fact that the NILRNN without the max pooling layer generally outperforms the complete NILRNN when using a logistic regression classifier (Figs. 6a, 7a and 8a), but no longer does so when using an RNN classifier (Figs. 6b, 7b and 8b). Indeed, the logistic regression system has no recurrent connections, making the memory of the NILRNN without max pooling a clear advantage. However, since the RNN already has memory by itself, using features that keep information about the previous samples may not be that relevant, and having the best possible features describing the current input (which is what we are looking for) may be more important. This should also apply to the other feature extraction systems, which even when using an RNN classifier achieve lower performances. In fact, Fig. 8b shows how most of the other systems, when using an RNN classifier, are able to achieve similar accuracies after a large enough number of training samples, indicating that they do have the capability to achieve such accuracies. This is not the case for Figs. 6b and 7b, but may also occur for a larger number of training samples than those shown.

Focusing on the differences between NILRNN with and without the max pooling layer when using an RNN classifier, Figs. 6b and 7b show that, for the WARD and FSDD datasets, the full NILRNN allows faster learning for a small enough number of samples, which is one of the main objectives of this system (for the synthetic actions input, shown in Fig. 8b, both curves increase so fast that there is no perceptible difference). Regarding the accuracies reached after a large enough number of training samples, the complete NILRNN slightly outperforms the NILRNN without max pooling for the FSDD dataset and for the synthetic actions input (Figs. 7b and 8b). However, this is not the case for the WARD dataset (Fig. 6b), for which, after a certain number of samples, the NILRNN without max pooling begins to outperform the complete version. This may be related to the fact that the input data is not sparse, and therefore NILRNN does not behave as desired. In any case, these small differences between the two systems, added to the fact that the number of batches in the fitness function has been chosen to some extent in an arbitrary way, indicate that further investigation is needed to better understand the behavior of the max pooling layer within NILRNN. Still, the results obtained are already promising.

Finally, in recent work, we have also evaluated NILRNN in a qualitative way, using sequences of shifting images as input and comparing its emerging behavior to known behavior of the primary visual cortex [53]. The results show that neurons in the recurrent layer learn edge patterns, with nearby neurons detecting edges with similar orientations but different phases, following a pattern similar to that of Fig. 2a. This is good evidence that NILRNN is behaving as desired.

Discussion

In this article, we have presented the Neocortex-Inspired Locally Recurrent Neural Network (NILRNN): an unsupervised learning system for feature learning of sequences that brings ideas from the neocortex to the well-established fields of machine learning and artificial neural networks. In particular, this system tries to keep the learning and representation capabilities of models of the neocortex while reducing their computational complexity and cost to make them better suited to real-life machine learning applications. It draws inspiration from computational neuroscience concepts such as sparsity, self-organization and slowness, as well as from other machine learning algorithms such as CNNs and autoencoders, and relies on a new form of low-level semantic pooling, which is a generalization of the usual spatial pooling. The results show that NILRNN outperforms other shallow unsupervised learning systems such as undercomplete autoencoders or sparse autoencoders in the task of feature learning for classification. In this sense, NILRNN is successful at fulfilling its purpose of learning features that allow classifiers to learn their task more efficiently and in fewer iterations. In fact, NILRNN is designed to learn from a large amount of unlabeled data, so the results obtained may be even better for situations where the amount of data is not as limited as in the speech and action datasets we have used. The same may hold for cases in which a larger number of batches is used during the unsupervised learning phase (which we have not done due to computational and time constraints). On the other hand, instead of working with the average of the performances obtained from the different features learnt in different iterations, a real application could just keep and work with the best learnt features, leading to better results. 
In addition, once the classification system has been trained, the whole system (feature extraction + classification) could be trained end-to-end (unfreezing the feature extraction system weights) to fine-tune the weights for the specific application and improve the accuracy. Finally, as we mentioned in “Results”, the fact that the data from the WARD dataset is not sparse may be preventing NILRNN from working at its best. When working with this type of data, transforming it into some sparse representation (or adding a new hidden layer that can automatically learn such a sparse representation) would probably improve the behavior of the system. This needs to be further investigated.

Comparison to Other Systems

One way to better understand conceptually our system and to possibly unveil future directions is to compare it to other existing systems. In “The Feature Extraction System’’, we already went through some similarities between NILRNN and CNNs, and we mentioned that our system can be seen as some sort of generalization of CNNs (and, in particular, of the block composed of a convolutional layer followed by a max pooling layer) to sequences of data other than images, with its form of semantic pooling being a generalization of the spatial pooling in CNNs. One important difference between CNNs and our system is that, in CNNs, the convolutional layer is composed of different channels, with each channel containing the output of one learnt feature detector applied along the whole image. In NILRNN, on the other hand, the recurrent layer has one single “channel”, with all those channels of the CNN convolutional layer being represented in a single 2D layer while still being organized locally (being this way more analogous to L4). On the other hand, in this work, the NILRNN input has been made fully connected. However, in order to work, for instance, over full images, its input should be made partially connected, with a similar arrangement to that of CNNs (the same applies to the unsupervised learning system output). This way, each neuron in the recurrent layer would only be connected to a small patch from the input image, with these patches shifting smoothly in space as you move along the neurons in the recurrent layer (similar to receptive fields in the primary visual cortex). This new system could be applied to types of sequences of data (besides sequences of images) whose samples contain some form of topological information, with the elements in those samples being especially correlated to their neighbors (e.g., brain signals, spectrograms). 
On the other hand, CNNs assume that the statistical characteristics of images are homogeneous and independent of the position in the image, and, therefore, they learn the same features along the whole image (and apply what is learnt in one region of the image to the other regions), which brings several computational and learning advantages. Our system could also be adapted to take advantage of this homogeneity, by making the input weights shared periodically along the layer in the two dimensions (e.g., having a hexagon of neurons whose weights are repeated periodically). In fact, such periodicity has also been observed in the primary visual cortex [54]. This could be useful for topological input data that is homogeneous along its extension, but in which input patterns don’t necessarily tend to shift laterally (or shifted patterns don’t imply similar information) as occurs in sequences of images (if they actually do, CNNs are probably a better option). In fact, while simple features of images (such as edges) usually behave this way, this is not generally true for more complex features, such as the presence or absence of an object. Indeed, in images that are close in time, such an object may not only shift laterally, but also rotate, or even change its shape (e.g., a closing hand). CNNs trained in a supervised way are probably able to create associations among those different appearances of the same object thanks to the large amounts of labeled data, but they have more trouble deducing those associations in an unsupervised way, which may be a reason why convolutional autoencoders are not that good at learning high-level features [55]. For this reason, NILRNN may also bring advantages to the processing of sequences of images in the higher layers of the network.
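The partially connected input discussed in this section, in which each neuron of the recurrent layer sees only a small patch of the input image and the patches shift smoothly along the layer, can be sketched as a connectivity mask. The function name, the square patch shape and the linear mapping from neuron position to patch position are assumptions made for illustration; this variant has not been evaluated in this work.

```python
import numpy as np

def local_input_mask(h, w, H, W, k):
    """Boolean (h*w, H*W) mask: neuron (y, x) of the h x w layer is connected to a
    k x k patch of the H x W input whose position shifts linearly with (y, x)."""
    mask = np.zeros((h * w, H * W), dtype=bool)
    for y in range(h):
        for x in range(w):
            oy = int(round(y * (H - k) / max(h - 1, 1)))  # patch top row
            ox = int(round(x * (W - k) / max(w - 1, 1)))  # patch left column
            patch = np.zeros((H, W), dtype=bool)
            patch[oy:oy + k, ox:ox + k] = True
            mask[y * w + x] = patch.ravel()
    return mask

# Usage: a 4 x 4 layer over a 10 x 10 image with 3 x 3 patches.
input_mask = local_input_mask(4, 4, 10, 10, 3)
# The input weights would then be applied as (W_in * input_mask) @ x.
```

Sharing weights periodically along the layer would additionally tie the nonzero entries of different rows together, which this sketch does not attempt.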

When comparing NILRNN to complete CNNs (or to other deep architectures), one advantage of these architectures is that they stack blocks of layers to build deep hierarchies and extract more abstract or complex features. This is something that can also be done with our proposed system, by stacking blocks containing the recurrent layer followed by the max pooling layer to build deeper supervised or unsupervised networks. The resultant system, while composed of many layers, can still be trained in a shallow unsupervised way, by training the blocks one by one, learning at each step more abstract features (mimicking again somehow models of the neocortex [56]). Then, training successfully a classifier over those higher-level features may just require a small number of labeled samples. In addition, this hierarchy could be useful to better investigate our system and understand if it is able to learn useful representations at different levels of abstraction, or if, on the contrary, it is losing relevant information at each level. On the other hand, since, independently of the input data, the output of these blocks is always distributed in two dimensions and with neighbor neurons tending to fire close in time, making the input to the next block partially connected (as described before) may lead to good results while reducing the complexity of the network. One characteristic of this new hierarchical system would be that the activity in the layers changes slower in time as you go higher in the hierarchy. Such hierarchy of timescales is something that has been observed in the brain [57], and that also occurs in other brain-inspired systems such as hierarchical SFA or MTRNNs (see “Brain-Inspired Machine Learning Systems and Mechanisms’’), with the difference that, in our system, it would emerge spontaneously, without the need to impose explicit restrictions. 
Our system could take advantage of this behavior to reduce its computational cost by working at lower sample rates as it goes higher in the hierarchy.

Coming back to our shallow system, and as we already suggested in “The Feature Extraction System’’, NILRNN has several similarities with SFA: Both systems learn representations of the input that change slower in time than the input. In addition, to some extent, and similar to SFA, while our system is recurrent, its output depends mainly on its input at that instant. This way, we could say that both SFA and NILRNN learn in a “recurrent” way, considering the current sample as well as the previous ones (or next ones), while they then operate in a “feedforward” way, considering only the current sample. In fact, we could interpret that CNNs also behave in a similar way: While it is true that they are feedforward networks (both during learning and prediction), we could consider that CNNs have this temporal knowledge about the input “hardcoded” by design (e.g., grouping together shifted versions of the same pattern could be learnt from sequences of images). Taking all this into consideration, we could describe NILRNN as a feature extractor for sequences that learns slow-changing representations of the current input, even though internally it also keeps information about previous samples.

It is also worth comparing NILRNN to Hierarchical Temporal Memory (HTM) [41]. HTM is a machine learning system that is also highly inspired by our knowledge about the neocortex. It relies on mechanisms such as sparse coding or Hebbian learning to learn patterns and sequences via unsupervised learning. The HTM layer is to some extent analogous to our locally recurrent layer: both layers contain neurons connected both to the input and to other neurons in the same layer, learning in this way to encode input patterns within a “context” (i.e., they contain information about the current and previous inputs). Some differences are that HTM works with boolean-valued (instead of real-valued) neurons (which may be a limitation in terms of dealing with uncertainty), and that it uses Hebbian learning (instead of backpropagation) for the training of the system. Hebbian learning has in fact been explored in the training of NILRNN, but the unsatisfactory results obtained seemed to indicate that adapting the system for such learning may not be that straightforward. On the other hand, the self-organization mechanism that emerges in our recurrent layer, allowing a max pooling layer to then group the information, is something that does not exist in HTM. Still, HTM does group in columns neurons with the same input weights but different recurrent weights, i.e., neurons recognizing the same input pattern in different contexts. A similar arrangement has also been explored in our system, as it seemed it could push similar patterns occurring in different contexts closer together (e.g., edges with similar orientations but shifting at different speeds), bringing our system closer to the desired “feedforward” behavior previously mentioned. However, the results obtained did not outperform those obtained without the columns.

Robustness

So far, we have compared NILRNN to other existing systems, discussing possible future directions mainly about its architecture (e.g., deep hierarchy, partially connected input). Other ideas that can be investigated are those aimed at increasing the robustness of the system. Regularization methods, while usually focused on reducing overfitting in situations with limited training data (note that NILRNN is supposed to be trained with nearly unlimited data), often bring other advantages to the system related to its robustness. Our system already includes two regularization terms in its cost function: the \(L_2\) regularization term and the sparsity term. In addition, the predictive feature of our training system may also be acting as a regularization method: in preliminary tests, systems reconstructing the previous inputs instead of predicting the next ones seemed to simply try to remember those previous inputs. With the predictive feature, instead, NILRNN seems to try to keep a meaningful context for the current input that allows it to better predict the next inputs and to handle the uncertainty, penalizing overconfident predictions, and leading to a better generalization and to a more robust system.

Other regularization methods could also be explored in our system, such as that of denoising autoencoders (i.e., introducing some kind of noise into the training input while leaving the output unchanged), or dropout. Still, dropout may not be as effective in our system, as, besides being mainly designed to address the issue of limited data [58] (which should not be an issue in our system), it doesn’t seem to perform so well when using sigmoid activation functions [59], and it also presents some issues when being applied over recurrent networks [60].

Comparison to Neocortical Behavior

Finally, a better understanding of NILRNN could also come from the comparison of its behavior and emerging characteristics with those observed in the neocortex (e.g., by training it with sequences of images and comparing its properties with those from the primary visual cortex). As we mentioned in “Results’’, some steps in this direction have already been taken, and already indicate that our system is showing analogous behavior to that of the primary visual cortex [53].

On the other hand, similar artificial systems could be built based on the same principles observed in the neocortex, but using different techniques. Comparing such systems would also allow us to understand what the best performing implementation is. For instance, a system with similar properties could be built by substituting the recurrent layer by a feedforward layer with an added cost term that penalizes very fast changes in space and time in its activity (or that rewards smooth and slow changes in the activity along the layer). This would reduce the internal complexity of the system, besides making unnecessary the prediction terms in the output of the learning system. However, all the internal information in the recurrent layer about the previous inputs (which could be useful in some applications) would be lost. Comparing NILRNN to such alternative versions could contribute to a better understanding of its behavior and to finding new directions to explore.
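As an illustration of the alternative just described, a cost term penalizing fast changes in space and time in the activity of a 2D feedforward layer could take the following form. This is only one possible formulation, with hypothetical names and weights; it has not been tested in this work, and on its own it is minimized by constant activity, so it would need to be combined with a reconstruction term (or with variance constraints such as those used in SFA).

```python
import numpy as np

def slowness_smoothness_penalty(A, h, w, w_time=1.0, w_space=1.0):
    """A: (T, h*w) activations of a 2D layer over T consecutive time steps.
    Penalizes squared differences between consecutive time steps (slowness)
    and between vertically/horizontally adjacent neurons (spatial smoothness).
    Requires T >= 2 and h, w >= 2."""
    G = A.reshape(-1, h, w)
    temporal = np.mean((G[1:] - G[:-1]) ** 2)
    spatial = (np.mean((G[:, 1:, :] - G[:, :-1, :]) ** 2)
               + np.mean((G[:, :, 1:] - G[:, :, :-1]) ** 2))
    return w_time * temporal + w_space * spatial

# Usage on random activations of a 4 x 4 layer over 10 time steps.
rng = np.random.default_rng(0)
penalty = slowness_smoothness_penalty(rng.random((10, 16)), 4, 4)
```

The weights `w_time` and `w_space` would play a role similar to \(\lambda\) and \(\beta\) in the NILRNN cost function, balancing this term against the reconstruction error.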

Conclusion

This article has introduced the Neocortex-Inspired Locally Recurrent Neural Network (NILRNN), which is a self-supervised feature learning shallow neural network for sequential data that draws inspiration from models of the primary visual cortex and other computational neuroscience concepts such as sparsity, self-organization or slowness. The system has been compared against other shallow unsupervised feature learning systems in the task of learning features for three different classification problems, outperforming all the other systems. It has also been compared against a variant of the system without its output max pooling layer. While the results seem to show that the complete system generally outperforms the ablated version (at least for sparse input data), this is not yet completely clear and needs further investigation.

Regarding the future work, one possibility is to build a hierarchy of stacked NILRNN blocks that can compete and be compared directly to other deep state-of-the-art systems in the task of feature learning of sequences. With the appropriate design, one advantage of the deep version of our system could be that it can still be trained in a shallow manner. Another possible direction is to introduce modifications to the system in order to improve its performance, e.g., by bringing new ideas from computational models of the brain or from other machine learning systems. Indeed, the fact that it is a novel system leaves much room for analysis and exploration of different possible variants, which may lead to new improved alternative versions.

References

Längkvist M, Karlsson L, Loutfi A. A review of unsupervised feature learning and deep learning for time-series modeling. Pattern Recogn Lett. 2014;42:11–24.

Lowe DG. Object recognition from local scale-invariant features. In: Proceedings of the Seventh IEEE International Conference on Computer Vision. vol.2. IEEE; 1999. p. 1150–1157.

Tiwari V. MFCC and its applications in speaker recognition. Int J Emerg Technol. 2010;1(1):19–22.

Jolliffe IT, Cadima J. Principal component analysis: a review and recent developments. Philos Trans R Soc A Math Phys Eng Sci. 2016;374(2065):20150202.

Fisher RA. The use of multiple measurements in taxonomic problems. Ann Eugen. 1936;7(2):179–88.

Dong G, Liao G, Liu H, Kuang G. A review of the autoencoder and its variants: A comparative perspective from target recognition in synthetic-aperture radar images. IEEE Geoscience and Remote Sensing Magazine. 2018;6(3):44–68.

Le Roux N, Bengio Y. Representational power of restricted Boltzmann machines and deep belief networks. Neural Comput. 2008;20(6):1631–49.

Zhong G, Wang LN, Ling X, Dong J. An overview on data representation learning: From traditional feature learning to recent deep learning. The Journal of Finance and Data Science. 2016;2(4):265–78.

Donahue J, Jia Y, Vinyals O, Hoffman J, Zhang N, Tzeng E, et al. Decaf: A deep convolutional activation feature for generic visual recognition. In: International Conference On Machine Learning. PMLR; 2014. p. 647–655.

Hoffer E, Ailon N. Deep metric learning using triplet network. In: International workshop on similarity-based pattern recognition. Springer; 2015. p. 84–92.

Malik M, Malik MK, Mehmood K, Makhdoom I. Automatic speech recognition: a survey. Multimed Tools Appl. 2021;80(6):9411–57.

Van-Horenbeke FA, Peer A. Activity, plan, and goal recognition: A review. Frontiers in Robotics and AI. 2021;8:106.

Yu Y, Si X, Hu C, Zhang J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019;31(7):1235–70.

Wang C, Tang Y, Ma X, Wu A, Okhonko D, Pino J. Fairseq S2T: Fast Speech-to-Text Modeling with Fairseq. In: Proceedings of the 1st Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 10th International Joint Conference on Natural Language Processing: System Demonstrations. Suzhou, China: Association for Computational Linguistics; 2020. p. 33–39. Available from: https://aclanthology.org/2020.aacl-demo.6.

Wang S, Cao J, Yu P. Deep learning for spatio-temporal data mining: A survey. IEEE Trans Knowl Data Eng. 2020.

Lake BM, Ullman TD, Tenenbaum JB, Gershman SJ. Building machines that learn and think like people. Behav Brain Sci. 2017;40.

Lukatela K, Swadlow HA. Neocortex. The Corsini Encyclopedia of Psychology. 2010; p. 1–2.

Mesulam MM. From sensation to cognition. Brain: A Journal of Neurology. 1998;121(6):1013–1052.

Kravitz DJ, Saleem KS, Baker CI, Ungerleider LG, Mishkin M. The ventral visual pathway: an expanded neural framework for the processing of object quality. Trends Cogn Sci. 2013;17(1):26–49.

Lamme VA, Super H, Spekreijse H. Feedforward, horizontal, and feedback processing in the visual cortex. Curr Opin Neurobiol. 1998;8(4):529–35.

Narayanan RT, Udvary D, Oberlaender M. Cell Type-Specific Structural Organization of the Six Layers in Rat Barrel Cortex. Front Neuroanat. 2017;11:91. https://doi.org/10.3389/fnana.2017.00091.

Mountcastle VB. The columnar organization of the neocortex. Brain: A Journal of Neurology. 1997;120(4):701–722.

Choe Y. Hebbian Learning. In: Jaeger D, Jung R, editors. Encyclopedia of Computational Neuroscience. New York, NY: Springer; 2015. p. 1305–9.

Turrigiano G. Homeostatic synaptic plasticity: local and global mechanisms for stabilizing neuronal function. Cold Spring Harb Perspect Biol. 2012;4(1):a005736.

Lee TS, Mumford D. Hierarchical Bayesian inference in the visual cortex. JOSA A. 2003;20(7):1434–48.

Hochstein S, Ahissar M. View from the top: Hierarchies and reverse hierarchies in the visual system. Neuron. 2002;36(5):791–804.

Berezovskii VK, Nassi JJ, Born RT. Segregation of feedforward and feedback projections in mouse visual cortex. J Comp Neurol. 2011;519(18):3672–83.

Gilbert CD. Laminar differences in receptive field properties of cells in cat primary visual cortex. J Physiol. 1977;268(2):391–421.

Graham DJ, Field DJ. Sparse coding in the neocortex. Evolution of Nervous Systems. 2006;3:181–7.

Miikkulainen R, Bednar JA, Choe Y, Sirosh J. Computational maps in the visual cortex. Springer Science & Business Media; 2006.

Binzegger T, Douglas RJ, Martin KA. Topology and dynamics of the canonical circuit of cat V1. Neural Netw. 2009;22(8):1071–8.

Hubel DH, Wiesel TN. Sequence regularity and geometry of orientation columns in the monkey striate cortex. J Comp Neurol. 1974;158(3):267–93.

Liu Z, Gaska JP, Jacobson LD, Pollen DA. Interneuronal interaction between members of quadrature phase and anti-phase pairs in the cat’s visual cortex. Vision Res. 1992;32(7):1193–8.

Hubel DH, Wiesel TN. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J Physiol. 1962;160(1):106–54.

Antolik J, Bednar JA. Development of maps of simple and complex cells in the primary visual cortex. Front Comput Neurosci. 2011;5:17.

Goodfellow I, Bengio Y, Courville A. Deep Learning. MIT Press; 2016. http://www.deeplearningbook.org.

Szeliski R. Computer Vision: Algorithms and Applications, 2nd ed. Springer; 2022. Available from: https://szeliski.org/Book/.

Wiskott L. Slow feature analysis: A theoretical analysis of optimal free responses. Neural Comput. 2003;15(9):2147–77.

Berkes P, Wiskott L. Slow feature analysis yields a rich repertoire of complex cell properties. J Vis. 2005;5(6):9.

Yamashita Y, Tani J. Emergence of functional hierarchy in a multiple timescale neural network model: a humanoid robot experiment. PLoS Comput Biol. 2008;4(11):e1000220.

Hawkins J, Ahmad S, Purdy S, Lavin A. Biological and Machine Intelligence (BAMI); 2016. Initial online release 0.4. Available from: https://numenta.com/resources/biological-and-machine-intelligence/.

Bartlett MS, Movellan JR, Sejnowski TJ. Face modeling by information maximization. Face Processing: Advanced Modeling and Methods; 2002. p. 219–53.

Atallah HE, Frank MJ, O’Reilly RC. Hippocampus, cortex, and basal ganglia: Insights from computational models of complementary learning systems. Neurobiol Learn Mem. 2004;82(3):253–67.

Gershenson C. Design and control of self-organizing systems. CopIt ArXives; 2007.

McClelland JL. How far can you go with Hebbian learning, and when does it lead you astray. Processes of Change in Brain and Cognitive Development: Attention and Performance XXI. 2006;21:33–69.

Luo W, Li J, Yang J, Xu W, Zhang J. Convolutional sparse autoencoders for image classification. IEEE Trans Neural Netw Learn Syst. 2017;29(7):3289–94.

Tsoi AC, Back A. Discrete time recurrent neural network architectures: A unifying review. Neurocomputing. 1997;15(3–4):183–223.

Hornik K, Stinchcombe M, White H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989;2(5):359–66.

Yang AY, Jafari R, Sastry SS, Bajcsy R. Distributed recognition of human actions using wearable motion sensor networks. J Ambient Intell Smart Environ. 2009;1(2):103–15.

Jackson Z, Souza C, Flaks J, Pan Y, Nicolas H, Thite A. Jakobovski/free-spoken-digit-dataset: v1.0.8. Jackson Z, editor. Zenodo. Available from: https://github.com/Jakobovski/free-spoken-digit-dataset.

Rahman M, Willmore BD, King AJ, Harper NS. Simple transformations capture auditory input to cortex. Proc Natl Acad Sci. 2020;117(45):28442–51.

Sun Y, Wong AK, Kamel MS. Classification of imbalanced data: A review. Int J Pattern Recognit Artif Intell. 2009;23(04):687–719.

Van-Horenbeke FA, Peer A. The Neocortex-Inspired Locally Recurrent Neural Network (NILRNN) as a Model of the Primary Visual Cortex. In: IFIP International Conference on Artificial Intelligence Applications and Innovations. Springer; 2022. p. 292–303.

Paik SB, Ringach DL. Retinal origin of orientation maps in visual cortex. Nat Neurosci. 2011;14(7):919–25.

Lindsay GW. Convolutional neural networks as a model of the visual system: Past, present, and future. J Cogn Neurosci. 2021;33(10):2017–31.

Chomiak T, Hu B. Mechanisms of hierarchical cortical maturation. Front Cell Neurosci. 2017;11:272.

Quax SC, D’Asaro M, van Gerven MA. Adaptive time scales in recurrent neural networks. Sci Rep. 2020;10(1):1–14.

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res. 2014;15(1):1929–58.

Dahl GE, Sainath TN, Hinton GE. Improving deep neural networks for LVCSR using rectified linear units and dropout. In: 2013 IEEE International Conference on Acoustics, Speech and Signal Processing. IEEE; 2013. p. 8609–13.

Bayer J, Osendorfer C, Korhammer D, Chen N, Urban S, van der Smagt P. On Fast Dropout and its Applicability to Recurrent Networks. In: Proceedings of the International Conference on Learning Representations; 2014. p. 14. Available from: http://arxiv.org/abs/1311.0701.

Funding

Open access funding provided by Libera Università di Bolzano within the CRUI-CARE Agreement. This research was supported by the Euregio project OLIVER (Open-Ended Learning for Interactive Robots) with grant agreement IPN86, funded by the EGTC Europaregion Tirol-Südtirol-Trentino within the framework of the third call for projects in the field of basic research.

Author information

Contributions

FV-H made the main contribution to the conception and design of the work, the creation of the software, the running of the experiments and the writing of the manuscript. Both authors contributed to the conceptualization, review, editing, and approval of the final version.

Ethics declarations

Ethics Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix. Synthetic Actions Input

The synthetic actions input used in “Results” is generated from a virtual 2D environment that contains a hand and four objects (see Fig. 9). The hand can perform four different actions: pick-and-place, push, pull and wait. At the beginning of the experiment, and periodically after a certain number of actions, the environment is restarted: four new objects are selected randomly with replacement from a set of five different types of objects and placed at random positions in the environment. The target positions of the pick-and-place, push and pull actions are also chosen randomly. The different types of objects have different affordances and identifiers, which are assigned randomly at the beginning of training. Finally, white noise is added to the position of the hand with respect to the target object so that the “grab” is performed over different object points.

Fig. 9 Virtual 2D environment used to generate the synthetic actions input. The white rectangle represents the hand, and the squares with different colors represent different types of objects with different affordances and identifiers. The white shadow indicates the area of attention deduced from the hand trajectory.
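The restart procedure of this environment can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the unit-square environment, the uniform sampling of positions and actions, and the convention that a wait action has no target are all assumptions made for illustration. Only the counts (four objects, five object types, four actions) and the sampling with replacement come from the text.

```python
import random

NUM_OBJECT_TYPES = 5     # from the text: five different types of objects
OBJECTS_PER_RESTART = 4  # from the text: four objects after each restart
ACTIONS = ("pick-and-place", "push", "pull", "wait")


def reset_environment(rng, width=1.0, height=1.0):
    """Sample a new scene as a list of (object_type, (x, y)) pairs.

    Object types are drawn with replacement, so a type may appear twice.
    Uniform positions over a width x height area are an assumption.
    """
    types = rng.choices(range(NUM_OBJECT_TYPES), k=OBJECTS_PER_RESTART)
    return [(t, (rng.uniform(0.0, width), rng.uniform(0.0, height)))
            for t in types]


def sample_action(rng, scene, width=1.0, height=1.0):
    """Pick an action, a target object index and a random target position.

    Choosing actions uniformly, and giving 'wait' no target, are assumptions.
    """
    action = rng.choice(ACTIONS)
    if action == "wait":
        return action, None, None
    target_object = rng.randrange(len(scene))
    target_position = (rng.uniform(0.0, width), rng.uniform(0.0, height))
    return action, target_object, target_position
```

Passing a seeded `random.Random` instance as `rng` makes a generated sequence of scenes and actions reproducible.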

To enable the system to deal with different numbers of objects, a simple attention mechanism selects the object of attention based on the trajectory of the hand and, if any objects are moving, on their speed. In addition, to avoid dealing with a computer vision problem, the input is generated by extracting a set of variables from the environment and expressing them in a sparse (or fuzzy) form. These variables are the identifier of the object of attention, the velocity of the hand, the velocity of the object of attention, the position of the object of attention with respect to the hand, and a measure of how open or closed the hand is. The identifier of each type of object is defined directly as a sparse vector of size 10. The position and velocity are expressed through the location of a Gaussian over a 2D grid (5×5 for position and 3×3 for velocity). The location of the Gaussian is determined by the magnitude and direction of the position or velocity vector, through a non-linear mapping that assigns more resolution to smaller magnitudes. To express how open or closed the hand is, a vector of size 2 is used. This leads to a total input sample size of 55 (10 for the identifier, 9 for each of the two velocities, 25 for the position and 2 for the hand state). Finally, to make the task more challenging, white noise is added to the sparse input.
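The encoding described in this appendix can be sketched in Python as follows. This is a hedged illustration rather than the authors' code. The text does not specify the non-linear mapping, the Gaussian width, the order of the sub-vectors, or the form of the size-2 hand vector, so the square-root compression, `sigma`, `max_mag`, the concatenation order and the `[open, 1 - open]` hand encoding are all assumptions. Only the sizes (10, 3×3, 3×3, 5×5, 2) and the added white noise come from the text.

```python
import math
import random

ID_SIZE = 10   # from the text: sparse identifier of size 10
VEL_GRID = 3   # from the text: 3x3 grid for each velocity
POS_GRID = 5   # from the text: 5x5 grid for the relative position


def gaussian_grid(vec, grid, max_mag=1.0, sigma=0.5):
    """Encode a 2D vector as a Gaussian bump on a grid x grid map (row-major).

    Assumed non-linear mapping: the magnitude is clipped to max_mag and
    square-root compressed, which spreads small magnitudes over more cells.
    """
    x, y = vec
    mag = math.hypot(x, y)
    if mag > 0.0:
        scale = math.sqrt(min(mag, max_mag) / max_mag) / mag
        x, y = x * scale, y * scale
    half = (grid - 1) / 2.0
    cx, cy = half + x * half, half + y * half  # bump centre in grid units
    return [math.exp(-((col - cx) ** 2 + (row - cy) ** 2) / (2.0 * sigma ** 2))
            for row in range(grid) for col in range(grid)]


def encode_sample(object_id, hand_vel, obj_vel, rel_pos, hand_open,
                  noise_std=0.05, rng=None):
    """Build one noisy input sample of size 10 + 9 + 9 + 25 + 2 = 55."""
    if len(object_id) != ID_SIZE:
        raise ValueError("object_id must have length 10")
    rng = rng or random.Random()
    clean = (list(object_id)
             + gaussian_grid(hand_vel, VEL_GRID)
             + gaussian_grid(obj_vel, VEL_GRID)
             + gaussian_grid(rel_pos, POS_GRID)
             + [hand_open, 1.0 - hand_open])  # assumed hand-state encoding
    # White noise added to every component of the sparse input.
    return [value + rng.gauss(0.0, noise_std) for value in clean]
```

For instance, calling `encode_sample` with a one-hot identifier (itself an assumption about what "sparse" means here), a small hand velocity and a zero object velocity returns a list of 55 values, matching the total input size stated above.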

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Van-Horenbeke, F.A., Peer, A. NILRNN: A Neocortex-Inspired Locally Recurrent Neural Network for Unsupervised Feature Learning in Sequential Data. Cogn Comput 15, 1549–1565 (2023). https://doi.org/10.1007/s12559-023-10122-x

DOI: https://doi.org/10.1007/s12559-023-10122-x