Abstract

Integrating cognitive radio (CR) techniques with wireless networks is an effective way to relieve increasingly crowded spectrum. Automatic modulation classification (AMC) plays an important role in CR. By classifying the modulation type and signal parameters of received communication signals, AMC significantly improves the intelligence of a CR system and provides more information for its decision-making. In addition, AMC can help a CR system dynamically adjust the modulation type and coding rate of the communication signal to adapt to different channel qualities, and it eliminates the cost of broadcasting the modulation type and coding rate. Deep learning (DL) has recently emerged as one of the most popular methods for AMC of communication signals. Despite their success, DL models have recently been shown to be vulnerable to adversarial attacks in pattern recognition and computer vision. Namely, they can be easily deceived when a small, carefully designed perturbation, called an adversarial perturbation, is imposed on the input, typically an image in pattern recognition. Because communication signals are very different in nature, it is interesting yet crucially important to study whether adversarial perturbations can also fool AMC. In this paper, we make a first attempt to design a dedicated adversarial attack on AMC. We start from a linear binary classifier and then extend the analysis to multi-class classifiers. We consider the minimum perturbation power, a criterion that differs from existing adversarial perturbations but is more reasonable in the context of AMC. We then develop a novel adversarial perturbation generation method that achieves a high attack success rate against communication signals. Experimental results on a public dataset show that the method successfully spoofs an 11-class modulation classification model at a minimum cost of about −21 dB perturbation-to-signal ratio.
The visualization results demonstrate that the adversarial perturbation manifests in the time domain as imperceptible undulations of the signal, and in the frequency domain as small noise outside the signal band.



Introduction

While the rapid development of wireless communication technology brings convenience to people, it also places growing strain on spectrum resources [1]. To relieve this constraint, researchers have proposed the cognitive radio (CR) [2, 3] technique. CR can sense spectrum availability in real time and allocate spectrum resources dynamically. Automatic modulation classification (AMC) [4, 5] plays an important role in CR: it identifies the modulation type and communication parameters of a received signal and provides more information for the decision-making of the CR system. Deep learning (DL) techniques have been widely used in AMC because of their capability to learn high-level, even cognitively reasonable, representations [6,7,8,9,10,11,12]. However, DL models are usually hard to explain and are criticized for their non-transparent nature [13]. In other words, despite their effectiveness, one hardly knows what a DL model has actually learned and why it succeeds, which hinders the use of DL in risk-sensitive and security-critical applications. More seriously, recent studies have found that DL is susceptible to carefully designed adversarial examples: input samples to which small, imperceptible perturbations have been added. Szegedy et al. [14] first found that adding imperceptible adversarial perturbations to input samples can easily fool well-performing Convolutional Neural Network (CNN) models. The effectiveness of adversarial attacks has also been verified in the modulation classification scenario [15]. The Fast Gradient Sign Method (FGSM) [16, 17] is a simple, fast, and effective method for generating adversarial examples. Papernot et al. [18] proposed a method based on the forward derivative to produce adversarial perturbations, called the Jacobian-based saliency map attack (JSMA).
Carlini and Wagner [19] proposed a method of generating adversarial perturbations and explored three different distance metrics (\(L_{0}\), \(L_{2}\), and \(L_{\infty }\)).

Research on adversarial attacks against communication signals, however, is still in its infancy. Communication signals are usually represented as waveforms rather than as image pixels. Because of this very different nature, it is interesting yet crucially important to study whether adversarial perturbations can also fool AMC.

To study how adversarial attacks affect DL-based AMC and to give researchers a way to evaluate its reliability, this paper proposes an algorithm that generates minimum-power adversarial perturbations. The method seeks the theoretically minimal perturbation that substantially reduces the accuracy of the neural network's modulation classification while remaining well concealed and destructive.

The main contributions of this article are as follows:

- We propose a minimum-power adversarial attack, which is better suited to the modulation classification scenario, and validate the feasibility of adversarial attacks on modulated signals.

- We conduct extensive evaluations on the open-source simulation dataset RML 2016 with 220,000 samples. The results verify the high effectiveness of the proposed attack: it causes the classifiers to misclassify with smaller perturbations than the compared methods, and perturbations about two orders of magnitude weaker in power than the original signals suffice for the classifier to misclassify all test samples.

Methodology

For communication systems, the received baseband signal can be expressed as

\[ y(t) = x(t;{\mathbf{u}}_{k}) + n(t), \]

where \(n(t)\) is additive white Gaussian noise (AWGN) with zero mean and unit variance. The signal \(x(t;{\mathbf{u}}_{k})\) is modeled in a general form as

where \({\mathbf{u}}_{k}\) is a multidimensional parameter set consisting of several unknown signal and channel variables, given as

The symbols used in (2) and (3) are listed as follows:

1. \(A\), the signal amplitude.
2. \(\theta_{c}\), the phase shift jointly introduced by the propagation delay and the initial phase.
3. \(N\), the number of received symbols.
4. \(x^{k,i}\), the \(i\)-th constellation point under the \(k\)-th modulation scheme, \(k \in \{1, \cdots, C\}\), where \(C\) is the number of candidate modulation schemes to be identified.
5. \(T_{s}\), the symbol interval.
6. \(g(t) = h(t) * p(t)\), where \(*\) is the convolution operator, describing the combined effect of the channel \(h(t)\) and the pulse-shaping filter \(p(t)\).
7. \(\varepsilon\), the normalized timing offset between the transmitter and the receiver, \(0 \le \varepsilon \le 1\).
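Written out with the symbols listed above, one standard form of the signal model and its parameter set is given below. It is assumed here from the common likelihood-based AMC formulation rather than quoted from the original equations, so the exact expressions used in this work may differ.

```latex
\begin{aligned}
x(t;\mathbf{u}_k) &= A\, e^{j\theta_c} \sum_{i=0}^{N-1} x^{k,i}\, g\!\left(t - iT_s - \varepsilon T_s\right),\\
\mathbf{u}_k &= \left\{ A,\ \theta_c,\ N,\ \{x^{k,i}\}_{i=0}^{N-1},\ T_s,\ g(t),\ \varepsilon \right\}.
\end{aligned}
```

In this form, \(x^{k,i}\) is read as the symbol sent in the \(i\)-th interval, drawn from the constellation of the \(k\)-th scheme.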

We assume that the values of the above parameter set determine the modulation scheme to which the signal belongs. In practice, the received signal in (1) is discrete, and the sequence received at symbol interval \(T_{s}\) can be written as \({\mathbf{y}} = [y_{0}, \cdots, y_{N - 1}]^{T}\). The conditional probability density function (PDF) of \(y_{n}\) under hypothesis \(H_{k}\) can be written as

The elements of the parameter set \({\mathbf{u}}_{k}\) are deterministic or random variables with known PDFs. Hence, the marginalized density function of an observation sequence can be obtained by statistically averaging (4) over \({\mathbf{u}}_{k}\), which gives

where \(E\left(\cdot \right)\) is the expectation operator.

We assume that the channel environment is stationary and that the parameters in \({\mathbf{u}}_{k}\) are static over the whole observation period. In addition, the normalized timing offset is \(\varepsilon = 0\) and the noise is white, so the elements of \({\mathbf{y}}\) are i.i.d. With \(p(y_{n}|H_{k},{\mathbf{u}}_{k})\) denoting the PDF of a single observation at instant \(n\), the joint likelihood function of the whole observation sequence can be described as

According to the maximum likelihood criterion, the hypothesis \(H_{k}\) (corresponding to the \(k\)-th modulation scheme) with the largest likelihood value is finally chosen:

where \(\widehat{(\cdot)}\) denotes an estimate.
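The likelihood test above can be made concrete for a simple case. The sketch below assumes perfect timing (\(\varepsilon = 0\)), known amplitude and phase, equiprobable symbols, and complex AWGN of variance `noise_var`, so that each per-symbol likelihood is an average over constellation points, \(p(y_n|H_k) = \frac{1}{M_k}\sum_{m}\frac{1}{\pi\sigma^2}\exp(-|y_n - A e^{j\theta_c} s_m|^2/\sigma^2)\), and the i.i.d. assumption turns the joint likelihood into a sum of logs. The function names and the three candidate constellations are illustrative choices, not part of the original method.

```python
import numpy as np


def log_likelihood(y, constellation, amp=1.0, phase=0.0, noise_var=1.0):
    """log p(y | H_k): i.i.d. symbols, each averaged over equiprobable points."""
    points = amp * np.exp(1j * phase) * np.asarray(constellation)   # (M,)
    # Squared distance from every observation to every constellation point: (N, M)
    d2 = np.abs(np.asarray(y)[:, None] - points[None, :]) ** 2
    # Complex Gaussian log-density for each (observation, point) pair
    log_p = -d2 / noise_var - np.log(np.pi * noise_var)
    # Log of the average over the M points, computed with log-sum-exp for stability
    peak = log_p.max(axis=1, keepdims=True)
    log_avg = peak[:, 0] + np.log(np.mean(np.exp(log_p - peak), axis=1))
    # Independence across n makes the joint log-likelihood a sum
    return float(log_avg.sum())


def ml_classify(y, candidates, **kwargs):
    """Return the hypothesis with the largest likelihood, plus all scores."""
    scores = {name: log_likelihood(y, pts, **kwargs) for name, pts in candidates.items()}
    return max(scores, key=scores.get), scores


CANDIDATES = {
    "BPSK": np.array([1.0, -1.0], dtype=complex),
    "QPSK": np.exp(1j * (np.pi / 4 + np.pi / 2 * np.arange(4))),
    "8PSK": np.exp(1j * np.pi / 4 * np.arange(8)),
}

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    noise_var = 0.05
    symbols = rng.choice(CANDIDATES["QPSK"], size=200)
    noise = np.sqrt(noise_var / 2) * (rng.standard_normal(200) + 1j * rng.standard_normal(200))
    label, _ = ml_classify(symbols + noise, CANDIDATES, noise_var=noise_var)
    print(label)
```

Every quantity in the likelihood has to be known or averaged out, which is exactly what becomes hard in complex environments and motivates the learned classifiers discussed next.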

The maximum likelihood (ML) method is known to be optimal: when all hypotheses are equally likely, it minimizes the probability of misclassification. However, the exact likelihoods are hard to obtain in complex environments. Deep learning-based methods avoid this problem by learning complex functions automatically. While deep learning removes the need for complex modeling, its robustness has been questioned.

For advanced neural network classifiers, a minimal adversarial perturbation \(r\) can be added to an input sample such that the classifier no longer assigns the sample its predicted label \(\widehat{k}(x)\). The formal description is as follows:

\[ \Delta(x;\widehat{k}) = \min_{r} \|r\|_{2} \quad \text{subject to} \quad \widehat{k}(x+r) \neq \widehat{k}(x) \]

where \(x\) is the input data and \(\widehat{k}(x)\) is the label predicted by the classifier. \(\Delta(x;\widehat{k})\) can be referred to as the robustness of the classifier at the input point \(x\): any perturbation whose norm is smaller than \(\Delta(x;\widehat{k})\) leaves the decision unchanged, so this minimum adversarial perturbation measures the largest perturbation the classifier can withstand in every direction.

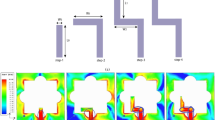

As shown in Fig. 1, to find the optimal solution, we first reduce the classifier to a simple binary classifier. Assume \(\widehat{k}(x)=\operatorname{sign}(f(x))\), where \(f:{\mathbb{R}}^{n}\to {\mathbb{R}}\) is an arbitrary decision function.

Let \(F=\{x:f(x)=0\}\) be the zero-valued hyperplane (decision boundary) of the function \(f\). When \(f(x)={\omega}^{T}x+b\), it is easy to see that the robustness \(\Delta({x}_{0};\widehat{k})\) of the classifier at \({x}_{0}\) equals the distance from the point \({x}_{0}\) to the hyperplane \(F\). The minimal perturbation that makes the classifier change its decision is the orthogonal projection of \({x}_{0}\) onto the hyperplane. The mathematical expression is:

\[ r_{*}(x_{0}) = \arg\min_{r} \|r\|_{2} \ \ \text{s.t.}\ \ \operatorname{sign}(f(x_{0}+r)) \neq \operatorname{sign}(f(x_{0})) = -\frac{f(x_{0})}{\|\omega\|_{2}^{2}}\,\omega \]

For a linear decision function, this perturbation lies along the gradient \(\omega\) of the decision function (pointing toward the boundary), and the scalar \(f(x_{0})/\|\omega\|_{2}^{2}\) corresponds to the optimal perturbation coefficient \(\varepsilon\). Its norm \(\|r_{*}(x_{0})\|_{2} = |f(x_{0})|/\|\omega\|_{2}\) is the distance from point \(x_{0}\) to the hyperplane.
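The closed form \(r_{*}(x_0) = -f(x_0)\,\omega/\|\omega\|_2^2\) is easy to check numerically. The following is a minimal NumPy sketch for an affine decision function \(f(x)=\omega^{T}x+b\); the helper name and the toy values of `w`, `b`, and `x0` are hypothetical.

```python
import numpy as np


def linear_min_perturbation(x0, w, b):
    """Minimal L2 perturbation moving x0 onto the hyperplane w^T x + b = 0."""
    x0, w = np.asarray(x0, dtype=float), np.asarray(w, dtype=float)
    f0 = float(w @ x0 + b)
    # Orthogonal projection: a step of length |f0| / ||w|| along -sign(f0) * w
    return -f0 / float(w @ w) * w


w, b = np.array([3.0, 4.0]), -5.0
x0 = np.array([3.0, 4.0])               # f(x0) = 20
r = linear_min_perturbation(x0, w, b)   # approx. [-2.4, -3.2]
print(r, np.linalg.norm(r))             # norm = |f(x0)| / ||w|| = 20 / 5 = 4
```

Because \(x_0 + r_*\) lands exactly on the boundary, a practical attack scales the step by a factor slightly above 1 so that the sample actually crosses it.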

For a nonlinear decision function \(f\), as shown in Fig. 2, we first consider the case where the dimension of the input space is \(n = 1\), and then use an iterative approach to approximate the optimal solution. In the first iteration, with input \(x_{0}\), the green line is the first-order Taylor expansion (tangent), i.e., \(f(x_{0}) + \nabla f(x_{0})^{T}(x - x_{0})\). The intersection of this tangent with the input space \({\mathbb{R}}^{1}\) is \(x_{1}\), and the distance \(r_{1}\) between \(x_{0}\) and \(x_{1}\) is the perturbation of the first iteration. The iteration stops when the input sample lies on the other side of the decision boundary. The minimum perturbation obtained at this point is

Accordingly, in the \(i\)-th iteration, the minimum perturbation can be obtained as:
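A minimal sketch of this iteration for a binary classifier, assuming the decision function `f` and its gradient `grad_f` are available as Python callables. Each step moves to the zero crossing of the local linearization, \(r_i = -f(x_i)\,\nabla f(x_i)/\|\nabla f(x_i)\|_2^2\), and the steps are accumulated. The small `overshoot` factor is an assumption borrowed from the DeepFool algorithm, which this procedure closely resembles; it pushes the sample just across the boundary. The circular toy boundary is hypothetical.

```python
import numpy as np


def iterative_binary_attack(f, grad_f, x0, overshoot=0.02, max_iter=50):
    """Accumulate linearized steps until sign(f) flips; return (perturbation, x_adv)."""
    x0 = np.asarray(x0, dtype=float)
    sign0 = np.sign(f(x0))
    r_total = np.zeros_like(x0)
    x = x0.copy()
    for _ in range(max_iter):
        fx = f(x)
        if np.sign(fx) != sign0:                 # crossed the decision boundary
            break
        g = np.asarray(grad_f(x), dtype=float)
        r_total += -fx / float(g @ g) * g        # step to the tangent's zero crossing
        x = x0 + (1 + overshoot) * r_total       # slightly overshoot the boundary
    return (1 + overshoot) * r_total, x


# Toy nonlinear boundary: the unit circle f(x) = ||x||^2 - 1, starting outside it.
f = lambda x: float(x @ x - 1.0)
grad_f = lambda x: 2.0 * x
r, x_adv = iterative_binary_attack(f, grad_f, [2.0, 0.0])
print(np.linalg.norm(r), f(x_adv))   # norm close to 1 (the true distance), f(x_adv) < 0
```

Starting at \((2, 0)\), the first tangent step overshoots only partway and three steps are needed, illustrating why a single linearization is not enough for a curved boundary.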

The above optimization covers the binary case, and it can be extended to multi-class classifiers using a one-vs-rest scheme. Let the number of categories be \(c\), so that the classification function is \(f:{\mathbb{R}}^{n}\to {\mathbb{R}}^{c}\). The classification task can be expressed as:

\[ \widehat{k}(x) = \arg\max_{k} f_{k}(x) \]

As in the binary case, the method is first derived for linear classifiers and then generalized to arbitrary classifiers. The problem then becomes one of finding the optimal perturbation in a one-vs-rest setting: the minimum distance from the input to the decision boundaries separating the predicted class from each of the other classes is the minimum adversarial perturbation. The formal description is as follows:
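The one-vs-rest extension can be sketched the same way. At each step, the sketch linearizes \(f_k - f_{\widehat{k}(x_0)}\) for every other class \(k\), picks the class whose linearized boundary is closest, and steps onto that boundary. This follows the DeepFool multiclass procedure; the callable interface `logits_and_jac` and the toy linear classifier are assumptions for illustration. For a CNN, the Jacobian rows would come from automatic differentiation.

```python
import numpy as np


def multiclass_min_perturbation(logits_and_jac, x0, overshoot=0.02, max_iter=50):
    """One-vs-rest iterative attack.

    logits_and_jac(x) must return (f, J): f has shape (c,), and row J[k] of the
    (c, n) matrix J is the gradient of f_k at x.
    """
    x0 = np.asarray(x0, dtype=float)
    k0 = int(np.argmax(logits_and_jac(x0)[0]))    # originally predicted class
    r_total = np.zeros_like(x0)
    x = x0.copy()
    for _ in range(max_iter):
        f, J = logits_and_jac(x)
        if int(np.argmax(f)) != k0:               # label changed: done
            break
        best_dist, best_step = np.inf, None
        for k in range(len(f)):
            if k == k0:
                continue
            w_k = J[k] - J[k0]                    # gradient of f_k - f_k0
            gap = abs(f[k] - f[k0])               # how far class k is behind k0
            dist = gap / (np.linalg.norm(w_k) + 1e-12)   # linearized distance to boundary
            if dist < best_dist:
                best_dist = dist
                best_step = (gap + 1e-6) / (w_k @ w_k + 1e-12) * w_k
        r_total += best_step
        x = x0 + (1 + overshoot) * r_total
    return (1 + overshoot) * r_total, x, k0


# Toy linear 3-class classifier f(x) = W x (rows are class weight vectors).
W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
r, x_adv, k0 = multiclass_min_perturbation(lambda x: (W @ x, W), [2.0, 1.0])
print(k0, int(np.argmax(W @ x_adv)), np.linalg.norm(r))   # 0 -> 1, norm near 1/sqrt(2)
```

For a linear classifier one step suffices, since the linearization is exact; the loop only matters when \(f\) is nonlinear.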

Implementation

In this study, all experiments were performed on an NVIDIA GeForce RTX 3090, using one GPU per run. All four attack methods are implemented with PyTorch 1.10 and CUDA 11.3. We use Adam to optimize the target model, the ReLU activation function is used in all layers, and the categorical cross-entropy loss function is used in the experiments. We adopt an early stopping strategy to decide when to stop training.

To test the proposed algorithm, we conducted experiments on the open-source simulation dataset RML 2016.10A released by DeepSig [20], which includes 8 digital modulation types (BPSK, QPSK, 8PSK, 16QAM, 64QAM, GFSK, CPFSK, and PAM4) and 3 analog modulation types (WB-FM, AM-SSB, and AM-DSB). The signal-to-noise ratio (SNR) ranges from −20 to 18 dB. The dataset contains 220,000 samples; each sample consists of in-phase (I) and quadrature (Q) components, each 128 points long. All data were normalized to zero mean and unit variance. The time domain waveforms of the 11 modulation types at high SNR are shown in Fig. 3. The training, validation, and test sets are split in the ratio 7:2:1.
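The preprocessing described above can be sketched as follows. The text does not say whether normalization is applied per sample or over the whole dataset, so the per-sample choice here is an assumption, as are the function name and the random seed. Loading the dataset file itself is omitted, and `X` is assumed to already have shape (num_samples, 2, 128).

```python
import numpy as np


def normalize_and_split(X, y, ratios=(0.7, 0.2, 0.1), seed=0):
    """Per-sample zero-mean/unit-variance normalization, then a random 7:2:1 split.

    X: array of shape (num_samples, 2, 128) holding the I and Q rows of each sample.
    """
    X = np.asarray(X, dtype=np.float64)
    y = np.asarray(y)
    mean = X.mean(axis=(1, 2), keepdims=True)
    std = X.std(axis=(1, 2), keepdims=True) + 1e-12   # guard against all-zero samples
    Xn = (X - mean) / std
    idx = np.random.default_rng(seed).permutation(len(X))
    n_train = int(round(ratios[0] * len(X)))
    n_val = int(round(ratios[1] * len(X)))
    splits = np.split(idx, [n_train, n_train + n_val])   # train / validation / test
    return [(Xn[s], y[s]) for s in splits]


X = np.random.default_rng(1).normal(3.0, 2.0, size=(1000, 2, 128))
y = np.arange(1000) % 11
train, val, test_set = normalize_and_split(X, y)
print(len(train[0]), len(val[0]), len(test_set[0]))   # 700 200 100
```

Shuffling before splitting keeps every modulation type and SNR represented in all three subsets with high probability; a stratified split per (modulation, SNR) pair would guarantee it.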

Experiments and Results

To match the CNN model to the modulation dataset, we adopt the VT_CNN2 model [21], which was optimized for the RML 2016.10A dataset. That is, the number of layers, the network parameters, and the initial weights of the CNN are adapted to the dataset, and the input signal at each SNR is reshaped into a 2 × 128 format, with the input dimensions (length, width, and height) adjusted accordingly. The specific structure is shown in Table 1. We also test our algorithm on widely used DL models such as VGG11 [22] and Resnet18 [23, 24].

Time–Frequency Characteristics of Communication Signal Adversarial Examples

The purpose of adversarial examples is to make the classifier misjudge while altering the original examples as little as possible. It is therefore necessary to compare the adversarial examples with the original examples in both the time and frequency domains. As shown in Fig. 4a, there is no obvious difference between the adversarial examples and the original signals; there are only slight slope changes at some time-domain inflection points of the signal. As shown in Fig. 4b, there is also no significant difference between the main frequency bands of the adversarial examples and those of the original signals, and the adversarial signals have the same number of spectral peaks as the original signal. This indicates that the added adversarial perturbation is very subtle.

Fig. 4 Time domain waveform and spectrum of 64QAM modulated signals. a The time domain waveform of the 64QAM modulated signal. The blue curve is the original 64QAM modulated signal, and the red curve is the adversarial example. The vertical coordinates of the adversarial example are artificially adjusted to show the differences. b The spectrum of the modulated signal. The blue curve is the original 64QAM modulated signal, and the red curve is the adversarial example.
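The frequency-domain view compared in Fig. 4 can be reproduced for any pair of signals with a short helper. This sketch assumes each sample is stored as a (2, L) array of I and Q rows, forms the complex baseband sequence \(I + jQ\), and returns a centered FFT magnitude in dB; the helper name and interface are illustrative, not taken from the paper.

```python
import numpy as np


def iq_spectrum_db(iq):
    """Centered magnitude spectrum (dB) of a (2, L) I/Q sample."""
    iq = np.asarray(iq, dtype=float)
    z = iq[0] + 1j * iq[1]                       # complex baseband sequence I + jQ
    spectrum = np.fft.fftshift(np.fft.fft(z))    # move zero frequency to the center
    return 20.0 * np.log10(np.abs(spectrum) + 1e-12)


# A pure complex tone at +8 cycles per 128 samples peaks at index 64 + 8 after fftshift.
n = np.arange(128)
tone = np.stack([np.cos(2 * np.pi * 8 * n / 128), np.sin(2 * np.pi * 8 * n / 128)])
print(int(np.argmax(iq_spectrum_db(tone))))     # 72
```

Comparing `iq_spectrum_db(adv)` with `iq_spectrum_db(orig)`, or inspecting `iq_spectrum_db(adv - orig)` on its own, shows where in frequency the perturbation energy sits.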

Effectiveness of Adversarial Examples

The time–frequency analysis shows that the adversarial examples are difficult to distinguish from the original signals. On this basis, we next examine whether the adversarial examples actually cause the classifier to misclassify.

To evaluate the robustness of a classifier to adversarial perturbations, we compute the average perturbation-to-signal ratio (PSR), defined by

\[ \mathrm{PSR} = 10\log_{10}\frac{P_{r}}{P_{s}} \ \ (\mathrm{dB}) \]

where \(P_{r}\) is the power of adversarial perturbations and \(P_{s}\) is the power of signals. The PSR represents the magnitude of adversarial perturbations required to misclassify a classifier.
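Assuming the definition \(\mathrm{PSR} = 10\log_{10}(P_r/P_s)\), with powers taken as mean squared values over the I/Q samples, the metric can be computed as below. Whether the average PSR in the tables is averaged in dB or in linear power is not stated, so averaging in dB here is an assumption, and the function names are hypothetical.

```python
import numpy as np


def psr_db(perturbation, signal):
    """Perturbation-to-signal ratio 10*log10(P_r / P_s) in dB, using mean power."""
    p_r = np.mean(np.abs(perturbation) ** 2)
    p_s = np.mean(np.abs(signal) ** 2)
    return float(10.0 * np.log10(p_r / p_s))


def average_psr_db(perturbations, signals):
    """Mean of per-sample PSR values (averaged in dB)."""
    return float(np.mean([psr_db(r, s) for r, s in zip(perturbations, signals)]))


s = np.random.default_rng(0).standard_normal((2, 128))
print(psr_db(0.1 * s, s))   # approximately -20: 10x smaller amplitude is 100x smaller power
```

A more negative PSR means a weaker perturbation, so a lower PSR at which the attack still succeeds indicates a less robust classifier.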

We compare the proposed method with several attack techniques: FGSM [16], PGD [25], and a white Gaussian noise (WGN) attack. The accuracy and average PSR of each classifier under the different methods are reported in Table 2. Because the dataset contains low-SNR signals (−20 to −2 dB) that are difficult for the classifiers to classify, Table 2 reports results only for high-SNR signals (0 to 18 dB). We define an attack as successful when all examples are misclassified. Notably, FGSM and WGN cannot cause all examples to be misclassified; they only reduce the accuracy to about 9%, close to the 1/11 chance level. Our proposed method finds smaller perturbations than the competing methods: the perturbations it estimates are roughly two orders of magnitude weaker in power than the original signal.
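For the white Gaussian noise baseline, the noise has to be scaled to a prescribed PSR before it is added. The sketch below does this for real-valued I/Q arrays; the function name and the use of NumPy's default random generator are assumptions, since the paper does not describe how its WGN attack was implemented.

```python
import numpy as np


def wgn_at_psr(signal, target_psr_db, rng=None):
    """Return (signal + noise, noise) with the noise power set target_psr_db relative to the signal."""
    rng = np.random.default_rng() if rng is None else rng
    signal = np.asarray(signal, dtype=float)
    noise = rng.standard_normal(signal.shape)
    # Scale so that mean(noise**2) / mean(signal**2) == 10**(target_psr_db / 10)
    scale = np.sqrt(np.mean(signal ** 2) / np.mean(noise ** 2) * 10 ** (target_psr_db / 10))
    noise = scale * noise
    return signal + noise, noise


rng = np.random.default_rng(0)
s = rng.standard_normal((2, 128))
_, n = wgn_at_psr(s, -21.0, rng)
print(10 * np.log10(np.mean(n ** 2) / np.mean(s ** 2)))   # approximately -21.0
```

Scaling by the empirical noise power (rather than the nominal unit variance) makes the realized PSR match the target exactly for each sample, which keeps the comparison with the other attacks fair at equal PSR.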

For each modulated signal, the corresponding adversarial example is computed and fed to the already trained neural network. The resulting confusion matrices are shown in Fig. 5. Figure 5a shows the confusion matrix of the classifier VT_CNN2 on the original signals; most of the signals are classified correctly. Figure 5b shows the confusion matrix for signals attacked by the proposed method. This matrix is approximately symmetric, indicating that certain classes are easily confused with each other, such as {AM-DSB, AM-SSB}, {QAM16, QAM64}, {8PSK, QPSK}, and {BPSK, PAM4}. This observation can guide subsequent research into the weaknesses of the classifier.
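The symmetry observation can be quantified directly from true and predicted labels. This minimal NumPy sketch builds the confusion matrix, ranks class pairs by how often they are mixed up in either direction, and reports a relative asymmetry score (0 for a perfectly symmetric matrix). The score and the helper names are hypothetical, introduced for illustration rather than taken from the paper.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """cm[i, j] counts samples of true class i predicted as class j."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def most_confused_pairs(cm, top=4):
    """Class pairs (i, j), i < j, ranked by confusions in both directions."""
    both = cm + cm.T
    i, j = np.triu_indices_from(both, k=1)       # off-diagonal pairs, each listed once
    order = np.argsort(both[i, j])[::-1][:top]
    return [(int(i[o]), int(j[o]), int(both[i[o], j[o]])) for o in order]


def asymmetry(cm):
    """||cm - cm^T|| / ||cm||: 0 for a perfectly symmetric matrix."""
    return float(np.linalg.norm(cm - cm.T) / (np.linalg.norm(cm) + 1e-12))


cm = confusion_matrix([0, 0, 1, 1, 2, 2], [1, 1, 0, 0, 2, 2], num_classes=3)
print(most_confused_pairs(cm, top=1), asymmetry(cm))   # [(0, 1, 4)] 0.0
```

Applied to the attacked predictions, the top-ranked pairs would correspond to groups such as {AM-DSB, AM-SSB} above.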

Power of Adversarial Perturbation Required for Successful Attack

The core idea of the proposed adversarial example generation technique is to find the minimum adversarial perturbation that causes the classifier to misclassify. This section reports the minimum perturbation power required for each modulation type.

As can be seen from Table 3, the maximum PSR is −20.05 dB and the minimum is −43.10 dB. In the best case, a PSR of −43.10 dB suffices to spoof the model's classification of AM-DSB signals; in the worst case, a PSR of −20.05 dB is needed to spoof its classification of CPFSK signals.
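Converting the two extremes of Table 3 from decibels to linear ratios (power ratio \(10^{\mathrm{PSR}/10}\), amplitude ratio \(10^{\mathrm{PSR}/20}\)) makes the scale concrete:

```latex
\begin{aligned}
\text{CPFSK: } & 10^{-20.05/10} \approx 9.9\times 10^{-3}, && 10^{-20.05/20} \approx 0.099,\\
\text{AM-DSB: } & 10^{-43.10/10} \approx 4.9\times 10^{-5}, && 10^{-43.10/20} \approx 7.0\times 10^{-3}.
\end{aligned}
```

Even the hardest class therefore needs a perturbation about 100 times weaker in power (roughly 10 times smaller in amplitude) than the signal.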

These experiments show that DNN-based modulation classification is quite vulnerable to adversarial attacks. We believe that security could become a major concern in AMC.

Defense of Adversarial Attack

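To counter these attacks, we use the adversarial training approach [16] to build more robust classifiers for the dataset. The loss function of adversarial training is formulated as:

\[ \tilde{L}(\theta,{\mathbf{x}},y) = \alpha\,L(\theta,{\mathbf{x}},y) + (1-\alpha)\,L\big(\theta,\ {\mathbf{x}} + \varepsilon\,\operatorname{sign}(\nabla_{\mathbf{x}} L(\theta,{\mathbf{x}},y)),\ y\big) \]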

where \(L(\theta,{\mathbf{x}},y)\) is the loss function of the original model and \(\alpha = 0.5\). \(\varepsilon\) is the step size used to generate adversarial examples, and we use PSR to measure its strength. The evolution of PSR for the different robust classifiers is shown in Table 4. Retraining with adversarial examples significantly increases the robustness of the classifiers to adversarial perturbations. For example, the robustness of Resnet18 improves by 4.38 dB and that of VGG11 by about 3.25 dB. Moreover, adversarial training also improves the robustness of the classifiers against the other adversarial attacks and the WGN attack. Quite surprisingly, the accuracy of VGG11 and Resnet18 on the original signals increases to 75% after adversarial training, whereas that of VT_CNN2 decreases to 36%. We attribute this to the limited capacity of the relatively shallow VT_CNN2: adversarial training increases the complexity of the dataset, so a simple classifier loses accuracy.
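The adversarial-training objective \(\alpha L(\theta,\mathbf{x},y) + (1-\alpha)L(\theta, \mathbf{x} + \varepsilon\,\mathrm{sign}(\nabla_{\mathbf{x}}L), y)\) can be illustrated on a model simple enough to differentiate by hand. The sketch below is a stand-in, not the paper's CNN training code: it assumes a linear logistic-regression classifier with labels in {−1, +1}, generates FGSM examples with a hand-written input gradient, and mixes clean and adversarial losses with \(\alpha = 0.5\). All names and toy values are hypothetical. In real training, this objective would be minimized with respect to the model parameters at every step.

```python
import numpy as np


def logistic_loss(w, b, x, y):
    """Mean loss log(1 + exp(-y (w^T x + b))) for labels y in {-1, +1}."""
    margin = y * (x @ w + b)
    return float(np.mean(np.logaddexp(0.0, -margin)))


def fgsm(w, b, x, y, eps):
    """x + eps * sign(grad_x L), with the per-sample gradient written out by hand."""
    margin = y * (x @ w + b)
    # d/dx log(1 + exp(-m)) = -y * sigmoid(-m) * w, where sigmoid(-m) = 1 / (1 + exp(m))
    grad_x = (-y / (1.0 + np.exp(margin)))[:, None] * w[None, :]
    return x + eps * np.sign(grad_x)


def adversarial_training_loss(w, b, x, y, eps, alpha=0.5):
    """alpha * L(x) + (1 - alpha) * L(x_adv), with x_adv generated by FGSM."""
    x_adv = fgsm(w, b, x, y, eps)
    return alpha * logistic_loss(w, b, x, y) + (1 - alpha) * logistic_loss(w, b, x_adv, y)


rng = np.random.default_rng(0)
x = rng.standard_normal((50, 4))
w, b = rng.standard_normal(4), 0.1
y = np.where(x @ w + b > 0, 1.0, -1.0)
print(logistic_loss(w, b, x, y), adversarial_training_loss(w, b, x, y, eps=0.1))
```

For this linear model the FGSM step lowers every margin by \(\varepsilon\|w\|_1\), so the mixed loss is always larger than the clean loss; minimizing it therefore pushes the model toward larger margins.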

Conclusions

In this paper, we propose a minimum-power adversarial example generation method for AMC tasks. We test our method on three classifiers: VT_CNN2, VGG11, and Resnet18. The results show that CNN-based AMC methods are very vulnerable to the proposed adversarial attack: adversarial perturbations about 100 times weaker than the raw signals are enough to make the classifiers misclassify every sample. We visualize the samples before and after adding adversarial perturbations in terms of both time domain waveform and spectrum, and the results show that the adversarial perturbation is imperceptible in both the time and frequency domains of the signal. We also present the confusion matrix and the minimum PSR required to attack each modulation, which helps reveal the vulnerabilities of classifiers for the AMC task.

Furthermore, to counter these attacks, we adopt adversarial training to retrain our classifiers. The results indicate that adversarial training can indeed improve the robustness of classifiers: the robustness of VT_CNN2, VGG11, and Resnet18 against our attack improves by 7.06 dB, 3.25 dB, and 4.38 dB, respectively.

As a next step, we will construct real-world RF communication signal environments for attack and defense and verify the effectiveness of adversarial attacks in a variety of complex electromagnetic environments.

Data Availability

The dataset RML 2016.10A [20] that supports the findings of this study is freely available at https://www.deepsig.io/datasets.

References

Jin X, Sun J, Zhang R, Zhang Y, Zhang C. Deep learning for an effective non-orthogonal multiple access scheme. IEEE Trans Mobile Comput. 2018;17(12):2925–38.

Khan AA, Rehmani MH, Reisslein M. Cognitive radio for smart grids: survey of architectures, spectrum sensing mechanisms, and networking protocols. IEEE Commun Surv Tut. 2015;18(1):860–98.

Ul Hassan M, Rehmani MH, Rehan M, et al. Differential privacy in cognitive radio networks: a comprehensive survey. Cogn Comput. 2022. https://doi.org/10.1007/s12559-021-09969-9.

Shi C, Dou Z, Lin Y, Li W. Dynamic threshold-setting for RF powered cognitive radio networks in non-Gaussian noise. Physical Communication. 2018;27(1):99–105.

Wang H, Li J, Guo L, Dou Z, Lin Y, Zhou R. Fractal complexity based feature extraction algorithm of communication signals. Fractals. 2017;25(4):1740008–20.

Zhang Z, Guo X, Lin Y. Trust management method of D2D communication based on RF fingerprint identification. IEEE Access. 2018;6:66082–7.

Wang Y, Liu M, Yang J, Gui G. Data-driven deep learning for automatic modulation recognition in cognitive radios. IEEE Trans Veh Technol. 2019;68(4):4074–7.

Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. In Proc IEEE Conf Comp Vision Pattern Recogn. 2015;1(1):3431–3440.

Meng F, Chen P, Wu L. Automatic modulation classification: a deep learning enabled approach. IEEE Trans Veh Technol. 2018.

Zhao Y, Wang X, Lin Z, et al. Multi-classifier fusion for open-set specific emitter identification. Remote Sens. 2022;14(9):2226. https://doi.org/10.3390/rs14092226.

Sun L, Wang X, Huang Z, Li B. Radio frequency fingerprint extraction based on feature inhomogeneity. IEEE Internet Things J. 2022. https://doi.org/10.1109/JIOT.2022.3154595.

Sun L, Wang X, Zhao Y, Huang Z, Du C. Intrinsic low-dimensional nonlinear manifold structure of radio frequency signals. IEEE Commun Lett. 2022. https://doi.org/10.1109/LCOMM.2022.3173990.

Huang K, Hussain A, Wang QF, et al. Deep learning: fundamentals, theory and applications. Springer, ISBN 978-3-030-06072-5. 2019.

Szegedy C, et al. Intriguing properties of neural networks. In Proc Int Conf Learn Repr. 2015;1–10.

Ke D, et al. Application of adversarial examples in communication modulation classification. 2019 International Conference on Data Mining Workshops (ICDMW). 2019.

Goodfellow IJ, Shlens J, Szegedy C. Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572. 2014.

Lyu C, Huang K, Liang HN. A unified gradient regularization family for adversarial examples. ICDM. 2015.

Papernot N, McDaniel P, Jha S, et al. The limitations of deep learning in adversarial settings. IEEE Eur Symp Secur Privacy (EuroS&P). 2016.

Carlini N, Wagner D. Towards evaluating the robustness of neural networks. IEEE Symp Secur Privacy (SP). 2017.

DeepSig. DeepSig dataset: RadioML 2016.10A. 2016. Available: https://www.deepsig.io/datasets.

Song L, Qian X, Li H, Chen Y. Pipelayer: a pipelined reram-based accelerator for deep learning. In Proc IEEE Int Symp High Perform Comput Arch. 2017;1(1):541–552.

Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. 2014. arXiv preprint arXiv:1409.1556.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In Proc IEEE Conf Comp Vis Pattern Recogn. 2016;770–778.

Zhang S, Huang K, Zhu J, Liu Y. Manifold adversarial training for supervised and semi-supervised learning. Neural Netw. 2021;140:282–93.

Madry A, et al. Towards deep learning models resistant to adversarial attacks. 2017. arXiv preprint arXiv:1706.06083.

Funding

This research is supported by the Program for Innovative Research Groups of the Hunan Provincial Natural Science Foundation of China (No. 2019JJ10004).


Ethics declarations

Ethics Approval

This article does not contain any experiments with human or animal participants performed by any of the authors.

Consent to Participate

Informed consent was obtained from all individual participants included in the study.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ke, D., Wang, X., Huang, K. et al. Minimum Power Adversarial Attacks in Communication Signal Modulation Classification with Deep Learning. Cogn Comput 15, 580–589 (2023). https://doi.org/10.1007/s12559-022-10062-y
