Abstract

Word embeddings are semantic representations of words. They are derived from large corpora and work well on many natural language tasks, with the downside of requiring a large amount of memory. In this paper, we propose binary word embedding models inspired by biological neuron coding mechanisms: the spike timing of neurons during specific time intervals is converted into binary codes, which reduces space and speeds up computation. We build three types of models to post-process the original dense word embeddings, namely, the homogeneous Poisson process-based rate coding model, the leaky integrate-and-fire neuron-based model, and the Izhikevich neuron-based model. We test our binary embedding models on word similarity and text classification tasks over five public datasets. The experimental results show that the brain-inspired binary word embeddings (which reduce space by approximately 68.75%) achieve results similar to the original embeddings on the word similarity task and outperform traditional binary embeddings on the text classification task.

Introduction

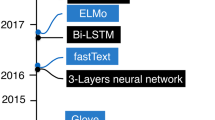

Word embedding models, for example, Word2Vec [1] and GloVe [2], can convert both semantic and syntactic information of words into dense vectors. They have recently attracted a lot of attention due to their good performance in various natural language processing tasks, such as language modeling [3], parsing [4], sentence classification [5], and machine translation [6].

However, these dense representations are mostly derived from the statistical properties of large corpora, and the individual dimensions of the word vectors lack interpretability. Several works have tried to transform dense word embeddings into sparse ones to improve interpretability. Murphy et al. introduced a matrix factorization algorithm named non-negative sparse embeddings (NNSE), applied to the co-occurrence matrix, to obtain sparse, effective, and interpretable embeddings [7]. Faruqui et al. defined an L1-regularized objective function and proposed a post-processing optimization algorithm to convert original dense embeddings into sparse or binary embeddings, which they call sparse or binary overcomplete word vectors [8]. Sun et al. introduced an algorithm that learns sparse embeddings while training the Word2Vec model, using an L1 regularizer on the cost function and the regularized dual averaging optimization algorithm [9]. For binary word embeddings, there are also rounding algorithms that convert dense vectors into discrete integer values to reduce memory. Ling et al. proposed post-processing rounding, stochastic rounding, and auxiliary update vector algorithms for word embeddings with limited memory, named truncated word embeddings [10]. The interpretability issue is mentioned in these works but not demonstrated clearly. In this paper, we aim to improve it via a brain-inspired approach, explaining each dimension of word embeddings based on neuron coding models.

In biological brains, information in areas such as the inferior temporal visual cortex, hippocampus, orbitofrontal cortex, and insula is encoded with sparse distributed representations [11]. Much experimental evidence indicates that biological neural systems use the timing of spikes to encode information [12,13,14]. The spike trains of cell activity during information transmission inspire us to combine traditional word embeddings and neuron coding models into binary embeddings. In this paper, we post-process original dense word embeddings to obtain binary ones, drawing inspiration from biological neuron coding models. The proposed binary embeddings occupy less space and are more interpretable than previous models.

Related Works

Neuron Coding

Neuron coding is concerned with describing the relationship between the stimulus and the neuronal responses [15]. A great many efforts have been dedicated to developing techniques to enable the recording of the brain’s electrical activity at different spatial scales, such as single cell spike train recording, local field potential (LFP), and electroencephalogram (EEG) [16]. Neuron coding models mainly concern how neurons encode, transmit, and decode information, and their main focus is to understand how neurons respond to a wide variety of stimuli, and to construct models that attempt to predict responses to other stimuli.

Neurons propagate signals by generating electrical pulses called action potentials: voltage spikes that can travel down nerve fibers. For example, sensory neurons change their activities by firing sequences of action potentials in various temporal patterns in the presence of external sensory stimuli, such as light, sound, taste, smell, and touch [16]. It is known that information about the stimulus is encoded in action potentials and transmitted through connected neurons in our brains.

There are various hypotheses on neuron coding based on recent neurophysiological findings on biological nervous systems, mainly spike rate coding and spike time coding. In spike rate coding, only the firing rate within an interval is taken as a measure of the information carried. Rate coding was first motivated by the observation of frog cutaneous receptors by Adrian et al. in 1926 that physiological neurons tend to fire more often for stronger stimuli [17]. Spike rate coding has been the main paradigm in artificial neural networks, for example in sigmoidal neurons. Meanwhile, Poisson-like rate coding is widely used by physiologists to describe how neurons transmit information. Recently, some neurophysiological results have shown that, in some biological neural systems, efficient processing of information is more likely based on the precise timing of action potentials than on firing rate [18,19,20]. Timing coding hypotheses [21] mostly concentrate on the timing of individual spikes; typical ones are time to first spike [22, 23], rank order coding [20, 24], latency coding [25], and phase coding [26].

In our study, we use Poisson-like coding for spike rate coding and various spiking neuron models for time coding. We try to apply these biological neuron coding hypotheses to build binary word embedding models.

Spiking Neural Network Models

Spiking neural networks (SNNs), which are strongly inspired by recent advances in neuroscience, are often referred to as the third generation of neural network models [27]. Unlike traditional neural networks, SNNs treat the timing of individual spikes as the means of communication and neural computation [21].

Spiking neuron models, which describe how certain cells in the nervous system generate spikes across their cell membrane, are the basis of SNNs. The best-known neuron model is the Hodgkin-Huxley model (H-H model). In 1952, Hodgkin and Huxley performed experiments on the giant axon of the squid with the voltage clamp technique, which punctures the cell membrane and makes it possible to force a specific membrane voltage or current [28]. The model was derived from these recordings and their fitting results, and it describes well the changes in ion channels and neuron behavior after stimulation.

In the H-H model [29], the semipermeable cell membrane separates the interior of the cell from the extracellular liquid and acts as a capacitor. Because of the active ion transportation through the cell membrane, the ion concentration inside the cell is different from that in the extracellular liquid. The Nernst potential generated by the difference in ion concentration is represented by a battery.

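The model takes three types of channels into consideration: a sodium channel, a potassium channel, and an unspecific leakage channel with resistance R. An applied current I(t) either charges the capacitor or leaks through the channels. From the definition of capacitance, C = Q/v, where Q is the charge and v is the voltage across the capacitor, the charging current is C dv/dt, thus:

\[ C\,\frac{dv}{dt} = -\sum_{k} I_{k}(t) + I(t) \tag{1} \]

where the sum runs over the ionic currents \(I_{k}\) through the three channels.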

The leakage channel is described by a voltage-independent conductance gL = 1/R. For the sodium channel and the potassium channel, if both of them are open, they transmit currents with a maximum conductance gNa or gK, respectively. However, the channels are not always open; the probability that a channel is open is described by additional variables m, n, and h. The combined action of m and h controls the Na+ channels while the K+ gates are controlled by n. Summing the three channel currents gives:

\[ \sum_{k} I_{k} = g_{Na}\, m^{3} h\, (v - E_{Na}) + g_{K}\, n^{4}\, (v - E_{K}) + g_{L}\, (v - E_{L}) \tag{2} \]

The parameters ENa, EK, and EL are empirical parameters and the gating variables m, n, and h are defined by differential equations [28].

In addition to the H-H model, other types of spiking neuron models have been proposed, such as integrate-and-fire models and their variants, the Izhikevich neuron model, and the spike response model (SRM). Recently, SNN-based models have been applied in various AI applications, such as character recognition [30, 31], object recognition [32], image segmentation [33], speech recognition [34], robotics [35], knowledge representation [36], and symbolic reasoning [37]. In this paper, we use the leaky integrate-and-fire model and the Izhikevich neuron model to convert word embeddings into more explainable binary embeddings.

Word Embedding Models Based on Inspirations from Biological Neuron Coding

The Framework

We build unsupervised post-processing models that produce binary word embeddings, based on two types of brain-inspired models: the homogeneous Poisson process and spiking neural networks. Starting from pre-trained word embeddings, such as Word2Vec and GloVe, these models convert the original dense embeddings into binary form. Unlike traditional work on binary word representations, our models are inspired by neuroscience, which makes them biologically plausible and more interpretable.

To mimic information transmission in biological brains, we take temporal information into consideration. As Fig. 1 shows, our models combine original dense word embeddings and neural coding algorithms to get the spiking times of neurons during a given period of time. We denote the original dense word embedding matrix as W with elements wid, where i = 1,2,⋯ ,|N| and d = 1,2,⋯ ,|D|; |N| is the total number of words and |D| is the number of dimensions of each word vector. For each word, we build one neuron model per dimension, driven by the value of that dimension. During a given time T, we record the membrane potential of each neuron every Δt, via the neural coding algorithms described in “Homogeneous Poisson Process-Based Binary Word Embeddings” and “Spiking Neural Networks Based Binary Word Embeddings.” Then, the spiking time matrix S(i), which contains all neurons’ spiking times for the i th word, is flattened into a vector f(i) by concatenating its rows head to tail. The dimension of f(i) is |D|× (T/Δt). Finally, to make our model more robust, we introduce the tolerance factor tol. We allow a window of tol × Δt to generate one binary bit: f(i) is cut into consecutive windows of tol entries, and the operation \(\mathcal {T}\) maps each window to one bit of the binary word embedding, which therefore has |D|× T/(tol × Δt) bits.

The \(\mathcal {T}(vector)\) operation means that if there are 1s in the vector, then the bit is 1, otherwise it is 0.
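The flattening and windowing step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the spiking time matrix is taken to be a 0/1 NumPy array of shape (|D|, T/Δt), windows are assumed to be non-overlapping, and the function name `binarize_spikes` is hypothetical.

```python
import numpy as np

def binarize_spikes(spike_matrix: np.ndarray, tol: int) -> np.ndarray:
    """Flatten a (D, T/dt) 0/1 spike matrix row by row and map each
    window of `tol` consecutive entries to one bit (1 if any spike)."""
    flat = spike_matrix.reshape(-1)        # rows concatenated head to tail
    if flat.size % tol != 0:
        raise ValueError("flattened length must be divisible by tol")
    windows = flat.reshape(-1, tol)        # one row per window of tol steps
    return windows.any(axis=1).astype(np.uint8)

# Toy example: 2 neurons, 6 time steps each, tol = 3
S = np.array([[0, 1, 0, 0, 0, 0],
              [0, 0, 0, 1, 0, 1]])
bits = binarize_spikes(S, tol=3)
print(bits)  # [1 0 0 1]
```

With |D| = 300, T/Δt = 100 and tol = 10, the same function returns a 3000-bit code per word.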

Homogeneous Poisson Process-Based Binary Word Embeddings

Poisson-like rate coding is a major approach to simulating spiking responses to stimuli. Biological recordings from the middle temporal area [38, 39] and the primary visual cortex [40] of macaque monkeys provide good evidence for Poisson process-based coding.

A homogeneous Poisson process assumes that the current spike does not depend at all on preceding spikes and that the instantaneous firing rate r is constant over time. Suppose we are given an interval (0, T) and place a single spike in it at random. If we pick a subinterval (t, t + Δt) of length Δt, the probability that the spike falls in the subinterval equals Δt/T. When we place k spikes in (0, T), according to the binomial formula, the probability that n of them fall in (t, t + Δt) is:

\[ P\{n \text{ spikes in } \varDelta t\} = \frac{k!}{(k-n)!\,n!}\left(\frac{\varDelta t}{T}\right)^{n}\left(1-\frac{\varDelta t}{T}\right)^{k-n} \tag{4} \]

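Keeping the firing rate r = k/T constant, we increase k and T together. As k →∞, the probability becomes:

\[ P\{n \text{ spikes in } \varDelta t\} = \frac{(r\varDelta t)^{n}}{n!}\, e^{-r\varDelta t} \tag{5} \]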

This is the probability mass function of the Poisson distribution with mean rΔt.
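The limiting argument can be checked numerically. The sketch below compares the binomial probability of n spikes falling in a window of length Δt with the Poisson probability for mean rΔt, while k and T grow at a fixed rate r = k/T. The values of r, Δt and n are hypothetical and chosen only for illustration.

```python
import math

def binomial_prob(n: int, k: int, dt: float, T: float) -> float:
    """Probability that n of k uniformly placed spikes fall in a window dt of (0, T)."""
    p = dt / T
    return math.comb(k, n) * p**n * (1 - p) ** (k - n)

def poisson_prob(n: int, r: float, dt: float) -> float:
    """Poisson probability of n spikes in a window dt at constant rate r."""
    lam = r * dt
    return lam**n * math.exp(-lam) / math.factorial(n)

r, dt, n = 50.0, 0.01, 1           # hypothetical rate, window length, spike count
for k in (10, 100, 10_000):
    T = k / r                      # keep r = k / T fixed while k grows
    print(k, binomial_prob(n, k, dt, T), poisson_prob(n, r, dt))
```

As k increases, the first printed probability approaches the second, which does not depend on k.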

In our homogeneous Poisson process-based binary word embedding model, we treat each dimension as an independent homogeneous Poisson process, with the normalized value of the dimension \(w_{id}^{normalized}\) as its constant firing rate. Following the standard spike generator procedure, for each Δt in the interval (0, T), we compare \(w_{id}^{normalized}\cdot {\varDelta } t\) with a random variable xrandom. A spike is recorded for that time step if \(w_{id}^{normalized}\cdot {\varDelta } t\) exceeds xrandom; collecting these outcomes over all time steps and dimensions gives the spiking time matrix.
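A minimal sketch of such a spike generator is given below. It rests on assumptions that the text does not spell out: xrandom is drawn uniformly from [0, 1), a spike is emitted when the rate times Δt exceeds it, and the normalization is a min-max scaling to [0, 1]. The function names are hypothetical.

```python
import numpy as np

def min_max_normalize(w: np.ndarray) -> np.ndarray:
    """Assumed normalization: scale one word vector to the range [0, 1]."""
    w = np.asarray(w, dtype=float)
    span = w.max() - w.min()
    return (w - w.min()) / span if span > 0 else np.zeros_like(w)

def poisson_spike_matrix(w_normalized, T=10.0, dt=0.1, seed=0) -> np.ndarray:
    """Return a (D, T/dt) 0/1 matrix: one homogeneous Poisson train per dimension."""
    w_normalized = np.asarray(w_normalized, dtype=float)
    rng = np.random.default_rng(seed)
    n_steps = int(round(T / dt))
    x_random = rng.random((w_normalized.size, n_steps))   # uniform in [0, 1)
    # A spike occurs in a step when rate * dt exceeds the random draw.
    return (x_random < w_normalized[:, None] * dt).astype(np.uint8)

word_vector = np.array([-0.4, 0.0, 0.25, 0.8])   # toy dense embedding
S = poisson_spike_matrix(min_max_normalize(word_vector))
print(S.shape, S.sum(axis=1))   # spike counts grow with the normalized value on average
```

Because the trains are random, repeated runs with different seeds give different codes, which is why averaged results over several seeds are the natural way to report this model.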

Spiking Neural Networks Based Binary Word Embeddings

The LIF-Based Binary Word Embedding Model

The leaky integrate-and-fire (LIF) neuron model, a simplified version of the H-H model, is one of the simplest spiking neuron models [41]. The LIF model is widely used because it is biologically realistic and computationally simple to analyze and simulate [31, 42, 43].

In the LIF model, as Eq. 7 shows, v is the membrane potential, τm is the membrane time constant, and R is the membrane resistance. For the LIF-based word embedding model, we replace the input current I with the product of the d th dimension value of the i th word and the current boost factor Iboost.

In our LIF-based binary word embedding model, we regard the value Iboost ⋅ wid as the intensity of the current for neurons, and we get the spiking time matrix from the record of the membrane potential v. In addition, we also try adding white noise to the current to improve robustness.
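The following is a minimal sketch of LIF-based spike generation, not the exact implementation used in the experiments. It assumes the common textbook form τm dv/dt = −(v − v_rest) + R·I with a reset to v_rest after each spike, forward-Euler integration, and hypothetical values R = 1 and v_rest = 0. The values of τm, vth, T, Δt and Iboost follow the experimental settings; `noise_std` is a hypothetical parameter for the optional white noise.

```python
import numpy as np

def lif_spike_matrix(w, i_boost=100.0, T=10.0, dt=0.1, tau_m=10.0,
                     v_th=15.0, v_rest=0.0, R=1.0, noise_std=0.0, seed=0):
    """Simulate one LIF neuron per embedding dimension; return a (D, T/dt) 0/1 matrix."""
    rng = np.random.default_rng(seed)
    current = i_boost * np.asarray(w, dtype=float)     # input current per neuron
    n_steps = int(round(T / dt))
    v = np.full(current.shape, v_rest, dtype=float)
    spikes = np.zeros((current.size, n_steps), dtype=np.uint8)
    for t in range(n_steps):
        # Optional white noise on the current (disabled when noise_std == 0).
        i_t = current + noise_std * rng.standard_normal(current.shape)
        v += dt / tau_m * (-(v - v_rest) + R * i_t)    # forward-Euler update
        fired = v >= v_th
        spikes[fired, t] = 1
        v[fired] = v_rest                              # reset after a spike
    return spikes

S = lif_spike_matrix([0.0, 0.1, 0.3])
print(S.sum(axis=1))   # only the strongest input drives the potential above threshold
```

In this sketch an input whose steady-state potential R·Iboost·wid stays below vth never fires, so small or negative embedding values map to all-zero rows.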

The Izhikevich Neuron-Based Binary Word Embedding Model

The Izhikevich neuron model is capable of producing the rich firing patterns exhibited by real biological neurons while remaining computationally simple [44]. The model makes use of bifurcation methodologies [45] to reduce the more biophysically accurate H-H neuron model to a simple one of the following form:

\[ \frac{dv}{dt} = 0.04v^{2} + 5v + 140 - u + I \]

\[ \frac{du}{dt} = a(bv - u) \]

If v(t) ≥ vth, then v(t) ← c and u(t) ← u(t) + d.

In the Izhikevich neuron model, v, vth, and vr have the same meaning as in the LIF model, u represents the membrane recovery variable, and a, b, c, and d are four important hyper-parameters. The parameter a describes the time scale of u, and b describes the sensitivity of u to the subthreshold fluctuations of v. The parameter c describes the after-spike reset value of v, which is caused by fast high-threshold K+ conductances, and d describes the after-spike reset of u, which is caused by slow high-threshold Na+ and K+ conductances.

As Izhikevich [44] shows, different choices of these four parameters can simulate different types of neurons in the mammalian brain, such as excitatory cortical cells, inhibitory cortical cells, and thalamocortical cells. In this paper, we mainly focus on excitatory and inhibitory cortical neurons. According to intracellular recordings, cortical cells can be divided into different types, for example, regular spiking (RS), intrinsically bursting (IB), and chattering (CH) for excitatory neurons, and fast spiking (FS) and low-threshold spiking (LTS) for inhibitory neurons.

In our Izhikevich neuron model-based binary word embedding models, we combine excitatory and inhibitory neurons at a ratio of 4:1, which is motivated by the ratio in the mammalian cortex [44]. As mentioned before, for each word, we set |D| neurons and regard the product of the original word embedding value wid and a factor Iboost as the input current of the model. We assign each neuron to an excitatory or inhibitory sub-model, and for each dimension of each word, we get the spike times according to its sub-model.
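A minimal sketch of the RS+FS variant follows. The dynamics are the standard Izhikevich equations, dv/dt = 0.04v² + 5v + 140 − u + I and du/dt = a(bv − u), and the (a, b, c, d) values are the RS and FS settings published in [44]. Everything else is an assumption made for illustration: forward-Euler integration, the initial state v = c and u = bv, and the rule that every fifth dimension is inhibitory to obtain the 4:1 ratio.

```python
import numpy as np

# (a, b, c, d) for two cell types, taken from Izhikevich [44]
RS = (0.02, 0.2, -65.0, 8.0)   # regular spiking, excitatory
FS = (0.10, 0.2, -65.0, 2.0)   # fast spiking, inhibitory

def izhikevich_spike_matrix(w, i_boost=100.0, T=10.0, dt=0.1, v_th=30.0):
    """Simulate one Izhikevich neuron per dimension; return a (D, T/dt) 0/1 matrix."""
    w = np.asarray(w, dtype=float)
    n_steps = int(round(T / dt))
    # Assumption: every fifth dimension is inhibitory, giving a 4:1 ratio.
    inhibitory = (np.arange(w.size) % 5) == 4
    a, b, c, d = (np.where(inhibitory, fs, rs) for rs, fs in zip(RS, FS))
    current = i_boost * w
    v = c.copy()                 # assumed initial membrane potential
    u = b * v                    # assumed initial recovery variable
    spikes = np.zeros((w.size, n_steps), dtype=np.uint8)
    for t in range(n_steps):
        dv = 0.04 * v**2 + 5.0 * v + 140.0 - u + current
        du = a * (b * v - u)
        v = v + dt * dv
        u = u + dt * du
        fired = v >= v_th
        spikes[fired, t] = 1
        v = np.where(fired, c, v)        # after-spike reset of v
        u = np.where(fired, u + d, u)    # after-spike reset of u
    return spikes

S = izhikevich_spike_matrix([0.3, 0.3, 0.3, 0.3, 0.3])
print(S.sum(axis=1))   # spike counts for four RS neurons and one FS neuron
```

Other sub-model combinations can be tried by swapping in the corresponding (a, b, c, d) tuples from [44].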

Experiment Validations

Validation Tasks and Datasets

We evaluate our binary embeddings on word similarity and text classification tasks. The word similarity task has been widely used to measure to what degree word embeddings capture the similarity between two words, while the text classification task is a traditional NLP application. In our experiments, all the binary word embedding models are based on two well-accepted kinds of original word embeddings, namely, Word2Vec [1] and GloVe [2].

For the word similarity task, we find similar words via Hamming distance, which is faster to compute than the traditional cosine distance used for dense embeddings. We evaluate embeddings on three public datasets: (1) WordSim-353, the most widely used dataset for word similarity tests, consisting of 353 pairs of words [46]; (2) SimLex-999, which consists of 999 pairs of words and provides a way of measuring how well word embeddings capture similarity, rather than relatedness or association [47]; (3) Rare Words, which consists of 2,034 word pairs proposed by Luong et al. [48], focusing on rare words to complement existing datasets. All these word pairs come with human-assigned similarity scores, and we compute Spearman’s rank correlation coefficient between the similarities given by the word embeddings and the human-labeled ranks.
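The evaluation loop can be sketched as follows. The word codes and human scores are toy values invented for illustration, the similarity is taken as one minus the normalized Hamming distance, and the `spearman` helper assumes there are no tied values (for real data, `scipy.stats.spearmanr` handles ties).

```python
import numpy as np

def hamming_similarity(x, y) -> float:
    """Fraction of matching bits between two 0/1 vectors of equal length."""
    x, y = np.asarray(x), np.asarray(y)
    return 1.0 - np.count_nonzero(x != y) / x.size

def spearman(a, b) -> float:
    """Spearman correlation as Pearson correlation of ranks (no tie handling)."""
    rank_a = np.argsort(np.argsort(a))
    rank_b = np.argsort(np.argsort(b))
    return float(np.corrcoef(rank_a, rank_b)[0, 1])

# Toy binary codes and human similarity scores (hypothetical values)
codes = {
    "cat":   np.array([1, 1, 0, 0, 1, 0, 1, 0]),
    "dog":   np.array([1, 1, 0, 0, 1, 1, 1, 0]),
    "car":   np.array([0, 0, 1, 1, 0, 1, 0, 1]),
    "truck": np.array([0, 0, 1, 1, 0, 1, 1, 1]),
}
pairs = [("cat", "dog", 9.0), ("cat", "car", 1.5), ("dog", "truck", 2.0)]

model_scores = [hamming_similarity(codes[a], codes[b]) for a, b, _ in pairs]
human_scores = [score for _, _, score in pairs]
print(spearman(model_scores, human_scores))
```

On packed bit arrays the Hamming distance reduces to an XOR followed by a population count, which is where the speed advantage over cosine distance comes from.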

For the text classification task, we apply the OR operation to the binary embeddings of the words to generate the representation of a text, and use the k-nearest neighbors (kNN) classifier to measure accuracy. We validate our algorithms on two public text datasets: (1) Search Snippets, a short text dataset collected by Phan et al. [50], selected from the results of Web search transactions using predefined phrases from 8 different domains; (2) Sentiment Analysis, proposed by Socher et al. [49], a treebank of sentences from movie reviews annotated with sentiment labels. The sentences in the treebank were split into train (8544), dev (1101), and test (2210) sets. We merge the train and dev sets for the kNN classifier and ignore neutral sentences, analyzing performance on only the positive and negative classes.
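A minimal sketch of the text representation and classifier is shown below. The OR pooling follows the description above; the use of Hamming distance inside kNN, the value of k, and the toy data are assumptions, since the distance metric and k are not stated.

```python
from collections import Counter

import numpy as np

def text_code(word_codes) -> np.ndarray:
    """Bitwise OR over the binary codes of all words in a text."""
    return np.bitwise_or.reduce(np.asarray(word_codes, dtype=np.uint8), axis=0)

def knn_predict(query, train_codes, train_labels, k=3):
    """Majority vote among the k training texts closest in Hamming distance."""
    dists = np.count_nonzero(train_codes != query, axis=1)
    nearest = np.argsort(dists, kind="stable")[:k]
    votes = Counter(train_labels[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Toy training set of text codes with sentiment labels (hypothetical values)
train_codes = np.array([[1, 1, 0, 0],
                        [1, 0, 0, 0],
                        [0, 0, 1, 1],
                        [0, 1, 1, 1]], dtype=np.uint8)
train_labels = ["pos", "pos", "neg", "neg"]

query = text_code([[1, 0, 0, 0], [0, 1, 0, 0]])   # a two-word text
print(query, knn_predict(query, train_codes, train_labels, k=3))
```

One consequence of OR pooling is that longer texts set more bits, so very long inputs drift toward all-ones codes; the datasets used here consist of short snippets and single sentences.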

Experiment Details and Results

In our experiment, we use the pre-trained GloVe and Word2Vec embeddings, both of which have 300 dimensions. We set up three baselines for comparison: the original embeddings; “Overcomplete-B,” the binary embeddings derived from Faruqui et al.’s work [8]; and “Rude Binarization,” which converts the original embeddings into binary ones via a simple sign function.
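The sign-function baseline is simple enough to state in one line of code. The sketch below assumes that positive values map to 1 and that zero and negative values map to 0; the handling of exact zeros is not specified in the text.

```python
import numpy as np

def rude_binarize(W) -> np.ndarray:
    """Sign-based binarization: 1 where the dense value is positive, else 0."""
    return (np.asarray(W, dtype=float) > 0).astype(np.uint8)

W = np.array([[0.3, -0.1],
              [0.0,  2.0]])
print(rude_binarize(W))
```

This baseline keeps one bit per original dimension, so a 300-dimensional embedding becomes a 300-bit code.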

For all the biological neuron coding-inspired models, we set the interval T = 10 ms and the subinterval Δt = 0.1 ms. We find the best hyper-parameters through grid search on the word similarity tasks and apply them to both experimental tasks. For the Poisson, LIF, and LIF-with-noise models, tol is 5, while for the other models, tol is 10. For the LIF and LIF-with-noise models, τm = 10 and vth = 15, while for the Izhikevich model, vth = 30, and the other parameters follow [44] for the different sub-models. The Iboost factors are 100 and 200 for GloVe and Word2Vec, respectively. In addition, for the Poisson coding and LIF-with-noise models, we run each model 10 times with different random seeds, and Table 1 shows the averages and standard deviations.

Result Analysis

From the data shown in Tables 1 and 2 and Fig. 3, we can infer the following.

(1) We explore how to generate binary embeddings via biological neuron coding-inspired models (Figs. 2 and 3). The results show that the SNN-based models perform well, while the Poisson coding-based model reflects the weakness of rate coding when transforming dense information into binary bits: it cannot carry enough information to represent stimuli or patterns.

(2) For the word similarity task, binary word embeddings transformed from dense word embeddings, especially the rude binarization, LIF-based, and Izhikevich-based models, can get results similar to the original ones.

(3) The LIF-based binary embedding model performs well on the word similarity tasks but rather poorly on the text classification task. This may be due to the oversimplified mechanism of the LIF model, which makes it robust for representing words but loses much semantic information. Adding noise to the LIF model can improve performance on the text classification task, but it is unstable and can lower the word similarity results.

(4) The Izhikevich neuron-based binary embedding model gets excellent results on both tasks, and the combination of RS and FS neuron sub-models is the best one. The model combines excitatory and inhibitory neurons to mimic the neurons in the biological brain, which makes a difference when converting the original dense embeddings into binary ones.

(5) From the perspective of space occupation, a database of 3 million words (such as the public pre-trained Word2Vec vectors) with 300 dimensions takes 3.6 GB in floating point (4 bytes per value), but only 1.125 GB as 3000-bit codes (tol = 10) for the Izh_RS+FS model, which reduces space occupation by approximately 68.75%. For neuron coding-based binary embedding models, the compression ratio is mainly determined by the run time T and the tolerance factor tol.
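The space figures can be reproduced with a few lines of arithmetic. The sketch assumes 4-byte floats for the dense vectors and decimal gigabytes (10^9 bytes); the function name `binary_footprint` is hypothetical.

```python
def binary_footprint(n_words, dims, T, dt, tol):
    """Return (bits per word, total bytes) for the windowed binary codes."""
    bits_per_word = dims * round(T / dt) // tol
    return bits_per_word, n_words * bits_per_word // 8

n_words, dims = 3_000_000, 300
bytes_dense = n_words * dims * 4                      # 32-bit floats
bits_per_word, bytes_binary = binary_footprint(n_words, dims, T=10.0, dt=0.1, tol=10)
reduction = 1 - bytes_binary / bytes_dense

print(bytes_dense / 1e9, "GB dense")                  # 3.6 GB dense
print(bits_per_word, "bits per word")                 # 3000 bits per word
print(bytes_binary / 1e9, "GB binary")                # 1.125 GB binary
print(f"{reduction:.2%} reduction")                   # 68.75% reduction
```

With tol = 5, as used for the Poisson and LIF models, the same calculation gives 6000 bits per word, 2.25 GB in total, and a reduction of 37.5%.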

Conclusion

In this paper, we propose three kinds of biological neuron coding-inspired models to generate binary word embeddings, which show better performance and interpretability than existing works on word similarity evaluation and text classification tasks. To the best of our knowledge, this is the first attempt to convert dense embeddings into binary ones via spike timing, and we have demonstrated its feasibility on some natural language processing applications.

Future Work

Due to the limited performance of supervised SNNs, in this paper we perform post-processing operations on given word embeddings. However, we look forward to building an SNN-based language model that obtains brain-inspired word embeddings from the raw corpus. To this end, we are trying to adjust the cost function of supervised SNNs and to add several biological mechanisms, such as spike-timing-dependent plasticity (STDP), to the model. Furthermore, in contrast to excitatory neocortical neurons, which have stereotypical morphological and electrophysiological classes, inhibitory neocortical interneurons have wildly diverse classes with various firing patterns that cannot be classified as FS or LTS [45]. In this paper, we focus on FS and LTS inhibitory neurons because their parameters in the Izhikevich neuron model are readily available. In the future, we will pay more attention to more detailed types of inhibitory neuron models.

References

Mikolov T, Sutskever I, Chen K, et al. Distributed representations of words and phrases and their compositionality. Advances in neural information processing systems; 2013. p. 3111–9.

Pennington J, Socher R, Manning C. Glove: global vectors for word representation. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP); 2014.

Bengio Y, Ducharme R, Vincent P, et al. A neural probabilistic language model. J Mach Learn Res 2003;3:1137–55.

Socher R, Bauer J, Manning CD, et al. Parsing with compositional vector grammars. Meeting of the association for computational linguistics; 2013. p. 455–65.

Kim Y. Convolutional neural networks for sentence classification. arXiv preprint arXiv:1408.5882; 2014.

Sutskever I, Vinyals O, Le QV. Sequence to sequence learning with neural networks. Advances in neural information processing systems; 2014. p. 3104–12.

Murphy B, Talukdar P, Mitchell T. Learning effective and interpretable semantic models using non-negative sparse embedding. COLING; 2012. p. 1933–50.

Faruqui M, Tsvetkov Y, Yogatama D, et al. Sparse overcomplete word vector representations. Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing; 2015. p. 1491–1500.

Sun F, Guo J, Lan Y, et al. Sparse word embeddings using l1 regularized online learning. Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence; 2016. p. 2915–21.

Ling S, Song Y, Dan R. Word embeddings with limited memory. Meeting of the association for computational linguistics; 2016. p. 387–92.

Rolls ET. Cortical coding. Language Cogn Neurosci 2017; 32(3): 316–29.

Hopfield JJ. Pattern recognition computation using action potential timing for stimulus representation. Nature 1995;376(6535):33–6.

Lestienne R. Determination of the precision of spike timing in the visual cortex of anaesthetised cats. Biol Cybern 1995;74(1):55–61.

Rieke F, Warland D, Rob DRVS, et al. Spikes: exploring the neural code. Cambridge: MIT Press; 1999.

Brown EN, Kass RE, Mitra PP. Multiple neural spike train data analysis: state-of-the-art and future challenges. Nat Neurosci 2004;7(5):456–61.

Quiroga RQ, Panzeri S. Principles of neural coding. Boca Raton: CRC Press; 2013.

Adrian ED. The impulses produced by sensory nerve endings. J Physiol 1926;61(1):49–72.

Thorpe S, Fize D, Marlot C. Speed of processing in the human visual system. Nature 1996;381(6582): 520–2.

Van RR, Thorpe SJ. Rate coding versus temporal order coding: what the retinal ganglion cells tell the visual cortex. Neural Comput 2001;13(6):1255–83.

Tiesinga P, Fellous JM, Sejnowski TJ. Regulation of spike timing in visual cortical circuits. Nat Rev Neurosci 2008;9(2):97.

Ponulak F, Kasinski A. Introduction to spiking neural networks: information processing, learning and applications. Acta Neurobiol Exp 2011;71(4):409.

Johansson RS, Birznieks I. First spikes in ensembles of human tactile afferents code complex spatial fingertip events. Nat Neurosci 2004;7(2):170.

Saal HP, Vijayakumar S, Johansson RS. Information about complex fingertip parameters in individual human tactile afferent neurons. J Neurosci: The Official Journal of the Society for Neuroscience 2009;29(25):8022.

Thorpe SJ. Spike arrival times: a highly efficient coding scheme for neural networks. Parallel processing in neural systems & computers; 1990.

Faisal AA, Selen LP, Wolpert DM. Noise in the nervous system. Nat Rev Neurosci 2008;9(4):292–303.

Laurent G. Dynamical representation of odors by oscillating and evolving neural assemblies. Trends Neurosci 1996;19(11):489–96.

Maas W. Networks of spiking neurons: the third generation of neural network models. Trans Soc Comput Simul Int 1997;14(4): 1659–71.

Hodgkin AL, Huxley AF. A quantitative description of membrane current and its application to conduction and excitation in nerve. J Physiol 1952;117(4):500.

Gerstner W, Kistler WM. Spiking neuron models: single neurons, populations, plasticity. Cambridge: Cambridge University Press; 2002.

Gupta A, Long LN. Character recognition using spiking neural networks. International Joint Conference on Neural Networks; 2007. p. 53–8.

Tavanaei A, Maida AS. Bio-inspired spiking convolutional neural network using layer-wise sparse coding and STDP learning. arXiv preprint arXiv:1611.03000; 2016.

Cao Y, Chen Y, Khosla D. Spiking deep convolutional neural networks for energy-efficient object recognition. Int J Comput Vis 2015;113(1):54–66.

Azhar H, Iftekharuddin K, Kozma R. A chaos synchronization-based dynamic vision model for image segmentation. IEEE International Joint Conference on Neural Networks. IJCNN ’05. Proceedings. IEEE; 2005.

Loiselle S, Rouat J, Pressnitzer D, et al. Exploration of rank order coding with spiking neural networks for speech recognition. IEEE International Joint Conference on Neural Networks. IJCNN ’05. Proceedings. IEEE; 2005. p. 2076–80.

Floreano D, Epars Y, Zufferey JC, et al. Evolution of spiking neural circuits in autonomous mobile robots. Int J Intell Syst 2006;21(9):1005–24.

Crawford E, Gingerich M, Eliasmith C. Biologically plausible, human-scale knowledge representation. Cognit Sci 2016;40(4):782–821.

Stewart TC, Xuan C, Eliasmith C. Symbolic reasoning in spiking neurons: a model of the cortex/basal ganglia/thalamus loop. Meeting of the cognitive science society; 2010.

O’Keefe LP, Bair W, Movshon JA. Response variability of MT neurons in macaque monkey. Soc Neurosci Abstr 1997;23:1125.

Bair W, Koch C, Newsome W, et al. Power spectrum analysis of bursting cells in area MT in the behaving monkey. J Neurosci 1994;14(5):2870–92.

Softky WR, Koch C. Cortical cells should fire regularly, but do not. Neural Comput 1992;4(5):643–6.

Burkitt AN. A review of the integrate-and-fire neuron model: I. Biol Cybern 2006;95(1):1–19.

Hu J, Tang H, Tan KC, et al. A spike-timing-based integrated model for pattern recognition. Neural Comput 2012;25(2):450–72.

Hunsberger E, Eliasmith C. Spiking deep networks with LIF neurons. Computer Science; 2015.

Izhikevich EM. Simple model of spiking neurons. IEEE Trans Neural Netw 2003;14(6):1569–72.

Izhikevich EM. Dynamical systems in neuroscience: the geometry of excitability and bursting. Cambridge: MIT Press; 2007.

Agirre E, Alfonseca E, Hall K, et al. A study on similarity and relatedness using distributional and wordnet-based approaches. Proceedings of human language technologies: The 2009 Annual Conference of the North American Chapter of the Association for Computational Linguistics. Association for Computational Linguistics; 2009. p. 19–27.

Hill F, Reichart R, Korhonen A. Simlex-999: evaluating semantic models with (genuine) similarity estimation. Computational Linguistics; 2016.

Luong T, Socher R, Manning CD. Better word representations with recursive neural networks for morphology. CoNLL; 2013. p. 104–13.

Socher R, Perelygin A, Wu JY, et al. Recursive deep models for semantic compositionality over a sentiment treebank. Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP); 2013. p. 1642.

Phan XH, Nguyen LM, Horiguchi S. Learning to classify short and sparse text & web with hidden topics from large-scale data collections. Proceedings of the 17th International Conference on World Wide Web. ACM; 2008. p. 91–100.

Funding

This study is supported by the Strategic Priority Research Program of Chinese Academy of Sciences (Grant No. XDB32070100), the Beijing Municipality of Science and Technology (Grant No. Z181100001518006), the CETC Joint Fund (Grant No. 6141B08010103), and the Major Research Program of Shandong Province (Grant No. 2018CXGC1503).

Ethics declarations

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Yuwei Wang and Yi Zeng contributed equally to this work and should be regarded as co-first authors.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wang, Y., Zeng, Y., Tang, J. et al. Biological Neuron Coding Inspired Binary Word Embeddings. Cogn Comput 11, 676–684 (2019). https://doi.org/10.1007/s12559-019-09643-1

DOI: https://doi.org/10.1007/s12559-019-09643-1