Abstract

Purpose

To develop and internally validate prediction models with machine learning for future potentially preventable healthcare utilization in patients with multiple long term conditions (MLTC). This study is the first step in investigating whether prediction models can help identify patients with MLTC that are most in need of integrated care.

Methods

A retrospective cohort study was performed with electronic health record data from adults with MLTC from an academic medical center in the Netherlands. Based on demographic and healthcare utilization characteristics in 2017, we predicted ≥ 12 outpatient visits, ≥ 1 emergency department (ED) visits, and ≥ 1 acute hospitalizations in 2018. Four machine learning models (elastic net regression, extreme gradient boosting (XGB), logistic regression, and random forest) were developed, optimized, and evaluated in a hold-out dataset for each outcome.

Results

A total of 14,486 patients with MLTC were included. Based on the area under the curve (AUC) and calibration curves, the XGB model was selected as final model for all three outcomes. The AUC was 0.82 for ≥ 12 outpatient visits, 0.76 for ≥ 1 ED visits and 0.73 for ≥ 1 acute hospitalizations. Despite adequate AUC and calibration, precision-recall curves showed suboptimal performance.

Conclusions

The final selected models per outcome can identify patients with future potentially preventable high healthcare utilization. However, identifying high-risk patients with MLTC and substantiating if they are most in need of integrated care remains challenging. Further research is warranted investigating whether patients with high healthcare utilization are indeed the most in need of integrated care and whether quantitively identified patients match the identification based on clinicians’ experience and judgment.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The management of multimorbidity or multiple long term conditions (MLTC), defined as having two or more chronic diseases simultaneously [1, 2], is difficult for both patients and providers due to interacting care plans, interactions of diseases, and the involvement of multiple different healthcare professionals [3,4,5,6]. These complex interactions can lead to potentially unnecessary healthcare utilization and potentially preventable adverse health outcomes, such as emergency department (ED) visits or acute hospitalizations [7, 8]. The monodisciplinary care organization contributes to this complexity, resulting in higher mortality, treatment burden, and lower quality of life for patients with MLTC [3, 8]. Consequently, increases in healthcare utilization [8] and healthcare costs [9, 10] pressure current healthcare systems [1].

An integrated care approach has been recommended for patients with MLTC to prevent adverse outcomes and potentially preventable healthcare utilization [11]. Integrated care refers to “initiatives seeking to improve outcomes of care by overcoming issues of fragmentation through linkage or coordination of services of providers along the continuum of care” [12]. However, to be effective and reduce healthcare utilization, such approaches should target patients that experience fragmentation and are most in need of integrated care [13]. Different determinants related to the patient, involved healthcare professionals, and the healthcare system contribute to this increased need for integrated care. These determinants include physical functioning [14], patient activation [15], communication amongst healthcare providers [16], and healthcare utilization [8]. Healthcare professionals do not have the time or resources to create an overview of these determinants per patient [17]. In contrast, information technology tools based on regularly collected electronic health record (EHR) data can partially provide insights into these determinants and the concurrent risk of high healthcare utilization [17].

Since the delivery of an integrated care approach is neither feasible nor warranted for all patients with MLTC, support tools to improve the identification of patients who would benefit most could prove valuable [18]. Predicting a high risk for potentially preventable healthcare utilization based on EHR data could be a valuable tool to identify a preselection of patients needing integrated care [18]. Such a data-driven tool based on readily available EHR data can complement more subjective identification approaches, by providing insights into the expected potentially preventable healthcare utilization [19]. Previous research developing prediction models with primary care data and data from one general hospital in the Netherlands showed that patients with high healthcare utilization could be reliably predicted [18, 20]. However, further development with EHR data from other hospitals, including more specialized medical centers, is needed to substantiate the added value of such prediction models.

Academic medical centers (AMCs) provide highly specialized care for numerous complex patients with MLTC [21, 22]. Nonetheless, research on predicting high healthcare utilization in patients with MLTC within AMCs is limited [18, 23, 24]. Due to inadequate geographic access resulting from longer travel time [25], higher complexity of patients [22], higher costs of care [21], and greater fragmentation as a consequence of hyperspecialization [26], support in the identification of patients in need for integrated care in AMCs is needed.

Prediction models could contribute to a better identification of patients with MLTC that are most in need of integrated care by providing insights into future expected risk for potentially preventable healthcare utilization. Therefore, this study aims to develop and internally validate prediction models for future outpatient visits, ED visits, and acute hospitalization with machine learning (ML) in patients with MLTC based on EHR data from an AMC in the Netherlands.

2 Materials and methods

2.1 Data

Data were derived from the EHR from an academic hospital in the Netherlands, the University Medical Center of Groningen (UMCG). Since 2006, the Netherlands has a regulated competitive universal health insurance system, in which private health insurance is mandatory for all citizens [27]. General practitioners have an important gatekeeper function and referrals are needed for hospital and specialist care [27]. Electronic records are mostly not centrally stored, nationally standardized, or interoperable between care domains [27].

Data for this study were collected based on Diagnosis-Treatment Combinations (DTCs), which are used in the Netherlands to code hospital care and claim payments. The DTCs include information on all care activities performed per patient within one hospital, including the associated diagnoses, specialties, and type of care activity [28]. The registered diagnoses within the DTC include an International Classification of Diseases and Related Health Problems 10 (ICD-10) code and can be linked with the Dutch Hospital Data – Clinical Classification Software (DHD-CCS) [29]. Dutch Hospital Data (DHD) organizes national registration, facilitates research, and connects hospitals to optimize medical-specialist care in the data field [30]. In the DHD-CCS, the ICD-10 codes are clustered into relevant subgroups. DHD has further classified these subgroups into five main categories: chronic, oncologic, acute, elective, and other diagnoses (supplementary table S1). As the ICD-10 provides too detailed diagnosis groups compared to the DHD-CCS, the 152 diagnosis groups defined by the DHD-CCS classification were used for this research.

For all adult patients who visited the UMCG in 2017 and 2018, EHR data of all outpatient visits, ED visits, and hospitalizations were collected. For this study, we used demographic (sex, date of birth, four-digit zip code (PC4)) and healthcare utilization data (type of care activity and linked diagnoses, and involved specialty).

The Central Ethics Review Board of the UMCG approved the pseudonymous use of the data for research purposes and a waiver of informed consent (#20200861, amendment approval number: #107275). Patient data were pseudonymized and patients who objected to the use of their data were excluded from this study prior to data collection. We followed the reporting guidelines from the TRIPOD (Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis) statement [31].

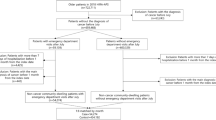

2.2 Study design and sample

We performed a retrospective cohort study and aimed to include patients with a potentially higher complexity of MLTC and higher healthcare use [32]. Based on registered outpatient visit diagnoses in 2017, MLTC was therefore defined as having two or more chronic and/or oncological conditions (based on DHD-CCS codes) and having two or more outpatient visits registered by two or more different specialties in one year. Included patients had to have at least two registered outpatient visits in the year of inclusion, because outpatient care is an important driver of preventable high healthcare utilization [33]. Chronic and oncological conditions were defined based on the DHD-CCS classification (supplementary table S1). Oncological conditions were included as they are usually included in MLTC research but are classified within the DHD-CCS in a separate category next to chronic conditions [34,35,36]. For our study, we included all patients with MLTC in 2017 and aged 18 years or older on 01.01.2017.

2.3 Outcomes

We aimed to predict high healthcare utilization in 2018 with demographic and healthcare utilization data from 2017. Based on previous research that developed prediction models for patients with MLTC in a Dutch general hospital [18], we included three types of high healthcare utilization outcomes in 2018 with the following cut-off values:

-

≥ 12 outpatient visits,

-

≥ 2 ED visits,

-

≥ 1 acute hospitalization(s).

Acute hospitalization(s) were defined based on whether the registered diagnosis for a hospitalization was labeled as acute according to the DHD-CSS classification (supplementary table S1). For patients who visited the hospital in 2017 but did not visit it in 2018, we labeled their outcomes for 2018 as zero (i.e., not having one of the outcomes).

2.4 Predictors

Demographic and healthcare (utilization) characteristics of 2017 were used as predictors. Demographics included age and sex of the patient. Age was determined on 1 January 2017. Selected healthcare (utilization) characteristics were the registered DHD-CCS diagnoses for outpatient visits and the involved medical specialties at outpatient visits in 2017, which were included as separate binary variables for each patient. Other included characteristics were the number of registered diagnoses based on the DHD-CCS classification and the number of involved medical specialties at outpatient visits. Further, the number of outpatient visits, ED visits, hospitalization days, and hospitalization days with an acute diagnosis were used. We also used the PC4 per patient and calculated the distances in kilometers (km) to the UMCG and the closest hospital in the patients’ region per patient. Details on how these distances were calculated are provided in supplementary material S1.1. All predictors can automatically be retrieved from the patient’s EHR. The included predictors in the baseline settings are provided in the supplementary tables S1 and S2.

2.5 Machine learning development and evaluation

To balance the interpretability, predictive accuracy, robustness to imbalanced datasets, and handling of non-linearity, we used four ML prediction models for each outcome, namely Elastic Net Regression (ENR) [37], eXtreme Gradient Boosting (XGB) [38], Logistic Regression (LR) [39], and Random Forest (RF) [40]. A brief description of all four algorithms, including the rationale for their usage, is provided in supplementary material S1.2.

Data were randomly split into a stratified cross-validation (CV) (80%) and independent test (IT) (20%) set. To prevent overfitting, the IT dataset was kept separate from the CV dataset during model development and only used for final model evaluation. A 10-fold CV was performed to assess the results’ robustness and provide confidence intervals (CI). Our baseline settings for all models included the 152 DHD-CCS diagnoses groups, 1000 trees for RF and XGB models, and default hyperparameter ranges (supplementary material S1.2). To evaluate the performance of each model, we calculated the area under the curve (AUC) of the receiver operating characteristics (ROC) and their 95% CI based on the mean AUC of the CV folds and the standard errors. Hyperparameter values for ENR, XGB, and RF were tuned using an exhaustive grid search with size 100, meaning that 100 combinations of hyperparameters were sampled from a prespecified range to determine the best hyperparameters for each model. Hyperparameter combinations that resulted in the best AUC for each separate model in the CV set were selected. To optimize prediction results, hyperparameter ranges were visually inspected for all four models per outcome by plotting them against the AUC. The hyperparameter range was adjusted if no global maximum was detectable and a decreasing or increasing trend was visible in the AUC for a hyperparameter value.

To optimize prediction results of the baseline settings for all models, we tested broader and more narrow diagnoses groups based on definitions of the DHD-CCS (233 and 17 groups, respectively; supplementary tables S3 and S4) and 3000 trees for the RF and XGB models. Moreover, several variables were transformed into theoretically relevant and logical combinations or transformations of variables (i.e., features). We then tested whether the addition of these features resulted in better AUCs. The five features that we defined and evaluated are described in supplementary table S5. In addition, we tested log transformations of skewed variables with and without the five features.

We evaluated the concordance (c) statistic and their 95% confidence intervals (CI) based on the mean AUC of the ROC of the cross-validation folds and the standard error. Discrimination and calibration were evaluated on the IT set for the final models per outcome. For discrimination, AUC and their 95% CI (based on DeLong [41]) and ROC curves were used. The ROC curve visualizes the tradeoff between the true positive rate (sensitivity) and the false positive rate (1 - specificity) [42]. The AUC (equal to the c-statistic for binary outcomes) ranges from 0.5 to 1.0, with high values indicating higher discrimination, meaning that a model can better differentiate patients with the outcome from those without. The final model per outcome was chosen based on the highest mean AUC in the cross-validation set. In addition, calibration curves, intercepts, and slopes in the independent testing set were used. Calibration can be assessed by plotting the predicted probabilities against the actual probabilities and visualizing the agreement between the observed outcome and the predicted probability [43]. For the calibration intercept, values < 0 indicate systematically too high predictions (overestimation of risks) and > 0 too low predictions (underestimation of risks). If the slope of the calibration curve is < 1, the risks are overfitted, meaning that low risks are underestimated and high risks are overestimated. When the slope is > 1, the risks are underfitted. A perfect calibration curve has a slope of 1 and an intercept of 0. Lastly, the precision-recall (PR) AUC and PR curves in the IT test were evaluated to provide a more nuanced understanding of precision and recall, and the impact of different classification thresholds [44]. All analyses and figures were performed and created with R version 4.2.1 [45].

2.6 Model explainability

To increase the interpretability and explainability of each final selected model, we calculated the SHapley Additive exPlanations (SHAP) [46] values per outcome. The SHAP method is intended to explain individual prediction in complex ML models and is based on additive feature attribution and cooperative game theory [47]. With SHAP feature importance and summary plots, we provide insights into the contribution of the 15 most important predictors.

2.7 Sensitivity analyses

We performed several sensitivity analyses and repeated the hyperparameter grid search using our baseline settings. First, we trained and evaluated our models on a subset of the data that excluded all patients who visited the hospital in 2017 but not in 2018. Second, we tested different cut-off values, being ≥ 10 and ≥ 14 instead of ≥ 12 outpatient visits, ≥ 1 instead of ≥ 2 ED visits, and ≥ 1 overall hospitalization instead of ≥ 1 acute hospitalization, similar to previous research [20, 48]. Finally, we examined whether random oversampling of our outcomes (balancing the data) [49] improved the predictive performance.

3 Results

3.1 Population characteristics

Demographic and healthcare (utilization) characteristics of the total included population and of the healthcare utilization subgroups in 2017 are shown in Table 1, including the three outcomes in 2018. For more insights into the included population, the prevalence of diseases and involved medical specialties ≥ 2% in 2017 are shown in supplementary tables S6 and S7. In addition, bar plots depicting the number of diagnoses versus age and gender are displayed in supplementary fig. S1 and S2. This study included 14,486 patients, with a median age of 60 and 54.4% females. Patients in the three high healthcare utilization groups were more often male and older than the total included population. Of the total population, 14.0% had ≥ 12 outpatient visits, 12.7% had ≥ 2 ED visits, and 4.9% had ≥ 1 acute hospitalization in 2017. For patients that visited the hospital in 2017, the three outcomes in 2018 were distributed as follows: 8.8% with ≥ 12 outpatient visits, 6.7% with ≥ 2 ED visits, and 3.5% with ≥ 1 acute hospitalization. The characteristics of the CV and IT sets for all three outcomes are provided in supplementary table S8.

3.2 Final model selection and evaluation

Results of different model optimization and feature engineering approaches and their corresponding AUC results are provided in supplementary table S9. Finally, we used our baseline settings for ≥ 12 outpatient visits and ≥ 1 acute hospitalization(s) since the optimization approaches did not increase the AUC. For ≥ 2 ED visits, we used the baseline settings with 3000 trees for the XGB and RF models (instead of 1000 trees).

We further evaluated discrimination and calibration in the IT set for these final settings per outcome. The supplementary fig. S3-S5 show ROC curves on the IT dataset for the final settings per outcome. For all three outcomes, ROC curves were similar for all four models.

Calibration curves per outcome on the IT dataset, including calibration intercept and slope, are provided in Figs. 1, 2 and 3. For ≥ 12 outpatient visits, all four models overestimated higher risks, as indicated by the calibration curves and calibration intercepts (all < 0, Fig. 1). The XGB and RF showed comparable calibration curves for ≥ 12 outpatient visits, with XGB showing a slightly better overall agreement and lower variabilities in the curve (Fig. 1b and d). For ≥ 2 ED visits, all four models overestimated higher risks (Fig. 2). For the RF model, the model showed an overestimation of low and high risks but an underestimation in-between (Fig. 2d). The confidence interval of the RF’s calibration curve covered the ideal line well, but the predicted probabilities were limited. The XGB model had the most ideal calibration curve for ≥ 2 ED visits with both an intercept close to 0 and a slope close to 1 (Fig. 2b). For ≥ 1 acute hospitalization(s) the ENR and LG models overfitted the risks, meaning that low risks were underestimated and high risks were overestimated (Fig. 3a and 3c). The RF model vastly underestimated higher risks (Fig. 3d). The XGB model had the most ideal calibration curve for ≥ 1 acute hospitalization(s), even though it overestimated lower risks and underestimated higher risks (Fig. 3b).

Table 2 provides the final selected model per outcome based on the AUC and the calibration curves. For each final model per outcome, the corresponding AUC and 95% CI in the CV and IT set and calibration slope and intercept in the IT set are shown. We selected the XGB model with baseline settings for ≥ 12 outpatient visits as well as for ≥ 1 acute hospitalization(s) and the XGB model with 3000 trees for ≥ 2 ED visits.

The final selected models per outcome were evaluated with precision-recall curves (supplementary figs. 6–8). Precision and recall AUCs were 0.30 for ≥ 12 outpatient visits, 0.17 for ≥ 2 ED visits, and 0.11 for ≥ 1 acute hospitalizations. Highest precision reached around 0.7 for ≥ 12 outpatient visits, 0.5 for ≥ 2 ED visits, and 1.0 for ≥ 1 acute hospitalizations. Nonetheless, they were achieved at low recall levels.

3.3 Model explainability

Per outcome, we plotted the SHAP feature importance and summary plots of the final selected model, showing the contribution of the 15 most important variables to the predictions (Figs. 4, 5 and 6). The contribution of all variables with a mean Shapley value ≥ 0.001 are provided in supplementary tables 10–12. For ≥ 12 outpatient visits in 2018, the number of outpatient visits and the involvement of the internal medicine specialty in 2017 had the highest contributions to the predictions, changing the predicted absolute value probability by 4.6 and 2.6 percentage points, respectively (Fig. 4a). High numbers of outpatient visits, involved specialties, and diagnoses tend to increase the risk for ≥ 12 outpatient visits (Fig. 4b). In contrast, very high age decreased the risk for ≥ 12 outpatient visits while lower to medium-high ages increased the risk.

For ≥ 2 ED visits in 2018, the most important predictors were the number of outpatient visits, the number of ED visits, the distance to the UMCG, and the involvement of the internal medicine specialty in 2017 (Fig. 5). A high number of outpatient and ED visits, and a shorter distance to the UMCG tended to increase the risk of ≥ 2 ED visits (Fig. 5b).

For ≥ 1 acute hospitalization(s) in 2018, the age of the patient, the presence of an acute diagnosis, the number of diagnoses, and the distance to the UMCG in 2017 had the highest influence (Fig. 6). Higher age, a higher number of diagnoses and a shorter distance to the UMCG tended to increase the risk for ≥ 1 acute hospitalization(s) (Fig. 6b).

3.4 Sensitivity analysis

Results of the sensitivity analyses, in comparison to the baseline setting models as a reference, are displayed in supplementary table S13. The sensitivity analyses based on the subset of the data and of balancing the data for the three outcomes did not improve predictive performance. The different cut-off values did not improve predictive performance, except for the cut-off of ≥ 14 outpatient visits, which resulted in slightly higher AUCs for all four models.

4 Discussion

To investigate whether prediction models can contribute to the identification of patients with MLTC with a high risk of future potentially preventable healthcare utilization, we developed and internally validated prediction models with EHR data for future ≥ 12 outpatient visits, ≥ 2 ED visits, and ≥ 1 acute hospitalization(s). Our study used a large and well-defined sample of patients with MLTC in an AMC and contributes to the limited available clinical prediction models that report measures of discrimination as well as calibration. Furthermore, we improved the explainability of the models by calculating SHAP values [23, 47]. Overall, our models could identify patients with expected future potentially preventable healthcare utilization and showed better or similar performance compared to previous research, as outlined below. Nonetheless, our models could not substantiate whether the identified patients are indeed patients most in need of integrated care.

Our study shows similar or higher AUC values for predicting outpatient and ED visits compared to previous research [18, 20, 50]. For ≥ 12 outpatient visits, our final selected XGB model resulted in an AUC of 0.83 (95% CI: 0.81–0.85) in the CV set and 0.82 (95% CI: 0.80–0.84) in the IT test. One previous study predicted outpatient visits with a LR model and found an AUC of 0.75 [18]. Our study showed an AUC of 0.80 for the LR model. As we used comparable data, including more variables about the patients’ healthcare (utilization) characteristics in our study might explain the better performance. For ≥ 2 ED visits, our final selected XGB model resulted in an AUC of 0.76 (95% CI: 0.74–0.78) in the CV set and 0.76 (95% CI: 0.73–0.80) in the IT set. Previous studies found AUCs ranging between 0.66 and 0.79 and used cut-offs of ≥ 1 to ≥ 4 ED visits in their models [18, 20, 50]. For ≥ 1 acute hospitalization(s), our final selected XGB model resulted in an AUC of 0.75 (95% CI: 0.73–0.78) in the CV set and 0.73 (95% CI: 0.67–0.78) in the IT test. Studies with similar cut-off values focusing on acute or unplanned hospitalizations found lower AUCs of 0.69–0.70 [18, 20]. Another study focusing on any hospitalization within six months resulted in an AUC of 0.84 [48], which is higher than our findings. Their best-performing model included demographic, healthcare utilization, diagnoses, and medication data, which, together with their tenfold larger population, might explain the difference with our findings. Similarly, a review of prediction models of emergency hospital admission found AUCs in 18 studies ranging between 0.63 and 0.83 in models using administrative or clinical record data [51]. Models with an AUC > 0.80 also included medication data or polypharmacy as predictors, which seem to improve the predictive performance for unplanned hospitalizations. Our findings suggest that complex ML models with more complex explainability such as XGB and RF do not seem to increase predictive performance tremendously compared to models with higher explainability as ENR or LG.

To the best of our knowledge, the calibration of comparable prediction models has only been reported in two studies [18, 48]. In line with previous research, we found reasonable agreement between observed and predicted probabilities for outpatient visits. We found similar calibrations for ED visits, with an overestimation of higher predicted risks. In contrast, our model for acute hospitalization(s) underestimated higher risks, whereas previous studies overestimated higher risks [18, 48]. We did, however, find an overestimation of risks in the LR model (instead of the finally selected XGB), which is in line with previous studies. Overall, previous literature and our study suggest that higher risks of potentially preventable healthcare utilization can be predicted less accurately than lower risks. In addition, we evaluated precision-recall curves which has been recommended when dealing with imbalanced datasets [52]. Results were suboptimal and showed high precision only at low recall values. Overall, very high risk patients had the best potential to be correctly classified by the models, but many remain undetected due to the low recall. Further insights are needed to assess whether such very high risk patients could potentially also be identified based on clinical experience and judgement alone.

Several variables increased the risk for all three outcomes. In line with previous research, we identified more prior-year diagnoses, outpatient visits, and hospitalizations as important predictors for all three outcomes [18, 20, 48]. Higher age has been reported in previous studies as an important predictor for all three outcomes, which we only found for ≥ 1 acute hospitalization(s). In our study, very high ages seemed to decrease the risk for ≥ 12 outpatient visits. This finding can be partially seen in our descriptive results (Table 2) and can be explained by changes in treatment goals of the oldest patients [53] and greater challenges to visit the hospital [54]. Other important predictors in our models were the number of involved specialties and the distance to the UMCG. Only one previous study included residential areas in their models [50]. In line with our expectations, most high healthcare utilizers seem to live closer to the UMCG. Nonetheless, some outliers were visible in patients with a larger distance to the UMCG and an increased risk for acute hospitalizations, potentially indicating complex patients with a high travel and disease burden.

4.1 Future work

Future work is needed to evaluate the added value of prediction models for identifying patients with MLTC most in need of integrated care. First, it should be evaluated whether the identified high-risk patients are indeed the patients that either self-report to experience fragmented care or have healthcare providers recognizing a need for integrated care. Such assessment is in line with the suggestion by Verhoeff et al. (2022) [18] and can shed light on whether the high healthcare utilization is related to experienced care fragmentation or simply to the complexity of the patients.

Second, if the models can identify patients needing integrated care, the identification with prediction models should be compared to identifying patients based on more straightforward threshold values. Comparisons of complex versus simple models have similarly been performed in the prediction of chronic opioid therapy and hospitalizations within six months [48, 55]. Such more straightforward threshold values could include, for example, a certain amount of prior healthcare utilization and a specific number of diagnoses. In addition, the models’ performance could be compared to identification based on clinical experience and judgement alone. An identification based on these values, next to or in combination with clinicians’ experience, could be equally (cost-) effective compared to the extensive development, implementation, and maintenance of prediction models.

Third, previous research suggests that including medication data could further improve predictive performance. Hence, future work should assess the impact of including more data on predictive performance and accurately identifying the target population. Moreover, we recommend to further evaluate the added value of additional performance indicators next to the commonly used AUC [56, 57]. The identification of high risk patients for potentially preventable healthcare utilization remains challenging and might be unfeasible for the total population. Therefore, models with acceptable precision-recall AUC might be able to identify a subset of the population in which the model can achieve high precision [58]. In addition, other measures of MLTC that measure disease burden differently compared to simple disease counts should be assessed [32, 59]. Thereby, the feasibility of a hybrid case finding approach [19], in which quantitative prediction modeling and a more qualitative personal assessment are combined, should be further investigated.

4.2 Limitations

Our study has several limitations, the first being the limited prediction time span of one year. Our descriptive results suggest that around half of our patient population has high healthcare utilization over the two-year study period, suggesting that long-term high healthcare utilizers might represent a different patient population [24]. In addition, we could not differentiate between incident and prevalent patients with MTLC in our study due to the limited time span. Longitudinal studies could further differentiate long-term healthcare utilizers, provide insights into the accumulation of conditions, and inform prevention [60].

Second, our data was collected prior to the COVID-19 pandemic. Healthcare utilization might have decreased or changed post COVID-19 and might not be comparable to data from 2017 and 2018 [61, 62].

Third, despite that our sensitivity analysis showed slightly higher AUCs for ≥ 14 outpatient visits, we did not further evaluate this model due to resource constraints. Future research should investigate whether a cut-off of ≥ 14 outpatient visits results in a better predictive performance, even though the identified increase in AUC in our sensitivity analyses was only minor.

Fourth, we labeled all patients who visited the hospital in 2017 but did not in 2018 as zero (i.e., not having one of the outcomes). This labeling is in line with reality, as future healthcare utilization or death will not be known at the time of prediction. However, this could create a mixed class of patients labeled as zero: patients who do not return, visit another hospital, or die. Therefore, we performed a sensitivity analysis that excluded patients who did not visit the hospital in 2018. The results were comparable.

Fifth, the inclusion of ‘enabling’ characteristics, such as income or country region, could improve the selection of patients [24]. We could not include such characteristics because this information is not collected in the administrative data based on DTC codes. However, our data is readily available from the EHR and we included the distance to the UMCG and the closest hospital in the patient’s region as a proxy for the country region.

Sixth, the prevalence of MLTC might be underestimated in our study because the definition was based on DTC codes and focused on registered diagnoses at outpatient visits. Within one DTC trajectory, a medical specialist cannot simultaneously register multiple diagnoses of the same main diagnostic group that are associated with his or her medical specialty [63]. Nonetheless, diagnoses from other main diagnostic groups and from other medical specialties can be registered in parallel within one DTC trajectory. In addition, the DTC data accurately reports the involvement of medical specialists and the healthcare utilization. Our data included registered diagnoses from one AMC only. Patients with MLTC often visit multiple primary and secondary healthcare providers [7, 8], leading to a potential underestimation of MLTC in our study. Since data across healthcare providers is not centrally collected or available in Netherlands, we were, however, not able to include such data [27]. This potential underestimation of the MTLC burden might have impacted the predictive performance of the number of diagnoses in this study.

Finally, we did not externally validate our models in data from other AMCs, which is recommended for assessing the general applicability of prediction models [42]. In addition, temporal external validation in updated post COVID-19 has not been performed, limiting conclusions on the consistency of our models over time. As AMCs provide highly specialized care to heterogenous patient populations, the general applicability of our models to other AMCs was beyond the scope of this study. We assume that our models must be retrained or updated in other centers to account for local and hospital-specific variability in patient populations [23, 42, 64]. As high healthcare users can visit multiple hospitals in one year [65], developing and externally validating prediction models with data from multiple Dutch hospitals would be ideal. However, data sharing across hospitals remains problematic in the Netherlands to date. Consequently, implementing locally developed and internally validated models is more feasible.

5 Conclusion

Patients with MLTC and high future healthcare utilization can be identified with ML models. Whether these models identify patients that experience fragmented care and most need an integrated care approach has yet to be substantiated. Patients identified with these models should be compared to identifications based on clinical experience and judgment to assess the added value and potential (cost-) effectiveness of incorporating such prediction models within the EHR.

Data availability

The data that support the findings of this study are available from the University Medical Centre Groningen (UMCG) but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available. Data are however available from the authors (via the corresponding author, Liann Weil: l.i.weil@umcg.nl) upon reasonable request and with permission of the UMCG.

References

The Academy of Medical Sciences. (2018) Multimorbidity: a priority for global health. https://acmedsci.ac.uk/file-download/82222577. Accessed 15 June 2023.

Khunti K, Sathanapally H, Mountain P. Multiple long term conditions, multimorbidity, and co-morbidities: we should reconsider the terminology we use. BMJ. 2023;2327. https://doi.org/10.1136/bmj.p2327.

Rosbach M, Andersen JS. Patient-experienced burden of treatment in patients with multimorbidity - A systematic review of qualitative data. PLoS ONE. 2017;12(6):e0179916. https://doi.org/10.1371/journal.pone.0179916.

Damarell RA, Morgan DD, Tieman JJ. General practitioner strategies for managing patients with multimorbidity: a systematic review and thematic synthesis of qualitative research. BMC Fam Pract. 2020;21(1):131. https://doi.org/10.1186/s12875-020-01197-8.

Xu X, Mishra GD, Jones M. Evidence on multimorbidity from definition to intervention: an overview of systematic reviews. Ageing Res Rev. 2017;37:53–68. https://doi.org/10.1016/j.arr.2017.05.003.

Valderas JM, Starfield B, Sibbald B, Salisbury C, Roland M. Defining comorbidity: Implications for Understanding Health and Health Services. The Annals of Family Medicine. 2009;7(4):357–63. https://doi.org/10.1370/afm.983.

Lehnert T, Heider D, Leicht H, Heinrich S, Corrieri S, Luppa M, Riedel-Heller S, König H-H. Review: Health Care utilization and costs of Elderly persons with multiple chronic conditions. Med Care Res Rev. 2011;68(4):387–420. https://doi.org/10.1177/1077558711399580.

Marengoni A, Angleman S, Melis R, Mangialasche F, Karp A, Garmen A, Meinow B, Fratiglioni L. Aging with multimorbidity: a systematic review of the literature. Ageing Res Rev. 2011;10(4):430–9.

Tran PB, Kazibwe J, Nikolaidis GF, Linnosmaa I, Rijken M, Van Olmen J. Costs of multimorbidity: a systematic review and meta-analyses. BMC Med. 2022;20(1). https://doi.org/10.1186/s12916-022-02427-9.

Wammes JJG, van der Wees PJ, Tanke MAC, Westert GP, Jeurissen PPT. Systematic review of high-cost patients’ characteristics and healthcare utilisation. BMJ Open. 2018;8(9). https://doi.org/10.1136/bmjopen-2018-023113.

National Institute for Health and Care Excellence. (2016) Multimorbidity: clinical assessment and management. NICE guideline [NG56]. https://www.nice.org.uk/guidance/ng56/evidence/full-guideline-pdf-2615543103. Accessed 15 June 2023.

Council of the European Union. (2013) Document 12981/13, Annex IV. https://data.consilium.europa.eu/doc/document/ST%2012981%202013%20INIT/EN/pdf. Accessed 15 June 2023.

Bell C, Appel CW, Frølich A, Prior A, Vedsted P. Improving Health Care for patients with multimorbidity: a mixed-methods study to explore the feasibility and process of aligning scheduled outpatient appointments through collaboration between medical specialties. Int J Integr Care. 2022;22(1):17. https://doi.org/10.5334/ijic.6013.

Calderón-Larrañaga A, Vetrano DL, Ferrucci L, Mercer SW, Marengoni A, Onder G, Eriksdotter M, Fratiglioni L. Multimorbidity and functional impairment-bidirectional interplay, synergistic effects and common pathways. J Intern Med. 2019;285(3):255–71. https://doi.org/10.1111/joim.12843.

Blakemore A, Hann M, Howells K, Panagioti M, Sidaway M, Reeves D, Bower P. Patient activation in older people with long-term conditions and multimorbidity: correlates and change in a cohort study in the United Kingdom. BMC Health Serv Res. 2016;16(1):582. https://doi.org/10.1186/s12913-016-1843-2.

van der Aa MJ, van den Broeke JR, Stronks K, Plochg T. Patients with multimorbidity and their experiences with the healthcare process: a scoping review. J Comorb. 2017;7(1):11–21.

Schiotz ML, Host D, Christensen MB, Dominguez H, Hamid Y, Almind M, Sorensen KL, Saxild T, Holm RH, Frolich A. Quality of care for people with multimorbidity - a case series. BMC Health Serv Res. 2017;17(1):745. https://doi.org/10.1186/s12913-017-2724-z.

Verhoeff M, De Groot J, Burgers JS, Van Munster BC. Development and internal validation of prediction models for future hospital care utilization by patients with multimorbidity using electronic health record data. PLoS ONE. 2022;17(3):e0260829. https://doi.org/10.1371/journal.pone.0260829.

Haime V, Hong C, Mandel L, Mohta N, Iezzoni LI, Ferris TG, Vogeli C. Clinician considerations when selecting high-risk patients for care management. Am J Manag Care. 2015;21(10):e576–82.

Heins M, Korevaar J, Schellevis F, Rijken M. Identifying multimorbid patients with high care needs - a study based on electronic medical record data. Eur J Gen Pract. 2020;26(1):189–95. https://doi.org/10.1080/13814788.2020.1854719.

Fuchs VR. Current challenges to Academic Health centers. JAMA. 2013;310(10):1021. https://doi.org/10.1001/jama.2013.227197.

Berkowitz SA, Pahira JJ. Accountable Care Organization Readiness and Academic Medical Centers. Acad Med. 2014;89(9):1210–5. https://doi.org/10.1097/acm.0000000000000365.

Goldstein BA, Navar AM, Pencina MJ, Ioannidis JP. Opportunities and challenges in developing risk prediction models with electronic health records data: a systematic review. J Am Med Inform Assoc. 2017;24(1):198–208. https://doi.org/10.1093/jamia/ocw042.

de Ruijter UW, Kaplan ZLR, Bramer WM, Eijkenaar F, Nieboer D, van der Heide A, Lingsma HF, Bax WA. Prediction models for future high-need high-cost Healthcare Use: a systematic review. J Gen Intern Med. 2022;37(7):1763–70. https://doi.org/10.1007/s11606-021-07333-z.

Quinaz Romana G, Kislaya I, Cunha Gonçalves S, Salvador MR, Nunes B, Matias Dias C. Healthcare use in patients with multimorbidity. Eur J Public Health. 2019;30(1):16–22. https://doi.org/10.1093/eurpub/ckz118.

Stange KC. The Problem of Fragmentation and the need for Integrative Solutions. Ann Fam Med. 2009;7(2):100–3. https://doi.org/10.1370/afm.971.

Wammes JJG, Jeurissen P, Westert GP. (2016) 2015 International Profiles of Health Care Systems - The Dutch Health Care System https://www.commonwealthfund.org/sites/default/files/documents/___media_files_publications_fund_report_2016_jan_1857_mossialos_intl_profiles_2015_v7.pdf. Accessed December 28, 2023.

Folmer K, Mot E. (2003) Diagnosis and treatment combinations in Dutch hospitals. https://www.researchgate.net/publication/242185877_Diagnosis_and_treatment_combinations_in_Dutch_hospitals#fullTextFileContent. Accessed 3 July 2023.

Healthcare Cost and Utilization Project (HCUP). (2018) Beta Clinical Classifications Software (CCS) for ICD-10-CM/PCS. https://www.hcup-us.ahrq.gov/toolssoftware/ccs10/ccs10.jsp. Accessed 10 June 2023.

Dutch Hospital Data (n.d.) About us [Over ons]. https://www.dhd.nl/over-ons. Accessed 29 Dec 2023.

Moons KG, Altman DG, Reitsma JB, Ioannidis JP, Macaskill P, Steyerberg EW, Vickers AJ, Ransohoff DF, Collins GS. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): explanation and elaboration. Ann Intern Med. 2015;162(1):W1–73. https://doi.org/10.7326/M14-0698.

Harrison C, Britt H, Miller G, Henderson J. Examining different measures of multimorbidity, using a large prospective cross-sectional study in Australian general practice. BMJ Open. 2014;4(7):e004694–4. https://doi.org/10.1136/bmjopen-2013-004694.

Das LT, Kaushal R, Garrison K, Carrillo V, Grinspan Z, Theis R, Shenkman E, Abramson E. Drivers of preventable high health care utilization: a qualitative study of patient, physician and health system leader perspectives. J Health Serv Res Policy. 2020;25(4):220–8. https://doi.org/10.1177/1355819619873685.

Ho IS-S, Azcoaga-Lorenzo A, Akbari A, Black C, Davies J, Hodgins P, et al. Examining variation in the measurement of multimorbidity in research: a systematic review of 566 studies. Lancet Public Health. 2021;6(8):e587–97. https://doi.org/10.1016/S2468-2667(21)00107-9.

Diederichs C, Berger K, Bartels DB. The measurement of multiple chronic diseases--a systematic review on existing multimorbidity indices. J Gerontol A Biol Sci Med Sci. 2011;66A(3):301–11. https://doi.org/10.1093/gerona/glq208.

Huntley AL, Johnson R, Purdy S, Valderas JM, Salisbury C. Measures of Multimorbidity and Morbidity Burden for Use in Primary Care and Community settings: a systematic review and guide. The Annals of Family Medicine. 2012;10(2):134–41. https://doi.org/10.1370/afm.1363.

Zou H, Hastie T. Regularization and variable selection via the elastic net. J R Stat Soc Series B Stat Methodol. 2005;67(2):301–20.

Chen T, Guestrin C. XGBoost. 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2016. https://doi.org/10.1145/2939672.2939785. Accessed 3 July 2023.

Menard S. (2002) Applied Logistic Regression Analysis https://doi.org/10.4135/9781412983433.

Breiman L. Random forests. Mach Learn. 2001;45(1):5–32. https://doi.org/10.1023/a:1010933404324.

DeLong ER, DeLong DM, Clarke-Pearson DL. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics; 1988. pp. 837–45.

Steyerberg EW. Clinical prediction models. Statistics for biology and health [Internet]. 2019. https://doi.org/10.1007/978-3-030-16399-0.

Van Calster B, Vickers AJ. Calibration of risk prediction models. Med Decis Making. 2015;35(2):162–9. https://doi.org/10.1177/0272989x14547233.

Davis J, Goadrich M. (2006) The relationship between Precision-Recall and ROC curves. Proceedings of the 23rd international conference on Machine learning.

R Core Team. (2013) R: A language and environment for statistical computing.

Lundberg S, Lee S-I. A Unified Approach to interpreting model predictions. arXiv pre-print server; 2017.

Molnar C. (2023) Interpretable Machine Learning. A Guide for Making Black Box Models Explainable. https://christophm.github.io/interpretable-ml-book/. Accessed 10 June 2023.

Morawski K, Dvorkis Y, Monsen CB. Predicting hospitalizations from electronic health record data. Am J Manag Care. 2020;26(1):e7–e13. https://doi.org/10.37765/ajmc.2020.42147.

Fernández A, García S, Galar M, Prati RC, Krawczyk B, Herrera F. Learning from imbalanced data sets. 2018. https://link.springer.com/book/10.1007/978-3-319-98074-4.

Hudon C, Courteau J, Chiu YM, Chouinard M-C, Dubois M-F, Dubuc N, Elazhary N, Racine-Hemmings F, Dufour I, Vanasse A. (2020) Risk of frequent Emergency Department Use among an Ambulatory Care Sensitive Condition Population: a Population-based Cohort Study. Med Care, 58(3).

Wallace E, Stuart E, Vaughan N, Bennett K, Fahey T, Smith SM. (2014) Risk prediction models to Predict Emergency Hospital Admission in Community-dwelling adults: a systematic review. Med Care, 52(8).

Saito T, Rehmsmeier M. The Precision-Recall plot is more informative than the ROC plot when evaluating Binary classifiers on Imbalanced datasets. PLoS ONE. 2015;10(3):e0118432. https://doi.org/10.1371/journal.pone.0118432.

Wyman MF, Liebzeit D, Voils CI, Bowers BJ, Chapman EN, Gilmore-Bykovskyi A, Kennelty KA, Kind AJH, Loosen J, Rogus-Pulia N, Dattalo M. Hopes and wishes: goals of high-need, high-cost older patients and their caregivers. Patient Educ Couns. 2020;103(7):1428–34. https://doi.org/10.1016/j.pec.2020.02.022.

Annette LF, Neil RP, Lawton SC, Diane G, I, John AR. Barriers to Health Care Access among the Elderly and who perceives them. Am J Public Health. 2004;94(10):1788–94. https://doi.org/10.2105/ajph.94.10.1788.

Bjarnadóttir MV, Anderson DB, Agarwal R, Nelson DA. Aiding the prescriber: developing a machine learning approach to personalized risk modeling for chronic opioid therapy amongst US Army soldiers. Health Care Manag Sci. 2022;25(4):649–65. https://doi.org/10.1007/s10729-022-09605-4.

De Hond AAH, Leeuwenberg AM, Hooft L, Kant IMJ, Nijman SWJ, Van Os HJA, Aardoom JJ, Debray TPA, Schuit E, Van Smeden M, Reitsma JB, Steyerberg EW, Chavannes NH, Moons KGM. Guidelines and quality criteria for artificial intelligence-based prediction models in healthcare: a scoping review. Npj Digit Med. 2022;5(1). https://doi.org/10.1038/s41746-021-00549-7.

Steyerberg EW, Vickers AJ, Cook NR, Gerds T, Gonen M, Obuchowski N, Pencina MJ, Kattan MW. Assessing the performance of Prediction models: a Framework for Traditional and Novel measures. Epidemiology. 2010;21(1):128–38. https://doi.org/10.1097/EDE.0b013e3181c30fb2.

Sofaer HR, Hoeting JA, Jarnevich CS. The area under the precision-recall curve as a performance metric for rare binary events. Methods Ecol Evol. 2019;10(4):565–77. https://doi.org/10.1111/2041-210x.13140.

Valero-Bover D, Monterde D, Carot-Sans G, Cainzos-Achirica M, Comin-Colet J, Vela E, Clèries M, Folguera J, Abilleira S, Arrufat M, Lejardi Y, Solans Ò, Dedeu T, Coca M, Pérez-Sust P, Pontes C, Piera-Jiménez J. Is age the most important risk factor in COVID-19 patients? The relevance of Comorbidity Burden: a retrospective analysis of 10,551 hospitalizations. Clin Epidemiol. 2023;15:811–25. https://doi.org/10.2147/clep.s408510.

Kudesia P, Salimarouny B, Stanley M, Fortin M, Stewart M, Terry A, Ryan BL. The incidence of multimorbidity and patterns in accumulation of chronic conditions: a systematic review. J Comorb. 2021;11:26335565211032880. https://doi.org/10.1177/26335565211032880.

Roy CM, Bollman EB, Carson LM, Northrop AJ, Jackson EF, Moresky RT. Assessing the indirect effects of COVID-19 on healthcare delivery, utilization and health outcomes: a scoping review. Eur J Public Health. 2021;31(3):634–40. https://doi.org/10.1093/eurpub/ckab047.

Xiao H, Dai X, Wagenaar BH, Liu F, Augusto O, Guo Y, Unger JM. The impact of the COVID-19 pandemic on health services utilization in China: time-series analyses for 2016–2020. Lancet Reg Health West Pac. 2021;9:100122. https://doi.org/10.1016/j.lanwpc.2021.100122.

Nederlandse Zorgautoriteit. DBC-regel registratie – 2020. https://dbcregels.nza.nl/2020/ggz/typeren/typeren-dbc-wat/diagnoseclassificatie/omgaan-met-meerdere-primaire-diagnoses/index.html. Accessed 10 Jun 2023.

Van De Sande D, Van Genderen ME, Smit JM, Huiskens J, Visser JJ, Veen RER, Van Unen E, Ba OH, Gommers D, Bommel JV. Developing, implementing and governing artificial intelligence in medicine: a step-by-step approach to prevent an artificial intelligence winter. BMJ Health Care Inform. 2022;29(1):e100495. https://doi.org/10.1136/bmjhci-2021-100495.

Maruster L, van der Zee D-J, Buskens E. Identifying frequent Health Care users and care consumption patterns: process mining of Emergency Medical Services Data. J Med Internet Res. 2021;23(10):e27499. https://doi.org/10.2196/27499.

Funding

No funding to report.

Author information

Authors and Affiliations

Contributions

BM, LW and MV conceptualized and designed the study. LW collected the data. HC, LZ and LW analyzed the data. LW drafted the main article. BM, MV and PJ provided interpretation of the results. All coauthors made substantial contributions to revise the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

The Central Ethics Review Board of the UMCG approved the pseudonymous use of the data for research purposes and a waiver of informed consent.

Consent to participate and publish

The Central Ethics Review Board of the UMCG approved the pseudonymous use of the data for research purposes and a waiver of informed consent. Patient data were pseudonymized and patients who objected to the use of their data were excluded from this study prior to data collection.

Conflict of interest

The authors declare no conflicts of interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Weil, L.I., Zwerwer, L.R., Chu, H. et al. Identifying future high healthcare utilization in patients with multimorbidity – development and internal validation of machine learning prediction models using electronic health record data. Health Technol. 14, 433–449 (2024). https://doi.org/10.1007/s12553-024-00824-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12553-024-00824-z