Abstract

Driver monitoring systems (DMS) were originally developed to reduce accident risk by using cameras to analyze, in real time, facial movements associated with drowsiness and inattention, such as the driver's gaze, blinking, and head angle, and by warning the driver. Recently, their scope has expanded to passenger monitoring, and they have been linked to safety functions such as detecting unattended children or empty seats and controlling airbag deployment for occupants below a safe weight. However, evaluation research aimed at advancing and optimizing DMS algorithms remains relatively scarce. In addition, current verification methods face several limitations: privacy concerns arising from subjects' facial data, errors in reproducing drowsy and inattentive behavior, and behavioral variation among individual subjects. As the importance of traffic safety grows, an evaluation tool that can assess DMS performance more efficiently and systematically is therefore needed. In this study, we developed a driver behavior simulation dummy that can quantitatively control the movement of the driver's face and upper body. The dummy was developed in three stages: definition of functions and specifications, design and manufacture according to those specifications, and verification through error tests for each function.


1 Introduction

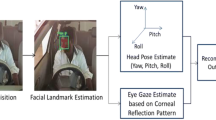

Many drivers have experienced drowsiness behind the wheel at least once, and preventing car accidents requires sustained attention while driving. According to a report by the National Highway Traffic Safety Administration (NHTSA), 95% of car accidents were caused by human error. To prevent accidents caused by human error, Euro NCAP and European Commission (EC) regulations have driven DMS adoption: DMS installation was mandated for new vehicle types from 2022, and from July 2024 it is required for all new vehicles under the revised general vehicle safety regulation. A DMS, which can prevent drowsy driving by monitoring the driver inside the vehicle, is therefore becoming increasingly important. DMS was initially developed to analyze, in real time, driver behavior related to drowsiness and inattention, such as eye movements, blinking, and head angle, and to issue warnings that prevent accidents [1]. Recently, the scope of DMS has expanded to monitoring passengers as well as the driver, and, through interior monitoring of the vehicle, DMS is being integrated with safety functions such as detecting unattended children or vacant seats and controlling airbags for occupants below a safe weight [2]. The recognition performance of DMS has also improved with technologies that fuse steering wheel and camera information to enhance monitoring accuracy [3]. DMS technologies are evolving mainly through camera-based artificial intelligence learning, and various evaluation studies are being conducted to validate algorithm functionality and performance. Many of these studies evaluate the recognition performance and warning system of a DMS with human subjects, for example by inducing drowsiness in test subjects or having them perform inattentive behaviors and then assessing recognition performance and warning effectiveness [4].
However, assessments involving human subjects make it difficult to evaluate recognition performance accurately, because individual differences introduce errors when drowsiness and inattentive behaviors are reproduced. In addition, obtaining sufficient evaluation data requires repeated trial and error, which is inefficient. The need to evaluate driver monitoring performance in real vehicles and road environments through visual analysis of eye state and head posture has also been emphasized [5]. Despite the various studies on driver gaze recognition, including those above, accurate measurement and evaluation remain difficult because drivers differ in how they attend to visual media in the cabin, such as the instrument cluster and displays. To investigate individual differences in gaze fixation on specific points of interest, we measured and compared gaze data while participants observed in-cabin devices inside an actual vehicle. As shown in Fig. 1, test subjects seated in the driver's seat were instructed to observe the rearview mirror, the hazard light switch, and the right-side mirror for approximately 15 s each, and real-time gaze data were collected using wearable eye-tracking glasses (Tobii Pro Glasses 2). The results showed that the position and distribution of gaze varied among drivers even when they observed the same target; differences in gaze distribution appeared even for the relatively small area of the hazard light switch. It is therefore difficult to obtain consistent gaze data from human drivers, whose gaze varies between individuals and over time. This means that DMS recognition results may depend on the facial movements and gaze behavior of the particular subjects tested.
Therefore, even when the operator instructs a subject to look at a specific location, some error caused by human factors is inevitable, and an evaluation device that reduces this error is needed.

By contrast, most prior studies involving human simulation dummies used them to investigate the severity of occupant injury in vehicle collisions or in pedestrian collision tests [6,7,8,9,10,11]. The dummies used in those studies cannot simulate the driver's gaze and behavior, which limits their usefulness for DMS evaluation. We therefore developed a driver behavior simulation dummy that can evaluate DMS accurately by quantitatively simulating the driver's drowsy and inattentive behaviors, and we describe the development process in detail: definition of driver behaviors, implementation of functions and mechanical design, and error testing.

2 Design Functions and Specifications

2.1 Scope of Driver Behaviors and Ranges

To define the driver behaviors to be emulated by the driver behavior simulation dummy, we surveyed DMS development studies and identified the body parts and actions of the driver that each study mainly considered. The most commonly considered behaviors were eye movements and head movements. One study determined the driver's state from the dispersion and duration of gaze and proposed methods to improve warning strategies on that basis [12]. In studies that considered head movements, geometric models were presented to estimate the yaw angle of the driver's head, and methods were proposed for detecting cognitive distraction using the position of the driver's lips [13, 14]. Other studies used motion capture to track the movements of the driver's head and skeleton and assessed inattention from the relative distances between joint positions [15]. Compiling these studies yielded six behaviors: head rotation, eye rotation, eye blinking, mouth opening and closing, upper body rotation, and upper body movement. These behaviors were further broken down by roll/pitch/yaw rotation and X/Y/Z translation axes into a total of 12 controllable functions. The range of each behavior was selected with reference to the Euro NCAP assessment protocol and a study on DMS evaluation standardization. The standardization study specifies the illumination and camera positions for DMS evaluation, as presented in Fig. 2, while the Euro NCAP protocol specifies the positions the driver looks at in each scenario [16, 17].
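The breakdown of six behaviors into 12 functions can be made explicit as a small table. The sketch below is an illustrative encoding only: the per-behavior axis split is inferred from the axes tested in Sect. 4 (upper body Pitch/Roll and X/Y/Z, head Roll/Pitch/Yaw, eye Pitch/Yaw, eyelid and mouth opening), and the identifiers are hypothetical, not those of the dummy's software. The actual ranges and resolutions are in Table 1.

```python
from enum import Enum

class Behavior(Enum):
    """The six behaviors derived from the survey of DMS studies."""
    HEAD_ROTATION = "head rotation"
    EYE_ROTATION = "eye rotation"
    EYE_BLINK = "eye blinking"
    MOUTH = "mouth opening/closing"
    UPPER_BODY_ROTATION = "upper body rotation"
    UPPER_BODY_MOVEMENT = "upper body movement"

# Hypothetical axis split per behavior, inferred from the tested axes.
FUNCTIONS = {
    Behavior.HEAD_ROTATION: ["roll", "pitch", "yaw"],
    Behavior.EYE_ROTATION: ["pitch", "yaw"],
    Behavior.EYE_BLINK: ["open/close"],
    Behavior.MOUTH: ["open/close"],
    Behavior.UPPER_BODY_ROTATION: ["roll", "pitch"],
    Behavior.UPPER_BODY_MOVEMENT: ["x", "y", "z"],
}

def count_functions(table):
    """Total number of independently controllable functions."""
    return sum(len(axes) for axes in table.values())

print(count_functions(FUNCTIONS))
```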

Taking into account the driver gaze positions specified in these two references, we calculated the ranges of eye and head rotation and of upper body movement required for the dummy to fixate on all of these positions. For each behavior, the resolution, i.e., the minimum control increment, was made as fine as the specifications of the motors and mechanisms used in the dummy allowed. The finalized behaviors and specifications of the driver behavior simulation dummy are summarized in Table 1.
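The range calculation above amounts to finding the line-of-sight angles from the eye center to each gaze target and taking the largest one per axis. The sketch below illustrates that geometry under stated assumptions: the coordinate frame (X forward, Y to the driver's left, Z up), the sign conventions, the function names, and the target coordinates are all hypothetical, not values from the Euro NCAP protocol or Table 1. It also lumps eye and head rotation into a single line of sight; how the dummy splits a gaze angle between the two is not described.

```python
import math

def gaze_angles(eye, target):
    """Yaw and pitch (degrees) of the line of sight from `eye` to `target`.

    Assumed frame (not specified in the text): X forward, Y to the
    driver's left, Z up, all in the same length unit. Yaw is positive
    toward +Y and pitch is positive upward.
    """
    dx, dy, dz = (t - e for t, e in zip(target, eye))
    yaw = math.degrees(math.atan2(dy, dx))
    pitch = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return yaw, pitch

def required_range(eye, targets):
    """Largest |yaw| and |pitch| needed to fixate every target in `targets`."""
    angles = [gaze_angles(eye, t) for t in targets.values()]
    return (max(abs(yaw) for yaw, _ in angles),
            max(abs(pitch) for _, pitch in angles))

# Hypothetical target positions (mm) relative to the eye center.
targets = {
    "rearview_mirror": (600.0, -350.0, 150.0),
    "hazard_switch": (650.0, -300.0, -250.0),
    "right_side_mirror": (700.0, -1300.0, -50.0),
}
print(required_range((0.0, 0.0, 0.0), targets))
```

Using `atan2` rather than `atan` keeps the sign of the yaw correct for targets on either side of the driver.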

2.2 Selection of Reference Points

To lay out the structure of the driver behavior simulation dummy, absolute reference points along the X/Y/Z axes had to be established; we selected the eye center and the hip point. Because the dummy is intended to be installed inside a vehicle, the absolute position of each reference point was determined from its distances along the X/Y/Z axes to the steering wheel, the cabin floor, and the rear end of the driver's seat. The vehicle interior dimensions on which the installation is based follow the Large Car dimensions in guidelines for ergonomic vehicle design [18]. In selecting the reference point positions, we referred to the dimensional data in these driver's seat design guidelines and also used anthropometric data from Size Korea to reflect the sitting posture of Korean drivers. To position the Z-axis reference point, we calculated the average distance from the hip point to the eye for Koreans, defined as the difference between eye height and hip height. The positions of the eye center and hip point reference points of the dummy are summarized in Table 2 (Fig. 3).
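The Z-axis rule above (eye height minus hip height, averaged over subjects, then added to the hip point) can be written out as a short calculation. This is a minimal sketch; the function names are hypothetical, and any numbers passed in are placeholders, not Size Korea data or the Table 2 values.

```python
from statistics import mean

def hip_to_eye_offset(eye_heights_mm, hip_heights_mm):
    """Mean vertical distance from hip point to eye, defined in the text as
    eye height minus hip height, averaged over a set of subjects."""
    if len(eye_heights_mm) != len(hip_heights_mm) or not eye_heights_mm:
        raise ValueError("need paired, non-empty measurements")
    return mean(e - h for e, h in zip(eye_heights_mm, hip_heights_mm))

def eye_center_z(hip_point_z_mm, offset_mm):
    """Z coordinate of the eye center, placed `offset_mm` above the hip point."""
    return hip_point_z_mm + offset_mm

# Placeholder measurements for illustration only.
offset = hip_to_eye_offset([1500.0, 1600.0], [800.0, 850.0])
print(eye_center_z(300.0, offset))
```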

3 Structure Design and Implementing Functions

3.1 Structure Design

Based on the defined functions and specifications, we designed the structure of the driver behavior simulation dummy. Its key components are servo motors, a main control board, and potentiometers. Control values entered in the dedicated software are transmitted to the main control board, which drives the servo motor connected to each axis. Each servo motor rotates or translates until the position measured by its potentiometer reaches the commanded value, and then stops. Because the servo motors and potentiometers continuously compare the actual motion with the commanded value, any discrepancy is corrected through feedback. Since the key components for the upper body and the face differ in specification, the structure was divided into a base plate responsible for upper body movements and a head skull responsible for facial movements, to minimize interference between the two. The head skull is designed to be attached to and detached from the base plate. The dimensions of the base plate were determined from the reference point positions, the range of movement of the dummy, and installation in the interior of a Large Car-sized vehicle, as shown in Fig. 4.
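The internal control law of the servo motors is not described. As a minimal sketch of the closed-loop behavior described above, assuming simple proportional control, the simulation below moves one axis toward a commanded value, using `position` as a stand-in for the potentiometer reading, and stops once the error is within a deadband. The gain, per-tick step limit, and deadband are hypothetical parameters.

```python
def run_axis(setpoint, position=0.0, gain=0.5, max_step=2.0,
             deadband=0.05, max_ticks=1000):
    """Drive one simulated axis toward `setpoint` using position feedback.

    `position` stands in for the potentiometer reading. Each tick the motor
    moves proportionally to the error, limited to `max_step`, and it stops
    once the error falls inside `deadband`. Returns (position, ticks used).
    """
    for tick in range(max_ticks):
        error = setpoint - position            # compare command with feedback
        if abs(error) <= deadband:
            return position, tick              # close enough: motor stops
        step = max(-max_step, min(max_step, gain * error))
        position += step                       # motor moves; pot reads new value
    raise RuntimeError("axis did not settle within max_ticks")

final_position, ticks = run_axis(15.0)
```

The step limit mimics a motor's finite speed; in this simplified model a higher speed only shortens settling, and it does not capture the speed-related overshoot or backlash discussed in Sect. 5.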

As shown in Fig. 5, a fixing structure was manufactured to mount the base plate at the vehicle seat position; with it, the dummy can currently be mounted on a Genesis DH seat. One limitation is that mounting the dummy in another vehicle requires a separate fixing structure made for that vehicle. However, the base plate, including the head skull, was designed to accommodate the range of driver behavior in the seats of both passenger cars (sedans) and commercial vehicles (buses).

In designing the head skull of the driver behavior simulation dummy, the focus was on making it as human-like as possible, because the DMS must recognize it as a real person rather than a mannequin. To this end, Size Korea measurement data for males in their 30s were referenced, and the average values for 45 facial components were calculated and applied in the design. Figure 6 shows representative facial components applied in the head skull design.

The head skull reproduces the rotation of the driver's head and eyes, and a laser pointer was inserted into the left eye to enable real-time tracking of the eye gaze. The skin covering the head skull was made of a silicone material that closely resembles human skin so that the DMS would recognize the dummy as a real person; Figure 7 confirms that the DMS recognizes the dummy as a human. Durability was also considered, so that the skin can withstand external influences from the experimental environment and repeated actions. Because the dummy is intended for use in the vehicle interior, an RTV silicone suited to indoor temperatures was used. This material is highly flexible and durable and is therefore commonly used for items that undergo frequent movement, such as medical prosthetics.

This section has described the dimensions of the base plate and head skull and the specifications of the key components for each motion axis. The hardware specifications of the driver behavior simulation dummy are summarized in Table 3.

3.2 Implementing Functions

To evaluate DMS performance efficiently, we developed dedicated software that provides self-feedback of control values and behavior patternization. The software, developed in the Ruby-based FlowStone environment, consists of a graphical interface for controlling each action of the dummy and a sequencer interface for arranging behavior patterns over time. The action control interface controls the 12 rotation and translation functions derived when the functions and specifications were defined. Each function can be controlled within its maximum range and at its resolution, and an animation lets the user visually confirm the dummy's action when control values are entered. In addition, the actual values fed back from the potentiometers are displayed, so errors can be checked in real time. The action control interface is shown in Fig. 8.
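Controlling each function "within its maximum range and at its resolution" can be pictured as clamping a requested value to the axis limits and snapping it to the resolution grid, then showing the difference between command and feedback. The sketch below is an assumption about how such an interface might behave, not the FlowStone implementation; `AxisSpec`, `tracking_error`, and the example range and resolution are hypothetical (the real values are in Table 1).

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class AxisSpec:
    """Range and resolution of one controllable function (hypothetical values)."""
    name: str
    minimum: float
    maximum: float
    resolution: float

    def command(self, requested):
        """Clamp a requested value to the range and snap it to the resolution grid."""
        clamped = min(self.maximum, max(self.minimum, requested))
        steps = round((clamped - self.minimum) / self.resolution)
        # Never snap past the upper limit when the range is not an exact
        # multiple of the resolution.
        max_steps = math.floor((self.maximum - self.minimum) / self.resolution + 1e-9)
        return self.minimum + min(steps, max_steps) * self.resolution

def tracking_error(commanded, measured):
    """Error shown to the operator: command minus potentiometer feedback."""
    return commanded - measured

head_yaw = AxisSpec("head_yaw", -60.0, 60.0, 0.5)  # hypothetical spec
cmd = head_yaw.command(12.3)  # snapped to the nearest 0.5 step
```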

To support efficient DMS evaluation, we also developed a patternization feature, implemented in the sequencer interface at the bottom of the software, that allows driver actions to be performed repeatedly. A behavior pattern is defined by entering the start and end positions of an action together with its execution time. Patterns defined in this way can be arranged sequentially in the sequencer interface to build sequences of actions over time. For example, as shown in Fig. 9, a pattern rotating Head Pitch by 15° and a pattern rotating Head Yaw by 20° can be arranged consecutively to implement the driver looking at the rearview mirror, and this pattern can be repeated. When patterns are arranged as in Fig. 9, the Pitch and Yaw movements occur one after the other; patterns can also be entered simultaneously so that both axes move together. The execution time of patterns in the sequencer interface can be set in increments of 0.125 s.
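A sequencer of this kind can be modeled as a list of patterns, each with an axis, start and end positions, a start time, and a duration, with times restricted to multiples of 0.125 s. The sketch below assumes linear motion within each pattern, which the text does not specify; `Pattern` and `sample` are hypothetical names. The usage lines reproduce the rearview-mirror example both sequentially and simultaneously.

```python
from dataclasses import dataclass

TIME_STEP_S = 0.125  # minimum time increment stated for the sequencer

@dataclass(frozen=True)
class Pattern:
    axis: str
    start_deg: float
    end_deg: float
    t0_s: float        # when the pattern begins on the timeline
    duration_s: float

    def __post_init__(self):
        for t in (self.t0_s, self.duration_s):
            if abs(t / TIME_STEP_S - round(t / TIME_STEP_S)) > 1e-9:
                raise ValueError(f"{t} s is not a multiple of {TIME_STEP_S} s")

def sample(patterns, t):
    """Commanded angle of every axis at time t, assuming linear motion."""
    state = {}
    for p in sorted(patterns, key=lambda p: p.t0_s):
        if t < p.t0_s:
            # Not started yet: hold any earlier value, else the start position.
            state.setdefault(p.axis, p.start_deg)
            continue
        frac = min(1.0, (t - p.t0_s) / p.duration_s) if p.duration_s else 1.0
        state[p.axis] = p.start_deg + frac * (p.end_deg - p.start_deg)
    return state

# Rearview-mirror glance: Pitch then Yaw (sequential) or both at once.
sequential = [Pattern("head_pitch", 0.0, 15.0, 0.0, 1.0),
              Pattern("head_yaw", 0.0, 20.0, 1.0, 1.0)]
simultaneous = [Pattern("head_pitch", 0.0, 15.0, 0.0, 1.0),
                Pattern("head_yaw", 0.0, 20.0, 0.0, 1.0)]
```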

4 Experiment

4.1 Environment and Methodology

To verify the accuracy of the driver behavior simulation dummy, an error test was conducted. Although the dummy displays the error between the input value and the actual value in real time through continuous feedback between the servo motors and potentiometers, errors may still arise from mechanical play in its mechanisms. In the test, the control range of each function was divided into steps, the control movement at each step was performed repeatedly, and the actual rotation and displacement were measured and compared with the control values entered in the software to determine the error. For precise measurement, the dummy was set up in the laboratory so that its Z-axis was vertical and the bottom surface of its base plate was horizontal with respect to the ground, and its front surface was adjusted to be parallel to the wall. To facilitate measurement, protective film was attached to the wall so that the points indicated by the dummy's left-eye laser pointer could be marked and the distances between them measured. The error test scenarios for each movement axis of the dummy are summarized in Table 4. Eyelid and mouth opening and closing were excluded because their gaps could not be measured accurately. For the upper body, X/Y/Z translations were measured by marking a reference line on the underside of the base plate structure and measuring the distance moved after each control input, and Pitch/Roll rotations were measured with a digital level attached to the Z-axis of the base plate.
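The error computation described above can be sketched as follows, assuming the reported "average error" is the mean absolute difference between measured and commanded values over all steps and repetitions (the text does not state whether signed or absolute errors were averaged). The function names and the sample numbers in the test are hypothetical, not measurements from Tables 5 and 6.

```python
from statistics import mean

def step_errors(trials):
    """Mean absolute error at each commanded step.

    `trials` maps each commanded value to the measured values recorded
    over repeated executions of that step.
    """
    return {cmd: mean(abs(m - cmd) for m in measured)
            for cmd, measured in trials.items()}

def mean_abs_error(trials):
    """Mean absolute error over every repetition of every step for one axis."""
    return mean(abs(m - cmd) for cmd, measured in trials.items() for m in measured)

def within_resolution(trials, resolution):
    """True when the axis's average error is below its control resolution."""
    return mean_abs_error(trials) < resolution
```

The last function mirrors the comparison made in Sect. 5, where each axis's average error is judged against its resolution.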
Head movements were measured with the same procedure as upper body movements: repeated movements were performed at each step, and the measured values were compared with the control values. For Roll/Pitch/Yaw rotation of the head, an eyeglass-type eye tracker was attached to the dummy's head, and the output of its built-in gyro sensor was converted into head rotation angles. The Pitch/Yaw rotation of the eyes was measured using the laser pointer embedded in the left eye: the distance between the origin point projected on the wall and the point after movement was measured, along with the distance between the dummy's eyes and the wall, and the rotation angle was computed as the arctangent of the ratio of these two distances (Figs. 10, 11).
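The laser-pointer measurement reduces to a right-triangle calculation: with the wall perpendicular to the initial line of sight, the eye rotation is the arctangent of the spot displacement over the eye-to-wall distance. A minimal sketch follows; the function names are hypothetical, and the distances used in the test are illustrative only.

```python
import math

def eye_rotation_deg(spot_offset_mm, eye_to_wall_mm):
    """Eye rotation implied by the laser spot's displacement on the wall.

    Assumes the wall is perpendicular to the initial line of sight, as in the
    set-up where the dummy's front surface was aligned parallel to the wall.
    """
    return math.degrees(math.atan2(spot_offset_mm, eye_to_wall_mm))

def spot_offset_mm(angle_deg, eye_to_wall_mm):
    """Inverse: where a commanded rotation should place the spot on the wall."""
    return eye_to_wall_mm * math.tan(math.radians(angle_deg))
```

Because the angle scales with the eye-to-wall distance, placing the wall farther away improves the measurement: at 1 m, a 1 mm error in locating the spot corresponds to only about 0.06°.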

4.2 Results of Upper Body Control

The error test results for upper body movements showed an average error of 0.62° for Pitch rotation, while Roll rotation exhibited a relatively higher error of 1.12°. For the translational movements, the average errors were observed to be 0.48 mm, 0.49 mm, and 0.29 mm for the X, Y, and Z axes, respectively. The error in Z-axis movement was lower compared to the other axes (Tables 5, 6).

4.3 Results of Head Control

For eye rotation, the average error was 0.19° in Pitch and a relatively higher 1.08° in Yaw. Unlike the other movement axes, the head skull offers limited space for components such as servo motors and gears, and the clearance between components was largest for eye Yaw rotation, which is believed to have contributed to its larger error. For head rotation, the average errors were 1.67°, 1.94°, and 1.58° for Yaw, Pitch, and Roll, respectively, with Pitch showing the largest average error. This is presumably because, of the three rotations, Pitch is most affected by the weight of the head skull. The average errors of head movements were generally larger than those of other movements, which is attributed to noise inherent in the gyro sensor used to measure the actual values.

5 Conclusions and Future work

In this study, we developed a driver behavior simulation dummy that quantitatively controls driver actions for more systematic and efficient evaluation of DMS performance. Error tests of upper body and head control demonstrated the accuracy of the proposed apparatus: the average error for each movement axis was generally lower than its resolution, indicating that the dummy can be controlled precisely enough to serve as a DMS evaluation tool. We believe it can overcome a limitation of previous DMS evaluation methods using human subjects, namely movement errors caused by individual differences between subjects. However, some movement axes showed average errors higher than their resolution. For the upper body Roll axis, this is likely because its motor rotates faster than the Pitch motor, so speed-related effects produce larger errors. The eye Yaw axis, which has the most constrained space for core components such as motors and gears, likely suffered from interference and backlash, leading to significant errors. The mechanical design of these two axes needs to be improved to minimize errors. The average errors for head Roll, Pitch, and Yaw rotation were generally higher than those of the other movement axes. However, an average error of less than 2° is considered acceptable for simulating a driver visually identifying small controls in the vehicle, such as the hazard light switch, and is not expected to affect the DMS's judgment of the driver's state. In addition, the gaze positions and eye behaviors (degree of eye opening and closing, blink frequency, etc.) that Euro NCAP uses to detect inattention and drowsiness can be reproduced and verified with the proposed dummy, enabling detection performance to be evaluated across various test cases.
A limitation of this study is that the effect of external factors in the actual vehicle environment, such as vibration, on the dummy's control accuracy was not examined. In future work, we intend to improve the overall control accuracy of the dummy through mechanical improvements and to verify its effectiveness as an evaluation tool by evaluating various DMSs in real vehicles under both static and dynamic driving conditions.

Abbreviations

- DMS: Driver monitoring system
- Euro NCAP: European New Car Assessment Programme
- EC: European Commission
- RTV: Room temperature vulcanization

References

1. Dong, Y., Hu, Z., Uchimura, K., & Murayama, N. (2011). Driver inattention monitoring system for intelligent vehicles: A review. IEEE Transactions on Intelligent Transportation Systems, 12(2), 596–614.

2. Song, H., & Shin, H. (2021). Single-channel FMCW-radar-based multi-passenger occupancy detection inside vehicle. Entropy (MDPI), 23(11).

3. Yekhshatyan, L., & Lee, J. D. (2013). Changes in the correlation between eye and steering movements indicate driver distraction. IEEE Transactions on Intelligent Transportation Systems, 14(1), 136–145.

4. Kim, S., Yae, J., Shin, J., & Choi, K. (2018). Design and evaluation of haptic signals for drowsy driving warning system. Journal of the Ergonomics Society of Korea, 37(3), 243–257.

5. Tawari, A., Martin, S., & Trivedi, M. M. (2014). Continuous head movement estimator for driver assistance: Issues, algorithms, and on-road evaluation. IEEE Transactions on Intelligent Transportation Systems, 15(2), 818–830.

6. Zhang, C., Li, X., Lei, Y., Zhang, D., & Zhang, T. (2024). Driver injury during automatic emergency steering in vehicle-vehicle side-impact collisions. IEEE Access, 12, 9400–9417.

7. Dong, L. P., Zhu, X. C., & Ma, Z. X. (2015). Side impact sled test method based on multipoint impact. J. Tongji Univ., 43(8), 1213–1218.

8. Shang, E. Y., Zhou, D. Y., & Li, Y. M. (2020). Research on abnormal problems of the Q3 Dummy's chest acceleration curve in frontal crash test. Automob. Technol., 20(3), 45–49.

9. Fanta, O., Lopot, F., Kubovy, P., Jelen, K., Hilmarova, D., & Svoboda, M. (2022). Kinematic analysis and head injury criterion in a pedestrian collision with a tram at the speed of 10 and 20 km/h. Manuf. Technol., 22(2), 139–145.

10. Peng, Y., Hu, Z., Liu, Z., Che, Q., & Deng, G. (2024). Assessment of pedestrians' head and lower limb injuries in tram-pedestrian collisions. Biomimetics, 9(1), 17.

11. Park, M., Lee, J., Choi, I., & Jeon, J. (2022). Performance of AEB vehicles in rear-end and cut-in collisions. International Journal of Precision Engineering and Manufacturing, 23, 139–145.

12. Ahlstrom, C., Kircher, K., & Kircher, A. (2013). A gaze-based driver distraction warning system and its effect on visual behavior. IEEE Transactions on Intelligent Transportation Systems, 14(2), 965–973.

13. Narayanan, A., Kaimal, R. M., & Bijlani, K. (2016). Estimation of driver head yaw angle using a generic geometric model. IEEE Transactions on Intelligent Transportation Systems, 17(12), 3446–3460.

14. Azman, A., Ibrahim, S. Z., Meng, Q., & Edirisinghe, E. A. (2014). Physiological measurement used in real time experiment to detect driver cognitive distraction. In 2014 International Conference on Electronics, Information and Communications (ICEIC). IEEE.

15. Gallahan, S. L., Golzar, G. F., Jain, A. P., Samay, A. E., Trerotola, T. J., Weisskopf, J. G., & Lau, N. (2013). Detecting and mitigating driver distraction with motion capture technology: Distracted driving warning system. In 2013 IEEE Systems and Information Engineering Design Symposium. IEEE.

16. Tian, R., Ruan, K., Le, J., Greenberg, J., & Barbat, S. (2019). Standardized evaluation of camera-based driver state monitoring systems. IEEE/CAA Journal of Automatica Sinica, 6(3), 716–732.

17. Euro NCAP. (2013). Assessment protocol – SA – Safe Driving. Euro NCAP. www.euroncap.com/en/for-engineers/protocols/safety-assist

18. Bhise, V. D. (2011). Ergonomics in the automotive design process. CRC Press, Taylor & Francis Group.

Acknowledgements

We would like to acknowledge the financial support from the R&D Program of Ministry of Trade, Industry and Energy of Republic of Korea (20014353, The development of passenger interaction system with level 4 of Automated driving).


Additional information


This paper was presented at PRESM2023.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yae, J.H., Oh, Y.D., Kim, M.S. et al. A Study on the Development of Driver Behavior Simulation Dummy for the Performance Evaluation of Driver Monitoring System. Int. J. Precis. Eng. Manuf. (2024). https://doi.org/10.1007/s12541-024-01037-0


DOI: https://doi.org/10.1007/s12541-024-01037-0