Abstract

Laser micro-drilling is an important manufacturing method used to drill precise microscopic holes into metals. Quality inspection of micro-holes is costly, and redrilling defective holes can introduce imperfections owing to misalignment when the removed specimens are re-aligned. Thus, this paper proposes an in-situ, automatic inspection method that uses photodiode data and machine learning models to detect defects in real time during the fabrication of SK5 steel plates with 1064 nm Nd:YAG laser machines, in order to reduce the workload and increase the quality of products. Further, it explores the possibility of generalizing the models to 51 different fabrication scenarios by classifying unseen data into 51 classes. A dataset of around 1,500,000 time-series data points was generated using an optical probe while drilling over 56,000 holes into test specimens. Fifteen different combinations of thickness and diameter were drilled using suggested parameters. An additional 12 potential defect-prone conditions were designed to obtain data during conditional drilling. Hole quality was measured for each hole using an OGP 3D profile microscope measuring machine. Results showed high accuracy in specialized defect detection within each scenario and showed a possibility of classifying photodiode data patterns, offering opportunities to improve the practicality of the proposed solution.

1 Introduction

Advances in many fields require decreased size and increased precision, leading to higher demand for precision manufacturing, including micro-drilling. Laser beam micro-drilling is a favorable method that achieves high-quality micro-holes with impressive accuracy and precision and is used in various fields including biomedical (implants, medicine), aerospace, robotics, and electronic applications [1]. The current method of quality checking is highly inefficient and prone to subjective judgment. After the drilling process is completed, the specimen is removed from the machine and undergoes a quick visual inspection in which light penetration is checked. Sampling inspection, at a sample rate requested by the client, is then done using a precision microscope. If the quality of the sampled holes does not meet the requirements set by the client, correctable defective holes are re-drilled. The vast number of holes makes total inspection extremely costly, hindering its economic feasibility. Visual inspection is susceptible to subjective judgment, which increases uncertainty in the reliability of quality control. When redrilling is necessary, the need to remove specimens from the machine delays completion and reduces quality because of machining errors introduced while realigning the specimen on the workspace. The objective of this project is to overcome the limitations of the current quality inspection method: overcome the time and cost limitations of total inspection using real-time quality inspection through machine learning, exclude subjective judgment using automatic inspection, prevent the need to reschedule for redrilling, and overcome machining errors by eliminating the need for plate removal. Thus, in this paper, we propose a real-time, in-situ automatic quality inspection method using real-time light intensity measurements and machine learning defect detection models.
To achieve this goal, an experiment was designed to drill over 56,000 holes onto SK5 metal plates with varying thicknesses using an Nd:YAG laser machine. Photodiode data collected during the fabrication was used to train machine learning models designed for anomaly detection to identify defective holes. In the following section a more detailed background of the specific laser drilling machine and process, anomaly detection using machine learning, and related work will be given.

2 Background

2.1 Laser Micro-drilling

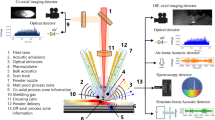

The laser machine used for this research is a neodymium-doped yttrium aluminum garnet (Nd:YAG) laser emitting a pulsed laser beam with a wavelength of 1064 nm. The drilling process used was trepanning drilling, where the pulsed laser creates a hole by removing a circular disk from the workpiece [2]. In particular, the path taken by the laser is 1 → 2 → 3 → 3 → 3, as shown in Fig. 1: the laser circles the full diameter three times to prevent under-drilling.

Parameters that affect the quality of drilled holes can be divided into two categories: laser parameters and physical parameters. Laser parameters include settings such as peak power, frequency, and percent duty of the laser, which control the intensity and schedule of the laser pulsing. Physical parameters include the velocity of the base stage where the specimen is fixed and the laser defocus, which is the distance between the laser head and the specimen surface in relation to the focal length of the laser [3].

2.2 Anomaly Detection Algorithms

Anomalies are outliers that are significantly different from other normal instances. Accordingly, anomaly detection aims to identify points that deviate from the generally normal data [4]. Anomaly detection algorithms are actively used in various applications, namely fraud detection [5], health monitoring [6, 7], surveillance monitoring [8], predictive maintenance [9,10,11], and defect detection [12, 13]. The latter two applications are closely related to manufacturing, where key events like machine failure and defective products are rare events that are significantly different from normal situations. The task of laser micro-drilling is a defect detection problem where an estimated average of 1–2% of holes are reported to be defective.

Machine learning algorithms are effective in anomaly detection as they can be trained on a vast amount of normal data. Some well-known machine learning algorithms include local outlier factor (LOF), K-nearest neighbors (KNN), support vector machines (SVM), Bayesian networks and autoencoders [14]. In this paper the autoencoder algorithm was explored.

2.3 Related Work

Multiple works have been done regarding laser drilling and hole quality. Many focused on the task of quality prediction with the goal of parameter optimization. Baiocco et al. [15] used artificial neural networks (ANNs) to predict the kerf width and hole diameter given four parameters: pulse duration (Pd), cutting speed (Cs), focus depth (Fd), and laser path (Lp). Biswas et al. [16] attempted to predict the optimal values for machining parameters (lamp current, pulse frequency, pulse width, air pressure, and focal length) that produce the best hole circularity and taper using ANNs. Ranjan et al. [17] used time-series signals to predict the quality of traditionally micro-drilled holes. The adaptive neuro-fuzzy inference system (ANFIS) model was trained on wave packets from vibration signals and cutting force signals.

Some works attempted real-time defect detection and monitoring of other laser manufacturing processes. Zuric and Brenner [18] used lateral monitoring to detect defects during ultrashort pulse laser micro structuring. Valtonen et al. [19] collected real-time photodiode data to detect defects by detecting when the laser missed a pulse using an industrial computer PXI system with a Data Acquisition card and Real-Time Controller module.

3 Methodology

3.1 Methodology Overview

Our approach stems from the repeated observation of unusually large amounts of light emitted during defective hole drilling. To quantify this phenomenon, light intensity was collected to monitor the drilling process and used as data for machine learning modeling [20, 21]. A Thorlabs PM16-140-USB Power Meter with a wavelength range of 350–1100 nm was used to measure five light intensity parameters: power [W], logarithmic power [dBm], saturation [%], irradiance [\({\mathrm{W}/\mathrm{cm}}^{2}\)], and current [A]. A web camera was also installed for visual monitoring throughout the experiment. Both the sensor and camera were controlled using an edge computer, allowing remote data collection and control as shown in Fig. 2.

A total of six SK5 metal plate specimens were fabricated as part of this experiment. Two specimens were prepared for each of the three thicknesses, namely 1 mm, 1.5 mm, and 1.8 mm. For each thickness, five different diameters (0.05 mm, 0.08 mm, 0.2 mm, 0.3 mm, and 0.5 mm) were drilled using normal laser and physical parameters suggested by field experts, resulting in a total of 35,640 normal condition holes. In addition, 20,736 holes were drilled to test 12 defect-prone conditions on 0.08 mm diameter holes at each of the three thicknesses. The chosen conditions were obstacles (glass, metal, sand particles), surface scratches, laser defocus (positive and negative), and unsuggested laser parameters (peak power, frequency, and duty). Two diagrams repeated for each of the three thicknesses are shown in Fig. 3a, b. More detailed combinations of diameter and condition can be seen in Tables 1 and 2.

Light intensity data collected during the fabrication of the six specimens were processed and used as data to train and test our machine learning model. The trained model is used to identify holes that are likely to be defective. To verify the performance of the final model as a defect detector, the actual quality of each hole was measured using a precision microscope to distinguish real defects. Model performance was quantified using accuracy, detection rate, false rejection rate (FRR), and false acceptance rate (FAR).

Additionally, a classification model using DNN will be trained using the same data with 51 classes, one for each combination. The accuracy and confusion matrix will be used as a performance measure of classification.

3.2 Machine Learning Model

3.2.1 Autoencoder Algorithm

Autoencoders are trained with a dataset containing mostly normal data and learn repeated patterns within the normal dataset. Patterns are learned through updating weights in compression (encoder) and expansion (decoder) layers. The model outputs the reconstructed values as a result of compression and expansion as shown in Fig. 4. The difference between the original and reconstructed values is used to calculate the loss, namely the reconstruction loss, and is used as a measure of how abnormal the input data is.

Weights inside the encoder and decoder layers are updated through backpropagation as Eq. (1) during the training process. Trained autoencoders reconstruct new, unseen data using the trained encoding and decoding layers. New data sharing similar patterns with normal data is more likely to be reconstructed close to the original data while data with abnormal patterns are more likely to be unsuccessful in accurate reconstruction yielding high reconstruction loss.

where \(\alpha\) is the learning rate, \(E\left[\left|x-x^{\prime}\right|\right]\) is the mean absolute error (MAE) loss, and x′ is the reconstruction of the input x. The update is applied to all weights w.
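The update equation itself is not reproduced in this text. As a minimal sketch, a standard gradient-descent update that is consistent with the symbols defined here would read as follows. The exact form of Eq. (1) in the original may differ, and the composition \(x^{\prime} = {P}_{\theta}({Q}_{\varphi}(x))\) is inferred from the encoder and decoder functions listed in the Abbreviations.

```latex
w \leftarrow w - \alpha \, \frac{\partial}{\partial w} E\left[\left| x - x^{\prime} \right|\right],
\qquad
x^{\prime} = {P}_{\theta}\left({Q}_{\varphi}(x)\right)
```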

The specific design of layers within the encoder and decoder determines the functions \({P}_{\theta }\) and \({Q}_{\varphi }\). In this paper, LSTM and CNN layers were tested as encoding and decoding layers. LSTM is a type of recurrent neural network (RNN) that is capable of storing long-term dependencies within time-series data. The internal structure of an LSTM unit consists of a cell state and three gates: an input gate, an output gate, and a forget gate, as depicted in Fig. 5. New and past information is added and forgotten to optimize the loss. CNN is often used for image classification, where repeated patterns appear in a spatially invariant manner. It uses kernels to convolve the data to create feature maps, pooling to reduce the length during encoding, and up-sampling to reconstruct the original length. The same trepanning drilling is repeated for each hole, which should be learned by CNN layers as a pattern for normal holes.

The threshold is set as the sum of the mean and three standard deviations of the reconstruction loss. Holes with loss values greater than the threshold are considered defective.
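The thresholding rule can be sketched in a few lines of NumPy. This is an illustration under stated assumptions rather than the authors' implementation: the function names are hypothetical, and the sketch assumes the mean and standard deviation are computed over reconstruction losses from a reference set of mostly normal data, which the text does not state explicitly.

```python
import numpy as np

def mae_per_sample(x, x_rec):
    """Mean absolute error between each input row and its reconstruction."""
    x = np.asarray(x, dtype=float)
    x_rec = np.asarray(x_rec, dtype=float)
    return np.mean(np.abs(x - x_rec), axis=1)

def three_sigma_threshold(reference_losses):
    """Threshold = mean + 3 * standard deviation of the reference losses.

    Assumption: the reference losses come from (mostly) normal data.
    """
    losses = np.asarray(reference_losses, dtype=float)
    return losses.mean() + 3.0 * losses.std()

def flag_defects(losses, threshold):
    """Boolean mask that is True where the loss exceeds the threshold."""
    return np.asarray(losses, dtype=float) > threshold
```

In use, `three_sigma_threshold` would be evaluated once on the reference losses, and `flag_defects` would then be applied to the loss of each newly drilled hole.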

3.2.2 Model Structure

The length of the collected data varied depending on the number of photodiode data points collected during the fabrication of each section. To accommodate two down-sampling layers, each by a factor of two, the inputs were padded with zeros to the nearest multiple of four. The input to the LSTM model is a one-dimensional vector of the padded data. For the CNN model, the collected data was padded to the nearest multiple of 128, and then 128 data points were grouped as one input data sample for a consistent input dimension. Both the LSTM and CNN models have two layers in their encoder and decoder. The first encoding layer increases the number of channels to 8 and the second layer to 16 channels. The decoder reverses this process, from 16 channels to 8 to a flattened vector matching the shape of the input. Figure 6 depicts the entire CNN model structure from the input to the encoding layers, decoding layers, and the output. The mean absolute error (MAE) of the input values and the reconstructed values is compared with the threshold to determine whether the input belongs to a defective hole or not. Hyperparameters of CNN and LSTM are listed in Table 3.
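The padding and grouping steps described above can be illustrated with a short NumPy sketch. The function names are hypothetical, and the sketch assumes that zeros are appended at the end of the series, which the text does not specify.

```python
import numpy as np

def pad_to_multiple(series, multiple):
    """Zero-pad a 1-D series at the end so its length is a multiple of `multiple`."""
    series = np.asarray(series, dtype=float)
    remainder = len(series) % multiple
    if remainder == 0:
        return series
    return np.pad(series, (0, multiple - remainder), mode="constant")

def to_windows(series, window=128):
    """Pad to a multiple of `window`, then group into rows of `window` points."""
    padded = pad_to_multiple(series, window)
    return padded.reshape(-1, window)

# A series of 300 points becomes 3 rows of 128 points (84 trailing zeros).
windows = to_windows(np.ones(300))
```

Padding to a multiple of four keeps the length an integer after two halvings, so up-sampling twice in the decoder returns exactly the input length.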

3.2.3 Classifier Algorithm

Classification is another fundamental machine learning technique that aims to differentiate samples in a dataset into distinct groups. In this paper, a DNN will be used to classify the entire dataset into 51 classes (17 different combinations for each of the three thicknesses). For multiclass classification, Softmax loss is used. Softmax loss refers to the use of Softmax activation followed by a Cross-Entropy loss. The Softmax activation function maps the final layer of the DNN to the probability of being each of the 51 classes as a score. Cross-Entropy loss is used to penalize false predictions for efficient training.

The weights are updated by backpropagation and the chain rule, as shown in Eq. (3), where f is the activation function.

When using Softmax with Cross-Entropy, the loss function reduces in the following manner

where \({s}_{p}\) is the score of the true class and C is the total number of classes.
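The reduced loss is not reproduced in this text. For reference, the standard form of cross-entropy applied to a Softmax output with a one-hot label, written with the symbols defined here (\({s}_{p}\), \({s}_{i}\), C, and L for the loss), is given below. It is a textbook identity and is assumed, not confirmed, to match the original equation.

```latex
L = -\log\left( \frac{e^{{s}_{p}}}{\sum_{i=1}^{C} e^{{s}_{i}}} \right)
  = -{s}_{p} + \log \sum_{i=1}^{C} e^{{s}_{i}}
```

Only the score of the true class appears in the numerator, so the loss falls as \({s}_{p}\) rises relative to the scores of the other C − 1 classes.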

3.3 Hole Quality Measure

Hole quality is represented using multiple parameters that affect the functionality of the final product. The circularity or roundness [16, 17, 22] and taper [23] are key features used to determine the quality of micro holes. In this paper, diameter error and center alignment of the holes at the entrance and exit were also used, resulting in six hole quality parameters: diameter error at entrance and exit, circularity at entrance and exit, taper, and hole alignment.

An OGP 3D profile microscope measuring machine (Fig. 7) was used to routinely measure the diameter, roundness, and hole center coordinates (x, y, z) for both the entrance and exit sides of all holes. Diameter error was calculated as the difference between the designed and measured hole diameter. Taper and hole alignment were represented using angles calculated by Eqs. (6) and (7) from Fig. 8, respectively.
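Eqs. (6) and (7) and Fig. 8 are not reproduced in this text, so the sketch below uses a common geometric convention instead of the authors' exact formulas. It assumes that the taper angle is the half-angle of the cone between the entrance and exit diameters across the plate thickness, and that the alignment angle is the tilt of the line joining the entrance and exit centers. All function and parameter names are hypothetical.

```python
import math

def diameter_error(designed_mm, measured_mm):
    """Absolute difference between designed and measured diameter (mm)."""
    return abs(measured_mm - designed_mm)

def taper_angle(d_ent_mm, d_ext_mm, thickness_mm):
    """Assumed taper half-angle (rad) from entrance/exit diameters and thickness."""
    return math.atan((d_ent_mm - d_ext_mm) / (2.0 * thickness_mm))

def alignment_angle(c_ent, c_ext, thickness_mm):
    """Assumed tilt (rad) of the hole axis from the (x, y) centers at entrance and exit."""
    offset = math.hypot(c_ext[0] - c_ent[0], c_ext[1] - c_ent[1])
    return math.atan(offset / thickness_mm)
```

For example, a hole with entrance and exit diameters of 0.10 mm and 0.08 mm in a 1 mm plate would have an assumed taper of `math.atan(0.01)`, roughly 0.01 rad.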

A defect is defined based on the requirements of the different use cases of the drilled plates. In this paper, in consultation with field experts, a defect was defined as a hole with a diameter error greater than ten percent of the desired diameter, a circularity less than 0.002, or a taper or hole alignment error greater than 0.25 radians.
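The defect criteria above translate directly into a labeling rule. The following is a minimal sketch with hypothetical names. For brevity it checks a single diameter and circularity value, whereas the paper measures both at the entrance and at the exit, so in practice the check would be applied to each side.

```python
def is_defective(designed_d, measured_d, circularity, taper_rad, alignment_rad):
    """Label a hole as defective using the thresholds stated in the text."""
    # Diameter error greater than ten percent of the desired diameter.
    diameter_bad = abs(measured_d - designed_d) > 0.10 * designed_d
    # Circularity below 0.002, as stated in the text.
    circularity_bad = circularity < 0.002
    # Taper or hole alignment error greater than 0.25 rad.
    angle_bad = abs(taper_rad) > 0.25 or abs(alignment_rad) > 0.25
    return diameter_bad or circularity_bad or angle_bad
```

A hole designed at 0.08 mm and measured at 0.09 mm would be flagged, because the 0.01 mm error exceeds the 0.008 mm tolerance.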

3.4 Data

Five different photodiode parameters were measured during the experiment. However, the other four parameters, logarithmic power [dBm], saturation [%], irradiance [\({\mathrm{W}/\mathrm{cm}}^{2}\)], and current [A], each showed a mathematical relationship to power [W]. Since the patterns visible in the power [W] data are repeated in the other parameter measurements, only power [W] was used in our dataset to avoid an unnecessary volume of data. The values for power [W] ranged up to 0.03 mW for normal hole drilling, while conditionally drilled holes ranged to higher values of up to 2.5 mW.

Raw data were preprocessed in four steps: cleaning, concatenation, formatting, and normalization. Data were cleaned by removing excess data collected during the idle state. The cleaned data files were then concatenated into one continuous time-series file for each combination, resulting in 51 files. The data were cut into multiple rows of equal length for CNN modeling. All data were normalized to adjust the scale of the data for more effective modeling.
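A minimal sketch of the cleaning, concatenation, and normalization steps is given below. The text does not specify how idle-state data were identified or which normalization was used, so both choices here are assumptions: idle samples are taken to be those at or below a hypothetical `idle_level`, and min-max scaling to [0, 1] stands in for the normalization. All names are illustrative.

```python
import numpy as np

def drop_idle(power, idle_level):
    """Keep only samples above an assumed idle power level."""
    power = np.asarray(power, dtype=float)
    return power[power > idle_level]

def min_max_normalize(series):
    """Scale a series to [0, 1]; a constant series maps to all zeros."""
    series = np.asarray(series, dtype=float)
    span = series.max() - series.min()
    if span == 0:
        return np.zeros_like(series)
    return (series - series.min()) / span

def preprocess(files, idle_level):
    """Clean each file, concatenate into one series, then normalize."""
    cleaned = [drop_idle(f, idle_level) for f in files]
    return min_max_normalize(np.concatenate(cleaned))
```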

4 Results

4.1 Experiment Results

The fabricated specimens were of the form shown in Fig. 9. After sanding and cleaning the specimens, the hole quality was measured as outlined in Sect. 3.3. Some sample images of drilled holes viewed using the OGP 3D profile microscope can be seen in Fig. 10.

4.2 Modeling Results

4.2.1 Autoencoder Modelling Results

The reconstruction results of the two trained models, shown in Fig. 11a, suggest that the models successfully learn patterns in data during normal fabrication. As seen in Fig. 11b, LSTM has higher accuracy than CNN with a lower MAE reconstruction loss, especially at peaks. This is likely due to the advantage of LSTM as a time-series model. LSTM stores information about past events in data with varying weights for longer and shorter-term memory. Although holes are drilled routinely with the same settings, fabrication on metal plates is influenced by other factors such as heat stored in neighboring areas of the plate. These other factors are time-dependent, supporting the advantage of using LSTM over CNN. Thus, model performance evaluation was carried out on the LSTM model.

4.2.2 Classifier Modelling Results

A DNN classifier was trained over 3000 epochs using 80% of the entire dataset. The remaining 20% of the data was used to validate the model as a check for common problems such as underfitting and overfitting. The final accuracy and loss were 0.9630 and 0.1178 for the training set, and 0.9553 and 0.1634 for the validation set as shown in Fig. 12 and Table 4. The similarity in the accuracy of training and validation suggests that the model has properly learned to generalize to unseen data. The high accuracy suggests each of the 51 combinations can be differentiated from each other.

4.3 Model Performance

4.3.1 Performance Measures

Model performance measures how well the model performs the designed task. This section evaluates how well our model functions as a quality inspection tool through four performance parameters: accuracy, detection rate (DR), false rejection rate (FRR), and false acceptance rate (FAR). Each of the values is calculated as in Eqs. (8)–(11). Accuracy is the percentage of correct predictions over the total predictions. Detection rate is the percentage of real defects that are identified correctly by the model. FRR is the percentage of over-detection, where the model falsely rejects normal holes by incorrectly identifying them as defective. FAR represents under-detection, where the model fails to identify real defects. As organized in Table 5, the performance measures for the final model were as follows: 99.86% accuracy, 90.37% DR, 0.08% FRR, and 9.63% FAR. The model has extremely high accuracy and low FRR, which is desirable. This suggests that very few normal holes are likely to be rejected as defects. However, improvements could be made on the DR and FAR, which suggest that about 9.6% of defects are likely to go undetected. Considering the original 1–2% defect rate of laser drilled holes, the use of our suggested model can lower the defect rate to around 0.1–0.2%.
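Eqs. (8)–(11) are not reproduced in this text. The sketch below follows the verbal definitions above, treating a defect as the positive class. The function names and the confusion-count notation (TP, TN, FP, FN) are assumptions introduced for illustration.

```python
def inspection_metrics(tp, tn, fp, fn):
    """Accuracy, DR, FRR, and FAR from confusion counts (positive = defect)."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,    # correct predictions over all predictions
        "detection_rate": tp / (tp + fn), # real defects correctly identified
        "frr": fp / (fp + tn),            # normal holes falsely rejected
        "far": fn / (tp + fn),            # real defects missed (1 - DR)
    }

def residual_defect_rate(defect_rate, far):
    """Share of all holes that are defective and still pass inspection."""
    return defect_rate * far
```

With the reported FAR of 9.63%, `residual_defect_rate(0.01, 0.0963)` and `residual_defect_rate(0.02, 0.0963)` evaluate to roughly 0.001 and 0.002, which corresponds to the 0.1–0.2% range quoted above.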

There exists a trade-off between FRR and FAR values depending on the choice of threshold value, as depicted in Fig. 13. It can be seen that reducing the FAR (reducing under-detection) adversely increases the FRR, which represents over-detection. The crossover error rate (CER), also called the equal error rate (EER), is the point at which FRR and FAR have equal values. CER is often used as a performance measure in authentication algorithms such as biometric systems [24]. The performance of the model could be assessed independently of the choice of threshold by experimentally plotting the FAR and FRR curves.
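The threshold sweep suggested above could be sketched as follows. This is an illustration with hypothetical names, assuming that per-hole reconstruction losses and ground-truth defect labels are available. The crossover is approximated by the sampled threshold where FAR and FRR are closest.

```python
import numpy as np

def far_frr_curve(losses, is_defect, thresholds):
    """FAR and FRR at each threshold; a hole is rejected when loss > threshold."""
    losses = np.asarray(losses, dtype=float)
    is_defect = np.asarray(is_defect, dtype=bool)
    far, frr = [], []
    for t in thresholds:
        rejected = losses > t
        far.append(np.mean(~rejected[is_defect]))  # defects that were accepted
        frr.append(np.mean(rejected[~is_defect]))  # normal holes that were rejected
    return np.array(far), np.array(frr)

def crossover(thresholds, far, frr):
    """Threshold where FAR and FRR are closest, and the approximate CER there."""
    i = int(np.argmin(np.abs(far - frr)))
    return thresholds[i], (far[i] + frr[i]) / 2.0
```

Raising the threshold can only increase FAR and decrease FRR, which is the trade-off described above. The crossover gives a single summary value that does not depend on one chosen threshold.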

4.3.2 Classifier Performance

Model performance for classification is measured by accuracy, the rate of correct predictions over total predictions. The validation accuracy, as mentioned in Sect. 4.2.2, was 0.9553, suggesting that the model will correctly predict about 95.5% of new, unseen data.

The confusion matrix seen in Fig. 14 visualizes the performance of our classifier. Each cell of the 51 by 51 matrix represents a probability from 0 to 1. Diagonal cells represent correct predictions, and it can be seen that most have a high probability, represented by the darker color.
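A confusion matrix whose cells hold probabilities can be obtained by normalizing counts. The sketch below uses hypothetical names and assumes that rows index the true class, columns index the predicted class, and each row is normalized to sum to 1. The text does not state which normalization Fig. 14 uses.

```python
import numpy as np

def normalized_confusion(y_true, y_pred, n_classes):
    """Row-normalized confusion matrix: cell (i, j) estimates P(predicted j | true i)."""
    counts = np.zeros((n_classes, n_classes), dtype=float)
    for t, p in zip(y_true, y_pred):
        counts[t, p] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    # Rows with no samples are left as zeros instead of dividing by zero.
    return np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)

cm = normalized_confusion([0, 0, 1, 1, 2], [0, 1, 1, 1, 2], n_classes=3)
```

Under this convention the diagonal holds the per-class rate of correct predictions, so a dark diagonal means most classes are rarely confused with one another.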

5 Conclusions

The use of light intensity measurements was effective in process monitoring. However, using one sensor limited the data collection to a single angle from the laser drilling spot, limiting the data to be periodic in nature. The use of a multi-array sensing module surrounding the laser drilling spot would allow a more complex relation to be captured in the data. Furthermore, spectrum analysis of the collected light would give greater insight into the actual composition of the incident light and could be used for a more intricate adjustment of sensor parameters to increase the quality of data.

In further research, the integration of the classifier within the autoencoder will be investigated to increase the precision of prediction and enhance the applicability of the model in real-life situations. As in most machine learning research, more data would be desirable in terms of an increased number of combinations (thickness, materials, diameters, etc.) and added features such as images or videos.

Abbreviations

- LSTM: Long Short-Term Memory
- CNN: Convolutional Neural Network
- DNN: Deep Neural Network
- ANN: Artificial Neural Network
- ANFIS: Adaptive Neuro-Fuzzy Inference System
- LOF: Local Outlier Factor
- KNN: K-Nearest Neighbors
- SVM: Support Vector Machines
- DR: Detection Rate
- FAR: False Acceptance Rate
- FRR: False Rejection Rate
- CER: Crossover Error Rate
- \({D}_{ent}, {D}_{ext}\): Hole diameter at entrance and exit
- \({C}_{ent}, {C}_{ext}\): Hole center at entrance and exit
- \(({x}_{ent}, {y}_{ent})\): X and Y coordinates of \({C}_{ent}\)
- \(({x}_{ext}, {y}_{ext})\): X and Y coordinates of \({C}_{ext}\)
- \(\alpha\): Learning rate
- \(w\): Weights in neural network
- \(x\): Input to autoencoder
- \(x'\): Reconstructed output of autoencoder
- \(z\): Result of compressing input x with encoding layer of autoencoder
- \({Q}_{\varphi }\): Encoder function
- \({P}_{\theta }\): Decoder function
- L: Loss function
- f: Activation function
- \({s}_{p}\): Score of actual class
- \({s}_{i}\): Score of class i
- \(MAE\): Mean Absolute Error

References

Nath, A. K. (2014). 9.06—Laser drilling of metallic and nonmetallic substrates. In S. Hashmi, G. F. Batalha, C. J. Van Tyne, & B. Yilbas (Eds.), Comprehensive materials processing (pp. 115–175). Elsevier.

Dubey, A. K., & Yadava, V. (2008). Experimental study of Nd:YAG laser beam machining—An overview. Journal of Materials Processing Technology, 195(1–3), 15–26.

Pattanayak, S., & Panda, S. (2018). Laser beam micro drilling—A review. Lasers. Journal of Manufacturing and Materials Processing, 5, 366–394.

Madhuri, S., & Usha, M. (2020). Anomaly detection techniques—Causes and issues.

Xu, B., Shen, H., Sun, B., An, R., Cao, Q., & Cheng, X. (2021). Towards consumer loan fraud detection: graph neural networks with role-constrained conditional random field. Proceedings of the AAAI Conference on Artificial Intelligence, 35(5), 4537–4545.

Fernando, T., Gammulle, H., Denman, S., Sridharan, S., & Fookes, C. (2020). Deep learning for medical anomaly detection: A survey. arXiv:2012.02364.

Niu, H., Omitaomu, O., Cao, Q., Ozmen, O., Klasky, H., Olama, M. M., Pullum, L., Kuruganti, T., Ward, M., Laurio, A., Scott, J., Drews, F., & Nebeker, J. R. (2020). Anomaly detection in sequential health care data using higher-order network representation. United States.

Nasaruddin, N., Muchtar, K., Afdhal, A., et al. (2020). Deep anomaly detection through visual attention in surveillance videos. Journal of Big Data, 7, 87. https://doi.org/10.1186/s40537-020-00365-y

Carrasco, J., Markova, I., López, D., Aguilera, I., García, D., García-Barzana, M., Arias-Rodil, M., Luengo, J., & Herrera, F. (2021). Anomaly detection in predictive maintenance: A new evaluation framework for temporal unsupervised anomaly detection algorithms. arXiv:2105.12818. https://doi.org/10.1016/j.neucom.2021.07.095.

Carrasco, J., López, D., Aguilera-Martos, I., García-Gil, D., Markova, I., García-Barzana, M., Arias-Rodil, M., Luengo, J., & Herrera, F. (2021). Anomaly detection in predictive maintenance: A new evaluation framework for temporal unsupervised anomaly detection algorithms. Neurocomputing, 462, 440–452. https://doi.org/10.1016/j.neucom.2021.07.095

Park, H. J., et al. (2021). Machine Health Assessment based on an anomaly indicator using a generative adversarial network. International Journal of Precision Engineering and Manufacturing, 22(6), 1113–1124. https://doi.org/10.1007/s12541-021-00513-1

Staar, B., Lütjen, M., & Freitag, M. (2019). Anomaly detection with convolutional neural networks for industrial surface inspection. Procedia CIRP, 79, 484–489. https://doi.org/10.1016/j.procir.2019.02.123

Byun, Y., & Baek, J.-G. (2021). Pattern classification for small-sized defects using multi-head CNN in semiconductor manufacturing. International Journal of Precision Engineering and Manufacturing, 22(10), 1681–1691. https://doi.org/10.1007/s12541-021-00566-2

Pang, G., Shen, C., Cao, L., & van den Hengel, A. (2020). Deep learning for anomaly detection: A review. ACM Computing Surveys. https://doi.org/10.1145/3439950

Baiocco, G., Genna, S., Leone, C., et al. (2021). Prediction of laser drilled hole geometries from linear cutting operation by way of artificial neural networks. International Journal of Advanced Manufacturing Technology, 114, 1685–1695. https://doi.org/10.1007/s00170-021-06857-2

Biswas, R., Kuar, A. S., Biswas, S. K., & Mitra, S. (2009). Artificial neural network modelling of Nd:YAG laser microdrilling on titanium nitride–alumina composite. Proceedings of the Institution of Mechanical Engineers, Part B: Journal of Engineering Manufacture, 224(3), 473–482. https://doi.org/10.1243/09544054jem1576

Ranjan, J., Patra, K., Szalay, T., Mia, M., Gupta, M. K., Song, Q., Krolczyk, G., Chudy, R., Pashnyov, V. A., & Pimenov, D. Y. (2020). Artificial intelligence-based hole quality prediction in micro-drilling using multiple sensors. Sensors, 20, 885. https://doi.org/10.3390/s20030885

Zuric, M., & Brenner, A. (2022). Real-time defect detection through lateral monitoring of secondary process emissions during ultrashort pulse laser microstructuring. Laser-Based Micro- and Nanoprocessing XVI. https://doi.org/10.1117/12.2607267

Valtonen, V.-M., et al. (2022). Real-time monitoring and defect detection of laser scribing process of CIGS solar panels utilizing photodiodes. IEEE Access, 10, 29443–29450. https://doi.org/10.1109/access.2022.3158355

Lott, P., Schleifenbaum, H., Meiners, W., Wissenbach, K., Hinke, C., & Bültmann, J. (2011). Design of an optical system for the in situ process monitoring of selective laser melting (SLM). Physics Procedia, 12, 683–690. https://doi.org/10.1016/j.phpro.2011.03.085

De Bono, P., Allen, C., D’Angelo, G., & Cisi, A. (2017). Investigation of optical sensor approaches for real-time monitoring during fibre laser welding. Journal of Laser Applications. https://doi.org/10.2351/1.4983253

Casalino, G., Losacco, A., Arnesano, A., Facchini, F., Pierangeli, M., & Bonserio, C. (2019). Statistical analysis and modelling of an Yb: KGW femtosecond laser micro-drilling process. Procedia CIRP, 62, 275–280. https://doi.org/10.1016/j.procir.2016.06.111

Bandyopadhyay, S., et al. (2002). Geometrical features and metallurgical characteristics of Nd:YAG laser drilled holes in thick in 718 and Ti–6Al–4V Sheets. Journal of Materials Processing Technology, 127(1), 83–95. https://doi.org/10.1016/s0924-0136(02)00270-4

Conrad, E., Misenar, S., & Feldman, J. (2012). Chapter 2—Domain 1: Access control. In E. Conrad, S. Misenar, & J. Feldman (Eds.), CISSP study guide (second edition) (pp. 9–62). Syngress. https://doi.org/10.1016/B978-1-59749-961-3.00002-9.

Acknowledgements

This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the Grand Information Technology Research Center support program (IITP-2023-2020-0-01741) supervised by the IITP (Institute for Information & communications Technology Planning & Evaluation). This work was also supported by the project for Smart Manufacturing Innovation R&D funded by the Korea Ministry of SMEs and Startups in 2022 (Project No. RS-2022-00141076).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This paper was presented at PRESM2022.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lee, Y.K., Lee, S. & Kim, S.H. Real-Time Defect Monitoring of Laser Micro-drilling Using Reflective Light and Machine Learning Models. Int. J. Precis. Eng. Manuf. 25, 155–164 (2024). https://doi.org/10.1007/s12541-023-00849-w

DOI: https://doi.org/10.1007/s12541-023-00849-w