Abstract

In statistics, log-concave density estimation is a central problem within the field of nonparametric inference under shape constraints. Despite great progress in recent years on the statistical theory of the canonical estimator, namely the log-concave maximum likelihood estimator, adoption of this method has been hampered by the complexities of the non-smooth convex optimization problem that underpins its computation. We provide enhanced understanding of the structural properties of this optimization problem, which motivates the proposal of new algorithms, based on both randomized and Nesterov smoothing, combined with an appropriate integral discretization of increasing accuracy. We prove that these methods enjoy, both with high probability and in expectation, a convergence rate of order 1/T up to logarithmic factors on the objective function scale, where T denotes the number of iterations. The benefits of our new computational framework are demonstrated on both synthetic and real data, and our implementation is available in a github repository LogConcComp (Log-Concave Computation).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In Statistics, the field of nonparametric inference under shape constraints dates back at least to [33], who studied the nonparametric maximum likelihood estimator of a decreasing density on the non-negative half line. But it is really over the last decade or so that researchers have begun to realize its full potential for addressing key contemporary data challenges such as (multivariate) density estimation and regression. The initial allure is the flexibility of a nonparametric model, combined with estimation methods that can often avoid the need for tuning parameter selection, which can often be troublesome for other nonparametric techniques such as those based on smoothing. Intensive research efforts over recent years have revealed further great attractions: for instance, these procedures frequently attain optimal rates of convergence over relevant function classes. Moreover, it is now known that shape-constrained procedures can possess intriguing adaptation properties, in the sense that they can estimate particular subclasses of functions at faster rates, even (nearly) as well as the best one could do if one were told in advance that the function belonged to this subclass.

Typically, however, the implementation of shape-constrained estimation techniques requires the solution of an optimization problem, and, despite some progress, there are several cases where computation remains a bottleneck and hampers the adoption of these methods by practitioners. In this work, we focus on the problem of log-concave density estimation, which has become arguably the central challenge in the field because the class of log-concave densities enjoys stability properties under marginalization, conditioning, convolution and linear transformations that make it a very natural infinite-dimensional generalization of the class of Gaussian densities [60].

The univariate log-concave density estimation problem was first studied in [68], and fast algorithms for the computation of the log-concave maximum likelihood estimator (MLE) in one dimension are now available through the R packages logcondens [27] and cnmlcd [49]. [20] introduced and studied the multivariate log-concave maximum likelihood estimator, but their algorithm, which is described below and implemented in the R package LogConcDEAD [18], is slow; for instance, [20] report a running time of 50 s for computing the bivariate log-concave MLE with 500 observations, and 224 min for computing the log-concave MLE in four dimensions with 2,000 observations. An alternative, interior point method for a suitable approximation was proposed by [46]. Recent progress on theoretical aspects of the computational problem in the computer science community includes [2], who proved that there exists a polynomial time algorithm for computing the log-concave maximum likelihood estimator. We are unaware of any attempt to implement this algorithm. [57] compute an approximation to the log-concave MLE by considering \(-\log p\) as a piecewise affine maximum function, using the log-sum-exp operator to approximate the non-smooth operator, a Riemann sum to compute the integral and its gradient, and obtain a solution via L-BFGS. This reformulation means that the problem is no longer convex.

To describe the problem more formally, let \({\mathcal {C}}_d\) denote the class of proper, convex lower-semicontinuous functions \(\varphi :\mathbb {R}^d \rightarrow (-\infty ,\infty ]\) that are coercive in the sense that \(\varphi ({\varvec{x}}) \rightarrow \infty \) as \(\Vert {\varvec{x}}\Vert \rightarrow \infty \). The class of upper semi-continuous log-concave densities on \(\mathbb {R}^d\) is denoted as

Given \({\varvec{x}}_1,\ldots ,{\varvec{x}}_n \in \mathbb {R}^d\), [20, Theorem 1] proved that whenever the convex hull \(C_n\) of \({\varvec{x}}_1,\ldots ,{\varvec{x}}_n\) is d-dimensional, there exists a unique

If \({\varvec{x}}_1,\ldots ,{\varvec{x}}_n\) are regarded as realizations of independent and identically distributed random vectors on \(\mathbb {R}^d\), then the objective function in (1) is a scaled version of the log-likelihood function, so \({\hat{p}}_n\) is called the log-concave MLE. The existence and uniqueness of this estimator is not obvious, because the infinite-dimensional class \({\mathcal {P}}_d\) is non-convex, and even the class of negative log-densities \(\bigl \{\varphi \in {\mathcal {C}}_d:\int _{\mathbb {R}^d} e^{-\varphi } = 1\bigr \}\) is non-convex. In fact, the estimator belongs to a finite-dimensional subclass; more precisely, for a vector \({\varvec{\phi }}= (\phi _1,\ldots ,\phi _n) \in \mathbb {R}^n\), define \(\textrm{cef}[{\varvec{\phi }}] \in {\mathcal {C}}_d\) to be the (pointwise) largest function with

for \(i=1,\ldots ,n\). [20] proved that \({\hat{p}}_n = e^{-\textrm{cef}[{\varvec{\phi }}^*]}\) for some \({\varvec{\phi }}^* \in \mathbb {R}^n\), and refer to the function \(-\textrm{cef}[{\varvec{\phi }}^*]\) as a ‘tent function’; see the illustration in Fig. 1. [20] further defined the non-smooth, convex objective function \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) by

and proved that \({\varvec{\phi }}^* = \mathop {\textrm{argmin}}\nolimits _{{\varvec{\phi }}\in \mathbb {R}^n} f({\varvec{\phi }})\).

An illustration of a tent function, taken from [20]

The two main challenges in optimizing the objective function f in (2) are that the value and subgradient of the integral term are hard to evaluate, and that it is non-smooth, so vanilla subgradient methods lead to a slow rate of convergence. To address the first issue, [20] computed the exact integral and its subgradient using the qhull algorithm [4] to obtain a triangulation of the convex hull of the data, evaluating the function value and subgradient over each simplex in the triangulation. However, in the worst case, the triangulation can have \(O(n^{d/2})\) simplices [50]. The non-smoothness is handled via Shor’s r-algorithm [66, Chapter 3], as implemented by [42]. In Sect. 2, we characterize the subdifferential of the objective function in terms of the solution of a linear program (LP), and show that the solution lies in a known, compact subset of \(\mathbb {R}^n\). This understanding allows us to introduce our new computational framework for log-concave density estimation in Sect. 3, based on an accelerated version of a dual averaging approach [53]. This relies on smoothing the objective function, and encompasses two popular strategies, namely Nesterov smoothing [52] and randomized smoothing [25, 48, 73], as special cases. A further feature of our algorithm is the construction of approximations to gradients of our smoothed objective, and this in turn requires an approximation to the integral in (2). While a direct application of the theory of [25] would yield a rate of convergence for the objective function of order \(n^{1/4}/T + 1/\sqrt{T}\) after T iterations, we show in Sect. 4 that by introducing finer approximations of both the integral and its gradient as the iteration number increases, we can obtain an improved rate of order 1/T, up to logarithmic factors. Moreover, we translate the optimization error in the objective into a bound on the error in the log-density, which is uncommon in the literature in the absence of strong convexity. A further advantage of our approach is that we are able to extend it in Sect. 5 to the more general problem of quasi-concave density estimation [46, 65], thereby providing a computationally tractable alternative to the discrete Hessian approach of [46]. Section 6 illustrates the practical benefits of our methodology in terms of improved computational timings on simulated data. Additional experimental details and applications on real data sets are provided in Appendix A. Proofs of all main results can be found in Appendix B, and background on the field of nonparametric inference under shape constraints can be found in Appendix C.

Notation: We write \([n]:= \{1,2,\ldots , n\}\), let \({\varvec{1}} \in \mathbb {R}^n\) denote the all-ones vector, and denote the cardinality of a set S by |S|. For a Borel measurable set \(C\subseteq \mathbb {R}^d\), we use \(\textrm{vol}(C)\) to denote its volume (i.e. d-dimensional Lebesgue measure). We write \(\Vert \cdot \Vert \) for the Euclidean norm of a vector. For \(\mu > 0\), a convex function \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) is said to be \(\mu \)-strongly convex if \({\varvec{\phi }}\mapsto f({\varvec{\phi }})-\frac{\mu }{2}\Vert {\varvec{\phi }}\Vert ^2\) is convex. The notation \(\partial f({\varvec{\phi }})\) denotes the subdifferential (set of subgradients) of f at \({\varvec{\phi }}\). Given a real-valued sequence \((a_n)\) and a positive sequence \((b_n)\), we write \(a_n = {\tilde{O}}(b_n)\) if there exist \(C,\gamma > 0\) such that \(a_n \le C b_n \log ^\gamma (1+n)\) for all \(n \in \mathbb {N}\).

2 Understanding the structure of the optimization problem

Throughout this paper, we assume that \({\varvec{x}}_1,\ldots ,{\varvec{x}}_n \in \mathbb {R}^d\) are distinct and that their convex hull \(C_n:=\textrm{conv}({\varvec{x}}_1,\ldots ,{\varvec{x}}_n)\) has nonempty interior, so that \(n \ge d+1\) and \(\varDelta := \textrm{vol}(C_n)>0\). This latter assumption ensures the existence and uniqueness of a minimizer of the objective function in (2) [28, Theorem 2.2]. Recall that we define the lower convex envelope function [59] \(\textrm{cef}: \mathbb {R}^n \rightarrow {\mathcal {C}}_d\) by

As mentioned in the introduction, in computing the MLE, we seek

where

Note that (4) can be viewed as a stochastic optimization problem by writing

where \({\varvec{\xi }}\) is uniformly distributed on \(C_n\) and where, for \({\varvec{x}}\in C_n\),

Let \({\varvec{X}}:= [{\varvec{x}}_1 \, \cdots \, {\varvec{x}}_n]^\top \in \mathbb {R}^{n \times d}\), and for \({\varvec{x}}\in \mathbb {R}^d\), let \(E({\varvec{x}}):= \bigl \{{\varvec{\alpha }}\in \mathbb {R}^n:{\varvec{X}}^\top {\varvec{\alpha }}= {\varvec{x}}, {\varvec{1}}_n^\top {\varvec{\alpha }}=1, {\varvec{\alpha }}\ge 0\bigr \}\) denote the set of all weight vectors for which \({\varvec{x}}\) can be written as a weighted convex combination of \({\varvec{x}}_1,\ldots ,{\varvec{x}}_n\). Thus \(E({\varvec{x}})\) is a compact, convex subset of \(\mathbb {R}^n\). The \(\textrm{cef}\) function is given by a linear program (LP) [2, 46]:

If \({\varvec{x}}\notin C_n\), then \(E({\varvec{x}}) =\emptyset \), and, with the standard convention that \(\inf \emptyset := \infty \), we see that (\(Q_0\)) agrees with (3). From the LP formulation, it follows that \({\varvec{\phi }}\mapsto \textrm{cef}[{\varvec{\phi }}]({\varvec{x}})\) is concave, for every \({\varvec{x}}\in \mathbb {R}^d\).

Given a pair \({\varvec{\phi }}\in \mathbb {R}^n\) and \({\varvec{x}}\in C_n\), an optimal solution to (\(Q_0\)) may not be unique, in which case the map \({\varvec{\phi }}\mapsto \textrm{cef}[{\varvec{\phi }}]({\varvec{x}})\) is not differentiable [7, Proposition B.25(b)]. Noting that the infimum in (\(Q_0\)) is attained whenever \({\varvec{x}}\in C_n\), let

Danskin’s theorem [7, Proposition B.25(b)] applied to \(-\textrm{cef}[{\varvec{\phi }}]({\varvec{x}})\) then yields that for each \({\varvec{x}}\in C_n\), the subdifferential of \(F({\varvec{\phi }},{\varvec{x}})\) with respect to \({\varvec{\phi }}\) is given by

Since both f and \(F(\cdot ,{\varvec{x}})\) are finite convex functions on \(\mathbb {R}^n\) (for each fixed \({\varvec{x}}\in C_n\) in the latter case), by [17, Proposition 2.3.6(b) and Theorem 2.7.2], the subdifferential of f at \({\varvec{\phi }}\in \mathbb {R}^n\) is given by

Observe that given any \({\varvec{\phi }}\in \mathbb {R}^n\), the function \({\varvec{x}}\mapsto -\textrm{cef}\bigl [{\varvec{\phi }}+ \log I({\varvec{\phi }}){\varvec{1}}\bigr ]({\varvec{x}})\) (where \(I({\varvec{\phi }})\) is the integral defined in (5)) is a log-density. It is also convenient to let \({\bar{{\varvec{\phi }}}} \in \mathbb {R}^n\) be such that \(\exp \{-\textrm{cef}[{\bar{{\varvec{\phi }}}}]\}\) is the uniform density on \(C_n\), so that \(f({{\bar{{\varvec{\phi }}}}})=\log \varDelta +1\). Proposition 1 below (an extension of [2, Lemma 2]) provides uniform upper and lower bounds on this log-density, whenever the objective function f evaluated at \({\varvec{\phi }}\) is at least as good as that at \({\bar{{\varvec{\phi }}}}\). In more statistical language, these bounds hold whenever the log-likelihood of the density \(\exp \bigl \{-\textrm{cef}\bigl [{\varvec{\phi }}+ \log I({\varvec{\phi }}){\varvec{1}}\bigr ](\cdot )\bigr \}\) is at least as large as that of the uniform density on the convex hull of the data, so in particular, they must hold for the log-concave MLE (i.e. when \({\varvec{\phi }}= {\varvec{\phi }}^*\)). Let \(\phi ^0:= (n-1) +d(n-1)\log \bigl (2n + 2nd \log (2nd)\bigr ) +\log \varDelta \) and \(\phi _0:=-1 - d \log \bigl (2n + 2nd \log (2nd)\bigr ) +\log \varDelta \).

Proposition 1

For any \({\varvec{\phi }}\in \mathbb {R}^n\) such that \(f({\varvec{\phi }})\le \log \varDelta +1\), we have \(\phi _0\le \phi _i+\log I({\varvec{\phi }})\le \phi ^0\) for all \(i \in [n]\).

The following corollary is an immediate consequence of Proposition 1.

Corollary 1

Suppose that \({\varvec{\phi }}\in \mathbb {R}^n\) satisfies \(I({\varvec{\phi }})=1\) and \(f({\varvec{\phi }})\le f({{\bar{{\varvec{\phi }}}}}) = \log \varDelta +1\). Then \({\varvec{\phi }}^* \in \mathbb {R}^n\) defined in (4) satisfies

Corollary 1 gives a sense in which any \({\varvec{\phi }}\in \mathbb {R}^n\) for which the objective function is ‘good’ cannot be too far from the optimizer \({\varvec{\phi }}^*\); here, ‘good’ means that the objective should be no larger than that of the uniform density on the convex hull of the data. Moreover, an upper bound on the integral \(I({\varvec{\phi }})\) provides an upper bound on the norm of any subgradient \({\varvec{g}}({\varvec{\phi }})\) of f at \({\varvec{\phi }}\).

Proposition 2

Any subgradient \({\varvec{g}}({\varvec{\phi }}) \in \mathbb {R}^n\) of f at \({\varvec{\phi }}\in \mathbb {R}^n\) satisfies \(\Vert {\varvec{g}}({\varvec{\phi }})\Vert ^2\le \max \bigl \{1/n + 1/4,I({\varvec{\phi }})^2\bigr \}.\)

3 Computing the log-concave MLE

As mentioned in the introduction, subgradient methods [56, 66] tend to be slow for minimizing the objective function f defined in (5) [20]. Our alternative approach involves the minimizing the representation of f given in (6) via smoothing techniques, which offer superior computational guarantees and practical performance in our numerical experiments.

3.1 Smoothing techniques

We present two smoothing techniques to find the minimizer \({\varvec{\phi }}^* \in \mathbb {R}^n\) of the nonsmooth convex optimization problem (4). By Proposition 1, we have that \({\varvec{\phi }}^* \in {{\varvec{\varPhi }}}\), where

with \(\phi _0,\phi ^0 \in \mathbb {R}\). In what follows we present two smoothing techniques: one based on Nesterov smoothing [52] and the second on randomized smoothing [25].

3.1.1 Nesterov smoothing

Recall that the non-differentiability in f in (5) is due to the LP (\(Q_0\)) potentially having multiple optimal solutions. Therefore, following [52], we consider replacing this LP with the following quadratic program (QP):

where \({\varvec{\alpha }}_0:=(1/n){\varvec{1}}\in \mathbb {R}^n\) is the center of \(E({\varvec{x}})\), and where \(u\ge 0\) is a regularization parameter that controls the extent of the quadratic regularization of the objective. With this definition, we have \(q_0[{\varvec{\phi }}]({\varvec{x}})=\textrm{cef}[{\varvec{\phi }}]({\varvec{x}})\). For \(u>0\), due to the strong convexity of the function \({\varvec{\alpha }} \mapsto {\varvec{\alpha }}^\top {\varvec{\phi }}+ ({u}/2)\Vert {\varvec{\alpha }}-{\varvec{\alpha }}_0\Vert ^2\) on the convex polytope \(E({\varvec{x}})\), (\(Q_u\)) admits a unique solution that we denote by \({\varvec{\alpha }}^*_u[{\varvec{\phi }}]({\varvec{x}})\). It follows again from Danskin’s theorem that \({\varvec{\phi }}\mapsto q_u[{\varvec{\phi }}]({\varvec{x}})\) is differentiable for such u, with gradient \(\nabla _{{\varvec{\phi }}} q_u[{\varvec{\phi }}]({\varvec{x}}) = {\varvec{\alpha }}^*_u[{\varvec{\phi }}]({\varvec{x}})\).

Using \(q_{u}[{\varvec{\phi }}]({\varvec{x}})\) instead of \(q_{0}[{\varvec{\phi }}]({\varvec{x}})\) in (5), we obtain a smooth objective \({\varvec{\phi }}\mapsto {\tilde{f}}_u({\varvec{\phi }})\), given by

where \({\tilde{F}}_u({\varvec{\phi }},{\varvec{x}}):=({1}/{n}){\varvec{1}}^\top {\varvec{\phi }}+\varDelta \exp \{-q_u[{\varvec{\phi }}]({\varvec{x}})\}\), and where \({\varvec{\xi }}\) is again uniformly distributed on \(C_n\). We may differentiate under the integral (e.g. [45, Theorem 6.28]) to see that the partial derivatives of \({\tilde{f}}_u\) with respect to each component of \({\varvec{\phi }}\) exist, and moreover they are continuous (because \({\varvec{\phi }}\mapsto {\varvec{\alpha }}^*_u[{\varvec{\phi }}]({\varvec{x}})\) is continuous by Proposition 5), so \(\nabla _{{\varvec{\phi }}} {\tilde{f}}_u({\varvec{\phi }})= \mathbb {E}[\tilde{{\varvec{G}}}_u({\varvec{\phi }},{\varvec{\xi }})]\), where

Proposition 3 below presents some properties of the smooth objective \({\tilde{f}}_u\).

Proposition 3

For any \({\varvec{\phi }}\in {{\varvec{\varPhi }}}\), we have

(a) \(0 \le {\tilde{f}}_u({\varvec{\phi }})-{\tilde{f}}_{u'}({\varvec{\phi }})\le \frac{u-u'}{2}e^{u'/2}I({\varvec{\phi }})\) for \(u' \in [0,u]\);

(b) For every \(u \ge 0\), the function \({\varvec{\phi }}\mapsto {\tilde{f}}_u({\varvec{\phi }})\) is convex and \(\varDelta e^{-\phi _0+u/2}\)-Lipschitz;

(c) For every \(u \ge 0\), the function \({\varvec{\phi }}\mapsto {\tilde{f}}_u({\varvec{\phi }})\) has \(\varDelta e^{-\phi _0+u/2}(1+u^{-1})\)-Lipschitz gradient;

(d) \(\mathbb {E}\bigl (\Vert {\tilde{{\varvec{G}}}_u({\varvec{\phi }},{\varvec{\xi }})-\nabla _{{\varvec{\phi }}}{\tilde{f}}_u({\varvec{\phi }})}\Vert ^2\bigr ) \le (\varDelta e^{-\phi _0+u/2})^2\) for every \(u \ge 0\).

3.1.2 Randomized smoothing

Our second smoothing technique is randomized smoothing [25, 48, 73]: we take the expectation of a random perturbation of the argument of f. Specifically, for \(u \ge 0\), let

where \({\varvec{z}}\) is uniformly distributed on the unit \(\ell _{2}\)-ball in \(\mathbb {R}^n\). Thus, similar to Nesterov smoothing, \({\bar{f}}_0 = f\), and the amount of smoothing increases with u. From a stochastic optimization viewpoint, we can write

where \({\varvec{G}}({\varvec{\phi }}+u{\varvec{v}},{\varvec{x}}) \in \partial F({\varvec{\phi }}+u{\varvec{v}},{\varvec{x}})\), and where the expectations are taken over independent random vectors \({\varvec{z}}\), distributed uniformly on the unit Euclidean ball in \(\mathbb {R}^n\), and \({\varvec{\xi }}\), distributed uniformly on \(C_n\). Here the gradient expression follows from, e.g., [48, Lemma 3.3(a)], [73, Lemma 7]; since \(F({\varvec{\phi }}+u{\varvec{v}},{\varvec{x}})\) is differentiable almost everywhere with respect to \({\varvec{\phi }}\), the expression for \({\bar{f}}_u({\varvec{\phi }})\) does not depend on the choice of subgradient.

Proposition 4 below lists some properties of \({\bar{f}}_{u}\) and its gradient. It extends [73, Lemmas 7 and 8] by exploiting special properties of the objective function to sharpen the dependence of the bounds on n.

Proposition 4

For any \(u \ge 0\) and \({\varvec{\phi }}\in {{\varvec{\varPhi }}}\), we have

(a) \(0\le {\bar{f}}_u({\varvec{\phi }})-f({\varvec{\phi }})\le I({\varvec{\phi }})ue^u\sqrt{\frac{2\log n}{n+1}}\);

(b) \({\bar{f}}_{u'}({\varvec{\phi }})\le {\bar{f}}_u({\varvec{\phi }})\) for any \(u' \in [0,u]\);

(c) \({\varvec{\phi }}\mapsto {\bar{f}}_u({\varvec{\phi }})\) is convex and \(\varDelta e^{-\phi _0+u}\)-Lipschitz;

(d) \({\varvec{\phi }}\mapsto {\bar{f}}_u({\varvec{\phi }})\) has \(\varDelta e^{-\phi _0+u}n^{1/2}/u\)-Lipschitz gradient;

(e) \(\mathbb {E}\bigl (\bigl \Vert {\varvec{G}}({\varvec{\phi }}+u{\varvec{z}},{\varvec{\xi }})-\nabla {\bar{f}}_u({\varvec{\phi }})\bigr \Vert ^2\bigr ) \le (\varDelta e^{-\phi _0+u})^2\) whenever \({\varvec{G}}({\varvec{\phi }}+u{\varvec{v}},{\varvec{x}}) \in \partial F({\varvec{\phi }}+u{\varvec{v}},{\varvec{x}})\) for every \({\varvec{v}} \in \mathbb {R}^n\) with \(\Vert {\varvec{v}}\Vert \le 1\) and \({\varvec{x}}\in C_n\).

3.2 Stochastic first-order methods for smoothing sequences

Our proposed algorithm for computing the log-concave MLE is given in Algorithm 1. It relies on the choice of a smoothing sequence of f, which may be constructed using Nesterov or randomized smoothing, for instance. For a non-negative sequence \((u_t)_{t \in \mathbb {N}_0}\), this smoothing sequence is denoted by \((\ell _{u_t})_{t \in \mathbb {N}_0}\), where \(\ell _{u_t}:={{\tilde{f}}}_{u_t}\) is given by (11) or \(\ell _{u_t}:={{\bar{f}}}_{u_t}\) is given by (13). In Algorithm 1, \(P_{\varvec{\varPhi }}:\mathbb {R}^n \rightarrow \varvec{\varPhi }\) denotes the projection operator onto the closed convex set \(\varvec{\varPhi }\), which is essentially a threshold clipping operator. In fact, Algorithm 1 is a modification of an algorithm due to [25], and can be regarded as an accelerated version of the dual averaging scheme [53] applied to \((\ell _{u_t})\).

3.2.1 Approximating the gradient of the smoothing sequence

In Line 3 of Algorithm 1, we need to compute an approximation of the gradient \(\nabla _{{\varvec{\phi }}} \ell _{u}\), for a general \(u \ge 0\). A key step in this process is to approximate the integral \(I(\cdot )\), as well as a subgradient of I, at an arbitrary \({\varvec{\phi }}\in \mathbb {R}^n\). [20] provide explicit formulae for these quantities, based on a triangulation of \(C_n\), using tools from computational geometry. For practical purposes, [21] apply a Taylor expansion to approximate the analytic expression. The R package LogConcDEAD [18] uses this method to evaluate the exact integral at each iteration, but since this is time-consuming, we will only use this method at the final stage of our proposed algorithm as a polishing stepFootnote 1.

An alternative approach is to use numerical integration.Footnote 2 Among deterministic schemes, [57] observed empirically that the simple Riemann sum with uniform weights appears to perform the best among several multi-dimensional integration techniques. Random (Monte Carlo) approaches to approximate the integral are also possible: given a collection of grid points \({\mathcal {S}}=\{{\varvec{\xi }}_1,\ldots ,{\varvec{\xi }}_m\}\), we approximate the integral as \(I_{{\mathcal {S}}}({\varvec{\phi }}):= ({\varDelta }/{m})\sum _{\ell =1}^m \exp \{-\textrm{cef}[{\varvec{\phi }}]({\varvec{\xi }}_\ell )\}.\) This leads to an approximation of the objective f given by

Since \(f_{{\mathcal {S}}}\) is a finite, convex function on \(\mathbb {R}^n\), it has a subgradient at each \({\varvec{\phi }}\in \mathbb {R}^n\), given by

As the effective domain of \(\textrm{cef}[{\varvec{\phi }}](\cdot )\) is \(C_{n}\), we consider grid points \({\mathcal {S}} \subseteq C_{n}\).

We now illustrate how these ideas allow us to approximate the gradient of the smoothing sequence, and initially consider Nesterov smoothing, with \(\ell _u={\tilde{f}}_u\). If \({\mathcal {S}}=\{{\varvec{\xi }}_1,\ldots ,{\varvec{\xi }}_m\} \subseteq C_n\) denotes a collection of grid points (either deterministic or Monte Carlo based), then \(\nabla _{{\varvec{\phi }}} \ell _{u}\) can be approximated by \(\tilde{{\varvec{g}}}_{u,{\mathcal {S}}}\), where

In fact, we distinguish the cases of deterministic and random \({\mathcal {S}}\) by writing this approximation as \(\tilde{{\varvec{g}}}_{u,{\mathcal {S}}}^{\textrm{D}}\) and \(\tilde{{\varvec{g}}}_{u,{\mathcal {S}}}^{\textrm{R}}\) respectively.

For the randomized smoothing method with \(\ell _u={\bar{f}}_u\), the approximation is slightly more involved. Given m grid points \({\mathcal {S}}=\{{\varvec{\xi }}_1,\ldots ,{\varvec{\xi }}_m\} \subseteq C_n\) (again either deterministic or random), and independent random vectors \({\varvec{z}}_1,\ldots ,{\varvec{z}}_m\), each uniformly distributed on the unit Euclidean ball in \(\mathbb {R}^n\), we can approximate \(\nabla _{{\varvec{\phi }}} \ell _{u}({\varvec{\phi }})\) by

with \(\circ \in \{\textrm{D},\textrm{R}\}\) again distinguishing the cases of deterministic and random \({\mathcal {S}}\).

3.2.2 Related literature

As mentioned above, Algorithm 1 is an accelerated version of the dual averaging method of [53], which to the best of our knowledge has not been studied in the context of log-concave density estimation previously. Nevertheless, related ideas have been considered for other optimization problems (e.g. [25, 70]). Relative to previous work, our approach is quite general, in that it applies to both of the smoothing techniques discussed in Sect. 3.1, and allows the use of both deterministic and random grids to approximate the gradients of the smoothing sequence. Another key difference with earlier work is that we allow the grid \({\mathcal {S}}\) to depend on t, so we write it as \({\mathcal {S}}_t\), with \(m_t:= |{\mathcal {S}}_t|\); in particular, inspired by both our theoretical results and numerical experience, we take \((m_{t})\) to be a suitable increasing sequence.

4 Theoretical analysis of optimization error of Algorithm 1

We have seen in Propositions 3 and 4 that the two smooth functions \({\tilde{f}}_{u}\) and \({\bar{f}}_{u}\) enjoy similar properties — according to Proposition 3(a) to (c) and Proposition 4(a) to (d), both \({\tilde{f}}_{u}\) and \({\bar{f}}_{u}\) satisfy the following assumption:

Assumption 1

[Assumptions on smoothing sequence] There exists \(r \ge 0\) such that for any \({\varvec{\phi }}\in {{\varvec{\varPhi }}}\),

(a) we can find \(B_0 > 0\) with \(f({\varvec{\phi }})\le \ell _{u}({\varvec{\phi }})\le f({\varvec{\phi }})+B_0I({\varvec{\phi }})u\) for all \(u \in [0,r]\);

(b) \(\ell _{u'}({\varvec{\phi }})\le \ell _u({\varvec{\phi }})\) for all \(u' \in [0,u]\);

(c) for each \(u \in [0,r]\), the function \({\varvec{\phi }}\mapsto \ell _u({\varvec{\phi }})\) is convex and has \({B_1}/{u}\)-Lipschitz gradient, for some \(B_1>0\).

Recall from Sect. 3 that we have four possible choices corresponding to a combination of the smoothing and integral approximation methods, as summarized in Table 1.

Once we select an option, in line 3 of Algorithm 1, we can take

where \(\check{~} \in \{\tilde{~},~\bar{~}\}\) and \(\circ \in \{\textrm{D},\textrm{R}\}\). To encompass all four approximation choices in Line 3 of Algorithm 1, we make the following assumption on the gradient approximation error \({\varvec{e}}_t:= {\varvec{g}}_t - \nabla _{{\varvec{\phi }}} \ell _{u_t}({\varvec{\phi }}_t^{(y)})\):

Assumption 2

[Gradient approximation error] There exists \(\sigma > 0\) such that

where \({\mathcal {F}}_{t-1}\) denotes the \(\sigma \)-algebra generated by all random sources up to iteration \(t-1\) (with \({\mathcal {F}}_{-1}\) denoting the trivial \(\sigma \)-algebra).

When \({\mathcal {S}}\) is a Monte Carlo random grid (options 2 and 4), the approximate gradient \({\varvec{g}}_t\) is an average of \(m_t\) independent and identically distributed random vectors, each being an unbiased estimator of \(\nabla \ell _{u_t}({\varvec{\phi }}_t^{(y)})\). Hence, (17) holds true with \(\sigma ^2\) determined by the bounds in Proposition 3(d) (option 2) and Proposition 4(e) (option 4). For a deterministic Riemann grid \({\mathcal {S}}\) and Nesterov’s smoothing technique (option 1), \({\varvec{e}}_t\) is deterministic, and arises from using \(\tilde{{\varvec{g}}}_{u,{\mathcal {S}}}({\varvec{\phi }})\) in (15) to approximate \(\nabla _{{\varvec{\phi }}} {\tilde{f}}_u({\varvec{\phi }}) =\mathbb {E}[\tilde{{\varvec{G}}}_u({\varvec{\phi }},{\varvec{\xi }})]\). For the deterministic Riemann grid and randomized smoothing (option 3), the error \({\varvec{e}}_{t}\) can be decomposed into a random estimation error term (induced by \({\varvec{z}}_1,\ldots ,{\varvec{z}}_{m_t}\)) and a deterministic approximation error term (induced by \({\varvec{\xi }}_1,\ldots ,{\varvec{\xi }}_{m_t}\)) as follows:

It can be shown using this decomposition that \(\mathbb {E}(\Vert {\varvec{e}}_t\Vert ^2|{\mathcal {F}}_{t-1}) = O(1/m_t)\) under regularity conditions.

Theorem 1 below establishes our desired computational guarantees for Algorithm 1. We write \(D:=\sup _{{\varvec{\phi }},{\tilde{{\varvec{\phi }}}}\in {{\varvec{\varPhi }}}} \Vert {\varvec{\phi }}-{\tilde{{\varvec{\phi }}}} \Vert \) for the diameter of \({\varvec{\varPhi }}\).

Theorem 1

Suppose that Assumptions 1 and 2 hold, and define the sequence \((\theta _t)_{t \in \mathbb {N}_0}\) by \(\theta _0:= 1\) and \(\theta _{t+1}:= 2\bigl (1+\sqrt{1+4/\theta _t^2}\bigr )^{-1}\) for \(t \in \mathbb {N}_0\). Let \(u > 0\), let \(u_t:= \theta _t u\) and take \(L_t=B_1/u_t\) and \(\eta _t=\eta \) for all \(t \in \mathbb {N}_0\) as input parameters to Algorithm 1. Writing \(M_T^{(1)}:=\sqrt{\sum _{t=0}^{T-1}m_t^{-1}}\) and \(M_T^{(1/2)}:=\sum _{t=0}^{T-1}m_t^{-1/2}\), we have for any \({\varvec{\phi }}\in {{\varvec{\varPhi }}}\) that

In particular, taking \({\varvec{\phi }}={\varvec{\phi }}^*\), and choosing \(u=({D}/{2})\sqrt{B_1/B_0}\) and \(\eta =({\sigma M_T^{(1)}})/{D}\), we obtain

Moreover, if we further assume that \(\mathbb {E}({\varvec{e}}_t|{\mathcal {F}}_{t-1})={\varvec{0}}\) (e.g. by using options 2 and 4), then we can remove the last term of both inequalities above.

For related general results that control the expected optimization error for smoothed objective functions, see, e.g., [52, 67, 25, 70]. With deterministic grids (corresponding to options 1 and 3), if we take \(|{\mathcal {S}}_{t}| = m\) for all t, then \(M_T^{(1/2)}=T/\sqrt{m}\), and the upper bound in (19) does not converge to zero as \(T \rightarrow \infty \). On the other hand, if we take \(|{\mathcal {S}}_t|=t^2\), for example, then \(\sup _{T \in \mathbb {N}} M_T^{(1)} < \infty \) and \(M_T^{(1/2)}= {\tilde{O}}(1)\), and we find that \(\varepsilon _{T} = {\tilde{O}}(1/T)\). For random grids (options 2 and 4), if we take \(|{\mathcal {S}}_{t}| = m\) for all t, then \(M_T^{(1)}=\sqrt{T/m}\) and we recover the \(\varepsilon _{T} = O(1/\sqrt{T})\) rate for stochastic subgradient methods [56]. This can be improved to \(\varepsilon _{T} ={\tilde{O}}(1/T)\) with \(m_t = t\), or even \(\varepsilon _{T} = O(1/T)\) if we choose \((m_t)_t\) such that \(\sum _{t=0}^\infty m_t^{-1} < \infty \).

A direct application of the theory of [25] would yield an error rate of \(\varepsilon _{T} = O(n^{1/4}/T + 1/\sqrt{T})\). On the other hand, Theorem 1 shows that, owing to the increasing sequence of grid sizes used to approximate the gradients in Step 3 of Algorithm 1, we can improve this rate to \({\tilde{O}}(1/T)\). Note however, that this improvement is in terms of the number of iterations T, and not the total number of stochastic oracle queries (equivalently, the total number of LPs (\(Q_0\))), which is given by \(T_{\textrm{query}}:=\sum _{t=0}^{T-1}m_t\). [1] and [51] have shown that the optimal expected number of stochastic oracle queries is of order \(1/\sqrt{T_{\textrm{query}}}\), which is attained by the algorithm of [25]. For our framework, by taking \(m_t=t\), we have \(T_{\textrm{query}}=\sum _{t=0}^{T-1} m_t={\tilde{O}}(T^2)\), so after \(T_{\textrm{query}}\) stochastic oracle queries, our algorithm also attains the optimal error on the objective function scale, up to a logarithmic factor. Other advantages of our algorithm and the theoretical guarantees provided by Theorem 1 relative to the earlier contributions of [25] are that we do not require an upper bound on \(I({\varvec{\phi }})\) and are able to provide a unified framework that includes Nesterov smoothing and an alternative gradient approximation approach by numerical integration in addition to randomized smoothing scheme with stochastic gradients. Moreover, we can exploit the specific structure of the log-concave density estimation problem to provide much better Lipschitz constants for the randomized smoothing sequence than would be obtained using the generic constants of [25]. For example, our upper bound in Proposition 4(a) is of order \(O(n^{-1/2}\log ^{1/2}n)\), whereas a naive application of the general theory of [25] would only yield a bound of O(1). A further improvement in our bound comes from the fact that it now involves \(I({\varvec{\phi }})\) directly, as opposed to an upper bound on this quantity.

In Theorem 1, the computational guarantee depends upon \(B_0,B_1,\sigma \) in Assumptions 1 and 2. In light of Propositions 3 and 4, Table 2 illustrates how these quantities, and hence the corresponding guarantees, differ according to whether we use Nesterov smoothing or randomized smoothing.

The randomized smoothing procedure requires solving LPs, whereas Nesterov’s smoothing technique requires solving QPs. While both of these problems are highly structured and can be solved efficiently by off-the-shelf solvers (e.g., [36]), we found the LP solution times to be faster than those for the QP. Additional computational details are discussed in Sect. 6.

Note that Theorem 1 presents error bounds in expectation, though for option 1, since we use Nesterov’s smoothing technique and the Riemann sum approximation of the integral, the guarantee in Theorem 1 holds without the need to take an expectation. Theorem 2 below presents corresponding high-probability guarantees. For simplicity, we present results for options 2 and 4, which rely on the following assumption:

Assumption 3

Assume that \(\mathbb {E}({\varvec{e}}_t|{\mathcal {F}}_{t-1})={\varvec{0}}\) and that \(\mathbb {E}\bigl (e^{\Vert {\varvec{e}}_t\Vert ^2/\sigma _t^2} \mid {\mathcal {F}}_{t-1}\bigr ) \le e\), where \(\sigma _t=\sigma /\sqrt{m_t}\).

Theorem 2

Suppose that Assumptions 1 and 3 hold, and define the sequence \((\theta _t)_{t \in \mathbb {N}_0}\) by \(\theta _0:= 1\) and \(\theta _{t+1}:= 2\bigl (1+\sqrt{1+4/\theta _t^2}\bigr )^{-1}\) for \(t \in \mathbb {N}_0\). Let \(u > 0\), let \(u_t:= \theta _t u\) and take \(L_t=B_1/u_t\) and \(\eta _t=\eta \) for all \(t \in \mathbb {N}_0\) as input parameters to Algorithm 1. Writing \(M_T^{(2)}:=\sqrt{\sum _{t=0}^{T-1}m_t^{-2}}\) and \(M_T^{(1)}:=\sqrt{\sum _{t=0}^{T-1}m_t^{-1}}\), and choosing \(u=({D}/{2})\sqrt{B_1/B_0}\) and \(\eta =({\sigma M_T^{(1)}})/{D}\) as in Theorem 1, for any \(\delta \in (0,1)\), we have with probability at least \(1-\delta \) that

For option 3, we would need to consider the approximation error from the Riemann sum, and the final error rate would include additional O(1/T) terms. We omit the details for brevity.

Finally in this section, we relate the error of the objective to the error in terms of \({\varvec{\phi }}\), as measured through the squared \(L_2\) distance between the corresponding lower convex envelope functions.

Theorem 3

For any \({\varvec{\phi }}\in {{\varvec{\varPhi }}}\), we have

5 Beyond log-concave density estimation

In this section, we extend our computational framework beyond the log-concave density family, through the notion of s-concave densities. For \(s\in \mathbb {R}\), define domains \({\mathcal {D}}_s\) and \(\psi _s:{\mathcal {D}}_s \rightarrow \mathbb {R}\) by

Definition 1

[s-concave density, [65]] For \(s\in \mathbb {R}\), the class \({\mathcal {P}}_s(\mathbb {R}^d)\) of s-concave density functions on \(\mathbb {R}^d\) is given by

For \(s=0\), the family of s-concave densities reduces to the family of log-concave densities. Moreover, for \(s_1 < s_2\), we have \({\mathcal {P}}_{s_2}(\mathbb {R}^d)\subseteq {\mathcal {P}}_{s_1}(\mathbb {R}^d)\) [23, p. 86]. The s-concave density family introduces additional modelling flexibility, in particular allowing much heavier tails when \(s < 0\) than the log-concave family, but we note that there is no guidance available in the literature on how to choose s.

For the problem of s-concave density estimation, we discuss two estimation methods, both of which have been previously considered in the literature, but for which there has been limited algorithmic development. The first is based on the maximum likelihood principle (Sect. 5.1), while the other is based on minimizing a Rényi divergence (Sect. 5.2).

5.1 Computation of the s-concave maximum likelihood estimator

[65] proved that a maximum likelihood estimator over \({\mathcal {P}}_s(\mathbb {R}^d)\) exists with probability one for \(s\in (-1/d,\infty )\) and \(n > \max \bigl (\frac{dr}{r-d},d\bigr )\), where \(r:= -1/s\), and does not exist if \(s<-1/d\). [24] provide some statistical properties of this estimator when \(d=1\). The maximum likelihood estimation problem is to compute

or equivalently,

We establish the following theorem:

Theorem 4

Let \(s \in [0,1]\) and suppose that the convex hull \(C_n\) of the data is d-dimensional (so that the s-concave MLE \({\hat{p}}_n\) exists and is unique). Then computing \({\hat{p}}_n\) in (21) is equivalent to the convex minimization problem of computing

in the sense that \({\hat{p}}_n = \psi _s \circ \textrm{cef}[{\varvec{\phi }}^*]\).

Remark 1

The equivalence result in Theorem 4 holds for any s (outside [0, 1]) as long as the s-concave MLE exists. However, when \(s\in [0,1]\), (23) is a convex optimization problem. The family of s-concave densities with \(s<0\) appears to be more useful from a statistical viewpoint as it allows for heavier tails than log-concave densities, but the MLE cannot be then computed via convex optimization. Nevertheless, the entropy minimization methods discussed in Sect. 5.2 can be used to obtain s-concave density estimates for \(s > -1\).

5.2 Quasi-concave density estimation

Another route to estimate an s-concave density (or even a more general class) is via the following problem:

where \(\varPsi :\mathbb {R}\rightarrow (-\infty ,\infty ]\) is a decreasing, proper convex function. When \(\varPsi (y) = e^{-y}\), (24) is equivalent to the MLE for log-concave density estimation (1), by [20, Theorem 1]. This problem, proposed by [46], is called quasi-concave density estimation. [46, Theorem 4.1] show that under some assumptions on \(\varPsi \), there exists a solution to (24), and if \(\varPsi \) is strictly convex, then the solution is unique. Furthermore, if \(\varPsi \) is differentiable on the interior of its domain, then the optimal solution to the dual of (24) is a probability density p such that \(p=-\varPsi '(\varphi )\), and the dual problem can be regarded as minimizing different distances or entropies (depending on \(\varPsi \)) between the empirical distribution of the data and p. In particular, when \(\beta \ge 1\) and \(\varPsi (y) = \mathbb {1}_{\{y \le 0\}}(-y)^\beta /\beta \), and when \(\beta < 0\) and \(\varPsi (y) = -y^\beta /\beta \) for \(y \ge 0\) (with \(\varPsi (y) = \infty \) otherwise), the dual problem of (24) is essentially minimizing the Rényi divergence and we have the primal-dual relationship \(p=|\varphi |^{\beta -1}\). In fact, this amounts to estimating an s-concave density via Rényi divergence minimization with \(\beta =1+1/s\) and \(s \in (-1,\infty ) {\setminus } \{0\}\). We therefore consider the problem

The proof of Theorem 5 is similar to that of Theorem 4, and is omitted for brevity.

Theorem 5

Given a decreasing proper convex function \(\varPsi \), the quasi-concave density estimation problem (24) is equivalent to the following convex problem:

in the sense that \(\check{\varphi } = \textrm{cef}[{\varvec{\phi }}^*]\), with corresponding density estimator \({\tilde{p}}_n = -\varPsi ' \circ \textrm{cef}[{\varvec{\phi }}^*]\).

The objective in (26) is convex, so our computational framework can be applied to solve this problem.

6 Computational experiments on simulated data

In this section, we present numerical experiments to study the different variants of our algorithm and compare them with existing methods based on convex optimization for the log-concave MLE. Our results are based on large-scale synthetic datasets with \(n \in \{5{,}000,10{,}000\}\) observations generated from standard d-dimensional normal and Laplace distributions with \(d=4\). Code for our experiments is available from the github repository LogConcComp available at:

https://github.com/wenyuC94/LogConcComp.

All computations were carried out on the MIT Supercloud Cluster [58] on an Intel Xeon Platinum 8260 machine, with 24 CPUs and 24GB of RAM. Our algorithms were written in Python; we used Gurobi [36] to solve the LPs and QPs.

Our first comparison method is that of [20], implemented in the R package LogConcDEAD [18], and denoted by CSS. The CSS algorithm terminates when \(\Vert {\varvec{\phi }}^{(t)} - {\varvec{\phi }}^{(t-1)}\Vert _\infty \le \tau \), and we consider \(\tau \in \{10^{-2},10^{-3},10^{-4}\}\). Our other competing approach is the randomized smoothing method of [25], with random grids of a fixed grid size, which we denote here by RS-RF-m, with m being the grid size. To the best of our knowledge, this method has not been used to compute the log-concave MLE previously.

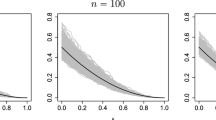

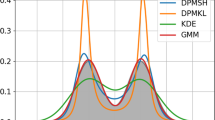

Plots on a log-scale of relative objective versus time (mins) [left panel] and number of iterations [right panel]. For each of our four synthetic data sets, we ran five repetitions of each algorithm, so each bold line corresponds to the median of the profiles of the corresponding algorithm, and each thin line corresponds to the profile of one repetition. For the right panel, we show the profiles up to 128 iterations

We denote the different variants of our algorithm as \(\hbox { Alg-}\ V\), where \(\text {Alg}\in \{\text {RS,NS}\}\) represents Algorithm 1 with Randomized smoothing and Nesterov smoothing, and \(V\in \{\text {DI},\text {RI}\}\) represents whether we use deterministic or random grids of increasing grid sizes to approximate the gradient. Further details of our input parameters are given in Appendix A.3.

Figure 2 presents the relative objective error, defined for an algorithm with iterates \({\varvec{\phi }}_1,\ldots ,{\varvec{\phi }}_t\) as

against time (in minutes) and number of iterations. In the definition of the relative objective error in (27) above, \({\varvec{\phi }}^*\) is taken as the CSS solution with tolerance \(\tau =10^{-4}\). The figure shows that randomized smoothing appears to outperform Nesterov smoothing in terms of the time taken to reach a desired relative objective error, since the former solves an LP (\(Q_0\)), whereas the latter has to solve a QP (\(Q_u\)); the number of iterations taken by the different methods is, however, similar. There is no clear winner between randomized and deterministic grids, and both appear to perform well.

Table 3 compares our proposed methods against the CSS solutions with different tolerances, in terms of running time, final objective function, and distances of the algorithm outputs to the optimal solution \({\varvec{\phi }}^*\) and the truth \({\varvec{\phi }}^{\text {truth}}\). We find that all of our proposals yield marked improvements in running time compared with the CSS solution: with \(n=10{,}000\), \(d=4\) and \(\tau = 10^{-4}\), CSS takes more than 20 h for all of the data sets we considered, whereas the RS-DI variant is around 50 times faster. The CSS solution may have a slightly improved objective function value on termination, but as shown in Table 3, all of our algorithms achieve an optimization error that is small by comparison with the statistical error, and from a statistical perspective, there is no point in seeking to reduce the optimization error further than this. Table 4 shows that the distances \(\Vert {\varvec{\phi }}^* - {\varvec{\phi }}^{\textrm{truth}}\Vert /n^{1/2}\) are well concentrated around their means (i.e. do not vary greatly over different random samples drawn from the underlying distribution), which provides further reassurance that our solutions are sufficiently accurate for practical purposes. On the other hand, the CSS solution with tolerance \(10^{-3}\) is not always sufficiently reliable in terms of its statistical accuracy, e.g. for a Laplace distribution with \(n=5{,}000\). Our further experiments on real data sets reported in Appendix A.4 provide qualitatively similar conclusions.

Finally, Fig. 3 compares our proposed multistage increasing grid sizes (RS-DI/RS-RI) (see Tables 5 and 6) with the fixed grid size (RS-RF) proposed by [25], under the randomized smoothing setting. We see that the benefits of using the increasing grid sizes as described by our theory carry over to improved practical performance, both in terms of running time and number of iterations.

Data availability

All real data analyzed during this study are publicly available. URLs are included in this published article. The code to generate synthetic data is available in the LogConcComp repository: https://github.com/wenyuC94/LogConcComp.

Code availability

The full code for computation in the current study is available in the LogConcComp repository: https://github.com/wenyuC94/LogConcComp.

Notes

Once our algorithm terminates at \({\tilde{{\varvec{\phi }}}}_T\), say, we evaluate the integral \(I({\tilde{{\varvec{\phi }}}}_T)\) in the same way as [20]. Our final output, then is \({\varvec{\phi }}_T:= {\tilde{{\varvec{\phi }}}}_T + \log I({\tilde{{\varvec{\phi }}}}_T){\varvec{1}}\); this final step not only improves the objective function, but also guarantees that \(\exp [-\textrm{cef}[{\varvec{\phi }}_T](\cdot )]\) is a log-concave density.

International Business Machines Corporation (IBM.US), JPMorgan Chase & Co. (JPM.US), Caterpillar Inc. (CAT.US), 3 M Company (MMM.US)

pct_Pop_18_24_ACS_15_19, pct_Pop_25_44_ACS_15_19, pct_Pop_45_64_ACS_15_19, pct_Pop_65plus_ACS_15_19.

Ambient temperature (AT), Ambient pressure (AP), Carbon monoxide (CO), Nitrogen oxides (NOx).

In fact, the factor of e is omitted in the statement of [12, Lemma 8] but one can see from the authors’ inequalities (27) and (28) that it should be present.

References

Agarwal, A., Bartlett, P.L., Ravikumar, P., Wainwright, M.J.: Information-theoretic lower bounds on the oracle complexity of stochastic convex optimization. IEEE Trans. Inf. Theory 5(58), 3235–3249 (2012)

Axelrod, B., Diakonikolas, I., Stewart, A., Sidiropoulos, A., Valiant, G.: A polynomial time algorithm for log-concave maximum likelihood via locally exponential families. In: Advances in Neural Information Processing Systems, pp. 7723–7735 (2019)

Azuma, K.: Weighted sums of certain dependent random variables. Tohoku Math. J. Second Ser. 19(3), 357–367 (1967)

Barber, C.B., Dobkin, D.P., Huhdanpaa, H.: The quickhull algorithm for convex hull. Tech. rep., Technical Report GCG53, Geometry Center, University of Minnesota (1993)

Barber, R.F., Samworth, R.J.: Local continuity of log-concave projection, with applications to estimation under model misspecification. Bernoulli, to appear (2021)

Bellec, P.C.: Sharp oracle inequalities for least squares estimators in shape restricted regression. Ann. Stat. 46(2), 745–780 (2018)

Bertsekas, D.: Nonlinear Programming. Athena Scientific Optimization and Computation Series. Athena Scientific (2016). https://books.google.com/books?id=TwOujgEACAAJ

Bertsekas, D.P.: Convex Optimization Theory. Athena Scientific Belmont (2009)

Brunk, H., Barlow, R.E., Bartholomew, D.J., Bremner, J.M.: Statistical Inference under Order Restrictions: The Theory and Application of Isotonic Regression. John Wiley & Sons (1972)

Buldygin, V.V., Kozachenko, Y.V.: Metric Characterization of Random Variables and Random Processes, vol. 188. American Mathematical Society (2000)

Cai, T.T., Low, M.G.: A framework for estimation of convex functions. Stat. Sin. 25, 423–456 (2015)

Carpenter, T., Diakonikolas, I., Sidiropoulos, A., Stewart, A.: Near-optimal sample complexity bounds for maximum likelihood estimation of multivariate log-concave densities. In: Conference On Learning Theory, pp. 1234–1262 (2018)

Chatterjee, S., Guntuboyina, A., Sen, B.: On risk bounds in isotonic and other shape restricted regression problems. Ann. Stat. 43(4), 1774–1800 (2015)

Chen, W., Mazumder, R.: Multivariate convex regression at scale. arXiv preprint arXiv:2005.11588 (2020)

Chen, Y., Samworth, R.J.: Smoothed log-concave maximum likelihood estimation with applications. Stat. Sin. 23, 1373–1398 (2013)

Chen, Y., Samworth, R.J.: Generalized additive and index models with shape constraints. J. R. Stat. Soc. B 78, 729–754 (2016)

Clarke, F.H.: Optimization and Nonsmooth Analysis, vol. 5. SIAM, Philadelphia (1990)

Cule, M., Gramacy, R.B., Samworth, R.: LogConcDEAD: an R package for maximum likelihood estimation of a multivariate log-concave density. J. Stat. Softw. 29(2), 1–20 (2009). https://doi.org/10.18637/jss.v029.i02. (https://www.jstatsoft.org/v029/i02)

Cule, M., Samworth, R.: Theoretical properties of the log-concave maximum likelihood estimator of a multidimensional density. Electron. J. Stat. 4, 254–270 (2010)

Cule, M., Samworth, R., Stewart, M.: Maximum likelihood estimation of a multi-dimensional log-concave density. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 72(5), 545–607 (2010)

Cule, M.L., Dümbgen, L.: On an auxiliary function for log-density estimation. arXiv preprint arXiv:0807.4719 (2008)

Dalalyan, A.S.: Theoretical guarantees for approximate sampling from smooth and log-concave densities. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 79(3), 651–676 (2017)

Dharmadhikari, S., Joag-Dev, K.: Unimodality, Convexity, and Applications. Elsevier (1988)

Doss, C.R., Wellner, J.A.: Global rates of convergence of the MLEs of log-concave and \(s\)-concave densities. Ann. Stat. 44(3), 954 (2016)

Duchi, J.C., Bartlett, P.L., Wainwright, M.J.: Randomized smoothing for stochastic optimization. SIAM J. Optim. 22(2), 674–701 (2012)

Dümbgen, L., Rufibach, K.: Maximum likelihood estimation of a log-concave density and its distribution function: basic properties and uniform consistency. Bernoulli 15(1), 40–68 (2009)

Dümbgen, L., Rufibach, K.: logcondens: computations related to univariate log-concave density estimation. J. Stat. Softw. 39, 1–28 (2011)

Dümbgen, L., Samworth, R., Schuhmacher, D.: Approximation by log-concave distributions, with applications to regression. Ann. Stat. 39(2), 702–730 (2011)

Dümbgen, L., Samworth, R.J., Wellner, J.A.: Bounding distributional errors via density ratios. Bernoulli 27, 818–852 (2021)

Durot, C., Lopuhaä, H.P.: Limit theory in monotone function estimation. Stat. Sci. 33(4), 547–567 (2018)

Fang, B., Guntuboyina, A.: On the risk of convex-constrained least squares estimators under misspecification. Bernoulli 25(3), 2206–2244 (2019)

Feng, O.Y., Guntuboyina, A., Kim, A.K., Samworth, R.J.: Adaptation in multivariate log-concave density estimation. Ann. Stat. 49, 129–153 (2021)

Grenander, U.: On the theory of mortality measurement: part ii. Scand. Actuar. J. 1956(2), 125–153 (1956)

Groeneboom, P., Jongbloed, G.: Nonparametric Estimation under Shape Constraints, vol. 38. Cambridge University Press, Cambridge (2014)

Guntuboyina, A., Sen, B.: Global risk bounds and adaptation in univariate convex regression. Probab. Theory Relat. Fields 163(1–2), 379–411 (2015)

Gurobi Optimization, LLC: Gurobi optimizer reference manual (2021). http://www.gurobi.com

Han, Q.: Global empirical risk minimizers with “shape constraints" are rate optimal in general dimensions. The Annals of Statistics, to appear (2021)

Han, Q., Wang, T., Chatterjee, S., Samworth, R.J.: Isotonic regression in general dimensions. Ann. Stat. 47(5), 2440–2471 (2019)

Han, Q., Wellner, J.A.: Approximation and estimation of \(s\)-concave densities via rényi divergences. Ann. Stat. 44(3), 1332 (2016)

Han, Q., Wellner, J.A.: Multivariate convex regression: global risk bounds and adaptation. arXiv preprint arXiv:1601.06844 (2016)

Hildreth, C.: Point estimates of ordinates of concave functions. J. Am. Stat. Assoc. 49(267), 598–619 (1954)

Kappel, F., Kuntsevich, A.V.: An implementation of Shor’s \(r\)-algorithm. Comput. Optim. Appl. 15(2), 193–205 (2000)

Kim, A.K., Guntuboyina, A., Samworth, R.J.: Adaptation in log-concave density estimation. Ann. Stat. 46(5), 2279–2306 (2018)

Kim, A.K., Samworth, R.J.: Global rates of convergence in log-concave density estimation. Ann. Stat. 44(6), 2756–2779 (2016)

Klenke, A.: Probability Theory: A Comprehensive Course. Springer Science & Business Media (2014)

Koenker, R., Mizera, I.: Quasi-concave density estimation. Ann. Stat. 38(5), 2998–3027 (2010)

Kur, G., Dagan, Y., Rakhlin, A.: The log-concave maximum likelihood estimator is optimal in high dimensions. arXiv preprint arXiv:1903.05315v3 (2019)

Lakshmanan, H., De Farias, D.P.: Decentralized resource allocation in dynamic networks of agents. SIAM J. Optim. 19(2), 911–940 (2008)

Liu, Y., Wang, Y.: A fast algorithm for univariate log-concave density estimation. Austr. N. Z. J. Stat. 60(2), 258–275 (2018)

McMullen, P.: The maximum numbers of faces of a convex polytope. Mathematika 17(2), 179–184 (1970)

Nemirovsky, A.S., Yudin, D.B.: Problem Complexity and Method Efficiency in Optimization. Wiley (1983)

Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103(1), 127–152 (2005)

Nesterov, Y.: Primal-dual subgradient methods for convex problems. Math. Program. 120(1), 221–259 (2009)

Pal, J.K., Woodroofe, M., Meyer, M.: Estimating a Polya frequency function. Lecture Notes-Monograph Series pp. 239–249 (2007)

Pananjady, A., Samworth, R.J.: Isotonic regression with unknown permutations: Statistics, computation, and adaptation. arXiv preprint arXiv:2009.02609 (2020)

Polyak, B.T.: Introduction to Optimization. Optimization Software Inc., Publications Division, New York (1987)

Rathke, F., Schnörr, C.: Fast multivariate log-concave density estimation. Comput. Stat. Data Anal. 140, 41–58 (2019)

Reuther, A., Kepner, J., Byun, C., Samsi, S., Arcand, W., Bestor, D., Bergeron, B., Gadepally, V., Houle, M., Hubbell, M., et al.: Interactive supercomputing on 40,000 cores for machine learning and data analysis. In: 2018 IEEE High Performance extreme Computing Conference (HPEC), pp. 1–6. IEEE (2018)

Rockafellar, R.T.: Convex Analysis. Princeton University Press (1997)

Samworth, R.J.: Recent progress in log-concave density estimation. Stat. Sci. 33(4), 493–509 (2018)

Samworth, R.J., Sen, B.: Editorial: special issue on nonparametric inference under shape constraints. Stat. Sci. 33, 469–472 (2018)

Samworth, R.J., Yuan, M.: Independent component analysis via nonparametric maximum likelihood estimation. Ann. Stat. 40(6), 2973–3002 (2012)

Schuhmacher, D., Hüsler, A., Dümbgen, L.: Multivariate log-concave distributions as a nearly parametric model. Stat. Risk Model. Appl. Finance Insur. 28(3), 277–295 (2011)

Seijo, E., Sen, B.: Nonparametric least squares estimation of a multivariate convex regression function. Ann. Stat. 39(3), 1633–1657 (2011)

Seregin, A., Wellner, J.A.: Nonparametric estimation of multivariate convex-transformed densities. Ann. Stat. 38(6), 3751–3781 (2010)

Shor, N.Z.: Minimization Methods for Non-Differentiable Functions. Springer-Verlag (1985)

Tseng, P.: On accelerated proximal gradient methods for convex-concave optimization. submitted to SIAM Journal on Optimization 1 (2008)

Walther, G.: Detecting the presence of mixing with multiscale maximum likelihood. J. Am. Stat. Assoc. 97(458), 508–513 (2002)

Walther, G.: Inference and modeling with log-concave distributions. Stat. Sci. 24(3), 319–327 (2009)

Xiao, L.: Dual averaging methods for regularized stochastic learning and online optimization. J. Mach. Learn. Res. 11, 2543–2596 (2010)

Xu, M., Samworth, R.J.: High-dimensional nonparametric density estimation via symmetry and shape constraints. Ann. Stat. 49, 650–672 (2021)

Yang, F., Barber, R.F.: Contraction and uniform convergence of isotonic regression. Electron. J. Stat. 13(1), 646–677 (2019)

Yousefian, F., Nedić, A., Shanbhag, U.V.: On stochastic gradient and subgradient methods with adaptive steplength sequences. Automatica 48(1), 56–67 (2012)

Zhang, C.H.: Risk bounds in isotonic regression. Ann. Stat. 30(2), 528–555 (2002)

Acknowledgements

The authors acknowledge MIT SuperCloud and Lincoln Laboratory Supercomputing Center for providing HPC resources that have contributed to the research results reported within this paper. We also thank the anonymous reviewers for constructive comments on an earlier version that helped to improve this paper.

Funding

‘Open Access funding provided by the MIT Libraries’ Research supported in part by grants from the Office of Naval Research: N000141812298, N000142112841, N000142212665, the National Science Foundation and IBM to Rahul Mazumder. The research of Richard J. Samworth was supported by EPSRC grants EP/N031938/1 and EP/P031447/1, as well as ERC Advanced Grant 101019498.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Research supported in part by grants from the Office of Naval Research: N000141812298, N000142112841, the National Science Foundation and IBM to Rahul Mazumder. The research of Richard J. Samworth was supported by EPSRC Grants EP/N031938/1 and EP/P031447/1, as well as ERC Advanced Grant 101,019,498.

Appendices

Additional implementational and experimental details

1.1 Initialization: non-convex method

[20] show that the negative log-density \(-\log {\hat{p}}_n(\cdot )\) of the log-concave MLE is a piecewise-affine convex function over its domain \(C_n\). This allows us to parametrize these functions as \(\varphi ({\varvec{x}}):=\max _{j \in [m]}\{{\varvec{a}}_j^\top {\varvec{x}}+b_j\}\) for \(x \in C_n\), where \({\varvec{a}}_1,\ldots ,{\varvec{a}}_m \in \mathbb {R}^d\) and \(b_1,\ldots ,b_m \in \mathbb {R}\). We can then reformulate the problem as \(\min _{{\varvec{a}}_1,\ldots ,{\varvec{a}}_m \in \mathbb {R}^d,b_1,\ldots ,b_m \in \mathbb {R}} f_0({\varvec{a}},{\varvec{b}})\), with non-convex objective

To approximate the integral, we use the same simple Riemann grid points mentioned in Sect. 3.2.1. Subgradients of the objective (28) are straightforward to compute via the chain rule and the subgradient of the maximum function (see, e.g., [8]). After standardizing each coordinate of our data to have mean zero and unit variance, which does not affect the final outcome due to the affine equivariance of the log-concave MLE [28, Remark 2.4], we generate \(m=10\) initializing hyperplanes from a standard \((d+1)\)-dimensional Gaussian distribution. We then obtain the initializer for our main algorithm by applying a vanilla subgradient method to the objective (28) [56, 66] with stepsize \(t^{-1/2}\) at the tth iteration, terminating when the difference in the objective function at successive iterations drops below \(10^{-4}\), or after 100 iterations, whichever is the sooner. This technique is related to the non-convex method for log-concave density estimation proposed by [57], who considered a smoothed version of (28). Our goal here is only to seek a good initializer rather than the global optimum, and we found that the approach described above was effective in this respect, as well as being faster to compute than the method of [57].

1.2 Final polishing step

As mentioned in Sect. 3.2.1, once our algorithm terminates at \({\tilde{{\varvec{\phi }}}}_T\), say, we evaluate the integral \(I({\tilde{{\varvec{\phi }}}}_T)\) in the same way as [20]. Our final output, then is \({\varvec{\phi }}_T:= {\tilde{{\varvec{\phi }}}}_T + \log I({\tilde{{\varvec{\phi }}}}_T){\varvec{1}}\); this final step not only improves the objective function, but also guarantees that \(\exp [-\textrm{cef}[{\varvec{\phi }}_T](\cdot )]\) is a log-concave density. This can be shown by following the same arguments as in Steps 2-3 in the proof of Theorem 4.

1.3 Input parameter settings

According to Theorem 1, we should take \(u=\frac{D}{2}\sqrt{\frac{B_1}{B_0}}\). By Table 2, for randomized smoothing, this is approximately \(\frac{D}{2}C_1\sqrt{n}\), where \(C_1=\sqrt{\varDelta e^{-\phi _0}}\). In our experiments for randomized smoothing, we chose \(u=Dn^{1/4}/2\), while for Nesterov smoothing, we chose \(u= D/2\). According to Theorem 1, \(\eta = \sigma M_T^{(1)}/D\), where we took \(\sigma =10^{-4}\) for RS-RI and RS-DI, and \(\sigma =10^{-3}\) for NS-RI and NS-DI. For the competing RS-RF-m method, we present the better of the results from \(\sigma \in \{10^{-3},10^{-4}\}\).

To illustrate the increasing grid size strategy we take in the experiments, we first present in Table 5 some potential schemes to achieve the \({{\tilde{O}}}(1/T)\) error rate on the objective function scale. In our experiments, we used the multi-stage increasing grid size scheme with \(C_1=8\) and \(\rho _1=2\). For the random grid (RI), we take \(C=5{,}000\) and \(\rho = 2\). For the deterministic grid (DI), we first choose an axis-aligned grid with \(m_{0,t}\) points in each dimension that encloses the convex hull \(C_n\) of the data. Then \(m_t\) is the number of these grid points that fall within \(C_n\). Table 6 provides an illustration of this multi-stage strategy used in the numerical experiments for a Laplace distribution with \(n=5{,}000\) and \(d=4\). Code for the other settings is available in the github repository LogConcComp.

1.4 Experimental results on real data sets

We provide additional simulation results on three real data sets:

-

Stock returns: The Stock returns real data consist of daily returns of four stocksFootnote 3 over \(n=10{,}000\) randomly sampled days between 1970 and 2010, normalized so that each dimension has unit variance. The real data are available at https://stooq.com/db/h/.

-

Census: The Census real data consist of percentages of the population of different age groups (18-24, 25-44, 45-64 and 65+) for \(n=10{,}000\) randomly sampled Census tracts based on the 2015–2019 5-year ACS (American Community Survery),Footnote 4 and the data are normalized so that each dimension has unit variance. The data and description are available at https://www.census.gov/topics/research/guidance/planning-databases/2021.html.

-

Gas turbine: The Gas turbine real data consist of 4 sensor measuresFootnote 5 aggregated over one hour from a gas turbine for \(n=10{,}000\) hours between 2011 and 2015, normalized so that each dimension has unit variance. The data are available at https://archive.ics.uci.edu/ml/datasets/Gas+Turbine+CO+and+NOx+Emission+Data+Set.

Additional plots on a log-scale of Relative objective versus time (mins) [left panel] and number of iterations [right panel]. Details are given in the caption of Fig. 2

Table 7, Figs. 4 and 5 provide simulation results that correspond to those in Table 3, Figs. 2 and 3 respectively, but for three three real data sets. The table and figures reveal a qualitatively very similar story to that presented for the simulated data in Sect. 6: the main conclusion is that our randomized smoothing approaches are significantly more computationally efficient than both the Nesterov smoothing and CSS methods.

Proofs

1.1 Proofs of Propositions 1 and 2

The proof of Proposition 1 is adapted from the proof of [2, Lemma 2], which in turn is based on [12, Lemma 8].

Proof (Proof of Proposition 1)

The proof has three parts.

Part 1. We first prove that \(\phi ^*_{i} \in [\phi _0,\phi ^0]\) for all \(i \in [n]\); or equivalently

for all \(i \in [n]\). Define

We proceed to obtain an upper bound on R. To this end, let \({\bar{p}}_{n}\) denote the uniform density over \(C_{n}\). If \({\hat{p}}_{n}({\varvec{x}}_{i})={\bar{p}}_{n}({\varvec{x}}_{i})=1/\varDelta \) for all i, then (29) holds. So we may assume that \({\hat{p}}_{n} \ne {\bar{p}}_{n}\), so that \(R>1\) and \(M>1/\varDelta \). For a density p on \(\mathbb {R}^d\) and for \(t \in \mathbb {R}\), let \(L_p(t):= \{{\varvec{x}}\in \mathbb {R}^d:p({\varvec{x}})\ge t\}\) denote the super-level set of p at height t. Since \({\hat{p}}_n\) is supported on \(C_n\), and since \({\hat{p}}_n({\varvec{x}})\ge \min _{i\in [n]}{\hat{p}}_n({\varvec{x}}_i)=M/R\) for \({\varvec{x}}\in C_n\), it follows by [12, Lemma 8],Footnote 6 that when \(R \ge e\),

On the other hand, since \(\inf _{{\varvec{x}}\in C_n}{\hat{p}}_n({\varvec{x}}) = M/R\), we have \((M/R) \cdot \varDelta \le 1\), so for \(R < e\), we have \(M \le R/\varDelta < e/\varDelta \). We deduce that

for all \(R > 1\), where \(\log _+(x):= 1 \vee \log x\). Now, by the optimality of \({\hat{p}}_n\), we have

so that \(R \le (M\varDelta )^n\). It follows that when \(R \ge e\), we have from (31) and the fact that \(\log y \le y\) for \(y > 0\) that

Since (33) holds trivially when \(R < e\), we may combine (33) with (31) to obtain

Moreover, from (32) and (34), we also have

as required.

Part 2. Now we extend the above result to all \({\varvec{\phi }}\in \mathbb {R}^n\) such that \(I({\varvec{\phi }})=1\) and \(f({\varvec{\phi }})\le f({{\bar{{\varvec{\phi }}}}})\), where \({{\bar{{\varvec{\phi }}}}}\) is defined just after Proposition 1. The key observation here is that the proof of Part 1 applies to any density with log-likelihood at least that of the uniform distribution over \(C_{n}\). In particular, for any \({\varvec{\phi }}\) satisfying these conditions, the density \(p \in {\mathcal {F}}_d\) given by \(p({\varvec{x}})=\exp \{-\textrm{cef}[{\varvec{\phi }}]({\varvec{x}})\}\) has log-likelihood at least that of the uniform distribution over \(C_{n}\), so

as required.

Part 3. We now consider the case for a general \({\varvec{\phi }}\in \mathbb {R}^n\) with \(f({\varvec{\phi }})\le f({{\bar{{\varvec{\phi }}}}})\). Let \({\tilde{{\varvec{\phi }}}}:= {\varvec{\phi }}+\log I({\varvec{\phi }}){\varvec{1}}\), so that \(I({\tilde{{\varvec{\phi }}}})=1\) and \(\textrm{cef}[{\tilde{{\varvec{\phi }}}}](\cdot ) =\textrm{cef}[{\varvec{\phi }}](\cdot )+\log (I({\varvec{\phi }}))\). Furthermore,

The result therefore follows by Part 2. \(\square \)

Proof (Proof of Proposition 2)

Recall our notation from Sect. 2 that \({\varvec{\alpha }}^*[{\varvec{\phi }}]({\varvec{x}})\in A[{\varvec{\phi }}]({\varvec{x}})\) denotes a solution to (\(Q_0\)) at \({\varvec{x}}\in C_n\). Recall further from (9) that any subgradient \({\varvec{g}}({\varvec{\phi }})\) of f at \({\varvec{\phi }}\) is of the form

where \({\varvec{\gamma }}:=\varDelta \mathbb {E}\bigl [{\varvec{\alpha }}^*[{\varvec{\phi }}]({\varvec{\xi }})\exp \{-\textrm{cef}[{\varvec{\phi }}]({\varvec{\xi }})\}\bigr ]\) and \({\varvec{\xi }}\) is uniformly distributed on \(C_n\). Since \({\varvec{\alpha }}^*\) lies in the simplex \(\{{\varvec{\alpha }}\in \mathbb {R}^n:{\varvec{\alpha }}\ge 0,{\varvec{1}}^\top {\varvec{\alpha }}=1 \},\) we have \({\varvec{\gamma }}\ge 0\) and

In particular, \(\Vert {\varvec{\gamma }}\Vert _1=I({\varvec{\phi }})\), so

If \(I({\varvec{\phi }}) \le 1/2\), then \(\Vert {\varvec{g}}({\varvec{\phi }})\Vert ^2\le 1/4+ 1/n\); if \(I(\phi )> 1/2\), then \(\Vert {\varvec{g}}({\varvec{\phi }})\Vert ^2\le I({\varvec{\phi }})^2\). Therefore, \(\Vert {\varvec{g}}({\varvec{\phi }})\Vert ^2\le \max \bigl \{1/4+ 1/n,I({\varvec{\phi }})^2\bigr \}\). \(\square \)

1.2 Proofs of Proposition 3 and Proposition 4

The proof of Proposition 3 is based on the following properties of the quadratic program \(q_u[{\varvec{\phi }}]({\varvec{x}})\) defined in (\(Q_u\)), as well as its unique optimizer \({\varvec{\alpha }}_u^*[{\varvec{\phi }}]({\varvec{x}})\):

Proposition 5

For \({\varvec{\phi }}\in {\varvec{\varPhi }}\) and \({\varvec{x}}\in C_n\), we have

(a) \(\Vert {\varvec{\alpha }}_u^*[{\varvec{\phi }}]({\varvec{x}})\Vert \le 1\) for any \({\varvec{x}}\in C_n\) and \({\varvec{\phi }}\in {\varvec{\varPhi }}\);

(b) \(q_{u'}[{\varvec{\phi }}]({\varvec{x}})+(u'-u)/2\le q_u[{\varvec{\phi }}]({\varvec{x}})\le q_{u'}[{\varvec{\phi }}]({\varvec{x}})\) for \(u' \in [0,u]\);

(c) \(\phi _0-\frac{u}{2} \le q_u[{\varvec{\phi }}]({\varvec{x}})\le \phi ^0 \) for all \(u\ge 0\), \({\varvec{\phi }}\in {\varvec{\varPhi }}\) and \({\varvec{x}}\in C_n\);

(d) \(\Vert {\varvec{\alpha }}_u^*[{{\tilde{{\varvec{\phi }}}}}]({\varvec{x}})-{\varvec{\alpha }}_u^*[{\varvec{\phi }}]({\varvec{x}})\Vert \le ({1}/{u})\Vert {{\tilde{{\varvec{\phi }}}}}-{\varvec{\phi }}\Vert \) for any \(u > 0\), \({\varvec{\phi }},{\tilde{{\varvec{\phi }}}}\in {\varvec{{\varvec{\varPhi }}}}\), and any \({\varvec{x}}\in C_n\).

Proof

The proof exploits ideas from [52]. For (a), observe that \({\varvec{\alpha }}_u^*[{\varvec{\phi }}]({\varvec{x}})\in E({\varvec{x}}) \subseteq \{{\varvec{\alpha }}\in \mathbb {R}^n: {\varvec{1}}_n^\top {\varvec{\alpha }}=1, {\varvec{\alpha }}\ge 0\bigr \}\), and this simplex is the convex hull of \(n+1\) points that all lie in the closed unit Euclidean ball in \(\mathbb {R}^n\).

The lower bound in (b) follows immediately from the definition of the quadratic program in (\(Q_u\)). For the upper bound, for \(u' \in [0,u]\), we have

(c) For all \(u\ge 0\), \({\varvec{\phi }}\in {\varvec{\varPhi }}\) and \({\varvec{x}}\in C_n\), we have

Similarly,

(d) Observe that

so \({\varvec{\alpha }}_u^*[{\varvec{\phi }}]({\varvec{x}})\) is the Euclidean projection of \({\varvec{\alpha }}_0- ({{\varvec{\phi }}}/{u})\) onto \(E({\varvec{x}})\). Since this projection is an \(\ell _2\)-contraction, we deduce that

as required. \(\square \)

Proof (of Proposition 3)

(a) For \(u \ge 0\) and \({\varvec{\phi }}\in {\varvec{\varPhi }}\), let

where \({\varvec{\xi }}\) is uniformly distributed on \(C_n\), so that \({\tilde{I}}_0({\varvec{\phi }}) = I({\varvec{\phi }})\). By definition of \({\tilde{f}}_u\), we have for \(u' \in [0,u]\) that

where the inequality follows from Proposition 5(b). Hence, for every \(u \ge 0\) and \({\varvec{\phi }}\in {\varvec{\varPhi }}\),

Now, from (35), Proposition 5(b) and (36), we deduce that

as required.

(b) For each \({\varvec{x}}\in C_n\), the function \({\varvec{\phi }}\mapsto q_u[{\varvec{\phi }}]({\varvec{x}})\) is the infimum of a set of affine functions of \({\varvec{\phi }}\), so it is concave. Moreover, \(y \mapsto e^{-y}\) is a decreasing convex function, so \({\varvec{\phi }}\mapsto e^{-q_u[{\varvec{\phi }}]({\varvec{x}})}\) is convex, and it follows that \({\varvec{\phi }}\mapsto (1/n){\varvec{1}}^\top {\varvec{\phi }}+ \varDelta \mathbb {E}(e^{-q_u[{\varvec{\phi }}]({\varvec{\xi }})}) = {\tilde{f}}_u({\varvec{\phi }})\) is convex. Similarly to the proof of Proposition 2, any subgradient \(\tilde{{\varvec{g}}}_u(\phi )\) of \({\tilde{f}}_u\) at \({\varvec{\phi }}\) satisfies

But \({\varvec{\phi }}^*\in {\varvec{\varPhi }}\), so \(\varDelta ^2 e^{-2\phi _0+u}\ge (\varDelta e^{-\phi _0})^2 \ge I({\varvec{\phi }}^*)^2 = 1 \ge 1/4 + 1/n\). Hence, \({\tilde{f}}_u\) is \(e^{-\phi _0+u/2}\)-Lipschitz.

(c) To establish the Lipschitz property of \(\nabla _{{\varvec{\phi }}}{\tilde{f}}_u\), for any \({\varvec{x}}\in C_n\), any \({\varvec{\phi }},{{\tilde{{\varvec{\phi }}}}}\in {\varvec{\varPhi }}\), and \(t\in [0,1]\), we define

Then

By the mean value theorem there exists \(t_0\in [0,1]\) such that

where the final bound follows from Proposition 5(a) and (c). Now, for any \({\varvec{x}}\in C_n\), we have by (38) as well as Proposition 5(a), (c) and (d) that

It follows that for any \({\varvec{\phi }},{{\tilde{{\varvec{\phi }}}}}\in {\varvec{\varPhi }}\), we have

as required.

(d) For \(u \ge 0\) and \({\varvec{\phi }}\in {\varvec{\varPhi }}\), it follows from Proposition 5(a) and (c) that

as required. \(\square \)

Proposition 6

If \({\varvec{z}}\) is uniformly distributed on the unit \(\ell _{2}\)-ball in \(\mathbb {R}^n\), then \(\mathbb {E}(\Vert {\varvec{z}}\Vert _\infty )\le \sqrt{\tfrac{2\log n}{n+1}}\).

Proof

By [71, Proposition 3], we have that \({\varvec{z}}\overset{d}{=}\ U^{1/n} {\varvec{z}}'\), where \(U\sim {\mathcal {U}}[0,1]\), where \({\varvec{z}}'\) is uniformly distributed on the unit sphere in \(\mathbb {R}^n\), and where U and \({\varvec{z}}'\) are independent. Thus,

Moreover, if \({\varvec{\zeta }}\sim {\mathcal {N}}(0,I_n)\), then \(\Vert {\varvec{\zeta }}\Vert \) and \({\varvec{\zeta }}/\Vert {\varvec{\zeta }}\Vert \) are independent, and \({\varvec{z}}'\overset{d}{=}{\varvec{\zeta }}/\Vert {\varvec{\zeta }}\Vert \). It follows that

where the final bound follows from bounds on the gamma function, e.g. [29, Lemma 12]. The result follows from (39) and (40). \(\square \)

Proof (of Proposition 4)

(a) By Jensen’s inequality,

For the upper bound, let \({\varvec{v}}\in \mathbb {R}^n\) have \(\Vert {\varvec{v}}\Vert \le 1\) and, for some \({\varvec{\phi }}\in {\varvec{\varPhi }}\), let \({{\bar{{\varvec{\phi }}}}}:= {\varvec{\phi }}+u{\varvec{v}}\). For any \({\varvec{\alpha }}\in E({\varvec{x}})\), we have

Therefore, for any \({\varvec{x}}\in C_n\), we have \(\textrm{cef}[{{\bar{{\varvec{\phi }}}}}]({\varvec{x}})\ge \textrm{cef}[{\varvec{\phi }}]({\varvec{x}})-u.\) Hence

Recall that all subgradients of I at \({\tilde{{\varvec{\phi }}}} \in \mathbb {R}^n\) are of the form \(-{\varvec{\gamma }}({\tilde{{\varvec{\phi }}}})\), where

for some \({\varvec{\alpha }}\in A[{\tilde{{\varvec{\phi }}}}]({\varvec{x}})\). Moreover, as we saw in the proof of Proposition 2, \(\Vert {\varvec{\gamma }}({\tilde{{\varvec{\phi }}}})\Vert _1=I({\tilde{{\varvec{\phi }}}})\). We deduce from [59, Theorem 24.7] that

where the final inequality uses Proposition 6.

(b) By the convexity of f, we have

where the last inequality uses property (a).

(c) For each \({\varvec{v}} \in \mathbb {R}^n\) with \(\Vert {\varvec{v}}\Vert = 1\), the map \({\varvec{\phi }}\mapsto f({\varvec{\phi }}+u{\varvec{v}})\) is convex, so \({\varvec{\phi }}\mapsto \mathbb {E}\bigl (f({\varvec{\phi }}+u{\varvec{z}})\bigr ) = {\bar{f}}_u({\varvec{\phi }})\) is convex. The proof of the Lipschitz property is very similar to that of Proposition 3(b) and is omitted for brevity.

(d) As in the proof of (a), for any \({\varvec{v}} \in \mathbb {R}^n\) with \(\Vert {\varvec{v}}\Vert \le 1\), \({\varvec{x}}\in C_n\) and \({\varvec{\alpha }}\in A[{\varvec{\phi }}+u{\varvec{v}}]({\varvec{x}})\), we have

Since \(\nabla _{{\varvec{\phi }}} {\bar{f}}_u({\varvec{\phi }})=n^{-1}{\varvec{1}} -\varDelta \mathbb {E}\bigl ({\varvec{\alpha }}^*[{\varvec{\phi }}+u{\varvec{z}}]({\varvec{\xi }}) e^{-\textrm{cef}[{\varvec{\phi }}+u{\varvec{z}}]({\varvec{\xi }})}\bigr )\), where \({\varvec{\alpha }}^*[{\varvec{\phi }}+u{\varvec{v}}]({\varvec{x}}) \in A[{\varvec{\phi }}+u{\varvec{v}}]({\varvec{x}})\), we have by [73, Lemma 8] that \(\nabla _{{\varvec{\phi }}} {\bar{f}}_u\) is \(\varDelta e^{-\phi _0+u}n^{1/2}/u\)-Lipschitz.

(e) The proof is very similar to the proof of Proposition 3(d) and is omitted for brevity. \(\square \)

1.3 Proofs of Theorem 1 and Theorem 2

We will make use of the following lemma:

Lemma 1

[Lemma 4.2 of [25]] Let \(\bigl (\ell _{u_t}({\varvec{\phi }})\bigr )_t\) be a smoothing sequence such that \({\varvec{\phi }}\mapsto \ell _{u_t}({\varvec{\phi }})\) has \(L_t\)-Lipschitz gradient. Assume that \(\ell _{u_t}({\varvec{\phi }})\le \ell _{u_{t-1}}({\varvec{\phi }})\) for \({\varvec{\phi }}\in {\varvec{\varPhi }}\). Let \(({\varvec{\phi }}_t^{(x)})_{t=0}^T, ({\varvec{\phi }}^{(y)}_t)_{t=0}^T, ({\varvec{\phi }}^{(z)}_t)_{t=0}^T\) be the sequences generated by Algorithm 1. Let \({\varvec{g}}_t\) denote an approximation of \(\nabla \ell _{u_t}({\varvec{\phi }}_t^{(y)})\) with error \({\varvec{e}}_t= {\varvec{g}}_t - \nabla \ell _{u_t}({\varvec{\phi }}_t^{(y)})\). Then for any \({\varvec{\phi }}\in {\varvec{\varPhi }}\) and \(t \in \mathbb {N}\), we have

Recall the definition of the diameter D of \({\varvec{\varPhi }}\) given just before Theorem 1.

Corollary 2

Fix \(u, \eta > 0\), and assume that Assumption 1 holds with \(r \ge u\). Suppose in Algorithm 1 that \(u_t=\theta _tu\), \(L_t={B_1}/{u_t}\) and \(\eta _t=\eta \). Let \(({\varvec{\phi }}_t^{(x)})_{t=0}^T, ({\varvec{\phi }}^{(y)}_t)_{t=0}^T, ({\varvec{\phi }}^{(z)}_t)_{t=0}^T\) be the sequences generated by Algorithm 1 and let \({\varvec{e}}_t= {\varvec{g}}_t - \nabla \ell _{u_t}({\varvec{\phi }}_t^{(y)})\). Then for any \({\varvec{\phi }}\in {\varvec{\varPhi }}\), we have

Proof

By induction, we have that \(\theta _t\le {2}/{(t+2)}\) and \(\sum _{\tau =0}^t {1}/{\theta _\tau }={1}/{\theta _t^2}\) for all \(t = 0,1,\ldots ,T\) [25, 67]. Using Assumption 1, we have

Hence, by Lemma 1,

where we have used the facts that \(\theta _{T-1}^2/\theta _T = \theta _T/(1-\theta _T) \le 2/T\) and \(\theta _{T-1}^2/\theta _t \le \theta _{T-1} \le 2/T\) for \(t \in \{0,1,\ldots ,T-1\}\) and \(\theta _{T-1}^2\le 4/T^2\). \(\square \)

Proof (of Theorem 1)