Abstract

Utilizing vast annotated datasets for supervised training of deep learning models is an absolute necessity. The focus of this paper is to demonstrate a supervisory training technique using perspective transformation-based data augmentation to train various cutting-edge architectures for the ego-lane detection task. Creating a reliable dataset for training such models has been challenging due to the lack of efficient augmentation methods that can produce new annotated images without missing important features about the lane or the road. Based on extensive experiments for training the three architectures: SegNet, U-Net, and ResUNet++, we show that the perspective transformation data augmentation strategy noticeably improves the performance of the models. The model achieved validation dice of 0.991 when ResUNET++ was trained on data of size equal to 6000 using the PTA method and achieved a dice coefficient of 96.04% when had been tested on the KITTI Lane benchmark, which contains 95 images for different urban scenes, which exceeds the results of the other papers. An ensemble learning approach is also introduced while testing the models to achieve the most robust performance under various challenging conditions.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Deep Learning (DL) models have made massive progress in the field of computer vision (Simonyan and Zisserman 2014). This progress is mainly promoted by training different convolutional neural networks (CNNs) architectures on various tasks like image classification, image segmentation, and object detection. The layers of these neural networks work mainly on preserving the spatial characteristics of images (Simonyan and Zisserman 2014; Srivastava et al. 2021; Szegedy et al. 2015). So far, there have been many types of developed and improved DL models, including different layers and connections. Special handling of the used training data is crucial to train such architectures to achieve state-of-the-art performance. A DL model’s performance is typically improved by increasing the quality, diversity, and amount of training data (Shorten and Khoshgoftaar 2019). However, the unavailability of training data is considered a limitation for achieving the desired performance (Yousri et al. 2021; Perez and Wang 2017). This unavailability can be in the difficulty of finding large-sized datasets or the lack of annotated data required for training the DL architectures supervisely. Accordingly, an efficient way to acquire more annotated data is to enlarge their amount by basically extending the available dataset. This is called data augmentation (DA), where there are different methods for performing augmentation (Perez and Wang 2017; Han et al. 2018). DA methods automatically and artificially inflate the size of the available training data, including the data and their labels. Data warping and oversampling are commonly used for augmentation (Khosla and Saini 2020; Wong et al. 2016; Shorten and Khoshgoftaar 2019). Data warping transforms the existing images by mainly geometric and color transformations (Khosla and Saini 2020). Rotation and flipping of the images are considered common forms of geometric transformation, while color shifting is the most common form of color transformation (Zheng et al. 2020). On the other hand, oversampling augmentation encompasses creating synthetic instances by methods like feature space augmentations and generative adversarial networks (GANs).

In the context of automatic lane detection, recently, there has been an increasing tendency toward considering this task a semantic segmentation task. Thus, many DL models based on various architectures have been developed to perform such tasks robustly. The main benefit of the DL approach for lane detection is that the DL models are capable of showing accurate detection under various road conditions that the traditional computer vision techniques cannot deal with Yousri et al. (2021); Zou et al. (2019). Yet, to achieve this robustness in automatic lane detection, it is crucial to train such DL models using big-size data and various road conditions like the different road lighting and the various lane types. In automatic lane detection, simple data augmentation techniques such as flipping, cropping, and rotation are commonly used either to increase the data size or to overcome data imbalance (Zou et al. 2019). However, while dealing with data augmentation, it is crucial not to use methods that can eliminate some important features of the lane lines, like their bright colors or frontal heading nature. Moreover, methods like GANs cannot be applied directly to detection tasks because this produces only new images without their corresponding masks (Shorten and Khoshgoftaar 2019).

In this paper, perspective transformation (PT) is utilized to inflate the training data size by increasing the number of images taken by a frontal camera mounted on the top of the autonomous vehicle. We can mimic various images taken at different angles and positions for the same scene using PT from just one image (Wang et al. 2020). This can be beneficial in the context of data augmentation, especially when the method is easy to implement. Furthermore, three architectures based on the fully convolutional networks (FCNs) are involved in studying the impact of using PT during various experiments to be trained on the ego-lane detection task. Eventually, the usefulness of ensemble learning is utilized during the testing stage. Accordingly, the main contributions of this work can be listed as follows:

-

Employing the perspective transformation as a data augmentation method to mimic realistic images taken at different camera angles for the road scenes without eliminating essential features.

-

Using the same perspective transformations to generate the corresponding labels for the augmented images.

-

Investigating the effect of perspective transformation-based data augmentation on the performance of three different state-of-the-art architectures.

-

Adopting a stacking ensemble approach while testing the developed models to achieve the best possible performance in complex and challenging scenes.

The remaining sections of the paper are organized as follows. Section 2 conducts the related work. While Sect. 3 describes the methodology of using perspective transform as a data augmentation method and introduces the adopted ensemble learning approach. Section 4 shows the conducted experiments, results, and discussion. Eventually, the conclusion is found in Sect. 5.

2 Related work

This paper investigates the effect of using the perspective transform as a data augmentation method while training deep learning models on the ego-lane detection task. Accordingly, this section focuses on briefly reviewing the previous related work that considered deep learning models for the lane detection task and the different data augmentation methods used to overcome the limitation of data unavailability.

2.1 Deep learning for lane detection

In Chao et al. (2019), a robust multi-lane detection algorithm was proposed where an architecture based on a fully convolutional network (Neven et al. 2018) was used for lane boundary feature extraction. Then, Hough variation and the least square method were used to fit the lane lines accurately as done previously in Sun and Chen (2014). The algorithm results were evaluated based on Tusimple and Caltech datasets showing robust lane detection. Another work (Mendes et al. 2016) presented a detection system using CNN-based architecture, which was designed to be easily converted into FCN one after training to allow the use of a large contextual window. This methodology was compared with other state-of-the-art methods and showed high performance except in scenes with extreme lighting conditions. Furthermore, an architecture based on up-convolutional layers was proposed in Oliveira et al. (2016) while another one called RBNet was developed in Chen and Chen (2017). Both architectures showed robust performance in lane segmentation upon testing them on the KITTI benchmark dataset. Chen and Chen (2017) ’s limitation was to have a road segmentation model robust to all the weather conditions.

An end-to-end lane detection based on LaneNet was introduced in Wang et al. (2018). LaneNet is an architecture inspired by SegNet (Badrinarayanan et al. 2017); however, LaneNet has two decoders: one acts as a segmentation branch that detects lanes in a binary mask, and the other is an embedding branch. The network outputs a feature map; thus, clustering and curve fitting using H-Net were then done to produce the required final output results. In Gad et al. (2020), an encoder-decoder network-based SegNet architecture was trained to produce a binary segmentation map by labeling each pixel as a lane pixel or non-lane pixel. The authors used tuSimple dataset to test their approach; then, a real-time evaluation was provided. By looking at lane detection as a semantic segmentation task, the authors in Zou et al. (2019) were inspired by the success of encoder-decoder architectures of SegNet and U-Net (Ronneberger et al. 2015). Accordingly, they built their network by embedding the ConvLSTM to detect lane lines from the information of many frames rather than a single one.

In Yousri et al. (2021), five state-of-the-art FCN-based architectures: SegNet, Modified SegNet, U-Net, ResUNet (Diakogiannis et al. 2020), and ResUNet++ (Jha et al. 2019) were trained for the host lane detection. The performance evaluation of the trained models was done visually and quantitatively by considering lane detection a binary semantic segmentation task. The output results showed robust performance while testing the models on challenging road scenes with various lighting conditions and dynamic scenarios.

2.2 Data augmentation

Image classification and segmentation have a wide scale of data augmentation techniques that vary from simple ones to learned strategies. Image data augmentation techniques can be classified generally into three main approaches: basic image manipulations, deep learning, and meta-learning techniques (Shorten and Khoshgoftaar 2019). However, basic image manipulations are preferably used in supervised learning, which requires images and their corresponding labels. These manipulations include applying the geometrical transformation, kernel filters, color space transformation, mixing, and random erasing. The sharpening and blurring filters are widely used to augment images (LeCun 2015).

The authors of Zhang et al. (2022) have proposed an approach to enhance the image pixels by using a convolution neural network (CNN) and robust deformed denoising convolution neural network (RDDCNN) by extracting the noise features, combining the rectified linear unit and batch normalization to improve the ability of the RDDCNN to learn, and eventually having a clean image. In Tian et al. (2023), the authors have used wavelet transform and multistage convolution neural networks in image denoising. In situations where the noise level is uncertain, the initial stage of the process dynamically adapts the network parameters, which can be advantageous. The second stage uses the residual blocks and wavelet transformations to suppress the noise. The third stage is removing the redundant features by using residual layers. A limitation of this paper that it was difficult to have clean reference images. The authors of Tian et al. (2022) have proposed a heterogeneous architecture of super-resolution convolution neural network to generate a higher quality image. This architecture contained a complementary convolution block and a symmetric group convolution block in parallel such that it improved the channels’ internal and external relations. On the other hand, color space transformation allows altering the color distribution among images. There are many ways of representing digital images, like the grayscale representation, which is considered simple. However, color is a significant distinctive feature for some tasks. A study done in Jurio et al. (2010) showed the performance of the image segmentation task on HSV (Hue, Saturation, and Value) and other color space representations.

Rotation, flipping, and cropping are considered standard geometrical transformation augmentation (GTA) techniques. The simple rotational and flipping affine transformations are commonly used to increase the size of the training data by changing the orientation of the image content. The main advantage of using such simple techniques is their easy implementation and capability to augment the data along with their corresponding annotations. Accordingly, GTA is the desired data augmentation technique for supervised learning. In vision-based lane detection, much previous research adopted the rotational and flipping processes as in Zou et al. (2019); Yousri et al. (2021); Jaipuria et al. (2020). GAN was proposed in Goodfellow et al. (2014), and it is considered a powerful data augmentation method that tries to produce unprecedented images. In Li et al. (2022), the authors used least squares GAN to augment a small dataset for which increased their testing accuracy by 3.57%, but there was class imbalance because of some rare instability incidents. In Ghafoorian et al. (2018), the authors employed a generative adversarial network that consisted of a generator and a discriminator for the lane detection task. An embedding-loss GAN was proposed to segment driving scenes in their approach semantically. The advantage of this model was that the output lanes were thin and accurate. Many variants of GAN have been proposed to improve the quality of the output synthetic images (Radford et al. 2015). Yet, the GAN approaches are hard and can be used for road scenes because the generated images have no corresponding annotations.

3 Proposed approach

3.1 Perspective transformation

In real-world road scenes, all the objects are three-dimensional. This means that any captured image from such scenes is considered a geometrical representation mapped from 3D to 2D (Brynte et al. 2022). This mapping is done through an arbitrary window and camera lens and placed onto the 3D space of the real world. This change in the geometrical description is done through geometric transformation. In digital systems, a 2D image mapped from the real world is represented by discrete values (x, y) on the image space (Marzougui et al. 2020).

When a camera captures, or the human eye views a scene, the objects of the real world are mapped with sizes different from their actual ones. The distant objects appear smaller than the near objects due to the concept of perspective (Do 2013). By transforming the perspective, we can realize the same scene at different positions and orientations. Accordingly, perspective transformation can be very beneficial when dealing with images in computer vision, as many warped versions can be generated from just one image. In this approach, perspective transformation is used as a data augmentation technique to mimic various road frontal images taken for the same scenes with different viewpoints. Consequently, the number of images that will be used to train the FCN-based architectures on the ego-lane detection task and their corresponding labels will be increased. Shortly, perspective transformation is considered a projection mapping that turns the projection of an image into another unique visual plane. This pointwise transformation can be formulated as follows (Li et al. 2017; Do 2013):

and,

where \({P}^{\;src}_{i}\) is a source point of quadrangle vertices on the original image represented by the coordinates \({x}_{src_{i}}\) and \({y}_{{src_{i}}}\). While \({P}^{\;dst}_{i}\) is a destination point of quadrangle vertices on the warped image represented by the coordinates \({x}_{dst_{i}}\) and \({y}_{{dst_{i}}}\), where \({x_{dst_{i}} = {{x^{'}}_{dst_{i}}} / {w}^{'} }\) and \(y_{dst_{i}} = {{y^{'}}_{dst_{i}}} / {w}^{'} \). The 3\(\times \)3 matrix found in Eq. (1) is the perspective transformation matrix or map matrix, which can turn \({P}^{\;src}_{i}\) into a new point \({P}^{\;dst}_{i}\) and they are homogeneous coordinates. Perspective matrix should only have 8 free elements as generally \(m_{33}\) = \(w_{i}\) =1 after normalization. Now by setting the source and destination points, the perspective transformation matrix can be calculated. To find the 8 unknown elements, 8 equations are needed to solve. Accordingly, four pairs of points represented as quadrangle vertices are used as follows: \(P_{1}=(0, 0)\), \(P_{2}=(W, 0)\), \(P_{3}=(0, H)\), \(P_{4}=(W, H)\).

Where H and W are the height and width of the image. Figure 1 shows the four points of the quadrangle vertices on an image. By changing the coordinates of these points, the source and destination points can be defined. Each output pixel is calculated by multiplying with the given perspective matrix to perform a perspective transformation for an input image.

As the aim is to train supervised deep learning models, the same perspective matrix must be applied to both the road image and its corresponding label. Figure 2 represents a sample image and its corresponding label from the nuScenes dataset.

In this study, after applying the perspective transformation, the output image has the same size as the input image. This is because any points outside the original image boundary are filled with black pixels of zero values and will need further cropping. In Table 1, the destination points of the eight adopted perspectives are illustrated. For a better realization of the destination points, Figure 3 shows them as quadrangle vertices. Many other destinations point can be used for more perspectives. However, only the eight perspectives will be used in the augmentation process in this study. The perspective transformation of the input image is performed by multiplying each output pixel with the given perspective matrix, and the output image has the same size as the input image, which is \(1280\times 720\).

Figure 4 shows the eight warped images of the same road frame of Figure 2 after applying the PTA algorithm. The warped images shown in the figure obviously give a better realization of the ego-lane features without missing any needed information. Hence, this is the main motivation behind adopting PTA as a data augmentation method in the context of ego-lane detection.

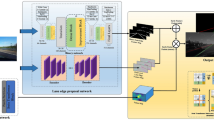

3.2 Used architectures

Ego-lane detection is recognized as a semantic segmentation task of the lane and off-lane classes in this work. The existing CNNs are powerful visual networks that are capable of learning hierarchies of features. However, FCN-based architectures have become the cornerstone of deep learning applied in the context of semantic segmentation. A full convolution network is an architecture that only performs convolution operations by either downsampling or upsampling without dense layers. Many developed FCN-based architectures can be trained supervisely to produce a pixel-to-pixel semantic segmentation map, making them easily trained in an end-to-end manner. SegNet (Badrinarayanan et al. 2017), and U-Net (Ronneberger et al. 2015) are successful architectures that can perform the semantic segmentation task efficiently. Both networks are based on the encoder-decoder architecture, where The encoder block and decoder block are fully convolutional networks.

In SegNet, each encoder produces a set of feature maps by performing convolution with a filter bank. Each convolution operation is followed by batch normalization then an element-wise rectified linear unit (ReLU) is applied before the max-pooling. The decoder stage of SegNet consists of a set of upsampling and convolution layers where each upsampling layer corresponds to a max-pooling one in the encoder part. The resultant upsampled maps are then convolved to produce a high-dimensional feature representation. At last, a softmax classifier is used for a pixel-wise prediction to produce the final segmentation.

On the other hand, the architecture of U-Net has a contracting path and an expansive path. The contracting path represents the encoder part where each convolution operation is followed by applying the ReLU activation function and max-pooling operation for downsampling without any batch normalization (Ozturk et al. 2020). At each downsampling step, the number of feature channels is doubled. In contrast, the decoding in the expansive path includes feature maps upsampling and then up-convolution operations. At each step, concatenating with the corresponding cropped feature map from the contracting path occurs, halving the number of feature channels to restore the original number of pixels. A convolution operation is performed at the final layer, which maps the segmentation output.

The architecture of the U-net is symmetric, easy to be implemented, fast, and produces a precise segmentation of images. Accordingly, many recent architectures have been using and developing U-Net with some modifications or added blocks. According to Jha et al. (2019), ResUNet++ significantly outperforms U-Net. The ResUNet++ architecture is based on ResUNet (Diakogiannis et al. 2020), which stands for the Deep Residual U-Net, where it takes advantage of both the deep residual learning and U-Net. ResUNet++ architecture contains one stem block followed by three encoder blocks, Atrous Spatial Pyramid Pooling (ASPP), and three decoder blocks. For experimenting with PT as a data augmentation method while supervisely training DL models on the ego-lane detection task, SegNet, U-Net, and ResUNet++ are utilized here.

3.3 Ensemble learning

Lane detection is a challenging semantic segmentation task due to the diversity of lane kinds, road illumination conditions, and possible dynamic scenarios. Accordingly, training just one model that can robustly and efficiently detect the ego-lane under all the possible road conditions is challenging (Yousri et al. 2021; Chao et al. 2019). Also, another challenging part of the lane detection task is the class imbalance nature within the images, where the lane information class makes up only a small portion of the image. Eventually, this can lead up to further misclassifications that must be considered. So here, we can take advantage of ensemble learning which is considered a powerful machine learning technique which learns by gaining knowledge by ensembling the results of several machine learning algorithms to have enhanced generalization than a single machine learning algorithm (Li and Yang 2017). Several models can be trained separately, and their prediction can then be combined or averaged or get the majority vote to produce a better overall prediction by relying on more realizations rather than just one (Dietterich 2002; Cutler et al. 2012). Bagging, boosting, and stacking are the main classes of ensemble learning methods (Dietterich 2002). Stacking, which is the type we use in this study, is about training different models on the same data to learn how to best combine predictions.

In the context of ego-lane detection, the main motivation for adopting an ensemble prediction of models is to overcome the challenges addressed earlier, and hence better and more accurate pixel classification can be achieved. However, to achieve effective ensemble learning, it is important to carefully select and train the models and then pick the type of ensembling technique according to the problem (Sagi and Rokach 2018). The selection of the network architectures to be trained is better to be based on diversity. Moreover, an essential criterion while selecting is to guarantee that the trained models will output the same predictive type. In this work, three state-of-the-art semantic segmentation architectures: SegNet, U-Net, and ResUNet++, will be used in ensemble learning, and their predictions will be merged and averaged to produce the final prediction.

4 Experiments and results analysis

This section presents the steps for validating the reliability of the proposed augmentation approach, including the implementation details, the datasets used to evaluate the performance of FCN-based models, and analyzing the results obtained quantitatively and qualitatively. The proposed approach phases were executed using Python programming language and on Google Colaboratory (Carneiro et al. 2018).

4.1 Datasets description

This work considers FCN-based architectures that can be supervisely trained to produce a pixel-to-pixel semantic segmentation map by identifying each output pixel as a lane or non-lane. Accordingly, images with their corresponding ego-lane/host lane ground truth annotations are crucial for the training and validation of such architectures. In this study, two datasets are involved:

-

1.

The extended version from nuScenes dataset (Caesar et al. 2020) that was developed and used in Yousri et al. (2021) where some of the frontal camera frames were labeled based on a proposed sequence of traditional computer vision techniques for the ego-lane detection task.

-

2.

Open-source benchmark KITTI dataset (Geiger et al. 2013) which has lane estimation benchmark comes with ground truth annotations for the ego-lane.

The extended version from the nuScenes dataset is the only chosen data for training FCN-based architecture for the training stage. The reason behind excluding KITTI from the training stage is the unavailability of a sufficient number of labeled frames. NuScenes dataset originally contains 1.4 million RGB (Red Green Blue) images taken via 6 different cameras mounted on the autonomous vehicle. However, the extended version that was developed in Yousri et al. (2021) contains only a subset of the frontal images of the original nuScenes dataset along with their corresponding ego-lane annotations. The images within this extended version have diverse road illumination conditions, lane lines kinds, and dynamic scenarios. At the same time, the reliability of the generated labels was qualitatively validated in Yousri et al. (2021) by visual examination. Accordingly, upon this rich annotated dataset, the PTA method is applied to train the three FCN architectures. For the testing stage, both nuScenes and KITTI are utilized as this expands the diversity of the testing experiments. Using KITTI in performance testing has two advantages. The first is that there have been many previous studies that tested their lane detection methods on the KITTI benchmark. Thus, we can by this means, easily compare the results of our work with theirs. Secondly, achieving high performance upon testing on images of another dataset will intuitively assure that no overfitting occurred due to the PTA augmentation.

4.2 Training stage setup

In this study, all the FCN-based models are supervisely trained end-to-end to detect the ego-lane in various road conditions. In the training stage of all the experiments that will be conducted later, we randomly split the training data into a training set and a validation set, where the ratio between them respectively is chosen to be 0.9 : 0.1. The number of the training epochs is set to be 100, and it is fixed along with all the experiments for the different architectures and data sizes. To improve the overall performance of the deep learning models, it is crucial to obtain the optimal parameters during the training stage. This can be done by carefully defining the loss function(s) suitable for the semantic segmentation task. In this approach, a hybrid loss function using the Binary Cross-Entropy and the Dice Loss is utilized, and they can be mathematically presented as Jadon (2020):

where v is the predicted value by the prediction model and \({\hat{v}}\) is the actual value.

4.3 Results and discussion

During the training stage of the three deep learning architectures, the images are sampled to a resolution of 265 x 128. The hypothesis that we want to test during the conducted experiments is mainly that the PTA method can boost the performance of FCN-based models in the ego-lane detection task more than the standard GTA. The \(90^o\) rotation in both directions is adopted in this study as the standard GTA technique. The reasons behind choosing it are:

-

1.

It is commonly used with the lane detection task.

-

2.

Easy to be implemented.

-

3.

It eliminates a very important feature about the ego-lane lines: their frontal and central position on the image.

Accordingly, no overfitting is likely to occur as in flipping, which nearly produces the same road scene. The first step in investigating and understanding the impact of using perspective transform for augmenting images in the context of lane detection tasks is to compare models trained on augmented data using PTA versus the other adopted augmentation method. The experiments include three different deep learning architectures to expand and strengthen the evaluation. The conducted experiments included training the three detectors: SegNet, U-Net, and ResUNet++ for 100 epochs on:

-

Three different data sizes.

-

Three different augmented datasets using PTA, GTA, and both of them.

By performing these 27 experiments, we can comprehensively evaluate the effect of using PTA as an augmentation method. In PTA trials, the augmentation was done for every image by a factor of 2 from the adopted perspectives presented earlier. As ego-lane detection is recognized here as a semantic segmentation task of two classes, the dice coefficient is the main evaluation metric. Table 2 shows the validation results of the training stage.

By looking at the table, we can observe that all the models trained on the augmented dataset using the PTA method result in higher performances on the different data sizes. Also, it is obviously noticeable that by using the PTA method, the validation dice of ResUNet++ outperforms both U-Net and SegNet by nearly \(0.3\%\) and \(1.2\%\), respectively, on data size equals 2000 before the augmentation. As the data size increases, the quantitative variations among the validation results decrease due to the reduction of the augmentation impact generally (Shorten and Khoshgoftaar 2019). The scarcity of data results in a lack of samples essential for training the models sufficiently, and thus the importance of augmentation appears in this context. This behavior was addressed before in Diakogiannis et al. (2020) that the FCNs use contextual information to increase their performance. Thus, the impact of the PTA method can prove this concept as it does not eliminate the important information from the images, unlike the standard GTA methods. The behavior of ResUNet++ during the experiments shows that it gets affected noticeably by the augmentation process and that it outperforms the other two architectures. Jha et al. concluded before in their study results (Jha et al. 2021) the considerable effect of data augmentation on ResUNet++ performance in the segmentation task. Accordingly, we investigated the effect of the PTA method on ResUNet++ architecture by using training data augmented by different augmentation factors from the adopted perspectives. Figure 5 shows the validation dice coefficient and loss of ResUNet++ trained on a dataset of 3000 images augmented by factors of 2, 4, 6, and 8.

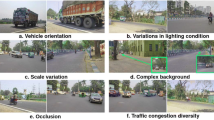

For testing the models, different road conditions, as shown in Table 3 were chosen for investigating quantitatively to what extent the PTA method boosts the detection performance of the three models trained on data of size equal to 6000 and augmented by a factor of 2. The road environment is dynamic and challenging in nature due to the diversity of possible scenarios, including changes in illumination, road condition, and lane line types. Consequently, testing the performance of the models in such diverse driving situations is crucial to evaluate their robustness. For every category found in Table 3, 200 images were used in the testing. The testing set contains all the needed condition classes to investigate the performance of the models in the most complex and harsh environments. The results shown in Table 3 prove that the models trained on images augmented using the PTA method outperform those augmented using the standard GTA method. During the performance analysis on the testing sets, ResUNet++ showed the most reliable ego-lane detection task performance in most testing classes. At the same time, U-Net achieved the highest dice coefficients in the double lane lines and the crowded and curved road conditions. This gives intuition that U-Net can adapt more robustly in detecting the ego-lane in scenes with fine and complex details. Furthermore, by studying the testing results, the models show insufficient performance in conditions like dark, rural, and crowded scenes. The model has an average processing time for lane detection equals 21.3 ms/frame for sunny condition, 21.9 ms/frame for cloudy weather, and 23.1 ms/frame for dark condition.

Even though we have achieved using PTA high dice coefficients in the previous testing trial, we still need to improve the performance robustness of ego-lane detection. A high-performance detector is supposed to be able to cope with nearly all the driving situations that are likely to occur on a daily basis. Bad illumination, like in the case of the dark night scenes and the shadows of rural roads, is very challenging. At the same time, harsh rainy scenes are considered a serious limitation for accurate lane detection due to the bad lighting, the distracting water droplets, and the wet road with a mirrory and distorting effect. Also, the dynamic road scenarios affect the robustness of lane detection. These scenarios can be in the form of distorting ego-lane obstacles like a preceding car or pedestrians. Moreover, the distortion can be in the form of complex lane positions or lane lines that are hard to adapt to, like curvy, zigzag, or multi-line ones. Ensemble learning can provide an excellent solution for achieving the best possible results in the addressed harsh road environments. By stacking and averaging the predictions of the models used in the previous testing experiment, a more robust performance in the ego-lane detection task can be attained, as shown in Table 4.

The results show that the performance for finding the ego-lane detection has been noticeably boosted. The ensemble learning approach has increased the testing results on the challenging rural scenes by nearly \(7\%\). Also, for the dark and rainy scenes, the dice coefficient has reached 0.986 and 0.987, respectively. Moreover, the ensemble prediction is considered robust in the dynamic scenarios of distorting and crowdy road environments. This gives intuition regarding the reliability of the ensemble predictions of models trained on data augmented using the PTA method. The usefulness of PTA can also be evaluated by training new models on other different data augmentation techniques. However, this can be done in other contexts rather than lane detection, like object detection. But when it comes to lane detection, augmentation methods like random noise adding or color space transformation can dramatically affect the models. The affine transformation-like rotation used in this study is commonly used. The usage of the PTA method gives out enhanced results for lane detection tasks. Thus, this study can be considered a weak supervision approach as it utilizes a limited number of images with their corresponding weak labels for efficiently training FCN-based models.

In the context of semantic segmentation, the visual examination is an excellent choice to evaluate the overall performance qualitatively. The prediction is supposed to represent a precise segmentation of the input image into two different parts in the coarse and fine levels. Accordingly, the qualitative evaluation is important in the context of the ego-lane detection task as it reveals the prediction quality that is crucial for advanced driver assistance systems (ADAS). This study aims to achieve the most precise detection after training the models on a dataset of big size and augmented using PTA. Thus, we consider evaluating the performance in terms of the fine level, seeking that the models can precisely process the details under challenging road conditions. There should not be a noticeable space between the green prediction and the ego-lane lines during the visual evaluation. Figure 6 shows the high performance of the ensemble prediction on very challenging scenes, including bad illumination, rain, shadows, different complex lane orientations, and various dynamic scenarios. As a future work, time series prediction as in Xing et al. (2022); Xiao et al. (2022); Xing et al. (2022) could be taken into consideration.

4.4 Comparing with others

KITTI Lane benchmark (Geiger et al. 2013) is commonly used for evaluating different ego-lane detection methods. Accordingly, we utilize it in testing our work to compare the result of our ensemble learning approach with other state-of-the-art lane detection methods. The KITTI Lane benchmark contains 95 sample images collected in various urban scenes with ground truth. The comparison is done in terms of the dice coefficient, which is equivalent to the F1-measure or MaxF in the binary segmentation context. The proposed approach is compared with FCN_LC (Mendes et al. 2016), Up-Conv-Poly (Oliveira et al. 2016), RBNet (Chen and Chen 2017), and NVLaneNet (Wang et al. 2020) as shown in Table 5 as the highest Dice Coefficient is 91.86% for the NVLaneNet (Wang et al. 2020), while the proposed approach has Dice Coefficient of 96.04%.

5 Conclusion

A data augmentation approach based on perspective transformation is developed in this paper. It supervisely trains different FCN-based architectures on ego-lane detection. Through several experiments for training three FCN-based models: SegNet, U-Net, and ResUNet++, we demonstrate that perspective transformation data augmentation effectively enhances the performance of the models. The results are evaluated quantitatively and qualitatively by testing the models on various illumination and road conditions, different lane line kinds, and diverse, dynamic scenarios. Realistic images of roads can be generated through perspective transformation augmentation, including the necessary labels, without missing important features. Eventually, ensemble learning is used to boost the ego-lane detection task in the most challenging road conditions. The performance result is compared with other previous lane detection methods on the KITTI Lane benchmark dataset, which resulted in a dice coefficient equal to 96.04%, which exceeds the other papers’ results.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Badrinarayanan V, Kendall A, Cipolla R (2017) Segnet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell 39(12):2481–2495

Brynte L, Bökman G, Flinth A, Kahl F (2022) Rigidity preserving image transformations and equivariance in perspective. arXiv preprint arXiv:2201.13065

Caesar H, Bankiti V, Lang A.H, Vora S, Liong V.E, Xu Q, Krishnan A, Pan Y, Baldan G, Beijbom O (2020) nuscenes: A multimodal dataset for autonomous driving. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 11621–11631

Carneiro T, Da Nóbrega RVM, Nepomuceno T, Bian G-B, De Albuquerque VHC, Reboucas Filho PP (2018) Performance analysis of google colaboratory as a tool for accelerating deep learning applications. IEEE Access 6:61677–61685

Chao F, Yu-Pei S, Ya-Jie J (2019) Multi-lane detection based on deep convolutional neural network. IEEE Access 7:150833–150841

Chen Z, Chen Z (2017) Rbnet: A deep neural network for unified road and road boundary detection. In: International conference on neural information processing. Springer, pp 677–687

Cutler A, Cutler D, Stevens J (2012) Random forests. In: Ensemble machine learning. Springer, New York, pp 157–175

Diakogiannis FI, Waldner F, Caccetta P, Wu C (2020) Resunet-a: a deep learning framework for semantic segmentation of remotely sensed data. ISPRS J Photogramm Remote Sens 162:94–114

Dietterich TG et al (2002) Ensemble learning. Handbook Brain Theory Neural Netw 2(1):110–125

Do Y (2013) On the neural computation of the scale factor in perspective transformation camera model. In: 2013 10th IEEE International conference on control and automation (ICCA). IEEE, pp 418–423

Gad GM, Annaby AM, Negied NK, Darweesh MS (2020) Real-time lane instance segmentation using segnet and image processing. In: 2020 2nd Novel intelligent and leading emerging sciences conference (NILES). IEEE, pp 253–258

Geiger A, Lenz P, Stiller C, Urtasun R (2013) Vision meets robotics: the kitti dataset. Int J Robot Res 32(11):1231–1237

Ghafoorian M, Nugteren C, Baka N, Booij O, Hofmann M (2018) El-gan: Embedding loss driven generative adversarial networks for lane detection. In: Proceedings of the European conference on computer vision (ECCV) workshops, pp 1–11

Goodfellow IJ, Shlens J, Szegedy C (2014) Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572

Han D, Liu Q, Fan W (2018) A new image classification method using cnn transfer learning and web data augmentation. Expert Syst Appl 95:43–56

Jadon S (2020) A survey of loss functions for semantic segmentation. In: 2020 IEEE conference on computational intelligence in bioinformatics and computational biology (CIBCB). IEEE, pp 1–7

Jaipuria N, Zhang X, Bhasin R, Arafa M, Chakravarty P, Shrivastava S, Manglani S, Murali VN (2020) Deflating dataset bias using synthetic data augmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops, pp 772–773

Jha D, Smedsrud PH, Riegler MA, Johansen D, De Lange T, Halvorsen P, Johansen HD (2019) Resunet++: An advanced architecture for medical image segmentation. In: Proceedings of IEEE international symposium on multimedia (ISM), pp 225–2255

Jha D, Smedsrud PH, Johansen D, de Lange T, Johansen HD, Halvorsen P, Riegler MA (2021) A comprehensive study on colorectal polyp segmentation with resunet++, conditional random field and test-time augmentation. IEEE J Biomed Health Inform 25(6):2029–2040

Jurio A, Pagola M, Galar M, Lopez-Molina C, Paternain D (2010) A comparison study of different color spaces in clustering based image segmentation. In: International conference on information processing and management of uncertainty in knowledge-based systems. Springer, pp 532–541

Khosla C, Saini BS (2020) Enhancing performance of deep learning models with different data augmentation techniques: a survey. In: 2020 International conference on intelligent engineering and management (ICIEM). IEEE, pp 79–85

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444

Li Y, Yang Z (2017) Application of eos-elm with binary jaya-based feature selection to real-time transient stability assessment using pmu data. IEEE Access 5:23092–23101. https://doi.org/10.1109/access.2017.2765626

Li X, Li S, Bai W, Cui X, Yang G, Zhou H, Zhang C (2017) Method for rectifying image deviation based on perspective transformation. In: IOP conference series: materials science and engineering, vol. 231. IOP Publishing, p 012029

Li Y, Zhang M, Chen C (2022) A deep-learning intelligent system incorporating data augmentation for short-term voltage stability assessment of power systems. Appl Energy 308:118347. https://doi.org/10.1016/j.apenergy.2021.118347

Marzougui M, Alasiry A, Kortli Y, Baili J (2020) A lane tracking method based on progressive probabilistic hough transform. IEEE Access 8:84893–84905

Mendes CCT, Frémont V, Wolf DF (2016) Exploiting fully convolutional neural networks for fast road detection. In: 2016 IEEE international conference on robotics and automation (ICRA). IEEE, pp 3174–3179

Neven D, De Brabandere B, Georgoulis S, Proesmans M, Van Gool L (2018) Towards end-to-end lane detection: an instance segmentation approach. In: 2018 IEEE intelligent vehicles symposium (IV). IEEE, pp 286–291

Oliveira GL, Burgard W, Brox T (2016) Efficient deep models for monocular road segmentation. In: 2016 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, pp 4885–4891

Ozturk O, Saritürk B, Seker DZ (2020) Comparison of fully convolutional networks (fcn) and u-net for road segmentation from high resolution imageries. Int J Environ Geoinform 7(3):272–279

Perez L, Wang J (2017) The effectiveness of data augmentation in image classification using deep learning. arXiv preprint arXiv:1712.04621

Radford A, Metz L, Chintala S (2015) Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434

Ronneberger O, Fischer P, Brox T (2015) U-net: Convolutional networks for biomedical image segmentation. In: Proceedings of international conference on medical image computing and computer-assisted intervention, pp 234–241

Sagi O, Rokach L (2018) Ensemble learning: a survey. Wiley Interdiscip Rev 8(4):1249

Shorten C, Khoshgoftaar TM (2019) A survey on image data augmentation for deep learning. J Big Data 6(1):1–48

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556

Srivastava S, Narayan S, Mittal S (2021) A survey of deep learning techniques for vehicle detection from uav images. J Syst Architect 117:102152

Sun P, Chen H (2014) Lane detection and tracking based on improved hough transform and least-squares method. In: International symposium on optoelectronic technology and application 2014: image processing and pattern recognition, vol. 9301. International Society for Optics and Photonics. p 93011

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1–9

Tian C, Zhang Y, Zuo W, Lin C-W, Zhang D, Yuan Y (2022) A heterogeneous group cnn for image super-resolution. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/tnnls.2022.3210433

Tian C, Zheng M, Zuo W, Zhang B, Zhang Y, Zhang D (2023) Multi-stage image denoising with the wavelet transform. Pattern Recogn 134:109050. https://doi.org/10.1016/j.patcog.2022.109050

Wang Z, Ren W, Qiu Q (2018) Lanenet: real-time lane detection networks for autonomous driving. arXiv preprint arXiv:1807.01726

Wang X, Wang K, Lian S (2020) A survey on face data augmentation for the training of deep neural networks. Neural Comput Appl 32(19):15503–15531

Wang X, Qian Y, Wang C, Yang M (2020) Map-enhanced ego-lane detection in the missing feature scenarios. IEEE Access 8:107958–107968

Wong SC, Gatt A, Stamatescu V, McDonnell MD (2016) Understanding data augmentation for classification: when to warp? In: 2016 International conference on digital image computing: techniques and applications (DICTA). IEEE, pp 1–6

Xiao Z, Zhang H, Tong H, Xu X (2022) An efficient temporal network with dual self-distillation for electroencephalography signal classification. 2022 IEEE international conference on bioinformatics and biomedicine (BIBM). https://doi.org/10.1109/bibm55620.2022.9995049

Xing H, Xiao Z, Qu R, Zhu Z, Zhao B (2022) An efficient federated distillation learning system for multitask time series classification. IEEE Trans Instrum Meas 71:1–12. https://doi.org/10.1109/tim.2022.3201203

Xing H, Xiao Z, Zhan D, Luo S, Dai P, Li K (2022) Selfmatch: robust semisupervised time- series classification with self- distillation. Int J Intell Syst 37(11):8583–8610. https://doi.org/10.1002/int.22957

Yousri R, Elattar MA, Darweesh MS (2021) A deep learning-based benchmarking framework for lane segmentation in the complex and dynamic road scenes. IEEE Access 9:117565–117580

Zhang Q, Xiao J, Tian C, Chun- Wei Lin J, Zhang S (2022) A robust deformed convolutional neural network (cnn) for image denoising. CAAI Trans Intell Technol. https://doi.org/10.1049/cit2.12110

Zheng Q, Yang M, Tian X, Jiang N, Wang D (2020) A full stage data augmentation method in deep convolutional neural network for natural image classification. Discrete Dyn Nat Soc 2020

Zou Q, Jiang H, Dai Q, Yue Y, Chen L, Wang Q (2019) Robust lane detection from continuous driving scenes using deep neural networks. IEEE Trans Veh Technol 69(1):41–54

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest or competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yousri, R., Moussa, K., Elattar, M.A. et al. A novel data augmentation approach for ego-lane detection enhancement. Evolving Systems 15, 1021–1032 (2024). https://doi.org/10.1007/s12530-023-09533-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12530-023-09533-w