Abstract

Online labor platforms have been criticized for fueling precarious working conditions. Due to their platform-bound reputation systems, switching costs are prohibitively high and workers are locked in to the platforms. One widely discussed approach to addressing this issue and improving workers’ position is the portability of reputational data. In this study, we conduct an online experiment with 239 participants to test the effect of introducing reputation portability and to study the demand effect of imported ratings. We find that the volume of imported ratings stimulates demand, although to a lesser degree than onsite ratings. Specifically, the effect of imported ratings corresponds to about 35% of the effect of onsite ratings. The results imply the possibility of unintended cross-market demand concentration effects that especially favor workers with high rating volumes (“superstars”).

Introduction

Online labor platforms (Footnote 1) have become a ubiquitous means for coordinating the supply and demand of work, particularly for well-partitionable and remotely processable tasks, commonly referred to as crowdwork (De Stefano, 2016; Vallas & Schor, 2020). Platforms serve as intermediaries connecting clients and workers, leveraging internet and mobile technology to coordinate geographically dispersed participants and decentralized work processes (Howcroft & Bergvall-Kåreborn, 2019; Kenney & Zysman, 2019). Over the last decade, the number of workers earning a substantial part of their income via online labor platforms has been continuously rising (Stephany et al., 2021).

With more and more individuals earning a substantial portion of their income via platforms, such work arrangements are transforming permanent jobs into atypical, project-based work (Fernández-Macías et al., 2023). Despite the opportunities of flexible platform work for some (e.g., older persons who are at a disadvantage in traditional labor markets (Huang et al., 2020)), platforms have increasingly been criticized for fueling precarious working conditions (Cutolo & Kenney, 2021), with severe implications for workers’ social welfare (Footnote 2).

Working conditions on digital labor platforms are often precarious as they involve temporary, part-time, and remote work arrangements (Fieseler et al., 2019). The outsourcing of entrepreneurial risks to workers results in instability (Wood et al., 2019b) which is further exacerbated by unclear procedures favoring clients over workers (Fieseler et al., 2019; Zhen et al., 2021). Workers’ self-employed status leads to unpredictable hours, volatile earnings, and limited social protection (Berg, 2018; Degryse, 2016), resulting in lower earnings compared to equivalent employees (Bergvall‐Kåreborn & Howcroft, 2014; Felstiner, 2011). These dynamics create monopolistic structures and increase worker dependence on platforms as switching entails high costs (Cutolo & Kenney, 2021; Dube et al., 2020).

On platforms, elevated switching costs stem from platform-specific reputation systems, which compile transaction history data such as star ratings, written feedback, and completed jobs. Clients heavily rely on this reputational data for hiring decisions, as it offers valuable insights into workers’ skills and performance (Wood-Doughty, 2016). Due to its high significance, the non-portability of reputation imposes prohibitive costs when workers switch to a new platform as this would require rebuilding their reputation from scratch (Ciotti et al., 2021). The workers’ dependence enables platforms to exploit their position of power via unclear governance structures and information asymmetry (Gegenhuber et al., 2022; Trittin-Ulbrich et al., 2021).

Consequently, policymakers in the European Union have recognized that the current non-portability of reputation is locking workers in to the platform, impeding competition between platforms (European Union, 2022; Lambrecht & Heil, 2020). Beyond that, these systems hamper innovation and lead to excessive rents for incumbent firms (Farrell & Klemperer, 2007; Katz & Shapiro, 1994). Therefore, the academic and public debates center around the challenges associated with this development.

One widely discussed approach to improving working conditions in the platform economy is to make workers’ reputational data portable between platforms (Basili & Rossi, 2020; Ciotti et al., 2021; Hesse et al., 2022). In theory, allowing workers to transfer their reputational data from one platform to another would reduce lock-in effects. By substantially decreasing switching costs, workers would gain independence and bargaining power (Ciotti et al., 2021). With workers’ mobility across platforms being significantly enhanced, competition among platforms for workers intensifies, and the power dynamics between workers and platforms become more balanced. This shift would provide an incentive to cease exploitative practices and improve working conditions in order to remain competitive. Therefore, the European Union has suggested studying the “mechanisms for reputation portability, assessing its advantages and disadvantages and technical, legal and practical feasibility” (Hausemer et al., 2017, p. 131). These calls have been answered by a steady stream of research, indicating the trust-building potential and, thus, the effectiveness of such ported reputation for workers (Hesse et al., 2022; Otto et al., 2018; Teubner et al., 2020).

Nonetheless, the overall implications of reputation portability remain ambiguous in the academic debate. On the one hand, cross-platform reputation transfer can alleviate lock-in effects and reduce vulnerability to platform exploitation (Ciotti et al., 2021). This may foster heightened competition and innovation among platforms (Engels, 2016). Additionally, reputation portability enhances economic efficiency by lowering multi-homing costs and entry barriers for workers with established reputations on other platforms. On the other hand, the anticipated technical challenges may result in high implementation costs, which could be passed on to consumers (Swire & Lagos, 2013). Furthermore, platforms may intensify data collection while having weaker incentives for data protection (Krämer & Stüdlein, 2019).

Despite consensus regarding positive effects on the macro level, large-scale reputation portability may entail risks, particularly in concentrated markets with a few “superstars” and numerous less reputable users. Thus, “short-circuiting” reputation systems could harm the majority of workers as demand concentration and inequality rise. This aspect, however, has been neglected in previous research on reputation portability (Hesse & Teubner, 2020).

Research objective

In this paper, we study how the availability of transferred reputation, particularly the volume of imported ratings, affects clients’ choice of workers. To do so, we conducted an online experiment (n = 239) in which subjects chose from four workers for whom we systematically varied whether ratings from another platform were present. In addition, we varied the rating volume of both onsite and imported ratings. In a nutshell, we find that the volume of imported ratings has a positive demand effect. Hence, we contribute to a more comprehensive understanding of the demand effect of ported reputation, which extends beyond the impact of rating valence.

Complementing our findings with empirical data suggests the emergence of demand concentration dynamics. Specifically, workers who are already successful profit most from importing their reputational data. With that, we contribute to the emerging social welfare computing literature by shedding light on potential adverse effects of data portability in the field. Moreover, our study reveals that imported rating volume has a demand-increasing effect equivalent to roughly 35% of the effect of platform-specific ratings. Thus, we contribute to a more differentiated notion of ported reputation. Additionally, our study holds practical implications for workers, platform providers, and policymakers.

The remainder of this paper is organized as follows: The next section reviews related work on hiring decisions in crowdwork, with particular focus on the role of trust, reputation, and reputation portability. The following section is dedicated to hypothesis development, where we employ the theoretical lens of signaling theory as a conceptual point of departure. Subsequently, we outline the study’s method and present the results. Finally, we discuss our findings’ theoretical and practical implications and critically reflect on the study’s limitations and opportunities for future research.

Background and related work

To contextualize our study within the existing literature, we review prior research on hiring decisions in crowdwork, focusing on the significance of trust and reputation as well as the transferability of reputation. We also briefly illustrate the legal context in which our study is embedded, as the legal framework significantly shapes how theoretical findings would be implemented in practice.

Hiring decisions on platforms

Most platforms position themselves as neutral technology providers rather than employers and categorize workers as self-employed contractors (Kuhn & Maleki, 2017). However, some parallels to the traditional employer-employee relationship can be drawn due to the platforms’ extensive level of control over workers (Meijerink & Keegan, 2019). To be more precise, platforms employ mechanisms and governance practices to organize the labor market by defining the users’ scope of action, thus taking over the tasks of the traditional employer (L. Chen et al., 2015; Kirchner & Schüßler, 2019). Hiring follows a dual selection process whereby workers are first ranked by algorithms based on their qualifications, skills, experience, and rating scores and subsequently chosen by clients (Waldkirch et al., 2021; Wood et al., 2019a). Based on algorithmic decisions, poor-performing worker accounts are deactivated, analogous to human resource practices for dismissals (Rosenblat & Stark, 2016). Lastly, platforms administer payments and fees and manage workers’ performance through appraisal from clients that form workers’ reputations (Kenney & Zysman, 2019; Zhen et al., 2021).

Nonetheless, traditional hiring practices are built on the foundation of long-term employer-employee relationships, whereas platform workers are often engaged for short-term, project-based work. Consequently, many traditional practices for recruiting, such as interviews, assessment centers, and psychological tests, are less applicable to online hiring (Batt & Colvin, 2011). Therefore, clients’ hiring decisions on platforms have been subject to extensive research.

Core to the hiring decision are clients’ expectations of workers’ future performance, which are based on observable and latent information on profiles (Kokkodis & Ransbotham, 2023). Some of these observable attributes are less obviously linked to work quality. One stream of research found that demographic features impact workers’ likelihood of being hired. For instance, clients prefer workers who are closer in culture, language, and time zones (Hong & Pavlou, 2017; Ren et al., 2023). In contrast, workers from developing countries (Mill, 2011), foreigners (Galperin & Greppi, 2017), people of color, and less attractive workers (W. Leung et al., 2020) are less likely to be hired. Conversely, women are preferably hired (Chan & Wang, 2018), especially for female-dominated jobs (Leung & Koppman, 2018). Apart from workers’ characteristics, fast applications (Kokkodis et al., 2015), as well as long membership on a platform (Ren et al., 2023), improve workers’ probability of being hired.

However, other factors have been found to be more predictive of workers’ hiring success. For one, the quantity of successfully completed tasks serves as a robust indicator of workers' experience and their work quality (A. Kathuria et al., 2021). Similarly, clients are more likely to rehire workers with whom they have previously collaborated (Kokkodis et al., 2015). Research on skill certifications, however, is rather inconsistent, with studies demonstrating positive effects on hiring chances (A. Kathuria et al., 2021; Moreno & Terwiesch, 2014; Sengupta et al., 2023), whereas others found negative effects (Chan & Wang, 2018). As for any transaction, clients also consider costs carefully. Often, clients initially hire cheaper workers, but when these turn out to be unsuccessful, they become less price-sensitive and rely on reputation as a quality indicator (Kokkodis & Ransbotham, 2023). In fact, prior research has shown that clients are willing to trade off price and reputation and accept higher bids from more reputable workers (Moreno & Terwiesch, 2014). In this context, the bid format also plays a role whereby open bid auctions increase workers’ likelihood of being chosen (Hong et al., 2016). Beyond these factors, many researchers have consistently emphasized the high significance of reputation in online hiring (Banker & Hwang, 2008; Gandini et al., 2016; Kokkodis & Ipeirotis, 2016; Lin et al., 2018; Pallais, 2014; Tadelis, 2016; Yoganarasimhan, 2013).

The significance of reputation on platforms

Since the rise of e-commerce marketplaces such as eBay in the mid-1990s, trust-building and reputation mechanisms on platforms have been subject to extensive research (Resnick et al., 2000). Particularly in the last decade, where online labor platforms have received much scholarly attention, an ever-growing number of publications investigate trust and reputation in this context (e.g., Lehdonvirta et al., 2019; Weber et al., 2022; Wood & Lehdonvirta, 2022).

To mitigate uncertainty and risk for clients who cannot verify workers’ skills and intentions in advance (Saxton et al., 2013), platform operators employ a broad range of mechanisms that foster trust between clients and workers (Hesse et al., 2020; Resnick & Zeckhauser, 2002). Apart from allowing workers to personalize their profile, for instance, by uploading a profile picture, most platforms use some form of rating score (Kirchner & Schüßler, 2019; Kornberger, 2017). After a completed transaction, clients rate the worker’s overall performance (commonly on a scale of 1 to 5 stars). On some platforms, clients are invited to provide more nuanced feedback by rating the worker across different sub-categories (e.g., accuracy, communication, speed) and/or to provide written feedback. This reputation not only indicates workers’ quality and trustworthiness (Gandini et al., 2016; Wood et al., 2019a), but also serves as the basis for the platform’s ranking system (Rosenblat & Stark, 2015). As a result, workers with higher ratings appear in more prominent positions in the search results and are hence hired more frequently (Gerber & Krzywdzinski, 2019; Kuhn & Maleki, 2017). Given that clients cannot observe and compare all available workers on the platform (Ringel & Skiera, 2016), they depend heavily on such ratings and rankings (Jabagi et al., 2019; Rahman, 2021; Wood & Lehdonvirta, 2022).

Platforms also create career systems based on ratings (Kirchner & Schüßler, 2019; Kornberger, 2017). Such systems accumulate the available information on workers (e.g., average rating, number of completed tasks) to sort workers into different categories. Workers who are performing extraordinarily well can climb the career ladder and become “super users” (Idowu & Elbanna, 2022). This special status is often indicated by a recognizable badge (Hui et al., 2018; Jabagi et al., 2019). Consequently, the super-user status drives demand in the direction of these workers as it signals high levels of skills, experience, and reliability (Sailer et al., 2017; Seaborn & Fels, 2015). However, these reputation systems are, as of today, platform-bound.

Legal perspective on reputation portability

The importance of reputation in the platform economy has been recognized beyond the academic discourse and reached the broader public sphere by becoming relevant in regulatory debates. In 2018, the European Union took first steps to establish data portability with the General Data Protection Regulation (GDPR). The GDPR provides individuals with the right to obtain their personal data from a data controller in a format conducive to straightforward transfer to another controller. The objective is to empower individuals with greater control over their data and to facilitate its movement between different service providers or platforms (European Union, n.d.). Nonetheless, as ratings and reviews are provided by reviewers, workers are not the legal owners of their reputational data, rendering Article 20 of the GDPR not applicable (Graef et al., 2013).

The European Data Governance Act, introduced in 2020, pursues a similar goal as the GDPR and establishes the mechanisms and frameworks that enable data sharing among corporations, individuals, and the public sector. This legislation eliminates obstacles to data access while incentivizing data creation by ensuring a fair level of control for those who generate the data (European Commission, 2022). However, the enforcement of this right could face potential hindrances arising from legal restrictions, particularly the confidentiality of algorithms, and technical barriers (Brkan & Bonnet, 2020).

Apart from the regulatory approaches on the European level, reputation portability has also become of interest in regulatory debates on a national level. For instance, ministers of the former German government demanded that platform workers must be able to take their reviews to another platform (Lambrecht & Heil, 2020). Likewise, the German Trade Union Confederation demanded reputation portability as a mechanism for workers to circumvent platform lock-in (DGB, 2021).

Effectiveness and boundary conditions of reputation portability

While researchers have examined the topic of data and reputation portability from different angles, such as from a legal perspective (e.g., Graef et al., 2013; V. Kathuria & Lai, 2018; Zanfir, 2012) or from a technical standpoint (e.g., Bozdag, 2018; Turner et al., 2021; Urquhart et al., 2018), this section specifically focuses on studies exploring the psychological impact on users.

Many scholars have investigated the potential of transferred reputation within the context of sharing platforms. For instance, Zloteanu et al. (2018) observed positive effects of ported reputation on users’ booking intention on a fictitious accommodation platform. In line with their findings, Hesse et al. (2020) corroborated that imported ratings increase consumers’ booking intentions. However, by displaying both onsite and imported ratings in their experiment, they showed that discrepancies between onsite and imported rating scores negatively affect booking intentions. In another study, Hesse et al. (2022) experimentally examined the trust-building effect of varying rating levels of imported ratings on a fictional accommodation platform. Their findings indicate that importing reputation can strengthen trust in the complementor, but only if it is of sufficiently high valence. Otherwise, it can have detrimental effects on perceptions of trustworthiness. Using a ride-sharing scenario, Otto et al. (2018) concluded that consumers exhibit higher willingness to pay and trust towards potential drivers if those drivers’ Airbnb ratings were available.

In summary, studies have consistently demonstrated the trust-building and demand-increasing power of cross-platform reputation portability. Yet, few studies have specifically addressed reputation portability in online labor markets. One study that investigated within-platform reputation portability in online labor markets was conducted by Kokkodis and Ipeirotis (2016). The authors developed a model to predict the future performance of workers based on job category-specific feedback on workers. However, they did not directly assess the effect of ported reputation on clients. Teubner et al. (2020) presented prospective consumers with profiles across three common application areas (accommodation sharing, ride/mobility services, and commodity exchange). They, too, found evidence for the trust-building potential of star ratings across platforms. By varying the type of platforms involved, they showed that a high source-target fit (i.e., high comparability of the review-importing and -exporting platform) is crucial to building trust. Therefore, importing ratings from eBay to Uber, even if high in valence, can actually lower consumers’ trust. Corten et al. (2023) conducted an experiment, revealing that the effectiveness of ported reputation is contingent on the source-target fit of job types and the presence of onsite ratings. Specifically, they demonstrated that transferred ratings can increase trust, but only when the imported ratings refer to the same type of job that workers are applying for and when onsite ratings are absent.

In conclusion, there is a consistent stream of research indicating the positive impact of reputation portability on trust and purchase intentions. However, most of these studies focused on sharing platforms in the digital economy. Despite some similarities between sharing and labor platforms, there are also considerable differences that limit the direct application of these findings to the realm of crowdwork. With this study, we therefore seek to fill the gap by investigating how reputation portability affects demand for platform workers.

Hypothesis development

In the following, we develop our hypotheses on the demand effect of rating volume. Building on signaling theory, we focus specifically on the demand effect of imported reputational data volume (i.e., ratings that originate from another platform; H1), given that onsite ratings are available. Moreover, we examine the effect of imported ratings in comparison to onsite ratings (H2). Given the numerous contextual factors that may influence this effect, we limit the scope of this study to onsite and imported ratings for similar services (i.e., high fit between source and target platform). Furthermore, since rating valence has been subject to extensive research and the vast majority of ratings on most platforms is positive (Einav et al., 2016; Filippas et al., 2020), we focus on high rating scores.

From the clients’ perspective, information asymmetries pose uncertainty, hindering the realization of transactions. In the context of this study, we consider two types of information asymmetries. First, clients may not be able to easily assess whether unknown workers are trustworthy. Concerns may emerge, for instance, regarding their intentions, accountability, or misuse of personal data. Second, service providers may differ concerning their quality. Potential risks for clients are low levels of skill, experience, or capacity (or complete lack thereof). On this basis, we draw on signaling theory to conceptualize “imported” reputation as a signaling device, actively deployed by workers to demonstrate their trustworthiness and competence (Mavlanova et al., 2012; Spence, 1973).

In this vein, imported ratings address both types of the aforementioned information asymmetries. For one, ratings indicate workers’ trustworthiness as they reflect that no severe misconduct has occurred as, otherwise, the worker would have been reported and suspended from the platform (Cui et al., 2020). In addition, they act as quality signals by providing external references for workers’ skills, expertise, and competence. This enables prospective clients to form more accurate expectations of workers’ future performance (Spence, 1973).

However, clients can only effectively distinguish skilled from unskilled workers if signals are more costly to obtain for low-quality than for high-quality workers (Dunham, 2011; Spence, 1973). Otherwise, if signals were not substantially more costly for low-quality workers, any worker (irrespective of their quality) would invest in the signal. For unskilled workers, delivering high-quality results is more time-consuming and comes at higher opportunity and mental costs than for skilled workers. Thus, high ratings can be interpreted as costly quality signals that only highly skilled workers will obtain.
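The separating condition described above can be made concrete with a minimal sketch. It assumes a single signal with a common benefit and quality-specific acquisition costs; the function name and all numbers are hypothetical and serve only to illustrate the logic of Spence-style signaling, not a model estimated in this study.

```python
def is_separating(benefit: float, cost_high_quality: float, cost_low_quality: float) -> bool:
    """Return True if only high-quality workers find the signal worth acquiring."""
    high_invests = benefit > cost_high_quality  # signal pays off for skilled workers
    low_invests = benefit > cost_low_quality    # signal pays off for unskilled workers
    return high_invests and not low_invests


# Hypothetical payoffs in arbitrary units (assumptions for illustration only).
informative = is_separating(benefit=10, cost_high_quality=4, cost_low_quality=15)
uninformative = is_separating(benefit=10, cost_high_quality=4, cost_low_quality=6)
print(informative, uninformative)
```

In the second call the signal is cheap enough for both worker types, so every worker would invest in it and clients could no longer tell the types apart.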

If no onsite ratings are available, the effect of imported ratings can be assumed to be straightforward: they serve as substitutes for native ratings that help workers overcome the “cold start problem” (Hesse et al., 2022; Teubner et al., 2020). The effect when both onsite and imported ratings are simultaneously present, however, is less clear.

From one perspective, imported ratings provide another source of information that allows clients to form a more comprehensive picture of workers. While ratings, regardless of their origin, fundamentally reflect workers’ quality, imported ratings further demonstrate broader working experience, transferable skills, and adaptability in different work settings. Therefore, especially for commissions involving high stakes, considering all signals reduces the risk of employing an unsuitable worker.

Yet, it remains unclear whether clients merely pay attention to the imported rating score or also take the underlying volume into account. Ultimately, if imported ratings are consistent in valence, a high number of them does not provide any more information than a low number.

On the other hand, a high imported rating volume could indicate authenticity and consistent work quality. For one, it is more difficult to fake a considerable number of ratings, whether by counterfeiting accounts, making use of third-party service providers, or asking friends and family (Dann et al., 2022). Beyond that, high numbers of positive ratings mitigate the risk of fluctuating quality of work results. In this vein, a high rating volume reduces noise and proneness to outliers of the average total rating score. This rise in statistical reliability, in turn, crucially depends on the rating volume.
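The dependence of statistical reliability on rating volume can be illustrated with the standard error of a mean, which shrinks with the square root of the number of ratings. The following is a minimal sketch, assuming independent ratings and a per-rating standard deviation of 0.4 stars (an illustrative value, not taken from the data). The two volumes, 76 and 1475, are the smallest and largest rating volumes used in the stimulus material described in the Method section.

```python
import math


def standard_error(rating_sd: float, n_ratings: int) -> float:
    """Standard error of an average rating based on n independent ratings."""
    return rating_sd / math.sqrt(n_ratings)


# Assumption: per-rating standard deviation of 0.4 stars (illustrative only).
ASSUMED_SD = 0.4

se_low_volume = standard_error(ASSUMED_SD, 76)     # smallest stimulus volume
se_high_volume = standard_error(ASSUMED_SD, 1475)  # largest stimulus volume

print(f"n=76:   SE = {se_low_volume:.3f} stars")
print(f"n=1475: SE = {se_high_volume:.3f} stars")
```

Under these assumptions, the average score of the high-volume worker is several times more precise than that of the low-volume worker, even if both display the same score.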

Lastly, a common phenomenon on platforms is that ratings (regardless of origin) are inflated as extremely high ratings are most prevalent (Filippas et al., 2020; Kokkodis, 2021; Weber et al., 2022), decreasing the informativeness of these scores (Filippas et al., 2022). Therefore, when rating valence is not a useful indicator due to low variation, consumers rely on rating volume instead (Etzion & Awad, 2007). Accordingly, we hypothesize that demand increases with higher imported rating volume:

- H1: The volume of imported ratings has a positive effect on how much demand a worker receives.

In a scenario where both onsite and imported ratings are available, the question arises whether the demand effect of the two rating types is of the same magnitude. While both kinds of ratings generally reflect workers’ quality and trustworthiness, their origin may influence how clients perceive these signals.

First, regarding asymmetries concerning workers’ trustworthiness, it might be unclear how strictly each platform (and the users thereon) deals with misconduct. For instance, platforms could differ regarding the technologies they employ to detect fake accounts or in terms of how users report violations of general terms and conditions. Assuming risk aversion, clients are likely to make conservative estimates and expect other platforms to have less strict policies and norms. As a result, we assume imported ratings to be less effective in building trust.

Second, although we focus on similar services between source and target platform, services are still likely to differ to some extent between platforms, for instance, with regard to scope, specialization, and/or complexity (e.g., the umbrella term “programming” can involve distinct services such as scripting and automation, mobile and desktop development). Therefore, the different specializations of the rating-importing and -exporting platform may attract heterogeneous user bases, comprising workers with particular skills and clients with different needs and expectations. Thus, the costs and required skills for building reputation could differ from platform to platform, even when the offered applications are similar. Moreover, if the distribution (or rather skewness) of rating scores differs between platforms, comparability is limited (Teubner et al., 2020; Teubner & Dann, 2018). This would imply that imported ratings have a weaker impact on demand than onsite ratings:

- H2: The positive demand effect of imported ratings is weaker than that of onsite ratings.

Method

To evaluate our hypotheses, we devise an online experiment, systematically testing situations with and without cross-platform reputation portability against each other.

Treatment design

Specifically, we employ a 2 × 2 between-subjects design with the two binary treatment variables platform (A or B) and reputation portability (yes or no). On each platform, we consider a situation in which an initial search has been run and returns four workers (representing the top search results) with different rating volumes and valence. There is a partial overlap between the two platforms in that two workers are active on both platforms A and B. Figure 1 illustrates this setup.
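The four cells of this design can be enumerated as in the following minimal sketch. The assignment rule is an assumption: the text specifies a between-subjects design but not the mechanism, so simple random assignment with equal probability is used here for illustration, and all names are hypothetical.

```python
import itertools
import random

PLATFORMS = ("A", "B")
PORTABILITY = (False, True)  # False: onsite ratings only; True: imported ratings shown

# The 2 x 2 design yields four treatment conditions.
CONDITIONS = list(itertools.product(PLATFORMS, PORTABILITY))


def assign_condition(rng: random.Random) -> tuple[str, bool]:
    """Assign one participant to exactly one condition (assumed: uniform at random)."""
    return rng.choice(CONDITIONS)


rng = random.Random(42)  # fixed seed so the illustration is reproducible
assignments = [assign_condition(rng) for _ in range(8)]
for platform, portable in assignments:
    print(f"platform={platform}, reputation portability={'yes' if portable else 'no'}")
```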

Task and stimulus material

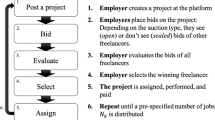

In the experiment, participants were instructed to imagine that they wanted to hire a freelancer to develop and program a mobile application, assuming a job duration of 8–12 h. To find a suitable freelancer for the job, they registered on a fictional crowdwork platform (either “iowork” or “coderr”). They were assured that the platform was similar to existing platforms for tech freelancers—such as Fiverr, Upwork, or Freelancer. Participants were then asked to imagine that they had conducted an initial search, specifying search criteria for a freelancer. Next, the platform showed them four suitable freelancers with varying levels of experience, customer satisfaction, and hourly wages (see Fig. 2 for exact wording).

Participants then saw one of the two platforms on which they were asked to choose one of the four listed workers. In the conditions with imported reputation, participants were informed that two of the four workers also have experience on the respective other, similar platform. Beyond their reputation, workers were represented by profile image, name, city, and hourly wage. The selection of elements as well as the overall visual appeal was informed by the design of the platforms Upwork and Fiverr. Specifically, we varied the following features on workers’ profiles:

- Rating volume (Based on the actual distribution of the rating count of 19,000 Fiverr workers which we retrieved using a proprietary web scraper. As with every platform, there is a fraction of non-rated users, and we therefore focus on users with at least one rating. To reflect that platforms typically show highly and well-rated workers on top of the search results, we selected the 70%, 74%, 78%, 82%, 86%, 90%, 94%, and 98% quantiles of rating volume from this distribution, yielding 76, 96, 124, 167, 234, 344, 588, and 1475 ratings, respectively.)
- Names (Michael C., Stacey P., Steven H., and Sarah T.)
- Cities (Boston, MA, Denver, CO, Portland, OR, and San Francisco, CA)
- Wages ($70/h to $85/h)
- Rating scores (4.88, 4.90, 4.91, and 4.93 out of 5.00 stars)

These features were based on common observations from Fiverr and similar platforms, introducing some, but not too large variance. All workers are deliberately US-based, in order to avoid additional noise regarding language, general wage levels, or culture (that would need additional control). Profile photos were sourced from the website ThisPersonDoesNotExist.com (2 male, 2 female) and slightly blurred for the experiment. Note that participants were told that the workers’ profile photos were deliberately blurred. This was done to not direct too much attention to the profile images which would, otherwise, play a predominant role in worker selection. Moreover, the website’s background was also stylized as it was not relevant for the choice (see Fig. 3).
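The quantile-based selection of rating volumes described in the feature list can be sketched as follows. The scraped Fiverr data are not reproduced here, so the sketch uses synthetic, heavy-tailed placeholder counts; the distribution parameters and the nearest-rank quantile definition are assumptions, and the resulting numbers will not match the eight volumes reported above.

```python
import math
import random

# Quantile levels (in percent) reported for the stimulus material.
QUANTILE_PERCENTS = [70, 74, 78, 82, 86, 90, 94, 98]


def empirical_quantile(sorted_values: list[int], percent: int) -> int:
    """Nearest-rank quantile: smallest value with at least `percent`% of the data at or below it."""
    rank = max(1, math.ceil(percent * len(sorted_values) / 100))
    return sorted_values[rank - 1]


# Placeholder data (assumption): synthetic rating counts for 19,000 rated workers.
rng = random.Random(0)
rating_counts = sorted(
    max(1, round(rng.lognormvariate(3.5, 1.5))) for _ in range(19_000)
)

selected_volumes = [empirical_quantile(rating_counts, p) for p in QUANTILE_PERCENTS]
print(selected_volumes)
```

Picking only upper quantiles mirrors the idea that search results surface workers with comparatively many ratings, while the spacing of the levels still produces a wide spread of volumes because the distribution is strongly right-skewed.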

The sequences of all elements were determined individually at random. Importantly, in the treatment conditions with reputation portability, workers’ reputation from the primary platform is complemented by the reputation they hold on the respective other platform. Figure 3 shows one possible combination (including imported reputation).
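One way to picture the randomization of profile elements is the sketch below. It is an assumption-laden illustration rather than the experiment’s actual code: the four wage levels, the rule for drawing imported volumes, and the function name `build_profiles` are all hypothetical. Only the names, cities, rating scores, volume levels, and the fact that two of four workers carry imported reputation are taken from the text.

```python
import random

NAMES = ["Michael C.", "Stacey P.", "Steven H.", "Sarah T."]
CITIES = ["Boston, MA", "Denver, CO", "Portland, OR", "San Francisco, CA"]
SCORES = [4.88, 4.90, 4.91, 4.93]
VOLUMES = [76, 96, 124, 167, 234, 344, 588, 1475]
WAGES = [70, 75, 80, 85]  # assumed levels within the stated $70/h-$85/h range


def build_profiles(rng, with_import):
    """Return four worker profiles whose attributes are shuffled independently."""
    names = rng.sample(NAMES, k=4)
    cities = rng.sample(CITIES, k=4)
    wages = rng.sample(WAGES, k=4)
    scores = rng.sample(SCORES, k=4)
    shuffled_volumes = rng.sample(VOLUMES, k=8)
    onsite, spare = shuffled_volumes[:4], shuffled_volumes[4:]
    # Assumption: exactly two randomly chosen workers import ratings,
    # drawn from the volume levels not used as onsite volumes.
    importers = set(rng.sample(range(4), k=2)) if with_import else set()
    return [
        {
            "name": names[i],
            "city": cities[i],
            "wage": wages[i],
            "score": scores[i],
            "onsite_volume": onsite[i],
            "imported_volume": spare[i] if i in importers else 0,
        }
        for i in range(4)
    ]


profiles = build_profiles(random.Random(1), with_import=True)
```

Seeding the generator per participant would make each displayed combination reproducible for later analysis.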

Measurements

Our main target variable is participants’ worker choice (i.e., which worker is selected for the task). Moreover, we included realism and attention checks throughout the experiment. After the main stage of the experiment, we measured common demographic control variables (e.g., age, gender, nationality). Beyond that, we surveyed participants on additional instruments, such as reasons for their hiring decision (see Footnote 3), their general disposition to trust, and their experience with crowdwork and other online platforms.

Procedure and sample

We recruited 245 participants via the online platform Prolific.com (Palan & Schitter, 2018). After being forwarded to the experiment website, participants were briefed on the scenario and gave informed consent. The experiment instructions and scenario briefing are provided in Fig. 2 above. Each participant was exposed to only one of the four treatment conditions (between-subjects design). Overall, 6 participants did not complete the experiment or failed to answer the attention check correctly. Thus, the final sample size consisted of 239 observations. Experiment completion took 2 min and 45 s on average, and participants were compensated by an equivalent of 12 GBP/hr. Participants’ age ranged from 20 to 79 years with a mean of 40.8 years (median = 39 years). Participants were mostly located in the UK (82%), the US (5%), or European countries (13%; Ireland, France, Germany, Spain). Overall, 67% of participants were female.

To assess whether participants considered the scenario plausible, we added a realism check asking whether “the described and displayed scenario could actually occur on crowdwork platforms.” On a 9-point Likert scale, this measure averaged 7.2, indicating fairly high realism.

Randomization check

An important feature of any experiment is that it properly randomizes subjects into treatment groups (Nguyen & Kim, 2019). To check whether this was the case, we consider whether there are significant differences among participants across treatments. To do so, we ran a set of ordinary least squares (OLS) and logit regressions using the participant-specific variables age, gender (male = 0, female = 1), realism (i.e., how realistic participants deemed the scenario), time taken (in seconds), and participants’ familiarity with online platforms as dependent variables. The independent variables were binary dummies indicating the two main treatment conditions (platform: iowork = 0, coderr = 1; reputation import: no = 0, yes = 1).

Subsequently, we conducted joint F-tests to examine the relationship between participants’ characteristics and the treatment factors. More precisely, we conducted an analysis of variance (ANOVA) for the dependent variables participants’ age, perceived realism, time taken, and familiarity with online platforms. For the logit regression, modeling participants’ gender, we employed an analysis of deviance. Table 1 summarizes the findings.

As expected, all key variables are statistically indistinguishable across treatment groups. Thus, there was no systematic bias of participants across treatment conditions for the variables tested. We hence conclude that the randomization process was successful.
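The logic of such a balance check can be illustrated with a simplified stand-in. The sketch below does not reproduce the OLS-based joint F-tests reported here; instead, it swaps in a permutation test on the one-way ANOVA F statistic across the four treatment cells, which needs no distributional tables. The participant ages are randomly generated placeholders, and both function names are hypothetical.

```python
import random
from statistics import mean


def f_statistic(groups):
    """One-way ANOVA F statistic: between-group over within-group variance."""
    all_values = [x for group in groups for x in group]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    ss_between, ss_within = 0.0, 0.0
    for group in groups:
        group_mean = mean(group)
        ss_between += len(group) * (group_mean - grand_mean) ** 2
        ss_within += sum((x - group_mean) ** 2 for x in group)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def permutation_p_value(groups, n_permutations=500, seed=0):
    """Share of random re-assignments with an F at least as large as observed."""
    rng = random.Random(seed)
    observed = f_statistic(groups)
    pooled = [x for group in groups for x in group]
    sizes = [len(group) for group in groups]
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        reshuffled, start = [], 0
        for size in sizes:
            reshuffled.append(pooled[start:start + size])
            start += size
        if f_statistic(reshuffled) >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly positive.
    return (hits + 1) / (n_permutations + 1)


# Placeholder ages for four treatment cells (NOT the study data).
data_rng = random.Random(7)
age_groups = [[data_rng.randint(20, 79) for _ in range(15)] for _ in range(4)]
p_value = permutation_p_value(age_groups)
```

A large p-value would be read the same way as in the text: no evidence that the characteristic differs systematically across treatment groups.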

Results

Descriptive summary

As a first step of analysis, we consider how often workers have been selected based on the visual elements on their profiles. Most importantly, rating volume seems to have a very distinct effect, where more ratings are associated with higher selection frequency. Specifically, this holds for both platforms (“iowork” and “coderr”) as well as for both reputation portability conditions (see Fig. 4). On both platforms, importing a substantial volume of ratings can markedly increase a worker’s chances of being selected (167 + 234 and 124 + 1,475).

Moreover, we observe that the other attributes also have an effect on selection frequency. Specifically, female workers as well as workers with higher rating scores and lower wages are selected more often. Table 2 summarizes these findings.

Mixed multinomial logit regression model

To corroborate the above observations statistically, we conduct a set of mixed multinomial logit regressions (MMLR). A Hausman-McFadden test confirms independence of irrelevant alternatives (Hausman & McFadden, 1984). Thus, we proceed to the MMLR, where the chosen worker (i = 1, 2, 3, 4) is modeled as the dependent variable. We estimate the choice with alternative-specific independent variables, including worker’s gender, city, wage, onsite rating score, onsite rating volume, imported rating volume, and gender match (i.e., same gender across worker and subject/client). Moreover, we included three individual-specific control variables, namely, subjects’ age, gender, and familiarity with crowdwork platforms.

The statistical assessment confirms that the volume of imported ratings has a significant and positive effect on workers’ probability of being chosen, in support of H1 (β = 0.001, SE = 0.0002, p < 0.001). Despite the presence of onsite ratings, ratings originating from other platforms can increase demand. Moreover, higher onsite rating volume and onsite rating scores (i.e., valence) as well as lower wages increase workers’ likelihood of being chosen. Furthermore, subjects select female and same-gender workers more often. The worker’s city did not have any significant effect (Boston being used as the benchmark in the regression). Table 3 summarizes the results of the MMLR (see Footnote 4).

Wald test and marginal effects

To evaluate H2, we run a two-sided Wald test, confirming that the coefficients of onsite rating volume and imported rating volume significantly differ from each other (p < 0.001). Subsequently, to assess which effect is weaker, we compare the marginal effects of onsite and imported rating volume on the probability of being selected. Specifically, we estimate the marginal probability effect for a hypothetical observation (i.e., subject) with sample mean characteristics according to Croissant (2020). For the sake of readability, we multiplied the marginal effects by a factor of 1000. Table 4 presents the effect of an increase of 1000 onsite ratings on a worker’s likelihood of being selected and Table 5 for an increase in imported ratings, respectively. In both tables, the rows display how a worker’s probability of being selected changes if their rating volume increased by 1000. The columns indicate for which worker the additional ratings apply. Comparing Table 4 and Table 5 shows that for all workers, an increase in onsite ratings has a larger impact than an increase in imported ratings. This analysis supports H2; the demand-increasing effect of imported ratings is in fact weaker than that of onsite ratings.
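For intuition on how such a table of marginal effects arises, consider a plain conditional logit with a single coefficient β on an alternative-specific variable. There, the effect of raising worker j’s variable on worker i’s choice probability is β·p_i·(1 − p_i) for i = j and −β·p_i·p_j otherwise. The sketch below computes this matrix. It is an illustration under assumptions, not the estimation code: the utilities are hypothetical, only β = 0.001 (the rounded coefficient of imported rating volume) comes from the text, and the reported effects at sample means may have been computed differently.

```python
import math


def choice_probabilities(utilities):
    """Logit (softmax) choice probabilities for a list of utilities."""
    peak = max(utilities)  # subtracting the maximum avoids overflow
    weights = [math.exp(u - peak) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]


def marginal_effects(utilities, beta):
    """effects[i][j] = change in worker i's probability per unit increase
    in worker j's variable (rows: affected worker, columns: changed worker)."""
    p = choice_probabilities(utilities)
    n = len(p)
    return [
        [beta * p[i] * ((1.0 if i == j else 0.0) - p[j]) for j in range(n)]
        for i in range(n)
    ]


# Hypothetical utilities of the four workers at some reference point.
utilities = [0.1, 0.3, 0.0, 0.6]
effects = marginal_effects(utilities, beta=0.001)
# Scale by 1000 to express the effect of 1000 additional ratings.
effects_per_1000 = [[1000 * e for e in row] for row in effects]
```

Each column sums to zero: whatever probability one worker gains from additional ratings is lost by the other three.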

Choice model

Additionally, we were interested in how much weaker the effect of imported ratings is compared to onsite ratings. To quantify this difference, we now estimate the relative weight of ratings originating from a secondary platform. To do so, we take a slightly different perspective and consider worker-specific variables only. Particularly, we consider each of the four workers’ probabilities of being selected in each of the 2 × 2 = 4 treatment conditions. Naturally, all four workers’ probabilities of being selected (within a given treatment condition) will add up to 100%. Moreover, we focus on the workers’ rating volumes as this constitutes the core feature of the study. For each treatment condition and worker, Table 6 shows how often the worker was selected empirically, that is, in the experiment.

To estimate the underlying function of participants’ hiring decisions, we model these empirically observed probabilities employing a probabilistic choice function. This function is based on the workers’ ratings on the primary platform and, if applicable, those imported from the secondary platform. The model has two parameters. We first conflate the onsite (\({r}_{ons}\)) and imported ratings (\({r}_{imp}\)) into a single reputation value of \(v={r}_{ons}+\gamma {r}_{imp}\). If no ratings are imported, \({r}_{imp}\) is zero. Hence, \(v\) denotes the overall reputation of a worker, consisting of onsite and imported ratings (if applicable). Moreover, we apply a linear weighting for the different types of ratings, with the parameter \(\gamma\) representing the relative weight of imported ratings in hiring decisions. Now, worker \(i\)’s reputational value \({v}_{i}\) is linked to their likelihood of being selected (\({p}_{i}\)) using the logit choice function

\[{p}_{i}=\frac{{e}^{\lambda {v}_{i}}}{\sum_{j=1}^{4}{e}^{\lambda {v}_{j}}}\]

where the second parameter \(\lambda \ge 0\) describes the model’s selectivity (McFadden, 1973). For \(\lambda =0\), each worker is selected with equal probability (i.e., 0.25). For increasing values of \(\lambda\), the worker with the highest reputational value is favored increasingly often. In the limit (\(\lambda \to \infty\)), the most reputable worker, even by the smallest margins (e.g., the worker with only a single rating more than the second most reputable worker), is selected with certainty by all clients.

Based on the experimental data, we now estimate the model’s two parameters \(\lambda\) (logit selectivity) and \(\gamma\) (weight of imported ratings). To maximize the fit between our model and the observed data, we minimize the discrepancy between the actual choices of subjects and the predicted choices from our model, for all workers across the four treatment conditions. More precisely, we compute the values of \(\lambda\) and \(\gamma\) that minimize the error, that is, the sum of the squared differences between the empirically observed hiring probability (\({p}_{actual}\)) and the model’s predicted hiring probability (\({p}_{model}\)). This yields \({\lambda}^{*}=0.0013\) and \({\gamma}^{*}=0.3522\). Thus, the effect of imported rating volume is equal to about 35% of the effect of onsite rating volume. Figure 5 illustrates the error for varying values of \(\gamma\) (at the fixed, optimal \(\lambda\)).
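A least-squares fit of this kind can be sketched with a simple grid search. The optimization routine actually used is not stated, so the grid search is an assumption, as are the function names and the four treatment designs below. The “observed” shares are generated from the model itself with known parameters (they are not the values of Table 6), so that the sketch can show the procedure recovering them. The choice rule assumes selection probabilities proportional to exp(λ·v).

```python
import math


def choice_probabilities(values, lam):
    """Logit choice probabilities for reputation values and selectivity lam."""
    scaled = [lam * v for v in values]
    peak = max(scaled)  # subtracting the maximum avoids overflow
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def squared_error(conditions, lam, gamma):
    """Sum of squared gaps between observed and predicted shares."""
    error = 0.0
    for onsite, imported, observed in conditions:
        values = [r_ons + gamma * r_imp for r_ons, r_imp in zip(onsite, imported)]
        predicted = choice_probabilities(values, lam)
        error += sum((a - m) ** 2 for a, m in zip(observed, predicted))
    return error


def grid_search(conditions, lambdas, gammas):
    """Return the (lambda, gamma) pair with the smallest squared error."""
    candidates = ((lam, gam) for lam in lambdas for gam in gammas)
    return min(candidates, key=lambda pair: squared_error(conditions, *pair))


# Hypothetical designs: (onsite volumes, imported volumes) per condition.
designs = [
    ([76, 124, 234, 588], [0, 0, 0, 0]),
    ([96, 167, 344, 1475], [0, 0, 0, 0]),
    ([76, 124, 234, 588], [0, 344, 0, 1475]),
    ([96, 167, 344, 1475], [588, 0, 234, 0]),
]
TRUE_LAMBDA, TRUE_GAMMA = 0.0013, 0.35  # used only to synthesize shares
conditions = []
for onsite, imported in designs:
    values = [o + TRUE_GAMMA * i for o, i in zip(onsite, imported)]
    conditions.append((onsite, imported, choice_probabilities(values, TRUE_LAMBDA)))

lambdas = [k / 10000 for k in range(0, 31)]  # 0.0000 .. 0.0030
gammas = [k / 100 for k in range(0, 101)]    # 0.00 .. 1.00
best_lambda, best_gamma = grid_search(conditions, lambdas, gammas)
```

The conditions without imported ratings pin down the selectivity parameter, while the conditions with imports identify the relative weight of imported ratings. Plotting `squared_error` over `gammas` at the fitted selectivity gives an error curve of the kind shown in Fig. 5.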

Discussion

As of today, workers’ reputations are platform-bound, creating lock-in effects with several negative consequences for workers and market competition (Ciotti et al., 2021; Kokkodis, 2021). Therefore, advocates for reputation portability emphasize its positive effects, such as lowering market entry barriers, mitigating lock-in effects, and increasing competition among platforms (European Commission, 2019). In the following, we discuss our results in view of prior literature and consider our study’s theoretical implications with respect to competition between workers. We then proceed to provide practical implications for different stakeholder groups. Lastly, we address the limitations of our research and propose starting points for further research.

Summary of results and contributions

In this study, we show that an increase in the number of imported ratings positively affects demand (H1). Our findings are in line with the main tenets of signaling theory: Higher imported rating volume reflects experience and provides a more accurate reference of workers’ skills and, to some extent, trustworthiness. Thus, information asymmetries and the risk for potential clients are reduced. With this, we deepen the understanding of reputation systems and contribute to the literature that previously focused on the demand effects of rating valence (Gandini et al., 2016; Lin et al., 2018; Lukac & Grow, 2021). Our study shows that, beyond rating valence, volume is also a decisive factor in clients’ decision-making process. This is in line with findings of Dann et al. (2022) who observed that rating volume and valence interact and affect the credibility of rating scores. The authors found that higher rating volumes are generally associated with higher trust, with one exception: A high number of ratings casts doubt on their credibility if the ratings consist exclusively of 5.0 stars. In our experiment, we observe that large imported rating volumes of high, yet sub-perfect rating scores signal experience and competence, enabling workers to attract more demand. Thus, our results indicate that the interplay between rating valence and volume conceptualized by Dann et al. (2022) may also affect imported ratings.

In addition, we complement prior studies that mostly examined the effect of imported ratings when no onsite ratings were available (Otto et al., 2018; Teubner et al., 2020). A notable exception is the study of Hesse et al. (2022) who investigated the simultaneous presence of onsite and imported ratings—while varying rating volume. They found that more reviews drive trust, yet the observed effect is small compared to our findings. This, however, could be due to a difference in experimental designs: Hesse et al. (2022) systematically varied the rating valence of both onsite and imported ratings, using the whole range between 1.0 and 5.0 stars. In contrast, the rating scores in our experiment were drawn from a much narrower range (i.e., 4.88 to 4.93 stars). As such, the effect of rating volume and score variance on clients’ choices might be intertwined, whereby higher score variance could decrease the relative importance of rating volume.

Interestingly, our results contrast findings of Corten et al. (2023) who observed that imported ratings are only effective in building trust when onsite ratings are absent. In our study, however, we find a demand-increasing effect of imported ratings despite the presence of onsite ratings. We presume that this is due to the fact that participants in the study of Corten et al. (2023) evaluated one worker profile in isolation, while subjects in our experiment chose among four workers. This suggests that ported reputation may serve as a competitive advantage in comparison with other workers. In fact, beyond one’s own rating, demand also depends on the mean rating in the market (Yang et al., 2021).

Second, we demonstrate that imported ratings indeed stimulate demand, but to a lesser extent than onsite ratings (H2). While most research implicitly assumed great similarities between onsite and imported ratings, our results suggest that they are not perfect substitutes. Although ratings, regardless of their origin, intend to capture workers’ skills and experience, signaling costs may vary between platforms, leading to different signal strengths. This could be due to differences between the importing and exporting platforms, limiting the ratings’ applicability across contexts. Following Kokkodis and Ipeirotis (2016), we presume that comparability between native and transferred ratings is restricted due to task heterogeneity (i.e., each rating is given for a distinct task that—even given high contextual fit—differs in content and scope) and client heterogeneity (i.e., each rating is given by a distinct client with different expectations and needs). In addition, we propose another level of platform heterogeneity (i.e., each platform’s architecture and governance structure are distinct). In sum, due to these substantial discrepancies between the platforms, clients could consider imported ratings as less relevant for their specific context.

The concept of trust transfer posits that consumers’ trust in a platform extends to the users thereon (i.e., trust in a platform can be inherited by its users). Building on that, the reputation of the platform itself may also affect how transferred ratings are perceived (X. Chen et al., 2015). As such, importing ratings from less well-known and trusted platforms could further weaken their demand effect.

With our study, we build on prior research focusing on sharing economy platforms which showed that onsite and imported ratings function similarly, with positive effects on consumer trust and demand (Hesse et al., 2020; Otto et al., 2018; Qiu et al., 2018). Teubner et al. (2020) reveal that the “source-target fit” between the review-importing and -exporting platform is decisive for imported ratings’ trust-building potential. While close fit is associated with higher levels of trust, poor fit can have detrimental effects. However, the authors did not investigate whether—given high source-target fit—ratings from different sources vary with respect to how much demand they can attract. By providing evidence that imported ratings work similarly as onsite ratings, but to a lesser extent, we corroborate their findings and transfer them to the domain of crowdwork. In addition, we contribute to a more nuanced understanding of ported reputation by shedding light on previously overlooked differences to onsite ratings. In the following section, we turn to the theoretical implications of our results, particularly concerning competition among workers.

Theoretical implications

Reputation portability has been touted as a means of reducing switching costs and alleviating the cold-start problem for workers without onsite ratings (Wessel et al., 2017). By allowing workers to build upon their references from past performances, lock-in effects can be mitigated, fostering competition between workers, but also platforms. However, the effects of reputation portability on competition between workers with onsite ratings are less evident.

On the one hand, given the positive demand effect, workers with few onsite ratings can compensate for their deficit by importing ratings—if these are of sufficiently high valence and not outnumbered by the imports of other workers. This would reduce gaps between ranks, enhancing prospects of lower-ranked workers when competing with higher-ranked workers. In such a scenario, reputation portability can act as a leveler for workers with few onsite ratings, thereby fueling competition.

On the other hand, ported reputation can also exacerbate platforms’ general proneness to demand concentration due to their reliance on rankings (Martens, 2016). The analysis of selectivity in the choice model revealed clients’ (weak) tendency to employ the most reputable worker, which may induce a demand concentration dynamic. Experienced workers with many ratings are more likely to be hired, and as they complete more jobs, they can accumulate more ratings, which in turn allows them to secure more jobs (Zhou et al., 2022). This creates a self-reinforcing dynamic, whereby those who already have receive even more, also known as the Matthew effect (Merton, 1968). Thus, an initial (small) lead in ratings magnifies over time, allowing a small number of successful workers to leverage their reputation. This contributes to the rise of so-called superstars who control substantial market shares (Rosen, 1981).
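The self-reinforcing loop can be made concrete with a toy simulation. It rests on strong assumptions that go beyond the study: a single platform, one new rating per completed job, hiring driven by rating volume alone through a logit choice rule, and a fixed selectivity equal to the estimate of 0.0013 reported above. The function names are hypothetical.

```python
import math
import random


def choice_probabilities(values, lam):
    """Logit choice probabilities for reputation values and selectivity lam."""
    scaled = [lam * v for v in values]
    peak = max(scaled)  # subtracting the maximum avoids overflow
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def simulate_hiring(initial_ratings, lam, rounds, seed=0):
    """Each round one client hires a worker, who then gains one rating."""
    rng = random.Random(seed)
    ratings = list(initial_ratings)
    for _ in range(rounds):
        probabilities = choice_probabilities(ratings, lam)
        hired = rng.choices(range(len(ratings)), weights=probabilities, k=1)[0]
        ratings[hired] += 1  # assumption: every job yields exactly one rating
    return ratings


start = [76, 124, 234, 588]
end = simulate_hiring(start, lam=0.0013, rounds=2000)
leader_share_start = choice_probabilities(start, 0.0013)[3]
leader_share_end = choice_probabilities(end, 0.0013)[3]
```

Comparing `leader_share_start` with `leader_share_end` across several seeds indicates how quickly, under these assumptions, an initial lead translates into a higher hiring probability.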

Clearly, this highly simplified scenario neglects decisive factors that determine whether reputation portability hampers competition. A key premise of Matthew-effect-driven demand concentration is that there are differences between workers which grow over time. In this context, we consider imported ratings, in particular, as a source of (initial) inequalities between workers. Consequently, to assess the likelihood of demand concentration, we consider three circumstances that may contribute to the dynamic.

First, for reputation portability to cause concentration, workers need to be active on several platforms (i.e., multi-home). Studies reveal that multi-homing is a widespread phenomenon, with roughly half of workers being active on several platforms (Allon et al., 2023; ILO, 2021; Wood et al., 2019a). As a result, there is a considerable share of workers who would be able to import ratings (if they had any) but also a substantial share of single-homing workers who would not be able to import any ratings.

Now, let us take a closer look at those workers who typically multi-home and how many ratings they could potentially import. On several platforms, it has been observed that particularly superstar workers offer their services on various platforms (Armstrong, 2006; Hyrynsalmi et al., 2012, 2016). Since superstars usually have an exceptionally high number of ratings, reputation portability could accelerate the speed of the Matthew effect dynamics.

However, since the demand effect of imported ratings only corresponds to 35% of the effect of onsite ratings, transferring high amounts of ratings may not always be sufficient to increase demand. Whether multi-homing superstars would dominate the market further depends on how many onsite ratings their competitors have. Hence, we need to take the distribution of rating volumes on platforms into account.
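A brief worked example, using the fitted choice model with \({\gamma}^{*}=0.3522\) and \({\lambda}^{*}=0.0013\), illustrates this point. The two workers are hypothetical and all attributes other than rating volume are ignored. Suppose worker A has 76 onsite ratings and imports 1475 ratings, while worker B has 588 onsite ratings and imports none:

\[{v}_{A}=76+0.3522\times 1475\approx 595.5,\qquad {v}_{B}=588+0.3522\times 0=588\]

\[\frac{{p}_{A}}{{p}_{B}}={e}^{{\lambda}^{*}({v}_{A}-{v}_{B})}\approx {e}^{0.0013\times 7.5}\approx 1.01\]

Under these assumptions, even importing the largest rating volume considered in the experiment would only bring worker A roughly on par with a competitor holding 588 onsite ratings.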

A frequent phenomenon on platforms is the uneven distribution of ratings (Velthuis & Doorn, 2020), typically following a power law distribution. Based on the rating volume distribution which we retrieved from Freelancer and Fiverr, we observe that many workers have no ratings at all (approximately 9% on Freelancer and 48% on Fiverr). If workers do have ratings, they typically have few (see Fig. C1 in Appendix C). Nevertheless, the long tail of the distribution indicates that there are superstars with very large rating volumes. If predominantly superstars import ratings, this may strengthen their dominant market position on the respective platform.

Beyond that, cross-platform Matthew effects are possible, which could produce even more powerful superstars on an industry level. Specifically, the possibility of importing reputation allows workers to scale their reputation across platforms and to realize positive spillover effects. This essentially decreases multi-homing costs and supports superstars in maintaining their status across platforms. These implications make reputation portability a double-edged sword: On the one hand, enabling the import of ratings enhances workers’ independence and leverage vis-à-vis the platforms. On the other hand, reputation portability can also exacerbate unequal competition among workers, fueling demand concentration around a few highly reputable workers. The implications of such a dynamic extend beyond mere competition as this can affect workers’ social welfare in various ways.

For one, the rise of superstar workers across platforms can exacerbate income inequality among workers. While superstars can increase their earnings by accumulating demand, non-superstar workers may face difficulties in attracting clients. In such an unbalanced competition, workers may see the need to underbid each other in a race to the bottom, further reducing their wages and earnings.

Moreover, limited job opportunities for less reputable workers can increase uncertainty, income volatility, and precarity for non-superstar workers. Those who are gradually crowded out of the market also lose access to welfare-improving benefits offered by platform work, such as flexible scheduling, diverse job opportunities, autonomy, and (supplementary) income. In addition, the demand concentration around superstars can aggravate the cold-start problem for workers without an established reputation on any platform.

Beyond that, reputational data portability could fuel demand concentration across markets when re-outsourcing allows superstars to take on more tasks. Despite the inherent limitation in scaling most services on crowdwork platforms (in contrast to digital products such as e-books or YouTube videos), there is evidence that superstars re-outsource tasks. When facing excessive demand, superstars simply hire other (subordinate) workers, while keeping a share of the income for themselves (Kässi et al., 2021; Mendonça et al., 2023; Wood et al., 2019b). This practice can exacerbate the unequal distribution of profits to the detriment of subordinate workers. As subcontractors act in the shadow of superstars, they are unable to build up a reputation for themselves and become dependent on superstars. This dependence can be exploited by superstars, putting workers in an even more vulnerable situation, with limited bargaining power vis-à-vis both platforms and superstars.

With these hitherto overlooked effects, we contribute to the social welfare computing literature by revealing the potentially far-reaching adverse consequences of reputation portability. While reputation portability undoubtedly also yields positive effects, which we will discuss in the following, our study highlights the need for careful consideration in its implementation.

Practical implications

Our results have practical implications for several stakeholders. Implications for individual workers on the micro level are straightforward. They may benefit from importing ratings as they help to attract demand and can also represent an important step towards greater data sovereignty (Weber et al., 2022). Therefore, if given the possibility, workers should import ratings—granted that these ratings are of sufficiently high valence (Hesse et al., 2022).

The possibility of transferring reputation from platforms focusing on simpler tasks (e.g., microtasks) to those mediating more complex work (e.g., freelancing projects) yields mixed implications. From one perspective, despite substantial disparities between job types, imported ratings can provide valuable information about workers’ trustworthiness and general qualities (e.g., reliability, punctuality). Hence, imported ratings—even if primarily obtained through less complex work—could help overcome the cold start problem on freelancing platforms. This could increase workers’ social mobility, facilitate their career advancement, and enable access to higher-paying opportunities, thus improving workers’ welfare.

On a contrasting note, these benefits only emerge when workers are sufficiently skilled for more complex freelancing jobs. Otherwise, producer surplus would decrease since workers face increased costs (e.g., time investment to acquire new skills, mental costs). Additionally, lower service quality would reduce consumer surplus. The discrepancy between rating and skills can also be misleading and erode trust in the reputation system. If clients question the relevance of transferred ratings, they may lower their expectations of workers’ quality and willingness to pay for their services. Yet, highly skilled workers would not be willing to work for low wages, potentially leaving the platform. Ultimately, this could lead to market failure as in “The Market for Lemons” (Akerlof, 1970). To counteract this, one viable approach could be the introduction of platform- and job category-specific labels for imported ratings, similar to the proposal of Kokkodis and Ipeirotis (2016). These labels can provide more contextual information and hence serve as more accurate signals of workers’ future performance. Alternatively, multi-dimensional ratings based on general qualities (e.g., punctuality, reliability) and job-specific qualities (e.g., knowledge, skills) may help to mitigate some of the drawbacks of reputation portability.

As the GDPR ensures users’ “right to be forgotten” (Art. 17) as well as the “right to restriction of [data] processing” (Art. 18), another concern arises if workers can selectively choose which ratings to import. When workers exclusively transfer high ratings, imported rating scores become (even more) inflated and lose their informativeness. Therefore, clients may predominantly base their hiring decisions on rating volume due to its higher discriminatory power, which can further exacerbate the demand concentration dynamic.

Apart from that, implications on the meso level (i.e., for platform operators) are also not straightforward. Platforms obviously have an interest in fostering trust between workers and clients—which imported ratings can facilitate. Therefore, platforms would benefit from giving workers the possibility to import ratings from external sources (Rosenblat & Stark, 2015; Shafiei Gol et al., 2019). Yet, this could become challenging in practice as it would require the respective other platform to allow for the export of reputational data. One attempt was already made in the 1990s when Amazon enabled customers to import their ratings directly from eBay. However, eBay threatened to press charges, claiming these ratings as proprietary content (Dellarocas et al., 2006; Resnick et al., 2000). This example highlights that platforms generally do not have an incentive to let users export their data.

Considering the potential demand concentration dynamics, the implications on the macro level (i.e., for policymakers) are rather ambiguous: On the one hand, the sharing of reputational data would stimulate competition between platforms. By breaking lock-in effects for workers, competition among platforms for clients and workers would be fueled (Teubner et al., 2020). This competitive lever has been implemented before, namely in the telecommunication industry: Telecommunication providers were obligated to enable phone number portability from one provider to another. This regulation was, just as the data portability proposition today, based on competition considerations (Usero Sánchez & Asimakopoulos, 2012).

Beyond that, it is essential to acknowledge the societal utility derived from platform services and offerings. Thus, a social welfare-maximizing policy must not hamper innovation. In this regard, policymakers need to consider that the increased inter-platform competition induced by reputation portability can promote innovations (Engels, 2016; Farrell & Klemperer, 2007; Katz & Shapiro, 1994).

On the other hand, while reputation portability may fuel competition between platforms, it may have adverse effects for the majority of non-superstar workers. Specifically, demand concentration can exacerbate income inequality and limit job opportunities for non-superstar workers, resulting in insecurity and volatility in earnings. Moreover, the practice of superstars to re-outsource tasks while keeping a share of income can further amplify the unequal distribution of profits and create dependencies. Thus, reputation portability might especially favor those workers who already have a secure (or even dominant) position in the market.

Therefore, it is advisable for policymakers to also consider macro-level dynamics in their propositions for data portability. Table 7 summarizes the discussed benefits and drawbacks of reputation portability.

Limitations and future research

Like any research, our study comes with some limitations. As we elaborate in the following, these limitations often pose a starting point for future research. One limitation lies in the scope of our experiment design: We only tested our hypotheses in a very specific constellation, where we drew workers’ rating volumes and scores from the upper range of our observational data. Consequently, our experimental design does not reflect the whole distribution found on actual platforms, which limits the generalizability of our results to some extent.

To gain deeper insights into how reputation portability would affect demand concentration, a larger-scale field experiment, testing the entire spectrum of rating volumes and valence, will be required. This would reveal whether the demand effect follows a non-linear function with decreasing marginal effects (i.e., lower impact per rating for increasing rating volumes), which would slow down the Matthew effect concentration dynamic. Beyond that, a systematic test of the whole range of rating scores and volumes, and particularly the interaction between these, is needed to identify the boundary conditions of ported reputation.

A potential source of ambiguity is the way we presented the imported ratings in the stimulus material. While our intention was to show that the rating score was influenced by both onsite and imported rating scores, alternative interpretations are conceivable. More precisely, the wording “completed jobs” could have implied that workers merely imported work experience which did not affect the displayed rating score. These potential disparities in interpretation may add noise to the data.

In addition, we selectively highlighted the information regarding the import of ratings in the scenario description. The visual cue could have drawn subjects’ attention to this characteristic, potentially inducing experimenter demand effects. Furthermore, social desirability bias could have affected subjects’ reasoning for their hiring decisions. It is quite conceivable that subjects were not willing to admit discriminatory practices or that they were not consciously aware of such (gender) biases.

Moreover, a scenario-based study is by design limited in terms of external validity, as respondents face a hypothetical situation without financial incentives. On the flip side, however, there are also no compelling reasons for participants to “lie” or to make random choices (Charness et al., 2021; Hascher et al., 2021). Naturally, a sample of general Internet users may be broader than the actual target group of clients on crowdwork platforms. Consequently, the choices of the participants in our study could differ from those of actual clients. However, as we designed the scenario to be easy to immerse oneself in, we deem this sample adequate, especially since the participants themselves use a similar online platform to get hired for research studies. Lastly, while we controlled for the participants’ familiarity with platforms in our analyses, it remains unclear whether their experience (or lack thereof) could have affected our results.

Conclusion

Reputation portability has a significant influence on demand within and across platforms and can therefore have far-reaching impacts on workers’ social welfare. Despite the indisputable positive effect of reputation portability for workers and platforms, our results indicate potential adverse effects at the industry level. By examining the demand effect of ratings in conditions with and without reputation portability, we demonstrate that clients rely on imported rating volume for their hiring decisions. When reputation is no longer constrained by platform boundaries, Matthew effect dynamics can be induced, paving the way for demand concentration. Thus, in contrast to the prevailing opinion in current public debates, making reputational data portable might not necessarily create fairer working conditions on platforms. In light of these findings, we advocate for a carefully considered implementation of the right to data portability that unlocks the potential of reputation portability. To increase social welfare, reputation portability needs to be accompanied by a regulatory framework that takes unintended consequences into account and comprises mechanisms to mitigate those negative effects.

Data Availability

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

Notes

Online labor platforms are henceforth simply referred to as “platforms.”

The concept of social welfare is integral to the Environmental, Social, and Governance (ESG) approach. The approach underscores social objectives that go beyond the typical financial goals of companies. We use the term social welfare to refer to working conditions, thereby focusing on Goal 8 of the United Nations’ Sustainable Development Goals. We specifically focus on the dimensions of transparency, information distribution, and worker dependence.

To account for the possibility that the demand effect of imported ratings may depend on workers’ characteristics (i.e., onsite rating volume, wage, rating score, and ranking), we estimated four regression models with interaction effects. The results of our supplementary analyses can be found in Appendix B (Table B1).

References

Akerlof, G. A. (1970). The market for “lemons”: Quality uncertainty and the market mechanism. The Quarterly Journal of Economics, 84(3), 488. https://doi.org/10.2307/1879431

Allon, G., Chen, D., & Moon, K. (2023). Measuring strategic behavior by gig economy workers: Multihoming and repositioning. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.4411974

Armstrong, M. (2006). Competition in two-sided markets. The RAND Journal of Economics, 37(3), 668–691. https://doi.org/10.1111/j.1756-2171.2006.tb00037.x

Banker, R. D., & Hwang, I. (2008). Importance of measures of past performance: Empirical evidence on quality of e-service providers. Contemporary Accounting Research, 25(2), 307–337. https://doi.org/10.1506/car.25.2.1

Basili, M., & Rossi, M. A. (2020). Platform-mediated reputation systems in the sharing economy and incentives to provide service quality: The case of ridesharing services. Electronic Commerce Research and Applications, 39, 100835. https://doi.org/10.1016/j.elerap.2019.100835

Batt, R., & Colvin, A. J. S. (2011). An employment systems approach to turnover: Human resources practices, quits, dismissals, and performance. Academy of Management Journal, 54(4), 695–717. https://doi.org/10.5465/amj.2011.64869448

Berg, J. (2018). Digital labour platforms and the future of work: Towards decent work in the online world. International Labour Office. International Labour Organization (ILO). https://www.ilo.org/global/publications/books/forthcoming-publications/WCMS_645337/lang--en/index.htm

Bergvall-Kåreborn, B., & Howcroft, D. (2014). Amazon Mechanical Turk and the commodification of labour. New Technology, Work and Employment, 29(3), 213–223. https://doi.org/10.1111/ntwe.12038

Bozdag, E. (2018). Data portability under GDPR: Technical challenges. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3111866

Brkan, M., & Bonnet, G. (2020). Legal and technical feasibility of the GDPR’s quest for explanation of algorithmic decisions: Of black boxes, white boxes and fata morganas. European Journal of Risk Regulation, 11(1), 18–50. https://doi.org/10.1017/err.2020.10

Chan, J., & Wang, J. (2018). Hiring preferences in online labor markets: Evidence of a female hiring bias. Management Science, 64(7), 2973–2994. https://doi.org/10.1287/mnsc.2017.2756

Charness, G., Gneezy, U., & Rasocha, V. (2021). Experimental methods: Eliciting beliefs. Journal of Economic Behavior & Organization, 189, 234–256. https://doi.org/10.1016/j.jebo.2021.06.032

Chen, X., Huang, Q., Davison, R. M., & Hua, Z. (2015). What drives trust transfer? The moderating roles of seller-specific and general institutional mechanisms. International Journal of Electronic Commerce, 20(2), 261–289. https://doi.org/10.1080/10864415.2016.1087828

Chen, L., Mislove, A., & Wilson, C. (2015). Peeking beneath the hood of Uber. Proceedings of the 2015 Internet Measurement Conference, 495–508. https://doi.org/10.1145/2815675.2815681

Ciotti, F., Hornuf, L., & Stenzhorn, E. (2021). Lock-in effects in online labor markets. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3953015

Corten, R., Kas, J., Teubner, T., & Arets, M. (2023). The role of contextual and contentual signals for online trust: Evidence from a crowd work experiment. Electronic Markets, 33, 41. https://doi.org/10.1007/s12525-023-00655-2

Croissant, Y. (2020). Estimation of random utility models in R: The mlogit package. Journal of Statistical Software, 95(11). https://doi.org/10.18637/jss.v095.i11

Cui, R., Li, J., & Zhang, D. J. (2020). Reducing discrimination with reviews in the sharing economy: Evidence from field experiments on Airbnb. Management Science, 66(3), 1071–1094. https://doi.org/10.1287/mnsc.2018.3273

Cutolo, D., & Kenney, M. (2021). Platform-dependent entrepreneurs: Power asymmetries, risks, and strategies in the platform economy. Academy of Management Perspectives, 35(4), 584–605. https://doi.org/10.5465/amp.2019.0103

Dann, D., Teubner, T., & Wattal, S. (2022). Platform economy: Beyond the traveled paths. Business & Information Systems Engineering, 64(5), 547–552. https://doi.org/10.1007/s12599-022-00775-7

Degryse, C. (2016). Digitalisation of the economy and its impact on labour markets. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.2730550

Dellarocas, C., Dini, F., & Spagnolo, G. (2006). Designing reputation mechanisms. In N. Dimitri, G. Piga, & G. Spagnolo (Eds.), Handbook of Procurement (1st ed., pp. 446–482). Cambridge University Press. https://doi.org/10.1017/CBO9780511492556.019

DGB. (2021). Positionspapier zur Plattformarbeit. https://www.dgb.de/++co++f012a364-8c7b-11eb-8bce-001a4a160123

Dube, A., Jacobs, J., Naidu, S., & Suri, S. (2020). Monopsony in online labor markets. American Economic Review: Insights, 2(1), 33–46. https://doi.org/10.1257/aeri.20180150

Dunham, B. (2011). The role for signaling theory and receiver psychology in marketing. In G. Saad (Ed.), Evolutionary Psychology in the Business Sciences (pp. 225–256). Springer Berlin Heidelberg. https://doi.org/10.1007/978-3-540-92784-6_9

Einav, L., Farronato, C., & Levin, J. (2016). Peer-to-peer markets. Annual Review of Economics, 8(1), 615–635. https://doi.org/10.1146/annurev-economics-080315-015334

Engels, B. (2016). Data portability among online platforms. Internet Policy Review, 5(2). https://doi.org/10.14763/2016.2.408

Etzion, H., & Awad, N. (2007). Pump up the volume? Examining the relationship between number of online reviews and sales: Is more necessarily better? ICIS 2007 Proceedings, 120. http://aisel.aisnet.org/icis2007/120

European Commission. (2019). Competition policy for the digital era. Publications Office. https://doi.org/10.2763/407537

European Commission. (2022). Proposal for a regulation of the European parliament and of the council on harmonised rules on fair access to and use of data (Data Act). https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=COM%3A2022%3A68%3AFIN

European Union. (2022). Data Governance Act. https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A32022R0868

European Union. (n.d.). What is GDPR, the EU’s new data protection law? Retrieved October 2, 2023, from https://gdpr.eu/what-is-gdpr/

Farrell, J., & Klemperer, P. (2007). Coordination and lock-in: Competition with switching costs and network effects. In Handbook of Industrial Organization (Vol. 3, pp. 1967–2072). Elsevier. https://doi.org/10.1016/S1573-448X(06)03031-7

Felstiner, A. (2011). Working the crowd: Employment and labor law in the crowdsourcing industry. Berkeley Journal of Employment and Labor Law, 32(1), 143–203. http://www.jstor.org/stable/24052509

Fernández-Macías, E., Urzì Brancati, C., Wright, S., & Pesole, A. (2023). The platformisation of work: Evidence from the JRC algorithmic management and platform work survey (AMPWork). Publications Office. https://doi.org/10.2760/801282

Fieseler, C., Bucher, E., & Hoffmann, C. P. (2019). Unfairness by design? The perceived fairness of digital labor on crowdworking platforms. Journal of Business Ethics, 156(4), 987–1005. https://doi.org/10.1007/s10551-017-3607-2

Filippas, A., Horton, J. J., & Zeckhauser, R. J. (2020). Owning, using, and renting: Some simple economics of the “sharing economy.” Management Science, 66(9), 4152–4172. https://doi.org/10.1287/mnsc.2019.3396

Filippas, A., Horton, J. J., & Golden, J. M. (2022). Reputation inflation. Marketing Science, 41(4), 733–745. https://doi.org/10.1287/mksc.2022.1350

Galperin, H., & Greppi, C. (2017). Geographical discrimination in digital labor platforms. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.2922874

Gandini, A., Pais, I., & Beraldo, D. (2016). Reputation and trust on online labour markets: The reputation economy of Elance. Work Organisation, Labour and Globalisation, 10(1). https://doi.org/10.13169/workorgalaboglob.10.1.0027

Gegenhuber, T., Schuessler, E., Reischauer, G., & Thäter, L. (2022). Building collective institutional infrastructures for decent platform work: The development of a crowdwork agreement in Germany. In A. A. Gümüsay, E. Marti, H. Trittin-Ulbrich, & C. Wickert (Eds.), Research in the Sociology of Organizations (pp. 43–68). Emerald Publishing Limited. https://doi.org/10.1108/S0733-558X20220000079004

Gerber, C., & Krzywdzinski, M. (2019). Brave new digital work? New forms of performance control in crowdwork. In S. P. Vallas & A. Kovalainen (Eds.), Research in the Sociology of Work (Vol. 33, pp. 121–143). Emerald Publishing Limited. https://doi.org/10.1108/S0277-283320190000033008

Graef, I., Verschakelen, J., & Valcke, P. (2013). Putting the right to data portability into a competition law perspective. Law: The Journal of the Higher School of Economics, Annual Review, 53–63. https://ssrn.com/abstract=2416537

Hascher, J., Desai, N., & Krajbich, I. (2021). Incentivized and non-incentivized liking ratings outperform willingness-to-pay in predicting choice. Judgment and Decision Making, 16(6), 1464–1484. https://doi.org/10.1017/S1930297500008500

Hausemer, P., Rzepecka, J., Dragulin, M., Vitiello, S., Rabuel, L., Nunu, M., Rodriguez Diaz, A., Psaila, E., Fiorentini, S., Gysen, S., Meeusen, T., Quaschning, S., Dunne, A., Grinevich, V., Huber, F., & Baines, L. (2017). Exploratory study of consumer issues in online peer-to-peer platform markets: Final report. https://doi.org/10.2838/779064

Hausman, J., & McFadden, D. (1984). Specification tests for the multinomial logit model. Econometrica, 52(5), 1219–1240. https://doi.org/10.2307/1910997

Hesse, M., & Teubner, T. (2020). Reputation portability – quo vadis? Electronic Markets, 30(2), 331–349. https://doi.org/10.1007/s12525-019-00367-6

Hesse, M., Teubner, T., & Adam, M. T. P. (2022). In stars we trust – A note on reputation portability between digital platforms. Business & Information Systems Engineering, 64(3), 349–358. https://doi.org/10.1007/s12599-021-00717-9

Hesse, M., Lutz, O., Adam, M., & Teubner, T. (2020). Gazing at the stars: How signal discrepancy affects purchase intentions and cognition. ICIS 2020 Proceedings, 8. https://aisel.aisnet.org/icis2020/sharing_economy/sharing_economy/8

Hong, Y., & Pavlou, P. A. (2017). On buyer selection of service providers in online outsourcing platforms for IT services. Information Systems Research, 28(3), 547–562. https://doi.org/10.1287/isre.2017.0709

Hong, Y., Chong, W. A., & Pavlou, P. A. (2016). Comparing open and sealed bid auctions: Evidence from online labor markets. Information Systems Research, 27(1), 49–69. https://doi.org/10.1287/isre.2015.0606

Howcroft, D., & Bergvall-Kåreborn, B. (2019). A typology of crowdwork platforms. Work, Employment and Society, 33(1), 21–38. https://doi.org/10.1177/0950017018760136