Abstract

Personal virtual assistants (PVAs) based on artificial intelligence are frequently used in private contexts but have yet to find their way into the workplace. Regardless of their potential value for organizations, the relentless implementation of PVAs at the workplace is likely to run into employee resistance. To understand what motivates such resistance, it is necessary to investigate the primary motivators of human behavior, namely emotions. This paper uncovers emotions related to organizational PVA use, focusing primarily on threat emotions. To achieve our goal, we conducted an in-depth qualitative study, collecting data from 45 employees in focus-group discussions and individual interviews. We identified and categorized emotions according to Beaudry and Pinsonneault’s (2010) framework for classifying emotions. Our results show that loss emotions, such as dissatisfaction and frustration, as well as deterrence emotions, such as fear and worry, are valuable cornerstones for defining the boundaries of organizational PVA use.

Introduction

Artificial Intelligence (AI) enables smart services and digital transformation that significantly change the way organizations and electronic markets work (Gursoy et al., 2019). As digital transformation has progressed, personal virtual assistants (PVAs) based on AI have recently gained such significance that they are now a standard feature of most mobile devices, which people use daily (Maedche et al., 2019). Through these assistants, users can communicate with and centrally steer their devices using natural language (McTear, 2017), which adds convenience and makes new applications easier to use. Additionally, PVAs have the potential to take over repetitive tasks that are easy to automate and, as technology progresses, eventually even more complex and creative tasks (Loebbecke et al., 2020). While private PVA use is increasing, organizational use in conjunction with business software such as enterprise resource planning systems is not (Meyer von Wolff et al., 2019). Database inquiries and analyses, order processing, and document management are only a few of the tasks PVAs could potentially undertake. Using PVAs could give employees more time for valuable, more complex tasks, save the organization resources and money, and even offer a competitive advantage (Maedche et al., 2019). Research on AI readiness at the organizational level has shown that AI awareness, understanding how AI-based technologies work, and knowing where they can be employed are crucial to implementing such technologies successfully (Jöhnk et al., 2020). Consequently, implementing PVAs in an organizational setting could be the next logical step in digitalization. Although PVAs are popular in private use contexts, the organizational context is a new area of application that very few studies have specifically considered, primarily because few PVAs have yet been implemented in organizational settings. Stieglitz et al. (2018) do not fully embrace the concept of an organizational PVA; they do, however, introduce the concept of an automated user service through enterprise bots that provide interactions with complex organizational systems and processes. Meyer von Wolff et al. (2019) describe specific application scenarios for such enterprise assistants, indicating their potential in information acquisition, employee self-service, collaboration, and training. With so many advantages, what is keeping organizations from introducing AI and allowing it to take over large parts of the workplace?

PVAs act within a socio-technical system in which users work toward a particular goal and have tasks to fulfil using specially employed technologies (Maedche et al., 2019). In private PVA use, for example, using a smartphone PVA to execute a specific task, this socio-technical system is manageable; in organizational use, however, it becomes more complex. Users are aware that they rarely act on their own and that their actions are interdependent with the entire organization. A PVA failure or error can even have severe financial implications. Additionally, user expectations and the way organizational PVAs actually function often diverge (Luger & Sellen, 2016), so that users perceive the PVA as a nuisance rather than as something that facilitates their job and saves time (Maedche et al., 2019). While these factors are grounded in cognitive theories, there is reason in this context to deviate from purely logical reasoning to account for human irrationality (Loebbecke et al., 2020). Human resistance to using, working with, and ultimately trusting AI is generally induced by emotions that can range from dissatisfaction and frustration, because the PVA is perceived as a disturbance, to worry and fear that it will make serious mistakes or leak users’ data. Since emotions are important drivers of behavior, the emotions users experience early in a new application’s implementation significantly influence their use of the technology (Beaudry & Pinsonneault, 2010). If users see new technologies as a threat, they generally avoid them (Liang & Xue, 2009). Regarding PVAs, trust and privacy issues are the main reasons users reject this technology (Cho et al., 2020; Liao et al., 2019; Zierau et al., 2020). Failing to address the concerns the workforce may have during such massive change processes would be dangerous from a strategic point of view and, even more, could lead employees to reject the technology completely (Laumer & Eckhardt, 2010).
Thus, attention to the emotions induced by organizational PVAs is vital, particularly before or in the early stages of implementation, so that organizations can draw clear lines and define the right boundaries. This paper aims to disclose negative emotions and concepts related to these PVAs in order to clearly define the boundaries of organizational PVA use. Consequently, we derive the implications these emotional responses have for organizational PVA implementation and provide recommendations for action.

To achieve our goal, we conducted an in-depth interview study, collecting data first in group discussions with 45 employees across various industries and sectors and then in individual interviews. We used the data to identify and categorize emotions according to Beaudry and Pinsonneault’s (2010) framework for classifying emotions. Further, we related these emotions to one another and, through open coding, also found negative implications regarding emotion-laden concepts, such as trust and privacy, related to AI use or non-use. In doing so, we show a dark side of potential organizational PVA use, address concerns raised by the human workforce, and give insight into where boundaries should be drawn.

Overall, our paper is a first step toward systematically revealing basic and specific emotions regarding the potential use of organizational PVAs, thereby showing where organizations should draw boundaries before they integrate such AI-based technologies into daily routines. We provide empirical groundwork for theorizing on the boundaries of AI use based on basic emotions and related concepts, and we derive implications for organizations regarding PVA implementation. Future research could draw on these results to validate and extend our spectrum of relevant emotions and to incorporate it into implementation strategies for AI-based systems and into change management efforts.

Theoretical background

Since we are bringing together two distinct concepts, the PVA as an information systems (IS) technology and emotions as a psychological concept, this paper draws on several theoretical foundations. In the following sections, we give an overview of current and anticipated features of AI-based agents or assistants and summarize the drawbacks of using AI-based technologies. Then, we show how our work on emotions related to organizational PVAs can be embedded in a well-established emotion framework.

Personal virtual assistants

The origins of PVAs can be traced back to the formative research of Turing (1950) and Weizenbaum (1966), which shapes AI-related research to this day. Turing attempted to define AI through an experiment in which a human would unknowingly communicate with a machine that appeared to be human, the machine having to convince the human for as long as possible that they were actually communicating with another human. To date, no machine has successfully passed the Turing test. A large portion of AI literature still pursues the question of how to make communication between humans and machines as natural as possible. Weizenbaum (1966) introduced the first dialog system that enabled communication with a computer through natural language processing (NLP). Since the 1980s, dialog systems have been introduced that do not focus solely on communication but are able to fulfil tasks independently of human control (Dale, 2019). Often, these systems were not scalable and were prone to errors, which explains why they were not commercially successful (McTear, 2017). This changed after 2010 as research on AI and speech recognition advanced and the user’s context information, such as current location and user history, became accessible (McTear, 2017; Radziwill & Benton, 2017). Further, assistants have been introduced that can be integrated into messengers and communicate directly with the user through them. This progress is reinforced by investments from large technology corporations such as Microsoft, IBM, Apple, Amazon, and Google, all of which have developed PVAs for end users.

The term ‘personal virtual assistant’ (PVA) is difficult to define clearly because it is not a unique or generally known term. Previous studies have shown various conceptualizations and diverse terminology related to the anticipated or displayed features (see Table 1). The main common denominator of all the described agents or assistants is their human-like communication through natural language. Further, the words ‘smart’ and ‘intelligent’ have been widely established to imply underlying AI technology.

While most of the terms given in Table 1 do not necessarily suggest an organizational context, most of the identified agents or assistants are applied at the customer interface, for example, supporting or delivering customer services. Others are responsible for executing (automated) tasks. The most relevant attribute we find in a PVA, as opposed to a plain chatbot or specialized conversational agent, is its ability to act as an interface that connects the user to many different services. For our purposes, we therefore define the PVA as the focal point for numerous functions that can be accessed through natural language; as an intermediary, the PVA does not touch on the logic behind any application.

Drawbacks of using AI-based technologies

Although researchers and society often recognize the value that AI-based technologies such as PVAs can potentially create, they tend to lose sight of the high cost and negative emotional impact these technologies can have on the human workforce. Overall, individuals working in large organizations are sceptical of PVAs and mistrust them for several reasons, of which privacy concerns rank highest. Privacy refers to the state of being neither in the company of others nor under their observation; it also implies freedom from unwanted intrusion (Merriam-Webster, 2005). Wiretapping and listening in on covertly collected recordings, exploiting security vulnerabilities, and impersonating users are several ways in which malicious actors can breach users’ privacy and security (Chung et al., 2017). Lentzsch et al. (2021) have shown that malicious actors can lead unsuspecting users to unintentionally reveal information after the users have downloaded seemingly harmless data onto the PVA they are using. The inability to manage and change privacy and content settings for AI-based technologies leads to mistrust, which emphasizes the importance of designing PVAs to be highly privacy-sensitive and trustworthy (Cheng et al., 2021; Cho et al., 2020).

The general human desire to maintain privacy and data ownership stems from the fear of losing autonomy and personal integrity; therefore, people are mostly reluctant to trade away privacy and control (Ehrari et al., 2020) unless the value they gain exceeds the risk they perceive. The gap between the willingness to protect data and the willingness to share it can be mediated by trust. Trust is based on the expectation that another party (in this case, either another person or the PVA) will execute an action that is important to the trusting person, regardless of whether that party can be controlled or monitored (Mayer et al., 1995). A trust problem arises when AI-based technologies cannot fulfil the user’s expectations, often because they do not work or behave as the user anticipates. This leads to a large gap between user expectations and actual machine intelligence, system capability, and goals (Luger & Sellen, 2016), which can be attributed to the high cost of training AI, since reliable training datasets are necessary (Denning & Denning, 2020).

To leverage the potential AI provides for strategic decision-making in an organizational setting, managers must transfer authority and control to AI-based decision systems such as PVAs. However, humans are less likely to delegate strategic decisions to AI than to another person, since they feel more positive emotions when delegating to a person (Leyer & Schneider, 2019). Further, there is a perceived loss of competence and reputation when organizations transfer decision-making from an employee to an AI system, and employees who have to face the real-life consequences of the decisions an AI system makes bear a moral burden (Krogh, 2018; Mayer et al., 2020). Collaboration between humans and machines does not guarantee better outcomes: when a PVA errs or shows untoward bias, people often fail to intervene sufficiently (Vaccaro & Waldo, 2019), because employees’ ability to critically reflect on their work is reduced once the PVA has taken over the entire decision-making process (Mayer et al., 2020).

Generally, a PVA can improve human capabilities by enhancing intelligence and cognition and, in turn, performance (Siddike et al., 2018). However, these enhanced capabilities and this improved performance depend heavily on the success of the interaction between the PVA and its user, making it vital to research the factors that influence this interaction. One such factor is human emotion.

Emotions and emotion models

Emotions can be viewed as the primary human motivational system (Leeper, 1948; Mowrer, 1960) and as a specific, very elementary part of intelligence (Balcar, 2011). The aspect of emotions that drives human action and interaction is also reflected in the emotions humans display when communicating through and about IS (Rice & Love, 1987). A great deal of research in psychology has been dedicated to emotions, highlighting different aspects of a particular emotion or of emotions in general (Kleinginna & Kleinginna, 1981). This has led to not one but many different definitions and conceptualizations of emotions (Chaplin & Krawiec, 1979). Broadly, an emotion is a chronologically evolving sequence: after exposure to a stimulus, a human perceives a state of ‘feeling’ that results in externally visible behavior or emotional output (Elfenbein, 2007). Since our research does not address the physiological responses or physically visible behaviors that an emotion might trigger, we define an emotion more narrowly as “a mental state of readiness for action” (Beaudry & Pinsonneault, 2010, p. 690) that activates, prioritizes, and organizes a certain behavior in preparation for the optimal response to the demands of the environment (Bagozzi et al., 1999; Balcar, 2011; Lazarus, 1991).

Existing research on emotions in IS is mostly not grounded in emotion theories but rather refers to the basic or discrete emotions that IS users display (Hyvärinen & Beck, 2018). Definable and objective basic emotions are foundational to many complex emotions. Although there is no consensus on which emotions are basic, Kowalska and Wróbel (2017) combined the theories presented in state-of-the-art emotion research and arrived at six basic emotions: happiness, sadness, anger, disgust, fear/anxiety, and surprise. Within these six basic emotions, we observe a distinction between positive and negative emotions, two independent dimensions that are universal across cultural, gender, and age groups (Bagozzi et al., 1999; Pappas et al., 2014). However, surprise cannot be directly attributed either a positive or a negative quality (Ortony & Turner, 1990), so we omitted it from the rest of our investigation.

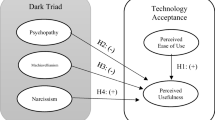

Many emotion models or frameworks in the literature focus on emotions unrelated to an organizational context (Plutchik, 1980; Russell, 1980). Other emotion models that could appropriately assess attitudes toward AI-based technologies in the workplace (Kay & Loverock, 2008; Richins, 1997) do not incorporate different stages of assessment. Setting these aside, we decided to base our investigation on Beaudry and Pinsonneault’s (2010) framework for classifying emotions, since its primary appraisal divides emotions according to whether they constitute an opportunity for or pose a threat to the person. Maedche et al. (2019) affirm this appraisal in the context of PVAs. Beaudry and Pinsonneault’s (2010) secondary appraisal incorporates an element of perceived control over expected consequences, or the lack of such control. Foundational to the framework, as given in Fig. 1, is a contextual model of stress (Lazarus & Folkman, 1984), which assumes that coping mechanisms support human efforts to meet demands that exceed existing resources. These coping efforts can be either cognitive or behavioral. Cognitive effort, on the one hand, aims to avoid or accept a given situation. Behavioral effort, on the other hand, aims to change the situation, for example, by researching new information. A second foundation for Fig. 1 lies in appraisal theories, according to which emotions arise when humans assess events and situations (Moors et al., 2013). Emotions are therefore taken as reactions to events or situations; they do not occur without a cause or reason. Once the first and second appraisals are complete, any emotion can be classified.

Fig. 1 A framework for classifying emotions (Beaudry & Pinsonneault, 2010, p. 694)

The two appraisal axes yield a fourfold segmentation. The examples of emotions listed in Fig. 1 help with the classification, and most basic emotions are also mentioned there. The first category, achievement emotions (AE), results from first and second appraisals that reveal, respectively, an opportunity and a perceived lack of control over expected consequences. For our purposes, we chose to focus on happiness, satisfaction, and relief, as we interpret pleasure and enjoyment as synonyms for satisfaction (Merriam-Webster, 2005). The second category, challenge emotions (CE), combines an opportunity with perceived control over the expected consequences and manifests itself in excitement, hope, anticipation, playfulness, and flow. We interpret arousal as a synonym for enjoyment and therefore did not code it separately. The third category, loss emotions (LE), combines a threat with a perceived lack of control over expected consequences. Examples here are anger, dissatisfaction, frustration, and disgust. Since we interpret disappointment as a synonym for dissatisfaction and annoyance as directly related to frustration, we omitted these two from the rest of the investigation. Disgust was also discarded, as it is an emotion elicited in relation to physical objects (Rozin et al., 2009) and we did not expect it to contribute to our findings. The fourth and last category, deterrence emotions (DE), results from first and second appraisals that reveal, respectively, a threat and perceived control over the expected consequences. Fear, worry, and distress are illustrative of this category. We omitted anxiety, since we interpret it as a synonym for fear.
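Because the two appraisals form a two-by-two grid, the classification logic described above can be written as a simple lookup. The following Python sketch is purely illustrative: it is not part of Beaudry and Pinsonneault’s (2010) framework or of our coding procedure, and the names `Appraisal`, `classify`, and `CODED_EMOTIONS` are hypothetical. It maps the primary appraisal (opportunity or threat) and the secondary appraisal (perceived control or its absence) to one of the four categories and lists the emotions retained for coding in each.

```python
from enum import Enum


class Appraisal(Enum):
    """Primary appraisal: is the event seen as an opportunity or a threat?"""
    OPPORTUNITY = "opportunity"
    THREAT = "threat"


def classify(primary: Appraisal, perceived_control: bool) -> str:
    """Return the emotion category for a pair of appraisals.

    `perceived_control` stands for the secondary appraisal: True if the
    person perceives control over the expected consequences, else False.
    """
    if primary is Appraisal.OPPORTUNITY:
        return "challenge" if perceived_control else "achievement"
    return "deterrence" if perceived_control else "loss"


# Emotions retained for coding per category; emotions the text omits
# (e.g., pleasure, arousal, disgust, anxiety) are deliberately not listed.
CODED_EMOTIONS = {
    "achievement": ("happiness", "satisfaction", "relief"),
    "challenge": ("excitement", "hope", "anticipation", "playfulness", "flow"),
    "loss": ("anger", "dissatisfaction", "frustration"),
    "deterrence": ("fear", "worry", "distress"),
}

# Walk through all four combinations of the two appraisals.
for primary in Appraisal:
    for control in (False, True):
        category = classify(primary, control)
        emotions = ", ".join(CODED_EMOTIONS[category])
        print(f"{primary.value}, control={control} -> {category}: {emotions}")
```

Counting the entries in `CODED_EMOTIONS` gives 14 emotions across the four categories.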

Method

We used a qualitative research approach to understand the emotions evoked by organizational PVAs (Bhattacherjee, 2012). In doing so, we intended to account for the complexity and novelty of a potential organizational PVA implementation and to gain a thorough understanding of the emotions such an implementation would trigger. We selected the emotions relevant to our context from Beaudry and Pinsonneault’s (2010) framework for classifying emotions. Figure 2 summarizes our research approach.

Data collection

We conducted a focus-group and interview study to better understand the emotions that the potential use of an AI-based PVA would evoke in the workplace (Myers & Newman, 2007; Rabiee, 2004). Using a non-probability sampling method that combined convenience and voluntary response sampling (Wolf et al., 2016), we arrived at a sample of 45 participants (P01–P45) aged between 19 and 40 years. All participants were part-time students (convenience sample) or had recently completed their part-time studies (voluntary sample) at the time of data collection. Also, all participants were employed or self-employed at the time of data collection and were asked to refer to their current or most recent workplace when making statements about potential PVA use. Combining these sampling strategies enabled us to collect data both from participants who had shown no previous interest in the topic and from those who were interested and had thus voluntarily chosen to take part in our study.

We structured the data collection into two parts. First, we conducted focus-group discussions with groups of 4–7 participants each, who came together and discussed how their workplace could potentially use a PVA. These discussions took place between June and November 2019 and lasted 90–120 min each. Second, we conducted individual one-on-one interviews via telephone or video conference to follow up on the focus-group discussions. These interviews took place between July 2019 and February 2020. All participants consented to the interviews being audio-recorded, and we transcribed the recordings shortly afterwards. Because the data collection took place in different cities across Germany, both the focus groups and the interviews were conducted in the participants’ native language, German.

In line with our paper’s in-depth approach and to generate rich data, we mainly asked open questions (Bhattacherjee, 2012; Myers & Newman, 2007). The focus-group discussions and the interviews were semi-structured in order to provide the same stimuli and ensure equivalence of meaning (Barriball & While, 1994). In the focus-group discussions, we made sure participants stayed on the given topic of potential organizational PVA use, and we encouraged them to interact with one another (Rabiee, 2004).

Data analysis

For our analysis, we used the qualitative data analysis software Atlas.ti to analyze the full transcripts of the group discussions as well as excerpts of the individual interviews. One author, the first coder, coded the emotions according to Beaudry and Pinsonneault’s (2010) framework while additionally using open coding to find any related themes or phenomena. We assigned the same level of specificity to all codes, arriving at a flat coding frame (Lewins & Silver, 2014); later, however, we summarized the individual emotions into emotion categories, as in Table 2. Through the selective coding scheme, we arrived at 14 different emotion codes in four clusters, as well as four related phenomenon codes that the open coding disclosed (trust in humans, trust in PVAs, anthropomorphism, and privacy). Additionally, we used four demographic information codes (job information, field of study, previous PVA experience, and the last technological device purchased). The second coder then used this list of 22 codes to conduct selective coding. Afterwards, the two coders discussed the cases on which they disagreed, either reaching agreement on a code or remaining in disagreement. Eventually, we arrived at an intercoder reliability of 92%, defined as “the percentage of agreement of all coding decisions made by pairs of coders on which the coders agree” (Lombard et al., 2002, p. 590). According to most methodologists, a coefficient greater than 0.90 is always acceptable (Neuendorf, 2002).
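Percentage agreement, the intercoder reliability measure quoted above, is the number of coding decisions on which both coders assigned the same code divided by the total number of coding decisions. The Python sketch below illustrates this calculation on invented coding decisions; the lists `first_coder` and `second_coder` and the function `percent_agreement` are hypothetical and do not reproduce our data or the Atlas.ti procedure we used.

```python
from typing import Sequence


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Share of coding decisions on which two coders assigned the same code."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must code the same units")
    if not coder_a:
        raise ValueError("there are no coding decisions to compare")
    agreements = sum(a == b for a, b in zip(coder_a, coder_b))
    return agreements / len(coder_a)


# Hypothetical coding decisions for 25 text segments (not study data).
first_coder = ["relief"] * 10 + ["fear"] * 8 + ["dissatisfaction"] * 7
second_coder = list(first_coder)
second_coder[3] = "satisfaction"   # the coders disagree on segment 4 ...
second_coder[20] = "frustration"   # ... and on segment 21

agreement = percent_agreement(first_coder, second_coder)
print(f"agreement: {agreement:.0%}")
print("above 0.90 threshold:", agreement > 0.90)
```

With 23 of 25 decisions in agreement, this invented example yields an agreement of 92%, which exceeds the 0.90 threshold.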

Results

We structured our results according to the emotion categories Beaudry and Pinsonneault (2010) suggested. These categories and their corresponding emotions/codes provided a good basis for understanding human emotions toward organizational PVAs. We then looked at the related concepts that emerged during the open coding process and present them in relation to the coded emotions. A common approach to presenting qualitative research results is to use illustrative quotes from the focus-group discussions and the interviews (Eldh et al., 2020). For the reader’s convenience, we translated the original German quotes as literally as possible.

Participants’ demographic background

The demographic codes confirmed that all participants had listed business administration or management information systems as their primary field of study and that all were employed or had until recently been employed (at most two months before data collection). The participants’ workplaces were in a variety of sectors; the job titles listed in Table 3 indicate that the automotive and public sectors, as well as software engineering, are the most strongly represented. According to Neuner-Jehle’s (2019) study on Germany’s job market in 2019, many of these job roles, such as software developer, IT administrator, and IT consultant, as well as roles in human resources and project management, are among the most popular and sought-after roles for applicants with university degrees. Further, Germany’s most important business sectors (Statista, 2020), namely the automotive industry, mechanical engineering, and pharmaceuticals, are all adequately represented in our sample. Thus, our sample qualifies as a cross-section of office workers with university degrees in Germany. Since only very few organizational PVAs have been implemented in Germany to date, only two participants reported experience with a PVA in an organizational context. Yet, a majority of 60% (27 participants) had used PVAs privately and in their own homes, for example, by activating Alexa or Siri or by using chatbots in online customer services. Table 3 also reports these data.

Further, we asked participants about the last technical device they had purchased to find out whether any of them had invested significantly in expensive and advanced technology. This gave an overview of their general technological affinity or of a possible technological aversion, signaled by not having purchased any device in a long while. Most participants did not mention unusual purchases; the majority reported having recently purchased a new smartphone, headphones, speakers, a smart TV, a smartwatch, a tablet, or a computer. All participants explained why they had selected the devices they purchased, and none cited the unavailability of other options as a reason for their purchase. Thus, we found no remarkable anomalies regarding technology affinity or aversion among our participants that would have suggested excluding them from the sample.

Emotion categories

In total, we found 741 emotion codes across our sample. We found a total of 146 AE and 259 CE, thus totaling 405 (55%) emotions considered to represent positive experience or anticipation, and thus an opportunity. Regarding emotions signaling a threat, we found 154 LE and 182 DE, totaling 336 (45%). At least one emotion code per category was found only rarely in our data. Table 4 shows the emotion categories and their respective frequencies.
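The totals and shares reported above follow from simple arithmetic on the four category counts. The Python sketch below recomputes them from the counts given in the text; the helper `percent` and the constant names are illustrative, and rounding each share independently to the nearest whole percent is an assumption about the rounding convention.

```python
# Emotion-code frequencies per category, as reported in the text above.
CATEGORY_COUNTS = {
    "achievement (AE)": 146,
    "challenge (CE)": 259,
    "loss (LE)": 154,
    "deterrence (DE)": 182,
}
OPPORTUNITY = ("achievement (AE)", "challenge (CE)")
THREAT = ("loss (LE)", "deterrence (DE)")


def percent(part: int, whole: int) -> int:
    """Share of `part` in `whole`, rounded to the nearest whole percent."""
    return round(100 * part / whole)


total = sum(CATEGORY_COUNTS.values())
opportunity_total = sum(CATEGORY_COUNTS[c] for c in OPPORTUNITY)
threat_total = sum(CATEGORY_COUNTS[c] for c in THREAT)

print(f"all emotion codes: {total}")
print(f"opportunity: {opportunity_total} ({percent(opportunity_total, total)}%)")
print(f"threat: {threat_total} ({percent(threat_total, total)}%)")

# Each category's share of all codes and of its own valence group.
for group, group_total in ((OPPORTUNITY, opportunity_total), (THREAT, threat_total)):
    for category in group:
        n = CATEGORY_COUNTS[category]
        print(f"{category}: {n} = {percent(n, total)}% of all codes, "
              f"{percent(n, group_total)}% of its valence group")
```

Rounded independently, the four category shares come to 20%, 35%, 21%, and 25% of all codes and therefore sum to 101%; shares rounded this way need not add up to exactly 100%.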

Although we focus on DE and LE, since we want to investigate and mitigate negative emotions and perceptions toward organizational AI use, we also coded and report AE and CE for the sake of completeness.

Achievement and challenge emotions

The 146 AE found in the full sample subsume emotions that combine an opportunity with a perceived lack of control over expected consequences. They make up 20% of all emotion codes and 36% of all opportunity emotions. Happiness was expressed only twice, while satisfaction was expressed twelve times in the sample.

The most significant emotion within the AE is relief, a positive feeling of being at ease or having a burden lifted. It occurs 132 times, making up 90% of all AE. Most participants recognized that a PVA could potentially help to ease time pressure and other resource constraints in the workplace, as “the PVA simplifies and supports” their work through “automatization,” as long as PVAs “function well” and are not prone to making mistakes. This was especially applicable to tasks that do not require a great deal of communication, particularly with (potential) customers:

“I can imagine it in some types of routine tasks; standardized tasks without much personal contact is where I can really see it being applied.” (P05)

There were 259 CE codes, which represent an opportunity combined with perceived control over expected consequences. These responses made up 35% of all emotion codes and 64% of all opportunity emotions. Excitement occurred 58 times (22% of the CE) and was mainly directed at an organizational PVA’s potential features and the tasks it could fulfil. Participants were “excited” in advance about this possibility, envisaging PVAs as “cool” and “great,” a “massive opportunity” of which they were a “fan.”

Hope occurred 76 times, i.e., 29% of all CE, and was often expressed through phrases such as “it would be nice” or “maybe it would be possible” for the PVA to support certain processes or tasks that participants “wish for.”

“I hope that they will come into our lives soon enough for me to experience [the technology] and the advantages they will bring.” (P14)

These contributions reveal that participants hope for certain outcomes regarding organizational PVAs, but are not necessarily confident that they will materialize.

The most prominent CE is anticipation, expressing the act of looking forward to an occurrence. We found it 114 times, i.e., 44% of all CE in our sample. Participants greatly looked forward to the PVA “fulfilling many tasks,” “increasing efficiency,” and “optimizing processes” by being “very helpful” and “reliable.”

Playfulness, occurring seven times (3% of CE), and flow, occurring four times (2% of CE), were of secondary importance and will not be discussed further.

Loss emotions

We found 154 LE in the sample, which constitute 21% of all emotion codes and 46% of the threat emotions. We regard LE as signaling threats that occur when users perceive they cannot control expected consequences. Anger, an intense emotional state of displeasure, makes up only 3% (four occurrences) of all LE, and mainly occurs when participants think about PVA failure, as in

“If you command your assistant to do something and it misunderstands or something and does it completely wrong, that is very exasperating and brings an emotional response.” (P43)

The most common emotion among LE is dissatisfaction, which occurred 89 times, i.e., 58% of LE. Users feel dissatisfaction when their expectations are not met. In our analysis, we largely found dissatisfaction in the context of the PVA “not functioning as desired,” “lacking features,” or being “without potential for use.” Our data exhibit many different degrees of dissatisfaction, from very specifically criticizing features, as in

“the system forces the user to use specific voice commands, but when you have to talk like that it’s not natural […] if you say just anything, the system won’t understand.” (P08)

to sweeping verdicts such as

“after two questions, [the PVA] wasn’t helpful, no positive experience.” (P43)

Dissatisfaction is closely related to frustration, a state of irritation, which occurred 61 times in our sample (39% of LE). Many participants expressed frustration because they expected to lose valued interactions. They also anticipated that some encounters would become more difficult if a PVA replaced direct communication with colleagues or customers, even to the point of the company losing a core competence.

“AI stubbornly follows the pre-programmed rules, but it is mostly the human element that defines a company. Customers often like calling because they like the consultants. Using AI, all companies would be the same; all the friendliness, the human element, having a bit of chitchat – that would suddenly be gone, which I see as a big problem.” (P17)

Further, the PVA’s functions and (in)accuracy could be a source of frustration for users.

“I am really annoyed at technical devices when they do not immediately function as I would like them to, because I expect them to fulfill their potential.” (P32)

Deterrence emotions

A total of 182 DE occurred in our sample, which constitute 24% of all emotion codes and 54% of all threat emotions. Unlike LE, DE signal a threat while users simultaneously perceive that they have control over expected consequences. Fear, which is experienced in the presence or threat of danger, was coded 82 times and accounts for 45% of DE. Participants mostly fear “job loss” resulting from the implementation of organizational PVAs; they also fear “being tracked” and spied on by a PVA, and that their “privacy” and “data security” could be jeopardized.

“I get the feeling that I am completely under surveillance and being scrutinized in everything I do, with allusions that it could be done better – and actually that I can be replaced by the PVA that suggests how I should be doing things anyway.” (P02)

This statement articulates both fear of total surveillance by the PVA and fear of being made redundant by it.

Fear is a very strong emotion; worry, by comparison, is a milder form of fear, an uneasy state of mind prompted by anticipated trouble. Worry occurred 98 times in the sample, accounting for 54% of DE. It mostly concerned “responsibility” in case PVA use were to produce “bad decisions” or “poor execution” of tasks, but it also arose around the topics that prompted fear. Further, participants were worried that they would

“have to give up the human factor, which is hard for me and I also don’t think the company would want that.” (P07)

Additionally, discrimination and respect seemed to be of concern to participants.

“I imagine it being hard for the PVA to implement respect and integrity. I wouldn’t even know how [the PVA] can implement it. Since these are soft skills which are not measurable, this might be hard.” (P08)

Distress, a state of great suffering, was coded only twice (1% of DE). One of the two participants concerned found it “very upsetting” that a PVA could listen to all their conversations (P36). The other went even further, stating that

“if there ever would be such PVAs, I do not want to be alive anymore – I find this a very creepy notion.” (P44)
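The category shares reported in this section are simple proportions of coded segments. As a minimal sketch, assuming the per-emotion counts given above are complete for the challenge, loss, and deterrence categories, they can be recomputed as follows. The dictionary layout and the function names `shares` and `category_totals` are hypothetical and illustrative only; they are not part of the coding procedure used in this study. Because the text rounds to whole percentages, individual figures may differ from the one-decimal values computed here by less than one percentage point.

```python
"""Recompute within-category shares from reported coded-segment counts."""

# Hypothetical data layout: coded segments per emotion, grouped by
# Beaudry and Pinsonneault's (2010) categories (achievement emotions omitted).
CODED_SEGMENTS: dict[str, dict[str, int]] = {
    "challenge": {"excitement": 58, "hope": 76, "anticipation": 114,
                  "playfulness": 7, "flow": 4},
    "loss": {"anger": 4, "dissatisfaction": 89, "frustration": 61},
    "deterrence": {"fear": 82, "worry": 98, "distress": 2},
}


def shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each key's share of the total, in percent, rounded to one decimal."""
    total = sum(counts.values())
    return {key: round(100 * n / total, 1) for key, n in counts.items()}


def category_totals(data: dict[str, dict[str, int]]) -> dict[str, int]:
    """Sum the coded segments within each category."""
    return {category: sum(counts.values()) for category, counts in data.items()}


if __name__ == "__main__":
    totals = category_totals(CODED_SEGMENTS)
    print(totals)  # {'challenge': 259, 'loss': 154, 'deterrence': 182}
    for category, counts in CODED_SEGMENTS.items():
        print(category, shares(counts))
    # Loss vs. deterrence emotions as shares of all threat emotions.
    threat = {name: totals[name] for name in ("loss", "deterrence")}
    print("threat", shares(threat))  # {'loss': 45.8, 'deterrence': 54.2}
```

Storing raw counts rather than percentages keeps rounding explicit and allows the shares to be recomputed if codes are later merged or recoded.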

Additional related concepts

During the open coding process, we identified several recurring themes that mostly appeared alongside coded emotions: anthropomorphism, privacy, trust in humans, and trust in PVAs. Table 5 shows their occurrence in relation to the coded emotions.

Anthropomorphism is the attribution of human characteristics to non-human objects (Epley et al., 2007). In our data, participants attributed human characteristics to the PVA 36 times. Anthropomorphism is not associated with any particular emotion; rather, it appears in conjunction with most emotions. Recurring themes here are “avatars” and the PVA recognizing and “reacting to moods.” Anthropomorphism and excitement appear together in statements such as

“I would find it cool if the PVA were something tangible, a type of avatar so that you have a virtual figure, so you don’t have to just talk to a screen, but rather to an animal or so.” (P12)

Anthropomorphism combined with anticipation appears in quotes such as

“but this is about the PVA recognizing what mood you are in, and that’s something it should be able to do.” (P15)

Privacy references occurred 78 times, mostly in conjunction with fear (24 times), worry (ten times), or frustration (nine times). Worried participants gave statements along these lines:

“And then data security topics – what about confidential information? Especially regarding the competition, the data shouldn’t be spread outside of the company. I have a critical opinion of this.” (P10)

Stronger sentiments came to light when fear and privacy concerns occurred together, leading participants to admit that they saw

“no guarantee that criminals or intelligence or regulatory agencies would not use the devices to wiretap.” (P14)

They reflected fearfully on what would happen if they were to find out that a PVA had been operating.

“And then it started. In that moment I already found it very freaky. I don’t even know how to explain it. In that moment, I was thinking: What did I say in the past half hour? What could this device already have recorded? Already completely paranoid.” (P13)

Participants also articulated trust in humans and trust in PVAs, at almost the same frequency. Trust in humans was expressed primarily in the context of dissatisfaction with the organizational PVA. Participants complained that the PVA was

“only able to reply to what it was programmed to do, which makes it so different from us humans and how we interact […] and this is why I don’t see it being fair, because fairness would have to be a function.” (P17)

Trust in humans was also implied when participants worried that all human care and emotionality would be lost through the use of organizational PVAs, as in

“the human component will be lacking, and it doesn’t know all these thousands of people” (P20)

and

“where emotions and humans are involved, things are more conflict-laden.” (P44)

Trust in the PVA, on the other hand, mostly co-occurred with hope. This suggests that participants are willing to trust an organizational PVA, although they remain unsure of the outcome. While trust in machines used to work differently from trust in humans, the two processes have become more similar with advances in machine learning and anthropomorphism (Zierau et al., 2020). In this context, participants expressed hope for potential PVA capabilities, often for standardized processes they deemed “easy” for the PVA, capabilities that do not exist yet but could be realized in the future:

“The ideal would be for the PVA to take over everything […] I would hand over the entire process.” (P30)

Discussion

In the following section, we discuss the results of our focus-group and interview study, especially regarding possible boundaries to be set for organizational PVAs based on expressed threat emotions. Further, we provide recommendations for action and guidance for organizational PVA implementation, as these emotions can set valuable cornerstones in organizational AI strategy.

First, we acknowledge that participants displayed a considerable number of opportunity-related emotions. The AE presented in our results focused largely on anticipated features or functionalities that could make participants’ work easier and more convenient without requiring a high-performance PVA. Such PVAs would hardly tap into the full potential of AI and would not enter domains that have remained exclusive to humans (Schuetz & Venkatesh, 2020). The 27 participants who mentioned experiencing relief mostly focused on “non-complex/simple tasks” that can be “time-consuming”:

“I would expect a PVA to do exactly as expected and not to decide, analyze, and interpret something independently.” (P16)

and

“I think PVAs can be a very sensible support, especially when they relieve people from routine or very standardized tasks so that they can then focus on the real challenges at work.” (P30)

These findings suggest that discussions of AI completely taking over the workplace are premature: participants clearly direct us toward framing PVAs as taking over tasks, not entire jobs (Sako, 2020). This framing comes with a high degree of skepticism toward PVA use for critical and non-trivial tasks and processes, which frequently stems from LE, mainly dissatisfaction and frustration. In all, 23 participants expressed concern about the PVA lacking desired functionalities, and 11 were concerned about PVAs being prone to errors. Dissatisfaction and frustration can be avoided only if (future) organizational PVAs are seamlessly integrated and work with very few errors (Luger & Sellen, 2016). PVAs should therefore visibly generate added value for users without blurring the clear distinction between human and AI-system capabilities (Schuetz & Venkatesh, 2020). In this way, organizations can set clear boundaries, avoid a total loss of control, and address the fear that future generations might become unduly dependent on the PVA (Reis et al., 2020).

LE involve a perceived inability to control expected consequences in the secondary appraisal, and they also appear connected to trust in humans, owing to a lack of trust in PVAs. Trust is associated with taking risks without controlling the other party (Mayer et al., 1995), so it is plausible that LE occur when employees lack trust in PVAs. Trust building is a dynamic process, and continued trust depends not only on the PVA’s performance but also on its purpose (Siau & Wang, 2018). Creating choice opportunities and providing instrumental contingency (Ly et al., 2019) can increase perceived control, which would lead to fewer LE, coinciding with greater trust in PVAs. Fostering such trust is therefore particularly important for any organization attempting to implement an organizational PVA, and it can also reveal where AI strategies reach their boundaries. A lack of clarity about job replacement and displacement through PVAs leads to distrust and hampers continuous trust development (Siau & Wang, 2018):

“If you have many repetitive tasks at work, you might have greater fear of being replaced. And eventually, it will affect the entire company if certain employee groups feel threatened regarding their job security.” (P28)

This can be mitigated only by involving various stakeholders with distinct perspectives and expertise in developing and using AI-based technologies, even if this heterogeneity creates obstacles to communication among stakeholders and with decision-makers (Asatiani et al., 2020).

Privacy, or the lack thereof, is strongly associated with the DE fear and worry. This is consistent with current research on new technologies and privacy (Fox & Royne, 2018; Uchidiuno et al., 2018; Xu & Gupta, 2009). However, participants displaying fear or worry often referred to a lack of perceived control over expected consequences (23 participants mentioned this), even though DE, by definition, involve perceived control. From this we conclude that emotions associated with privacy can be blurry, and the distinction between LE and DE becomes less fixed. Nonetheless, the connection between threat emotions and privacy issues remains clear in the data. To mitigate these issues and find the right boundary for PVA involvement, the core principles of applied ethics, namely respect for autonomy, beneficence, and justice, are helpful (Canca, 2020). Organizational decision-makers must balance the different ethically permissible options, especially by visibly addressing their workforce’s concerns and fears regarding loss of autonomy and privacy while offering prevention or mitigation strategies. For example, data could be anonymized, even partially, until employees experience fewer threat emotions (Schomakers et al., 2020).

Current research suggests that anthropomorphism or anthropomorphic design of PVAs generally triggers a positive emotional response (Adam et al., 2020; Moussawi et al., 2020). Our results, however, show an ambiguous response. While some participants see an opportunity in human-like PVA features, others express fear that can be attributed to the uncanny valley effect (Mori et al., 2012), in which near-human likeness evokes eeriness and can thus increase DE.

“I don’t need the PVA to talk to me like an actual human being, and I am not sure I would want that. I could probably get used to it but I still find the idea strange.” (P24)

Additionally, several participants repeatedly stressed that complete transparency mattered to them: they wanted to know whether they were communicating with, or receiving output from, the PVA or a colleague, so that they could adjust their reactions accordingly.

“I would lack clear boundaries and get an uneasy feeling if I didn’t know whether the e-mail was actually written and sent by my colleague, or whether the PVA did it without the colleague even knowing what has been sent on their behalf.” (P31)

This constitutes another boundary for PVA design and implementation. Still, it cannot be viewed in isolation from privacy and trust, given Zarifis et al.’s (2021) finding that trust is lower and privacy concerns are higher when users can clearly recognize AI.

To summarize, organizations should limit PVA implementation to tasks that their workforce chooses, and is relieved, to pass on to an AI-based technology. By introducing PVAs carefully and transparently and taking DE into account, they can assuage fear and foster trust regarding job security and stakeholder involvement.

Regarding theoretical implications, we found that transparency about interacting with AI is perceived ambiguously and requires deeper investigation. Further, we showed that Beaudry and Pinsonneault’s (2010) framework for classifying emotions not only constitutes a stable basis for investigating emotions regarding organizational AI but also, through its underlying appraisal theories, offers a foundation for investigating and explaining the basic emotions employees reveal when confronted with having to let AI take over.

Conclusion

This paper has provided a first look at the emotions evoked by the potential use of AI-based organizational PVAs. We combined insights gained from ten focus-group discussions and 45 individual interviews to reveal, analyze, and draw implications regarding boundaries for AI-based technologies. Further, we have made recommendations for action for organizations planning to implement a PVA. We thereby contribute to the research stream on emotions and technologies and open up the discussion on human emotions toward AI.

Our results are subject to limitations that suggest avenues for further research in this promising research stream. We suggest expanding the sample to additional demographic groups, since in our project all participants had completed or were working toward university degrees in business administration or management information systems. Adding participants with no academic background might reveal more application scenarios and further causes of resistance, especially regarding job loss and being replaced by an organizational PVA. Additionally, we found some promising co-occurrences of emotions and related concepts that could be tested empirically through quantitative research. Future qualitative research with open coding could add further related concepts, especially concepts derived from ethics. Future research could also base the analysis on different emotion theories and frameworks. We believe that applying the framework for classifying emotions by Beaudry and Pinsonneault (2010) delivered promising insights regarding organizational PVA use, but other theories and models that categorize emotions in a more fine-grained manner could refine the results. We consider emotion research on the organizational use of AI-based technologies to be still in its infancy, yet it offers valuable insights and vast avenues for future research.

References

Adam, M., Wessel, M., & Benlian, A. (2020). AI-based chatbots in customer service and their effects on user compliance. Electronic Markets, 31(2). https://doi.org/10.1007/s12525-020-00414-7

Asatiani, A., Malo, P., Nagbøl, P. R., Penttinen, E., Rinta-Kahila, T., & Salovaara, A. (2020). Challenges of explaining the behavior of black-box AI systems. MIS Quarterly Executive, 19(4). Retrieved from https://aisel.aisnet.org/cgi/viewcontent.cgi?article=1488&context=misqe

Bagozzi, R. P., Gopinath, M., & Nyer, P. U. (1999). The role of emotions in marketing. Journal of the Academy of Marketing Science, 27(2), 184–206. https://doi.org/10.1177/0092070399272005

Balcar, K. (2011). Trends in studying emotions.

Barriball, K. L., & While, A. (1994). Collecting data using a semi-structured interview: A discussion paper. Journal of Advanced Nursing, 19(2), 328–335. https://doi.org/10.1111/j.1365-2648.1994.tb01088.x

Beaudry, A., & Pinsonneault, A. (2010). The other side of acceptance: Studying the direct and indirect effects of emotions on information technology use. MIS Quarterly, 34(4), 689. https://doi.org/10.2307/25750701

Bhattacherjee, A. (2012). Social science research: Principles, methods, and practices (2nd ed.). Anol Bhattacherjee; Open Textbook Library; Scholar Commons, University of South Florida.

Burton, N., & Gaskin, J. (2019). “Thank you, Siri”: Politeness and intelligent digital assistants. Proceedings of the Americas Conference on Information Systems (AMCIS) 2019.

Canca, C. (2020). Operationalizing AI ethics principles. Communications of the ACM, 63(12), 18–21. https://doi.org/10.1145/3430368

Chaplin, J. P., & Krawiec, T. S. (1979). Systems and theories of psychology (4th ed.). New York.

Cheng, X., Su, L., Luo, X., Benitez, J., & Cai, S. (2021). The good, the bad, and the ugly: Impact of analytics and artificial intelligence-enabled personal information collection on privacy and participation in ridesharing. European Journal of Information Systems, 1–25. https://doi.org/10.1080/0960085X.2020.1869508

Cho, E., Sundar, S. S., Abdullah, S., & Motalebi, N. (2020). Will deleting history make Alexa more trustworthy? In R. Bernhaupt (Ed.), Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1–13). Association for Computing Machinery.

Chung, H., Park, J., & Lee, S. (2017). Digital forensic approaches for Amazon Alexa ecosystem. Digital Investigation, 22, 15–25. https://doi.org/10.1016/j.diin.2017.06.010

Csikszentmihalyi, M. (1992). Optimal experience: Psychological studies of flow in consciousness. Cambridge University Press.

Dale, R. (2019). NLP commercialisation in the last 25 years. Natural Language Engineering, 25(3), 419–426. https://doi.org/10.1017/S1351324919000135

Delle Fave, A., Brdar, I., Freire, T., Vella-Brodrick, D., & Wissing, M. P. (2011). The eudaimonic and hedonic components of happiness: Qualitative and quantitative findings. Social Indicators Research, 100(2), 185–207. https://doi.org/10.1007/s11205-010-9632-5

Denning, P. J., & Denning, D. E. (2020). Dilemmas of artificial intelligence. Communications of the ACM, 63(3), 22–24. https://doi.org/10.1145/3379920

Diederich, S., Brendel, A. B., & Kolbe, L. M. (2019). On conversational agents in information systems research: analyzing the past to guide future work. Proceedings of the Internationale Tagung Wirtschaftsinformatik 2019.

Easwara Moorthy, A., & Vu, K.-P. L. (2014). Voice Activated Personal Assistant: Acceptability of Use in the Public Space. In D. Hutchison, T. Kanade, & J. Kittler (Eds.), Lecture Notes in Computer Science / Information Systems and Applications, Incl. Internet/Web, and HCI: v.8522. Human Interface and the Management of Information. Information and Knowledge in Applications and Services. 16th International Conference, HCI International 2014, Heraklion, Crete, Greece, June 22–27, 2014. Proceedings, Part II (pp. 324–334). Springer International Publishing.

Ehrari, H., Ulrich, F., & Andersen, H. B. (2020). Concerns and trade-offs in information technology acceptance: The balance between the requirement for privacy and the desire for safety. Communications of the Association for Information Systems, 47. Retrieved from https://aisel.aisnet.org/cgi/viewcontent.cgi?article=4221&context=cais

Eldh, A. C., Årestedt, L., & Berterö, C. (2020). Quotations in qualitative studies: Reflections on constituents, custom, and purpose. International Journal of Qualitative Methods, 19, 1–6. https://doi.org/10.1177/1609406920969268

Elfenbein, H. A. (2007). Emotion in organizations: A review in stages. Institute for Research on Labor and Employment, Working Paper Series. Retrieved from Institute of Industrial Relations, UC Berkeley website: https://EconPapers.repec.org/RePEc:cdl:indrel:qt2bn0n9mv

Epley, N., Waytz, A., & Cacioppo, J. T. (2007). On seeing human: A three-factor theory of anthropomorphism. Psychological Review, 114(4), 864–886. https://doi.org/10.1037/0033-295X.114.4.864

Feine, J., Morana, S., & Maedche, A. (2019). Designing a chatbot social cue configuration system. Proceedings of the International Conference on Information Systems (ICIS) 2019.

Fox, A. K., & Royne, M. B. (2018). Private information in a social world: Assessing consumers’ fear and understanding social media privacy. Journal of Marketing Theory and Practice, 26(1–2), 72–89. https://doi.org/10.1080/10696679.2017.1389242

Gursoy, D., Chi, O. H., Lu, L., & Nunkoo, R. (2019). Consumers’ acceptance of artificially intelligent (AI) device use in service delivery. International Journal of Information Management, 49, 157–169. https://doi.org/10.1016/j.ijinfomgt.2019.03.008

Heitmann, M., Lehmann, D. R., & Herrmann, A. (2007). Choice goal attainment and decision and consumption satisfaction. Journal of Marketing Research, 44(2), 234–250. Retrieved from https://www.alexandria.unisg.ch/28676/

Hyvärinen, H., & Beck, R. (2018). Emotions Trump facts: The role of emotions in on social media: A literature review. In T. Bui (Ed.), Proceedings of the 51st Hawaii International Conference on System Sciences. Hawaii International Conference on System Sciences.

Jöhnk, J., Weißert, M., & Wyrtki, K. (2020). Ready or not, AI comes— an interview study of organizational AI readiness factors. Business & Information Systems Engineering. https://doi.org/10.1007/s12599-020-00676-7

Kang, Y. L., Nah, F., & Tan, A. H. (2012). Investigating intelligent agents in a 3D virtual world. Proceedings of the International Conference on Information Systems (ICIS) 2012.

Kapoor, A., Burleson, W., & Picard, R. W. (2007). Automatic prediction of frustration. International Journal of Human-Computer Studies, 65(8), 724–736. https://doi.org/10.1016/j.ijhcs.2007.02.003

Kay, R. H., & Loverock, S. (2008). Assessing emotions related to learning new software: The computer emotion scale. Computers in Human Behavior, 24(4), 1605–1623. https://doi.org/10.1016/j.chb.2007.06.002

Kleinginna, P. R., & Kleinginna, A. M. (1981). A categorized list of emotion definitions, with suggestions for a consensual definition. Motivation and Emotion, 5(4), 345–379. https://doi.org/10.1007/BF00992553

Kligyte, V., Connelly, S., Thiel, C., & Devenport, L. (2013). The influence of anger, fear, and emotion regulation on ethical decision making. Human Performance, 26(4), 297–326. https://doi.org/10.1080/08959285.2013.814655

Kowalska, M., & Wróbel, M. (2017). Basic emotions. In V. Zeigler-Hill & T. K. Shackelford (Eds.), Encyclopedia of personality and individual differences (pp. 1–6). Springer International Publishing.

Laumer, S., & Eckhardt, A. (2010). Why do people reject technologies?: Towards an understanding of resistance to IT-induced organizational change. International Conference on Information Systems. Retrieved from https://core.ac.uk/download/pdf/301349705.pdf

Lazarus, R. S., & Folkman, S. (1984). Stress, appraisal, and coping. Springer.

Lee, C. J., & Andrade, E. B. (2015). Fear, excitement, and financial risk-taking. Cognition & Emotion, 29(1), 178–187. https://doi.org/10.1080/02699931.2014.898611

Leeper, R. W. (1948). A motivational theory of emotion to replace emotion as disorganized response. Psychological Review, 55(1), 5–21. https://doi.org/10.1037/h0061922

Lentzsch, C., Shah, S. J., Andow, B., Degeling, M., Das, A., & Enck, W. (2021). Hey Alexa, is this skill safe?: Taking a closer look at the Alexa skill ecosystem. Proceedings 2021 Network and Distributed System Security Symposium. Internet Society.

Lewins, A., & Silver, C. (Eds.). (2014). Using software in qualitative research: A step-by-step guide (2nd ed.). SAGE.

Leyer, M., & Schneider, S. (2019). Me, you or AI?: How do we feel about delegation. Proceedings of the 27th European Conference on Information Systems (ECIS).

Liang, H., & Xue, Y. (2009). Avoidance of information technology threats: A theoretical perspective. MIS Quarterly, 33(1), 71. https://doi.org/10.2307/20650279

Liao, Y., Vitak, J., Kumar, P., Zimmer, M., & Kritikos, K. (2019). Understanding the role of privacy and trust in intelligent personal assistant adoption. In N. G. Taylor, C. Christian-Lamb, & M. H. Martin (Eds.), Lecture Notes in Computer Science. Information in contemporary society. 14th international conference, iConference 2019, Washington, DC, USA, March 31–April 3, 2019: Proceedings (pp. 102–113). Springer International Publishing.

Loebbecke, C., El Sawy, O. A., Kankanhalli, A., Markus, M. L., Te’eni, D., Wrobel, S., Obeng-Antwi, A., & Rydén, P. (2020). Artificial intelligence meets IS researchers: Can it replace us? Communications of the Association for Information Systems, 47. Retrieved from https://aisel.aisnet.org/cgi/viewcontent.cgi?article=4224&context=cais

Lombard, M., Snyder-Duch, J., & Bracken, C. C. (2002). Content analysis in mass communication: Assessment and reporting of intercoder reliability. Human Communication Research, 28(4), 587–604. https://doi.org/10.1111/j.1468-2958.2002.tb00826.x

Lu, Y., Lu, Y., & Wang, B. (2012). Effects of dissatisfaction on customer repurchase decisions in e-commerce: An emotion-based perspective. Journal of Electronic Commerce Research, 13, 224.

Luger, E., & Sellen, A. (2016). “Like having a really bad PA”: The gulf between user expectation and experience of conversational agents. In J. Kaye, A. Druin, C. Lampe, D. Morris, & J. P. Hourcade (Eds.), Proceedings of the 34th Annual CHI Conference on Human Factors in Computing Systems (pp. 5286–5297). Association for Computing Machinery.

Ly, V., Wang, K. S., Bhanji, J., & Delgado, M. R. (2019). A reward-based framework of perceived control. Frontiers in Neuroscience, 13, 65. https://doi.org/10.3389/fnins.2019.00065

Maedche, A., Legner, C., Benlian, A., Berger, B., Gimpel, H., Hess, T., Hinz, O., Morana, S., & Söllner, M. (2019). AI-based digital assistants: Opportunities, threats, and research perspectives. Business & Information Systems Engineering, 61, 535–544. https://doi.org/10.1007/s12599-019-00600-8

Mayer, R. C., Davis, J. H., & Schoorman, F. D. (1995). An integrative model of organizational trust. The Academy of Management Review, 20(3), 709. https://doi.org/10.2307/258792

Mayer, A.-S., Strich, F., & Fiedler, M. (2020). Unintended consequences of introducing AI systems for decision making. MIS Quarterly Executive, 19(4). Retrieved from https://aisel.aisnet.org/cgi/viewcontent.cgi?article=1487&context=misqe

McTear, M. F. (2017). The rise of the conversational interface: A new kid on the block? In J. F. Quesada, F.-J. Martín Mateos, & T. López Soto (Eds.), Future and Emerging Trends in Language Technology. Machine Learning and Big Data (pp. 38–49). Springer International Publishing.

Merriam-Webster. (2005). The Merriam-Webster thesaurus Merriam-Webster’s everyday language reference set. Merriam-Webster.

Meyer von Wolff, R., Hobert, S., & Schumann, M. (2019). How may I help you? – State of the art and open research questions for chatbots at the digital workplace. In T. Bui (Ed.), Proceedings of the 52nd Hawaii International Conference on System Sciences. Hawaii International Conference on System Sciences.

Moors, A., Ellsworth, P. C., Scherer, K. R., & Frijda, N. H. (2013). Appraisal theories of emotion: State of the art and future development. Emotion Review, 5(2), 119–124. https://doi.org/10.1177/1754073912468165

Mori, M., MacDorman, K., & Kageki, N. (2012). The uncanny valley [from the field]. IEEE Robotics & Automation Magazine, 19(2), 98–100. https://doi.org/10.1109/MRA.2012.2192811

Moussawi, S., Koufaris, M., & Benbunan-Fich, R. (2020). How perceptions of intelligence and anthropomorphism affect adoption of personal intelligent agents. Electronic Markets, 31(2). https://doi.org/10.1007/s12525-020-00411-w

Mowrer, O. H. (1960). Learning theory and behavior. John Wiley & Sons Inc.

Myers, M. D., & Newman, M. (2007). The qualitative interview in IS research: Examining the craft. Information and Organization, 17(1), 2–26. https://doi.org/10.1016/j.infoandorg.2006.11.001

Neuendorf, K. A. (2002). The content analysis guidebook. SAGE.

Neuner-Jehle, D. (2019). DEKRA Arbeitsmarkt-Report 2019. Stuttgart, Germany. Retrieved from https://www.dekra-akademie.de/media/dekra-arbeitsmarkt-report-2019.pdf

Ortony, A., & Turner, T. J. (1990). What’s basic about basic emotions? Psychological Review, 97(3), 315–331. https://doi.org/10.1037/0033-295x.97.3.315

Otoo, B. A., & Salam, A. F. (2018). Mediating Effect of Intelligent Voice Assistant (IVA), User Experience and Effective Use on Service Quality and Service Satisfaction and Loyalty. Proceedings of the International Conference on Information Systems (ICIS) 2018.

Paech, J., Schindler, I., & Fagundes, C. P. (2016). Mastery matters most: How mastery and positive relations link attachment avoidance and anxiety to negative emotions. Cognition & Emotion, 30(5), 1027–1036. https://doi.org/10.1080/02699931.2015.1039933

Pappas, I. O., Kourouthanassis, P. E., Giannakos, M. N., & Chrissikopoulos, V. (2014). Shiny happy people buying: The role of emotions on personalized e-shopping. Electronic Markets, 24(3), 193–206. https://doi.org/10.1007/s12525-014-0153-y

Plutchik, R. (Ed.). (1980). Emotion: Theory, research, and experience [2. Ed]. Acad. Press.

Rabiee, F. (2004). Focus-group interview and data analysis. Proceedings of the Nutrition Society, 63(4), 655–660. https://doi.org/10.1079/pns2004399

Radziwill, N. M., & Benton, M. C. (2017). Evaluating quality of chatbots and intelligent conversational agents. Retrieved from http://arxiv.org/pdf/1704.04579v1

Reis, L., Maier, C., Mattke, J., Creutzenberg, M., & Weitzel, T. (2020). Addressing user resistance would have prevented a healthcare AI project failure. MIS Quarterly Executive, 19(4). Retrieved from https://aisel.aisnet.org/cgi/viewcontent.cgi?article=1489&context=misqe

Rice, R. E., & Love, G. (1987). Electronic emotion. Communication Research, 14(1), 85–108. https://doi.org/10.1177/009365087014001005

Richins, M. L. (1997). Measuring emotions in the consumption experience. Journal of Consumer Research, 24(2), 127–146.

Rozin, P., Haidt, J., & McCauley, C. (2009). Disgust: The body and soul emotion in the 21st century. In B. O. Olatunji & D. McKay (Eds.), Disgust and its disorders. Theory, assessment, and treatment implications (1st ed., pp. 9–29). American Psychological Association.

Russell, J. A. (1980). A circumplex model of affect. Journal of Personality and Social Psychology, 39(6), 1161–1178. https://doi.org/10.1037/h0077714

Ryan, J., & Snyder, C. (2004). Intelligent agents and information resource management. Proceedings of the Americas Conference on Information Systems (AMCIS) 2004.

Sako, M. (2020). Artificial intelligence and the future of professional work. Communications of the ACM, 63(4), 25–27. https://doi.org/10.1145/3382743

Schomakers, E.-M., Lidynia, C., & Ziefle, M. (2020). All of me? Users’ preferences for privacy-preserving data markets and the importance of anonymity. Electronic Markets, 30(3), 649–665. https://doi.org/10.1007/s12525-020-00404-9

Schuetz, S., & Venkatesh, V. (2020). Research perspectives: The rise of human machines: How cognitive computing systems challenge assumptions of user-system interaction. Journal of the Association for Information Systems, 21(2), 460–482. https://doi.org/10.17705/1jais.00608

Seeger, A.-M., Pfeiffer, J., & Heinzl, A. (2018). Designing anthropomorphic conversational agents: Development and empirical evaluation of a design framework. Proceedings of the International Conference on Information Systems (ICIS) 2018.

Shen, X. S., Chick, G., & Zinn, H. (2014). Playfulness in adulthood as a personality trait. Journal of Leisure Research, 46(1), 58–83. https://doi.org/10.1080/00222216.2014.11950313

Siau, K., & Wang, W. (2018). Building trust in artificial intelligence, machine learning, and robotics. Cutter Business Technology Journal, 31(2). Retrieved from https://www.cutter.com/article/building-trust-artificial-intelligence-machine-learning-and-robotics-498981

Siddike, M. A. K., Spohrer, J., Demirkan, H., & Kohda, Y. (2018). People’s interactions with cognitive assistants for enhanced performances. In T. Bui (Ed.), Proceedings of the 51st Hawaii International Conference on System Sciences (HICSS) 2018.

Snyder, C. R., Harris, C., Anderson, J. R., Holleran, S. A., Irving, L. M., Sigmon, S. T., Yoshinobu, L., Gibb, J., Langelle, C., & Harney, P. (1991). The will and the ways: Development and validation of an individual-differences measure of hope. Journal of Personality and Social Psychology, 60(4), 570–585. https://doi.org/10.1037/0022-3514.60.4.570

Statista. (2020). Umsätze der wichtigsten Industriebranchen in Deutschland in den Jahren von 2017 bis 2019 [Revenues of the most important industrial sectors in Germany from 2017 to 2019]. Retrieved from https://de.statista.com/statistik/daten/studie/241480/umfrage/umsaetze-der-wichtigsten-industriebranchen-in-deutschland/

Stieglitz, S., Brachten, F., & Kissmer, T. (2018). Defining Bots in an Enterprise Context. Proceedings of the International Conference on Information Systems (ICIS) 2018.

Turing, A. M. (1950). Computing machinery and intelligence. Mind, LIX(236), 433–460. https://doi.org/10.1093/mind/LIX.236.433

Uchidiuno, J. O., Manweiler, J., & Weisz, J. D. (2018). Privacy and fear in the drone era. In R. Mandryk & M. Hancock (Eds.), Extended abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (pp. 1–6).

Vaccaro, M., & Waldo, J. (2019). The effects of mixing machine learning and human judgment. Communications of the ACM, 62(11), 104–110. https://doi.org/10.1145/3359338

van Duijvenvoorde, A. C. K., Huizenga, H. M., & Jansen, B. R. J. (2014). What is and what could have been: Experiencing regret and relief across childhood. Cognition & Emotion, 28(5), 926–935. https://doi.org/10.1080/02699931.2013.861800

von Krogh, G. (2018). Artificial intelligence in organizations: new opportunities for phenomenon-based theorizing. Academy of Management Discoveries, 4(4), 404–409. https://doi.org/10.5465/amd.2018.0084

Weizenbaum, J. (1966). ELIZA—a computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36–45. https://doi.org/10.1145/365153.365168

Winkler, R., Bittner, E., & Soellner, M. (2019). Alexa, Can you help me solve that problem? – Understanding the value of smart personal assistants as tutors for complex problem tasks. Proceedings of the Internationale Tagung Wirtschaftsinformatik 2019.

Wolf, C., Joye, D., Smith, T. E. C., & Fu, Y.-C. (Eds.). (2016). The SAGE handbook of survey methodology. SAGE reference.

Wuenderlich, N., & Paluch, S. (2017). A Nice and Friendly Chat with a Bot: User Perceptions of AI-Based Service Agents. Proceedings of the International Conference on Information Systems (ICIS) 2017.

Xu, H., & Gupta, S. (2009). The effects of privacy concerns and personal innovativeness on potential and experienced customers’ adoption of location-based services. Electronic Markets, 19(2–3), 137–149. https://doi.org/10.1007/s12525-009-0012-4

Zarifis, A., Kawalek, P., & Azadegan, A. (2021). Evaluating if trust and personal information privacy concerns are barriers to using health insurance that explicitly utilizes AI. Journal of Internet Commerce, 20(1), 66–83. https://doi.org/10.1080/15332861.2020.1832817

Zierau, N., Engel, C., Söllner, M., & Leimeister, J. M. (2020). Trust in smart personal assistants: A systematic literature review and development of a research agenda. In N. Gronau, M. Heine, K. Pousttchi, & H. Krasnova (Eds.), WI2020 Zentrale Tracks (pp. 99–114). GITO Verlag.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Responsible Editor: Alex Zarifis

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hornung, O., Smolnik, S. AI invading the workplace: negative emotions towards the organizational use of personal virtual assistants. Electron Markets 32, 123–138 (2022). https://doi.org/10.1007/s12525-021-00493-0

DOI: https://doi.org/10.1007/s12525-021-00493-0

Keywords

- Artificial intelligence

- Emotions

- Personal virtual assistants

- Interview study

- Technology aversion

- Appraisal theory