Abstract

Compared with traditional nadir observations, multi-angle hyperspectral remote sensing can obtain more spatial and spectral information and improve the inversion precision of structural information on the Earth's surface. However, processing multi-angle remote-sensing images presents new challenges. Because each angle is acquired under a different observation geometry, the spatial and spectral information differs considerably among the images of a multi-angle acquisition. Data from the Compact High-Resolution Imaging Spectrometer (CHRIS) on the Project for On-Board Autonomy (PROBA) must be pre-processed before the bidirectional reflectance distribution function (BRDF) can be extracted. Given the limitations of the pre-processing software currently available for CHRIS/PROBA images, and the lack of metadata and auxiliary schedule data for some regions, some CHRIS multi-angle images cannot be pre-processed correctly. In this study, to promote the application of multi-angle data, a formula for calculating key parameters from the in-orbit geometric imaging relationship is derived and used to design a multi-angle image processing flow comprising image flipping, bad-line repair, orthorectification and atmospheric correction that accounts for terrain effects. Test results indicate that the pre-processing method can quickly and effectively recover multi-angle hyperspectral information and obtain the spectral characteristics of multi-angle observations.



Introduction

Multi-angle hyperspectral sensors obtain spatial and spectral information simultaneously and, through remote-sensing interpretation and analysis, can also quickly provide three-dimensional (3D) structural information on surface features. However, remote-sensing imaging systems introduce a series of errors during data acquisition that reduce the quality and precision of the data. Before a remote-sensing image is interpreted or used for inversion, it should therefore be pre-processed to correct deformation and distortion and so reduce geometric and radiometric errors (Zhao, Zhang and Qin 2004).

Before the bidirectional reflectance distribution function (BRDF) is extracted from multi-angle remote-sensing images, two pre-processing problems must be addressed. First, because the images are acquired at different azimuth and zenith angles, projecting the 3D terrain onto a two-dimensional image plane produces large deformations, which makes it difficult to collect ground-control points. For remote-sensing inversion the registration error should be less than one pixel, which requires accurate registration of the multi-angle images. Second, compared with traditional nadir observations, atmospheric correction of multi-angle images must account for two factors: the non-Lambertian reflectance of the surface and the adjacency effect of non-vertical observations caused by atmospheric scattering (i.e., spatial mixing of radiance among nearby pixels).

Multi-angle sensors include spaceborne and airborne sensors. The Multi-angle Imaging SpectroRadiometer (MISR) on NASA's Terra Earth-observation satellite, built and managed by NASA's Jet Propulsion Laboratory, comprises nine charge-coupled device (CCD) cameras with four bands each. Each camera views at one fixed angle (0°, ±26.1°, ±45.6°, ±60° and ±70.5°). On the same satellite, the Moderate Resolution Imaging Spectroradiometer (MODIS) accumulates observations of a location at different viewing angles over multiple days, from which the BRDF can be derived. The French and Japanese space agencies cooperated to develop the Polarization and Directionality of the Earth's Reflectances (POLDER) instrument, which records the multi-angle reflectance of the same target as it passes over. The European Space Agency's Project for On-Board Autonomy (PROBA) satellite, launched in 2001, carries the Compact High-Resolution Imaging Spectrometer (CHRIS), which acquires images at five fly-by zenith angles (FZAs) preset before launch (FZA = 0°, ±36°, ±55°) (Dong, Zhang and Liu 2006). Airborne multi-angle sensors such as the Airborne MISR have obtained imagery similar to that of MISR (Garay and Mazzoni 2004). The Chinese Academy of Forestry's LiDAR, CCD and Hyperspectral (LiCHy) system combines a hyperspectral sensor with multi-angle imaging capability, a high-spatial-resolution CCD camera and a laser radar (Pang, Li and Ju 2016).

CHRIS/PROBA is one of the few spaceborne sensors that acquire hyperspectral and multi-angle imagery of the Earth's surface at the same time. It provides a wide spectral range and supports the retrieval of varied biophysical and biochemical parameters. CHRIS/PROBA has the unique advantage of imaging the same location from five different directions during a single overpass, and thus plays an important role in estimating forest canopy structure and leaf area index. Several studies have discussed pre-processing methods for CHRIS/PROBA multi-angle images. For example, the CHRIS-Box module of the Basic European Remote Sensing and Envisat Advanced Along-Track Scanning Radiometer and MERIS Toolbox (BEAM), an open-source toolkit provided by the European Space Agency (ESA), can be used to process CHRIS images. It computes the geographic coordinates of each pixel on the Earth's surface from the satellite position, velocity and attitude data using a satellite imaging model, which requires a metadata file containing the satellite ephemeris. However, the metadata of some regional CHRIS images cannot be obtained, and the auxiliary schedule that CHRIS-Box requires is not updated weekly for some regions, so some images cannot be pre-processed. Furthermore, the atmospheric correction function of CHRIS-Box does not account for terrain effects, and atmospheric or terrain correction software is generally not free. For example, ATCOR (Atmospheric and Topographic Correction) can load a digital elevation model (DEM) for terrain correction, but as commercial software it greatly limits the popularization and application of multi-angle remote-sensing imagery (Schläpfer and Richter 2014).

In this study, a formula was derived from the in-orbit geometric imaging relationship, and the key CHRIS attitude parameters were calculated. CHRIS multi-angle images of mountainous areas were used to evaluate the performance of the proposed method. The images were processed through rotation, noise removal, bad-line repair, orthorectification and atmospheric correction. The results showed that orthorectification accuracy improved compared with traditional polynomial geometric correction, and that BRDF characteristics could be obtained from the CHRIS multi-angle images.

Site Description and Datasets

Study Area and Auxiliary Data

This study was conducted at two sites (Fig. 1). The first is the Da Ye Kou forest hydrological experimental area in the south of the Qilian Mountains, Gansu Province, China. The natural forest consists mainly of Qinghai spruce, and the background is covered by grass and moss. The site lies at 38° 29′–38° 35′N, 100° 11′–100° 21′E, at elevations of 2700–3000 m; the terrain is rugged and mountainous with steep slopes (40° on average), resulting in high image distortion. The second study area is the Liangshui National Nature Reserve, Yichun City, Heilongjiang Province, China, at 47° 6′–47° 16′N, 128° 17′–128° 37′E and elevations of 280–707 m; the terrain is rugged and mountainous, with an average slope of 15°.

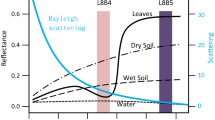

To reduce the geometric and radiometric distortion caused by topographic relief, a 1 m resolution digital elevation model (DEM) derived from lidar points acquired with a Riegl LMS-Q560 laser scanner was used, and Enhanced Thematic Mapper Plus (ETM+) images (multispectral bands and the 15 m panchromatic band) were used to collect ground-control points. Field spectra of several land-surface types were collected with an ASD Field Spectrometer in nadir configuration under clear-sky conditions. All spectra were converted to absolute reflectance using reference measurements over a Spectralon panel with known spectral properties. All auxiliary data were obtained from the Heihe Watershed Allied Telemetry Experimental Research dataset (http://data.tpdc.ac.cn/zh-hans/special/4e7b97a6-ee1f-4b51-83e5-04a100993973/).
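The panel-referencing step amounts to a per-wavelength ratio scaled by the panel's calibrated reflectance. A minimal sketch, assuming target and panel readings recorded under the same illumination and instrument settings and on the same wavelength grid; the function name is illustrative and not part of the ASD software:

```python
import numpy as np


def to_absolute_reflectance(target_signal, panel_signal, panel_reflectance):
    """Absolute reflectance = (target / panel) * calibrated panel reflectance.

    All inputs are sampled on the same wavelength grid; panel_reflectance
    comes from the Spectralon panel's calibration data.
    """
    target = np.asarray(target_signal, dtype=float)
    panel = np.asarray(panel_signal, dtype=float)
    return target / panel * np.asarray(panel_reflectance, dtype=float)


# Two wavelengths: the target returns half and 80% of the panel signal
print(to_absolute_reflectance([500.0, 800.0], [1000.0, 1000.0], [0.99, 0.98]))
```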

CHRIS Data

CHRIS/PROBA images (18 m × 18 m pixels) were acquired in Land Mode 3 on 13 June 2004 (Da Ye Kou study area) and 19 May 2012 (Liang Shui study area). CHRIS data, including metadata, are stored in the Hierarchical Data Format (HDF). Each image file contains the metadata, their indices and the raw pixel radiances in units of μW·nm⁻¹·m⁻²·sr⁻¹, stored band-sequentially. The metadata include the sensor type, satellite altitude, observation target name and location, fly-by time and sensor information. The solar zenith and azimuth angles are calculated from the imaging time and ground-target location. To minimize imaging time, CHRIS uses a single camera operating in 'push-broom' mode to capture the five images from different angles along the orbit. The second and fourth images (generally ±36°) are upside down relative to the other images (Wang 2009).
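FLAASH expects input radiance in μW·cm⁻²·sr⁻¹·nm⁻¹, whereas CHRIS stores μW·nm⁻¹·m⁻²·sr⁻¹. Because 1 m² = 10⁴ cm², the conversion is a division by 10⁴ (equivalently, a scale factor supplied to FLAASH). A minimal sketch; the function name is hypothetical:

```python
import numpy as np

# 1 m^2 = 1e4 cm^2, so 1 uW/(nm m^2 sr) = 1e-4 uW/(cm^2 sr nm)
M2_TO_CM2 = 1.0e4


def chris_to_flaash_units(radiance_uw_nm_m2_sr):
    """Convert CHRIS radiance (uW nm^-1 m^-2 sr^-1) to uW cm^-2 sr^-1 nm^-1."""
    return np.asarray(radiance_uw_nm_m2_sr, dtype=np.float32) / M2_TO_CM2
```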

HDFExplorer, a Java-based HDF viewer developed by the National Center for Supercomputing Applications, is a professional tool for reading CHRIS metadata; however, it could not open the CHRIS images themselves, so ENVI was used to process them (Cutter 2006). Table 1 presents the CHRIS metadata and the key angles for orthorectification in the study areas. The solar zenith and azimuth angles were 21° and 155° for the Da Ye Kou study area and 56° and 97° for the Liang Shui study area; these values were calculated from the imaging time and ground-target location. Figure 2 shows the CHRIS image acquisition and illumination geometry of the study area.
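The paper does not state which solar-position algorithm was used. As an illustration only, the sketch below computes solar zenith and azimuth from a UTC time and a location with NOAA's low-precision "General Solar Position" formulas, which are accurate to a fraction of a degree. The overpass time in the usage line is hypothetical; the actual fly-by time is in the CHRIS metadata.

```python
import math
from datetime import datetime, timezone


def solar_angles(when_utc, lat_deg, lon_deg):
    """Approximate solar zenith and azimuth (degrees) for a UTC time and location.

    NOAA low-precision formulas; azimuth is clockwise from north and
    longitude is positive east. when_utc must be expressed in UTC.
    """
    doy = when_utc.timetuple().tm_yday
    hour = when_utc.hour + when_utc.minute / 60 + when_utc.second / 3600
    g = 2 * math.pi / 365 * (doy - 1 + (hour - 12) / 24)  # fractional year (rad)
    # Equation of time (minutes) and solar declination (radians)
    eqtime = 229.18 * (0.000075 + 0.001868 * math.cos(g) - 0.032077 * math.sin(g)
                       - 0.014615 * math.cos(2 * g) - 0.040849 * math.sin(2 * g))
    decl = (0.006918 - 0.399912 * math.cos(g) + 0.070257 * math.sin(g)
            - 0.006758 * math.cos(2 * g) + 0.000907 * math.sin(2 * g)
            - 0.002697 * math.cos(3 * g) + 0.00148 * math.sin(3 * g))
    # True solar time (minutes) from UTC, then hour angle (radians)
    true_solar_min = hour * 60 + eqtime + 4 * lon_deg
    ha = math.radians(true_solar_min / 4 - 180)
    lat = math.radians(lat_deg)
    cos_zen = (math.sin(lat) * math.sin(decl)
               + math.cos(lat) * math.cos(decl) * math.cos(ha))
    zenith = math.degrees(math.acos(max(-1.0, min(1.0, cos_zen))))
    # Azimuth from south (positive toward west), shifted to clockwise-from-north
    az_south = math.atan2(math.sin(ha),
                          math.cos(ha) * math.sin(lat) - math.tan(decl) * math.cos(lat))
    azimuth = (math.degrees(az_south) + 180.0) % 360.0
    return zenith, azimuth


# Hypothetical 03:00 UTC overpass near the centre of the Da Ye Kou site
print(solar_angles(datetime(2004, 6, 13, 3, 0, tzinfo=timezone.utc), 38.53, 100.27))
```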

Method and Result

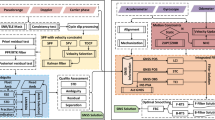

Figure 3 presents the technical flowchart of the methods applied in this paper. It summarizes the CHRIS image pre-processing steps and the comparison with field-measured spectra of three typical land-surface features.

Rotate and Remove Noise

Because they are scanned in the opposite direction, the ±36° images are flipped north–south relative to the first image. Before geometric correction, these images were rotated in ENVI and saved in the band-interleaved-by-line (BIL) format required by the Fast Line-of-sight Atmospheric Analysis of Spectral Hypercubes (FLAASH) atmospheric correction module. Because CHRIS operates in 'push-broom' mode, stripe noise arises for two reasons. First, differences in the optical properties and arrangement of the CCD elements give them different response functions; this stripe noise can be considered constant. Second, minor variations in the response functions of the CCD elements caused by thermal disturbances mean that part of the vertical banding noise is not constant (Dong et al. 2006). HDFclean, the official processing software provided by ESA, was used to repair bad lines and reduce stripe noise (Cutter 2006).
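The flip and the change of interleave are simple array operations. The sketch below assumes each image is held as a NumPy array ordered (bands, rows, cols), as read from the band-sequential file. The destriping function is a generic column moment-matching method shown for illustration; it is not the HDFclean algorithm used in the paper, and all function names are hypothetical.

```python
import numpy as np


def flip_north_south(cube):
    """Reverse the row order of a (bands, rows, cols) cube, e.g. for the ±36° images."""
    return cube[:, ::-1, :]


def bsq_to_bil(cube):
    """Reorder band-sequential (bands, rows, cols) data to BIL (rows, bands, cols)."""
    return np.ascontiguousarray(np.transpose(cube, (1, 0, 2)))


def destripe_columns(cube):
    """Column moment matching (illustrative, NOT the HDFclean algorithm).

    Assumes vertical stripes, one per detector column, in a scene whose
    column statistics would otherwise be similar. Each column is rescaled
    to the band's average column mean and standard deviation.
    """
    out = np.empty(cube.shape, dtype=np.float64)
    for b in range(cube.shape[0]):
        band = cube[b].astype(np.float64)
        col_mean = band.mean(axis=0)
        col_std = band.std(axis=0)
        target_mean, target_std = col_mean.mean(), col_std.mean()
        safe_std = np.where(col_std > 0, col_std, 1.0)  # flat columns: avoid /0
        out[b] = (band - col_mean) / safe_std * target_std + target_mean
    return out
```

Moment matching removes the constant stripe component; the time-varying part described above would need a more local method.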

Geometric Processing

Because of the attitude, altitude and velocity of the sensor platform, the Earth's rotation and the terrain relief, image pixels exhibited geometric distortions relative to the ground targets, such as compression, twisting, stretching and displacement. The proposed orthorectification relies on a parametric approach that accounts for the viewing geometry and the geometric distortions caused by the sensor, platform and topography, using ground-control points and a camera-image model. This approach can provide accurate, robust and consistent orthorectification.

The physical geometric model represents the path of light rays from a ground object to the sensor using sensor and platform data, and accurately simulates the imaging geometry. However, its solution process is complex, and some core orbit and sensor parameters cannot be obtained, which limits its application. The rational polynomial coefficient (RPC) model is therefore widely used instead; it approximates the rays from a ground location to the sensor focal plane with ratios of polynomials (Wang, Liu and Luo 2010; Liu, Zhang and Wang 2006). In its standard form the model is

\[
S = \frac{\text{Num}_{S}(X,Y,Z)}{\text{Den}_{S}(X,Y,Z)}, \qquad L = \frac{\text{Num}_{L}(X,Y,Z)}{\text{Den}_{L}(X,Y,Z)},
\]

where \({\text{Num}}_{S} \left( {X,Y,Z} \right)\), \({\text{Den}}_{S} \left( {X,Y,Z} \right)\), \({\text{Num}}_{L} \left( {X,Y,Z} \right)\) and \({\text{Den}}_{L} \left( {X,Y,Z} \right)\) are cubic polynomials:

\[
\begin{aligned}
\text{Num}_{S}(X,Y,Z) &= \sum_{i+j+k\le 3} a_{ijk}\,X^{i}Y^{j}Z^{k}, &
\text{Den}_{S}(X,Y,Z) &= \sum_{i+j+k\le 3} b_{ijk}\,X^{i}Y^{j}Z^{k},\\
\text{Num}_{L}(X,Y,Z) &= \sum_{i+j+k\le 3} c_{ijk}\,X^{i}Y^{j}Z^{k}, &
\text{Den}_{L}(X,Y,Z) &= \sum_{i+j+k\le 3} d_{ijk}\,X^{i}Y^{j}Z^{k},
\end{aligned}
\]

with non-negative integers \(i, j, k\), so that each polynomial has 20 coefficients. Here (X, Y, Z) are the normalized space coordinates of a ground point (X is latitude, Y is longitude and Z is altitude), and (S, L) are the normalized image pixel coordinates (sample, line); both object and image coordinates are normalized to the range [−1, 1]. The first-order terms represent distortions from the optical projection, the second-order terms represent distortions caused by the Earth's curvature, atmospheric refraction and lens distortion, and the third-order terms represent other unknown errors, such as camera shaking (Liu, Wang and Miao 2004; Zhang and Liu 2004).
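To make the ratio-of-cubics model S = Num_S/Den_S, L = Num_L/Den_L concrete, the sketch below projects one ground point to pixel coordinates. It assumes the 20-term RPC00B coefficient ordering common in vendor RPC files, with x, y, z standing for normalized latitude, longitude and height as in the text; the `RPC` container and function names are hypothetical, and real coefficients would come from the GCP-based computation.

```python
from dataclasses import dataclass

import numpy as np


def rpc_terms(x, y, z):
    """20 cubic monomials in RPC00B order; x = norm. latitude, y = norm. longitude, z = norm. height."""
    return np.array([
        1.0, y, x, z, y * x, y * z, x * z, y * y, x * x, z * z,
        x * y * z, y ** 3, y * x * x, y * z * z, y * y * x,
        x ** 3, x * z * z, y * y * z, x * x * z, z ** 3,
    ])


@dataclass
class RPC:
    line_num: np.ndarray  # 20 coefficients each
    line_den: np.ndarray
    samp_num: np.ndarray
    samp_den: np.ndarray
    lat_off: float
    lat_scale: float
    lon_off: float
    lon_scale: float
    h_off: float
    h_scale: float
    line_off: float
    line_scale: float
    samp_off: float
    samp_scale: float


def ground_to_image(rpc, lat, lon, h):
    """Project a ground point (degrees, metres) to (sample, line) pixel coordinates."""
    # Normalize object-space coordinates to roughly [-1, 1]
    t = rpc_terms((lat - rpc.lat_off) / rpc.lat_scale,
                  (lon - rpc.lon_off) / rpc.lon_scale,
                  (h - rpc.h_off) / rpc.h_scale)
    s_n = (t @ rpc.samp_num) / (t @ rpc.samp_den)  # S = Num_S / Den_S
    l_n = (t @ rpc.line_num) / (t @ rpc.line_den)  # L = Num_L / Den_L
    # De-normalize to pixel coordinates
    return (float(s_n * rpc.samp_scale + rpc.samp_off),
            float(l_n * rpc.line_scale + rpc.line_off))
```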

Because the test sites are situated in high, rugged mountainous terrain, the RPC model was used to orthorectify the images from all five viewing angles. The RPC model provides a precise orthorectification solution for multi-source sensors and can be implemented with commercial image-processing tools such as ENVI/Orthorectification (Ikonos 2001). It can mathematically describe the distortions arising from the platform (position, velocity and orientation), the sensor (viewing angles, instantaneous field of view and panoramic effects), the Earth (ellipsoid and relief) and the cartographic projection. RPCs are computed with a digital photogrammetry technique that uses the collinearity equation to construct the sensor geometry: the object point, the perspective center and the image point all lie on the same line in space. Such a model requires orbit and sensor information: the sensor type, focal length, principal point (x0, y0), X and Y pixel size of the CCD, geoid offset, and across- and along-track incidence angles. Information on the viewing geometries of the CHRIS images is presented in Table 2.
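For reference, the collinearity condition is commonly written as follows. The notation is the standard photogrammetric one rather than the paper's own, and here X, Y, Z denote Cartesian object-space coordinates rather than the latitude, longitude and height of the RPC model:

\[
x - x_0 = -f\,\frac{m_{11}(X - X_S) + m_{12}(Y - Y_S) + m_{13}(Z - Z_S)}{m_{31}(X - X_S) + m_{32}(Y - Y_S) + m_{33}(Z - Z_S)}, \qquad
y - y_0 = -f\,\frac{m_{21}(X - X_S) + m_{22}(Y - Y_S) + m_{23}(Z - Z_S)}{m_{31}(X - X_S) + m_{32}(Y - Y_S) + m_{33}(Z - Z_S)},
\]

where (x, y) are image coordinates, (x₀, y₀) is the principal point, f is the focal length, (X_S, Y_S, Z_S) is the perspective center and m_ij are the elements of the rotation matrix built from the exterior-orientation angles. Sign and rotation-matrix conventions vary between texts.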

RPC orthorectification requires heights above the ellipsoid. For DEMs referenced to mean sea level, accurate orthorectification requires a single geoid offset for the geographic area covered by the image, which is normally obtained with a geoid height calculator. The along- and across-track incidence angles shown in Table 1 are the parameters that most strongly determine orthorectification accuracy; they were calculated with Equ. 3, which was derived from the geometry in Fig. 4. Viewed from the ground point corresponding to the scene center, the along-track incidence angle is positive if the viewing direction is northward, and the across-track incidence angle is positive if the viewing direction is eastward.
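Equ. 3 itself is not reproduced here. As a hedged illustration of the sign convention only, the sketch below splits a view direction, given as a view zenith angle and the azimuth from the ground point toward the sensor, into along-track (north–south) and across-track (east–west) components. It assumes a near-polar orbit whose track runs approximately north–south, so it is a simplified decomposition and not the authors' formula. It also shows the geoid-offset relation h = H + N between orthometric height H and ellipsoidal height h. Function names are hypothetical.

```python
import math


def ellipsoidal_height(orthometric_h, geoid_undulation):
    """h = H + N: convert a mean-sea-level DEM height to height above the ellipsoid."""
    return orthometric_h + geoid_undulation


def incidence_angles(view_zenith_deg, view_azimuth_deg):
    """Along-/across-track incidence angles (degrees) under a north-south track assumption.

    view_azimuth_deg is the direction from the ground point toward the sensor,
    clockwise from north. Positive along-track = northward view direction,
    positive across-track = eastward view direction.
    """
    t = math.tan(math.radians(view_zenith_deg))
    az = math.radians(view_azimuth_deg)
    along = math.degrees(math.atan(t * math.cos(az)))   # projection onto north-up plane
    across = math.degrees(math.atan(t * math.sin(az)))  # projection onto east-up plane
    return along, across
```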

In this study, the multispectral and panchromatic bands of geocorrected ETM+ images were fused to obtain 15 m resolution images, which were combined with the 1 m DEM to collect about 40 ground-control points for computing the RPCs of each study area. This process required a series of transformations among the pixel, camera, image-space and ground coordinate systems. The CHRIS images were orthorectified to the universal transverse Mercator (UTM) projection, zones 52 N, on the WGS84 ellipsoid. If the error exceeded 1 pixel, a second, automatic registration was performed to reduce the errors in the X and Y directions below 1 pixel. The root-mean-square errors (RMSEs) of the CHRIS images orthorectified with the RPC model are shown in Tables 3 and 5. To highlight the advantages of the orthorectification process designed in this paper, the CHRIS images of the Da Ye Kou study area were also corrected with a polynomial geometric correction method using the same 40 ground-control points; the RMSEs of these rectified images are shown in Table 4.
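The RMSE statistics and the baseline polynomial correction can be sketched as follows. This assumes GCPs given as (x, y) image coordinates with matching reference coordinates in pixel units, a second-order polynomial (the paper does not state the order used), and total RMSE defined as √(X-RMSE² + Y-RMSE²), a common convention. Function names are hypothetical.

```python
import numpy as np


def poly_design(x, y, order=2):
    """Design matrix with all terms x**i * y**j for i + j <= order."""
    cols = [x ** i * y ** j for i in range(order + 1) for j in range(order + 1 - i)]
    return np.column_stack(cols)


def fit_polynomial(img_xy, ref_xy, order=2):
    """Least-squares polynomial mapping from image to reference coordinates."""
    a = poly_design(img_xy[:, 0], img_xy[:, 1], order)
    coef_x, *_ = np.linalg.lstsq(a, ref_xy[:, 0], rcond=None)
    coef_y, *_ = np.linalg.lstsq(a, ref_xy[:, 1], rcond=None)
    return coef_x, coef_y


def apply_polynomial(coef_x, coef_y, img_xy, order=2):
    """Map image coordinates to reference coordinates with fitted coefficients."""
    a = poly_design(img_xy[:, 0], img_xy[:, 1], order)
    return np.column_stack([a @ coef_x, a @ coef_y])


def gcp_rmse(pred_xy, ref_xy):
    """X-RMSE, Y-RMSE and total RMSE of GCP residuals (pixels if inputs are pixels)."""
    d = pred_xy - ref_xy
    rx = np.sqrt(np.mean(d[:, 0] ** 2))
    ry = np.sqrt(np.mean(d[:, 1] ** 2))
    return rx, ry, np.sqrt(rx ** 2 + ry ** 2)
```

The 1-pixel criterion in the text then becomes a check such as `gcp_rmse(pred, ref)[2] > 1.0` to decide whether a second registration is needed.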

Comparing Table 3 with Table 4, the total RMSEs of the CHRIS images orthorectified with the RPC model for the five angles are 1.02, 0.8, 0.67, 0.71 and 1.11 pixels, all lower than those obtained with polynomial geometric correction. Tables 5 and 6 show the same pattern. Moreover, with the RPC model the RMSE is below one pixel for +36°, 0° and −36° and close to one pixel for +55° and −55°, whereas with polynomial geometric correction only the 0° image has an RMSE below one pixel. The total RMSE, X-RMSE and Y-RMSE obtained with the RPC model are all lower than those from polynomial geometric correction for all five angles, especially at larger zenith angles. For both methods, however, the X-RMSE is smaller than the Y-RMSE, showing that the geometric deformation of CHRIS images is larger in the Y direction than in the X direction. Because of the large topographic relief of the high mountain area and the multi-angle imaging characteristics, the deformation of the CHRIS images in the study area is very large. Polynomial geometric correction simulates this deformation with a simple polynomial and does not consider the deformation factors of multi-angle imaging under complex terrain, which leaves larger residual distortion. The orthorectification process in this paper, based on precise along- and across-track angles, therefore improves the accuracy of CHRIS images compared with traditional polynomial geometric correction.

Atmospheric Processing

The atmospheric effect on the sensor radiance was corrected by mathematically modeling the physical behavior of radiation as it passes through the atmosphere (Freemantle, Pu and Miller 1992; Stancalie and Gastellu-Etchegorry 1994). Atmospheric correction of the CHRIS radiance data was performed with ENVI/FLAASH, which is based on the MODTRAN-4 model (Matthew et al. 2000; Berk et al. 1998). FLAASH was recently adapted to include an option for processing non-nadir sensors: it accounts for the atmospheric path lengths and transmittances that vary with the observation angle (see Table 1). FLAASH generally assumes flat terrain when correcting data from optical spaceborne sensors. Therefore, before FLAASH was applied, an IDL-based c-correction program was used to account for terrain effects by incorporating DEM data and their derivatives, such as slope and aspect (Richter 1998; Richter and Scalper 2002). Combining FLAASH with the c-correction program makes the process suitable for correcting sensor data acquired over rugged terrain (Wang 2017).
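The authors' IDL c-correction program is not reproduced here. The Python sketch below shows the widely used C-correction form, L_corr = L · (cos θs + c)/(cos i + c) with c = b/m from a linear fit L = m·cos i + b, under stated assumptions: a north-up DEM whose row index increases southward, aspect defined as the downslope azimuth clockwise from north, and one linear fit per band over the whole scene. Function names are hypothetical.

```python
import numpy as np


def slope_aspect(dem, cell_size):
    """Slope and aspect (degrees) from a north-up DEM; aspect = downslope azimuth from north."""
    d_row, d_col = np.gradient(dem.astype(float), cell_size)
    slope = np.degrees(np.arctan(np.hypot(d_row, d_col)))
    # dz/dEast = d_col, dz/dNorth = -d_row; downslope vector = (-dz/dE, -dz/dN)
    aspect = np.degrees(np.arctan2(-d_col, d_row)) % 360.0
    return slope, aspect


def cos_illumination(slope_deg, aspect_deg, sun_zenith_deg, sun_azimuth_deg):
    """cos(i) = cos(θs)cos(β) + sin(θs)sin(β)cos(φs - aspect) for a tilted surface."""
    s, a = np.radians(slope_deg), np.radians(aspect_deg)
    zs, azs = np.radians(sun_zenith_deg), np.radians(sun_azimuth_deg)
    return np.cos(zs) * np.cos(s) + np.sin(zs) * np.sin(s) * np.cos(azs - a)


def c_correction(band, cos_i, sun_zenith_deg):
    """Fit band = m*cos_i + b, set c = b/m, return band * (cos θs + c) / (cos i + c)."""
    m, b = np.polyfit(cos_i.ravel(), band.ravel(), 1)
    c = b / m
    return band * (np.cos(np.radians(sun_zenith_deg)) + c) / (cos_i + c)
```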

The input image for FLAASH must be a radiometrically calibrated radiance image in BIL format. FLAASH requires the viewing and solar angles and the mean surface elevation of the measurement, and it assumes a model atmosphere, an aerosol type and a visible range. The water-vapor column amount, which is important for FLAASH, was retrieved from selected wavelength channels of the CHRIS image. Because the multi-angle sensor CHRIS is not supported by FLAASH, its band center wavelengths and full widths at half maximum (FWHM) were used to retrieve water vapor, and CHRIS was then defined in FLAASH as a custom sensor. The center wavelengths and FWHM of CHRIS Mode 3 are presented in Tables 7 and 8 (Milton 2006). FLAASH can correct images collected in either vertical (nadir) or slant-viewing geometry.
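A Gaussian spectral response built from each band's center wavelength and FWHM is a common way to describe a custom sensor, and the same responses can band-average finely sampled field spectra (e.g., 1 nm ASD data) before they are compared with CHRIS reflectance. The sketch assumes Gaussian responses and a uniformly spaced wavelength grid; the 10 nm FWHM in the usage line is a placeholder, not a value from Tables 7 and 8, and the function names are hypothetical.

```python
import numpy as np

# sigma = FWHM / (2 * sqrt(2 * ln 2)) for a Gaussian profile
FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))


def gaussian_srf(wl_nm, center_nm, fwhm_nm):
    """Gaussian spectral response (peak 1 at the band center) on the grid wl_nm."""
    sigma = fwhm_nm * FWHM_TO_SIGMA
    return np.exp(-0.5 * ((wl_nm - center_nm) / sigma) ** 2)


def resample_spectrum(wl_nm, spectrum, centers_nm, fwhms_nm):
    """Band-average a finely, uniformly sampled spectrum with Gaussian responses."""
    out = []
    for center, fwhm in zip(centers_nm, fwhms_nm):
        w = gaussian_srf(wl_nm, center, fwhm)
        out.append(np.sum(w * spectrum) / np.sum(w))
    return np.array(out)


wl = np.arange(400.0, 1001.0, 1.0)
field = 0.05 + 0.0004 * (wl - 400.0)  # synthetic field spectrum for illustration
print(resample_spectrum(wl, field, [661.0, 895.0], [10.0, 10.0]))
```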

Discussion

Pre-processing of remote-sensing images is indispensable for quantitative inversion and classification, and its results directly affect their accuracy. This is especially true for multi-angle hyperspectral imagery. Different observation angles produce different atmospheric path lengths and transmittances. Images acquired at large observation angles were strongly affected by noise and the atmosphere, leading to significant differences in radiance among the images. In addition, geometric distortion increased with the observation angle, so processing was needed to reduce geometric and radiometric errors and improve image quality.

Tables 3, 4, 5 and 6 indicate that orthorectification with the RPC model and accurate along- and across-track incidence angles can effectively eliminate the geometric deformation caused by different observation angles and rugged terrain. When the five angle images were compared with the reference image, the X and Y RMSE of each angle image was less than or close to 1 pixel. This is crucial for BRDF inversion, because pixels of the same surface object viewed from different angles must correspond to each other. The registration errors increased with the observation angle, indicating that geometric deformation increases with viewing angle. The registration errors in the Y direction were larger than those in the X direction, suggesting that the deformation caused by the viewing angle is larger along-track than across-track; the along- and across-track angles in Table 1 likewise indicate larger deformation in the Y direction. The orthorectification method in this paper, based on precise along- and across-track angles, improves the accuracy of CHRIS images compared with traditional polynomial geometric correction. Previous orthorectification work on CHRIS data did not give an explicit formula for calculating the along- and across-track incidence angles from the in-orbit geometric imaging relationship (Kneubühler et al. 2005).

Atmospheric correction of the CHRIS data incorporated terrain correction. Figure 5 compares the atmospherically corrected CHRIS data for the five viewing angles with field-measured spectra of forest, background and grass. For the forest, the field-measured spectrum is close to the CHRIS spectrum at FZA = +55° in the visible wavelengths and to the spectrum at FZA = 0° in the near-infrared; this validation shows that the atmospherically corrected CHRIS data fit the field measurements well, except for Land Mode 3 channel 7 (661 nm). For the background and grass, the difference between the field spectra and the CHRIS data (FZA = 0°) is somewhat larger, but the spectral curves follow the same trend. Figure 6 shows the reflectance at the five angles in the red (661 nm) and near-infrared (895 nm) bands: the reflectance at FZA = 0° was the largest, as this view was closest to the 'hotspot' given the sun–sensor–target geometry shown in Table 1. This suggests that the BRDF data contain information on forest structure. The spectral curves for the five angles in Fig. 5 correspond to the CHRIS viewing geometries (see Fig. 6) and show the same trends for forward- and backward-scattering angles in the red and near-infrared regions. These results indicate that the atmospheric correction of CHRIS radiance data described in this paper is effective and broadly applicable without dedicated commercial atmospheric/topographic correction software, whereas previous atmospheric correction of CHRIS radiance data usually relied on ATCOR-2/3 (Kneubühler et al. 2005).
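Which view lies closest to the hotspot can be checked from the angle between the sun direction and the view direction, both seen from the target; the hotspot corresponds to an angle of zero. A minimal sketch, assuming both azimuths are measured clockwise from north and the view azimuth is the direction from the target toward the sensor; the function name is hypothetical and the angles would come from Table 1.

```python
import math


def sun_view_angle(sun_zen, sun_az, view_zen, view_az):
    """Angle (degrees) between sun and view directions; 0 means the hotspot geometry."""
    zs, zv = math.radians(sun_zen), math.radians(view_zen)
    daz = math.radians(sun_az - view_az)
    cos_g = math.cos(zs) * math.cos(zv) + math.sin(zs) * math.sin(zv) * math.cos(daz)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_g))))
```

For example, with the Da Ye Kou sun position (zenith 21°, azimuth 155°), a nadir view (view zenith 0°) gives an angle of exactly 21°, whatever the view azimuth.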

Figure 6 also shows that, for the three types of ground features, the BRDF after atmospheric correction with the DEM was higher than that without the DEM. Because of topographic relief, shaded slopes receive less solar radiation than flat surfaces, so without terrain correction the reflectance at each angle is lower and the hotspot effect is attenuated. This result verifies that atmospheric correction with FLAASH and the DEM can reduce the geometric and radiometric distortion caused by topographic relief.

Conclusion

In this study, a formula was derived for calculating the key CHRIS parameters, the along- and across-track incidence angles, from the in-orbit geometric imaging relationship, and the other auxiliary parameters needed for geometric and atmospheric processing were collected. A pre-processing workflow for CHRIS multi-angle remote-sensing data was then designed and implemented through secondary development in IDL. The geometric and atmospheric pre-processing was successfully applied to full CHRIS acquisitions with five viewing angles over rugged terrain. The method leverages digital elevation data and is adapted to tilted-view sensors (e.g., varying path length and transmittance). The results indicate that the proposed method can reduce the geometric distortion caused by different observation angles and the atmospheric influence on images acquired at different angles, which supports quantitative inversion from multi-angle remote-sensing images.

Furthermore, because the metadata files and auxiliary schedule data needed by the CHRIS-Box module are unavailable for some regions, some CHRIS multi-angle images cannot be pre-processed with it. In addition, the atmospheric correction function of CHRIS-Box does not account for terrain effects, and atmospheric correction software with terrain correction functionality is usually not free. This significantly limits the popularization and application of multi-angle remote-sensing imagery. In this study, a formula was derived for calculating the along- and across-track incidence angles from in-orbit geometric imaging relationships, and an effective and universal method for processing CHRIS and other multi-angle imagery was designed. CHRIS is one of the few multi-angle sensors with great potential; to promote the application of CHRIS data, automatic and accurate pre-processing of multi-angle imagery for inversion applications in earth-resource monitoring should be researched further.

References

Berk, A., Bernstein, L. S., Anderson, G. P., Acharya, P. K., Robertson, D. C., Chetwynd, J. H., & Adler-Golden, S. M. (1998). MODTRAN cloud and multiple scattering upgrades with application to AVIRIS. Remote Sensing of Environment, 65, 367–375.

Cutter, M. A. (2006). HDFclean V2 Help.

Dong, G. X., Zhang, J. X., & Liu, Z. J. (2006). A comparison of several destriping methods for CHRIS/PROBA data. Remote Sensing Information, 6, 36–39.

Freemantle, J. R., Pu, R., & Miller, J. R. (1992). Calibration of imaging spectrometer data to reflectance using pseudo-invariant features. In Proceedings of the Fifteenth Canadian Symposium on Remote Sensing (pp. 452–457).

Garay, M. J., & Mazzoni, D. (2004). Making sense of large, complex datasets: Using MISR's multiangle and multispectral information to detect clouds and aerosols. Eos Trans., 85(47), SF51A–SF106.

Ikonos, J. G. (2001). Stereo feature extraction: RPC approach. In The American Society for Photogrammetry and Remote Sensing (ASPRS) 2001 Annual Conference Proceedings. Saint Louis: American Society for Photogrammetry and Remote Sensing.

Liu, J., Wang, D. H., & Miao, G. M. (2004). High precision stereo positioning of IKONOS satellite images based on RPC model. Bulletin of Surveying and Mapping, 9, 1–9.

Liu, J., Zhang, Y. S., & Wang, D. H. (2006). Precise positioning of high spatial resolution satellite images based on RPC models. Acta Geodaetica et Cartographica Sinica, 13(1), 1–4.

Kneubühler, M., Koetz, B., Richter, R., Schaepman, M., & Itten, K. (2005). Geometric and radiometric pre-processing of CHRIS/PROBA data over mountainous terrain. In Proceedings of the 3rd ESA CHRIS/Proba Workshop (pp. 21–23). Frascati, Italy: Springer.

Matthew, M. W., Adler-Golden, S. M., Berk, A., Richtsmeier, S. C., Levine, R. Y., Bernstein, L. S., et al. (2000). Status of atmospheric correction using a MODTRAN4-based algorithm. SPIE Proceedings, Algorithms for Multispectral, Hyperspectral, and Ultraspectral Imagery VI, 4049, 199–207.

Milton, T. (2006). The contribution of CHRIS/PROBA to NCAVEO, a knowledge transfer network for the calibration and validation of EO data. In Proceedings of the 4th ESA CHRIS/PROBA Workshop. Frascati.

Pang, Y., Li, Z. Y., & Ju, H. B. (2016). LiCHy: The CAF's LiDAR, CCD and hyperspectral integrated airborne observation system. Remote Sensing, 8(5), 398.

Richter, R. (1998). Correction of satellite imagery over mountainous terrain. Applied Optics, 37(18), 4004–4015.

Richter, R., & Scalper, D. (2002). Geo-atmospheric processing of airborne imaging spectrometry data. Part 2: Atmospheric/topographic correction. International Journal of Remote Sensing, 23(13), 2631–2649.

Schläpfer, D., & Richter, R. (2014). Evaluation of Brefcor BRDF effects correction for HySpex, CASI, and APEX imaging spectroscopy data. IEEE WHISPERS, Lausanne, 4.

Stancalie, G., & Gastellu-Etchegorry, J. P. (1994). Correction of the atmospheric effects for the high-resolution airborne spectrometric data. In Proceedings of the First International Airborne Remote Sensing Conference and Exhibition, Strasbourg, France (Vol. 1, pp. 121–130).

Wang, H. P., Liu, X. G., & Luo, H. X. (2010). IRS-P5 satellite image ortho corrections using RPC model. Earth Science—Journal of China University of Geosciences, 3, 485–489.

Wang, Q. (2009). Retrieval of conifer LAI in Changbai based on the multiple-angle model. PhD dissertation, Northeast Forestry University.

Wang, Q. (2017). Research on forest parameters retrieval based on lidar data and multi-angle remote sensing model. PhD dissertation, Harbin Institute of Technology, China.

Zhang, Y. S., & Liu, J. (2004). The positioning algorithm based on RPC model and its optimizing of stereo images from high resolution remote sensing satellites. Engineer of Surveying and Mapping, 13(1), 1–4 (in Chinese with English abstract).

Zhao, Y. X., Zhang, S., & Qin, D. H. (2004). Principle and method of remote sensing application analysis. Beijing: Science Press.

Acknowledgements

The paper was supported by the Natural Science Foundation of Heilongjiang Province (Grant No. LH2020D013), Open Fund of State Key Laboratory of Remote Sensing Science (Grant No. OFSLRSS201809), Innovation Team Foundation of the HLJ Institute of Technology (Grant No. 2018CX04), National Natural Science Foundation of China (Grant No. 41201435), College Innovative Entrepreneurial Training Plan Program (Grant No. 201911802047), and Provincial Echelon Training Program of Heilongjiang Institute of Technology.


Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Wang, Q., Pang, Y., Jia, W. et al. Effective and Universal Pre-Processing for Multi-Angle CHRIS/PROBA Images. J Indian Soc Remote Sens 49, 1581–1591 (2021). https://doi.org/10.1007/s12524-020-01288-0
