Abstract

For over 3 million years hominins held stone cutting tools in the hand, directly gripping the portion of the tool displaying a sharp cutting edge. During the late Middle Pleistocene human populations started to produce hafted composite knives, where the stone element displaying a sharp cutting edge was secured in a handle. Prevailing archaeological literature suggests that handles convey benefits to tool users by increasing cutting performance and reducing musculoskeletal stresses, yet to date these hypotheses remain largely untested. Here, we compare the cutting performance of hafted knives, ‘basic’ flake tools, and large bifacial tools during two standardized cutting tasks. Going further, we examine the comparative ergonomics of each tool type through electromyographic (EMG) analysis of nine upper limb muscles. Results suggest that knives (1) recruit muscles responsible for digit flexion (i.e. gripping) and in-hand manipulation relatively less than alternative stone tool types and (2) may convey functional performance benefits relative to unhafted stone tool alternatives when considered as a generalised cutting tool. Furthermore, our data indicate that knives facilitate greater muscle activity in the upper arm and forearm, potentially resulting in the application of greater cutting forces during tool use. Compared to unhafted prehistoric alternatives, hafted stone knives demonstrate improved ergonomic properties and some functional performance benefits. These factors would likely have contributed to the invention and widespread adoption of hafted stone knives during the late Middle Pleistocene.

Introduction

For over three million years hominins held and used stone cutting tools directly: a sharp rock was simply gripped in the hand (Braun et al. 2019; Harmand et al. 2015; Semaw et al. 1997, 2003). During the late Middle Pleistocene, however, humans began to also hold and use stone cutting tools indirectly (Barham 2013; Rots 2013; Rots and Van Peer 2006; Wilkins et al. 2012; Wilson et al. 2020; but see Alperson-Afil and Goren-Inbar 2016). That is, sharp rocks became hafted components of composite tools whereby they were secured into a handle (usually a piece of bone, wood, or antler). By gripping the handle, rather than the sharp rock, hominins invented what we recognize as the modern knife, one of the few technologies introduced during the Palaeolithic that is still habitually used today (Barham 2013). Investigating the question of why knives were invented and selected thus contributes to our broader understanding of at least 300,000 years of human technological development in the realm of composite tools (e.g. Barham 2013; Eren 2012; Graesch 2007; Mazza et al. 2006; Perrone et al. 2020; Rousseau 2004; Shott 1995; Smallwood et al. 2020; Wilkins et al. 2012; Wilson et al. 2020).

The earliest evidence for hafted cutting tools appears in Europe prior to MIS 6 (Mazza et al. 2006; Rots 2013). Subsequently, evidence for the use of hafted technologies becomes steadily more widespread and complex in Africa and Eurasia (Rots et al. 2011), and spreads to the Americas as part of their colonisation by modern humans ca. 15,000–13,000 cal B.P. (Meltzer 2021). There is substantial variation in the size, shape, and technical aspects of the lithic implements hafted into handles, and diverse cultural, functional and technical explanations for this variation (e.g. Lombard 2006; Rots and Van Peer 2006; Iovita 2011). Moreover, at times lithic implements could have been simply fixed into mastic/tar during use (Mazza et al. 2006; Niekus et al. 2019), or held in a supple piece of leather (Barham 2013). The present study is not, however, focused on when hafted knives were first produced, how they vary in form and lithic technology, or how hafting may have influenced stone tool morphology through resharpening. Rather, we seek to better understand why they may have been invented and adopted in prehistory.

Rots (2013) has noted that hafting is a complex behaviour requiring multiple technological components, and that isolating one causal mechanism is not straightforward. Indeed, there may have been several factors acting simultaneously in the emergence, adoption, and evolution of the hafted knife (see Keeley 1982). Prehistoric people may or may not have been consciously aware of these factors (e.g. Thomas et al. 2017). Or an advantageous factor documented by modern experiments may be additional to, subsidiary to, or even incidental to the factor(s) that prehistoric people actually selected (e.g. Bebber et al. 2017). Nevertheless, whatever advantages arise from a hafted knife relative to an unhafted one come with substantial costs: the handle and haft must be designed and constructed to withstand a variety of mechanical stresses that, if not properly addressed, could damage the tool, the user, or the cut substrate (Barham 2013:179–182; Clarkson et al. 2015:122). This design and construction takes time and energy (e.g. Binford 1986; Weedman 2006; Graesch 2007; Nami 2017), but how much of each depends on production choices. Barham’s (2013:192,199) survey of a sample of ethnographic hafted knives, for example, suggests that time and energy production costs could be altered via the use of different haft types including inclusion hafts, cleft hafts, composite hafts, sandwich hafts, or applied hafts.

Barham (2013) and Rots (2010) speculate that hafting may first have developed in response to high-impact chopping activities, before later being applied within cutting and scraping tasks. Hafting may even have originated during composite spear production, with shorter-handled knives following later (notably, hafted stone spears held close to the point can function in the same way as a knife) (Wilkins et al. 2014). Based on current archaeological evidence, however, it is equally likely that hafting originated to facilitate diverse generalised cutting tasks that include ‘slicing’ actions (cf. Key 2016). Indeed, hafting allows the production of large cutting tools even when raw material factors limit lithic size potential (Rots 2010). This is particularly important during the late Middle Pleistocene when increasing social networks and population mobility would have made access to lithic raw material sources more unpredictable (Brooks et al. 2018; Potts et al. 2018). Possibly, the advantages of a handle were identified across multiple types of activity at the same time. What is clear, however, is that once hafting was invented, it continued to be selected for until the present day.

Barham (2013:2; see also Keeley 1982) has provided the most synthetic recent exploration into why modern humans invented hafted knives, and composite tools in general: “Almost any activity that involved cutting, scraping, chopping, and piercing could be made more effective by incorporating a handle or shaft with a basic tool” (but see Clarkson et al. 2015). Churchill (2001: 2954) summarises both the ergonomic and functional performance arguments for this increased effectiveness, suggesting that hafted tools possess a mechanical advantage over non-hafted tools such that the relative amount of muscular effort is less when using the former compared to the latter. Systematic empirical or experimental support for this ‘muscular effort’ hypothesis, however, is lacking. During goat butchery, Shea et al. (2002:58) noted that hafted stone points were “vastly superior to stone points held in the hand”. Rots et al. (2020:1) link handles to increases in grip comfort and ‘mechanical or other advantages’. Morin (2004) demonstrated hafted bifacial stone knives to be effective for butchery, even outcompeting steel alternatives in fish butchery, but unfortunately no hand-held stone flake condition was used as a comparative standard (see also Goldstein and Shaffer 2017; Lombard et al. 2004; Rimkus and Slah 2016; Smallwood et al. 2020). Similarly, in an elephant butchery experiment, Gingerich and Stanford (2018) suggest that hafting increases the amount of force and number of performed cutting strokes, but again, there is no hand-held flake comparative standard.

Willis et al. (2008) conducted a fish butchery study with the purpose of investigating the occurrence of cut marks on fish bone. Their results, however, may touch upon the efficiency of hafted versus unhafted tools. They used hand-held stone tools and a modern metal knife to butcher 30 fish. The hand-held stone tools produced substantially more cut marks in fifteen fish (five catfish, five salmon, five flounder) than did the handled metal knife in an identical sample (Willis et al. 2008). If these results can be attributed to the handheld versus handled nature of each implement, then the modern knife clearly facilitated a cleaner, more efficient butchery. Yet, their documented difference in cutmarks could also potentially be due to the differences in raw material (e.g. metal versus stone) or cutting edge form. One way to resolve this issue would be for future experiments to assess several conditions while controlling for edge form: handheld stone flakes; handheld metal blades; hafted stone knife; hafted metal knife; etc.

To our knowledge, the study that comes closest to supporting the mechanical and muscular advantages of hafting is that of Walker and Long (1977). In a cattle metapodial butchery task, they found that a handheld obsidian flake tool could be used effectively at loads up to 4 kg, whereas a handled steel knife could “comfortably be used at a peak pressure of 10 to 12 kg during cutting” (Walker and Long 1977:611). Unfortunately, similarly to Willis et al. (2008), no hafted stone tool was used, and it is therefore difficult to attribute the pressure differences to hafting versus other factors.

To more clearly understand which factors contributed to the invention and widespread use of hafted stone knives over non-hafted prehistoric alternatives it is, therefore, important to understand both ergonomic and functional performance factors (although the two are not mutually exclusive). Indeed, both have the potential to shed light on whether hafted knives provided benefits enough to warrant the substantial costs (time, resources, energy, risk of breakage, etc.) associated with their production (e.g. Binford 1986; Graesch 2007; Nami 2017). To this end, here we investigate whether hafted stone knives perform cutting tasks more efficiently than ‘basic’ flake tools and large bifacially flaked tools. Further, we use electromyography (EMG) to record muscle activity (amplitude) in nine upper limb muscles during the use of each tool type, providing an understanding of their relative ergonomic properties. If stone knives perform cutting tasks more efficiently or effectively than non-hafted alternatives, or they require reduced muscular effort when undertaking the same cutting task, then these benefits are likely to have contributed to the invention and widespread adoption of hafted stone knives.

Methods

Comparing functional efficiency

The cutting performance of stone tools can be assessed in diverse ways. Broadly, previous studies have been divided into actualistic experiments displaying high external validity (Jones 1980; Toth and Schick 2009; Merritt and Peters 2019), controlled cutting tests performed by machines with high internal validity (Collins 2008; Bebber et al. 2019; Calandra et al. 2020), and those in a middle range that recruit large numbers of participants to use tools within controlled laboratory-based conditions (Prasciunas 2007; Key and Lycett 2019; Bilbao et al. 2019; Biermann Gürbüz and Lycett 2021). The present experiment falls into the last category, with participants being recruited to use three stone tools during two laboratory cutting tasks, thus balancing some aspects of both internal and external validity (Eren et al. 2016; Lycett and Eren 2013; Mesoudi 2011).

Participants

Thirty participants were recruited from the graduate student population at the School of Sports and Exercise Sciences at the University of Kent. A nominal remuneration of £10 (~ $13) was used to encourage participation. Two had previous experience using, or education relating to, stone tools. There was a female to male ratio of 1:2. All confirmed an absence of upper limb injuries or other medical conditions that would inhibit their participation. All gave informed consent and ethical approval was granted by the University of Kent School of Sports and Exercise Science (ref: prop 131_2016_17). Biometric variation within participant samples has repeatedly been demonstrated to impact stone tool performance (Key and Lycett 2019). To ensure grip strength and hand dimension variation did not impact performance or muscle activation levels, each participant used one example of each tool type. This does not control for any impact that form variation within individual tool types may have when used by individuals with different hand sizes. Importantly, however, any impact this may have on performance or muscle recruitment will be minor relative to observed between-tool differences.

Stone tool assemblages

Each participant used one stone knife with a hafted handle and bifacially flaked point (Fig. 1C), one ‘basic’ flake tool held directly in the hand (Fig. 1A), and one non-hafted large biface (a large cutting tool [LCT] biface), which was again held directly in the hand (Fig. 1B). It was not our intention to compare hafted stone knives with technological alternatives present immediately prior to and during their emergence, but rather, to understand their use relative to a least cost alternative (expedient [‘basic’] flake tools 4–6 cm in length [Jeske 1992; Stevens and McElreath 2015; Vaquero and Romagnoli 2018]) and an alternative tool type also displaying marked forward extension, similar bifacial edge morphology, and a dedicated globular gripping area (i.e. a ‘handle’) designed to increase ease-of-use (Gowlett 2006, 2011, 2020).

In total, 90 replica tools were used in this experiment. All were produced using British flint. The knives ranged from 17.5 to 23.7 cm in length and, based on the stone blades’ absolute measures (not technical attributes; Table 1; Fig. 1) and width:thickness ratios (mean = 3.38; range 2.68–4.67), could potentially be representative of any number of Pleistocene or Holocene bifacial styles, including Middle Palaeolithic bifaces (e.g. Iovita 2014; Joris 2006; Kozlowski 2003; Reubens 2013); Szeletian or Bohunician leaf points (e.g. Kaminská et al. 2011; Škrdla 2016); early- or middle-phase Clovis (e.g. Bradley et al. 2010); North American Archaic or Woodland biface types (e.g. Horowitz and McCall 2013; Justice 1987); among others. This does not mean that they are representative of the variation seen in these biface types, but rather, that they fall within observed ranges. A combination of wooden and antler handles was used, hafted using natural fibres and plant resin adhesive. The ‘basic’ flake tools were knapped using free-hand hard hammer percussion from two cores and were selected on the basis of displaying homologous cutting edges. LCT bifaces were either knapped fresh for this experiment using hard and soft hammer percussion, or were repurposed from previous studies (Key and Lycett 2017a) after having been resharpened around their entire circumference. The sizes of all flakes and LCT bifaces conform to those regularly observed in Palaeolithic assemblages (e.g. Emery 2010; Lin et al. 2013; Gowlett 2015; Reti 2016). It is important to note that this experiment is designed to focus on the impact of between tool-type morphological variation and not more minor variation observed within each tool assemblage (or the more minor differences observed when compared to specific artefact types or assemblages).

Cutting tasks

Stone tool performance varies between technologies dependent on the material context in which they are used (Jones 1980; Jobson 1986; Key and Lycett 2017b; Gingerich and Stanford 2018; Merritt and Peters 2019). Some tools are more suited to precision slicing activities, others to cutting large volumes of material, and some to heavy-duty ‘chopping’ activities. To assess the comparative performance of stone knives, flakes, and LCT bifaces in multiple material contexts, each was required to perform two different cutting tasks. These tasks were undertaken in the same order by all participants. To reduce any impact that the novelty of flakes and LCT bifaces may have had compared to the knives, participants practised with all tool types prior to starting the cutting tasks.

The first consisted of slicing a 2 cm deep incision into fresh potter’s clay while following a 90-cm-long ‘S’ design. The design, which was identical for all tools, included multiple curves to ensure dynamic cutting motions were used. It was traced onto the clay using a stencil (Fig. 2). The clay was fixed into a metal stand measuring 70 × 180 cm, which was placed onto the floor and inclined at an angle of ~ 15–20°.

The second task required tools to cut through 36 pieces of 4 mm thick polypropylene rope which were suspended between metal hoops. There were three sections to the rope cutting task. The first suspended eleven 9–11 cm long rope segments in a circular array, the second suspended five 16 cm segments vertically, while the last suspended twenty 12 cm long rope segments horizontally (Fig. 2). These variable conditions ensured tools cut the rope in dynamic ways using a variety of motions. The clay provides substantial portions of material to cut but with relatively low resistance to a cutting edge, while the rope provides an extended cutting task requiring a degree of precision.

Of course, neither task was undertaken in the Palaeolithic, nor are the cutting actions as diverse as the varied cutting activities performed during prehistory. Nonetheless, the tasks do imitate similar (not identical) cutting actions to behaviours that were performed (e.g. butchery, woodworking), are easily replicable, and are identical for all tools and participants. Moreover, the ethical benefits of using these materials over animal products are substantial. In each case, participants were asked to perform the tasks as quickly as possible, but were informed they must remain in control of the tool and perform ‘pressing and slicing’ cutting actions in all instances (cf. Atkins et al. 2004). Participants were free to grip and apply the tools in whatever way felt most comfortable. Videos were taken of all tool use events, from which tool use efficiency was recorded as the time taken in seconds (s) to complete each task. If participants paused, readjusted their position, or ceased cutting for any reason, these periods were not included in the final ‘time taken’ records.

Statistical analyses—functional performance

All statistical tests were performed using PAST (version 3.25). Shapiro–Wilk tests revealed that each group of completion-time data was not normally distributed (p = .0329 to < .0001). In turn, Kruskal–Wallis tests were used to statistically compare the time values of the three tool types during the two cutting tasks. Subsequently, we ran Mann–Whitney U tests between the knives and LCT bifaces, the knives and flakes, and the flakes and LCT bifaces (for both cutting tasks), to identify where any differences, if present, may lie (α = .05).
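For readers who wish to see what the Kruskal–Wallis comparison involves, the following is a minimal sketch in Python, not the PAST implementation used in this study. The function name `kruskal_wallis` and the example times are hypothetical. The p-value relies on the chi-square approximation, which for exactly three groups (two degrees of freedom) reduces to exp(−H/2); PAST may report p-values computed differently.

```python
import math
from collections import Counter


def kruskal_wallis(*groups):
    """Return (H, p) for a Kruskal-Wallis test with tie correction.

    Sketch only: the closed-form p-value exp(-H / 2) is the chi-square
    survival function for df = 2, so exactly three groups are required.
    """
    if len(groups) != 3:
        raise ValueError("this sketch only handles three groups (df = 2)")
    pooled = sorted(v for g in groups for v in g)
    n = len(pooled)

    # Assign each distinct value the mean of the ranks it occupies.
    rank_of = {}
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j

    # H statistic from the squared rank sums of each group.
    h = 12 / (n * (n + 1)) * sum(
        sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups
    ) - 3 * (n + 1)

    # Standard correction for tied values.
    ties = Counter(pooled)
    correction = 1 - sum(t ** 3 - t for t in ties.values()) / (n ** 3 - n)
    if correction == 0:  # every observation identical: no evidence of difference
        return 0.0, 1.0
    h /= correction
    return h, math.exp(-h / 2)


# Hypothetical completion times in seconds (not data from this study).
knife_times = [61.0, 58.5, 72.0, 66.5]
flake_times = [70.0, 81.5, 64.0, 90.0]
lct_times = [59.0, 75.5, 68.0, 62.5]
h_stat, p_value = kruskal_wallis(knife_times, flake_times, lct_times)
print(f"H = {h_stat:.3f}, p = {p_value:.4f}")
```

A significant result would then be followed by pairwise Mann–Whitney U tests, as described above.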

Comparing tool-use ergonomics

As an applied science, ergonomic studies investigate how the human body interacts with elements of a physical system, and how variation in the form, use, positioning, and actions of these elements impacts the body and/or ease-of-use and comfort perceptions (Wilson 2014). Ergonomic factors relating to the use of stone tools will principally concern the upper limb, and the ability to use tools of varying types and forms efficiently, comfortably, and with minimal muscular effort and joint stress.

Studies investigating these factors during stone tool use are, however, rare. Key et al. (2020) recently collected EMG data to better understand Palaeolithic tool design and use decisions, recording muscle activation levels during the use of different Lower and Middle Palaeolithic technologies. Further, multiple anatomically-focused studies have indirectly investigated stone tool use ergonomics during biomechanical investigations focused on other phenomena (e.g. Marzke 1997; Churchill 2001; Rolian et al. 2011; Williams-Hatala et al. 2018; Karakostis et al. 2020), including several that used EMG techniques (Hamrick et al. 1998; Marzke et al. 1998).

This is not to say that ideas surrounding stone tool-use ergonomics have not been suggested within Palaeolithic literature. Links between specific stone tool forms and types, and their ability to be applied easily by the hand, have long been inferred from artefacts or supported through the experimental use of replica tools (e.g. Kleindienst and Keller 1976; Jobson 1986; Tomka 2001; Machin et al. 2005; McNabb 2005; Gowlett 2006, 2020; Grosman et al. 2011; Eren and Lycett 2012; Barham 2013; Preysler et al. 2016; Zupancich et al. 2016; Pargeter and Shea 2019; Silva-Gago et al. 2019; Fedato et al. 2020; Wynn 2020). Rather, a majority of hypotheses surrounding the interaction between prehistoric technologies of varying forms and types and the hominin upper limb remain untested using empirical musculoskeletal data derived from experiments or biomechanical modelling.

Electromyography

Here we use electromyography (EMG), a technique commonly used in ergonomic studies of modern hand-held tools (Grant and Habes 1997; Agostinucci and McLinden 2016; Gazzoni et al. 2016), to help understand why hafted stone knives were produced over non-hafted flakes and LCT bifaces. EMG uses surface or intramuscular sensors to record electrical activity (potential) in muscles during their contraction. When muscles are working harder, increased electrical activity is recorded due to the propagation of a greater number of intracellular action potentials through the sarcolemma surrounding each muscle fibre (Milner-Brown et al. 1973). This indicates increased motor nerve firing, and in turn, increased muscle force output via excitation–contraction coupling events. It has been demonstrated that there are linear relationships between EMG records of muscular electrical activity and muscle force in some muscles, including those controlling the fingers (Clancy et al. 2016; Enoka and Duchateau 2016). We use surface electromyography (sEMG) to record electrical activity at nine muscle sites on the dominant arm.

Following international standards and SENIAM guidelines (Hermens et al. 2000; Stegeman and Hermens 2007), silver chloride bipolar surface electrodes were attached above the belly of nine muscles. We used a gain of between 500 and 2000 V/V, dependent on the participant, and sampled data at 2048 Hz using a 12-bit analog-to-digital converter (EMG-USB2+, OT Bioelettronica, Torino, Italy; bandwidth 10–500 Hz). The nine target muscles cover the whole upper limb, and include those essential to precision and power gripping (first dorsal interosseous, flexor pollicis longus, abductor digiti minimi, flexor pollicis brevis), and those essential to broader ranges of motion at the wrist, elbow, and shoulder (brachioradialis, flexor carpi radialis, biceps brachii, triceps brachii, anterior deltoid). Each target muscle, the site of sensor attachment, and its movement actions are also described in Supplementary Table 1. Following established protocols, we minimised the filtering and deforming influence of surface tissues (e.g. skin, subcutaneous fat) on EMG signals by removing hair and cleaning muscle sites with alcohol swabs prior to sensor attachment, using bipolar sensors, standardising inter-electrode distance, and having the same experienced individual attach all sensors. After sensor attachment, each signal channel was visually checked for movement artefacts and/or crosstalk from neighbouring muscles, and the electrodes were repositioned if necessary. A strap reference electrode was dampened with water and placed around the wrist of the nondominant arm and attached to the amplifier, which was located behind participants. All signals were acquired and analysed using OT BioLab software (OT Bioelettronica).

Collecting EMG data

EMG data were collected simultaneously to the cutting efficiency data, and thus relate to the same tools and cutting tasks outlined already. Due to signal strength variation and site dependent filtration/deforming effects on sEMG signals, raw amplitude data are not directly comparable between different target muscles. Thus, we recorded muscular activity here through both the signal’s root mean square (RMS), which is a raw measure of amplitude, and amplitude normalized as a percentage of that recorded during maximum voluntary contractions (% MVC). To calculate % MVC, six maximum voluntary contraction (MVC) exercises were undertaken by each participant prior to performing the cutting tasks, with MVC amplitude being recorded for each of the nine investigated muscles. In turn, these RMS values allowed us to calculate % MVC values for each muscle during the cutting tasks.
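The normalisation step can be illustrated with a short Python sketch. The function name `percent_mvc` and the amplitude values are hypothetical and serve only to show the arithmetic; they are not values recorded in this study.

```python
def percent_mvc(task_rms, mvc_rms):
    """Express a task RMS amplitude as a percentage of the MVC RMS amplitude."""
    if mvc_rms <= 0:
        raise ValueError("MVC amplitude must be positive")
    return 100.0 * task_rms / mvc_rms


# Hypothetical RMS amplitudes (arbitrary but matching units) for two muscles.
task_rms = {"first dorsal interosseous": 0.12, "biceps brachii": 0.03}
mvc_rms = {"first dorsal interosseous": 0.40, "biceps brachii": 0.50}

normalised = {m: percent_mvc(task_rms[m], mvc_rms[m]) for m in task_rms}
for muscle, value in normalised.items():
    print(f"{muscle}: {value:.1f} % MVC")
```

Because each muscle is scaled by its own maximum, the resulting percentages can be compared between muscles even though the raw amplitudes cannot.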

Using a double-pass [zero-lag] 2nd-order digital Butterworth filter, all raw signals were band pass filtered between 10 and 350 Hz before the calculation of RMS values. The filter parameters were chosen to remove high-frequency noise and movement artefacts associated with whole body movement, thereby preserving the signal bandwidth at the targeted muscles relevant to force production. RMS values for each recorded sEMG signal were calculated for 0.4-s epochs (e.g. 75 RMS values would be calculated over a 30-s period).
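The epoch-based RMS calculation can be sketched as follows in Python. This is an illustration only and assumes the signal has already been band-pass filtered; the filtering step itself is not reproduced. The function name `epoch_rms` is hypothetical. Note that 0.4 s at 2048 Hz corresponds to 819.2 samples, so the sketch rounds to the nearest whole sample and drops any incomplete final epoch; both choices are assumptions, as the handling used by the acquisition software is not stated here.

```python
import math


def epoch_rms(signal, fs_hz, epoch_s=0.4):
    """Return one RMS value per complete, non-overlapping epoch."""
    # Samples per epoch, rounded to a whole number (an assumption).
    n = int(round(fs_hz * epoch_s))
    if n < 1:
        raise ValueError("epoch is shorter than one sample")
    values = []
    # Step through the signal one full epoch at a time; a trailing
    # partial epoch is ignored.
    for start in range(0, len(signal) - n + 1, n):
        window = signal[start:start + n]
        values.append(math.sqrt(sum(x * x for x in window) / n))
    return values


# Synthetic 30-s signal sampled at 10 Hz (chosen so epochs are exactly 4 samples).
demo_signal = [1.0, -1.0] * 150
demo_rms = epoch_rms(demo_signal, fs_hz=10)
print(len(demo_rms))  # number of 0.4-s epochs in 30 s
```

With these settings a 30-s recording yields 75 RMS values, matching the example given above.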

Videos were used to define when tools were used during EMG data streams, or when participants were moving between sections of the task, readjusting their body position, or were waiting for the task to start. When participants stopped using a stone tool to cut, RMS values from this period of the data stream were removed from consideration. Occasionally, high pressure was exerted onto the surface of the flexor pollicis brevis sensors, resulting in signal clipping, saturation, or motion artefact distortion. Very occasionally, this happened at other sensor sites. If < 25% of values in a trial displayed these features, then these portions were cut and the remaining data were used. If > 25% of data were distorted, then the whole trial was discarded from the study. Hence, data sets for individual target muscles can be below 30.
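The exclusion rule described above can be expressed as a small Python sketch. The function name `clean_trial` and the flagging of epochs as distorted are hypothetical; in this study, distortion was identified from the recorded signals. The text does not specify the treatment of a trial with exactly 25% distorted values, so the sketch assumes such a trial is retained.

```python
def clean_trial(rms_values, distorted, threshold=0.25):
    """Remove distorted epochs, or return None if the trial must be discarded.

    `distorted` is a list of booleans parallel to `rms_values`, flagging
    epochs affected by clipping, saturation, or motion artefacts.
    """
    if len(rms_values) != len(distorted):
        raise ValueError("rms_values and distorted must have equal length")
    if not rms_values:
        return None
    fraction = sum(distorted) / len(distorted)
    # Assumption: exactly 25% distorted is treated as acceptable.
    if fraction > threshold:
        return None
    return [v for v, bad in zip(rms_values, distorted) if not bad]


# Hypothetical trial with one distorted epoch out of four.
kept = clean_trial([0.10, 0.95, 0.12, 0.11], [False, True, False, False])
print(kept)
```

Trials returning `None` would be excluded entirely, which is why the sample size for an individual muscle can fall below 30.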

Statistical analyses—tool use ergonomics

Each muscle had its mean RMS values calculated during the use of each stone tool, for both the clay and rope cutting tasks. These data were used to compare activation levels for individual muscles dependent on the type of stone tool used. Analysis of variance (ANOVA) tests examined the statistical strength of difference in muscle activation between stone tool types, while Tukey’s honestly significant difference (HSD) post hoc tests were run to identify where any significant differences lay, if present. Alpha equaled 0.05 in both instances.

To examine activity levels between different muscles during the use of individual tool types, MVC normalized amplitude values, where mean RMS values are expressed as a percentage of mean MVC RMS values (% MVC), were used. % MVC was calculated for each muscle, during the use of each tool type by all individuals for both cutting tasks. This allows assessment of which muscles are working harder on a relative basis during the use of each tool, and how this varies between the three technologies examined here. Data were not normally distributed in some instances (revealed by Kolmogorov–Smirnov tests), which, combined with the bounded nature of percentage data, led to the use of Kruskal–Wallis tests to identify whether the nine muscles displayed significantly different % MVC during the use of each tool type (α = .05). Post hoc Mann–Whitney U tests were run between individual muscles’ % MVC values to identify where any potential significant differences lay (α = .0083, due to a Bonferroni correction being applied to control for the increased potential for type I errors through repeat testing).

Results

Functional performance comparisons

Cutting performance data reveal similarities and differences between tools, dependent on the cutting task undertaken (Table 2; Fig. 3). During the clay task, the knives and LCT bifaces displayed identical mean time values. Flake tools took 20% longer on average, while also displaying greater standard deviation. Conversely, during the rope-cutting task, flakes were faster than the larger tool types (14% and 21% faster than knives and LCT bifaces, respectively) and displayed lower coefficient of variation and standard deviation. During the rope task, knives were on average 9% faster than the LCT bifaces. Kruskal–Wallis tests did not identify significant performance differences between the three tool types, for either the rope or clay cutting tasks (p = .6396 [clay] and .3235 [rope]). Mann–Whitney U tests confirmed that the differences observed between tool types in the descriptive data were not significant (for any of the two-way tool comparisons; Table 3). When the analysis was re-run using repeated-measures ANOVA, similarly no differences were identified between the three tool types.

Muscle recruitment comparisons

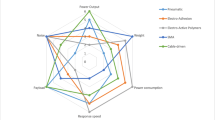

Muscle recruitment was examined through raw amplitude measures (RMS) and amplitude normalized as a percentage of maximum voluntary contractions (% MVC). Raw data for each reveal muscles to be recruited differently depending on the type of tool used (Table 6). Broadly, muscles responsible for digit flexion and in-hand manipulation display low levels of activation during the use of knives (e.g. flexor pollicis longus, first dorsal interosseous), while those linked to broader ranges of motion in the arm and wrist are recruited less during the use of flake tools (e.g. biceps brachii, brachioradialis) (Table 6). The only clear exception was the abductor digiti minimi, which displayed low levels of recruitment during the use of flake tools compared to knives and LCT bifaces (Fig. 4; Table 6). Except for the flexor carpi radialis and triceps brachii, the use of LCT bifaces resulted in most muscles displaying moderate-to-high activation levels (relative to the other tool types).

ANOVA tests used RMS data to identify whether the three tool types recruited individual muscles to significantly different extents, during both the clay and rope cutting tasks. Significant results were returned for the first dorsal interosseous, abductor digiti minimi, flexor pollicis longus, and flexor carpi radialis (Table 4), meaning that for these four muscles, tool choice had a significant impact on their activation levels. Post hoc Tukey’s HSD tests revealed knives to recruit the first dorsal interosseous and flexor carpi radialis significantly less than flake tools. Similarly, knives recruited the flexor pollicis longus less than LCT bifaces. LCT bifaces recruited the abductor digiti minimi to a significantly greater extent than flake tools.

To investigate relative activation levels between upper limb muscles during the use of each tool type, Kruskal–Wallis tests were performed using % MVC data (Table 5). Significant results were returned in all instances, revealing that irrespective of the tool used, individual muscles were recruited to different extents. Mann–Whitney U tests were performed to identify which specific muscles displayed greater activation than others (SOM Table 3). For all tool types, the first dorsal interosseous, abductor digiti minimi, flexor pollicis brevis, and flexor pollicis longus display greater % MVC levels compared to the other five muscles. In many instances these differences are significant (SOM Table 3). This indicates that muscles responsible for in-hand manipulation and digit flexion are recruited to a relatively greater level than those responsible for rotation and flexion of the wrist, flexion and extension of the forearm, and the abduction of the humerus. These relationships are consistent for both tasks for the flake tools and LCT bifaces. When using a knife, however, there appears to be much greater activation of the flexor carpi radialis, anterior deltoid, triceps brachii, and biceps brachii, compared to flakes and LCT bifaces. This is most evident during the longer-duration rope-cutting task. Indeed, their % MVC activation levels are often equal to or approaching that of muscles located in the hand when using a knife (Table 6). Essentially, these muscles are, on a relative basis, working almost as hard as those in the hand responsible for gripping the tool.

Discussion

Knives are one of the few Palaeolithic inventions still integral to our daily lives. The materials they are made from have changed, but the fundamental concept of being an elongated composite structure (or at least two-section structure) containing a sharp portion secured to a handle remains the same. Yet, for nearly three million years prior to the advent of knives hominins held the stone element containing a sharp edge directly in the hand. Here, we investigated why knives were first invented from an ergonomic and functional performance perspective.

The comparative ergonomics of stone knives

EMG is a widely used biomechanical technique not often applied in archaeology. Yet, its potential to convey ergonomic data important to understanding the tool-design criteria adhered to by past human populations is substantial. Here we investigated electrical activity (potential) in nine muscles across the upper limb of modern humans as they used hafted stone knives, ‘basic’ flake tools, and LCT bifaces. Two broad trends were revealed. First, the use of knives resulted in lower levels of activation for muscles responsible for securing tools in the hand. Second, the use of knives allowed muscles responsible for creating slicing motions and stabilising the arm during tool use to be recruited more heavily. This suggests that knives are ergonomically advantageous relative to flakes and LCT bifaces.

The intrinsic muscles of the hand and some in the forearm ensure the secure gripping of stone tools through the flexion, adduction, and abduction of digits one through five (Marzke et al. 1998; Rolian et al. 2011; Diogo et al. 2012; Marzke 2013). Forceful contraction of these muscles secures tools in the hand and opposes torque, friction, and other forces acting on them during use. Muscles in the upper arm and forearm, however, contribute to the slice-push motion required for efficient cutting (Atkins et al. 2004; Key 2016). That is, the wrist, elbow, and shoulder move in such a way that the tool is both pushed into the material being cut and drawn across its surface. It is these muscles, therefore, that create the majority of the force transferred through the tool and into the worked material, generating cutting stress at the apex of the cutting edge. Combined, our data therefore reveal that knives result in greater activation of the muscles responsible for determining cutting forces (upper arm and forearm) while simultaneously reducing activation in those responsible for securing tools in the hand.

Unfortunately, we cannot say precisely how much more cutting force was created (and it may not be significant), but linear relationships between muscle activation and muscle force output are generally accepted (Clancy et al. 2016; Enoka and Duchateau 2016). Potentially, because the hand was more easily able to grip the knife (i.e. through lower muscle activation), the upper arm and forearm muscles could exert greater force without increasing tool reaction forces (e.g. torque) to a level that could not be countered by the hand. Indeed, it is revealing that a reduction in hand muscle activation was recorded in spite of the forces created by the upper limb likely increasing (at least in some instances). This supports Gingerich and Stanford’s (2018) observation of increased force when using hafted bifaces relative to unhafted alternatives (see also Walker and Long 1977). Alternatively, it is possible that greater upper arm and forearm muscle activation reflects reduced ‘ease of use’ in this portion of the arm. We do not think this is likely: had this been the case, cutting performance would not also have increased in some instances (greater muscular force output creates higher cutting stresses, enabling increased ease and speed of cutting [Key 2016]). Further, there is lower activation in the upper arm during the use of LCT bifaces, despite them being nearly as long as the knives and substantially heavier. However, we cannot rule this out entirely and welcome further research on this point.

Multiple differences in RMS data were observed between tool types, but only four were large enough to be significant. These included the first dorsal interosseous (FDI), flexor pollicis longus (FPL), and flexor carpi radialis (FCR), which displayed significantly lower activation levels during knife use. The FDI and FPL work to secure tools in the hand by flexing and adducting the thumb, and abducting the index finger (Marzke et al. 1998; Diogo et al. 2012; Marzke 2013). Moreover, both here and elsewhere, they display some of the highest % MVC levels in the upper limb during stone tool use, indicating their importance and their role in determining a stone tool’s ease of use (Marzke et al. 1998; Key et al. 2020). The significant reduction in their activation during the use of knives is, therefore, an improvement to the tool’s ergonomic properties and a tool user’s perception of comfort. This finding is supported by participant comments during the tasks (comments were not muscle-specific, but instead referred to increased perceptions of comfort and ease of gripping during the use of the knives). The significantly lower recruitment of the FPL during knife use relative to the LCT biface is particularly interesting, as it indicates that the globular, handle-like portion of the latter tool recruits the hand in a different way compared to hafted knife handles (Gowlett 2006, 2011, 2020).

The FCR flexes and radially abducts the hand at the wrist, contributing to slicing cutting actions by simultaneously drawing an edge across a worked material and pushing it in. A reduction in FCR recruitment therefore suggests that knives increase the ease with which cutting edges can be forcefully drawn across materials. Unfortunately, this was only observed in the clay-cutting task and did not approach significance in the rope-cutting task, so we urge caution in the interpretation of this result. Our finding that the abductor digiti minimi (ADM) typically displayed the lowest levels of recruitment during the use of flakes is consistent with past research and does not detract from other advantages displayed by knives (Key et al. 2020). Indeed, the ADM is responsible for the abduction of the fifth digit and is unlikely to be recruited during grips associated with flake stone tools (Marzke and Shackley 1986; Marzke and Wullstein 1996; Marzke et al. 1998; Key et al. 2018). During the use of handles and LCT bifaces, however, it contributes to the opposition of the object in a power grip (Marzke and Wullstein 1996; Key et al. 2018), and inevitably displays greater activation as a result.

Although it is important to stress that other muscle recruitment differences were not significant, they may reflect greater variation than the RMS data initially indicate. Indeed, the LCT bifaces and flakes are more often above the % MVC threshold (~ 40%) where the force gradation strategy switches for these muscles (Milner-Brown et al. 1973) from fibre recruitment to rate coding (the rates at which activated motor units discharge action potentials [Enoka and Duchateau 2017]). This means that the bifaces and flakes likely recruited all muscle fibres, while knives potentially did not. Alongside potential limitations to blood flow observed at higher % MVCs, the switch to a reliance on rate coding during force generation would accelerate fatiguing in the non-knife tasks. This supports previous studies by Prasciunas (2007) and others (Jones 1980; Tomka 2001; Marzke 2013; Key and Lycett 2014) who note that small flake tools fatigue the hand when used for extended periods. Further, there is potentially ‘cycling’ behaviour in repetitive tasks like this, such that different activations in different muscles cycle around, likely reducing fatiguing and overuse injuries. It would be interesting if future experiments using more extended cutting tasks investigated this phenomenon and fatiguing more widely. Indeed, increased fatiguing rates during flake and LCT biface use would similarly impact cutting performance, potentially increasing any functional advantages of using hafted knives.
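The threshold reasoning above can be made concrete with a small sketch. It assumes that % MVC is calculated as the RMS amplitude recorded during a task divided by the RMS amplitude recorded during a maximum voluntary contraction, multiplied by 100; this is a common convention, but the exact normalisation used in the study is not restated in this section. The signal values, the function names, and the constant `RATE_CODING_THRESHOLD` are hypothetical.

```python
import math

# Approximate % MVC level (~40%) at which the text suggests force gradation
# shifts from fibre recruitment to rate coding for these muscles.
RATE_CODING_THRESHOLD = 40.0


def rms(samples):
    """Root mean square amplitude of a window of EMG samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def percent_mvc(task_samples, mvc_samples):
    """Express task activation as a percentage of maximum voluntary contraction."""
    mvc_amplitude = rms(mvc_samples)
    if mvc_amplitude == 0:
        raise ValueError("MVC amplitude must be non-zero")
    return 100.0 * rms(task_samples) / mvc_amplitude


def relies_on_rate_coding(pct_mvc, threshold=RATE_CODING_THRESHOLD):
    """Flag activations above the assumed threshold."""
    return pct_mvc > threshold


# Hypothetical EMG windows for one muscle (NOT study data).
mvc_window = [10.0, -10.0, 10.0, -10.0]
flake_window = [5.0, -5.0, 5.0, -5.0]
knife_window = [3.0, -3.0, 3.0, -3.0]

flake_pct = percent_mvc(flake_window, mvc_window)
knife_pct = percent_mvc(knife_window, mvc_window)
print(f"flake: {flake_pct:.0f}% MVC, above threshold: {relies_on_rate_coding(flake_pct)}")
print(f"knife: {knife_pct:.0f}% MVC, above threshold: {relies_on_rate_coding(knife_pct)}")
```

In this illustration the flake activation falls above the assumed threshold and the knife activation below it, which mirrors the pattern described in the text without reproducing any measured values.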

The comparative functional performance of stone knives

Hafted knives were more efficient than flake tools during the clay-cutting task. Although this difference was not significant, it does follow previous research highlighting the limited ability of relatively small flake tools to cut large or resistant portions of material (Jones 1980; Toth 1985; Key and Lycett 2017b), and the high effectiveness of stone knives in similar contexts (Morin 2004; Shea et al. 2002; Smallwood 2015; Gingerich and Stanford 2018; Smallwood et al. 2020). Hafted knives would, then, have conveyed benefits over ‘basic’ flake tools when large portions of material (or those displaying greater material resistance) were required to be cut in prehistory. No demonstrable performance difference was observed between the knives and LCT bifaces during the clay task, meaning that although flake tools may have been selected against in this context, alternative technologies available prior to the appearance of hafted knives could have completed such tasks within a similar timeframe. Thus, as far as our study can demonstrate, the invention of knives does not appear to be linked to the ability to more effectively cut large material masses. Rather, our study highlights just how effective LCT bifaces are when cutting substantial portions of material, matching composite hafted technologies. As mentioned above, however, when extended cutting tasks are undertaken and muscular fatiguing sets in, knives may start to outcompete LCT bifaces.

During the rope-cutting task flakes were more efficient than both the hafted knives and the LCT bifaces. Again, this difference was not significant, but it does follow previous findings insofar as flake tools are known to be particularly advantageous during cutting activities that require precision (see Key and Lycett 2017b, and references in Table 1). Potentially, the more acute edges observed on the flake tools contributed to this increased performance by reducing the forces required to initiate cuts (Key 2016). If this were the case, it makes the lower in-hand muscle recruitment observed during the use of knives relative to flakes even more marked, while simultaneously helping to explain why the proximally located muscles in the arm potentially contributed greater forces during knife use. Knives were, however, 9% faster than the LCT bifaces during this task, indicating differences in the two tool types’ ability to undertake precision cutting tasks and hinting at the greater overall ability of knives. Indeed, when both cutting tasks are combined, there are indications that knives can be best viewed as an optimal generalised cutting tool that may at times be outcompeted in specific functional contexts, but can be applied effectively and efficiently (or at least relatively so) in most material contexts.

It is important to note that the performance differences identified here were not significant, and we ask that readers consider this in future work. We nonetheless think the above conclusions are robust; differences between 10 and 20% are not marginal, while the flake and LCT biface results are supported by past research (Jones 1980; Toth and Schick 2009; Merritt 2012; Key and Lycett 2017b; Gingerich and Stanford 2018). Our finding of non-significant differences may instead reflect the experimental procedure used. That is, our participants were connected to EMG equipment throughout, and although we asked participants to complete the cutting tasks ‘as quickly as possible’, the wires trailing off each individual’s arms potentially lowered the upper limit of each individual’s cutting speed. In other words, peak cutting speeds may have been artificially limited by the EMG equipment. It is therefore possible that the scale of differences observed here would have been larger had the participants not been connected to EMG equipment. Future research may, therefore, benefit from the use of wireless EMG sensors. It is also notable that although participants had time to become familiar with the use of all tool types, the stone knives would have been a more familiar tool concept, and this could have impacted our results. We believe that any potential impact would have been marginal, as participants very quickly became comfortable using flakes and LCT bifaces in the practice period, and the slicing cutting action required was the same across all tool types (i.e. all tools performed familiar cutting actions).

Conclusion

The question of why hominins first invented handles, and in turn hafted knives, has often been discussed (e.g. Barham 2013, and references therein). To date, however, hypotheses concerning the Palaeolithic origin of handles have largely remained untested using robust, empirically defined experiments. Here we begin to address this deficiency by testing the two main functional hypotheses concerning the invention of hafted stone knives: whether they display ergonomic and functional performance benefits relative to non-hafted stone alternatives.

We provide evidence that hafted stone knives display ergonomic advantages relative to two key alternative technologies produced prior to their invention. This advantage is twofold. First, muscles responsible for securing tools in the hand during use display lower activation levels during the use of knives. Second, knives may allow greater forces to be transferred through the tool by enabling the larger, more proximal upper limb muscles that contribute most to cutting forces to be activated to a greater extent. Hafted composite tools would then have displayed ergonomic benefits relative to non-hafted alternatives, likely contributing to their invention and sustained production. Our functional performance data were less conclusive, but there are indications that knives are particularly efficient compared to other stone tool types when considered as a generalised cutting tool, amenable to multiple functional contexts. The advent of handles, or as Barham put it the ‘first industrial revolution’ (Barham 2013), is then most clearly linked to improvements in the ergonomics (i.e. ease and comfort) of tool use.

Ergonomic and performance factors cannot, however, be viewed alone when considering why knives were first invented and then recurrently produced and selected over time. For example, Keeley (1982) provides other reasons why stone tools were hafted, including increasing the precision of large cutting tool use or conservation of stone raw materials. Knife blades may perhaps be more apt to be used as multifunctional implements when hafted (e.g. Smallwood 2015; Smallwood et al. 2020). Knife handles may also decrease the chance of injury during use, either in cutting or self-defence activities. Thus, a potential future avenue of research would be to examine the friction and grip ability of stone versus wood in different scenarios, for example when the tools are dry, covered with ochre, or coated with fat or blood. Finally, stone blade size may play a role in the decision to adopt hafting or not; small flakes or bladelets, and associated raw material limitations, may encourage the invention of hafting for more effective cutting edge use (Rots 2010). Nevertheless, our experimental demonstration that hafted knives provide ergonomic and potentially performance advantages means that these two factors can be invoked as possible contributing motivations for the invention of tools still in use to this day.

Data availability

All data is available from the lead author on reasonable request.

Code availability

Not applicable.

References

Agostinucci J, McLinden J (2016) Ergonomic comparison between a ‘right angle’ handle style and standard style paint brush: An electromyographic analysis. Int J Indust Ergon 56:130–137

Alperson-Afil N, Goren-Inbar N (2016) Acheulian hafting: proximal modification of small flint flakes at Gesher Benot Ya’aqov, Israel. Quat Intern 411:34–43

Atkins AG, Xu X, Jeronimidis G (2004) Cutting, by ‘pressing and slicing’, of thin floppy slices of materials illustrated by experiments on cheddar cheese and salami. J Mat Sci 39:2761–2766

Barham L (2013) From hand to handle: the first industrial revolution. Oxford University Press, Oxford

Bebber MR, Lycett SJ, Eren MI (2017) Developing a stable point: evaluating the temporal and geographic consistency of Late Prehistoric unnotched triangular point functional design in Midwestern North America. J Anth Arch 47:72–82

Bebber MR, Key AJM, Fisch M, Meindl RS, Eren MI (2019) The exceptional abandonment of metal tools by North American hunter-gatherers, 3000 B.P. Sci Rep 9:5756

Biermann Gürbüz R, Lycett SJ (2021) Could woodworking have driven lithic tool selection? J Human Evo 156:102999

Bilbao I, Rios-Garaizar J, Arrizabalaga A (2019) Relationship between size and precision of flake technology in the Middle Paleolithic: an experimental study. J Arch Sci Rep 25:530–547

Binford LR (1986) An Alyawara day: making men’s knives and beyond. Am Antiq 51:547–562

Bradley BA, Collins MB, Hemmings A (2010) Clovis technology. International Monographs in Prehistory, Ann Arbor

Braun DR, Aldeias V, Archer W, Arrowsmith JR, Baraki N, Campisano CJ, Deino AL, DiMaggio EN, Dupont-Nivet G, Engda B, Feary DA, Garello DI, Kerfelew Z, McPherron SP, Patterson DP, Reeves JS, Thompson JC, Reed KE (2019) Earliest known Oldowan artifacts at >2.58 Ma from Ledi-Geraru, Ethiopia, highlight early technological diversity. Proc Nat Acad Sci 116(24):11712–11717

Brooks AS, Yellen JE, Potts R, Behrensmeyer AK, Deino AL, Leslie DE, Ambrose SH, Ferguson JR, d’Errico F, Zipkin AM, Whittaker S, Post J, Veatch EG, Foecke K, Clark JB (2018) Long-distance stone transport and pigment use in the earliest Middle Stone Age. Science 360(6384):90–94

Calandra I, Gneisinger W, Marreiros J (2020) A versatile mechanized setup for controlled experiments in archeology. Sci and Tech Arch Res 6(1):30–40

Churchill SE (2001) Hand morphology, manipulation, and tool use in Neanderthals and early modern humans of the Near East. Proc Nat Acad Sci 98(6):2953–2955

Clancy EA, Negro F, Farina D (2016) Single-channel techniques for information extraction from the surface EMG signal. In: Merletti R, Farina D (eds) Surface electromyography: physiology, engineering, and applications. Wiley and Sons, Hoboken, pp 91–125

Clarkson C, Haslam M, Harris C (2015) When to retouch, haft, or discard? Modeling optimal use/maintenance schedules in lithic tool use. In: Goodale N, Andrefsky W (eds) Lithic Technological Systems and Evolutionary Theory. Cambridge University Press, Cambridge, pp 117–138

Collins S (2008) Experimental investigations into edge performance and its implications for stone artefact reduction modelling. J Arch Sci 35(8):2164–2170

Diogo R, Richmond BG, Wood B (2012) Evolution and homologies of primate and modern human hand and forearm muscles, with notes on thumb movements and tool use. J Human Evo 63(1):64–78

Emery K (2010) A re-examination of variability in handaxe form in the British Palaeolithic. Unpublished PhD Thesis, University College London

Enoka RM, Duchateau J (2016) Physiology of muscle activation and force generation. In: Merletti R, Farina D (eds) Surface electromyography: physiology, engineering, and applications. Wiley and Sons, Hoboken, pp 1–29

Enoka RM, Duchateau J (2017) Rate coding and the control of muscle force. Cold Spring Harb Perspect Med 7(1):a029702

Eren MI (2012) Were unifacial tools regularly hafted by Clovis foragers in the North American Lower Great Lakes region? An empirical test of edge class richness and attribute frequency among distal, proximal, and lateral tool-sections. J Ohio Arch 2:1–15

Eren MI, Lycett SJ (2012) Why Levallois? A morphometric comparison of experimental ‘preferential’ Levallois flakes versus debitage flakes. PLOS One 7(1):e29273

Eren MI, Lycett SJ, Patten RJ, Buchanan B, Pargeter J, O’Brien MJ (2016) Test, model, and method validation: the role of experimental stone artifact replication in hypothesis-driven archaeology. Ethnoarch 8(2):103–136

Fedato A, Silva-Gago M, Terradillos-Bernal M, Alonso-Alcalde R, Bruner E (2020) Hand grasping and finger flexion during Lower Paleolithic stone tool ergonomic exploration. Arch Anth Sci 12:254

Gazzoni M, Afsharipour B, Merletti R (2016) Surface EMG in ergonomics and occupational medicine. In: Merletti R, Farina D (eds) Surface electromyography: physiology, engineering, and applications. Wiley and Sons, Hoboken, pp 54–90

Gingerich JAM, Stanford DJ (2018) Lessons from Ginsberg: an analysis of elephant butchery tools. Quat Intern 466B:269–283

Goldstein ST, Shaffer CM (2017) Experimental and archaeological investigations of backed microlith function among Mid-to-Late Holocene herders in southwestern Kenya. Arch Anth Sci 9(8):1767–1788

Gowlett JAJ (2006) The elements of design form in Acheulean bifaces: modes, modalities, rules and language. In: Goren-Inbar N, Sharon G (eds) Axe age: Acheulian tool-making from quarry to discard. Equinox, London, pp 203–221

Gowlett JAJ (2011) The vital sense of proportion: transformation, golden section, and 1:2 preference in Acheulean bifaces. PaleoAnth 2011:174–187

Gowlett JAJ (2015) Variability in an early hominin percussive tradition: the Acheulean versus cultural variation in modern chimpanzee artefacts. Phil Trans R Soc B 370(1682):20140358

Gowlett JAJ (2020) Deep structure in the Acheulean adaptation: technology, sociality and aesthetic emergence. Adap Behav. https://doi.org/10.1177/1059712320965713

Graesch AP (2007) Modeling ground slate knife production and implications for the study of household labor contributions to salmon fishing on the Pacific Northwest Coast. J Anth Arch 26(4):576–606

Grant KA, Habes DJ (1997) An electromyographic study of strength and upper extremity muscle activity in simulated meat cutting tasks. App Ergon 28(2):129–137

Grosman L, Goldsmith Y, Smilansky U (2011) Morphological analysis of Nahal Zihor handaxes: a chronological perspective. PaleoAnth 2011:203–215

Hamrick MW, Churchill SE, Schmitt D, Hylander WL (1998) EMG of the human flexor pollicis longus muscle: implications for the evolution of hominid tool use. J Human Evo 34(2):123–136

Harmand S, Lewis JE, Feibel CS, Lepre CJ, Prat S, Lenoble A, Boes X, Quinn RL, Brenet M, Arroyo A, Taylor N, Clement S, Daver G, Brugal J-P, Leakey L, Mortlock RA, Wright JD, Lokorodi S, Kirwa C, Kent DV, Roche H (2015) 3.3-million-year-old stone tools from Lomekwi 3, West Turkana, Kenya. Nature 521(7552):310–315

Hermens HJ, Freriks B, Disselhorst-Klug C, Rau G (2000) Development of recommendations for SEMG sensors and sensor placement procedures. J of Electromyog and Kines 10(5):361–374

Horowitz RA, McCall GS (2013) Evaluating indices of curation for Archaic North American bifacial projectile points. J Field Arch 38(4):347–361

Iovita R (2011) Shape variation in Aterian tanged tools and the origins of projectile technology: a morphometric perspective on stone tool function. PLOS One 6(12):e29029

Iovita R (2014) The role of edge angle maintenance in explaining technological variation in the production of Late Middle Paleolithic bifacial and unifacial tools. Quat Intern 350:105–115

Jeske RJ (1992) Energetic efficiency and lithic technology: an Upper Mississippian example. Am Antiq 57(3):467–481

Jobson RW (1986) Stone tool morphology and rabbit butchering. Lithic Tech 15(1):9–20

Jones PR (1980) Experimental butchery with modern stone tools and its relevance for Palaeolithic archaeology. World Arch 12(2):153–165

Jöris O (2006) Bifacially backed knives (Keilmesser) in the central European Middle Palaeolithic. In: Goren-Inbar N, Sharon G (eds) Axe age: Acheulian tool-making from quarry to discard. Equinox, London, pp 287–310

Justice ND (1987) Stone Age spear and arrow points of the Midcontinental and Eastern United States: a modern survey and reference. Indiana University Press, Bloomington

Kaminská L, Kozłowski JK, Škrdla P (2011) New approach to the Szeletian-Chronology and cultural variability. Eurasian Prehist 8(1–2):29–49

Karakostis FA, Reyes-Centeno H, Franken M, Hotz G, Rademaker K, Harvati K (2020) Biocultural evidence of precise manual activities in an Early Holocene individual of the high-altitude Peruvian Andes. Am J Phys Anth. https://doi.org/10.1002/ajpa.24160

Keeley LH (1982) Hafting and retooling: effects on the archaeological record. Am Antiq 47(4):798–809

Key AJM (2016) Integrating mechanical and ergonomic research within functional and morphological analyses of lithic cutting technology: key principles and future experimental directions. Ethnoarch 8(1):69–89

Key AJM, Lycett SJ (2014) Are bigger flakes always better? An experimental assessment of flake size variation on cutting efficiency and loading. J Arch Sci 41:140–146

Key AJM, Lycett SJ (2017a) Influence of handaxe size and shape on cutting efficiency: a large-scale experiment and morphometric analysis. J of Arch Meth Theory 24:514–541

Key AJM, Lycett SJ (2017b) Reassessing the production of handaxes versus flakes from a functional perspective. Arch Anth Sci 9:737–753

Key AJM, Merritt SR, Kivell TL (2018) Hand grip diversity and frequency during the use of Lower Palaeolithic stone cutting-tools. J Human Evo 125:137–158

Key AJM, Lycett SJ (2019) Biometric variables predict stone tool functional performance more effectively than tool-form attributes: a case study in handaxe loading capabilities. Archaeom 61(3):539–555

Key AJM, Farr I, Hunter R, Winter SL (2020) Muscle recruitment and stone tool use ergonomics across three million years of Palaeolithic technological transitions. J Human Evo 144:102796

Kleindienst MR, Keller CM (1976) Towards a functional analysis of handaxes and cleavers: the evidence from Eastern Africa. Man 11(2):176–187

Kozlowski JK (2003) From bifaces to leaf points. In: Sorressi M, Dibble H (eds) Multiple approaches to the study of bifacial technologies. University of Pennsylvania Museum of Archaeology and Anthropology, Philadelphia, pp 149–164

Lin SC, Rezek Z, Braun D, Dibble HL (2013) On the utility and economization of unretouched flakes: the effects of exterior platform angle and platform depth. Am Antiq 78(4):724–745

Lombard M, Parsons I, Van der Ryst MM (2004) Middle Stone Age lithic point experimentation for macro-fracture and residue analyses: the process and preliminary results with reference to Sibudu Cave points: Sibudu Cave. S African J Sci 100(3–4):159–166

Lombard M (2006) First impressions of the functions and hafting technology of Still Bay pointed artefacts from Sibudu Cave. S African Human 18(1):27–41

Lycett SJ, Eren MI (2013) Levallois lessons: the challenge of integrating mathematical models, quantitative experiments and the archaeological record. World Arch 45(4):519–538

Machin AJ, Hosfield R, Mithen SJ (2005) Testing the functional utility of handaxe symmetry: fallow deer butchery with replica handaxes. Lithics 26:23–37

Marzke MW (1997) Precision grips, hand morphology, and tools. Am J Phys Anth 102(1):91–110

Marzke MW (2013) Tool making, hand morphology and fossil hominins. Phil Trans R Soc B 368(1630):20120414

Marzke MW, Shackley MS (1986) Hominid hand use in the Pliocene and Pleistocene: evidence from experimental archaeology and comparative morphology. J Human Evo 15(6):439–460

Marzke MW, Wullstein KL (1996) Chimpanzee and human grips: a new classification with a focus on evolutionary morphology. Int J Prim 17:117–139

Marzke MW, Toth N, Schick K, Reece S, Steinberg B, Hunt K, Linscheid RL, An K-N (1998) EMG study of hand muscle recruitment during hard hammer percussion manufacture of Oldowan tools. Am J Phys Anth 105(3):315–332

Mazza PPA, Martini F, Sala B, Magi M, Colombini MP, Giacho G, Landucci F, Lemorini C, Modugno F, Ribechini E (2006) A new Palaeolithic discovery: tar-hafted stone tools in a European Mid-Pleistocene bone-bearing bed. J Arch Sci 33(9):1310–1318

McNabb J (2005) Hominins and the Early-Middle Pleistocene transition: evolution, culture and climate in Africa and Europe. In: Head MJ, Gibbard PL (eds) Early-Middle Pleistocene Transitions: The Land-Ocean Evidence. Geological Society, London, pp 287–304

Meltzer DJ (2021) First peoples in a new world, 2nd edn. Cambridge University Press, Cambridge

Merritt SR (2012) Factors affecting Early Stone Age cut mark cross-sectional size: implications from actualistic butchery trials. J Arch Sci 39(9):2984–2994

Merritt SR, Peters KD (2019) The impact of flake tool attributes and butcher experience on carcass processing time and efficiency during experimental butchery trials. Int J Osteoarch 29(2):220–230

Mesoudi A (2011) Cultural evolution. University of Chicago Press, Chicago

Milner-Brown HS, Stein RB, Yemm R (1973) Changes in firing rate of human motor units during linearly changing voluntary contractions. J Phys 230(2):371–390

Morin J (2004) Cutting edges and salmon skin: variation in salmon processing technology on the Northwest Coast. Can J Arch 28(2):281–318

Nami HG (2017) Exploring the manufacture of bifacial stone tools from the Middle Rio Negro Basin, Uruguay: an experimental approach. Ethnoarch 9(1):53–80

Niekus MJLT, Kozowyk PRB, Langejans GHJ, Ngan-Tillard D, van Keulen H, van der Plicht J, Cohen KM, van Wingerden W, van Os B, Smit BI, Amkreutz LWSW, Johansen L, Verbaas A, Dusseldorp GL (2019) Middle Paleolithic complex technology and a Neandertal tar-backed tool from the Dutch North Sea. Proc Nat Acad Sci 116(44):22081–22087

Pargeter J, Shea JJ (2019) Going big versus going small: lithic miniaturization in hominin lithic technology. Evo Anth 28(2):72–85

Perrone A, Wilson M, Fisch M, Buchanan B, Bebber MR, Eren MI (2020) Human behavior or taphonomy? On the breakage of Eastern North American Paleoindian endscrapers. Arch Anth Sci 12(8):1–12

Potts R, Behrensmeyer AK, Faith JT, Tryon CA, Brooks AS, Yellen JE, Deino AL, Kinyanjui R, Clark JB, Haradon CM, Levin NE, Meijer HJM, Veatch EG, Owen RB, Renaut RW (2018) Environmental dynamics during the onset of the Middle Stone Age in eastern Africa. Science 360(6384):86–90

Prasciunas MM (2007) Bifacial cores and flake production efficiency: an experimental test of technological assumptions. Am Antiq 72(2):334–348

Preysler JB, Navas CT, Diaz SP, Bustos-Perez G, Romagnoli F (2016) To grip or not to grip: an experimental approach for understanding the use of prehensile areas in Mousterian tools. Bol De Arque Exp 11:200–218

Reti JS (2016) Quantifying Oldowan stone tool production at Olduvai Gorge, Tanzania. PLOS One 11(1):e0147352

Reubens K (2013) Regional behaviour among late Neanderthal groups in Western Europe: a comparative assessment of late Middle Palaeolithic bifacial tool variability. J Human Evo 65(4):341–362

Rimkus T, Slah G (2016) Experimental and use-wear examinations of flint knives: reconstructing the butchering techniques of prehistoric Lithuania. Arch Lituana 17:77–88

Rolian C, Lieberman DE, Zermeno JP (2011) Hand biomechanics during simulated stone tool use. J Human Evo 61(1):26–41

Rots V (2010) Prehension and hafting traces on stone tools. Leuven University Press, Leuven

Rots V (2013) Insights into early Middle Palaeolithic tool use and hafting in Western Europe. The functional analysis of level IIa of the early Middle Palaeolithic site of Biache-Saint-Vaast (France). J Arch Sci 40(1):497–506

Rots V, Van Peer P (2006) Early evidence of complexity in lithic economy: core-axe production, hafting and use at Late Middle Pleistocene site 8-B-11, Sai Island (Sudan). J Arch Sci 33(3):360–371

Rots V, Van Peer P, Vermeersch PM (2011) Aspects of tool production, use, and hafting in Palaeolithic assemblages from Northeast Africa. J Human Evo 60(5):637–664

Rots V, Hayes E, Akerman K, Green P, Clarkson C, Lepers C, Bordes L, McAdams C, Foley E, Fullagar R (2020) Hafted tool-use experiments with Australian aboriginal plant adhesives: Triodia Spinifex, Xanthorrhoea grass tree and Lechenaultia divaricata Mindrie. EXARC J 2020 (1)

Rousseau MK (2004) Old cuts and scrapes: composite chipped stone knives on the Canadian Plateau. Can J Arch 28:1–31

Semaw S, Renne P, Harris JW, Feibel CS, Bernor RL, Fesseha N, Mowbray K (1997) 2.5-million-year-old stone tools from Gona, Ethiopia. Nature 385(6614):333–336

Semaw S, Rogers MJ, Quade J, Renne PR, Butler RF, Dominguez-Rodrigo M, Stout D, Hart WS, Pickering T, Simpson SW (2003) 2.6-Million-year-old stone tools and associated bones from OGS-6 and OGS-7, Gona, Afar, Ethiopia. J Human Evo 45(2):169–177

Shea JJ, Brown KS, Davis ZJ (2002) Controlled experiment with Middle Paleolithic spear points: Levallois points. In: Mathieu JR (ed) Experimental Archaeology: Replicating Past Objects, Behaviors and Processes. Archaeopress, Oxford, pp 55–72

Shott MJ (1995) How much is a scraper? Curation, use rates, and the formation of scraper assemblages. Lithic Tech 20:53–72

Silva-Gago M, Fedato A, Terradillos-Bernal M, Alonso-Alcalde R, Martín-Guerra E, Bruner E (2019) Form influence on electrodermal activity during stone tool manipulation. European Society for the Study of Human Evolution Conference, September, Liège

Škrdla P (2016) Bifacial technology at the beginning of the Upper Paleolithic in Moravia. Litikum 4:5–7

Smallwood AM (2015) Building experimental use-wear analogues for Clovis biface functions. Arch Anth Sci 7:13–26

Smallwood AM, Pevny CD, Jennings TA, Morrow JE (2020) Projectile? Knife? Perforator? Using actualistic experiments to build models for identifying microscopic usewear traces on Dalton points from the Brand site, Arkansas, North America. J Arch Sci Rep 31:102337

Stegeman D, Hermens H (2007) Standards for surface electromyography: the European project Surface EMG for non-invasive assessment of muscles (SENIAM). Roessingh Research and Development, Enschede, pp 108–112

Stevens NE, McElreath R (2015) When are two tools better than one? Mortars, millingslabs, and the California acorn economy. J Anth Arch 37:100–111

Thomas KA, Story BA, Eren MI, Buchanan B, Andrews BN, O’Brien MJ, Meltzer DJ (2017) Explaining the origin of fluting in North American Pleistocene weaponry. J Arch Sci 81:23–30

Tomka SA (2001) The effect of processing requirements on reduction strategies and tool form: a new perspective. In: Andrefsky W Jr (ed) Lithic Debitage: Context, Form, Meaning. The University of Utah Press, Salt Lake City, pp 207–225

Toth N (1985) The Oldowan reassessed: a close look at early stone artefacts. J Arch Sci 12:101–120

Toth N, Schick K (2009) The importance of actualistic studies in Early Stone Age research: some personal reflections. In: Toth N, Schick K (eds) The Cutting Edge: New Approaches to the Archaeology of Human Origins. Stone Age Institute Press, pp 267–344

Vaquero M, Romagnoli F (2018) Searching for lazy people: the significance of expedient behavior in the interpretation of Palaeolithic assemblages. J Arch Meth Theory 25:334–367

Walker PL, Long JC (1977) An experimental study of the morphological characteristics of tool marks. Am Antiq 42:605–616

Weedman KJ (2006) An ethnoarchaeological study of hafting and stone tool diversity among the Gamo of Ethiopia. J Arch Meth Theory 13:188–237

Wilkins J, Schoville BJ, Brown KS, Chazan M (2012) Evidence for early hafted hunting technology. Science 338(6109):942–946

Wilkins J, Schoville BJ, Brown KS (2014) An experimental investigation of the functional hypothesis and evolutionary advantage of stone-tipped spears. PLoS One 9(8):e104514

Williams-Hatala EM, Hatala KG, Gordon M, Key A, Kasper M, Kivell TL (2018) The manual pressures of stone tool behaviors and their implications for the evolution of the human hand. J Human Evo 119:14–26

Willis LM, Eren MI, Rick TC (2008) Does butchering fish leave cut marks? J Arch Sci 35(5):1438–1444

Wilson JR (2014) Fundamentals of systems ergonomics/human factors. App Ergon 45(1):5–13

Wilson M, Perrone A, Smith H, Norris D, Pargeter J, Eren MI (2020) Modern thermoplastic (hot glue) versus organic-based adhesives and haft bond failure rate in experimental prehistoric ballistics. Int J of Adhe and Adhes 104:102717

Wynn T (2020) Ergonomic clusters and displaced affordances in early lithic technology. Adap Behav. https://doi.org/10.1177/1059712320932333

Zupancich A, Lemorini C, Gopher A, Barkai R (2016) On Quina and demi-Quina scraper handling: preliminary results from the late Lower Paleolithic site of Qesem Cave, Israel. Quat Intern 398:94–102

Funding

This research was supported by a British Academy Postdoctoral Fellowship awarded to AK (pf160022). IF and RH were supported by graduate teaching scholarships awarded by the University of Kent. MIE and AM are supported by the Kent State University College of Arts and Sciences.

Author information

Contributions

Not applicable/required.

Ethics declarations

Ethics approval

Ethical approval was granted by the University of Kent School of Sports and Exercise Science (ref: prop 131_2016_17).

Consent to participate

All participants gave informed written consent.

Consent for publication

Not applicable (no identifying information or images).

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Key, A., Farr, I., Hunter, R. et al. Why invent the handle? Electromyography (EMG) and efficiency of use data investigating the prehistoric origin and selection of hafted stone knives. Archaeol Anthropol Sci 13, 162 (2021). https://doi.org/10.1007/s12520-021-01421-1

DOI: https://doi.org/10.1007/s12520-021-01421-1