Abstract

A shoreline is defined as the line of contact between the land and the water surface. Shoreline extraction and mapping is critical in many coastal zone applications, such as safe navigation and coastal environmental protection, yet digitizing a feature such as the coastline is a very tedious and time-consuming operation. In this research, shoreline mapping at the sub-pixel and pixel levels was evaluated in the northern part of the coastal zone of Egypt (Port Said). Three image fusion techniques were used to merge two co-registered datasets, radar (Radarsat) and optical (Landsat), in order to improve the classification accuracy. The spatial, spectral, and radiometric qualities of the fused images were evaluated. The three fused images, together with the Landsat and Radarsat images, were fed to a fuzzy classifier and a maximum likelihood classifier, each of which delineated two classes: water and nonwater. The accuracy of the classified images was estimated against reference data. Comparison of the results showed that image fusion improved the classification accuracy and that the fuzzy classification was more accurate than the maximum likelihood classification in all cases. The best of the ten classified images was obtained from the Radarsat-Landsat image fused with the IHS technique and classified with the fuzzy classifier. Shorelines were extracted from the (IHS-fuzzy), (SAR-fuzzy), and (Landsat-fuzzy) classifications. Experimental results showed that the shoreline extraction accuracy is dramatically improved by the effective image fusion of Landsat and SAR data. Based on twenty differential GPS check points, the accuracies of the shorelines extracted from the (Landsat-fuzzy), (Radarsat-fuzzy), and (IHS-fuzzy) data are estimated to be 5.69, 5.26, and 5.14 m, respectively. The three shorelines were also compared to a reference shoreline, with the RMS computed at thirty check points on the reference shoreline; the resulting accuracies for the (Landsat-fuzzy), (SAR-fuzzy), and (IHS-fuzzy) data are estimated to be 8.3, 7.27, and 6.75 m, respectively. The positional quality of the extracted shorelines (using the generalization factor) from the (Landsat-fuzzy), (SAR-fuzzy), and (IHS-fuzzy) data is estimated to be 0.977, 0.986, and 0.999, respectively.

Introduction

Shoreline extraction and mapping is critical for safe navigation, coastal resource management, coastal environmental protection, sustainable coastal development, and urban planning. Because a shoreline is dynamic by nature, defining, mapping, and monitoring it are complicated tasks, and information on shoreline change is critical for shoreline mapping. Shorelines are never stable over either the long term or the short term, because the coastal area is subject to many physical processes, such as tidal flooding, sea level rise, land subsidence, and erosion–sedimentation.

Shoreline mapping techniques have developed from conventional field survey methods, through expensive airborne coastal mapping techniques, to new automatic and semi-automatic extraction techniques based on remote sensing satellite images (Van and Binh 2008; Ruiz et al. 2007; Buckley et al. 2002). Classification of remotely sensed imagery is one possible method of mapping these features.

Most traditional classification methods are ‘crisp’ or ‘hard’ in partitioning: every given object is strictly assigned to a single group. In practice, mixed pixels occur because the pixel size may not be fine enough to capture the ground detail needed for a specific application, or because ground properties, such as vegetation and soil types, vary continuously. The result of hard classification is one class per pixel, so much of the information about the membership of the pixel in other classes is lost. Allowing pixels to have multiple and partial class memberships, so that both pure and mixed pixels are accommodated in the classification process, has generally been the solution to the mixed pixel problem. This is achieved, among other methods, by soft classification techniques, which assign a pixel to several land cover classes in proportion to the area of the pixel that each class covers. The fuzzy c-means algorithm is one of the most popular soft classification techniques (Okeke and Karnieli 2006; Kumar et al. 2006; Atkinson 1997).
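The idea of partial class membership can be illustrated with the membership formula used inside fuzzy c-means. The sketch below is only an illustration under stated assumptions: it computes memberships for fixed class centres (it does not iterate to update the centres), and the function name, the single-band centres, and the fuzziness exponent m = 2 are hypothetical choices that do not come from the paper.

```python
import math

def fcm_memberships(value, centers, m=2.0):
    """Return fuzzy c-means membership grades of one pixel in each class.

    value   -- pixel feature vector (sequence of floats)
    centers -- list of class-centre vectors (hypothetical values below)
    m       -- fuzziness exponent (> 1); m = 2 is assumed here
    """
    dists = [math.dist(value, c) for c in centers]
    # A pixel that coincides with a centre belongs fully to that class.
    if 0.0 in dists:
        hits = [1.0 if d == 0.0 else 0.0 for d in dists]
        return [h / sum(hits) for h in hits]
    power = 2.0 / (m - 1.0)
    # u_i = 1 / sum_j (d_i / d_j)^(2 / (m - 1)); the grades sum to 1.
    return [1.0 / sum((d_i / d_j) ** power for d_j in dists) for d_i in dists]

# Hypothetical single-band class centres: water = 20, nonwater = 100.
centers = [(20.0,), (100.0,)]
pure_water = fcm_memberships((20.0,), centers)  # pixel sitting on the water centre
mixed = fcm_memberships((60.0,), centers)       # pixel halfway between the centres
print(pure_water, mixed)
```

A hard classifier would force the halfway pixel into one class, whereas the membership grades keep the information that it is equally compatible with both.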

Remote sensing optical satellite data and synthetic aperture radar (SAR) imagery are now available for automatic or semi-automatic shoreline extraction and mapping (Di et al. 2003). A SAR is an active instrument and is therefore independent of atmospheric conditions such as cloud cover and rain (Gungor and Shan 2006; Dekker 2000), so it can fill in the blind areas that result from the limitations of optical sensors. SAR also has some ability to penetrate the earth surface cover and obtain information from beneath it. Furthermore, SAR provides additional earth surface information, which can compensate for the information that other sensors fail to capture (Young et al. 2000). SAR images (intensity and coherence) contain information on the surface roughness, texture, dielectric properties, and change of state of natural and man-made objects (Ganzorig et al. 2006).

The combined use of optical and radar images has a number of advantages: because of the nature of the electromagnetic radiation used, a specific object or class that is not seen on the passive sensor image might be seen on the active sensor image, and vice versa (Burini et al. 2008; Ganzorig et al. 2006).

The objective of image fusion is to enhance the spatial resolution of multispectral images using a panchromatic (Pan) image with a higher spatial resolution. The main purpose is to obtain a fused image that retains the spatial resolution of the panchromatic image and the color content of the multispectral images. Therefore, a good fusion algorithm should not distort the color content of the original multispectral image while enhancing its spatial resolution (Wu et al. 2008; Gungor and Shan 2006; Garzelli 2002). Fusion of optical and SAR imagery is more difficult than ordinary pan-sharpening because the gray values of SAR imagery do not correlate with those of multispectral imagery. Much of the “image fusion” literature focuses on the problem of combining imagery from multiple sensors on a common platform. The more difficult problem of combining imagery from different platforms (Gordon and Logic 2005) is examined here. The problem is to maintain, to the extent possible, the spectral content of the original Landsat image while retaining the detail of the high-resolution radar image, despite the large difference (5×) in resolution between the two sources (Mercer et al. 2008).

The research objectives are to evaluate three image fusion techniques for the improvement of classification accuracy and to assess shoreline extraction in the northern part of the coastal zone of Egypt (Port Said) from SAR, Landsat, and fused SAR-Landsat images.

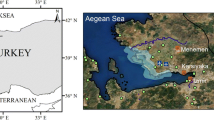

Study area and dataset

The study area is located in the northern part of the coastal zone of Egypt and covers Port Said Governorate. It extends from the Mediterranean Sea coast at Port Said about 31 km to the south, from Port Said along the Suez Canal and then about 31 km to the east into Sinai, and 22 km to the west along the Mediterranean Sea, with an area of approximately 2,000 km².

Two types of images have been used: Landsat ETM+ and Radarsat-1 CEOS. The shoreline position changes continuously with time because of beach variation resulting from on-shore–off-shore and alongshore sediment transport, and because of the dynamic nature of water levels at the coastal boundary, such as waves and tides. In our case the two images were acquired in the same season (autumn), with a difference in acquisition date of 25 days. This zone is characterized by relatively stable conditions with respect to tidal effects, and no abrupt changes have occurred in the tidal regime of the area in the last 10 years. We therefore assume that there are no significant differences between the shorelines in the Landsat and SAR images.

Table 1 summarizes the specifications of these data. Twenty well-distributed differential GPS control points and twenty differential GPS check points, observed with an accuracy of ±4 cm in x and y and ±3 cm in z, were used. Topographic maps at a scale of 1:25,000 (dated 2000), produced from aerial photos (dated 2000), were also used. The maps are published by the Egyptian Surveying Authority in the Transverse Mercator projection and were used to collect reference points for the accuracy assessment of the classifications.

Methodology

Geometric correction of the multisensor images

Twenty well-distributed differential GPS control points were used to geometrically correct the SAR image to the Universal Transverse Mercator (UTM) map projection. The SAR image was resampled to a pixel size of 6.25 m. For the actual transformation, a first-order transformation and nearest neighbor resampling were applied. The root mean square errors in x and y and the total RMS of the control points were 2.95, 4.12, and 5.07 m, respectively. The root mean square errors in x and y and the total RMS of the twenty check points were 3.22, 4.16, and 5.26 m, respectively. Figure 1 illustrates the geocoded Radarsat image (with speckle noise).
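The reported totals are consistent with combining the x and y errors in quadrature. The following sketch checks that arithmetic; the function names are illustrative, and the assumption that the total RMS is the root sum of squares of the per-axis values is inferred from the numbers rather than stated in the text.

```python
import math

def rmse(residuals):
    """Root mean square of a list of residuals (metres)."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))

def total_rmse(rmse_x, rmse_y):
    """Combine per-axis RMSE values into a planimetric total (assumed quadrature sum)."""
    return math.hypot(rmse_x, rmse_y)

# Per-axis values reported for the SAR control points and check points.
control_total = round(total_rmse(2.95, 4.12), 2)
check_total = round(total_rmse(3.22, 4.16), 2)
print(control_total, check_total)  # 5.07 5.26
```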

The optical images (multispectral Landsat ETM+ images) were geometrically corrected. Four scenes of Landsat TM images were mosaicked and a subset covering the study area was extracted. Although the multispectral Landsat ETM+ images had been geometrically corrected, their geometric accuracy was not sufficient for combining them with the other high-resolution datasets. Therefore, the subset of Landsat ETM+ (bands 3, 4, and 5) was further rectified to the UTM map projection using image-to-image registration and was resampled to a pixel size of 6.25 m using nearest neighbor resampling. Although the pixel sizes of the RADARSAT CEOS image and the Landsat ETM+ image differ by a factor of 5, both were resampled to the same pixel size so that they could be fused. Twenty control points were selected on both the SAR and Landsat images at clearly delineated crossings of roads, canals, and other distinct sites. The root mean square errors in x and y and the total RMS of the twenty control points were 4.06, 4.61, and 6.13 m, respectively. The root mean square errors in x and y and the total RMS of the twenty check points were 5.05, 2.63, and 5.69 m, respectively. Figure 2 shows the geocoded Landsat ETM+ image.

Filtering of the SAR image

A disadvantage of SAR is that it contains speckle noise, which can hamper interpretation and information extraction, but various filtering methods have been developed to reduce this problem (Dupas 2000; Gupta and Gupta 2007). Two speckle filters were tested on the RADARSAT CEOS images: the Gamma-MAP filter and the Lee-Sigma filter. A window size of 3 × 3 was applied for each filter. Visual judgment of the results indicated that the Gamma-MAP filter was better than the Lee-Sigma filter at smoothing the image while preserving the edges (Fig. 3). All processing steps were performed using ERDAS Imagine 9.2.
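To make the idea of adaptive speckle filtering concrete, the sketch below implements a basic Lee filter on a small 2-D list. It is not the Gamma-MAP or Lee-Sigma filter used in the paper, and it is not the ERDAS Imagine implementation; it is a simpler relative, shown only to illustrate how a local-statistics filter smooths flat areas while keeping strong edges. The `looks` parameter and the choice of a noise coefficient of variation of 1/sqrt(looks) for intensity data are assumptions.

```python
def lee_filter(image, looks=1.0, size=3):
    """Basic Lee speckle filter on a 2-D list of intensities.

    looks -- assumed equivalent number of looks; the squared noise
             coefficient of variation is taken as 1 / looks
    size  -- odd window size (3 gives a 3 x 3 window)
    """
    rows, cols = len(image), len(image[0])
    half = size // 2
    cu2 = 1.0 / looks
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Collect the window, clipped at the image border.
            win = [image[i][j]
                   for i in range(max(0, r - half), min(rows, r + half + 1))
                   for j in range(max(0, c - half), min(cols, c + half + 1))]
            mean = sum(win) / len(win)
            var = sum((v - mean) ** 2 for v in win) / len(win)
            if mean == 0.0 or var == 0.0:
                out[r][c] = mean  # flat window: output the local mean
                continue
            ci2 = var / (mean * mean)  # squared local coefficient of variation
            # Weight near 0 in homogeneous areas, near 1 on strong edges.
            weight = max(0.0, 1.0 - cu2 / ci2)
            out[r][c] = mean + weight * (image[r][c] - mean)
    return out

# Hypothetical 3 x 3 patch with one bright pixel in the centre.
patch = [[10.0, 10.0, 10.0],
         [10.0, 100.0, 10.0],
         [10.0, 10.0, 10.0]]
filtered = lee_filter(patch)
print(filtered[1][1])
```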

Image fusion

The co-registered radar and Landsat data were then merged on a pixel-by-pixel basis using three fusion techniques (IHS, multiplicative, and Brovey) available in ERDAS Imagine 9.2.

Image fusion techniques

IHS technique:

The basic concept of IHS fusion is to replace the intensity component of the low-resolution multispectral image with the panchromatic image (Eshtehardi et al. 2007; Doua and Chenb 2008). The fusion method first converts an RGB image into intensity (I), hue (H), and saturation (S) components. In the next step, the intensity is substituted with the high-spatial-resolution panchromatic image. The last step performs the inverse transformation, converting the IHS components back into RGB colors (Svab and Oštir 2006). Figure 4 illustrates the IHS technique, and the results of applying IHS are shown in Fig. 5.

Where I = Intensity; and V1 and V2 are intermediate values used later for deriving hue and saturation.
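The transform to which I, V1, and V2 belong did not survive in this copy of the article. One widely used linear form of the IHS transform is given below as an assumption; the coefficients in the authors' original equation may differ.

```latex
\begin{pmatrix} I \\ V_1 \\ V_2 \end{pmatrix}
=
\begin{pmatrix}
\tfrac{1}{3} & \tfrac{1}{3} & \tfrac{1}{3} \\
-\tfrac{\sqrt{2}}{6} & -\tfrac{\sqrt{2}}{6} & \tfrac{2\sqrt{2}}{6} \\
\tfrac{1}{\sqrt{2}} & -\tfrac{1}{\sqrt{2}} & 0
\end{pmatrix}
\begin{pmatrix} R \\ G \\ B \end{pmatrix},
\qquad
H = \tan^{-1}\!\left(\frac{V_2}{V_1}\right),
\qquad
S = \sqrt{V_1^{2} + V_2^{2}}

% Inverse transform, applied after I has been replaced by the high-resolution image I':
\begin{pmatrix} R' \\ G' \\ B' \end{pmatrix}
=
\begin{pmatrix}
1 & -\tfrac{1}{\sqrt{2}} & \tfrac{1}{\sqrt{2}} \\
1 & -\tfrac{1}{\sqrt{2}} & -\tfrac{1}{\sqrt{2}} \\
1 & \sqrt{2} & 0
\end{pmatrix}
\begin{pmatrix} I' \\ V_1 \\ V_2 \end{pmatrix}
```

With these matrices, substituting I' = I returns the original R, G, and B exactly, so any change in the fused image comes only from the substituted intensity.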

Multiplicative:

The multiplicative technique is one of the arithmetic algorithms. It derives from the four possible arithmetic methods for incorporating an intensity image into a chromatic image (addition, subtraction, division, and multiplication). Of these, only multiplication is unlikely to distort the color (Svab and Oštir 2006).

$$DN_{new,MS_n} = DN_{MS_n} \times DN_{PAN}$$

Where:

$DN_{MS_n}$ = digital number of a pixel belonging to the nth multispectral band

$DN_{PAN}$ = digital number of the corresponding pixel belonging to the panchromatic band

$DN_{new,MS_n}$ = new digital number of the corresponding pixel (Mercer et al. 2008)

The advantages of this technique are:

- Simplicity of integrating two images
- Good for highlighting urban features

The drawback of this technique is that it does not retain the radiometry of the input multispectral image. The results of applying the multiplicative fusion are shown in Fig. 6.

Brovey:

In this method each band is divided by the sum of all the multispectral bands, which normalizes the band data; the result is then multiplied by the panchromatic band to produce the fused image (Eshtehardi et al. 2007).

The Brovey fusion formula is as follows:

$$B_{MB_i} = \frac{B_i}{\sum_{j=1}^{n} B_j} \times B_{PAN}$$

Where $B_{MB_i}$ is band i of the fused image, $B_i$ is band i of the multispectral image, $B_{PAN}$ is the high-resolution band, n is the number of bands, and the denominator denotes the summation of the three ETM+ multispectral bands (Wenbo et al. 2008). The results of applying the Brovey fusion are shown in Fig. 7.
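The multiplicative and Brovey combinations described above are both simple per-pixel operations, which the following sketch illustrates on a handful of made-up digital numbers. It is not the ERDAS Imagine implementation: the function names are illustrative, the images are flattened to 1-D lists for brevity, and the SAR band stands in for the panchromatic band as in this study.

```python
def multiplicative_fusion(ms_bands, pan):
    """Multiply each multispectral DN by the co-located high-resolution DN."""
    return [[m * p for m, p in zip(band, pan)] for band in ms_bands]

def brovey_fusion(ms_bands, pan):
    """Normalise each band by the per-pixel band sum, then scale by the high-resolution DN."""
    totals = [sum(px) for px in zip(*ms_bands)]
    return [[(m / t) * p if t else 0.0 for m, t, p in zip(band, totals, pan)]
            for band in ms_bands]

# Hypothetical DNs: three bands, two pixels each.
ms = [[10.0, 40.0],
      [20.0, 40.0],
      [70.0, 20.0]]
sar = [200.0, 50.0]

mult = multiplicative_fusion(ms, sar)
brovey = brovey_fusion(ms, sar)
print(mult)
print(brovey)
```

In the Brovey result the fused bands of each pixel sum to the high-resolution value, which shows why the method preserves band ratios but not the original radiometry.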

Judgment of fusion

All images merged with the three fusion techniques were compared by visual interpretation. Figures 5, 6, and 7 show that the merged images have better spectral details than the original images, and the improvement in the quality of image interpretation is visually apparent. Twenty check points observed with differential GPS were used to compare the spatial accuracy of the image resulting from each fusion technique. Table 2 summarizes the spatial accuracy achieved for IHS, multiplicative, and Brovey. For radiometric quality control of the fused images, two indices were examined over the fusion results.

First index:

In this index, the difference between the mean gray scale values of the fused image and the original image is used to compare the results (Eshtehardi et al. 2007).

The index is computed per band, where fused i is band i of the fusion result and ms i is band i of the original input multispectral image (Table 3).

The result of applying the first index is shown in Fig. 8. According to the first index, the image fused by IHS differs least from the original Landsat image.

Second index:

In this index, the difference between the standard deviations (STD) of the gray scale values of the fused image and the original image is used to compare the results.

The index is computed per band, where fused i is band i of the fusion result and ms i is band i of the original input multispectral image (Table 4). The result of applying the second index is shown in Fig. 9. According to the second index, the image fused by the IHS method differs least from the original Landsat image.
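Both indices reduce to comparing one summary statistic per band. The sketch below shows one way they might be computed; taking the absolute value of the difference and using the population standard deviation are assumptions, since the original equations are not reproduced in this copy, and the sample values are hypothetical.

```python
import statistics

def mean_difference(fused_band, ms_band):
    """First index: difference between the mean grey values of the two bands."""
    return abs(statistics.fmean(fused_band) - statistics.fmean(ms_band))

def std_difference(fused_band, ms_band):
    """Second index: difference between the standard deviations of the two bands."""
    return abs(statistics.pstdev(fused_band) - statistics.pstdev(ms_band))

# Hypothetical grey values of one band before and after fusion.
ms_band = [10.0, 20.0, 30.0, 40.0]
fused_band = [12.0, 22.0, 32.0, 42.0]  # shifted by a constant

index1 = mean_difference(fused_band, ms_band)
index2 = std_difference(fused_band, ms_band)
print(index1, index2)
```

A constant brightness shift changes the first index but not the second, which is why the two indices are reported separately.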

Feature identification accuracy of fused image

In order to verify the influence of the various fusion methods on classification accuracy, a maximum likelihood classifier and a fuzzy classifier were used to classify the radar, Landsat, and fused radar-Landsat images into two classes (water and nonwater). Signature collection is the first step in the classification process; signatures were chosen to represent the different water and nonwater areas. The collected signatures were evaluated, and the result was accepted before classification. Accuracy assessment of the classified radar, optical, and fused datasets was carried out against reference data obtained from topographic maps of the same year. One hundred and fifty reference points were selected at random from the Landsat image. The overall accuracy and Kappa index were obtained, and the classification results were then compared (Table 5). The IHS-fused image classified with the fuzzy classifier has a better accuracy than the other fusion results.
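Overall accuracy and the Kappa index are both derived from a confusion matrix of reference class against classified class. The sketch below shows the standard calculation for the two-class case; the counts are hypothetical and are not the values reported in Table 5.

```python
def overall_accuracy_and_kappa(matrix):
    """matrix[i][j] = number of reference-class-i points classified as class j."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    observed = sum(matrix[i][i] for i in range(n)) / total
    # Chance agreement from the row (reference) and column (classified) totals.
    expected = sum(sum(matrix[i]) * sum(row[i] for row in matrix)
                   for i in range(n)) / (total * total)
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa

# Hypothetical counts for 150 reference points (rows and columns: water, nonwater).
confusion = [[70, 5],
             [10, 65]]
accuracy, kappa = overall_accuracy_and_kappa(confusion)
print(accuracy, kappa)
```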

Shoreline extraction/mapping

Automatic shoreline extraction was applied to the fuzzy-classified Radarsat, fuzzy-classified Landsat, and fuzzy-classified IHS-fused images using the classification-to-vector method in ENVI 4.4. The water class of each of the three classifications was converted to vector separately, and the resulting vectors were then converted to shapefiles. The three shapefiles were overlaid, yielding three different shorelines, which were compared to the reference line obtained from topographic maps (manually extracted) by analyzing their respective differences. The accuracy of each shoreline was assessed by comparing the calculated positions of check points with their known positions. The layout of the three mapped shorelines and the reference shoreline was produced in ArcGIS 9.2. Figure 10 shows a flowchart of the shoreline extraction, while Fig. 11 illustrates the shorelines detected from the fuzzy-classified Landsat, Radarsat, and IHS-fused images.
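The essence of deriving a shoreline from a water/nonwater classification is finding where the two classes meet. The sketch below is a simplified raster illustration of that idea and is not ENVI's classification-to-vector routine: it marks water pixels that touch a nonwater pixel and converts pixel indices to map coordinates. The hard 0/1 mask, the 4-neighbour rule, the north-up grid, and the origin values are assumptions; only the 6.25 m pixel size comes from the text.

```python
def shoreline_pixels(water_mask):
    """Return (row, col) of water pixels that touch at least one nonwater pixel.

    water_mask -- 2-D list of 0/1 values, 1 = water
    """
    rows, cols = len(water_mask), len(water_mask[0])
    edge = []
    for r in range(rows):
        for c in range(cols):
            if not water_mask[r][c]:
                continue
            # Check the four direct neighbours for a nonwater pixel.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols and not water_mask[rr][cc]:
                    edge.append((r, c))
                    break
    return edge

def pixel_to_map(row, col, x_origin, y_origin, pixel_size=6.25):
    """Map coordinates of a pixel centre on a north-up grid (origin = upper-left corner)."""
    return (x_origin + (col + 0.5) * pixel_size,
            y_origin - (row + 0.5) * pixel_size)

# Hypothetical 3 x 3 classification: water on the left, land on the right.
mask = [[1, 1, 0],
        [1, 1, 0],
        [1, 0, 0]]
edge = shoreline_pixels(mask)
print(edge)
print(pixel_to_map(0, 0, 1000.0, 2000.0))
```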

Assessment of quality of shorelines

The three shorelines extracted from the (Landsat-fuzzy), (Radarsat-fuzzy), and (IHS-fuzzy) data were compared to a reference shoreline in two ways. The first comparison computes the RMS with respect to thirty check points on the reference shoreline; the second, for error analysis, uses a quality metric (the generalization factor).

Assessment of planimetric quality:

The three shorelines extracted from the (Landsat-fuzzy), (Radarsat-fuzzy), and (IHS-fuzzy) data were compared to a reference shoreline. The RMS was computed with respect to thirty check points on the reference shoreline. The accuracies of the shorelines extracted from the (Landsat-fuzzy), (SAR-fuzzy), and (IHS-fuzzy) data are estimated to be 8.3, 7.27, and 6.75 m, respectively.
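One plausible way to carry out this comparison is to measure, for each check point on the reference shoreline, the shortest distance to the extracted shoreline and then take the RMS of those distances. The text does not state how the distances were measured, so the nearest-distance rule in the sketch below is an assumption, and the coordinates are hypothetical.

```python
import math

def point_segment_distance(p, a, b):
    """Shortest distance from point p to segment a-b (planar coordinates, metres)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length2 = dx * dx + dy * dy
    if length2 == 0.0:
        return math.hypot(px - ax, py - ay)
    # Parameter of the orthogonal projection, clamped to the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def shoreline_rms(check_points, shoreline):
    """RMS of the distances from reference check points to an extracted polyline."""
    dists = [min(point_segment_distance(p, a, b)
                 for a, b in zip(shoreline, shoreline[1:]))
             for p in check_points]
    return math.sqrt(sum(d * d for d in dists) / len(dists))

# Hypothetical extracted shoreline and two reference check points.
extracted = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
checks = [(5.0, 3.0), (14.0, 5.0)]
rms = shoreline_rms(checks, extracted)
print(rms)
```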

Assessment of quality metrics for shoreline:

Ali (2003) developed quality metrics for assessing the positional quality of linear features. He proposed four quality metrics (distortion factor, generalization factor, bias factor, and fuzziness factor) and an automated interface for calculating them, in order to assess the positional quality of any linear dataset quantitatively. In this research, the generalization factor was calculated to assess the positional quality of the shoreline.

Generalization factor

The generalization factor compares the lengths of two corresponding line segments (Fig. 12), for example a shoreline generated from a higher quality data source and the equivalent shoreline generated from lower quality data. One of them will be a more detailed representation of the shoreline. Figure 12 shows the error analysis of shoreline positional quality using quantitative metrics (generalization factor).

Therefore, if the higher quality line is stretched until it becomes a straight line, and the same is done for the lower quality line, the result is two straight lines of different lengths, and the generalization factor is the ratio of those lengths:

$$GF = \frac{AB}{A'B'}$$

Where AB is the length of the less precise shoreline, A′B′ is the length of the more precise shoreline, and GF is the generalization factor. A value of 1 indicates no generalization, and a value smaller than 1 indicates the amount of generalization. For example, 0.7 indicates a more generalized feature than 0.8 (Ali 2003; Srivastava et al. 2005).

The generalization factor was computed for the three shorelines and the results were compared (Table 6). The generalization factors of the shorelines from the (Landsat-fuzzy), (SAR-fuzzy), and (IHS-fuzzy) data are estimated to be 0.977, 0.986, and 0.999, respectively. This indicates that the shoreline extracted from (IHS-fuzzy) is better than the shoreline extracted from (Radarsat-fuzzy), followed by the shoreline extracted from (Landsat-fuzzy).
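Since the generalization factor compares the lengths of two corresponding lines, it can be sketched as a ratio of polyline lengths. The sketch below assumes the ratio is taken as the length of the less precise line over the length of the more precise line, which matches the statement that values below 1 indicate generalization; the coordinates are hypothetical and the function names are illustrative, not taken from Ali (2003).

```python
import math

def polyline_length(points):
    """Total length of a polyline given as a list of (x, y) vertices."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def generalization_factor(less_precise, more_precise):
    """Length of the less precise line divided by that of the more precise line."""
    return polyline_length(less_precise) / polyline_length(more_precise)

# Hypothetical lines between the same end points: the detailed one has an extra bend.
detailed = [(0.0, 0.0), (3.0, 4.0), (6.0, 0.0)]
generalized = [(0.0, 0.0), (6.0, 0.0)]
gf = generalization_factor(generalized, detailed)
print(gf)
```

A line that skips the bends of the more detailed line is shorter, so its factor falls below 1; the closer the factor is to 1, the less detail has been lost.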

Results

SAR and Landsat data were merged using three fusion methods. The merging effects were evaluated, and quantitative statistics are shown for spatial accuracy (Table 2) and radiometric accuracy (Tables 3 and 4). Visual comparison of the merged images (Figs. 5, 6, and 7) showed that they have better spectral details than the original images. Visual comparison also reveals that all three methods introduce spectral distortion: IHS and Brovey are the best two at retaining the spectral information of the original images, and multiplication is the worst. The results reveal that all the fused images contain higher spatial frequency information than the original images, and that IHS is the best method for retaining the spectral information of the original image.

Comparing the overall accuracy and Kappa index of the maximum likelihood and fuzzy classifiers (Table 5) shows that the classification accuracy varies among the radar image, the Landsat image, and the fused images. Image fusion improves the classification accuracy, and fuzzy classification gives better results than maximum likelihood classification. Based on twenty differential GPS check points, the accuracies of the shorelines extracted from the (Landsat-fuzzy), (SAR-fuzzy), and (IHS-fuzzy) data are estimated to be 5.69, 5.26, and 5.14 m, respectively. The three shorelines were also compared to a reference shoreline, with the RMS computed at thirty check points on the reference shoreline; the resulting accuracies for the (Landsat-fuzzy), (SAR-fuzzy), and (IHS-fuzzy) data are estimated to be 8.3, 7.27, and 6.75 m, respectively. The positional quality of the shorelines (using the generalization factor) from the (Landsat-fuzzy), (SAR-fuzzy), and (IHS-fuzzy) data, compared to the historical shoreline, is estimated to be 0.977, 0.986, and 0.999, respectively.

Discussion and conclusions

In this research, three fusion techniques were compared. The resulting fused images were evaluated geometrically using GPS check points and radiometrically using two indices. According to the results, IHS gives the highest spatial accuracy. In radiometric and spectral terms, IHS also gives the best results overall, followed by Brovey and then multiplicative. The choice of the most suitable technique depends on the intended application and can be supported by statistical validation.

The three fused images, the Landsat image, and the Radarsat image were fed to the fuzzy classifier and the maximum likelihood classifier. The classification delineated two classes: water and nonwater. Accuracy assessment of the classified radar, optical, and fused datasets was carried out against reference data, and the results were compared. Image fusion was found to improve the classification accuracy, and fuzzy classification was more accurate than maximum likelihood classification.

Experimental results show that the shoreline extraction accuracy is dramatically improved by the effective image fusion of Landsat and SAR data. Three shorelines were extracted from the (IHS-fuzzy), (SAR-fuzzy), and (Landsat-fuzzy) classifications.

Based on twenty differential GPS check points, the accuracies of the shorelines extracted from the (Landsat-fuzzy), (SAR-fuzzy), and (IHS-fuzzy) data are estimated to be 5.69, 5.26, and 5.14 m, respectively.

Compared to the reference shoreline, the accuracies of the shorelines extracted from the (Landsat-fuzzy), (SAR-fuzzy), and (IHS-fuzzy) data are estimated to be 8.3, 7.27, and 6.75 m, respectively.

The positional quality of the shorelines (using the generalization factor) from the (Landsat-fuzzy), (SAR-fuzzy), and (IHS-fuzzy) data is estimated to be 0.977, 0.986, and 0.999, respectively. This indicates that the shoreline extracted from (IHS-fuzzy) is better than the shoreline extracted from (Radarsat-fuzzy), followed by the shoreline extracted from (Landsat-fuzzy).

It is recommended that the shoreline be extracted from QuickBird or GeoEye images and compared with the three shorelines obtained here. In future work, wavelet-based techniques could be used for image fusion, and other quality indices could be tested to obtain better accuracy control.

References

Ali TA (2003) New methods for positional quality assessment and change analysis of shoreline feature. Ph.D. Dissertation, The Ohio State University. Graduate program in Geodetic Science and Surveying

Atkinson PM (1997) Super-resolution target mapping from soft-classified remotely sensed imagery. Int J Remote Sens 18(4):699–709

Buckley SJ, Mills JP, Clarke PJ, Edwards SJ, Pethick JS, Mitchell HL (2002) Synergy of GPS, digital photogrammetry and INSAR in coastal environments. Seventh International Conference on Remote Sensing for Marine and Coastal Environments, Miami, Florida, May 20–22

Burini A, Schiavon G, Solimini D (2008) Fusion of high resolution polarimetric SAR and multi-spectral optical data for precision viticulture. IGARSS http://www.igarss08.org/Abstracts/pdfs/2305.pdf

Dekker R (2000) Monitoring the urbanization of Dar Es Salaam using ERS SAR data. International Archives of Photogrammetry and Remote Sensing, Vol. XXXIII, Part B1, Amsterdam

Di K, Ma R, Wang J, Li R (2003) Coastal mapping and change detection using high-resolution IKONOS satellite imagery. Proceedings of the 2003 Annual National Conference for Digital Government Research, Boston, MA, May 18–21, 343–346

Doua W, Chenb Y (2008) An improved IHS image fusion method with high spectral fidelity. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences. Vol. XXXVII. Part B7. Beijing

Dupas C (2000) SAR and LANDSAT TM image fusion for land cover classification in the Brazilian Atlantic forest domain. International Archives of Photogrammetry and Remote Sensing, Vol. XXXIII, Part B1, Amsterdam

Eshtehardi A, Ebadi H, Zoej MJV, Mohammadzadeh A (2007) Image fusion of Landsat ETM+ and SPOT satellite images using IHS, Brovey and PCA. ISPRS Journal of Photogrammetry & Remote Sensing http://www.isprs2007ist.itu.edu.tr/2.pdf

Ganzorig M, Amarsaikhan D, Batbayar G, Bulgan G, Munkherdene A (2006) The investigation of land surface features using optical and SAR images. Asian Conference on Remote Sensing ACRS http://www.aars-acrs.org/acrs/proceeding/ACRS2006/Papers/I-1_I4.pdf

Garzelli A (2002) Wavelet-based fusion of optical and SAR image data over urban area. International Archives of Photogrammetry and Remote Sensing ISPRS Conference http://www.isprs.org/commission3/proceedings02/papers/paper054.pdf

Gordon D, Logic C (2005) Using orthorectification for cross-platform image fusion. Anais XII Simpósio Brasileiro de Sensoriamento Remoto, Goiânia, Brasil, 16–21 April, INPE, 4081–4088

Gungor O, Shan J (2006) An optimal fusion approach for optical and SAR images. ISPRS Commission VII Mid-term Symposium “Remote Sensing: From Pixels to Processes”, Enschede, the Netherlands, 8–11 May

Gupta KK, Gupta R (2007) Despeckle and geographical feature extraction in SAR images by wavelet transform. ISPRS J Photogramm & Remote Sens 62:473–484

Kumar A, Ghosh SK, Dadhwal VK (2006) A comparison of the performance of fuzzy algorithm versus statistical algorithm based sub-pixel classifier for remote sensing data. ISPRS Commission VII Mid-term Symposium. Remote Sensing: From Pixels to Processes, Enschede, the Netherlands, 8–11 May

Mercer JB, Hong G, Edwards D, Maduck J, Zhang Y (2008) Fusion of high resolution radar and low resolution multi-spectral optical imagery. http://www.intermap.com/uploads/1170361541.pdf accessed 13/11/2008

Okeke F, Karnieli A (2006) Methods for fuzzy classification and accuracy assessment of historical aerial photographs for vegetation change analyses. Part I: Algorithm development. Int J Remote Sens 27(10):153–176

Ruiz LA, Pardo JE, Almonacid J, Rodríguez B (2007) Coast line automated detection and multiresolution evaluation using satellite images. Proceedings of Coastal Zone 07, Portland, Oregon, July 22 to 26

Srivastava A, Niu X, Di K, Li R (2005) Shoreline modeling and erosion prediction. ASPRS 2005 Annual Conference “Geospatial Goes Global: From Your Neighborhood to the Whole Planet”, March 7–11, 2005, Baltimore, Maryland

Svab A, Oštir K (2006) High-resolution image fusion: methods to preserve spectral and spatial resolution. ISPRS J Photogramm & Remote Sens 72(5):565–572

Van TT, Binh TT (2008) Shoreline change detection to serve sustainable management of coastal zone in Cuu Long estuary. International Symposium on Geoinformatics for Spatial Infrastructure Development in Earth and Allied Sciences

Wenbo W, Jing Y, Tingjun K (2008) Study of remote sensing image fusion and its application in image classification. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences. Vol. XXXVII. Part B7. Beijing

Wu W, Bu L, Ji Y (2008) Application of TM and SAR images fusion for land monitoring in coal mining areas. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences. Commission VII: WG VII/6, Beijing http://www.isprs.org/congresses/beijing2008/proceedings/7_pdf/6_WG-VII-6/14.pdf

Young F, Benyi C, Wensong H, Hong C (2000) DEM generation from Multisensor SAR images. International Archives of Photogrammetry and Remote Sensing, Vol. XXXIII, Part B1, Amsterdam

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Taha, L.Gd., Elbeih, S.F. Investigation of fusion of SAR and Landsat data for shoreline super resolution mapping: the northeastern Mediterranean Sea coast in Egypt. Appl Geomat 2, 177–186 (2010). https://doi.org/10.1007/s12518-010-0033-x
