Abstract

The relevance of trust on the road to successful human-robot interaction is widely acknowledged. In this context, trust is commonly understood as a monolithic concept characterising dyadic relations between a human and a robot. However, this conceptualisation seems oversimplified and neglects the specific interaction context. Taking a multidisciplinary approach, this conceptual analysis synthesises sociological notions of trust and distrust, psychological trust models, and ideas of philosophers of technology in order to pave the way for a multidimensional, relational and context-sensitive conceptualisation of human-robot trust and distrust. In this vein, trust is characterised functionally as a mechanism to cope with environmental complexity when dealing with ambiguously perceived hybrid robots such as collaborative robots, which enable human-robot interaction without physical separation in the workplace context. Common definitions of trust in the HRI context emphasise that trust is based on concrete expectations regarding individual goals. Therefore, I propose a three-dimensional notion of trust that binds trust to a reference object and accounts for various coexisting goals at the workplace. Furthermore, the assumption that robots represent trustees in a narrower sense is challenged by unfolding influential relational networks of trust within the organisational context. In terms of practical implications, trust is distinguished from acceptance and actual technology usage, which may be promoted by trust but are strongly influenced by contextual moderating factors. In addition, theoretical arguments are outlined for considering distrust not only as the opposite of trust, but as an alternative and coexisting complexity reduction mechanism. Finally, the article presents key conclusions and future research avenues.

1 Introduction

Research in the field of human-robot interaction (HRI) increasingly draws on existing knowledge from human-computer interaction (HCI) and is becoming more and more interdisciplinary in view of emerging interactive and social robots [1]. In the past, the majority of studies focussed on technical aspects such as safety mechanisms [2]. Only recently has a distinction been made between cognitive HRI (cHRI) and physical HRI (pHRI), whereby the former explicitly deals with human factors such as humans’ trust in robots [3]. In contrast, pHRI is primarily concerned with the physical and technical realisation of HRI. However, especially with respect to trust, the two aspects are intertwined, since the cognitive attitude of trust depends heavily on observations of the physical world. From an evolutionary perspective, non-verbal behaviours such as affect-related expressions have been identified as important observable cues for recognising a cooperative attitude in a social actor [4, 5]. Individuals who exhibit high emotional expressivity, i.e., who have a strong tendency to accurately express their emotions and to reveal their intentions and inner mental states, are more likely to be considered trustworthy. This is because their interaction partners can more easily estimate the risk of being exploited based on their knowledge of those individuals’ intentions [4]. In this sense, the physical design of robots, and more precisely their ability to express non-verbal affect-related cues, may help their human counterparts to judge the robot’s trustworthiness accurately and intuitively [6]. Based on this assessment, people decide to what extent they trust the robot, and such trust is widely regarded as a key prerequisite for successful HRI [3, 7]. Success, in turn, can relate to a variety of criteria, such as performance-related indicators or the satisfaction of the humans involved [8,9,10,11,12,13,14]. 
The significance of trust within the HRI context primarily arises from the assumption that placing trust in a robot increases a trustor’s willingness to interact with this robot [15] and to rely on the robot’s actions [16]. Thus, trust may have very practical and observable implications. However, this does not imply that trusting a robot is desirable per se. Quite the contrary, it is key to achieve an appropriate level of trust that corresponds to the robot’s trustworthiness in order to avoid undertrust and overtrust. Both are associated with negative consequences: undertrust with disuse, and overtrust with a lack of situational awareness, which may result in serious accidents [16, 17].

Whilst trust has been researched predominantly with regard to social robots [18], the relevance of trust in particular and of human factors in general reaches beyond purely social interaction contexts and likewise concerns interactions with other kinds of interactive robots, such as so-called collaborative robots (cobots). These typically lightweight robots are designed for use in industrial manufacturing. Unlike traditional industrial robots, their dedicated safety features enable direct human-robot interaction without the need for physical separation [19, 20]. Although collaborative robots are intended for practical use in production environments, they nevertheless provide social cues, which might make them appear as quasi-others from a phenomenological viewpoint [21]. Additionally, their relatively small size, their human-like morphology with rounded joints, their slow and fluent movements, or simply the large variety of interaction opportunities, including physical contact, can easily cause human interaction partners to anthropomorphise the robot despite the subtlety of these cues [22,23,24,25]. In their seminal work on the so-called media equation, Nass and Reeves raised awareness of the plethora of phenomena in which simple technical devices such as personal computers are anthropomorphised, conceived of and treated as humans [26]. Even before their work, Braitenberg demonstrated that people tend to describe simple technological devices with anthropomorphic metaphors [27]. Hence, it seems likely that modern collaborative robots are subject to anthropomorphisation, particularly when they are equipped with simulated pairs of eyes that blur the boundaries between well-established robot categories [28,29,30]. 
This represents something of a paradigm shift, since robots at the workplace have long been seen as mere tools [31], and social-relational aspects have been considered irrelevant in the industrial workplace as long as robots possess only very limited social interaction capabilities [32]. However, this argument is rooted in a strongly ontological perspective that fails to recognise the extent to which, from a phenomenological-relational perspective, collaborative robots already appear today as cooperation partners, colleagues, social interaction partners or the like. Anecdotal evidence and empirical observations of production workers addressing their robots with human-like names exemplify that “human-robot interaction at the workplace is more than just working with robots” [33]. Hence, these types of robots challenge traditional dichotomies based on categorical ontological differentiations between tools and teammates, between machines and interactive colleagues, and between human subjects and technological objects [21, 34,35,36]. This leads to the classification of collaborative robots as hybrid robots, bridging the gap between social robots intended for emotional and purely social interaction on the one hand and functional, performance-oriented robots like traditional industrial robots on the other [35, 37, 38]. Therefore, human-robot interactions at the workplace involving collaborative robots can reasonably be understood as (quasi-)social interactions, which are consequently strongly influenced by attitudes of trust and distrust. This draws attention to industrial HRI (iHRI) as another application context in which social human-robot interactions take place. 
Additionally, from an application-oriented research perspective, collaborative robots are an attractive research subject because of their widespread real-life use in factory environments, which represent the paradigmatic place of origin and deployment of real existing robots [25]. Hence, in contrast to some spectacular and highly humanoid robots, which have attracted much attention in contemporary media and research, collaborative robots create real-life human-robot interactions with many thousands of production workers day by day. Admittedly, different robot models and different humans are involved in these interactions, which points to the plurality and heterogeneity that is problematically hidden behind the collective nouns “human” and “robot” [39, 40]. Consequently, findings from interactions with specific types of robots or specific groups of people cannot be generalised to all human-robot interactions but have to be interpreted in light of the interaction context. In order to improve the synthesis of empirical findings involving different kinds of robots and persons, Onnasch and Roesler [40] suggested a taxonomy to structure and analyse human-robot interactions, which explicitly includes the field of application as a relevant criterion, amongst others. In this vein, this article focuses on the interaction of employees in production environments with collaborative robots, a paradigmatic yet often overlooked application context in which trust plays a prominent role in establishing successful human-robot interactions, and aims to apply general considerations on trust and distrust to this context. Furthermore, it seeks to shed light on the different notions of trust with regard to interactions between employees and collaborative robots in the industrial workplace, unfolding the hidden layers and reference objects of multidimensional trust. 
First, some interdisciplinary perspectives and general considerations on trust are outlined, before some common definitions within the HRI context are presented. The second part of this article focuses on trust in collaborative robots at the workplace. In doing so, it explains the multidimensional nature of trust, addresses the question of whether robots (and machines in general) can be trustworthy at all, and conceptualises trust as deeply embedded in relational networks within the organisational context. Afterwards, some practical implications of humans’ trust in robots are detailed. At this stage, despite their conceptual similarities, trust and technology acceptance are distinguished and their relationships analysed in more detail, with a focus on relevant moderators between a trusting attitude and actual technology usage. The last part of the article focuses on distrust and explores the differences, commonalities, and interlinkages between trust and distrust. Finally, key conclusions are derived and future research avenues are delineated that should guide further research towards a multidimensional, context-sensitive and in-depth understanding of trust and distrust phenomena in the human-robot interaction context.

2 Trust

2.1 Functional Characterisation

Trust as a multifaceted construct has been discussed and conceptualised differently in numerous contexts and from a variety of disciplinary perspectives, with contributions from renowned thinkers such as David Hume, John Locke, Georg Simmel, and Niklas Luhmann, to mention only a few. However, no uniform, consensual definition has emerged so far [41]. At first glance, trust can be characterised as a social phenomenon and thus as a subject of sociology, because placing trust only makes sense given the presence of other agents as potential trustees. However, trust also represents an important subject of psychological research [42, 43]. Apart from sociologists and psychologists, philosophers, cognitive scientists and economists, inter alia, have also been concerned with trust [44, 45].

From a classical and often-cited sociological viewpoint put forward by Luhmann [46], the necessity to trust originates from perceived environmental cognitive overcomplexity, which places an excessive demand on every human being. The complexity arises from the infinite possibilities of imagining the course of the future, whereby not every depiction and expectation of the future becomes reality. Quite the contrary, of the manifold visions and expectations, only one or perhaps none becomes reality. Whilst the course of the future remains unknown until it becomes reality, a basic desire to deal effectively with possible future events and to adapt one’s behaviour to them, i.e., the so-called effectance motivation [47], justifies the need to engage cognitively with future scenarios [48]. This need arises when an individual is uncertain about the course of the future and feels vulnerable to at least one possible future scenario. Less hierarchical and less pre-structured organisational styles of leadership, as well as an increasing number of interactions with modern technologies that show multifaceted, seemingly non-deterministic actions, have led both to increasing degrees of freedom and to higher perceived cognitive complexity at the workplace [16]. Employees are obliged to rely on each other in cooperative working settings and are confronted with a sense of ambiguity about who and/or what to trust in novel and unknown circumstances, in particular when “fundamentally ambiguous” [49] technologies such as cobots are in play. This sense of ambiguity further increases cognitive load and unpleasant feelings of uncertainty [50, 51]. In the face of this environmental (over-)complexity, trust serves as an efficient functional mechanism of complexity reduction. 
Placing trust involves accepting a single depiction of the future as the only one that becomes reality, freeing cognitive resources from processing multiple scenarios simultaneously [46]. In other words, trusting someone relieves the mind of constant thoughts about existing vulnerabilities and the possibility of being betrayed by others, just as if these possibilities no longer existed [52]. Particularly in cooperative teams, corresponding depictions of the future and well-aligned mental models are key to establishing shared awareness and successful interaction [53]. However, trust implies choosing one depiction of the future, and making this choice may itself require cognitive capacity. Recent evidence indicates that in cases of cognitive overload, e.g., due to complex environmental conditions, people have difficulty trusting and show a more impulsive trusting process, which is based to a greater extent on situational cues than on rational considerations [54]. Hence, whilst trust can reduce cognitive complexity, the process of trusting causes cognitive load, which can hinder people from trusting in cases of high environmental complexity or a lack of available cognitive capacity.

As already indicated, a lack of knowledge about the future constitutes the need to trust. At the same time, trust also requires some knowledge about past events on which it can be based [42]. Additionally, trust is grounded in the assumption that familiar past circumstances can be rolled forward into the future based on inductive reasoning, resulting in a characteristic process of mentally suspending conflicting scenarios about possible futures. This means that the trustor acts as if these conflicts no longer existed in order to overcome the associated insecurities and vulnerabilities [55]. The presumed knowledge inferred about the future removes the perceived need for control [42]. However, it is noteworthy that trust and control are not mutually exclusive. On the contrary, a trustful interaction might emerge from a reasonable mixture of trust and control, when the trustor expects not to be exploited by the trustee particularly because control opportunities exist [56]. Nevertheless, the tendency to relinquish control when deciding to trust, together with the fallible nature of the underlying inductive reasoning, makes trust an inherently risky endeavour, because expectations about the future might prove wrong. Consequently, a trustor always exposes himself/herself to the danger of exploitation and deception by the trustee and in this respect becomes dependent on the trustee’s future actions [46]. In this sense, trust can also be characterised “as a situational non-exploitation expectation of the trustor” [56]. This expectation may well be violated, since deception is a ubiquitous and efficient strategy amongst animals, which may even be necessary for survival [57]. Likewise, there might be good reasons for deception in complex workplace settings characterised by many interdependent and interacting actors with different interests, not necessarily from an ethical, but certainly from an egocentric perspective. 
Even in the case of robots as trustees, Floreano et al. [58] indicate that deceptive communication may emerge as part of an evolutionary process and in response to certain situational conditions within artificial colonies of robots interacting with each other. Consequently, there is a relevant risk of being exploited due to the deceptive behaviour of trustees.

Notwithstanding the variety of definitions and conceptualisations, three characteristics of trust seem to form common ground [59]. First, trust becomes relevant in situations of uncertainty and vulnerability, which one can tackle either by controlling the actions of another party, which involves high cognitive load, or by trusting the other party, which frees cognitive capacity. If someone decides to trust another party, this person will tend to rely on this party and thereby delegate rights and responsibilities at the price of his/her own control over the situation [16]. Second, trust serves as a decision heuristic involving uncertain expectations regarding future events. If one believes that an agent will react in a certain way and evaluates this reaction positively, this will lead to an attitude of trust [16]. Third, as the aforementioned example illustrates, trust always involves (at least) two parties, whereby the mental states and future actions of at least one party are not fully determined and known [55]. Most commonly, human-technology trust is characterised as a unidirectional trusting relationship between a human as the trustor and a technological system as the trustee. However, this unidirectional nature is controversial, since technological advancements will most likely allow robots to judge the trustworthiness of their human teammates in joint human-robot teams and to decide accordingly whether to rely on them [60] (cf. Section 3.3 for an in-depth discussion).

2.2 Emergence and Evolution

With trust characterised in the previous subsection, the question remains how it emerges and evolves. In this context, assessments of trustworthiness play a crucial role, since it generally makes sense to trust whenever a person considers the other party to be trustworthy, that is, when he/she does not expect the other party to exploit his/her vulnerability [43]. For trust to emerge, a certain perceived necessity to trust in the face of interdependence and vulnerabilities must be present. In addition, trust requires, to some extent, the trustor’s cognitive ability to assess a potential trustee’s trustworthiness [61], which in turn requires, inter alia, accurate information about the actual capabilities and limitations of the other party, be it a human being or a technology like a robot [62]. The individual disposition represents a further boundary condition for the actual occurrence of trust [63]. According to the evidential view [64, 65], which draws on Hume’s early work [66], reasoning about trustworthiness seeks to identify empirically grounded reasons for trust and thereby relies on the assumption that humans can deliberately decide whether or not to trust another entity on the basis of reflective processes incorporating prior experiences. In this sense, trust emerges from expectancy-value calculations. Hence, the trustor does not regard the potential trustee as an individual, but pays attention exclusively to the trustee’s behaviour insofar as it provides helpful indications of the trustee’s trustworthiness. Accordingly, high levels of emotional expressiveness facilitate this process. Conversely, robots that are unlikely to display any emotional expressions, such as collaborative robots, make it hard for their counterparts to assess their trustworthiness intuitively [4]. 
From a perspective of robot design, the key question is how to design robots that enable human interaction partners to make accurate and well-justified assessments of the robots' trustworthiness in order to achieve an adequate, stable, and sustainable trust level.

Attributions of trustworthiness are typically associated with three assumptions about the other party, namely that it has the abilities to perform the expected action, a benevolent attitude towards the trustor, and integrity. Integrity here refers to the presence of a set of overriding principles that guide the trustee’s behaviour and that the trustor approves of or at least accepts [67]. It is noteworthy that these criteria for trustworthiness have their roots in the interpersonal trust literature, which is concerned with human-human relationships. Although it is unclear what benevolence and integrity should mean as properties of a robot, this model of trustworthiness is often applied to the human-robot interaction context [68]. In a more recent meta-analysis of trust and trustworthiness conceptualisations, Malle and Ullman [68] concluded that although most of the work in the human-robot context is centred around performance-oriented criteria such as reliability, ability, and competence [69], there is also a relevant moral dimension of robot trust which incorporates aspects like sincerity, integrity, and benevolence. As counterintuitive and controversial as moral attributions to today’s robots may seem, they may make sense from a phenomenological stance that acknowledges people’s tendency to perceive robots as moral actors [70, 71]. Similarly, Law and Scheutz [72] classify the trust concepts underlying previous empirical studies either as performance-based trust, which is typically relevant in industrial HRI settings, or as relation-based trust, which is typically relevant in classical application domains of social robotics. Nevertheless, the authors note a “fairly widespread conflation” [72] of both trust concepts. Performance-oriented trust entails expectations regarding the trustee’s capabilities and reliability, whereas moral (or relation-oriented) trust is determined by ethical behaviour (ethical) and sincerity (sincere) [73]. 
The latter is based on the expectation that the trustee displays moral behaviour as a member of society and will not exploit existing vulnerabilities of the trusting person [68, 72]. This distinction is reminiscent of the criteria for the trustworthiness of individuals discussed above [67], whereby the criteria of a benevolent attitude and integrity are building blocks of relational trust [68].

Trust is usually understood as a gradual and dynamic construct. In contrast to a dichotomous distinction between trust and no trust, the level of trust moves along a continuum whose endpoints, i.e., no trust and maximum trust, can hardly be determined objectively. As the subject of a dynamic process of change, trust is updated continuously by new empirical observations, which might deviate from prior expectations. In this process, negatively perceived events, i.e., trust violations, affect the trust level more strongly than positive events, especially because they often entail clearly visible negative consequences [16, 74]. Therefore, the relationship between positive events and the level of trust is by no means proportional. Rather, trust is usually difficult to gain but easy to lose [74], especially at the beginning of the trust-building process, when it is fragile because it rests on only a few available experiences [75], and in the case of symbolic trust-violating actions, which can take on disproportionate importance [46]. Such perceived trust violations are hard to repair, particularly in human-machine trust, although recent research shows that they are not necessarily caused by technical failures but can also result from robot behaviour that simply does not match users’ expectations and mental models. Consequently, avoiding this kind of perceived trust violation is primarily a matter of human-centred design [76]. Fortunately, not every negative experience necessarily leads to a withdrawal of trust. On the contrary, for the sake of reducing cognitive load, the trustor might grant a certain credit, leading to a tendency to reinterpret and absorb even unfavourable experiences [46], until accumulated negative experiences exceed a certain threshold. 
Moreover, under some circumstances and despite the risk of losing trust, recognising erroneous behaviour of a robot may even lead to greater likeability [77]. Much more could be said about (perceived) trust violations and trust repair and recovery mechanisms, and the complex perception of and interplay between different constructs when observing robot errors clearly requires further research. Although these issues have attracted much research interest in recent years [78,79,80,81], outlining the entire contemporary debate lies beyond the scope of this article.
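The asymmetric dynamics described above can be made more tangible with a deliberately simple toy model. It is a minimal sketch for illustration only, not a model proposed in this article or in the cited literature: trust is assumed to be a number on [0, 1], the parameters `GAIN`, `LOSS` and `CREDIT` are hypothetical values chosen arbitrarily, and both the fragility of early trust and the weight of symbolic violations are left out.

```python
from dataclasses import dataclass

# Hypothetical, illustrative parameters (not taken from any cited study).
GAIN = 0.05   # increase in trust after a positive experience
LOSS = 0.20   # decrease after a trust violation; larger than GAIN ("hard to gain, easy to lose")
CREDIT = 2    # number of negative experiences absorbed before trust is withdrawn


@dataclass(frozen=True)
class TrustState:
    level: float = 0.5   # position on the trust continuum, clamped to [0, 1]
    absorbed: int = 0    # negative experiences reinterpreted so far (credit used up)


def update(state: TrustState, positive: bool) -> TrustState:
    """Return the trust state after one positive or negative experience."""
    if positive:
        # Positive experiences raise trust only slightly.
        return TrustState(min(1.0, state.level + GAIN), state.absorbed)
    if state.absorbed < CREDIT:
        # Within the granted credit, an unfavourable experience is absorbed
        # and leaves the trust level unchanged.
        return TrustState(state.level, state.absorbed + 1)
    # Once the credit is exhausted, each violation lowers trust markedly.
    return TrustState(max(0.0, state.level - LOSS), state.absorbed)


def run(events: list[bool], start: TrustState = TrustState()) -> TrustState:
    """Apply a sequence of experiences (True = positive, False = negative)."""
    state = start
    for positive in events:
        state = update(state, positive)
    return state
```

With these values, four positive experiences raise trust from 0.5 to about 0.7, whereas the first two violations are absorbed and the third alone lowers trust by 0.2, so a single violation outweighs several positive events once the credit is used up.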

3 Human-Robot Trust at the Workplace

3.1 Definitions

The large variety of coexisting context-independent trust definitions is also reflected in a multitude of HRI-specific trust definitions [82, 83]. Since few empirical studies precisely state their underlying trust definition, synthesising the results of these studies proves challenging. Most definitions either implicitly or explicitly conceptualise trust as one of the constructs distinguished within the theory of reasoned action (TRA) [92, 93], and can thus be clustered according to whether they consider trust as a belief, an attitude, an intention, or a behaviour. Influential theories on human-technology trust commonly draw on this classification [e.g. 16, 41], which also illustrates the interlinkages between mental states and actual behaviour. Additionally, this classification entails considerable epistemological consequences, because mental states can only be inquired about, whereas actions can be observed by third parties. Table 1 lists some influential definitions with their respective classifications. A more comprehensive overview can be found in [83].

Certainly, conceptual overlaps and ambiguities within the definitions make the classification partly debatable. For example, Lee and See [16] refrain from differentiating between beliefs and attitudes in their much-cited review, but introduce a clear separation between trust and reliance by stressing the behavioural notion of reliance: “in the context of trust and reliance, trust is an attitude and reliance is a behaviour” [16]. The distinction between trust and reliance is also common in the philosophy of technology. Drawing on Hardin’s seminal work on trust [87, 88], placing trust in someone goes beyond relying on the trustee’s actions, i.e., expecting the trustee to behave in a desirable manner. As opposed to reliance, trust involves the belief that the trustee takes the trustor’s interests into account and has a certain motivation to adapt his/her behaviour to the trustor’s goals. In that sense, to place trust in another party means to take “an optimistic view of that person and their motivations” [89]. This characterisation implies a feeling of betrayal when the optimistic attitude proves wrong, e.g., when the trustee violates the trust by acting unexpectedly and taking advantage of the trustor’s vulnerability [90]. In contrast, if someone relies on a robot to function properly, he/she might be surprised or disappointed if this expectation proves wrong, but would not feel betrayed or be willing to blame the robot in a normative sense, because the robot’s trust-violating actions evidently did not result from bad intentions or motives. Indeed, the robot possessed no intentions, motives or any other mental states at all. Therefore, “it is always a mistake to trust a tent, a trout, or a turkey, although we may rely upon such things” [91].

While the attitudinal definition by Lee and See [16] emerged in the context of trust in automation technology and was characterised by the authors themselves as a “simple (…) basic definition” [16], it is also widely used in the HRI context [92]. At first glance, this definition seems to conceptualise trust as a mere belief rather than as an attitude, which additionally requires a positive evaluation of an expectation [93, 94]. However, the reference to the achievement of a goal implies a positive evaluation of the future scenario, that is, the goal, so that the definition fulfils the criteria for an attitude. Based on this definition, the components of building trust in a robot in the industrial workplace can be exemplified as follows: the trustor expects a robot to be able to bring workpieces into the right position for further processing (belief) and evaluates this as helpful with regard to achieving his/her goal of performing well at work (subjective evaluation). The person thus develops a positive attitude towards the robot. On the basis of this attitude-based trust, he/she is willing to forgo permanent control of the robot despite uncertainty about its actual actions. Since the person assumes that other employees also have a positive attitude towards the robot, or at least approve of his/her attitude towards it (subjective norm), this results in an intention to actually interact with the robot. Subject to other external factors, this leads to the person actually using the robot. However, it should be noted that behavioural intentions or actual behaviour may also be induced by external constraints on use, as employees do not have full freedom of choice regarding the use of automation technology [95]. Accordingly, employees’ behaviour towards technology does not necessarily express their attitude towards it, but can, for example, reflect their willingness to follow instructions. 
Furthermore, this example raises the question of whether it is the robot itself which helps to achieve a goal, as some kind of autonomous agent, or the use of the robot as a tool that proves helpful. Lee and See’s definition refers to an agent which helps to achieve a goal [16] and thereby introduces the controversial notion of technology as an autonomously acting being. Transferring this concept to the industrial workplace context requires specifying, on the one hand, the typical vulnerabilities that employees face and, on the other hand, the goals they pursue at the industrial workplace. The next subsection deals with these aspects in order to demonstrate the multidimensionality of trust in the workplace.

3.2 Multidimensionality

The vulnerabilities and goals of workers can be manifold. For example, employees usually seek to maintain their physical and mental integrity (work safety) as well as their jobs (job security); they want to feel valued as an important and capable part of the company (self-concept), they value opportunities for personal development (self-efficacy), they seek opportunities to interact socially with others (social interaction), and they want to enjoy their work (perceived enjoyment). These goals are represented in the technology acceptance model for human-robot collaboration (TAM-HRC) [96, 97]. In addition, German company representatives have identified work safety and job security as among the most influential success factors for introducing collaborative robots [98]. Certainly, the list of goals presented is not exhaustive, nor does every employee pursue all of these goals. Nevertheless, the list serves to exemplify the diversity of possible goals at the workplace.

The deployment of a collaborative robot in a factory can easily create uncertainties and vulnerabilities related to the aforementioned goals. For example, the introduction of a collaborative robot may evoke or intensify concerns about technological replacement and increase uncertainty about whether collaborative robots complement or substitute human labour. Above all, the ambiguity of this technology [49] and existential fears of being replaced, with all their economic and psychological consequences [39, 99,100,101], might create considerable perceived uncertainty. Additionally, the lack of physical separation between a collaborative robot and the respective employees might cause significant fears of physical harm. The need to adapt to the robot’s working speed in collaborative scenarios might put employees under mental stress [102]. This also threatens their self-concept as valuable employees whose capabilities are superior to those of a machine [99]. Furthermore, the introduction of robots in production facilities usually evokes images of deserted factories that offer no opportunities for human contact and interpersonal exchange [103]. In conclusion, these examples demonstrate the tension between the various possible goals at the workplace and the substantial uncertainties and vulnerabilities caused by the deployment of a collaborative robot.

The multitude of possible goals, the existing vulnerabilities and the uncertainties imposed by a collaborative robot have important implications for trust building: conceptions of trust as a “singular conceptual entity” [83] fail to account for the multidimensionality arising from the different goals and vulnerabilities. Whilst application-oriented and psychological research usually conceptualises trust as a dyadic relation [59], philosophers of technology argue for a three-dimensional notion of trust [e.g. 91, 104] that includes a reference object as a third dimension: “X trusts Y to φ”. For instance, one may trust a collaborative robot to function properly in a pre-programmed task but refuse to trust the same robot to adapt autonomously to new tasks. More commonly, people refer, explicitly or implicitly, to a two-dimensional understanding of trust by stating that “X trusts Y”, which can represent either a shorthand for the three-dimensional formulation that omits the reference object or an expression of X’s general trustful attitude towards Y, irrespective of a concrete reference object [89]. The latter notion can also be understood as “X’s disposition to trust Y” or, if we reduce the dimensions further, as X’s general disposition to trust irrespective of the trustee, in the sense that “X is trusting” [89]. Evidently, however, such a disposition neither implies that X trusts Y with regard to every possible reference object φ, nor does trusting someone with regard to a certain reference object φ indicate a general trusting attitude. Consequently, in the case of human-robot trust, the two-dimensional conception and the corresponding question “Do you trust this robot?” might be misguided.
Whereas in the context of interpersonal relations, a trustee’s (perceived) integrity as a person might motivate another person to generally place trust in this person, putting forward the personality of a machine as a reason to trust does not make sense. Hence, human-machine trust has to be considered as a much more situational concept, which is linked to a concrete reference object and expectation and reaches beyond a “single, uniform phenomenon” [104]. More recent psychological and application-oriented trust approaches begin to take the multidimensional nature of trust into account [105].
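To make the difference between the two- and three-dimensional notions concrete, the following Python sketch stores trust per reference object φ. The class `TrustProfile`, the [0, 1] scale and the 0.5 threshold are hypothetical illustrations, not a measurement model proposed in the cited literature.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TrustProfile:
    """Hypothetical record of trustor X's trust in trustee Y, kept per reference object phi."""

    trustor: str
    trustee: str
    # Reference object phi ("X trusts Y to phi") -> trust level on an assumed [0, 1] scale
    by_reference: dict[str, float] = field(default_factory=dict)

    def trusts_to(self, phi: str, threshold: float = 0.5) -> bool | None:
        """Three-dimensional query 'Does X trust Y to phi?'; None if phi was never assessed."""
        level = self.by_reference.get(phi)
        if level is None:
            return None
        return level >= threshold

    def collapsed(self) -> float:
        """Two-dimensional shorthand 'X trusts Y': one average that hides the spread across phi."""
        if not self.by_reference:
            raise ValueError("no reference objects assessed")
        return sum(self.by_reference.values()) / len(self.by_reference)


profile = TrustProfile(
    "worker",
    "cobot",
    {"execute pre-programmed task": 0.75, "adapt autonomously to new tasks": 0.25},
)
print(profile.trusts_to("execute pre-programmed task"))
print(profile.trusts_to("adapt autonomously to new tasks"))
print(profile.collapsed())
```

The collapsed score of 0.5 would answer “Do you trust this robot?” with a middling value that conceals high trust with regard to one reference object and low trust with regard to another.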

To conclude, human-technology trust differs from interpersonal trust in that it is less holistic: it does not address the trustee as a whole but refers to situationally relevant facets of the trustee’s characteristics and behaviour. Now that the various dimensions of trust in a relationship between two entities have become apparent, the next subsection examines whether robots can be considered trustees.

3.3 Robots as Trustees

Trust has been characterised as a social phenomenon requiring at least two involved parties whose inner mental states and actions are not fully determined. This means that both parties must have free will to act in a certain manner and thereby create a situation of uncertainty for the respective other [55, 106]. However, it seems awkward to grant today’s robots such a high level of autonomy, particularly as long as they are not equipped with advanced artificial intelligence (AI). This “lack of agency” [107] is the key objection against considering robots (and similar machines) as trustworthy: “machines are not trustworthy; only humans can be trustworthy (or untrustworthy)” [108]. Nevertheless, in everyday communication, people tend to say that they trust a technical system. Some researchers consider such statements category errors that erroneously classify machines as legitimate trustees and thereby conflate trust with reliability [107, 109], whereas others argue that, despite being conceptually confused, such statements may be meaningful [104]. Drawing on work by Latour [110] and Hartmann [111], it can be argued that even a simple tool “can be considered an agent in complex social relationships” [104] and thus can be trusted, as it appears as a quasi-other. Following a phenomenological-social approach, Coeckelbergh characterises such trusting relationships as “virtual” or “quasi-trust” [106], which can reasonably be placed in robots with which a social relation can be established. Moreover, he argues that even if machines are not considered quasi-others, the relational bonds people build towards robots suffice to make trust in robots meaningful. As already mentioned, people are prone to see the world through the lens of an intentional framework, that is, to attribute intentions to robots and to implicitly anthropomorphise them in practical situations, even though they deny considering them human-like creatures [47, 112, 113].
Whilst it remains a hotly debated ontological question, for example in the domain of robot ethics, whether robots are full ethical agents with the corresponding rights and duties [114], in most practical situations they may be perceived as legitimate trustees from a phenomenological viewpoint, which lowers the practical relevance of the ontological question for real-world trusting relations between humans and robots. Although machines with real mental states, free will or consciousness are not foreseeable, people tend to rely primarily on appearance and to make their attributions based on intuition rather than on rational considerations [115].

In conclusion, considering robots as legitimate addressees of trust remains controversial. Possible rationales rest on the influence of immediate relational and affective responses to robots. The plausibility of this argument depends on the underlying philosophical viewpoint and the assumed degree of anthropomorphism. From a phenomenological-constructivist approach, robots may appear as social others that possess mental states, motives and some kind of autonomy to decide whether or not to take humans’ interests into account. This view may be decisive and most helpful for understanding interaction behaviour in concrete situations. Consider, for example, a life-threatening situation in which a person is forced to rely on a rescue robot to escape [116]. In an extreme psychological state dominated by fear, it is irrelevant for practical purposes whether this person perceives the robot as a social actor and attributes trustworthiness to the robot in a narrow sense, or to an unknown, imagined rescue team that teleoperates the robot from elsewhere. What matters is that the person, driven by the intention to be rescued, relies on the robot in the given situation. Nevertheless, in regular day-to-day interactions within an organisational context, it is reasonable to take a closer look at the relational networks of humans, robots, and related institutions.

3.4 Relational Networks

In Sect. 2.2, the evidential view on trust was introduced as the common-sense perspective on how trust emerges from rational and cognitive ex-ante expectancy-value calculations that lead to decisions on whether or not to trust a potential trustee. However, Moran [65] regards this functional and reductionist view of an interaction partner as implausible. On the contrary, he argues that trust does not emerge from individualistic, cognitive, rational and usually contractual considerations of another party’s trustworthiness, but is by default deeply rooted in affective responses to social relations [90, 106, 117]. This argument emphasises the significance of the relationship between humans and robots for trust building. Such relational aspects have also gained significance in related disciplines, for instance in the course of the so-called relational turn in robot ethics [118,119,120,121], which builds on Emmanuel Levinas’ claim that relational considerations precede ontological ones [122]. According to the assurance view [64, 65], trust builds immediately when the other is recognised as an interaction partner, instead of resulting from an assessment of trustworthiness as part of a strictly sequential process [64]. In that sense, the assurance view roots trust building in the relationship between two parties and illustrates that trust building goes beyond a merely rational and cognitive process. Thereby, it accounts for people’s unawareness both of the premises on which they base the analogical inferences that lead to trust-building expectations and of their reasons for the trustworthiness they seemingly attribute to a machine. In line with the assurance view, empirical findings from the psychological and human factors literature stress the influence of affective and emotional processes on trust [16, 41, 59, 123, 124].
In addition, an application-oriented study addressing factory workers without prior practical experience with robots recently confirmed the importance of the constructed relation to a collaborative robot as an influential factor for trust building [100].

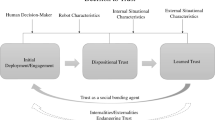

Consequently, it seems reasonable to again focus on the specific relations between humans and robots, which are embedded in a wider network of organisational relationships at the workplace. This organisational context frames the perceived trustworthiness of a machine [16] and leads to so-called learned trust, which is constituted and shaped, among other things, by interpersonal interactions that spread rumours and gossip within the organisation [16, 41]. Like the effects of anthropomorphism [125, 126], trust should be considered a context-sensitive phenomenon. For instance, with regard to the omnipresent goal of job security, the introduction of a collaborative robot often creates significant uncertainty amongst workers. However, whether this uncertainty arises is ultimately not a question of trust in the robot, but of trust in the organisation’s management, which decides whether to keep or dismiss an employee. Hence, employees presumably have to trust the organisational representatives’ assurances that they do not intend to replace or degrade employees, the regulating institutions’ certificates that a particular machine does not threaten their physical integrity, the manufacturers and developers that the robot is easy to use, and so on. However, scepticism towards the institutions involved in developing and promoting technologies may be greater than scepticism towards the technology itself [127]. That being said, the common understanding of trust building between merely two parties in a dyadic relationship falls short in the workplace context, because it overlooks the highly relevant “hidden layers of trustworthiness” [128] and the nesting of possible trustees within organisations [117]. When people speak about trusting a machine, they most likely and implicitly refer to the assumed motives of the machine’s manufacturers and programmers and thereby “indirect[ly] trust in the humans related to the technology”, who represent legitimate trustees even in a narrower sense [106].
In this sense, the people behind the technology build a “network of trust” [90] incorporating all other trusting relations that set the framework, boundaries, and development opportunities for human-technology trust. Accordingly, robots can be considered proxies for common trustworthiness attributions, which ultimately address the relevant persons and institutions involved in developing, programming, deploying, and regulating the robot [42]. Whether one considers trust in collaborative robots to be conceptually mistaken, a confusion with reliance, a mere form of virtual or quasi-trust, or, from a relational stance, a legitimate concept that for all practical purposes largely overlaps with interpersonal trust, all approaches in some way take further institutions, persons, and organisations into account on a second level. While philosophers of technology currently drive most of the debate, far more rigorous empirical studies are required to shed light on the relational network layers of trust and on possible hidden dimensions of trustworthiness.

4 Practical Implications of Trust

The presumed practical influence of trust and the ubiquitous attempts to foster it usually rest on two assumptions: first, that a trusting attitude leads to technology acceptance and, second, that high trust levels result in successful human-robot interactions. However, both assumptions are controversial, and the presumed interlinkages are less straightforward than often implied, as discussed in the following subsections.

4.1 Acceptance and Technology Usage

When it comes to the behavioural consequences of trust, the term acceptance comes into play. The two constructs are often discussed in conjunction, conceptualised as strongly interrelated, or even used synonymously. While some research, especially in the context of automation technology, focuses on trust as a central criterion for successful HRI, other studies rely predominantly on the construct of acceptance. Whereas considerable effort has gone into defining trust precisely, acceptance is mostly used without a precise definition, on the assumption that its meaning is sufficiently clear. Whilst research on trust in HRI was essentially shaped by the model of Lee and See [16], contemporary acceptance research has its roots in the technology acceptance model [129], which initially referred to information technologies. Both concepts share a common origin in the TRA and the theory of planned behaviour (TPB) [93, 94], but models of technology acceptance and human-technology trust nevertheless coexist as core subjects of two parallel research strands [130]. Similar to trust, technology acceptance can be conceptualised as an attitude, an intention or an action based on the TRA [131]. On an attitudinal level, acceptance implies a positive evaluation of technology usage in the sense that persons have a consenting attitude towards using this technology [131, 132]. Relatedly, acceptance is sometimes also defined ex negativo as the absence of reluctant behaviour [133]. Most frequently, though, acceptance is associated with technology adoption, usage behaviour, or product purchase [134], which suggests that it is often implicitly considered an action (cf. the critique in [135]). Discussions in practical contexts tend to focus on technology acceptance instead of trust and are likely to be concerned with ways to increase the acceptance of robots [136, 137].
Accordingly, acceptance is often interpreted dichotomously rather than gradually; that is, it is considered meaningless to ask how much a particular technology is accepted, whereas asking how much a technology is trusted is a meaningful and important question owing to the gradual nature of trust. In this sense, acceptance answers the if-question and trust the how-question of technology usage. Relatedly, current research increasingly adopts a time-dynamic perspective on trust, which accounts for its continuous evolution with varying levels and consequences, whereas acceptance tends to be seen as a static snapshot [138, 139].

Notwithstanding these differences, most researchers also assume a strong relationship between trust and acceptance. Since trust is usually considered an attitude and acceptance a behaviour, trust can reasonably be interpreted as an antecedent of acceptance. Indeed, trust was identified as a relevant predictor of acceptance in some application-oriented studies, but apart from the automation acceptance model (AAM) [130], an extension of the classical technology acceptance model (TAM) [129], most acceptance models do not explicitly include trust as a variable [140]. In addition, the relation between the two constructs is far from straightforward. There may be a considerable difference between the actual decision to use a robot and a positive attitude towards using it, particularly in the workplace context, in which employees lack the freedom to decide whether or not to use it [95]. Thus, a lack of trust at the attitudinal level need not become apparent in the person’s actions, but can instead lead to silent rejection, for example. An individual’s attitude towards technology may, but need not, be reflected in observable behaviour, such as boycotting or resisting interaction with technology on the one hand, or actively supporting and engaging with it on the other [131]. Indeed, trust is a facilitating but not a sufficient condition for technology usage, owing to the impact of further external factors [16]. Consequently, the existence of trust does not necessarily lead to a decision to use technology, nor does every refusal to use technology necessarily point to a lack of trust [15]. For example, a perceived lack of practical usefulness relative to the effort required, or a lack of necessity to rely on technology, might cause a refusal [16, 141]. Conversely, the decision to use technology can arise without trust in the technology, e.g. if the trustor feels some sense of commitment or is simply obliged by a supervisor to use technology in a mandatory usage context at the workplace [18, 95, 142].

The latent nature of trust and the loose coupling between trust as an attitude and actual reliance as behaviour complicate trust measurement in empirical studies, particularly because other influencing variables are confounded with trust [59]. To date, human-technology and human-robot trust are rarely measured behaviourally, but mostly on the basis of subjective self-assessments in multi-item scales, which, however, bear the risk of depicting only an ex-post reconstruction of trust [59, 74, 92]. Yet the alternative use of behaviour-based measurement methods also proves problematic, because conclusions about such a complex social phenomenon as trust can hardly be drawn from observable behaviour [74]. Such behaviour may be influenced, for instance, by people’s general attitude towards risk-taking, since trusting is always a risky endeavour, as outlined before [67]. In addition, other dispositional factors, such as general attitudes towards change or towards technology, or users’ innovation adoption style, may moderate the relationship between a trusting attitude and reliant behaviour in context-specific ways [143]. In this context, the Domain-Specific Risk-Taking (DOSPERT) scale [144, 145] allows one to assess an individual’s disposition to take risks in a certain domain. The DOSPERT scale thereby differentiates between the degree to which a situation is perceived as risky and an individual’s attitude towards situations that he/she considers risky [144]. Empirical research on this matter has revealed large within-subject, domain-specific differences [145], which is in line with growing evidence that the interaction context is highly relevant for researching HRI [146]. In addition, the Individual Innovativeness Scale (IIS) [147] serves to assess an individual’s likelihood of accepting and adopting an innovative change or technology early in the adoption process [148].
Although both instruments emerged in research fields other than HRI and capture factors that are not included in contemporary technology acceptance models, they seem relevant in the context of workplace robotics for capturing the relevant moderators of trust-acceptance relations.
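The moderation described above can be written as a standard moderated regression. This is a sketch, not a model estimated in the cited studies: $R$ denotes an observed reliance measure, $T$ self-reported trust, and $M$ a dispositional moderator such as a DOSPERT or IIS score; all symbols are illustrative.

```latex
R = \beta_0 + \beta_1 T + \beta_2 M + \beta_3 \, (T \times M) + \varepsilon ,
\qquad
\frac{\partial R}{\partial T} = \beta_1 + \beta_3 M
```

The second expression shows why the trust-reliance link cannot be read off a single coefficient: whenever $\beta_3 \neq 0$, the effect of trust on reliance changes with the level of the moderator.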

4.2 Over- and Undertrust

It is often implied that fostering trust necessarily improves human-robot interactions. In that sense, establishing trust is often unilaterally perceived as desirable per se, and a state of low trust as a deficiency that requires immediate remediation [42]. However, there is growing awareness that efforts to maximise trust can backfire and have detrimental consequences. Trust should therefore be optimised rather than maximised, because only an appropriate level of trust reduces the probability of misuse due to over-/underestimation, over-/undertrust, or over-/underreliance [16, 17, 43, 60]. The more a person relies on technology, the more willing this person will be to relinquish control. However, a complete renunciation of control would be just as undesirable in practice as permanent control. The latter would reduce the time available to carry out one’s tasks, whereas a complete relinquishment of control could, for example, lead to insufficient situational awareness and the risk of overlooking possible malfunctions of a robot [43, 60]. Additionally, unrealistically high user expectations regarding the technology can undermine trust over time, even if the technology works as intended [16, 18]. Current research increasingly adopts a time-dynamic perspective on trust by emphasising the goal of a sustainable positive development of trust based on high congruence between expectations and observations, as opposed to a purely acceptance-creating initial increase in trust [138]. More conservative expectations and a lower level of trust in technology may therefore prove more durable and thus comparatively beneficial [149]. Expectations regarding the trustee’s actions have to be aligned with its trustworthiness. However, much contemporary research focuses on trust levels without linking them to the trustworthiness of the trustee, be it an individual, an organisation or an institution [61], and overlooks that it is desirable not to trust untrustworthy entities.
When researchers and practitioners argue for an increase in trust, the actual trustworthiness should therefore always be taken into consideration [88].
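The argument for optimising rather than maximising trust amounts to comparing trust with trustworthiness. The Python sketch below assumes, as a strong simplification, that both can be expressed on a shared [0, 1] scale; the function `classify_calibration` and the tolerance of 0.1 are hypothetical.

```python
from enum import Enum


class Calibration(Enum):
    OVERTRUST = "overtrust"
    UNDERTRUST = "undertrust"
    CALIBRATED = "calibrated"


def classify_calibration(trust: float, trustworthiness: float, tolerance: float = 0.1) -> Calibration:
    """Compare a trust level with the trustee's trustworthiness on a shared [0, 1] scale (assumed)."""
    for name, value in (("trust", trust), ("trustworthiness", trustworthiness)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    gap = trust - trustworthiness
    if gap > tolerance:
        return Calibration.OVERTRUST   # trust exceeds what the trustee warrants
    if gap < -tolerance:
        return Calibration.UNDERTRUST  # trust falls short of what the trustee warrants
    return Calibration.CALIBRATED


# Raising trust in a moderately trustworthy robot first helps, then backfires.
for level in (0.2, 0.5, 0.9):
    print(level, classify_calibration(level, trustworthiness=0.5).value)
```

Under this simplification, increasing trust is only an improvement while it moves towards the trustee’s trustworthiness; beyond that point, the same increase produces overtrust.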

In conclusion, this section has distinguished acceptance as a behavioural and dichotomous construct from trust as a multifaceted, attitudinal, and gradual construct. In addition, potential moderators between trusting attitudes and actual technology use demonstrate that the relationship between the two is complex and far from straightforward in organisational and mandatory-use contexts. Phenomena of over- and undertrust demonstrate the negative implications of inappropriate trust levels and thus argue against the commonly formulated goal of promoting human-technology trust in general. So far, however, the article has only addressed human-robot trust and has said nothing about distrust, which may have given the impression that distrust is simply the opposite of trust. Arguing against this thesis, the next section characterises distrust as a closely related but conceptually different and alternative mechanism for complexity reduction.

5 Distrust

While research interest in trust is rising both in the broader human-machine and in the narrower human-robot interaction context, many researchers have not explicitly considered distrust in theoretical or empirical work [59]. This omission is both surprising and consequential, since an understanding of trust also requires an understanding of distrust as a contradictory phenomenon [150], particularly in an organisational context in which relations might benefit from a “healthy dose of trust and distrust” [151]. The debate about distrust seems to have progressed much further in the philosophy of technology than in applied psychology and technological research. The following considerations are intended to help carry the related findings and ideas into the more application-oriented and psychological literature.

In everyday language and from a folk-psychology perspective, people tend to characterise distrust as the counterpart of trust. Researchers, though, suggest a more differentiated view of the relationship between trust and distrust. Even though there is no consensus yet, both conceptual and empirical work indicates that trust and distrust are not the endpoints of the same scale, but two different albeit interrelated constructs [42, 152]. Several recent empirical studies in the HRI context that conducted confirmatory factor analyses of the Trust in Automation Scale (TAS) [153] support this distinction. The TAS comprises positively and negatively worded items, which allows either the formation of a one-dimensional trust measure by reverse-coding the negatively worded items, or the formation of two separate constructs by evaluating the positively and negatively worded items separately. Whereas Jian et al. [153] argue for a one-dimensional use, Pöhler et al. [154] and Spain et al. [92] found superior model fits for the two-dimensional version, which has received further empirical support [152, 155]. The separate consideration of distrust may lead to sounder insights particularly in human-robot as compared with human-human interaction, because people readily express distrust in machines, whereas social norms often prevent them from explicitly expressing distrust in other human beings [156].
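The difference between the one- and two-dimensional uses of the TAS is a difference in scoring. The Python sketch below assumes a 7-point response format and uses a hypothetical six-item toy questionnaire in which items 1 to 3 are negatively worded; it does not reproduce the actual TAS items or their assignment, which should be taken from Jian et al. [153].

```python
from __future__ import annotations

from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 7  # assumed 7-point response format


def reverse_code(score: int) -> int:
    """Flip a negatively worded item onto the trust direction (1 <-> 7, 2 <-> 6, ...)."""
    return SCALE_MIN + SCALE_MAX - score


def one_dimensional_trust(responses: dict[int, int], negative_items: set[int]) -> float:
    """Single trust score: reverse-code the negatively worded items, then average all items."""
    return mean(
        reverse_code(score) if item in negative_items else score
        for item, score in responses.items()
    )


def two_dimensional(responses: dict[int, int], negative_items: set[int]) -> tuple[float, float]:
    """Separate (trust, distrust) scores from the positively and negatively worded items."""
    trust = mean(s for item, s in responses.items() if item not in negative_items)
    distrust = mean(s for item, s in responses.items() if item in negative_items)
    return trust, distrust


NEGATIVE = {1, 2, 3}  # toy item split, not the real TAS assignment
ambivalent = {i: 6 for i in range(1, 7)}  # agrees with both trust and distrust items
neutral = {i: 4 for i in range(1, 7)}     # midpoint on every item

print(one_dimensional_trust(ambivalent, NEGATIVE), one_dimensional_trust(neutral, NEGATIVE))
print(two_dimensional(ambivalent, NEGATIVE), two_dimensional(neutral, NEGATIVE))
```

Both respondents receive the same one-dimensional score of 4, although one is ambivalent and the other indifferent; only the two-dimensional scoring separates them.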

The mentioned empirical findings are also backed by sociological theory. Luhmann [46] explicitly regards trust and distrust as different constructs and illustrates his argument with the example of purchasing a television. Luhmann claims that while it makes sense to compile a list of advantages and disadvantages, it would be meaningless to additionally compile such a list for the case of non-purchase [46]. Analogously, if distrust were simply the counterpart of trust, one could talk about trust levels and completely forgo the notion of distrust. However, Luhmann regards distrust as a functional equivalent of trust, which offers people two alternatives for reducing complexity. If someone decides not to place trust in someone, this person “restores the original complexity of event possibilities and burdens himself with it” [46]. According to the definition presented in Sect. 3, not trusting would imply not believing in a technology’s helpfulness with regard to achieving one’s goals. This amounts to the absence of an expectation of a positive future course and hence removes only a single scenario from the space of possible futures. Thereby, this strategy fails to suspend the cognitively overwhelming diversity of possible future scenarios and forces the person to resort to another, functionally equivalent strategy, namely distrust. To fulfil its complexity-reducing function, distrust has to imply a mental commitment to one specific future scenario. In that sense, distrust means expecting a negatively evaluated future course [46], i.e., expecting that the use of a technology will be detrimental to the achievement of one’s goals. Hence, trust and distrust have in common that they suspend the diversity of possible future scenarios, but they differ in whether the expected course of the future is subjectively evaluated positively or negatively.
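Luhmann’s argument can be illustrated with a toy model in which the remaining complexity is simply the number of future scenarios still held open. The Python sketch below is an illustration under that assumption, not a formalisation by Luhmann [46]; the scenarios, the `Scenario` class and the valence coding are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    description: str
    valence: int  # +1 helps, -1 hinders the trustor's goal, 0 undecided (toy coding)


def trust(futures: set[Scenario]) -> set[Scenario]:
    """Trust: commit to the positively evaluated course of the future."""
    return {s for s in futures if s.valence > 0}


def distrust(futures: set[Scenario]) -> set[Scenario]:
    """Distrust: commit to the negatively evaluated course of the future."""
    return {s for s in futures if s.valence < 0}


def withhold_trust(futures: set[Scenario], expected_positive: Scenario) -> set[Scenario]:
    """Merely not trusting: drop the one positive expectation, keep everything else open."""
    return futures - {expected_positive}


helpful = Scenario("robot positions workpieces correctly", +1)
futures = {
    helpful,
    Scenario("robot use undermines my work goals", -1),
    Scenario("robot works, but only after manual corrections", 0),
    Scenario("robot is rarely used at the workstation", 0),
}
for label, remaining in [
    ("trust", trust(futures)),
    ("distrust", distrust(futures)),
    ("withhold trust", withhold_trust(futures, helpful)),
]:
    print(f"{label}: {len(remaining)} of {len(futures)} scenarios still open")
```

Trust and distrust each leave a single evaluated course of the future, whereas withholding trust removes only one scenario and leaves the person burdened with the rest.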

In a seminal paper, Lewicki et al. [151] drew on Luhmann’s argument and proposed a two-dimensional view of trust and distrust. Firstly, they argue that the two constructs can be distinguished empirically and show different patterns of development. Secondly, trust and distrust can coexist, at least if we adopt the three-dimensional notion of human-robot trust explained in Sect. 3.2 [89]. For example, someone might pursue several, even conflicting, goals at the same time, so that a technology can be perceived as helpful with regard to some goals and at the same time as detrimental with regard to others. This provides good reasons for both a trusting and a distrustful attitude. Additionally, Lewicki et al. [151] argue that “ambivalence is commonplace” in multifaceted relationships. This makes the simultaneous emergence of trusting and distrusting attitudes plausible and corresponds to people’s experience of trusting and distrusting others at the same time with regard to different vulnerabilities and expectations. With this in mind, the coexistence of trust and distrust towards collaborative robots is presumably a common phenomenon, since these robots are often perceived ambiguously and evaluated ambivalently [157,158,159,160, 49, 34, 161], as already outlined in the introduction of this article.
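The goal-wise coexistence of trust and distrust can be sketched as follows. The Python example assumes, purely for illustration, that an employee’s expected effect of the robot on each workplace goal can be scored between -1 and +1; the function `goalwise_attitudes`, the margin and the scores are hypothetical. The argument by Faulkner and D’Cruz discussed below, that distrust tends to spread across domains, qualifies this strictly goal-wise view.

```python
from __future__ import annotations


def goalwise_attitudes(expected_effect: dict[str, float], margin: float = 0.0) -> tuple[set[str], set[str]]:
    """Split goals by the robot's expected effect: positive -> trust, negative -> distrust."""
    trusted = {goal for goal, effect in expected_effect.items() if effect > margin}
    distrusted = {goal for goal, effect in expected_effect.items() if effect < -margin}
    return trusted, distrusted


# Hypothetical expected effects of a collaborative robot on one employee's goals (-1 .. +1)
effects = {
    "self-efficacy": 0.5,
    "perceived enjoyment": 0.3,
    "job security": -0.7,
    "work safety": -0.2,
}
trusted, distrusted = goalwise_attitudes(effects)
print(sorted(trusted), sorted(distrusted))
print("coexisting trust and distrust:", bool(trusted and distrusted))
```

Because the same robot is evaluated against several goals at once, a non-empty trust set and a non-empty distrust set can arise for one and the same employee.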

The suggested conceptual differences between trust and distrust also call for a separate consideration of trust and distrust, as well as of their antecedents and consequences, in empirical studies [151], even though the practical relevance of this distinction still needs to be clarified, given the fairly high negative correlation between the two constructs [162]. Most likely, trust and distrust also lead to different consequences regarding technology use. Trusting a technology results in the relinquishment of control over the technology during usage, whereby the amount of relinquished control depends on the trust level and the related expectation of faultless functioning. The cognitive capacities freed from controlling behaviour can then contribute to successful interaction. In contrast, distrust implies the expectation of a negative impact of technology usage, which gives the distrusting person a strong motivation to renounce technology usage or at least not to rely on the technology. Moreover, while trust is strongly related to positive constructs such as perceived enjoyment, which reflect the benefits and thus motivate robot usage, distrust is far from a neutral, agnostic stance and is instead predominantly associated with feelings of insecurity, contempt or fear that could hamper technology usage [151, 163]. While both trust and distrust are considered gradual constructs, the level of distrust seems to be less influential with regard to behavioural consequences, since distrust in technology, irrespective of its degree, will lead to usage avoidance, at least as long as someone is not obliged by external forces to use the technology. This practical observation is in line with philosophical considerations by Faulkner and D’Cruz [89, 163], who state that, contrary to trust, distrust does not have a three-dimensional structure but addresses a trustee globally, or at least a certain domain of activities (D). Hence, the relation “X distrusts Y to φ” is very unusual.
Instead, the statements “X (generally) distrusts Y” or “X distrusts Y in D” make more sense, since “even (…) competence-based distrust has a tendency to spread across domains” [163]. In the absence of significant external motivations, distrust-indicated avoidance of technology usage leaves “little room (…) for unbiased, objective environmental exploration and adaptation” [46] and prevents (positive) learning experiences. Therefore, from a psychological point of view, it is advantageous to strive for a relationship of trust as opposed to a relationship of distrust. However, apart from this drawback, there is no reason to devalue distrust normatively and categorically over trust or to regard distrust as dysfunctional. Instead, distrust and trust both have their raison d’être as complexity-reducing mechanisms [151].
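The asymmetry described above, graded consequences of trust but largely all-or-nothing consequences of distrust, can be sketched as a toy decision rule. The Python example is a hypothetical illustration that ignores other factors such as perceived usefulness; treating any positive distrust score as decisive and equating relinquished control with the trust level are simplifying assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class UsageOutcome:
    uses_robot: bool
    relinquished_control: float  # share of monitoring handed over to the robot, 0..1


def usage_outcome(trust: float, distrust: float, mandatory: bool) -> UsageOutcome:
    """Toy rule: any distrust leads to avoidance unless use is mandatory;
    when the robot is used, relinquished control scales with the trust level."""
    if distrust > 0 and not mandatory:
        return UsageOutcome(uses_robot=False, relinquished_control=0.0)
    return UsageOutcome(uses_robot=True, relinquished_control=trust)


# The degree of distrust does not change the outcome under voluntary use...
for level in (0.1, 0.9):
    print(usage_outcome(trust=0.8, distrust=level, mandatory=False))
# ...whereas an obligation to use the robot overrides avoidance.
print(usage_outcome(trust=0.3, distrust=0.5, mandatory=True))
```

Under mandatory use the robot is operated despite distrust, which mirrors the point made earlier that observable usage need not reflect the underlying attitude.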

6 Conclusions and Outlook

Successful HRI requires an appropriate level of trust between the interaction partners. Collaborative robots are a novel technology for enabling human-robot interactions at industrial workplaces. They are considered hybrid robots because they exhibit social design features even though they are employed in functional usage contexts. They are often perceived ambiguously, which imposes a high cognitive load on employees and requires them to choose to trust or distrust the technology in order to reduce cognitive complexity. Trust has been a subject of research in contemporary empirical psychology as well as in philosophy of technology. Common definitions of trust in the HRI context stress conceptual relations to expectations about the achievement of one’s goals in a situation of vulnerability. Given the variety of goals that employees typically pursue at the workplace, whose achievement is likely to be influenced in different ways by the introduction of collaborative robots, trust in this context should be regarded as a multidimensional construct. However, trust is often treated as a monolithic construct in empirical work, partly due to a lack of sufficiently differentiated measurement instruments. In addition, inspired by philosophical considerations, human-technology relationships should be understood as three-dimensional relations whose building blocks are not only the (human) trustor and the (non-human) trustee but also a reference object of trust. Accordingly, asking whether one trusts a collaborative robot is an oversimplified and misleading question, since, even more than in the interpersonal context, a distinction between different trust dimensions is necessary to understand the phenomenon of trust holistically.
Moreover, even the three-dimensional view of trust relationships does not adequately reflect the fact that machines, due to their lack of autonomy, cannot be trustees in the narrow sense but only act as proxies for hidden layers of trustees behind them. Leaving aside the details of this debate, which is driven primarily by philosophers of technology, the relational and affective influences on trust-building point to the relevance of the persons and institutions within the organisational context that form the relational network of trust. Hence, human-robot trust in the workplace should always be researched in light of the organisational context.

In terms of the practical implications of trust, the assumptions that trust necessarily leads to technology acceptance and usage and that higher trust levels are per se beneficial for human-robot interaction have been challenged. Whilst trust and acceptance share some conceptual commonalities, they differ essentially in that acceptance, as a dichotomous construct, addresses whether a technology is used, whereas trust, as a gradual construct, addresses how it is used. Similarly, despite the intuitive conceptual interrelations, there are valid theoretical and empirical arguments for considering distrust not just as the opposite of trust but as a separate construct and an alternative mechanism for reducing complexity in the interaction with machines, particularly when they are perceived as ambiguous. Again drawing on the multidimensional notion, distrust appears less situational than trust, tends to address the trustee more holistically and has rather dichotomous consequences, namely the complete avoidance of technology usage.

In conclusion, by narrowing the context to collaborative robots at the workplace and drawing on philosophy of technology, the article has demonstrated that, on the way to successful HRI, it is necessary to understand trust as a multidimensional and context-dependent phenomenon that is embedded in relational networks of indirect trustees, changes gradually over time and affects various goals at the workplace in different ways. Furthermore, research on human-robot interaction will benefit from considering distrust not just as the opposite of trust but as a separate construct that deserves much more attention in future research. Whereas philosophical discussions have put considerable effort into differentiating the two concepts, much empirical work treats trust and distrust as monolithic constructs within a merely dyadic relation. This fails to take multidimensionality and context-dependency into account and thereby hampers both a deeper understanding of human-robot trust at the workplace and the much-needed empirical clarification of its manifold facets. Multidisciplinary approaches could make a valuable contribution by enriching psychological work with philosophical ideas and subjecting those ideas to empirical testing, which in turn requires the development of solid multidimensional measurement instruments for trust and distrust. Such instruments are of particular importance for workplace-oriented interactions with hybrid robots such as collaborative robots.

Data Availability (Data Transparency)

The datasets analysed during the current study are available from the corresponding author on reasonable request.

Code Availability (Software Application or Custom Code)

Not applicable.

References

Richert A, Müller SL, Schröder S et al (2017) Anthropomorphism in social robotics: empirical results on human–robot interaction in hybrid production workplaces. AI & Soc 1:71–80. https://doi.org/10.1007/s00146-017-0756-x

Vincent J, Taipale S, Sapio B et al (2015) Social Robots from a human perspective. Springer International Publishing, Cham

Mutlu B, Roy N, Šabanović S (2016) Cognitive Human–Robot Interaction. In: Siciliano B, Khatib O (eds) Springer handbook of robotics, 2nd edn. Springer, Berlin, Heidelberg, pp 1907–1934

Boone RT, Buck R (2003) Emotional expressivity and trustworthiness: the role of Nonverbal Behavior in the evolution of Cooperation. J Nonverbal Behav 27:163–182. https://doi.org/10.1023/A:1025341931128

Haidt J (2003) The moral emotions. In: Davidson RJ, Scherer KR, Goldsmith HH (eds) Handbook of affective sciences, 1st paperback edn. Oxford University Press, Oxford, New York, pp 852–870

Atkinson DJ (2015) Robot Trustworthiness. In: Adams JA (ed) Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction (Extended Abstracts). ACM, New York, NY, pp 109–110

Xing B, Marwala T (2018) Introduction to Human Robot Interaction. In: Xing B, Marwala T (eds) Smart maintenance for human-robot interaction: an intelligent search algorithmic perspective. Springer-Verlag, Cham, pp 3–19

Phillips EK, Ososky S, Grove J et al (2011) From Tools to Teammates: Toward the Development of Appropriate Mental Models for Intelligent Robots. In: Proceedings of the Human Factors and Ergonomics Society 55th Annual Meeting, pp 1491–1495

Charalambous G, Fletcher SR, Webb P (2016) The development of a scale to Evaluate Trust in Industrial Human-robot collaboration. Int J of Soc Robotics 8:193–209. https://doi.org/10.1007/s12369-015-0333-8

Strohkorb Sebo S, Traeger M, Jung MF et al (2018) The Ripple Effects of Vulnerability. In: Kanda T, Šabanović S, Hoffman G (eds) Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction - HRI ’18. ACM Press, New York, NY, USA, pp 178–186

Ososky S (2013) Influence of Task-role Mental Models on Human Interpretation of Robot Motion Behavior. Dissertation, University of Central Florida

Charalambous G, Fletcher SR, Webb P (2013) Human-Automation Collaboration in Manufacturing: Identifying Key Implementation Factors. In: Anderson M (ed) Contemporary ergonomics and human factors 2013: Proceedings of the international conference on Ergonomics & Human Factors 2013. Taylor & Francis, pp 301–306

You S, Robert LP (2019) Trusting Robots in Teams: Examining the Impacts of Trusting Robots on Team Performance and Satisfaction: Forthcoming. In: Proceedings of the 52nd Hawaii International Conference on System Sciences (HICSS 2019)

Broadbent E (2017) Interactions with Robots: the truths we reveal about ourselves. Annu Rev Psychol 68:627–652. https://doi.org/10.1146/annurev-psych-010416-043958

Sanders TL, Kaplan AD, Koch R et al (2019) The relationship between Trust and Use Choice in Human-Robot Interaction. Hum Factors 61:614–626. https://doi.org/10.1177/0018720818816838

Lee JD, See KA (2004) Trust in automation: designing for appropriate reliance. Hum Factors 46:50–80. https://doi.org/10.1518/hfes.46.1.50_30392

Parasuraman R, Riley V (1997) Humans and automation: use, misuse, disuse, abuse. Hum Factors 39:230–253. https://doi.org/10.1518/001872097778543886

Powell H, Michael J (2019) Feeling committed to a robot: why, what, when and how? Philos Trans R Soc Lond B Biol Sci 374. https://doi.org/10.1098/rstb.2018.0039

Peshkin M, Colgate E (1999) Cobots. Industrial Robot: An International Journal 26:335–341

Ranz F, Komenda T, Reisinger G et al (2018) A morphology of Human Robot collaboration systems for Industrial Assembly. Procedia CIRP 72:99–104. https://doi.org/10.1016/j.procir.2018.03.011

Coeckelbergh M (2011) You, robot: on the linguistic construction of artificial others. AI & Soc 26:61–69. https://doi.org/10.1007/s00146-010-0289-z

Ferrari F, Eyssel F (2016) Toward a Hybrid Society. In: Agah A, Howard AM, Salichs MA (eds) Social Robotics: Proceedings of the 8th International Conference, ICSR 2016 Kansas City, MO, USA, November 1–3, 2016. Springer International Publishing, Cham, pp 909–918

Marquardt M (2017) Anthropomorphisierung in der Mensch-Roboter Interaktionsforschung: theoretische Zugänge und soziologisches Anschlusspotential. Working Papers - kultur- und techniksoziologische Studien 10:1–44

Złotowski J, Strasser E, Bartneck C (2014) Dimensions of anthropomorphism. In: Sagerer G, Imai M, Belpaeme T (eds) HRI 2014: Proceedings of the 2014 ACM/IEEE International Conference on Human-Robot Interaction. ACM, New York, NY, pp 66–73

Remmers P (2020) Ethische Perspektiven Der Mensch-Roboter-Kollaboration. In: Buxbaum H-J (ed) Mensch-Roboter-Kollaboration. Springer Fachmedien Wiesbaden, Wiesbaden, pp 55–68

Reeves B, Nass CI (1998) The media equation: How people treat computers, television, and new media like real people and places, 1st paperback edn. CSLI Publ, Stanford, Calif

Braitenberg V (1987) Experiments in synthetic psychology, 2nd pr. Bradford books. The MIT Press, Cambridge, Mass

Elprama SA, El Makrini I, Vanderborght B et al (2016) Acceptance of collaborative robots by factory workers: a pilot study on the importance of social cues of anthropomorphic robots. In: The 25th IEEE International Symposium on Robot and Human Interactive Communication, New York, pp 919–924

Sheridan TB (2016) Human-Robot Interaction: Status and challenges. Hum Factors 58:525–532. https://doi.org/10.1177/0018720816644364

Brandstetter J (2017) The Power of Robot Groups with a Focus on Persuasive and Linguistic Cues. Dissertation, University of Canterbury

Onnasch L, Jürgensohn T, Remmers P et al (2019) Ethische Und Soziologische Aspekte Der Mensch-Roboter-Interaktion. Baua: Bericht. Bundesanstalt für Arbeitsschutz und Arbeitsmedizin, Dortmund/Berlin/Dresden

Choi H, Swanson N (2021) Understanding worker trust in industrial robots for improving workplace safety. In: Nam CS, Lyons JB (eds) Trust in Human-Robot Interaction. Elsevier Academic Press, pp 123–141

Wurhofer D, Meneweger T, Fuchsberger V et al (2015) Deploying Robots in a Production Environment: A Study on Temporal Transitions of Workers’ Experiences. In: Abascal González J, Barbosa S, Fetter M (eds) Proceedings of the 15th IFIP TC 13 International Conference, Bamberg, Germany, September 14–18, 2015. Springer, Cham, pp 203–220

Damiano L, Dumouchel P (2018) Anthropomorphism in Human-Robot co-evolution. Front Psychol 9:1–9. https://doi.org/10.3389/fpsyg.2018.00468

Weiss A, Huber A, Minichberger J et al (2016) First application of Robot Teaching in an existing industry 4.0 environment. Does It Really Work? Societies 6:1–21. https://doi.org/10.3390/soc6030020

Oliveira R, Arriaga P, Alves-Oliveira P et al (2018) Friends or Foes? In: Kanda T, Šabanović S, Hoffman G (eds) Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction - HRI ’18. ACM Press, New York, NY, USA, pp 279–288

Stadler S, Weiss A, Mirnig N et al (2013) Anthropomorphism in the factory - a paradigm change? In: HRI 2013: Proceedings of the 8th ACM/IEEE International Conference on Human-Robot Interaction. IEEE, Piscataway, NJ, pp 231–232

Müller SL, Schröder S, Jeschke S et al (2017) Design of a Robotic Workmate. In: Duffy VG (ed) Digital Human Modeling. Applications in Health, Safety, Ergonomics, and Risk Management: Ergonomics and Design: Proceedings of the 8th International Conference, DHM 2017, Held as Part of HCI International, Vancouver, BC, Canada, July 9–14, 2017, Part I. Springer International Publishing, Cham, pp 447–456

Heßler M (2019) Menschen – Maschinen – MenschMaschinen in Zeit Und Raum. Perspektiven Einer Historischen Technikanthropologie. In: Heßler M, Weber H (eds) Provokationen Der Technikgeschichte: Zum Reflexionszwang Historischer Forschung. Verlag Ferdinand Schöningh, Paderborn

Onnasch L, Roesler E (2021) A taxonomy to structure and analyze human–robot interaction. Int J of Soc Robotics 13:833–849. https://doi.org/10.1007/s12369-020-00666-5

Hoff KA, Bashir M (2015) Trust in automation: integrating empirical evidence on factors that influence trust. Hum Factors 57:407–434. https://doi.org/10.1177/0018720814547570

Sumpf P (2019) System Trust: Researching the Architecture of Trust in Systems. Springer Fachmedien, Wiesbaden

Lewis M, Sycara K, Walker P (2018) The role of Trust in Human-Robot Interaction. In: Abbass HA, Scholz J, Reid DJ (eds) Foundations of trusted autonomy. Springer International Publishing, Cham, pp 135–160

Castelfranchi C, Falcone R (2020) Trust: perspectives in Cognitive Science. In: Simon J (ed) The Routledge handbook of trust and philosophy. Routledge Taylor & Francis Group, New York, NY, London

Faulkner P, Simpson T (eds) (2017) The philosophy of trust, first edition. Oxford University Press, Oxford, New York

Luhmann N (1968/2014) Vertrauen: Ein Mechanismus der Reduktion sozialer Komplexität, 5th edn. UTB

Epley N, Waytz A, Cacioppo JT (2007) On seeing human: a three-factor theory of anthropomorphism. Psychol Rev 114:864–886. https://doi.org/10.1037/0033-295X.114.4.864