Abstract

Theory of Mind is crucial to understand and predict others’ behaviour, underpinning the ability to engage in complex social interactions. Many studies have evaluated a robot’s ability to attribute thoughts, beliefs, and emotions to humans during social interactions, but few studies have investigated human attribution to robots with such capabilities. This study contributes to this direction by evaluating how the cognitive and emotional capabilities attributed to the robot by humans may be influenced by some behavioural characteristics of robots during the interaction. For this reason, we used the Dimensions of Mind Perception questionnaire to measure participants’ perceptions of different robot behaviour styles, namely Friendly, Neutral, and Authoritarian, which we designed and validated in our previous works. The results obtained confirmed our hypotheses because people judged the robot’s mental capabilities differently depending on the interaction style. Particularly, the Friendly is considered more capable of experiencing positive emotions such as Pleasure, Desire, Consciousness, and Joy; conversely, the Authoritarian is considered more capable of experiencing negative emotions such as Fear, Pain, and Rage than the Friendly. Moreover, they confirmed that interaction styles differently impacted the perception of the participants on the Agency dimension, Communication, and Thought.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Social and service robots are autonomous agents that operate in human populated environments. They must plan their activities to coordinate safely and efficiently with their human teammates [7, 32]. The ability to create correct mental models of other interacting partners provides a mechanism for achieving fluent and effective teamwork [42]. Moreover, according to [45], correctly perceiving others’ minds is a key requirement for understanding, predicting, and controlling the behaviour of interacting partners, and for establishing a social bond with them.

The ability to understand and predict others’ behaviour represents the cornerstone of most social interactions [19]. Once we have a correct mental model of interacting partners, our interactions with them can become more effective and socially accepted [45]. Since it is not possible to have direct information about others’ mental states, mind attribution requires a leap from observable behaviour to unobservable mental states [10]. Mind attribution is in the “eye of the beholder”. Therefore, in Human–Robot Interaction (HRI), some authors have started to investigate the features of the robot that may trigger the perception of intentionality and goal directed behaviors. It was found that different robot design mechanisms could be used to trigger it [5]. The human likeness of a robot, for example, may also contribute to mind attribution by simulating facial features or movements [14, 16].

However, the behavioural characteristics that may elicit mind attribution to the robot by the interacting human have not yet been sufficiently investigated. According to [28], only a few studies investigated the impact of individual differences on the attribution of anthropomorphism in robots, whereas also individual dispositional factors of the subjects may have an impact on the result. Moreover, beyond design and anthropomorphic appearance, different behavioural features may also play an important role in mind attribution toward a robot [35]. For example, being unpredictable [45] or the lack of performance [26] is associated with more perceived intentionality.

This study contributes to this direction by evaluating the attribution of cognitive and emotional capabilities to the robot as influenced by different interacting styles or behaviours it shows during the interaction. In HRI literature, it emerges that the design of the robot’s personality or, more generally, interaction style with the users may affect how people judge its behaviour [21], the development of uncanny feelings [30], and the perception of the social intelligence of the robot [23]. Hence, a robot’s interaction style may also have a potential impact on mind attribution. In previous works [8, 21, 36], we designed and validated three different robot interaction styles (Friendly, Neutral, and Authoritarian) that we designed by modelling verbal and non-verbal behaviours of a robot (such as tone of voice, speech speed, gestures, eye colours, feedback). Hence, here we evaluated how different interaction styles of the robot impact participants’ robot mind attribution. Understanding how people judge a robot’s mental abilities based on its behaviour could represent a starting point to better understanding whether robots can use such interaction capabilities to improve the naturalness and legibility of the interaction.

We asked the participant to complete the Dimensions of Mind Perception questionnaire [12] adapted for comparing two styles of interaction at a time on 18 mental capacities related to different mind dimensions: Experience and Agency. Particularly, Experience refers to the character’s perceived ability for “feeling” (e.g., to feel pain, rage, joy); conversely, Agency refers to the character’s perceived ability for “doing” (e.g., to plan, though, communicate). This differentiation is related to the two subcomponents of the Theory of Mind (ToM) construct: the ‘cognitive’ ToM defines a cognitive understanding of the difference between the speaker’s knowledge and that of the listener (knowledge about beliefs), instead, the ‘affective’ ToM defines the empathic recognition of the observed person’s emotional state (knowledge about emotions) [40].

Moreover, we aim also to assess whether the humans’ perception of a robot’s abilities may be related to Trust and/or the robot’s characteristics such as Anthropomorphism, Animation, Likeability, Perceived Intelligence, and Perceived Safety. Hence, the participants were asked to complete the Godspeed [4] questionnaire and the Trust Perception Scale-HRI [38]. As mentioned in [27], trust is a critical issue in human–robot interactions as it is the core of the human desire to accept and use a non-human agent and depends on our representation of this agent’s actions, beliefs, and intentions. Mental models promote trust and reliability by alleviating uncertainty in roles, responsibilities, and capabilities while working in a team [42].

2 Background and Related Works

In HRI literature, the concept of mental modelling is often linked with another important concept in psychology: the “Theory of Mind” (ToM) [42]. ToM has been defined as the cognitive ability to infer own and other’s mental and emotional states (i.e., beliefs, desires, thoughts, emotions) [2]. The attribution of mental states to inanimate objects, such as virtual agents, has been used to “describe” and “design” the behaviour of such complex systems whenever their design or physical description was too complex to be easily understood [9]. On the contrary, in the HRI field, several studies are starting to investigate whether human beings attribute mental states to a robot while interacting with it or simply observing its behaviour [31]. Some researchers investigated users’ perception of the mental abilities that a robot showed during the interaction and the possible links with factors such as pleasure, acceptance, etc. For example, in [12] the robot character was rated a moderate score for the agency, higher than animals (i.e., dog, frog, and chimp), but the lowest equal with God for the experience.

Indeed, mind attribution to robots has been related to the perceived physical presence [3, 15] and anthropomorphism [5] that can be modulated by simulating human facial features and movements [14, 16]. In [17], the authors asked the question ‘Why and under which circumstances do we attribute human-like properties to machines?’. To explore this aspect, they have implemented an interactive human–robot game, introducing an increase in human similarity degrees for the gaming partner. They have shown that the tendency to build a model of another’s mind linearly increases with its perceived human likeness.

Beyond physical appearance, also social context [6] and individual differences may affect the degree of anthropomorphism attribution in robots and, consequently, of mind attribution [28]. For example, recent research has suggested that the decrease in the scores of mind perception could be related to the degree of causal attribution to the partner agent in a repeated game [24]. Moreover, Stafford et al. [41] examined predictors of robot use in a retirement village over two weeks and predicted that residents who chose to use the robot had more computer knowledge, held more positive attitudes towards robots, and attributed less mind agency to robots. Conversely, in [1], which investigated the differences between elderly and young adults in ascribing mind perception to a sociable humanoid robot, they found that the elderly attributed higher scores of mind perception to the robot, whereas young adults seemed to have a more positive attitude towards it.

In this work, we are interested in investigating whether, beyond design and anthropomorphic appearance, different behavioural features may play an important role in mind attribution toward a robot [35]. For example, the perceived transparency about robots’ machine nature could influence people’s perception [43]. As confirmation, in [44], the authors identified two factors that could potentially influence mind perception and moral concern in robots: framing (how the robot is introduced) and social behaviour (how the robot acts). Indeed, the way a robot acts, interacts, and modulates its behaviour has an impact on how the robot and the interaction are perceived [21] and so, potentially, also on mind attribution [46]. For example, in [39], participants engaged in a hallway navigation task with a robot over several trials. The display of the robot and its proxemics behaviour was manipulated, and participants made mental state attributions across trials. Results show that proxemics behaviour and robotic display characteristics differently influence the degree to which individuals perceive the robot when making mental state attributions about themselves or others. Moreover, the unpredictability of behaviour corresponded to a greater attribution of mental capabilities [46].

Gray et al. [15] conducted three studies suggesting that the higher experience perceived in a robot, rather than the agency, may be tied to feelings of users’ unease. In [25], the authors constructed two different personalities for a humanoid robot, namely a socially engaged personality that maximised its user interaction (i.e., an emotional and more interactive character) and a competitive personality that was focused on playing and winning the game (i.e., a rational character). Like our study, they assessed each personality with the Godspeed Questionnaire and the Mind Perception Questionnaire. However, no significant differences were found in the Mind Perception results. The researchers of [13] performed a study on the perception of a robot’s characteristics (i.e., anthropomorphism, animation, likeability, perceived intelligence, and perceived safety) and the changes after passive and active interaction with a physically present humanoid robot highlighting that the perception changes mainly after the first interaction.

3 Materials and Methods

We created three online studies using a free web-based application. Each survey has the same structure (see Fig. 1) but compares two different robot behaviour styles: Neutral-Authoritarian (Survey 1), Neutral-Friendly (Survey 2), and Friendly-Authoritarian (Survey 3). This is to better highlight differences in each robot’s behaviour style compared to the other two. To reach a larger audience, we shared each survey on different social media channels, to avoid overlapping of participants, without providing monetary incentives.

3.1 Robot’s Behaviour Styles

In our previous works [20, 21, 36], we explored the impact of different behaviours on human–robot interaction. We designed three different robot behaviour styles called Friendly, Neutral, and Authoritarian. Each behaviour is shaped by verbal and non-verbal interaction features like gaze, speed of speech, tone of voice, gestures, proxemics, etc. In [8], we evaluated that each simulated behaviour is correctly perceived by the participants. Hence, here we decided to use these behavioral styles to evaluate the possible different mental states attributed to the robot during the interaction. In detail, each behavioural style is characterised as follows:

-

Eye colour For the Friendly robot, we used yellow colour as it causes positive emotions like joy; for the Neutral, we used white colour as it is not associated with emotions and for the Authoritarian, we used red colour as it is associated with emotions such as anger.

-

Gaze In the Friendly condition, the robot’s gaze is addressed to the user during the interaction and diverted during the execution of the exercises; in the Neutral, the robot’s gaze is always distracted, while in the Authoritarian, the robot’s gaze is always directed at the user.

-

Feedback The Friendly robot uses encouragements (e.g., ‘good job’, ‘do continue like this’); the Neutral does not use voice signals, while the Authoritarian uses attention recalls (e.g., ‘time is running out’, ‘are you done?’).

-

Gestures The Friendly robot uses gestures to accompany the rhythm of speech; the Neutral robot does not use specific gestures but only a movement of simple involuntary breathing; the Authoritarian robot uses semantic gestures to emphasise commands.

-

Speed/Pitch For the Friendly, we used a high speed in speech (85%) and a low tone of voice (50%), for the Neutral, we used a high speed in speech (90%) and a high tone of voice (100%), and finally for the Authoritarian, we used a high speed in speech (85%) and a medium-high tone of voice (75%).

-

Language The Friendly robot uses informal language while the Neutral and the Authoritarian use formal language.

For a detailed explanation of the design choice please refer to [21].

3.2 Experimental Hypotheses

We set up our experimental design and evaluation from three main hypotheses:

-

H1 The different interaction styles of the robot could have an impact on participants’ perception of the Experience dimension of its Mind Attribution. Particularly, since the Friendly behaviour was designed to look joyful and put the participant at ease, we hypothesised obtaining a higher number of participants that judged it as more capable of experiencing positive emotions such as Joy and Pleasure than the Neutral and the Authoritarian. Conversely, since the Authoritarian behaviour was designed to express authority and with a dominant appearance, we hypothesised obtaining a higher number of participants that judged it as more capable of experiencing negative emotions such as Rage and Pride than the Neutral and the Friendly (confirmatory hypothesis).

-

H2 The different interaction styles of the robot could have a different impact on participants’ perception of the Agency dimension of its Mind Perception (exploratory hypothesis).

-

H3 The robot’s mental abilities perceived by participants might be differently related to the perceived trust level in the robot and/or to the characteristics highlighted in the Godspeed questionnaire, i.e., Anthropomorphism, Animation, Likeability, Perceived Intelligence, and Perceived Safety (exploratory hypothesis).

3.3 Survey

The online survey started with a digital consent form describing the nature of the study. Individuals agreed to participate after reading and accepting the digital informed consent. We asked the participant to complete an anonymous demographic questionnaire, to collect information such as age, sex, employment status, and the prior experience questionnaire with robots [37], to find out if the participant has ever interacted with, built, or controlled a robot.

For each couple of videos, the order of presentation was randomised to balance the number of participants that watched one video first or the other (e.g., for Survey 1: Neutral-Authoritarian vs. Authoritarian–Neutral). In each video, the robot Pepper interacts by carrying out the same cognitive test with a male actor but with a different behaviour style (see Fig. 2). The robot gives the instructions of an exercise, also used in our previous studies, namely the Attentive Matrices, allowing him to complete each matrix in 15 s. Meanwhile, the robot can provide vocal feedback (such as encouragements or recalls) or not.

We designed the interaction so that the robot introduced its name after completing the cognitive task. This is to evaluate if the participant watched the video until the end. For this reason, after watching each video, we introduced a “validation question” asking the participant the robot’s name. In addition, the participant must complete:

-

The Godspeed questionnaire [4]. It is used to investigate how the participant evaluated the robot on aspects like anthropomorphism, animation, perceived intelligence, likeability, and perceived safety. In addition, we defined three subscales (see Table 1) for the Valence-Arousal-Dominance (VAD) model [33] to measure perceived emotional states associated with the robot’s performance. The participant provided a score between 1 and 5 for each feature of the subscales, where the first attribute corresponds to the value 1, while the second attribute corresponds to the value 5.

-

The Trust Perception subscale-HRI [37, 38]. It is made up of only 14 items and is used to investigate how much the participant trusted the robot. The participant provided a percentage score between 0% and 100% for each item of the subscale.

Finally, after watching the two videos and evaluating the individual behaviours, participants were also asked to compare the two styles of interaction through the Dimensions of Mind Perception questionnaire [12].

3.4 Dimensions of Mind Perception Questionnaire

The Dimensions of Mind Perception questionnaire [12] was employed to assess participants’ perceptions of the robot mind. To directly compare the two behaviours on each dimension of the Mind Perception questionnaire, the original scoring system proposed by Gray et al. [12] was adapted as follows: participants were asked to choose which one of the two proposed interaction styles better reflected each dimension of the Mind Perception questionnaire. These mind perception dimensions refer to the capacity to feel Hunger, Fear, Pain, Pleasure, Rage, Desire, Personality, Consciousness, Pride, Embarrassment, and Joy, belonging to the Experience domain (the ability of “feeling”); conversely, the capacity for Self-control, Morality, Memory, Emotion recognition, Planning, Thought, and Communication belonged to the Agency domain (the ability of “doing”). For each dimension, we evaluated the percentage of time the behavioural style was selected, with respect to the other, as better reflecting such capability.

We then computed two subscores for Experience and Agency domains by summing a point whenever the interaction style was selected for that dimension. For example, in the case the Friendly, in comparison to the Neutral interaction style, obtained preferences on 3 dimensions (e.g., Desire, Personality, and Joy) out of 11 of the Experience domain, we computed an Experience score of 3 for Friendly and, consequently, a score of 8 for Neutral interaction style. The resulting values are the averages among the participants’ scores.

3.5 Participants

We recruited a total of 175 participants aged between 18 and 67 years. We eliminated 29 of these since they did not pass the validation questions, which checked if they have correctly heard the name of the robot in the video. Hence, we considered data from 146 participants, of which 78 were males and 68 were females. Particularly, Survey 1 was completed by 52 participants, of which 9 were eliminated, for a total of 43 participants (22 males, 21 females). Survey 2 was completed by 66 participants, of which 6 were eliminated, for a total of 60 participants (29 males, 31 females). Finally, Survey 3 was completed by 57 participants, of which 14 were eliminated, for a total of 43 participants (27 males, 16 females).

3.6 Statistical Analysis

We evaluated the internal consistency of the new Goodspeed-VAD by considering the Cronbach’s alpha coefficient with a value \(\alpha \ge \) 0.70 [22]. The Chi-square (\(\chi ^2\)) goodness of fit test was applied to compare the observed frequencies on each mental capacity of the Dimensions of Mind Perception questionnaire. The two aggregated dimensions of mind (i.e., Experience and Agency) were compared using the non-parametric Wilcoxon signed-rank test. We applied Bonferroni’s correction for multiple comparisons (i.e., \(\alpha / k\) where k is the number of comparisons) to control for the type I error; thus, p values <0.002 (0.05/20) were considered significant. The same statistical analyses were carried out for each survey. Moreover, we performed Spearman’s rank correlation to evaluate the associations between Experience and Agency dimensions with Trust and Godspeed variables codified for each interaction style using a composite dataset. The critical alpha level was set at.05 for correlation analyses. All analyses were performed with IBM SPSS-26.

4 Results

The Goodspeed-VAD demonstrated excellent internal consistency as shown by Cronbach’s alpha of 0.950 for the Neutral interaction style, 0.969 for the Friendly interaction style, and 0.948 for the Authoritarian interaction style. In the following, we present our results on mind attribution using the dimension of mind perception questionnaire on each pair of considered interaction styles. We discuss the possible associations of different mind perception results and Goodspeed-VAD and Trust evaluation.

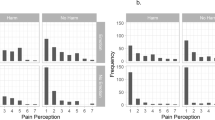

4.1 Comparison between Authoritarian and Neutral

Forty-three participants (22 males, 21 females; age mean: 43.51, standard deviation: 15.31) completed the survey after observing videos of Neutral and Authoritarian interaction styles. Regarding the Experience dimension of mind, we found that there were statistically significant differences, after applying Bonferroni’s correction, in the judgement of Personality and Consciousness items with more preferences for the Authoritarian style than for the Neutral one (Table 2). No significant difference was found between the observed frequencies on the mental capacities of the Agency dimension (Table 2). Moreover, no significant difference was found in Agency and Experience dimensions between the two interaction styles after we applied Bonferroni’s correction. (Table 2 and Fig. 3A).

4.2 Comparison between Friendly and Neutral

Sixty participants (29 males, 31 females; age mean: 29.00, standard deviation: 6.53) judged the Neutral and Friendly interaction styles on the Dimensions of Mind Perception questionnaire. As for the Experience dimension, we found that more participants considered the Friendly interaction style more capable of experiencing Hunger, Pleasure, Desire, Personality, Consciousness, and Joy than the Neutral one (Table 3). Otherwise, more participants believed that the Neutral interaction style was more capable of experiencing Fear and Rage than the Friendly one (Table 3). Regarding the Agency dimension, more participants believed that the Friendly interaction style was more able in Emotion recognition, Communication, and Thought than the Neutral one (Table 3). Furthermore, the Friendly style obtained significantly higher scores on the Experience and Agency dimensions of ToM compared to the Neutral style (Table 3 and Fig. 3B).

4.3 Comparison Between Friendly and Authoritarian

The survey on the comparison between Friendly and Authoritarian interaction styles was completed by 43 participants (27 males, 16 females; age mean: 26.67, standard deviation: 7.95). We found that more participants considered the Friendly interaction style more capable of experiencing Hunger, Pleasure, Desire, Consciousness, and Joy than the Authoritarian one (Table 4). Conversely, more participants deemed that the Authoritarian interaction style was more capable of experiencing Fear, Pain, and Rage than the Friendly one (Table 4). As for Agency, more participants believed that the Friendly interaction style was more able in Morality, Emotion recognition, Communication, and Thought than the Authoritarian one (Table 4). Moreover, we found that the Friendly style obtained significantly higher scores on the Experience and Agency dimensions of ToM compared to the Authoritarian style (Table 4 and Fig. 3C).

4.4 Dimensions of Mind, Goodspeed-VAD, and Trust

A composite dataset merging the data collected from the three surveys was constructed to analyse the relationship of the Dimensions of Mind subscores with the Goodspeed-VAD variables and Trust. As for the Neutral interaction style, we found that Agency was related to the Perceived Safety subscore of Godspeed-VAD (n = 103; \(\rho \) = 0.245; p = 0.013), whereas no significant association between Experience and Goodspeed-VAD and Trust scores was found. Considering the Authoritarian robot, the Agency was related to the Likeability subscore of Godspeed-VAD (n = 86; \(\rho \) = 0.213; p = 0.049), whereas Experience was not related to Goodspeed-VAD and Trust scores. Finally, Experience and Agency were not associated with Goodspeed-VAD and Trust scores considering the Friendly robot.

A graphical representation of the experience and agency subscores for the considered pairs. A For the Authoritarian–Neutral case, we have a statistically significant difference in the Experience subscore (averages 6.30 and 4.70). B For the Friendly-Neutral case, we have statistically significant differences both on the Experience and the Agency subscores (averages experience 6.95 and 4.05, and averages agency 4.68 and 2.32). C For the Friendly-Authoritarian case also, we have statistically significant differences both on the Experience and the Agency subscores (averages experience 6.58 and 4.42, and averages agency 4.88 and 2.12)

5 Discussion

Our first hypothesis is confirmed as the results show that the Friendly behaviour was judged more capable of experiencing positive emotions such as Pleasure, Desire, Consciousness, and Joy than the Neutral and Authoritarian; on the contrary, the last one was judged more capable of experiencing negative emotions such as Fear, Pain, Rage, (H1) with respect to the Friendly one. We discovered statistically significant differences in Personality with more attribution for both Friendly and Authoritarian interaction styles with respect to the Neutral one. To confirm this, the Neutral was designed to appear as a controlling behaviour and to provide no specific social cues. Interestingly, the Friendly interaction style was judged as more capable to express Hunger than the other two interaction styles. It should be noted that the feeling of hunger is inherently motivating since it recruits positive emotions, namely affective interest, desire, and excitement [29].

Our second hypothesis is also confirmed as the different styles of interaction had a different impact on the perception of the participants on the size of the Agency score (H2). In particular, the results showed: the Friendly style scored significantly higher on ToM’s Experience and Agency size than the Neutral style, showing that more participants believed that the Friendly interaction style was more capable of recognising emotions, communicating, and thinking than the Neutral. In addition, the Friendly style scored significantly higher on the Experience and Agency dimensions of ToM compared to the Authoritarian style, showing that more participants believed that the Friendly interaction style was more capable of morality, emotion recognition, communication, and thinking than the Authoritarian one; finally, we have not found any significant difference between Authoritarian and Neutral.

Finally, our third hypothesis is also confirmed as the results show that the perceived mental abilities of the robot are associated with the different characteristics highlighted in the Godspeed questionnaire (H3). In particular, it was found: in the Neutral interaction style, the dimension of the agency is related to the perceived security item of the Godspeed questionnaire; in the Authoritarian interaction style the dimension of Agency is related to the Pleasantness item of the Godspeed questionnaire; while in the Friendly interaction style were not found correlations with the items of the Godspeed questionnaire.

On the other hand, no correlations were found between perceived mental abilities and trust. This could be caused by an indirect interaction, as the participants did not interact directly with the robot but observed a human–robot interaction video. In addition, some studies have shown that in human–robot interaction, a cooperative task can increase the level of trust [34]. In [34], the authors demonstrated that the choice of the experimental task could lead to very different results, having a significant impact on the participants’ willingness to follow the robot’s instructions. Consequentially, trust increases the robot’s ability to be accepted as a collaborative partner [18]. Therefore, we should consider that, in our study, the type of cognitive task proposed (Attentive Matrices) by the robot was not cooperative.

6 Conclusions

In the HRI field, several studies are starting to investigate whether human beings attribute mental states to a robot while interacting with them. The propensity to recognise non-human agents as capable of having a “mind” can vary depending both on the agent’s external features (e.g., appearance and behaviour) and the observer’s internal dispositions (e.g., beliefs, expectations, motivations, individual differences, and experience). Based on this, the purpose of this study is to evaluate the different impact of three different behavioural styles namely Friendly, Neutral and Authoritarian, previously validated [8, 20, 21, 36], on Mind Attribution to the robot with a crowd-sourcing evaluation method enrolling 175 participants aged between 18 and 67 years.

Our results indicate that the personalization of the robot’s interaction style may influence users’ perception of the robot’s mental abilities. More specifically, a Friendly interaction style was judged to be more capable of experiencing positive feelings but also of communicating, thinking, recognising emotions, and behaving morally. These findings further confirm the need to design and validate the robot’s behaviour to facilitate and improve HRI in different application contexts or refrain to use such behavioural interaction styles in the case that mind attribution should be minimised. Indeed, many researchers are starting to evaluate how modelling different behavioural styles of the robot during the interaction may have an impact on performance [21] and acceptance [11]. Hence, our study also contributes to investigating possible reasons behind such differences during the interaction.

The trial was carried out online during the Covid-19 pandemic. For this reason, some of the results obtained, for example, those related to trust were influenced by the protocol adopted. Consequently, we believe that these results can be a starting point for conducting new in-presence experiments. In addition, a future objective is to evaluate the characteristics, of the different interaction styles implemented, which can influence the level of trust, using a cooperative task and through direct human–robot interaction.

References

Alimardani M, Qurashi S (2019) Mind perception of a sociable humanoid robot: a comparison between elderly and young adults. In: Iberian robotics conference, Springer, pp 96–108

Astington JW, Dack LA (2020) Theory of mind. In: Janette B. Benson (ed) Encyclopedia of infant and early childhood development (Second Edition), Elsevier, Oxford, pp 365–379

Banks J (2020) Theory of mind in social robots: replication of five established human tests. Int J Soc Robot 12(2):403–414

Bartneck C, Kulić D, Croft E, Zoghbi S (2009) Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int J Soc Robot 1(1):71–81

Broadbent E, Kumar V, Li X, Sollers J, Stafford RQ, MacDonald BA, Wegner DM (2013) Robots with display screens: a robot with a more humanlike face display is perceived to have more mind and a better personality. PLOS ONE 8(8):1–9

Butler R, Pruitt Z, Wiese E (2019) The effect of social context on the mind perception of robots. Proc Human Fact Ergon Soc Ann Meet 63(1):230–234

Chakraborti T, Zhang Y, Smith DE, Kambhampati S (2016) Planning with resource conflicts in human–robot cohabitation. In: Proceedings of the 2016 international conference on autonomous agents & multiagent systems, pp 1069–1077

Cucciniello I, Sangiovanni S, Maggi G, Rossi S (2021) Validation of robot interactive behaviors through users emotional perception and their effects on trust. In: 2021 30th IEEE international conference on robot & human interactive communication (RO-MAN), IEEE, pp 197–202

Daniel DC (1987) The intentional stance. The MIT Press, Cambridge

Epley N, Waytz A (2010) Mind perception, Chapter 14, Wiley, ISBN 9780470561119

Esterwood C, Essenmacher K, Yang H, Zeng F, Robert LP (2022) A personable robot: meta-analysis of robot personality and human acceptance. IEEE Robot Autom Lett 7(3):6918–6925. https://doi.org/10.1109/LRA.2022.3178795

Gray HM, Gray K, Wegner DM (2007) Dimensions of mind perception. Science 315(5812):619–619

Haring K, Watanabe K, Silvera-Tawil D, Velonaki M, Takahashi T (2015) Changes in perception of a small humanoid robot. In: ICARA 2015—Proceedings of the 2015 6th international conference on automation, robotics and applications, pp 83–89

Hertz N, Wiese E (2017) Social facilitation with non-human agents: possible or not? Proc Human Fact Ergon Soc Ann Meet 61(1):222–225

Kurt G, Wegner DM (2012) Feeling robots and human zombies: mind perception and the uncanny valley. Cognition 125(1):125–130

Kiesler S, Powers A, Fussell SR, Torrey C (2008) Anthropomorphic interactions with a robot and robot-like agent. Soc Cogn 26:169–181

Krach S, Hegel F, Wrede B, Sagerer G, Binkofski F, Kircher T (2008) Can machines think? interaction and perspective taking with robots investigated via fmri. PloS One 3(7):e2597

Lee JJ, Knox B, Baumann J, Breazeal C, DeSteno D (2013) Computationally modeling interpersonal trust. Front Psychol 4:893

Leslie AM (1987) Pretense and representation: the origins of “theory of mind’’. Psychol Rev 94(4):412

Maggi G, Dell’Aquila E, Cucciniello I, Rossi S (2020) Cheating with a socially assistive robot? a matter of personality. In: Companion of the 2020 ACM/IEEE HRI, pp 352–354

Maggi G, Dell’Aquila E, Cucciniello I, Rossi S (2020) “Don’t get distracted”: the role of social robots’ interaction style on users’ cognitive performance, acceptance, and non-compliant behavior. Int J Soc Robot 13:2057–2069

Maggi G, Altieri M, Ilardi CR, Santangelo G (2022) Validation of a short Italian version of the barratt impulsiveness scale (bis-15) in non-clinical subjects: psychometric properties and normative data. Neurol Sci 43(8):4719–4727

Mileounis A, Cuijpers R, Barakova E (2015) Creating robots with personality: the effect of personality on social intelligence. In: Artificial computation in biology and medicine, 06

Miyake T, Kawai Y, Park J, Shimaya J, Takahashi H, Asada M (2019) Mind perception and causal attribution for failure in a game with a robot. In: 2019 28th IEEE international conference on robot and human interactive communication (RO-MAN), IEEE, pp 1–6

Mohammadi HB, Xirakia N, Abawi F, Barykina I, Chandran K, Nair G, Nguyen C, Speck D, Alpay T, Griffiths S et al (2019) Designing a personality-driven robot for a human–robot interaction scenario. In: 2019 international conference on robotics and automation (ICRA), IEEE, pp 4317–4324

Morewedge C (2009) Negativity bias in attribution of external agency. J Expe Psychol Gener 138(535–45):11

Mou W, Ruocco M, Zanatto D, Cangelosi A (2020) When would you trust a robot? a study on trust and theory of mind in human–robot interactions. In: 2020 29th IEEE international conference on robot and human interactive communication (RO-MAN), IEEE, pp 956–962

Nicolas S, Agnieszka W (2021) The personality of anthropomorphism: how the need for cognition and the need for closure define attitudes and anthropomorphic attributions toward robots. Comput Human Behav 122:106841

Ombrato MD, Phillips E (2021) The mind of the hungry agent: hunger, affect and appetite. Topoi 40(3):517–526

Paetzel-Prüsmann M, Perugia G, Castellano G (2021) The influence of robot personality on the development of uncanny feelings. Comput Human Behav 120:106756

Parenti L, Marchesi S, Belkaid M, Wykowska A (2021) Exposure to robotic virtual agent affects adoption of intentional stance. Association for Computing Machinery, New York, pp 348–353

Rossi S, Larafa M, Ruocco M (2020) Emotional and behavioural distraction by a social robot for children anxiety reduction during vaccination. Int J Soc Robot 12(3):765–777

Russell JA, Albert M (1977) Evidence for a three-factor theory of emotions. J Res Personal 11(3):273–294

Salem M, Lakatos G, Amirabdollahian F, Dautenhahn K (2015) Would you trust a (faulty) robot? effects of error, task type and personality on human-robot cooperation and trust. In: 2015 10th ACM/IEEE international conference on human–robot interaction (HRI), IEEE, pp 1–8

Saltik I, Erdil D, Urgen Burcu A (2021) Mind perception and social robots: the role of agent appearance and action types. Association for Computing Machinery, New York, pp 210–214

Sangiovanni S, Spezialetti M, D’Asaro FA, Maggi G, Rossi S (2020) Administrating cognitive tests through hri: an application of an automatic scoring system through visual analysis. In: International conference on social robotics, Springer, pp 369–380

Schaefer K (2013) The perception and measurement of human–robot trust

Schaefer KE (2016) Measuring trust in human robot interactions: development of the “trust perception scale-hri”. In: Robust intelligence and trust in autonomous systems, Springer, pp 191–218

Schreck JL, Newton OB, Song J, Fiore SM (2019) Reading the mind in robots: how theory of mind ability alters mental state attributions during human-robot interactions. In: Proceedings of the human factors and ergonomics society annual meeting, vol. 63, SAGE Publications, Sage, Los Angeles, pp 1550–1554

Shamay-Tsoory Simone G, Syvan S, Liat B-G, Medlovich HH, Yechiel L (2007) Dissociation of cognitive from affective components of theory of mind in schizophrenia. Psychiatry Res 149(1–3):11–23

Stafford RQ, MacDonald BA, Chandimal J, Wegner DM, Elizabeth B (2014) Does the robot have a mind? mind perception and attitudes towards robots predict use of an eldercare robot. Int J Soc Robot 6(1):17–32

Tabrez A, Luebbers MB, Hayes B (2020) A survey of mental modeling techniques in human-robot teaming. Curr Robo Rep 1(4):259–267

van Straten CL, Jochen P, Kühne R, Barco A (2020) Transparency about a robot’s lack of human psychological capacities: effects on child–robot perception and relationship formation. ACM Trans Human–Robot Interact (THRI) 9(2):1–22

Wallkötter S, Stower R, Kappas A, Castellano G (2020) A robot by any other frame: framing and behaviour influence mind perception in virtual but not real-world environments. In: Proceedings of the 2020 ACM/IEEE international conference on human–robot interaction, pp 609–618

Waytz A, Gray K, Epley N, Wegner DM (2010) Causes and consequences of mind perception. Trends Cognit Sci 14(8):383–388

Waytz A, Morewedge CK, Epley N, Monteleone G, Gao J-H, Cacioppo John T (2010) Making sense by making sentient: effectance motivation increases anthropomorphism. J Personal Soc Psychol 99(3):410–435

Funding

Open access funding provided by Università degli Studi di Napoli Federico II within the CRUI-CARE Agreement. This work has been partially supported by the Italian Ministry of Research (MUR) and by the European Union under the CHIST-ERA IV project COHERENT “COllaborative HiErarchical Robotic ExplaNaTions”.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Informed Consent

The survey included a digital consent form describing the nature of the study. Individuals agreed to participate after reading and accepting the digital informed consent.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cucciniello, I., Sangiovanni, S., Maggi, G. et al. Mind Perception in HRI: Exploring Users’ Attribution of Mental and Emotional States to Robots with Different Behavioural Styles. Int J of Soc Robotics 15, 867–877 (2023). https://doi.org/10.1007/s12369-023-00989-z

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12369-023-00989-z